fb6af2d0335de35e606bcafdf764bcf1.ppt

- Number of slides: 37

Network Ensembles (Committees) for Improved Classification and Regression Radwan E. Abdel-Aal Computer Engineering Department November 2006

Contents

- Data-based predictive modeling: approach, advantages, scope, and main tools
- Need for high prediction accuracy
- The network ensemble (committee) approach: the need for diversity among members and how to achieve it
- Some results
  - Classification: medical diagnosis
  - Regression: electric peak load forecasting
- Summary

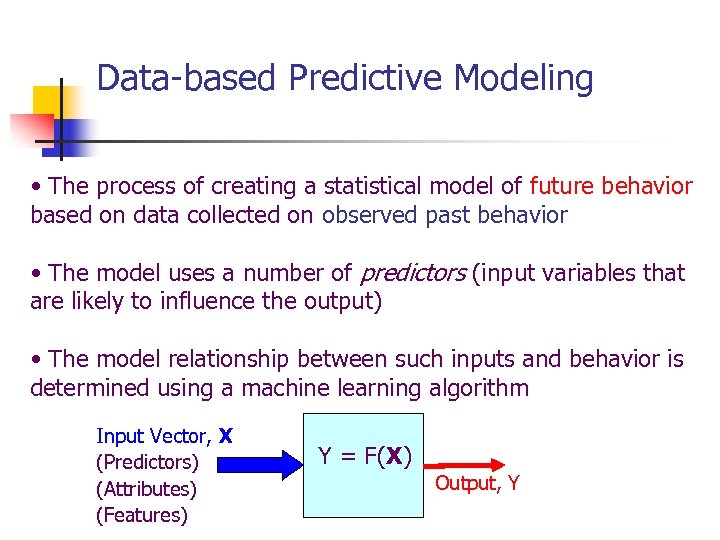

Data-based Predictive Modeling

- The process of creating a statistical model of future behavior based on data collected on observed past behavior
- The model uses a number of predictors (input variables that are likely to influence the output)
- The model relationship between such inputs and behavior is determined using a machine learning algorithm

Input vector X (predictors, attributes, features) → Y = F(X) → Output Y

Advantages over other modeling approaches

- Thorough theoretical knowledge is not necessary
- Less user intervention: let the data speak! (No biases or pre-assumptions on relationships)
- Better handling of nonlinearities and complexities
- Greater tolerance to noise and uncertainties (soft computing)
- Faster and easier to develop
- Utilizes the loads of computerized historical data available now in many disciplines

Scope

- Environmental: pollution monitoring, weather forecasting
- Finance and business: loan assessment, fraud detection, market forecasting, basket analysis, product targeting, efficient mailing
- Engineering: process modeling and optimization, load forecasting, machine diagnostics, predictive maintenance
- Medical and bioinformatics: screening, diagnosis, prognosis, therapy, gene classification
- Internet: web access analysis, site personalization

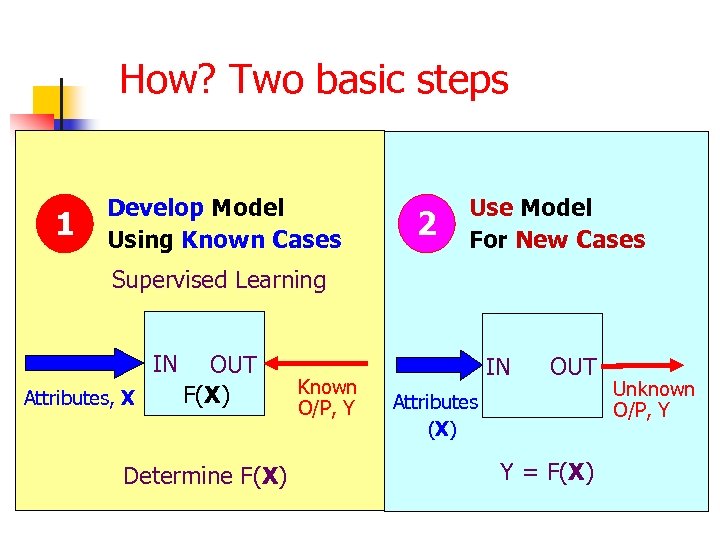

How? Two basic steps (supervised learning)

1. Develop the model using known cases: attributes X in, known output Y out; determine F(X)
2. Use the model for new cases: attributes X in; Y = F(X) gives the unknown output (e.g. rock properties)

Data-based Predictive Modeling by Supervised Machine Learning

- Database of solved examples (input-output)
- Preparation: clean up, transform, derive new attributes
- Split data into a training set and a test set
- Training: develop the model on the training set
- Evaluation: see how the model fares on the test set
- Actual use: use a promising model on new input data to estimate the unknown output
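The split/train/evaluate/use sequence above can be sketched in a few lines. This is a minimal illustration only: the data are synthetic, a straight-line least-squares fit stands in for the learning algorithm, and the helper names (`train_test_split`, `fit_line`, `mean_squared_error`) are hypothetical.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the solved examples and split them into training and test sets."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def fit_line(train):
    """Training step: least-squares fit of y = a + b*x (stand-in for any learner)."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return lambda x: a + b * x

def mean_squared_error(model, rows):
    """Evaluation step: average squared error of the model on a set of examples."""
    return sum((model(x) - y) ** 2 for x, y in rows) / len(rows)

# Synthetic "database of solved examples": y = 2x + 1 plus small noise (assumed data).
noise = random.Random(1)
data = [(x, 2.0 * x + 1.0 + noise.gauss(0, 0.1)) for x in range(40)]

train, test = train_test_split(data)        # split
model = fit_line(train)                     # training
test_mse = mean_squared_error(model, test)  # evaluation on unseen cases
new_estimate = model(100)                   # actual use on a new input
```

The test error, not the training error, is what indicates how the model is likely to fare on new cases.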

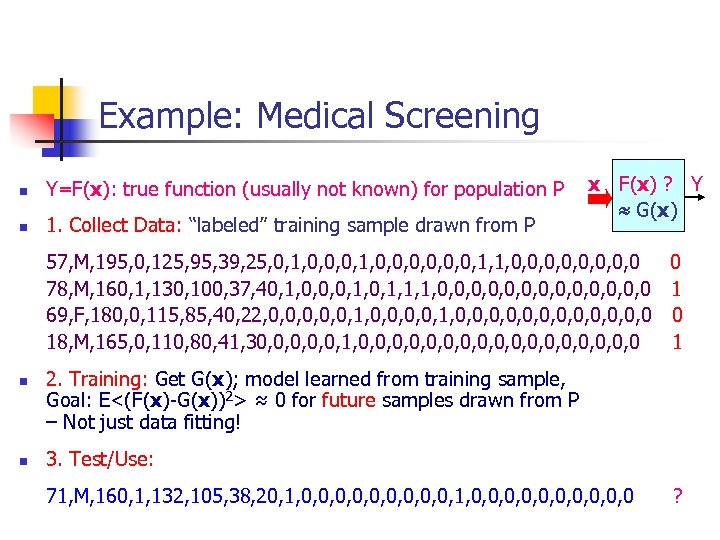

Example: Medical Screening

Y = F(x): true function (usually not known) for population P

1. Collect data: "labeled" training sample drawn from P

   | x | Y |
   |---|---|
   | 57, M, 195, 0, 125, 95, 39, 25, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0 | 0 |
   | 78, M, 160, 1, 130, 100, 37, 40, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0 | 1 |
   | 69, F, 180, 0, 115, 85, 40, 22, 0, 0, 0, 1, 0, 0, 0, 0, 0 | 0 |
   | 18, M, 165, 0, 110, 80, 41, 30, 0, 0, 1, 0, 0, 0, 0, 0 | 1 |

2. Training: get G(x), the model learned from the training sample. Goal: $E\langle (F(x) - G(x))^2 \rangle \approx 0$ for future samples drawn from P, not just data fitting!
3. Test/use: 71, M, 160, 1, 132, 105, 38, 20, 1, 0, 0, 0, 0, 0, 0 → ?

Data-Based Modeling Tools (Learning Paradigms)

- Decision trees
- Nearest-neighbor classifiers
- Support vector machines
- Neural networks
- Abductive networks

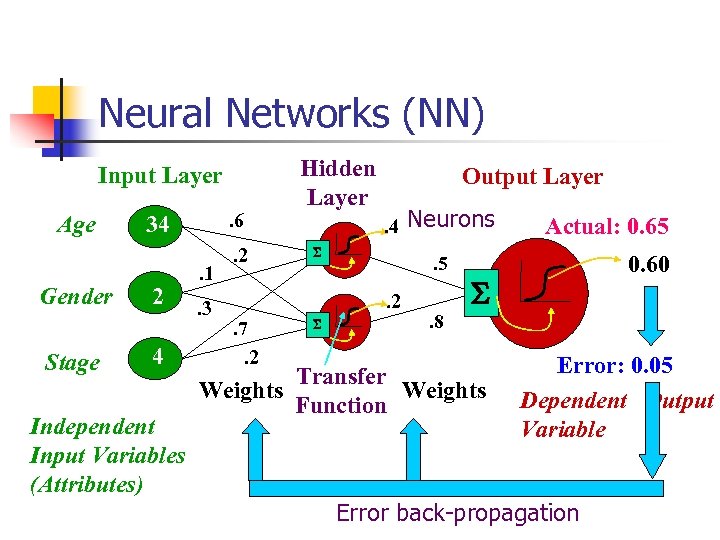

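Neural Networks (NN)

[Figure: feed-forward network. Independent input variables (attributes, e.g. Age, Gender, Stage) enter the input layer and pass through a hidden layer to the output layer, which produces the dependent output variable. Each neuron applies weights, a summation (S), and a transfer function. Example shown: output 0.60 against an actual value of 0.65 gives an error of 0.05, which drives error back-propagation.]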

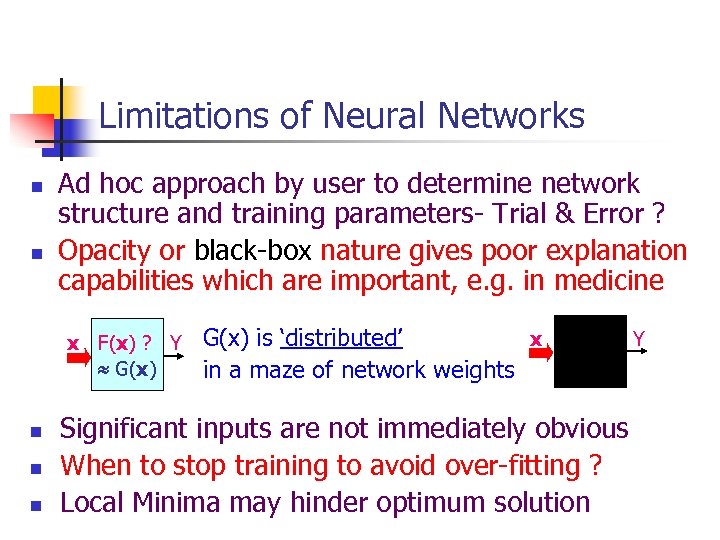

Limitations of Neural Networks

- Ad hoc approach by the user to determine network structure and training parameters (trial and error?)
- Opacity or black-box nature gives poor explanation capabilities, which are important, e.g. in medicine
- G(x) is "distributed" in a maze of network weights
- Significant inputs are not immediately obvious
- When to stop training to avoid over-fitting?
- Local minima may hinder the optimum solution

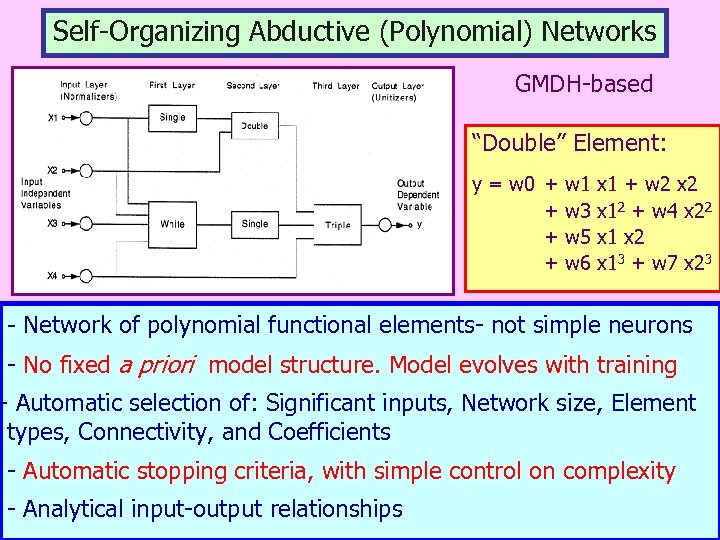

Self-Organizing Abductive (Polynomial) Networks

GMDH-based. "Double" element:

$$y = w_0 + w_1 x_1 + w_2 x_2 + w_3 x_1^2 + w_4 x_2^2 + w_5 x_1 x_2 + w_6 x_1^3 + w_7 x_2^3$$

- Network of polynomial functional elements, not simple neurons
- No fixed a priori model structure; the model evolves with training
- Automatic selection of: significant inputs, network size, element types, connectivity, and coefficients
- Automatic stopping criteria, with simple control on complexity
- Analytical input-output relationships
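As a rough illustration of what one such functional element computes, the sketch below evaluates a two-input cubic polynomial with eight coefficients w0 to w7. The exact ordering of terms is an assumption, and `double_element` is a hypothetical name, not part of any abductive network tool.

```python
def double_element(x1, x2, w):
    """Evaluate a two-input 'double' polynomial element.

    Assumed form (term order is an assumption):
    y = w0 + w1*x1 + w2*x2 + w3*x1^2 + w4*x2^2 + w5*x1*x2 + w6*x1^3 + w7*x2^3
    """
    if len(w) != 8:
        raise ValueError("expected 8 coefficients w0..w7")
    return (w[0]
            + w[1] * x1 + w[2] * x2
            + w[3] * x1 ** 2 + w[4] * x2 ** 2
            + w[5] * x1 * x2
            + w[6] * x1 ** 3 + w[7] * x2 ** 3)

# Elements can be chained: the output of one element feeds the next layer.
hidden = double_element(1.0, 2.0, [0.1, 0.5, -0.2, 0.0, 0.0, 0.3, 0.0, 0.0])
output = double_element(hidden, 0.5, [0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0])
```

Because every element is an explicit polynomial, the trained network can be written out as an analytical input-output relationship.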

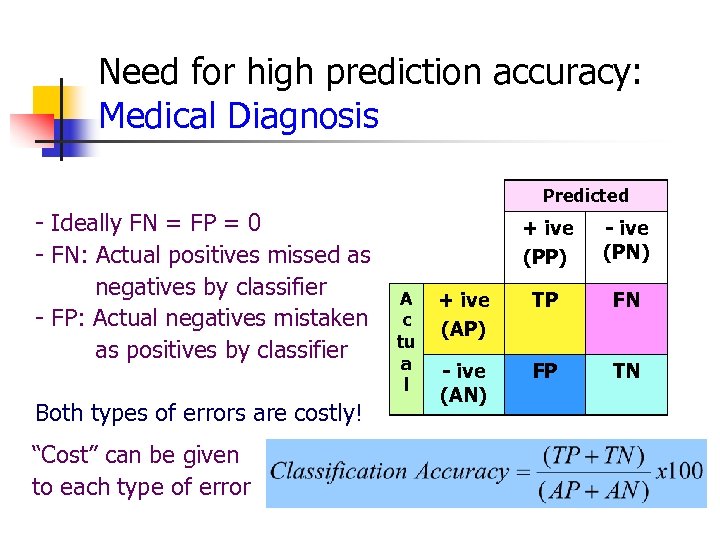

Need for high prediction accuracy: Medical Diagnosis

| | Predicted +ive (PP) | Predicted -ive (PN) |
|---|---|---|
| Actual +ive (AP) | TP | FN |
| Actual -ive (AN) | FP | TN |

- Ideally FN = FP = 0
  - FN: actual positives missed as negatives by the classifier
  - FP: actual negatives mistaken as positives by the classifier
- Both types of errors are costly! A "cost" can be given to each type of error
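The usual diagnostic measures follow directly from the four confusion-matrix counts. The sketch below uses illustrative counts that are not taken from the slides; the function names and the per-error costs are hypothetical.

```python
def diagnostic_metrics(tp, fn, fp, tn):
    """Standard measures derived from a 2x2 confusion matrix (as fractions)."""
    total = tp + fn + fp + tn
    return {
        "sensitivity": tp / (tp + fn),   # actual positives correctly detected
        "specificity": tn / (tn + fp),   # actual negatives correctly rejected
        "ppv": tp / (tp + fp),           # +ive predictive value
        "npv": tn / (tn + fn),           # -ive predictive value
        "accuracy": (tp + tn) / total,
    }

def total_error_cost(fn, fp, cost_fn, cost_fp):
    """Weight each type of error by its own (user-chosen) cost."""
    return fn * cost_fn + fp * cost_fp

# Illustrative counts only (hypothetical, not results from the slides).
metrics = diagnostic_metrics(tp=40, fn=10, fp=5, tn=45)
cost = total_error_cost(fn=10, fp=5, cost_fn=5.0, cost_fp=1.0)
```

Assigning a higher cost to FN than to FP reflects a screening setting where a missed case is worse than a false alarm; the ratio used here is arbitrary.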

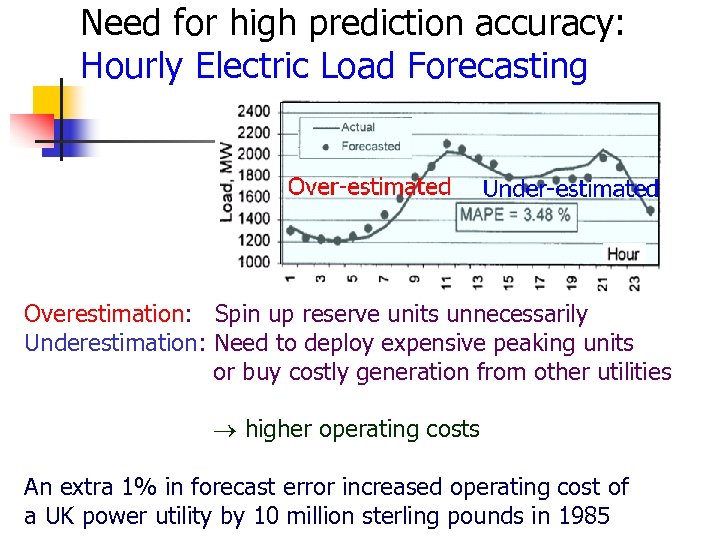

Need for high prediction accuracy: Hourly Electric Load Forecasting

- Overestimation: reserve units are spun up unnecessarily
- Underestimation: need to deploy expensive peaking units or buy costly generation from other utilities
- Either way: higher operating costs
- An extra 1% in forecast error increased the operating cost of a UK power utility by 10 million pounds sterling in 1985

How to ensure good predictive models?

- Use effective predictors
- Use representative datasets for model training and evaluation
- Use large training and evaluation datasets
- Pre-process datasets to remove outliers, errors, etc. and perform normalization, transformations, etc.
- Avoid over-fitting during training (i.e. use parsimonious models)
- Use proven learning algorithms

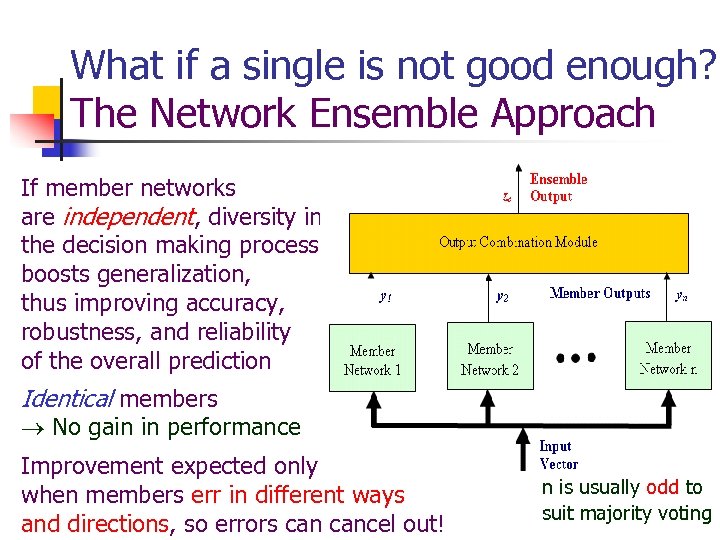

What if a single model is not good enough? The Network Ensemble Approach

- If member networks are independent, diversity in the decision-making process boosts generalization, thus improving the accuracy, robustness, and reliability of the overall prediction
- Identical members → no gain in performance
- Improvement is expected only when members err in different ways and directions, so that errors cancel out!
- The number of members n is usually odd to suit majority voting
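A small worked calculation shows why independence matters. Under the idealized assumption that n members are fully independent and each is correct with the same probability p, the majority vote is correct with the binomial probability computed below. Real members are never fully independent, so this is an upper-end illustration; `majority_vote_accuracy` is a hypothetical helper.

```python
from math import comb

def majority_vote_accuracy(p, n):
    """Probability that the majority of n independent members is correct,
    when each member is correct with probability p (n must be odd)."""
    if n % 2 == 0:
        raise ValueError("n must be odd so that a majority always exists")
    k_min = n // 2 + 1  # smallest number of correct members forming a majority
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k)
               for k in range(k_min, n + 1))

single = majority_vote_accuracy(0.7, 1)   # one member: accuracy stays at p
three = majority_vote_accuracy(0.7, 3)    # 0.7^3 + 3 * 0.7^2 * 0.3
five = majority_vote_accuracy(0.7, 5)
```

With p = 0.7, three independent members reach 0.343 + 0.441 = 0.784. Three identical members would all err on the same cases, so the vote would stay at 0.7.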

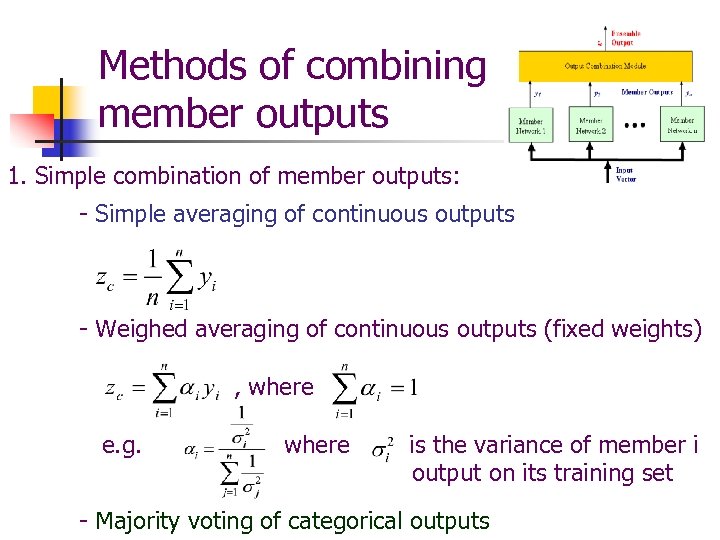

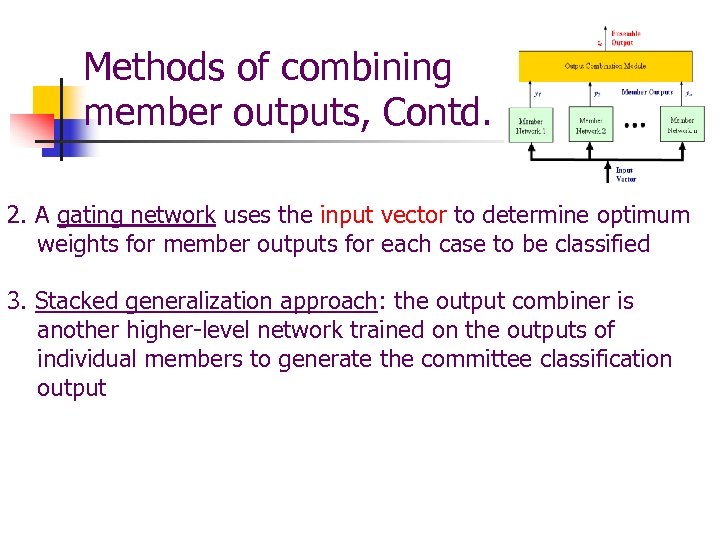

Methods of combining member outputs

1. Simple combination of member outputs:
   - Simple averaging of continuous outputs
   - Weighted averaging of continuous outputs (fixed weights), where e.g. each weight is set from $\sigma_i^2$, the variance of member $i$ output on its training set
   - Majority voting of categorical outputs
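The three simple combiners can be sketched as follows. The weighting rule used here, weights proportional to the inverse of each member's variance and normalized to sum to one, is a common choice but an assumption: the slide's own weight formula did not survive extraction. All function names are hypothetical.

```python
from collections import Counter

def simple_average(outputs):
    """Plain mean of continuous member outputs."""
    return sum(outputs) / len(outputs)

def inverse_variance_weights(variances):
    """Fixed weights proportional to 1/variance, normalized to sum to 1.
    (Assumed rule; the original formula is not recoverable from the slide.)"""
    inverse = [1.0 / v for v in variances]
    total = sum(inverse)
    return [i / total for i in inverse]

def weighted_average(outputs, weights):
    """Weighted mean of continuous member outputs with fixed weights."""
    return sum(w * y for w, y in zip(weights, outputs))

def majority_vote(labels):
    """Most frequent categorical output among the members."""
    return Counter(labels).most_common(1)[0][0]

weights = inverse_variance_weights([1.0, 2.0, 2.0])      # [0.5, 0.25, 0.25]
combined = weighted_average([10.0, 20.0, 30.0], weights)
vote = majority_vote([1, 0, 1])
```

With an odd number of members and a binary class, `majority_vote` never has to break a tie.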

Methods of combining member outputs (contd.)

2. A gating network uses the input vector to determine the optimum weights for member outputs for each case to be classified
3. Stacked generalization approach: the output combiner is another, higher-level network trained on the outputs of individual members to generate the committee classification output
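The idea behind stacked generalization can be shown with a deliberately simple combiner. In this sketch a two-weight linear model, fitted by least squares on the member outputs, stands in for the higher-level network; that substitution, the data, and the name `fit_linear_combiner` are all assumptions made for illustration.

```python
def fit_linear_combiner(m1, m2, y):
    """Fit y ~ a*m1 + b*m2 by least squares (closed-form 2x2 normal equations).

    m1, m2: outputs of two member models on a hold-out set
    y:      known target values for the same cases
    """
    s11 = sum(u * u for u in m1)
    s12 = sum(u * v for u, v in zip(m1, m2))
    s22 = sum(v * v for v in m2)
    t1 = sum(u * t for u, t in zip(m1, y))
    t2 = sum(v * t for v, t in zip(m2, y))
    det = s11 * s22 - s12 * s12
    if det == 0:
        raise ValueError("member outputs are collinear; combiner is not unique")
    a = (t1 * s22 - t2 * s12) / det
    b = (t2 * s11 - t1 * s12) / det
    return a, b

# Made-up member outputs and targets (targets equal 0.5*m1 + 2*m2 exactly).
member1 = [1.0, 2.0, 3.0, 4.0]
member2 = [1.0, 0.0, 1.0, 0.0]
targets = [2.5, 1.0, 3.5, 2.0]

a, b = fit_linear_combiner(member1, member2, targets)
committee_output = a * 2.0 + b * 1.0  # combine two new member outputs
```

The combiner should be trained on cases the members did not see during their own training, otherwise it learns to trust over-fitted members.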

Network Ensembles: The need for diversification

The committee error can be shown to have two components:

- One measuring the average generalization error of individual members
- The other measuring the disagreement among outputs of individual members

Therefore, individual members should ideally be uncorrelated or even negatively correlated (diversity).

An ideal committee would consist of:

- Highly accurate members,
- which disagree among themselves as much as possible

There are possible tradeoffs between these two requirements.
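One standard way to write this two-component split, given here as a sketch and not necessarily the form the slide had in mind, applies to a committee whose output is a weighted average $\bar{f}(x) = \sum_i w_i f_i(x)$ with $w_i \ge 0$ and $\sum_i w_i = 1$, under squared error. The symbols $f_i$, $w_i$, and $y$ are introduced here for illustration.

$$
\big(\bar{f}(x) - y\big)^2 \;=\; \underbrace{\sum_i w_i \big(f_i(x) - y\big)^2}_{\text{average member error}} \;-\; \underbrace{\sum_i w_i \big(f_i(x) - \bar{f}(x)\big)^2}_{\text{disagreement among members}}
$$

The identity follows by expanding $f_i - y = (f_i - \bar{f}) + (\bar{f} - y)$ inside the first sum; the cross term vanishes because $\sum_i w_i (f_i - \bar{f}) = 0$. Since the disagreement term is never negative, the committee error cannot exceed the average member error, and it drops further the more the members disagree at a given level of individual accuracy.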

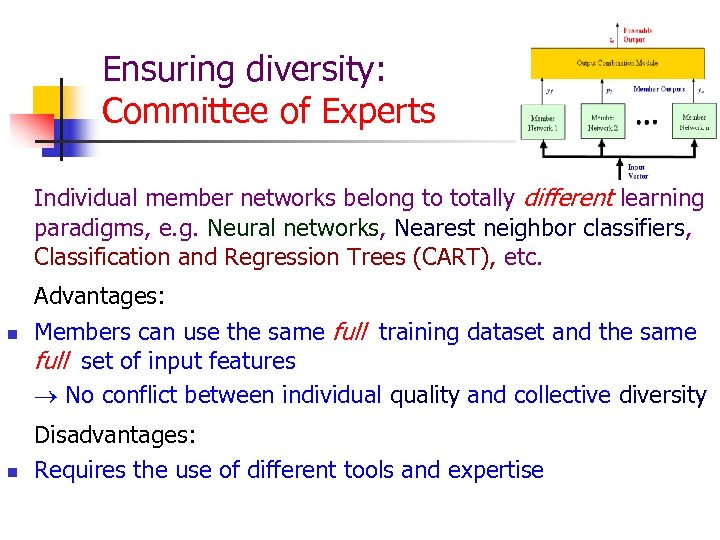

Ensuring diversity: Committee of Experts

Individual member networks belong to totally different learning paradigms, e.g. neural networks, nearest-neighbor classifiers, Classification and Regression Trees (CART), etc.

- Advantages:
  - Members can use the same full training dataset and the same full set of input features
  - No conflict between individual quality and collective diversity
- Disadvantages:
  - Requires the use of different tools and expertise

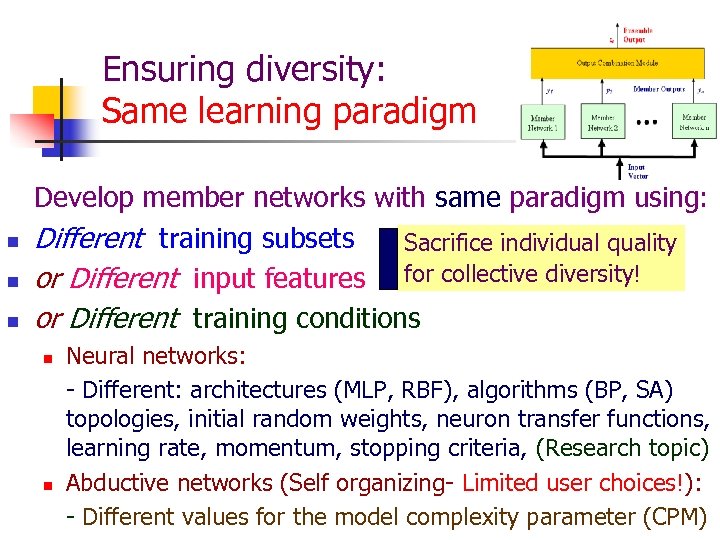

Ensuring diversity: Same learning paradigm

Develop member networks with the same paradigm using:

- Different training subsets, or
- Different input features, or
- Different training conditions

This sacrifices individual quality for collective diversity!

- Neural networks: different architectures (MLP, RBF), algorithms (BP, SA), topologies, initial random weights, neuron transfer functions, learning rate, momentum, stopping criteria (research topic)
- Abductive networks (self-organizing, limited user choices!): different values for the model complexity parameter (CPM)

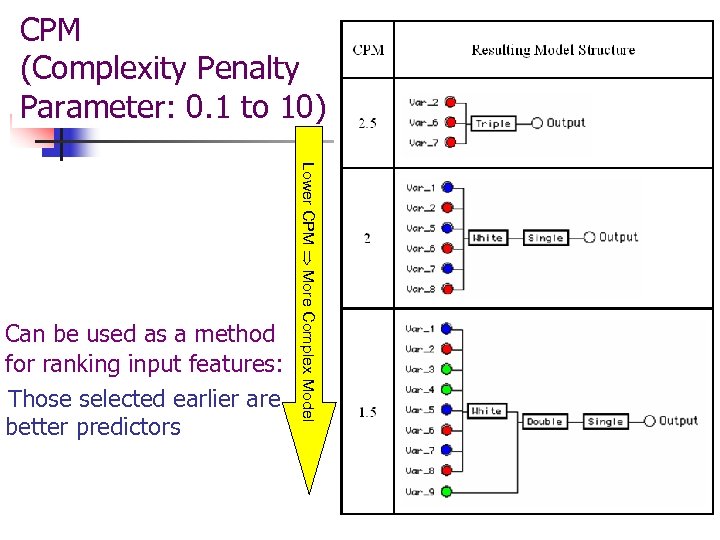

CPM (Complexity Penalty Parameter: 0.1 to 10)

- Lower CPM → more complex model
- Can be used as a method for ranking input features: those selected earlier are better predictors

Some Results: Classification for Medical Diagnosis

1. The Pima Indians Diabetes Dataset from the UCI Machine Learning Repository

- 768 cases (669 for training and 99 for evaluation)
- 8 numerical attributes on physiological measurements and medical test results
- A binary class variable (Diabetic: 1, Not diabetic: 0)
- Percentage of positives in the total set: 34.9%
- Typical classification accuracy reported for the C4.5 decision tree tool: 74.6%

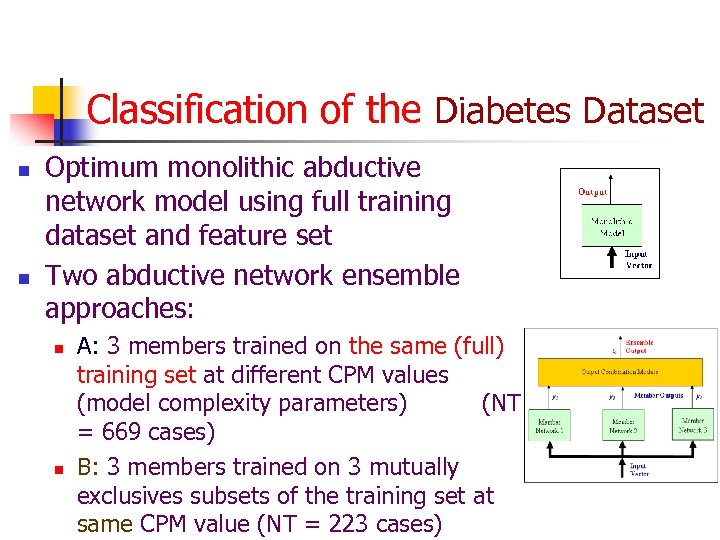

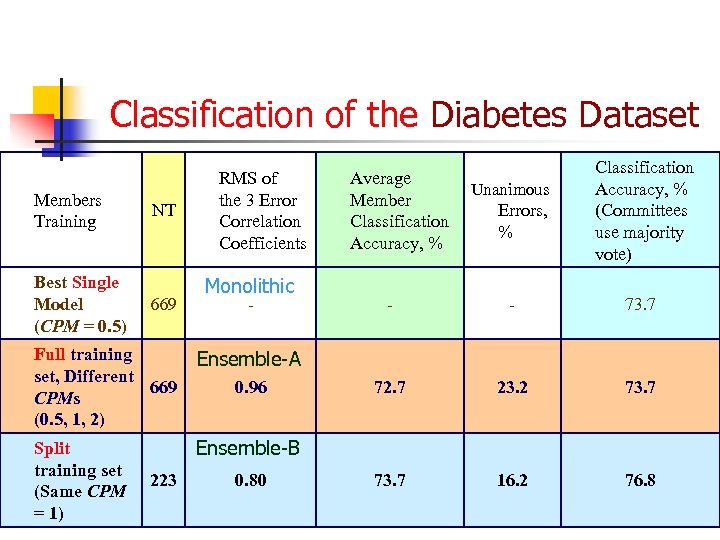

Classification of the Diabetes Dataset

- Optimum monolithic abductive network model using the full training dataset and feature set
- Two abductive network ensemble approaches:
  - A: 3 members trained on the same (full) training set at different CPM values (model complexity parameters) (NT = 669 cases)
  - B: 3 members trained on 3 mutually exclusive subsets of the training set at the same CPM value (NT = 223 cases)

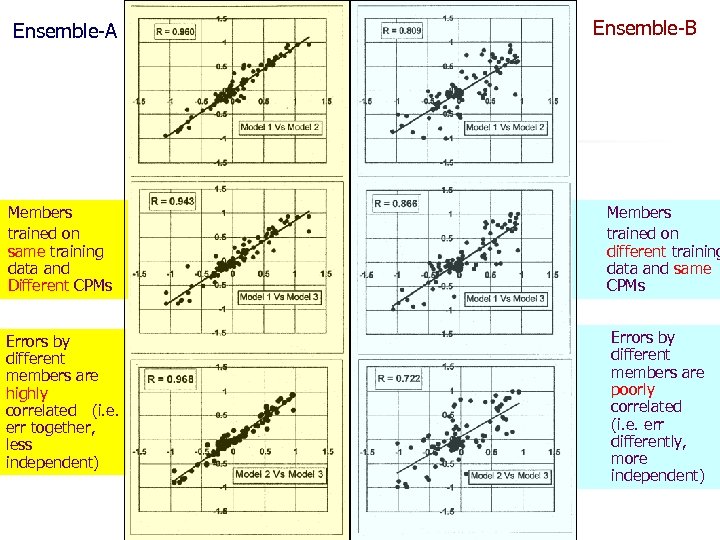

| Ensemble-A | Ensemble-B |
|---|---|
| Members trained on the same training data and different CPMs | Members trained on different training data and the same CPM |
| Errors by different members are highly correlated (i.e. err together, less independent) | Errors by different members are poorly correlated (i.e. err differently, more independent) |
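Two simple diagnostics capture how much members "err together". The sketch below assumes each member's errors are coded as a 0/1 vector over the evaluation cases (1 = misclassified), takes the Pearson correlation for each pair of members, and summarizes the pairs by their root mean square. Whether the slides computed correlations on exactly this coding is an assumption, and the function names are hypothetical.

```python
from itertools import combinations
from math import sqrt

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation undefined for a constant error vector")
    return cov / sqrt(var_a * var_b)

def rms_error_correlation(error_vectors):
    """RMS of the pairwise error correlation coefficients among members."""
    coeffs = [pearson(a, b) for a, b in combinations(error_vectors, 2)]
    return sqrt(sum(c * c for c in coeffs) / len(coeffs))

def unanimous_error_rate(error_vectors):
    """Fraction of cases on which every member is wrong."""
    n_cases = len(error_vectors[0])
    return sum(all(case) for case in zip(*error_vectors)) / n_cases

# Toy error vectors (hypothetical): identical members vs. members that err apart.
same = [[1, 0, 0, 1, 0, 0, 1, 0]] * 3
apart = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]

rms_same, unanimous_same = rms_error_correlation(same), unanimous_error_rate(same)
rms_apart, unanimous_apart = rms_error_correlation(apart), unanimous_error_rate(apart)
```

Cases where all members are wrong can never be rescued by majority voting, which is why a lower unanimous error rate leaves more room for the committee to improve on its members.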

Classification of the Diabetes Dataset

| Model | Members training | NT | RMS of the 3 error correlation coefficients | Unanimous errors, % | Average member classification accuracy, % | Classification accuracy, % (committees use majority vote) |
|---|---|---|---|---|---|---|
| Monolithic | Best single model (CPM = 0.5) | 669 | - | - | | 73.7 |
| Ensemble-A | Full training set, different CPMs (0.5, 1, 2) | 669 | 0.96 | 23.2 | 72.7 | 73.7 |
| Ensemble-B | Split training set (same CPM = 1) | 223 | 0.80 | 16.2 | | 76.8 |

Some Results: Classification for Medical Diagnosis

2. The Cleveland Heart Disease Dataset from the UCI Machine Learning Repository

- 270 cases (190 for training and 80 for evaluation)
- 13 numerical attributes
- A binary class variable: presence (1) or absence (0) of heart disease
- Percentage of positives in the total set: 44.4%
- Typical classification accuracy reported with neural networks: 81.8%

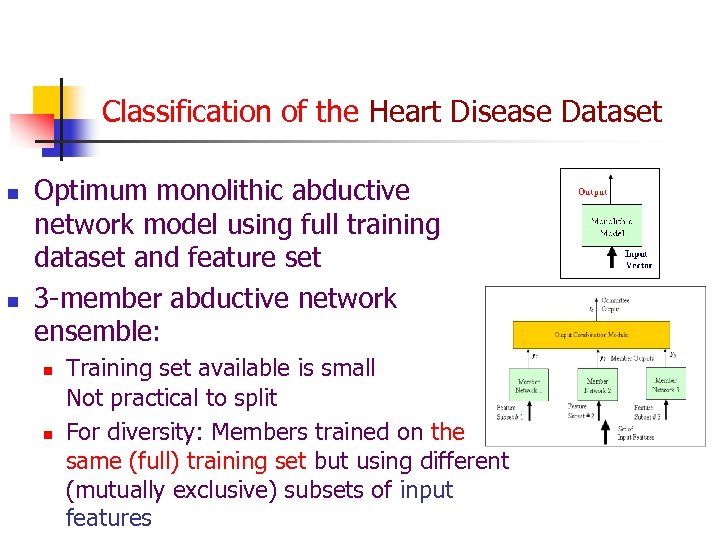

Classification of the Heart Disease Dataset

- Optimum monolithic abductive network model using the full training dataset and feature set
- 3-member abductive network ensemble:
  - The training set available is small, so it is not practical to split
  - For diversity: members are trained on the same (full) training set but using different (mutually exclusive) subsets of input features

Classification of the Heart Disease Dataset

- To ensure good (and uniform) quality of all member networks, good quality input features must be distributed uniformly amongst members
- First, rank the input features based on predictive quality
- Then distribute the features fairly among the 3 members
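One simple way to spread ranked features fairly is a serpentine ("snake draft") assignment, sketched below. The slides do not say which distribution rule was actually used, so this rule, the placeholder feature names `f1` to `f13`, and the function name are assumptions.

```python
def distribute_features(ranked_features, n_members=3):
    """Deal features, ranked best first, to members in serpentine order
    (0, 1, 2, 2, 1, 0, 0, 1, 2, ...) so no member gets all the best ones."""
    members = [[] for _ in range(n_members)]
    for position, feature in enumerate(ranked_features):
        round_number, index = divmod(position, n_members)
        # Reverse the dealing direction on every other round.
        member = index if round_number % 2 == 0 else n_members - 1 - index
        members[member].append(feature)
    return members

# 13 placeholder features, f1 = best predictor (hypothetical names).
ranked = [f"f{i}" for i in range(1, 14)]
subsets = distribute_features(ranked)
# subsets[0] -> ['f1', 'f6', 'f7', 'f12', 'f13']
# subsets[1] -> ['f2', 'f5', 'f8', 'f11']
# subsets[2] -> ['f3', 'f4', 'f9', 'f10']
```

The subsets are mutually exclusive and together cover all features, and each member receives a mix of high-ranked and low-ranked inputs.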

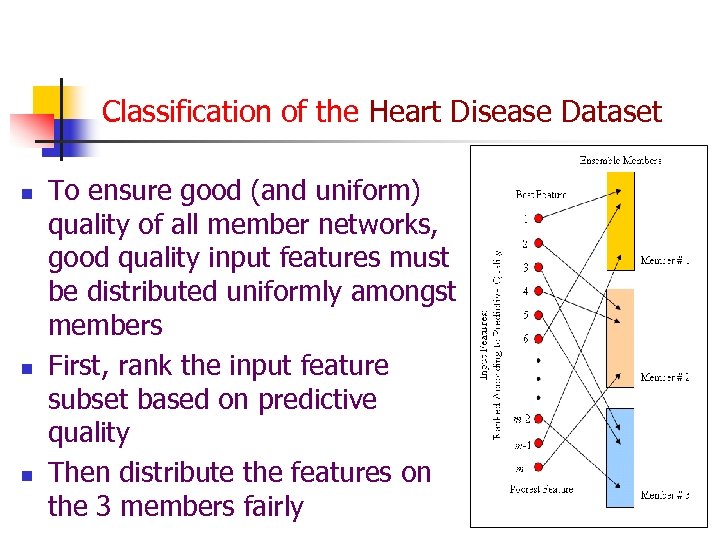

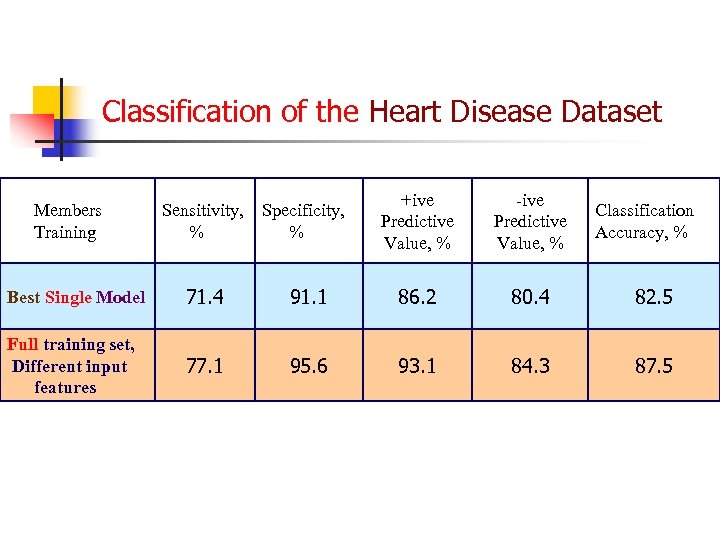

Classification of the Heart Disease Dataset

| Members training | Sensitivity, % | Specificity, % | +ive predictive value, % | -ive predictive value, % | Classification accuracy, % |
|---|---|---|---|---|---|
| Best single model | 71.4 | 91.1 | 86.2 | 80.4 | 82.5 |
| 3-member ensemble (full training set, different input features) | 77.1 | 95.6 | 93.1 | 84.3 | 87.5 |

Some Results: Regression: Electrical Load Forecasting

- Short term: hours to a week
- Medium term: months to a year
- Long term: up to 20 years

Short term (ST) forecasting:

- Hourly load profile
- Daily peak load

ST forecasts are useful for scheduling:

- Generator unit commitment
- Short-term maintenance
- Fuel allocation
- Evaluating interchange transactions in deregulated markets

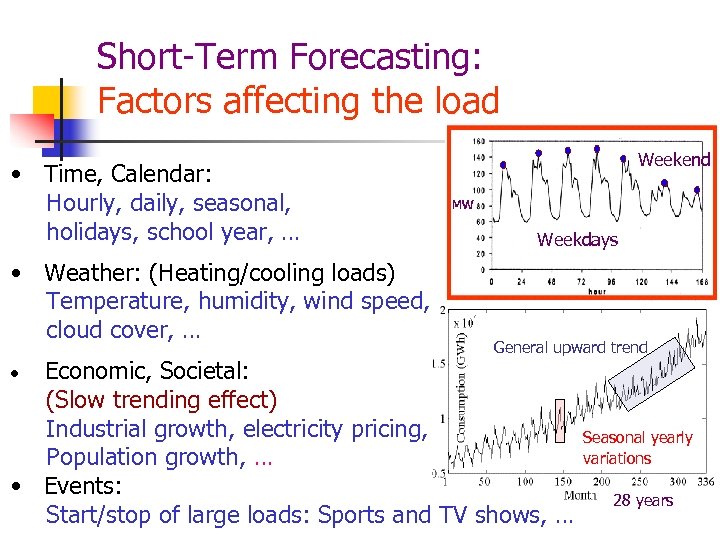

Short-Term Forecasting: Factors affecting the load

- Time, calendar: hourly, daily, seasonal, holidays, school year, …
- Weather (heating/cooling loads): temperature, humidity, wind speed, cloud cover, …
- Economic, societal (slow trending effect): industrial growth, electricity pricing, population growth, …
- Events: start/stop of large loads, sports and TV shows, …

[Figure annotations: weekend vs. weekday loads; seasonal yearly variations; general upward trend over 28 years]

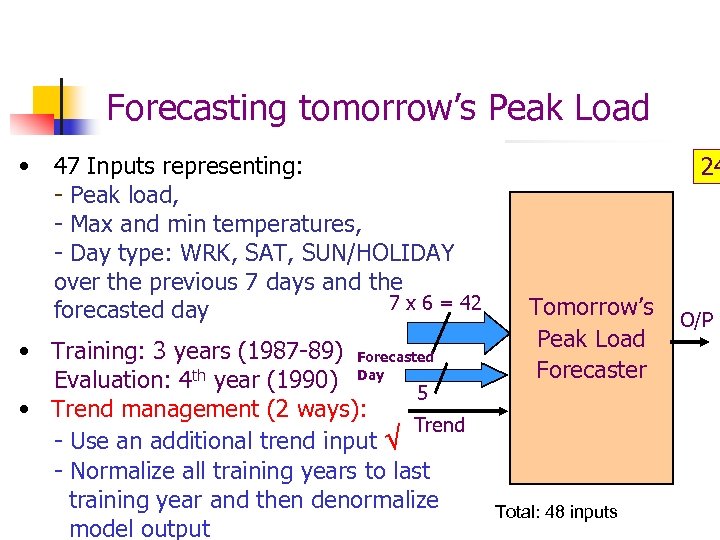

Forecasting tomorrow's Peak Load

- 47 inputs representing peak load, max and min temperatures, and day type (WRK, SAT, SUN/HOLIDAY):
  - over the previous 7 days: 7 x 6 = 42 inputs
  - for the forecasted day: 5 inputs
- Training: 3 years (1987-89). Evaluation: 4th year (1990)
- Trend management (2 ways):
  - Use an additional trend input (total: 48 inputs)
  - Normalize all training years to the last training year and then denormalize the model output
- Output (O/P) of the forecaster: tomorrow's peak load
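The input count can be checked by assembling the vector explicitly. The sketch assumes the day type is coded as three binary flags, which matches the slide's 6 inputs per previous day (1 peak load + 2 temperatures + 3 flags) and 5 for the forecasted day (2 temperatures + 3 flags). The dictionary keys, function names, and sample values are hypothetical.

```python
DAY_TYPES = ("WRK", "SAT", "SUN/HOLIDAY")

def day_type_flags(day_type):
    """Code the day type as three binary flags (assumed encoding)."""
    return [1 if day_type == t else 0 for t in DAY_TYPES]

def build_inputs(previous_days, forecast_day, trend=None):
    """Assemble the forecaster's input vector.

    previous_days: 7 dicts with 'peak', 'tmax', 'tmin', 'day_type'
    forecast_day:  dict with forecast 'tmax', 'tmin', and 'day_type'
    trend:         optional extra trend input
    """
    if len(previous_days) != 7:
        raise ValueError("expected data for exactly 7 previous days")
    vector = []
    for day in previous_days:  # 7 days x 6 inputs = 42
        vector += [day["peak"], day["tmax"], day["tmin"]]
        vector += day_type_flags(day["day_type"])
    # Forecasted day: no peak load (it is the target), so 5 inputs.
    vector += [forecast_day["tmax"], forecast_day["tmin"]]
    vector += day_type_flags(forecast_day["day_type"])
    if trend is not None:  # optional 48th input
        vector.append(trend)
    return vector

# Made-up values for illustration only.
week = [{"peak": 2500.0, "tmax": 30.0, "tmin": 18.0, "day_type": "WRK"}] * 7
tomorrow = {"tmax": 31.0, "tmin": 19.0, "day_type": "SAT"}
without_trend = build_inputs(week, tomorrow)
with_trend = build_inputs(week, tomorrow, trend=1.02)
```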

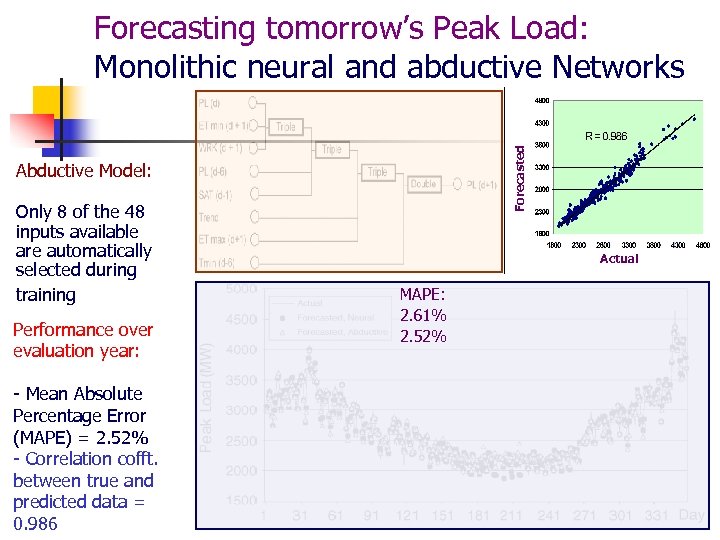

Forecasting tomorrow's Peak Load: Monolithic Neural and Abductive Networks

- Abductive model: only 8 of the 48 available inputs are automatically selected during training
- Performance over the evaluation year:
  - Mean Absolute Percentage Error (MAPE) = 2.52%
  - Correlation coefficient between true and predicted data = 0.986

[Figure: predicted vs. actual peak load (MW) for the two models. MAPE: 2.61% (neural), 2.52% (abductive)]

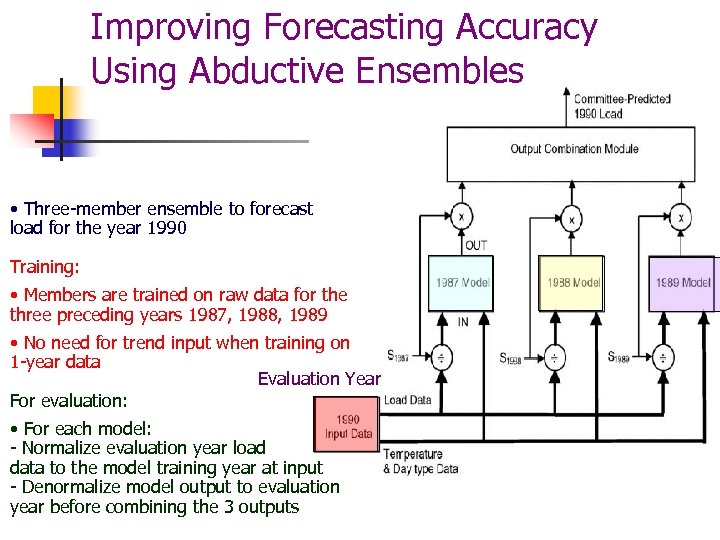

Improving Forecasting Accuracy Using Abductive Ensembles

- Three-member ensemble to forecast load for the evaluation year 1990
- Training:
  - Each member is trained on raw data for one of the three preceding years: 1987, 1988, 1989
  - No need for a trend input when training on 1-year data
- Evaluation, for each model:
  - Normalize the evaluation year load data to the model training year at input
  - Denormalize the model output to the evaluation year before combining the 3 outputs
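The normalize, predict, denormalize, combine sequence can be sketched as below. The slides do not state the normalization rule, so the sketch assumes simple scaling by the ratio of a representative load level (for example, an estimated annual mean) between the evaluation year and each training year, and it combines the three outputs by simple averaging. All names, load levels, and the toy persistence model are hypothetical.

```python
def normalize_load(load_mw, eval_level, train_level):
    """Scale an evaluation-year load down to the level of a training year."""
    return load_mw * train_level / eval_level

def denormalize_load(load_mw, eval_level, train_level):
    """Scale a model output back up to the evaluation-year level."""
    return load_mw * eval_level / train_level

def committee_forecast(members, recent_peaks_mw, eval_level):
    """members: list of (model, train_level) pairs, one per training year.
    Each model sees loads normalized to its own training year; its output
    is denormalized before the outputs are averaged."""
    outputs = []
    for model, train_level in members:
        scaled = [normalize_load(p, eval_level, train_level)
                  for p in recent_peaks_mw]
        outputs.append(denormalize_load(model(scaled), eval_level, train_level))
    return sum(outputs) / len(outputs)

def persistence(peaks):
    """Toy stand-in for a trained member: repeat the latest peak."""
    return peaks[-1]

# Assumed load levels (MW) for the three training years and the evaluation year.
members = [(persistence, 2000.0), (persistence, 2100.0), (persistence, 2200.0)]
forecast = committee_forecast(members, [2400.0, 2500.0], eval_level=2300.0)
```

Only load-type inputs are rescaled here; temperature and day-type inputs would pass through unchanged.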

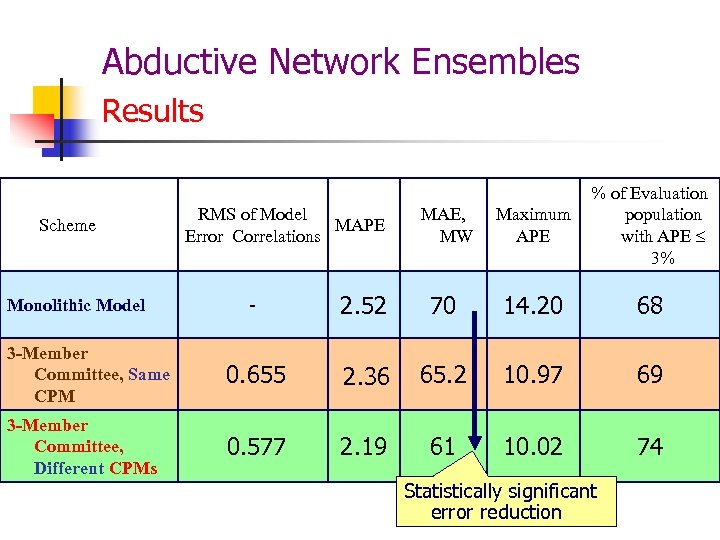

Abductive Network Ensembles Results

| Scheme | RMS of model error correlations | MAPE | MAE, MW | Maximum APE | % of evaluation population with APE ≤ 3% |
|---|---|---|---|---|---|
| Monolithic model | - | 2.52 | 70 | 14.20 | 68 |
| 3-member committee, same CPM | 0.655 | 2.36 | 65.2 | 10.97 | 69 |
| 3-member committee, different CPMs | 0.577 | 2.19 | 61 | 10.02 | 74 |

Statistically significant error reduction
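The error measures in this table have standard definitions, sketched below on made-up numbers. Whether the last column counts cases at exactly 3% is an assumption (the comparison sign is not legible in the source), so the threshold test uses "less than or equal". The function name is hypothetical.

```python
def forecast_errors(actual, predicted, ape_threshold=3.0):
    """Summary error measures for a set of forecasts.

    APE  = absolute percentage error of one forecast
    MAPE = mean of the APEs; MAE = mean absolute error in load units
    """
    n = len(actual)
    abs_errors = [abs(a - p) for a, p in zip(actual, predicted)]
    apes = [100.0 * e / abs(a) for e, a in zip(abs_errors, actual)]
    return {
        "mape": sum(apes) / n,
        "mae": sum(abs_errors) / n,
        "max_ape": max(apes),
        # Share of cases with APE at or below the threshold (assumed "<=").
        "pct_within": 100.0 * sum(ape <= ape_threshold for ape in apes) / n,
    }

# Made-up loads in MW, for illustration only.
actual_mw = [100.0, 200.0, 400.0, 500.0]
predicted_mw = [102.0, 190.0, 400.0, 520.0]
summary = forecast_errors(actual_mw, predicted_mw)
```

For these values the APEs are 2%, 5%, 0%, and 4%, giving a MAPE of 2.75%, an MAE of 8 MW, a maximum APE of 5%, and 50% of cases within 3%.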

Summary

- Network ensembles (committees) can lead to significant performance gains in classification and regression
- Members need to be both accurate and independent
- Independence is more difficult to achieve with abductive networks than with neural networks
- Effective ways to achieve this are: different training datasets, different input features, and different model complexity (CPMs)
- The technique was demonstrated on medical data (classification) and electrical load forecasting (regression)
