- Number of slides: 76

Network Developments and Network Monitoring in Internet2 • Eric Boyd, Director of Performance Architecture and Technologies, Internet2

Overview • Internet2 Network • Performance Middleware: Supporting Network-Based Science • Internet2 Network Observatory

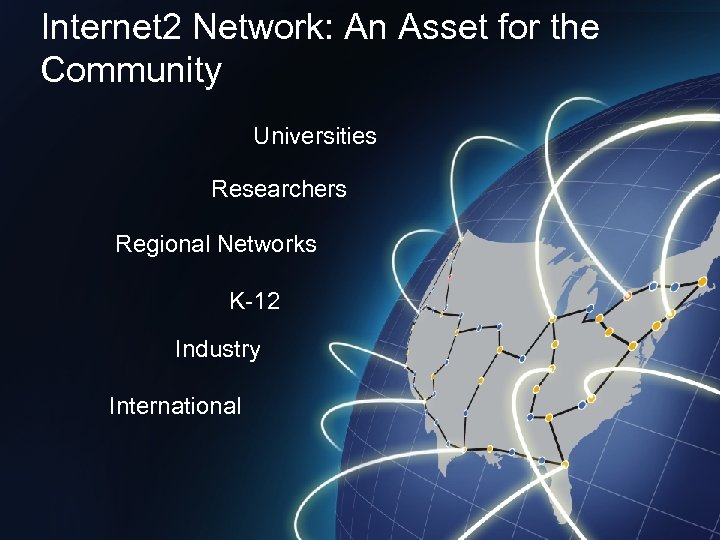

Internet2 Network: An Asset for the Community • Universities • Researchers • Regional Networks • K-12 • Industry • International

Internet2 Network • Hybrid optical and IP network • Dynamic and static wavelength services • Fiber and equipment dedicated to Internet2; Level 3 maintains network and service level • Platform supports production services and experimental projects

Internet2 Network - Layer 1 (map legend: Internet2 Network Optical Switching Node; Level 3 Regen Site; Internet2 Redundant Drop/Add Site; ESnet Drop/Add Site)

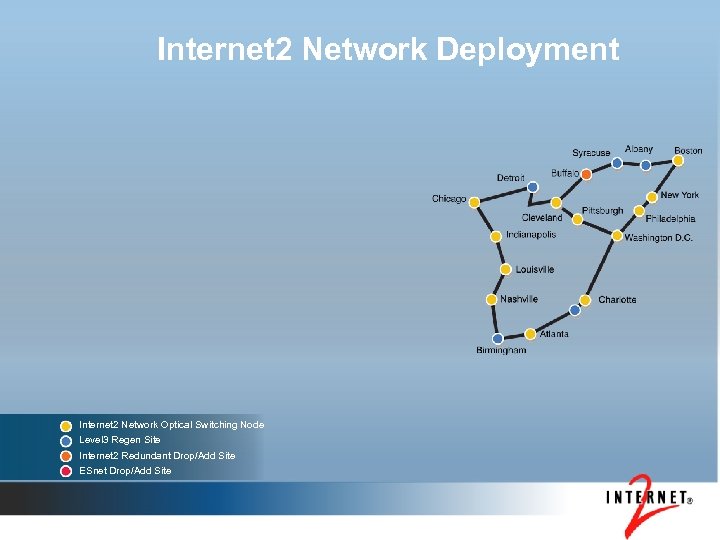

Internet2 Network Deployment (map legend: Internet2 Network Optical Switching Node; Level 3 Regen Site; Internet2 Redundant Drop/Add Site; ESnet Drop/Add Site)

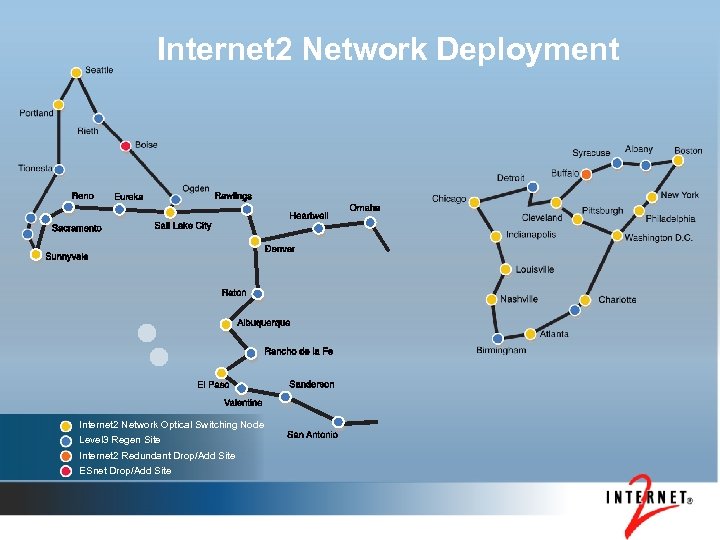

Internet2 Network Deployment (map legend: Internet2 Network Optical Switching Node; Level 3 Regen Site; Internet2 Redundant Drop/Add Site; ESnet Drop/Add Site)

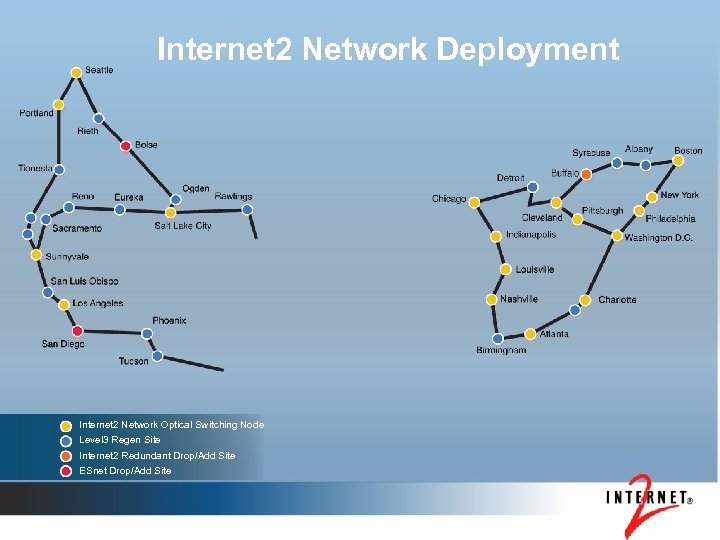

Internet2 Network Deployment (map legend: Internet2 Network Optical Switching Node; Level 3 Regen Site; Internet2 Redundant Drop/Add Site; ESnet Drop/Add Site)

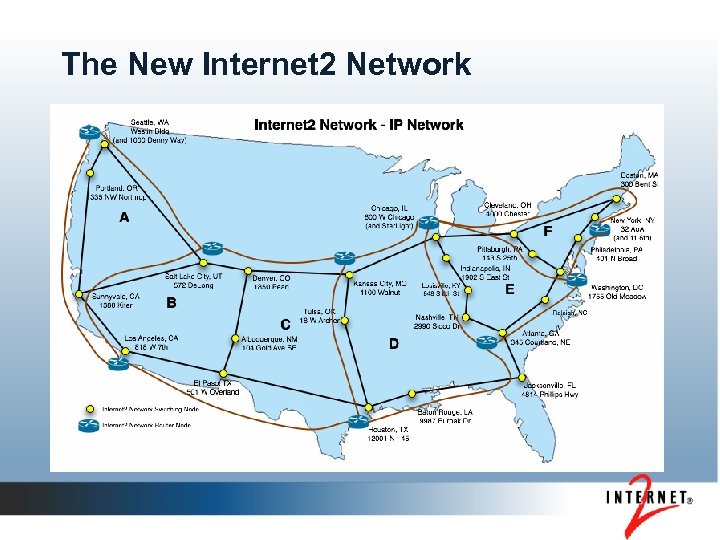

The New Internet2 Network

A New Wrinkle • Internet2 is exploring a merger with National Lambda Rail (NLR) • Goal: Consolidate national higher education and research networking organizations • Technical team is exploring what the merged technical infrastructure will look like

Overview • Internet2 Network • Performance Middleware: Supporting Network-Based Science • Internet2 Network Observatory

Network-Based Science • Science is a global community • Networks link scientists • Collaborative research occurs across network boundaries • For the scientist, the value of the network is the achieved network performance • Scientists should not have to focus on the network; good end-to-end performance should be a given

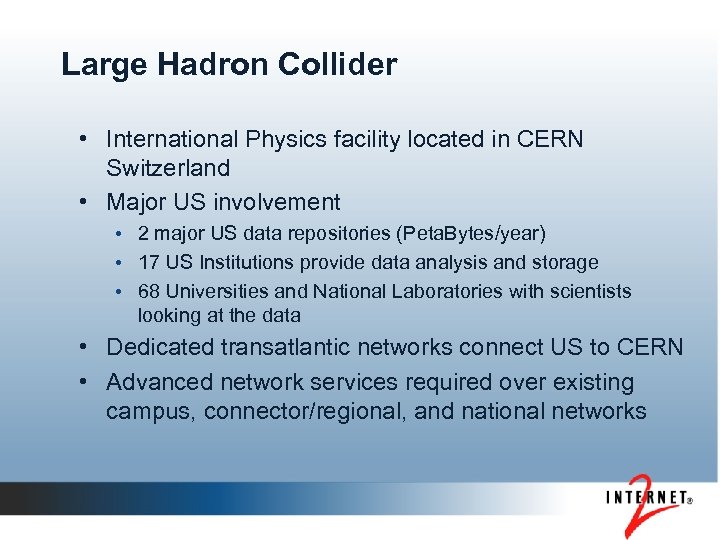

Large Hadron Collider • International physics facility located at CERN, Switzerland • Major US involvement • 2 major US data repositories (petabytes/year) • 17 US institutions provide data analysis and storage • 68 universities and national laboratories with scientists looking at the data • Dedicated transatlantic networks connect US to CERN • Advanced network services required over existing campus, connector/regional, and national networks

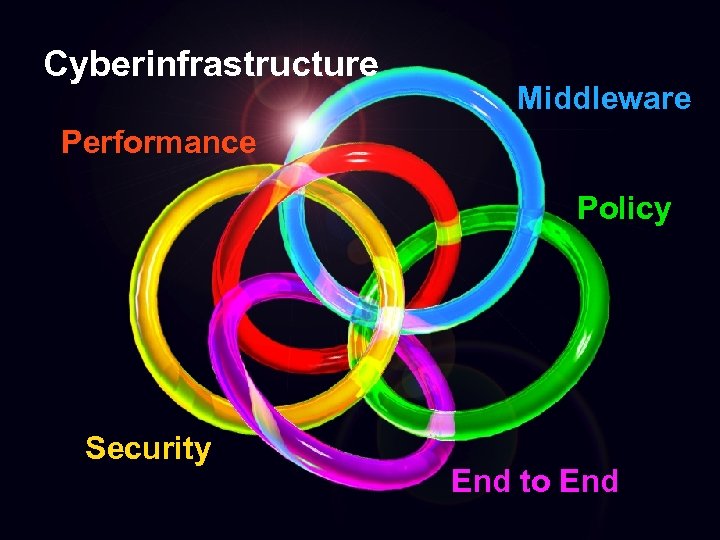

Cyberinfrastructure Middleware Performance Policy Security End to End

Achieving Good End-to-End Performance • Internet2 consists of: • Campuses • Corporations • Regional networks • Internet2 backbone network • Our members care about connecting with: • Other members • Government labs & networks • International partners • The Internet2 community cares about making all of this work

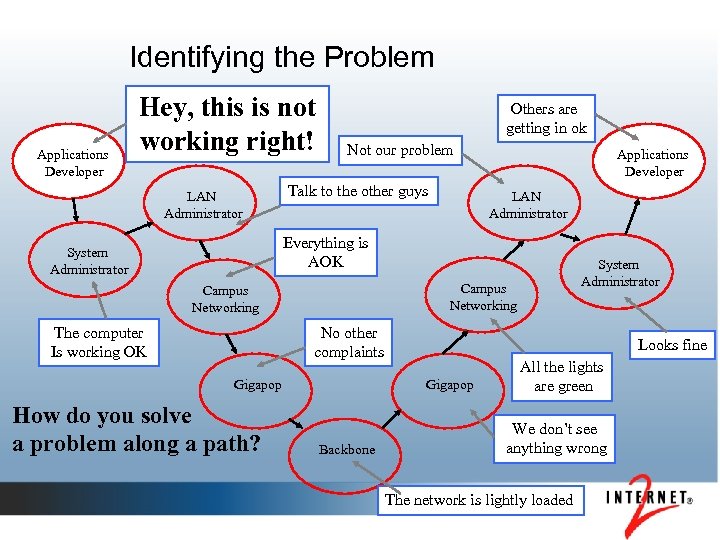

Identifying the Problem • How do you solve a problem along a path? • Roles along the path: Applications Developer, System Administrator, LAN Administrator, Campus Networking, Gigapop, Backbone • What each one says: “Hey, this is not working right!” • “Others are getting in ok” • “Not our problem” • “Talk to the other guys” • “Everything is AOK” • “The computer is working OK” • “No other complaints” • “Looks fine” • “All the lights are green” • “We don’t see anything wrong” • “The network is lightly loaded”

Status Quo • Performance is excellent across backbone networks • Performance is a problem end-to-end • Problems are concentrated towards the edge and in network transitions • We need to: • Diagnose: Understand limits of performance • Address: Work with members and application communities to address those performance issues

Vision: Performance Information is … • Available • People can find it (Discovery) • “Community of trust” allows access across administrative domain boundaries (AA) • Ubiquitous • Widely deployed (Paths of interest covered) • Reliable (Consistently configured correctly) • Valuable • Actionable (Analysis suggests course of action) • Automatable (Applications act on data)

Goal: No more mystery … • Increase network awareness • Set user expectations accurately • Reduce diagnostic costs • Performance problems noticed early • Performance problems addressed efficiently • Network engineers can see & act outside their turf • Transform application design • Incorporate network intuition into application behavior

Strategy: Build & Empower the Community Decouple the Problem Space: • Analysis and Visualization • Performance Data Sharing • Performance Data Generation Grow the Footprint: • Clean APIs and protocols between each layer • Widespread deployment of measurement infrastructure • Widespread deployment of common performance measurement tools

Tactics: Leverage position • Internet2 is positioned to help provide diagnostic information for the “US backbone” portion of the problem • Create *some* diagnostic tools (BWCTL, NDT, OWAMP) • Make network data as public as is reasonable • Work on efforts to make performance data more widely available (perfSONAR) • Contribute to ‘base’ perfSONAR development (partnership with ESnet, Europe, and Brazil) • Contribute to standards for performance information sharing (Open Grid Forum Network Measurement Working Group) • Integrate ‘our’ diagnostic tools as ‘good’ examples of perfSONAR services

From the scientist’s perspective • On behalf of the scientist, a network engineer or application can easily/automatically: • Discover additional monitoring resources • Authenticate locally • Be authorized to use remote network resources to a limited extent • Acquire performance monitoring data from remote sites via a standard protocol • Innovate where needed • Customize the analysis and visualization

Internet2 End-to-End Performance Initiative (E2Epi) • Includes: • Internet2 staff • Internet2 members • Federal partners • International partners • Building: • Performance monitoring tools • Performance middleware frameworks • Performance improvement tools

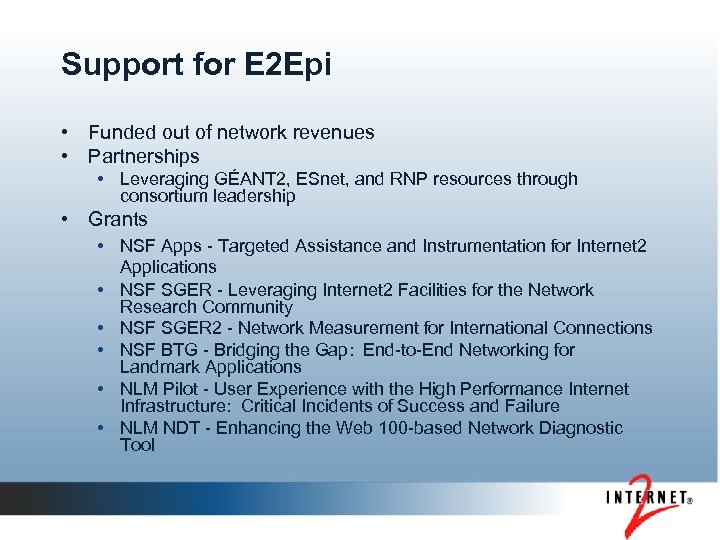

Support for E2Epi • Funded out of network revenues • Partnerships • Leveraging GÉANT2, ESnet, and RNP resources through consortium leadership • Grants • NSF Apps - Targeted Assistance and Instrumentation for Internet2 Applications • NSF SGER - Leveraging Internet2 Facilities for the Network Research Community • NSF SGER 2 - Network Measurement for International Connections • NSF BTG - Bridging the Gap: End-to-End Networking for Landmark Applications • NLM Pilot - User Experience with the High Performance Internet Infrastructure: Critical Incidents of Success and Failure • NLM NDT - Enhancing the Web100-based Network Diagnostic Tool

Current Activities • Analysis/Diagnostic tools • Performance tools • Software distributions to enable partner network organizations to participate • Google Summer of Code • New network deployment of measurement infrastructure on new observatory

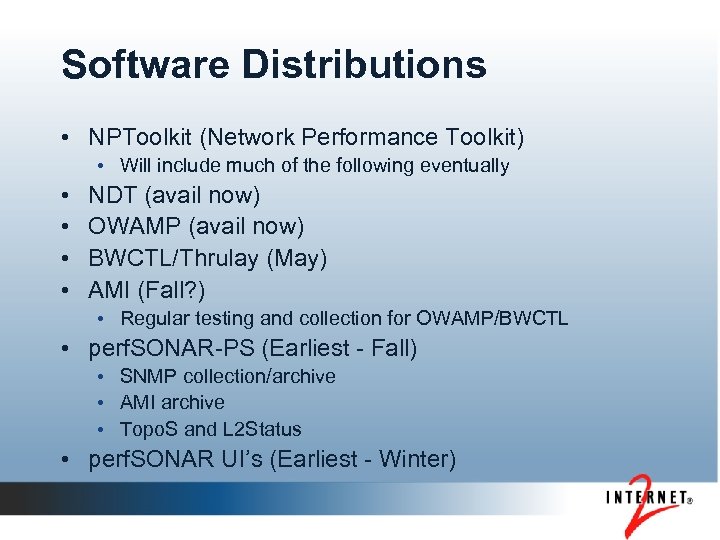

Software Distributions • NPToolkit (Network Performance Toolkit) • Will eventually include much of the following: • NDT (available now) • OWAMP (available now) • BWCTL/Thrulay (May) • AMI (Fall?) • Regular testing and collection for OWAMP/BWCTL • perfSONAR-PS (Earliest - Fall) • SNMP collection/archive • AMI archive • TopoS and L2 Status • perfSONAR UIs (Earliest - Winter)

Google Summer of Code • 5 Projects • NDT enhancements • Phoebus protocol enhancements • Chrolog (user-space timestamp) • OWAMP (Java Client) • perfSONAR/cacti interface

OWAMP (3.0c) • One-way latencies • Full support of RFC 4656 • Deployment Status • Abilene: all remaining nms4 hosts • New network: newy and chic (nms-rlat) • Software available at: http://e2epi.internet2.edu/owamp/
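As a rough illustration of what a one-way measurement yields, the sketch below summarizes delay, loss, and a simple jitter figure from probe timestamps. It is not OWAMP code: the `Probe` layout, the function name `one_way_stats`, and the jitter definition (mean absolute change between consecutive delays) are assumptions made for the example, and the timestamps are invented. One-way delay is only meaningful when sender and receiver clocks are synchronized.

```python
from statistics import mean
from typing import Optional, Sequence, Tuple

# Hypothetical probe record: (send_time_s, recv_time_s).
# recv_time_s is None when the packet never arrived.
Probe = Tuple[float, Optional[float]]


def one_way_stats(probes: Sequence[Probe]) -> dict:
    """Summarize one-way delay, loss, and a simple jitter estimate."""
    # Delay is receive time minus send time, for packets that arrived.
    delays = [recv - send for send, recv in probes if recv is not None]
    lost = len(probes) - len(delays)
    # Assumed jitter definition: mean absolute change between consecutive delays.
    diffs = [abs(b - a) for a, b in zip(delays, delays[1:])]
    return {
        "min_delay_s": min(delays) if delays else None,
        "mean_delay_s": mean(delays) if delays else None,
        "jitter_s": mean(diffs) if diffs else 0.0,
        "loss_fraction": lost / len(probes) if probes else 0.0,
    }


# Invented sample: four probes, the third one lost.
sample = [(0.0, 0.020), (0.1, 0.122), (0.2, None), (0.3, 0.321)]
stats = one_way_stats(sample)
print(stats)
```

Reporting the minimum delay alongside the mean is a common choice because the minimum approximates the propagation delay of the path, while the mean also reflects queuing.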

BWCTL (1.2b) • Throughput Test Controller • Pending software release • Additional throughput tools • Iperf/thrulay/nuttcp • More tolerant of questionable clocks • Deployment Status • Abilene: open TCP testing • New network - awaiting new software release

What is perfSONAR? • Performance Middleware • perfSONAR is an international consortium in which Internet2 and GÉANT2 are founders and leading participants • perfSONAR is a set of protocol standards for interoperability between measurement and monitoring systems • perfSONAR is a set of open source web services that can be mixed-and-matched and extended to create a performance monitoring framework

perfSONAR Design Goals • Standards-based • Modular • Decentralized • Locally controlled • Open Source • Extensible • Applicable to multiple generations of network monitoring systems • Grows “beyond our control” • Customized for individual science disciplines

perfSONAR Integrates • Network measurement tools • Network measurement archives • Discovery • Authentication and authorization • Data manipulation • Resource protection • Topology

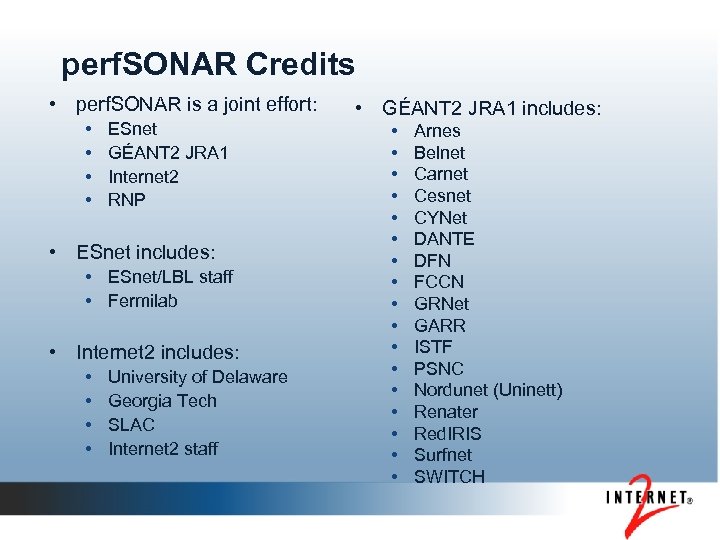

perfSONAR Credits • perfSONAR is a joint effort: • ESnet • GÉANT2 JRA1 • Internet2 • RNP • ESnet includes: • ESnet/LBL staff • Fermilab • Internet2 includes: • University of Delaware • Georgia Tech • SLAC • Internet2 staff • GÉANT2 JRA1 includes: • Arnes • Belnet • Carnet • Cesnet • CYNet • DANTE • DFN • FCCN • GRNet • GARR • ISTF • PSNC • Nordunet (Uninett) • Renater • RedIRIS • Surfnet • SWITCH

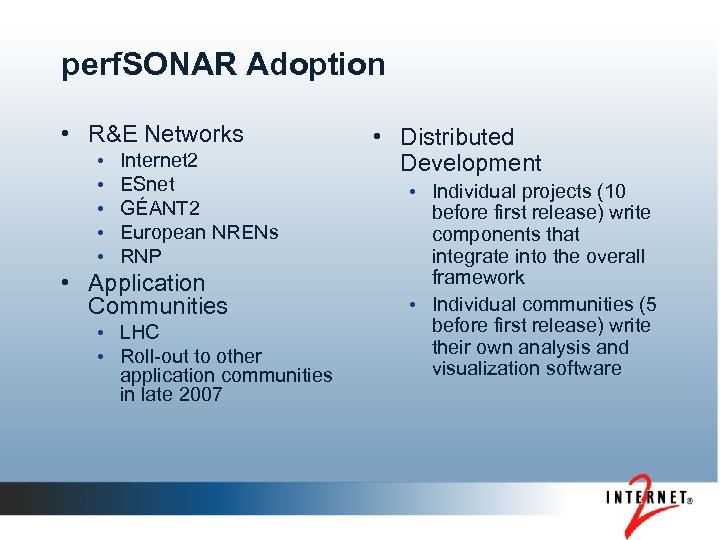

perfSONAR Adoption • R&E Networks • Internet2 • ESnet • GÉANT2 • European NRENs • RNP • Application Communities • LHC • Roll-out to other application communities in late 2007 • Distributed Development • Individual projects (10 before first release) write components that integrate into the overall framework • Individual communities (5 before first release) write their own analysis and visualization software

perfSONAR-PS* • perfSONAR (Perl Services) • Why? • Adoption of Java services is difficult • Many network administrators don’t do Java, but are fluent in Perl • Services are more directly targeted at the data available from the Internet2 observatory deployment

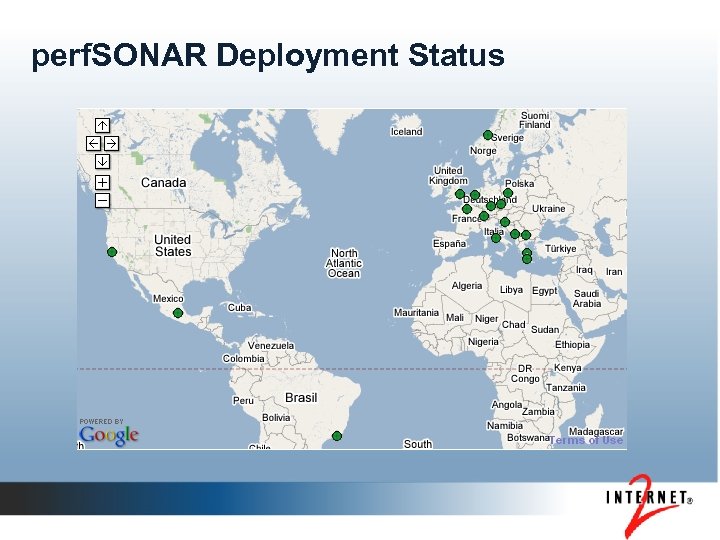

perfSONAR Deployment Status

• Demo …

Overview • Internet2 Network • Performance Middleware: Supporting Network-Based Science • Internet2 Network Observatory

History and Motivation • Original Abilene racks included measurement devices • Included a single (somewhat large) PC • Early OWAMP, Surveyor measurements • Optical splitters at some locations • Motivation was primarily operations, monitoring, and management - understanding the network and how well it operates • Data was collected and maintained whenever possible • Primarily a NOC function • Available to other network operators to understand the network • It became apparent that the datasets were valuable as a network research tool

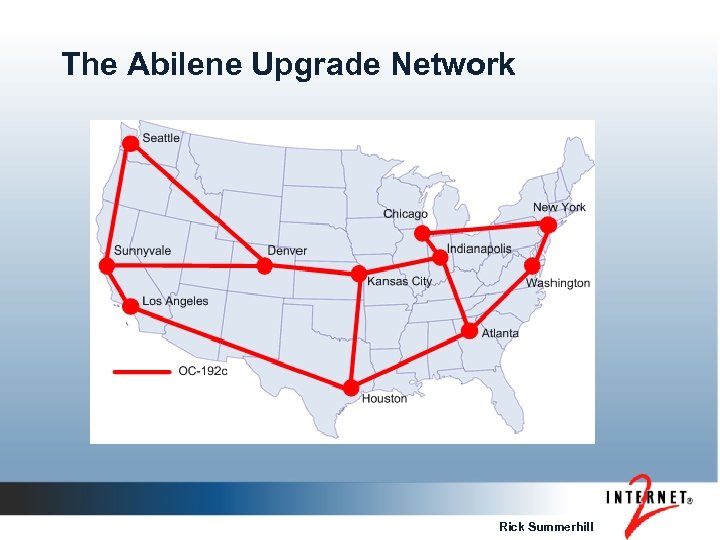

The Abilene Upgrade Network Rick Summerhill

Upgrade of the Abilene Observatory • An important decision was made during the Abilene upgrade process (Juniper T-640 routers and OC-192c) • Two racks, one of which was dedicated to measurement • Potential for research community to collocate equipment • Two components to the Observatory • Collocation - network research groups are able to collocate equipment in the Abilene router nodes • Measurement - data is collected by the NOC, the Ohio ITEC, and Internet2, and made available to the research community

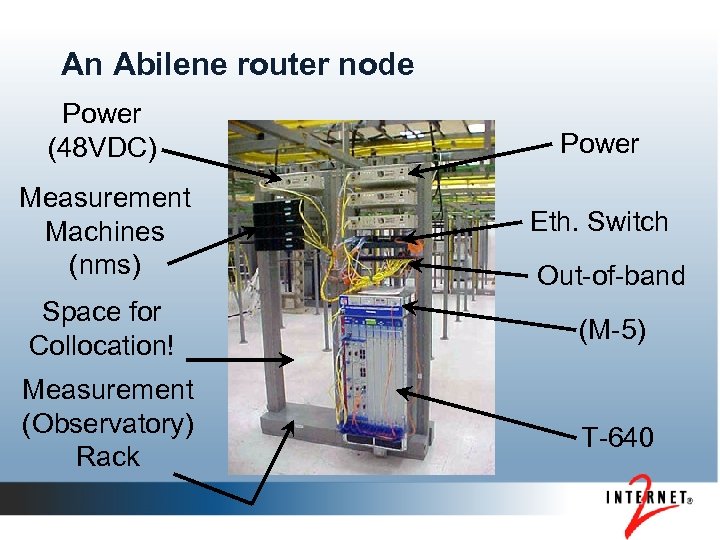

An Abilene router node Power (48 VDC) Power Measurement Machines (nms) Eth. Switch Space for Collocation! Measurement (Observatory) Rack Out-of-band (M-5) T-640

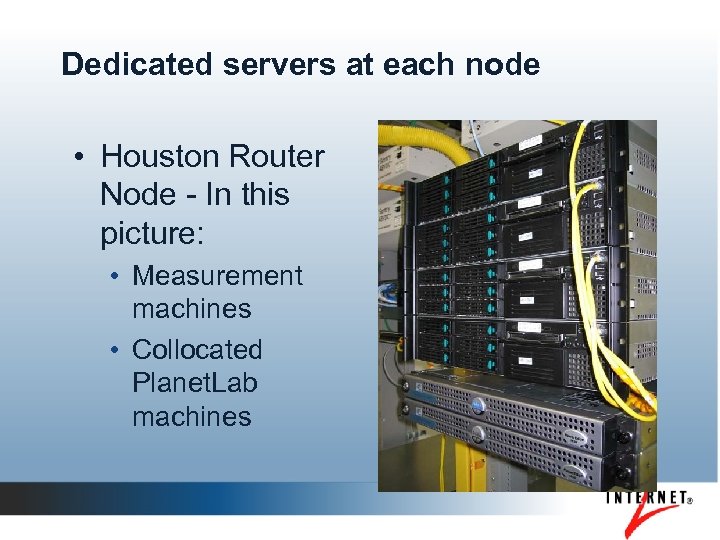

Dedicated servers at each node • Houston Router Node - In this picture: • Measurement machines • Collocated PlanetLab machines

Example Research Projects • Collocation projects • PlanetLab – Nodes installed in all Abilene Router Nodes. See http://www.planet-lab.org • The Passive Measurement and Analysis Project (PMA) – The Router Clamp. See http://pma.nlanr.net • Projects using collected datasets. See http://abilene.internet2.edu/observatory/researchprojects.html • “Modular Strategies for Internetwork Monitoring” • “Algorithms for Network Capacity Planning and Optimal Routing Based on Time-Varying Traffic Matrices” • “Spatio-Temporal Network Analysis” • “Assessing the Presence and Incidence of Alpha Flows in Backbone Networks”

The New Internet2 Network • Expanded Layer 1, 2 and 3 Facilities • Includes SONET and Wave equipment • Includes Ethernet Services • Greater IP Services • Requires a new type of Observatory

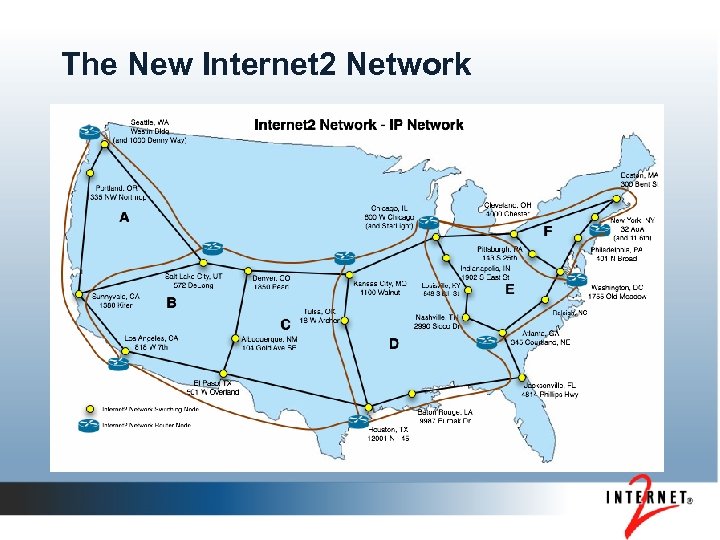

The New Internet2 Network

The New Internet2 Observatory • Seek input from the community, both engineers and network researchers • Current thinking is to support three types of services • Measurement (as before) • Collocation (as before) • Experimental servers to support specific projects, for example Phoebus (this is new) • Support different types of nodes: • Optical Nodes • Router Nodes

The New York Node - First Installment

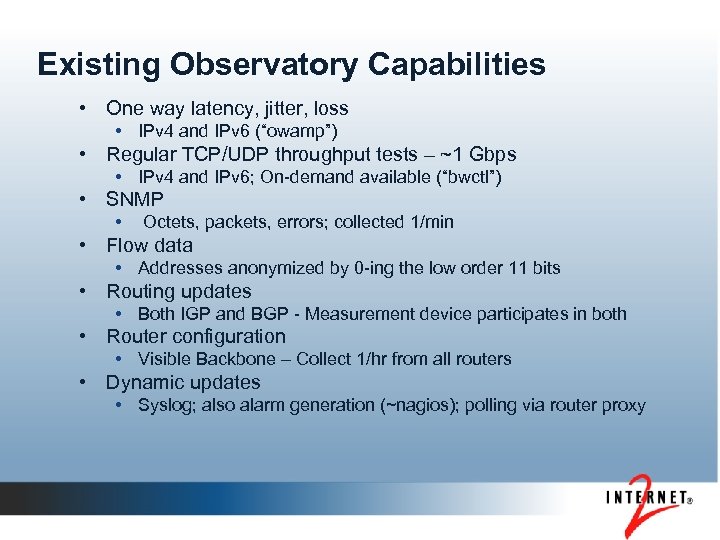

Existing Observatory Capabilities • One-way latency, jitter, loss • IPv4 and IPv6 (“owamp”) • Regular TCP/UDP throughput tests – ~1 Gbps • IPv4 and IPv6; on-demand available (“bwctl”) • SNMP • Octets, packets, errors; collected 1/min • Flow data • Addresses anonymized by zeroing the low-order 11 bits • Routing updates • Both IGP and BGP - Measurement device participates in both • Router configuration • Visible Backbone – Collect 1/hr from all routers • Dynamic updates • Syslog; also alarm generation (~nagios); polling via router proxy
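The flow-data anonymization mentioned above (clearing the low-order 11 bits of each address) can be sketched as follows. This is a minimal illustration for IPv4 only, not the Observatory's actual collector code; the function name `anonymize_ipv4` is hypothetical, and the slides do not say how IPv6 addresses are treated.

```python
import ipaddress

LOW_BITS = 11  # number of low-order bits cleared, as stated on the slide


def anonymize_ipv4(address: str, low_bits: int = LOW_BITS) -> str:
    """Return the IPv4 address with its low-order bits set to zero."""
    value = int(ipaddress.IPv4Address(address))
    # Build a 32-bit mask whose lowest `low_bits` bits are zero.
    mask = (0xFFFFFFFF >> low_bits) << low_bits
    return str(ipaddress.IPv4Address(value & mask))


print(anonymize_ipv4("192.0.2.77"))    # 192.0.0.0
print(anonymize_ipv4("10.1.255.255"))  # 10.1.248.0
```

Clearing 11 bits leaves the top 21 bits intact, so every address maps to the start of its enclosing /21 block: individual hosts are hidden while coarse, network-level structure remains usable for analysis.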

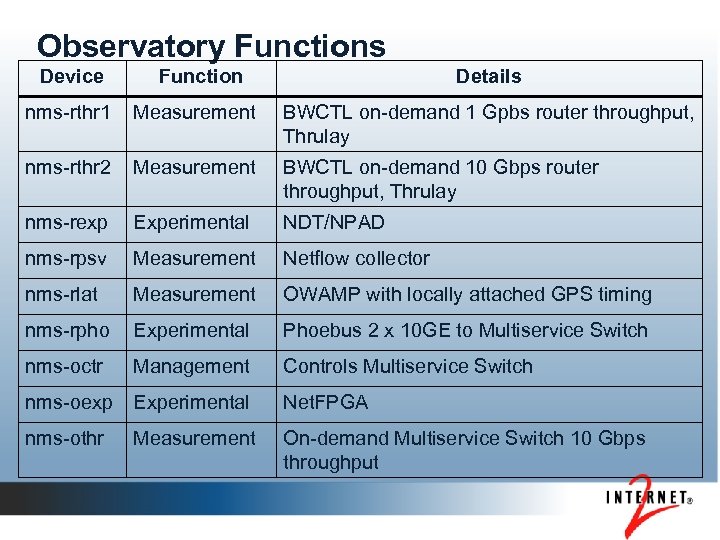

Observatory Functions

| Device | Function | Details |
| --- | --- | --- |
| nms-rthr1 | Measurement | BWCTL on-demand 1 Gbps router throughput, Thrulay |
| nms-rthr2 | Measurement | BWCTL on-demand 10 Gbps router throughput, Thrulay |
| nms-rexp | Experimental | NDT/NPAD |
| nms-rpsv | Measurement | Netflow collector |
| nms-rlat | Measurement | OWAMP with locally attached GPS timing |
| nms-rpho | Experimental | Phoebus 2 x 10 GE to Multiservice Switch |
| nms-octr | Management | Controls Multiservice Switch |
| nms-oexp | Experimental | NetFPGA |
| nms-othr | Measurement | On-demand Multiservice Switch 10 Gbps throughput |

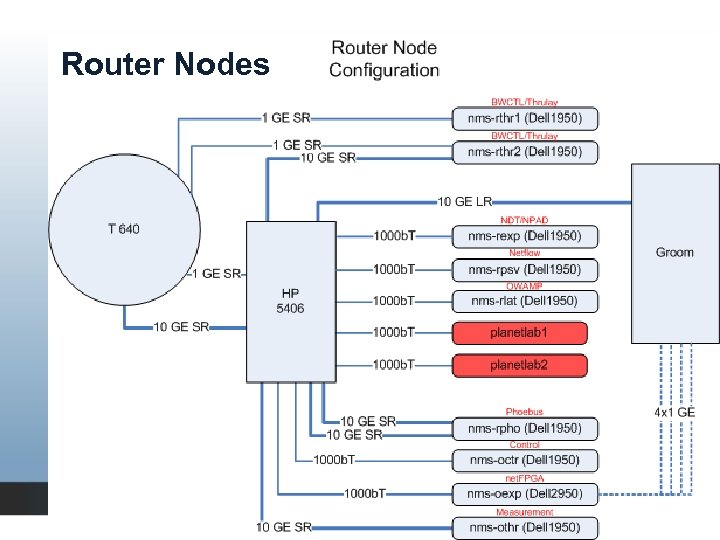

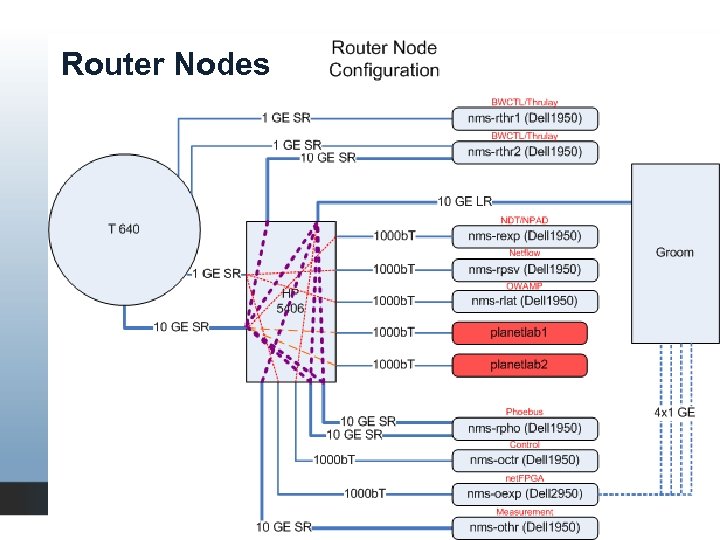

Router Nodes

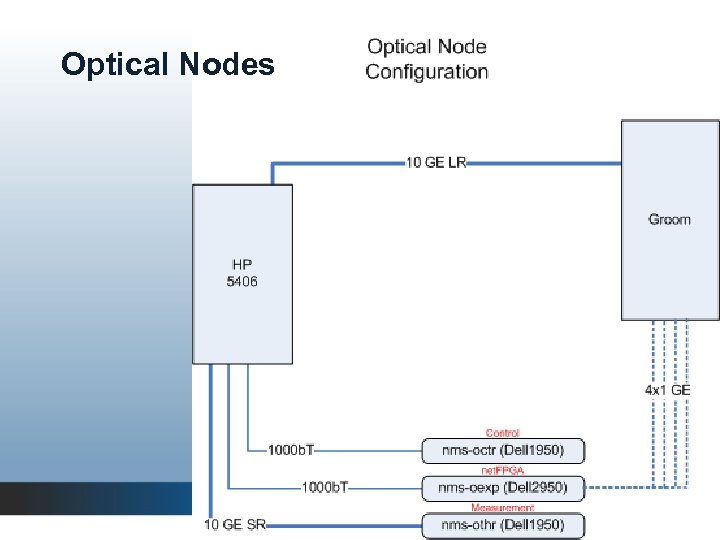

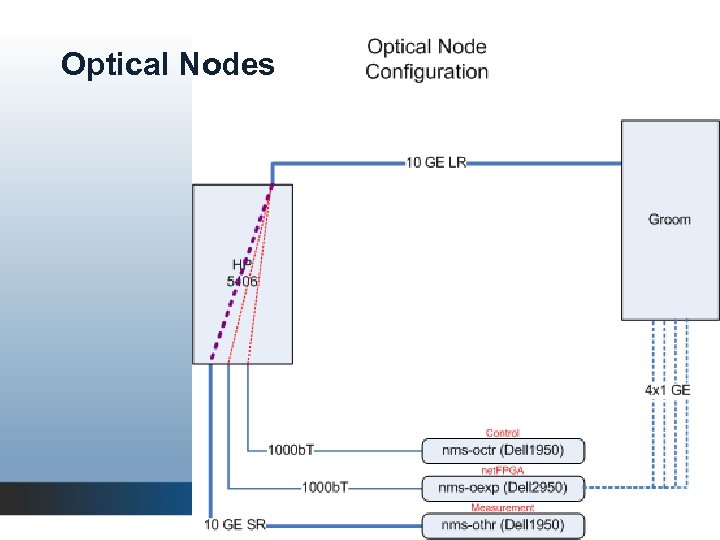

Optical Nodes

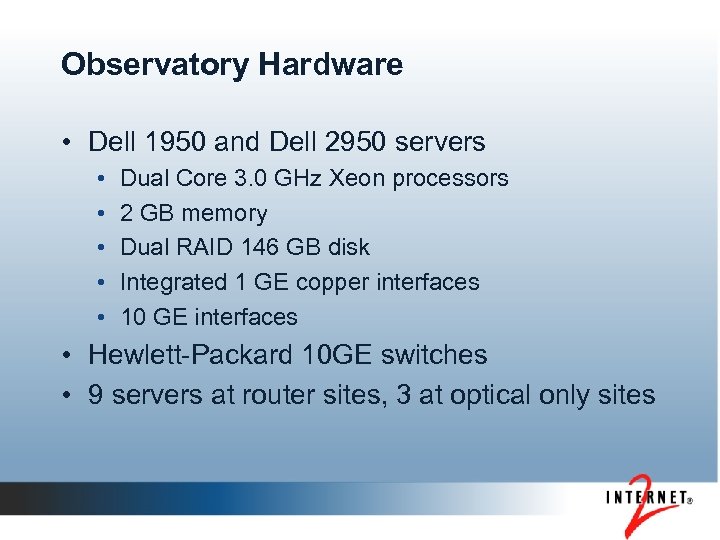

Observatory Hardware • Dell 1950 and Dell 2950 servers • Dual Core 3.0 GHz Xeon processors • 2 GB memory • Dual RAID 146 GB disk • Integrated 1 GE copper interfaces • 10 GE interfaces • Hewlett-Packard 10 GE switches • 9 servers at router sites, 3 at optical-only sites

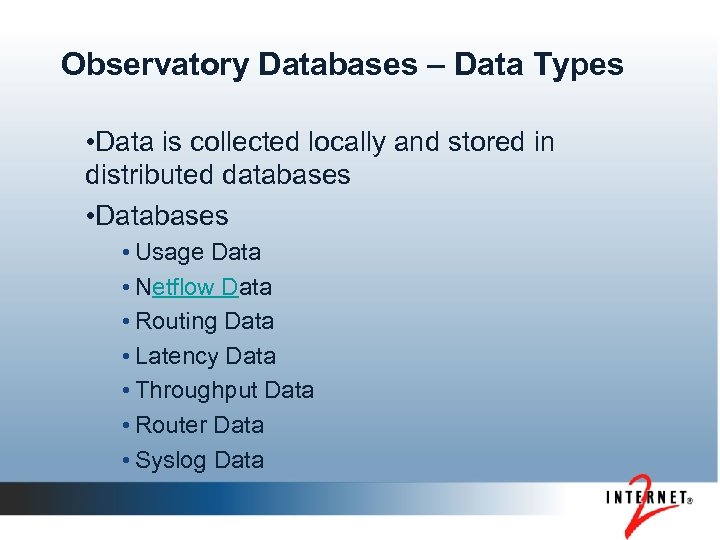

Observatory Databases – Data Types • Data is collected locally and stored in distributed databases • Databases • Usage Data • Netflow Data • Routing Data • Latency Data • Throughput Data • Router Data • Syslog Data

Uses and Futures • Some uses of existing datasets and tools • Quality Control • Network Diagnosis • Network Characterization • Network Research • Consultation with researchers • Open questions

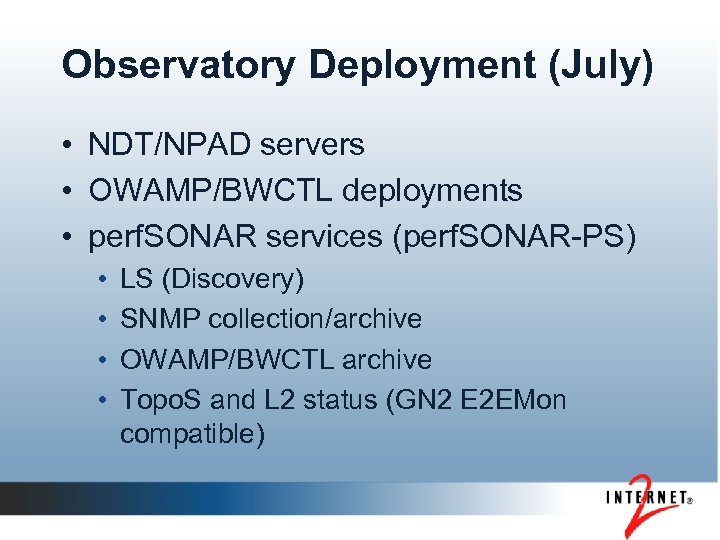

Observatory Deployment (July) • NDT/NPAD servers • OWAMP/BWCTL deployments • perfSONAR services (perfSONAR-PS) • LS (Discovery) • SNMP collection/archive • OWAMP/BWCTL archive • TopoS and L2 status (GN2 E2EMon compatible)

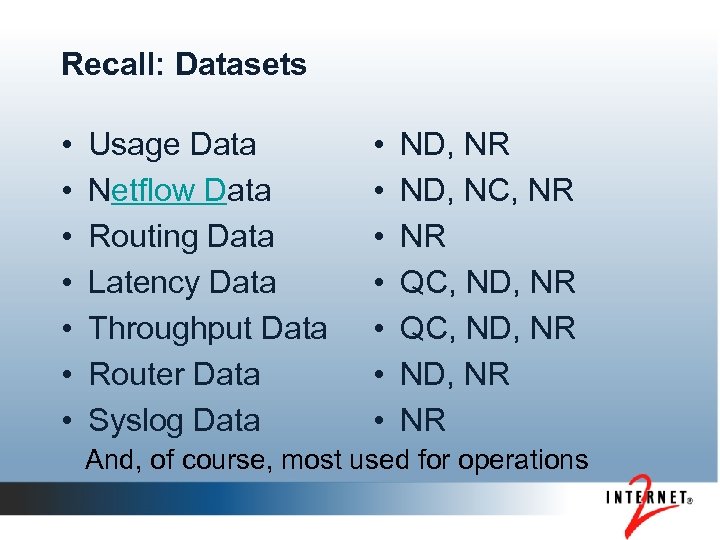

Recall: Datasets • Usage Data • Netflow Data • Routing Data • Latency Data • Throughput Data • Router Data • Syslog Data • ND, NR ND, NC, NR NR QC, ND, NR NR • And, of course, most used for operations

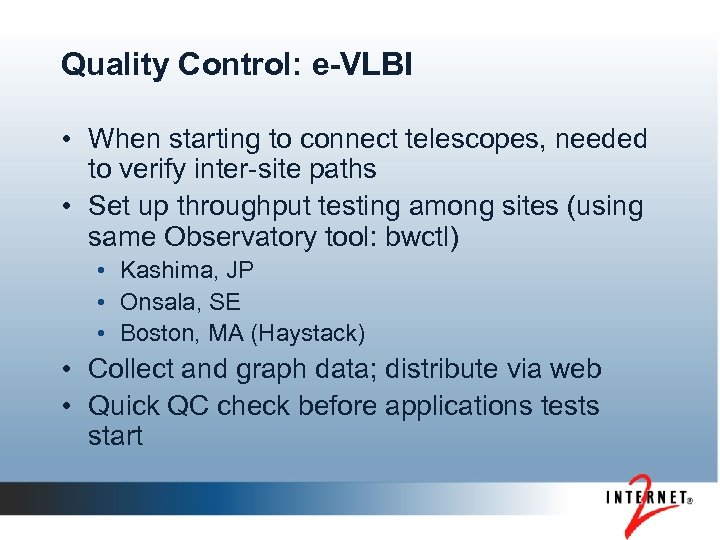

Quality Control: e-VLBI • When starting to connect telescopes, needed to verify inter-site paths • Set up throughput testing among sites (using same Observatory tool: bwctl) • Kashima, JP • Onsala, SE • Boston, MA (Haystack) • Collect and graph data; distribute via web • Quick QC check before applications tests start

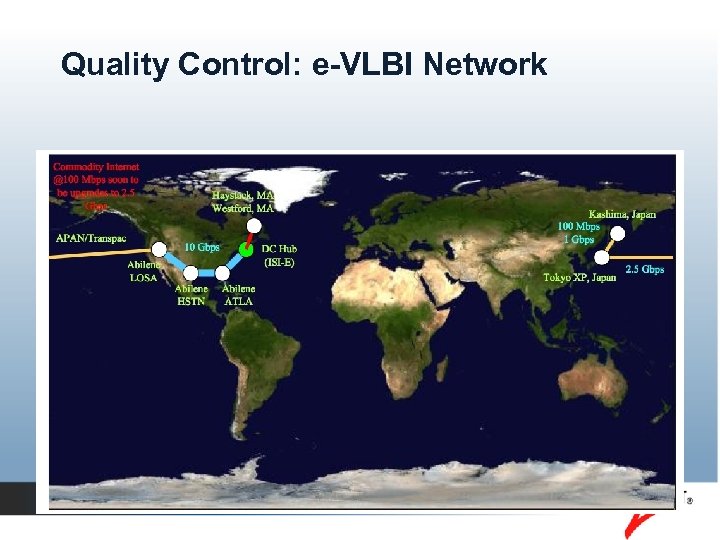

Quality Control: e-VLBI Network

Quality Control: e-VLBI Result • Automated monitoring allowed a view of network throughput variation over time • Highlights route changes, network outages • Automated monitoring also helps to highlight any throughput issues at end points: • e.g. Network Interface Card failures, untuned TCP stacks • Integrated monitoring provides an overall view of network behavior at a glance

Network Diagnosis: e-VLBI • Target at the time: 50 Mbps • Oops: Onsala-Boston: 1 Mbps • Divide and Conquer • Verify Abilene backbone tests look good • Use Abilene test point in Washington DC • Eliminated European and trans-Atlantic pieces • Focus on problem: found oversubscribed link
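The divide-and-conquer approach above can be sketched as a bisection over test points along the path. This is only an illustration under stated assumptions: `first_bad_segment` and `measure_mbps` are hypothetical names, the intermediate test point and the per-segment capacities are invented, and the method assumes a sub-path misses the target exactly when it contains the faulty segment.

```python
from typing import Callable, List, Sequence, Tuple


def first_bad_segment(
    points: Sequence[str],
    measure_mbps: Callable[[str, str], float],
    target_mbps: float,
) -> Tuple[str, str]:
    """Bisect ordered test points to find the segment that misses the target.

    Assumes the end-to-end path is already known to miss the target.
    """
    # Invariant: points[0]..points[lo] meets the target,
    # points[0]..points[hi] does not.
    lo, hi = 0, len(points) - 1
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if measure_mbps(points[0], points[mid]) >= target_mbps:
            lo = mid
        else:
            hi = mid
    return points[lo], points[hi]


# Invented topology and capacities, for illustration only.
points: List[str] = ["Onsala", "trans-Atlantic exit", "Washington DC", "Boston"]
segment_mbps = [900.0, 600.0, 1.0]


def fake_measure(a: str, b: str) -> float:
    """Stand-in for a real throughput test: the slowest segment wins."""
    i, j = points.index(a), points.index(b)
    return min(segment_mbps[i:j])


bad = first_bad_segment(points, fake_measure, target_mbps=50.0)
print(bad)  # ('Washington DC', 'Boston')
```

With a real tool in place of `fake_measure`, each call would be one throughput test between two test points, so a path with n test points needs about log2(n) tests rather than n.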

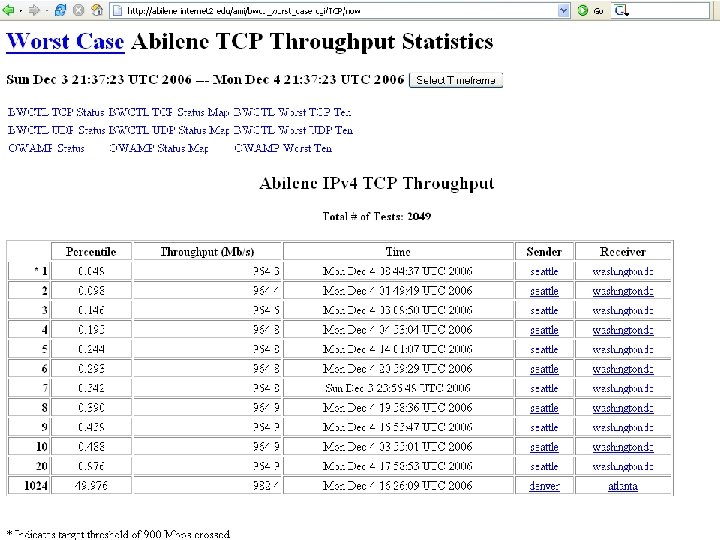

Quality Control: IP Backbone • Machines with 1 GE interfaces, 9000 MTU • Full mesh • IPv4 and IPv6 • Expect > 950 Mbps TCP • Keep list of “Worst 10” • If any path < 900 Mbps for two successive testing intervals, raise alarm
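
The alarm rule and the “Worst 10” list on this slide could be expressed roughly as follows. This is a sketch under stated assumptions, not the Observatory's actual code: the function names, the `history` structure (per-path throughput samples in Mbps, oldest first), and the node names in the usage note are all hypothetical. Only the 900 Mbps threshold, the two-interval rule, and the list size of 10 come from the slide.

```python
from typing import Dict, List, Tuple

Path = Tuple[str, str]  # (source, destination); names are illustrative

ALARM_MBPS = 900.0      # from the slide: alarm if a path drops below this
SUCCESSIVE = 2          # from the slide: for two successive testing intervals
WORST_N = 10            # from the slide: keep a "Worst 10" list


def paths_in_alarm(
    history: Dict[Path, List[float]],
    threshold: float = ALARM_MBPS,
    successive: int = SUCCESSIVE,
) -> List[Path]:
    """Return paths whose last `successive` samples are all below threshold."""
    alarms = []
    for path, samples in history.items():
        recent = samples[-successive:]
        # Require a full window so a single low sample never triggers.
        if len(recent) == successive and all(s < threshold for s in recent):
            alarms.append(path)
    return sorted(alarms)


def worst_paths(history: Dict[Path, List[float]], n: int = WORST_N) -> List[Path]:
    """Return up to n paths ordered by their most recent throughput, lowest first."""
    latest = [(samples[-1], path) for path, samples in history.items() if samples]
    return [path for _, path in sorted(latest)[:n]]
```

For example, a path with samples `[960, 880, 870]` is in alarm, while one with `[955, 890, 951]` is not, because its dip lasted only one interval.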

Quality Control: Peerings • Internet2 and ESnet have been watching the latency across peering points for a while • Internet2 and DREN have been preparing to do some throughput and latency testing • During the course of this setup, found interesting routing and MTU size issues

Network Diagnosis: End Hosts • NDT, NPAD servers • Quick check from a host that has a browser • Easily eliminate (or confirm) last mile problems (buffer sizing, duplex mismatch, …) • NPAD can find switch limitations, provided the server is close enough

Network Diagnosis: Generic • Generally looking for configuration & loss • Don’t forget security appliances • Is there connectivity & reasonable latency? (ping -> OWAMP) • Is routing reasonable? (traceroute, proxy) • Is host reasonable? (NDT; NPAD) • Is path reasonable? (BWCTL)
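
The four questions above form an ordered checklist, which might be driven by something like the sketch below. The function name and the check labels are hypothetical, and the probes in the usage note are placeholders: a real version would wrap the tools named on the slide (ping or OWAMP, traceroute, NDT or NPAD, BWCTL) rather than return fixed values.

```python
from typing import Callable, List, Optional, Tuple

# Each check is a label plus a probe returning True when that layer looks reasonable.
Check = Tuple[str, Callable[[], bool]]


def first_failing_check(checks: List[Check]) -> Optional[str]:
    """Run checks in order and return the label of the first one that fails.

    Later checks are skipped, since their results are hard to interpret
    while an earlier layer is broken. Returns None if every check passes.
    """
    for label, probe in checks:
        if not probe():
            return label
    return None
```

A usage sketch with placeholder probes: `first_failing_check([("connectivity/latency", lambda: True), ("routing", lambda: False), ("host", lambda: True), ("path", lambda: True)])` stops at `"routing"` without running the host or path checks.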

Network Characterization • Flow data collected with flow-tools package • All data not used for security alerts and analysis [REN-ISAC] is anonymized • Reports from anonymized data available (see truncated addresses) • Additionally, some Engineering reports
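
Anonymizing by truncating addresses, as mentioned above, amounts to zeroing the low-order bits of each address before a flow record is published. The sketch below illustrates the idea with Python's standard `ipaddress` module. The function name is hypothetical, and the default of 11 zeroed bits is an assumption chosen for illustration, since the slide does not state how many bits are removed.

```python
import ipaddress


def truncate_address(addr: str, zero_bits: int = 11) -> str:
    """Zero the low-order `zero_bits` bits of an IPv4 or IPv6 address.

    The default of 11 bits is an illustrative assumption, not a value
    taken from the slides.
    """
    ip = ipaddress.ip_address(addr)
    if not 0 <= zero_bits <= ip.max_prefixlen:
        raise ValueError("zero_bits out of range for this address family")
    # Build a mask with the high bits set and the low `zero_bits` cleared.
    full = (1 << ip.max_prefixlen) - 1
    mask = full & ~((1 << zero_bits) - 1)
    # Rebuild with the same address class so IPv6 stays IPv6.
    return str(type(ip)(int(ip) & mask))
```

With 11 bits zeroed, `10.1.255.77` becomes `10.1.248.0`: the last octet is cleared along with the low three bits of the third octet, so individual hosts are hidden while the enclosing network remains visible in reports.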

Network Research Projects • Major consumption • Flows • Routes • Configuration • Nick Feamster (while at MIT) • Dave Maltz (while at CMU) • Papers in SIGCOMM, INFOCOM • Hard to track folks that just pull data off of web sites

Lots of Work to be Done • Internet2 Observatory realization inside racks set for initial deployment, including new research projects (NetFPGA, Phoebus) • Software and links easily changed • Could add or change hardware depending on costs • Researcher tools, new datasets • Consensus on passive data

Not Just Research • Operations and characterization of new services • Finding problems with stitched-together VLANs • Collecting and exporting data from Dynamic Circuit Service... • Ciena performance counters • Control plane setup information • Circuit usage (not utilization, although that is also nice) • Similar for underlying Infinera equipment • And consider inter-domain issues

Sharing Observatory Data We want to make Internet2 Network Observatory Data: • Available: • Access to existing active and passive measurement data • Ability to run new active measurement tests • Interoperable: • Common schema and semantics, shared across other networks • Single format • XML-based discovery of what’s available

Internet2 Deployment Status • Focus is on development of services for the new Internet2 network and integration with Indiana NOC • Submitting a proposal to NSF for additional funding • Target: July 2007 as new Internet2 network goes operational • OWAMP MA • BWCTL MA/MP • IU-based Topology Service • Multi-LS • NOC Alarm Transformation Service

More Information • Eric Boyd • eboyd@internet2.edu • 734-352-7032 • http://e2epi.internet2.edu/ • http://bwctl.internet2.edu • http://ndt.internet2.edu/ • http://owamp.internet2.edu/ • http://vfer.internet2.edu/ • http://www.perfsonar.net/ • http://nwmg.internet2.edu
