0efad191ee6b028c68bea720a49092a8.ppt

- Number of slides: 41

Negotiated Privacy CS 551/851 Cryptography Applications Bistro Mike McNett 30 March 2004 • Stanislaw Jarecki, Pat Lincoln, Vitaly Shmatikov. Negotiated Privacy. • Dawn Xiaodong Song, David Wagner, Adrian Perrig. Practical Techniques for Searches on Encrypted Data. • Brent R. Waters, Dirk Balfanz, Glenn Durfee, and D. K. Smetters. Building an Encrypted and Searchable Audit Log.

Negotiated Privacy Necessary? • World Wide Web Consortium (W3C) Platform for Privacy Preferences (P3P) Project (http://www.w3.org/P3P/) “The Platform for Privacy Preferences Project (P3P), … is emerging as an industry standard providing a simple, automated way for users to gain more control over the use of personal information on Web sites they visit. … P3P enhances user control by putting privacy policies where users can find them, in a form users can understand, most importantly, enables users to act on what they see.” NOTE: 10 February 2004, W3C P3P 1.1 First public Working Draft

Why is it Really Necessary? “The way to have good and safe government, is not to trust it all to one, but to divide it among the many... [It is] by placing under every one what his own eye may superintend, that all will be done for the best.” Thomas Jefferson to Joseph Cabell (Feb. 2, 1816) It’s necessary because Mr. Jefferson said so!

Outline • Application Areas • Options for Privacy Management • What Negotiated Privacy Is Not • Implementation Details • Limitations • Conclusion

Application Areas • Health data (diseases, bio-warfare, epidemics, drug interactions, etc.) • Banking (money laundering, tax avoidance, etc.) • National security (terrorist tracking, money transfers, etc.) • Digital media (copies, access rights, etc.) • Note: Many applications require – Security – Guarantees of privacy

Options for Privacy Management • Trust the collectors / analysts (people / organizations accessing the data)? IRS, DMV, Wal-Mart • Trust the users whom the data is about? P3P • Combination of the above? – Negotiate what is reportable and what isn’t

What Negotiated Privacy Is • Provides personal data escrow of private data by the subjects of monitoring • Pre-negotiated thresholds (agreed by the interested parties) • Conditional release: meeting the threshold “unlocks” private data • Ensures both accuracy and privacy • Only allows authorized queries (i.e., has a threshold been met?)

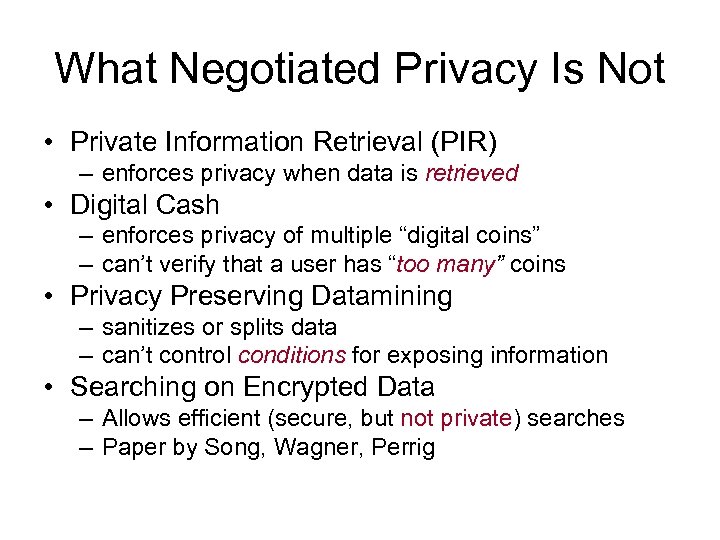

What Negotiated Privacy Is Not • Private Information Retrieval (PIR) – enforces privacy when data is retrieved • Digital Cash – enforces privacy of multiple “digital coins” – can’t verify that a user has “too many” coins • Privacy Preserving Datamining – sanitizes or splits data – can’t control conditions for exposing information • Searching on Encrypted Data – Allows efficient (secure, but not private) searches – Paper by Song, Wagner, Perrig

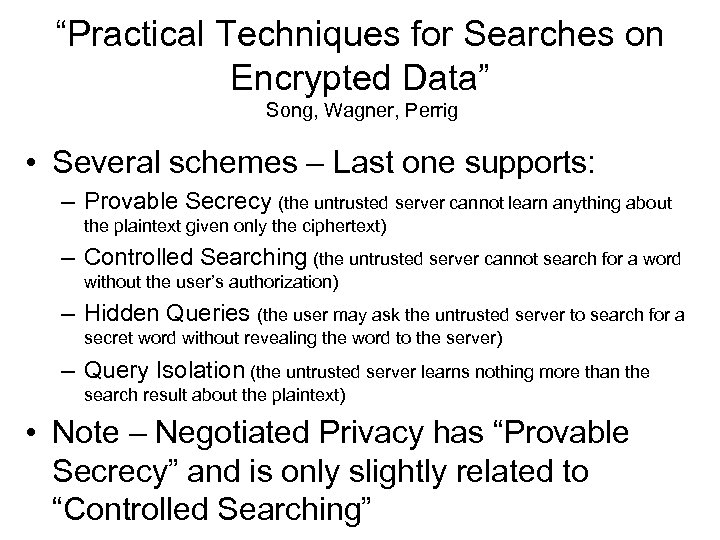

“Practical Techniques for Searches on Encrypted Data” Song, Wagner, Perrig • Several schemes – Last one supports: – Provable Secrecy (the untrusted server cannot learn anything about the plaintext given only the ciphertext) – Controlled Searching (the untrusted server cannot search for a word without the user’s authorization) – Hidden Queries (the user may ask the untrusted server to search for a secret word without revealing the word to the server) – Query Isolation (the untrusted server learns nothing more than the search result about the plaintext) • Note – Negotiated Privacy has “Provable Secrecy” and is only slightly related to “Controlled Searching”

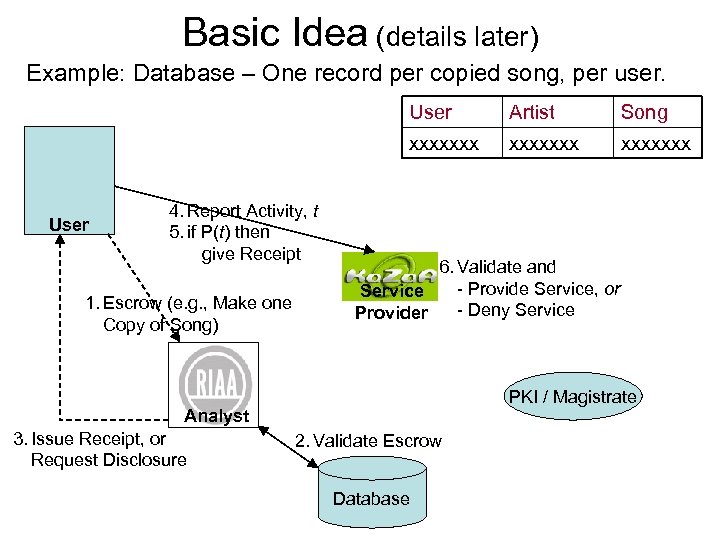

Basic Idea (details later) • Example: Database – one record per copied song, per user (columns: User, Artist, Song) • Parties: User, Analyst (with escrow Database), Service Provider, PKI / Magistrate • Steps: 1. Escrow (e.g., make one copy of a song) 2. Validate escrow 3. Issue receipt, or request disclosure 4. Report activity t 5. If P(t), then give receipt 6. Validate and provide service, or deny service

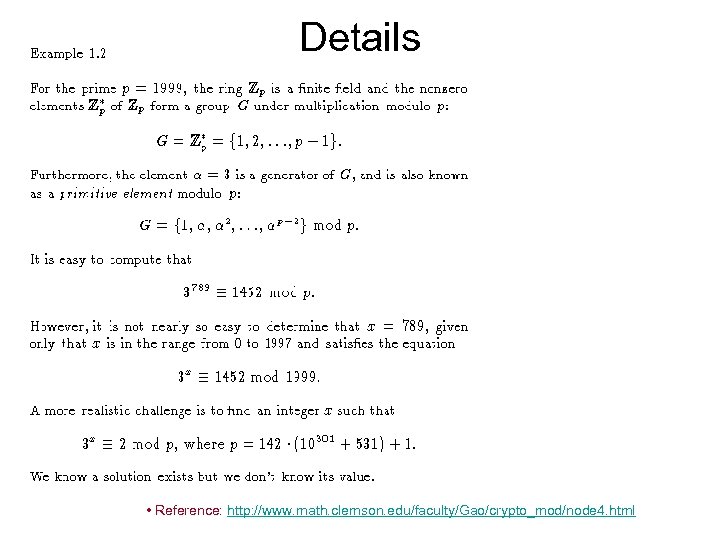

Details • Reference: http://www.math.clemson.edu/faculty/Gao/crypto_mod/node4.html

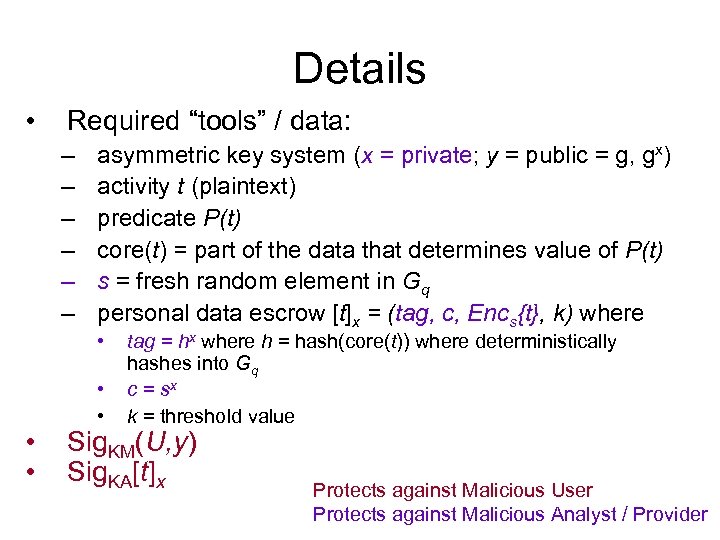

Details • Required “tools” / data: – asymmetric key system ($x$ = private key; public key = $(g, y)$ with $y = g^x$) – activity $t$ (plaintext) – predicate $P(t)$ – $core(t)$ = part of the data that determines the value of $P(t)$ – $s$ = fresh random element in $G_q$ – personal data escrow $[t]_x = (tag, c, Enc_s\{t\}, k)$, where • $tag = h^x$, with $h = hash(core(t))$ and $hash$ deterministically hashing into $G_q$ • $c = s^x$ • $k$ = threshold value – $Sig_{K_M}(U, y)$ – $Sig_{K_A}([t]_x)$ • Protects against malicious user • Protects against malicious analyst / provider
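
The escrow tuple above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the construction from the paper: the group parameters `P`, `Q`, `G` are toy values, `keystream_xor` is an insecure stand-in for Enc_s{t}, and the helper names (`hash_to_group`, `key_from_s`, `make_escrow`) are hypothetical.

```python
import hashlib
import secrets

# Toy Schnorr group (assumption, far too small for real use): P = 2Q + 1.
P, Q = 2039, 1019
G = 4  # 2^2 mod P generates the subgroup G_q of prime order Q


def hash_to_group(data: bytes) -> int:
    """Deterministically hash bytes into G_q by squaring modulo P."""
    counter = 0
    while True:
        digest = hashlib.sha256(data + counter.to_bytes(4, "big")).digest()
        h = pow(int.from_bytes(digest, "big") % P, 2, P)
        if h not in (0, 1):  # skip the degenerate values
            return h
        counter += 1


def key_from_s(s: int) -> bytes:
    """Hash the group element s into the symmetric keyspace."""
    return hashlib.sha256(s.to_bytes(2, "big")).digest()


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Stand-in for Enc_s{.}: XOR with a SHA-256 counter keystream (not secure)."""
    out = bytearray()
    for i in range(0, len(data), 32):
        block = hashlib.sha256(key + i.to_bytes(4, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)


def make_escrow(x: int, t: bytes, core: bytes, k: int):
    """Build [t]_x = (tag, c, Enc_s{t}, k); also return s, which the user keeps."""
    h = hash_to_group(core)                          # h = hash(core(t))
    tag = pow(h, x, P)                               # tag = h^x
    s = pow(G, secrets.randbelow(Q - 1) + 1, P)      # fresh random element of G_q
    c = pow(s, x, P)                                 # c = s^x
    return (tag, c, keystream_xor(key_from_s(s), t), k), s


if __name__ == "__main__":
    escrow, s = make_escrow(123, b"D Evans copied Toxic", b"D Evans|Toxic", 3)
    print(escrow)
```

Because the tag depends only on core(t) and x, two escrows by the same user for the same core activity carry the same tag, while c and the ciphertext change with every fresh s.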

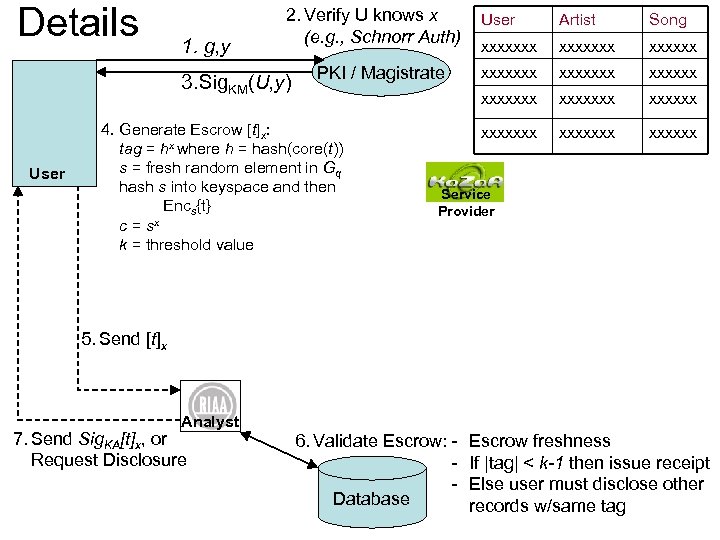

Details • Parties: User, PKI / Magistrate, Analyst (with escrow Database; columns User, Artist, Song), Service Provider • Steps: 1. User sends $g, y$ to the PKI / Magistrate 2. Verify U knows $x$ (e.g., Schnorr authentication) 3. Magistrate returns $Sig_{K_M}(U, y)$ 4. User generates escrow $[t]_x$: – $tag = h^x$ where $h = hash(core(t))$ – $s$ = fresh random element in $G_q$ – hash $s$ into the keyspace and then compute $Enc_s\{t\}$ – $c = s^x$ – $k$ = threshold value 5. Send $[t]_x$ to the Analyst 6. Validate escrow: – check escrow freshness – if $|tag| < k-1$ then issue receipt – else user must disclose other records with the same tag 7. Send $Sig_{K_A}([t]_x)$, or request disclosure
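
The analyst's check in step 6 can be sketched as follows. This is only an illustration: the `Analyst` class is hypothetical, "freshness" is assumed to mean that the same (tag, c) pair has not been submitted before, the escrow is assumed to be stored whether or not a receipt is issued, and the returned strings stand in for the signed receipt $Sig_{K_A}([t]_x)$.

```python
from collections import Counter


class Analyst:
    """Hypothetical analyst that counts escrows per tag and applies the threshold."""

    def __init__(self):
        self.tag_counts = Counter()  # |tag|: number of stored escrows per tag
        self.seen = set()            # (tag, c) pairs, used for the freshness check

    def submit(self, escrow):
        tag, c, _ciphertext, k = escrow
        if (tag, c) in self.seen:
            return "reject: escrow is not fresh"
        below_threshold = self.tag_counts[tag] < k - 1
        # Assumption: the escrow is stored in both outcomes.
        self.seen.add((tag, c))
        self.tag_counts[tag] += 1
        if below_threshold:
            return "receipt"          # stand-in for Sig_KA([t]_x)
        return "request disclosure"   # user must open the records with this tag


if __name__ == "__main__":
    analyst = Analyst()
    for c in (11, 22, 33):
        print(analyst.submit((777, c, b"...", 3)))
```

With k = 3, the first two escrows with a given tag receive receipts and the third triggers a disclosure request, which matches the rule "if |tag| < k-1 then issue receipt".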

Details • Parties: User, PKI / Magistrate, Analyst (with escrow Database; columns User, Artist, Song), Service Provider • Steps: 1. $g, y$ 2. Verify U knows $x$ (e.g., Schnorr authentication) 3. $Sig_{K_M}(U, y)$ 5. Send $[t]_x$ 6. Validate escrow 7. Issue receipt, or request disclosure 8. Report activity $t$; if $P(t)$ then give $s$, $Sig_{K_A}([t]_x)$, $Sig_{K_M}(U, y)$ and proof ($tag = h^x$, $c = s^x$, and $y = g^x$) 9. Service Provider: – verify signatures – verify identity is U – verify $t$ matches activity – verify reported $k$ is correct for this activity – compute $h = hash(core(t))$ – verify proof information ($tag = h^x$, $c = s^x$, $y = g^x$) 10. Provide service, or deny service
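
The slides do not say how the proof that $tag = h^x$, $c = s^x$, and $y = g^x$ is built. One standard technique for showing that several values share the same secret exponent is a Chaum-Pedersen style proof, made non-interactive with the Fiat-Shamir heuristic. The sketch below assumes that technique, a toy group (`P`, `Q`, `G`), and hypothetical function names; it is not taken from the paper.

```python
import hashlib
import secrets

# Toy Schnorr group (assumption): P = 2Q + 1, G generates the order-Q subgroup.
P, Q, G = 2039, 1019, 4


def challenge(*values) -> int:
    """Fiat-Shamir challenge: hash all public values into Z_Q."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int, bases):
    """Prove knowledge of x such that powers[i] = bases[i]^x for every base."""
    r = secrets.randbelow(Q)
    commits = [pow(b, r, P) for b in bases]   # b^r for each base
    powers = [pow(b, x, P) for b in bases]    # the public values y, tag, c
    e = challenge(*bases, *powers, *commits)
    z = (r + e * x) % Q                       # response
    return commits, z


def verify(bases, powers, proof) -> bool:
    """Check b^z == commit * power^e (mod P) for every (base, power) pair."""
    commits, z = proof
    e = challenge(*bases, *powers, *commits)
    return all(
        pow(b, z, P) == (a * pow(v, e, P)) % P
        for b, v, a in zip(bases, powers, commits)
    )


if __name__ == "__main__":
    x = 321
    h, s = pow(G, 77, P), pow(G, 500, P)      # stand-ins for hash(core(t)) and s
    bases = [G, h, s]
    powers = [pow(b, x, P) for b in bases]    # y, tag, c
    print(verify(bases, powers, prove(x, bases)))
```

The verifier learns that y, tag, and c were all computed with the same x, without learning x itself. All bases must lie in the order-Q subgroup for the response arithmetic modulo Q to be valid.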

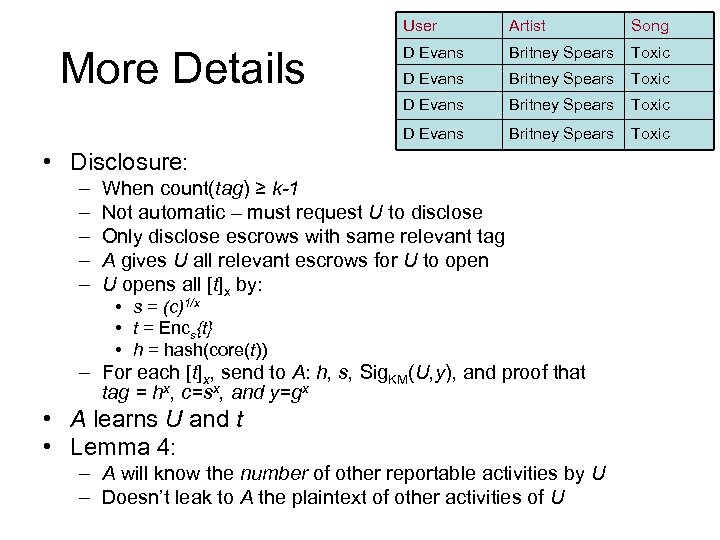

More Details (example database rows: User = D Evans, Artist = Britney Spears, Song = Toxic) • Disclosure: – When count(tag) ≥ k-1 – Not automatic – must request U to disclose – Only disclose escrows with same relevant tag – A gives U all relevant escrows for U to open – U opens all $[t]_x$ by: • $s = c^{1/x}$ • $t$ = decryption of $Enc_s\{t\}$ under $s$ • $h = hash(core(t))$ – For each $[t]_x$, send to A: $h$, $s$, $Sig_{K_M}(U, y)$, and proof that $tag = h^x$, $c = s^x$, and $y = g^x$ • A learns U and $t$ • Lemma 4: – A will know the number of other reportable activities by U – Doesn’t leak to A the plaintext of other activities of U
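
The opening step $s = c^{1/x}$ works because $s$ lies in a group of prime order $q$, so raising $c = s^x$ to the inverse of $x$ modulo $q$ undoes the exponentiation. A minimal sketch with toy parameters (`P`, `Q`, `G` and the function name are assumptions for illustration):

```python
# Toy Schnorr group (assumption): P = 2Q + 1, G generates the order-Q subgroup.
P, Q, G = 2039, 1019, 4


def recover_s(c: int, x: int) -> int:
    """Recover s from c = s^x by exponentiating with x^{-1} mod Q."""
    x_inv = pow(x, -1, Q)      # modular inverse, Python 3.8+
    return pow(c, x_inv, P)    # c^(1/x) = s^(x * x^{-1}) = s


if __name__ == "__main__":
    x = 123
    s = pow(G, 45, P)          # some element of G_q
    c = pow(s, x, P)           # value stored in the escrow
    print(recover_s(c, x) == s)
```

Only the holder of x can compute this inverse exponent, which is why the analyst has to ask U to open the escrows rather than opening them directly.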

Limitations • Social, legal, etc. questions • Upfront threshold & query negotiations are required • Query limitations – dynamic queries are difficult (impossible?) • Can’t do “group” thresholds (since all must have same tag) • No automatic disclosure of records (but could go to magistrate, if necessary) • U gets escrow, but decides not to get served • Can’t completely stop impersonations (use biometrics?) • Doesn’t stop threats due to collusion among entities

Conclusion • Good initial move towards supporting reasonable negotiated privacy • Provides unique functionality for niche applications • Don’t ask Dave for copies of his music

Searching on Encrypted Data Presented by Leonid Bolotnyy March 30, 2004 @UVA

Outline • Practical Techniques for Searches on Encrypted Data • Building an Encrypted and Searchable Audit Log

Practical Techniques for Searches on Encrypted Data

Goals • Provable Security – Untrusted server learns nothing about the plaintext given only ciphertext • Controlled Searching – Untrusted server cannot perform the search without user authorization • Hidden Queries – Untrusted server does not know the query • Query Isolation – Untrusted server does not learn more than the search results

Basic Scheme Encryption

Basic Scheme Search and Decryption • To Search: • To Decrypt:
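
A minimal sketch of how the basic scheme could work, assuming the construction described in the Song, Wagner, Perrig paper: each word $W_i$ is XORed with $T_i = \langle S_i, F_k(S_i) \rangle$, where $S_i$ comes from a pseudorandom stream. The word size, the use of HMAC-SHA256 as both the PRF and the stream generator, and all function names are assumptions for illustration.

```python
import hashlib
import hmac

WORD_LEN = 16                        # assumed fixed word size in bytes
CHECK_LEN = 4                        # bytes of F_k(S_i) appended to S_i
STREAM_LEN = WORD_LEN - CHECK_LEN    # bytes of S_i


def prf(key: bytes, data: bytes, length: int) -> bytes:
    """HMAC-SHA256 used as a stand-in pseudorandom function."""
    return hmac.new(key, data, hashlib.sha256).digest()[:length]


def stream_block(seed: bytes, i: int) -> bytes:
    """S_i: the i-th block of a pseudorandom stream derived from seed."""
    return prf(seed, b"stream" + i.to_bytes(4, "big"), STREAM_LEN)


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(p ^ q for p, q in zip(a, b))


def pad(word: str) -> bytes:
    """Pad a word (assumed at most WORD_LEN bytes) to the fixed block size."""
    return word.encode().ljust(WORD_LEN, b"\0")


def encrypt(words, seed: bytes, k: bytes):
    """C_i = W_i XOR <S_i, F_k(S_i)>."""
    out = []
    for i, w in enumerate(words):
        s_i = stream_block(seed, i)
        out.append(xor(pad(w), s_i + prf(k, s_i, CHECK_LEN)))
    return out


def search(ciphertext, word: str, k: bytes):
    """Server side: given W and k, report positions where C_i XOR W = <s, F_k(s)>."""
    hits = []
    for i, c_i in enumerate(ciphertext):
        t = xor(c_i, pad(word))
        if prf(k, t[:STREAM_LEN], CHECK_LEN) == t[STREAM_LEN:]:
            hits.append(i)
    return hits


def decrypt(ciphertext, seed: bytes, k: bytes):
    """Owner side: regenerate each T_i from the seed and XOR it away."""
    words = []
    for i, c_i in enumerate(ciphertext):
        s_i = stream_block(seed, i)
        words.append(xor(c_i, s_i + prf(k, s_i, CHECK_LEN)).rstrip(b"\0").decode())
    return words


if __name__ == "__main__":
    ct = encrypt(["alice", "bob", "alice"], b"seed", b"key")
    print(search(ct, "alice", b"key"), decrypt(ct, b"seed", b"key"))
```

In this form the server must be handed both the word and the key k in order to search, so neither the query nor other positions stay hidden from it.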

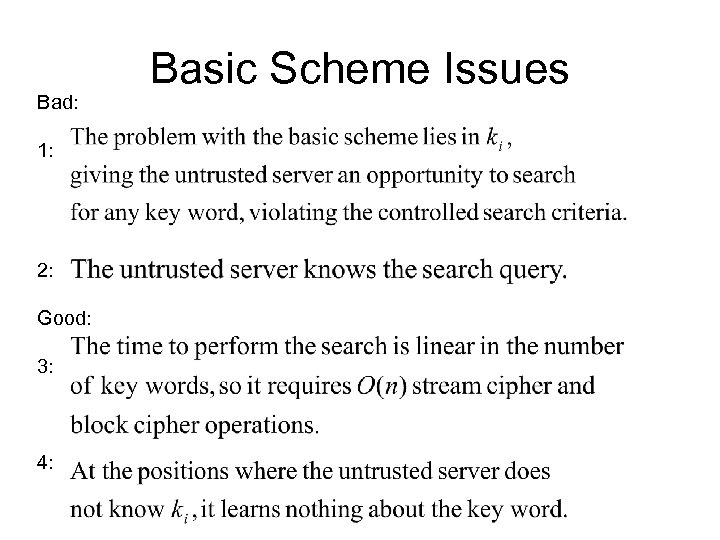

Basic Scheme Issues • Bad: 1: 2: • Good: 3: 4:

Controlled Searching • How do we decrypt now? • The issue of hiding search queries is still unresolved.

Hidden Searches • The problem with decryption still remains

Solving Decryption Problem

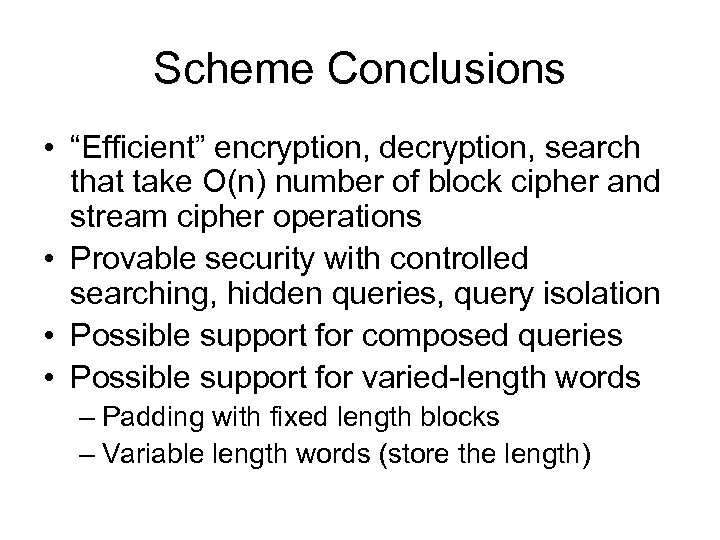

Scheme Conclusions • “Efficient” encryption, decryption, and search, each taking O(n) block cipher and stream cipher operations • Provable security with controlled searching, hidden queries, query isolation • Possible support for composed queries • Possible support for varied-length words – Padding with fixed length blocks – Variable length words (store the length)

Building an Encrypted and Searchable Audit Log

Reasons to Encrypt Audit Logs • Log may be stored at not completely trusted (secure) site • To prevent tampering with the log • To restrict access to the log – Allow only access to certain parts of the log – Allow only certain entities to access the log

Characteristics of a Secure Audit Log • Tamper Resistant – Guarantee that only the authorized entity can create entries and that, once created, entries cannot be altered • Verifiable – Allow verification that all entries are present and have not been altered • Searchable with data access control – Allow log to be “efficiently” searched only by authorized entities

Notation and Setup

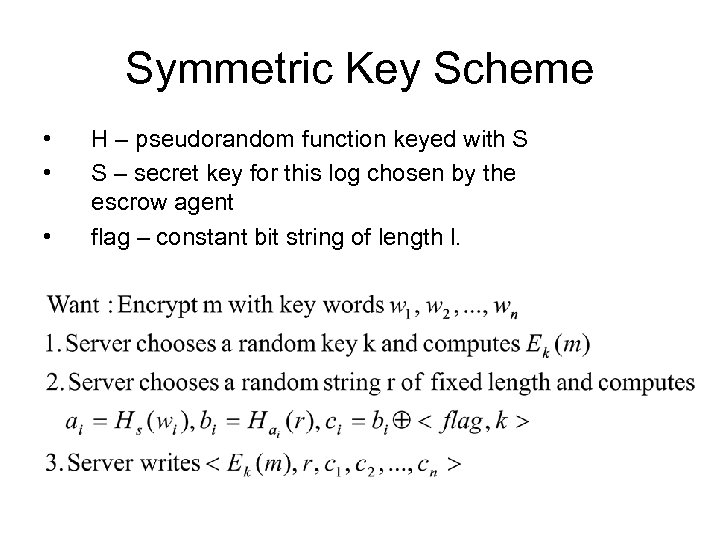

Symmetric Key Scheme • H – pseudorandom function keyed with S • S – secret key for this log chosen by the escrow agent • flag – constant bit string of length l

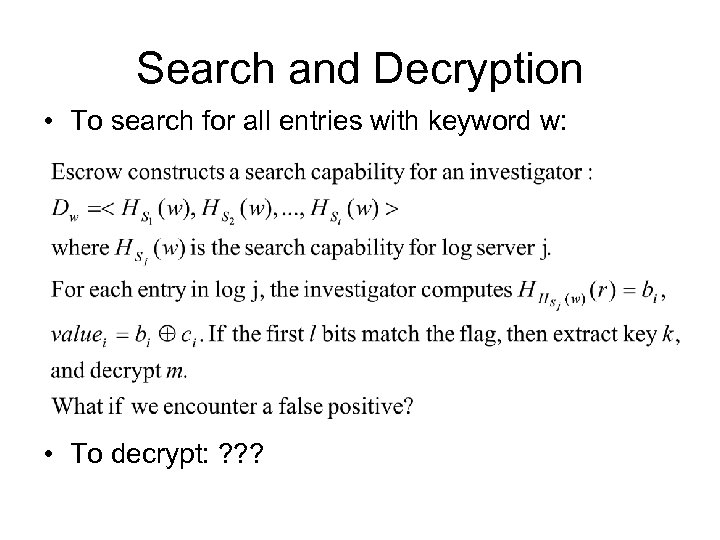

Search and Decryption • To search for all entries with keyword w: • To decrypt: ? ? ?
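
A minimal sketch of how search and decryption in the symmetric scheme could work, assuming the construction described in the Waters et al. paper: each entry is encrypted under a random key K, and for every keyword w the server stores $c = H'_{d_w}(r) \oplus (flag \,|\, K)$ with $d_w = H_S(w)$. The use of HMAC-SHA256 for both H and H', the toy stream cipher, and all names below are assumptions for illustration.

```python
import hashlib
import hmac
import secrets

FLAG = b"\x00" * 8   # constant flag, l = 64 bits (assumed length)
KEY_LEN = 16         # length of the per-entry key K in bytes


def prf(key: bytes, data: bytes, length: int = 32) -> bytes:
    """HMAC-SHA256 as a stand-in for the pseudorandom functions H and H'."""
    return hmac.new(key, data, hashlib.sha256).digest()[:length]


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(p ^ q for p, q in zip(a, b))


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher for the entry body (not secure)."""
    out = bytearray()
    for i in range(0, len(data), 32):
        out.extend(xor(data[i:i + 32], prf(key, i.to_bytes(4, "big"))))
    return bytes(out)


def capability(S: bytes, w: str) -> bytes:
    """d_w = H_S(w): search capability issued for keyword w."""
    return prf(S, w.encode())


def add_entry(S: bytes, message: bytes, keywords):
    """Encrypt one log entry and attach one masked (flag | K) per keyword."""
    K = secrets.token_bytes(KEY_LEN)
    r = secrets.token_bytes(16)
    cs = [xor(prf(capability(S, w), r, len(FLAG) + KEY_LEN), FLAG + K)
          for w in keywords]
    return {"body": keystream_xor(K, message), "r": r, "cs": cs}


def search(log, d_w: bytes):
    """Unmask each c with H'_{d_w}(r); a leading flag reveals K for that entry."""
    results = []
    for entry in log:
        mask = prf(d_w, entry["r"], len(FLAG) + KEY_LEN)
        for c in entry["cs"]:
            plain = xor(mask, c)
            if plain.startswith(FLAG):
                results.append(keystream_xor(plain[len(FLAG):], entry["body"]))
                break
    return results


if __name__ == "__main__":
    S = b"escrow-agent-secret"
    log = [add_entry(S, b"root login", ["login", "root"]),
           add_entry(S, b"disk full", ["disk"])]
    print(search(log, capability(S, "login")))
```

A wrong capability unmasks to random-looking bytes, so a false match requires the first l bits to equal the flag by chance; a longer flag lowers that probability at the cost of storage.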

Issues and Problems • flag size and possibility of false positives • Capabilities for different keywords appear random • Adversary may be able to learn S, which is known to the server • Updating keys requires a constant connection to the escrow agent, management of numerous keys, and high search time • STORE AS LITTLE SECRET INFORMATION ON THE SERVER AS POSSIBLE

Identity Based Encryption • Identity Based Encryption allows arbitrary strings to be used as public keys • Master secret key stored with a trusted escrow agent allows generation of a private key after the public key has been selected

IBE Setup and Key generation • Setup: • Key generation:

IBE Encryption and Decryption • Encryption • Decryption

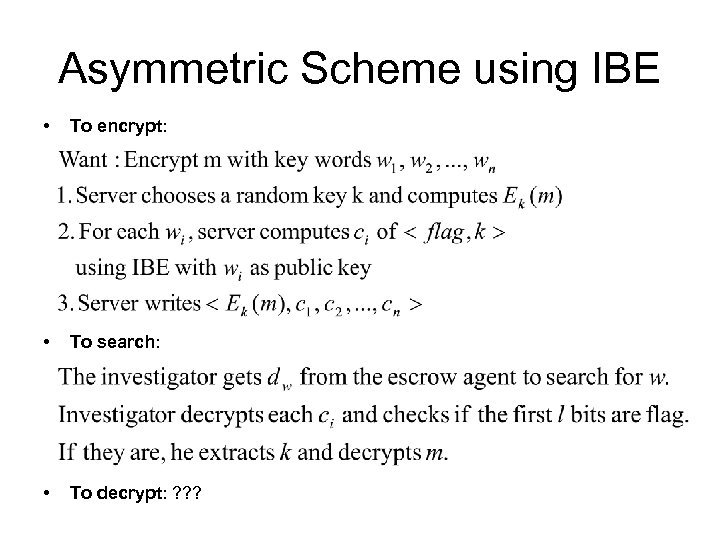

Asymmetric Scheme using IBE • To encrypt: • To search: • To decrypt: ? ? ?

Comments on the IBE Scheme • Note: • Each server stores only public parameters • Compromising the server does not allow attacker to search the data • Possible to separate the search and decryption by encrypting the key using some other public key (requires an extra access to the escrow agent for decryption) • A drawback: Tremendous increase in computation time

Scheme Optimizations • Pairing Reuse • Indexing • Randomness Reuse
