8b9c1cc23c9ccdaa25e731f6bdd80b12.ppt

- Number of slides: 36

NAYOSE: A System for Reference Disambiguation of Proper Nouns Appearing on Web Pages
AIRS 2006, Oct. 16-18, 2006, Singapore
Shingo Ono, Minoru Yoshida, and Hiroshi Nakagawa
The University of Tokyo, Japan

Table of Contents
1. Motivation
2. NAYOSE System
3. Results
4. Conclusion

Motivation
• Have you ever had trouble when using a common name as a query in a search engine?

Shingo Ono: Doctor, Baseball Player, Foreign student, Me

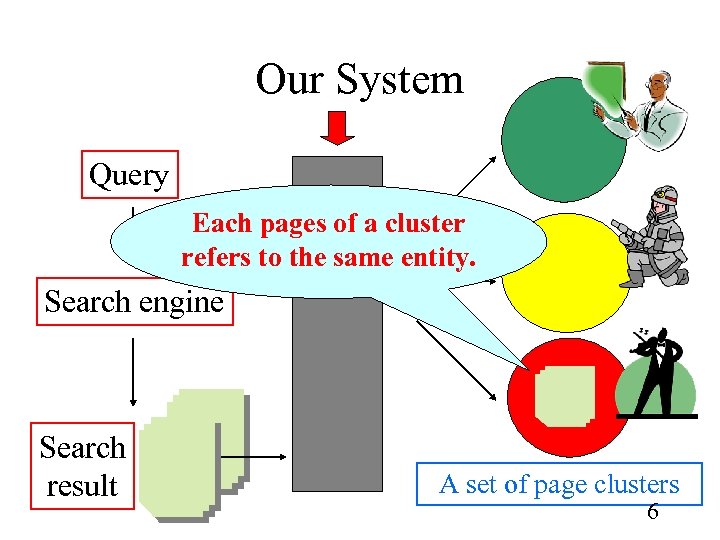

Problem and our solution
• When different real-world entities have the same name, the reference from the name to the entity can be ambiguous.
• We devised the NAYOSE System for the Web.
  – The system returns its results as clusters of Web pages.

Our System
[Diagram labels: Query; Search engine; Search result; A set of page clusters]
All pages in a cluster refer to the same entity.

![Related Works](https://present5.com/presentation/8b9c1cc23c9ccdaa25e731f6bdd80b12/image-7.jpg)

Related Works
• Use information from documents
  – [Bagga and Baldwin, 1998]: Naive VSM
  – [Mann and Yarowsky, 2003]: Biographic data
  – [Niu et al., 2004]: Personal info.
  – [Wan et al., 2005]: Middle name oriented
• Use information from Web structure
  – [Bekkerman and McCallum, 2005]: Link structure and double clustering

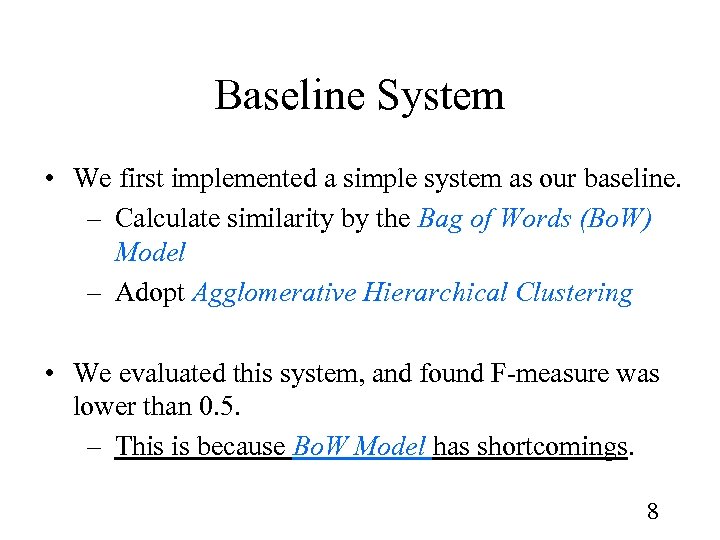

Baseline System
• We first implemented a simple system as our baseline.
  – Calculate similarity with the Bag of Words (BoW) model
  – Adopt agglomerative hierarchical clustering
• We evaluated this system and found that its F-measure was lower than 0.5.
  – This is because the BoW model has shortcomings.
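As a rough illustration of the BoW baseline, the sketch below compares two pages by their term-frequency vectors. The slide does not name the similarity function, so cosine similarity is an assumption here, as are the function name `bow_cosine` and the whitespace tokenization (Japanese pages would need a morphological analyzer instead).

```python
import math
from collections import Counter


def bow_cosine(doc_a: str, doc_b: str) -> float:
    """Cosine similarity between the term-frequency vectors of two documents.

    ASSUMPTION: cosine similarity and whitespace tokenization are illustrative
    choices; the slides only say that similarity is computed with a BoW model.
    """
    tf_a = Counter(doc_a.lower().split())
    tf_b = Counter(doc_b.lower().split())
    # Dot product over the words the two documents share.
    dot = sum(tf_a[w] * tf_b[w] for w in tf_a.keys() & tf_b.keys())
    norm_a = math.sqrt(sum(c * c for c in tf_a.values()))
    norm_b = math.sqrt(sum(c * c for c in tf_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty document is similar to nothing
    return dot / (norm_a * norm_b)
```

Because only word counts enter the score, two pages about different people who share common vocabulary can look alike, which is the shortcoming the next slides address.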

Proposed Methods
The Bag of Words model focuses only on word frequency. A document contains other useful information, such as:
• Word positions – Local Context Matching
• Word meanings – Named Entities Matching

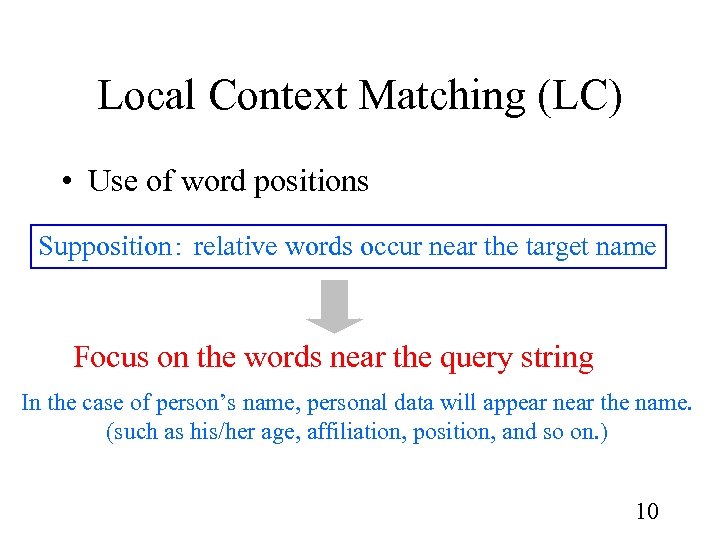

Local Context Matching (LC)
• Use of word positions
Supposition: related words occur near the target name.
Focus on the words near the query string.
In the case of a person's name, personal data (such as his/her age, affiliation, position, and so on) will appear near the name.

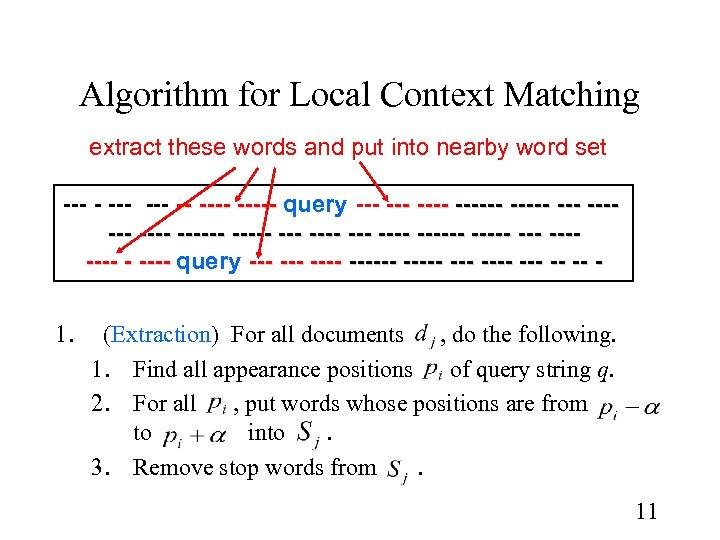

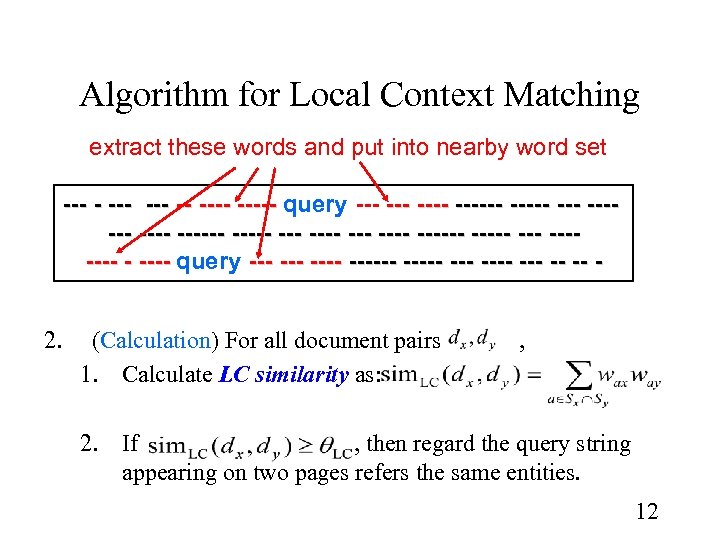

Algorithm for Local Context Matching
[Figure: the words around each occurrence of the query are extracted and put into the nearby word set]
1. (Extraction) For all documents, do the following.
   1. Find all positions at which the query string q appears.
   2. For each position, put the words in a window around it into the document's nearby word set.
   3. Remove stop words from the nearby word set.

Algorithm for Local Context Matching
2. (Calculation) For all document pairs, do the following.
   1. Calculate the LC similarity of the pair from their nearby word sets.
   2. If the similarity satisfies the threshold condition, regard the query string appearing on the two pages as referring to the same entity.
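The two steps of Local Context Matching can be sketched as follows. The extracted slides do not preserve the similarity formula, the window size, or the threshold, so this sketch assumes that the similarity is the number of shared nearby words and uses hypothetical `window` and `threshold` parameters. It also treats the query as a single token and drops it from the nearby word set, which is a simplification.

```python
def nearby_words(tokens, query, window, stop_words):
    """Collect the words within `window` tokens of each occurrence of `query`."""
    nearby = set()
    for pos, token in enumerate(tokens):
        if token == query:
            lo = max(0, pos - window)
            hi = min(len(tokens), pos + window + 1)
            nearby.update(tokens[lo:hi])
    # ASSUMPTION: the query itself carries no information, so it is dropped.
    nearby.discard(query)
    return nearby - set(stop_words)


def lc_similarity(words_a, words_b):
    """ASSUMPTION: similarity is the count of shared nearby words."""
    return len(words_a & words_b)


def same_entity_lc(tokens_a, tokens_b, query, window=3, threshold=2, stop_words=()):
    """Decide whether two pages refer to the same entity (hypothetical threshold)."""
    words_a = nearby_words(tokens_a, query, window, stop_words)
    words_b = nearby_words(tokens_b, query, window, stop_words)
    return lc_similarity(words_a, words_b) >= threshold
```

For example, `same_entity_lc(page_a_tokens, page_b_tokens, "ono")` would return `True` only when at least two non-stop words appear near the name on both pages.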

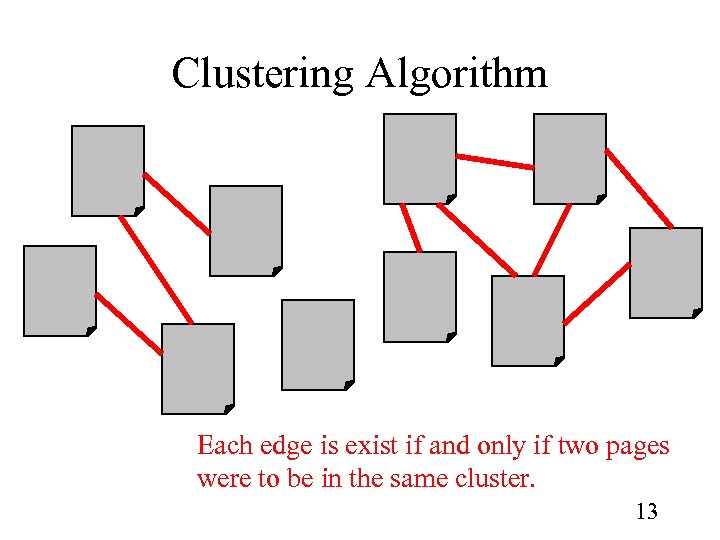

Clustering Algorithm
An edge exists between two pages if and only if the two pages are to be in the same cluster.

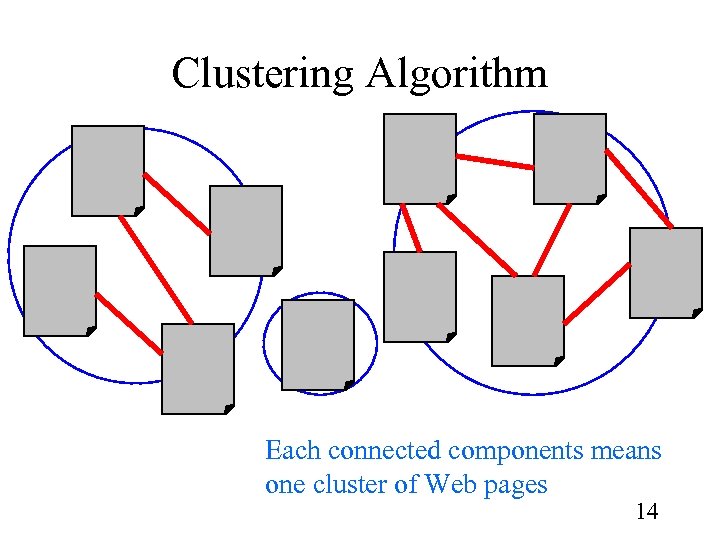

Clustering Algorithm
Each connected component is one cluster of Web pages.
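Reading clusters off as connected components can be sketched with a small union-find. Pages are assumed to be numbered from 0, and `cluster_pages` is a hypothetical helper name, not part of the NAYOSE system.

```python
def cluster_pages(num_pages, edges):
    """Return the connected components of the page graph as sorted lists."""
    parent = list(range(num_pages))

    def find(x):
        # Follow parent links to the root, halving the path along the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two components

    clusters = {}
    for page in range(num_pages):
        clusters.setdefault(find(page), []).append(page)
    return sorted(clusters.values())
```

With five pages and the edges (0, 1) and (1, 2), pages 0, 1, and 2 form one cluster, and pages 3 and 4 each stay alone.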

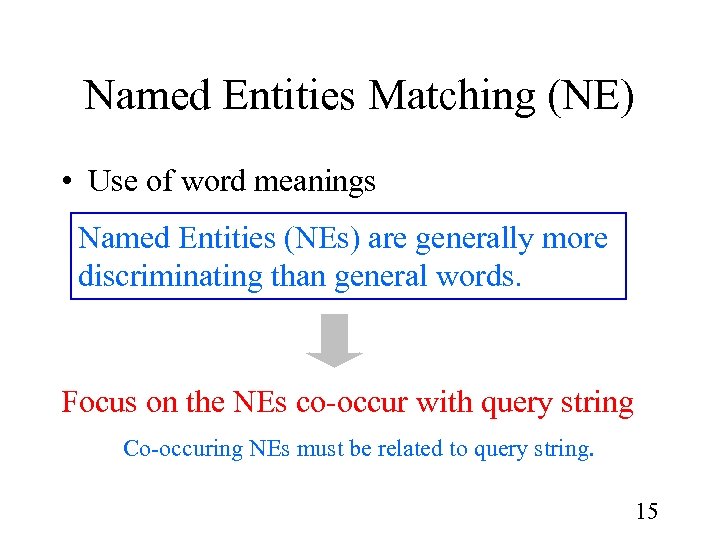

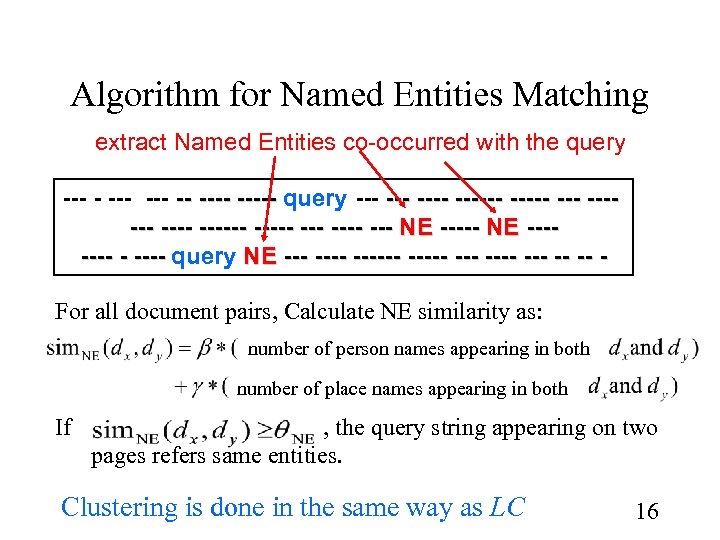

Named Entities Matching (NE)
• Use of word meanings
Named Entities (NEs) are generally more discriminating than general words.
Focus on the NEs that co-occur with the query string.
Co-occurring NEs must be related to the query string.

Algorithm for Named Entities Matching
[Figure: Named Entities that co-occur with the query are extracted]
For all document pairs, calculate the NE similarity from:
• the number of person names appearing in both documents
• the number of place names appearing in both documents
If the similarity satisfies the threshold condition, regard the query string appearing on the two pages as referring to the same entity.
Clustering is done in the same way as for LC.
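A minimal sketch of the NE similarity follows. The slide names the two counts but the extracted text does not preserve how they are combined, so the weighted sum and the `person_weight` and `place_weight` parameters are assumptions. The named entities are assumed to have been extracted beforehand by an NE tagger.

```python
def ne_similarity(persons_a, places_a, persons_b, places_b,
                  person_weight=1.0, place_weight=1.0):
    """Score two pages by the named entities they share.

    ASSUMPTION: the two counts are combined as a weighted sum; the weights
    are hypothetical and do not come from the slides.
    """
    shared_persons = len(set(persons_a) & set(persons_b))
    shared_places = len(set(places_a) & set(places_b))
    return person_weight * shared_persons + place_weight * shared_places
```

Two pages that both mention "Nakagawa" and "Tokyo" near the query would score 2.0 with the default weights; a threshold on this score then decides whether an edge is added between the pages.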

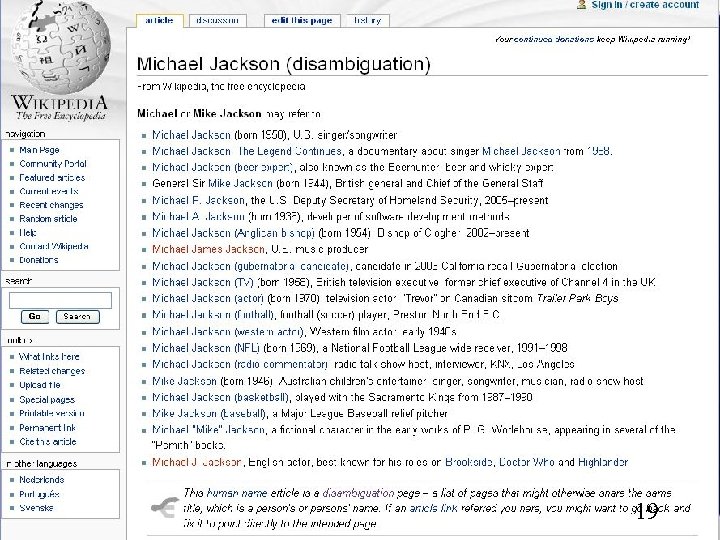

Filtering Junk Pages
• There are meaningless pages on the Web.
• The meaningless pages cause errors in reference disambiguation.

Filtering Junk Pages
• There are meaningless pages on the Web.
• The meaningless pages cause errors in reference disambiguation.
Ø Removing junk pages with filtering rules.

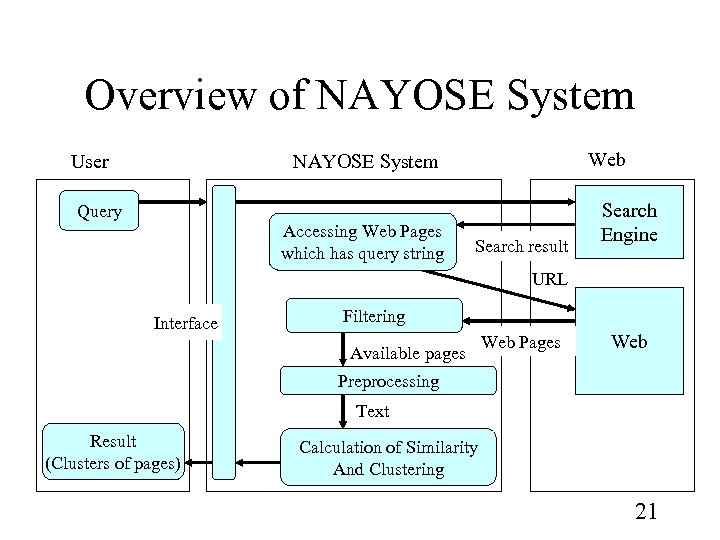

Overview of NAYOSE System
[Diagram labels: User; Query; Interface; Search Engine; Search result (URL); Web; Accessing Web pages which have the query string; Filtering; Available pages; Preprocessing; Text; Calculation of Similarity and Clustering; Result (clusters of pages)]

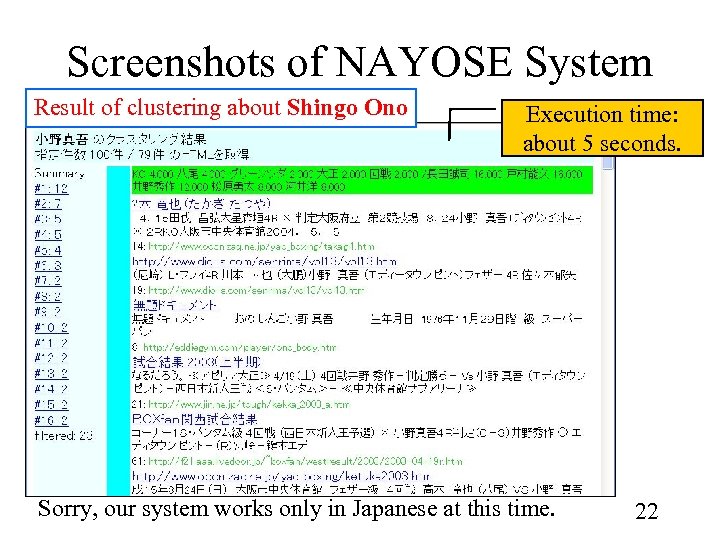

Screenshots of NAYOSE System
Result of clustering for "Shingo Ono"
Execution time: about 5 seconds.
Sorry, our system works only in Japanese at this time.

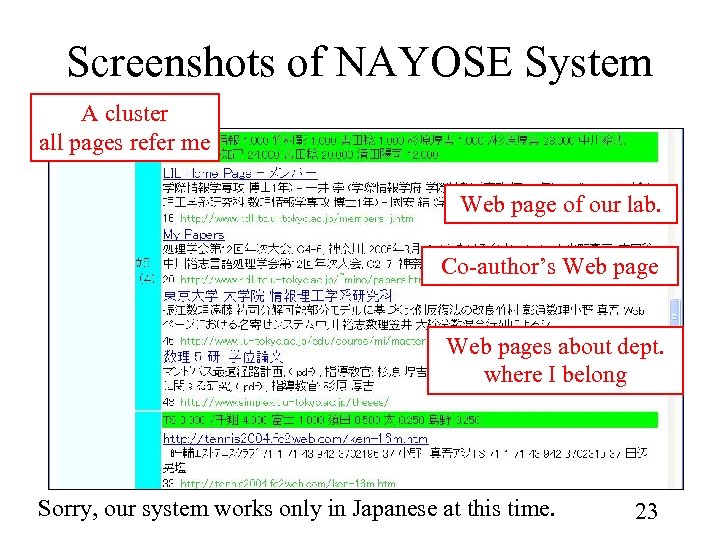

Screenshots of NAYOSE System
A cluster in which all pages refer to me:
• Web page of our lab.
• Co-author’s page
• Web pages about the dept. where I belong
Sorry, our system works only in Japanese at this time.

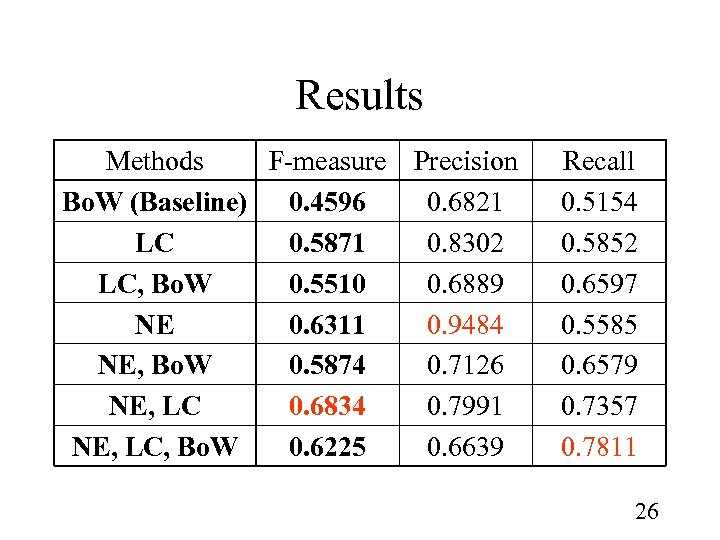

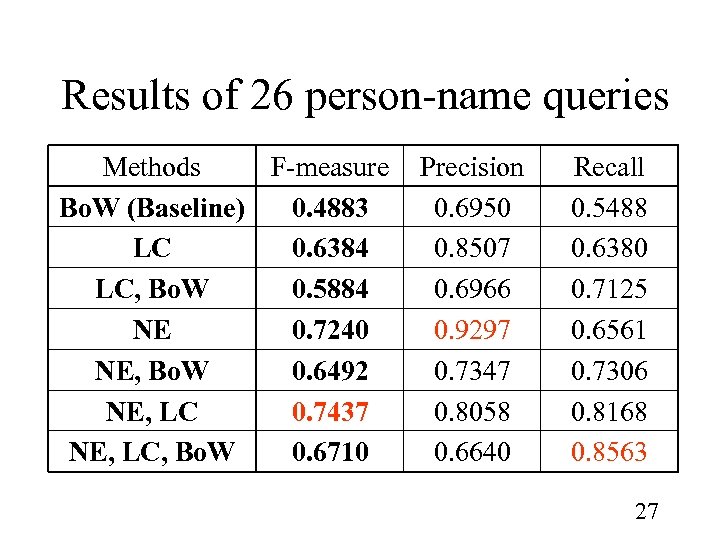

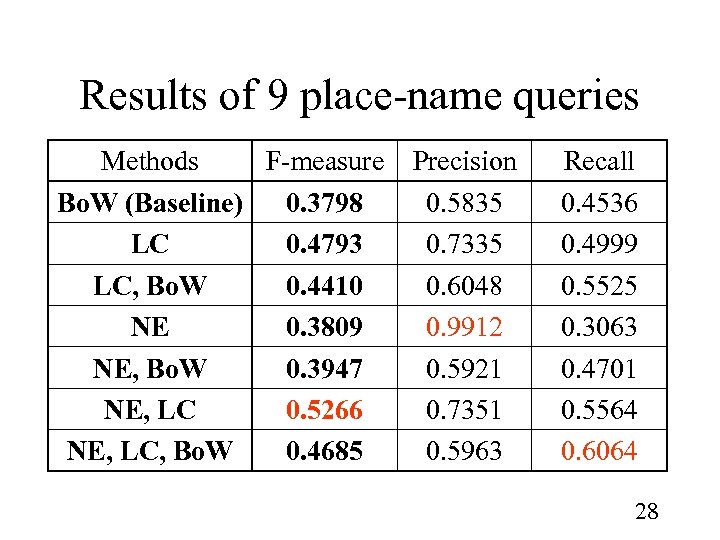

Evaluation
• Data set:
  – Each data set is composed of the top 100–200 results from search engines.
  – We collected 3859 pages for 37 queries (28 person names and 9 place names).
  – We annotated the data sets by hand.
  – We used real-world data, not artificial data.

Evaluation
• Purpose:
  – Which method (BoW, LC, NE, or a combination of them) is the best?
• Metrics:
  – Precision (P), Recall (R), and F-measure (F)
  – Metrics were calculated as in [Larsen and Aone, 1999].

Results

| Methods | F-measure | Precision | Recall |
|---|---|---|---|
| BoW (Baseline) | 0.4596 | 0.6821 | 0.5154 |
| LC | 0.5871 | 0.8302 | 0.5852 |
| LC, BoW | 0.5510 | 0.6889 | 0.6597 |
| NE | 0.6311 | 0.9484 | 0.5585 |
| NE, BoW | 0.5874 | 0.7126 | 0.6579 |
| NE, LC | 0.6834 | 0.7991 | 0.7357 |
| NE, LC, BoW | 0.6225 | 0.6639 | 0.7811 |

Results of 26 person-name queries

| Methods | F-measure | Precision | Recall |
|---|---|---|---|
| BoW (Baseline) | 0.4883 | 0.6950 | 0.5488 |
| LC | 0.6384 | 0.8507 | 0.6380 |
| LC, BoW | 0.5884 | 0.6966 | 0.7125 |
| NE | 0.7240 | 0.9297 | 0.6561 |
| NE, BoW | 0.6492 | 0.7347 | 0.7306 |
| NE, LC | 0.7437 | 0.8058 | 0.8168 |
| NE, LC, BoW | 0.6710 | 0.6640 | 0.8563 |

Results of 9 place-name queries

| Methods | F-measure | Precision | Recall |
|---|---|---|---|
| BoW (Baseline) | 0.3798 | 0.5835 | 0.4536 |
| LC | 0.4793 | 0.7335 | 0.4999 |
| LC, BoW | 0.4410 | 0.6048 | 0.5525 |
| NE | 0.3809 | 0.9912 | 0.3063 |
| NE, BoW | 0.3947 | 0.5921 | 0.4701 |
| NE, LC | 0.5266 | 0.7351 | 0.5564 |
| NE, LC, BoW | 0.4685 | 0.5963 | 0.6064 |

Thank you!

(Appendix) How to do clustering when two or three methods were applied
• In the case of a combination of BoW and NE/LC
  – The NE/LC methods were applied first, and BoW was then applied to the NE/LC result.
• In the case of a combination of NE and LC
  – Clustering was done at the same time.
Ø Details are described on the next slide.

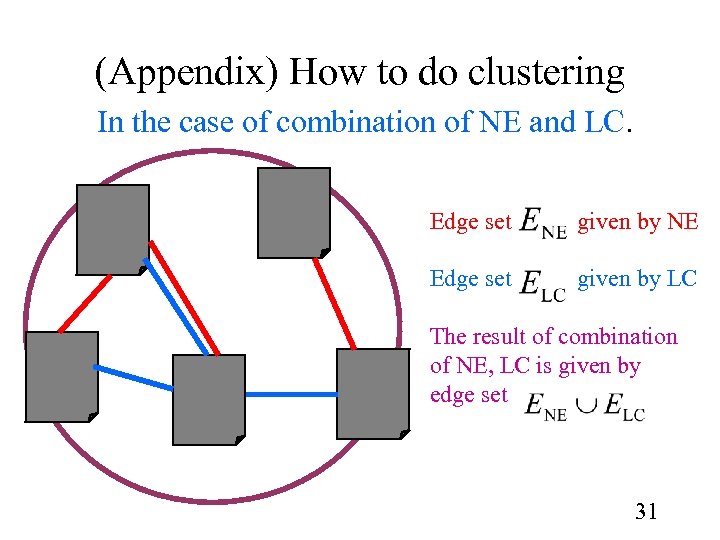

(Appendix) How to do clustering
In the case of a combination of NE and LC:
• Edge set given by NE
• Edge set given by LC
The result of the combination of NE and LC is given by an edge set formed from these two.
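The extracted slide does not preserve the formula that combines the two edge sets. The sketch below assumes the combined edge set is their union, which would be consistent with the higher recall reported for the NE, LC combination, but this is a guess and not confirmed by the source. The helper name `combine_edges` is hypothetical.

```python
def combine_edges(ne_edges, lc_edges):
    """Merge two undirected edge sets.

    ASSUMPTION: the combination is a set union; the slide's own formula
    was lost in extraction.
    """
    def normalize(edges):
        # Store each undirected edge with its smaller endpoint first.
        return {tuple(sorted(edge)) for edge in edges}

    return normalize(ne_edges) | normalize(lc_edges)
```

The merged edge set can then be clustered by connected components exactly as for a single method.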

Motivation
• Have you ever had trouble when using a common name as a query in a search engine?
  – We can reach the target Web page, but this often forces us to do hard and time-consuming work.
  – When different real-world entities (persons/places/organizations) have the same name, the reference from the name to the entity can be ambiguous.

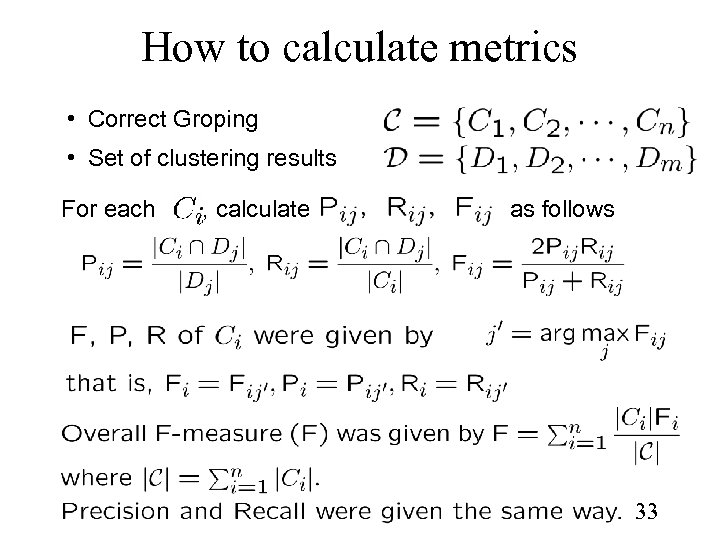

How to calculate metrics
• Correct grouping
• Set of clustering results
For each , calculate as follows
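The slides cite [Larsen and Aone, 1999] for the metrics, but the formulas on this slide were lost in extraction. The sketch below follows the commonly used clustering F-measure from that line of work: each correct group is matched with the cluster that gives it the highest F, and the per-group scores are averaged with weights proportional to group size. Whether the authors computed precision and recall in exactly this way is an assumption.

```python
def clustering_f_measure(correct_groups, clusters):
    """Size-weighted average of the best F-measure for each correct group.

    ASSUMPTION: this is the usual class-vs-cluster F-measure; the exact
    variant used in the evaluation is not recoverable from the slides.
    Both arguments are lists of sets of page identifiers.
    """
    total = sum(len(group) for group in correct_groups)
    overall = 0.0
    for group in correct_groups:
        best = 0.0
        for cluster in clusters:
            overlap = len(group & cluster)
            if overlap == 0:
                continue
            precision = overlap / len(cluster)
            recall = overlap / len(group)
            best = max(best, 2 * precision * recall / (precision + recall))
        overall += len(group) / total * best
    return overall
```

For correct groups {0, 1, 2} and {3, 4} against clusters {0, 1} and {2, 3, 4}, each group's best match has F = 0.8, so the overall score is 0.8.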

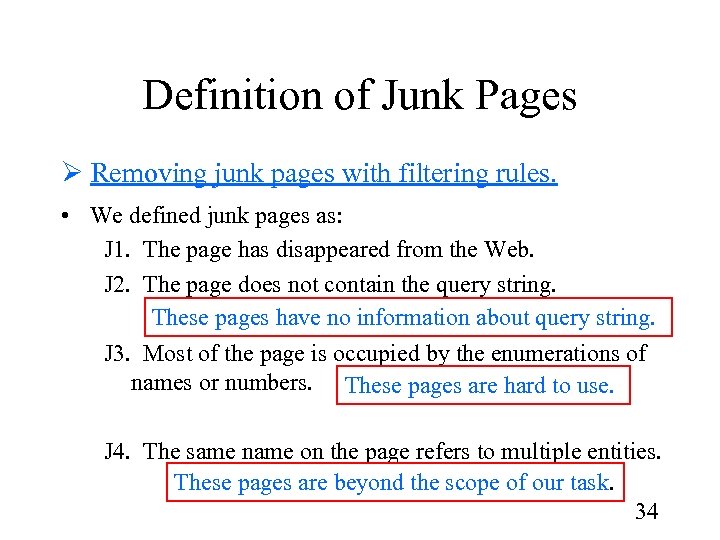

Definition of Junk Pages
Ø Removing junk pages with filtering rules.
• We defined junk pages as:
  J1. The page has disappeared from the Web.
  J2. The page does not contain the query string.
      – These pages have no information about the query string.
  J3. Most of the page is occupied by enumerations of names or numbers.
      – These pages are hard to use.
  J4. The same name on the page refers to multiple entities.
      – These pages are beyond the scope of our task.

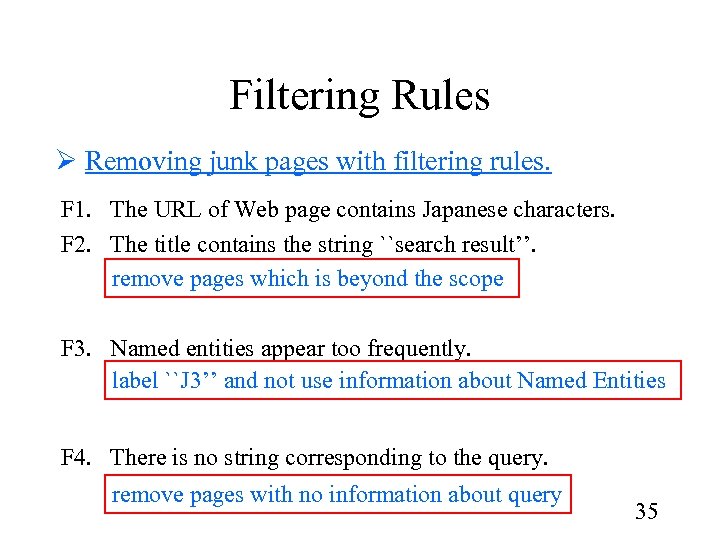

Filtering Rules
Ø Removing junk pages with filtering rules.
F1. The URL of the Web page contains Japanese characters.
F2. The title contains the string "search result".
    – Remove pages that are beyond the scope.
F3. Named entities appear too frequently.
    – Label the page "J3" and do not use information about Named Entities.
F4. There is no string corresponding to the query.
    – Remove pages with no information about the query.
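The four rules can be sketched as a single function that returns an action for each page. Several details are assumptions made for illustration: the Unicode ranges used to detect Japanese characters, the English title string (the actual system works on Japanese pages), and the `max_ne_ratio` threshold standing in for "too frequently". The removal rules are checked before F3, since a removed page needs no label.

```python
import re

# Hiragana, katakana, and the common CJK ideograph block (illustrative ranges).
JAPANESE_CHARS = re.compile(r"[\u3040-\u30ff\u4e00-\u9fff]")


def filter_page(url, title, text, query, ne_count, token_count, max_ne_ratio=0.5):
    """Return "remove", "label_J3", or "keep" for one page.

    ASSUMPTION: `max_ne_ratio` is a hypothetical threshold, and the title
    check uses the English string from the slide.
    """
    # F1 and F2: the page is beyond the scope of the task.
    if JAPANESE_CHARS.search(url) or "search result" in title.lower():
        return "remove"
    # F4: the page has no information about the query.
    if query not in text:
        return "remove"
    # F3: mostly an enumeration of names; keep it but ignore its NE information.
    if token_count > 0 and ne_count / token_count > max_ne_ratio:
        return "label_J3"
    return "keep"
```

A page labeled `"label_J3"` would still take part in clustering, but only through methods that do not rely on Named Entities.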

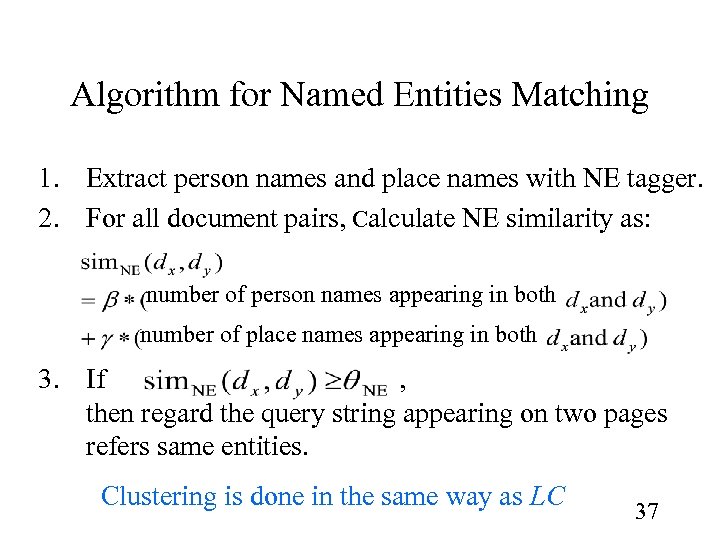

Algorithm for Named Entities Matching
1. Extract person names and place names with an NE tagger.
2. For all document pairs, calculate the NE similarity from:
   • the number of person names appearing in both documents
   • the number of place names appearing in both documents
3. If the similarity satisfies the threshold condition, regard the query string appearing on the two pages as referring to the same entity.
Clustering is done in the same way as for LC.
