1a2c109b6e321667870274b121220d06.ppt

- Number of slides: 26

Native Infiniband Storage
John Josephakis, VP, DataDirect Networks
St. Louis, November 2007

Summary
§ Infiniband Basics
§ IB Storage Protocols
§ File System Scenarios
§ DDN Silicon Storage Appliance

Infiniband Basics
§ Quick introduction
  – RDMA
  – Infiniband
§ RDMA Storage Protocols
  – SRP (SCSI RDMA Protocol)
  – iSER (iSCSI Extensions for RDMA)

RDMA
§ RDMA (Remote Direct Memory Access)
  – Lets a host read and write another host's main memory directly (zero-copy networking)
  – No CPU, cache, or context-switching overhead for data transfers
  – High throughput
  – Low latency
  – Application data is delivered directly to the network, without intermediate operating-system buffers

Infiniband
§ Collapses network and channel fabrics into one consolidated interconnect
  – Bidirectional; Double Data Rate (DDR) and Quad Data Rate (QDR)
§ Low-latency, very high-performance serial I/O
§ Greatly reduces complexity and cost
  – High performance per system reduces the number of storage systems needed
  – A single interconnect adapter serves both server-to-server and server-to-storage traffic

Bandwidth per link, by number of IB lanes:

| IB lanes | SDR | DDR | QDR |
|---|---|---|---|
| 1X | 0.25 GB/s | 0.5 GB/s | 1 GB/s |
| 4X | 1 GB/s | 2 GB/s | 4 GB/s |
| 8X | 2 GB/s | 4 GB/s | 8 GB/s |
| 12X | 3 GB/s | 6 GB/s | 12 GB/s |

Source: InfoStor
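The table's figures follow from simple arithmetic. A small sketch, assuming per-lane signaling rates of 2.5 Gb/s (SDR), 5 Gb/s (DDR), and 10 Gb/s (QDR) and the 8b/10b line coding used by these generations, so that only 8 of every 10 signaled bits carry data; the function name `link_data_rate_gbytes` is illustrative only:

```python
# Per-lane signaling rates, in Gb/s, for the first three InfiniBand generations.
SIGNALING_GBPS = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}


def link_data_rate_gbytes(lanes: int, rate: str) -> float:
    """Usable data bandwidth of one IB link, in GB/s.

    SDR, DDR and QDR use 8b/10b coding: 8 data bits per 10 signaled bits.
    """
    data_gbps_per_lane = SIGNALING_GBPS[rate] * 8 / 10
    return lanes * data_gbps_per_lane / 8  # 8 bits per byte


# Rebuild the table row by row.
for lanes in (1, 4, 8, 12):
    row = [link_data_rate_gbytes(lanes, r) for r in ("SDR", "DDR", "QDR")]
    print(f"{lanes}X", row)
```

Each row is just the 1X row multiplied by the lane count, which is why the 4X, 8X, and 12X columns scale linearly.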

SRP (SCSI RDMA Protocol)
  – SRP == SCSI over Infiniband
  – Similar to FCP (SCSI over Fibre Channel), except that the command information unit (CMD IU) carries the memory addresses from which to get, or into which to place, the data
  – Initiator drivers available with IB Gold and OpenIB

SRP (SCSI RDMA Protocol)
§ Advantages
  – Native Infiniband protocol
  – No new hardware required
  – Requests carry buffer information
  – All data transfers occur through Infiniband RDMA
  – No need for multiple packets
  – No flow control necessary for data packets
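The key difference from FCP, that the command itself tells the target where the data lives, can be sketched as a toy model. This is not the SRP wire format: it assumes a direct buffer descriptor shaped like SRP's (64-bit virtual address, 32-bit memory key, 32-bit length, big-endian), and `InitiatorMemory` and `target_handle_read` are hypothetical stand-ins for registered memory and an RDMA Write performed by the HCA.

```python
import struct

# Assumed descriptor layout: big-endian 64-bit address, 32-bit key, 32-bit length.
DESC_FORMAT = ">QII"


def pack_descriptor(virtual_address: int, r_key: int, length: int) -> bytes:
    """Encode where the target should place (or fetch) the data."""
    return struct.pack(DESC_FORMAT, virtual_address, r_key, length)


class InitiatorMemory:
    """Toy stand-in for memory regions the initiator registered with its HCA."""

    def __init__(self):
        self.regions = {}  # r_key -> (base virtual address, buffer)

    def register(self, r_key: int, base_va: int, size: int) -> int:
        self.regions[r_key] = (base_va, bytearray(size))
        return base_va

    def rdma_write(self, va: int, r_key: int, payload: bytes) -> None:
        """Place payload directly into the registered buffer (no staging copy)."""
        base, buf = self.regions[r_key]
        offset = va - base
        if offset < 0 or offset + len(payload) > len(buf):
            raise ValueError("RDMA write outside the registered region")
        buf[offset:offset + len(payload)] = payload


def target_handle_read(descriptor: bytes, disk_data: bytes, mem: InitiatorMemory) -> None:
    """Hypothetical target: decode the descriptor from the command, then RDMA Write."""
    va, r_key, length = struct.unpack(DESC_FORMAT, descriptor)
    mem.rdma_write(va, r_key, disk_data[:length])


mem = InitiatorMemory()
va = mem.register(r_key=0x1234, base_va=0x10000, size=16)
desc = pack_descriptor(va + 4, 0x1234, 8)  # "put 8 bytes at offset 4"
target_handle_read(desc, b"ABCDEFGHIJKL", mem)
print(bytes(mem.regions[0x1234][1]))
```

Because the request already names the destination, the target needs no extra round trip to ask for a buffer, which is what the "requests carry buffer information" bullet refers to.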

iSER (iSCSI Extensions for RDMA)
§ iSER leverages iSCSI management and discovery
  – Zero-configuration, global storage naming (SLP, iSNS)
  – Change notifications and active monitoring of devices and initiators
  – High availability, with 3 levels of automated recovery
  – Multipathing and storage aggregation
  – Industry-standard management interfaces (MIB)
  – Third-party storage managers
  – Security (partitioning, authentication, central login control, ...)
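Global storage naming in iSCSI relies on qualified names such as the `iqn.` form defined by RFC 3720: `iqn.<yyyy-mm>.<reversed domain>[:<unique string>]`. A simplified parser, assuming only the `iqn.` form (EUI-64 `eui.` names are rejected) and not enforcing every character rule of the specification; the example names are hypothetical:

```python
import re

# iqn.<yyyy-mm>.<reversed domain of the naming authority>[:<unique string>]
IQN = re.compile(r"^iqn\.(\d{4})-(\d{2})\.([a-z0-9][a-z0-9.-]*)(?::(.+))?$")


def parse_iqn(name: str) -> dict:
    """Split an iqn-format iSCSI name into its parts (simplified check)."""
    m = IQN.match(name.lower())  # normalize to lowercase before matching
    if m is None:
        raise ValueError(f"not an iqn-format iSCSI name: {name!r}")
    year, month, authority, unique = m.groups()
    if not 1 <= int(month) <= 12:
        raise ValueError(f"bad month in iSCSI name: {name!r}")
    return {
        "date": f"{year}-{month}",
        "domain": ".".join(reversed(authority.split("."))),
        "unique": unique,  # None when the name has no ':' suffix
    }


print(parse_iqn("iqn.2001-04.com.example:storage:diskarrays-sn-a8675309"))
```

The date plus reversed domain is what makes the name globally unique without a central registry: whoever owned the domain at that date controls everything after the colon.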

iSCSI mapping for iSER
Diagram: an iSCSI PDU (BHS, AHS, header digest HD, data, data digest DD) mapped onto iSER/RDMA transport frames. BHS and AHS travel in a hardware RC Send; the data travels in a hardware RC RDMA Read/Write; HD and DD are dropped (marked X).
§ iSER eliminates the traditional iSCSI/TCP bottlenecks:
  – Zero copy using RDMA
  – CRC calculated by hardware
  – Works with message boundaries instead of streams
  – Transport protocol implemented in hardware (minimal CPU cycles per I/O)
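The mapping in the diagram can be sketched as a lookup from PDU type to transport operation. This is a simplified illustration, not a full account of the iSER specification (RFC 5046): it covers only the four PDU types the slides mention, and `to_iser` is a hypothetical helper.

```python
from enum import Enum


class Op(Enum):
    SEND = "RC Send"
    RDMA_WRITE = "RDMA Write"  # target pushes data into initiator memory
    RDMA_READ = "RDMA Read"    # target pulls data from initiator memory


BHS_LEN = 48  # the iSCSI Basic Header Segment is 48 bytes

# Simplified mapping from iSCSI PDU type to the operation that carries it.
PDU_TRANSPORT = {
    "SCSI Command": Op.SEND,
    "SCSI Response": Op.SEND,
    "Data-In": Op.RDMA_WRITE,            # SCSI reads
    "Data-Out (solicited)": Op.RDMA_READ,  # SCSI writes
}


def to_iser(pdu_type: str, bhs: bytes, ahs: bytes = b"", data: bytes = b""):
    """Return (operation, payload) for one iSCSI PDU.

    No digests are passed in: under iSER the header and data digests are
    dropped, because the IB hardware already computes CRCs on every packet.
    """
    if len(bhs) != BHS_LEN:
        raise ValueError("BHS must be 48 bytes")
    op = PDU_TRANSPORT[pdu_type]
    if op is Op.SEND:
        # Control PDUs: headers (plus any immediate data) ride in one message.
        return op, bhs + ahs + data
    # Data PDUs: only the payload moves, directly between registered buffers.
    return op, data


print(to_iser("Data-In", bytes(48), data=b"block"))
```

Working with messages rather than a TCP byte stream is what lets each control PDU arrive as one unit, so the receiver never has to search a stream for PDU boundaries.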

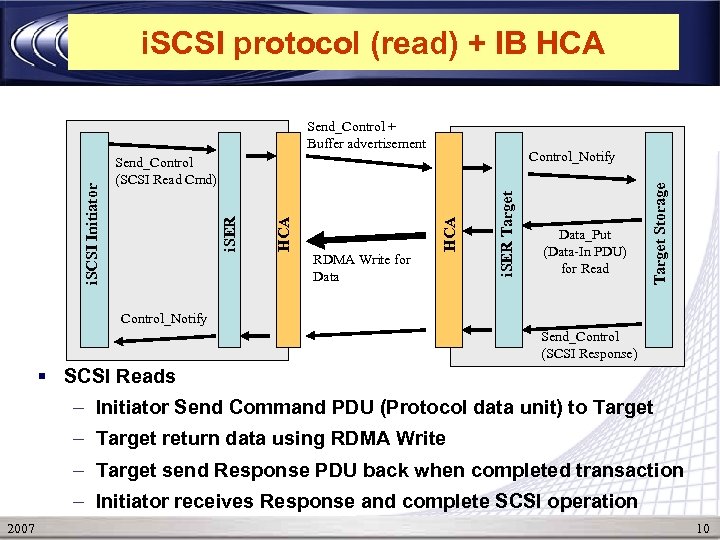

iSCSI protocol (read) + IB HCA
Diagram: initiator side (iSCSI initiator, iSER, HCA) and target side (HCA, iSER, target storage). Messages: Send_Control (SCSI Read Cmd) + buffer advertisement; RDMA Write for Data_Put (Data-In PDU); Send_Control (SCSI Response). Each Send_Control arrives as a Control_Notify on the receiving side.
§ SCSI reads
  – Initiator sends a Command PDU (protocol data unit) to the target
  – Target returns the data using RDMA Write
  – Target sends a Response PDU back when the transaction completes
  – Initiator receives the Response and completes the SCSI operation
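The four read steps can be traced in a small simulation. It is a sketch under stated assumptions: both endpoints live in one process, the "RDMA Write" is a plain slice assignment into the advertised buffer, and `Initiator`, `Target`, and `iser_scsi_read` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Initiator:
    buffers: dict = field(default_factory=dict)    # task tag -> registered buffer
    completed: list = field(default_factory=list)  # finished task tags


@dataclass
class Target:
    disk: bytes
    wire_log: list = field(default_factory=list)   # (operation, content, tag)


def iser_scsi_read(ini: Initiator, tgt: Target, tag: int, offset: int, length: int) -> bytes:
    if offset + length > len(tgt.disk):
        raise ValueError("read past end of device")
    # 1. Initiator registers a buffer and advertises it with the command (Send).
    ini.buffers[tag] = bytearray(length)
    tgt.wire_log.append(("Send", "SCSI Read Cmd + buffer advertisement", tag))
    # 2. Target places the data directly into the advertised buffer (RDMA Write).
    ini.buffers[tag][:] = tgt.disk[offset:offset + length]
    tgt.wire_log.append(("RDMA Write", "Data-In", tag))
    # 3. Target sends the Response PDU once the transfer is complete (Send).
    tgt.wire_log.append(("Send", "SCSI Response", tag))
    # 4. Initiator receives the Response and completes the SCSI operation.
    ini.completed.append(tag)
    return bytes(ini.buffers[tag])


tgt = Target(disk=b"0123456789")
ini = Initiator()
print(iser_scsi_read(ini, tgt, tag=7, offset=2, length=4))
for entry in tgt.wire_log:
    print(entry)
```

Only two messages involve the initiator's CPU (the command and the response); the data itself moves by RDMA Write without an intermediate copy.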

IB/FC and IB/IP Routers
  – IB/FC and IB/IP routers make it easy to integrate Infiniband into existing iSCSI fabrics
  – Both the Service Location Protocol (SLP) and the Internet Storage Name Service (iSNS) allow smooth iSCSI discovery in the presence of Infiniband

iSCSI Discovery with Service Location Protocol (SLP)
§ Client broadcasts: "I'm xx, where is my storage?"
§ FC routers discover the FC SAN
§ Relevant iSCSI targets and FC gateways respond
§ Client may record multiple possible targets and portals
Diagram: iSCSI client, IB-to-FC routers, IB-to-IP router, and native IB RAID, connected through a GbE switch and an FC switch.
Portal: a network end-point (IP + port), indicating a path
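The broadcast-style flow above can be modeled in a few lines. This ignores the SLP wire format entirely (real SLP, RFC 2608, uses service requests and service URLs); `Responder` and `discover` are hypothetical, and 3260 is assumed as the portal port because it is the well-known iSCSI port.

```python
from dataclasses import dataclass

ISCSI_PORT = 3260  # well-known iSCSI TCP port


@dataclass(frozen=True)
class Portal:
    """A network end-point (IP + port) indicating a path to a target."""
    ip: str
    port: int = ISCSI_PORT


@dataclass
class Responder:
    """Hypothetical iSCSI target or FC gateway listening for discovery requests."""
    target: str
    portals: tuple
    allowed_initiators: frozenset

    def answer(self, initiator: str):
        if initiator not in self.allowed_initiators:
            return []  # not this client's storage: stay silent
        return [(self.target, p) for p in self.portals]


def discover(initiator: str, responders) -> dict:
    """Ask every responder; record every (target, portal) pair that answers."""
    found = {}
    for r in responders:
        for target, portal in r.answer(initiator):
            found.setdefault(target, []).append(portal)
    return found


raid = Responder("iqn.2007-11.com.example:raid1",
                 (Portal("10.0.0.5"), Portal("10.0.1.5")), frozenset({"client1"}))
gateway = Responder("iqn.2007-11.com.example:fcgw",
                    (Portal("10.0.2.9"),), frozenset({"client2"}))
print(discover("client1", [raid, gateway]))
```

Keeping every portal for a target, rather than only the first answer, is what later enables multipathing: two portals are two independent paths to the same storage.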

iSCSI Discovery with Internet Storage Name Service (iSNS)
§ FC routers discover the FC SAN
§ iSCSI targets and FC gateways report to the iSNS server
§ Client asks the iSNS server: "I'm xx, where is my storage?"
§ iSNS responds with targets and portals
§ Resources may be divided into domains
§ Changes are notified immediately (State Change Notifications, SCNs)
Diagram: iSCSI client, iSNS server, IB-to-FC routers, IB-to-IP router, and native IB RAID, connected through a GbE switch and an FC switch.
iSNS or SLP run over IPoIB or GbE, and can span both networks
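In contrast to SLP's ask-everyone approach, iSNS centralizes the directory. A toy in-memory sketch of the three behaviors on this slide (registration, domain-scoped queries, immediate change notification); `ToyISNS` is hypothetical and does not follow the RFC 4171 message format, and the names are made up for illustration:

```python
from collections import defaultdict


class ToyISNS:
    """In-memory stand-in for an iSNS server (not the RFC 4171 wire format)."""

    def __init__(self):
        self.domains = defaultdict(set)          # discovery domain -> member names
        self.portals = {}                        # target name -> list of (ip, port)
        self.scn_listeners = defaultdict(list)   # domain -> notification callbacks

    def add_to_domain(self, domain: str, member: str) -> None:
        self.domains[domain].add(member)

    def subscribe(self, domain: str, callback) -> None:
        self.scn_listeners[domain].append(callback)

    def register_target(self, name: str, portals) -> None:
        self.portals[name] = list(portals)
        # State change notification: tell listeners of every domain holding this target.
        for domain, members in self.domains.items():
            if name in members:
                for notify in self.scn_listeners[domain]:
                    notify(name)

    def query(self, initiator: str) -> dict:
        """Return only the targets that share a discovery domain with the initiator."""
        visible = set()
        for members in self.domains.values():
            if initiator in members:
                visible |= members
        return {t: self.portals[t] for t in visible if t in self.portals}


isns = ToyISNS()
isns.add_to_domain("dd-hpc", "client1")
isns.add_to_domain("dd-hpc", "raid1")
isns.add_to_domain("dd-other", "raid2")
events = []
isns.subscribe("dd-hpc", events.append)
isns.register_target("raid1", [("10.0.0.5", 3260)])
isns.register_target("raid2", [("10.0.0.6", 3260)])
print(isns.query("client1"), events)
```

Domains act as the partitioning mechanism: `client1` never learns about `raid2`, and only listeners in the affected domain receive the change notification.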

SRP vs iSER
§ Both SRP and iSER make use of RDMA
  – Source and destination addresses are carried in the SCSI transfer
  – Zero memory copy
§ SRP uses
  – Direct server connections
  – Small, controlled environments
  – Products: DDN, LSI, Mellanox, …
§ iSER uses
  – Large, switch-connected networks
  – Discovery fully supported
  – Products: Voltaire/Falconstor, …

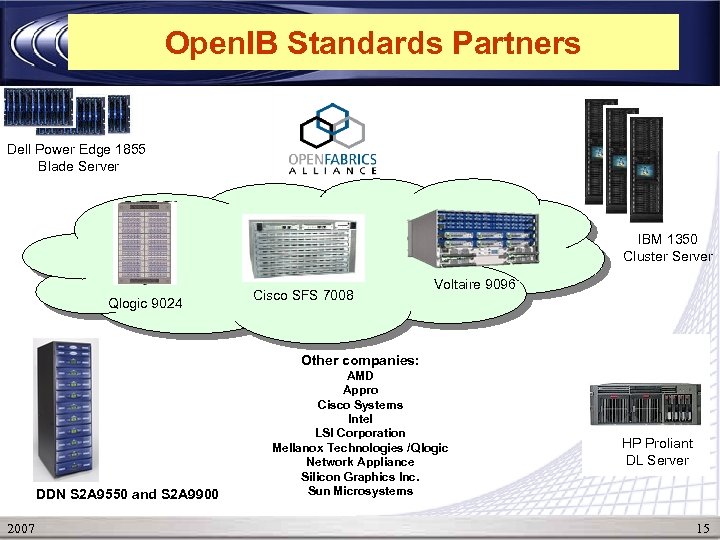

OpenIB Standards Partners
Shown: Dell PowerEdge 1855 Blade Server, IBM 1350 Cluster Server, HP ProLiant DL Server, Qlogic 9024, Cisco SFS 7008, Voltaire 9096, DDN S2A 9550 and S2A 9900
Other companies: AMD, Appro, Cisco Systems, Intel, LSI Corporation, Mellanox Technologies, Qlogic, Network Appliance, Silicon Graphics Inc., Sun Microsystems

Silicon Storage Appliance Storage System: The Storage System Difference

IB and S2A 9550 / S2A 9900
§ Native IB and RDMA (and FC-4)
§ Greatly reduces file system complexity and cost
  – High performance reduces the number of storage systems needed
  – PowerLUNs reduce the number of LUNs and the striping required
§ Supports >1000 Fibre Channel and SATA disks
§ Parallelism provides inherent load balancing, multipathing, and zero-time failover
§ Supports all open parallel and shared SAN file systems (and some that aren't so open)
§ Supports all common operating systems
  – Linux, Windows, IRIX, AIX, Solaris, Tru64, OS X, and more

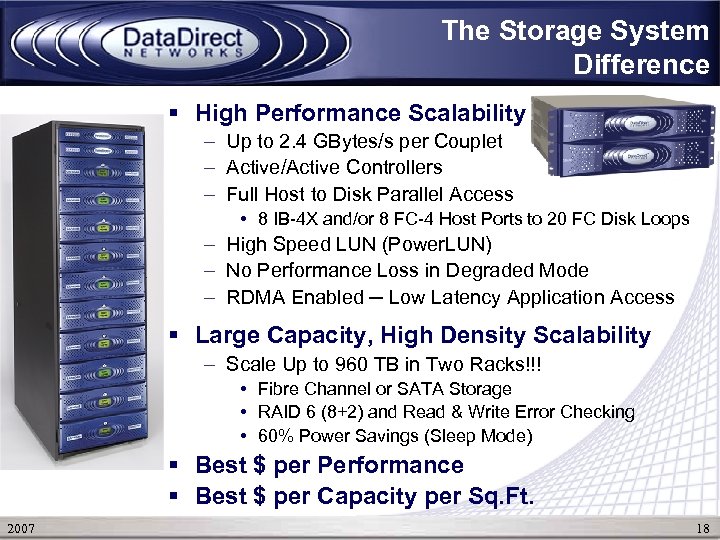

The Storage System Difference
§ High-performance scalability
  – Up to 2.4 GB/s per couplet
  – Active/active controllers
  – Full parallel access from host to disk
    • 8 IB 4X and/or 8 FC-4 host ports to 20 FC disk loops
  – High-speed LUN (PowerLUN)
  – No performance loss in degraded mode
  – RDMA-enabled: low-latency application access
§ Large-capacity, high-density scalability
  – Scales up to 960 TB in two racks
    • Fibre Channel or SATA storage
    • RAID 6 (8+2) and read and write error checking
    • 60% power savings (sleep mode)
§ Best $ per performance
§ Best $ per capacity per sq. ft.

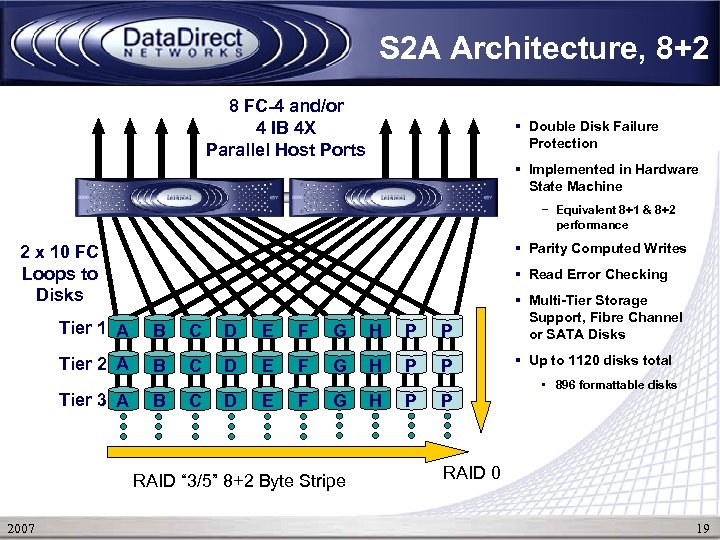

S2A Architecture, 8+2
Diagram: 8 FC-4 and/or 4 IB 4X parallel host ports in front of the controller; 2 x 10 FC loops to the disks. Tiers 1, 2, and 3 each form a RAID "3/5" 8+2 byte stripe across data disks A to H plus two parity disks (P P).
§ Double disk failure protection
§ Implemented in a hardware state machine
  – Equivalent 8+1 and 8+2 performance
§ Parity-computed writes
§ Read error checking
§ Multi-tier storage support, Fibre Channel or SATA disks
§ Up to 1120 disks total
  • 896 formattable disks RAID 0
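The slide does not say how the S2A hardware computes its two parity bytes. As an illustration only, the sketch below swaps in the common P+Q RAID 6 scheme (P is the XOR of the data bytes; Q is a Reed-Solomon syndrome over GF(2^8) with polynomial 0x11D and generator 2, the field used, for example, by Linux md) and applies it to one 8+2 byte stripe: data bytes A to H plus two parity bytes. It shows why any two lost data bytes in a stripe can be rebuilt.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11D
        b >>= 1
    return product


def gf_pow(a: int, n: int) -> int:
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a: int) -> int:
    """Multiplicative inverse: a^254, because a^255 == 1 for any nonzero a."""
    return gf_pow(a, 254)


def pq_parity(data):
    """P = XOR of all data bytes; Q = XOR of g^i * D_i with generator g = 2."""
    p = q = 0
    for i, d in enumerate(data):
        p ^= d
        q ^= gf_mul(gf_pow(2, i), d)
    return p, q


def recover_two(data, x, y, p, q):
    """Rebuild data bytes at positions x != y; their values in `data` are ignored."""
    survivors = [0 if i in (x, y) else d for i, d in enumerate(data)]
    p_partial, q_partial = pq_parity(survivors)
    p_delta = p ^ p_partial  # = D_x ^ D_y
    q_delta = q ^ q_partial  # = g^x*D_x ^ g^y*D_y
    gx, gy = gf_pow(2, x), gf_pow(2, y)
    # Substitute D_y = p_delta ^ D_x into the Q equation and solve for D_x.
    d_x = gf_mul(gf_inv(gx ^ gy), q_delta ^ gf_mul(gy, p_delta))
    return d_x, p_delta ^ d_x


stripe = [0x41, 0x42, 0x43, 0x44, 0x45, 0x46, 0x47, 0x48]  # data bytes A..H
p, q = pq_parity(stripe)
print(recover_two(stripe, 2, 5, p, q))  # rebuild C and F
```

With 8 data bytes and 2 parity bytes per stripe, 8 of every 10 disks carry data (80% usable capacity) while any two disk failures remain survivable.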

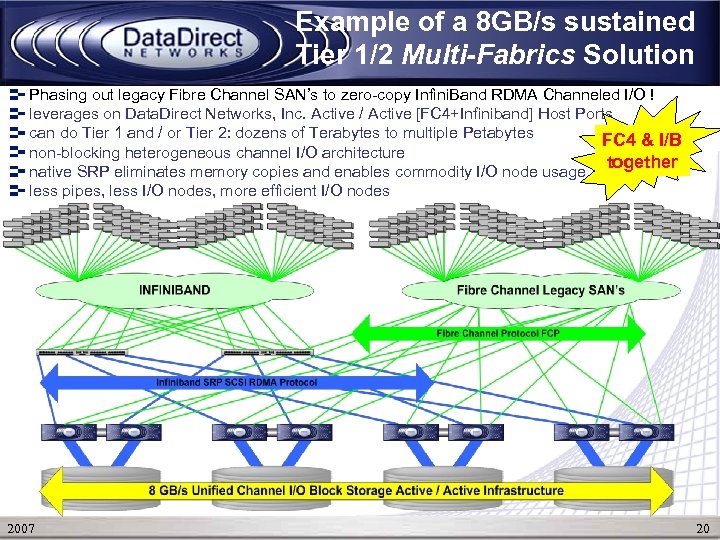

Example of an 8 GB/s sustained Tier 1/2 multi-fabric solution
§ Phasing out legacy Fibre Channel SANs in favor of zero-copy InfiniBand RDMA channeled I/O
§ Leverages DataDirect Networks, Inc. active/active [FC-4 + Infiniband] host ports, which can serve Tier 1 and/or Tier 2: dozens of terabytes to multiple petabytes
§ The FC-4 and IB non-blocking, heterogeneous channel I/O architecture, together with native SRP, eliminates memory copies and enables the use of commodity I/O nodes
§ Fewer pipes, fewer I/O nodes, more efficient I/O nodes

Infiniband-less File System Scenario
§ Standard SCSI socket block-level transfers
Diagram elements: CPU nodes, I/O node with file system memory, I/O server nodes, legacy FC fabric, RAID system (RAID cache, raw disk). Along the path, data is copied multiple times through socket buffers and adapter buffers.

Infiniband-based File System Scenario 1
§ RDMA block-level transfers
§ Zero memory copy lets the storage system RDMA data directly into the file system's memory space, from which it can then be RDMAed to the client side as well
Diagram elements: CPU nodes, Infiniband fabric, I/O node with file system memory, RAID with RAID cache.

Infiniband-based File System Scenario 2
§ RDMA block-level transfers
§ Zero memory copy
§ Zero server hops
Diagram elements: CPU nodes, Infiniband fabric, file system MDS, RAID with RAID cache and raw disk.
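The three scenarios differ in two countable ways: how many staging copies the data passes through and how many servers sit in the data path. The read paths below are hypothetical simplifications loosely following the buffers named in the diagrams, not measured data flows; `summarize` just counts them.

```python
# Hypothetical read paths, from the RAID cache to the application on a CPU node.
SCENARIOS = {
    "Infiniband-less": [
        "RAID cache", "adapter buffer", "socket buffer", "I/O node FS memory",
        "socket buffer", "adapter buffer", "CPU node memory",
    ],
    "IB scenario 1": ["RAID cache", "I/O node FS memory", "CPU node memory"],
    "IB scenario 2": ["RAID cache", "CPU node memory"],
}

# Buffers that exist only to stage a copy for the next transfer.
STAGING = {"adapter buffer", "socket buffer"}


def summarize(path) -> dict:
    return {
        "staging_copies": sum(buf in STAGING for buf in path),
        "server_hops": sum(buf.startswith("I/O node") for buf in path),
    }


for name, path in SCENARIOS.items():
    print(name, summarize(path))
```

Scenario 1 removes the staging copies but keeps one server hop (data is RDMAed into and out of the I/O node's file system memory); Scenario 2 removes the hop as well, leaving the MDS out of the data path.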

Infiniband Environments
Diagram: HPCSS data building blocks (2.4+ GB/s throughput each) and the HPCSS metadata service (thousands of file ops/s, with a standby), connected by an SDR or DDR Infiniband network, plus a customer-supplied 10/1000 Mbit Ethernet network.
HPCSS Metadata Service
§ Metadata storage holds 1.5 M files in the standard configuration; more is optional
§ Customer must supply 2 PCI-E Infiniband cards (one per MDS)
§ Customer must supply a separate Ethernet management network for failover services
HPCSS Data Building Block
§ Disk configured separately:
  – Bundled disk solutions available
  – 8 host ports required
§ Customer must supply 4 PCI-E Infiniband cards (one per OSS)
§ Customer must supply a separate Ethernet management network for failover services
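The per-block requirements above lend themselves to a rough sizing calculation. A sketch, assuming throughput scales linearly with the number of data building blocks at the slide's lower bound of 2.4 GB/s each, and that one metadata service (2 MDS) is shared by all blocks; `size_hpcss` is a hypothetical helper, not a DDN tool:

```python
import math

BLOCK_GBPS = 2.4          # "2.4+ GB/s" per data building block (lower bound)
HCAS_PER_BLOCK = 4        # one PCI-E Infiniband card per OSS
HOST_PORTS_PER_BLOCK = 8  # host ports required per building block
MDS_HCAS = 2              # one PCI-E Infiniband card per MDS


def size_hpcss(target_gbps: float) -> dict:
    """Estimate building blocks, host ports and IB cards for a throughput target."""
    blocks = math.ceil(target_gbps / BLOCK_GBPS)
    return {
        "data_blocks": blocks,
        "host_ports": blocks * HOST_PORTS_PER_BLOCK,
        "ib_cards": blocks * HCAS_PER_BLOCK + MDS_HCAS,
    }


print(size_hpcss(8.0))
```

For an 8 GB/s target this gives 4 building blocks, 32 host ports, and 18 customer-supplied IB cards; real deployments may scale less than linearly.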

IB Performance Results
§ Settings differ, but here is what we have seen:
  – S2A 9550 with Lustre or GPFS (S2A with SRP)
    • ~2.4-2.6 GB/s per S2A 9550
    • One stream: about 650 MB/s
  – We have observed similar performance with both, although GPFS is still behind Lustre in IB deployments
§ With the upcoming S2A 9900 using DDR 2, we expect:
    • ~5-5.6 GB/s per S2A 9900
    • One stream: about 1.25 GB/s via each IB PCIe bus with one HCA
§ Over the next 3-9 months we expect significant improvements in both, as file systems make better use of larger block sizes.
§ At present, Lustre is really the only file system that has an IB NAL.
§ The numbers above are averages over FC and SATA disks, assuming best-case scenarios. For small random I/O, IB technology and SATA disks are not optimal, and those numbers can drop as low as 1 GB/s per S2A or 200 MB/s per stream.
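One practical reading of these figures is how many concurrent streams it takes to reach a controller's aggregate rate. A sketch, assuming streams add linearly until the aggregate limit, using the low end of each quoted range and 1 GB/s = 1000 MB/s:

```python
# (aggregate MB/s, single-stream MB/s) from the figures above.
CASES = {
    "S2A 9550, measured": (2400, 650),
    "S2A 9900, expected": (5000, 1250),
    "small random I/O, worst case": (1000, 200),
}


def streams_to_saturate(aggregate_mbps: int, per_stream_mbps: int) -> int:
    """Smallest stream count whose combined rate reaches the aggregate (integer ceiling)."""
    return -(-aggregate_mbps // per_stream_mbps)


for name, (agg, per) in CASES.items():
    print(f"{name}: {streams_to_saturate(agg, per)} streams")
```

In all three cases a handful of streams (4 to 5) would be enough to reach the quoted per-controller rate, so a single client stream cannot exercise a full S2A on its own.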

Reference Sites
§ LLNL, Sandia, ORNL, NASA, DOD
§ NSF Sites
  – NCSA, PSC, TACC, SDSC, IU
§ EMEA
  – CEA, AWE, Dresden, Cineca, DKRZ
