- Number of slides: 97

National Sun Yat-Sen University

System Support for Scalable, Reliable and Highly Manageable Internet Services
Chu-Sing Yang
Department of Computer Science and Engineering
National Sun Yat-Sen University

Outline
- Introduction
- Proposed System
  - Request Routing Mechanism
  - Management System
- Content-aware Intelligence
- Work in Progress
- Conclusion

Background
- The Internet has become the most important client-server application platform
- More and more Internet services are emerging
- The number of Internet users continues to grow exponentially, at ever higher connection speeds
- The Internet is becoming a mission-critical business delivery infrastructure
- The desire to use the Web to conduct business transactions or deliver services is increasing at an amazing rate
- Huge demand for high-performance, scalable, highly reliable Internet servers

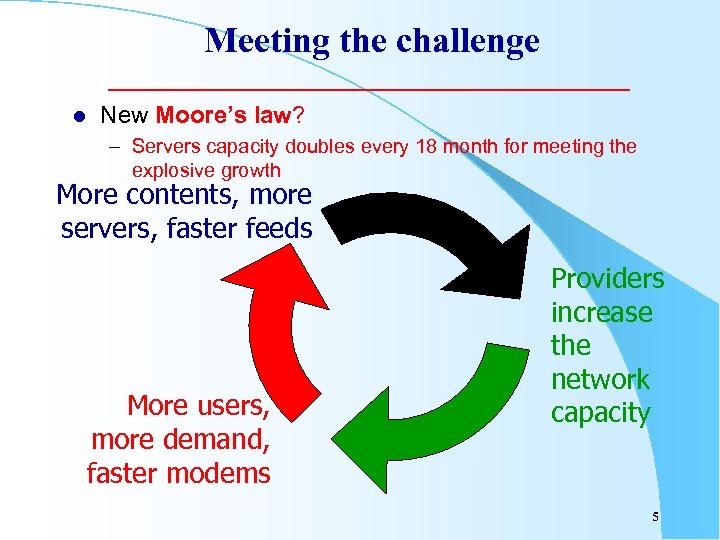

Meeting the challenge
- New Moore's law?
  - Server capacity doubles every 18 months to meet the explosive growth
- More content, more servers, faster feeds
- More users, more demand, faster modems
- Providers increase the network capacity

Web has new requirements
Before
- An economical platform for information sharing and publishing (non-critical information)
- 90 percent of information represented by text and images
- Infrequent maintenance and updates
- Security is not important
- No guarantee on service availability
- Highly available performance
Now
- An important platform for critical services
- More sophisticated content, e.g., a larger percentage of dynamic content or streaming data
- Content changes frequently
- Security becomes a great concern
- Companies are evaluated even on the basis of their websites
- Explosive growth of the user population

Meeting the challenge
- The first-generation Web infrastructure was never designed to handle the unique traffic patterns of the Web, which today accounts for 80% of Internet usage.
- Most current medium and large Web service providers are suffering from server overload
  - Yahoo, Google, Altavista, CNN, Microsoft, ...
  - A single monolithic server system has difficulty coping with these challenges.
- We need a scalable Internet service architecture that guarantees the service expectations of all Web services!

Essential Requirements of Internet Server
- High performance
- Scalability
- High reliability
- Robustness
- QoS

Scalable Server Architecture
- Feasible solution: server cluster (server farm)
- A collection of independent computer systems working together as if they were a single system
- Advantages
  - Scalable: grow on demand
  - Highly available: redundancy
  - Cost-effective
- Most current medium and large Web service providers adopt this architecture. This trend is accelerating!

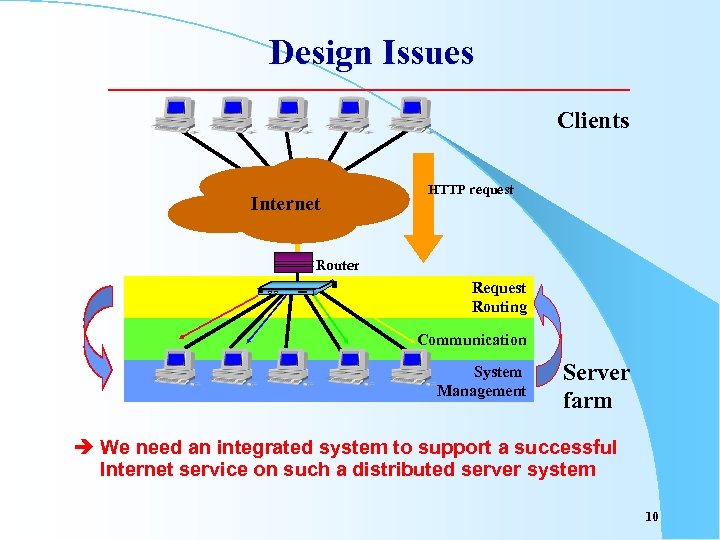

Design Issues
[Figure labels: Clients, Internet, HTTP request, Router, Request Routing, Communication, System Management, Server farm]
- We need an integrated system to support a successful Internet service on such a distributed server system

Our Solutions -- Content-aware Web Cluster System
- Content-aware Distributor (Server Load Balancer)
  - Content-aware routing (Layer-7 routing)
  - Sophisticated load balancing
  - Service differentiation
  - QoS
  - Fault resilience
  - Transaction support
- Distributed Server Management System
  - System management
  - Intelligent content placement and management
  - Supporting differentiated service
  - Supporting QoS
  - Fault management

Outline
- Introduction
- Proposed System
  - Request Routing Mechanism (current section)
  - Management System
- Content-aware Intelligence
- Work in Progress
- Conclusion

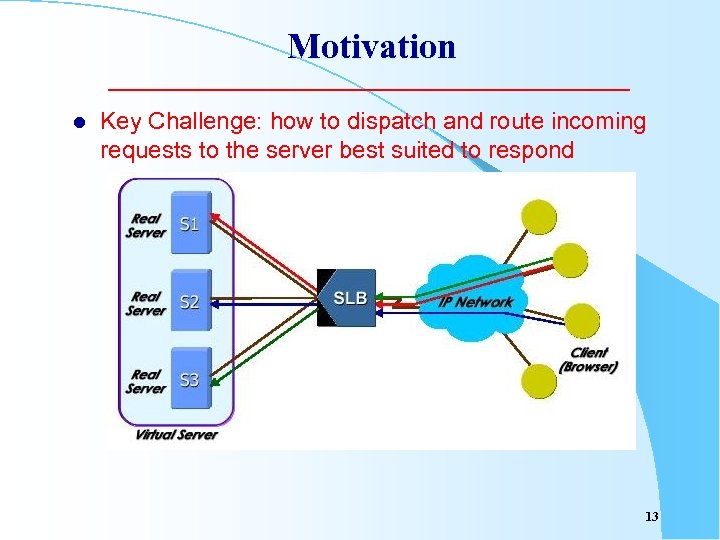

Motivation
- Key challenge: how to dispatch and route incoming requests to the server best suited to respond

Desirable Properties
- User transparency
- Backward compatibility
- Fast access
- Scalability
- Robustness
- Availability
- Reliability
- QoS support

Client-side Approach
- Customized browser (e.g., Netscape)
- Java applet
  - HAWA (from AT&T)
  - Smart Client (from U.C. Berkeley)
- Advantages
  - Low overhead
  - Global solution
- Problems
  - Increased network traffic
    - applet transmission
    - extra querying between applet and servers for state information
  - Insensitive to the server's state

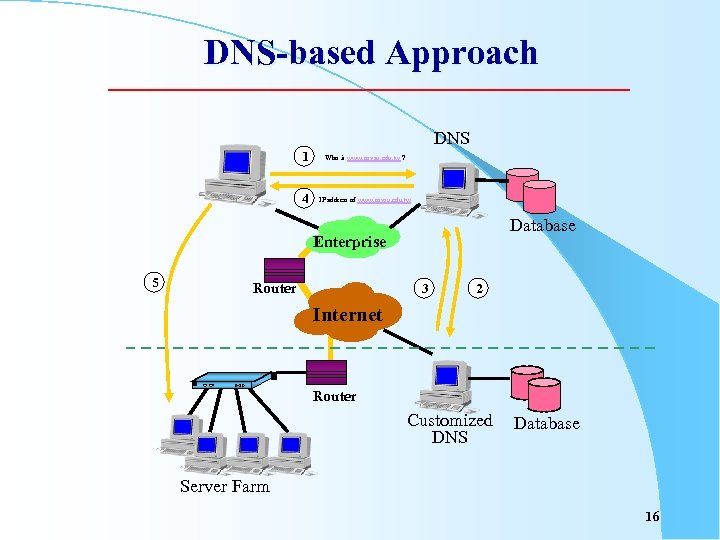

DNS-based Approach
[Figure: numbered steps 1 to 5 between a client, DNS, routers, the Internet, and an enterprise site with a customized DNS, databases, and a server farm. Step 1: "Who is www.nsysu.edu.tw?"; step 4: "IP address of www.nsysu.edu.tw".]

DNS-based Approach
- Advantages
  - Ease of implementation
  - Low overhead
  - Global solution
- Problems
  - Hostname-to-IP-address mappings can be cached by DNS servers
    - this leads to significant load imbalance
    - changes in DNS information propagate slowly through the Internet; that is, if a back-end server fails or is removed, the Internet as a whole may not be aware of it
  - It is difficult to detect failures and collect load information of back-end nodes

HTTP Redirection
- A special class of response codes, called redirection, is defined in the HTTP protocol and can be used to redirect a request.
- Through HTTP redirection, a server can instruct the client to send the request to another location instead of returning the requested data.
- Problems
  - A request may require two or more connections to get the desired service, so this approach increases response time and network traffic.
  - The node providing this mechanism may become an impediment to scaling the server.
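As a rough illustration of the redirection mechanism described above, the sketch below builds the raw bytes of a redirect response. It is only a minimal example: the function name `redirect_response` and the target host `svr2.example.com` are hypothetical and do not come from the slides.

```python
# Minimal sketch of an HTTP redirect response (hypothetical helper, not from the slides).
REASONS = {301: "Moved Permanently", 302: "Found", 307: "Temporary Redirect"}

def redirect_response(location: str, status: int = 302) -> bytes:
    """Return a bodiless HTTP/1.1 response telling the client to retry at `location`."""
    reason = REASONS[status]
    head = (
        f"HTTP/1.1 {status} {reason}\r\n"
        f"Location: {location}\r\n"
        "Content-Length: 0\r\n"
        "\r\n"
    )
    return head.encode("ascii")

# The client must open a second connection to the new location,
# which is the extra round trip the slide lists as a problem.
response = redirect_response("http://svr2.example.com/job/")
```

The second connection the client has to open is what makes this approach costlier than routing inside the cluster.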

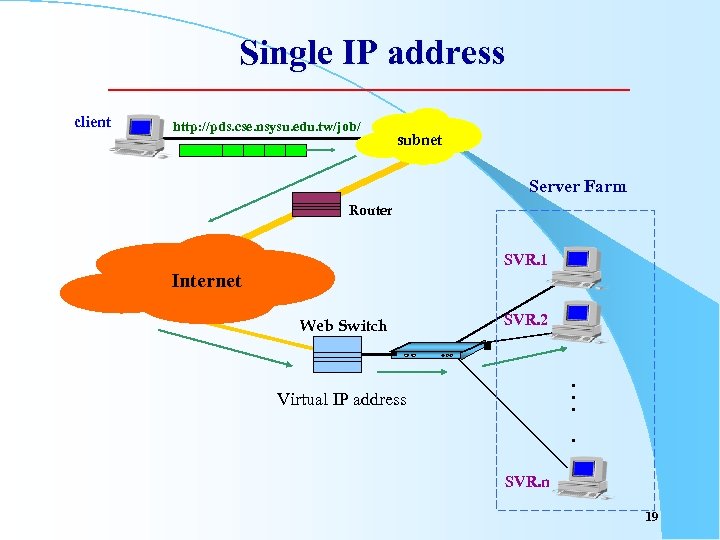

Single IP address
[Figure: a client requests http://pds.cse.nsysu.edu.tw/job/ over the Internet; a router and a Web switch holding the virtual IP address sit in front of the server farm subnet (SVR.1, SVR.2, ..., SVR.n).]

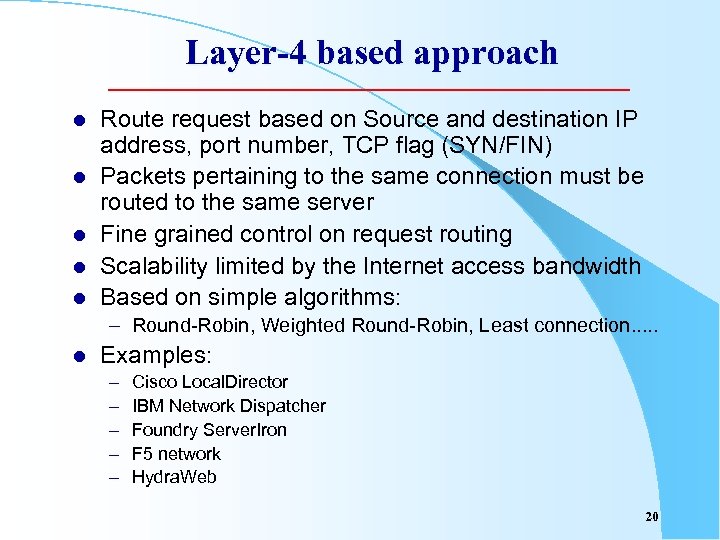

Layer-4 based approach
- Route requests based on source and destination IP address, port number, and TCP flags (SYN/FIN)
- Packets pertaining to the same connection must be routed to the same server
- Fine-grained control on request routing
- Scalability limited by the Internet access bandwidth
- Based on simple algorithms:
  - Round-Robin, Weighted Round-Robin, Least Connection, ...
- Examples:
  - Cisco LocalDirector
  - IBM Network Dispatcher
  - Foundry ServerIron
  - F5 Networks
  - HydraWeb
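The scheduling policies named above, and the rule that packets of one connection stay on one server, can be sketched as follows. This is a simplified user-space model under stated assumptions, not the behaviour of any listed product: all class names are hypothetical, and packets are reduced to their address 4-tuple plus SYN/FIN flags.

```python
from itertools import cycle

class RoundRobin:
    """Hand out servers in a fixed rotating order."""
    def __init__(self, servers):
        self._order = cycle(servers)
    def pick(self):
        return next(self._order)

class WeightedRoundRobin:
    """Round-robin over a list in which each server appears `weight` times."""
    def __init__(self, weights):  # weights: dict of server -> positive int
        expanded = [s for s, w in weights.items() for _ in range(w)]
        self._order = cycle(expanded)
    def pick(self):
        return next(self._order)

class LeastConnection:
    """Pick the server with the fewest active connections."""
    def __init__(self, servers):
        self.active = {s: 0 for s in servers}
    def pick(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server
    def release(self, server):
        self.active[server] -= 1

class Layer4Dispatcher:
    """Keeps a connection table so every packet of a connection reaches the same server."""
    def __init__(self, policy):
        self.policy = policy
        self.table = {}  # (src_ip, src_port, dst_ip, dst_port) -> server
    def forward(self, src_ip, src_port, dst_ip, dst_port, syn=False, fin=False):
        key = (src_ip, src_port, dst_ip, dst_port)
        if syn:                      # new connection: choose a server once
            self.table[key] = self.policy.pick()
        server = self.table.get(key) # None means "unknown connection"
        if fin:                      # connection closing: forget the mapping
            self.table.pop(key, None)
        return server
```

Note that the decision is taken on the SYN packet, before any HTTP data has arrived, which is why these policies cannot take the requested content into account.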

Issues Ignored by Existing Schemes
- Session integrity
- Sophisticated load balancing
- Quality of service
- Fault resilience
- Content deployment and management
These observations lead to the inevitable conclusion: the request routing mechanism should factor in the content of a request when making decisions [Yang and Luo, IWI'99].

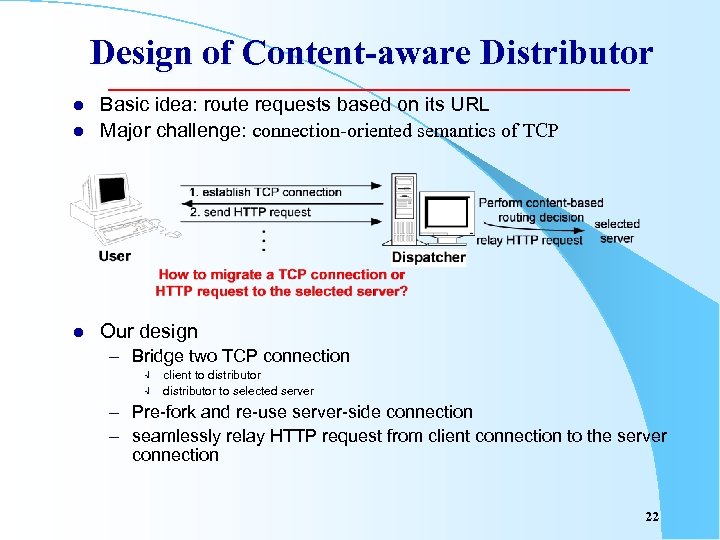

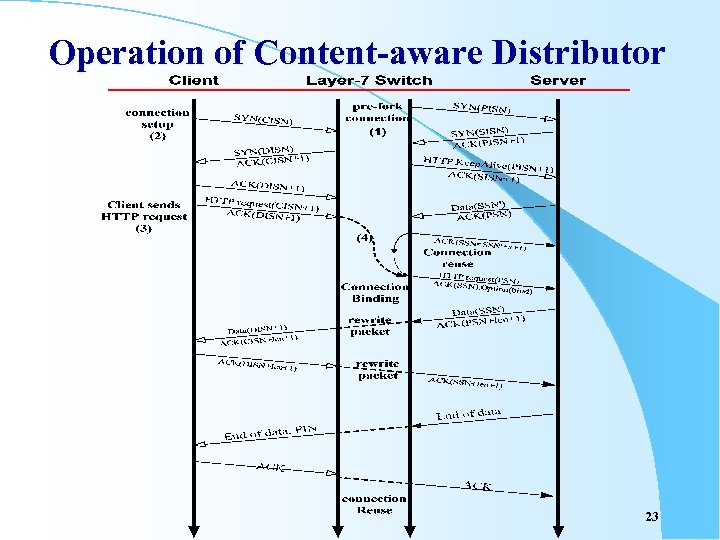

Design of Content-aware Distributor
- Basic idea: route each request based on its URL
- Major challenge: the connection-oriented semantics of TCP (the URL arrives only after the TCP connection has been established, so the server cannot be chosen when the first packet arrives)
- Our design
  - Bridge two TCP connections
    - client to distributor
    - distributor to selected server
  - Pre-fork and reuse server-side connections
  - Seamlessly relay the HTTP request from the client connection to the server connection
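The pre-fork-and-reuse idea can be sketched with a small connection pool. This is a user-space toy under stated assumptions, not the kernel-level mechanism of the slides: `ServerConnection` is a hypothetical stand-in for a persistent TCP connection, and `ConnectionPool` and `relay` are illustrative names.

```python
from collections import deque

class ServerConnection:
    """Stand-in for a long-lived TCP connection to one back-end server."""
    def __init__(self, server):
        self.server = server
        self.relayed = []            # requests sent over this connection
    def send(self, request):
        self.relayed.append(request)

class ConnectionPool:
    """Opens connections to every server in advance and hands them out for reuse."""
    def __init__(self, servers, per_server=2):
        self._idle = {
            s: deque(ServerConnection(s) for _ in range(per_server))
            for s in servers
        }
    def acquire(self, server):
        idle = self._idle[server]
        # Fall back to a fresh connection only if all pre-forked ones are busy.
        return idle.popleft() if idle else ServerConnection(server)
    def release(self, conn):
        self._idle[conn.server].append(conn)

def relay(pool, server, request):
    """Forward one client request over a pre-forked connection to `server`."""
    conn = pool.acquire(server)
    try:
        conn.send(request)
    finally:
        pool.release(conn)
    return conn
```

Reusing an already open server-side connection avoids a second TCP handshake on every request, which is the cost that bridging two connections would otherwise add.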

Operation of Content-aware Distributor

Make Routing Decision
- Parse the HTTP request
- Make the routing decision
  - Select a destination server
  - Select a pre-forked connection
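The first step, parsing the HTTP request, might look like the sketch below. It is a minimal illustration assuming a complete, well-formed request header; the function name `parse_request` is hypothetical and the slides do not show the distributor's actual parser.

```python
def parse_request(raw: bytes):
    """Split a raw HTTP request into (method, target, version, headers).

    Assumes the whole header block is present and well formed.
    """
    head = raw.split(b"\r\n\r\n", 1)[0].decode("iso-8859-1")
    lines = head.split("\r\n")
    method, target, version = lines[0].split(" ", 2)
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return method, target, version, headers

# The target and the Host header are the inputs to the routing decision.
method, target, version, headers = parse_request(
    b"GET /pds/index.html HTTP/1.0\r\nHost: www.nsysu.edu.tw\r\n\r\n"
)
```

The request target and the `Host` header together identify the content, so they are what the routing step would look up next.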

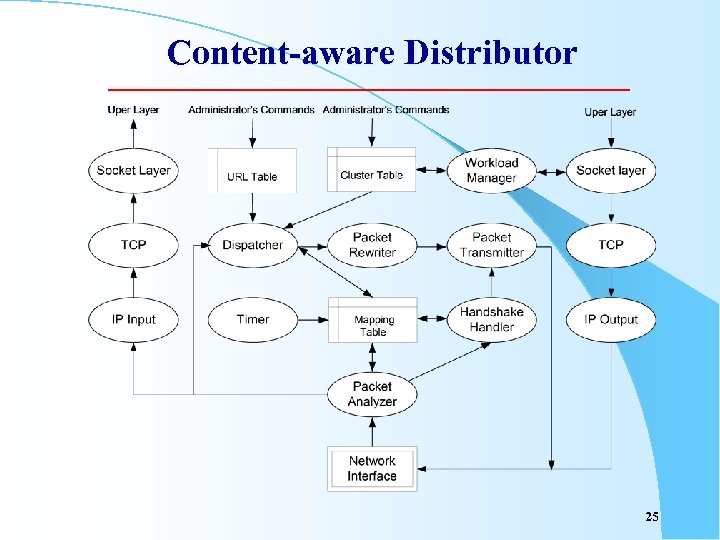

Content-aware Distributor

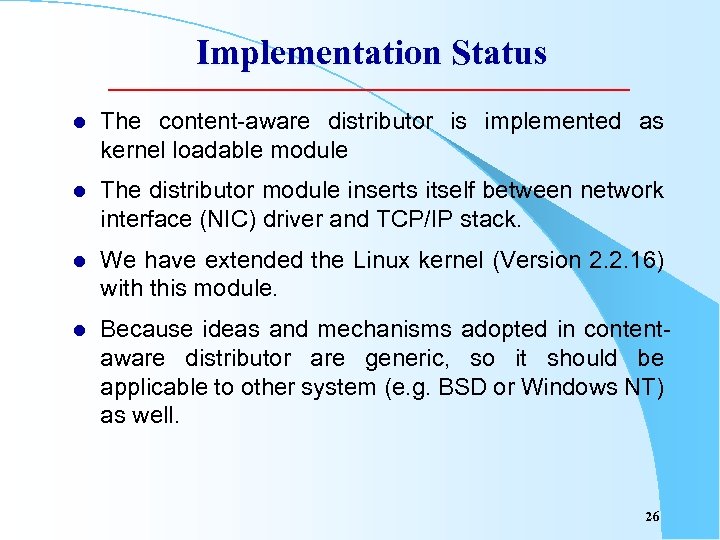

Implementation Status
- The content-aware distributor is implemented as a loadable kernel module.
- The distributor module inserts itself between the network interface (NIC) driver and the TCP/IP stack.
- We have extended the Linux kernel (version 2.2.16) with this module.
- Because the ideas and mechanisms adopted in the content-aware distributor are generic, they should be applicable to other systems (e.g., BSD or Windows NT) as well.

Challenges of Content-aware Routing
- How can we build content-aware intelligence into the distributor for making routing decisions?
  - Content type, size, priority, location, ...
  - Should be configurable, extensible, comprehensive
- How can the distributor perform request distribution based on the content-aware intelligence?
  - Parsing the HTTP header of each request
  - Should be fast and efficient

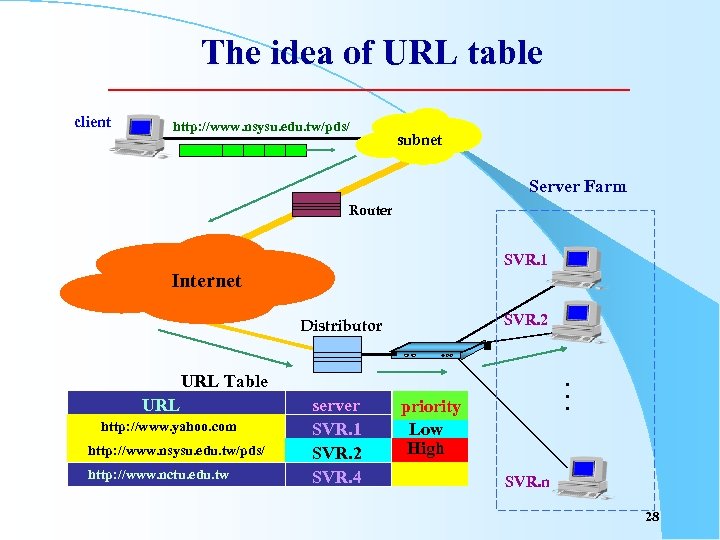

The idea of URL table
[Figure: a client requests http://www.nsysu.edu.tw/pds/ over the Internet; a router and the distributor sit in front of the server farm subnet (SVR.1, SVR.2, ..., SVR.n). The distributor consults a URL table with columns URL, server, and priority. URLs shown: http://www.yahoo.com, http://www.nsysu.edu.tw/pds/, http://www.nsysu.edu.tw, http://www.nctu.edu.tw; servers shown: SVR.1, SVR.2, SVR.4; priorities shown: Low, High.]

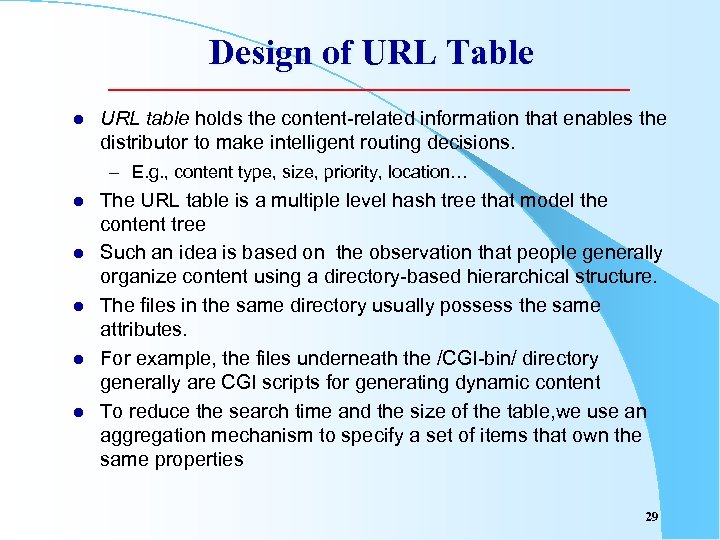

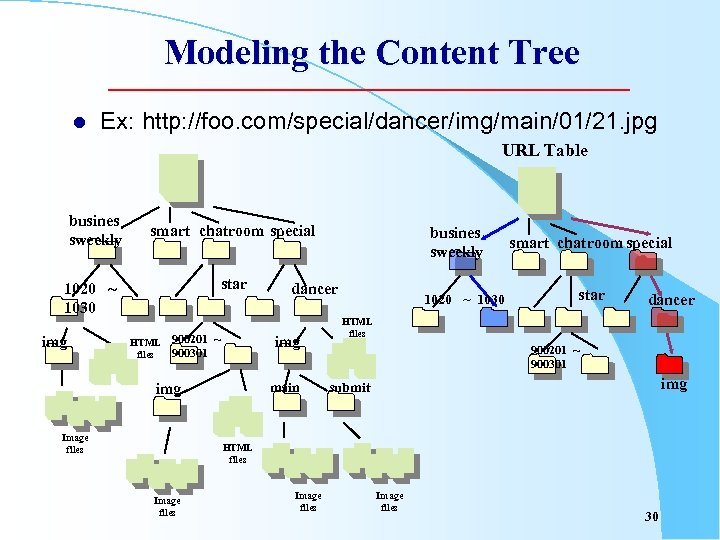

Design of URL Table
- The URL table holds the content-related information that enables the distributor to make intelligent routing decisions.
  - E.g., content type, size, priority, location, ...
- The URL table is a multi-level hash tree that models the content tree.
- This idea is based on the observation that people generally organize content using a directory-based hierarchical structure.
- The files in the same directory usually possess the same attributes. For example, the files underneath the /CGI-bin/ directory are generally CGI scripts for generating dynamic content.
- To reduce the search time and the size of the table, we use an aggregation mechanism to specify a set of items that share the same properties.
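One way to picture a multi-level hash tree with aggregation is the sketch below: each path component is a key in a per-directory dictionary, and attributes set on a directory apply to everything beneath it unless a deeper entry overrides them. This is an assumed reading of the slide, not the authors' data structure; the names `UrlTable`, `Node`, and the attribute keys are hypothetical.

```python
class Node:
    """One directory or file level of the content tree."""
    def __init__(self):
        self.children = {}   # component name -> Node (one hash table per level)
        self.attrs = None    # attributes shared by this node and everything below it

class UrlTable:
    def __init__(self):
        self.root = Node()

    @staticmethod
    def _parts(path):
        return [p for p in path.split("/") if p]

    def insert(self, path, attrs):
        """Attach attributes to a path; a directory entry aggregates its whole subtree."""
        node = self.root
        for part in self._parts(path):
            node = node.children.setdefault(part, Node())
        node.attrs = attrs

    def lookup(self, path):
        """Return the attributes of the deepest matching entry, or None."""
        node, best = self.root, self.root.attrs
        for part in self._parts(path):
            node = node.children.get(part)
            if node is None:
                break
            if node.attrs is not None:
                best = node.attrs
        return best

table = UrlTable()
table.insert("/cgi-bin", {"type": "dynamic", "server": "SVR.1"})
table.insert("/img", {"type": "image", "server": "SVR.2"})
table.insert("/img/banner.jpg", {"type": "image", "server": "SVR.4"})
```

With this layout a single entry for `/cgi-bin` covers every script in that directory, so the table stays small and a lookup costs one hash probe per path level.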

Modeling the Content Tree
- Example: http://foo.com/special/dancer/img/main/01/21.jpg
[Figure: the URL table drawn as a tree that mirrors the content tree. Node labels include businessweekly, smart, chatroom, special, star, dancer, img, main, submit, 1020 ~ 1030, 900201 ~ 900301, HTML files, Image files.]

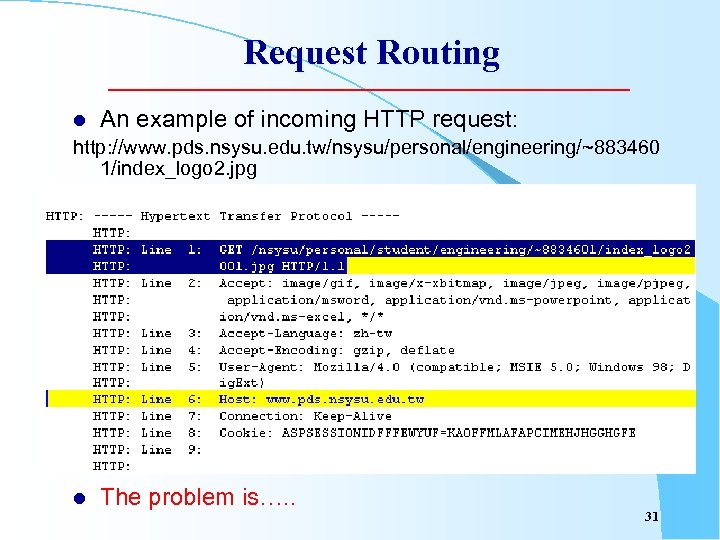

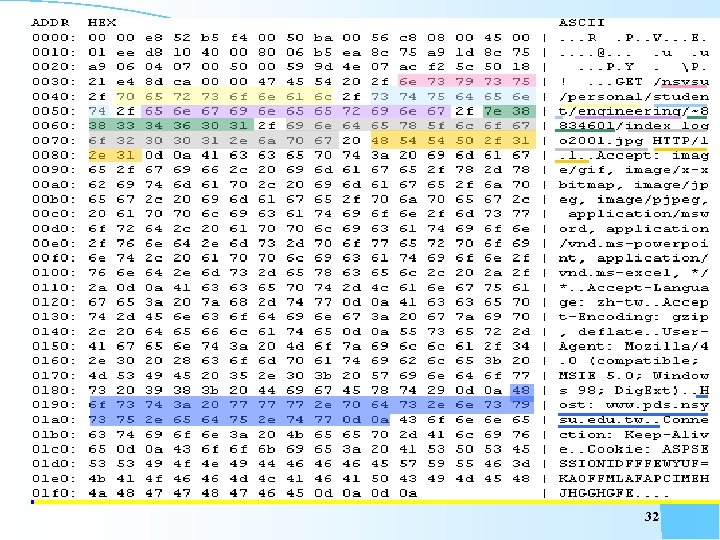

Request Routing
- An example of an incoming HTTP request: http://www.pds.nsysu.edu.tw/nsysu/personal/engineering/~8834601/index_logo2.jpg
- The problem is ...

URL Parsing is expensive!!
- Performing content-aware routing implies that some kind of string searching and matching algorithm is required.
  - Such a time-consuming function is expensive on a heavy-traffic web site.
- Our experience showed that system performance would be severely degraded if we implemented URL parsing functions in the distributor.
- "You will lose 7/8ths of your Web switch's performance if you turn on its URL parsing function." (F5 Lab)

The Idea of the URL Formalization
- Generally, a variable-length string is used to name a file or directory only because it is mnemonic, making it easier for humans to remember.
- In most cases, an HTTP request is issued when the browser follows a link: either explicitly, when the user clicks on an anchor, or implicitly, via an embedded image or object.
- Most URLs are invisible to users, who do not care what name a resource has. The name is only meaningful to the content provider.
- Therefore, we can convert the original name to a formalized form.

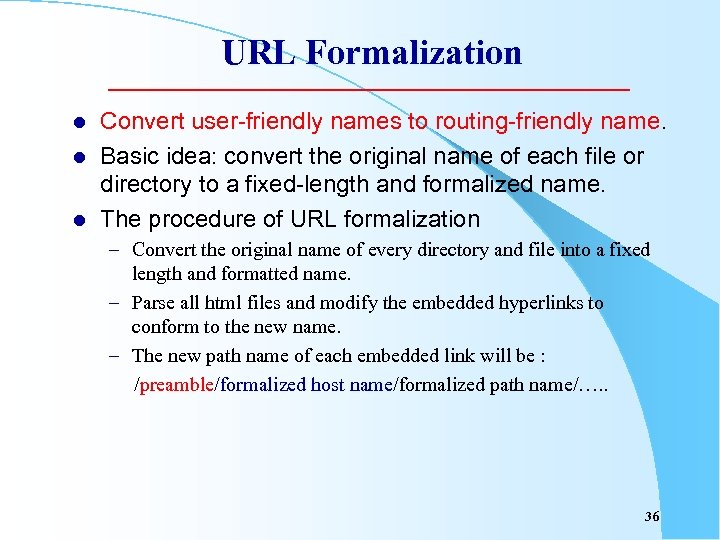

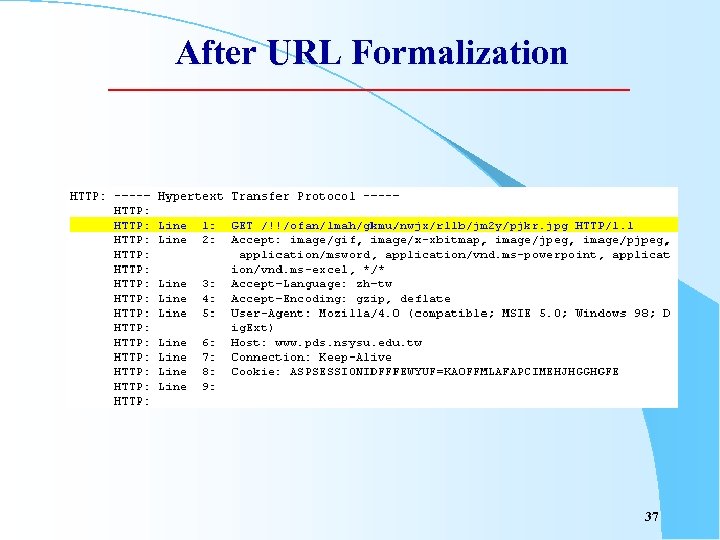

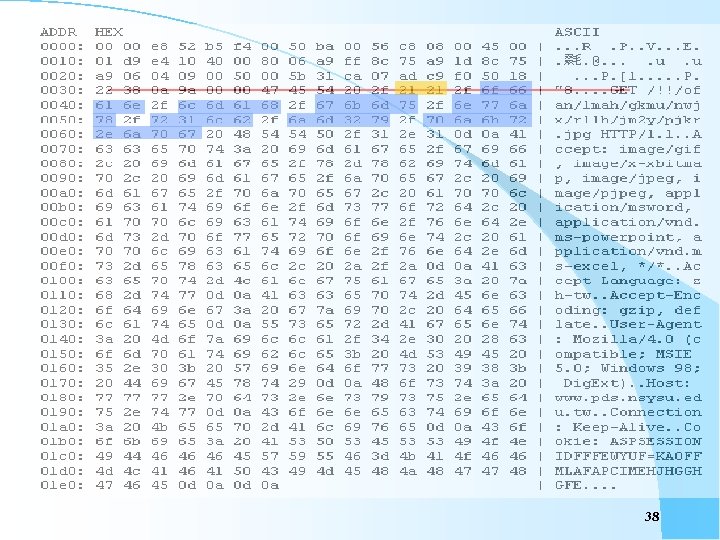

URL Formalization
- Convert user-friendly names to routing-friendly names.
- Basic idea: convert the original name of each file or directory to a fixed-length, formalized name.
- The procedure of URL formalization
  - Convert the original name of every directory and file into a fixed-length, formatted name.
  - Parse all HTML files and modify the embedded hyperlinks to conform to the new names.
  - The new path name of each embedded link will be: /preamble/formalized host name/formalized path name/...
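The procedure above can be sketched as follows. Everything specific here is an assumption made for illustration: the slides do not say how formalized names are generated, so this sketch simply numbers each distinct name and encodes the number as four lowercase letters; the preamble string `!!`, the class name `Formalizer`, and the link-matching regular expression are likewise hypothetical.

```python
import re
import string

class Formalizer:
    """Maps each original name to a fixed-length name (assumed scheme: a base-26 counter)."""
    def __init__(self, width=4):
        self.width = width
        self.names = {}  # original component -> formalized name

    def name_for(self, original):
        if original not in self.names:
            n = len(self.names)          # next unused number
            letters = []
            for _ in range(self.width):
                n, r = divmod(n, 26)
                letters.append(string.ascii_lowercase[r])
            self.names[original] = "".join(reversed(letters))
        return self.names[original]

    def formalize_path(self, host, path, preamble="!!"):
        """Build /preamble/formalized-host/formalized-path-components."""
        parts = [p for p in path.split("/") if p]
        new_parts = [preamble, self.name_for(host)] + [self.name_for(p) for p in parts]
        return "/" + "/".join(new_parts)

LINK = re.compile(r'(href|src)="([^"]+)"')

def rewrite_links(html, host, formalizer):
    """Rewrite site-local links (those starting with '/') to their formalized form."""
    def repl(match):
        attr, target = match.group(1), match.group(2)
        if not target.startswith("/"):
            return match.group(0)        # leave external or relative links untouched
        return f'{attr}="{formalizer.formalize_path(host, target)}"'
    return LINK.sub(repl, html)

f = Formalizer()
new_path = f.formalize_path("www.pds.nsysu.edu.tw", "/Research/index.html")
new_html = rewrite_links('<img src="/Research/logo.jpg">', "www.pds.nsysu.edu.tw", f)
```

A real deployment would also need to persist the name mapping and rename the files on disk; both are left out of this sketch.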

After URL Formalization

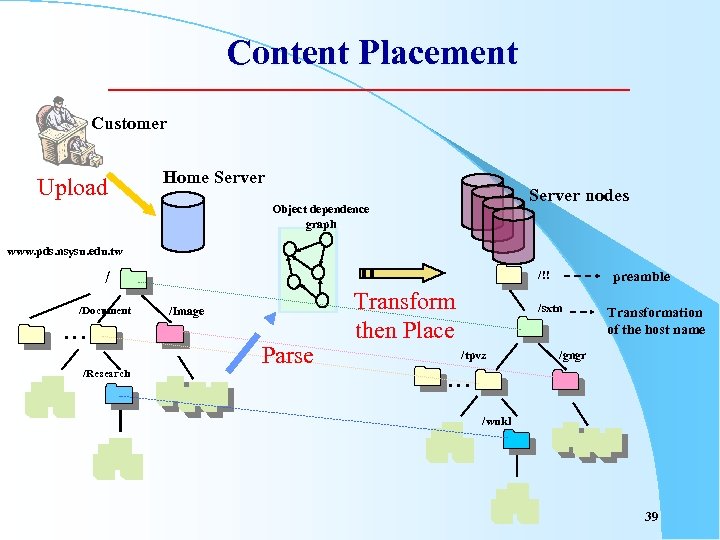

Content Placement
[Figure: the customer uploads content to the home server; steps labeled "Parse" and "Transform then Place" lead through an object dependence graph to the server nodes. Original tree: www.pds.nsysu.edu.tw, /, /Document, ..., /Research, /Image. Formalized names: /!!, /sxtn, /tpvz, /gngr, ..., /wukl. Other labels: "preamble", "Transformation of the host name".]

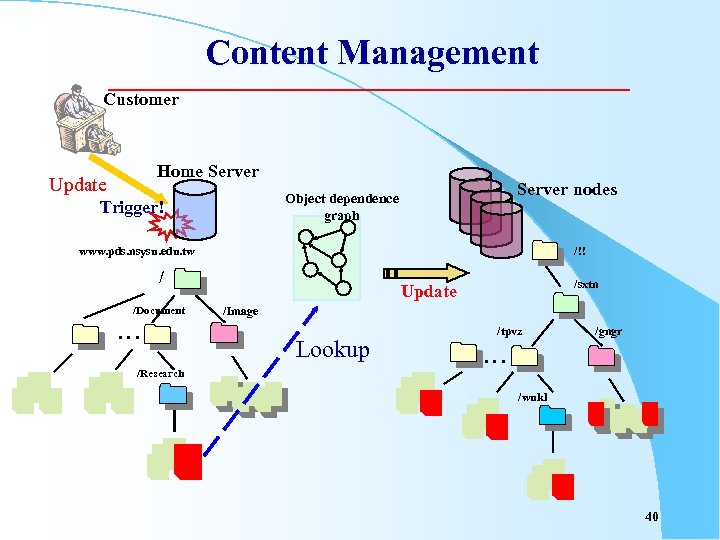

Content Management
[Figure: the customer updates content on the home server; steps labeled "Trigger!", "Lookup", and "Update" lead through the object dependence graph to the server nodes. Original tree: www.pds.nsysu.edu.tw, /, /Document, ..., /Research, /Image. Formalized names: /!!, /sxtn, /tpvz, /gngr, ..., /wukl.]

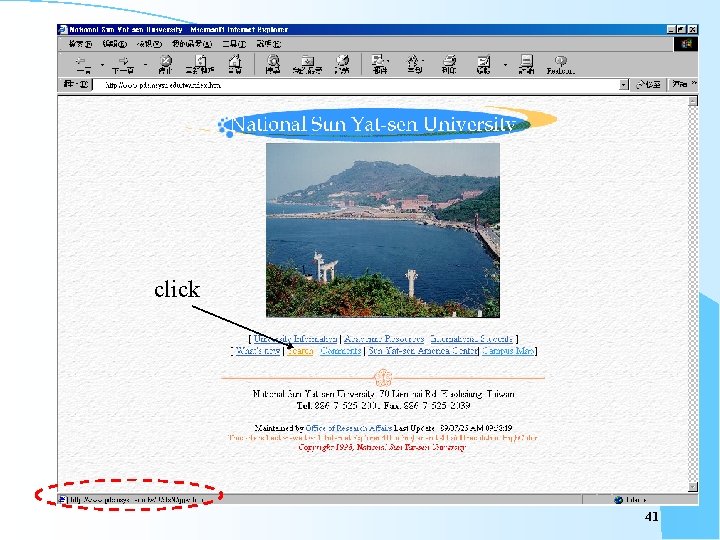

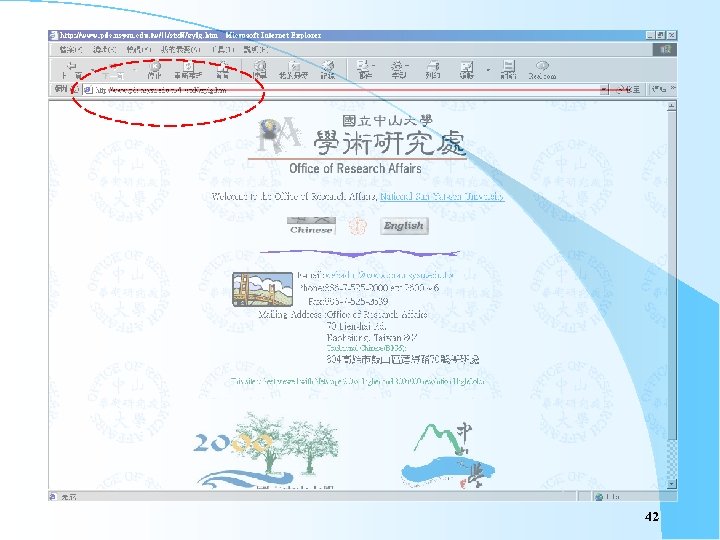

Advantages of URL Formalization
- Fixed-length formalized names are easier for the distributor to process.
  - We can even implement the routing function in hardware to boost performance.
- Placing the host name in the first level of the path name can further speed up the routing decision.
- Combined with a well-designed URL table, the dispatcher can quickly retrieve the related information to make a routing decision.
- Particularly useful in Web hosting service environments
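To see why fixed-length names are cheaper to process, the sketch below splits a formalized path by offset arithmetic instead of searching for separators. It assumes four-character names and a `/!!` preamble, both illustrative choices rather than values confirmed by the slides; `split_formalized` is a hypothetical name.

```python
def split_formalized(path, width=4, preamble="/!!"):
    """Split a formalized path into its fixed-width components, or return None.

    Every component occupies exactly width + 1 characters ('/' plus the name),
    so component i starts at a position known in advance.
    """
    if not path.startswith(preamble):
        return None                       # not a formalized URL
    body = path[len(preamble):]
    step = width + 1
    if len(body) % step != 0:
        return None
    parts = []
    for i in range(0, len(body), step):
        if body[i] != "/":
            return None
        parts.append(body[i + 1:i + step])
    return parts

components = split_formalized("/!!/aaaa/aaab/aaac")
```

Because the first component is the formalized host name, a distributor could select the per-site table from a fixed offset before looking at the rest of the path.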

Outline
- Introduction
- Proposed System
  - Request Routing Mechanism
  - Management System (current section)
- Content-aware Intelligence
- Work in Progress
- Conclusion

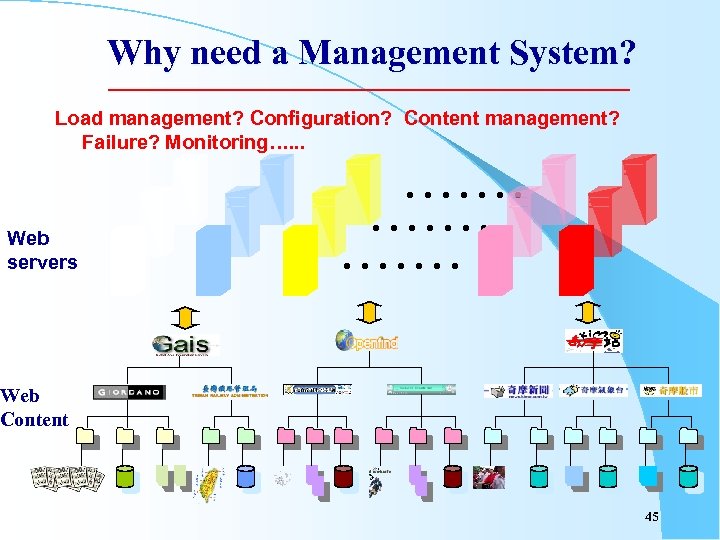

Why do we need a Management System?
[Figure labels: Load management? Configuration? Content management? Failure? Monitoring ...; Web servers; Web content]

Required Functions
- System configuration
  - easy to configure
  - status visualization
- Content placement and management
  - be able to deploy content on each node according to its capability
  - service differentiation
  - dynamically change the content placement according to the load
  - support content-aware routing
- Monitoring
  - real-time statistics
  - log analysis
  - site usage statistics

Required Functions (cont'd)
- Performance management
  - monitoring
  - analysis and statistics
  - event (poor performance or overloaded)
  - automatic tuning
- Failure management
  - diagnosis
  - server failure identification
  - network analysis and protocol analysis
  - content verification (object/link analysis)
  - monitoring and alarm

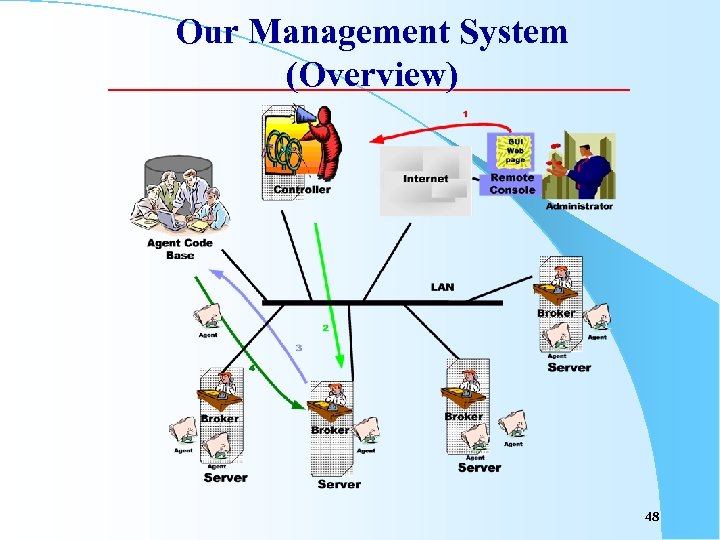

Our Management System (Overview)

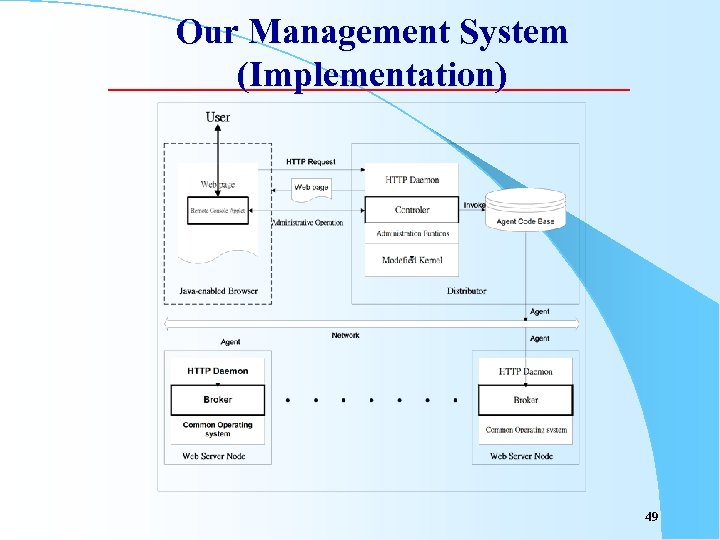

Our Management System (Implementation)

Our Management System
- Controller (Java application)
  - Communicates with the distributor
  - Control center
- Broker (Java application)
  - Runs on each Web server node
  - Monitoring
  - Executes downloaded agents
- Agent (Java class)
  - Each administrative function is implemented in the form of a Java class termed an agent
- Remote console (Java applet)
  - An easy-to-use GUI for the web site manager to maintain and manage the system

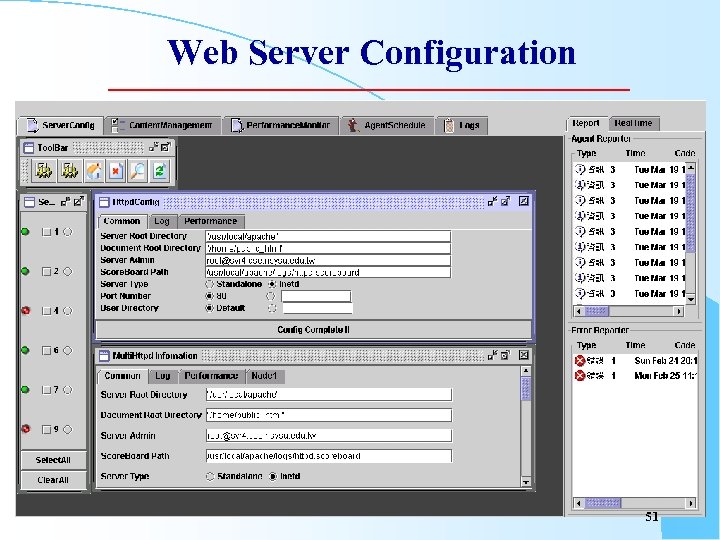

Web Server Configuration

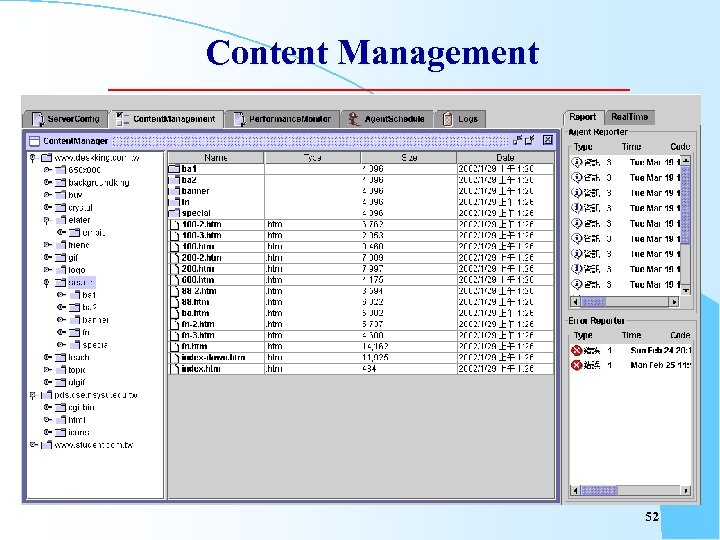

Content Management

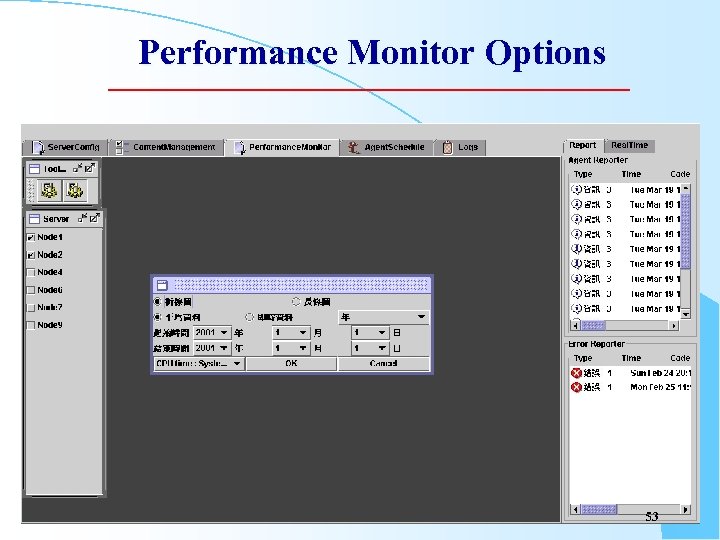

Performance Monitor Options

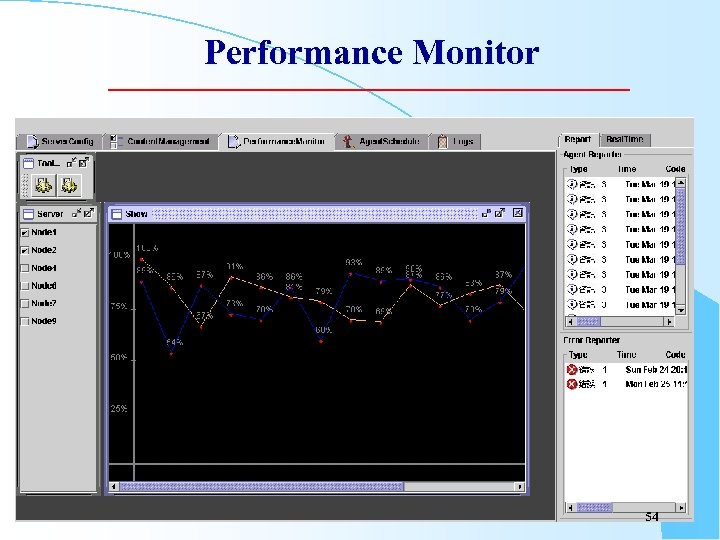

Performance Monitor

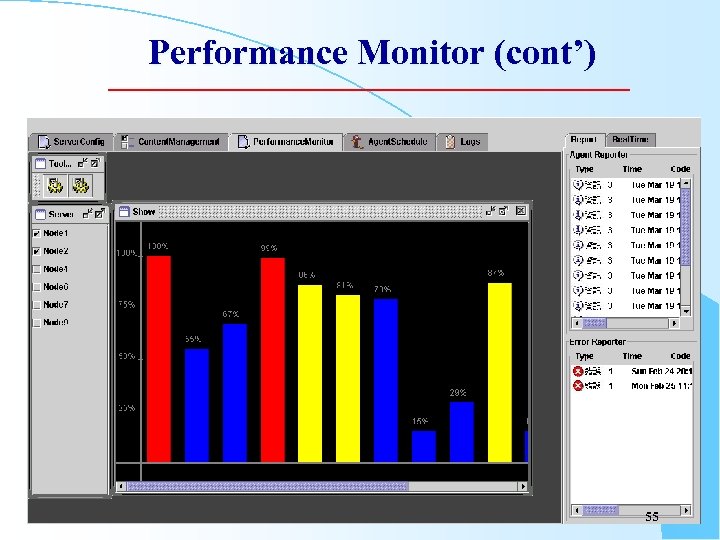

Performance Monitor (cont'd)

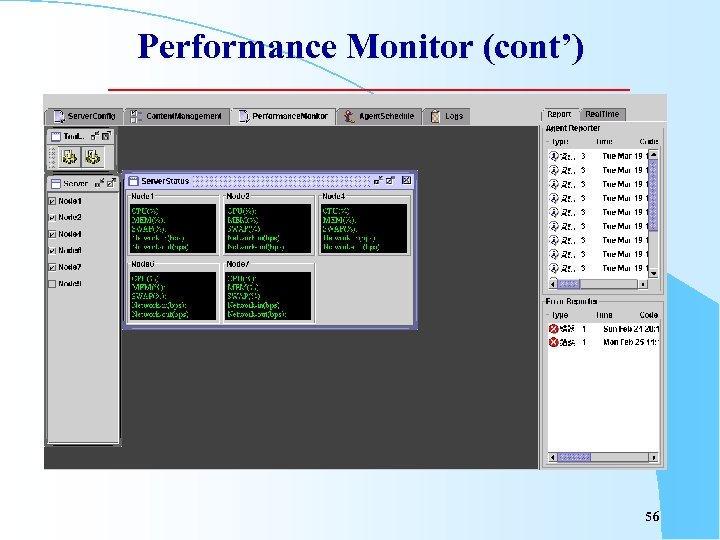

Performance Monitor (cont'd)

Features of Our Management System
- Platform independent
  - Implementing the daemon in Java relieves concerns about the heterogeneity of the target platforms
- Supports comprehensive management functions
- Enables complete management of a web site via a standard browser (from any location)
  - Supports tracking and visualization of the system's configuration and state
  - Produces a single, coherent view of the partitioned content
- Extensibility
- Supports URL formalization

Outline
- Introduction
- Proposed System
  - Request Routing Mechanism
  - Management System
- Content-aware Intelligence (current section)
- Work in Progress
- Conclusion

Current Status
We have implemented the following content-aware intelligence in our system:
- Affinity-based request routing
- Content placement and management
  - Dispersed content placement
  - Content segregation
- Fault resilience

Affinity-Based Request Routing
- An important factor to consider: serving a request from disk is far slower than serving it from the memory cache.
- With the content-aware mechanism, it is possible to direct requests for a given item of content to the server that already has the data cached in main memory.
- Achieves both load balancing and locality
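A minimal sketch of the idea, assuming a simple rule that is not taken from the slides: a URL keeps going to the server that served it last (and so probably has it cached), unless that server's active load reaches a threshold, in which case the URL is reassigned to the least-loaded server. The class name `AffinityRouter` and the `overload` parameter are hypothetical.

```python
class AffinityRouter:
    """Routes by content affinity first and falls back to least load when overloaded."""
    def __init__(self, servers, overload=8):
        self.load = {s: 0 for s in servers}  # active requests per server
        self.owner = {}                      # URL -> server assumed to have it cached
        self.overload = overload

    def route(self, url):
        server = self.owner.get(url)
        if server is None or self.load[server] >= self.overload:
            # First request for this URL, or its server is too busy:
            # assign the URL to the currently least-loaded server.
            server = min(self.load, key=self.load.get)
            self.owner[url] = server
        self.load[server] += 1
        return server

    def done(self, server):
        """Call when a request finishes so the load count stays accurate."""
        self.load[server] -= 1

router = AffinityRouter(["s1", "s2"], overload=2)
sequence = [router.route(u) for u in ["/a", "/b", "/a", "/a"]]
```

The threshold is the knob that trades locality against balance: a high value keeps content pinned to one cache, a low value spreads hot content across servers sooner.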

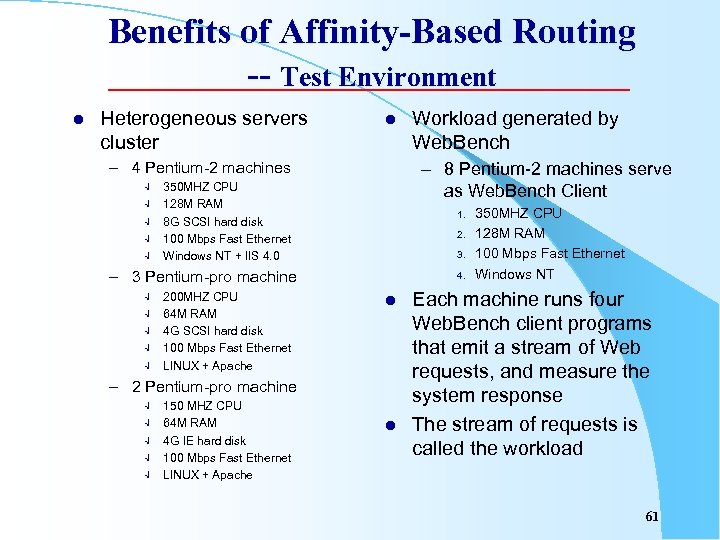

Benefits of Affinity-Based Routing -- Test Environment
- Heterogeneous server cluster
  - 4 Pentium-2 machines
    - 350 MHz CPU
    - 128 MB RAM
    - 8 GB SCSI hard disk
    - 100 Mbps Fast Ethernet
    - Windows NT + IIS 4.0
  - 3 Pentium-Pro machines
    - 200 MHz CPU
    - 64 MB RAM
    - 4 GB SCSI hard disk
    - 100 Mbps Fast Ethernet
    - Linux + Apache
  - 2 Pentium-Pro machines
    - 150 MHz CPU
    - 64 MB RAM
    - 4 GB IDE hard disk
    - 100 Mbps Fast Ethernet
    - Linux + Apache
- 8 Pentium-2 machines serve as WebBench clients
  - 350 MHz CPU
  - 128 MB RAM
  - 100 Mbps Fast Ethernet
  - Windows NT
- Workload generated by WebBench
  - Each client machine runs four WebBench client programs that emit a stream of Web requests and measure the system response
  - The stream of requests is called the workload

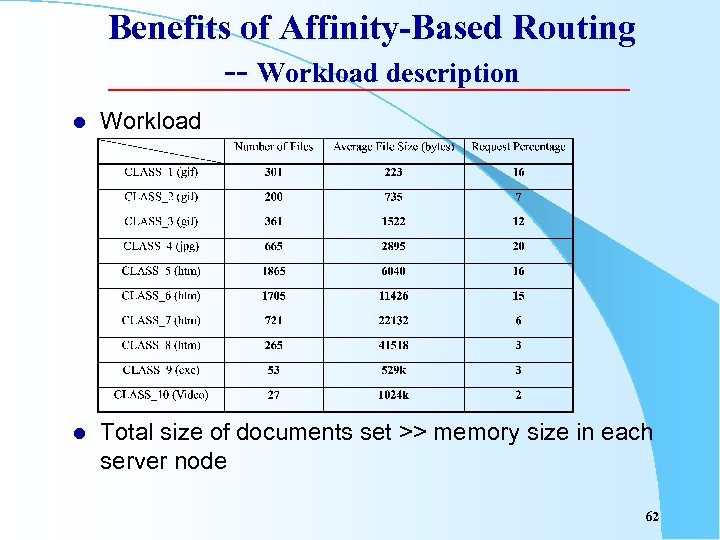

Benefits of Affinity-Based Routing -- Workload description
- Workload
- Total size of the document set >> memory size of each server node

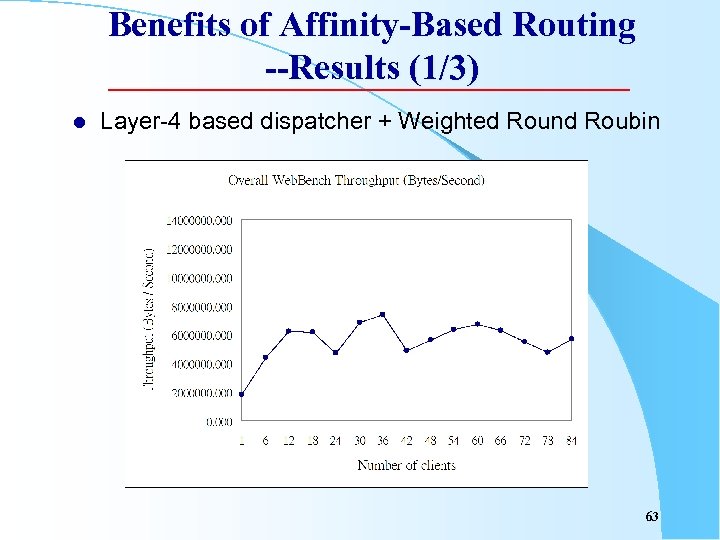

Benefits of Affinity-Based Routing --Results (1/3) l Layer-4 based dispatcher + Weighted Round Roubin 63

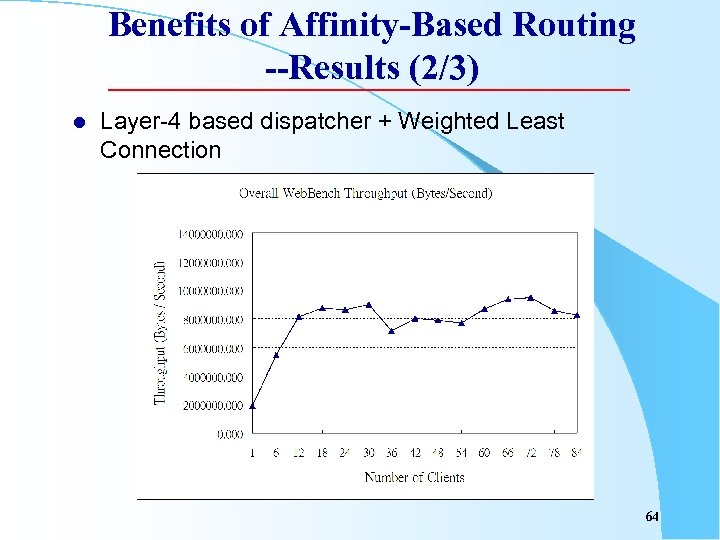

Benefits of Affinity-Based Routing -- Results (2/3)
- Layer-4 based dispatcher + Weighted Least Connection
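Weighted least connection, the second content-blind baseline, picks the node with the fewest active connections relative to its weight. The sketch below is a generic formulation under assumed names and example numbers; it is not taken from the dispatcher used in the experiment.

```python
def weighted_least_connection(active, weights):
    """Pick the node with the smallest active-connections-to-weight ratio."""
    return min(active, key=lambda node: active[node] / weights[node])


# Hypothetical snapshot: the fast node has more connections but four times the weight.
choice = weighted_least_connection(
    active={"fast": 3, "slow": 2},
    weights={"fast": 4, "slow": 1},
)
```

Like weighted round robin, the decision depends only on connection counts, not on which documents each node has cached.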

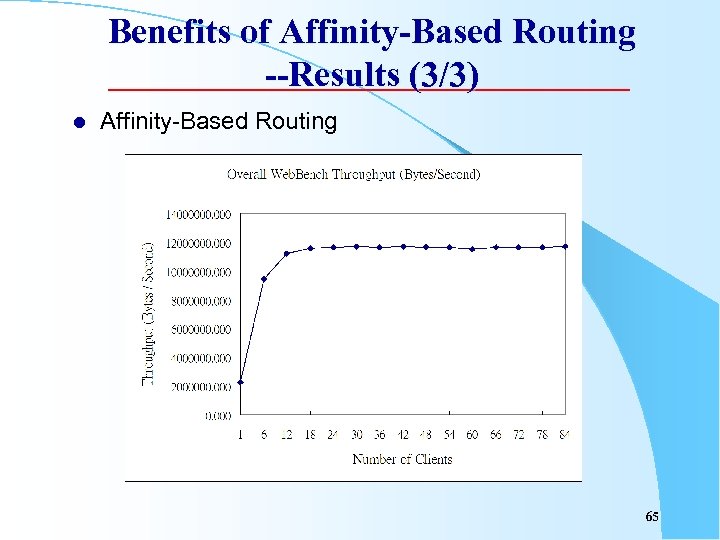

Benefits of Affinity-Based Routing -- Results (3/3)
- Affinity-Based Routing

Content Placement and Management
- An important factor in using a distributed server efficiently and achieving better performance is the ability to deploy content on each node according to its capability, and then direct clients to the best-suited server.
- Challenge: how to place and manage content in such a distributed server system, particularly since such servers tend to be heterogeneous.

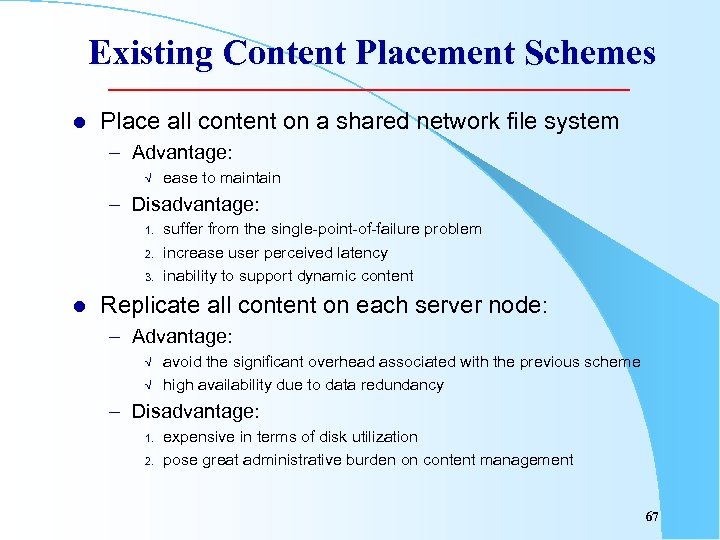

Existing Content Placement Schemes
- Place all content on a shared network file system
  - Advantage:
    - easy to maintain
  - Disadvantages:
    1. suffers from the single-point-of-failure problem
    2. increases user-perceived latency
    3. unable to support dynamic content
- Replicate all content on each server node
  - Advantages:
    - avoids the significant overhead associated with the previous scheme
    - high availability due to data redundancy
  - Disadvantages:
    1. expensive in terms of disk utilization
    2. poses a great administrative burden on content management

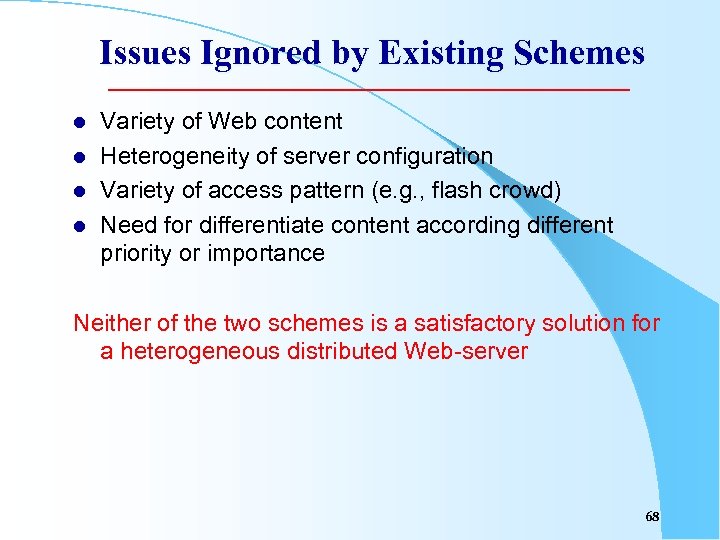

Issues Ignored by Existing Schemes
- Variety of Web content
- Heterogeneity of server configuration
- Variety of access patterns (e.g., flash crowds)
- Need to differentiate content according to priority or importance
- Neither of the two schemes is a satisfactory solution for a heterogeneous distributed Web server

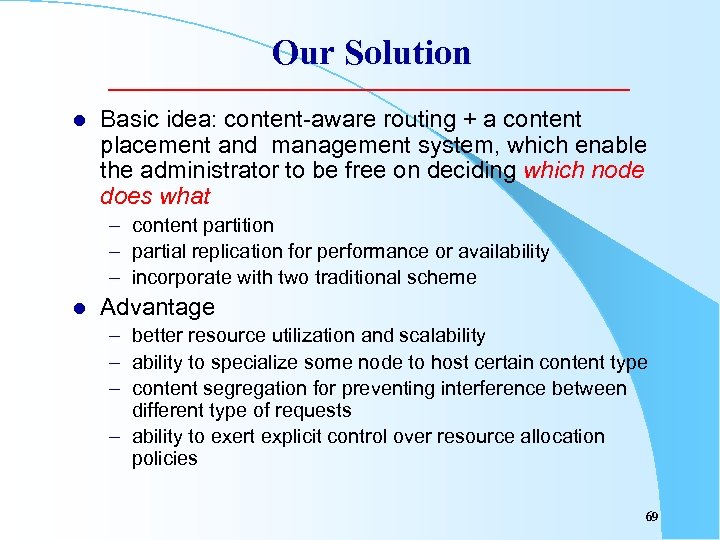

Our Solution
- Basic idea: content-aware routing + a content placement and management system, which leaves the administrator free to decide which node does what
  - content partition
  - partial replication for performance or availability
  - can be combined with the two traditional schemes
- Advantages
  - better resource utilization and scalability
  - ability to specialize some nodes to host certain content types
  - content segregation to prevent interference between different types of requests
  - ability to exert explicit control over resource allocation policies
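Content partition and partial replication imply that the router must know which nodes hold which part of the document tree. A minimal sketch, assuming a prefix-keyed URL table: the table layout, the node names, and the `candidates` helper are all hypothetical and only illustrate the idea.

```python
# Hypothetical URL table: URL prefix -> nodes that host content under that prefix.
URL_TABLE = {
    "/": ["node1", "node2", "node3"],   # fully replicated default content
    "/cgi-bin/": ["node1"],             # partitioned: only the fast-CPU node
    "/video/": ["node2", "node3"],      # partially replicated on fast-disk nodes
}


def candidates(url, table=URL_TABLE):
    """Return the nodes hosting `url`, using the longest matching prefix."""
    matches = [prefix for prefix in table if url.startswith(prefix)]
    if not matches:
        raise KeyError(f"no placement entry for {url!r}")
    return table[max(matches, key=len)]
```

A load-balancing policy can then choose among the returned candidates, so placement decisions and balancing decisions stay separate.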

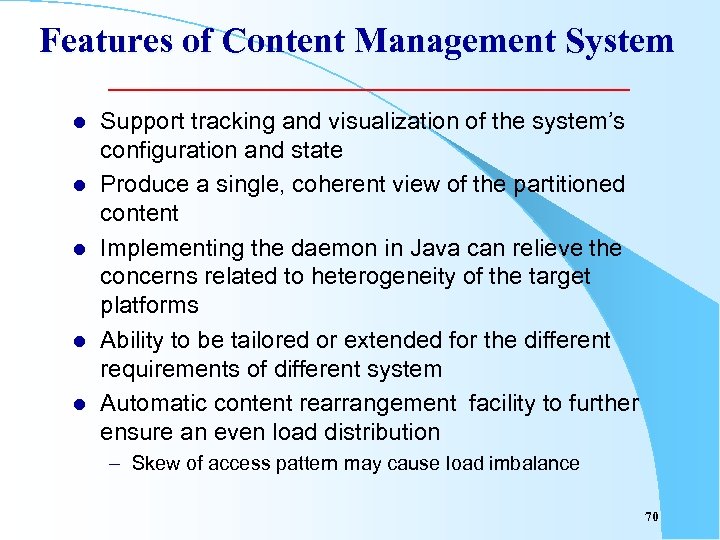

Features of Content Management System
- Supports tracking and visualization of the system’s configuration and state
- Produces a single, coherent view of the partitioned content
- Implementing the daemon in Java relieves concerns about the heterogeneity of the target platforms
- Can be tailored or extended for the different requirements of different systems
- Automatic content rearrangement facility to further ensure an even load distribution
  - Skew in the access pattern may cause load imbalance

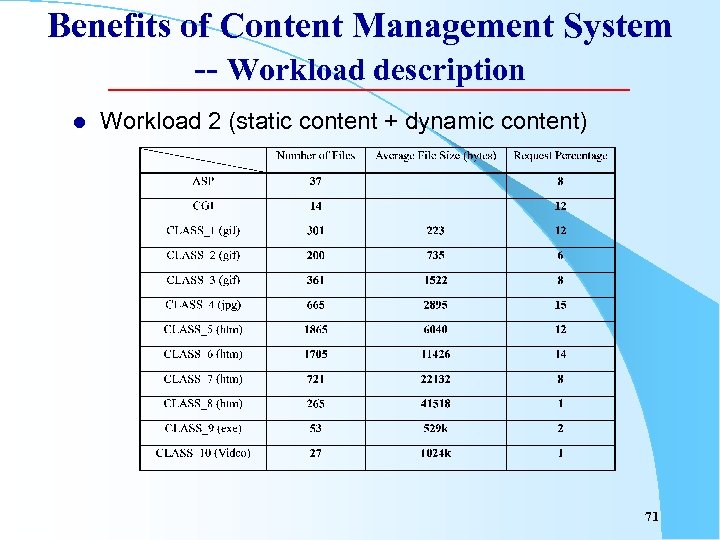

Benefits of Content Management System -- Workload description
- Workload 2 (static content + dynamic content)

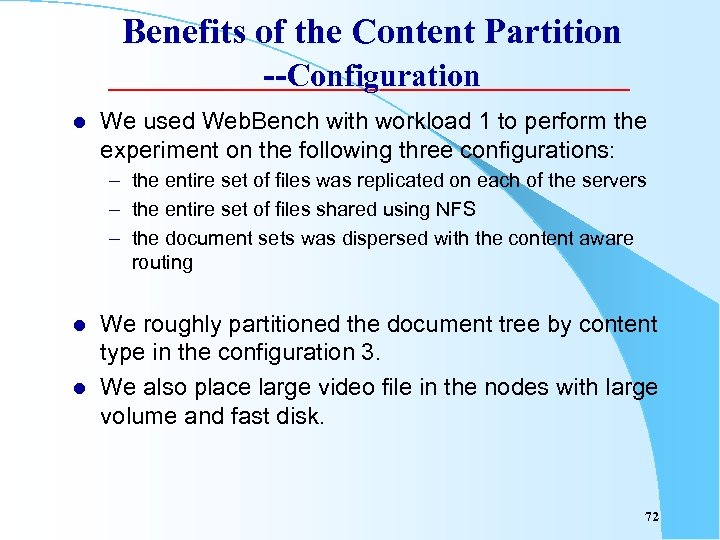

Benefits of the Content Partition -- Configuration
- We used WebBench with workload 1 to run the experiment on the following three configurations:
  1. the entire set of files was replicated on each of the servers
  2. the entire set of files was shared using NFS
  3. the document set was dispersed with content-aware routing
- We roughly partitioned the document tree by content type in configuration 3.
- We also placed large video files on the nodes with large, fast disks.

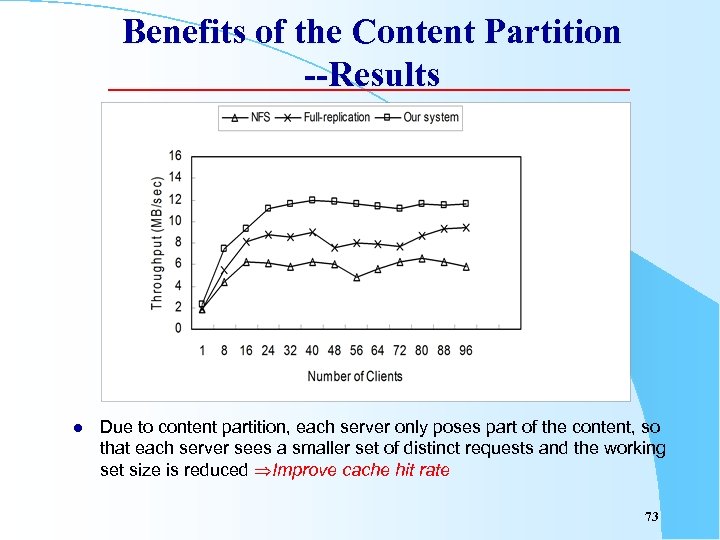

Benefits of the Content Partition -- Results
- Due to content partition, each server possesses only part of the content, so each server sees a smaller set of distinct requests and its working set size is reduced
- This improves the cache hit rate

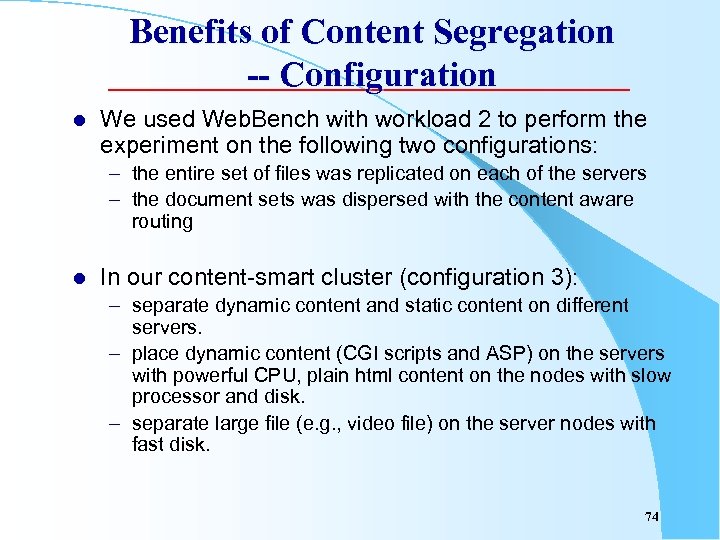

Benefits of Content Segregation -- Configuration
- We used WebBench with workload 2 to run the experiment on the following two configurations:
  - the entire set of files was replicated on each of the servers
  - the document set was dispersed with content-aware routing
- In our content-smart cluster (the content-aware configuration, numbered 3 in the content partition experiment):
  - dynamic content and static content are separated onto different servers
  - dynamic content (CGI scripts and ASP) is placed on the servers with powerful CPUs, and plain HTML content on the nodes with slow processors and disks
  - large files (e.g., video files) are placed separately on the server nodes with fast disks

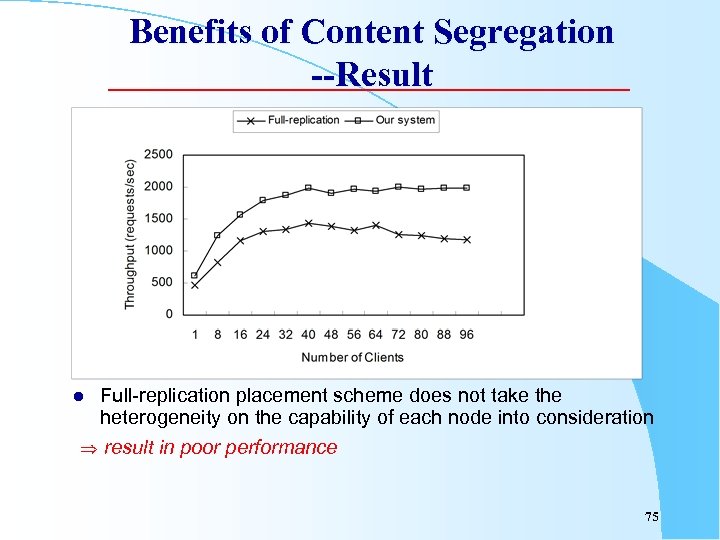

Benefits of Content Segregation -- Result
- The full-replication placement scheme does not take the heterogeneous capabilities of the nodes into consideration, which results in poor performance

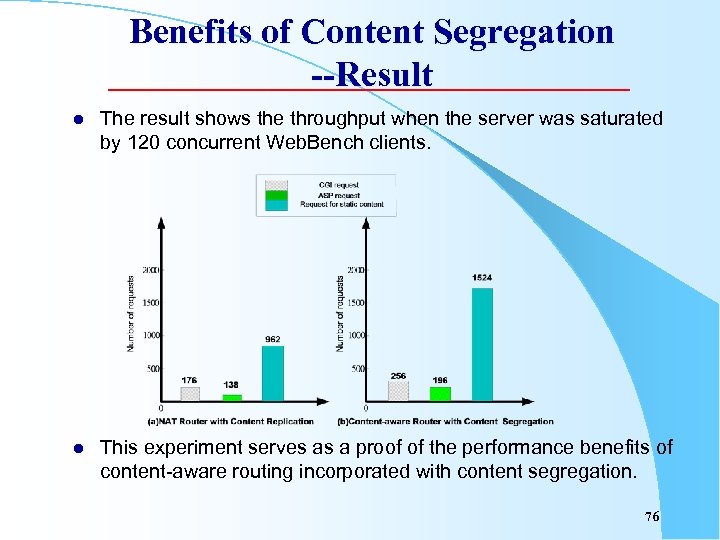

Benefits of Content Segregation -- Result
- The result shows the throughput when the server was saturated by 120 concurrent WebBench clients.
- This experiment demonstrates the performance benefits of content-aware routing combined with content segregation.

Fault Resilience
- Existing server-clustering solutions are not highly reliable, but merely highly available.
- They offer no guarantee of fault resilience for the service.
  - Although a server failure can be easily detected and the failed server transparently replaced with an available redundant component, any ongoing requests on the failed server are lost.
- In addition to detecting and masking failures, an ideal fault-tolerant Internet server should enable the outstanding requests on the failed node to be smoothly migrated to, and then recovered on, another working node.

Analysis
- To support fault resilience, we think the routing mechanism should be extended with two important capabilities: checkpointing and fault recovery.
- Challenges:
  - Logging every incoming request for checkpointing is very expensive
    ⇒ The request routing mechanism should be content-aware, so that it can differentiate between kinds of requests and provide a corresponding fault-resilience guarantee.
  - How to recover a Web request from a failed server node so that it continues execution on another working node
    ⇒ The request and its TCP connection should be smoothly and transparently migrated to another node

FT-capable Distributor
- Goal: enable the outstanding requests on the failed node to be smoothly migrated to, and then recovered on, another working node.
- We think the request routing mechanism, which a server cluster needs anyway, is the suitable place to realize the fault-resilience capability.
- We combine content-aware routing, checkpointing, and fault recovery into a new mechanism named the Fault-Tolerance-capable (FT-capable) distributor.

Fault Recovery
- We divide Web requests into two types, stateless and stateful, and provide a corresponding solution for each category.
- Stateless requests
  - static content
  - dynamic content
- Stateful requests
  - transaction-based services
  - the heart of a large number of important Web services (e.g., e-commerce)
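The classification above can be pictured as a small content-aware classifier that decides which recovery strategy applies to a request. The sketch below is only an illustration: the suffix and prefix rules, the `Kind` enum, and the example paths are assumptions, since the slides do not say how the distributor recognizes each category.

```python
from enum import Enum


class Kind(Enum):
    STATIC = "static"
    DYNAMIC = "dynamic"
    STATEFUL = "stateful"


# Hypothetical rules; a real deployment would read these from its configuration.
DYNAMIC_SUFFIXES = (".cgi", ".asp")
SESSION_PREFIXES = ("/shop/",)


def classify(path):
    """Map a request path to the category that determines its recovery strategy."""
    if path.startswith(SESSION_PREFIXES):
        return Kind.STATEFUL
    bare = path.split("?", 1)[0]          # ignore the query string
    if bare.startswith("/cgi-bin/") or bare.endswith(DYNAMIC_SUFFIXES):
        return Kind.DYNAMIC
    return Kind.STATIC
```

Only requests that are not plain static files need their arguments or session state logged, which keeps the checkpointing cost proportional to what each category actually requires.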

Fault Recovery -- Static Requests
- A majority of Web requests are for static objects, such as HTML files, images, and videos.
- If a server node fails in the middle of a static request, we use the following mechanism to recover the request on another node:
  1. select a new server
  2. select an idle pre-forked connection to the target server
  3. infer how many bytes have been successfully received by the client (from information in the mapping table)
  4. issue a range request on the new server-side connection to the selected server node
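The last recovery step relies on the HTTP `Range` header, which lets a client ask for a document starting at a given byte offset. A minimal sketch of how the resumed request could be built, assuming the distributor already knows the number of bytes delivered; the function name and parameters are hypothetical.

```python
def recovery_request(path, host, bytes_delivered):
    """Build an HTTP/1.1 request for the part of `path` the client has not yet received."""
    lines = [f"GET {path} HTTP/1.1", f"Host: {host}"]
    if bytes_delivered > 0:
        # Byte offsets start at 0, so the next byte needed is at offset bytes_delivered.
        lines.append(f"Range: bytes={bytes_delivered}-")
    return "\r\n".join(lines) + "\r\n\r\n"


# Example: the client had received 4096 bytes when the original server failed.
resumed = recovery_request("/docs/a.html", "www.example.com", 4096)
```

A server that honors the header answers with a 206 Partial Content response carrying its own headers, so a distributor using this approach would presumably forward only the body bytes to the client, whose original response headers were already sent.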

Fault Recovery -- Dynamic Requests
- Dynamic content: response pages are created on demand (e.g., CGI scripts, ASP), mostly based on client-provided arguments.
- The distributor logs the user arguments conveyed in dynamic requests.
- Recovery mechanism
  1. select a new server
  2. select an idle pre-forked connection to the target server
  3. replay the request with the logged arguments

Fault Recovery -- Dynamic Requests (cont'd)
- We found that the previous approach is problematic in some situations.
- The major problem is that some dynamic requests are not “idempotent”.
  - the results of two successive requests with the same arguments differ.
- The client would have to be forced to discard the data it has already received and then receive the new response page.
  - this would be neither user-transparent nor compatible with existing browsers.
- We tackle this problem by making the distributor node a reverse proxy that “stores and then forwards” the response page.
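The store-and-forward idea can be sketched as follows: the distributor buffers the whole response before relaying anything, so a partial response from a failed node is simply discarded and the logged arguments are replayed elsewhere. The function name, the `fetch` callback, and the use of `ConnectionError` to signal a node failure are assumptions made for illustration.

```python
def fetch_with_replay(args, servers, fetch):
    """Return a complete response body, replaying `args` on the next server after a failure.

    `fetch(server, args)` is a hypothetical callback that yields response chunks
    and raises ConnectionError if the server fails part-way through.
    """
    for server in servers:
        buffered = []
        try:
            for chunk in fetch(server, args):
                buffered.append(chunk)      # store; nothing reaches the client yet
        except ConnectionError:
            continue                        # drop the partial response and replay
        return b"".join(buffered)           # forward only a complete response
    raise RuntimeError("no server could complete the request")
```

Because the client never sees bytes from the failed attempt, it does not matter that the replayed request may produce a different page.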

Stateful Requests
- In some cases, the user does not browse a number of independent statically or dynamically generated pages, but is guided through a session controlled by a server-side program (e.g., a CGI script) associated with some shared state.
- These session-based services are generally built on the so-called three-tier architecture.
- Recovering a session in the three-tier architecture is a more challenging problem.

Fault Recovery -- Stateful Requests
- First, the Web site manager defines the sessions for which fault resilience is required.
  - via the GUI of the management system
  - the configuration information is stored in the URL table
- When the distributor finds a request belonging to a session, it “tags” the client and directs all subsequent requests from that client to one of the “twin servers” until it finds a request conveying the “end” action.
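The tagging behaviour described above can be sketched as a small state machine kept by the distributor. Everything named here (`SessionTagger`, the start and end paths, identifying a client by a string key) is a hypothetical illustration; the slides do not specify how sessions or the “end” action are encoded in the URL table.

```python
class SessionTagger:
    """Pin a client to the twin server from a session's first request to its end request."""

    def __init__(self, start_paths, end_paths, twin):
        self.start_paths = set(start_paths)   # requests that open a session
        self.end_paths = set(end_paths)       # requests conveying the "end" action
        self.twin = twin                      # one of the twin servers
        self.tagged = set()                   # clients currently inside a session

    def route(self, client, path, default_node):
        if client in self.tagged:
            if path in self.end_paths:
                self.tagged.discard(client)   # the end request is the last pinned one
            return self.twin
        if path in self.start_paths:
            self.tagged.add(client)
            return self.twin
        return default_node
```

While a client is tagged, even requests for unrelated pages go to the twin server, matching the statement that all subsequent requests from that client are redirected until the session ends.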

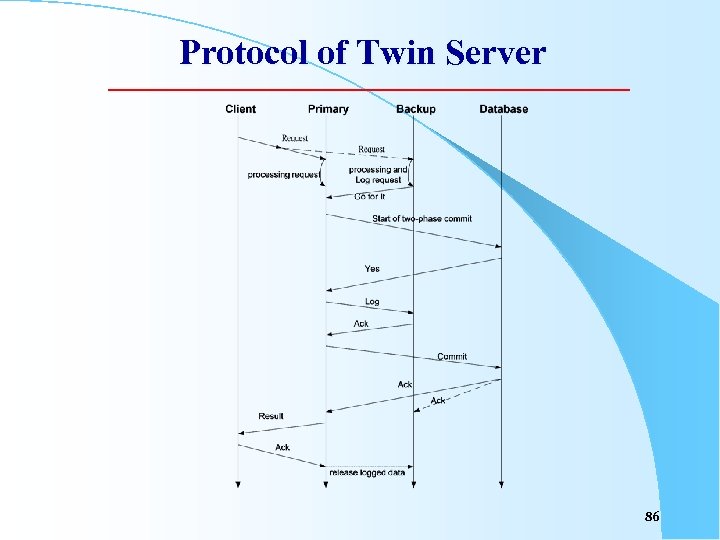

Protocol of Twin Server

Workload Description
- We created a workload that models the characteristics (e.g., file size, request distribution, file access frequency) of representative Web servers.
- It contains about 6000 unique files with a total size of about 116 MB.

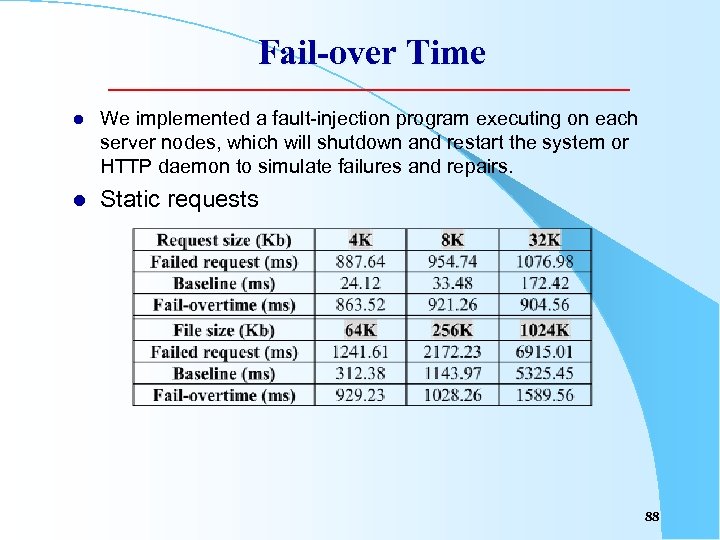

Fail-over Time
- We implemented a fault-injection program that runs on each server node and shuts down and restarts the system or the HTTP daemon to simulate failures and repairs.
- Static requests

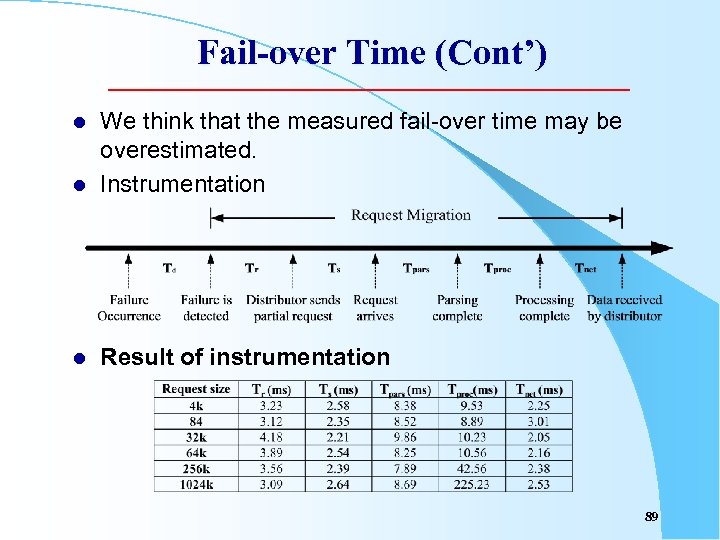

Fail-over Time (cont'd)
- We think that the measured fail-over time may be overestimated.
- Instrumentation
- Result of instrumentation

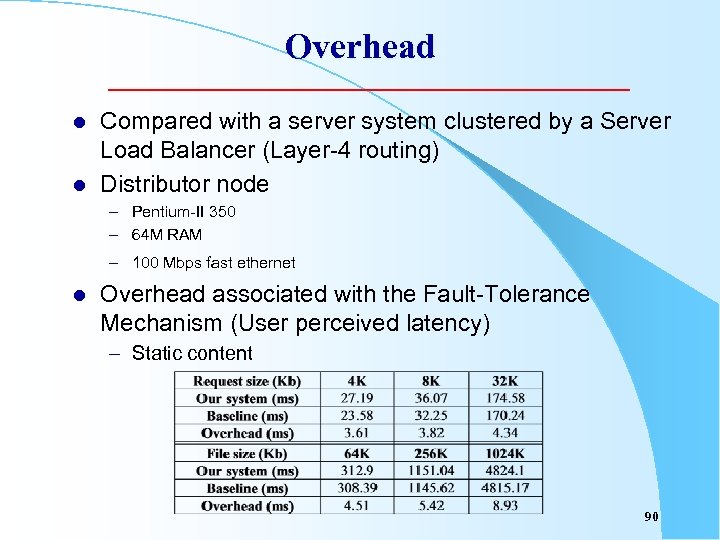

Overhead
- Compared with a server system clustered by a Server Load Balancer (Layer-4 routing)
- Distributor node
  - Pentium-II 350
  - 64 MB RAM
  - 100 Mbps Fast Ethernet
- Overhead associated with the fault-tolerance mechanism (user-perceived latency)
  - Static content

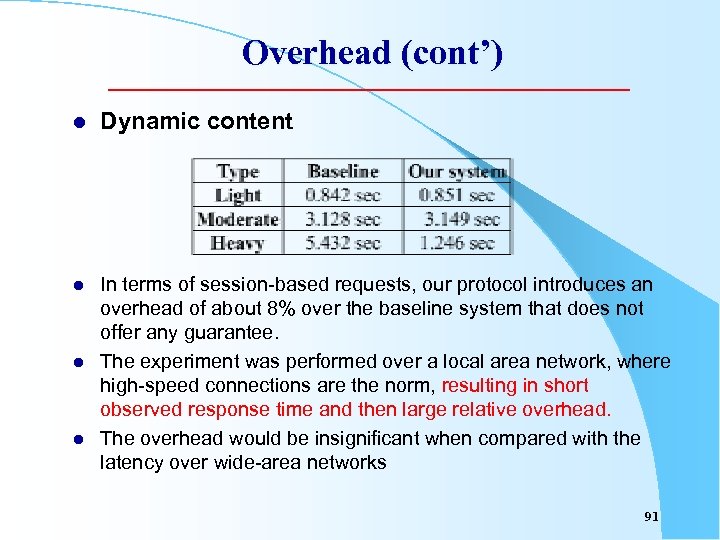

Overhead (cont'd)
- Dynamic content
- For session-based requests, our protocol introduces an overhead of about 8% over the baseline system, which offers no guarantee.
- The experiment was performed over a local area network, where high-speed connections are the norm, resulting in short observed response times and therefore a large relative overhead.
- The overhead would be insignificant compared with the latency over wide-area networks.

Overhead (Throughput)
- Peak throughput
  - Layer-4 cluster: 2489 requests/sec
  - Our system: 2378 requests/sec
  - This shows that our fault-tolerance mechanism does not cause significant performance degradation.
- At peak throughput, the CPU utilization of the distributor is 67%, and the memory consumed by our system is only slightly larger (by 2.3 MB) than that of the layer-4 dispatcher.
- This means that our mechanism is not a performance bottleneck.
  - In fact, we found that the performance bottleneck in our experiment is the network interface of the distributor node.
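To put the two peak-throughput figures in proportion, the relative degradation can be computed directly from the numbers quoted above (the variable names are ours):

```python
layer4_peak = 2489   # requests/sec, Layer-4 cluster
ours_peak = 2378     # requests/sec, FT-capable distributor

# Fraction of the Layer-4 peak throughput given up for fault tolerance.
degradation = (layer4_peak - ours_peak) / layer4_peak
print(f"{degradation:.1%}")   # prints 4.5%
```

That is, the fault-tolerance mechanism costs 111 requests/sec, roughly 4.5% of the Layer-4 peak.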

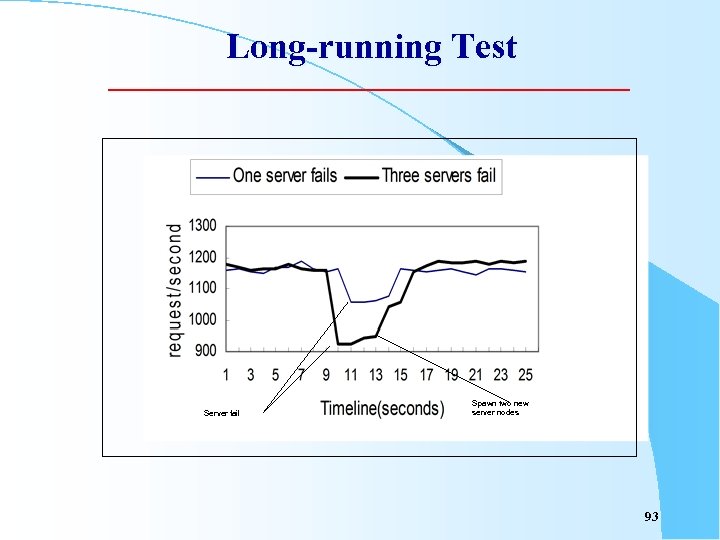

Long-running Test
- Figure annotations: a server fails; two new server nodes are spawned

Service Reliability
Our system guarantees service reliability at three levels:
- The management system provides a status detection mechanism that detects and masks server failures.
- A request-failover mechanism enables an ongoing Web request to be smoothly migrated to and recovered on another server node in the presence of server failure or overload.
- A mechanism prevents the distributor from becoming a single point of failure.

Work in Progress
- Load balancing
  - Sophisticated load balancing in the distributor
  - Dynamic content rearrangement facility to further ensure an even load distribution or meet QoS requirements
- Security
- Quality of Service support
- Service Level Agreement
  - enable content owners to specify their requirements, such as bandwidth usage, content type, number or placement of content replicas, or required degree of service reliability
- We are implementing the related mechanisms to configure the management policy to meet the complex requirements of different customers.
- Hardware support

Contributions
- Content-aware request routing mechanism
- Java-based management system
- Idea of URL table
- Performance speedup by URL formalization
- Enabling fault resilience for Web services
- Enhanced reliability for Internet services
- Content-aware load balancing algorithm
- QoS support
- Transaction-based services support
- System robustness
- Service Level Agreement and system policy

Conclusion
- Web service providers must gradually move to more sophisticated services as the content of a Web site or e-business operations becomes more complex.
- The Internet service supported by our system will be
  - Scalable
    - Cluster-based architecture
    - Efficient content-aware routing
  - Reliable
    - Failure detection
    - Request failover
    - System robustness
  - Highly manageable
    - Java-based management system
