- Number of slides: 25

National Energy Research Scientific Computing Center (NERSC)
The GUPFS Project at NERSC
GUPFS Team
NERSC Center Division, LBNL
November 2003

Global Unified Parallel File System (GUPFS)
• Global/Unified
  – A file system shared by the major NERSC production systems
  – Uses consolidated storage and provides a unified name space
  – Automatically shares user files between systems without replication
  – Integration with HPSS and the Grid is highly desired
• Parallel
  – A file system whose performance scales as the number of clients and storage devices increases

GUPFS Project Overview
• Five-year project introduced in the NERSC Strategic Proposal
• Purpose: to make advanced scientific research using NERSC systems more efficient and productive
• Simplify end-user data management by providing a shared-disk file system in the NERSC production environment
• An evaluation, selection, and deployment project
  – May conduct or support development activities to accelerate functionality or supply missing functionality

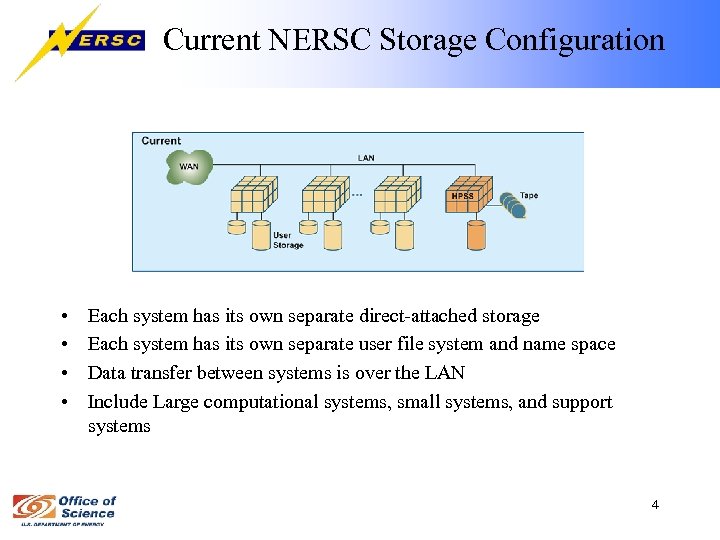

Current NERSC Storage Configuration
• Each system has its own separate direct-attached storage
• Each system has its own separate user file system and name space
• Data transfer between systems is over the LAN
• Systems include large computational systems, small systems, and support systems

NERSC Storage Vision
• Single storage pool, decoupled from NERSC computational systems
  – Diverse file access, supporting both home file systems and a large scratch file system
  – Flexible management of storage resources
  – All systems have access to all storage (requires a different fabric)
  – Buy new storage (faster and cheaper) only as we need it
• High-performance, large-capacity storage
  – Users see the same file from all systems
  – No need for replication
  – Visualization server has access to data as soon as it is created
• Integration with mass storage
  – Provide direct HSM and backups through HPSS without impacting computational systems
• Potential geographical distribution

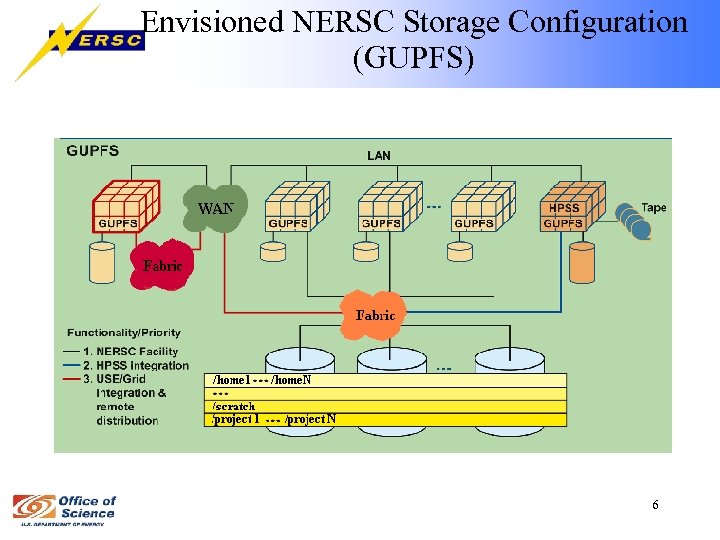

Envisioned NERSC Storage Configuration (GUPFS)

Where We Are Now
• At the beginning of the 3rd year of the three-year technology evaluation phase
• Evaluating the technology components needed for GUPFS:
  – Shared file system
  – SAN fabric
  – Storage
• Constructed a complex testbed environment simulating the envisioned NERSC environment
• Developed testing methodologies for evaluation
• Identifying and testing appropriate technologies in all three areas
• Collaborating with vendors to fix problems and influence their development in directions beneficial to HPC

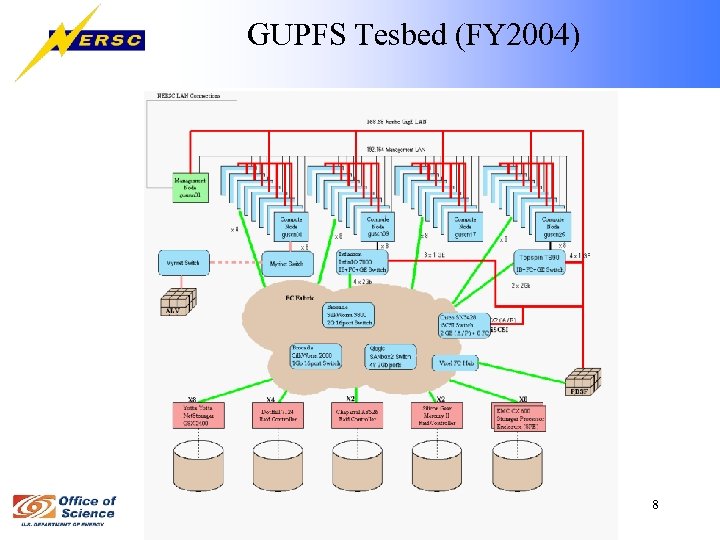

GUPFS Testbed (FY 2004)

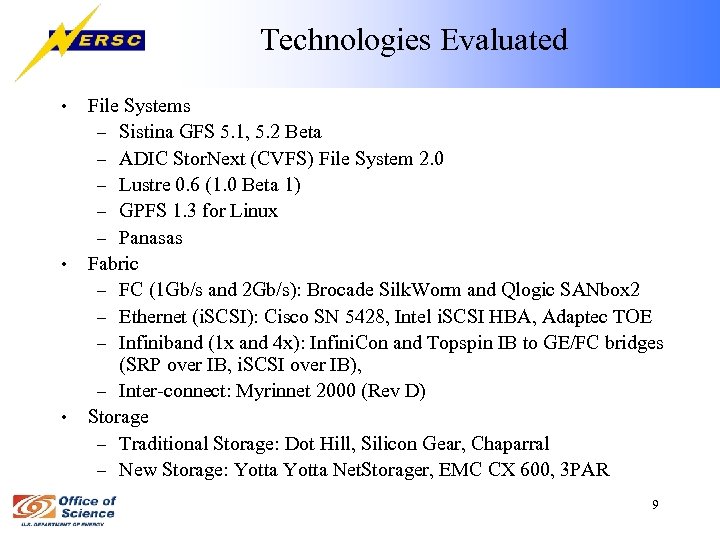

Technologies Evaluated
• File Systems
  – Sistina GFS 5.1, 5.2 Beta
  – ADIC StorNext (CVFS) File System 2.0
  – Lustre 0.6 (1.0 Beta 1)
  – GPFS 1.3 for Linux
  – Panasas
• Fabric
  – FC (1 Gb/s and 2 Gb/s): Brocade SilkWorm and Qlogic SANbox 2
  – Ethernet (iSCSI): Cisco SN 5428, Intel iSCSI HBA, Adaptec TOE
  – Infiniband (1x and 4x): InfiniCon and Topspin IB to GE/FC bridges (SRP over IB, iSCSI over IB)
  – Interconnect: Myrinet 2000 (Rev D)
• Storage
  – Traditional storage: Dot Hill, Silicon Gear, Chaparral
  – New storage: Yotta NetStorager, EMC CX600, 3PAR

Parallel I/O Tests
• I/O tests used for file system and storage evaluation:
  – Cache Write
  – Cache Read
  – Cache Rotate Read
  – Disk Write
  – Disk Read
  – Disk Rotate Read
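
The slide names the tests without defining them. As an illustration only, assume that a "rotate read" means each client reads back a file written by a different client (so the data cannot come from the reader's own page cache), and that the "cache" and "disk" variants differ in whether the per-client file fits in client memory. Under those assumptions, the test setup can be sketched in Python; the function names and the rotation offset are hypothetical.

```python
def rotate_read_assignment(num_clients: int, shift: int = 1) -> dict[int, int]:
    """Map each reading client to the client whose file it reads.

    Hypothetical scheme: client i reads the file written by client
    (i + shift) mod N, so no client reads back its own data.
    """
    if num_clients < 2:
        raise ValueError("rotate read needs at least two clients")
    if shift % num_clients == 0:
        raise ValueError("shift must not map a client onto itself")
    return {i: (i + shift) % num_clients for i in range(num_clients)}


def io_test_class(file_size_bytes: int, client_mem_bytes: int) -> str:
    """Assumed rule: files smaller than client memory exercise the cache;
    larger files force the I/O through to the disks."""
    return "cache" if file_size_bytes < client_mem_bytes else "disk"


if __name__ == "__main__":
    GB = 1024 ** 3
    print(rotate_read_assignment(4))              # {0: 1, 1: 2, 2: 3, 3: 0}
    print(io_test_class(256 * 1024 ** 2, 1 * GB))  # cache
    print(io_test_class(4 * GB, 1 * GB))           # disk
```

The 1 GB client memory in the example matches the test clients listed later in the deck.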

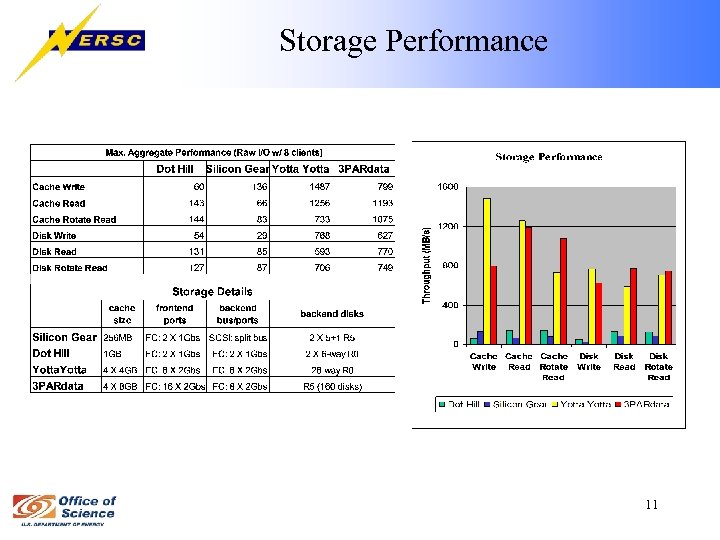

Storage Performance

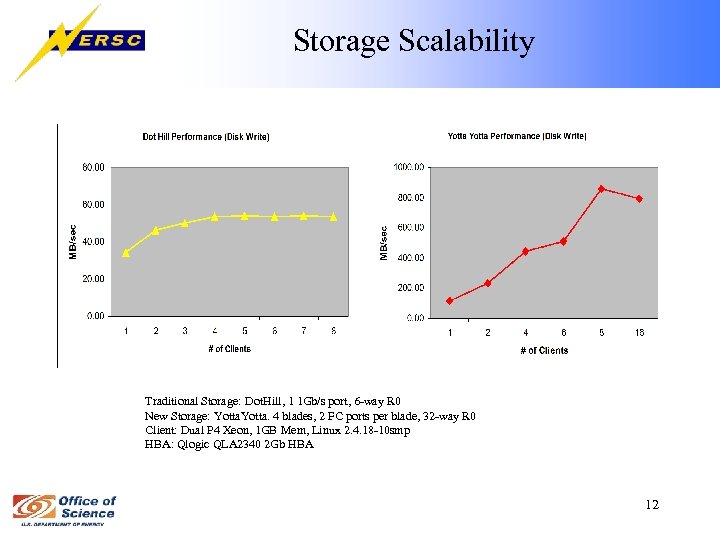

Storage Scalability
Traditional storage: Dot Hill, one 1 Gb/s port, 6-way RAID-0
New storage: Yotta, 4 blades, 2 FC ports per blade, 32-way RAID-0
Client: dual P4 Xeon, 1 GB memory, Linux 2.4.18-10smp
HBA: Qlogic QLA2340 2 Gb/s HBA
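
One rough way to read this comparison is to set each array's nominal front-end port bandwidth against the per-client HBA limit. The sketch below assumes the common rule of thumb of about 100 MB/s of payload per 1 Gb/s FC port (about 200 MB/s per 2 Gb/s port) and ignores disk, RAID, and protocol overheads, so its numbers are upper bounds, not measured results.

```python
# Approximate usable payload per FC port in MB/s (rule-of-thumb assumption).
FC_MB_PER_S = {1: 100, 2: 200}


def port_ceiling(ports: int, gbps: int) -> int:
    """Upper bound on throughput set by a device's FC ports."""
    return ports * FC_MB_PER_S[gbps]


def clients_to_saturate(array_mb_s: int, client_hba_mb_s: int) -> int:
    """Smallest number of clients whose HBAs together reach the array ceiling."""
    return -(-array_mb_s // client_hba_mb_s)  # ceiling division on integers


dot_hill = port_ceiling(ports=1, gbps=1)      # one 1 Gb/s port
yotta = port_ceiling(ports=4 * 2, gbps=2)     # 4 blades x 2 ports, 2 Gb/s each
client_hba = port_ceiling(ports=1, gbps=2)    # one QLA2340 per client

print(dot_hill, yotta, client_hba)
print(clients_to_saturate(dot_hill, client_hba))
print(clients_to_saturate(yotta, client_hba))
```

Under these assumptions a single client can already saturate the traditional array's one port, while the new storage needs roughly eight clients before its ports, rather than the clients' HBAs, become the limit.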

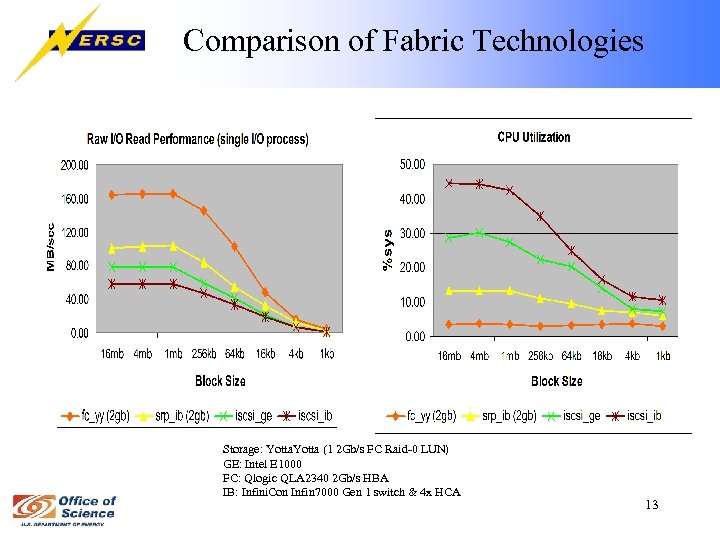

Comparison of Fabric Technologies
Storage: Yotta (one 2 Gb/s FC RAID-0 LUN)
GE: Intel E1000
FC: Qlogic QLA2340 2 Gb/s HBA
IB: InfiniCon Infin 7000 Gen 1 switch & 4x HCA
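
For context, the nominal data rate of each host link follows from its signalling rate and the 8b/10b line code used by all three at this generation. This is a back-of-the-envelope sketch, not a measurement. It assumes 1000BASE-X signalling for GE (copper 1000BASE-T uses a different code but carries the same 1 Gb/s), 2.125 GBaud for 2 Gb/s FC, and single-data-rate 4x Infiniband at 2.5 GBaud per lane. Because the target in this test is a single 2 Gb/s FC LUN, achieved throughput is presumably capped near the FC figure whichever host fabric is used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    name: str
    baud_gbd: float    # signalling rate in GBaud, summed over all lanes
    efficiency: float  # fraction of line bits carrying data (8b/10b -> 0.8)

    def data_mb_s(self) -> float:
        """Nominal one-direction data rate in MB/s (1 MB = 10**6 bytes)."""
        return self.baud_gbd * self.efficiency * 1000 / 8


LINKS = [
    Link("Gigabit Ethernet (1000BASE-X)", 1.25, 0.8),
    Link("2 Gb/s Fibre Channel", 2.125, 0.8),
    Link("4x Infiniband (SDR)", 4 * 2.5, 0.8),
]

for link in LINKS:
    print(f"{link.name}: {link.data_mb_s():.1f} MB/s")
```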

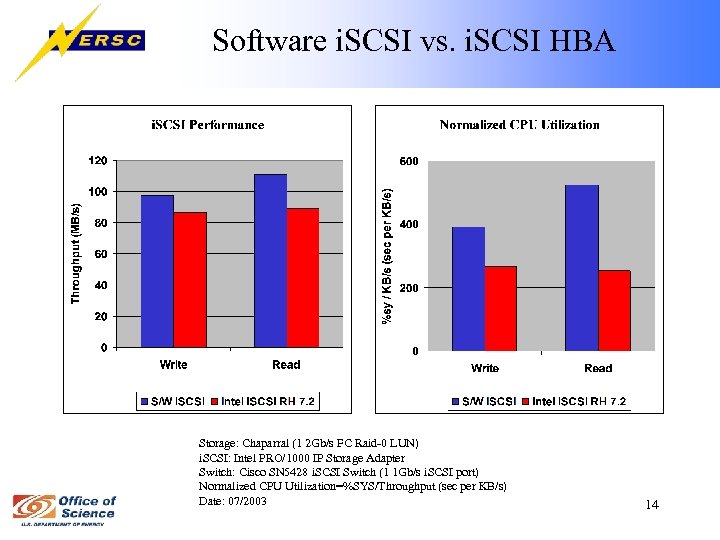

Software iSCSI vs. iSCSI HBA
Storage: Chaparral (one 2 Gb/s FC RAID-0 LUN)
iSCSI: Intel PRO/1000 IP Storage Adapter
Switch: Cisco SN 5428 iSCSI switch (one 1 Gb/s iSCSI port)
Normalized CPU utilization = %SYS / throughput (sec per KB/s)
Date: 07/2003
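
Normalizing matters here because raw %SYS alone would favor whichever configuration moves less data. The metric above divides system CPU by delivered throughput, so lower values mean less CPU spent per unit of bandwidth. A minimal Python sketch of the calculation; the input values are hypothetical and are not GUPFS measurements.

```python
def normalized_cpu(sys_percent: float, throughput_kb_s: float) -> float:
    """Normalized CPU utilization = %SYS / throughput (lower is better)."""
    if throughput_kb_s <= 0:
        raise ValueError("throughput must be positive")
    return sys_percent / throughput_kb_s


# Hypothetical inputs for illustration only.
software_iscsi = normalized_cpu(sys_percent=40.0, throughput_kb_s=50_000)
iscsi_hba = normalized_cpu(sys_percent=10.0, throughput_kb_s=40_000)

print(f"software iSCSI: {software_iscsi:.2e}, iSCSI HBA: {iscsi_hba:.2e}")
if iscsi_hba < software_iscsi:
    print("HBA uses less CPU per KB/s delivered")
else:
    print("software initiator uses less CPU per KB/s delivered")
```

In this made-up case the HBA delivers less raw throughput yet still wins on CPU cost per KB/s, which is the comparison the normalized metric is designed to expose.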

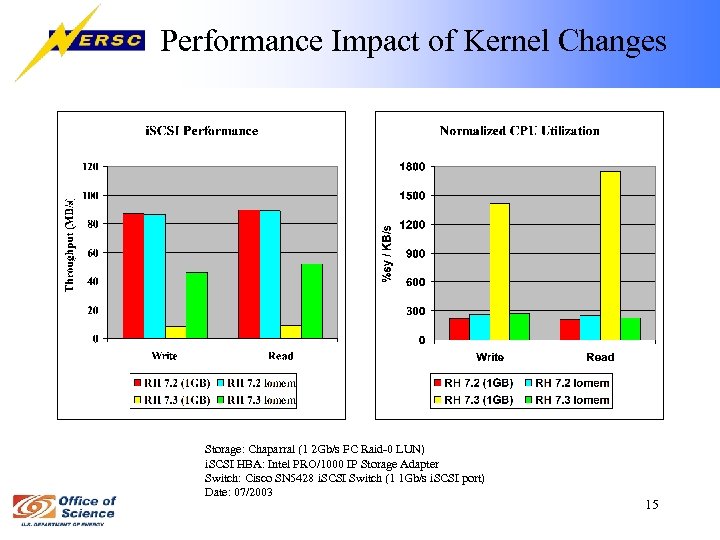

Performance Impact of Kernel Changes
Storage: Chaparral (one 2 Gb/s FC RAID-0 LUN)
iSCSI HBA: Intel PRO/1000 IP Storage Adapter
Switch: Cisco SN 5428 iSCSI switch (one 1 Gb/s iSCSI port)
Date: 07/2003

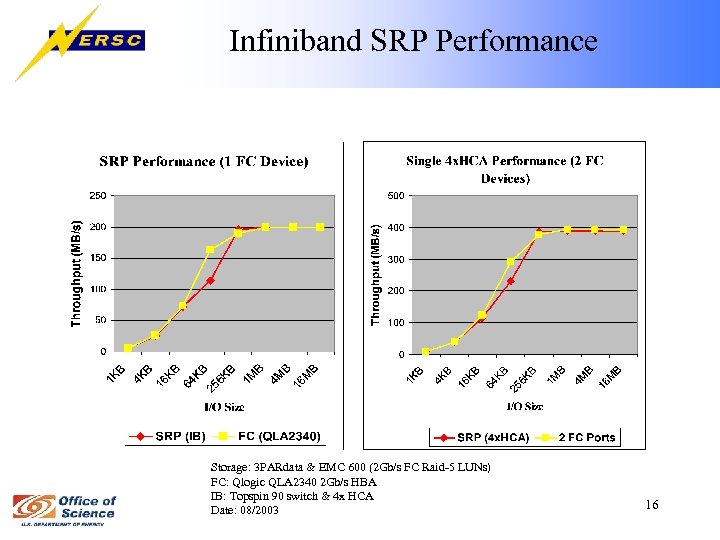

Infiniband SRP Performance
Storage: 3PARdata & EMC 600 (2 Gb/s FC RAID-5 LUNs)
FC: Qlogic QLA2340 2 Gb/s HBA
IB: Topspin 90 switch & 4x HCA
Date: 08/2003

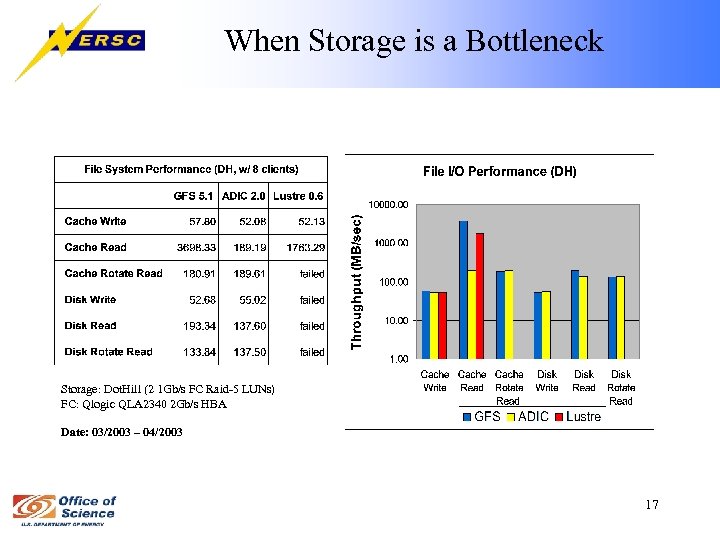

When Storage Is a Bottleneck
Storage: Dot Hill (two 1 Gb/s FC RAID-5 LUNs)
FC: Qlogic QLA2340 2 Gb/s HBA
Date: 03/2003–04/2003
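
This slide's point can be expressed as a simple serial-pipeline model: once the array's ceiling is reached, adding clients only divides the same bandwidth among more of them. A Python sketch, assuming (hypothetically) that each Dot Hill LUN sits behind its own 1 Gb/s port worth about 100 MB/s and that each client's 2 Gb/s HBA carries about 200 MB/s. Disk and RAID-5 parity overheads, which would lower the real ceiling further, are ignored.

```python
def delivered_mb_s(num_clients: int, per_client_mb_s: float, storage_mb_s: float) -> float:
    """Aggregate throughput of stages in series: the slowest stage wins."""
    return min(num_clients * per_client_mb_s, storage_mb_s)


# Assumed port-level ceilings (rules of thumb, not GUPFS measurements).
CLIENT_HBA_MB_S = 200.0    # one 2 Gb/s QLA2340 per client
DOT_HILL_MB_S = 2 * 100.0  # two LUNs, each assumed behind a 1 Gb/s FC port

for clients in (1, 2, 4, 8):
    total = delivered_mb_s(clients, CLIENT_HBA_MB_S, DOT_HILL_MB_S)
    print(f"{clients} clients: {total:.0f} MB/s total, {total / clients:.0f} MB/s each")
```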

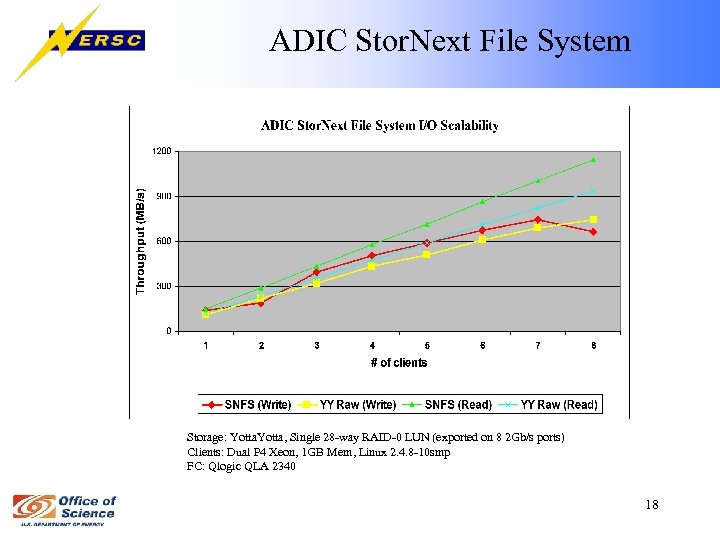

ADIC StorNext File System
Storage: Yotta, single 28-way RAID-0 LUN (exported on eight 2 Gb/s ports)
Clients: dual P4 Xeon, 1 GB memory, Linux 2.4.8-10smp
FC: Qlogic QLA2340

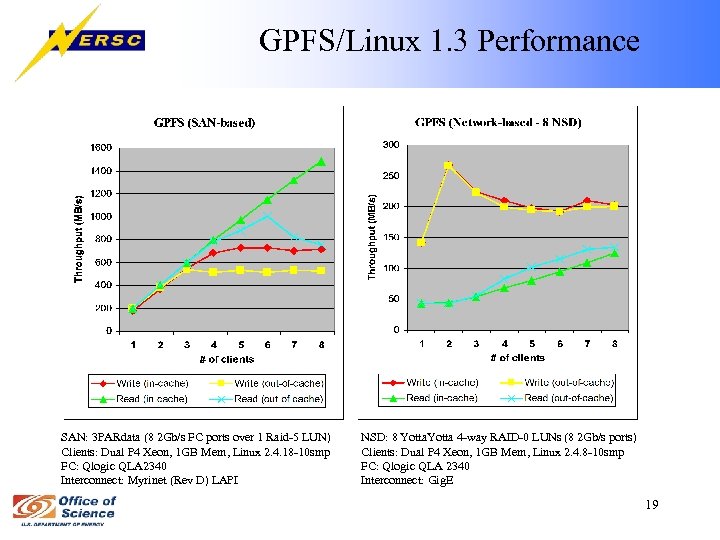

GPFS/Linux 1.3 Performance
• SAN: 3PARdata (eight 2 Gb/s FC ports over one RAID-5 LUN)
  – Clients: dual P4 Xeon, 1 GB memory, Linux 2.4.18-10smp
  – FC: Qlogic QLA2340
  – Interconnect: Myrinet (Rev D) LAPI
• NSD: eight Yotta 4-way RAID-0 LUNs (eight 2 Gb/s ports)
  – Clients: dual P4 Xeon, 1 GB memory, Linux 2.4.8-10smp
  – FC: Qlogic QLA2340
  – Interconnect: GigE

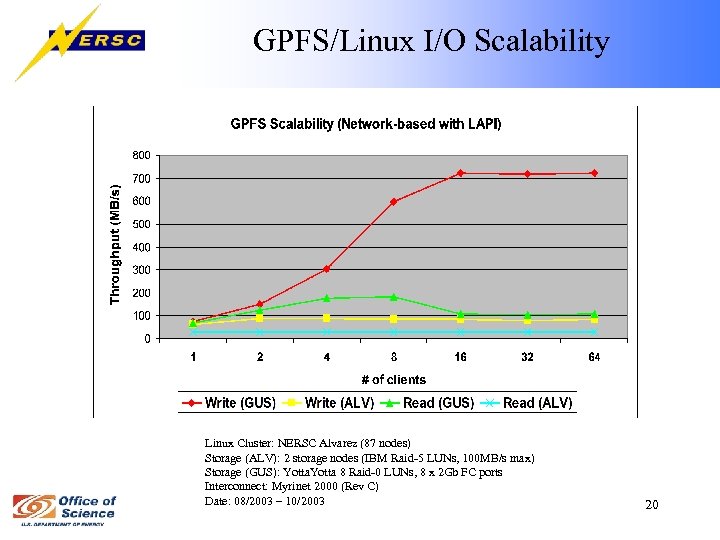

GPFS/Linux I/O Scalability
Linux cluster: NERSC Alvarez (87 nodes)
Storage (ALV): 2 storage nodes (IBM RAID-5 LUNs, 100 MB/s max)
Storage (GUS): Yotta, 8 RAID-0 LUNs, 8 × 2 Gb/s FC ports
Interconnect: Myrinet 2000 (Rev C)
Date: 08/2003–10/2003

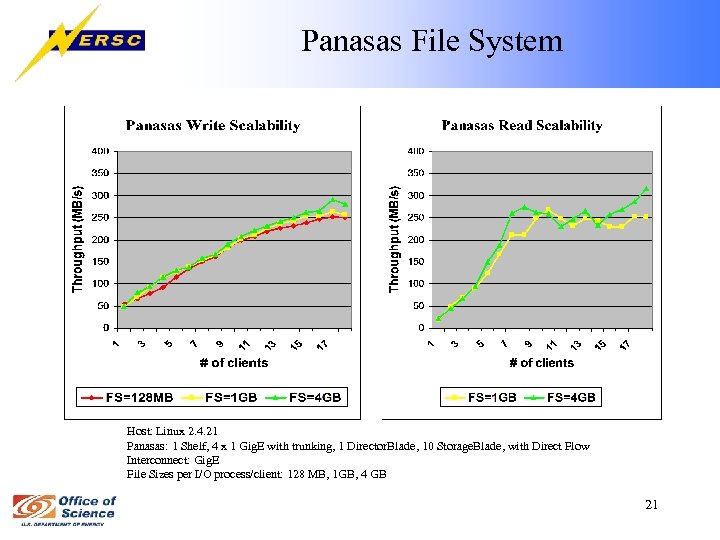

Panasas File System
Host: Linux 2.4.21
Panasas: 1 shelf, 4 × 1 GigE with trunking, 1 DirectorBlade, 10 StorageBlades, with DirectFlow
Interconnect: GigE
File sizes per I/O process/client: 128 MB, 1 GB, 4 GB

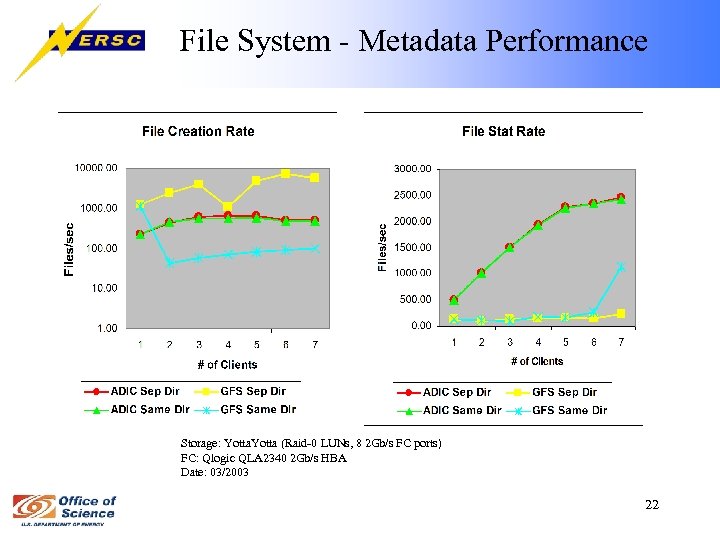

File System - Metadata Performance
Storage: Yotta (RAID-0 LUNs, eight 2 Gb/s FC ports)
FC: Qlogic QLA2340 2 Gb/s HBA
Date: 03/2003
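
The slide does not say which metadata operations were measured. As a hypothetical single-client sketch, one might time file create, stat, and unlink with standard POSIX calls from Python. The `parent` argument would point at a shared file system mount to test that file system. A real shared-file-system test would also run many clients concurrently against the same directory, which this sketch does not attempt.

```python
import os
import tempfile
import time
from typing import Dict, Optional


def _rate(count: int, start: float) -> float:
    """Operations per second since `start`, guarding against a zero interval."""
    return count / max(time.perf_counter() - start, 1e-9)


def metadata_rates(num_files: int = 1000, parent: Optional[str] = None) -> Dict[str, float]:
    """Time create, stat, and unlink of many empty files; return ops/s per operation."""
    rates: Dict[str, float] = {}
    with tempfile.TemporaryDirectory(dir=parent) as work:
        paths = [os.path.join(work, f"f{i:06d}") for i in range(num_files)]

        start = time.perf_counter()
        for p in paths:
            # O_EXCL guarantees each call really creates a new file.
            fd = os.open(p, os.O_CREAT | os.O_EXCL | os.O_WRONLY, 0o644)
            os.close(fd)
        rates["create"] = _rate(num_files, start)

        start = time.perf_counter()
        for p in paths:
            os.stat(p)
        rates["stat"] = _rate(num_files, start)

        start = time.perf_counter()
        for p in paths:
            os.unlink(p)
        rates["unlink"] = _rate(num_files, start)
    return rates


if __name__ == "__main__":
    for op, rate in metadata_rates(500).items():
        print(f"{op:>6}: {rate:,.0f} ops/s")
```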

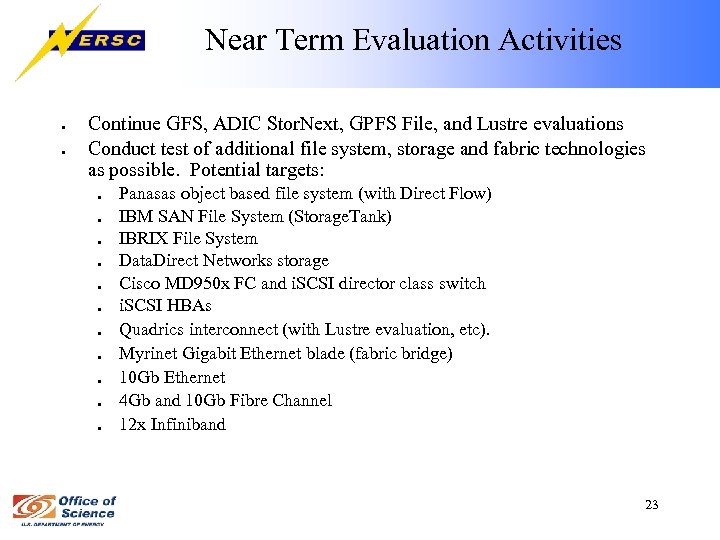

Near-Term Evaluation Activities
● Continue GFS, ADIC StorNext, GPFS, and Lustre evaluations
● Test additional file system, storage, and fabric technologies as possible. Potential targets:
  ● Panasas object-based file system (with DirectFlow)
  ● IBM SAN File System (Storage Tank)
  ● IBRIX file system
  ● DataDirect Networks storage
  ● Cisco MD 950x FC and iSCSI director-class switch
  ● iSCSI HBAs
  ● Quadrics interconnect (with Lustre evaluation, etc.)
  ● Myrinet Gigabit Ethernet blade (fabric bridge)
  ● 10 Gb Ethernet
  ● 4 Gb and 10 Gb Fibre Channel
  ● 12x Infiniband

Thank You
• GUPFS Project Web Site
  – http://www.nersc.gov/projects/gupfs
• GUPFS FY02 Technical Report
  – http://www.nersc.gov/aboutnersc/pubs/GUPFS_02.pdf
• Contact Info:
  – Project Lead: Greg Butler (gbutler@nersc.gov)

GUPFS Team
Greg Butler (GButler@nersc.gov, Project Lead)
Will Baird (WPBaird@lbl.gov)
Rei Lee (RCLee@lbl.gov)
Craig Tull (CETull@lbl.gov)
Michael Welcome (MLWelcome@lbl.gov)
Cary Whitney (CLWhitney@lbl.gov)
