
- Number of slides: 36

Named Entity Recognition for Danish in a CG framework
Eckhard Bick, Southern Denmark University, lineb@hum.au.dk


Topics
• DanGram system overview
• Distributed NER techniques:
  - pattern matching
  - lexical
  - CG-rules
• Evaluation and outlook


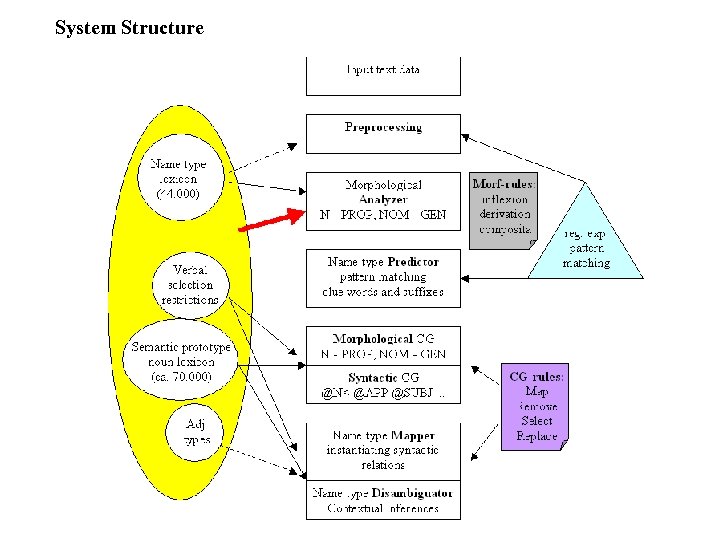

System Structure


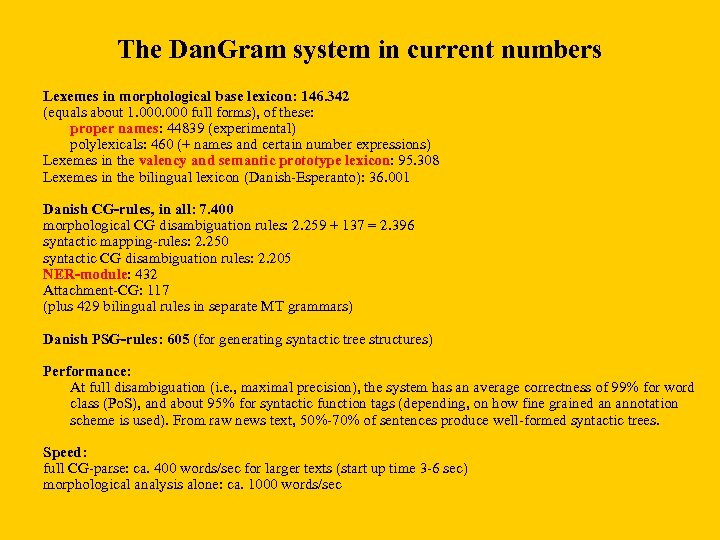

The DanGram system in current numbers
Lexemes in morphological base lexicon: 146.342 (equals about 1.000 full forms), of these:
• proper names: 44839 (experimental)
• polylexicals: 460 (+ names and certain number expressions)
Lexemes in the valency and semantic prototype lexicon: 95.308
Lexemes in the bilingual lexicon (Danish-Esperanto): 36.001
Danish CG-rules, in all: 7.400
• morphological CG disambiguation rules: 2.259 + 137 = 2.396
• syntactic mapping-rules: 2.250
• syntactic CG disambiguation rules: 2.205
• NER-module: 432
• Attachment-CG: 117
(plus 429 bilingual rules in separate MT grammars)
Danish PSG-rules: 605 (for generating syntactic tree structures)
Performance: At full disambiguation (i.e., maximal precision), the system has an average correctness of 99% for word class (PoS), and about 95% for syntactic function tags (depending on how fine-grained an annotation scheme is used). From raw news text, 50%-70% of sentences produce well-formed syntactic trees.
Speed:
• full CG-parse: ca. 400 words/sec for larger texts (start-up time 3-6 sec)
• morphological analysis alone: ca. 1000 words/sec


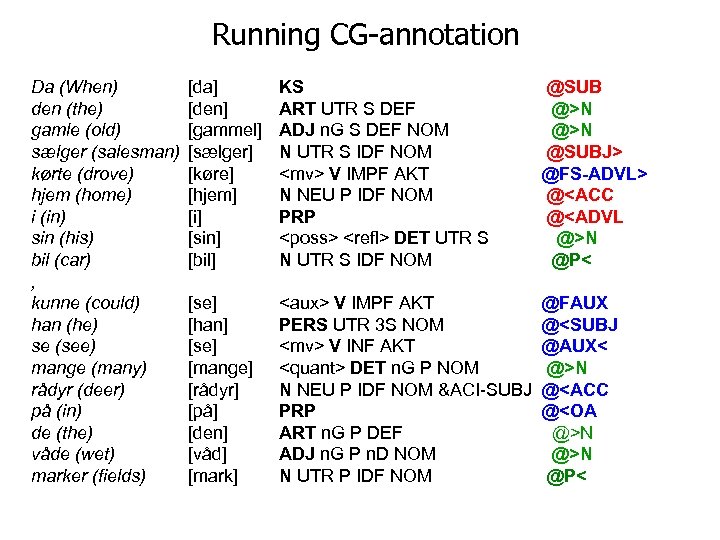

Running CG-annotation
Da (When) den (the) gamle (old) sælger (salesman) kørte (drove) hjem (home) i (in) sin (his) bil (car), kunne (could) han (he) se (see) mange (many) rådyr (deer) på (in) de (the) våde (wet) marker (fields)
Base forms: [da] [den] [gammel] [sælger] [køre] [hjem] [i] [sin] [bil]
Tags: KS ART UTR S DEF ADJ nG S DEF NOM N UTR S IDF NOM


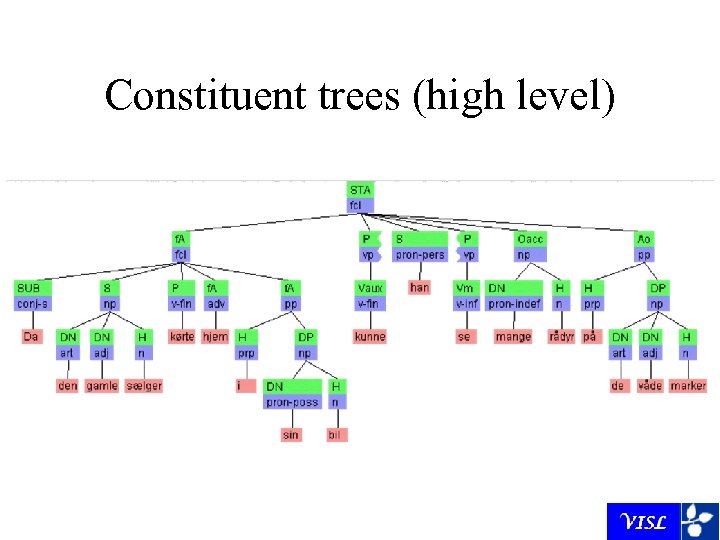

Constituent trees (high level)


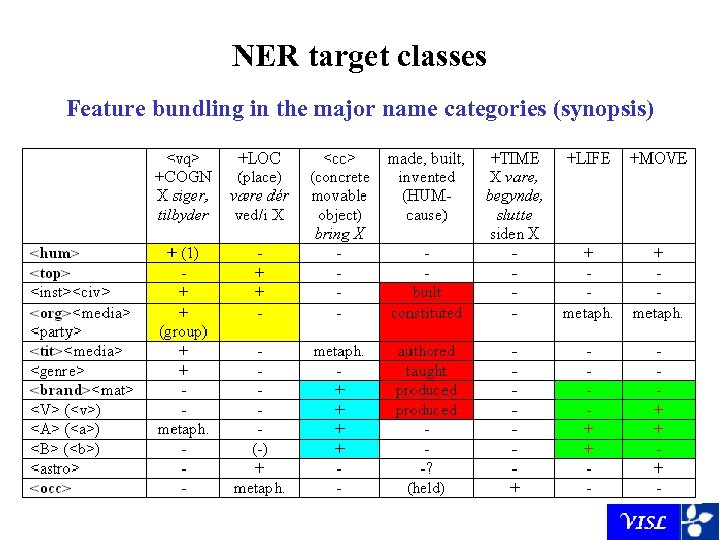

NER target classes
Feature bundling in the major name categories (synopsis)


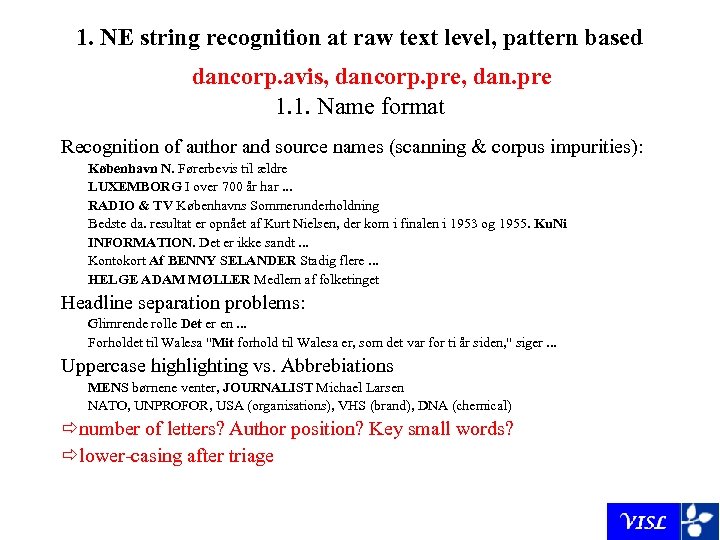

1. NE string recognition at raw text level, pattern based
dancorp.avis, dancorp.pre, dan.pre
1.1. Name format
Recognition of author and source names (scanning & corpus impurities):
• København N. Førerbevis til ældre
• LUXEMBORG I over 700 år har...
• RADIO & TV Københavns Sommerunderholdning
• Bedste da. resultat er opnået af Kurt Nielsen, der kom i finalen i 1953 og 1955. KuNi
• INFORMATION. Det er ikke sandt...
• Kontokort Af BENNY SELANDER Stadig flere...
• HELGE ADAM MØLLER Medlem af folketinget
Headline separation problems:
• Glimrende rolle Det er en...
• Forholdet til Walesa "Mit forhold til Walesa er, som det var for ti år siden," siger...
Uppercase highlighting vs. abbreviations:
• MENS børnene venter, JOURNALIST Michael Larsen
• NATO, UNPROFOR, USA (organisations), VHS (brand), DNA (chemical)
• number of letters? Author position? Key small words?
• lower-casing after triage


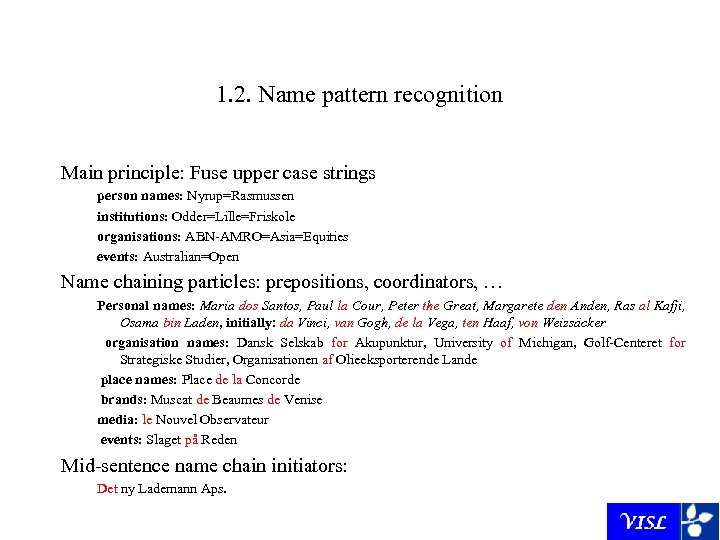

1.2. Name pattern recognition
Main principle: Fuse upper case strings
• person names: Nyrup=Rasmussen
• institutions: Odder=Lille=Friskole
• organisations: ABN-AMRO=Asia=Equities
• events: Australian=Open
Name chaining particles: prepositions, coordinators, …
• personal names: Maria dos Santos, Paul la Cour, Peter the Great, Margarete den Anden, Ras al Kafji, Osama bin Laden; initially: da Vinci, van Gogh, de la Vega, ten Haaf, von Weizsäcker
• organisation names: Dansk Selskab for Akupunktur, University of Michigan, Golf-Centeret for Strategiske Studier, Organisationen af Olieeksporterende Lande
• place names: Place de la Concorde
• brands: Muscat de Beaumes de Venise
• media: le Nouvel Observateur
• events: Slaget på Reden
Mid-sentence name chain initiators: Det ny Lademann Aps.
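The fusing principle on this slide can be illustrated with a minimal Python sketch. It is not the DanGram preprocessor: the function name `fuse_names` and the particle list `PARTICLES` are assumptions, the latter collected only from the examples above. Capitalised tokens are joined with `=`, and lower-case particles are absorbed only when another capitalised token follows them.

```python
# Hypothetical particle list, collected from the slide's examples only.
PARTICLES = {"dos", "la", "the", "den", "al", "bin", "da", "van",
             "de", "ten", "von", "for", "of", "af", "på"}


def is_capitalized(token):
    """True if the token starts with an upper-case letter."""
    return token[:1].isupper()


def fuse_names(tokens):
    """Fuse runs of capitalised tokens (plus chaining particles) with '='."""
    out = []
    i, n = 0, len(tokens)
    while i < n:
        if not is_capitalized(tokens[i]):
            out.append(tokens[i])
            i += 1
            continue
        chain = [tokens[i]]
        j = i + 1
        while j < n:
            if is_capitalized(tokens[j]):
                chain.append(tokens[j])
                j += 1
                continue
            # Look ahead over a run of lower-case particles.
            k = j
            while k < n and tokens[k] in PARTICLES:
                k += 1
            # Absorb the particles only if a capitalised token follows them.
            if k > j and k < n and is_capitalized(tokens[k]):
                chain.extend(tokens[j:k + 1])
                j = k + 1
            else:
                break
        out.append("=".join(chain))
        i = j
    return out


print(fuse_names("Osama bin Laden kom".split()))
print(fuse_names("på Place de la Concorde".split()))
```

A purely pattern-based fuser like this also swallows capitalised sentence-initial function words, which is one reason the later lexicon-supported and CG-based levels are needed.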


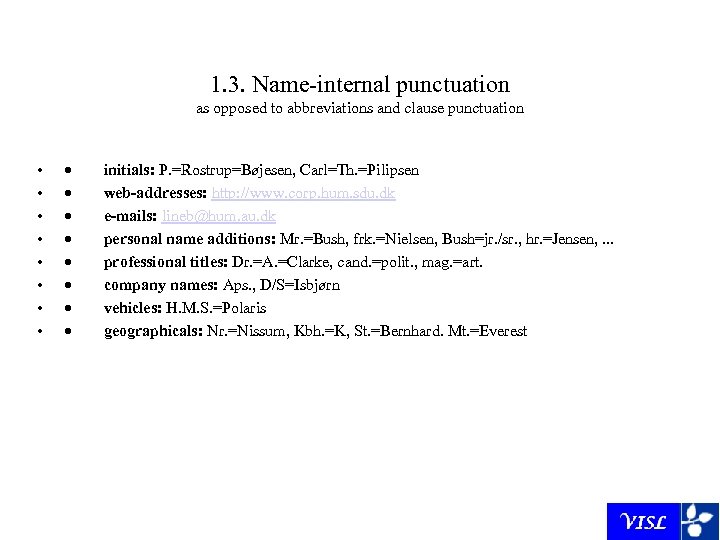

1.3. Name-internal punctuation as opposed to abbreviations and clause punctuation
• initials: P.=Rostrup=Bøjesen, Carl=Th.=Pilipsen
• web-addresses: http://www.corp.hum.sdu.dk
• e-mails: lineb@hum.au.dk
• personal name additions: Mr.=Bush, frk.=Nielsen, Bush=jr./sr., hr.=Jensen, ...
• professional titles: Dr.=A.=Clarke, cand.=polit., mag.=art.
• company names: Aps., D/S=Isbjørn
• vehicles: H.M.S.=Polaris
• geographicals: Nr.=Nissum, Kbh.=K, St.=Bernhard, Mt.=Everest


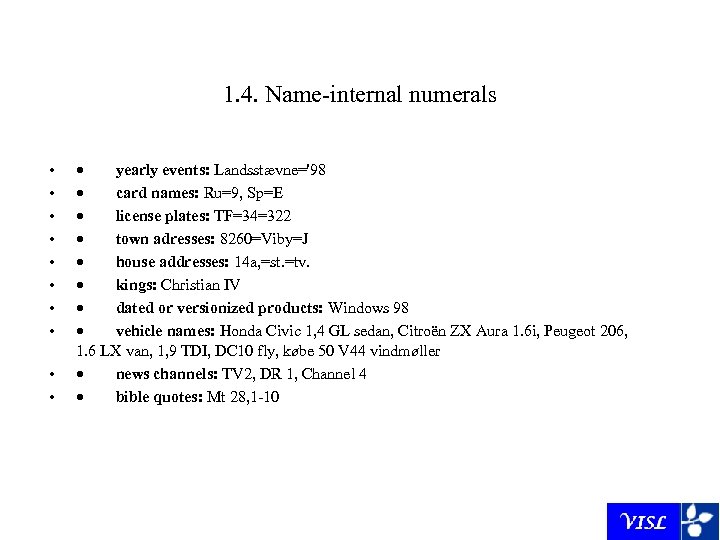

1.4. Name-internal numerals
• yearly events: Landsstævne='98
• card names: Ru=9, Sp=E
• license plates: TF=34=322
• town addresses: 8260=Viby=J
• house addresses: 14 a,=st.=tv.
• kings: Christian IV
• dated or versionized products: Windows 98
• vehicle names: Honda Civic 1,4 GL sedan, Citroën ZX Aura 1.6i, Peugeot 206, 1.6 LX van, 1,9 TDI, DC 10 fly, købe 50 V 44 vindmøller
• news channels: TV 2, DR 1, Channel 4
• bible quotes: Mt 28,1-10


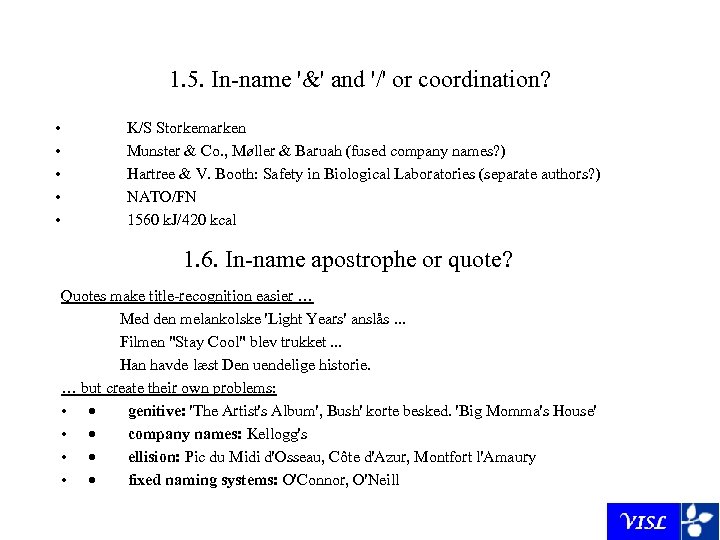

1.5. In-name '&' and '/' or coordination?
• K/S Storkemarken
• Munster & Co., Møller & Baruah (fused company names?)
• Hartree & V. Booth: Safety in Biological Laboratories (separate authors?)
• NATO/FN
• 1560 kJ/420 kcal
1.6. In-name apostrophe or quote?
Quotes make title-recognition easier …
• Med den melankolske 'Light Years' anslås...
• Filmen "Stay Cool" blev trukket...
• Han havde læst Den uendelige historie.
… but create their own problems:
• genitive: 'The Artist's Album', Bush' korte besked, 'Big Momma's House'
• company names: Kellogg's
• elision: Pic du Midi d'Osseau, Côte d'Azur, Montfort l'Amaury
• fixed naming systems: O'Connor, O'Neill


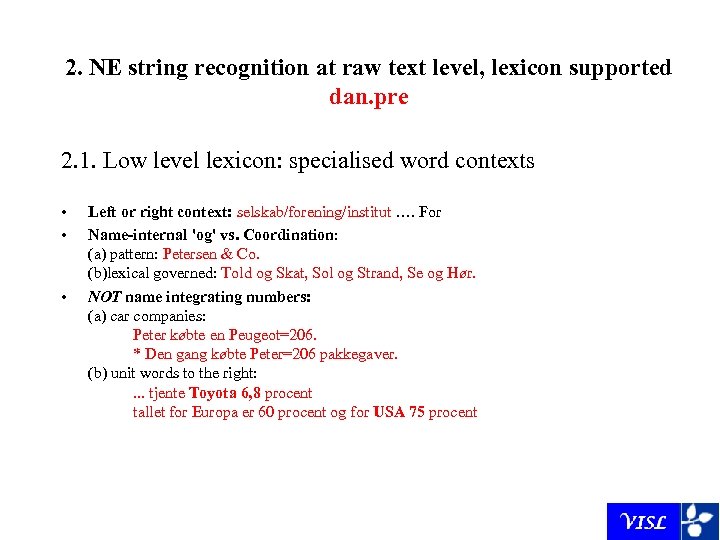

2. NE string recognition at raw text level, lexicon supported
dan.pre
2.1. Low level lexicon: specialised word contexts
• Left or right context: selskab/forening/institut … for
• Name-internal 'og' vs. coordination:
  (a) pattern: Petersen & Co.
  (b) lexically governed: Told og Skat, Sol og Strand, Se og Hør
• NOT name integrating numbers:
  (a) car companies: Peter købte en Peugeot=206. * Den gang købte Peter=206 pakkegaver.
  (b) unit words to the right: ... tjente Toyota 6,8 procent; tallet for Europa er 60 procent og for USA 75 procent
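As a rough illustration of the two number constraints in 2.1, the following Python sketch fuses a name with a following numeral only if the name is a known car brand and the numeral is not followed by a unit word. The word lists `CAR_BRANDS` and `UNIT_WORDS` and the function `fuse_name_number` are assumptions made for this example, filled only with words from the slide; the real system draws on its own lexicon.

```python
# Hypothetical mini-lexica, filled with words from the slide's examples.
CAR_BRANDS = {"Peugeot", "Toyota"}
UNIT_WORDS = {"procent"}


def fuse_name_number(tokens):
    """Fuse 'Brand 206' into 'Brand=206' unless a unit word follows the number."""
    out = []
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        after = tokens[i + 2] if i + 2 < len(tokens) else None
        if (tok in CAR_BRANDS            # (a) only car brands integrate numbers
                and nxt is not None
                and nxt[0].isdigit()
                and after not in UNIT_WORDS):  # (b) '6,8 procent' is a quantity
            out.append(tok + "=" + nxt)
            i += 2
        else:
            out.append(tok)
            i += 1
    return out


print(fuse_name_number("Peter købte en Peugeot 206".split()))
print(fuse_name_number("tjente Toyota 6,8 procent".split()))
```

The lexical check is what keeps `Peter 206 pakkegaver` unfused, while the right-context check keeps `Toyota 6,8 procent` apart even though Toyota is a car brand.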


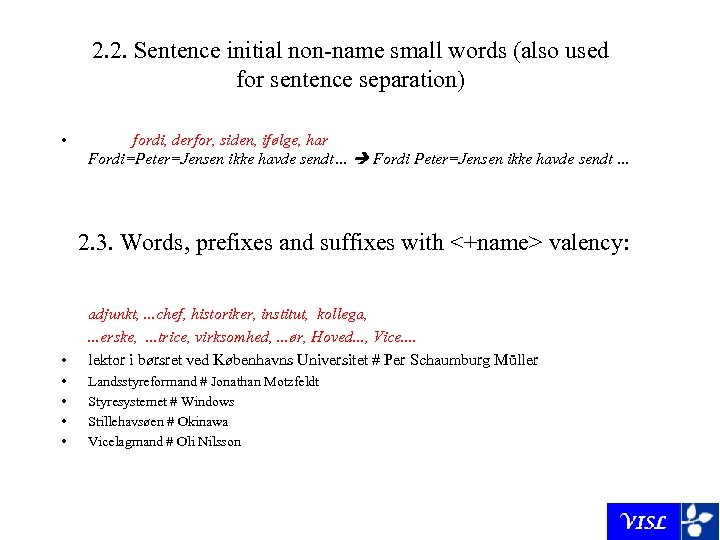

2.2. Sentence initial non-name small words (also used for sentence separation)
• fordi, derfor, siden, ifølge, har
• Fordi=Peter=Jensen ikke havde sendt…
• Fordi Peter=Jensen ikke havde sendt…
2.3. Words, prefixes and suffixes with <+name> valency:
• adjunkt, ...chef, historiker, institut, kollega, ...erske, ...trice, virksomhed, ...ør, Hoved..., Vice...
• lektor i børsret ved Københavns Universitet # Per Schaumburg Müller
• Landsstyreformand # Jonathan Motzfeldt
• Styresystemet # Windows
• Stillehavsøen # Okinawa
• Vicelagmand # Oli Nilsson
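The small-word check in 2.2 can be sketched in a few lines of Python. This is only an illustration under assumptions: the set `SMALL_WORDS` contains just the five words listed on the slide, and `split_initial_small_word` is a hypothetical helper that operates on an already fused, `=`-joined chain.

```python
# The five sentence-initial small words listed on the slide.
SMALL_WORDS = {"fordi", "derfor", "siden", "ifølge", "har"}


def split_initial_small_word(fused):
    """Detach a leading non-name small word from a fused name chain."""
    parts = fused.split("=")
    if len(parts) > 1 and parts[0].lower() in SMALL_WORDS:
        return [parts[0], "=".join(parts[1:])]
    return [fused]


print(split_initial_small_word("Fordi=Peter=Jensen"))
print(split_initial_small_word("Peter=Jensen"))
```

The same list can double as a sentence-boundary cue, since a capitalised small word of this kind usually opens a new sentence.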


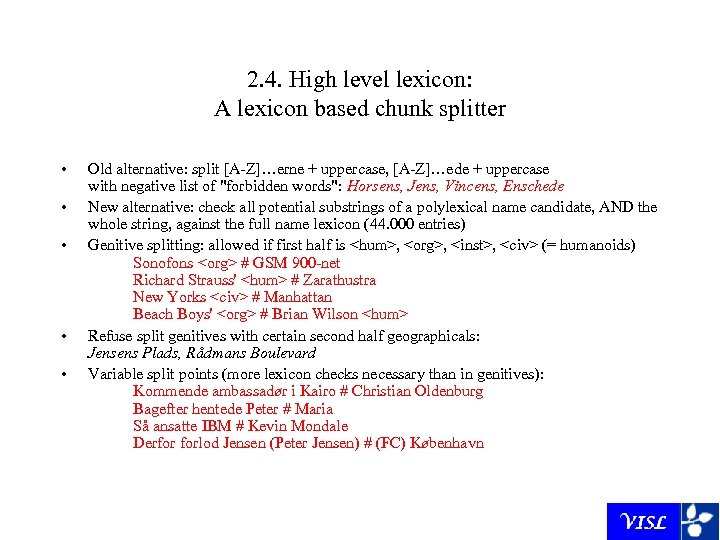

2.4. High level lexicon: A lexicon based chunk splitter
• Old alternative: split [A-Z]…erne + uppercase, [A-Z]…ede + uppercase, with negative list of "forbidden words": Horsens, Jens, Vincens, Enschede
• New alternative: check all potential substrings of a polylexical name candidate, AND the whole string, against the full name lexicon (44.000 entries)
• Genitive splitting: allowed if first half is
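One possible reading of the "new alternative" is sketched below in Python. The slide does not say how competing splits are ranked, so the preference used here (whole-string match first, otherwise the segmentation with the fewest lexicon-known chunks, otherwise no split) is an assumption, as are the function name `split_chunk` and the toy lexicon.

```python
def split_chunk(candidate, lexicon):
    """Split an '='-fused name candidate into lexicon-known chunks.

    Assumed policy: keep the candidate if the whole string is known;
    otherwise return the segmentation with the fewest known chunks;
    if no full segmentation exists, leave the candidate unsplit.
    """
    if candidate in lexicon:
        return [candidate]
    parts = candidate.split("=")
    n = len(parts)
    # best[i] holds the shortest list of known chunks covering parts[:i].
    best = [None] * (n + 1)
    best[0] = []
    for end in range(1, n + 1):
        for start in range(end):
            piece = "=".join(parts[start:end])
            if best[start] is not None and piece in lexicon:
                option = best[start] + [piece]
                if best[end] is None or len(option) < len(best[end]):
                    best[end] = option
    return best[n] if best[n] is not None else [candidate]


# Toy lexicon for illustration only (the real name lexicon has ca. 44.000 entries).
toy_lexicon = {"Hans", "Hans=Jensen", "Porsche"}
print(split_chunk("Hans=Jensen", toy_lexicon))
print(split_chunk("Hans=Porsche", toy_lexicon))
```

Checking the whole string first protects established polylexical names from being broken up just because their parts also occur as separate entries.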


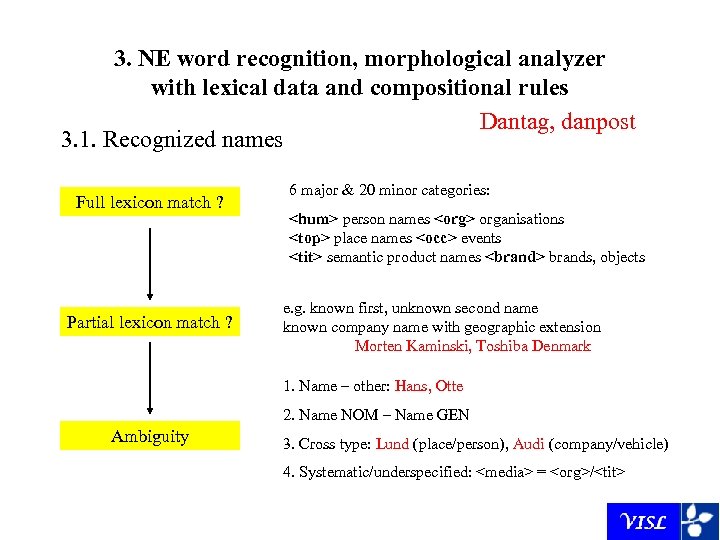

3. NE word recognition, morphological analyzer with lexical data and compositional rules
Dantag, danpost
3.1. Recognized names
Full lexicon match? Partial lexicon match?
6 major & 20 minor categories:


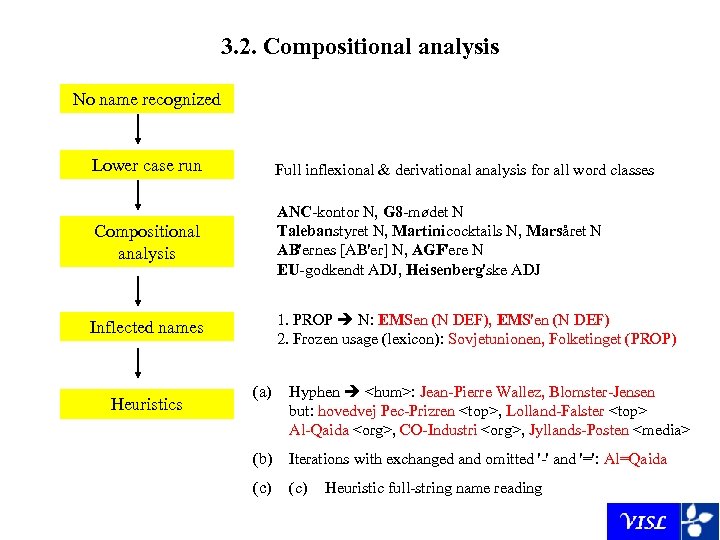

3.2. Compositional analysis
• No name recognized
• Lower case run
• Full inflexional & derivational analysis for all word classes
Compositional analysis:
• ANC-kontor N, G8-mødet N
• Talebanstyret N, Martinicocktails N, Marsåret N
• AB'ernes [AB'er] N, AGF'ere N
• EU-godkendt ADJ, Heisenberg'ske ADJ
Inflected names:
1. PROP N: EMSen (N DEF), EMS'en (N DEF)
2. Frozen usage (lexicon): Sovjetunionen, Folketinget (PROP)
Heuristics
(a) Hyphen


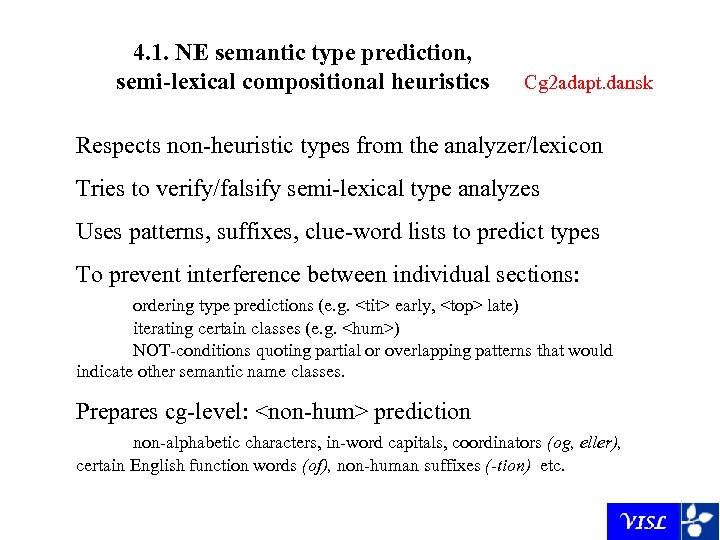

4.1. NE semantic type prediction, semi-lexical compositional heuristics
Cg2adapt.dansk
• Respects non-heuristic types from the analyzer/lexicon
• Tries to verify/falsify semi-lexical type analyses
• Uses patterns, suffixes, clue-word lists to predict types
• To prevent interference between individual sections: ordering type predictions (e.g.


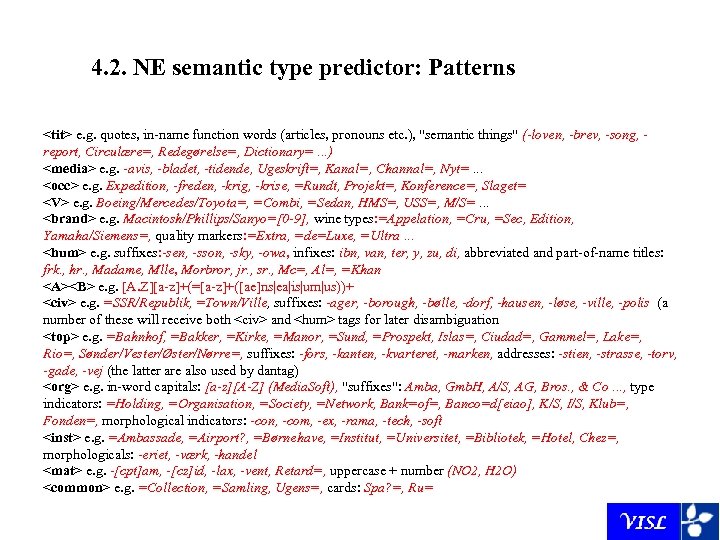

4.2. NE semantic type predictor: Patterns


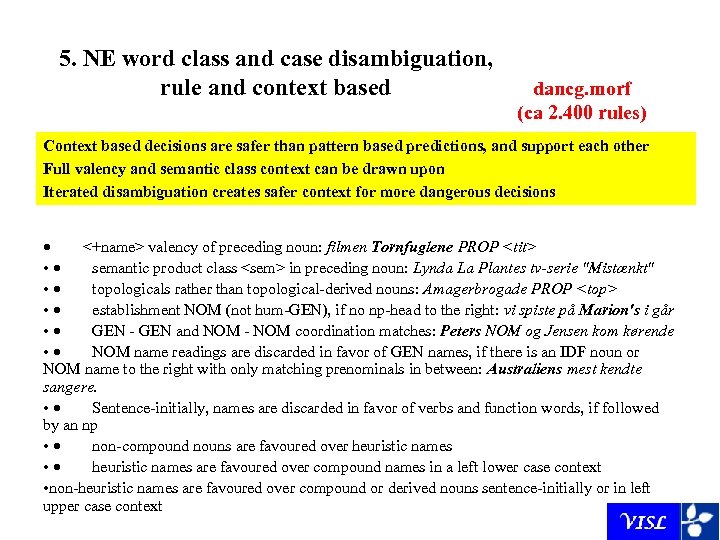

5. NE word class and case disambiguation, rule and context based
dancg.morf (ca. 2.400 rules)
• Context based decisions are safer than pattern based predictions, and support each other
• Full valency and semantic class context can be drawn upon
• Iterated disambiguation creates safer context for more dangerous decisions
• <+name> valency of preceding noun: filmen Tornfuglene PROP


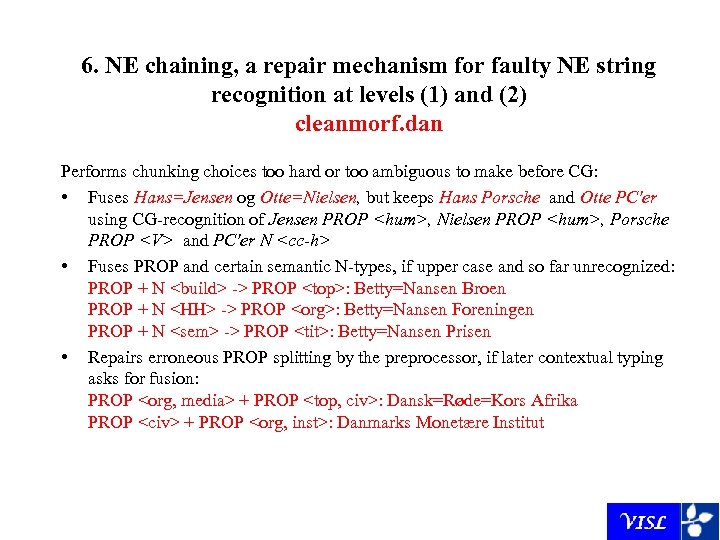

6. NE chaining, a repair mechanism for faulty NE string recognition at levels (1) and (2)
cleanmorf.dan
Performs chunking choices too hard or too ambiguous to make before CG:
• Fuses Hans=Jensen og Otte=Nielsen, but keeps Hans Porsche and Otte PC'er using CG-recognition of Jensen PROP


7. NE function classes, mapped and disambiguated by context based rules
dancg.syn (ca. 4.400 rules)
Handles, among other things, the syntactic function and attachment of names. The following are examples of functions relevant to the subsequent type mapper:
(i) @N< (nominal dependents): præsident Bush, filmen "The Matrix"
(ii) @APP (identifying appositions): Forældrebestyrelsens formand, Kurt Chistensen, anklager borgmester...
(iii) @N


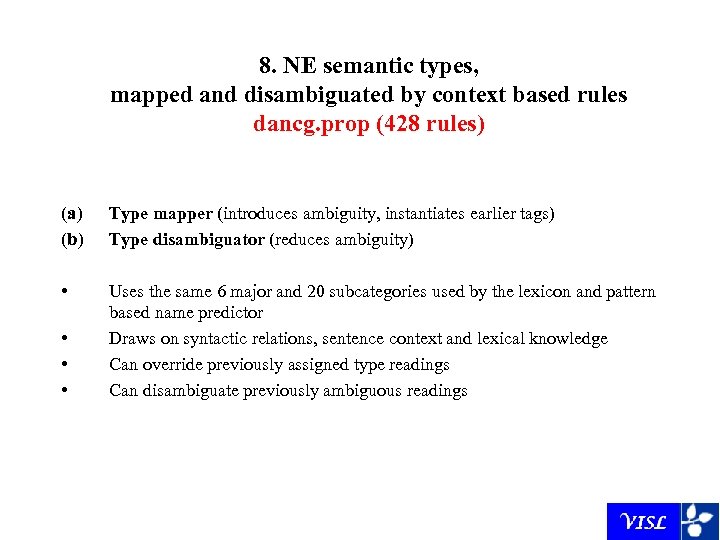

8. NE semantic types, mapped and disambiguated by context based rules
dancg.prop (428 rules)
(a) Type mapper (introduces ambiguity, instantiates earlier tags)
(b) Type disambiguator (reduces ambiguity)
• Uses the same 6 major and 20 subcategories used by the lexicon and pattern based name predictor
• Draws on syntactic relations, sentence context and lexical knowledge
• Can override previously assigned type readings
• Can disambiguate previously ambiguous readings


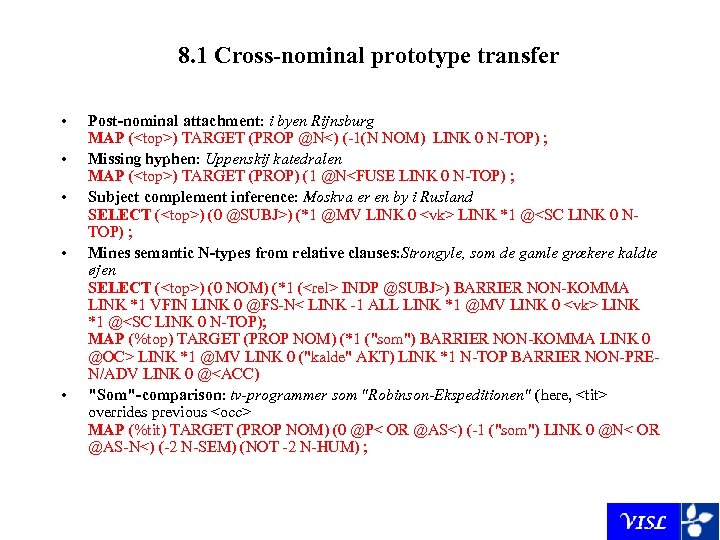

8.1 Cross-nominal prototype transfer
• Post-nominal attachment: i byen Rijnsburg
MAP (


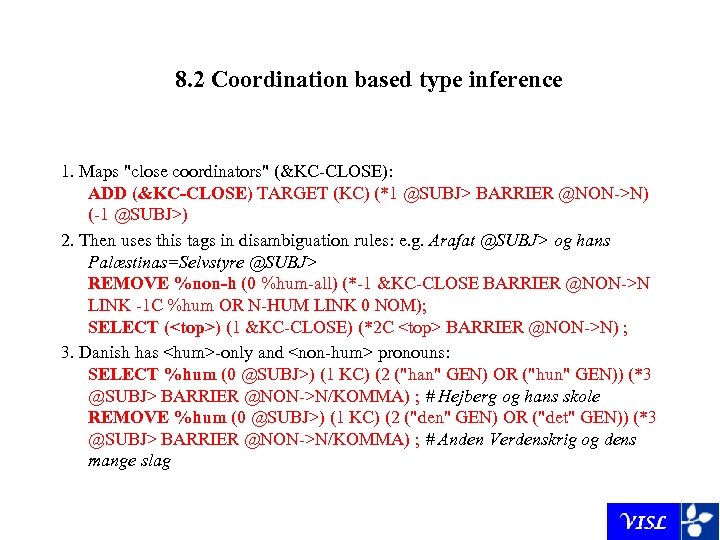

8.2 Coordination based type inference
1. Maps "close coordinators" (&KC-CLOSE):
ADD (&KC-CLOSE) TARGET (KC) (*1 @SUBJ> BARRIER @NON->N) (-1 @SUBJ>)
2. Then uses these tags in disambiguation rules, e.g. Arafat @SUBJ> og hans Palæstinas=Selvstyre @SUBJ>
REMOVE %non-h (0 %hum-all) (*-1 &KC-CLOSE BARRIER @NON->N LINK -1 C %hum OR N-HUM LINK 0 NOM);
SELECT (


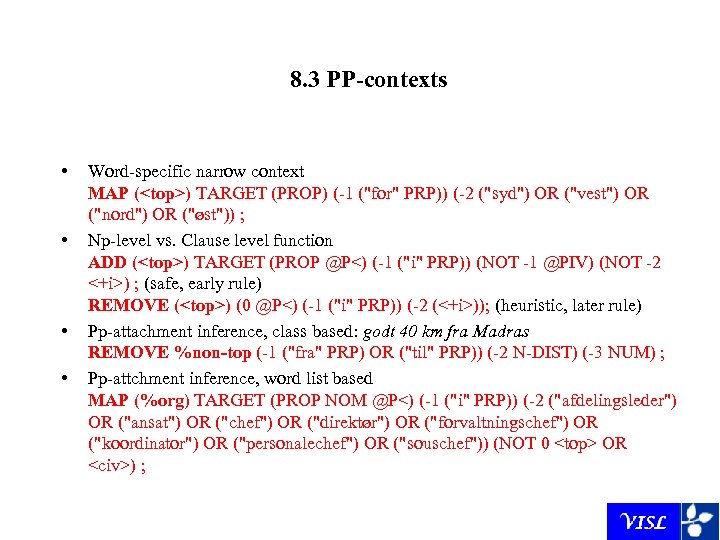

8.3 PP-contexts
• Word-specific narrow context
MAP (


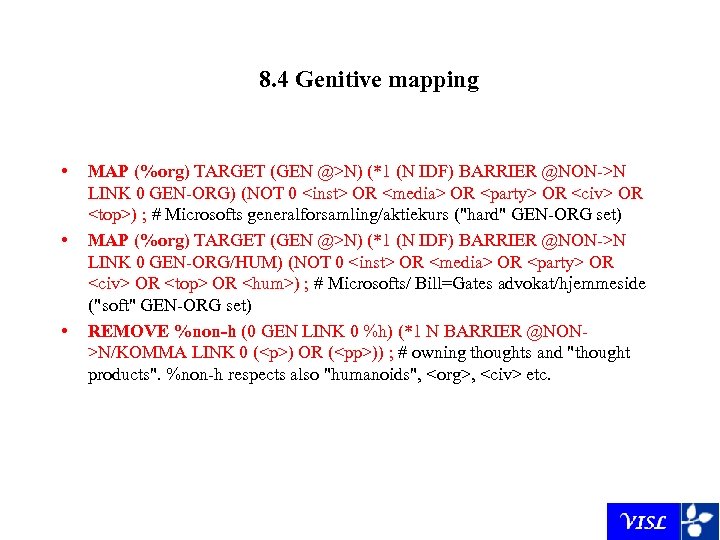

8.4 Genitive mapping
MAP (%org) TARGET (GEN @>N) (*1 (N IDF) BARRIER @NON->N LINK 0 GEN-ORG) (NOT 0


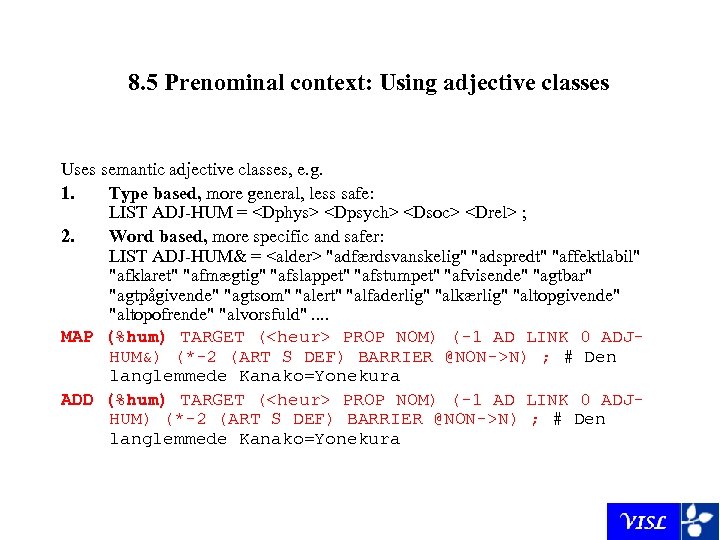

8.5 Prenominal context: Using adjective classes
Uses semantic adjective classes, e.g.
1. Type based, more general, less safe:
LIST ADJ-HUM =


Evaluation
CG-annotation for Danish news text

| | Recall | Precision | F-score |
|---|---|---|---|
| All word classes [1] | 98.6 | 98.7 | 98.65 |
| All syntactic functions | 95.4 | 94.6 | 94.9 |

[1] Verbal subcategories (present PR, past IMPF, infinitive INF, present and past participle PCP1/2) and pronoun subcategories (inflecting DET, uninflecting INDP and personal PERS) were counted as different PoS.


Performance statistics Korpus 90


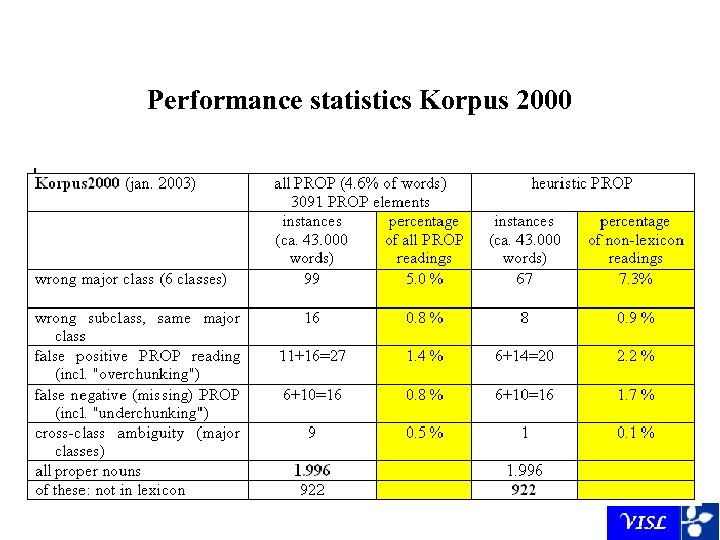

Performance statistics Korpus 2000


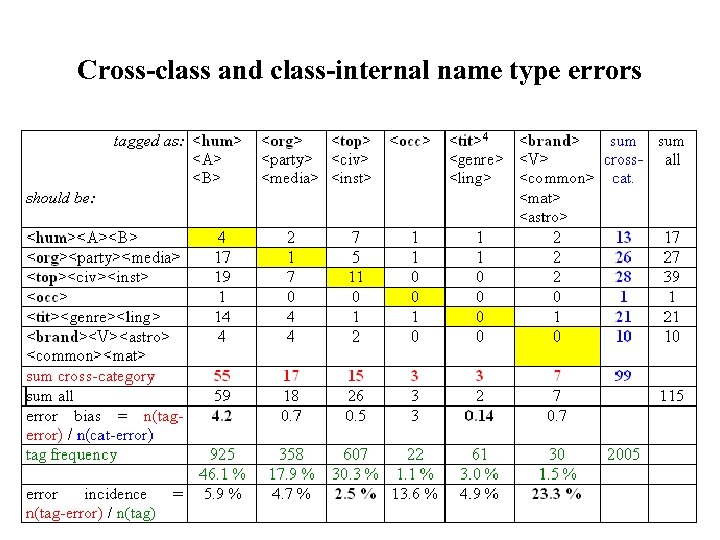

Cross-class and class-internal name type errors


Comparisons
• LTG (Mikheev et al. 1998) achieved an overall F-measure of 93.39, using hybrid techniques involving both probabilistics/HMM, name/suffix lists and sgml-manipulating rules
• MENE (Borthwick et al. 1998), maximum entropy training:
  - in-domain/same-topic F-scores of up to 92.20
  - 97.12% for a hybrid system integrating other MUC-7 systems
  - cross-topic formal test, F-scores: 84.22 (pure MENE), 92 (hybrid MENE)
  - possible weakness of trained systems: heavy training data bias?
Korpus 90/2000, which was used for the evaluation of the rule based system presented here, is a mixed-genre corpus; even its news texts are highly cross-domain/cross-topic, since sentence order has been randomized for copyright reasons.


What is it being used for?
• Enhance ordinary grammatical analysis
  - noun-disambiguation
  - semantic selection restriction fillers
• Corpus research on names
• Enhance IR-systems: e.g. question-answering


Outlook
• Future direct comparison might corroborate the intuition that a handcrafted system is less likely to have a domain/topic bias than automated learning systems with limited training data.
• Balancing strengths and weaknesses, future work should also examine to which degree automated learning / probabilistic systems can interface with or supplement Constraint Grammar based NER systems.
• For large chunks, text/discourse based memory should be used for name type disambiguation, so clear cases and a majority vote could determine the class of unknown names.
• With a larger window of analysis, anaphora resolution across sentence boundaries might help NER ("human" pronouns, definite NPs, …).
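The discourse-memory idea in the third bullet could look roughly like the Python sketch below. It is a speculative illustration of future work, not part of the system described: the function `vote_name_types`, the input format (a list of name/type pairs, with `None` for undecided mentions) and the type labels are all assumptions.

```python
from collections import Counter, defaultdict


def vote_name_types(mentions):
    """Give untyped mentions the majority type of the same name in the text.

    mentions: list of (name, type) pairs; type is None where undecided.
    """
    votes = defaultdict(Counter)
    for name, name_type in mentions:
        if name_type is not None:
            votes[name][name_type] += 1

    resolved = []
    for name, name_type in mentions:
        if name_type is None and votes[name]:
            # Clear cases elsewhere in the text decide by simple majority.
            name_type = votes[name].most_common(1)[0][0]
        resolved.append((name, name_type))
    return resolved


# Hypothetical type labels, for illustration only.
text_mentions = [("Odder", "top"), ("Odder", None), ("Odder", "top"),
                 ("Odder", "org"), ("Xyz", None)]
print(vote_name_types(text_mentions))
```

Names that never receive a clear reading anywhere in the text stay undecided, so the vote only propagates evidence and does not invent a class.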


Where to reach us: http://beta.visl.sdu.dk - http://corp.hum.sdu.dk
