6252e471e91585a1709e2ca2d9605ae6.ppt

- Количество слайдов: 35

N-Grams CSC 9010: Special Topics. Natural Language Processing. Paula Matuszek, Mary-Angela Papalaskari Spring, 2005 Based on Mc. Coy, http: //www. cis. udel. edu/~mccoy/courses/cisc 882. 03 f/lectures/lect 5 -ngrams. ppt/ CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 1

N-Grams CSC 9010: Special Topics. Natural Language Processing. Paula Matuszek, Mary-Angela Papalaskari Spring, 2005 Based on Mc. Coy, http: //www. cis. udel. edu/~mccoy/courses/cisc 882. 03 f/lectures/lect 5 -ngrams. ppt/ CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 1

Free Association Exercise • I am going to say some phrases. Write down the next word or two that occur to you. – – – – Microsoft announced a new security ____ NHL commissioner cancels rest ____ One Fish, ______ Colorless green ideas ______ Conjunction Junction, what’s _____ Oh, say, can you see, by the dawn’s ______ After I finished my homework I went _____. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 2

Free Association Exercise • I am going to say some phrases. Write down the next word or two that occur to you. – – – – Microsoft announced a new security ____ NHL commissioner cancels rest ____ One Fish, ______ Colorless green ideas ______ Conjunction Junction, what’s _____ Oh, say, can you see, by the dawn’s ______ After I finished my homework I went _____. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 2

Human Word Prediction • Clearly, at least some of us have the ability to predict future words in an utterance. • How? – Domain knowledge – Syntactic knowledge – Lexical knowledge CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 3

Human Word Prediction • Clearly, at least some of us have the ability to predict future words in an utterance. • How? – Domain knowledge – Syntactic knowledge – Lexical knowledge CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 3

Claim • A useful part of the knowledge needed to allow Word Prediction can be captured using simple statistical techniques • In particular, we'll rely on the notion of the probability of a sequence (a phrase, a sentence) CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 4

Claim • A useful part of the knowledge needed to allow Word Prediction can be captured using simple statistical techniques • In particular, we'll rely on the notion of the probability of a sequence (a phrase, a sentence) CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 4

Applications • Why do we want to predict a word, given some preceding words? – Rank the likelihood of sequences containing various alternative hypotheses, e. g. for automated speech recognition, OCRing. Theatre owners say popcorn/unicorn sales have doubled. . . – Assess the likelihood/goodness of a sentence, e. g. for text generation or machine translation Como mucho pescado. At the most fished. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 5

Applications • Why do we want to predict a word, given some preceding words? – Rank the likelihood of sequences containing various alternative hypotheses, e. g. for automated speech recognition, OCRing. Theatre owners say popcorn/unicorn sales have doubled. . . – Assess the likelihood/goodness of a sentence, e. g. for text generation or machine translation Como mucho pescado. At the most fished. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 5

Real Word Spelling Errors • They are leaving in about fifteen minuets to go to her house. • The study was conducted mainly be John Black. • The design an construction of the system will take more than a year. • Hopefully, all with continue smoothly in my absence. • Can they lave him my messages? • I need to notified the bank of…. • He is trying to fine out. Example from Dorr, http: //www. umiacs. umd. edu/~bonnie/courses/cmsc 723 -04/lecture-notes/Lecture 5. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 6

Real Word Spelling Errors • They are leaving in about fifteen minuets to go to her house. • The study was conducted mainly be John Black. • The design an construction of the system will take more than a year. • Hopefully, all with continue smoothly in my absence. • Can they lave him my messages? • I need to notified the bank of…. • He is trying to fine out. Example from Dorr, http: //www. umiacs. umd. edu/~bonnie/courses/cmsc 723 -04/lecture-notes/Lecture 5. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 6

Language Modeling • Fundamental tool in NLP • Main idea: – Some words are more likely than others to follow each other – You can predict fairly accurately that likelihood. • In other words, you can build a language model Adapted from Hearst, http: //www. sims. berkeley. edu/courses/is 290 -2/f 04/lectures/lecture 4. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 7

Language Modeling • Fundamental tool in NLP • Main idea: – Some words are more likely than others to follow each other – You can predict fairly accurately that likelihood. • In other words, you can build a language model Adapted from Hearst, http: //www. sims. berkeley. edu/courses/is 290 -2/f 04/lectures/lecture 4. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 7

N-Grams • N-Grams are sequences of tokens. • The N stands for how many terms are used – Unigram: 1 term – Bigram: 2 terms – Trigrams: 3 terms • You can use different kinds of tokens – Character based n-grams – Word-based n-grams – POS-based n-grams • N-Grams give us some idea of the context around the token we are looking at. Adapted from Hearst, http: //www. sims. berkeley. edu/courses/is 290 -2/f 04/lectures/lecture 4. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 8

N-Grams • N-Grams are sequences of tokens. • The N stands for how many terms are used – Unigram: 1 term – Bigram: 2 terms – Trigrams: 3 terms • You can use different kinds of tokens – Character based n-grams – Word-based n-grams – POS-based n-grams • N-Grams give us some idea of the context around the token we are looking at. Adapted from Hearst, http: //www. sims. berkeley. edu/courses/is 290 -2/f 04/lectures/lecture 4. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 8

N-Gram Models of Language • A language model is a model that lets us compute the probability, or likelihood, of a sentence S, P(S). • N-Gram models use the previous N-1 words in a sequence to predict the next word – unigrams, bigrams, trigrams, … • How do we construct or train these language models? – Count frequencies in very large corpora – Determine probabilities using Markov models, similar to POS tagging. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 9

N-Gram Models of Language • A language model is a model that lets us compute the probability, or likelihood, of a sentence S, P(S). • N-Gram models use the previous N-1 words in a sequence to predict the next word – unigrams, bigrams, trigrams, … • How do we construct or train these language models? – Count frequencies in very large corpora – Determine probabilities using Markov models, similar to POS tagging. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 9

Counting Words in Corpora • What is a word? – e. g. , are cat and cats the same word? – September and Sept? – zero and oh? – Is _ a word? * ? ‘(‘ ? – How many words are there in don’t ? Gonna ? – In Japanese and Chinese text -- how do we identify a word? CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 10

Counting Words in Corpora • What is a word? – e. g. , are cat and cats the same word? – September and Sept? – zero and oh? – Is _ a word? * ? ‘(‘ ? – How many words are there in don’t ? Gonna ? – In Japanese and Chinese text -- how do we identify a word? CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 10

Terminology • Sentence: unit of written language • Utterance: unit of spoken language • Word Form: the inflected form that appears in the corpus • Lemma: an abstract form, shared by word forms having the same stem, part of speech, and word sense • Types: number of distinct words in a corpus (vocabulary size) • Tokens: total number of words CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 11

Terminology • Sentence: unit of written language • Utterance: unit of spoken language • Word Form: the inflected form that appears in the corpus • Lemma: an abstract form, shared by word forms having the same stem, part of speech, and word sense • Types: number of distinct words in a corpus (vocabulary size) • Tokens: total number of words CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 11

Simple N-Grams • Assume a language has V word types in its lexicon, how likely is word x to follow word y? – Simplest model of word probability: 1/ V – Alternative 1: estimate likelihood of x occurring in new text based on its general frequency of occurrence estimated from a corpus (unigram probability) popcorn is more likely to occur than unicorn – Alternative 2: condition the likelihood of x occurring in the context of previous words (bigrams, trigrams, …) mythical unicorn is more likely than mythical popcorn CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 12

Simple N-Grams • Assume a language has V word types in its lexicon, how likely is word x to follow word y? – Simplest model of word probability: 1/ V – Alternative 1: estimate likelihood of x occurring in new text based on its general frequency of occurrence estimated from a corpus (unigram probability) popcorn is more likely to occur than unicorn – Alternative 2: condition the likelihood of x occurring in the context of previous words (bigrams, trigrams, …) mythical unicorn is more likely than mythical popcorn CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 12

Computing the Probability of a Word Sequence • Compute the product of component conditional probabilities? – P(the mythical unicorn) = P(the) P(mythical|the) P(unicorn|the mythical) • The longer the sequence, the less likely we are to find it in a training corpus P(Most biologists and folklore specialists believe that in fact the mythical unicorn horns derived from the narwhal) • Solution: approximate using n-grams CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 13

Computing the Probability of a Word Sequence • Compute the product of component conditional probabilities? – P(the mythical unicorn) = P(the) P(mythical|the) P(unicorn|the mythical) • The longer the sequence, the less likely we are to find it in a training corpus P(Most biologists and folklore specialists believe that in fact the mythical unicorn horns derived from the narwhal) • Solution: approximate using n-grams CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 13

Bigram Model • Approximate by – P(unicorn|the mythical) by P(unicorn|mythical) • Markov assumption: the probability of a word depends only on the probability of a limited history • Generalization: the probability of a word depends only on the probability of the n previous words – Trigrams, 4 -grams, … – The higher n is, the more data needed to train – The higher n is, the sparser the matrix. • Leads us to backoff models CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 14

Bigram Model • Approximate by – P(unicorn|the mythical) by P(unicorn|mythical) • Markov assumption: the probability of a word depends only on the probability of a limited history • Generalization: the probability of a word depends only on the probability of the n previous words – Trigrams, 4 -grams, … – The higher n is, the more data needed to train – The higher n is, the sparser the matrix. • Leads us to backoff models CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 14

Using N-Grams • For N-gram models – – P(wn-1, wn) = P(wn | wn-1) P(wn-1) – By the Chain Rule we can decompose a joint probability, e. g. P(w 1, w 2, w 3) P(w 1, w 2, . . . , wn) = P(w 1|w 2, w 3, . . . , wn) P(w 2|w 3, . . . , wn) … P(wn 1|wn) P(wn) For bigrams then, the probability of a sequence is just the product of the conditional probabilities of its bigrams P(the, mythical, unicorn) = P(unicorn|mythical) P(mythical|the) P(the|

Using N-Grams • For N-gram models – – P(wn-1, wn) = P(wn | wn-1) P(wn-1) – By the Chain Rule we can decompose a joint probability, e. g. P(w 1, w 2, w 3) P(w 1, w 2, . . . , wn) = P(w 1|w 2, w 3, . . . , wn) P(w 2|w 3, . . . , wn) … P(wn 1|wn) P(wn) For bigrams then, the probability of a sequence is just the product of the conditional probabilities of its bigrams P(the, mythical, unicorn) = P(unicorn|mythical) P(mythical|the) P(the|

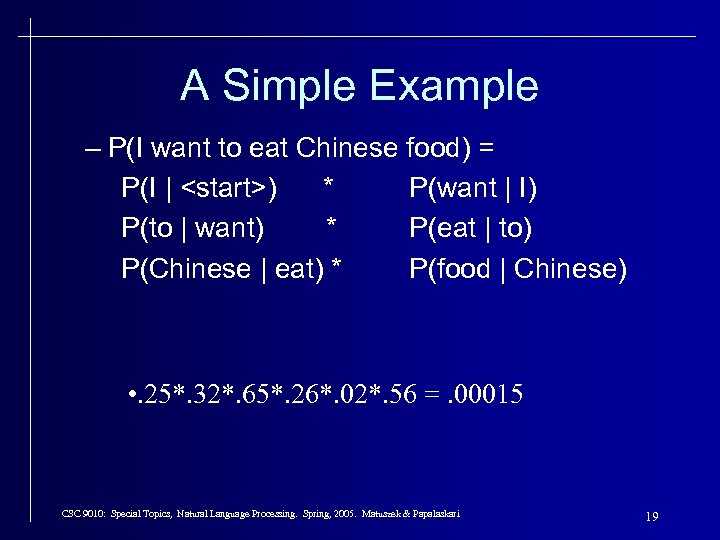

A Simple Example – P(I want to eat Chinese food) = P(I |

A Simple Example – P(I want to eat Chinese food) = P(I |

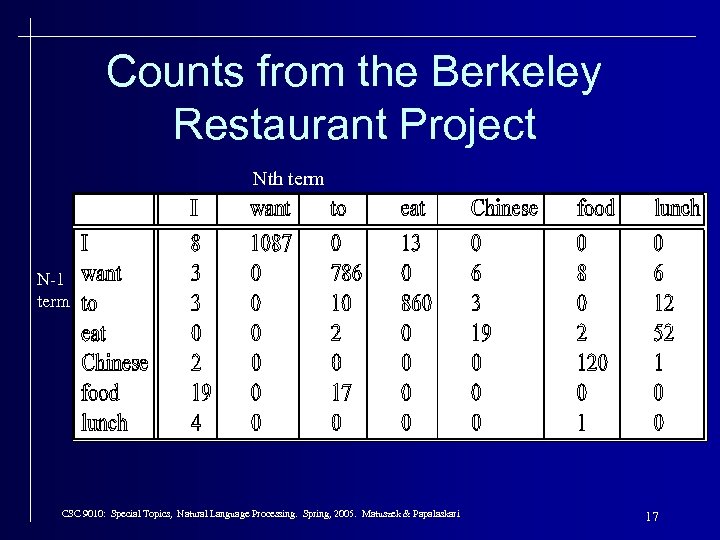

Counts from the Berkeley Restaurant Project Nth term N-1 term CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 17

Counts from the Berkeley Restaurant Project Nth term N-1 term CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 17

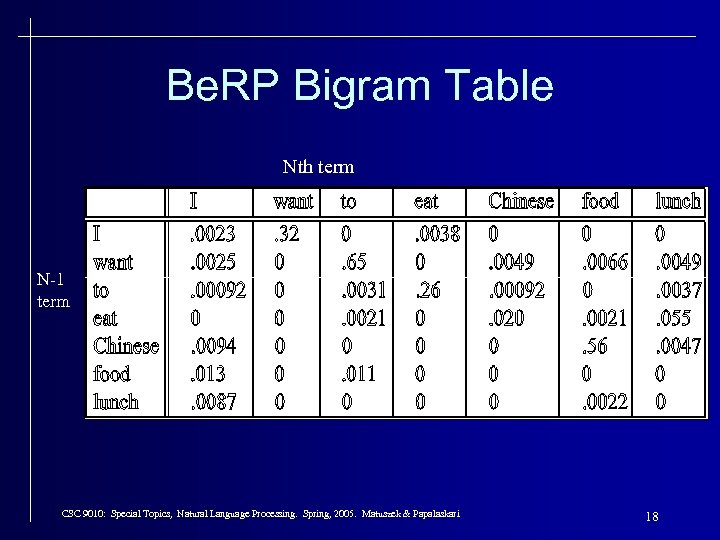

Be. RP Bigram Table Nth term N-1 term CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 18

Be. RP Bigram Table Nth term N-1 term CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 18

A Simple Example – P(I want to eat Chinese food) = P(I |

A Simple Example – P(I want to eat Chinese food) = P(I |

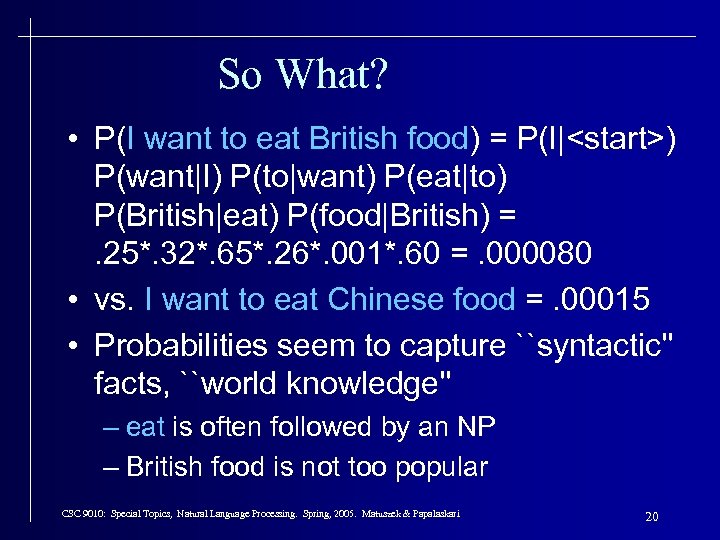

So What? • P(I want to eat British food) = P(I|

So What? • P(I want to eat British food) = P(I|

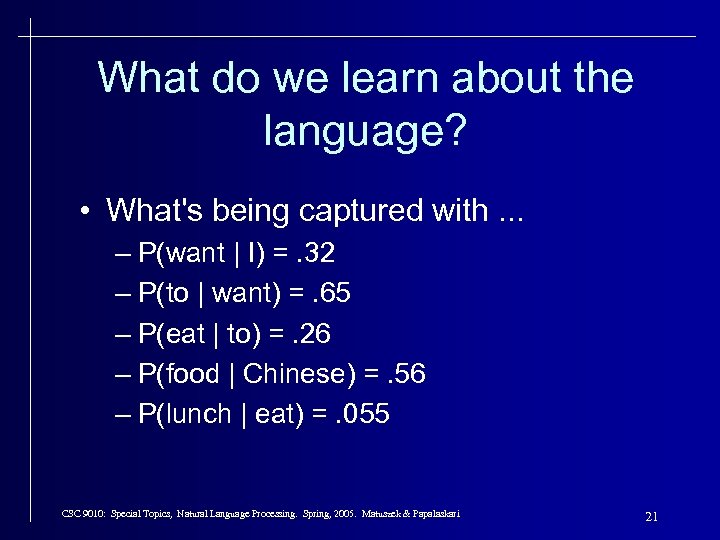

What do we learn about the language? • What's being captured with. . . – P(want | I) =. 32 – P(to | want) =. 65 – P(eat | to) =. 26 – P(food | Chinese) =. 56 – P(lunch | eat) =. 055 CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 21

What do we learn about the language? • What's being captured with. . . – P(want | I) =. 32 – P(to | want) =. 65 – P(eat | to) =. 26 – P(food | Chinese) =. 56 – P(lunch | eat) =. 055 CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 21

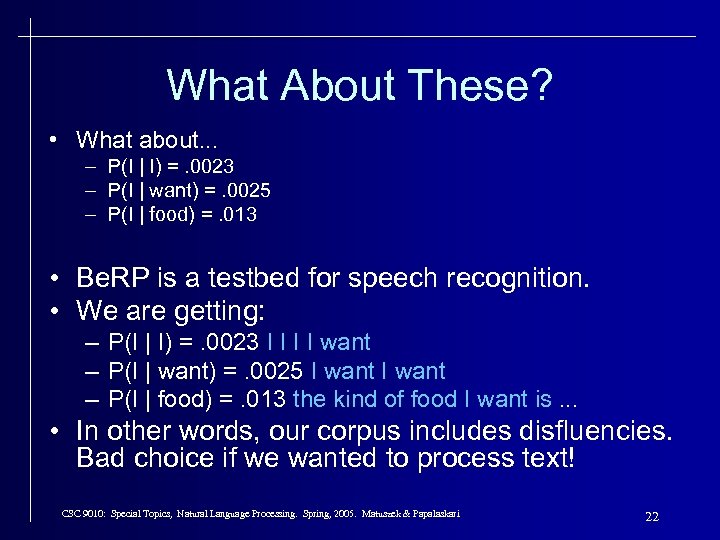

What About These? • What about. . . – P(I | I) =. 0023 – P(I | want) =. 0025 – P(I | food) =. 013 • Be. RP is a testbed for speech recognition. • We are getting: – P(I | I) =. 0023 I I want – P(I | want) =. 0025 I want – P(I | food) =. 013 the kind of food I want is. . . • In other words, our corpus includes disfluencies. Bad choice if we wanted to process text! CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 22

What About These? • What about. . . – P(I | I) =. 0023 – P(I | want) =. 0025 – P(I | food) =. 013 • Be. RP is a testbed for speech recognition. • We are getting: – P(I | I) =. 0023 I I want – P(I | want) =. 0025 I want – P(I | food) =. 013 the kind of food I want is. . . • In other words, our corpus includes disfluencies. Bad choice if we wanted to process text! CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 22

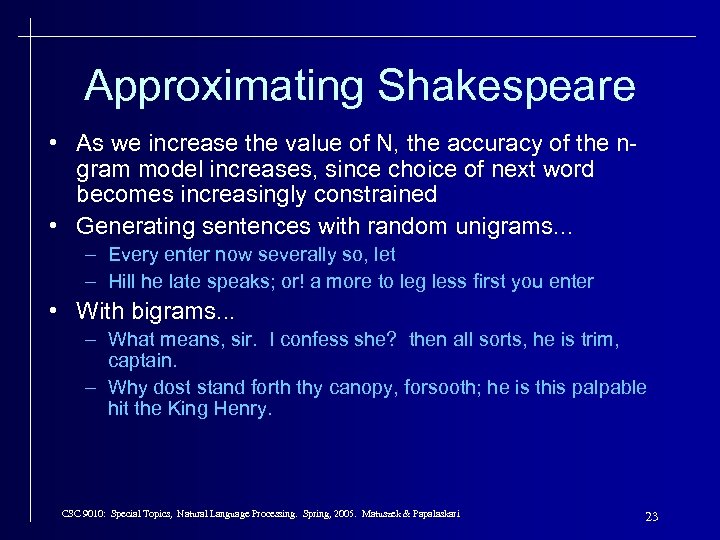

Approximating Shakespeare • As we increase the value of N, the accuracy of the ngram model increases, since choice of next word becomes increasingly constrained • Generating sentences with random unigrams. . . – Every enter now severally so, let – Hill he late speaks; or! a more to leg less first you enter • With bigrams. . . – What means, sir. I confess she? then all sorts, he is trim, captain. – Why dost stand forth thy canopy, forsooth; he is this palpable hit the King Henry. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 23

Approximating Shakespeare • As we increase the value of N, the accuracy of the ngram model increases, since choice of next word becomes increasingly constrained • Generating sentences with random unigrams. . . – Every enter now severally so, let – Hill he late speaks; or! a more to leg less first you enter • With bigrams. . . – What means, sir. I confess she? then all sorts, he is trim, captain. – Why dost stand forth thy canopy, forsooth; he is this palpable hit the King Henry. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 23

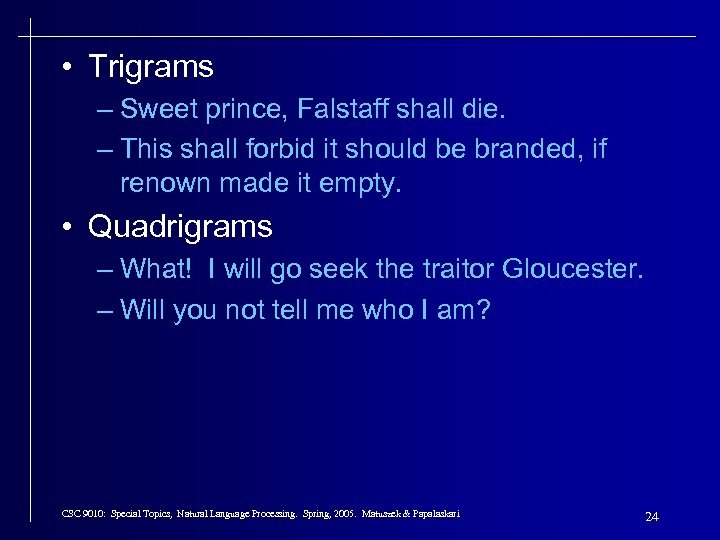

• Trigrams – Sweet prince, Falstaff shall die. – This shall forbid it should be branded, if renown made it empty. • Quadrigrams – What! I will go seek the traitor Gloucester. – Will you not tell me who I am? CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 24

• Trigrams – Sweet prince, Falstaff shall die. – This shall forbid it should be branded, if renown made it empty. • Quadrigrams – What! I will go seek the traitor Gloucester. – Will you not tell me who I am? CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 24

• There are 884, 647 tokens, with 29, 066 word form types, in about a one million word Shakespeare corpus • Shakespeare produced 300, 000 bigram types out of 844 million possible bigrams: so, 99. 96% of the possible bigrams were never seen (have zero entries in the table) • Quadrigrams worse: What's coming out looks like Shakespeare because it is Shakespeare CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 25

• There are 884, 647 tokens, with 29, 066 word form types, in about a one million word Shakespeare corpus • Shakespeare produced 300, 000 bigram types out of 844 million possible bigrams: so, 99. 96% of the possible bigrams were never seen (have zero entries in the table) • Quadrigrams worse: What's coming out looks like Shakespeare because it is Shakespeare CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 25

N-Gram Training Sensitivity • If we repeated the Shakespeare experiment but trained our n-grams on a Wall Street Journal corpus, what would we get? • This has major implications for corpus selection or design CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 26

N-Gram Training Sensitivity • If we repeated the Shakespeare experiment but trained our n-grams on a Wall Street Journal corpus, what would we get? • This has major implications for corpus selection or design CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 26

Some Useful Empirical Observations • A few events occur with high frequency • Many events occur with low frequency • You can quickly collect statistics on the high frequency events • You might have to wait an arbitrarily long time to get valid statistics on low frequency events • Some of the zeroes in the table are really zeros But others are simply low frequency events you haven't seen yet. How to address? CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 27

Some Useful Empirical Observations • A few events occur with high frequency • Many events occur with low frequency • You can quickly collect statistics on the high frequency events • You might have to wait an arbitrarily long time to get valid statistics on low frequency events • Some of the zeroes in the table are really zeros But others are simply low frequency events you haven't seen yet. How to address? CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 27

Zipf’s Law George Kingsley Zipf (1902 -1950) noted that for many frequency distributions, the n-th largest frequency is proportional to a negative power of the rank order n. Let t range over the set of unique events. Let f(t) be the frequency of t and let r(t) be its rank. Then: t r(t) c * f(t)-b for some constants b and c. • Applies to a surprising range of things. • Including frequencies in corpora CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 28

Zipf’s Law George Kingsley Zipf (1902 -1950) noted that for many frequency distributions, the n-th largest frequency is proportional to a negative power of the rank order n. Let t range over the set of unique events. Let f(t) be the frequency of t and let r(t) be its rank. Then: t r(t) c * f(t)-b for some constants b and c. • Applies to a surprising range of things. • Including frequencies in corpora CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 28

Smoothing Techniques • Every n-gram training matrix is sparse, even for very large corpora (Zipf’s law) • Solution: estimate the likelihood of unseen n-grams • Problems: how do you adjust the rest of the corpus to accommodate these ‘phantom’ n-grams? • Methods to handle this are called smoothing. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 29

Smoothing Techniques • Every n-gram training matrix is sparse, even for very large corpora (Zipf’s law) • Solution: estimate the likelihood of unseen n-grams • Problems: how do you adjust the rest of the corpus to accommodate these ‘phantom’ n-grams? • Methods to handle this are called smoothing. CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 29

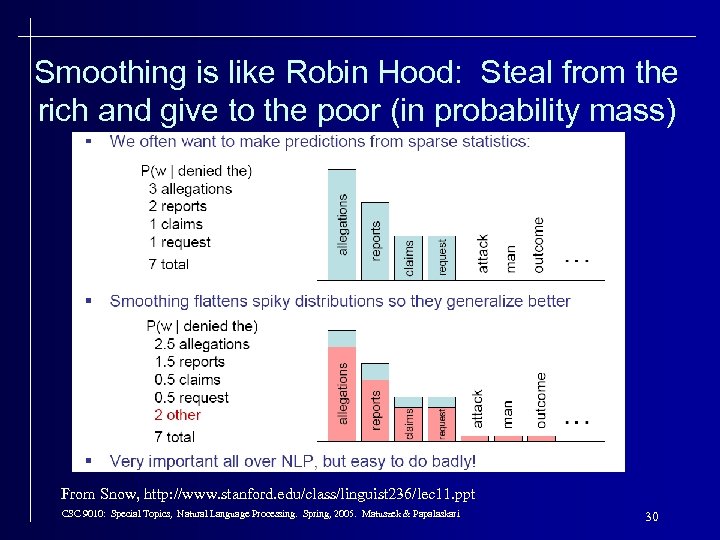

Smoothing is like Robin Hood: Steal from the rich and give to the poor (in probability mass) From Snow, http: //www. stanford. edu/class/linguist 236/lec 11. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 30

Smoothing is like Robin Hood: Steal from the rich and give to the poor (in probability mass) From Snow, http: //www. stanford. edu/class/linguist 236/lec 11. ppt CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 30

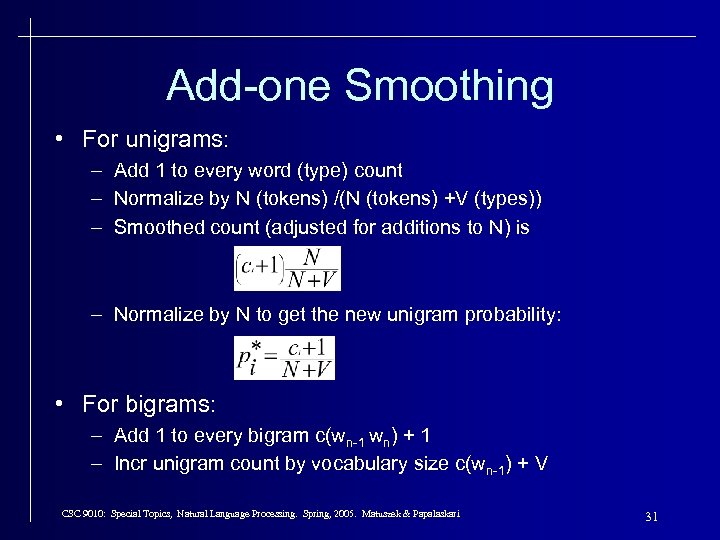

Add-one Smoothing • For unigrams: – Add 1 to every word (type) count – Normalize by N (tokens) /(N (tokens) +V (types)) – Smoothed count (adjusted for additions to N) is – Normalize by N to get the new unigram probability: • For bigrams: – Add 1 to every bigram c(wn-1 wn) + 1 – Incr unigram count by vocabulary size c(wn-1) + V CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 31

Add-one Smoothing • For unigrams: – Add 1 to every word (type) count – Normalize by N (tokens) /(N (tokens) +V (types)) – Smoothed count (adjusted for additions to N) is – Normalize by N to get the new unigram probability: • For bigrams: – Add 1 to every bigram c(wn-1 wn) + 1 – Incr unigram count by vocabulary size c(wn-1) + V CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 31

And “Take from the “Rich” – Discount: ratio of new counts to old (e. g. add-one smoothing changes the Be. RP bigram (to|want) from 786 to 331 (dc=. 42) and p(to|want) from. 65 to. 28) – But this changes counts drastically: • too much weight given to unseen n-grams • in practice, unsmoothed bigrams often work better! CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 32

And “Take from the “Rich” – Discount: ratio of new counts to old (e. g. add-one smoothing changes the Be. RP bigram (to|want) from 786 to 331 (dc=. 42) and p(to|want) from. 65 to. 28) – But this changes counts drastically: • too much weight given to unseen n-grams • in practice, unsmoothed bigrams often work better! CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 32

Witten-Bell Discounting • A zero n-gram is just an n-gram you haven’t seen yet…but every n-gram in the corpus was unseen once…so. . . – How many times did we see an n-gram for the first time? Once for each n-gram type (T) – Est. total probability of unseen bigrams as – View training corpus as series of events, one for each token (N) and one for each new type (T) • We can divide the probability mass equally among unseen bigrams…. or we can condition the probability of an unseen bigram on the first word of the bigram • Discount values for Witten-Bell are much more reasonable than Add-One CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 33

Witten-Bell Discounting • A zero n-gram is just an n-gram you haven’t seen yet…but every n-gram in the corpus was unseen once…so. . . – How many times did we see an n-gram for the first time? Once for each n-gram type (T) – Est. total probability of unseen bigrams as – View training corpus as series of events, one for each token (N) and one for each new type (T) • We can divide the probability mass equally among unseen bigrams…. or we can condition the probability of an unseen bigram on the first word of the bigram • Discount values for Witten-Bell are much more reasonable than Add-One CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 33

Backoff methods • For e. g. a trigram model – Compute unigram, bigram and trigram probabilities – In use: • Where trigram unavailable back off to bigram if available, o. w. unigram probability • E. g An omnivorous unicorn CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 34

Backoff methods • For e. g. a trigram model – Compute unigram, bigram and trigram probabilities – In use: • Where trigram unavailable back off to bigram if available, o. w. unigram probability • E. g An omnivorous unicorn CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 34

Summary • N-gram probabilities can be used to estimate the likelihood – Of a word occurring in a context (N-1) – Of a sentence occurring at all • Smoothing techniques deal with problems of unseen words in a corpus • N-grams are useful in a wide variety of NLP tasks CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 35

Summary • N-gram probabilities can be used to estimate the likelihood – Of a word occurring in a context (N-1) – Of a sentence occurring at all • Smoothing techniques deal with problems of unseen words in a corpus • N-grams are useful in a wide variety of NLP tasks CSC 9010: Special Topics, Natural Language Processing. Spring, 2005. Matuszek & Papalaskari 35