- Number of slides: 89

Multimedia Retrieval

Arjen P. de Vries (arjen@acm.org), Centrum voor Wiskunde en Informatica

(Note: a lot of input from Thijs Westerveld)

Outline

- Overview Indexing Multimedia
- Generative Models & MMIR
  - Probabilistic Retrieval
  - Language models, GMMs
- Experiments
  - Corel experiments

Indexing Multimedia

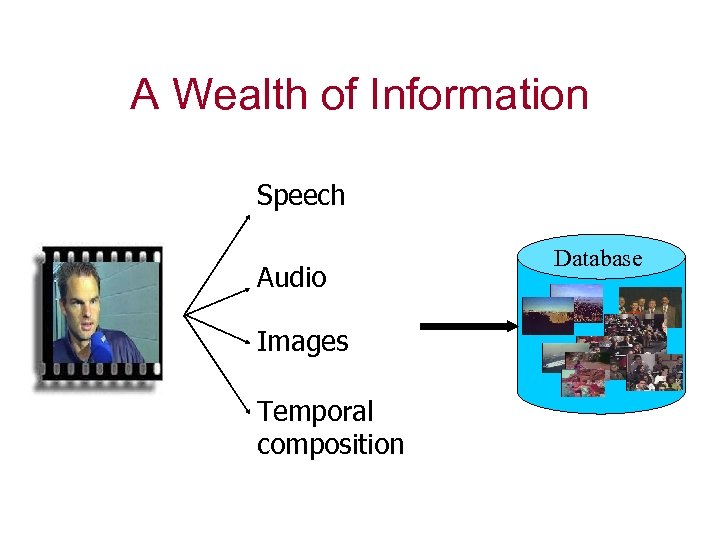

A Wealth of Information (diagram labels: Speech, Audio, Images, Temporal composition, Database)

Associated Information (diagram labels: gender, name, country, Player, Profile, history, Biography, id, picture)

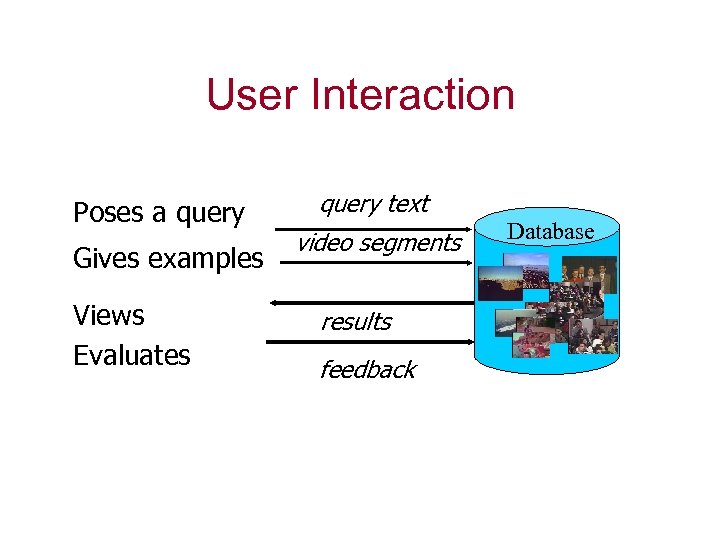

User Interaction (diagram labels: Poses a query, Gives examples, Views, Evaluates; query text, video segments, results, feedback; Database)

Indexing Multimedia

- Manually added descriptions
  - ‘Metadata’
- Analysis of associated data
  - Speech, captions, OCR, auto-cues, …
- Content-based retrieval
  - Approximate retrieval
  - Domain-specific techniques

Limitations of Metadata

- Vocabulary problem
  - Dark vs. somber
- Different people describe different aspects
  - Dark vs. evening

Limitations of Metadata

- Encoding Specificity Problem
  - A single person describes different aspects in different situations
- Many aspects of multimedia simply cannot be expressed unambiguously
  - Processes in left (analytic, verbal) vs. right brain (aesthetic, synthetic, nonverbal)

Approximate Retrieval

- Based on similarity
  - Find all objects that are similar to this one
  - Distance function
  - Representations capture some (syntactic) meaning of the object
- ‘Query by Example’ paradigm
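
Query by example can be sketched as ranking a collection by distance to the query's feature vector. The snippet below is a minimal illustration, not the system from the talk: the Euclidean distance, the three-bin histograms and the image names are all assumptions made for the example.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def query_by_example(query_vec, collection, distance=euclidean):
    """Return object ids ordered from most to least similar to the query."""
    return sorted(collection, key=lambda oid: distance(query_vec, collection[oid]))


# Hypothetical 3-bin colour histograms (made-up values).
collection = {
    "sunset": [0.7, 0.2, 0.1],
    "forest": [0.1, 0.8, 0.1],
    "beach": [0.4, 0.3, 0.3],
}

ranking = query_by_example([0.6, 0.2, 0.2], collection)
print(ranking)
```

Swapping in another distance function changes the ranking without touching the rest of the pipeline, which is why the choice of representation and distance matters so much here.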

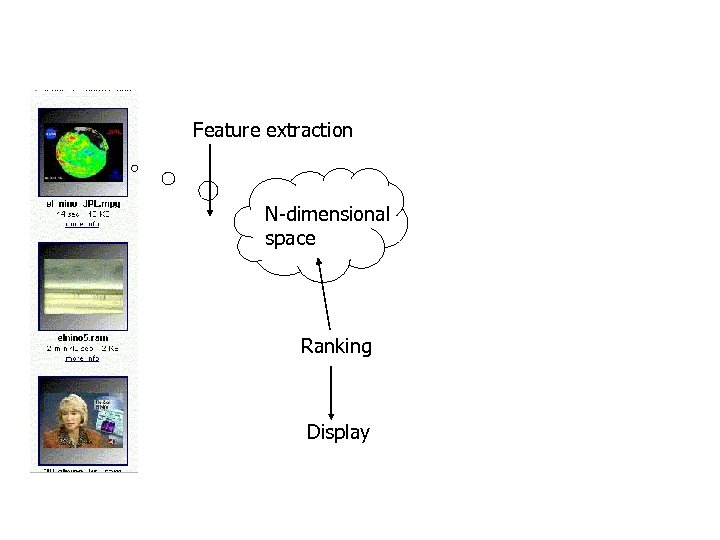

Diagram labels: Feature extraction, N-dimensional space, Ranking, Display

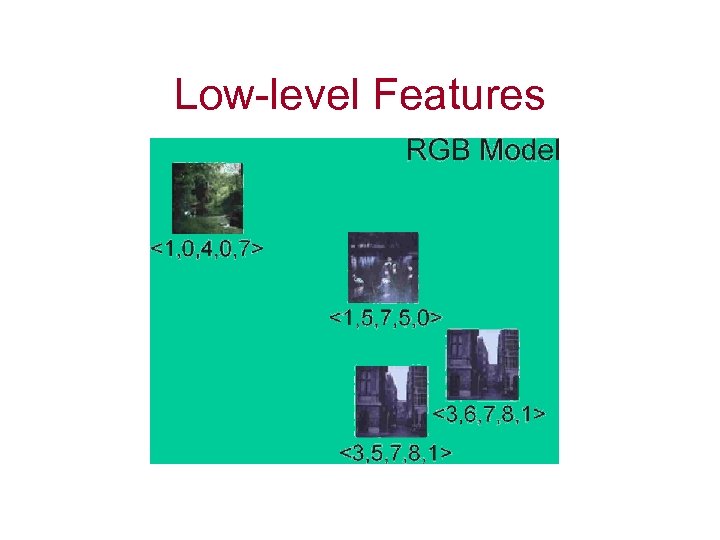

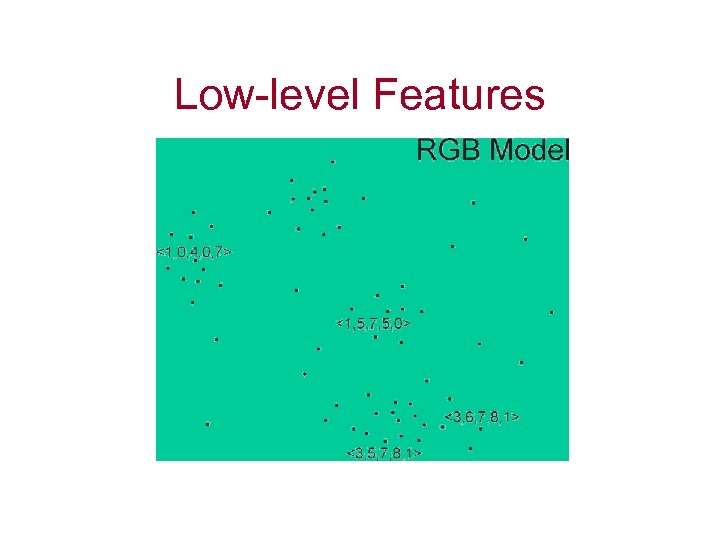

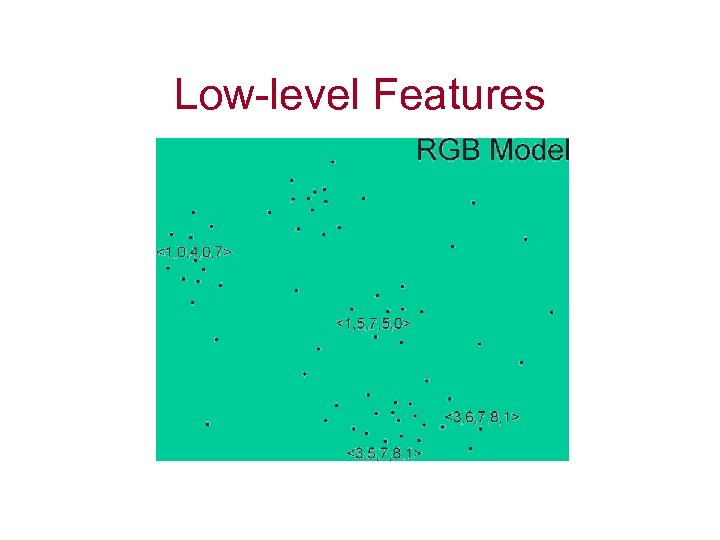

Low-level Features

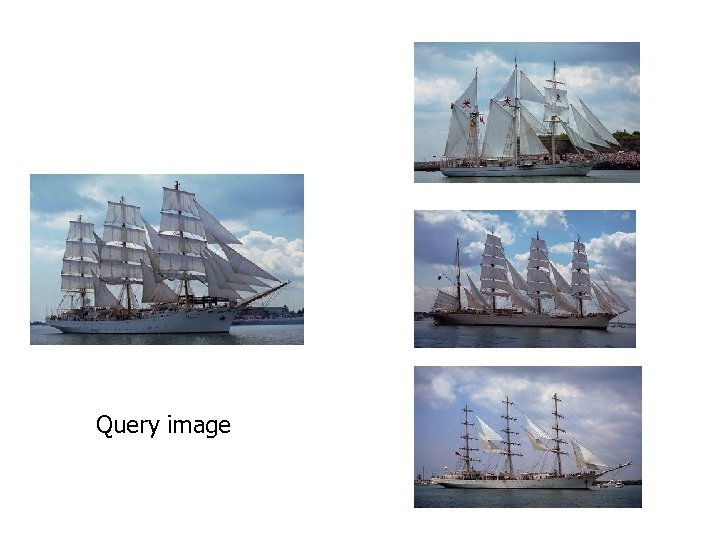

Query image

So, … Retrieval?!

‘IR is about satisfying vague information needs provided by users (imprecisely specified in ambiguous natural language) by satisfying them approximately against information provided by authors (specified in the same ambiguous natural language).’ (Smeaton)

No ‘Exact’ Science!

- Evaluation is not done analytically, but experimentally
  - real users (specifying requests)
  - test collections (real document collections)
  - benchmarks (TREC: Text REtrieval Conference)
  - Precision
  - Recall
  - …
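
Precision and recall, the two measures named above, can be computed from a retrieved set and a set of relevance judgements. This is a small sketch using the standard set-based definitions; the document ids are hypothetical.

```python
def precision_recall(retrieved, relevant):
    """Set-based precision and recall for one query."""
    retrieved_set = set(retrieved)
    relevant_set = set(relevant)
    hits = len(retrieved_set & relevant_set)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Hypothetical run: four documents returned, three judged relevant overall.
p, r = precision_recall(["d1", "d2", "d3", "d4"], ["d1", "d3", "d7"])
print(p, r)
```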

Known Item

Query

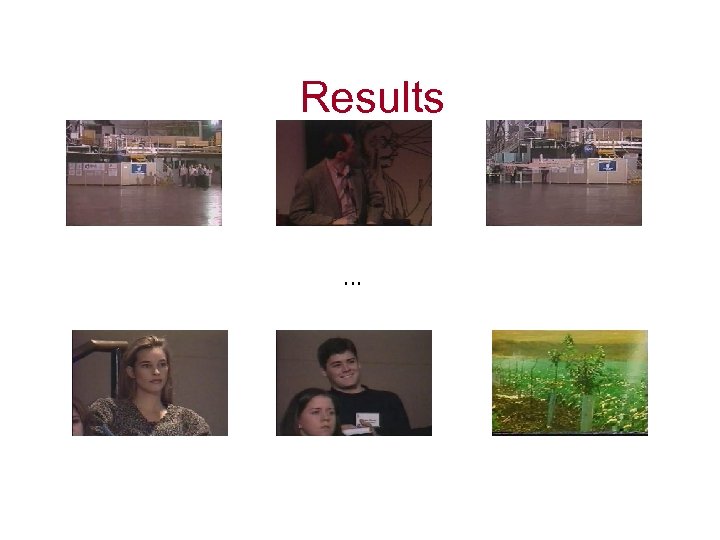

Results …

Query

Results …

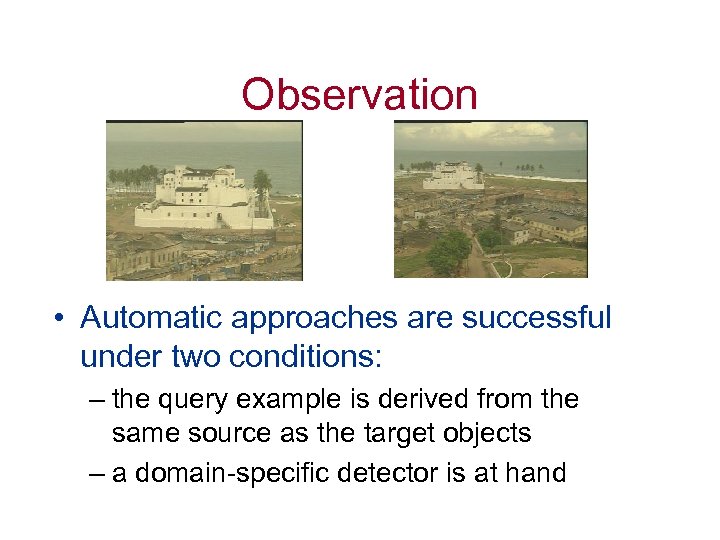

Observation

- Automatic approaches are successful under two conditions:
  - the query example is derived from the same source as the target objects
  - a domain-specific detector is at hand

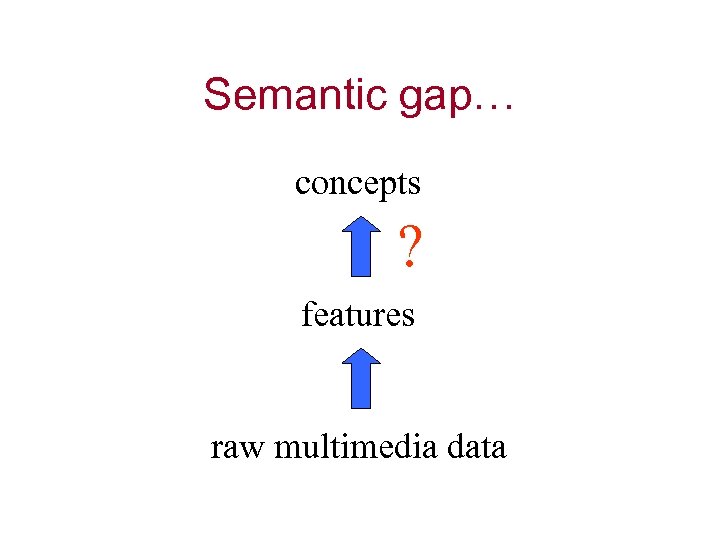

Semantic gap… (diagram labels: concepts, ?, features, raw multimedia data)

1. Generic Detectors

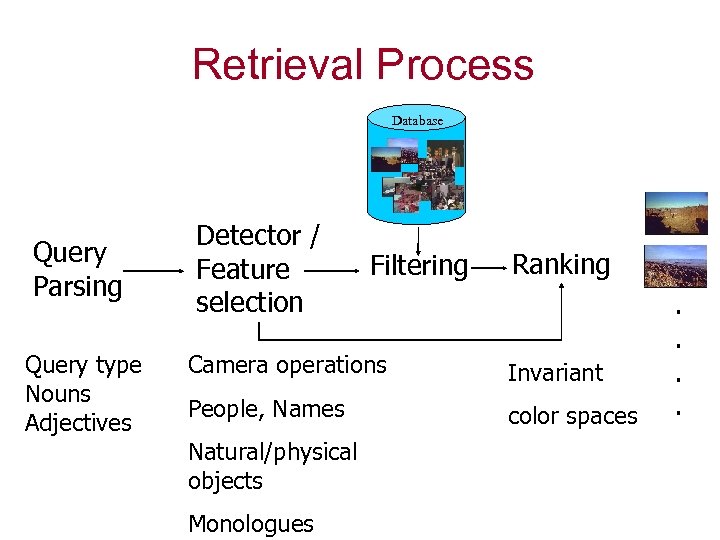

Retrieval Process (diagram labels: Database; Query Parsing; Query type, Nouns, Adjectives; Detector / Feature selection; Filtering; Ranking; Camera operations; People, Names; Invariant color spaces; Natural/physical objects; Monologues; …)

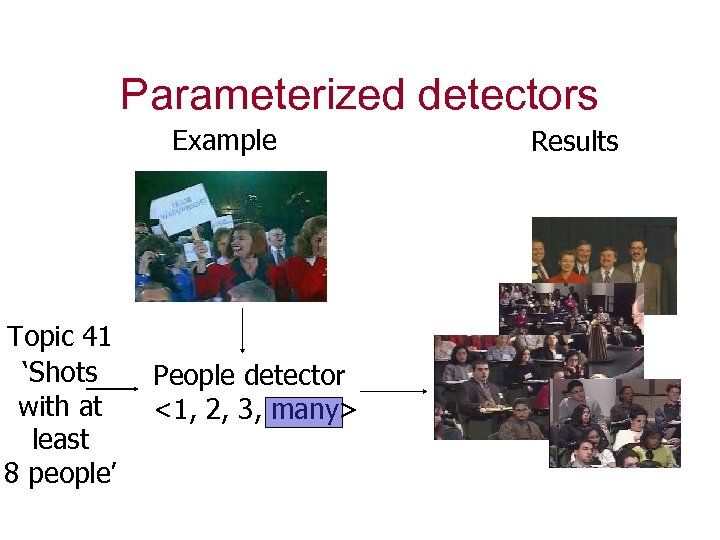

Parameterized detectors

- Example: Topic 41, ‘Shots with at least 8 people’
- People detector <1, 2, 3, many>
- Results

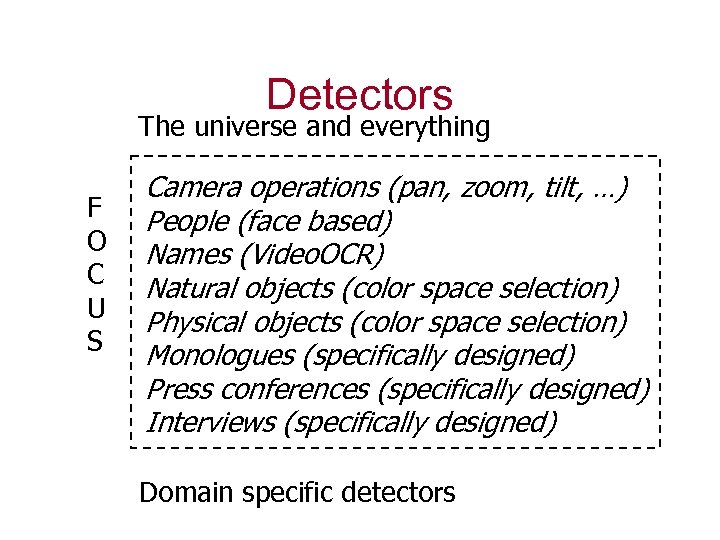

Detectors (diagram labels: The universe and everything; FOCUS; Domain-specific detectors)

- Camera operations (pan, zoom, tilt, …)
- People (face based)
- Names (VideoOCR)
- Natural objects (color space selection)
- Physical objects (color space selection)
- Monologues (specifically designed)
- Press conferences (specifically designed)
- Interviews (specifically designed)

2. Domain knowledge

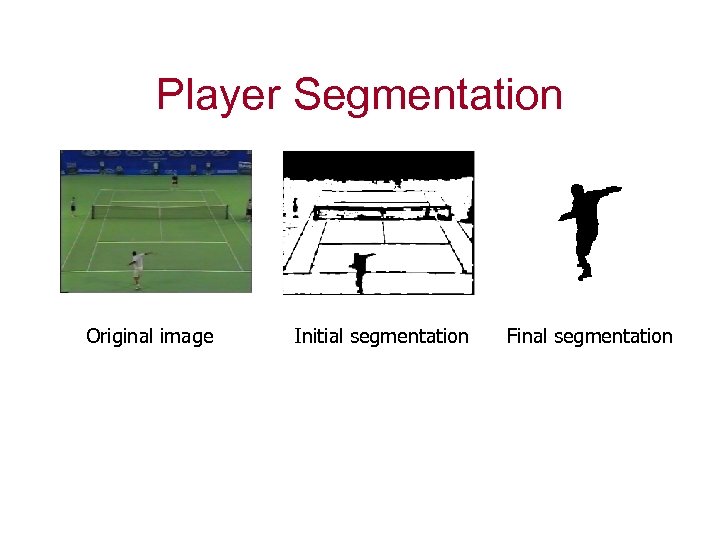

Player Segmentation (figure panels: Original image, Initial segmentation, Final segmentation)

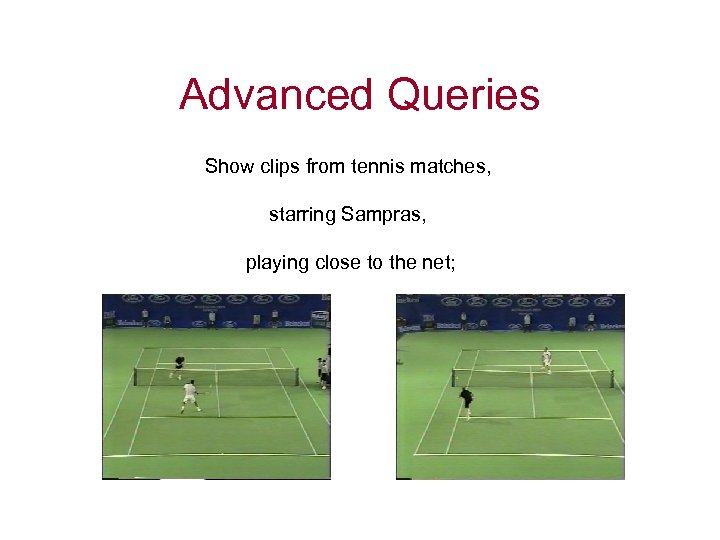

Advanced Queries Show clips from tennis matches, starring Sampras, playing close to the net;

3. Get to know your users

Mirror Approach

- Gather User’s Knowledge
  - Introduce semi-automatic processes for selection and combination of feature models
- Local Information
  - Relevance feedback from a user
- Global Information
  - Thesauri constructed from all users

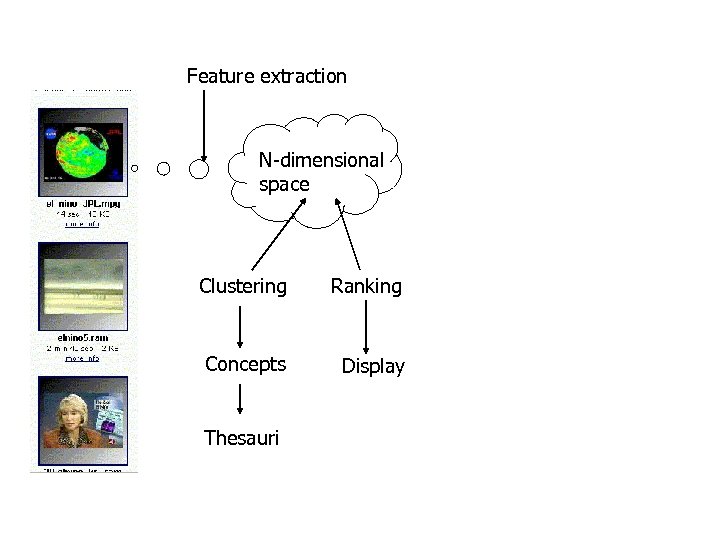

Diagram labels: Feature extraction, N-dimensional space, Clustering, Ranking, Concepts, Display, Thesauri

Low-level Features

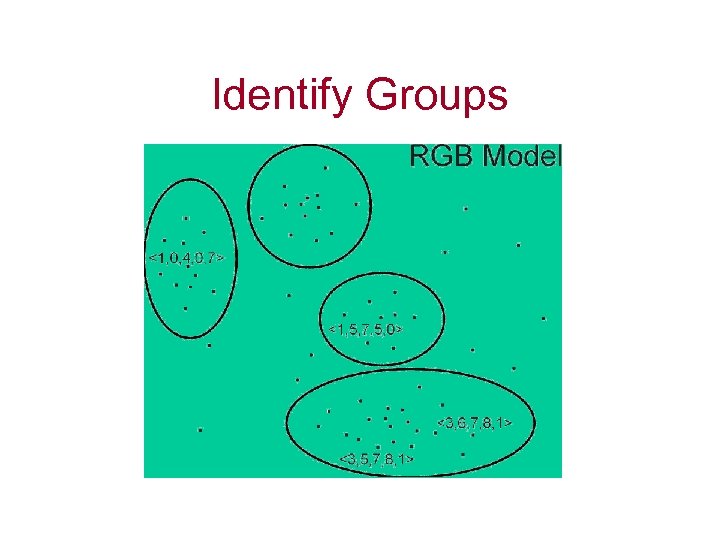

Identify Groups

Representation

- Groups of feature vectors are conceptually equivalent to words in text retrieval
- So, techniques from text retrieval can now be applied to multimedia data as if these were text!
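
One way to picture the analogy between clusters and words: map every feature vector to its nearest cluster centre and count the cluster ids, which gives an image a bag of ‘visual words’. The sketch below assumes the centroids already exist; the centroid names and coordinates are hypothetical, and the talk does not specify this particular assignment rule.

```python
from collections import Counter


def nearest_cluster(vec, centroids):
    """Name of the centroid closest to vec (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda name: sum((x - c) ** 2 for x, c in zip(vec, centroids[name])),
    )


def to_visual_words(feature_vectors, centroids):
    """Represent an image as counts of the clusters its feature vectors fall in."""
    return Counter(nearest_cluster(v, centroids) for v in feature_vectors)


# Hypothetical 2-D centroids and the feature vectors of one image.
centroids = {"c0": [0.0, 0.0], "c1": [1.0, 1.0]}
image_features = [[0.1, 0.2], [0.9, 0.8], [1.1, 1.0]]

words = to_visual_words(image_features, centroids)
print(words)
```

Once images are term-count vectors like this, standard text retrieval machinery (inverted files, term weighting) applies directly.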

Query Formulation

- Clusters are internal representations, not suited for user interaction
- Use automatic query formulation based on global information (thesaurus) and local information (user feedback)

Interactive Query Process

- Select relevant clusters from thesaurus
- Search collection
- Improve results by adapting query
  - Remove clusters occurring in irrelevant images
  - Add clusters occurring in relevant images
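
The query adaptation step can be sketched with plain set operations. This is an illustrative assumption about how the update might look, with hypothetical cluster ids; in this sketch clusters seen in relevant images are added last, so they win over removals.

```python
def adapt_query(query, relevant_images, irrelevant_images):
    """Update a query (a set of cluster ids) from user feedback.

    Each image is given as the set of cluster ids that occur in it.
    """
    updated = set(query)
    for clusters in irrelevant_images:
        updated -= clusters  # remove clusters occurring in irrelevant images
    for clusters in relevant_images:
        updated |= clusters  # add clusters occurring in relevant images
    return updated


# Hypothetical feedback round.
new_query = adapt_query(
    query={"c1", "c2"},
    relevant_images=[{"c1", "c5"}],
    irrelevant_images=[{"c2", "c9"}],
)
print(sorted(new_query))
```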

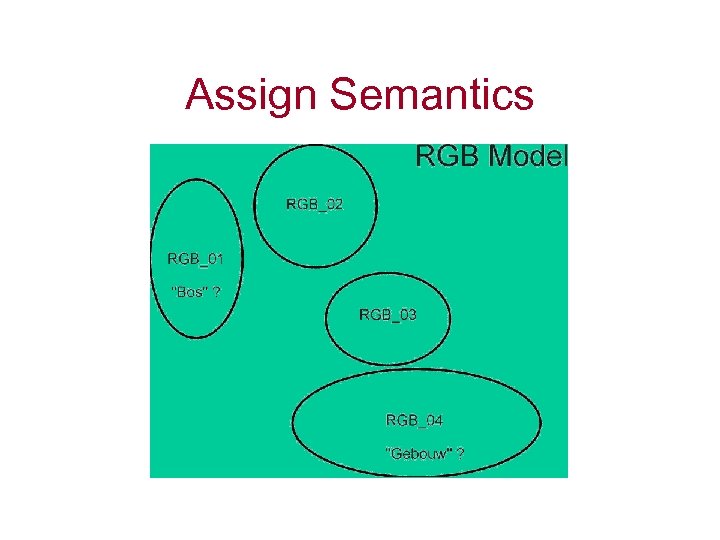

Assign Semantics

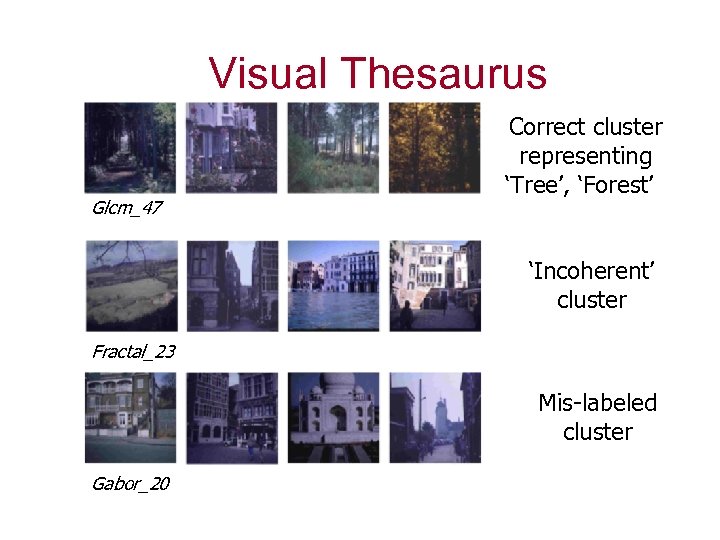

Visual Thesaurus (figure labels: Glcm_47, correct cluster representing ‘Tree’, ‘Forest’; ‘incoherent’ cluster, Fractal_23; mis-labeled cluster, Gabor_20)

Learning

- Short-term: Adapt query to better reflect this user’s information need
- Long-term: Adapt thesaurus and clustering to improve system for all users

Thesaurus Only After Feedback

4. Nobody is unique!

Collaborative Filtering

- Also: social information filtering
  - Compare user judgments
  - Recommend differences between similar users
- People’s tastes are not randomly distributed
- You are what you buy (Amazon)
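
The two steps, comparing user judgments and recommending the differences between similar users, can be sketched as follows. Jaccard overlap as the similarity measure, the single nearest neighbour, and the user and item names are assumptions chosen for brevity, not something the slides prescribe.

```python
def similarity(a, b):
    """Jaccard overlap between two users' sets of liked items."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user, likes):
    """Items liked by the most similar other user but not yet by `user`."""
    others = [u for u in likes if u != user]
    if not others:
        return set()
    nearest = max(others, key=lambda u: similarity(likes[user], likes[u]))
    return likes[nearest] - likes[user]


# Hypothetical judgments.
likes = {
    "ann": {"a", "b", "c"},
    "bob": {"a", "b", "d"},
    "cat": {"x", "y"},
}

suggestions = recommend("ann", likes)
print(suggestions)
```

Note that no item features are involved at all: the recommendation comes purely from overlap in judgments.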

Collaborative Filtering

- Benefits over content-based approach
  - Overcomes problems with finding suitable features to represent e.g. art, music
  - Serendipity
  - Implicit mechanism for qualitative aspects like style
- Problems: large groups, broad domains

5. Ask for help

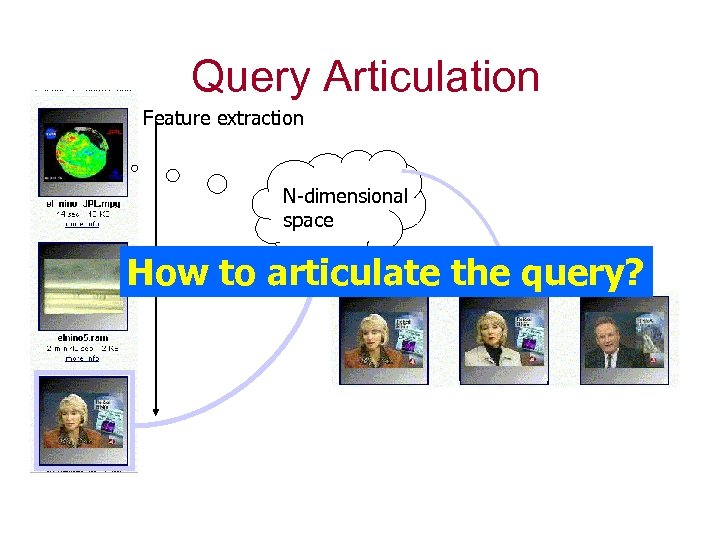

Query Articulation (diagram labels: Feature extraction, N-dimensional space)

How to articulate the query?

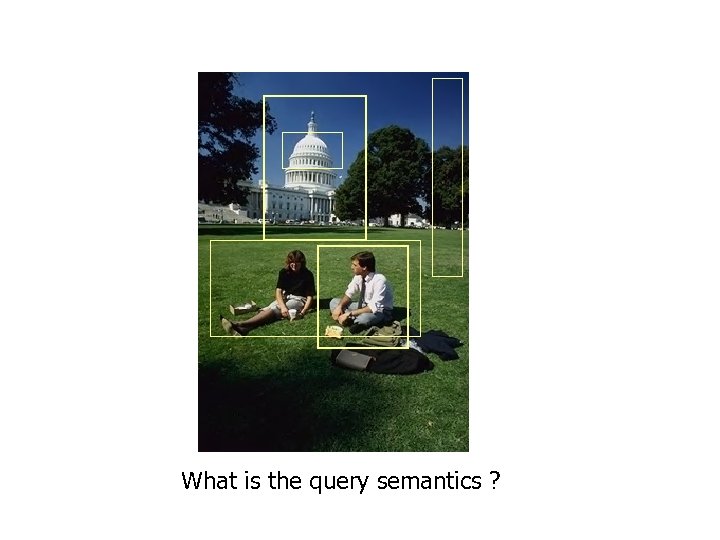

What are the query semantics?

Details matter

Problem Statement

- Feature vectors capture ‘global’ aspects of the whole image
- Overall image characteristics dominate the feature vectors
- Hypothesis: users are interested in details

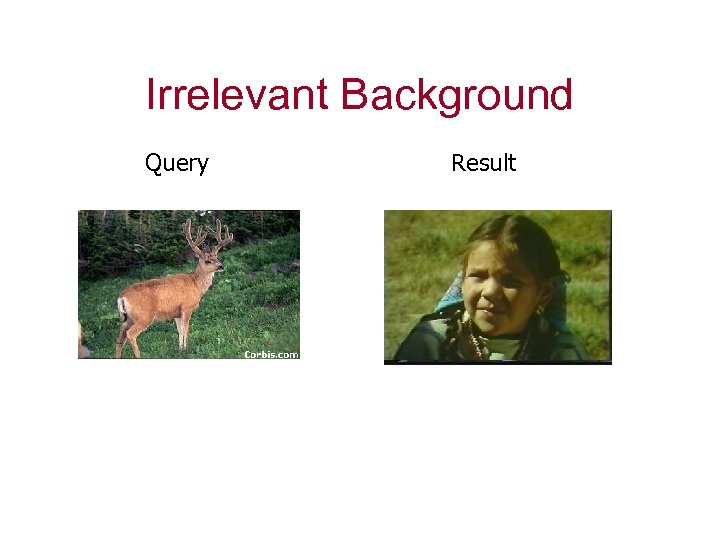

Irrelevant Background (figure: Query and Result)

Image Spots

- Image-spots articulate desired image details
  - Foreground/background colors
  - Colors forming ‘shapes’
  - Enclosure of shapes by background colors
- Multi-spot queries define the spatial relations between a number of spots

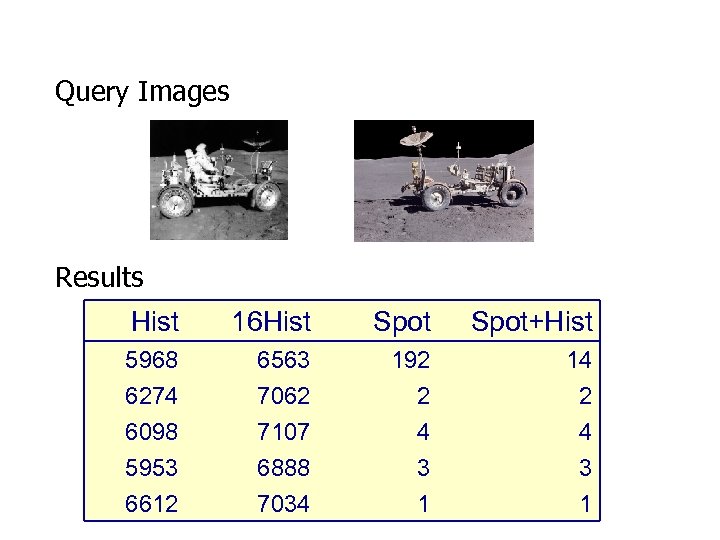

Query Images and Results (figure residue; column labels: Hist 16, Hist, Spot+Hist; image numbers: 5968, 6274, 6098, 5953, 6612, 6563, 7062, 7107, 6888, 7034; values: 192, 2, 4, 3, 1, 14, 2, 4, 3, 1)

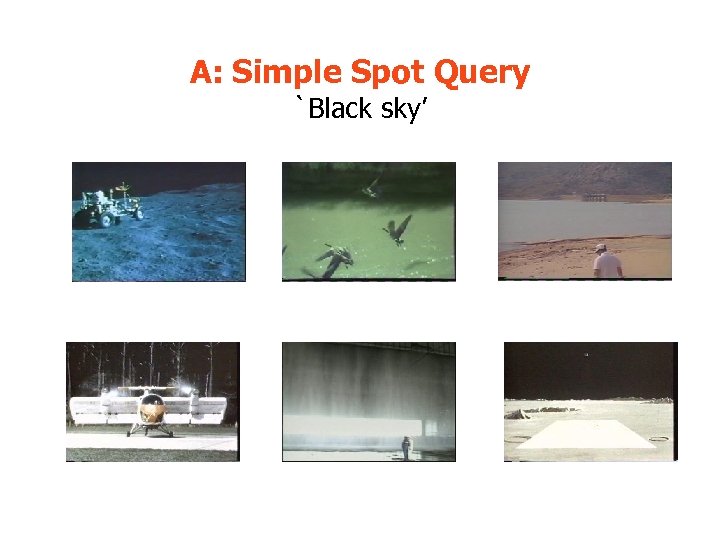

A: Simple Spot Query ‘Black sky’

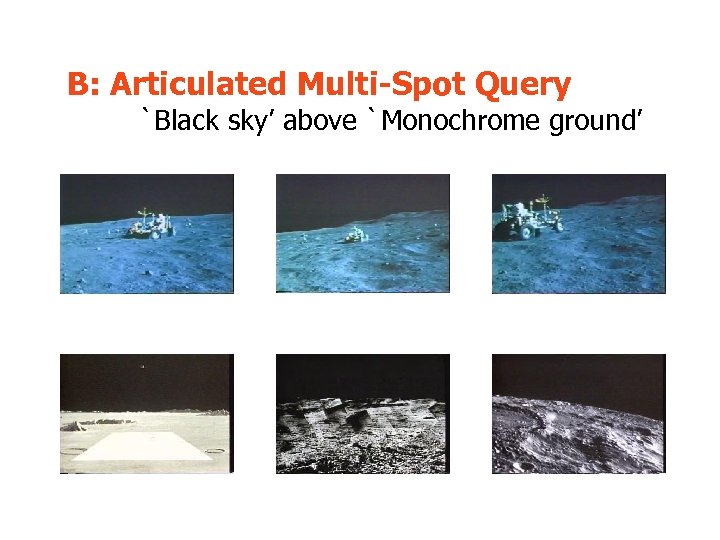

B: Articulated Multi-Spot Query ‘Black sky’ above ‘Monochrome ground’

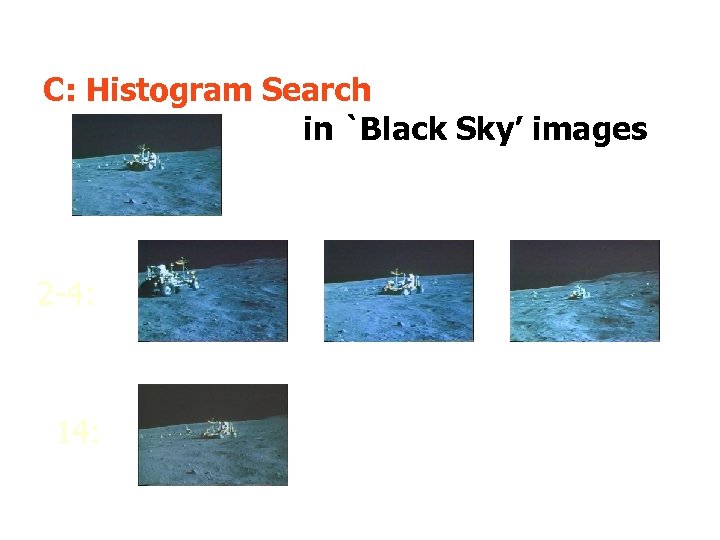

C: Histogram Search in ‘Black Sky’ images 2-4: 14:

Complicating Factors

- What are Good Feature Models?
- What are Good Ranking Functions?
- Queries are Subjective!

Probabilistic Approaches

Generative Models (figure labelled ‘LM abstract’: a jumble of words such as ‘video’, ‘Bayesian’, ‘model’, ‘retrieval’, ‘probabilistic’, ‘visual’, ‘search’, ‘queries’)

Generative Models…

- A statistical model for generating data
  - Probability distribution over samples in a given ‘language’ M
- aka ‘Language Modelling’

$$P(s_1 s_2 \ldots s_n \mid M) = P(s_1 \mid M)\,P(s_2 \mid M, s_1) \cdots P(s_n \mid M, s_1 \ldots s_{n-1})$$

© Victor Lavrenko, Aug. 2002

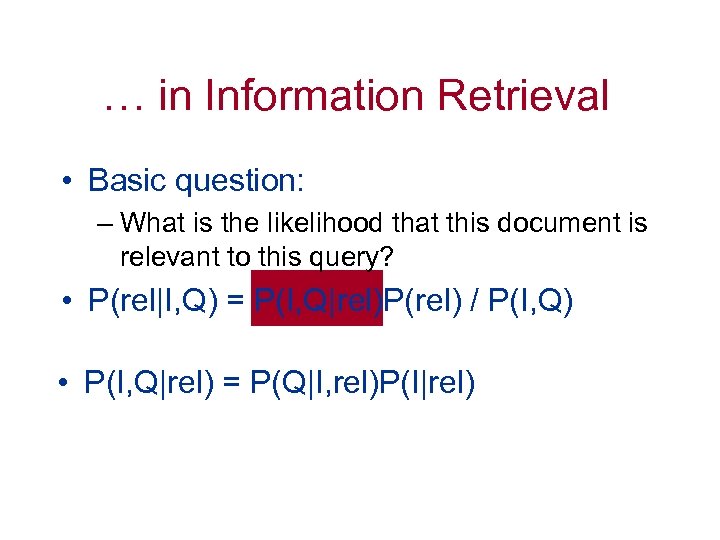

… in Information Retrieval

- Basic question:
  - What is the likelihood that this document is relevant to this query?
- P(rel|I, Q) = P(I, Q|rel) P(rel) / P(I, Q)
- P(I, Q|rel) = P(Q|I, rel) P(I|rel)
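
Substituting the second identity into the first gives the combined form, written out here as a worked step (same symbols as on the slide: I is the document, Q the query, rel the relevance event):

$$P(rel \mid I, Q) = \frac{P(Q \mid I, rel)\,P(I \mid rel)\,P(rel)}{P(I, Q)}$$

The factor $P(Q \mid I, rel)$ is the query likelihood: the probability that the query would be generated from the document.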

‘Language Modelling’

- Not just ‘English’
- But also, the language of
  - author
  - newspaper
  - text document
  - image
- Hiemstra or Robertson?
- ‘Parsimonious language models explicitly address the relation between levels of language models that are typically used for smoothing.’

‘Language Modelling’

- Not just ‘English’
- But also, the language of
  - author
  - newspaper
  - text document
  - image
- Guardian or Times?

‘Language Modelling’

- Not just English!
- But also, the language of
  - author
  - newspaper
  - text document
  - image
- (image) or (image)?

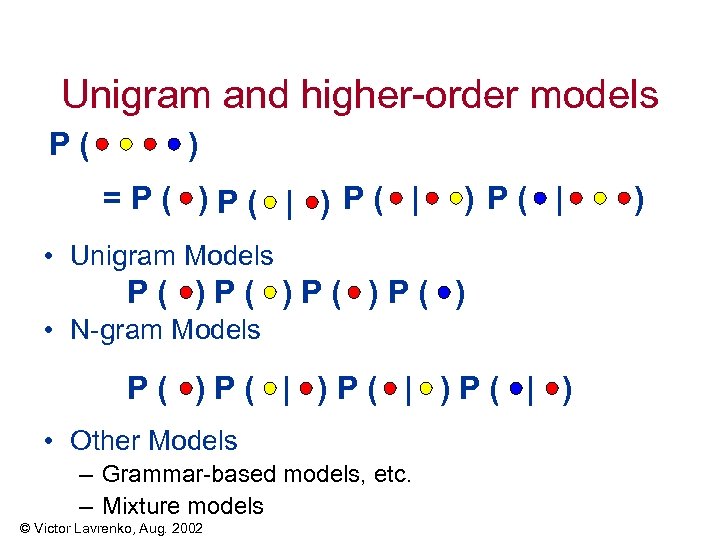

Unigram and higher-order models

$$P(w_1 w_2 \ldots w_n) = P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1 w_2) \cdots P(w_n \mid w_1 \ldots w_{n-1})$$

- Unigram Models: $P(w_1)\,P(w_2) \cdots P(w_n)$
- N-gram Models (here a bigram): $P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_2) \cdots P(w_n \mid w_{n-1})$
- Other Models
  - Grammar-based models, etc.
  - Mixture models

© Victor Lavrenko, Aug. 2002

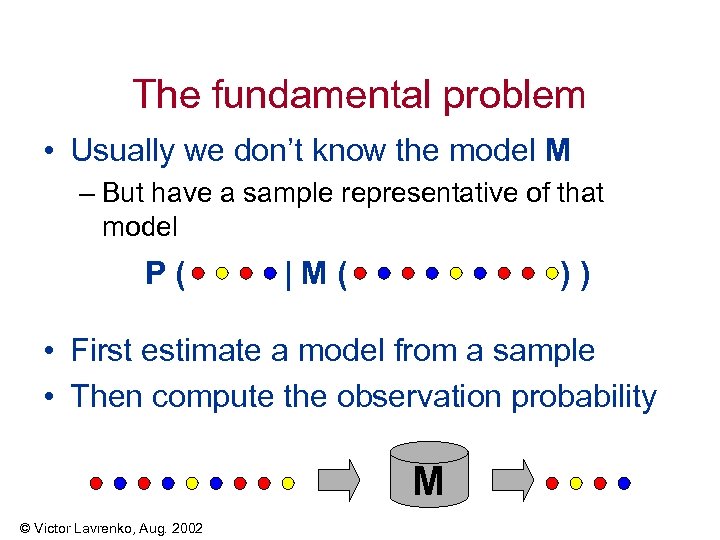

The fundamental problem

- Usually we don’t know the model M
  - But have a sample representative of that model
- First estimate a model from a sample
- Then compute the observation probability $P(\text{observation} \mid M(\text{sample}))$

© Victor Lavrenko, Aug. 2002

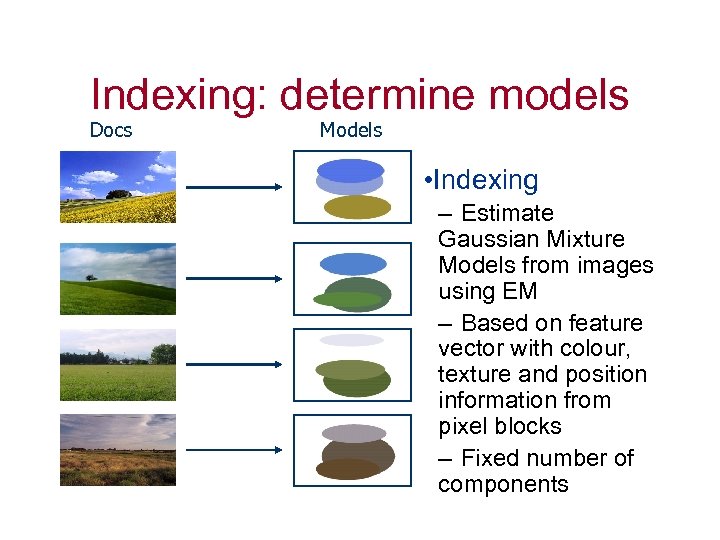

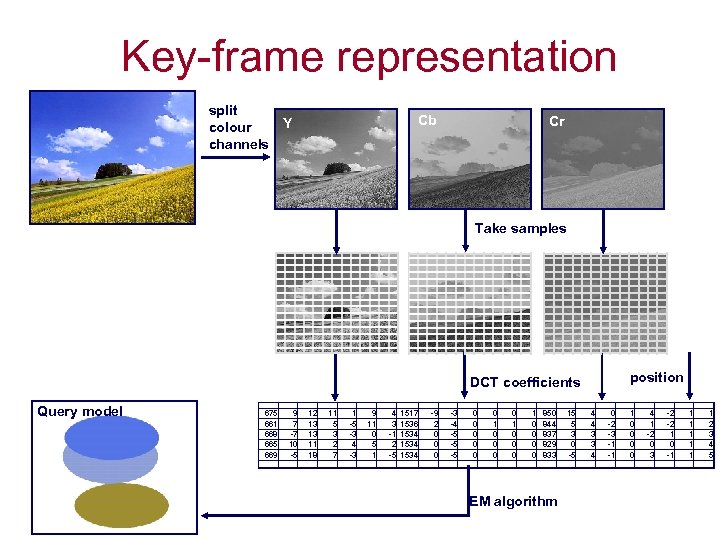

Indexing: determine models (diagram labels: Docs, Models)

- Indexing
  - Estimate Gaussian Mixture Models from images using EM
  - Based on feature vector with colour, texture and position information from pixel blocks
  - Fixed number of components

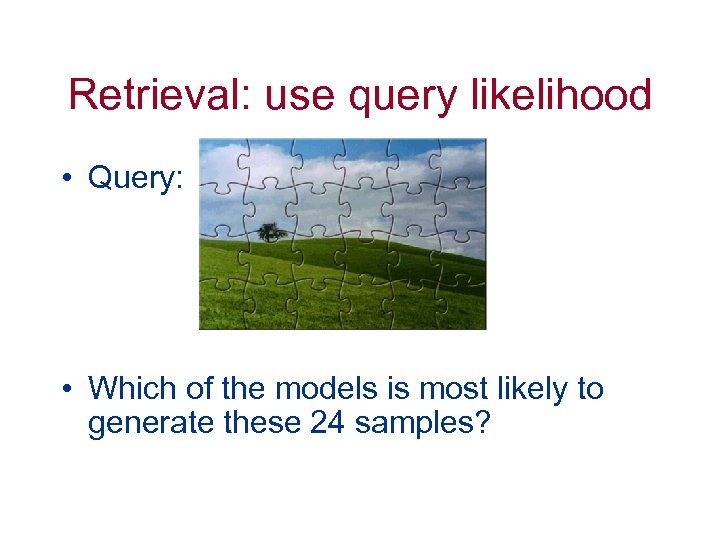

Retrieval: use query likelihood

- Query: (image)
- Which of the models is most likely to generate these 24 samples?

Probabilistic Image Retrieval?

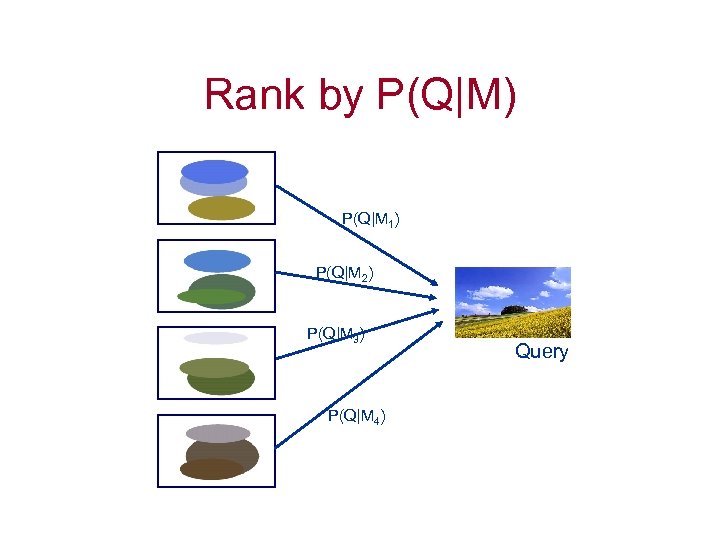

Rank by P(Q|M) (diagram: the Query is scored against each document model, giving P(Q|M1), P(Q|M2), P(Q|M3), P(Q|M4))
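
Ranking by P(Q|M) with Gaussian mixture document models might look like the sketch below. It assumes diagonal covariances and independent query samples, and the two toy models and the query samples are invented for illustration; the talk's actual feature vectors combine colour, texture and position.

```python
import math


def log_gaussian(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at vector x."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def log_likelihood(samples, gmm):
    """log P(samples | gmm), treating the samples as independent draws.

    gmm is a list of (weight, mean, variance) components.
    """
    total = 0.0
    for x in samples:
        parts = [math.log(w) + log_gaussian(x, mean, var) for w, mean, var in gmm]
        top = max(parts)
        # log-sum-exp keeps the mixture sum numerically stable
        total += top + math.log(sum(math.exp(p - top) for p in parts))
    return total


def rank_models(query_samples, models):
    """Model names ordered by decreasing query log-likelihood."""
    return sorted(
        models,
        key=lambda name: log_likelihood(query_samples, models[name]),
        reverse=True,
    )


# Hypothetical document models and query samples.
models = {
    "M1": [(0.5, [0.0, 0.0], [1.0, 1.0]), (0.5, [5.0, 5.0], [1.0, 1.0])],
    "M2": [(1.0, [10.0, 10.0], [1.0, 1.0])],
}
query = [[0.2, -0.1], [4.8, 5.3]]

ranking = rank_models(query, models)
print(ranking)
```

Working in log space matters in practice: a product over many query samples underflows quickly otherwise.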

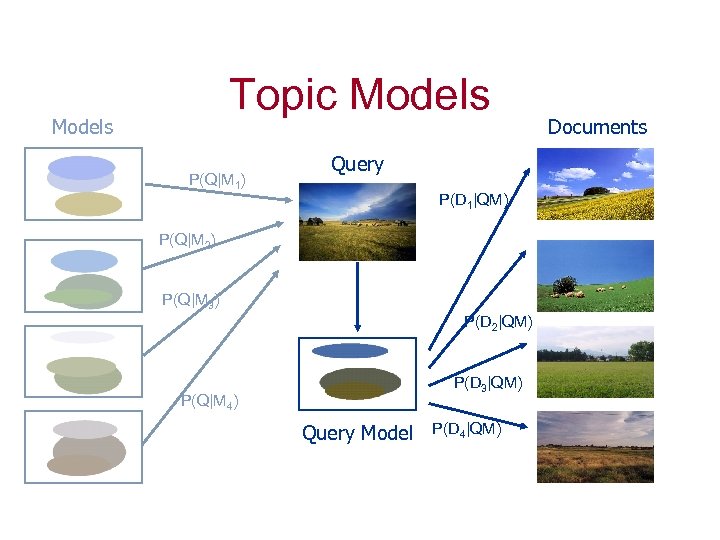

Topic Models (diagram: the Query is scored against document models via P(Q|M1), P(Q|M2), P(Q|M3), P(Q|M4); the Documents are scored against the Query Model via P(D1|QM), P(D2|QM), P(D3|QM), P(D4|QM))

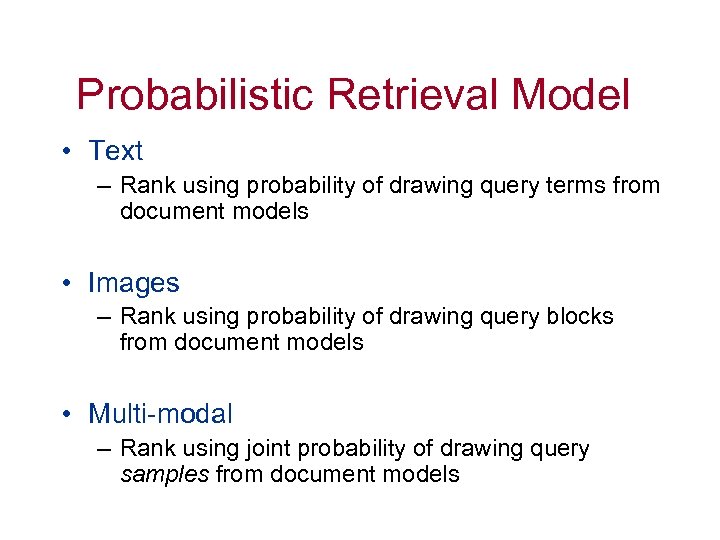

Probabilistic Retrieval Model

- Text
  - Rank using probability of drawing query terms from document models
- Images
  - Rank using probability of drawing query blocks from document models
- Multi-modal
  - Rank using joint probability of drawing query samples from document models

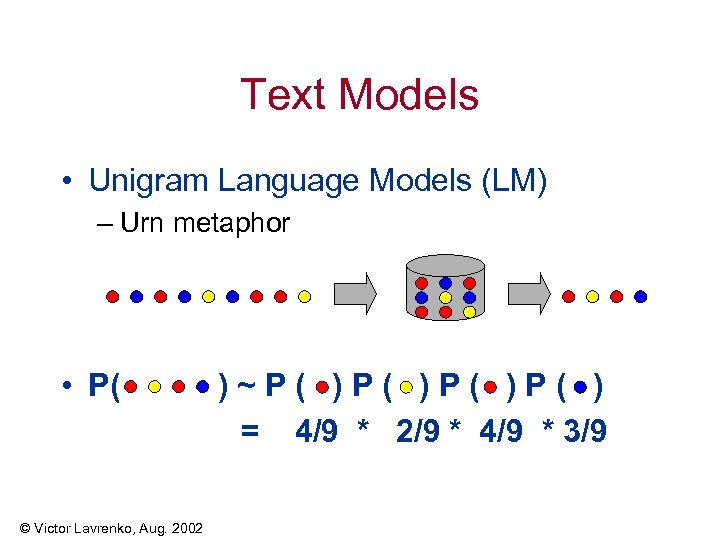

Text Models

- Unigram Language Models (LM)
  - Urn metaphor
- $P(Q) \sim P(q_1)\,P(q_2)\,P(q_3)\,P(q_4) = 4/9 \cdot 2/9 \cdot 4/9 \cdot 3/9$

© Victor Lavrenko, Aug. 2002

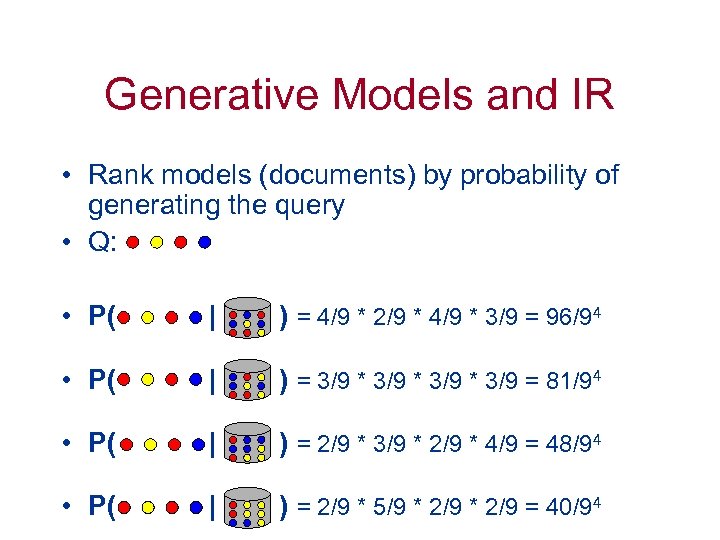

Generative Models and IR

- Rank models (documents) by probability of generating the query
- Q: (four balls, shown as an image)
- $P(Q \mid M_1) = 4/9 \cdot 2/9 \cdot 4/9 \cdot 3/9 = 96/9^4$
- $P(Q \mid M_2) = 3/9 \cdot 3/9 \cdot 3/9 \cdot 3/9 = 81/9^4$
- $P(Q \mid M_3) = 2/9 \cdot 3/9 \cdot 2/9 \cdot 4/9 = 48/9^4$
- $P(Q \mid M_4) = 2/9 \cdot 5/9 \cdot 2/9 \cdot 2/9 = 40/9^4$
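
The urn arithmetic can be checked with exact fractions. The sketch assumes a four-ball query and nine balls per urn; the ball labels `a`, `b`, `c` and the counts per urn are hypothetical, chosen so that the products reproduce the numerators 96, 81, 48 and 40 over 9^4.

```python
from collections import Counter
from fractions import Fraction


def query_likelihood(query, urn):
    """P(query | urn) under a unigram model: draws with replacement."""
    counts = Counter(urn)
    total = len(urn)
    p = Fraction(1)
    for token in query:
        p *= Fraction(counts[token], total)
    return p


# Hypothetical urns (document models), nine balls each.
urns = {
    "M1": ["a"] * 4 + ["b"] * 2 + ["c"] * 3,
    "M2": ["a"] * 3 + ["b"] * 3 + ["c"] * 3,
    "M3": ["a"] * 2 + ["b"] * 3 + ["c"] * 4,
    "M4": ["a"] * 2 + ["b"] * 5 + ["c"] * 2,
}
query = ["a", "b", "a", "c"]

scores = {name: query_likelihood(query, urn) for name, urn in urns.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

A ball colour that is absent from an urn would give probability zero for the whole query, which is the usual motivation for smoothing document models.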

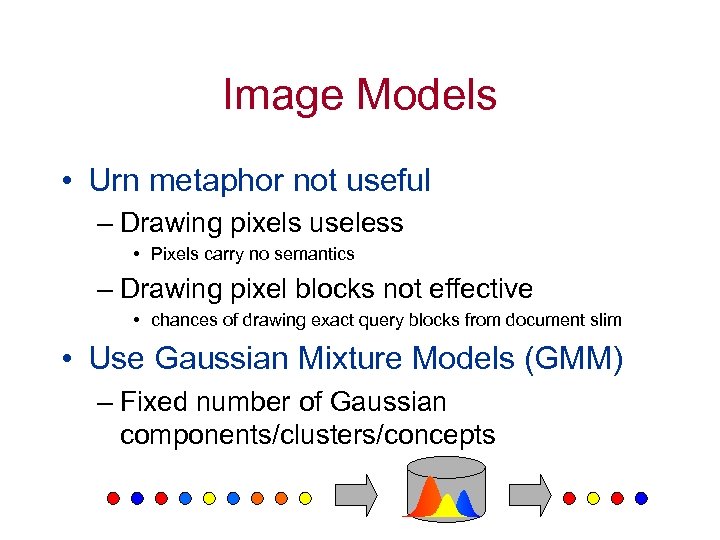

Image Models

- Urn metaphor not useful
  - Drawing pixels useless
    - Pixels carry no semantics
  - Drawing pixel blocks not effective
    - Chances of drawing exact query blocks from document slim
- Use Gaussian Mixture Models (GMM)
  - Fixed number of Gaussian components/clusters/concepts

Key-frame representation (diagram labels: split colour channels Y, Cb, Cr; Take samples; position; DCT coefficients; EM algorithm; Query model)

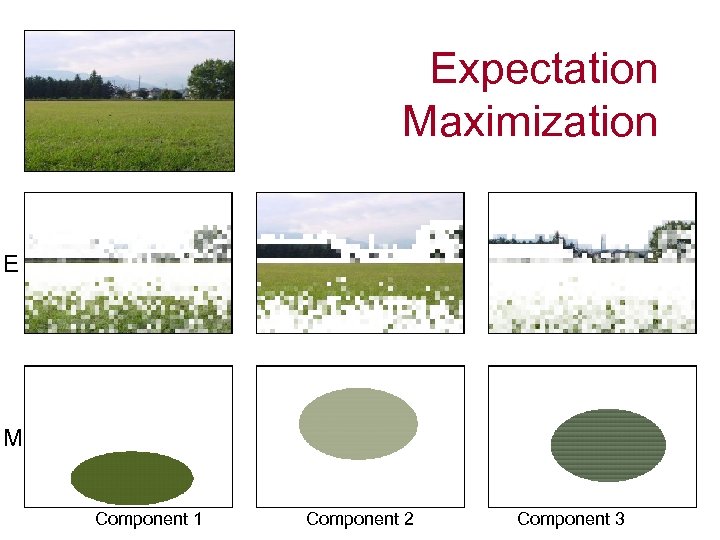

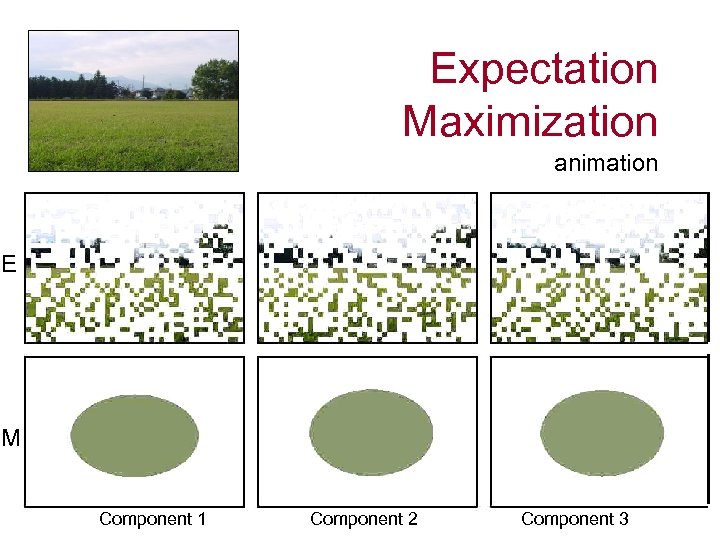

Image Models?

- Expectation-Maximisation (EM) algorithm
  - iteratively
    - estimate component assignments
    - re-estimate component parameters
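
The two alternating steps can be sketched for a one-dimensional mixture with a fixed number of components. This is a simplified illustration under stated assumptions: scalar samples instead of the multi-dimensional block features used in the talk, hand-picked initial means, unit initial variances, and a fixed iteration count instead of a convergence test.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gmm_1d(data, means, iterations=50, min_var=1e-6):
    """Fit a 1-D Gaussian mixture with len(means) components using EM."""
    k = len(means)
    means = list(means)
    variances = [1.0] * k
    weights = [1.0 / k] * k
    for _ in range(iterations):
        # E-step: estimate (soft) component assignments for every sample.
        resp = []
        for x in data:
            joint = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(joint) or 1e-300  # guard against underflow to zero
            resp.append([j / total for j in joint])
        # M-step: re-estimate component parameters from the assignments.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj == 0:
                continue  # leave an empty component unchanged
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var, min_var)  # floor avoids collapsing components
    return weights, means, variances


# Made-up samples forming two groups.
data = [0.9, 1.0, 1.1, 4.9, 5.0, 5.1]
weights, means, variances = em_gmm_1d(data, means=[0.0, 6.0])
print(weights, means, variances)
```

The variance floor is a pragmatic choice: without it a component that locks onto a single sample can shrink to zero variance.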

Expectation Maximization (animation; diagram labels: E, M, Component 1, Component 2, Component 3)

Corel Experiments

No ‘Exact’ Science!

- Evaluation is not done analytically, but experimentally
  - real users (specifying requests)
  - test collections (real document collections)
  - benchmarks (TREC: Text REtrieval Conference)
  - Precision
  - Recall
  - …

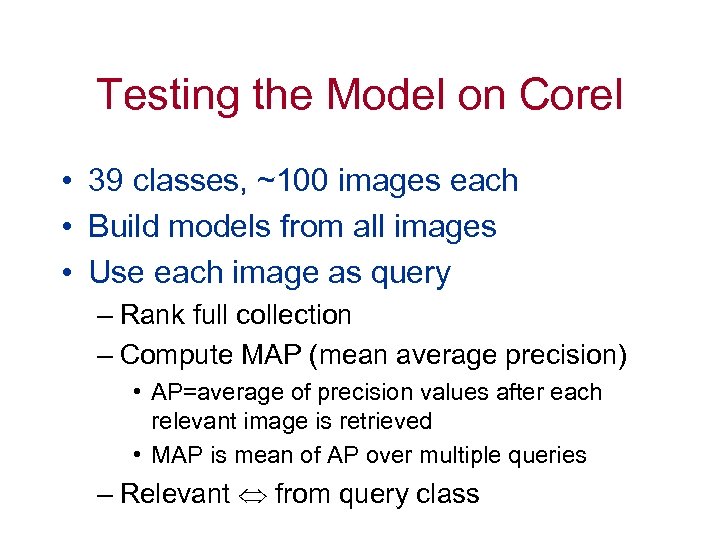

Testing the Model on Corel

- 39 classes, ~100 images each
- Build models from all images
- Use each image as query
  - Rank full collection
  - Compute MAP (mean average precision)
    - AP = average of precision values after each relevant image is retrieved
    - MAP is mean of AP over multiple queries
  - Relevant from query class
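
AP and MAP as defined above can be computed as in the following sketch. The rankings and relevance sets are made up; the sum is divided by the number of relevant items, which matches the slide's definition whenever the full collection is ranked, since every relevant image is then retrieved.

```python
def average_precision(ranking, relevant):
    """Mean of the precision values at the rank of each relevant item."""
    relevant = set(relevant)
    hits = 0
    precisions = []
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(relevant) if relevant else 0.0


def mean_average_precision(runs):
    """Mean of AP over several (ranking, relevant) pairs."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


# Two hypothetical queries.
runs = [
    (["a", "x", "b", "y"], {"a", "b"}),  # precisions 1/1 and 2/3
    (["x", "a"], {"a"}),                 # precision 1/2
]
ap_first = average_precision(*runs[0])
map_score = mean_average_precision(runs)
print(ap_first, map_score)
```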

Example results (figure: Query and Top 5)

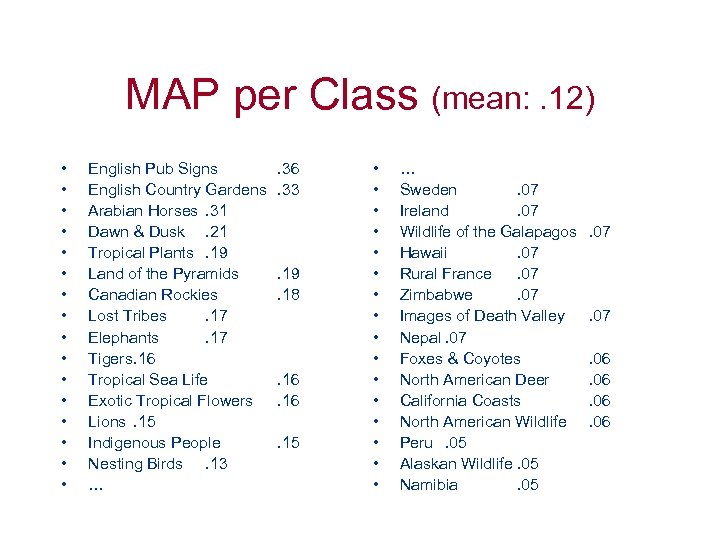

MAP per Class (mean: .12)

Top of the ranking:

- English Pub Signs .36
- English Country Gardens .33
- Arabian Horses .31
- Dawn & Dusk .21
- Tropical Plants .19
- Land of the Pyramids .19
- Canadian Rockies .18
- Lost Tribes .17
- Elephants .17
- Tigers .16
- Tropical Sea Life .16
- Exotic Tropical Flowers .15
- Lions .15
- Indigenous People
- Nesting Birds .13
- …

Bottom of the ranking:

- …
- Sweden .07
- Ireland .07
- Wildlife of the Galapagos
- Hawaii .07
- Rural France .07
- Zimbabwe .07
- Images of Death Valley
- Nepal .07
- Foxes & Coyotes
- North American Deer
- California Coasts
- North American Wildlife
- Peru .05
- Alaskan Wildlife .05
- Namibia .05

Further values listed for the bottom classes without a recoverable assignment: .07, .06, .06

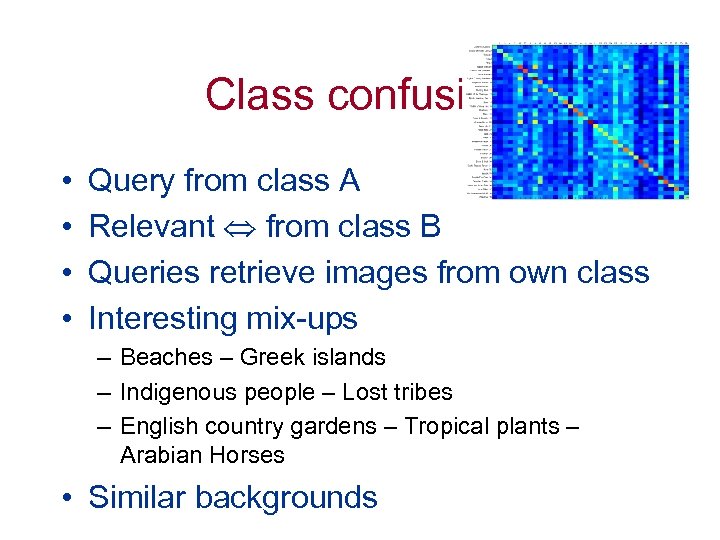

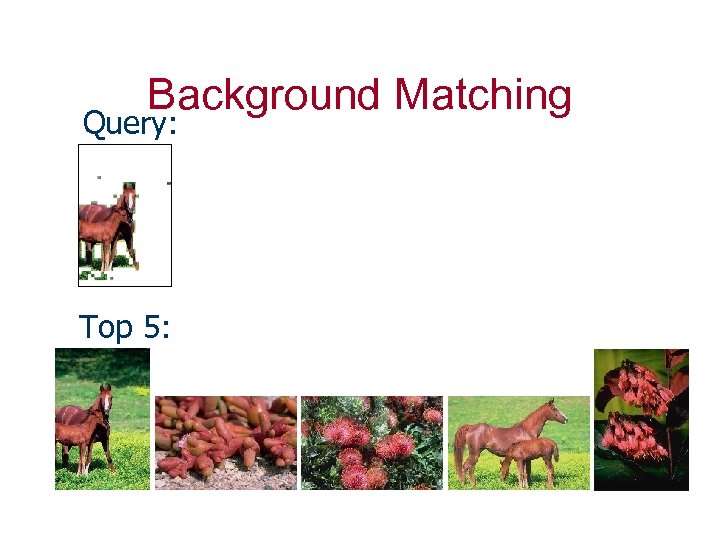

Class confusion (figure axes: Query from class A, Relevant from class B)

- Queries retrieve images from own class
- Interesting mix-ups
  - Beaches
  - Greek islands
  - Indigenous people
  - Lost tribes
  - English country gardens
  - Tropical plants
  - Arabian Horses
- Similar backgrounds

Background Matching (figures: Query and Top 5)

Care for more?

T. Westerveld and A. P. de Vries, Generative probabilistic models for multimedia retrieval: query generation versus document generation, in IEE Vision, Image and Signal Processing.

http://www.cwi.nl/~arjen/

arjen@acm.org