Multilayer Perceptrons

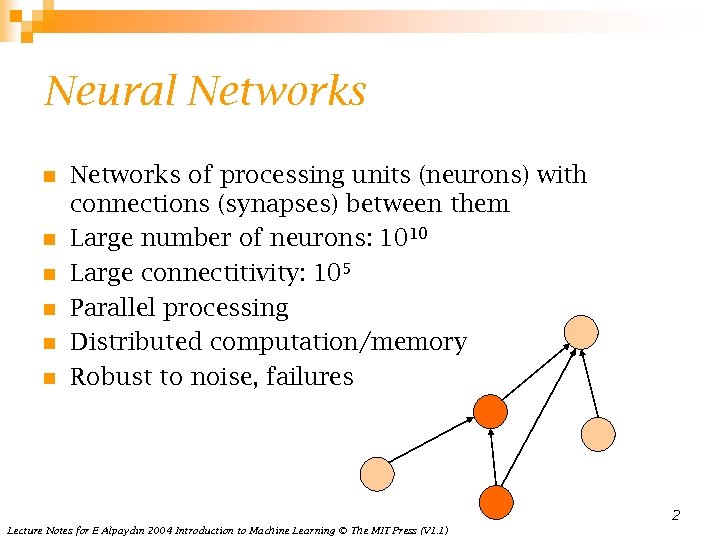

Neural Networks

- Networks of processing units (neurons) with connections (synapses) between them
- Large number of neurons: $10^{10}$
- Large connectivity: $10^5$
- Parallel processing
- Distributed computation/memory
- Robust to noise, failures

Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1)

Understanding the Brain

- Levels of analysis (Marr, 1982)
  1. Computational theory
  2. Representation and algorithm
  3. Hardware implementation
- Reverse engineering: From hardware to theory
- Parallel processing: SIMD vs MIMD
  - Neural net: SIMD with modifiable local memory
  - Learning: Update by training/experience

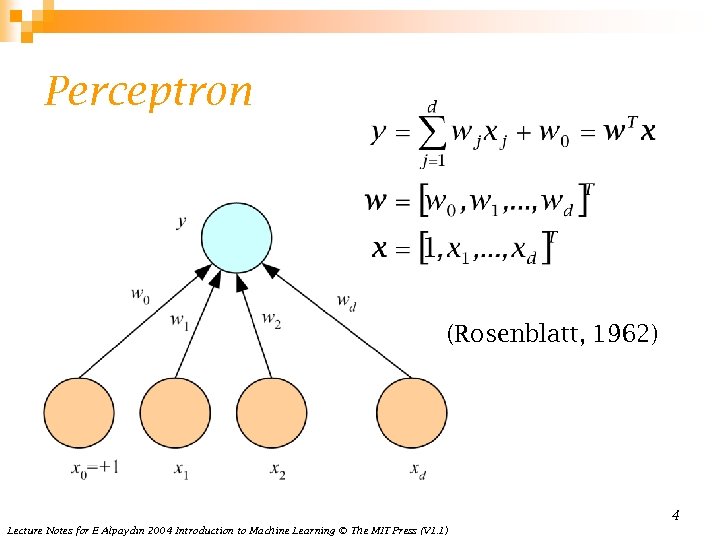

Perceptron (Rosenblatt, 1962)

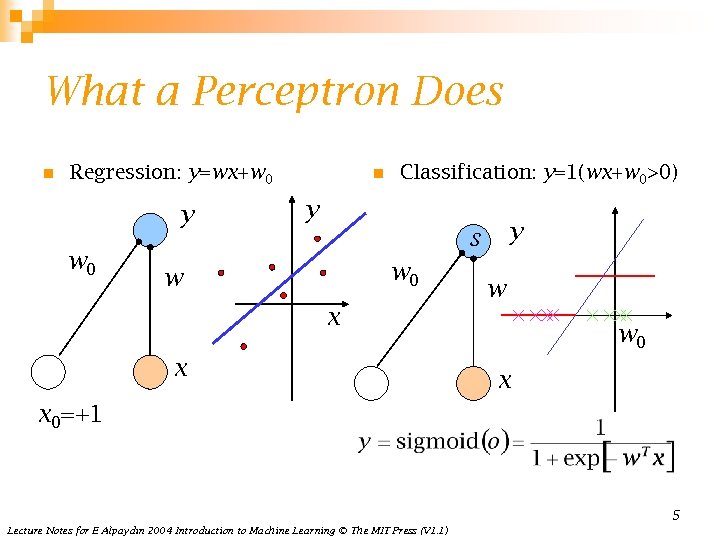

What a Perceptron Does

- Regression: $y = wx + w_0$
- Classification: $y = 1(wx + w_0 > 0)$

(Figure: the perceptron with input $x$, bias input $x_0 = +1$, weights $w$ and $w_0$, and output $y$.)
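
The two uses of a single-input perceptron can be sketched as follows. This is a minimal illustration, not code from the lecture; the function names are hypothetical, and `w0` is treated as the weight on the bias input $x_0 = +1$.

```python
def perceptron_regression(x, w, w0):
    """Linear output: y = w*x + w0 (w0 multiplies the bias input x0 = +1)."""
    return w * x + w0


def perceptron_classify(x, w, w0):
    """Threshold output: y = 1 if w*x + w0 > 0, otherwise 0."""
    return 1 if w * x + w0 > 0 else 0
```

The same weighted sum feeds both functions; only the output stage differs (identity for regression, a threshold for classification).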

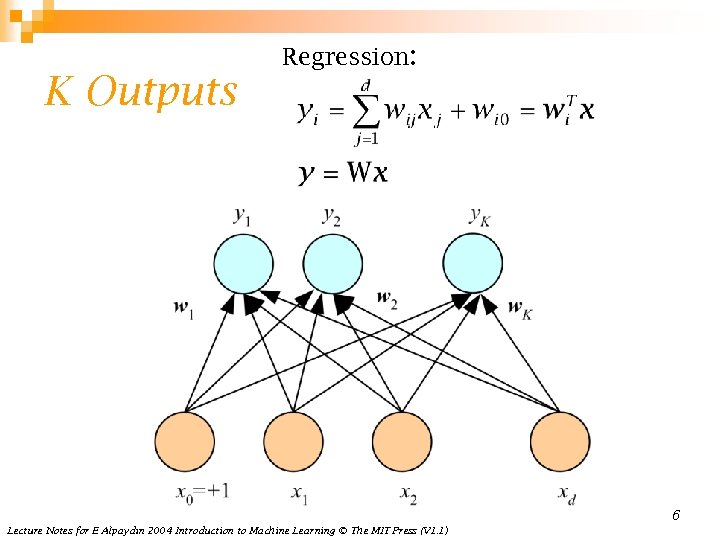

K Outputs

Regression:
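
With $K$ outputs, each output is usually taken to have its own weight vector, $y_i = \sum_j w_{ij} x_j + w_{i0}$. The sketch below assumes that standard form; the function name and the list-of-rows layout for the weights are illustrative choices, not part of the lecture.

```python
def k_output_regression(W, x):
    """Compute y_i = sum_j W[i][j] * x[j] for each of the K rows of W.

    Assumption: x[0] is the bias input +1, so W[i][0] plays the role of w_i0.
    """
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
```

Each row of `W` acts as one independent perceptron over the same input.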

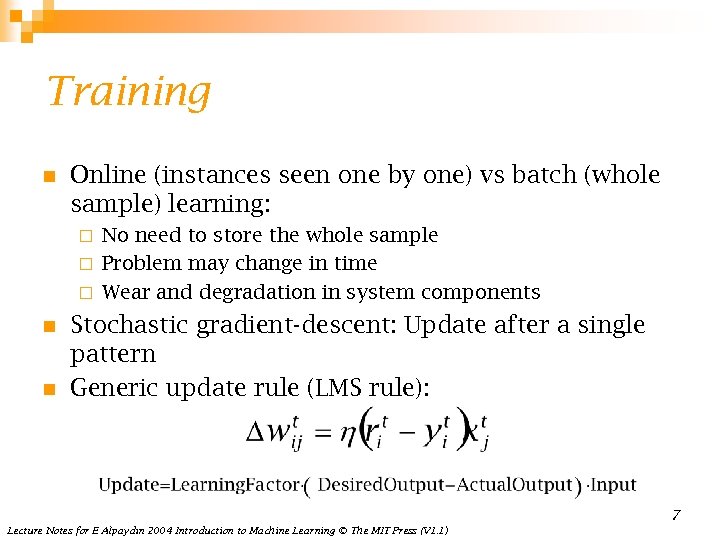

Training

- Online (instances seen one by one) vs batch (whole sample) learning:
  - No need to store the whole sample
  - Problem may change in time
  - Wear and degradation in system components
- Stochastic gradient descent: Update after a single pattern
- Generic update rule (LMS rule):
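
A minimal sketch of online training, assuming the usual form of the LMS rule: update = learning rate × (desired output − actual output) × input. The names `lms_update`, `train_online`, `eta`, and `epochs` are hypothetical. The loop revisits a stored list only to keep the demonstration short; in a truly online setting each pattern would be seen once and discarded.

```python
def lms_update(w, x, r, eta):
    """One stochastic step on a single pattern (x, r).

    Assumed rule: w_j <- w_j + eta * (r - y) * x_j, with y = sum_j w_j * x_j.
    """
    y = sum(wj * xj for wj, xj in zip(w, x))
    return [wj + eta * (r - y) * xj for wj, xj in zip(w, x)]


def train_online(samples, eta=0.1, epochs=200):
    """Apply lms_update pattern by pattern; x[0] is assumed to be the bias input +1."""
    w = [0.0] * len(samples[0][0])
    for _ in range(epochs):
        for x, r in samples:
            w = lms_update(w, x, r, eta)
    return w
```

Because the weights change after every pattern, the learner can track a problem that drifts over time, which is the motivation listed above.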

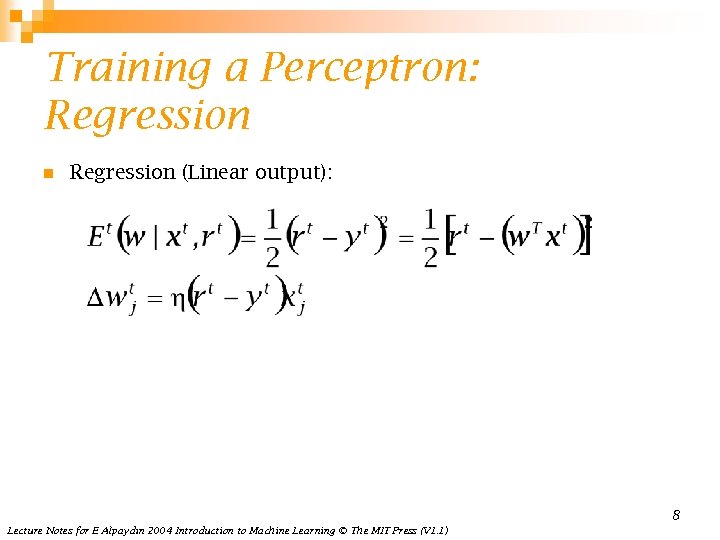

Training a Perceptron: Regression

- Regression (linear output):
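
A worked derivation under the usual assumptions: squared error on a single instance $(x^t, r^t)$, a linear output $y^t = w^T x^t$, and a learning rate $\eta$. The symbols $r^t$ and $\eta$ are assumed notation here.

$$
E^t(w \mid x^t, r^t) = \frac{1}{2}\left(r^t - y^t\right)^2 = \frac{1}{2}\left(r^t - w^T x^t\right)^2
$$

$$
\frac{\partial E^t}{\partial w_j} = -\left(r^t - y^t\right) x_j^t
\quad\Rightarrow\quad
\Delta w_j^t = -\eta \frac{\partial E^t}{\partial w_j} = \eta \left(r^t - y^t\right) x_j^t
$$

The resulting step has the LMS shape: learning rate times output error times input.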

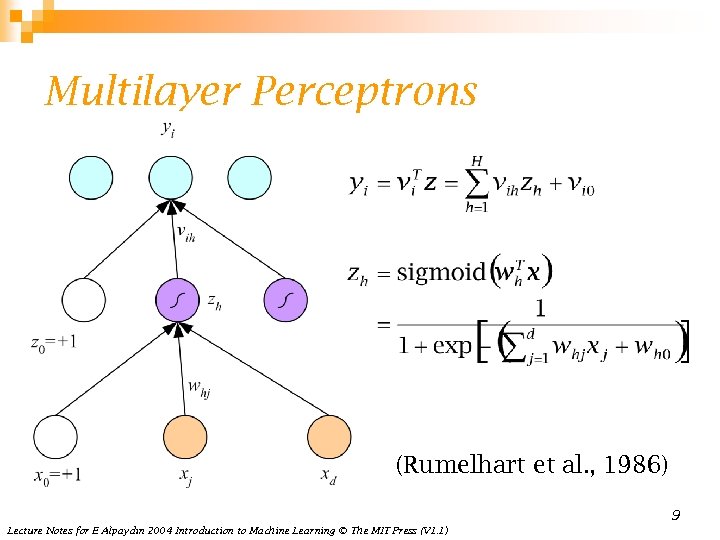

Multilayer Perceptrons (Rumelhart et al., 1986)
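
A forward pass through a multilayer perceptron with one hidden layer can be sketched as below. The sketch assumes sigmoid hidden units $z_h = \mathrm{sigmoid}(w_h^T x)$ and linear outputs $y_i = v_i^T z$; the function names and the convention of storing the bias weight first in each row are illustrative assumptions.

```python
import math


def sigmoid(a):
    """Logistic function 1 / (1 + exp(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def mlp_forward(x, W, V):
    """Return (z, y) for input x.

    W[h] = [w_h0, w_h1, ...] are first-layer weights (bias weight first).
    V[i] = [v_i0, v_i1, ...] are second-layer weights (bias weight first).
    """
    x1 = [1.0] + list(x)                      # prepend bias input x0 = +1
    z = [sigmoid(sum(w * xj for w, xj in zip(w_h, x1))) for w_h in W]
    z1 = [1.0] + z                            # prepend hidden bias unit z0 = +1
    y = [sum(v * zh for v, zh in zip(v_i, z1)) for v_i in V]
    return z, y
```

The hidden layer is what makes the network nonlinear in $x$; without the sigmoid the two layers would collapse into a single linear map.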

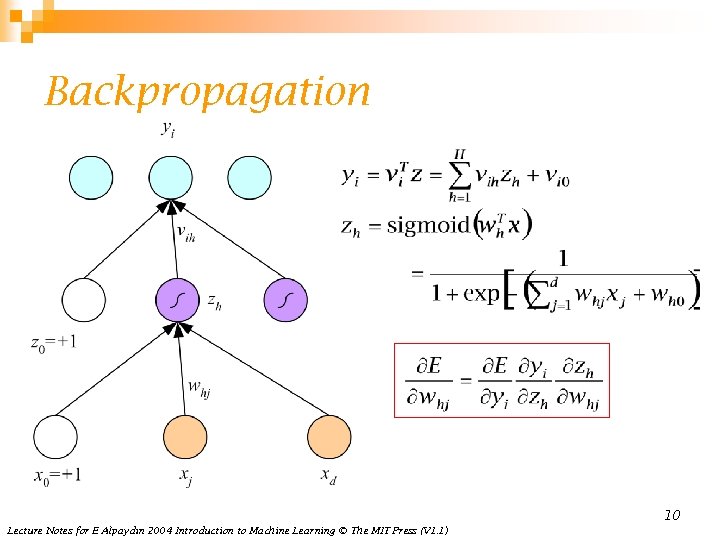

Backpropagation
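
Backpropagation computes the gradient for the first-layer weights by applying the chain rule through the hidden layer. A sketch, assuming inputs $x_j$, first-layer weights $w_{hj}$, sigmoid hidden units $z_h$, outputs $y_i$, and error $E$:

$$
\frac{\partial E}{\partial w_{hj}} = \sum_i \frac{\partial E}{\partial y_i} \, \frac{\partial y_i}{\partial z_h} \, \frac{\partial z_h}{\partial w_{hj}},
\qquad
\frac{\partial z_h}{\partial w_{hj}} = z_h (1 - z_h) \, x_j
$$

With a single output the sum over $i$ reduces to one term.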

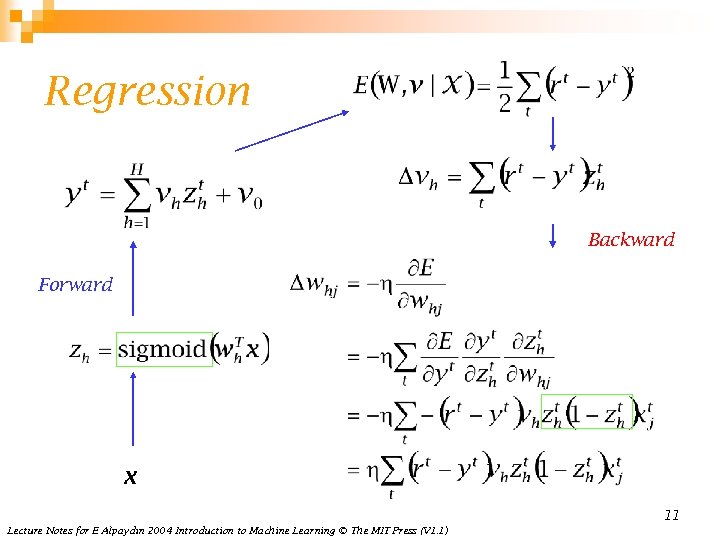

Regression

(Figure: forward and backward passes through the network for input $x$.)

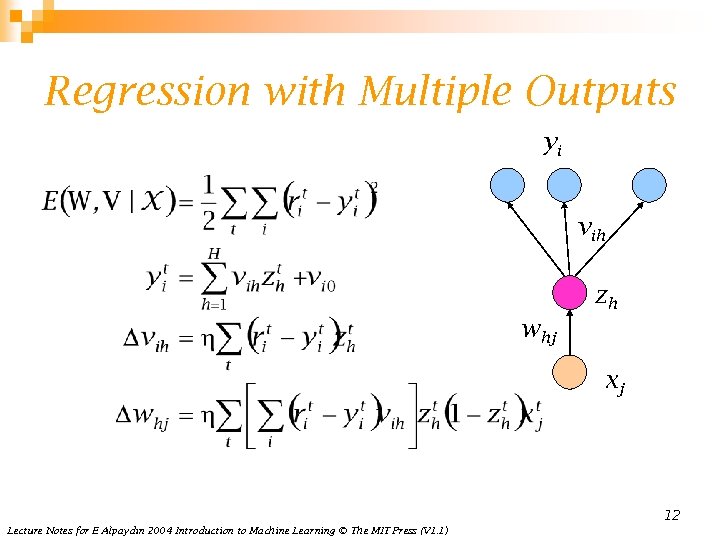

Regression with Multiple Outputs

(Figure: network with inputs $x_j$, first-layer weights $w_{hj}$, hidden units $z_h$, second-layer weights $v_{ih}$, and outputs $y_i$.)
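
One backpropagation step for regression with multiple outputs can be sketched as below. It assumes squared error $E = \frac{1}{2}\sum_i (r_i - y_i)^2$, sigmoid hidden units, and linear outputs, which give the updates $\Delta v_{ih} = \eta (r_i - y_i) z_h$ and $\Delta w_{hj} = \eta \left[\sum_i (r_i - y_i) v_{ih}\right] z_h (1 - z_h) x_j$. All function names, the targets `r`, and the learning rate `eta` are assumptions made for illustration.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def forward(x, W, V):
    """Forward pass; returns inputs and hidden units with the bias +1 prepended."""
    x1 = [1.0] + list(x)
    z1 = [1.0] + [sigmoid(sum(w * xj for w, xj in zip(w_h, x1))) for w_h in W]
    y = [sum(v * zh for v, zh in zip(v_i, z1)) for v_i in V]
    return x1, z1, y


def squared_error(x, r, W, V):
    """E = 0.5 * sum_i (r_i - y_i)^2 on a single instance."""
    _, _, y = forward(x, W, V)
    return 0.5 * sum((ri - yi) ** 2 for ri, yi in zip(r, y))


def backprop_step(x, r, W, V, eta):
    """Return updated (W, V) after one gradient step on a single instance."""
    x1, z1, y = forward(x, W, V)
    err = [ri - yi for ri, yi in zip(r, y)]
    new_W = []
    for h, w_h in enumerate(W):
        zh = z1[h + 1]
        # Backward pass: output errors flow back through the old v_ih.
        back = sum(err[i] * V[i][h + 1] for i in range(len(V)))
        delta = back * zh * (1.0 - zh)
        new_W.append([w + eta * delta * xj for w, xj in zip(w_h, x1)])
    new_V = [[v + eta * err[i] * zh for v, zh in zip(v_i, z1)]
             for i, v_i in enumerate(V)]
    return new_W, new_V
```

Note that the first-layer update uses the second-layer weights from before the step, so both layers are updated from the same forward pass.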

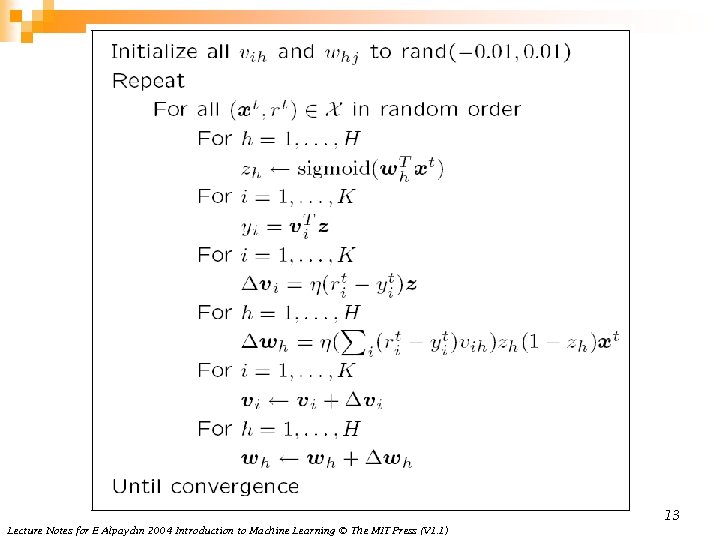

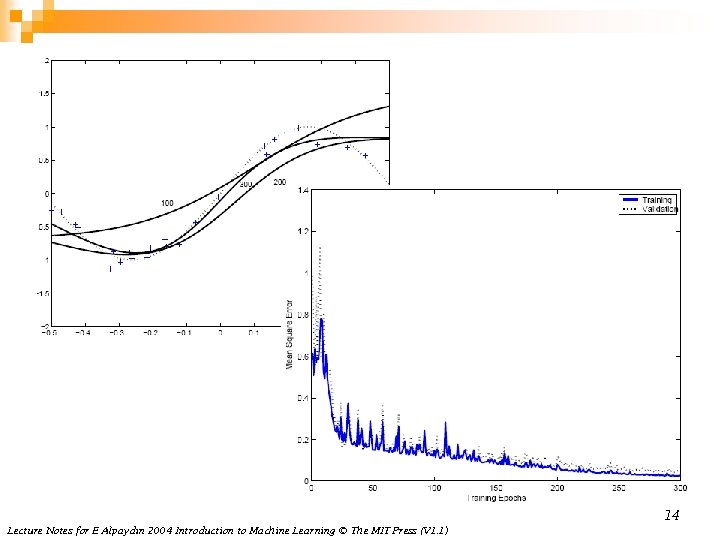

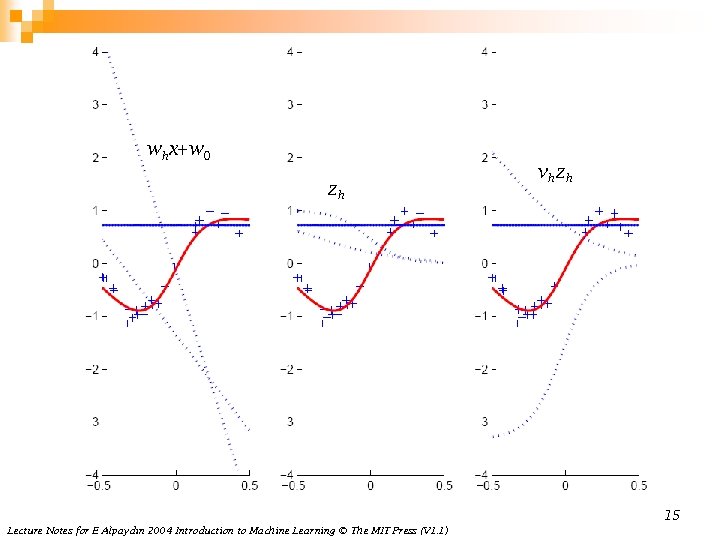

(Figure panels: $w_h x + w_0$, $z_h$, $v_h z_h$.)
