df55e82daf05136b05c1bdd9858d0fd5.ppt

- Number of slides: 63

Multi Processing Hardware Gilad Berman

Agenda

- Multi Core Background and software limitation
- Multi Processing Methods
  - Scale Up
  - Scale Out
- Process vs. Thread
- Multi Processing Architecture
  - SMP vs. NUMA
  - Cache coherency protocols
  - Programming discussion
- Hyper-Threading Technology
- High-Level Atomic Operation in Hardware

Why Is This Important?

- Computer hardware has changed A LOT in the past 3 years
- The programmer should know the hardware; it makes a difference!
- Sadly, the current (TAU) computer science material hardly covers this issue at all
- It is not only our degree: most software companies are not fully aware of it either (this is my impression)
- It is much easier to buy faster, more powerful hardware than to optimize the code. But there is a limit to that, and computing demands are constantly increasing
- Interesting, I hope

Multi Core Background and software limitation

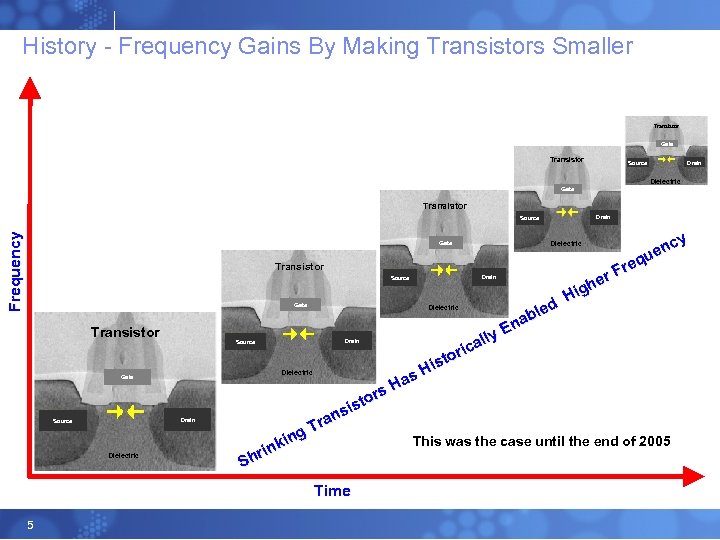

History - Frequency Gains By Making Transistors Smaller

[Figure: frequency versus time, with transistor cross-sections (gate, source, drain, dielectric) shrinking over time. Caption: shrinking transistors has historically enabled higher frequency.]

This was the case until the end of 2005.

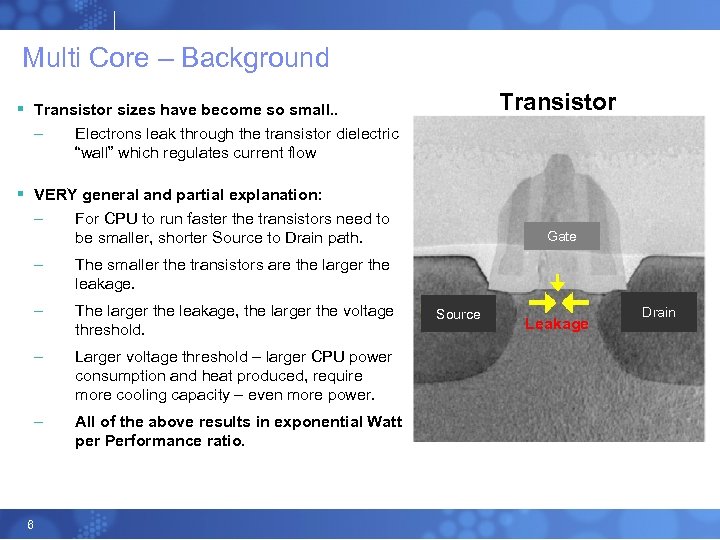

Multi Core - Background

- Transistor sizes have become so small that electrons leak through the transistor dielectric "wall" which regulates current flow
- A VERY general and partial explanation:
  - For a CPU to run faster, the transistors need to be smaller, with a shorter source-to-drain path.
  - The smaller the transistors are, the larger the leakage.
  - The larger the leakage, the larger the voltage threshold.
  - A larger voltage threshold means larger CPU power consumption and more heat, which requires more cooling capacity and therefore even more power.
  - All of the above results in an exponentially growing watts-per-performance ratio.

[Diagram: transistor with gate, source and drain, showing leakage.]

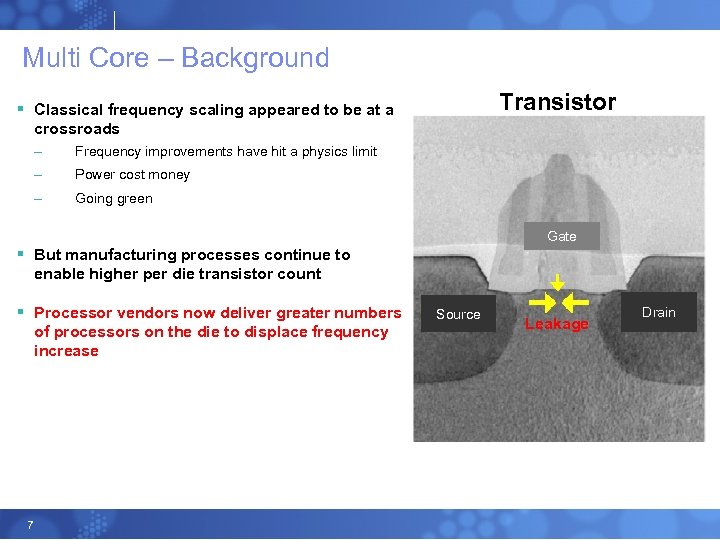

Multi Core - Background

- Classical frequency scaling appeared to be at a crossroads
  - Frequency improvements have hit a physics limit
  - Power costs money
  - Going green
- But manufacturing processes continue to enable higher per-die transistor counts
- Processor vendors now deliver greater numbers of processor cores on the die in place of frequency increases

[Diagram: transistor with gate, source and drain, showing leakage.]

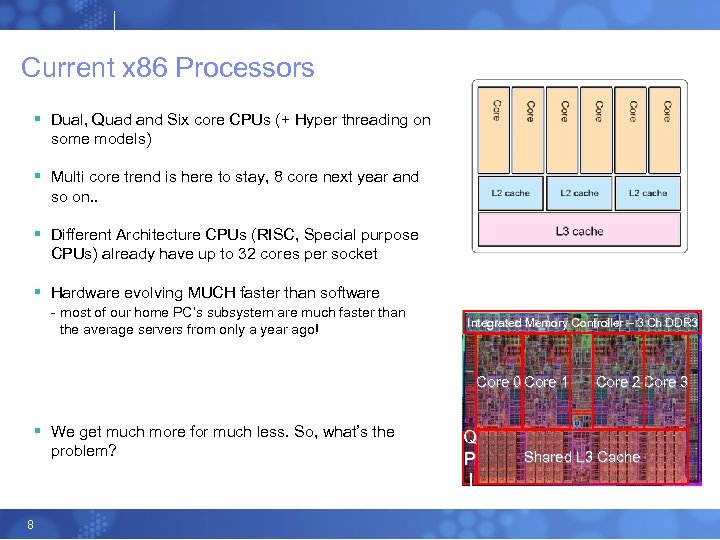

Current x86 Processors

- Dual, quad and six core CPUs (+ Hyper-Threading on some models)
- The multi-core trend is here to stay: 8 cores next year, and so on
- CPUs of other architectures (RISC, special-purpose CPUs) already have up to 32 cores per socket
- Hardware is evolving MUCH faster than software: most of our home PCs' subsystems are much faster than those of the average server from only a year ago!
- We get much more for much less. So, what's the problem?

[Diagram: quad-core processor with an integrated 3-channel DDR3 memory controller, QPI, cores 0 to 3 and a shared L3 cache.]

Know Your Software Limitations

- Software does not scale if it hasn't been written to do so!
- A single-threaded application running on a 24-core server will use only one core; the OS can do nothing about it.
- A single-threaded application running on a 24-core server will actually run slower than it would on your home PC (or any other single-core machine). Explanation coming later. Any ideas?

Know Your Software Limitations

- Single or Dual Threaded - Typically Developed Before 2000
  - Legacy server software and most desktop software
  - Most server software developed by application developers new to multi-threading
- N Threaded (typically N = 4 to 8) - Majority of Business Applications
  - Vast majority of server software developed in the past 5 to 8 years
  - Exchange, Notes, Citrix, Terminal Services, most Java, File/Print/Web serving, etc.
  - Almost all customer-developed applications
  - Some workstation software
- Fully Threaded Software (N = 8 to 64) - Enterprise Enabled Applications
  - SQL Server, Oracle, DB2, SAP, PeopleSoft, VMware, 64-bit Terminal Services, etc.

So, what can be done?

- Obviously, write N-threaded or fully threaded applications. But this is not so simple:
  - Writing a fully threaded application is hard.
  - Rewriting all of the existing applications is not possible.
- Virtualization

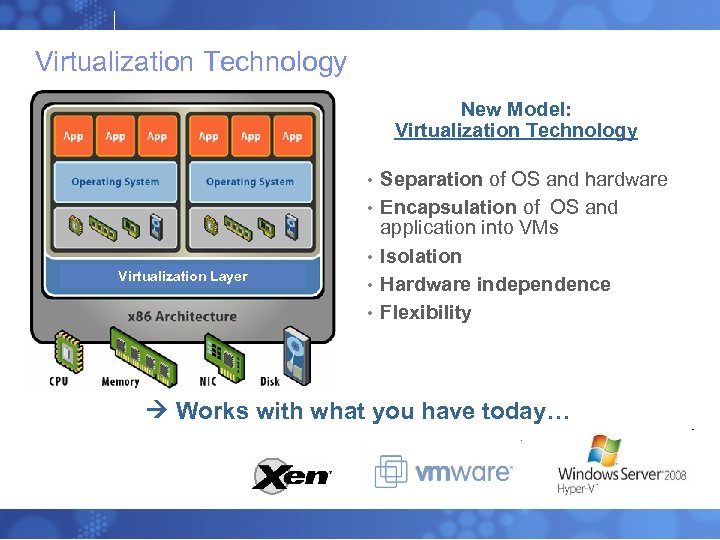

Virtualization Technology

New Model: Virtualization Technology

- Separation of OS and hardware
- Encapsulation of OS and application into VMs
- Isolation
- Hardware independence
- Flexibility
- Works with what you have today

[Diagram: a virtualization layer between the hardware and the virtual machines.]

Multi Core Methods

Market View

- Every server is a multi-core server
- The multi-core trend will continue
- Two major software trends
  - Virtualization
  - Cloud computing
- Power consumption has become a real issue

[Image caption: one-socket (quad core + HT) server.]

Multi Processing Methods

Two main methods:

- Scale Up
- Scale Out

Multi Processing Methods - Scale Up

- One large multi-core server
- Single OS
- It is not uncommon to see a server with 24 cores and 256 GB of RAM
- Common applications:
  - Databases
  - ERP
  - Virtualization
  - Mathematical applications

[Image caption: one server with 96 cores and 1 TB of RAM.]

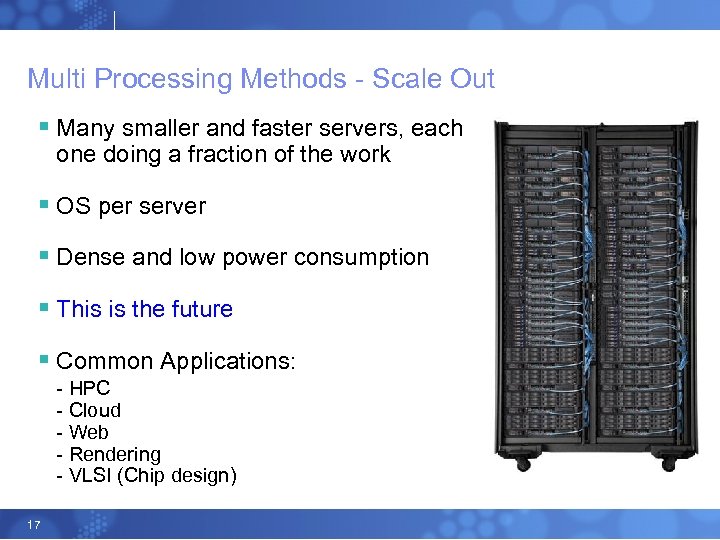

Multi Processing Methods - Scale Out

- Many smaller and faster servers, each one doing a fraction of the work
- One OS per server
- Dense, with low power consumption
- This is the future
- Common applications:
  - HPC
  - Cloud
  - Web
  - Rendering
  - VLSI (chip design)

Process vs. Thread

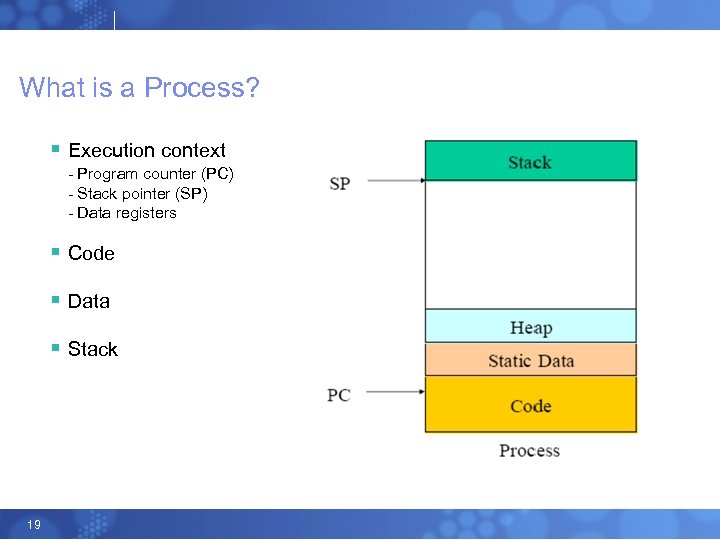

What is a Process?

- Execution context
  - Program counter (PC)
  - Stack pointer (SP)
  - Data registers
- Code
- Data
- Stack

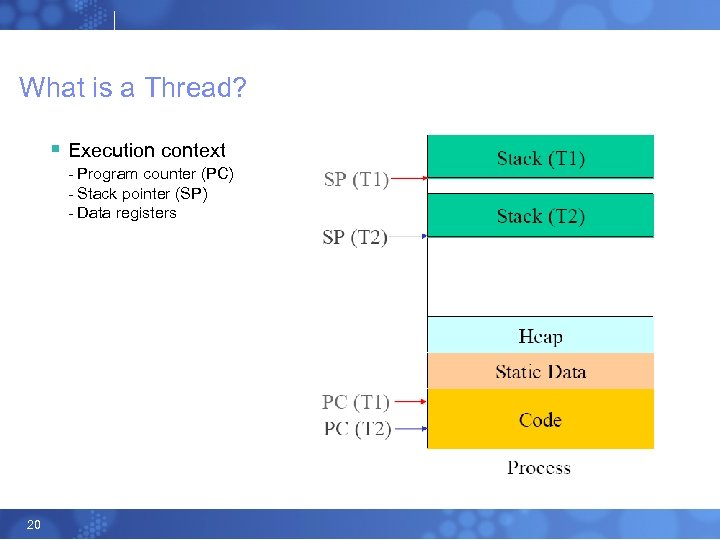

What is a Thread?

- Execution context
  - Program counter (PC)
  - Stack pointer (SP)
  - Data registers

Process vs. Thread

- Processes are typically independent; threads exist as subsets of a process. Each process has one or more threads.
- Processes carry considerable state information; multiple threads within a process share state as well as memory and other resources.
- Processes have separate address spaces; threads of the same process share their address space.
- Inter-process communication is expensive: it needs a context switch.
- Inter-thread communication is cheap: it can use process memory and may not need a context switch.
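A minimal sketch of the "threads share their address space" point, using Python's standard `threading` module. The function name `run_shared_counter` and the counter itself are illustrative assumptions, not part of the original slides. Every thread updates the same object directly, with no copying or inter-process communication, which is also why a lock is needed.

```python
import threading

def run_shared_counter(num_threads=4, increments=1000):
    """Each thread updates the same dict object; no copying or IPC is needed."""
    shared = {"count": 0}      # lives in the process's single address space
    lock = threading.Lock()    # shared state needs synchronisation

    def worker():
        for _ in range(increments):
            with lock:
                shared["count"] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["count"]

if __name__ == "__main__":
    print(run_shared_counter())
```

Separate processes would each get their own copy of `shared` and would have to exchange updates through pipes, sockets or explicitly shared memory.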

Multi Processing Architecture

Multi Processing Architecture (Scale Up Servers)

- SMP - Symmetric Multi-Processing, also known as UMA. Implemented by Intel until the new generation, Nehalem.
- NUMA - Non-Uniform Memory Access. The AMD design.

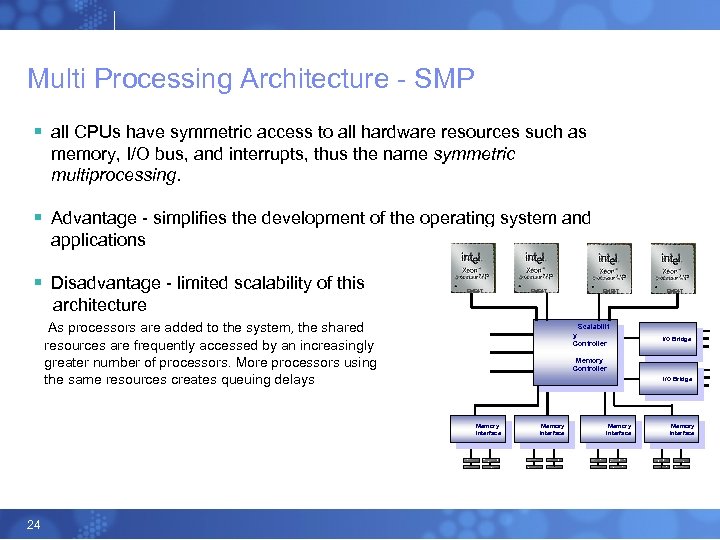

Multi Processing Architecture - SMP

- All CPUs have symmetric access to all hardware resources such as memory, I/O bus, and interrupts, thus the name symmetric multiprocessing.
- Advantage: simplifies the development of the operating system and applications.
- Disadvantage: limited scalability of this architecture. As processors are added to the system, the shared resources are frequently accessed by an increasingly greater number of processors. More processors using the same resources creates queuing delays.

[Diagram: EM64T processors connected through a scalability controller to memory controllers, memory interfaces and I/O bridges.]

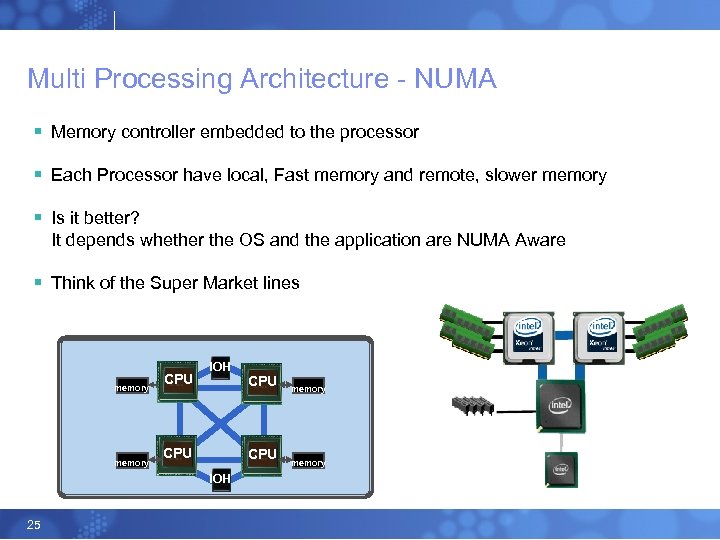

Multi Processing Architecture - NUMA

- The memory controller is embedded in the processor
- Each processor has local, fast memory and remote, slower memory
- Is it better? It depends on whether the OS and the application are NUMA aware
- Think of supermarket checkout lines

[Diagram: two CPUs, each with its own local memory, connected to each other and to an IOH.]

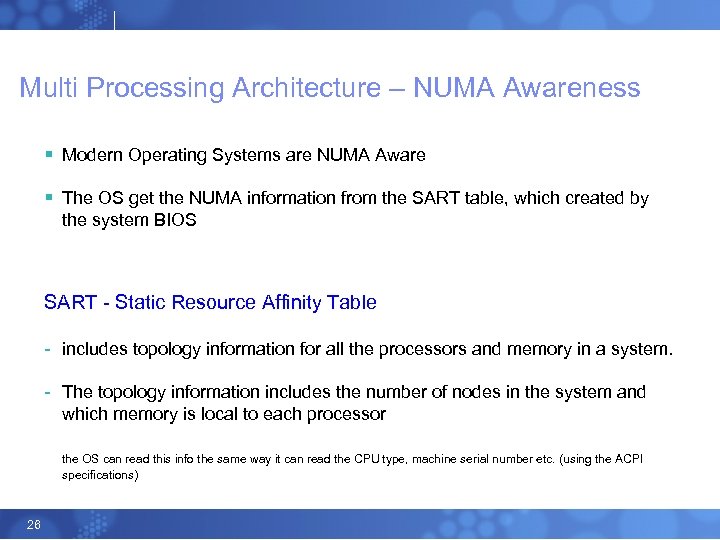

Multi Processing Architecture - NUMA Awareness

- Modern operating systems are NUMA aware
- The OS gets the NUMA information from the SRAT table, which is created by the system BIOS

SRAT - Static Resource Affinity Table

- Includes topology information for all the processors and memory in a system.
- The topology information includes the number of nodes in the system and which memory is local to each processor.
- The OS can read this information the same way it reads the CPU type, machine serial number etc. (using the ACPI specifications).

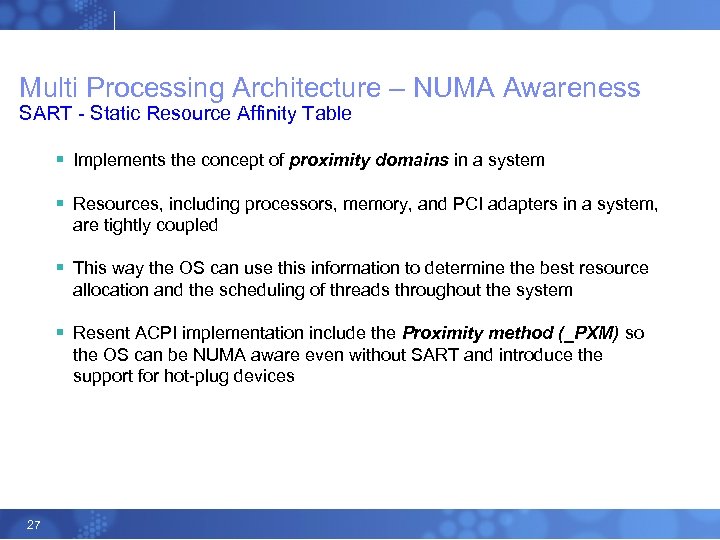

Multi Processing Architecture - NUMA Awareness

SRAT - Static Resource Affinity Table

- Implements the concept of proximity domains in a system
- Resources in a system, including processors, memory, and PCI adapters, are tightly coupled within a proximity domain
- The OS can use this information to determine the best resource allocation and the scheduling of threads throughout the system
- Recent ACPI implementations include the proximity method (_PXM), so the OS can be NUMA aware even without the SRAT; this also introduces support for hot-plug devices
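As a rough illustration of how an application can see the node topology the OS built from this firmware information, the sketch below reads the Linux sysfs tree under `/sys/devices/system/node`. This is an assumption about the platform: the slides do not mention Linux, the function names `parse_cpulist` and `read_numa_topology` are made up for this example, and on a system without that directory the result is simply empty.

```python
import glob
import os
import re

def parse_cpulist(text):
    """Expand a kernel CPU list such as '0-3,8-11' into a list of ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue                       # empty list, e.g. memory-only node
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus

def read_numa_topology(root="/sys/devices/system/node"):
    """Return {node_id: [cpu ids]}; an empty dict if the sysfs tree is absent."""
    topology = {}
    for path in glob.glob(os.path.join(root, "node[0-9]*")):
        match = re.search(r"node(\d+)$", path)
        cpulist = os.path.join(path, "cpulist")
        if match and os.path.isfile(cpulist):
            with open(cpulist) as f:
                topology[int(match.group(1))] = parse_cpulist(f.read())
    return topology

if __name__ == "__main__":
    print(read_numa_topology())
```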

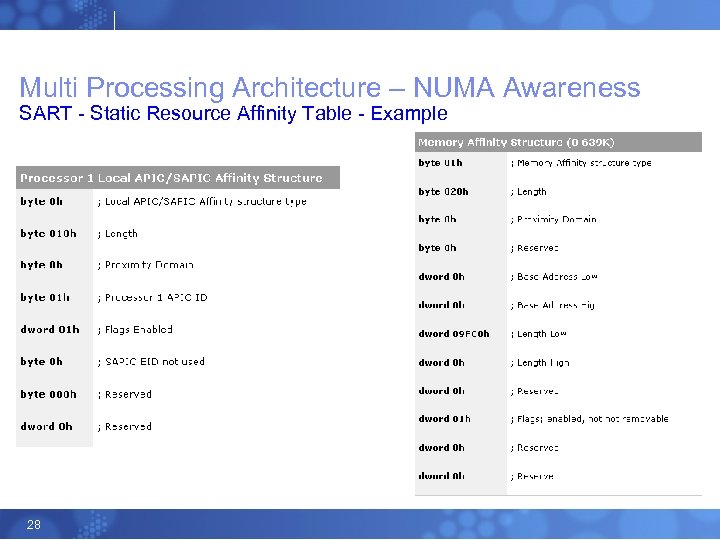

Multi Processing Architecture - NUMA Awareness

SRAT - Static Resource Affinity Table - Example

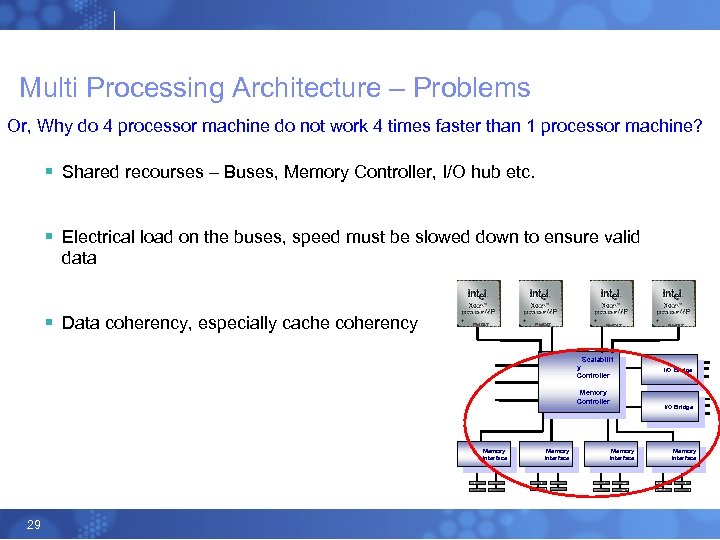

Multi Processing Architecture - Problems

Or, why does a 4-processor machine not work 4 times faster than a 1-processor machine?

- Shared resources: buses, memory controller, I/O hub etc.
- Electrical load on the buses: speed must be reduced to ensure valid data
- Data coherency, especially cache coherency

[Diagram: EM64T processors connected through a scalability controller to a memory controller, memory interfaces and an I/O bridge.]

Multi Processing Architecture - Cache Coherency Protocols - the MESI protocol

- Intel implementation
- MESI stands for Modified, Exclusive, Shared, and Invalid
- One of these four states is assigned to every data element stored in each CPU cache, using two additional bits per cache line
- Each time a processor loads data into its cache, it must broadcast to all other processors in the system so that they check their caches for the requested data
- These broadcasts are called snoop cycles, and they must occur during every memory read or write operation

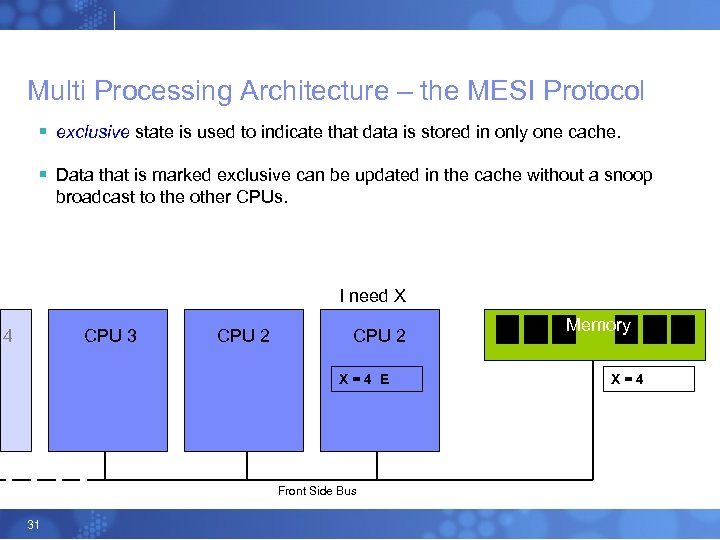

Multi Processing Architecture - the MESI Protocol

- The Exclusive state indicates that data is stored in only one cache.
- Data that is marked exclusive can be updated in the cache without a snoop broadcast to the other CPUs.

[Diagram: several CPUs and memory on a front-side bus. One CPU requests X ("I need X") and caches X=4 in state E; memory holds X=4.]

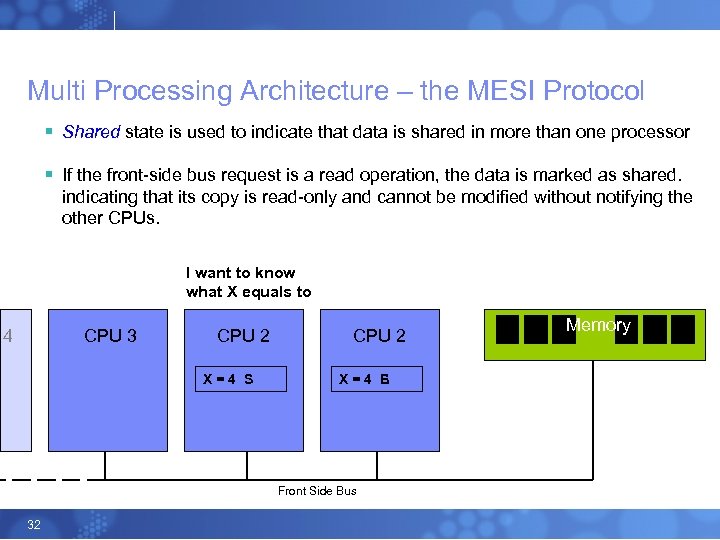

Multi Processing Architecture - the MESI Protocol

- The Shared state indicates that data is held in the cache of more than one processor.
- If the front-side bus request is a read operation, the data is marked as shared, indicating that the copy is read-only and cannot be modified without notifying the other CPUs.

[Diagram: a second CPU asks "I want to know what X equals"; both caches now hold X=4 and the first copy changes from E to S.]

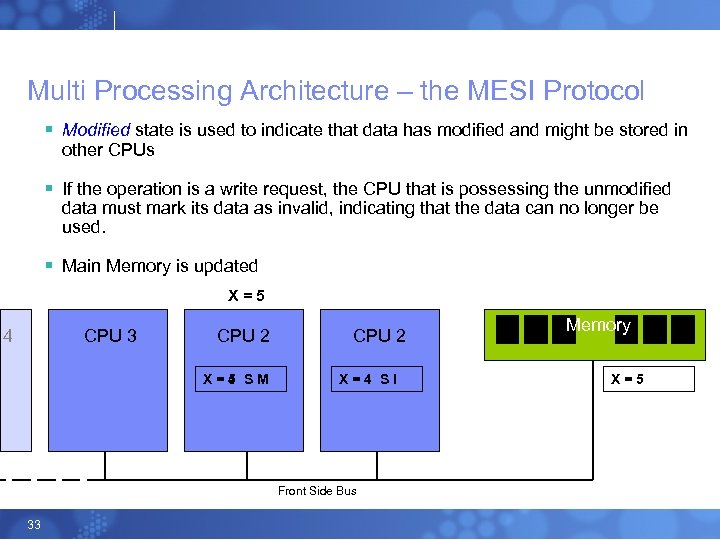

Multi Processing Architecture - the MESI Protocol

- The Modified state indicates that the data has been modified in this cache; copies that other CPUs held are no longer valid.
- If the operation is a write request, the CPU that possesses the unmodified data must mark its data as invalid, indicating that the data can no longer be used.
- Main memory is updated.

[Diagram: one CPU writes X=5; its line changes from S to M, the other copy of X=4 changes from S to I, and memory shows X=5.]

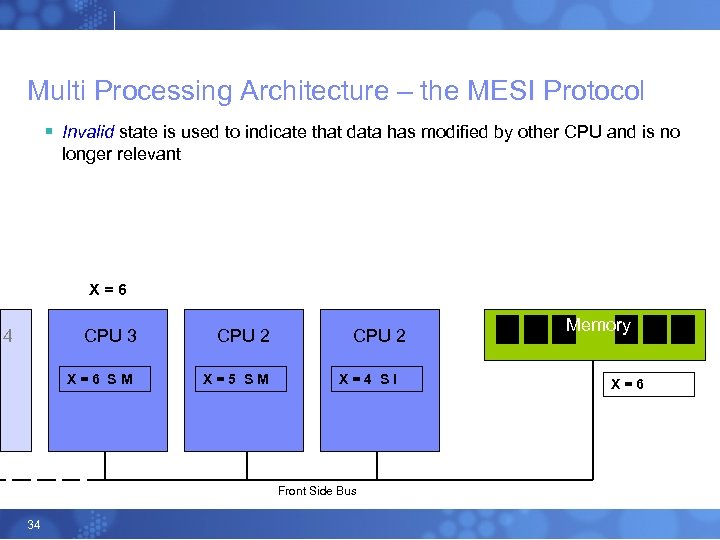

Multi Processing Architecture - the MESI Protocol

- The Invalid state indicates that the data has been modified by another CPU and is no longer relevant.

[Diagram: another CPU writes X=6 and its line becomes M; the copy holding X=5 changes from M to I; memory shows X=6.]
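The state walk-through in the MESI slides can be replayed with a toy model. The sketch below is a simplification under stated assumptions: it tracks a single variable, the class name `MesiBus` is made up for this example, and it assumes a write-back cache, so memory is only updated when a modified line is flushed (the slide diagrams show memory updated at once).

```python
M, E, S, I = "M", "E", "S", "I"

class MesiBus:
    """Toy model: n caches plus main memory, tracking one variable."""

    def __init__(self, n_cpus, initial):
        self.memory = initial
        self.state = [I] * n_cpus
        self.value = [None] * n_cpus

    def _write_back(self, cpu):
        if self.state[cpu] == M:               # only a dirty line is flushed
            self.memory = self.value[cpu]

    def read(self, cpu):
        if self.state[cpu] == I:               # miss: snoop the other caches
            holders = [c for c in range(len(self.state)) if self.state[c] != I]
            for c in holders:
                self._write_back(c)
                self.state[c] = S              # every holder drops to Shared
            self.value[cpu] = self.memory
            self.state[cpu] = S if holders else E
        return self.value[cpu]

    def write(self, cpu, new_value):
        if self.state[cpu] in (S, I):          # others must be told
            for c in range(len(self.state)):
                if c != cpu and self.state[c] != I:
                    self._write_back(c)
                    self.state[c] = I          # their copy is now invalid
                    self.value[c] = None
        # In E or M the line is already private, so no broadcast is needed.
        self.value[cpu] = new_value
        self.state[cpu] = M

if __name__ == "__main__":
    bus = MesiBus(3, 4)
    bus.read(0); print(bus.state)      # first reader gets the line exclusively
    bus.read(1); print(bus.state)      # second reader: both copies shared
    bus.write(0, 5); print(bus.state)  # writer goes modified, other invalid
```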

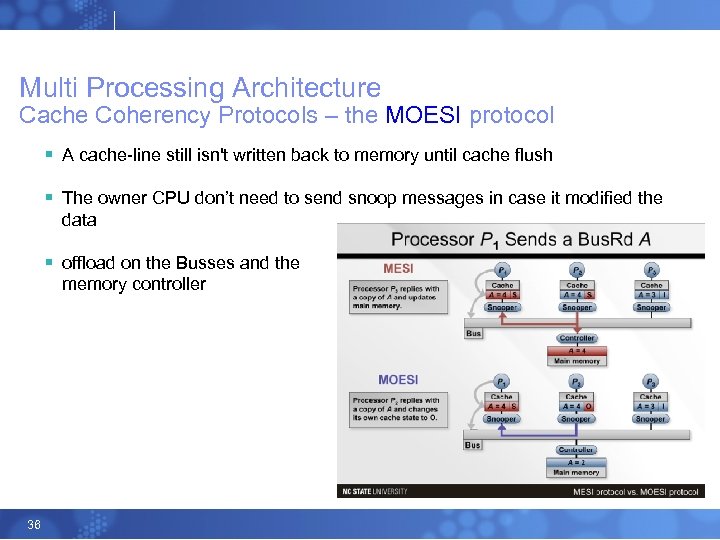

Multi Processing Architecture - Cache Coherency Protocols - the MOESI protocol

- AMD implementation; better for NUMA
- The MOESI protocol expands the MESI protocol with one more cache line status flag, the Owner status (thus the O in MOESI).
- After a cache line is updated, it is not written back to system memory but is flagged as owner.
- When another CPU issues a read, it gets its data from the owner's cache rather than from slower memory.

Multi Processing Architecture - Cache Coherency Protocols - the MOESI protocol

- A cache line still isn't written back to memory until a cache flush
- The owner CPU doesn't need to send snoop messages when it has modified the data
- This offloads the buses and the memory controller

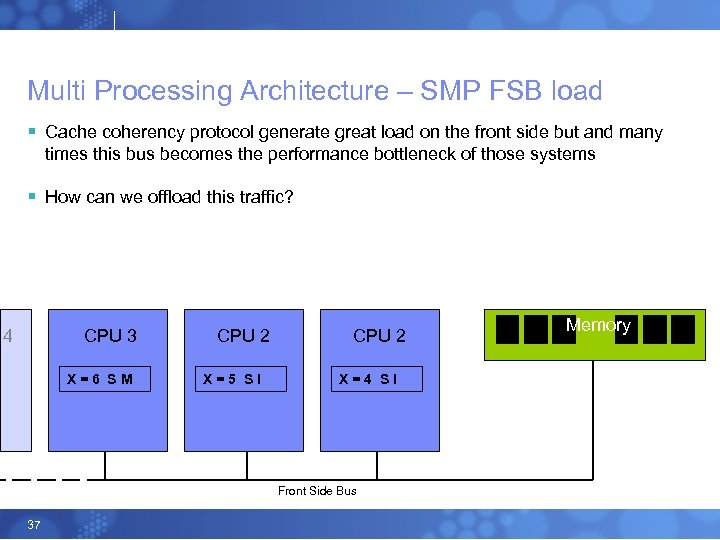

Multi Processing Architecture - SMP FSB load

- Cache coherency protocols generate a great load on the front-side bus, and this bus often becomes the performance bottleneck of those systems
- How can we offload this traffic?

[Diagram: CPUs holding X=6 (M), X=5 (I) and X=4 (I) on a front-side bus shared with memory.]

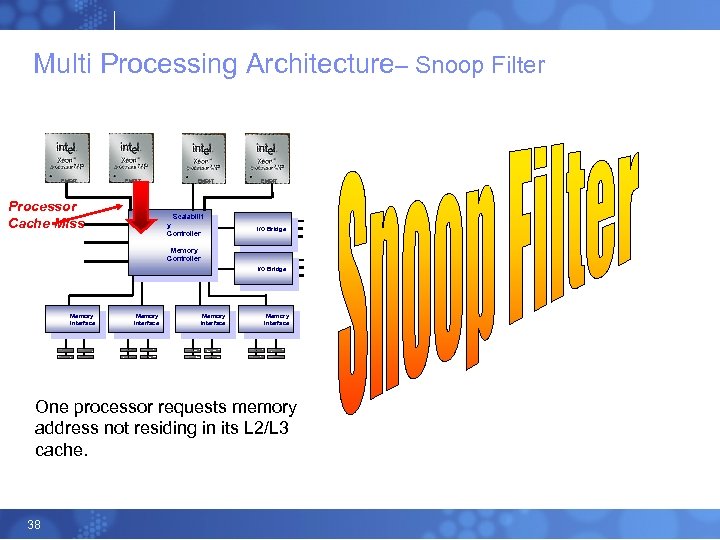

Multi Processing Architecture - Snoop Filter

One processor requests a memory address not residing in its L2/L3 cache.

[Diagram: an EM64T processor takes a cache miss; the request goes to the scalability controller, which connects to the memory controllers, memory interfaces and I/O bridges.]

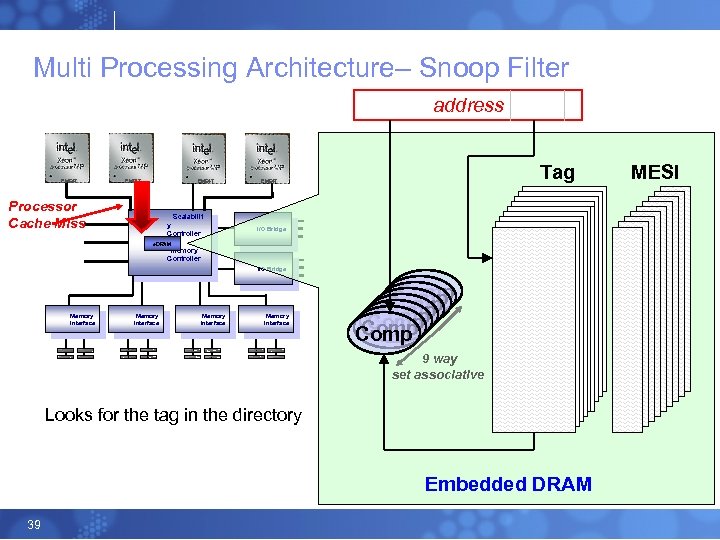

Multi Processing Architecture - Snoop Filter

The scalability controller looks for the tag in the directory.

[Diagram: the address tag of the missing line is compared against a 9-way set associative directory of tags and MESI states held in embedded DRAM (eDRAM) in the scalability controller.]

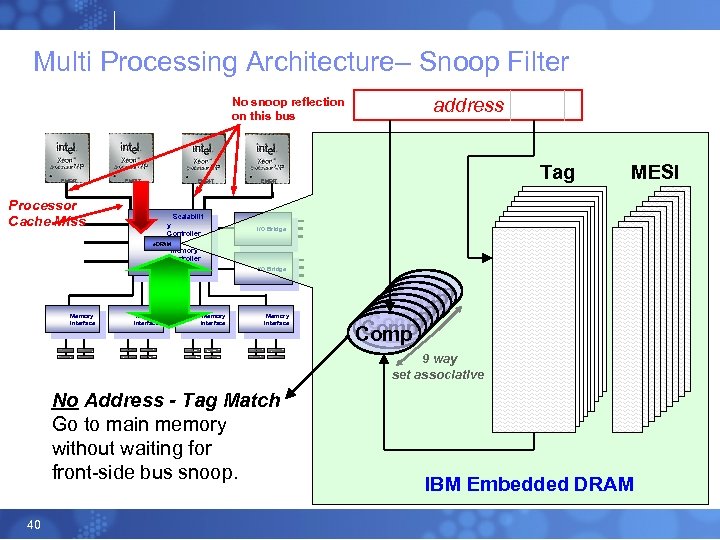

Multi Processing Architecture - Snoop Filter

No address-tag match: go to main memory without waiting for a front-side bus snoop.

[Diagram: same layout as the previous slide, with IBM embedded DRAM; no snoop is reflected onto the other front-side bus.]
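The lookup these slides describe can be sketched as a small directory keyed by address tag. This is only an illustration: the class `SnoopFilter` and its method names are invented here, and the real structure (9-way set associative, with MESI state per entry and evictions) is reduced to an unbounded dictionary.

```python
class SnoopFilter:
    """Toy directory: which front-side buses may hold a given cache-line tag."""

    def __init__(self):
        self.directory = {}   # tag -> set of bus ids that may cache the line

    def record_fill(self, tag, bus_id):
        self.directory.setdefault(tag, set()).add(bus_id)

    def on_miss(self, tag, requesting_bus):
        """Decide where a cache miss is served from."""
        others = self.directory.get(tag, set()) - {requesting_bus}
        if others:
            action = ("snoop", sorted(others))   # only these buses are snooped
        else:
            action = ("memory", [])              # no tag match: skip the snoop
        self.record_fill(tag, requesting_bus)
        return action

if __name__ == "__main__":
    sf = SnoopFilter()
    print(sf.on_miss(0x40, 0))   # nobody has the line: go straight to memory
    print(sf.on_miss(0x40, 1))   # bus 0 may have it: snoop bus 0 only
```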

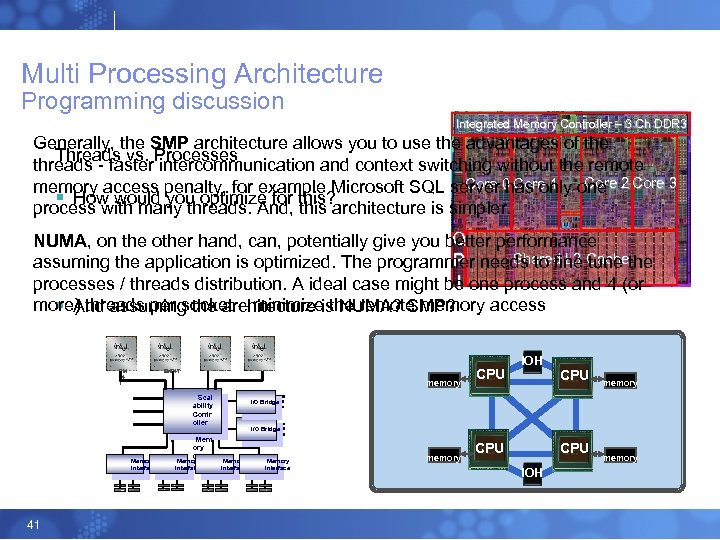

Multi Processing Architecture - Programming discussion

- Threads vs. Processes
- How would you optimize for this?
- And assuming the architecture is NUMA? SMP?

Generally, the SMP architecture lets you use the advantages of threads (faster intercommunication and context switching) without the remote memory access penalty. For example, Microsoft SQL Server has only one process with many threads. This architecture is also simpler.

NUMA, on the other hand, can potentially give you better performance, assuming the application is optimized. The programmer needs to fine-tune the distribution of processes and threads. An ideal case might be one process and 4 (or more) threads per socket, to minimize remote memory access.

[Diagrams: a quad-core processor with an integrated 3-channel DDR3 memory controller, QPI and a shared L3 cache; an SMP system with EM64T processors, scalability controller, memory controller, memory interfaces and I/O bridge; a NUMA system with two CPUs, each with local memory, and an IOH.]
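The "one process and 4 (or more) threads per socket" idea can be written down as a small planning step. The sketch assumes the node topology is already known as a `{node: [cpu ids]}` mapping; the function name `plan_workers` and the dictionary layout are hypothetical.

```python
def plan_workers(topology, threads_per_node=4):
    """Plan one process per NUMA node, with its threads kept on that node's CPUs."""
    plan = []
    for node, cpus in sorted(topology.items()):
        if not cpus:
            continue                       # memory-only node: nothing to run on
        # Spread the threads round-robin over the node's own CPUs.
        thread_cpus = [cpus[i % len(cpus)] for i in range(threads_per_node)]
        plan.append({"node": node,
                     "allowed_cpus": cpus,
                     "thread_cpus": thread_cpus})
    return plan

if __name__ == "__main__":
    for entry in plan_workers({0: [0, 1, 2, 3], 1: [4, 5, 6, 7]}):
        print(entry)
```

On Linux, each process could then restrict itself to its `allowed_cpus` with `os.sched_setaffinity(0, cpus)`, so that its threads and the memory they touch stay on one node; other operating systems expose different affinity calls.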

Hyper-Threading Technology

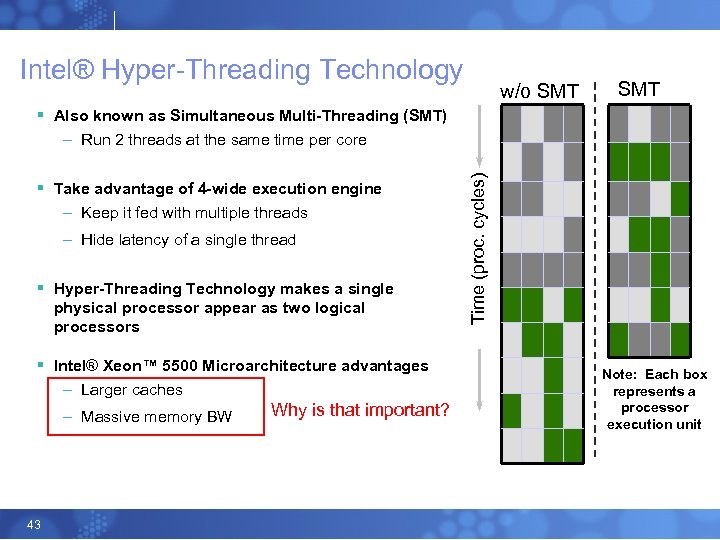

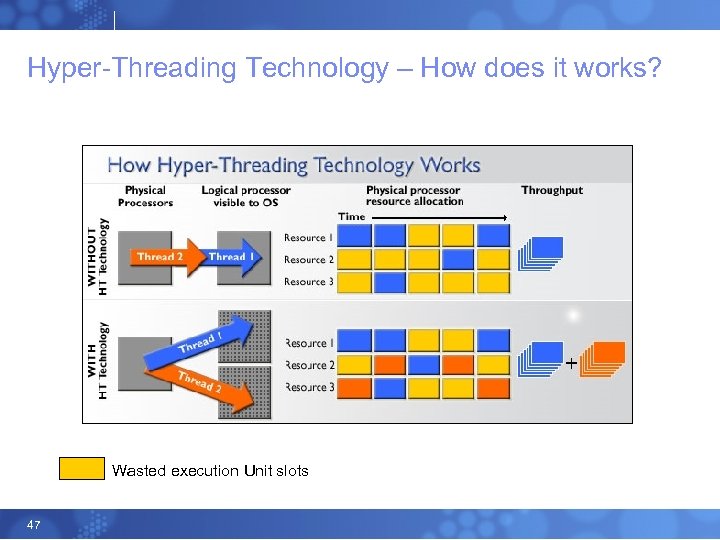

Intel® Hyper-Threading Technology

- Also known as Simultaneous Multi-Threading (SMT)
  - Run 2 threads at the same time per core
- Takes advantage of the 4-wide execution engine
  - Keep it fed with multiple threads
  - Hide the latency of a single thread
- Hyper-Threading Technology makes a single physical processor appear as two logical processors
- Intel® Xeon™ 5500 microarchitecture advantages
  - Larger caches
  - Massive memory bandwidth
- Why is that important?

[Figure: execution unit usage over time (processor cycles) without SMT. Note: each box represents a processor execution unit.]

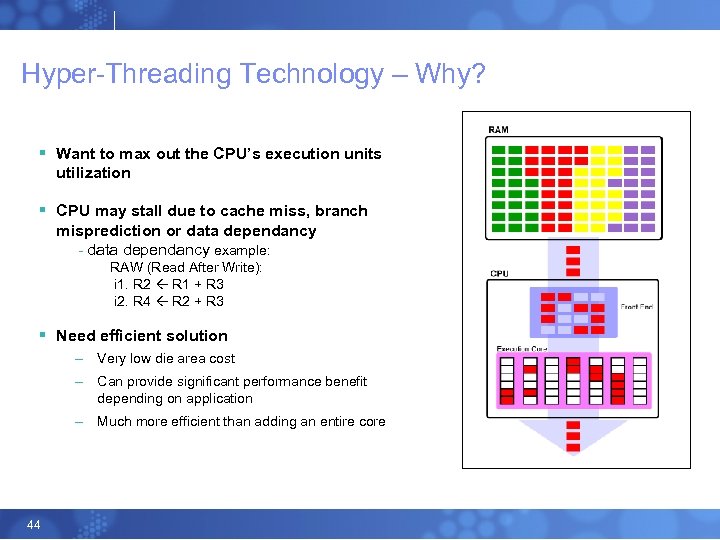

Hyper-Threading Technology - Why?

- We want to max out the utilization of the CPU's execution units
- The CPU may stall due to a cache miss, a branch misprediction or a data dependency
  - Data dependency example, RAW (Read After Write):
    - i1. R2 ← R1 + R3
    - i2. R4 ← R2 + R3
- An efficient solution is needed
  - Very low die area cost
  - Can provide a significant performance benefit, depending on the application
  - Much more efficient than adding an entire core
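The RAW example above can be checked mechanically. The following sketch is illustrative only: the `Instr` tuple and the function `raw_hazards` are made-up names, and instructions are reduced to a destination register plus source registers.

```python
from collections import namedtuple

# An instruction is modelled only by the register it writes and those it reads.
Instr = namedtuple("Instr", "dest srcs")

def raw_hazards(program):
    """Return (producer_index, consumer_index, register) for each RAW dependency."""
    hazards = []
    last_writer = {}                       # register -> index of latest writer
    for i, ins in enumerate(program):
        for reg in ins.srcs:
            if reg in last_writer:
                hazards.append((last_writer[reg], i, reg))
        last_writer[ins.dest] = i
    return hazards

if __name__ == "__main__":
    program = [
        Instr("R2", ("R1", "R3")),   # i1. R2 <- R1 + R3
        Instr("R4", ("R2", "R3")),   # i2. R4 <- R2 + R3
    ]
    print(raw_hazards(program))      # i2 reads R2, which i1 writes
```

While i2 waits for i1's result, its execution slots sit idle; those are the slots a second hardware thread can use.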

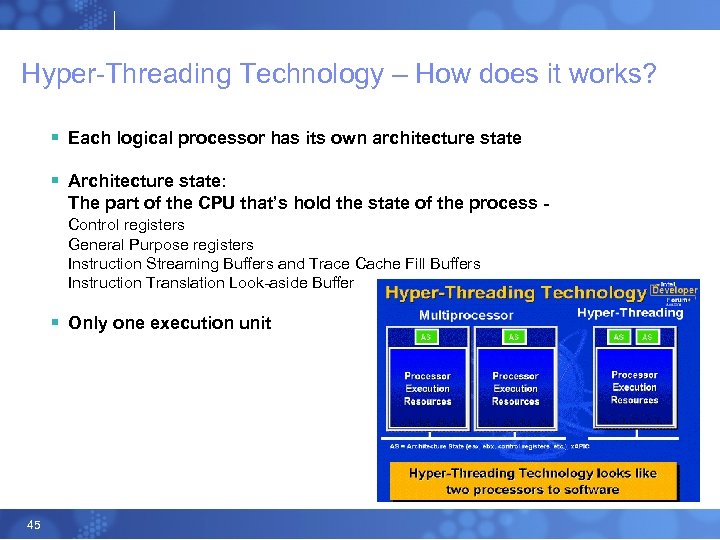

Hyper-Threading Technology - How does it work?

- Each logical processor has its own architecture state
- Architecture state: the part of the CPU that holds the state of the process
  - Control registers
  - General purpose registers
  - Instruction streaming buffers and trace cache fill buffers
  - Instruction Translation Look-aside Buffer
- Only one set of execution units, shared by both logical processors

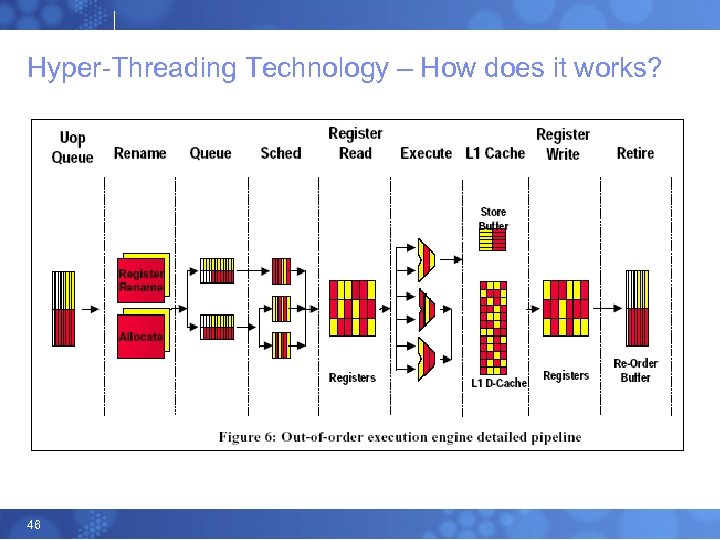

Hyper-Threading Technology - How does it work?

Hyper-Threading Technology - How does it work?

[Figure label: wasted execution unit slots.]

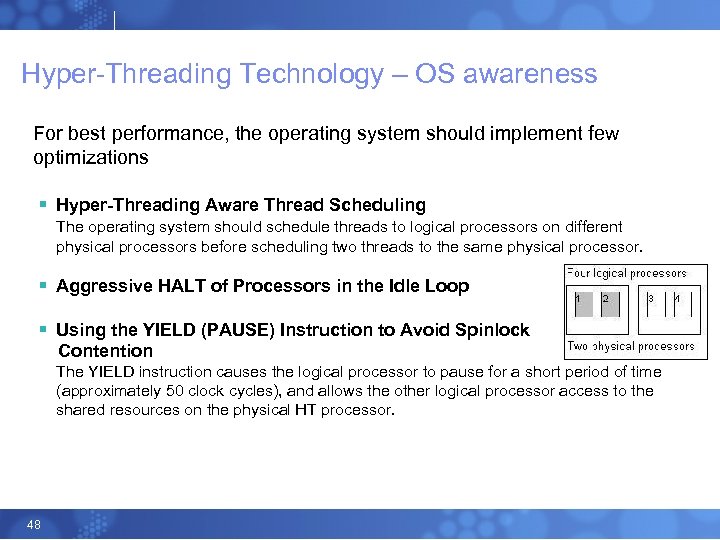

Hyper-Threading Technology - OS awareness

For best performance, the operating system should implement a few optimizations:

- Hyper-Threading-aware thread scheduling. The operating system should schedule threads to logical processors on different physical processors before scheduling two threads to the same physical processor.
- Aggressive HALT of processors in the idle loop.
- Using the YIELD (PAUSE) instruction to avoid spinlock contention. The YIELD instruction causes the logical processor to pause for a short period of time (approximately 50 clock cycles) and allows the other logical processor access to the shared resources on the physical HT processor.

Hyper-Threading Technology - Application awareness

- Operating systems expose a Hyper-Threading API, for example GetLogicalProcessorInformation on Windows
- To get the most benefit on HT-enabled systems, the application should ensure that the threads executing on the two logical processors have minimal dependencies on the same shared resources on the same physical processor.
- Bad HT thread affinity example: threads that perform similar actions and stall for the same reasons should not be scheduled on the same physical processor. Too Much Milk? The benefit of HT is that shared processor resources can be used by one logical processor while the other logical processor is stalled. This does not work when both logical processors are stalled for the same reason.

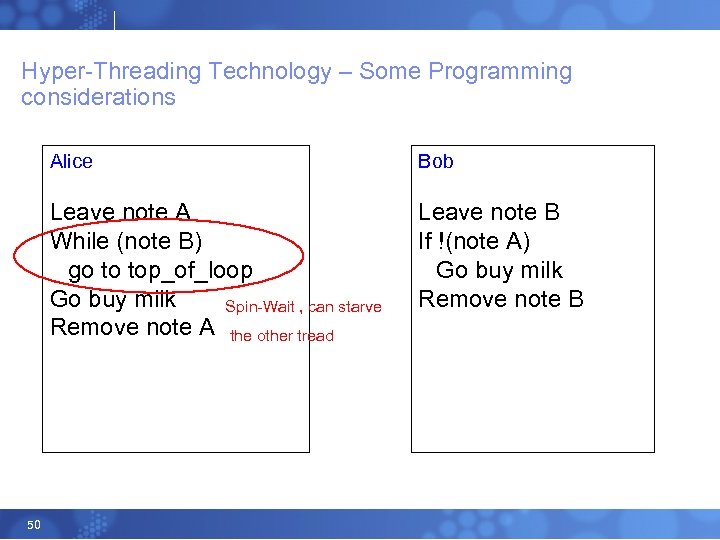

Hyper-Threading Technology - Some Programming considerations

Alice:

    Leave note A
    While (note B)
        go to top_of_loop
    Go buy milk
    Remove note A

The loop is a spin-wait and can starve the other thread.

Bob:

    Leave note B
    If !(note A)
        Go buy milk
    Remove note B

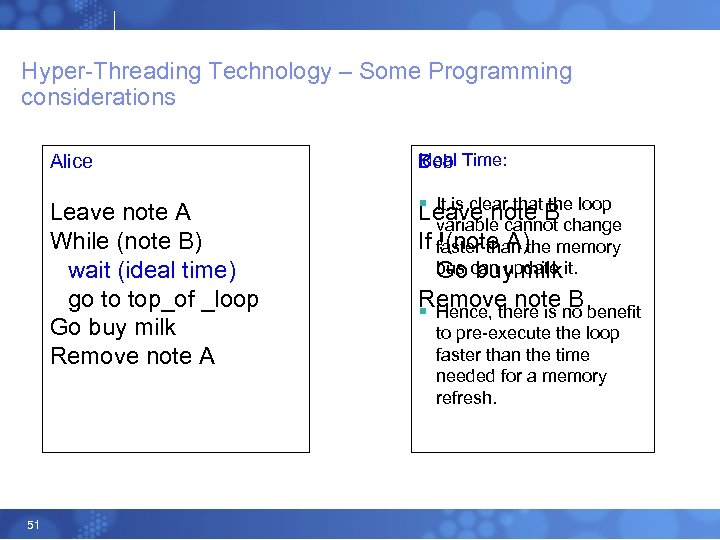

Hyper-Threading Technology - Some Programming considerations

Alice:

    Leave note A
    While (note B)
        wait (ideal time)
        go to top_of_loop
    Go buy milk
    Remove note A

Bob:

    Leave note B
    If !(note A)
        Go buy milk
    Remove note B

Ideal time:

- It is clear that the loop variable cannot change faster than the memory bus can update it.
- Hence, there is no benefit in executing the loop faster than the time needed for a memory refresh.

Hyper-Threading Technology - Hardware assists

Two new (2004) instructions are aimed directly at the spin-wait issue:

- MONITOR watches a specified area of memory for write activity
- Its companion instruction, MWAIT, ties writes to this memory block to waking up a specific processor
- Since updating a variable is a write activity, a processor can specify the variable's memory address, use MWAIT, and simply be suspended until the variable is updated. Effectively, this enables a wait on a variable without spinning in a loop.
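MONITOR and MWAIT are privileged hardware instructions, so they cannot be shown directly in application code. As a loose software analogy only, the sketch below uses Python's `threading.Event` to wait for an update without spinning: the waiter sleeps until the writer signals. The function name `wait_for_update` and the log messages are made up for this example.

```python
import threading

def wait_for_update():
    """Alice sleeps until Bob signals, instead of polling a variable in a loop."""
    note_removed = threading.Event()   # stands in for the monitored variable
    log = []

    def alice():
        note_removed.wait()            # blocks; no busy loop burns the core
        log.append("alice buys milk")

    def bob():
        log.append("bob removes note")
        note_removed.set()             # the "write" that wakes the waiter

    a = threading.Thread(target=alice)
    b = threading.Thread(target=bob)
    a.start()
    b.start()
    a.join()
    b.join()
    return log

if __name__ == "__main__":
    print(wait_for_update())
```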

High-Level Atomic Operation in Hardware

Atomic Operation in Hardware - LOCK Instruction

- The x86 architecture implements the LOCK prefix
- It causes the processor's LOCK# signal to be asserted during the execution of the accompanying instruction (turning it into an atomic instruction)
- The LOCK signal ensures that the processor has exclusive use of any shared memory while the signal is asserted

Atomic instructions (on Intel at least):

- Increment (lock inc r/m)
- Decrement (lock dec r/m)
- Exchange (xchg r/m, r); always executed with the LOCK prefix
- Fetch and add (lock xadd r/m, r)

Atomic Operation in Hardware - Read-Modify-Write

- Read-modify-write is a class of high-level atomic operations which both read a memory location and write a new value into it simultaneously
- Typically they are used to implement mutexes or semaphores
- Examples:
  - Compare and Swap
  - Fetch and Add
  - Test and Set
  - Load Link / Store Conditional

Atomic Operation in Hardware - Compare and Swap / Exchange

CMPXCHG r/m, r

    IF accumulator == DEST
    THEN
        ZF = 1
        DEST = SRC
    ELSE
        ZF = 0
        accumulator = DEST
    FI;

- ZF indicates whether the swap has occurred
- The destination operand receives a write cycle without regard to the result of the comparison
- Needs the LOCK prefix to ensure atomicity
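The CMPXCHG pseudocode can be restated as a pure function to make the two outcomes explicit. This is a model of the semantics only, not of atomicity; the function name `cmpxchg` and the tuple it returns are choices made for this sketch.

```python
def cmpxchg(accumulator, dest, src):
    """Return (zf, accumulator, dest) after one CMPXCHG, following the pseudocode."""
    if accumulator == dest:
        return 1, accumulator, src   # swap happened: DEST = SRC
    return 0, dest, dest             # no swap: accumulator = DEST

if __name__ == "__main__":
    print(cmpxchg(4, 4, 9))   # expected value matched, destination replaced
    print(cmpxchg(3, 4, 9))   # mismatch, accumulator reloaded from destination
```

On a mismatch the accumulator comes back holding the current value, which is what lets a retry loop try again immediately with fresh data.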

Atomic Operation in Hardware - Compare and Swap / Exchange

OS system calls and programming languages apply the LOCK prefix automatically:

    #include <sys/atomic_op.h>

    boolean_t compare_and_swap (word_addr, old_val_addr, new_val)
    atomic_p word_addr;
    int *old_val_addr;
    int new_val;

Also available in Java:

    AtomicInteger.compareAndSet(int, int) -> boolean

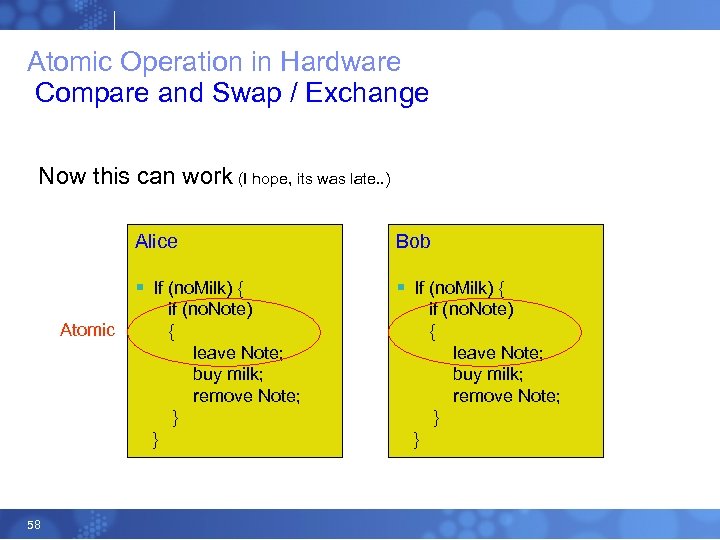

Atomic Operation in Hardware - Compare and Swap / Exchange

Now this can work (I hope, it was late).

Alice and Bob both run the same code; the slide labels part of this sequence "Atomic":

    If (noMilk) {
        if (noNote) {
            leave Note;
            buy milk;
            remove Note;
        }
    }
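A runnable sketch of the note protocol, assuming the atomic part is "check for a note and leave one", done with a single compare-and-set. Several things here are assumptions not in the slide: the `AtomicFlag` class emulates CAS with a lock (Python has no direct hardware CAS), the names `buy_milk_once` and `fridge` are illustrative, and the milk is checked again after the note is taken, because without that second check two people can still each buy milk one after the other.

```python
import threading

class AtomicFlag:
    """CAS cell emulated with a lock; hardware would use LOCK CMPXCHG."""

    def __init__(self, value=False):
        self._value = value
        self._lock = threading.Lock()

    def compare_and_set(self, expected, new):
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False

def buy_milk_once(num_people=2):
    """Run the note protocol with several threads; return cartons bought."""
    note = AtomicFlag(False)
    fridge = {"milk": 0}

    def person():
        if fridge["milk"] == 0:                       # no milk?
            if note.compare_and_set(False, True):     # atomically: no note -> leave note
                try:
                    if fridge["milk"] == 0:           # re-check (added assumption)
                        fridge["milk"] += 1           # buy milk
                finally:
                    note.compare_and_set(True, False) # remove note

    people = [threading.Thread(target=person) for _ in range(num_people)]
    for p in people:
        p.start()
    for p in people:
        p.join()
    return fridge["milk"]

if __name__ == "__main__":
    print(buy_milk_once())
```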

Atomic Operation in Hardware - Load-Link/Store-Conditional

- RISC / MIPS implementation
- The LL and SC instructions are primitive instructions used to perform a read-modify-write operation to storage
- Using the LL and SC instructions ensures that no other processor or mechanism has modified the target memory location between the time the LL instruction is executed and the time the SC instruction completes
- The LL instruction, in addition to doing a simple load, has the side effect of setting a user-transparent bit called the load link bit (LLbit), similar to the cache coherency protocols
- The LLbit forms a breakable link between the LL instruction and a subsequent SC instruction

Atomic Operation in Hardware - Load-Link/Store-Conditional

- The SC performs a simple store if and only if the LLbit is set when the store is executed. If the LLbit is not set, the store fails to execute
- The LLbit is reset on any event that has even the potential to modify the lock variable while the code between LL and SC is being executed
- The most obvious case where the link is broken is an invalidate of the cache line that was the subject of the load

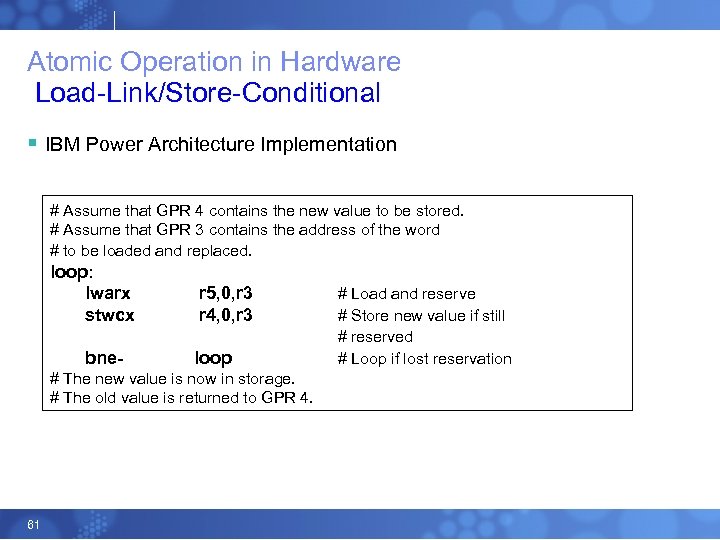

Atomic Operation in Hardware - Load-Link/Store-Conditional

IBM Power Architecture implementation:

    # Assume that GPR 4 contains the new value to be stored.
    # Assume that GPR 3 contains the address of the word
    # to be loaded and replaced.
    loop:   lwarx   r5,0,r3     # Load and reserve
            stwcx.  r4,0,r3     # Store new value if still reserved
            bne-    loop        # Loop if lost reservation
    # The new value is now in storage.
    # The old value is in GPR 5.
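The load-and-reserve / store-conditional loop can be modelled in a few lines. This is a single-threaded toy, not a concurrent implementation: the class `LLSCCell` is invented for this sketch, the reservation (LLbit) is tracked as a set of CPU ids, and any store to the cell is assumed to break every outstanding reservation.

```python
class LLSCCell:
    """Toy memory word with per-CPU reservations (the LLbit)."""

    def __init__(self, value):
        self.value = value
        self._links = set()      # CPUs that currently hold an unbroken link

    def load_linked(self, cpu):
        self._links.add(cpu)     # LL: load and set the link
        return self.value

    def store(self, value):
        """Ordinary store from anywhere: breaks every outstanding link."""
        self.value = value
        self._links.clear()

    def store_conditional(self, cpu, value):
        if cpu not in self._links:
            return False         # link was broken: the store does not happen
        self.store(value)        # succeeds, and breaks the other links too
        return True

def fetch_and_store(cell, cpu, new_value):
    """Same shape as the lwarx / stwcx. loop: retry until the store succeeds."""
    while True:
        old = cell.load_linked(cpu)
        if cell.store_conditional(cpu, new_value):
            return old

if __name__ == "__main__":
    cell = LLSCCell(1)
    print(fetch_and_store(cell, cpu=0, new_value=2), cell.value)
```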

Atomic Operation in Hardware - Load-Link/Store-Conditional - Usage

The ABA problem:

- Suppose that the value of V is A.
- A thread tries a CAS to change A to X.
- Before the CAS executes, another thread can change A to B and back to A.
- The Compare-And-Swap won't see it and will succeed.

The obvious case is working with lists, or any other data structure that uses pointers.

Note: CAS does have a solution for this, pairing the value with a counter of changes.
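The ABA sequence and the counter-based fix can be replayed step by step. The sketch is sequential on purpose, so the interleaving is explicit; it does not demonstrate atomicity. The class `Cell` and its methods `cas` and `versioned_cas` are hypothetical names for this example.

```python
class Cell:
    """A value paired with a change counter that is bumped on every write."""

    def __init__(self, value):
        self.value = value
        self.version = 0

    def write(self, value):
        self.value = value
        self.version += 1

    def cas(self, expected, new):
        """Plain compare-and-swap: looks only at the value."""
        if self.value == expected:
            self.write(new)
            return True
        return False

    def versioned_cas(self, expected, expected_version, new):
        """CAS on (value, counter): fails if anything was written in between."""
        if self.value == expected and self.version == expected_version:
            self.write(new)
            return True
        return False

if __name__ == "__main__":
    v = Cell("A")
    seen_value, seen_version = v.value, v.version   # thread 1 reads A
    v.write("B")                                    # thread 2: A -> B
    v.write("A")                                    # thread 2: B -> A
    print(v.versioned_cas(seen_value, seen_version, "X"))   # change detected
    print(v.cas(seen_value, "X"))                           # plain CAS is fooled
```

A store-conditional would also fail in this sequence, because the intermediate writes break the link.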

Thank You
