- Number of slides: 116

Motion estimation

Introduction to Computer Vision, CS 223B, Winter 2005. Richard Szeliski. 2/1/2005.

Why Visual Motion?

Visual motion can be annoying
- Camera instabilities, jitter
- Measure it. Remove it.

Visual motion indicates dynamics in the scene
- Moving objects, behavior
- Track objects and analyze trajectories

Visual motion reveals spatial layout of the scene
- Motion parallax

Today’s lecture

Motion estimation
- background: image pyramids, image warping
- application: image morphing
- parametric motion (review)
- optic flow
- layered motion models

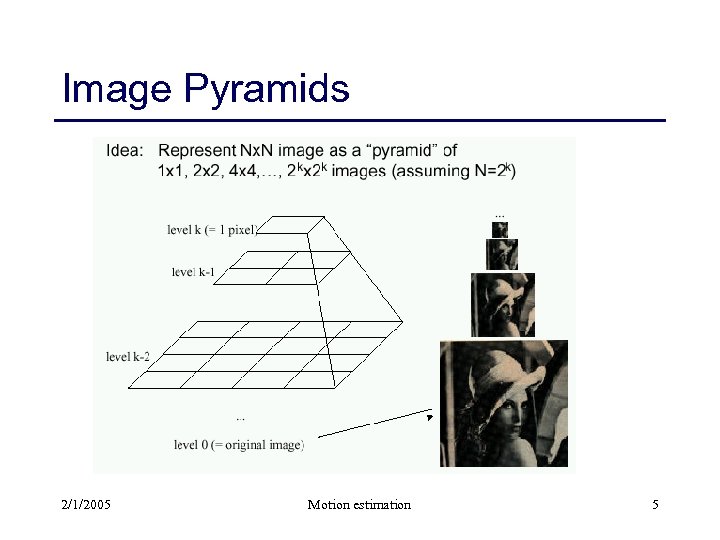

Image Pyramids

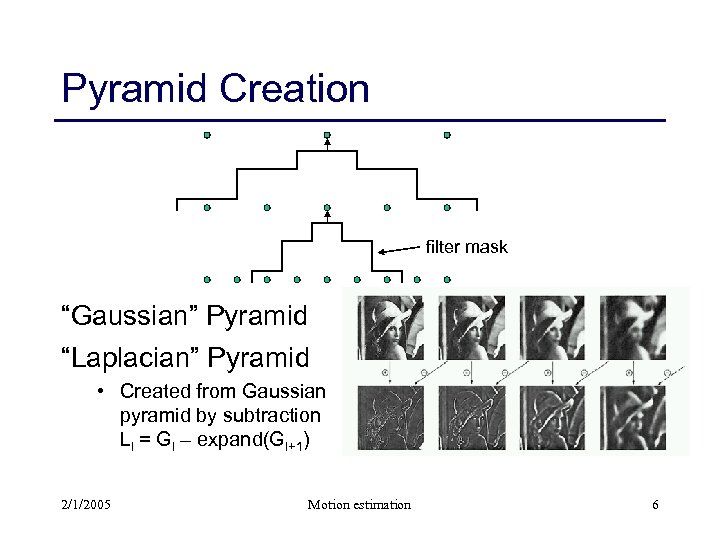

Pyramid Creation

Figure label: filter mask

“Gaussian” Pyramid

“Laplacian” Pyramid
- Created from the Gaussian pyramid by subtraction: $L_l = G_l - \mathrm{expand}(G_{l+1})$
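The subtraction rule above can be sketched in a few lines of NumPy. This is a minimal illustration, not the lecture's implementation: it assumes a 5-tap binomial mask (1, 4, 6, 4, 1)/16 as the filter, reflected borders, and a zero-insertion `expand`; the function names are made up for the example.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0  # assumed 5-tap binomial mask

def blur(img):
    """Separable 5-tap blur with reflected borders."""
    padded = np.pad(img, 2, mode="reflect")
    rows = sum(w * padded[:, i:i + img.shape[1]] for i, w in enumerate(KERNEL))
    return sum(w * rows[i:i + img.shape[0], :] for i, w in enumerate(KERNEL))

def reduce_level(img):
    """Blur, then keep every second sample in each direction."""
    return blur(img)[::2, ::2]

def expand(img, shape):
    """Insert zeros between samples, then blur (gain 4 restores brightness)."""
    up = np.zeros(shape)
    up[::2, ::2] = img
    return 4.0 * blur(up)

def laplacian_pyramid(img, levels):
    """Return [L_0, ..., L_{levels-1}, G_levels] with L_l = G_l - expand(G_{l+1})."""
    gauss = [img.astype(float)]
    for _ in range(levels):
        gauss.append(reduce_level(gauss[-1]))
    bands = [g - expand(g_next, g.shape) for g, g_next in zip(gauss[:-1], gauss[1:])]
    return bands + [gauss[-1]]

def reconstruct(pyramid):
    """Invert the pyramid: G_l = L_l + expand(G_{l+1})."""
    img = pyramid[-1]
    for band in reversed(pyramid[:-1]):
        img = band + expand(img, band.shape)
    return img
```

Because each band stores exactly what `expand` fails to predict, `reconstruct` recovers the input up to floating-point rounding, whatever filter is chosen.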

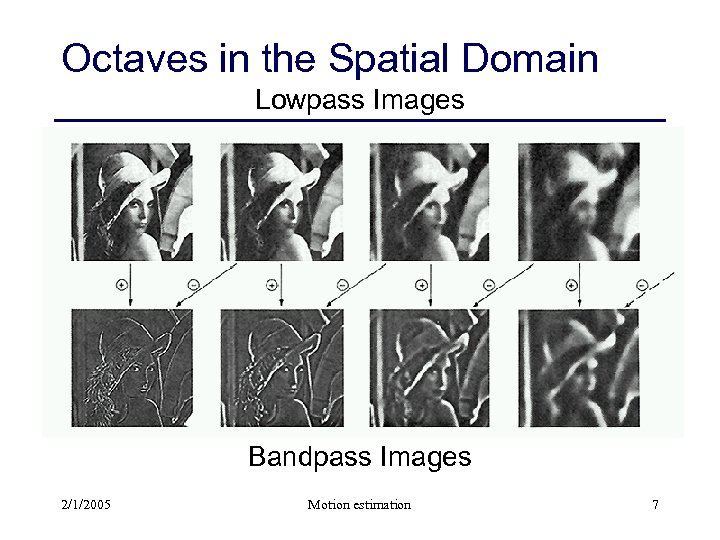

Octaves in the Spatial Domain

Figure panels: lowpass images, bandpass images.

Pyramids

Advantages of pyramids
- Faster than Fourier transform
- Avoids “ringing” artifacts

Many applications
- small images faster to process
- good for multiresolution processing
- compression
- progressive transmission

Known as “mip-maps” in the graphics community

Precursor to wavelets
- Wavelets also have these advantages

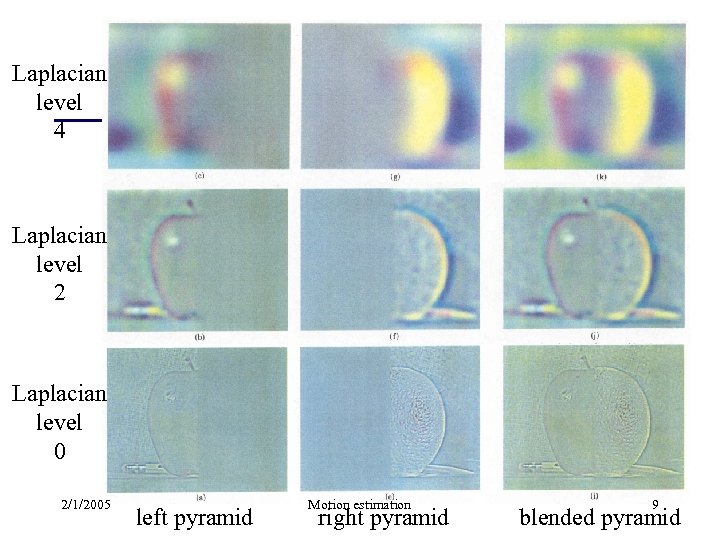

Figure panels: Laplacian levels 4, 2, and 0 of the left pyramid, the right pyramid, and the blended pyramid.

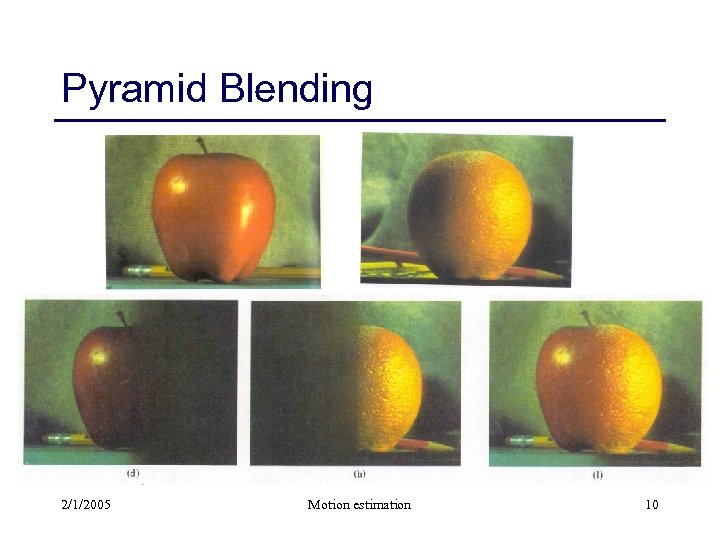

Pyramid Blending

Image Warping

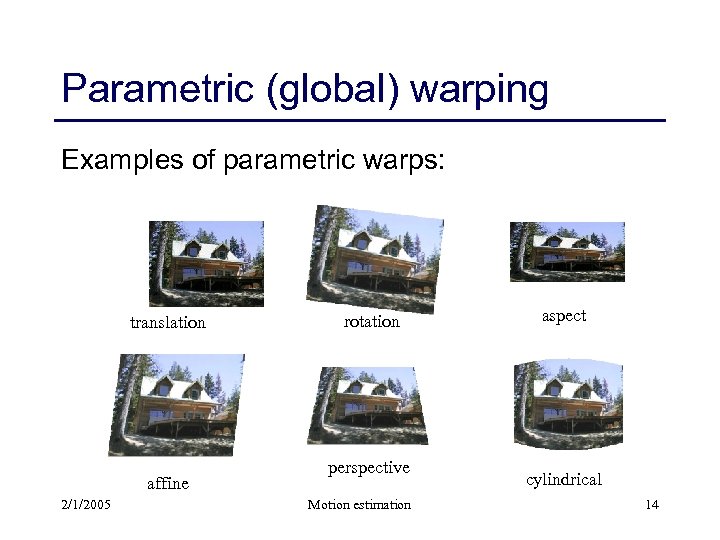

Parametric (global) warping

Examples of parametric warps: translation, rotation, aspect, affine, perspective, cylindrical

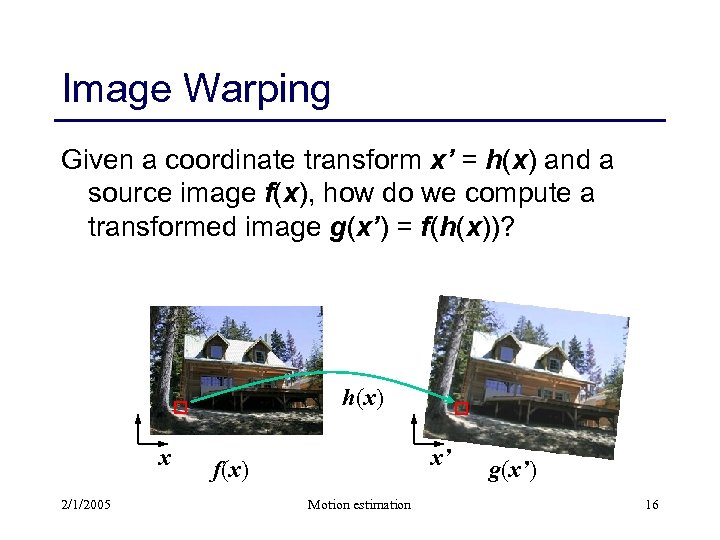

Image Warping

Given a coordinate transform $x' = h(x)$ and a source image $f(x)$, how do we compute a transformed image $g(x') = f(h^{-1}(x'))$, that is, $g(h(x)) = f(x)$?

Figure: $h(x)$ maps pixel $x$ in $f(x)$ to $x'$ in $g(x')$.

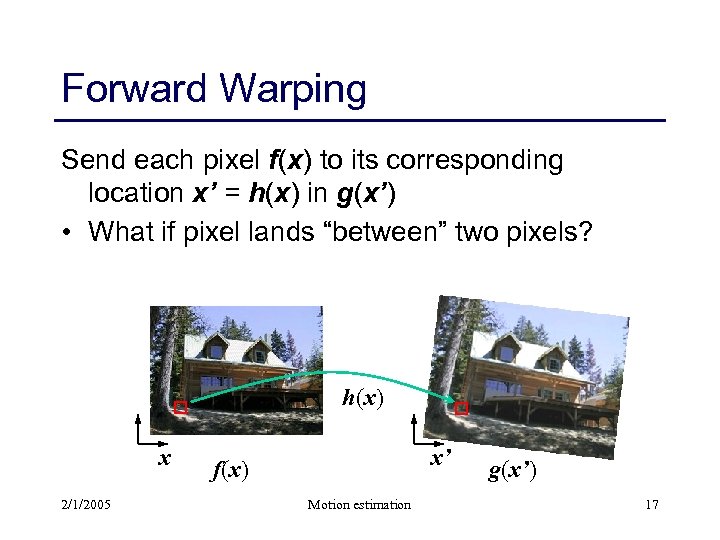

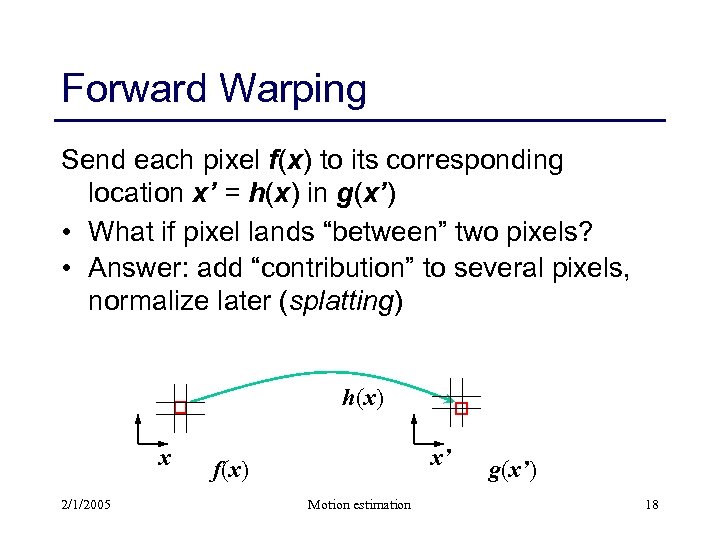

Forward Warping

Send each pixel $f(x)$ to its corresponding location $x' = h(x)$ in $g(x')$
- What if a pixel lands “between” two pixels?
- Answer: add a “contribution” to several pixels and normalize later (splatting)
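A rough sketch of splatting, under assumptions that are not in the slides: a grayscale image, bilinear weights for the four neighboring destination pixels, and a hypothetical callback `h(x, y)` that returns the destination coordinates.

```python
import numpy as np

def forward_warp(f, h):
    """Splat each source pixel f[y, x] to h(x, y) using bilinear weights."""
    H, W = f.shape
    acc = np.zeros((H, W))    # weighted sum of contributions
    wsum = np.zeros((H, W))   # sum of weights, used to normalize later
    for y in range(H):
        for x in range(W):
            xp, yp = h(x, y)
            x0, y0 = int(np.floor(xp)), int(np.floor(yp))
            ax, ay = xp - x0, yp - y0
            for dy, wy in ((0, 1 - ay), (1, ay)):
                for dx, wx in ((0, 1 - ax), (1, ax)):
                    xi, yi = x0 + dx, y0 + dy
                    if 0 <= xi < W and 0 <= yi < H:
                        acc[yi, xi] += wx * wy * f[y, x]
                        wsum[yi, xi] += wx * wy
    g = np.zeros((H, W))
    hit = wsum > 0            # pixels that received no contribution stay 0 (holes)
    g[hit] = acc[hit] / wsum[hit]
    return g
```

Destination pixels that receive no contribution remain as holes, which is one reason inverse warping is often preferred in practice.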

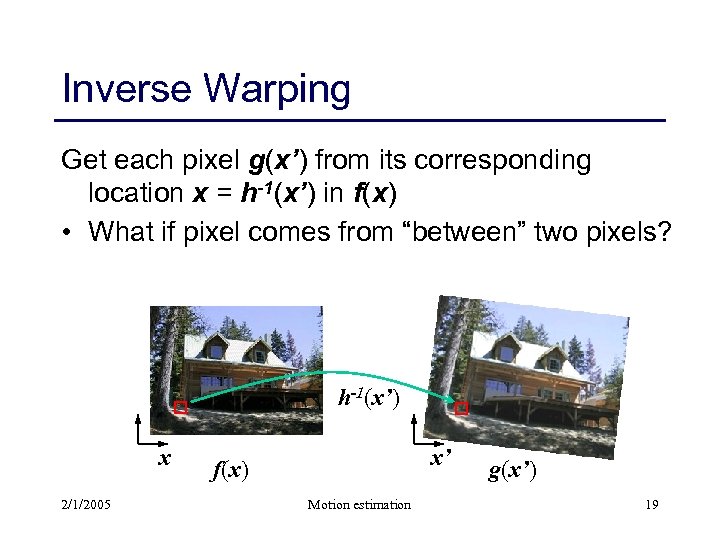

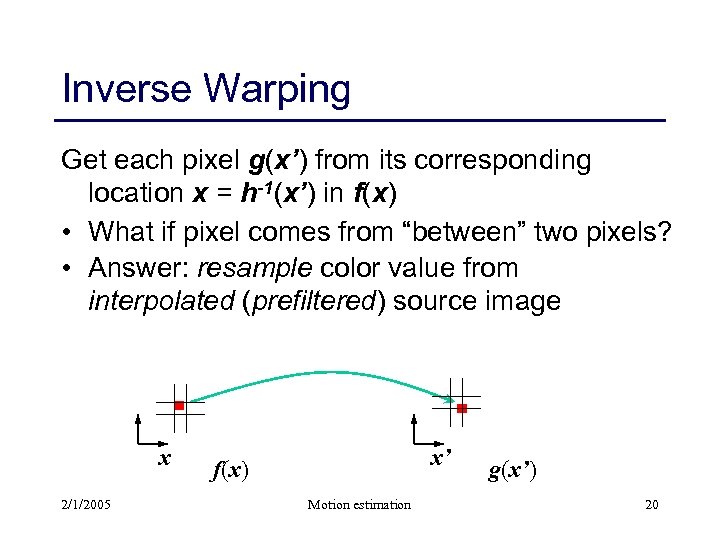

Inverse Warping

Get each pixel $g(x')$ from its corresponding location $x = h^{-1}(x')$ in $f(x)$
- What if a pixel comes from “between” two pixels?
- Answer: resample the color value from the interpolated (prefiltered) source image
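Inverse warping with resampling might look like the following sketch. It assumes a grayscale image, bilinear interpolation, and clamping of out-of-range coordinates to the image border; `h_inv(xp, yp)` is a hypothetical callback returning source coordinates. Prefiltering is omitted, so it is only appropriate when the warp does not shrink the image much.

```python
import numpy as np

def bilinear(f, x, y):
    """Sample f at real-valued (x, y); coordinates are clamped to the border."""
    H, W = f.shape
    x = min(max(x, 0.0), W - 1.0)
    y = min(max(y, 0.0), H - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, W - 1), min(y0 + 1, H - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * f[y0, x0] + ax * f[y0, x1]
    bottom = (1 - ax) * f[y1, x0] + ax * f[y1, x1]
    return (1 - ay) * top + ay * bottom

def inverse_warp(f, h_inv):
    """For every destination pixel, look up its source location and resample."""
    H, W = f.shape
    g = np.zeros((H, W))
    for yp in range(H):
        for xp in range(W):
            x, y = h_inv(xp, yp)
            g[yp, xp] = bilinear(f, x, y)
    return g
```

Every destination pixel is visited exactly once, so there are no holes and no normalization pass.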

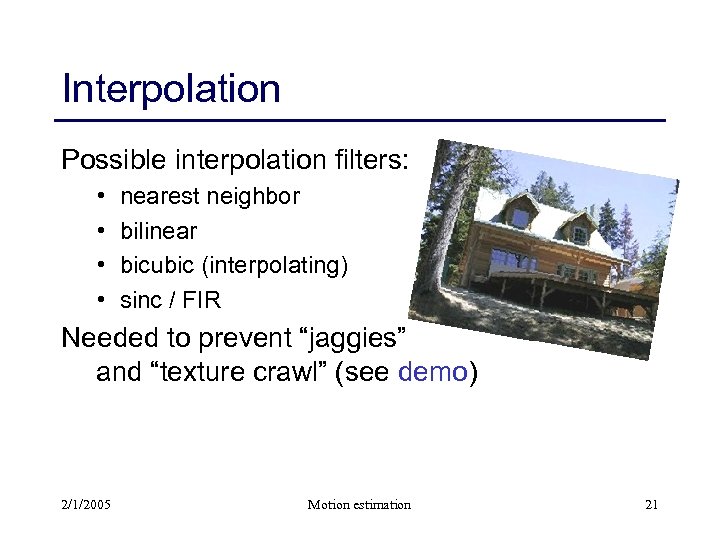

Interpolation

Possible interpolation filters:
- nearest neighbor
- bilinear
- bicubic (interpolating)
- sinc / FIR

Needed to prevent “jaggies” and “texture crawl” (see demo)

![Prefiltering](https://present5.com/presentation/1fba51ebf275a192cff8e29947458cca/image-19.jpg)

Prefiltering

Essential for downsampling (decimation) to prevent aliasing

MIP-mapping [Williams ’83]:
1. build pyramid (but what decimation filter?):
   - block averaging
   - Burt & Adelson (5-tap binomial)
   - 7-tap wavelet-based filter (better)
2. trilinear interpolation
   - bilinear within each of 2 adjacent levels
   - linear blend between levels (determined by pixel size)

Prefiltering

Essential for downsampling (decimation) to prevent aliasing

Other possibilities:
- summed area tables
- elliptically weighted Gaussians (EWA) [Heckbert ’86]

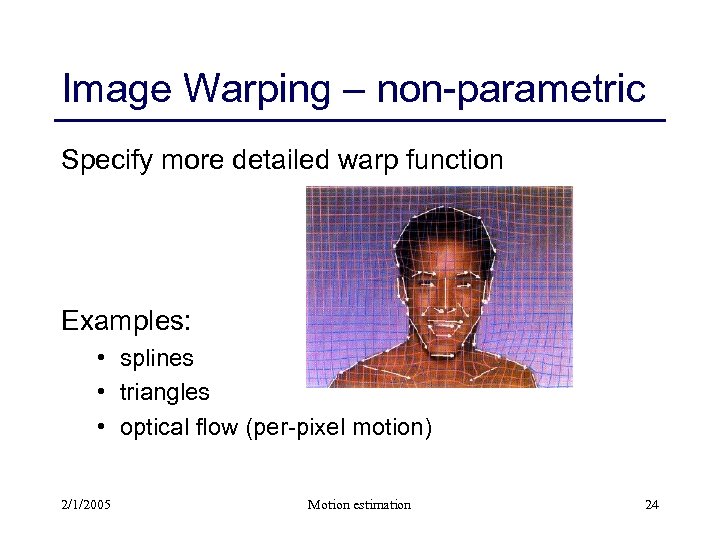

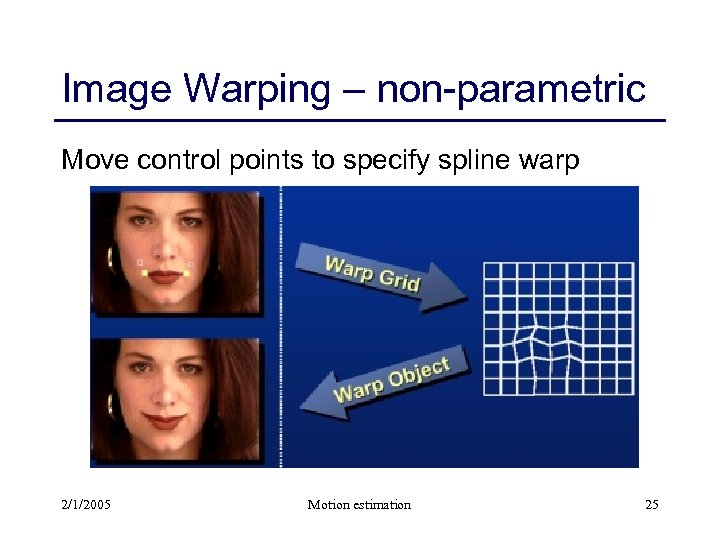

Image Warping – non-parametric

Specify a more detailed warp function

Examples:
- splines
- triangles
- optical flow (per-pixel motion)

Image Warping – non-parametric

Move control points to specify a spline warp

Image Morphing

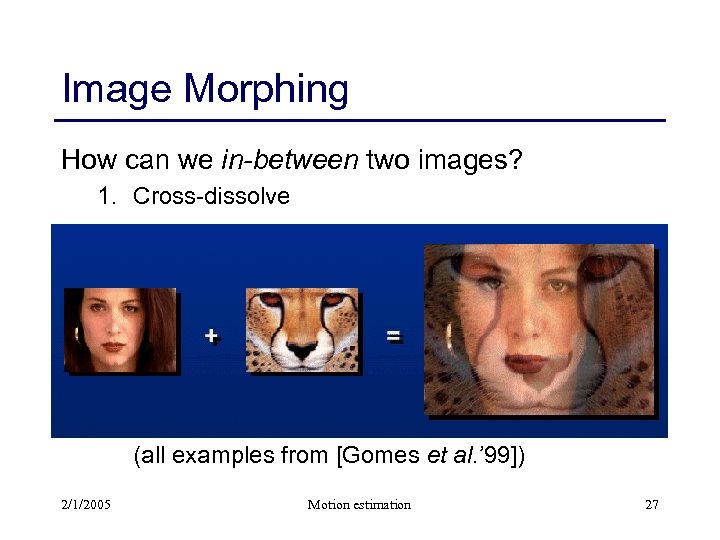

Image Morphing

How can we in-between two images?
1. Cross-dissolve

(all examples from [Gomes et al. ’99])

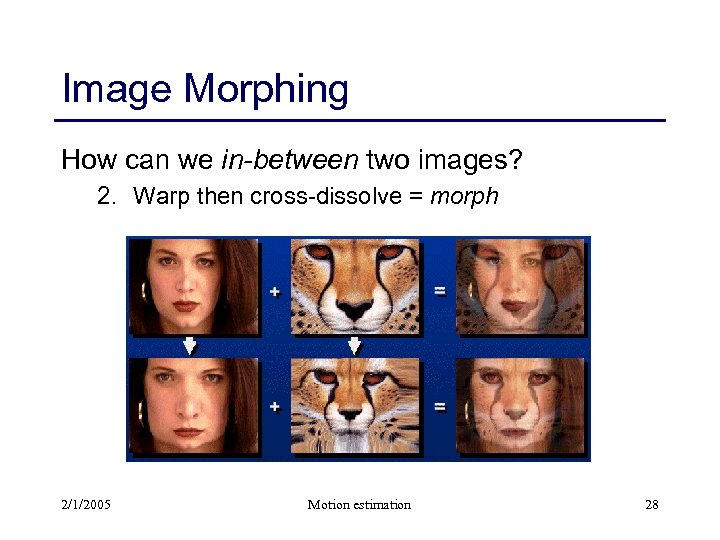

Image Morphing

How can we in-between two images?
2. Warp then cross-dissolve = morph

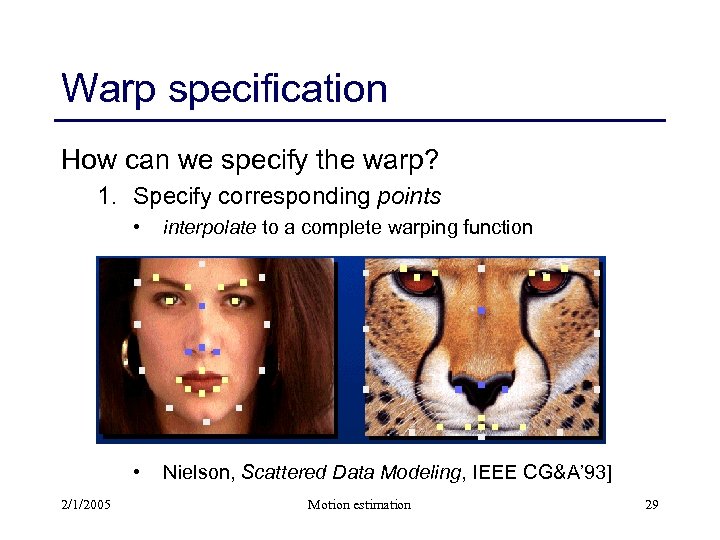

Warp specification

How can we specify the warp?
1. Specify corresponding points
   - interpolate to a complete warping function
   - [Nielson, Scattered Data Modeling, IEEE CG&A ’93]

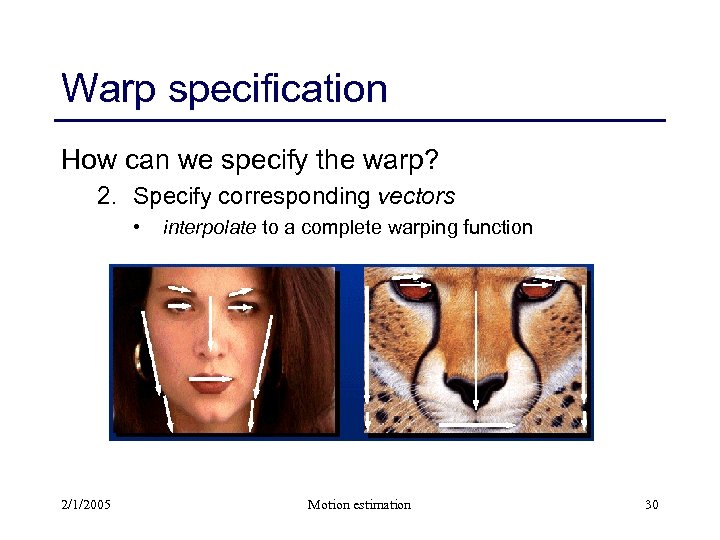

Warp specification

How can we specify the warp?
2. Specify corresponding vectors
   - interpolate to a complete warping function

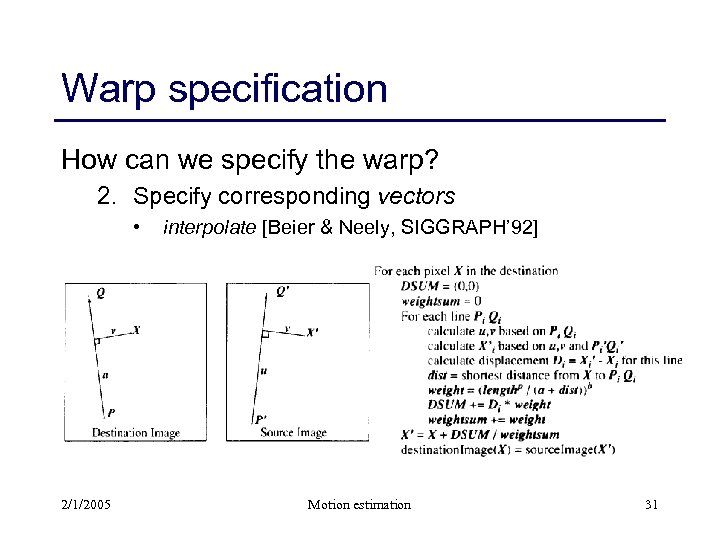

Warp specification

How can we specify the warp?
2. Specify corresponding vectors
   - interpolate [Beier & Neely, SIGGRAPH ’92]

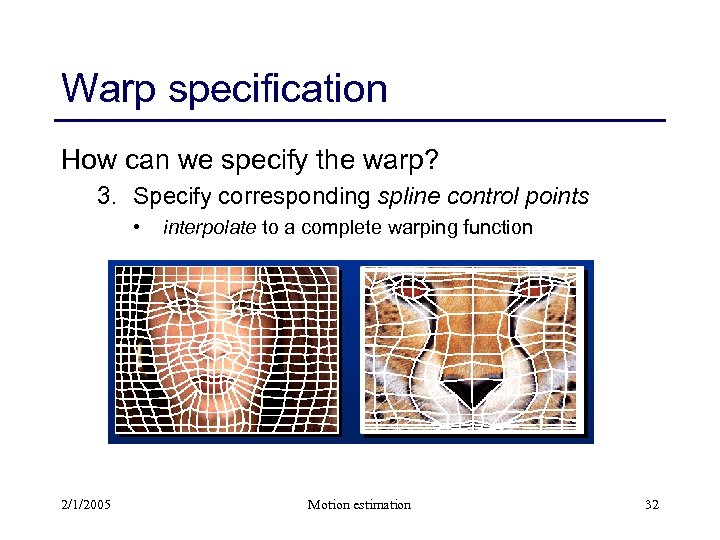

Warp specification

How can we specify the warp?
3. Specify corresponding spline control points
   - interpolate to a complete warping function

Final Morph Result

Motion estimation

Classes of Techniques

Feature-based methods
- Extract salient visual features (corners, textured areas) and track them over multiple frames
- Analyze the global pattern of motion vectors of these features
- Sparse motion fields, but possibly robust tracking
- Suitable especially when image motion is large (tens of pixels)

Direct methods
- Directly recover image motion from spatio-temporal image brightness variations
- Global motion parameters directly recovered without an intermediate feature motion calculation
- Dense motion fields, but more sensitive to appearance variations
- Suitable for video and when image motion is small (< 10 pixels)

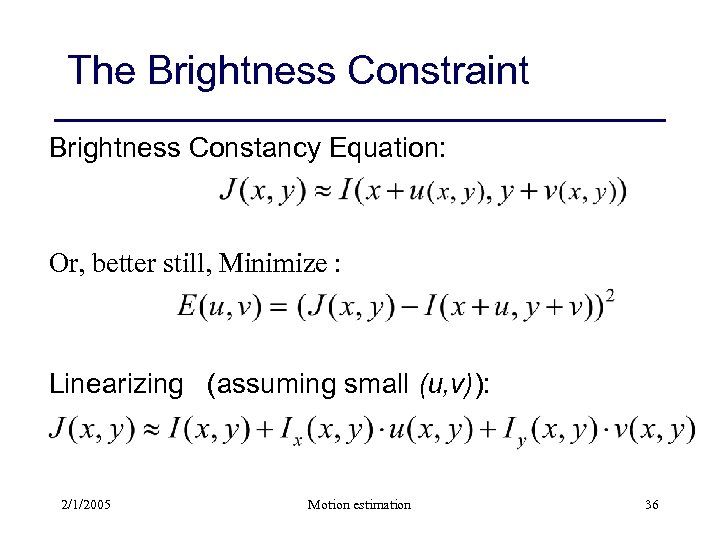

The Brightness Constraint

- Brightness Constancy Equation:
- Or, better still, minimize:
- Linearizing (assuming small (u, v)):
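The equations for this slide did not survive extraction. As a reference point, a standard way to write the three steps is given below. The symbols are assumptions chosen to match the rest of the deck: $I$ and $J$ are the two images, $(u, v)$ is the flow, $I_x, I_y$ are spatial derivatives of $I$, and $I_t = I - J$ is the temporal difference.

```latex
% Brightness constancy between image J and image I displaced by (u, v)
J(x, y) = I\bigl(x + u(x, y),\; y + v(x, y)\bigr)

% Least-squares form
E(u, v) = \sum_{x, y} \bigl[ J(x, y) - I(x + u, y + v) \bigr]^2

% First-order Taylor expansion for small (u, v), with I_t = I - J
I(x + u, y + v) \approx I(x, y) + I_x u + I_y v
\quad\Longrightarrow\quad
I_x u + I_y v + I_t \approx 0
```

The linearized constraint is one equation in two unknowns per pixel, which is why the following slides pool constraints over a patch.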

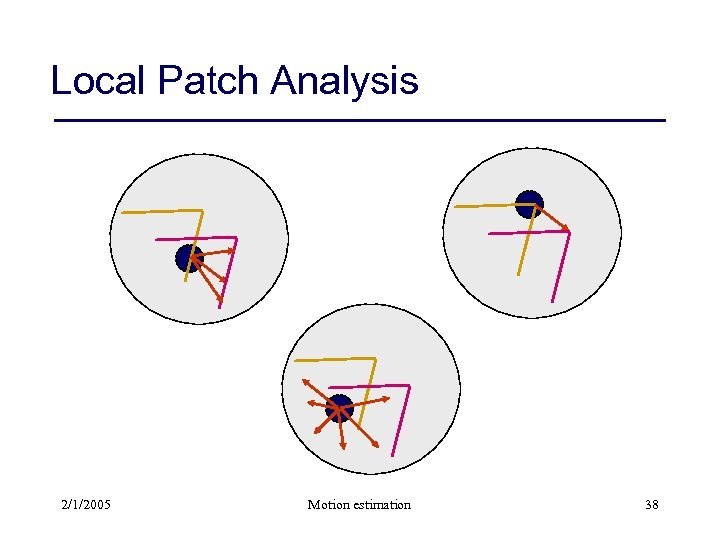

Local Patch Analysis

![Patch Translation (Lucas-Kanade)](https://present5.com/presentation/1fba51ebf275a192cff8e29947458cca/image-35.jpg)

Patch Translation [Lucas-Kanade]

- Assume a single velocity for all pixels within an image patch
- Minimizing gives a linear system whose LHS is the sum of the 2 × 2 outer-product tensor of the gradient vector
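One Lucas-Kanade step for a single patch can be sketched as follows. The sign convention is an assumption (the slide's own equations were lost): with $J(x, y) = I(x + u, y + v)$ and $I_t = I - J$, minimizing $\sum (I_x u + I_y v + I_t)^2$ gives the 2 × 2 system $\bigl(\sum \nabla I \nabla I^T\bigr) U = -\sum I_t \nabla I$. Derivatives use `np.gradient` (central differences), which is only one of several reasonable choices.

```python
import numpy as np

def lucas_kanade_patch(I, J):
    """Estimate one (u, v) for the whole patch, assuming J(x, y) = I(x + u, y + v)."""
    Iy, Ix = np.gradient(I)   # np.gradient returns d/d(row) first, then d/d(column)
    It = I - J                # temporal difference under the assumed sign convention
    # Sum of 2x2 outer products of the gradient vector over the patch
    M = np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                  [np.sum(Ix * Iy), np.sum(Iy * Iy)]])
    b = -np.array([np.sum(Ix * It), np.sum(Iy * It)])
    return np.linalg.solve(M, b)   # raises LinAlgError if M is exactly singular
```

For a smooth test pattern shifted by a fraction of a pixel, the returned `(u, v)` should land close to the true shift; larger shifts need the iterative and coarse-to-fine schemes discussed later.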

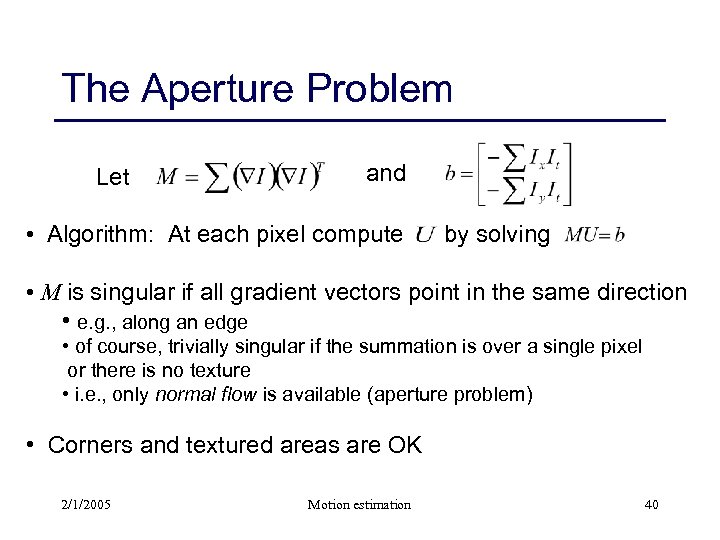

The Aperture Problem

Let $M = \sum (\nabla I)(\nabla I)^T$ and $b = -\sum I_t \nabla I$, where $I_t$ is the temporal difference between the two images.
- Algorithm: at each pixel, compute $U = (u, v)^T$ by solving $MU = b$
- $M$ is singular if all gradient vectors point in the same direction
  - e.g., along an edge
  - of course, trivially singular if the summation is over a single pixel or there is no texture
  - i.e., only normal flow is available (aperture problem)
- Corners and textured areas are OK
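The singularity condition can be checked numerically by looking at the eigenvalues of the summed gradient outer-product matrix. The two synthetic 9 × 9 patches below (a vertical step edge and a corner) are made up for illustration.

```python
import numpy as np

def structure_matrix(patch):
    """Sum of 2x2 gradient outer products over the patch."""
    Iy, Ix = np.gradient(patch.astype(float))
    return np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                     [np.sum(Ix * Iy), np.sum(Iy * Iy)]])

x, y = np.meshgrid(np.arange(9.0), np.arange(9.0))
edge = (x > 4).astype(float)                 # all gradients point along x
corner = ((x > 4) & (y > 4)).astype(float)   # gradients along both x and y

# eigvalsh returns eigenvalues in ascending order
edge_eigs = np.linalg.eigvalsh(structure_matrix(edge))
corner_eigs = np.linalg.eigvalsh(structure_matrix(corner))
```

For the edge patch the smaller eigenvalue is zero, so only the flow component along the gradient (normal flow) is determined; for the corner both eigenvalues are positive and the system has a unique solution.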

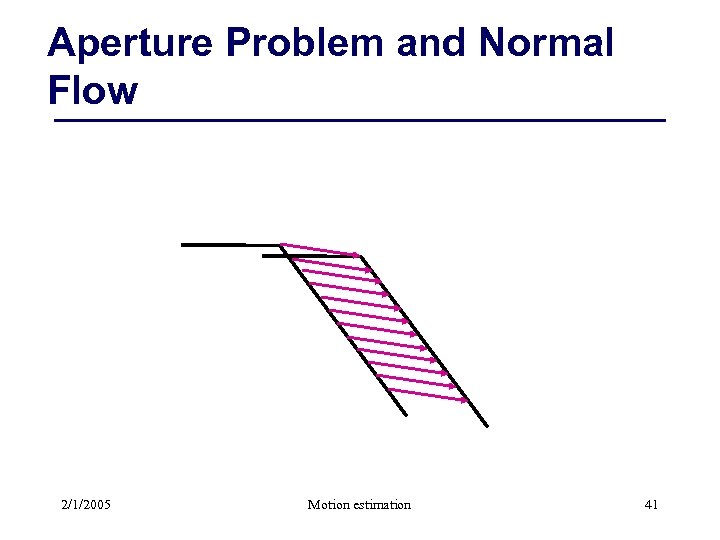

Aperture Problem and Normal Flow

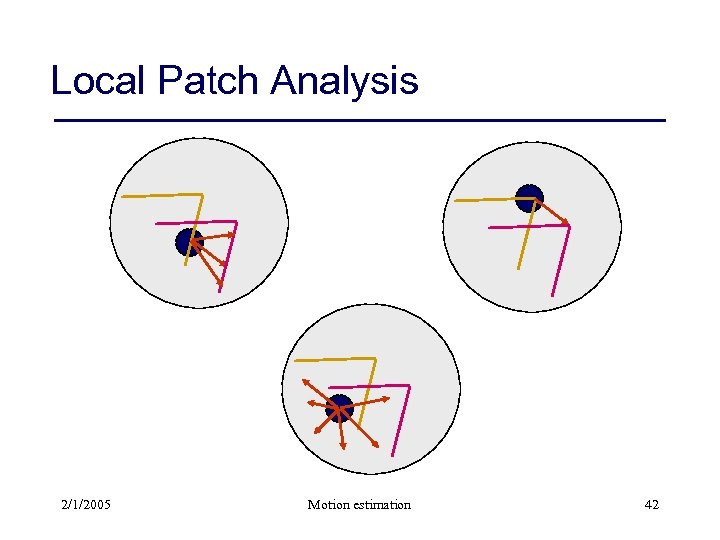

Local Patch Analysis

Iterative Refinement

- Estimate velocity at each pixel using one iteration of Lucas and Kanade estimation
- Warp one image toward the other using the estimated flow field (easier said than done)
- Refine the estimate by repeating the process
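The estimate, warp, refine loop is easiest to see in one dimension. The sketch below assumes a pure translation $J(x) = I(x + d)$, linear interpolation for the warp (`np.interp`), and derivatives taken once on the unwarped signal; none of these choices come from the slides.

```python
import numpy as np

def estimate_shift(I, J, iterations=10):
    """Iteratively estimate d such that J(x) is approximately I(x + d)."""
    x = np.arange(len(I), dtype=float)
    Jx = np.gradient(J)   # derivative of the signal that is never warped
    d = 0.0
    for _ in range(iterations):
        Iw = np.interp(x + d, x, I)                    # warp I by the current estimate
        d += np.sum(Jx * (J - Iw)) / np.sum(Jx * Jx)   # one Lucas-Kanade update
    return d
```

A single update typically undershoots a shift of a few samples because the linearization is only valid for small displacements; repeating the update on the warped signal removes most of the remaining error.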

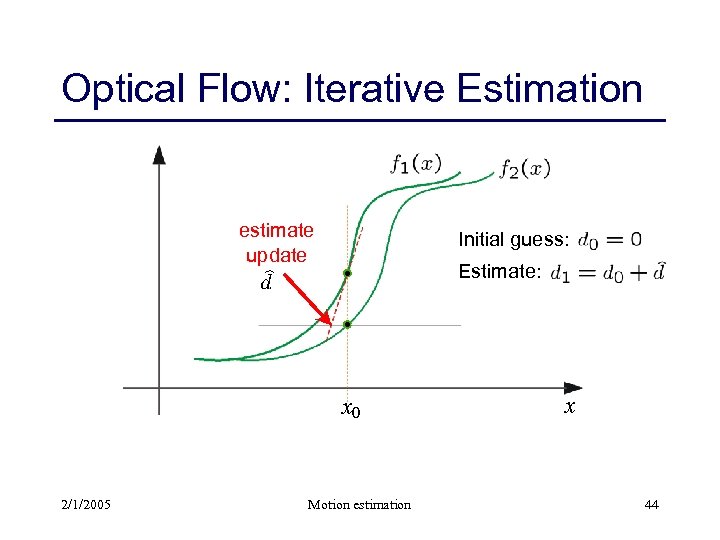

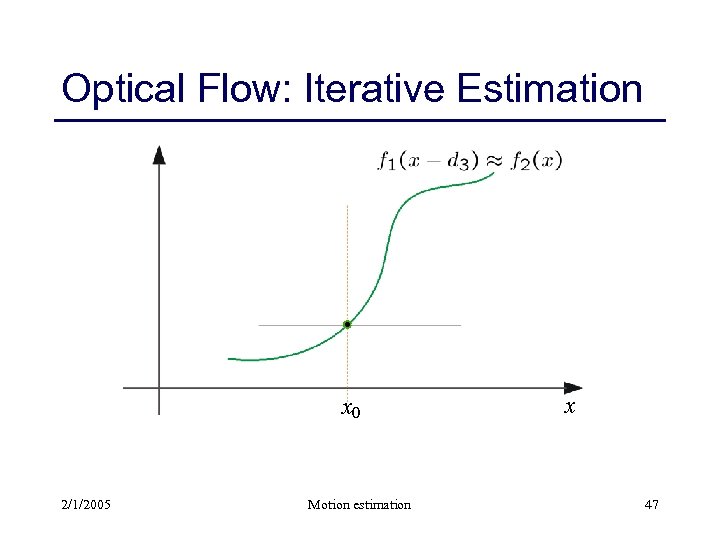

Optical Flow: Iterative Estimation

Figure labels: initial guess, estimate, update; horizontal axis $x$ with starting point $x_0$.

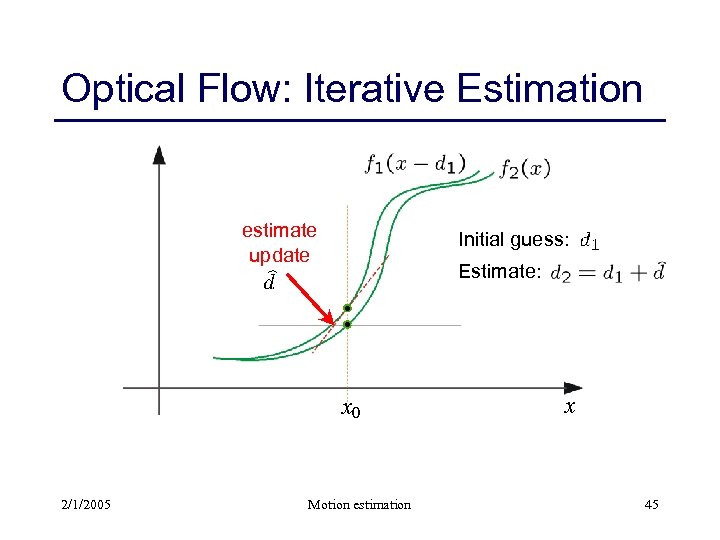

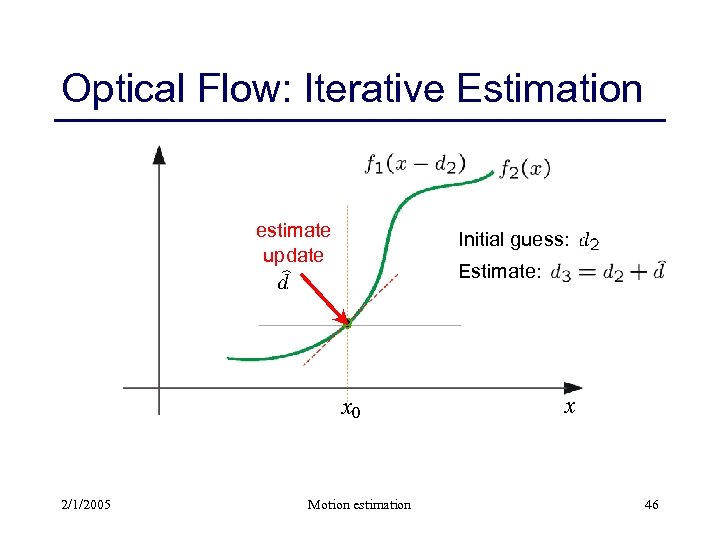

Optical Flow: Iterative Estimation

Some implementation issues:
- Warping is not easy (make sure that errors in interpolation and warping are not bigger than the estimate refinement)
- Warp one image and take derivatives of the other, so you don’t need to re-compute the gradient after each iteration
- Often useful to low-pass filter the images before motion estimation (for better derivative estimation, and somewhat better linear approximations to image intensity)

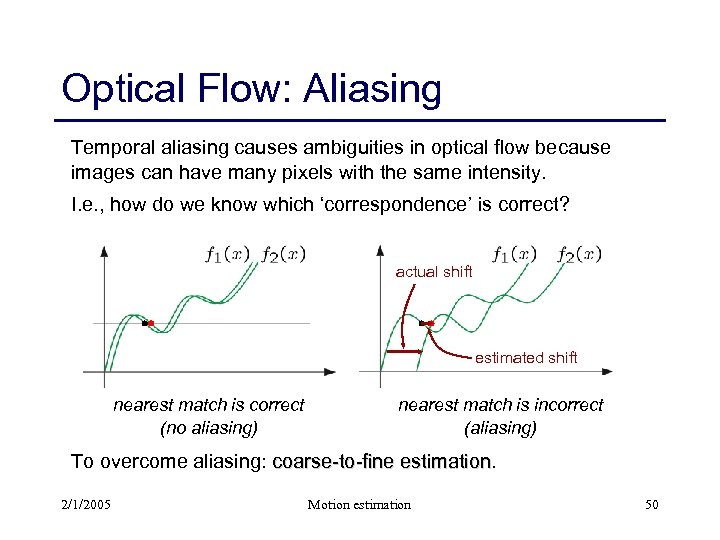

Optical Flow: Aliasing

Temporal aliasing causes ambiguities in optical flow because images can have many pixels with the same intensity, i.e., how do we know which “correspondence” is correct?

Figure panels: actual shift vs. estimated shift; nearest match is correct (no aliasing); nearest match is incorrect (aliasing).

To overcome aliasing: coarse-to-fine estimation

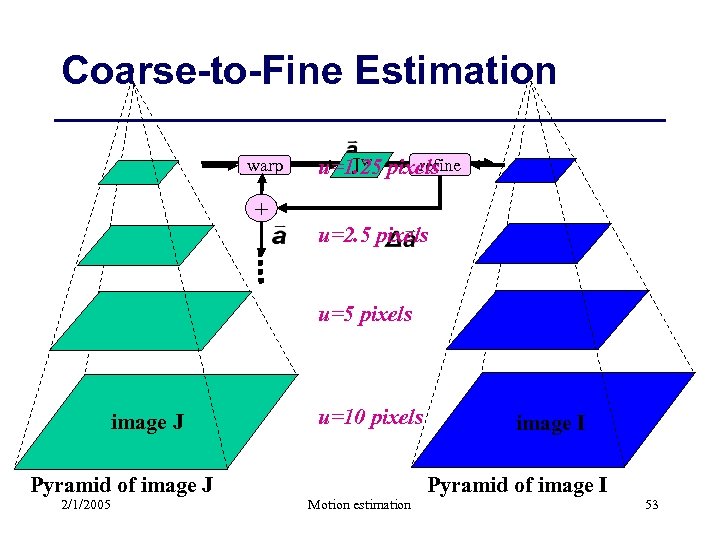

Coarse-to-Fine Estimation

Figure: pyramids of image J and image I. The displacement is u = 10 pixels at full resolution and u = 5, 2.5, and 1.25 pixels at successively coarser levels. At each level, J is warped (Jw) and the estimate is refined.

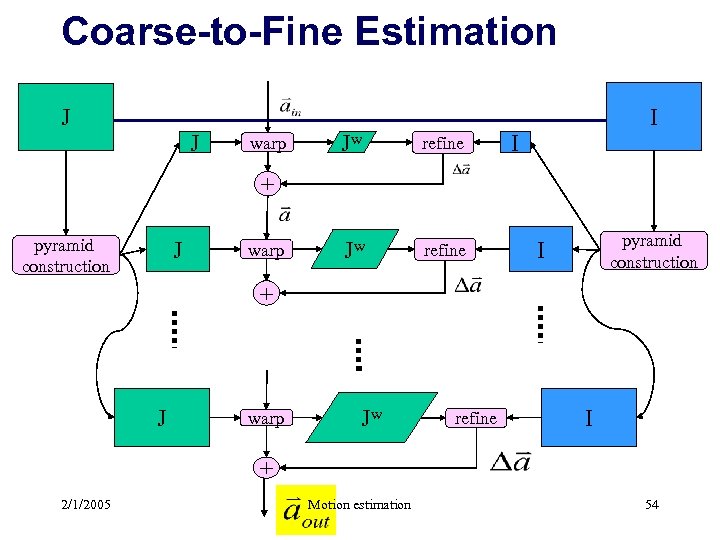

Coarse-to-Fine Estimation

Figure: pyramid construction for J and I. At each level, J is warped (Jw), refined against I, and the refinement is added (+) to the running estimate.
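The numbers in the pyramid figure follow from halving the resolution at each level. The small sketch below reproduces them and shows the usual propagation rule between levels; both helper names are hypothetical.

```python
def displacement_per_level(u_full, levels):
    """Displacement in pixels as seen at each pyramid level (level 0 = full resolution)."""
    return [u_full / 2 ** level for level in range(levels)]

def propagate(u_coarse, refinement):
    """Carry a coarse estimate to the next finer level, then add that level's refinement."""
    return 2.0 * u_coarse + refinement

print(displacement_per_level(10, 4))  # [10.0, 5.0, 2.5, 1.25]
```

A 10-pixel motion, too large for the linearized constraint, shrinks to 1.25 pixels three levels up, where a gradient-based step is reasonable; each finer level then only has to estimate a small residual.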

Global Motion Models

2D models:
- Affine
- Quadratic
- Planar projective transform (homography)

3D models:
- Instantaneous camera motion models
- Homography + epipole
- Plane + parallax

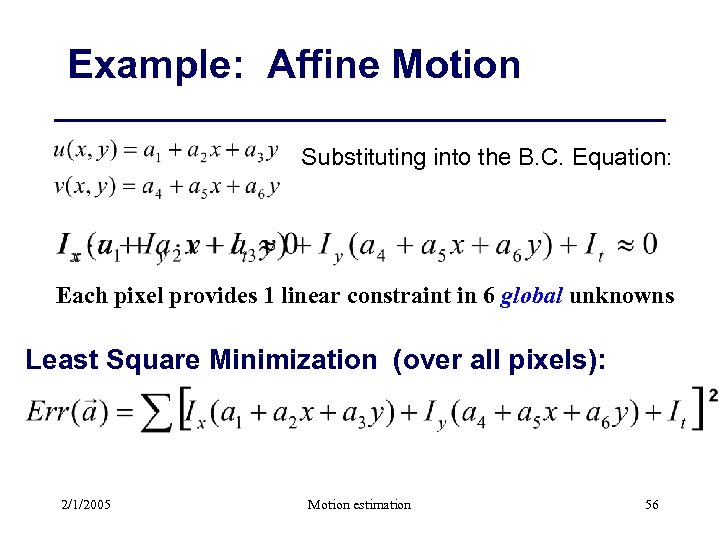

Example: Affine Motion

- Substituting into the B.C. (brightness constancy) equation:
- Each pixel provides 1 linear constraint in 6 global unknowns
- Least-squares minimization (over all pixels):
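The affine equations themselves were lost in extraction. One common parameterization is sketched below; the parameter names $a_1, \dots, a_6$ and the sign convention ($I_t$ is the temporal difference, as in the linearized brightness constancy constraint $I_x u + I_y v + I_t \approx 0$) are assumptions.

```latex
% Affine flow field with 6 global unknowns
u(x, y) = a_1 + a_2 x + a_3 y, \qquad v(x, y) = a_4 + a_5 x + a_6 y

% Substituted into the linearized brightness constancy equation:
% one linear constraint per pixel
I_x (a_1 + a_2 x + a_3 y) + I_y (a_4 + a_5 x + a_6 y) + I_t \approx 0

% Least-squares objective over all pixels
E(a) = \sum_{x, y} \bigl[ I_x (a_1 + a_2 x + a_3 y) + I_y (a_4 + a_5 x + a_6 y) + I_t \bigr]^2
```

Setting the gradient of $E$ with respect to $a$ to zero gives a 6 × 6 linear system, the global analogue of the 2 × 2 system used for patch translation.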

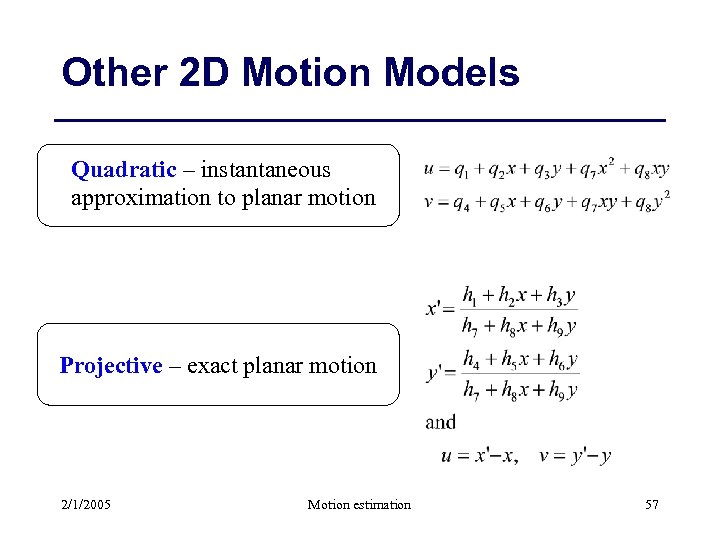

Other 2D Motion Models

- Quadratic: instantaneous approximation to planar motion
- Projective: exact planar motion

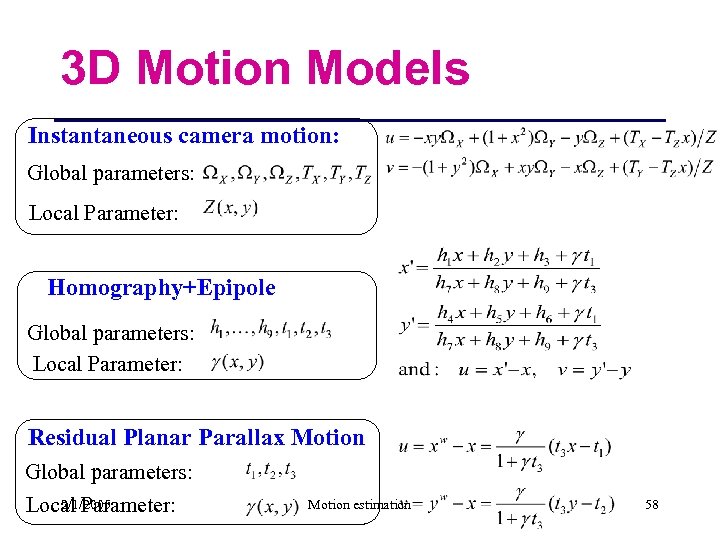

3D Motion Models

- Instantaneous camera motion: global parameters, local parameter
- Homography + epipole: global parameters, local parameter
- Residual planar parallax motion: global parameters, local parameter

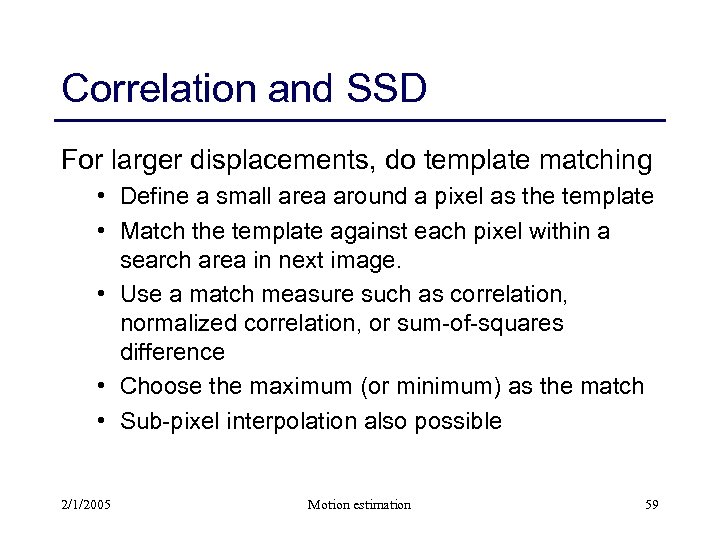

Correlation and SSD

For larger displacements, do template matching
- Define a small area around a pixel as the template
- Match the template against each pixel within a search area in the next image
- Use a match measure such as correlation, normalized correlation, or sum-of-squares difference
- Choose the maximum (or minimum) as the match
- Sub-pixel interpolation is also possible
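The search described above can be sketched with sum-of-squares difference as the match measure (so the best match is the minimum). The function name, the `(row, column)` convention for positions, and the square search window are illustrative choices.

```python
import numpy as np

def ssd_match(template, image, top_left, radius):
    """Exhaustively search `image` near `top_left` for the lowest-SSD placement of `template`."""
    th, tw = template.shape
    y0, x0 = top_left
    best, best_pos = np.inf, None
    for y in range(max(0, y0 - radius), min(image.shape[0] - th, y0 + radius) + 1):
        for x in range(max(0, x0 - radius), min(image.shape[1] - tw, x0 + radius) + 1):
            ssd = np.sum((image[y:y + th, x:x + tw] - template) ** 2)
            if ssd < best:
                best, best_pos = ssd, (y, x)
    return best_pos, best
```

The displacement is the difference between the returned position and `top_left`. Cost grows with the square of `radius`, which is one motivation for combining discrete search with pyramids.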

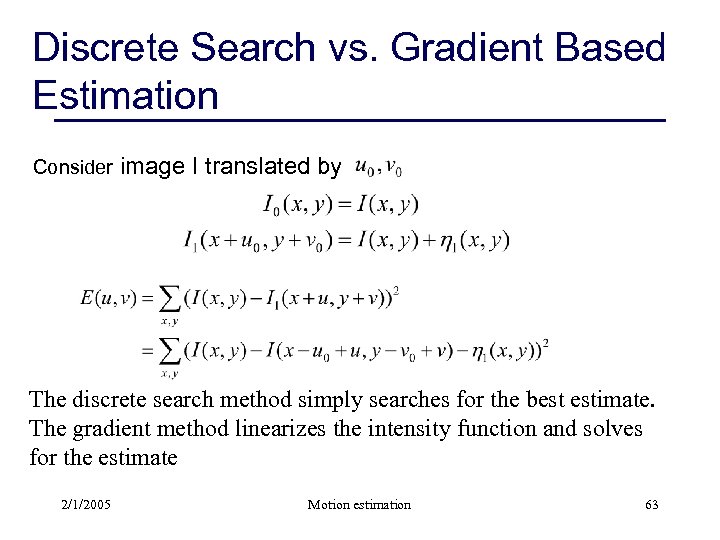

Discrete Search vs. Gradient Based Estimation

Consider image I translated by some displacement
- The discrete search method simply searches for the best estimate
- The gradient method linearizes the intensity function and solves for the estimate

Correlation Window Size

Small windows lead to more false matches

Large windows are better this way, but…
- Neighboring flow vectors will be more correlated (since the template windows have more in common)
- Flow resolution also lower (same reason)
- More expensive to compute

Another way to look at this:
- Small windows are good for local search: more precise and less smooth
- Large windows are good for global search: less precise and more smooth

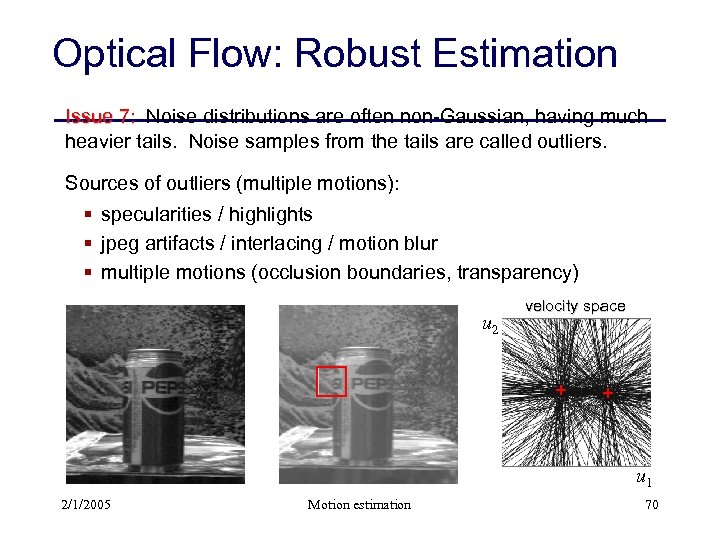

Optical Flow: Robust Estimation

Issue 7: Noise distributions are often non-Gaussian, having much heavier tails. Noise samples from the tails are called outliers.

Sources of outliers (multiple motions):
- specularities / highlights
- JPEG artifacts / interlacing / motion blur
- multiple motions (occlusion boundaries, transparency)

Figure: velocity space with axes $u_1$ and $u_2$.

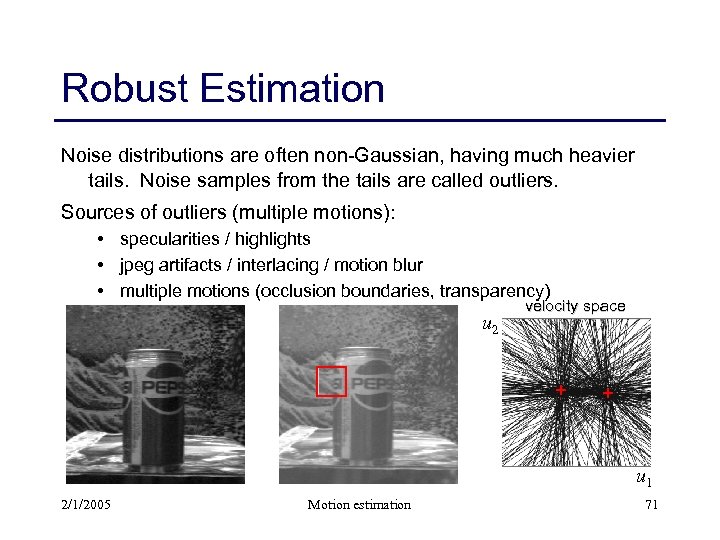

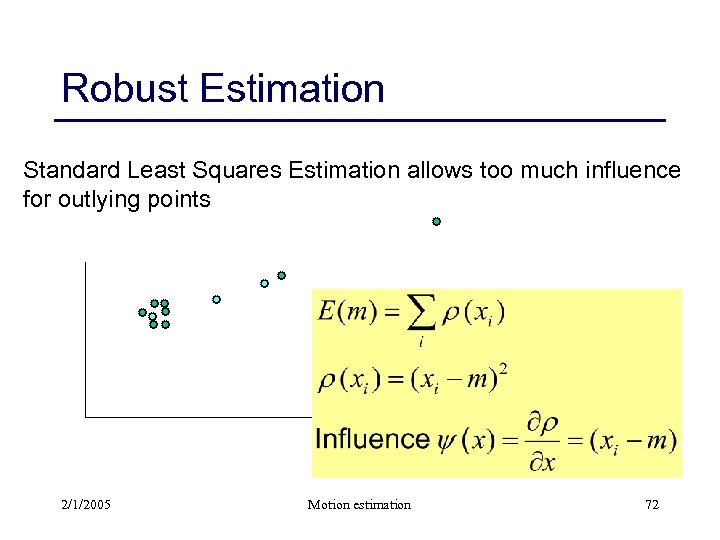

Robust Estimation

Standard least-squares estimation allows too much influence for outlying points

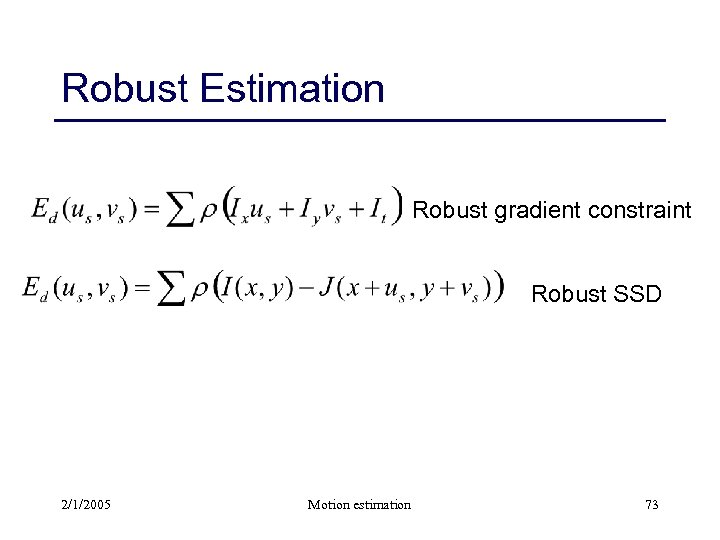

Robust Estimation

- Robust gradient constraint
- Robust SSD

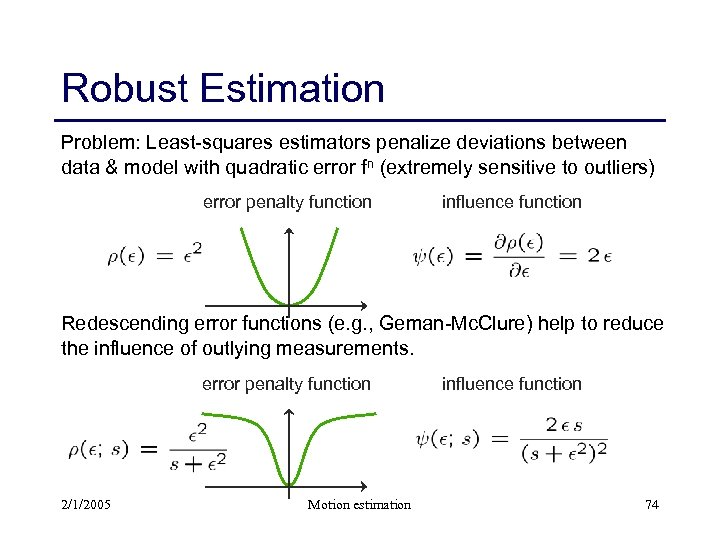

Robust Estimation

Problem: least-squares estimators penalize deviations between data and model with a quadratic error function (extremely sensitive to outliers)

Redescending error functions (e.g., Geman-McClure) help to reduce the influence of outlying measurements

Figure panels (for each case): error penalty function, influence function.
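To make the contrast concrete, the sketch below compares the quadratic penalty with one common parameterization of the Geman-McClure function, $\rho(r) = r^2 / (\sigma^2 + r^2)$. The exact form and the scale parameter `sigma` are assumptions; other texts scale it differently.

```python
def quadratic(r):
    """Least-squares penalty."""
    return r * r

def quadratic_influence(r):
    """Derivative of the quadratic penalty: grows without bound."""
    return 2.0 * r

def geman_mcclure(r, sigma=1.0):
    """Robust penalty that saturates at 1 for large residuals."""
    return r * r / (sigma * sigma + r * r)

def geman_mcclure_influence(r, sigma=1.0):
    """Derivative of the robust penalty: rises, then redescends toward 0."""
    return 2.0 * r * sigma * sigma / (sigma * sigma + r * r) ** 2
```

With `sigma = 1`, a residual of 100 has influence 200 under least squares but roughly 2e-6 under Geman-McClure, so gross outliers barely move the estimate.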

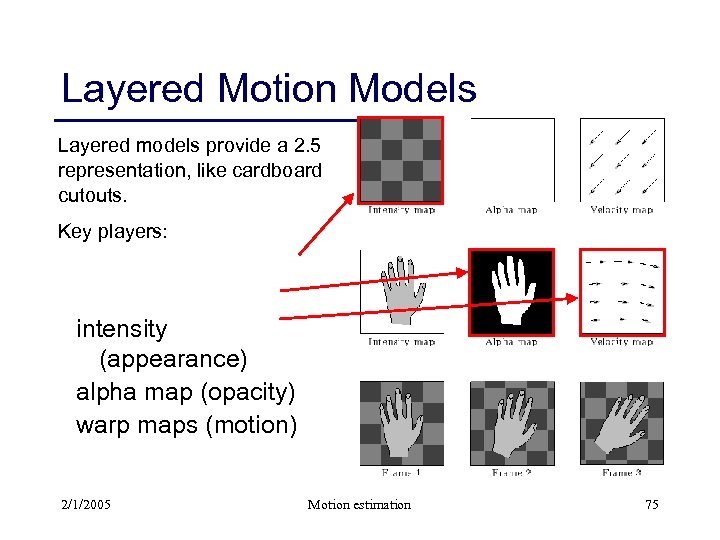

Layered Motion Models

Layered models provide a 2.5D representation, like cardboard cutouts.

Key players:
- intensity (appearance)
- alpha map (opacity)
- warp maps (motion)

Layered Scene Representations

Motion representations

How can we describe this scene?

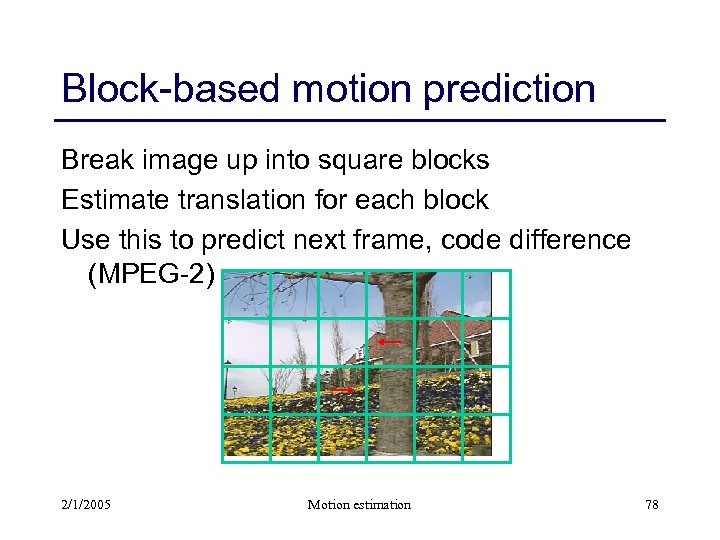

Block-based motion prediction

- Break image up into square blocks
- Estimate translation for each block
- Use this to predict next frame, code difference (MPEG-2)

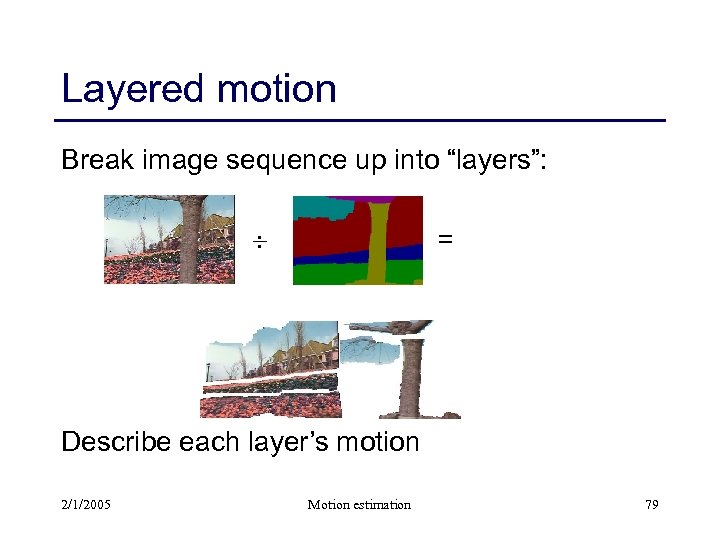

Layered motion

- Break image sequence up into “layers”
- Describe each layer’s motion

Outline

- Why layers?
- 2-D layers [Wang & Adelson 94; Weiss 97]
- 3-D layers [Baker et al. 98]
- Layered Depth Images [Shade et al. 98]
- Transparency [Szeliski et al. 00]

Layered motion

Advantages:
- can represent occlusions / disocclusions
- each layer’s motion can be smooth
- video segmentation for semantic processing

Difficulties:
- how do we determine the correct number of layers?
- how do we assign pixels?
- how do we model the motion?

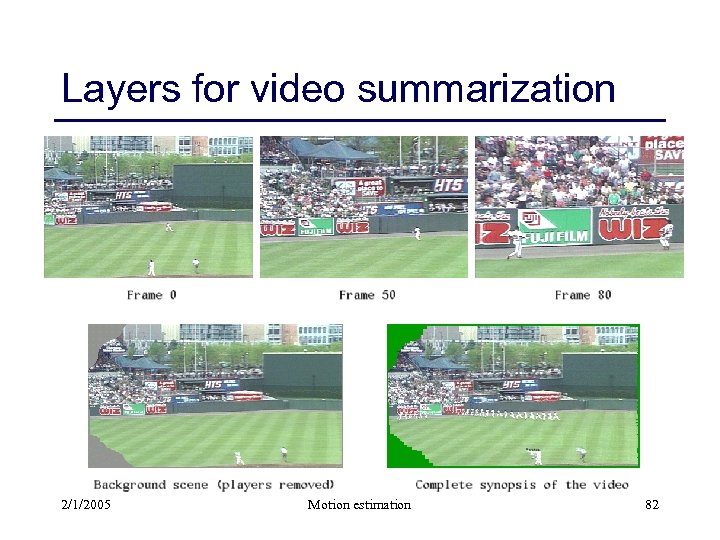

Layers for video summarization

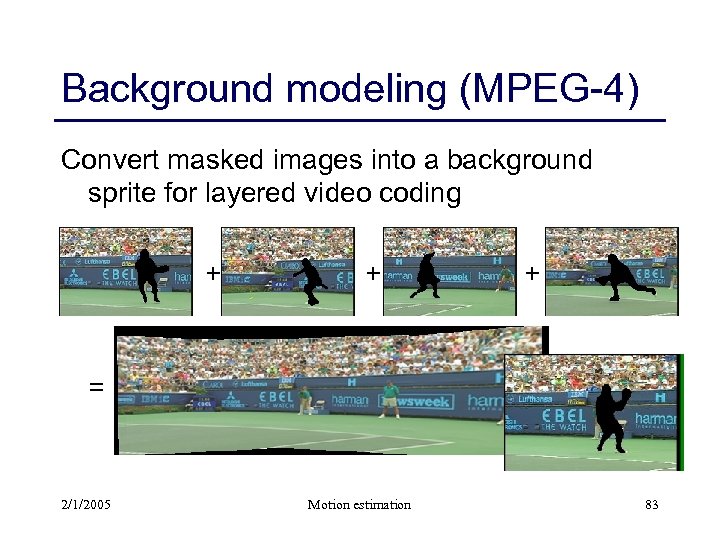

Background modeling (MPEG-4)

Convert masked images into a background sprite for layered video coding

![What are layers?](https://present5.com/presentation/1fba51ebf275a192cff8e29947458cca/image-70.jpg)

What are layers? [Wang & Adelson, 1994]
- intensities
- alphas
- velocities

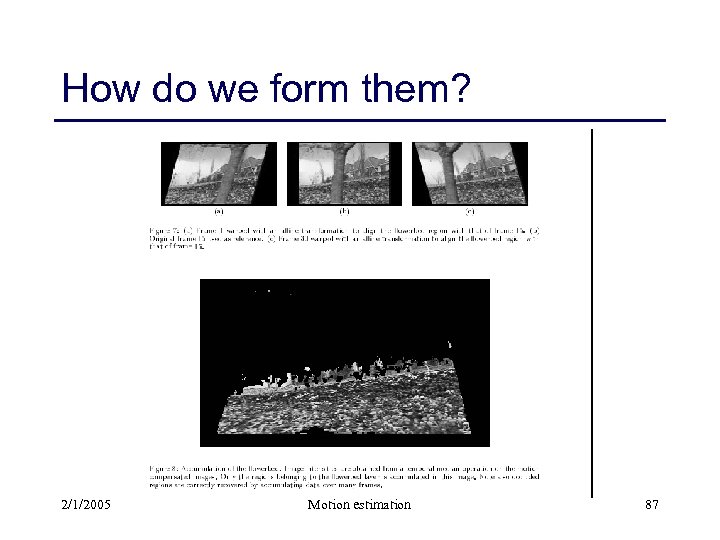

How do we form them?

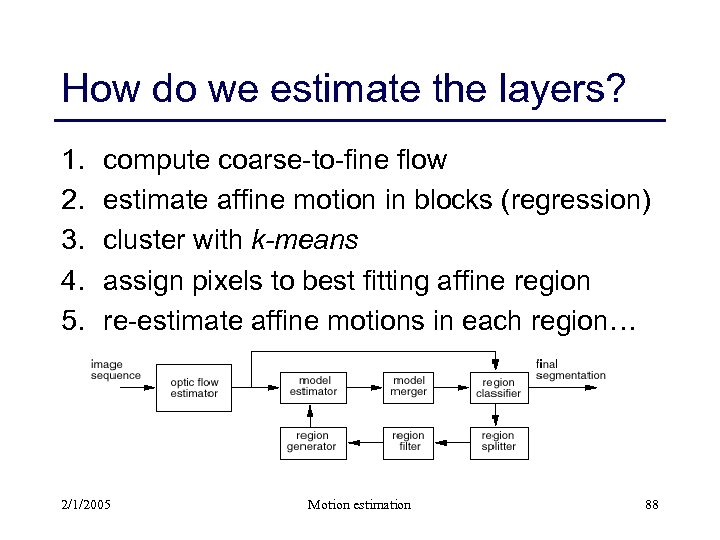

How do we estimate the layers?

1. compute coarse-to-fine flow
2. estimate affine motion in blocks (regression)
3. cluster with k-means
4. assign pixels to best fitting affine region
5. re-estimate affine motions in each region…

Layer synthesis

For each layer:
- stabilize the sequence with the affine motion
- compute median value at each pixel

Determine occlusion relationships
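The median step can be sketched as below, assuming the frames have already been stabilized (warped into a common coordinate frame) with the layer's affine motion; the function name is hypothetical and the stabilization itself is not shown.

```python
import numpy as np

def layer_from_stabilized(frames):
    """Per-pixel temporal median of frames already aligned to one layer."""
    return np.median(np.stack(frames, axis=0), axis=0)
```

Because a pixel of the layer is usually occluded in only a minority of frames, the median discards the occluder's values where a mean would blend them in.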

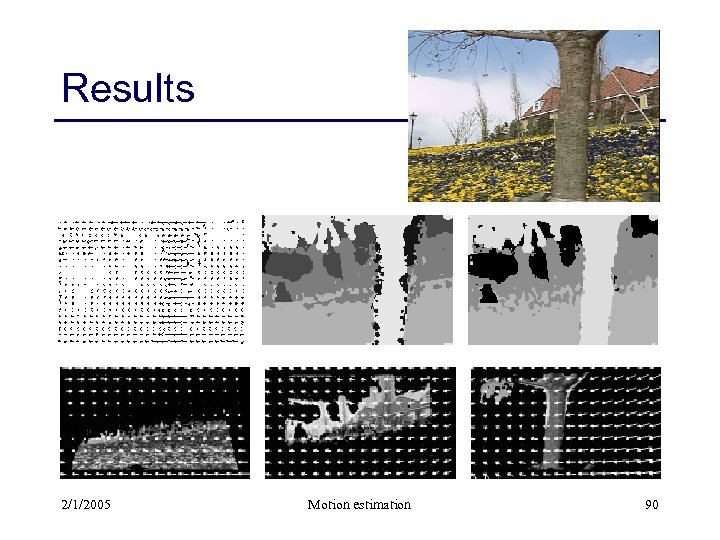

Results

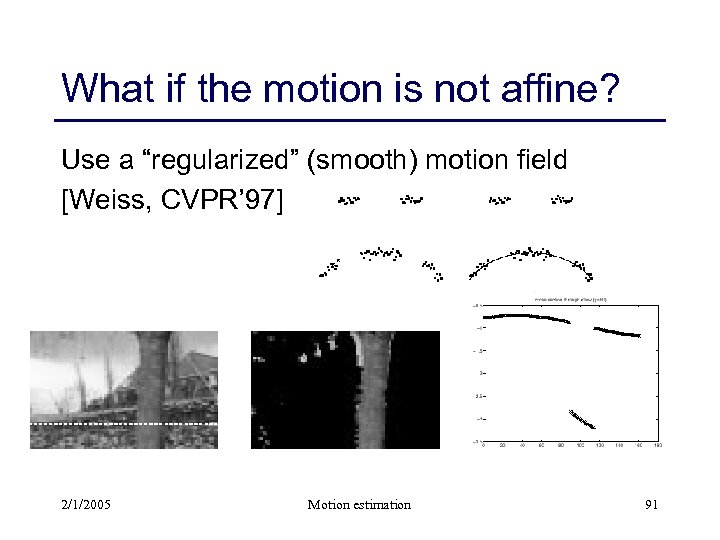

What if the motion is not affine?

Use a “regularized” (smooth) motion field [Weiss, CVPR ’97]

A Layered Approach To Stereo Reconstruction

Simon Baker, Richard Szeliski and P. Anandan, CVPR ’98

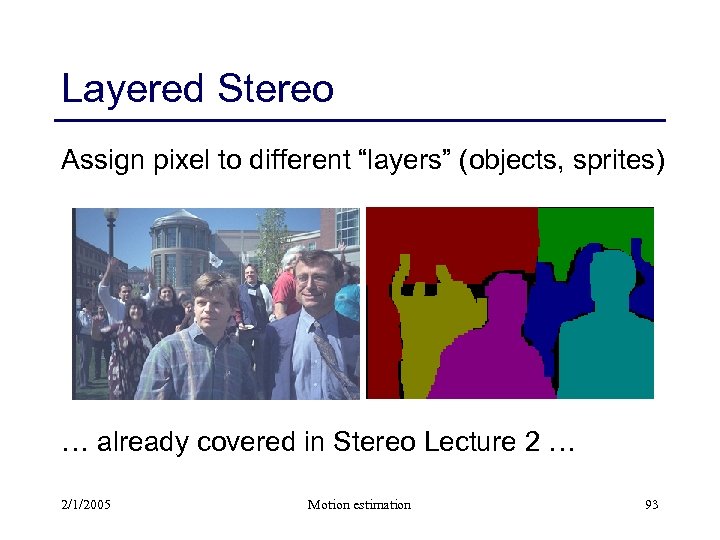

Layered Stereo

Assign pixel to different “layers” (objects, sprites)

… already covered in Stereo Lecture 2 …

Layer extraction from multiple images containing reflections and transparency

Richard Szeliski, Shai Avidan, P. Anandan, CVPR 2000

“extra bonus material”

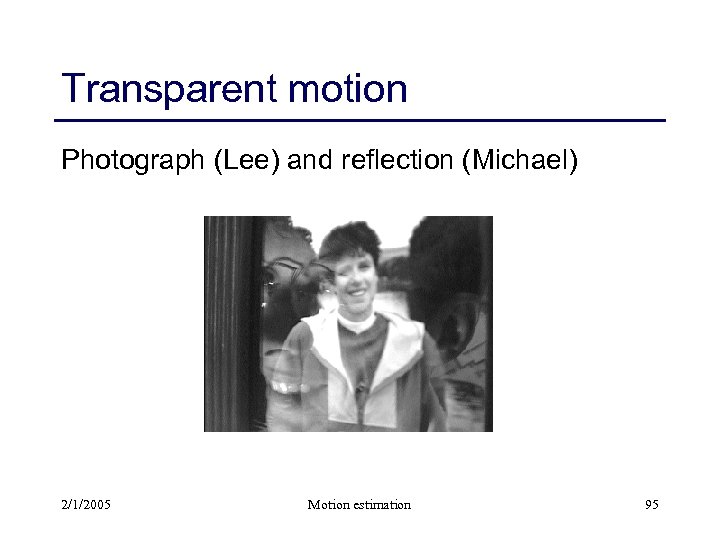

Transparent motion

Photograph (Lee) and reflection (Michael)

Previous work

• Physics-based vision and polarization [Shafer et al.; Wolff; Nayar et al.]
• Perception of transparency [Adelson…]
• Transparent motion estimation [Shizawa & Mase; Bergen et al.; Irani et al.; Darrell & Simoncelli]
• 3-frame layer recovery [Bergen et al.]

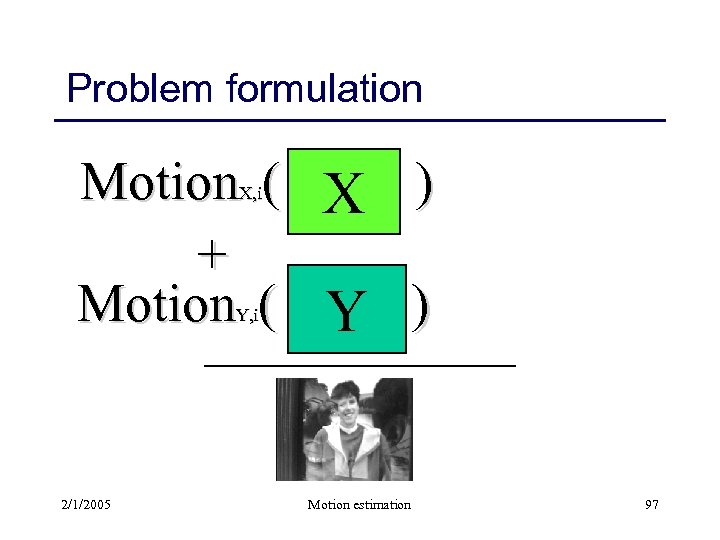

Problem formulation

$\mathrm{Motion}_{X,i}(X) + \mathrm{Motion}_{Y,i}(Y)$

Image formation model

Pure additive mixing of positive signals

$m_k(x) = \sum_l W_{kl} f_l(x)$ or $m_k = \sum_l W_{kl} f_l$

Assume motion is planar (perspective transform, aka homography)
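The additive model can be made concrete with a small sketch. It assumes 1-D layers and replaces each homography warp $W_{kl}$ by a circular-shift matrix; the helper names (`shift_matrix`, `form_images`) and the layer values are hypothetical, chosen only for illustration.

```python
def shift_matrix(n, d):
    """n x n matrix W with (W f)[i] = f[(i - d) % n]: circular shift by d pixels."""
    return [[1 if j == (i - d) % n else 0 for j in range(n)] for i in range(n)]


def matvec(W, f):
    """Plain matrix-vector product."""
    return [sum(w * v for w, v in zip(row, f)) for row in W]


def form_images(layers, motions):
    """Return m_k = sum_l W_kl f_l, where motions[k][l] is the shift of layer l in frame k."""
    n = len(layers[0])
    images = []
    for shifts in motions:
        m = [0] * n
        for f, d in zip(layers, shifts):
            warped = matvec(shift_matrix(n, d), f)
            m = [a + b for a, b in zip(m, warped)]
        images.append(m)
    return images


f1 = [0, 5, 9, 5, 0, 0]   # layer 1 (non-negative intensities)
f2 = [0, 0, 3, 0, 0, 7]   # layer 2
motions = [(0, 0), (1, 2), (2, 4)]   # per-frame shifts of (layer 1, layer 2)
images = form_images([f1, f2], motions)
```

Because each `W` here is a permutation, total intensity is conserved in every frame; a real homography warp with interpolation would only satisfy this approximately.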

Two processing stages

• Estimate the motions and initial layer estimates
• Compute optimal layer estimates (for known motion)

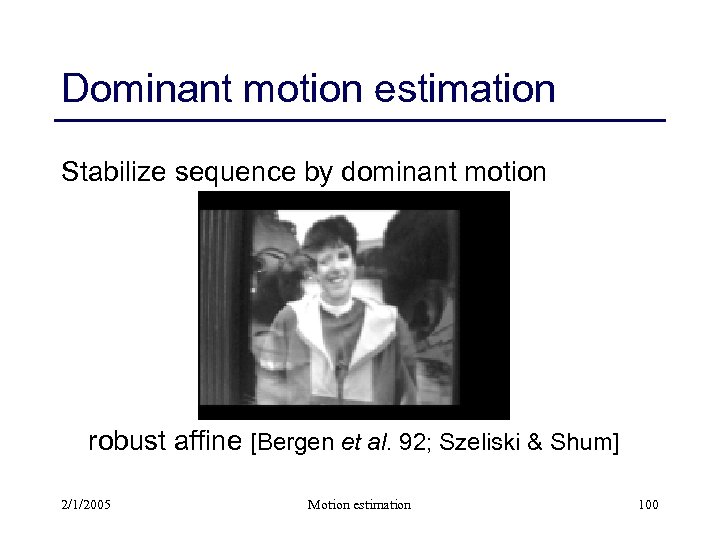

Dominant motion estimation

Stabilize sequence by dominant motion
• robust affine [Bergen et al. 92; Szeliski & Shum]

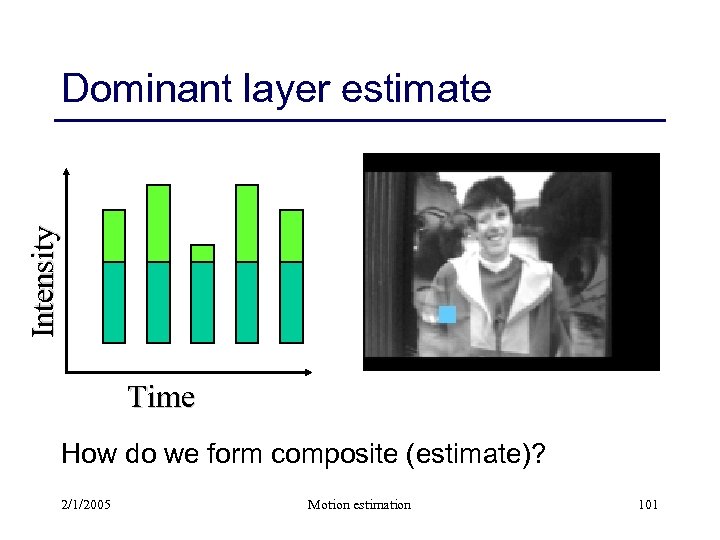

Dominant layer estimate

How do we form composite (estimate)?

[Plot: intensity vs. time]

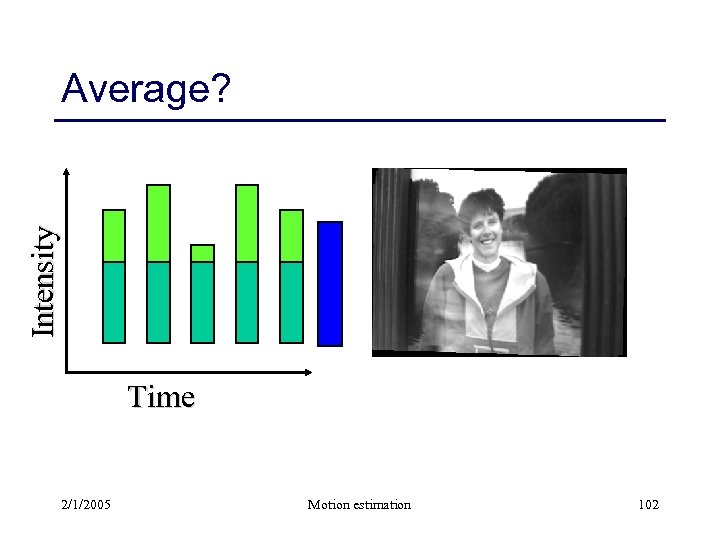

Average?

[Plot: intensity vs. time]

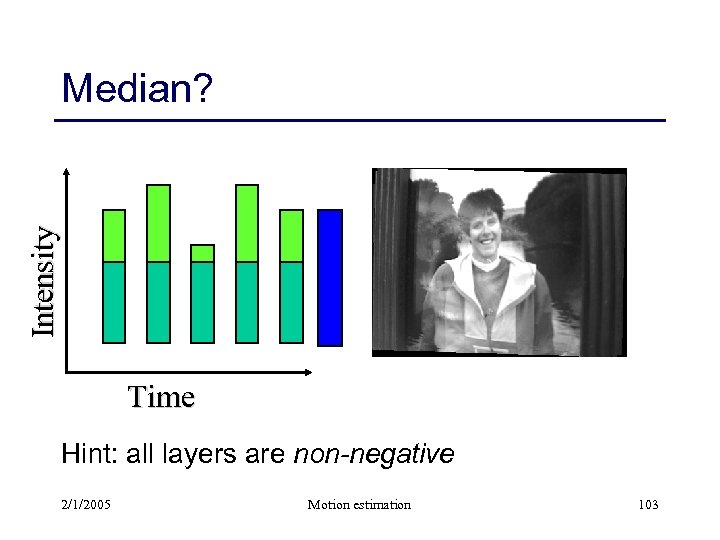

Median?

Hint: all layers are non-negative

[Plot: intensity vs. time]

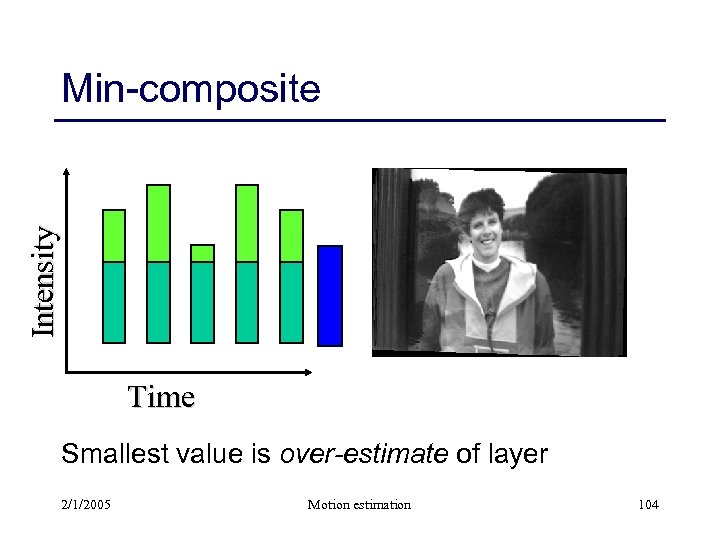

Min-composite

Smallest value is over-estimate of layer

[Plot: intensity vs. time]
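A small sketch of why the minimum over time is an upper bound. It assumes 1-D signals, a sequence already stabilized on the dominant layer, and a secondary layer that moves by one pixel per frame as a circular shift; the layer values are made up for illustration.

```python
def shift(signal, d):
    """Circularly shift a 1-D signal right by d pixels (stand-in for a warp)."""
    n = len(signal)
    return [signal[(i - d) % n] for i in range(n)]


f1 = [0, 5, 9, 5, 0, 0]   # dominant layer, static after stabilization
f2 = [1, 1, 4, 1, 1, 8]   # secondary layer, strictly positive everywhere

# Stabilized frames: dominant layer fixed, secondary layer drifting across it.
stabilized = [[a + b for a, b in zip(f1, shift(f2, t))] for t in range(4)]

# Min-composite: per-pixel minimum over time.
min_composite = [min(column) for column in zip(*stabilized)]

# Since the other layer is non-negative, every sample is >= f1,
# so the minimum is still >= f1 (an over-estimate).
overshoot = [c - a for c, a in zip(min_composite, f1)]
```

Here the secondary layer never drops below 1, so the min-composite exceeds the true layer by exactly 1 at every pixel; the bound is only tight where the other layer passes through a black region.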

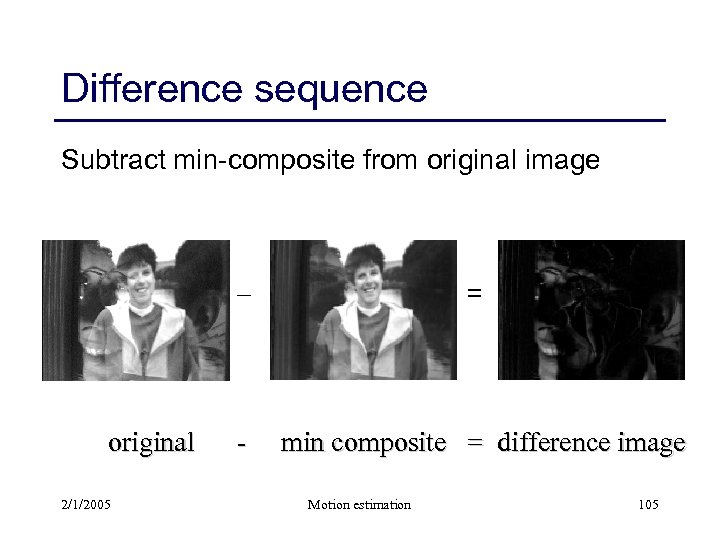

Difference sequence

Subtract min-composite from original image

original − min composite = difference image

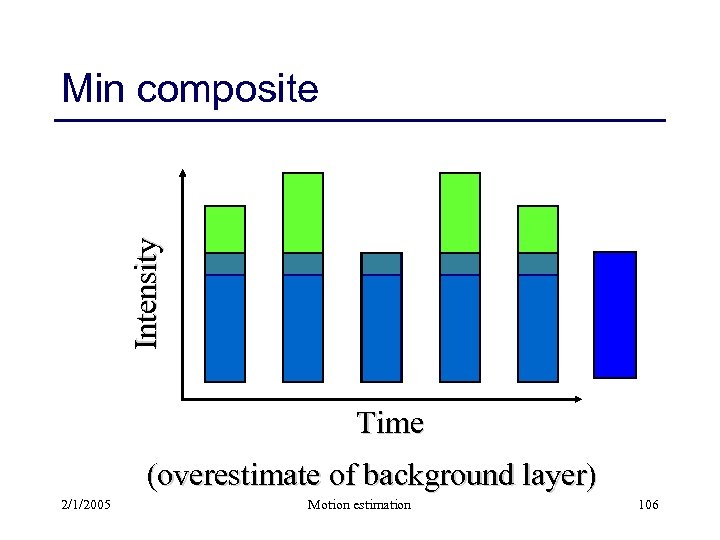

Min composite (overestimate of background layer)

[Plot: intensity vs. time]

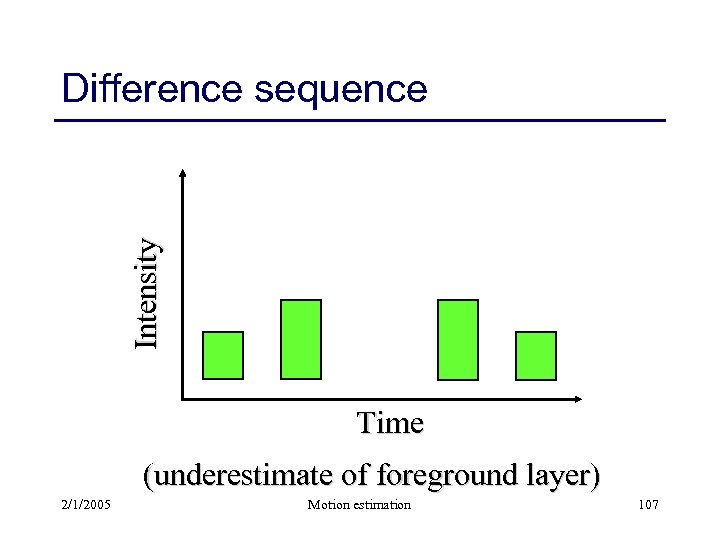

Difference sequence (underestimate of foreground layer)

[Plot: intensity vs. time]

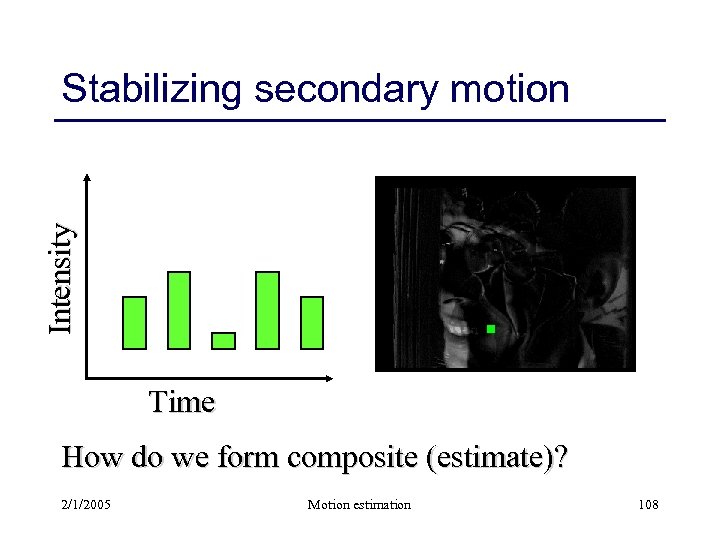

Stabilizing secondary motion

How do we form composite (estimate)?

[Plot: intensity vs. time]

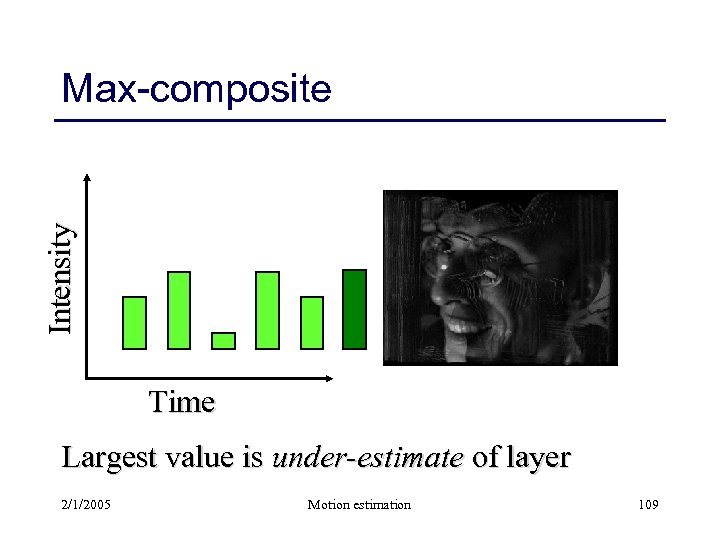

Max-composite

Largest value is under-estimate of layer

[Plot: intensity vs. time]
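The difference sequence and max-composite can be sketched in the same toy setting. Assumptions, none of them from the lecture: 1-D signals, two frames, circular integer shifts, and known motion for both layers.

```python
def shift(signal, d):
    """Circularly shift a 1-D signal right by d pixels (stand-in for a warp)."""
    n = len(signal)
    return [signal[(i - d) % n] for i in range(n)]


f1 = [0, 5, 9, 5, 0, 0]   # dominant layer, static after stabilization
f2 = [1, 1, 4, 4, 1, 8]   # secondary layer, moves 1 pixel per frame
num_frames = 2

frames = [[a + b for a, b in zip(f1, shift(f2, t))] for t in range(num_frames)]

# Over-estimate of the dominant layer.
min_composite = [min(column) for column in zip(*frames)]

# Difference sequence: each frame minus the min-composite.
# Subtracting an over-estimate leaves an under-estimate of the other layer.
differences = [[m - c for m, c in zip(frame, min_composite)] for frame in frames]

# Stabilize the differences on the secondary motion, then take the per-pixel
# maximum: the largest of several lower bounds is the tightest one.
restabilized = [shift(d, -t) for t, d in enumerate(differences)]
max_composite = [max(column) for column in zip(*restabilized)]
```

Each individual difference image misses part of the secondary layer (pixel 3 in the first frame, pixel 2 in the second), while the max-composite recovers both and still stays below the true layer.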

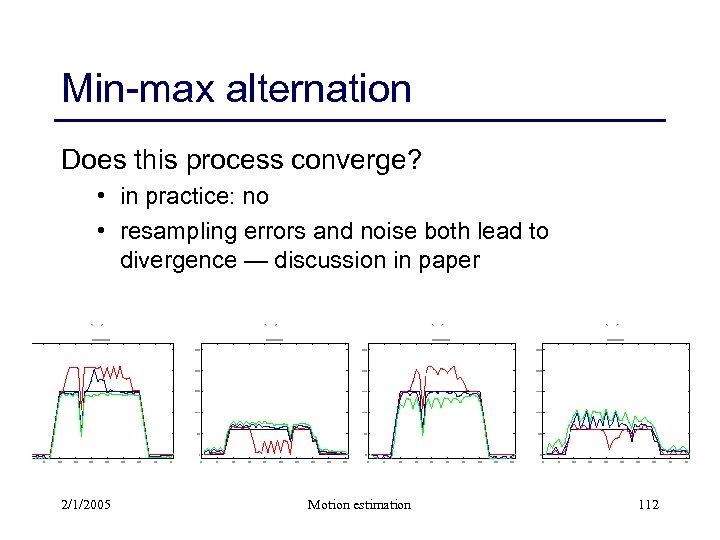

Min-max alternation

• Subtract secondary layer (under-estimate) from original sequence
• Re-compute dominant motion and better min-composite
• Iterate …

Does this process converge?

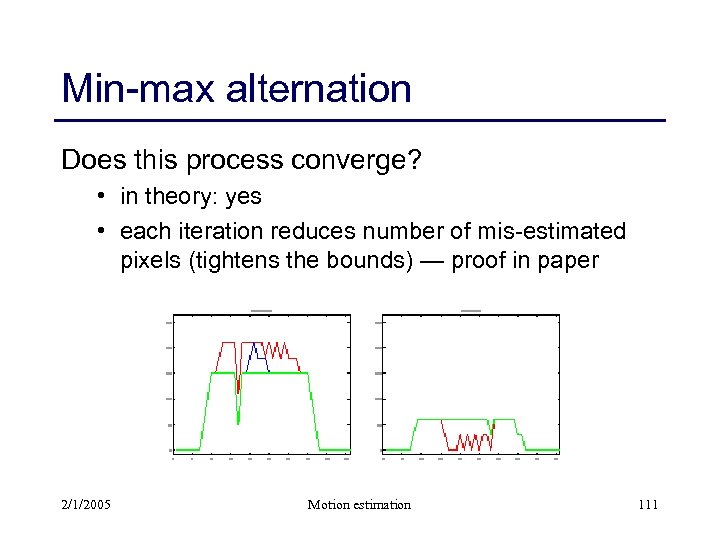

Min-max alternation

Does this process converge?
• in theory: yes
• each iteration reduces number of mis-estimated pixels (tightens the bounds); proof in paper

Min-max alternation

Does this process converge?
• in practice: no
• resampling errors and noise both lead to divergence; discussion in paper

[Figure panels: resampling error; noisy]

Two processing stages

• Estimate the motions and initial layer estimates
• Compute optimal layer estimates (for known motion)

Optimal estimation

Recall: additive mixing of positive signals

$m_k = \sum_l W_{kl} f_l$

Use constrained least squares (quadratic programming)

$\min \sum_k \left\| \sum_l W_{kl} f_l - m_k \right\|^2 \quad \text{s.t.} \quad f_l \ge 0$
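A minimal sketch of the constrained problem, under stated assumptions: 1-D layers, known circular-shift warps in place of homographies, and projected gradient descent swapped in as the solver (the slide only says quadratic programming; a library non-negative least-squares routine would be the more usual choice). The function names and test signals are hypothetical.

```python
def shift(signal, d):
    """Circular shift by d pixels; stands in for W_kl (its transpose is shift by -d)."""
    n = len(signal)
    return [signal[(i - d) % n] for i in range(n)]


def residuals(layers, motions, images):
    """r_k = sum_l W_kl f_l - m_k for every frame k."""
    out = []
    for shifts, m in zip(motions, images):
        pred = [0.0] * len(m)
        for f, d in zip(layers, shifts):
            pred = [p + v for p, v in zip(pred, shift(f, d))]
        out.append([p - v for p, v in zip(pred, m)])
    return out


def solve_layers(motions, images, n_layers, iters=5000, nonneg=True):
    """(Projected) gradient descent on sum_k |sum_l W_kl f_l - m_k|^2."""
    n = len(images[0])
    layers = [[0.0] * n for _ in range(n_layers)]
    # Step 1/L; L = 2 * (frames * layers) bounds the gradient's Lipschitz
    # constant when every warp is a permutation, as assumed here.
    step = 1.0 / (2 * len(images) * n_layers)
    for _ in range(iters):
        res = residuals(layers, motions, images)
        for l in range(n_layers):
            grad = [0.0] * n
            for shifts, r in zip(motions, res):
                back = shift(r, -shifts[l])          # W_kl^T r_k
                grad = [g + 2 * b for g, b in zip(grad, back)]
            new = [f - step * g for f, g in zip(layers[l], grad)]
            # Projection onto f_l >= 0 enforces the constraint.
            layers[l] = [max(0.0, v) for v in new] if nonneg else new
    return layers


f1 = [0, 5, 9, 5, 0, 0]
f2 = [0, 0, 3, 0, 0, 7]
motions = [(0, 0), (1, 2), (2, 4)]   # per-frame shifts of (layer 1, layer 2)
images = [[a + b for a, b in zip(shift(f1, d1), shift(f2, d2))]
          for d1, d2 in motions]

constrained = solve_layers(motions, images, 2)
unconstrained = solve_layers(motions, images, 2, nonneg=False)
```

In this example the unconstrained solution fits the images equally well but pushes a constant offset from one layer into the other, producing negative intensities; the non-negativity constraint removes that freedom because both layers contain black pixels.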

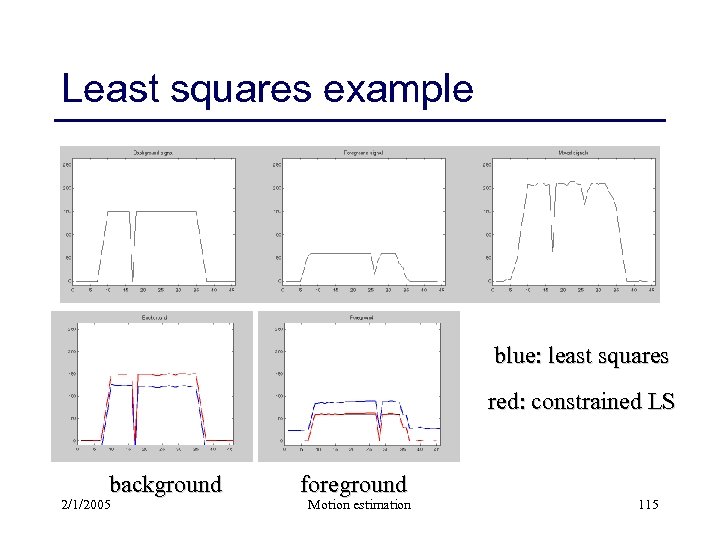

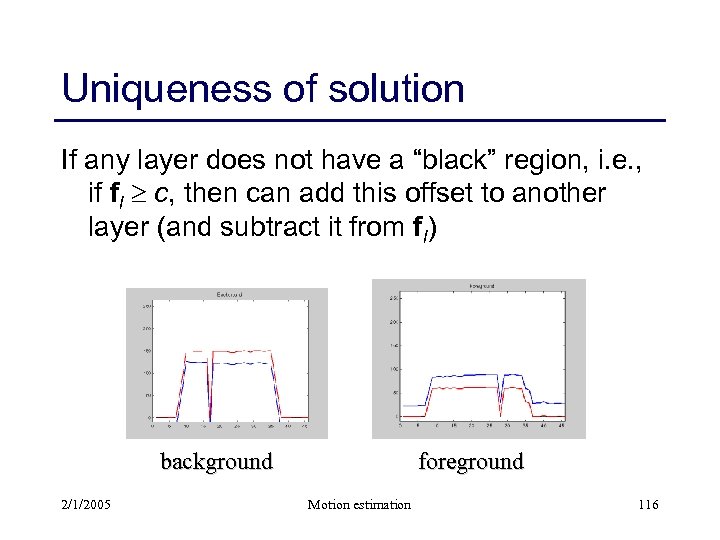

Least squares example

• blue: least squares
• red: constrained LS

[Figure panels: background; foreground]

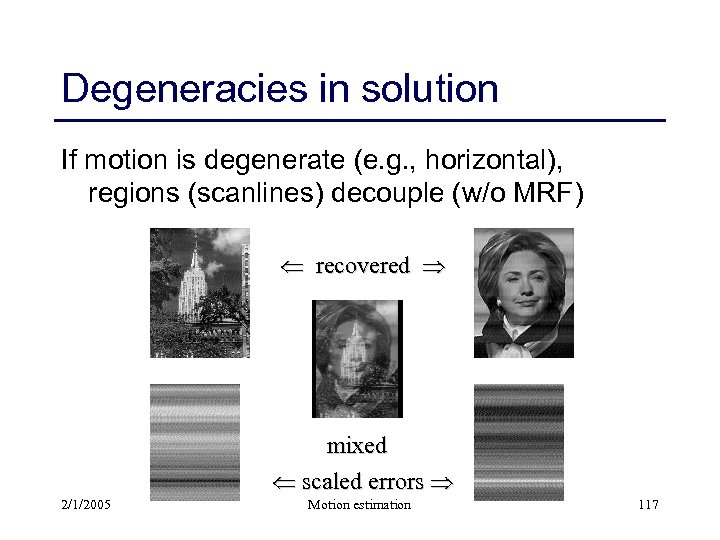

Uniqueness of solution

If any layer does not have a “black” region, i.e., if $f_l \ge c$ for some constant $c > 0$, then can add this offset to another layer (and subtract it from $f_l$)

[Figure panels: background; foreground]
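The ambiguity follows directly from the mixing model. The short derivation below assumes that every warp maps a constant image to the same constant, $W_{kl}\mathbf{1} = \mathbf{1}$, which holds for interpolating resampling away from image borders; this assumption is not stated on the slide. Take two layers $f_a \ge c$ and $f_b$:

$$
W_{ka}(f_a - c\,\mathbf{1}) + W_{kb}(f_b + c\,\mathbf{1})
= W_{ka} f_a + W_{kb} f_b - c\,W_{ka}\mathbf{1} + c\,W_{kb}\mathbf{1}
= W_{ka} f_a + W_{kb} f_b = m_k .
$$

Both $f_a - c\,\mathbf{1}$ and $f_b + c\,\mathbf{1}$ remain non-negative, so the constraint $f_l \ge 0$ cannot tell the two solutions apart. If $f_a$ reaches zero somewhere, no positive offset can be subtracted from it without violating the constraint.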

Degeneracies in solution

If motion is degenerate (e.g., horizontal), regions (scanlines) decouple (w/o MRF)

[Figure panels: mixed; recovered; scaled errors]

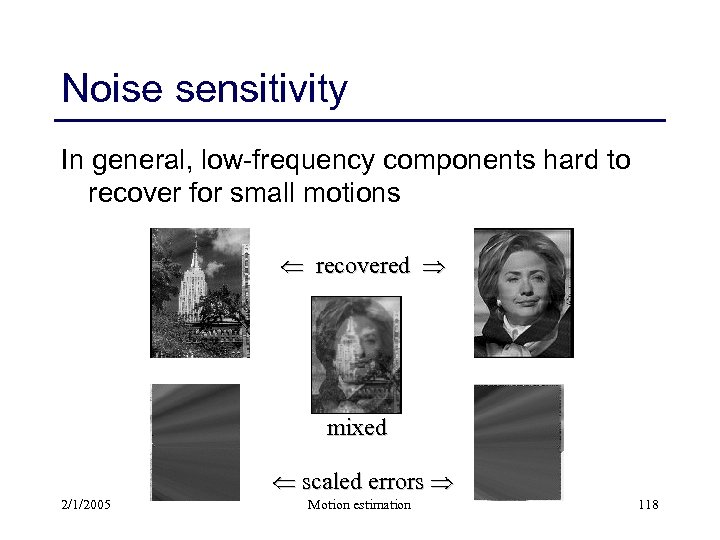

Noise sensitivity

In general, low-frequency components hard to recover for small motions

[Figure panels: mixed; recovered; scaled errors]
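One way to see this is a simplified frequency-domain argument. It is a sketch under assumptions that are not on the slide: two layers, 1-D signals, pure translation, $K$ frames, with layer 1 stabilized and layer 2 displaced by $\delta_k$ in frame $k$, so that $m_k(x) = f_1(x) + f_2(x - \delta_k)$. In the Fourier domain each frequency $\omega$ decouples:

$$
M_k(\omega) = F_1(\omega) + e^{-i\omega\delta_k} F_2(\omega),
\qquad
\sum_k A_k^{H} A_k =
\begin{bmatrix} K & s(\omega) \\ \overline{s(\omega)} & K \end{bmatrix},
\quad
A_k = \begin{bmatrix} 1 & e^{-i\omega\delta_k} \end{bmatrix},
\quad
s(\omega) = \sum_k e^{-i\omega\delta_k}.
$$

The normal matrix has eigenvalues $K \pm |s(\omega)|$. For small $\omega\,\delta_k$,

$$
K - |s(\omega)| \approx \frac{\omega^2}{4K} \sum_{j,k} (\delta_j - \delta_k)^2 ,
$$

so the smaller eigenvalue vanishes as either the frequency or the spread of displacements goes to zero, and noise in that component is amplified by roughly its inverse. At $\omega = 0$ the eigenvalue is exactly zero, which is the constant-offset ambiguity.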

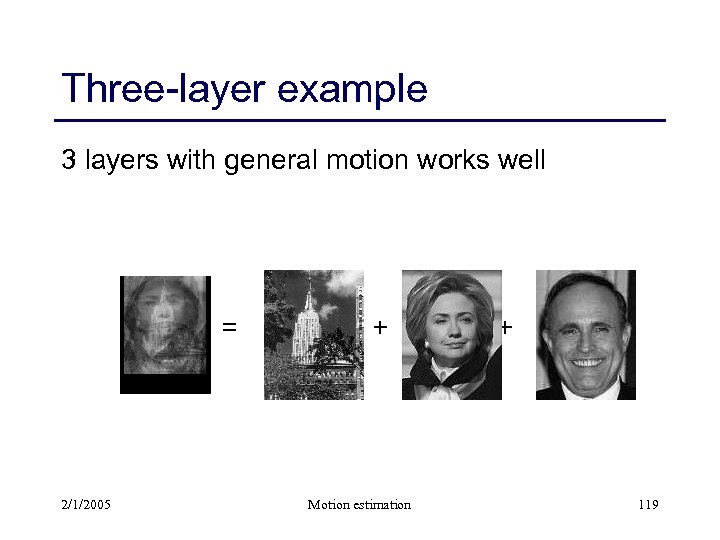

Three-layer example

3 layers with general motion works well

[Figure: composite = layer + layer + layer]

Complete algorithm

• Dominant motion with min-composites
• Difference (residual) images
• Non-dominant motion on differences
• Improve the motion estimates
• Unconstrained least-squares problem
• Constrained least-squares problem

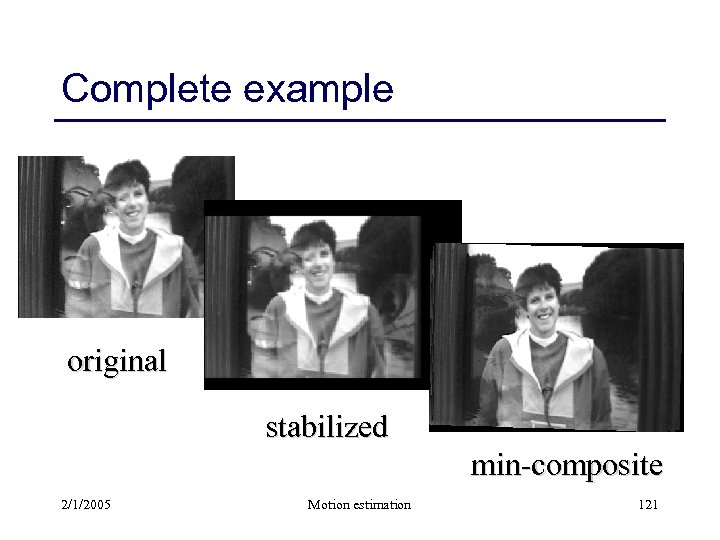

Complete example

[Figure panels: original; stabilized; min-composite]

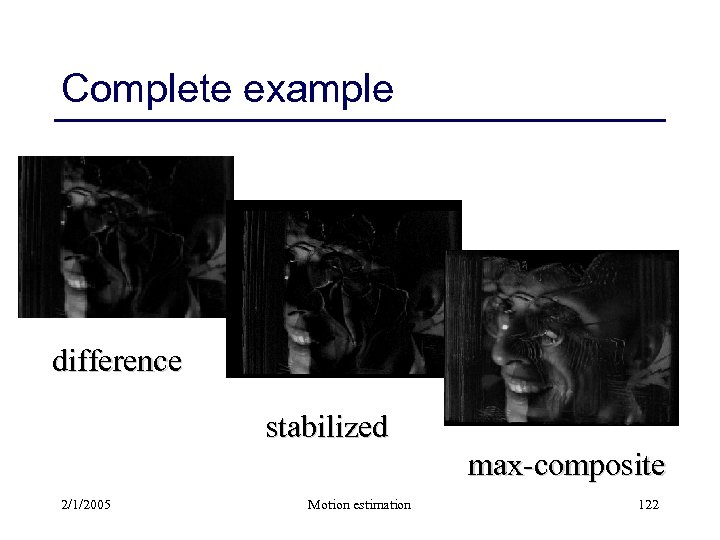

Complete example

[Figure panels: difference; stabilized; max-composite]

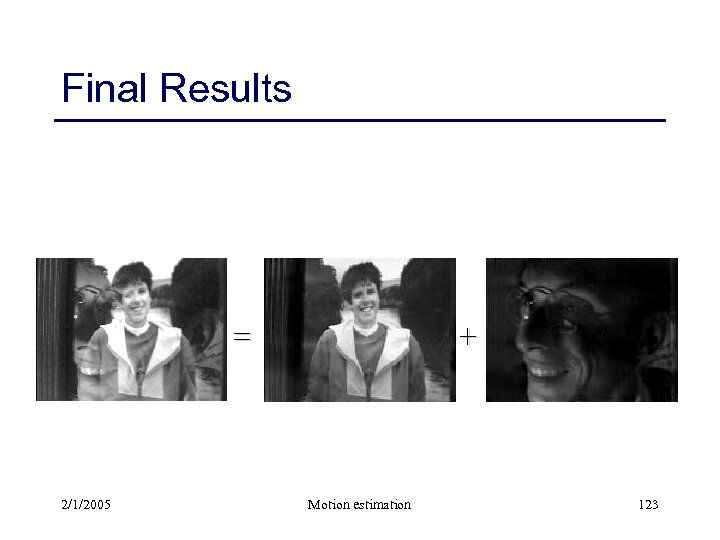

Final Results

[Figure: original = layer + layer]

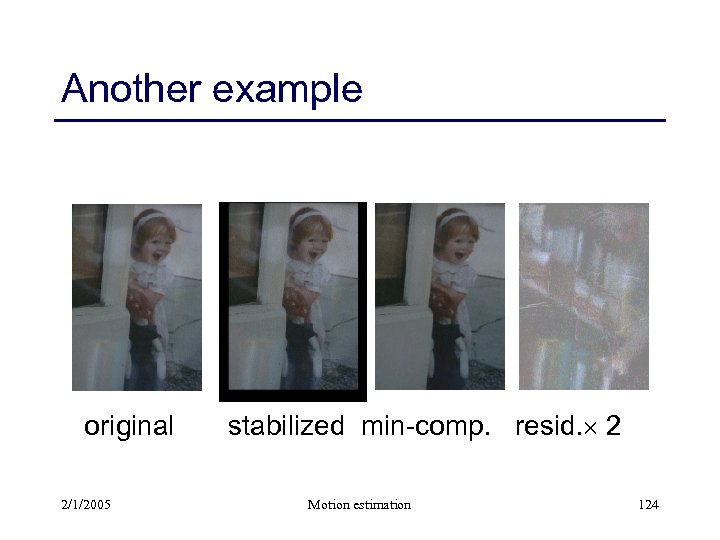

Another example

[Figure panels: original; stabilized; min-comp.; resid. 2]

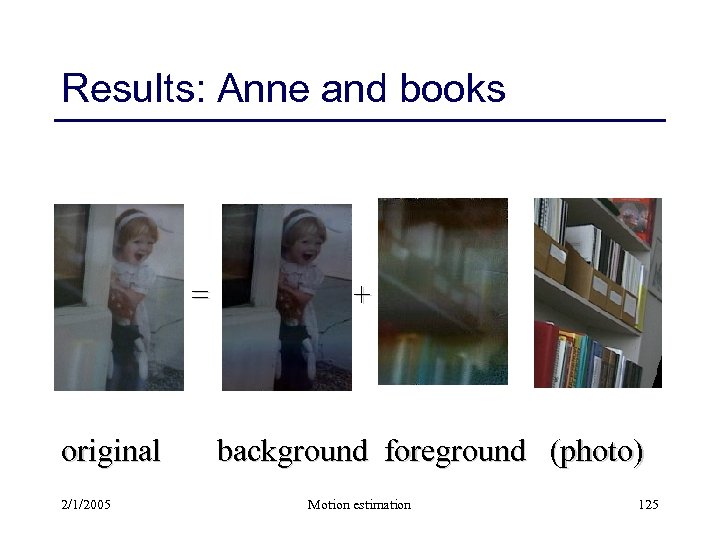

Results: Anne and books

[Figure: original = background + foreground (photo)]

Transparent layer recovery

Pure (additive) mixing of intensities
• simple constrained least squares problem
• degeneracies for simple or small motions

Processing stages
• dominant motion estimation
• min- and max-composites to initialize
• optimization of motion and layers

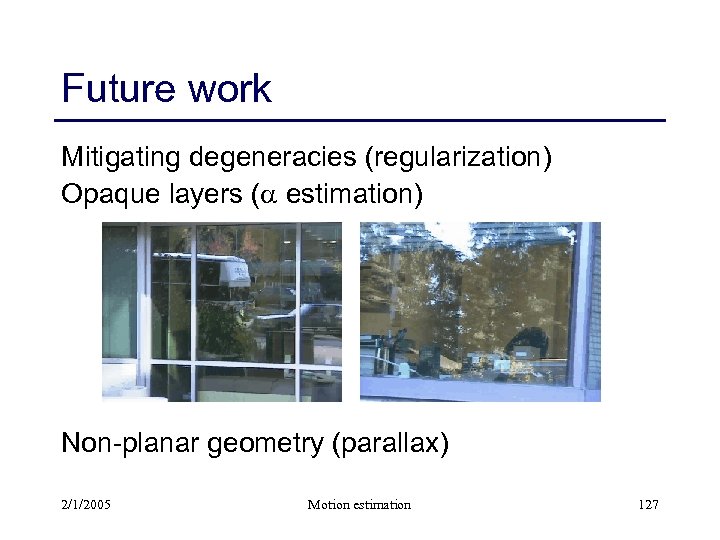

Future work

• Mitigating degeneracies (regularization)
• Opaque layers (α estimation)
• Non-planar geometry (parallax)

Bibliography

• L. Williams. Pyramidal parametrics. Computer Graphics, 17(3): 1–11, July 1983.
• L. G. Brown. A survey of image registration techniques. Computing Surveys, 24(4): 325–376, December 1992.
• C. D. Kuglin and D. C. Hines. The phase correlation image alignment method. In IEEE 1975 Conference on Cybernetics and Society, pages 163–165, New York, September 1975.
• J. Gomes, L. Darsa, B. Costa, and L. Velho. Warping and Morphing of Graphical Objects. Morgan Kaufmann Publishers, San Francisco, California, 1999.
• G. M. Nielson. Scattered data modeling. IEEE Computer Graphics and Applications, 13(1): 60–70, January 1993.
• T. Beier and S. Neely. Feature-based image metamorphosis. Computer Graphics (SIGGRAPH'92), 26(2): 35–42, July 1992.

Bibliography

• J. R. Bergen, P. Anandan, K. J. Hanna, and R. Hingorani. Hierarchical model-based motion estimation. In ECCV’92, pp. 237–252, Italy, May 1992.
• M. J. Black and P. Anandan. The robust estimation of multiple motions: Parametric and piecewise-smooth flow fields. Comp. Vis. Image Understanding, 63(1): 75–104, 1996.
• H. S. Sawhney and S. Ayer. Compact representation of videos through dominant multiple motion estimation. IEEE Trans. Patt. Anal. Mach. Intel., 18(8): 814–830, Aug. 1996.
• Y. Weiss. Smoothness in layers: Motion segmentation using nonparametric mixture estimation. In CVPR’97, pp. 520–526, June 1997.

Bibliography

• J. Y. A. Wang and E. H. Adelson. Representing moving images with layers. IEEE Transactions on Image Processing, 3(5): 625–638, September 1994.
• Y. Weiss and E. H. Adelson. A unified mixture framework for motion segmentation: Incorporating spatial coherence and estimating the number of models. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'96), pages 321–326, San Francisco, California, June 1996.
• Y. Weiss. Smoothness in layers: Motion segmentation using nonparametric mixture estimation. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'97), pages 520–526, San Juan, Puerto Rico, June 1997.
• P. R. Hsu, P. Anandan, and S. Peleg. Accurate computation of optical flow by using layered motion representations. In Twelfth International Conference on Pattern Recognition (ICPR'94), pages 743–746, Jerusalem, Israel, October 1994. IEEE Computer Society Press.

Bibliography

• T. Darrell and A. Pentland. Cooperative robust estimation using layers of support. IEEE Transactions on Pattern Analysis and Machine Intelligence, 17(5): 474–487, May 1995.
• S. X. Ju, M. J. Black, and A. D. Jepson. Skin and bones: Multi-layer, locally affine, optical flow and regularization with transparency. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'96), pages 307–314, San Francisco, California, June 1996.
• M. Irani, B. Rousso, and S. Peleg. Computing occluding and transparent motions. International Journal of Computer Vision, 12(1): 5–16, January 1994.
• H. S. Sawhney and S. Ayer. Compact representation of videos through dominant multiple motion estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 18(8): 814–830, August 1996.
• M.-C. Lee et al. A layered video object coding system using sprite and affine motion model. IEEE Transactions on Circuits and Systems for Video Technology, 7(1): 130–145, February 1997.

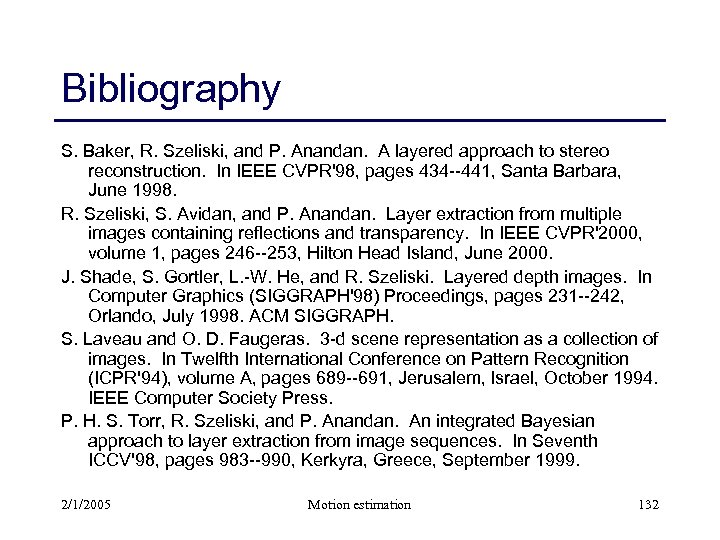

Bibliography

• S. Baker, R. Szeliski, and P. Anandan. A layered approach to stereo reconstruction. In IEEE CVPR'98, pages 434–441, Santa Barbara, June 1998.
• R. Szeliski, S. Avidan, and P. Anandan. Layer extraction from multiple images containing reflections and transparency. In IEEE CVPR'2000, volume 1, pages 246–253, Hilton Head Island, June 2000.
• J. Shade, S. Gortler, L.-W. He, and R. Szeliski. Layered depth images. In Computer Graphics (SIGGRAPH'98) Proceedings, pages 231–242, Orlando, July 1998. ACM SIGGRAPH.
• S. Laveau and O. D. Faugeras. 3-d scene representation as a collection of images. In Twelfth International Conference on Pattern Recognition (ICPR'94), volume A, pages 689–691, Jerusalem, Israel, October 1994. IEEE Computer Society Press.
• P. H. S. Torr, R. Szeliski, and P. Anandan. An integrated Bayesian approach to layer extraction from image sequences. In Seventh ICCV'99, pages 983–990, Kerkyra, Greece, September 1999.
