be8d6f0fb710b23e5b2ce43e6902f9c1.ppt

- Number of slides: 60

Monitoring, Control and Optimization in Large Distributed Systems

ECSAC, August 2009, Veli Lošinj

Iosif Legrand, California Institute of Technology

Monitoring Distributed Systems

An essential part of managing large-scale, distributed data processing facilities is a monitoring system that is able to monitor computing facilities, storage systems, networks and a very large number of applications running on these systems in near real time.

The monitoring information gathered for all the subsystems is essential for design, debugging and accounting, for the development of “higher level services” that provide decision support and some degree of automated decisions, and for maintaining and optimizing workflow in large-scale distributed systems.

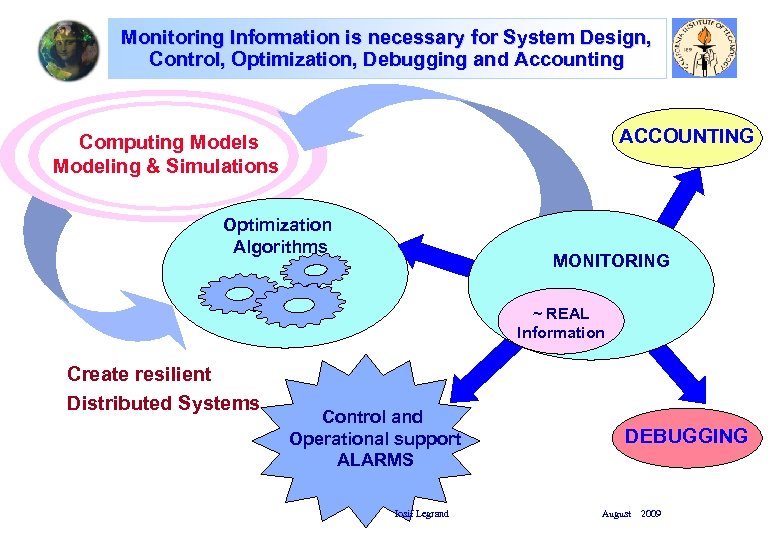

Monitoring Information is necessary for System Design, Control, Optimization, Debugging and Accounting

Diagram labels: MONITORING (~ REAL Information); Accounting; Computing Models; Modeling & Simulations; Optimization Algorithms; Create resilient Distributed Systems; Control and Operational support; Alarms; Debugging.

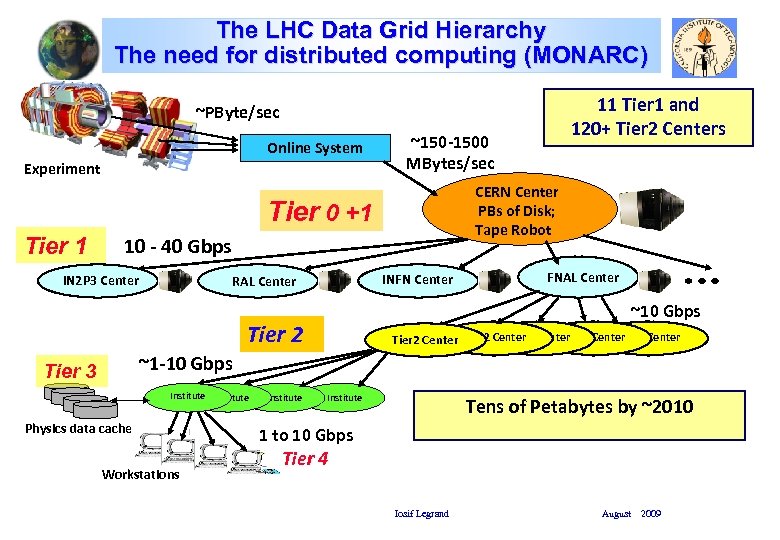

The LHC Data Grid Hierarchy

The need for distributed computing (MONARC): 11 Tier 1 and 120+ Tier 2 Centers. Tens of Petabytes by ~2010.

- Experiment to Online System: ~PByte/sec
- Online System to CERN Center (Tier 0+1; PBs of disk, tape robot): ~150-1500 MBytes/sec
- Tier 0+1 to Tier 1 (IN2P3 Center, INFN Center, RAL Center, FNAL Center): 10-40 Gbps
- Tier 1 to Tier 2 Centers: ~10 Gbps
- Tier 2 to Tier 3 (Institutes, physics data cache): ~1-10 Gbps
- Tier 3 to Tier 4 (Workstations): 1 to 10 Gbps

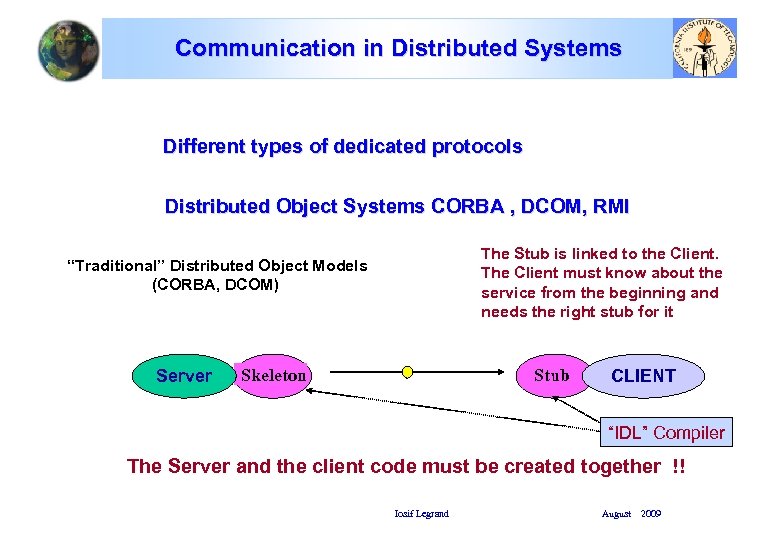

Communication in Distributed Systems

Different types of dedicated protocols.

Distributed Object Systems: CORBA, DCOM, RMI. “Traditional” Distributed Object Models (CORBA, DCOM):

- The Stub is linked to the Client.
- The Client must know about the service from the beginning and needs the right stub for it.
- The Server and the Client code must be created together (“IDL” Compiler).

Diagram labels: Client, Stub, Lookup, Lookup Service, Server, Skeleton, Service.

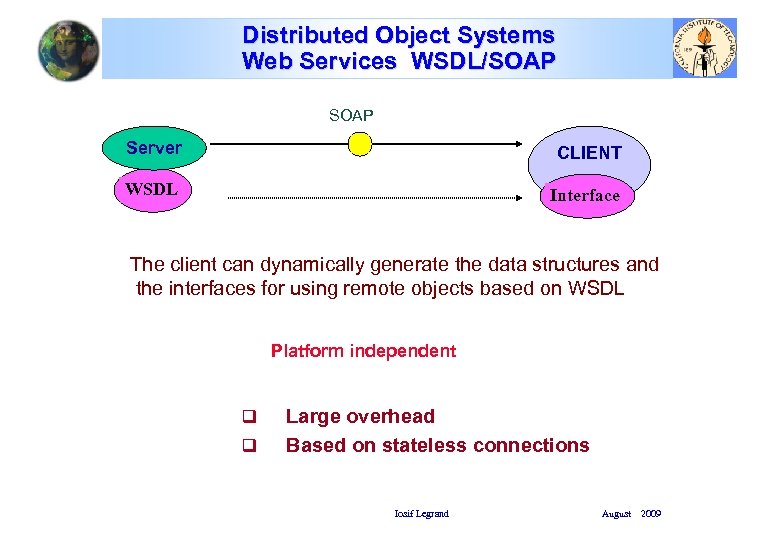

Distributed Object Systems: Web Services (WSDL/SOAP)

The client can dynamically generate the data structures and the interfaces for using remote objects based on WSDL.

- Platform independent
- Large overhead
- Based on stateless connections

Diagram labels: Client, Lookup, WSDL, Lookup Service, Server, Interface, Service.

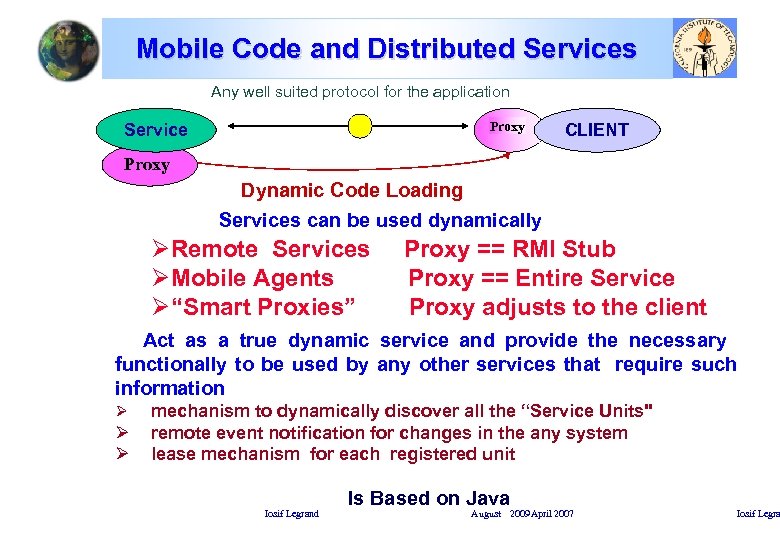

Mobile Code and Distributed Services

Any well suited protocol for the application. Dynamic code loading: services can be used dynamically.

- Remote Services: Proxy == RMI Stub
- Mobile Agents: Proxy == Entire Service
- “Smart Proxies”: Proxy adjusts to the client

Act as a true dynamic service and provide the necessary functionality to be used by any other services that require such information:

- mechanism to dynamically discover all the “Service Units”
- remote event notification for changes in any system
- lease mechanism for each registered unit

Is based on Java.

Diagram labels: Client, Lookup, Proxy, Lookup Service, Service.
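The lease mechanism and remote event notification listed above can be illustrated with a small sketch. This is a toy in-memory registry written in Python for brevity, not the Jini API that the slides refer to; the class name `LeaseRegistry`, its methods and the event names are all hypothetical. It shows only the core idea: a registered unit disappears from discovery unless it renews its lease, and listeners are told about the change.

```python
import time
from typing import Callable, Dict, List


class LeaseRegistry:
    """Toy lookup service: a unit stays registered only while its lease is valid."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock                      # injectable clock, eases testing
        self._expiry: Dict[str, float] = {}      # unit name -> lease expiry time
        self._listeners: List[Callable[[str, str], None]] = []

    def subscribe(self, listener: Callable[[str, str], None]) -> None:
        """Register a callback invoked as listener(event, unit_name)."""
        self._listeners.append(listener)

    def _notify(self, event: str, name: str) -> None:
        for listener in self._listeners:
            listener(event, name)

    def register(self, name: str, lease_seconds: float) -> None:
        """Register a unit, or renew its lease if it is already known."""
        is_new = name not in self._expiry
        self._expiry[name] = self._clock() + lease_seconds
        if is_new:
            self._notify("added", name)

    def expire(self) -> List[str]:
        """Drop every unit whose lease has run out and notify listeners."""
        now = self._clock()
        dead = [n for n, t in self._expiry.items() if t <= now]
        for name in dead:
            del self._expiry[name]
            self._notify("removed", name)
        return dead

    def discover(self) -> List[str]:
        """Return the names of all units that still hold a valid lease."""
        self.expire()
        return sorted(self._expiry)
```

A unit that crashes simply stops renewing, so it vanishes from `discover()` after at most one lease period, without anyone having to detect the failure explicitly.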

The MonALISA Framework

- MonALISA is a Dynamic, Distributed Service System able to collect any type of information from different systems, to analyze it in near real time and to provide support for automated control decisions and global optimization of workflows in complex grid systems.
- The MonALISA system is designed as an ensemble of autonomous, multithreaded, self-describing agent-based subsystems which are registered as dynamic services and are able to collaborate and cooperate in performing a wide range of monitoring tasks. These agents can analyze and process the information in a distributed way and provide optimization decisions in large-scale distributed applications.

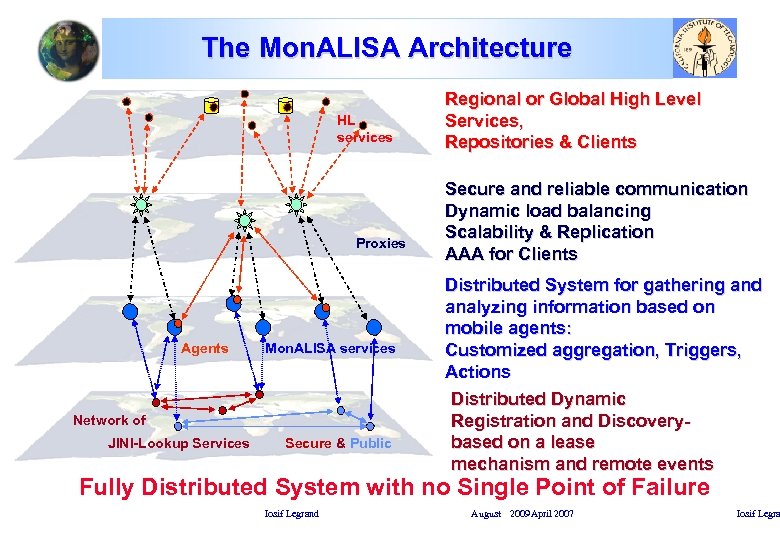

The MonALISA Architecture

- Regional or global high-level services, repositories and clients
- Proxies: secure and reliable communication; dynamic load balancing; scalability and replication; AAA for clients
- Agents and MonALISA services: distributed system for gathering and analyzing information based on mobile agents (customized aggregation, triggers, actions)
- Network of JINI Lookup Services (secure and public): distributed dynamic registration and discovery based on a lease mechanism and remote events

Fully distributed system with no single point of failure.

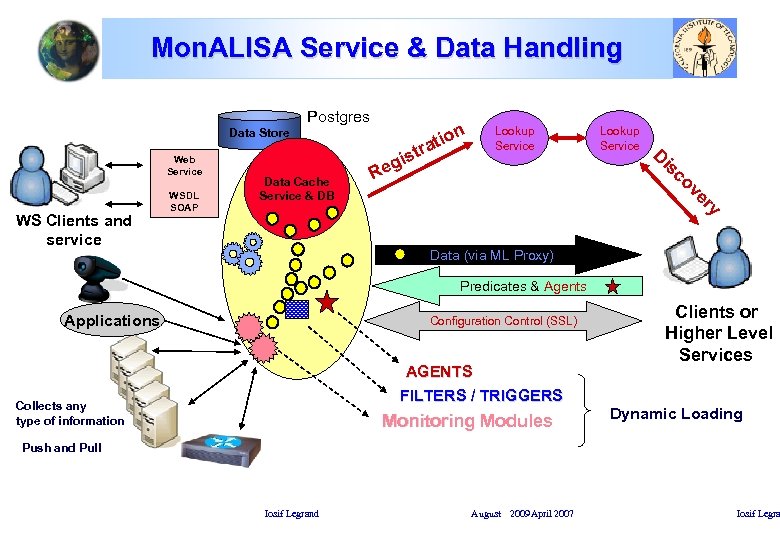

MonALISA Service & Data Handling

Diagram labels: Monitoring Modules (collect any type of information; dynamic loading; push and pull); Data Cache Service & DB; Data Store (Postgres); Agents; Filters / Triggers; Web Service (WSDL, SOAP) for WS clients and services; Lookup Service (Registration, Discovery); Data (via ML Proxy), Predicates & Agents for Clients or Higher Level Services; Applications; Configuration Control (SSL).

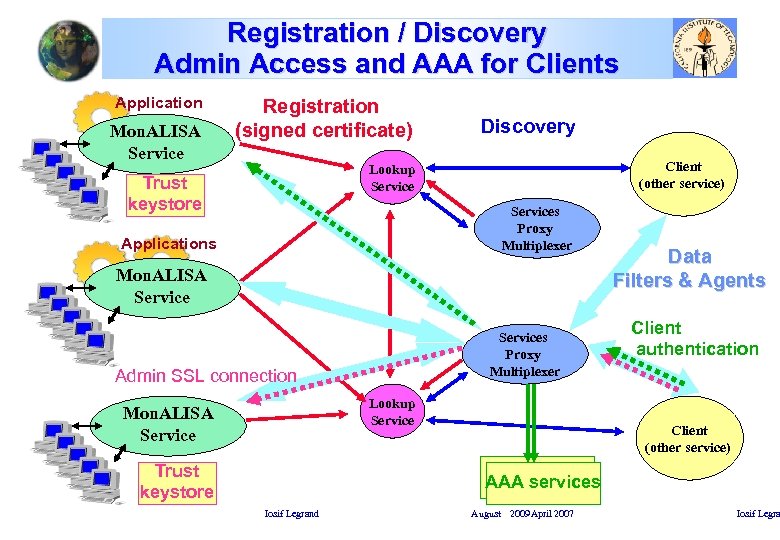

Registration / Discovery, Admin Access and AAA for Clients

Diagram labels: Applications; MonALISA Services (trust keystore; data, filters & agents); Registration (signed certificate); Lookup Services; Discovery; Services Proxy Multiplexer; Client (other service); Client authentication; Admin SSL connection; AAA services.

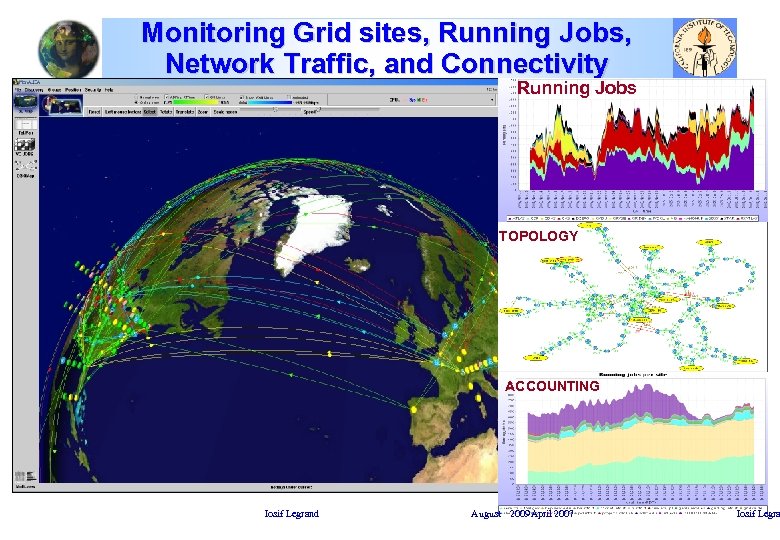

Monitoring Grid sites, Running Jobs, Network Traffic, and Connectivity

Panels: Running Jobs, Jobs, Topology, Accounting.

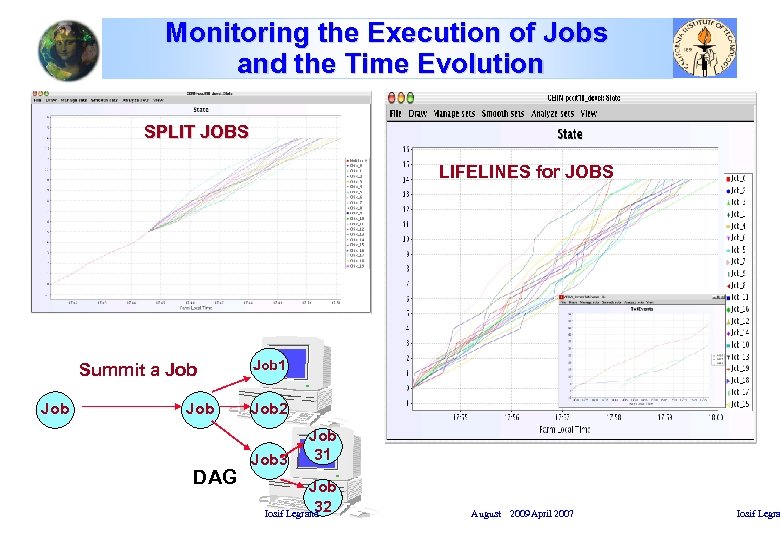

Monitoring the Execution of Jobs and the Time Evolution

Panels: Split Jobs; Lifelines for Jobs; Submit a Job; Job DAG (Job 1, Job 2, Job 31, Job 32).

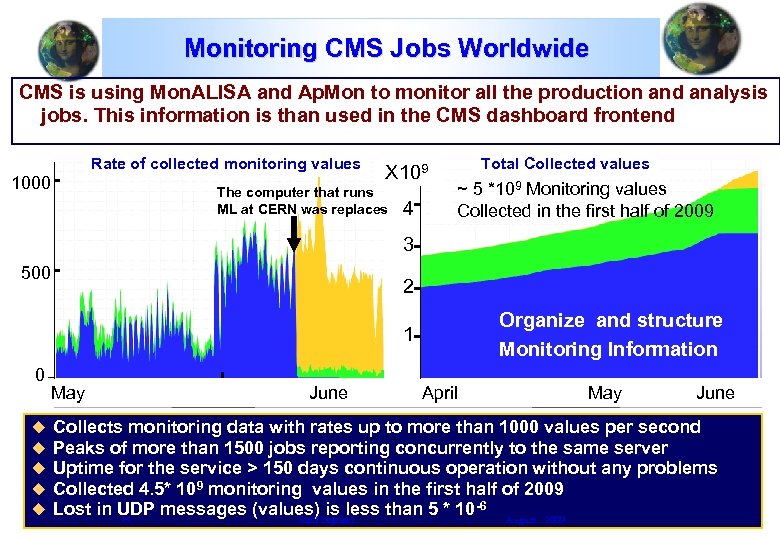

Monitoring CMS Jobs Worldwide

CMS is using MonALISA and ApMon to monitor all the production and analysis jobs. This information is then used in the CMS dashboard frontend. Organize and structure monitoring information.

- Collects monitoring data at rates of more than 1000 values per second
- Peaks of more than 1500 jobs reporting concurrently to the same server
- Uptime for the service: more than 150 days of continuous operation without any problems
- Collected 4.5 × 10^9 monitoring values in the first half of 2009
- Loss in UDP messages (values) is less than 5 × 10^-6

Plots (April to June 2009): rate of collected monitoring values (axis up to 1000); total collected values (~5 × 10^9). Annotation: the computer that runs ML at CERN was replaced.

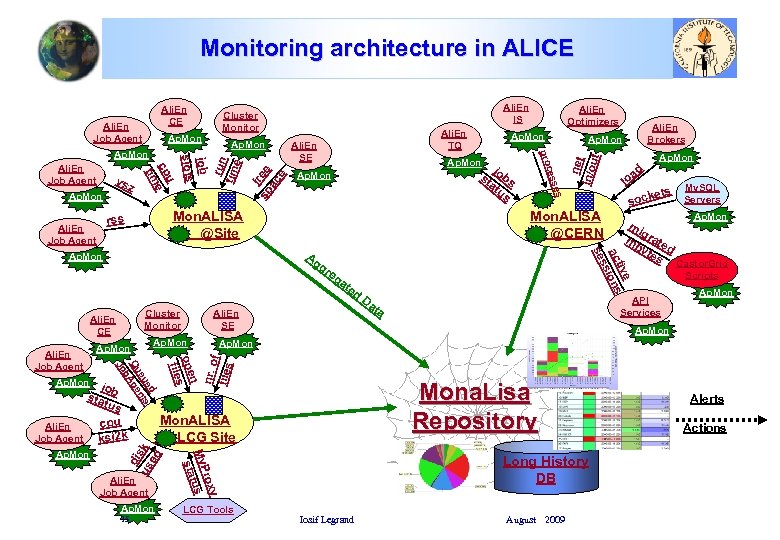

Monitoring architecture in ALICE

Diagram labels: ApMon instances attached to AliEn Job Agents, AliEn CE, AliEn SE, AliEn TQ, AliEn IS, AliEn Brokers, AliEn Optimizers, API Services, MySQL Servers, CastorGrid Scripts, MyProxy status and Cluster Monitor; MonALISA @Site; MonALISA LCG Site; LCG Tools; MonALISA @CERN; MonaLisa Repository; Long History DB; Alerts; Actions; Aggregated Data.

Monitored parameters shown: run time, open files, queued Job Agents, disk used, sockets, migrated MBytes, job status, jobs status, cpu ksi2k, nr. of files, active sessions, rss, vsz, processes, job slots, cpu time, free space, net in/out, load.

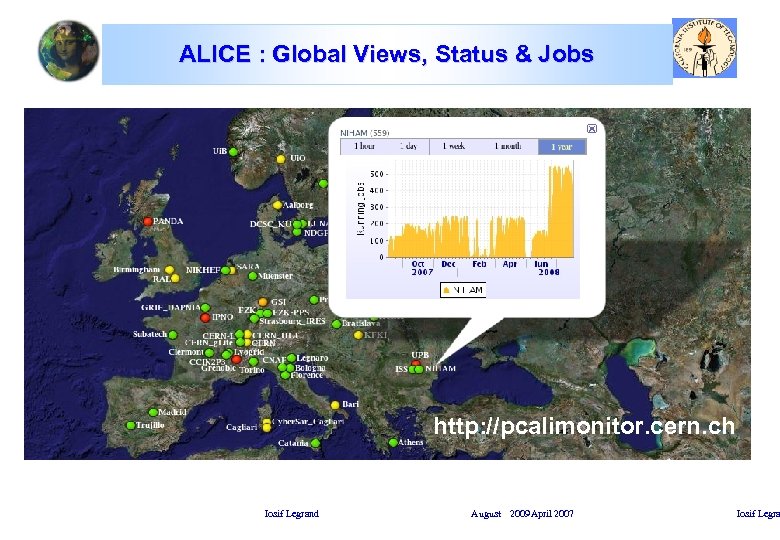

ALICE: Global Views, Status & Jobs

http://pcalimonitor.cern.ch

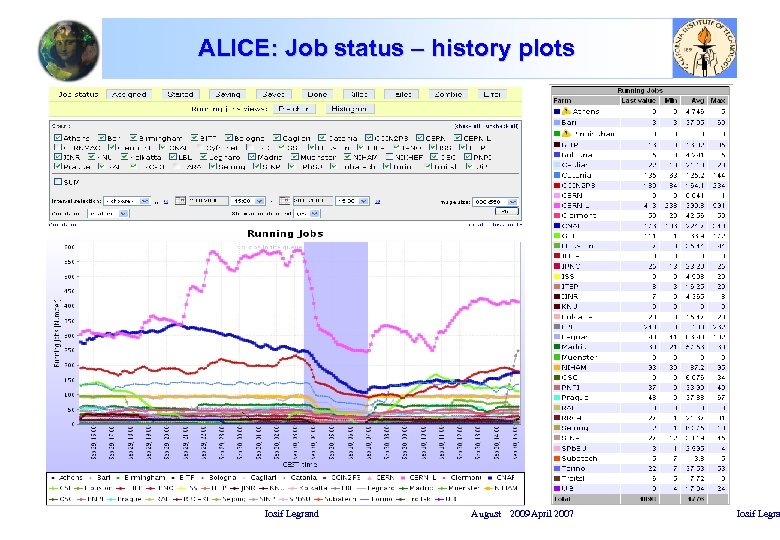

ALICE: Job status, history plots

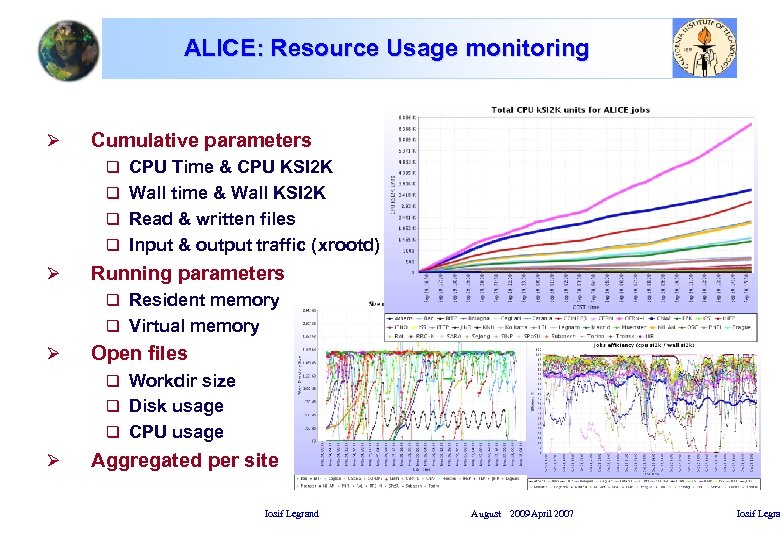

ALICE: Resource Usage monitoring

- Cumulative parameters
  - CPU time & CPU KSI2K
  - Wall time & Wall KSI2K
  - Read & written files
  - Input & output traffic (xrootd)
- Running parameters
  - Resident memory
  - Virtual memory
  - Open files
  - Workdir size
  - Disk usage
  - CPU usage
- Aggregated per site

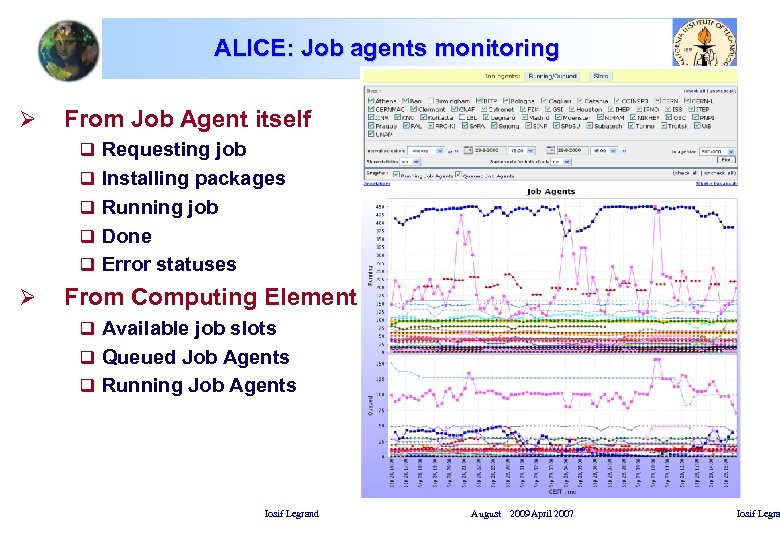

ALICE: Job agents monitoring

- From the Job Agent itself
  - Requesting job
  - Installing packages
  - Running job
  - Done
  - Error statuses
- From the Computing Element
  - Available job slots
  - Queued Job Agents
  - Running Job Agents

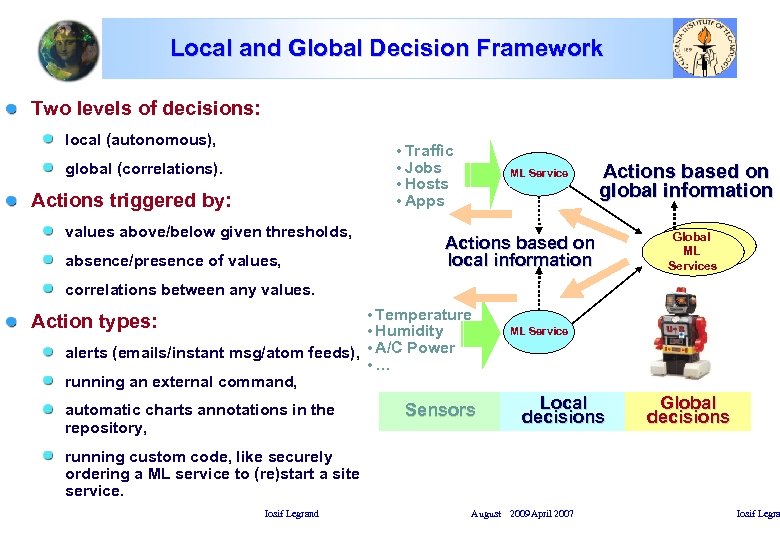

Local and Global Decision Framework

Two levels of decisions:

- local (autonomous),
- global (correlations).

Actions triggered by:

- values above/below given thresholds,
- absence/presence of values,
- correlations between any values.

Action types:

- alerts (emails/instant msg/atom feeds),
- running an external command,
- automatic chart annotations in the repository,
- running custom code, like securely ordering a ML service to (re)start a site service.

Diagram labels: Sensors (Traffic, Jobs, Hosts, Apps, Temperature, Humidity, A/C Power, …); ML Service: actions based on local information (local decisions); Global ML Services: actions based on global information (global decisions).

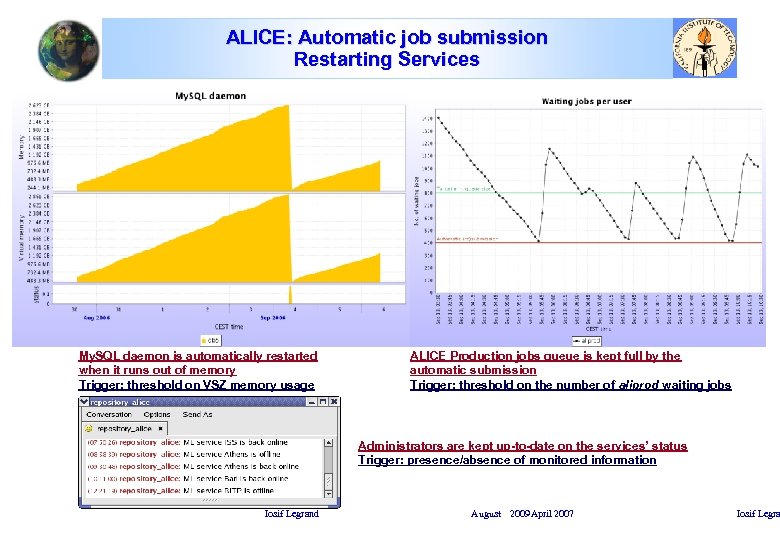

ALICE: Automatic job submission, Restarting Services

- The MySQL daemon is automatically restarted when it runs out of memory. Trigger: threshold on VSZ memory usage.
- The ALICE production jobs queue is kept full by the automatic submission. Trigger: threshold on the number of aliprod waiting jobs.
- Administrators are kept up-to-date on the services’ status. Trigger: presence/absence of monitored information.
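The three kinds of trigger above (value above a threshold, value below a threshold, absence of a value) can be sketched as follows. This is an illustrative Python sketch, not MonALISA's actual configuration format or API; the `Trigger` class, the parameter names such as `mysql_vsz_mb` and the numeric limits are assumptions made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# A condition receives the latest value of a parameter, or None if no value arrived.
Condition = Callable[[Optional[float]], bool]


@dataclass
class Trigger:
    parameter: str        # hypothetical name of the monitored parameter
    condition: Condition  # decides whether the trigger fires
    action: str           # label of the action to run when it fires


def above(limit: float) -> Condition:
    return lambda value: value is not None and value > limit


def below(limit: float) -> Condition:
    return lambda value: value is not None and value < limit


def absent() -> Condition:
    return lambda value: value is None


def evaluate(triggers: List[Trigger], sample: Dict[str, float]) -> List[str]:
    """Return the actions whose triggers fire for one snapshot of monitored values."""
    return [t.action for t in triggers if t.condition(sample.get(t.parameter))]


# Hypothetical rules mirroring the slide; names and limits are invented.
TRIGGERS = [
    Trigger("mysql_vsz_mb", above(4096), "restart MySQL daemon"),
    Trigger("aliprod_waiting_jobs", below(100), "submit production jobs"),
    Trigger("vobox_heartbeat", absent(), "notify administrators"),
]
```

Calling `evaluate(TRIGGERS, {"mysql_vsz_mb": 5000, "aliprod_waiting_jobs": 250})` fires the restart rule and, because no heartbeat value is present, the notification rule.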

Automatic actions in ALICE

ALICE is using the monitoring information to automatically:

- resubmit error jobs until a target completion percentage is reached,
- submit new jobs when necessary (watching the task queue size for each service account):
  - production jobs,
  - RAW data reconstruction jobs, for each pass,
- restart site services, whenever tests of VoBox services fail but the central services are OK,
- send email notifications / add chart annotations when a problem was not solved by a restart,
- dynamically modify the DNS aliases of central services for efficient load balancing.

Most of the actions are defined by configuration files of a few lines.

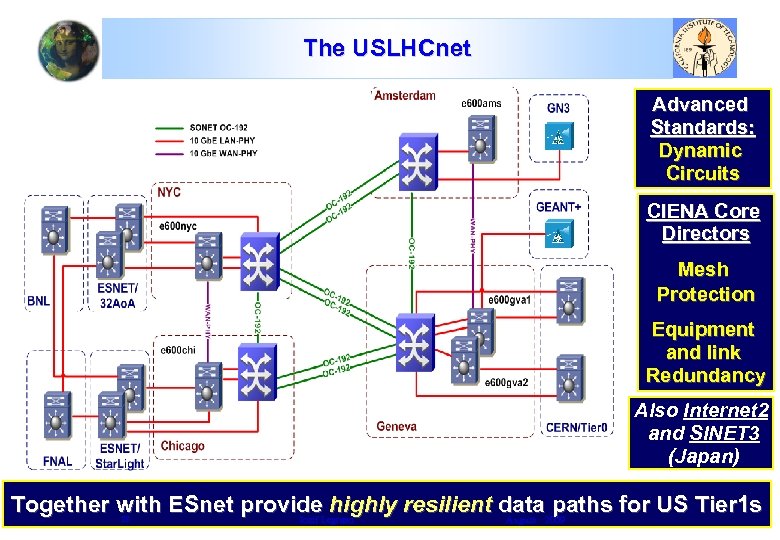

The USLHCnet

- Advanced standards: dynamic circuits
- CIENA Core Directors
- Mesh protection
- Equipment and link redundancy
- Also Internet2 and SINET3 (Japan)

Together with ESnet provide highly resilient data paths for US Tier 1s.

Source: Artur Barczyk, US LHCNet status report, 04/16/2009.

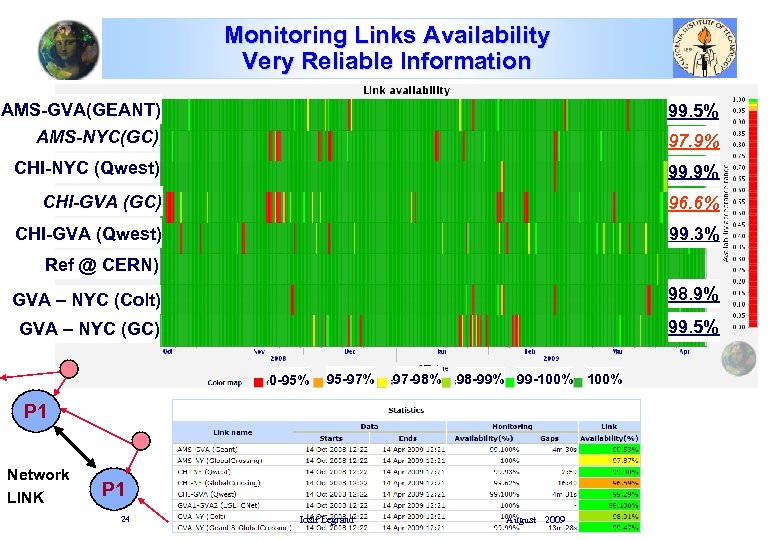

Monitoring Links Availability: Very Reliable Information

- AMS-GVA (GEANT): 99.5%
- AMS-NYC (GC): 97.9%
- CHI-NYC (Qwest): 99.9%
- CHI-GVA (GC): 96.6%
- CHI-GVA (Qwest): 99.3%
- GVA-NYC (Colt): 98.9%
- GVA-NYC (GC): 99.5%

Color scale: 0-95%, 95-97%, 97-98%, 98-99%, 99-100%. Other label: Ref @ CERN. Source: Artur Barczyk, 04/16/2009.

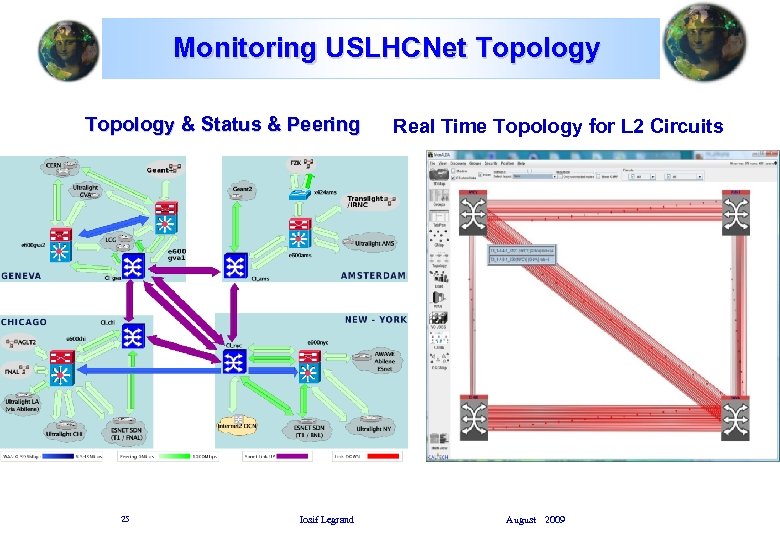

Monitoring USLHCNet Topology & Status & Peering

Real Time Topology for L2 Circuits

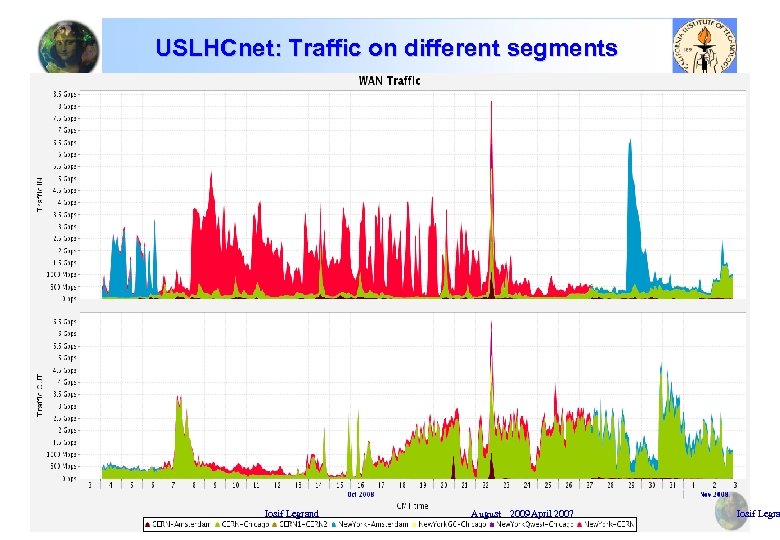

USLHCnet: Traffic on different segments

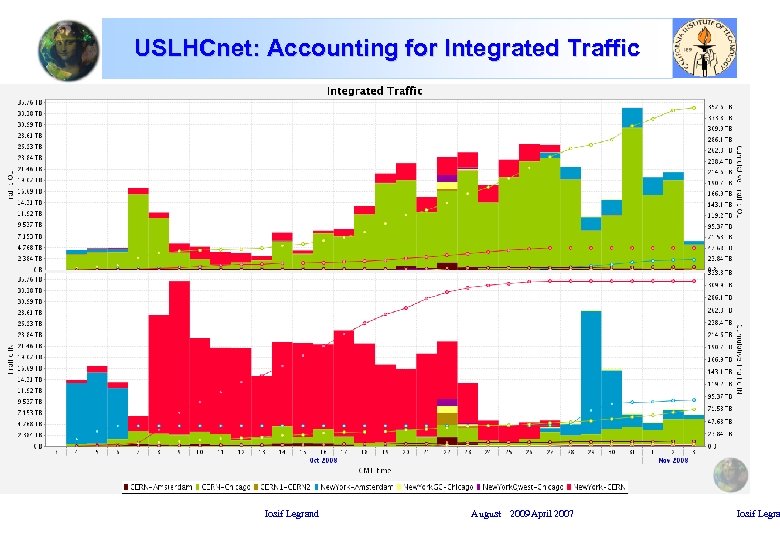

USLHCnet: Accounting for Integrated Traffic

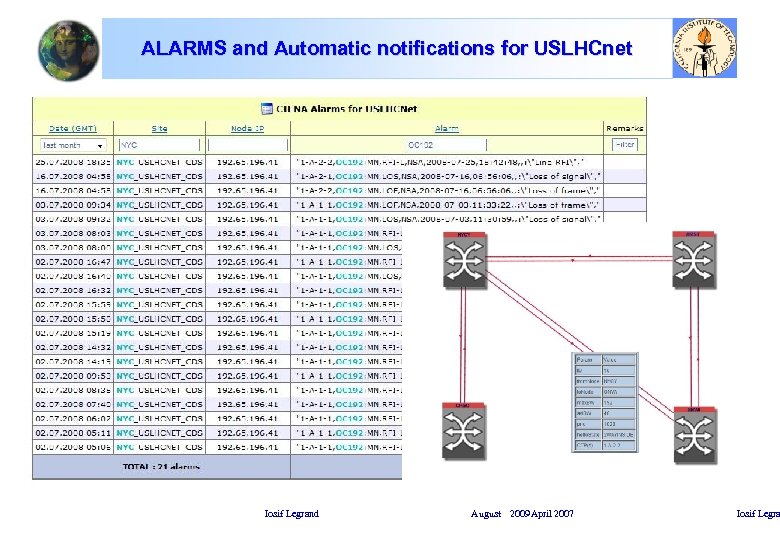

ALARMS and Automatic notifications for USLHCnet

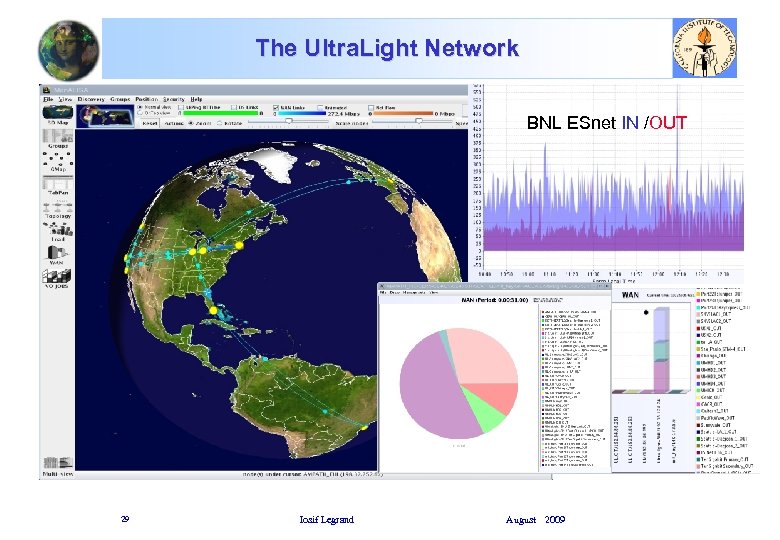

The UltraLight Network

Plot label: BNL ESnet IN/OUT

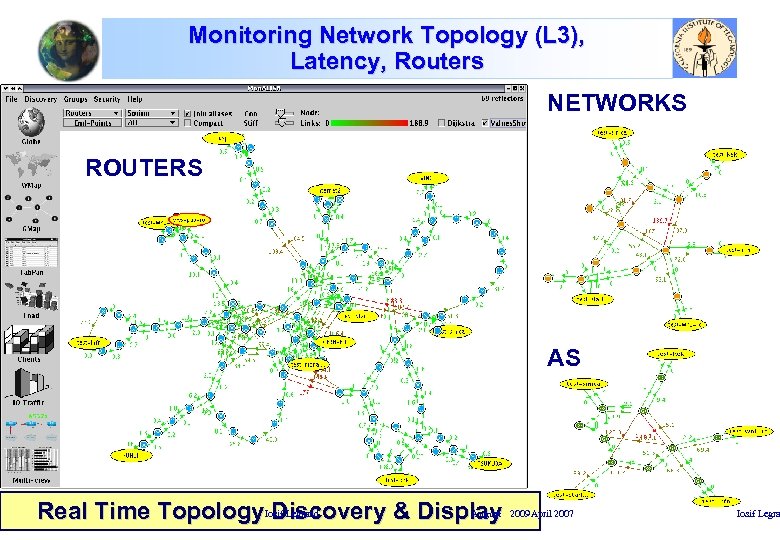

Monitoring Network Topology (L3), Latency, Routers

Real Time Topology Discovery & Display. Panels: Networks, Routers, AS.

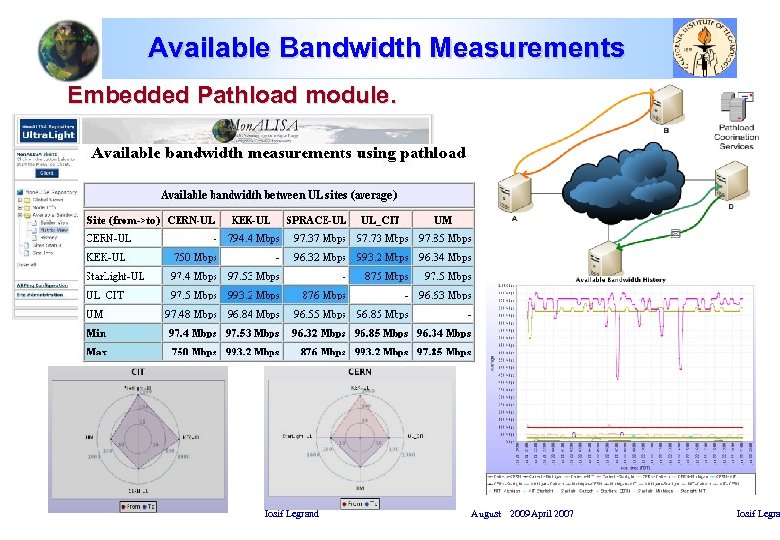

Available Bandwidth Measurements

Embedded Pathload module.

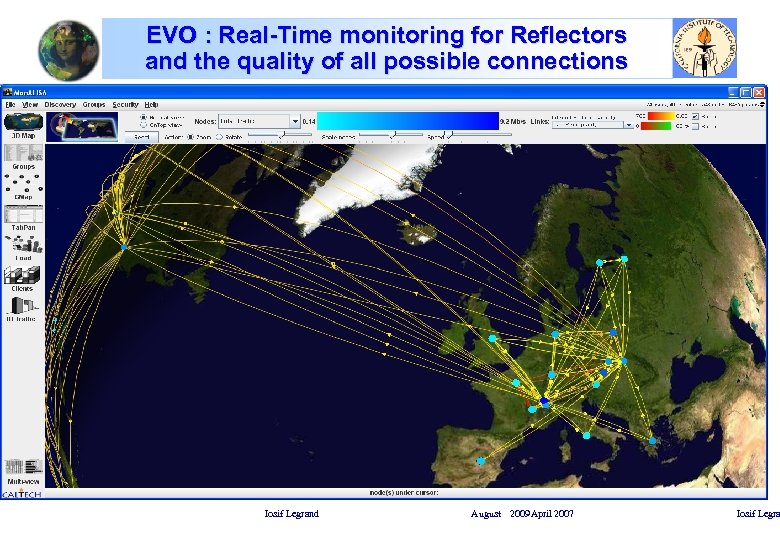

EVO: Real-Time monitoring for Reflectors and the quality of all possible connections

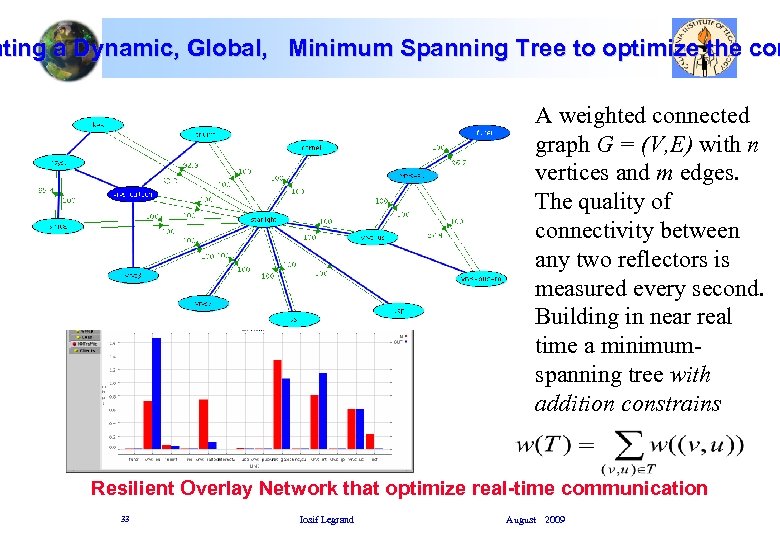

…ating a Dynamic, Global, Minimum Spanning Tree to optimize the con…

- A weighted connected graph G = (V, E) with n vertices and m edges.
- The quality of connectivity between any two reflectors is measured every second.
- Building in near real time a minimum spanning tree with additional constraints.
- Resilient overlay network that optimizes real-time communication.

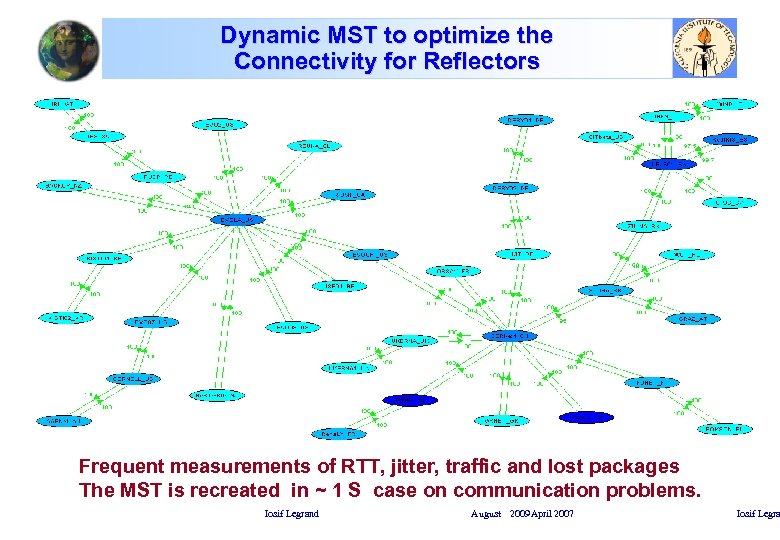

Dynamic MST to optimize the Connectivity for Reflectors

Frequent measurements of RTT, jitter, traffic and lost packets. The MST is recreated in ~1 s in case of communication problems.
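Rebuilding the tree when a link degrades can be sketched with Kruskal's algorithm. The slides do not say which MST algorithm or cost function EVO uses, and the additional constraints they mention are not modeled here; this Python sketch assumes a single scalar cost per link (for instance a measured RTT in milliseconds) and hypothetical reflector names.

```python
from typing import Dict, Iterable, List, Tuple

Edge = Tuple[float, str, str]  # (cost, reflector_a, reflector_b)


def minimum_spanning_tree(nodes: Iterable[str], edges: Iterable[Edge]) -> List[Edge]:
    """Kruskal's algorithm with a union-find structure.

    Returns the chosen edges in increasing cost order. If the graph is not
    connected, the result is a minimum spanning forest.
    """
    parent: Dict[str, str] = {n: n for n in nodes}

    def find(n: str) -> str:
        # Walk up to the set representative, halving the path as we go.
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    tree: List[Edge] = []
    for cost, a, b in sorted(edges):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:        # edge joins two separate components
            parent[root_a] = root_b
            tree.append((cost, a, b))
    return tree


def total_cost(tree: Iterable[Edge]) -> float:
    return sum(cost for cost, _, _ in tree)
```

With only a few dozen reflectors, recomputing the whole tree from fresh measurements is cheap, so a simple full rebuild on each measurement cycle is a reasonable starting point before considering incremental updates.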

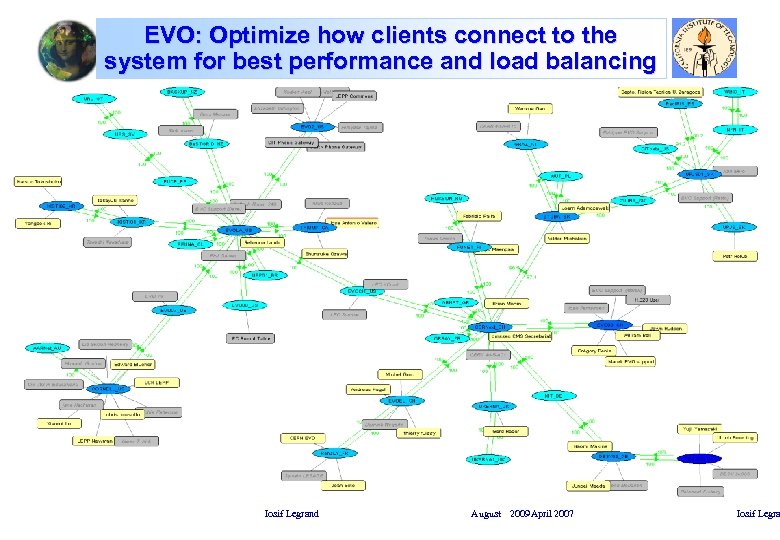

EVO: Optimize how clients connect to the system for best performance and load balancing

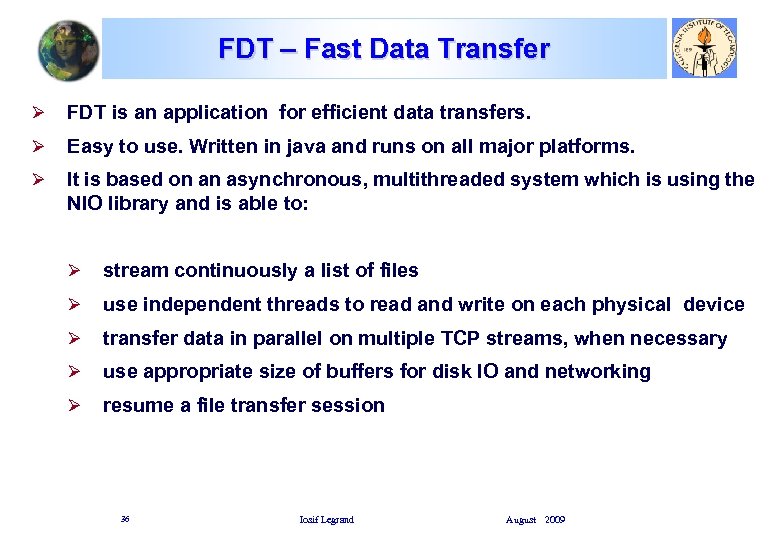

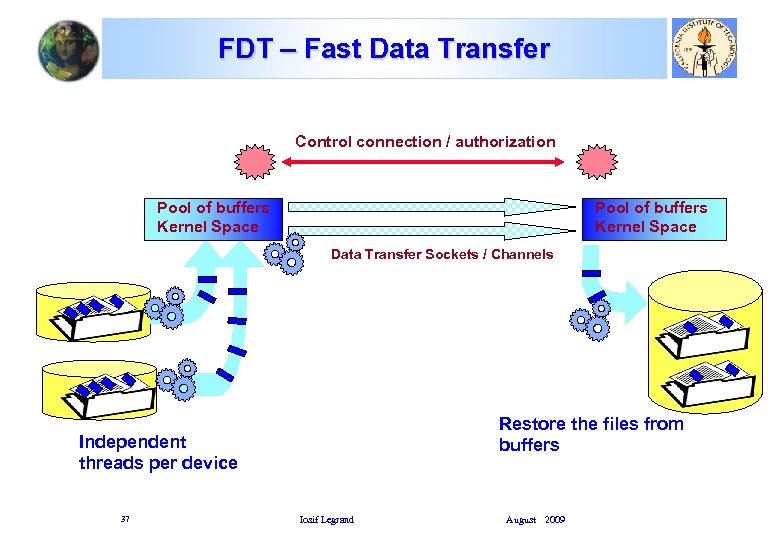

FDT: Fast Data Transfer

- FDT is an application for efficient data transfers.
- Easy to use. Written in Java and runs on all major platforms.
- It is based on an asynchronous, multithreaded system which uses the NIO library and is able to:
  - stream a list of files continuously
  - use independent threads to read and write on each physical device
  - transfer data in parallel on multiple TCP streams, when necessary
  - use appropriate buffer sizes for disk I/O and networking
  - resume a file transfer session

FDT: Fast Data Transfer

Diagram labels: Control connection / authorization; Pool of buffers; Kernel Space; Data Transfer Sockets / Channels; Restore the files from buffers; Independent threads per device.

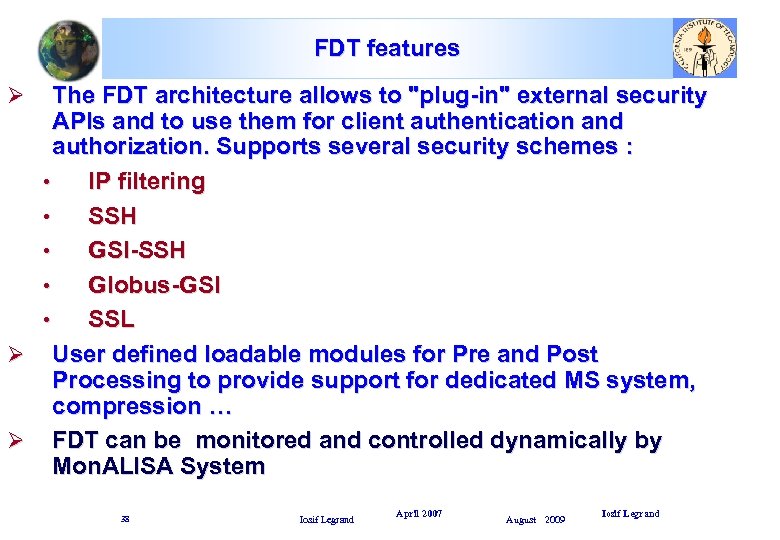

FDT features

- The FDT architecture allows external security APIs to be plugged in and used for client authentication and authorization. Supports several security schemes:
  - IP filtering
  - SSH
  - GSI-SSH
  - Globus-GSI
  - SSL
- User-defined loadable modules for pre- and post-processing, to provide support for dedicated MS system, compression …
- FDT can be monitored and controlled dynamically by the MonALISA system.

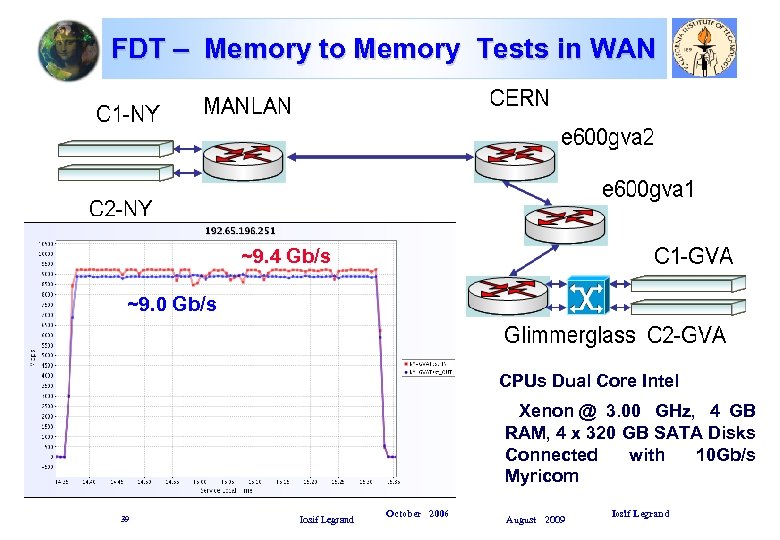

FDT: Memory to Memory Tests in WAN

~9.4 Gb/s and ~9.0 Gb/s.

CPUs: Dual Core Intel Xeon @ 3.00 GHz, 4 GB RAM, 4 x 320 GB SATA disks, connected with 10 Gb/s Myricom. Slide footer date: October 2006.

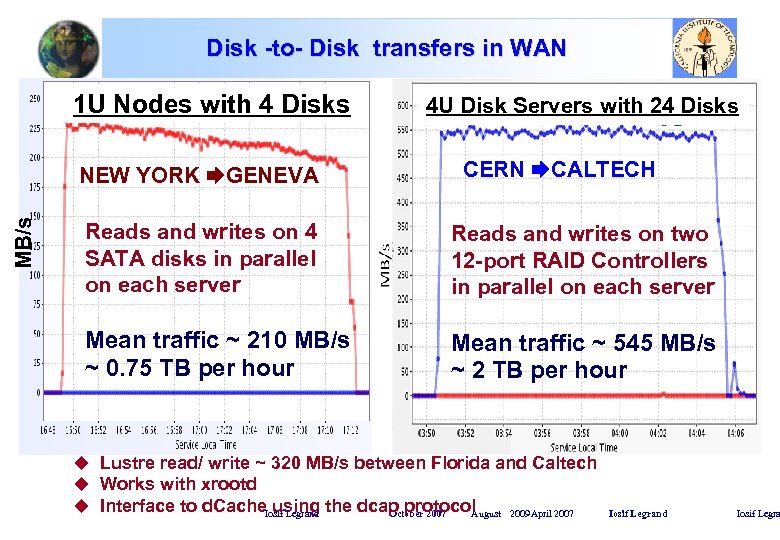

Disk-to-Disk transfers in WAN

- New York / Geneva: 1U nodes with 4 disks. Reads and writes on 4 SATA disks in parallel on each server. Mean traffic ~210 MB/s, ~0.75 TB per hour.
- CERN / Caltech: 4U disk servers with 24 disks. Reads and writes on two 12-port RAID controllers in parallel on each server. Mean traffic ~545 MB/s, ~2 TB per hour.

Also:

- Lustre read/write ~320 MB/s between Florida and Caltech
- Works with xrootd
- Interface to dCache using the dcap protocol

Slide footer date: October 2007.
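The hourly volumes quoted above follow directly from the mean rates. A short check in Python, assuming decimal units (1 TB = 10^6 MB); the function name is only illustrative:

```python
def tb_per_hour(mb_per_second: float) -> float:
    """Convert a sustained rate in MB/s to TB per hour, using 1 TB = 1_000_000 MB."""
    seconds_per_hour = 3600
    return mb_per_second * seconds_per_hour / 1_000_000


if __name__ == "__main__":
    for rate in (210, 545):
        print(f"{rate} MB/s is about {tb_per_hour(rate):.2f} TB per hour")
```

The results, about 0.76 and 1.96 TB per hour, agree with the rounded figures of ~0.75 and ~2 TB per hour on the slide.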

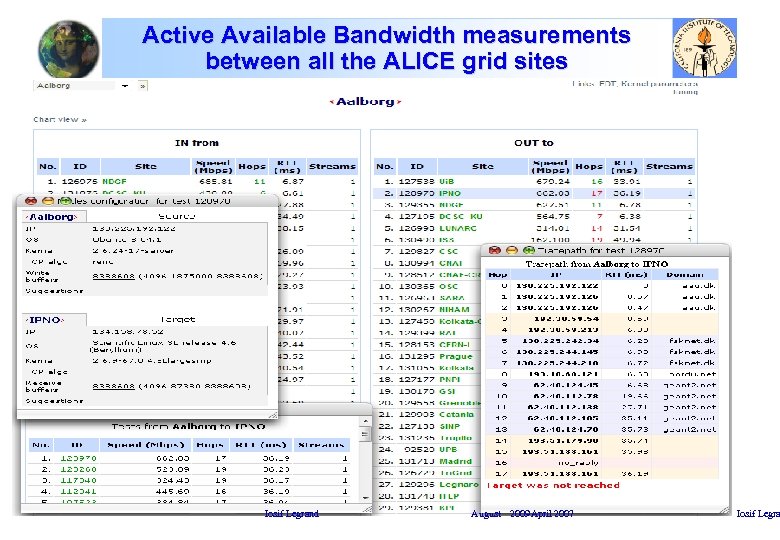

Active Available Bandwidth measurements between all the ALICE grid sites

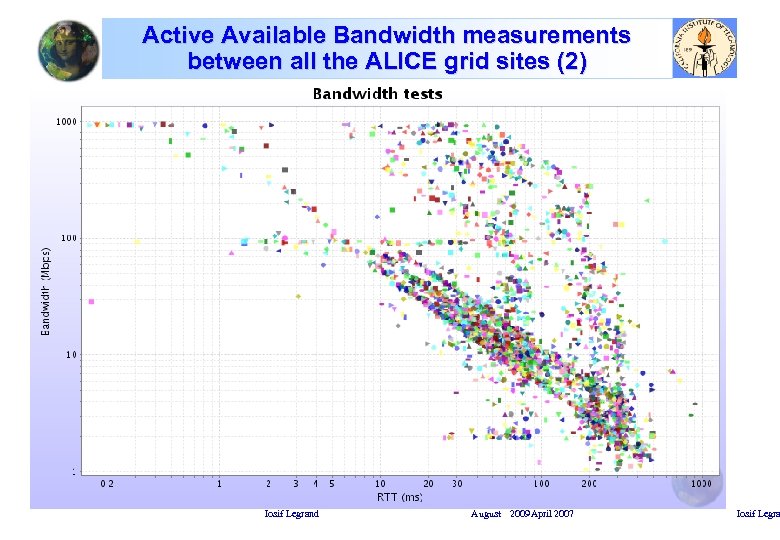

Active Available Bandwidth measurements between all the ALICE grid sites (2)

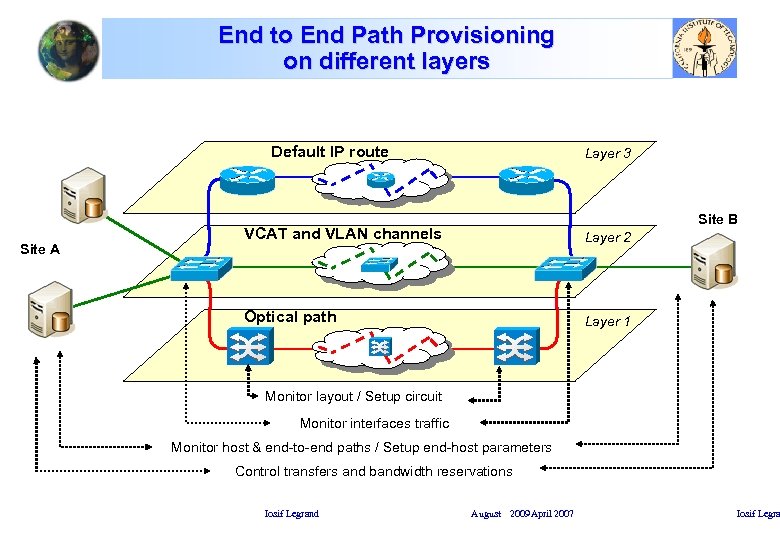

End to End Path Provisioning on different layers

Between Site A and Site B:

- Layer 3: default IP route
- Layer 2: VCAT and VLAN channels
- Layer 1: optical path

Functions:

- Monitor layout / set up circuit
- Monitor interfaces traffic
- Monitor host & end-to-end paths / set up end-host parameters
- Control transfers and bandwidth reservations

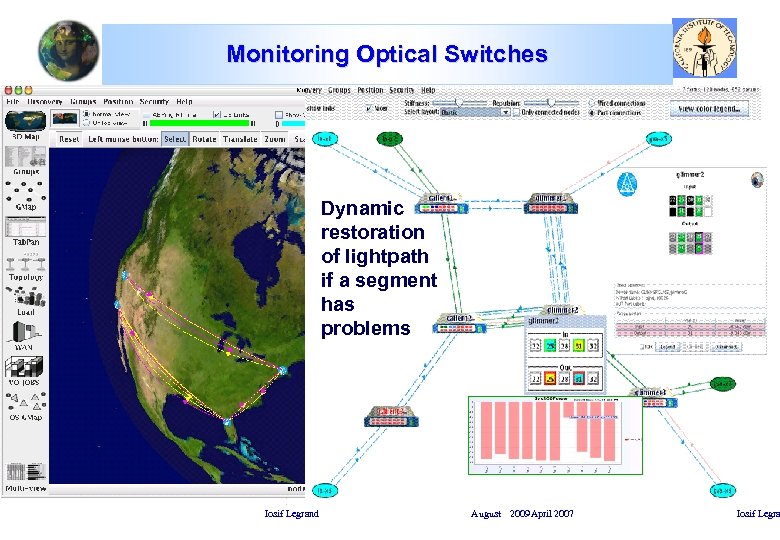

Monitoring Optical Switches

Dynamic restoration of lightpath if a segment has problems.

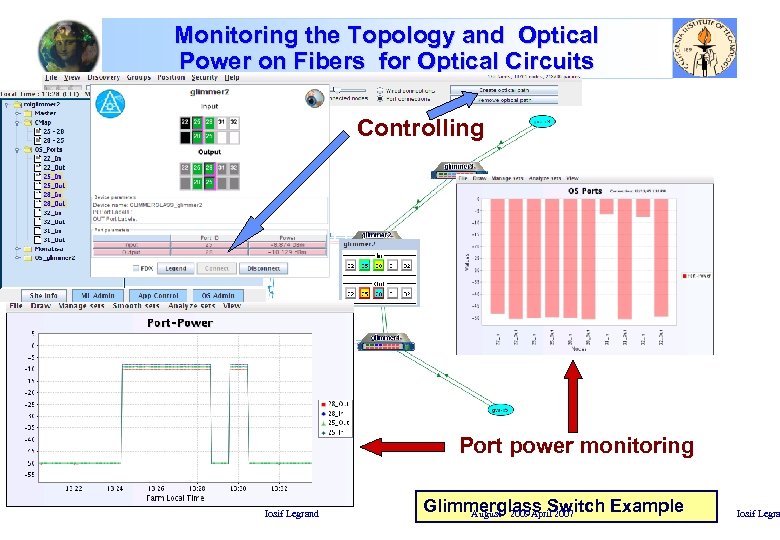

Monitoring the Topology and Optical Power on Fibers for Optical Circuits

Panels: Controlling; Port power monitoring; Glimmerglass Switch Example.

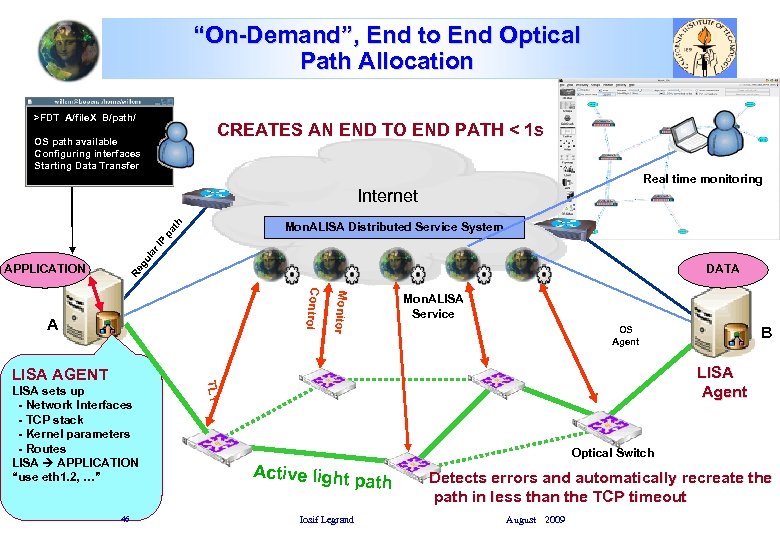

“On-Demand”, End to End Optical Path Allocation

Example: >FDT A/file.X B/path/ creates an end-to-end path in < 1 s.

Steps shown: OS path available; configuring interfaces; starting data transfer; real-time monitoring.

- LISA sets up: network interfaces, TCP stack, kernel parameters, routes.
- LISA tells the application which interface to use: “use eth1.2, …”.
- Detects errors and automatically recreates the path in less than the TCP timeout.

Diagram labels: Application (A, B); Data; Internet; Regular IP path; Active light path; Optical Switch; TL1; OS Agent; LISA Agent; Monitor / Control; MonALISA Service; MonALISA Distributed Service System.

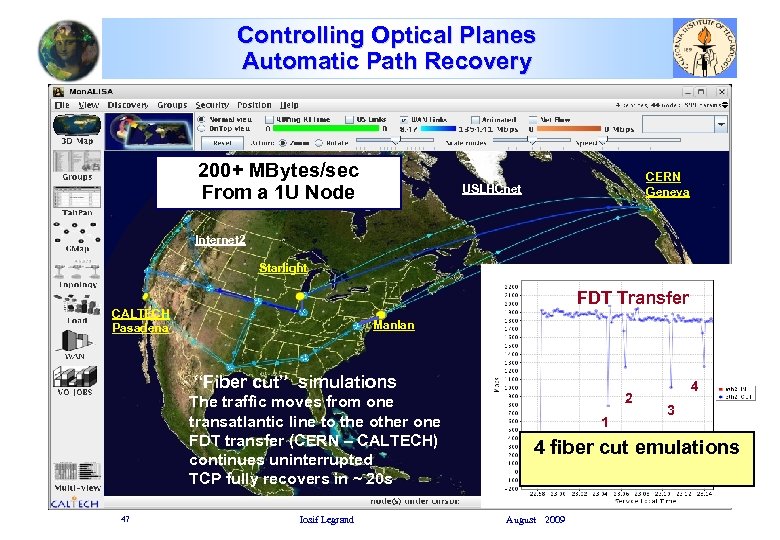

Controlling Optical Planes: Automatic Path Recovery

FDT transfer between CERN (Geneva) and CALTECH (Pasadena) at 200+ MBytes/sec from a 1U node. Network labels: USLHCnet, Internet2, Starlight, Manlan.

“Fiber cut” simulations:

- The traffic moves from one transatlantic line to the other one.
- The FDT transfer (CERN to CALTECH) continues uninterrupted.
- TCP fully recovers in ~20 s.

Plot: 4 fiber cut emulations (marked 1 to 4).

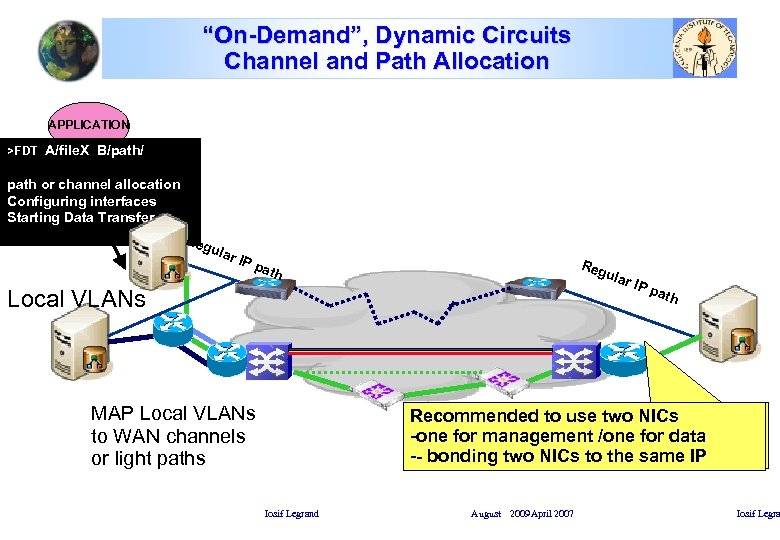

“On-Demand”, Dynamic Circuits: Channel and Path Allocation

Example: >FDT A/file.X B/path/

Steps shown: path or channel allocation; configuring interfaces; starting data transfer.

Diagram labels: Application; Regular IP path; Local VLANs; Map local VLANs to WAN channels or light paths.

Recommended to use two NICs: one for management, one for data; bonding two NICs to the same IP.

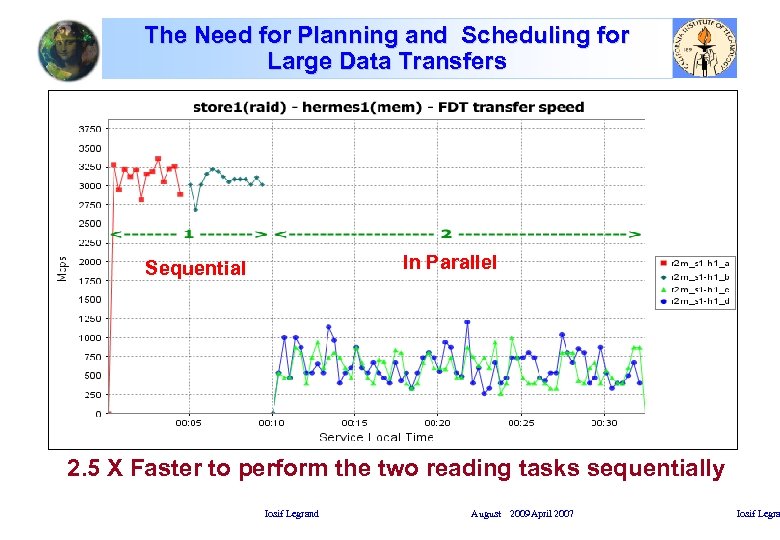

The Need for Planning and Scheduling for Large Data Transfers

Plot panels: In Parallel, Sequential. It is 2.5x faster to perform the two reading tasks sequentially.

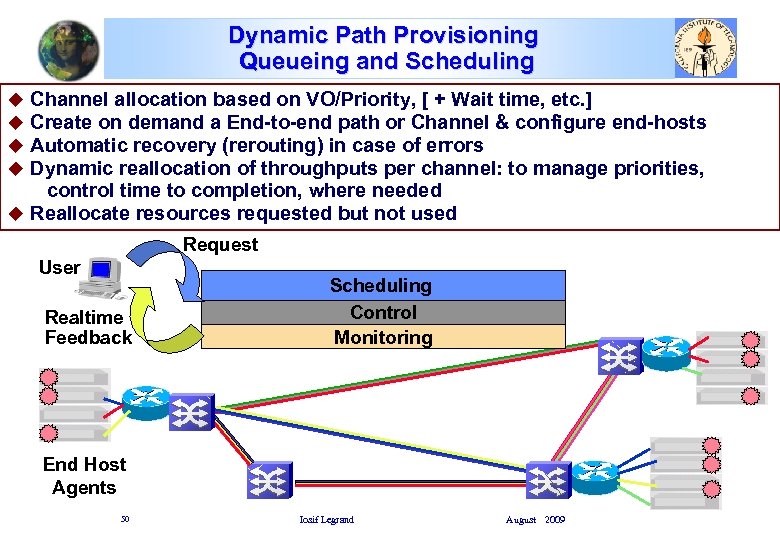

Dynamic Path Provisioning: Queueing and Scheduling

- Channel allocation based on VO/Priority [+ wait time, etc.]
- Create on demand an end-to-end path or channel and configure end-hosts
- Automatic recovery (rerouting) in case of errors
- Dynamic reallocation of throughputs per channel: to manage priorities, control time to completion, where needed
- Reallocate resources requested but not used

Diagram labels: User; Request; Scheduling; Control; Monitoring; Realtime Feedback; End Host Agents.
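Channel allocation by priority plus wait time can be illustrated with a simple aging rule. The slides give no formula, so everything here is an assumption for illustration: the `Request` fields, the linear score and the `aging_per_second` weight are hypothetical, and a real scheduler would also account for VO quotas and available capacity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Request:
    name: str            # hypothetical transfer or VO identifier
    priority: int        # larger means more important
    submitted_at: float  # submission time in seconds


def score(request: Request, now: float, aging_per_second: float = 0.01) -> float:
    """Priority plus a bonus that grows linearly with the time spent waiting."""
    return request.priority + aging_per_second * (now - request.submitted_at)


def next_request(pending: List[Request], now: float,
                 aging_per_second: float = 0.01) -> Request:
    """Pick the pending request with the highest score (first one wins a tie)."""
    if not pending:
        raise ValueError("no pending requests")
    return max(pending, key=lambda r: score(r, now, aging_per_second))
```

The aging term keeps low-priority requests from starving: with the default weight, a priority 4 request that has waited 500 seconds outranks a priority 8 request that has just arrived.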

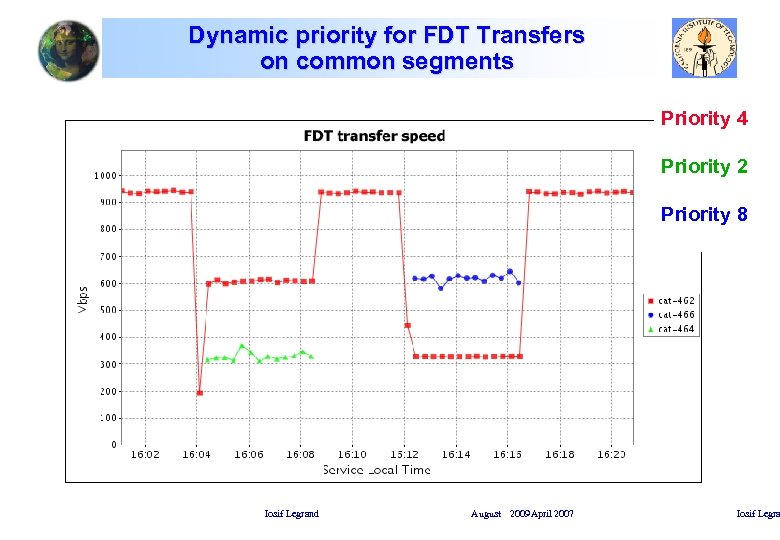

Dynamic priority for FDT Transfers on common segments

Plot labels: Priority 4, Priority 2, Priority 8.

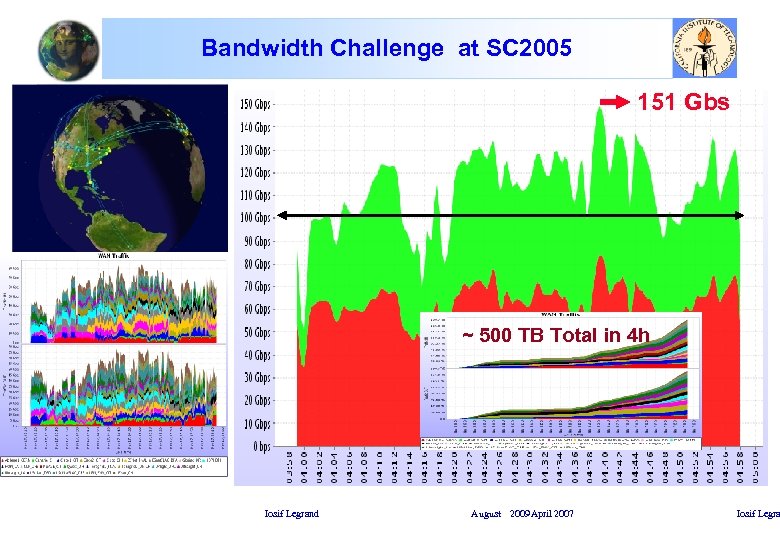

Bandwidth Challenge at SC2005

151 Gb/s; ~500 TB total in 4 h.

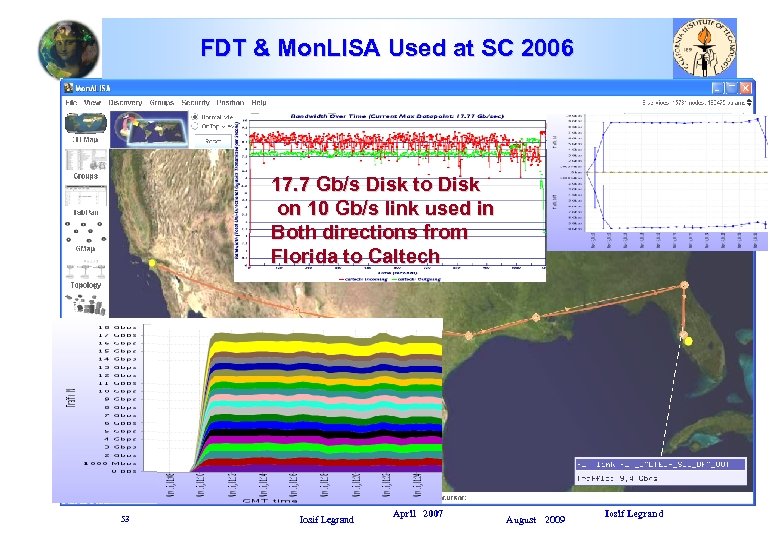

FDT & MonALISA Used at SC2006

17.7 Gb/s disk to disk on a 10 Gb/s link used in both directions, from Florida to Caltech.

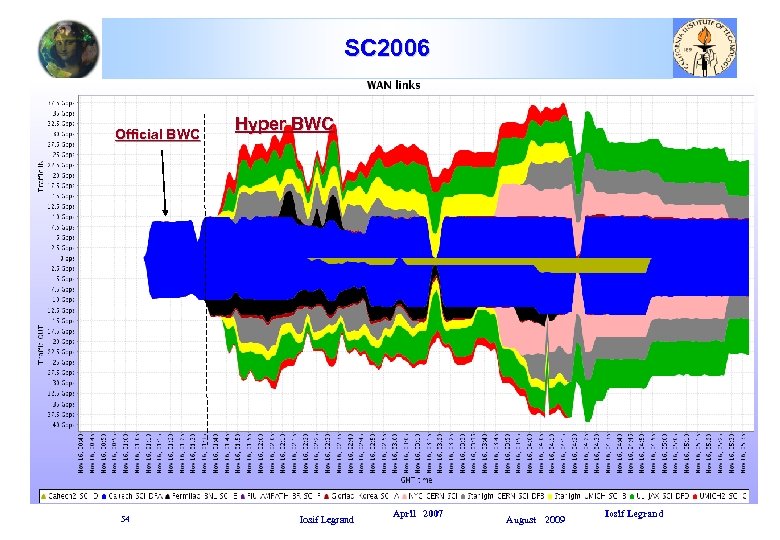

SC2006

Plot panels: Official BWC, Hyper BWC.

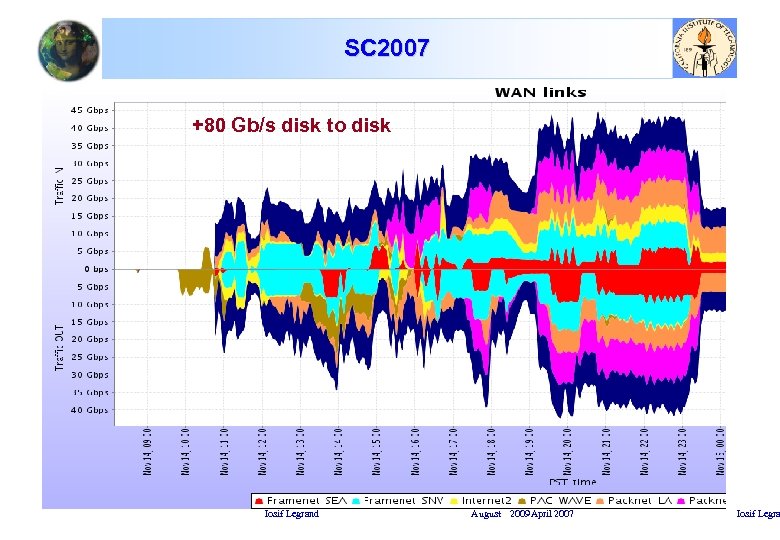

SC2007

+80 Gb/s disk to disk.

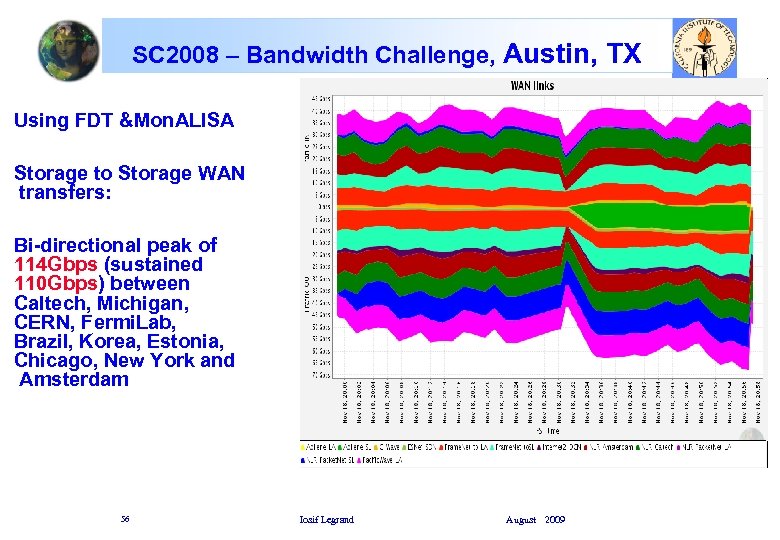

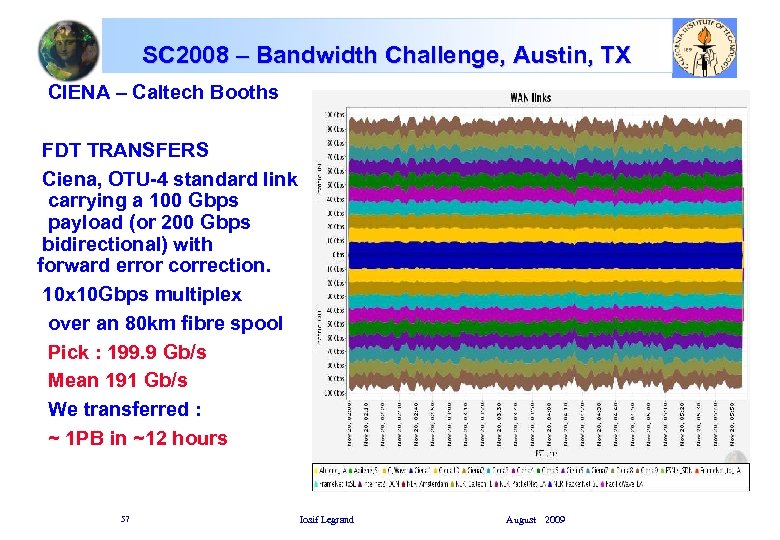

SC2008: Bandwidth Challenge, Austin, TX

Using FDT & MonALISA. Storage-to-storage WAN transfers: bi-directional peak of 114 Gbps (sustained 110 Gbps) between Caltech, Michigan, CERN, Fermilab, Brazil, Korea, Estonia, Chicago, New York and Amsterdam.

SC2008: Bandwidth Challenge, Austin, TX

FDT transfers between the CIENA and Caltech booths. Ciena OTU-4 standard link carrying a 100 Gbps payload (or 200 Gbps bidirectional) with forward error correction; 10 x 10 Gbps multiplexed over an 80 km fibre spool.

Peak: 199.9 Gb/s. Mean: 191 Gb/s. We transferred ~1 PB in ~12 hours.
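The ~1 PB figure is consistent with the mean rate sustained over the stated duration. A short Python check, assuming decimal units (1 PB = 10^15 bytes) and treating the mean rate as the total over both directions; the function name is only illustrative:

```python
def petabytes_transferred(mean_gbps: float, hours: float) -> float:
    """Volume in PB (10**15 bytes) moved at a mean rate in Gb/s over a duration in hours."""
    bits = mean_gbps * 1e9 * hours * 3600   # total bits sent
    return bits / 8 / 1e15                  # bits -> bytes -> petabytes


if __name__ == "__main__":
    print(f"{petabytes_transferred(191, 12):.2f} PB")
```

At 191 Gb/s for 12 hours this gives about 1.03 PB.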

The eight fallacies of distributed computing

It is fair to say that at the beginning of this project we underestimated some of the potential problems in developing a large distributed system in WAN, and indeed the “eight fallacies of distributed computing” are very important lessons:

1) The network is reliable.
2) Latency is zero.
3) Bandwidth is infinite.
4) The network is secure.
5) Topology doesn't change.
6) There is one administrator.
7) Transport cost is zero.
8) The network is homogeneous.

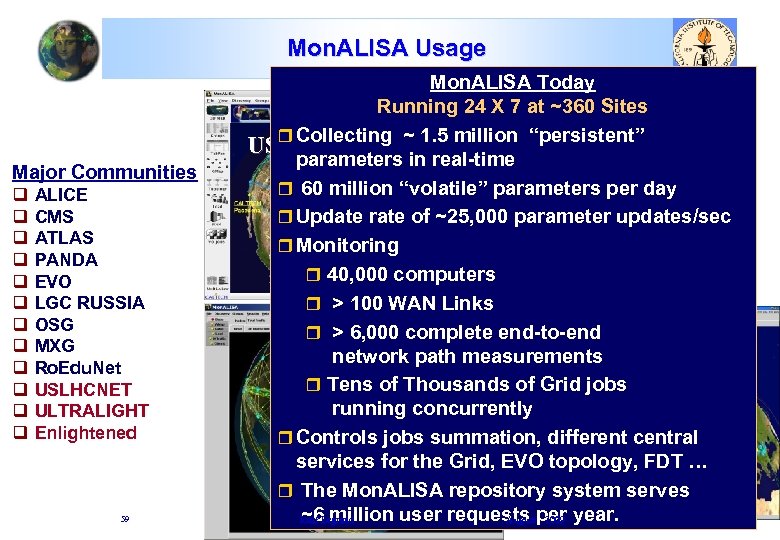

MonALISA Usage

Major communities: ALICE, CMS, ATLAS, PANDA, EVO, LGC RUSSIA, OSG, MXG, RoEduNet, USLHCNET, ULTRALIGHT, Enlightened.

MonALISA today: running 24x7 at ~360 sites.

- Collecting ~1.5 million “persistent” parameters in real time
- 60 million “volatile” parameters per day
- Update rate of ~25,000 parameter updates/sec
- Monitoring:
  - 40,000 computers
  - > 100 WAN links
  - > 6,000 complete end-to-end network path measurements
  - Tens of thousands of Grid jobs running concurrently
- Controls job submission, different central services for the Grid, EVO topology, FDT …
- The MonALISA repository system serves ~6 million user requests per year.

Figure labels: USLHCnet, VRVS, ALICE, OSG, EVO.

Summary

- Modeling and simulation are important to understand the way distributed systems scale and their limits, and to help develop and test optimization algorithms.
- The algorithms to create resilient distributed systems are not easy (handling the asymmetric information).
- For data-intensive applications, it is important to move to more synergetic relationships between the applications, computing and storage facilities, and the NETWORK.

http://monalisa.caltech.edu
