mod4_.pptx

- Number of slides: 24

Module 4: Human Factors and Error Detection Models

SMS BASICS FOR PUBLIC SERVICE AVIATION

Human Factors: Going Beyond “Pilot Error”

Are pilot/crew errors different from other professional errors?

- No! Perhaps only in consequence.
- Public perceptions of “pilot error” are often based on media and other influences.

Understanding and applying human factors is a proactive approach to preventing errors downrange. Sadly, human factors is often learned reactively, after the error or accident.

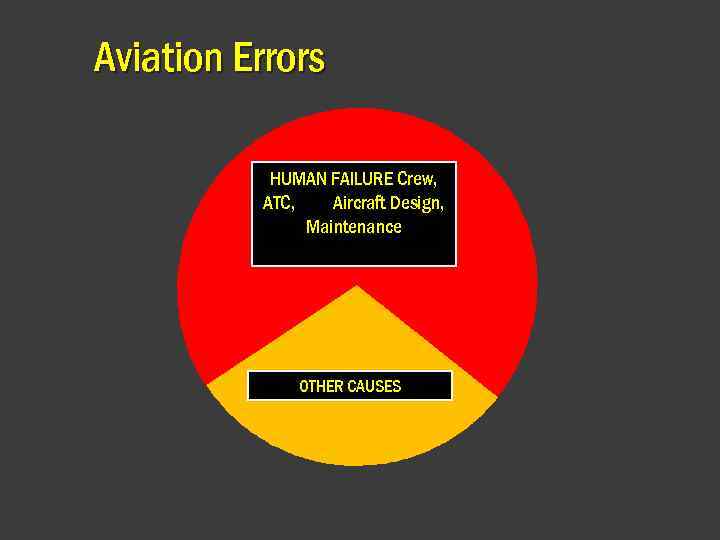

Aviation Errors

- HUMAN FAILURE: Crew, ATC, Aircraft Design, Maintenance
- OTHER CAUSES

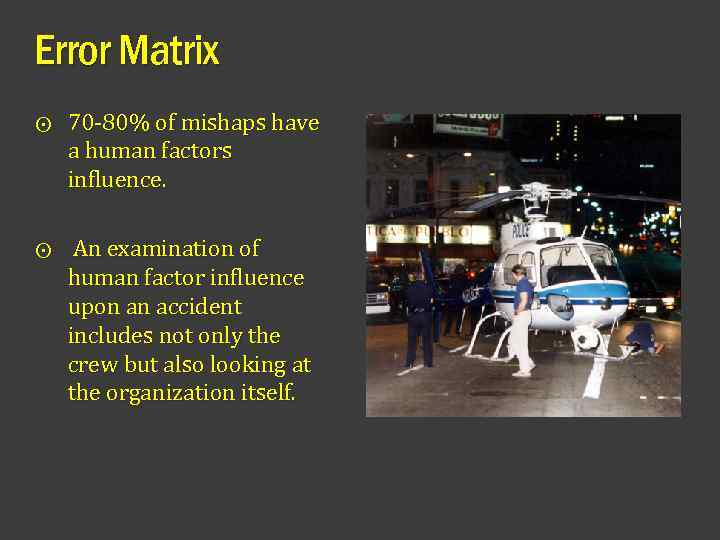

Error Matrix

- 70-80% of mishaps have a human factors influence.
- Examining the human factors influence on an accident means looking not only at the crew but also at the organization itself.

HUMAN FACTORS DEFINED

- Human Factors is the study of interactions between humans and the tools, equipment, activities, and operations they are involved in.
- It is a multidisciplinary study that examines the human, as well as what the human does and how, within the specific environment.

Key Tenets of Human Factors

- Anyone can and will make errors.
- The origins of errors can be fundamentally different.
- The consequences of similar errors can be quite different.
- It is imperative to understand the root causes of human factors errors.

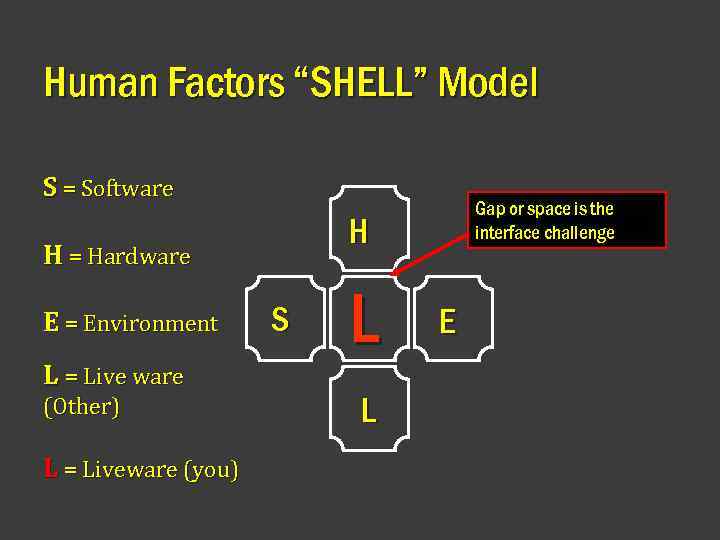

Human Factors “SHELL” Model

- S = Software
- H = Hardware
- E = Environment
- L = Liveware (other)
- L = Liveware (you)

The gap or space between elements is the interface challenge.

Human Factors “SHELL” Model

- S = Software: manuals, symbols, written data
- H = Hardware: aircraft, instruments, seats, windows, doors, controls, etc.
- E = Environment: ambient conditions or work culture
- L = Liveware (you): the operator
- L = Liveware (other): other crewmembers; communication issues between people

Characteristics of Liveware

- Physical size and shape
- Fuel requirements (food and water)
- Input characteristics (senses)
- Information processing (brain and cognition)
- Output characteristics (movement)
- Environmental tolerances (cold, heat, air, etc.)
- Individual differences (personality and traits)

Liveware-Hardware Interface

- Hardware should be designed to meet human needs: ergonomic, functional, and not demanding of human adaptation.
- The less the hardware is optimized for the human, the greater the chance for errors.
- Example: design of seats, controls, and instruments.

Liveware-Liveware Interface

The ability to communicate effectively, in a timely and proper manner.

Crew Resource Management (CRM)

“Odd, you don’t see mountain goats up this high. Stupid goat isn’t talking to ATC either.”

Liveware-Software Interface

- Non-physical aspects
- Procedures, manuals, and checklists
- Layouts and symbolism (“glass”)
- More difficult or complex = more error
- Flight manuals
  - Easy to read
  - Organized
  - Syntax

Liveware-Environment

- Operational environment (“the elements”): without equipment, humans can operate only within a limited environment. Examples: protection from heat and cold, the need for oxygen, etc.
- Environment can also mean the work culture, e.g., a hostile one.

- Human errors may arise from a single element or from an aggregate of several factors.

HUMAN FACTORS IN SAFETY

- Situational awareness and effective aeronautical decision making are based on:
  - Perception, judgment, decision
  - Understanding of risk
  - Training and skills (perceptual and cognitive)
  - Maintaining a standard of safety
  - Meeting objectives for safe flight
  - Education and health (mental and physical)
- The organization should promote and foster human factors training and awareness for everyone, regardless of rank or job title.

HUMAN FACTORS ANALYSIS AND CLASSIFICATION SYSTEM (HFACS)

- THE FIRST STEP IN THE RISK MANAGEMENT PROCESS

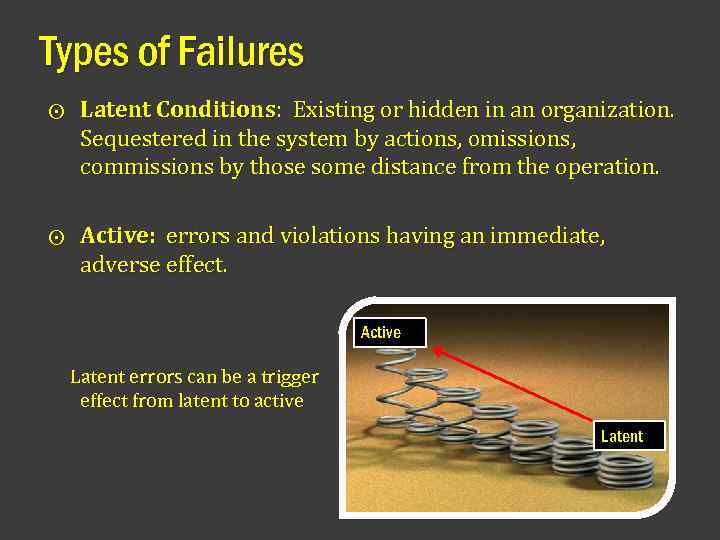

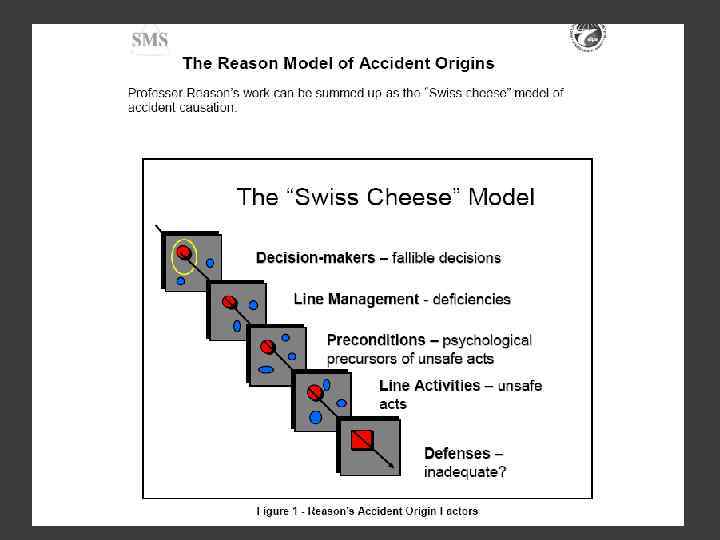

Types of Failures

- Latent conditions: existing or hidden in an organization. Sequestered in the system by the actions, omissions, or commissions of those some distance from the operation.
- Active failures: errors and violations having an immediate, adverse effect.

Errors can have a trigger effect, from latent to active.

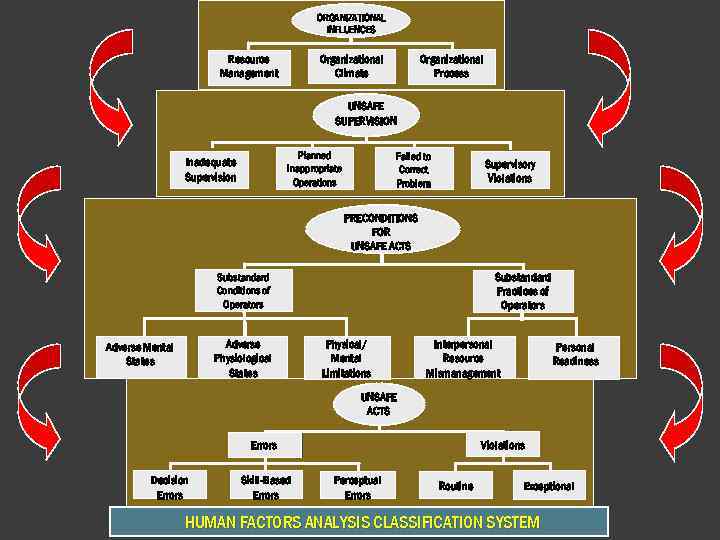

HUMAN FACTORS ANALYSIS CLASSIFICATION SYSTEM

- ORGANIZATIONAL INFLUENCES
  - Resource Management
  - Organizational Climate
  - Organizational Process
- UNSAFE SUPERVISION
  - Inadequate Supervision
  - Planned Inappropriate Operations
  - Failed to Correct Problem
  - Supervisory Violations
- PRECONDITIONS FOR UNSAFE ACTS
  - Substandard Conditions of Operators
    - Adverse Mental States
    - Adverse Physiological States
    - Physical/Mental Limitations
  - Substandard Practices of Operators
    - Interpersonal Resource Mismanagement
    - Personal Readiness
- UNSAFE ACTS
  - Errors
    - Decision Errors
    - Skill-Based Errors
    - Perceptual Errors
  - Violations
    - Routine
    - Exceptional
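The four HFACS tiers on this slide can be treated as a lookup table when coding investigation findings. The following is only a minimal sketch in Python, not part of the course material: the names `HFACS_TIERS`, `tier_of`, and `tally_by_tier` are hypothetical, the subgroup level is flattened for brevity, and the category labels are copied from the slide.

```python
# Hypothetical encoding of the HFACS tiers as listed on the slide.
# Keys are tiers (organization down to the individual act); values are
# the leaf categories, with intermediate subgroups flattened.
HFACS_TIERS = {
    "Organizational Influences": [
        "Resource Management", "Organizational Climate", "Organizational Process",
    ],
    "Unsafe Supervision": [
        "Inadequate Supervision", "Planned Inappropriate Operations",
        "Failed to Correct Problem", "Supervisory Violations",
    ],
    "Preconditions for Unsafe Acts": [
        "Adverse Mental States", "Adverse Physiological States",
        "Physical/Mental Limitations", "Interpersonal Resource Mismanagement",
        "Personal Readiness",
    ],
    "Unsafe Acts": [
        "Decision Errors", "Skill-Based Errors", "Perceptual Errors",
        "Routine Violations", "Exceptional Violations",
    ],
}


def tier_of(category):
    """Return the tier that contains `category`, or None if it is not listed."""
    for tier, categories in HFACS_TIERS.items():
        if category in categories:
            return tier
    return None


def tally_by_tier(findings):
    """Count coded findings per tier; unknown categories raise ValueError."""
    counts = {tier: 0 for tier in HFACS_TIERS}
    for category in findings:
        tier = tier_of(category)
        if tier is None:
            raise ValueError(f"not an HFACS category on this slide: {category}")
        counts[tier] += 1
    return counts


# Illustrative (made-up) findings for a single mishap review.
example = ["Skill-Based Errors", "Personal Readiness", "Inadequate Supervision"]
print(tally_by_tier(example))
```

A tally like this makes it easy to see whether a review stopped at the crew level or also recorded supervisory and organizational contributions.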

5 M Concept: Error Chain Tracking Matrix

Each element may influence the others.

- Management (influence)
- Man
- Mission (highest level of risk)
- Machine
- Medium

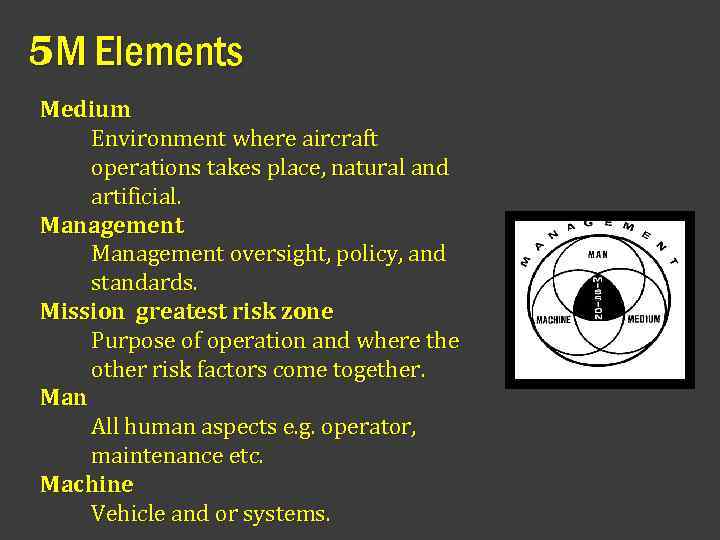

5 M Elements

- Medium: the environment where aircraft operations take place, natural and artificial.
- Management: oversight, policy, and standards.
- Mission (greatest risk zone): the purpose of the operation, and where the other risk factors come together.
- Man: all human aspects, e.g., operator, maintenance, etc.
- Machine: the vehicle and/or systems.

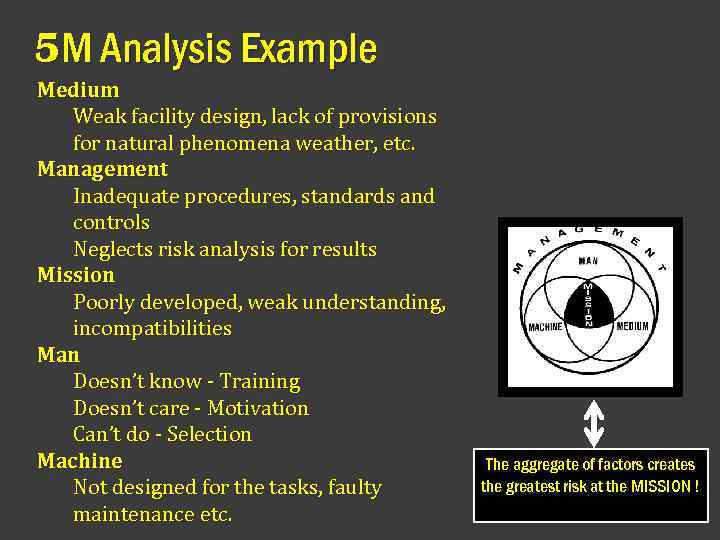

5 M Analysis Example

- Medium: weak facility design; lack of provisions for natural phenomena, weather, etc.
- Management: inadequate procedures, standards, and controls; neglects risk analysis for results.
- Mission: poorly developed, weak understanding, incompatibilities.
- Man:
  - Doesn’t know: training
  - Doesn’t care: motivation
  - Can’t do: selection
- Machine: not designed for the tasks, faulty maintenance, etc.

The aggregate of factors creates the greatest risk at the MISSION!
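A 5 M analysis like the one above amounts to sorting findings under the five elements and seeing which of them come together at the mission. The sketch below is one hypothetical way to do that in Python; `FIVE_M`, `MAN_FOCUS`, `group_findings`, and `elements_involved` are illustrative names, and the sample findings are made up.

```python
# The five elements, in the order used on the "5 M Elements" slide.
FIVE_M = ("Medium", "Management", "Mission", "Man", "Machine")

# The slide's three "Man" failure modes and the area each one points to.
MAN_FOCUS = {
    "doesn't know": "training",
    "doesn't care": "motivation",
    "can't do": "selection",
}


def group_findings(findings):
    """Group (element, note) pairs under the five Ms; reject unknown elements."""
    grouped = {element: [] for element in FIVE_M}
    for element, note in findings:
        if element not in grouped:
            raise ValueError(f"unknown 5 M element: {element}")
        grouped[element].append(note)
    return grouped


def elements_involved(grouped):
    """List the elements that have at least one finding, in FIVE_M order."""
    return [element for element in FIVE_M if grouped[element]]


# Made-up findings for illustration only.
sample = [
    ("Man", "doesn't know"),
    ("Machine", "faulty maintenance"),
    ("Management", "inadequate procedures"),
]
grouped = group_findings(sample)
print(elements_involved(grouped))
print([MAN_FOCUS[note] for note in grouped["Man"]])
```

Keeping the elements in a fixed order means two analyses of different events can be compared side by side.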

Warning on Modeling: “Hindsight Bias”

- Knowing the terminal event (the accident) may sway the investigation into treating every imperfect, non-standard, or small glitch in the system, and every action or inaction by supervision or the crew, as a “factor” in the accident.
- Exercise caution when “looking back”, and do not assume that every “dot” will naturally connect to form the error chain picture.

End
