- Number of slides: 43

Models for University HPC Facilities
Dan Stanzione
Director, Fulton High Performance Computing
Presented at University of Hawai’i, 2/18/09
dstanzi@asu.edu

The Givens
For this audience, let’s skip the motivation speech and take it as a given that HPC/supercomputing is critically important to continued research progress:
• Capacity: accelerating time to result
• Capability: otherwise unachievable results
• Replacing experimentation when it is too costly
We all think we should do big research computing, even if we differ on where and how.

Fulton High Performance Computing Initiative
CFD Simulation of Golf Ball in Flight, 1 billion unknowns in grid
• The hub for large-scale computation-based research activities on the ASU campus
• >45 teraflops of local computing power deployed on campus (83rd fastest in the world, top 10 among US academic systems)
• Deploying the world’s largest computing system in Austin, TX, in conjunction with the University of Texas, Sun Microsystems, and the NSF (>500 TF)
• Managing research datasets that will exceed 2 petabytes (2 quadrillion bytes)
• State-of-the-art facilities
• Expert HPC staff

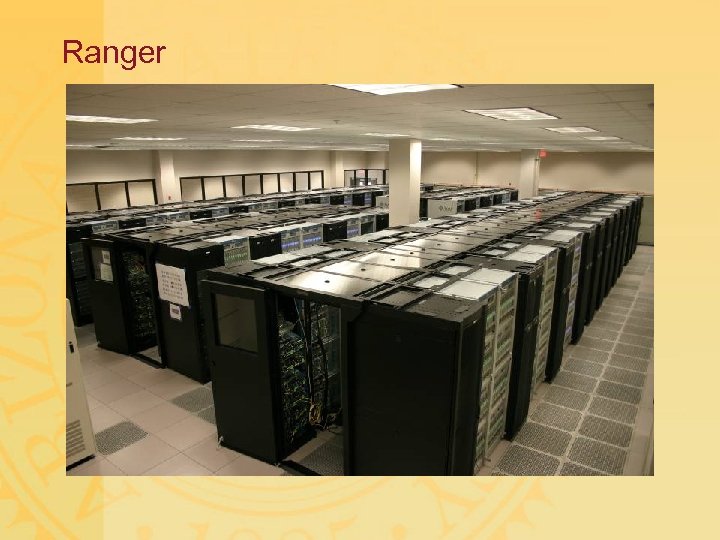

HPCI Major Projects
• The iPlant Collaborative: a $100M NSF effort to build cyberinfrastructure for “Grand Challenges” in the plant science community. Key pieces of the storage, computation, and software development will be in the HPCI.
• Ranger: the world’s largest system for open science. The first of NSF’s “Track 2” petascale system acquisitions, this $59M project is a collaboration between UT-Austin, ASU, Cornell, Sun, and AMD.

HPCI 2007/2008 Project Highlights
The HPCI maintains an active portfolio of HPC-oriented research and application projects:
• Developing models for debugging and performance analysis for programs scaling beyond 1 million threads, with Intel
• Evaluating future parallel programming paradigms for the DOD
• Developing streams-based parallel software development teams for hybrid systems for the US Air Force
• Partnered with Microsoft to port new HPC applications to Windows CCS
The HPCI works with more than 100 faculty to accelerate research discovery and to apply HPC in traditional and non-traditional disciplines, e.g.:
• Computational golf ball design (with Aerospace Engineering and Srixon Sports)
• Flows of pollution in the upper atmosphere (with Math, Mech. Eng., and the Az Dept. of Environmental Quality)
• Digital Phoenix: combining geospatial data and on-demand urban modeling (with the School of Design, Civil Engineering, and the Decision Theater)
• Lunar Reconnaissance Orbiter Camera: providing primary data storage and processing for this 2008 NASA spacecraft (SESE)

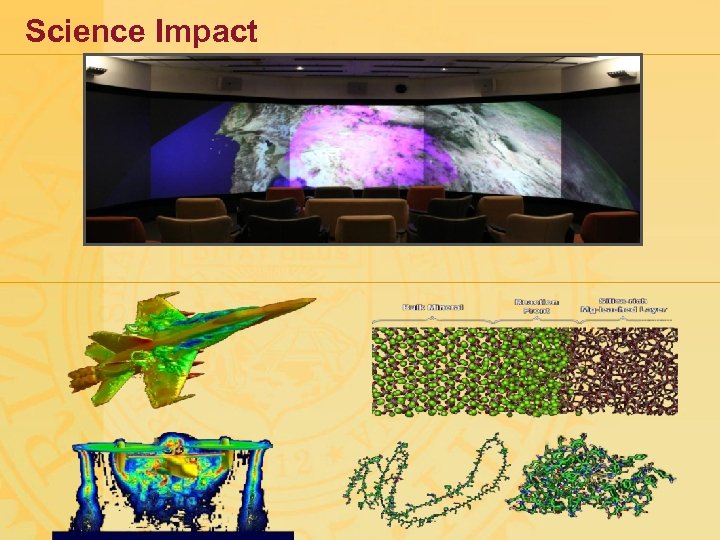

Science Impact

Speeds and Feeds - Saguaro @ ASU
• The HPCI primary datacenter has 750,000 VA of UPS power, chilled-water racks, and underfloor cooling (700 W/sq ft).
• The Saguaro cluster has 4,900 cores running Linux, capable of about 45 trillion FLOP/s
• 10 TB primary RAM
• 400 TB scratch disk, 3 GB/sec
• InfiniBand 20 Gb/s interconnect, 6 µs max latency, 1.5 Tb/s backplane
• In the last 6 months, 150,000 simulations completed (1 to 1,024 processors)
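The per-core figures implied by these totals can be checked with a short sketch. It only divides the slide's own numbers; reading "TB" as decimal units (10^12 bytes) and "the last 6 months" as 182.5 days are assumptions, and the function names are illustrative.

```python
# Per-core figures derived from the Saguaro slide totals.
# Assumptions (not on the slide): decimal units (1 TB = 1e12 bytes)
# and "the last 6 months" taken as 182.5 days.

CORES = 4_900
PEAK_FLOPS = 45e12          # "about 45 trillion FLOP/s"
RAM_BYTES = 10e12           # "10 TB primary RAM"
SIMULATIONS = 150_000       # completed in the last 6 months
PERIOD_DAYS = 182.5         # assumed length of "6 months"


def peak_gflops_per_core() -> float:
    """Theoretical peak per core, in GFLOP/s."""
    return PEAK_FLOPS / CORES / 1e9


def ram_gb_per_core() -> float:
    """Primary memory per core, in GB."""
    return RAM_BYTES / CORES / 1e9


def simulations_per_day() -> float:
    """Average number of completed simulations per day over the period."""
    return SIMULATIONS / PERIOD_DAYS


if __name__ == "__main__":
    print(f"Peak per core:   {peak_gflops_per_core():.2f} GFLOP/s")
    print(f"RAM per core:    {ram_gb_per_core():.2f} GB")
    print(f"Simulations/day: {simulations_per_day():.0f}")
```

This works out to roughly 9.2 GFLOP/s of peak and about 2 GB of memory per core, and an average of a little over 800 completed simulations per day.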

Speeds and Feeds - Ranger @ TACC
• Compute power: 504 teraflops
  – 3,936 Sun four-socket blades
  – 15,744 AMD “Barcelona” processors
  – Quad-core, four flops/cycle (dual pipelines)
• Memory: 123 terabytes
  – 2 GB/core, 32 GB/node
  – 132 GB/s aggregate bandwidth
• Disk subsystem: 1.7 petabytes
  – 72 Sun X4500 “Thumper” I/O servers, 24 TB each
  – 40 GB/sec total aggregate I/O bandwidth
  – 1 PB raw capacity in largest filesystem
• Interconnect: 10 Gbps / 3.0 µs latency
  – Sun InfiniBand-based switches (2), up to 3,456 4x ports each
  – Full non-blocking 7-stage Clos fabric
  – Mellanox ConnectX InfiniBand
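As a sanity check, Ranger's headline figures follow from its component counts. The one input not on the slide is the processor clock rate, taken here as 2.0 GHz and labeled as an assumption; the memory total works out exactly if "123 terabytes" is read in binary units (1 TiB = 1024 GiB). The function names are illustrative.

```python
# Rebuild Ranger's headline numbers from its component counts.
# CLOCK_HZ is an assumption: the slide gives cores and flops/cycle,
# but not the processor clock rate.

SOCKETS = 15_744          # AMD "Barcelona" processors
CORES_PER_SOCKET = 4      # quad-core
FLOPS_PER_CYCLE = 4       # per core (dual pipelines)
CLOCK_HZ = 2.0e9          # assumed 2.0 GHz
NODES = 3_936             # Sun four-socket blades
GB_PER_NODE = 32
IO_SERVERS = 72           # Sun X4500 "Thumper"
TB_PER_IO_SERVER = 24


def total_cores() -> int:
    return SOCKETS * CORES_PER_SOCKET


def peak_tflops() -> float:
    """Theoretical peak = cores x flops/cycle x clock rate."""
    return total_cores() * FLOPS_PER_CYCLE * CLOCK_HZ / 1e12


def memory_tib() -> float:
    """Total memory, reading GB/TB as binary units (1 TiB = 1024 GiB)."""
    return NODES * GB_PER_NODE / 1024


def gb_per_core() -> float:
    return NODES * GB_PER_NODE / total_cores()


def disk_tb() -> int:
    return IO_SERVERS * TB_PER_IO_SERVER


if __name__ == "__main__":
    print(f"Sockets per blade: {SOCKETS // NODES}")
    print(f"Cores:             {total_cores():,}")
    print(f"Peak:              {peak_tflops():.1f} TFLOP/s")
    print(f"Memory:            {memory_tib():.0f} TiB ({gb_per_core():.0f} GB/core)")
    print(f"Disk:              {disk_tb():,} TB")
```

Under the 2.0 GHz assumption, 62,976 cores give about 503.8 TFLOP/s, which rounds to the 504 teraflops quoted, and 72 × 24 TB = 1,728 TB matches the 1.7 petabytes of disk.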

Ranger

Alternate Systems
• We strive to be driven by science, not systems
• Advanced computing is no longer “one size fits all”
  – Traditional supercomputer architectures excel at compute-intensive problems
  – Data-intensive, high-throughput computing is now equally important; HPCI storage has long been a focus
• HPCI “cloud” system now deployed
  – Google- and Amazon-like
  – Focus on large-dataset applications
  – New class on cloud systems, in conjunction with Google, offered this spring

HPC/Cyberinfrastructure Services
• Cycles: compute power through access to Linux clusters with thousands of processors (first 10,000 processor-hours free to all ASU faculty).
• Storage: reliable, secure, scalable storage for datasets of virtually unlimited size (multi-site backups included!).
• Visualization: expert support in producing 2D or 3D visualizations of your data (SERV Program).
• Application Consulting: parallelization support for existing codes, development of new software.
• Education and Training: academic courses, seminars, and short courses in the effective use of HPC.
• Proposal Support: aid in developing applications for money or resources for projects that have cyberinfrastructure components (80+ proposals last year, >$500M).
• Research Partnerships

Towards a Sustainable Business Model for Campus High Performance Computing

HPC on Campus
• Every campus does some form of HPC:
  – To do research with HPC
  – To do research on HPC (CS)
  – To teach HPC
• We all think we should do big research computing, even if we differ on where and how.
• Everybody pays for it somehow, but there is no consensus on how, and many systems end up abandoned (this is bad for everybody).

Context
• Federal funding in this area is NOT getting scarcer (a rarity), but it is getting more concentrated...
  – NSF Petascale and DOE NLCF are moving to even fewer, more massive centers
• NSF made $460M in new HPC awards in FY08... at two sites (from OCI alone).
• For reference, total CISE spending is <$600M, and represents 81% of all academic CS research funding
  – For most of us, these awards aren’t helpful for developing local resources

The Challenges
• HPC has no clear home on campus:
  – It’s research infrastructure, so the VPR office might be involved
  – It’s computers, so IT should be involved
  – Each now has an excuse to hand it to the other :)
• HPC can be inordinately expensive, and can’t be put just anywhere.
• Done even a little wrong, it can be a huge failure.
  – “Plain old IT” folks can’t provide the right service.
  – “Plain old researchers” can’t, either.

The Challenges
• No one knows exactly what HPC is. To some it means Linux clusters, to some it means web servers...
• Every faculty member wants their own cluster:
  – No waiting time, local software stack
  – Hardware optimized for *their* problem
• Those who don’t want their own want it for free
• Systems get old quickly. You’ll drop 200 spots a year in the Top 500. If you win a grant for hardware money, how will you replace it in 24 months, or will you become irrelevant?
• Can’t we get free access from {NSF, NASA, DOE, DOD}?

Centers
• All the (NSF) centers have great value, and all have made massive contributions to the field.
• The TeraGrid serves many needs for big users, *but*:
  – Data migration is an issue, particularly if data is generated locally
  – The environment isn’t flexible for apps with, *ahem*, “novel” requirements
  – It is tough to do nimble allocations to seed research growth; the same goes for education
• TeraGrid delivers *cycles* to your users, but not the rest of what a computation center should, such as:
  – (Human) services and expertise
  – Visualization
  – Data life cycle management
• Centers are a partial solution!

The Good News
There are a number of compelling arguments for consolidating HPC funding that have emerged recently:
• Funding agencies are increasingly concerned about stewardship of their computing investments and data (management plans are often required).
• Physical requirements of new systems make the “machine in a closet” approach more costly.
• Increasing campus focus on security makes distributed servers unpopular.
• HPC is unique among research infrastructure (more on this later).
There is much opportunity to provide both carrots and sticks to the user community.

Guiding Principles in an HPC Business Model
• Free, unlimited services are never successful, and never end up being either free or unlimited.
• Asking any part of the university for money and offering nothing tangible in return is a bad plan.
• Funding from multiple sources is a must. Hardware is the easiest money to find “elsewhere”.
• “Self-sustaining” is a pipe dream.
• The fundamental goal is to increase research productivity (increase $, and discovery will follow).

Guiding Principles in an HPC Business Model
• There is a difference between setting up a cluster and making HPC-enabled research work. This gap must be filled.
• Be prepared for success (this isn’t easy).
• Provide incentives to participate (mostly) and disincentives not to (a little), but don’t be too heavy-handed.
• 90% capture is good enough; the other 10% aren’t worth the price.

How to Build a Center
• First, acquire allies. You need several types:
  – Administrators
  – Faculty
  – Vendors
• Some allies are more equal than others; a couple of bulldozers are worth a lot of termites.
• Then, make allies partners: this means everyone has to *put in* something, and everyone has to *get* something (including the administrators).
• Don’t start off empty-handed...

How to Build a Center
• Third, find some money to get started.
• Figure out how you can provide value (e.g., beyond the machine, what is needed for success?). Get a couple of champions who are research leaders to buy in.
• Plan modestly, but expect success. You won’t get $10M to start, but you need to be prepared if you do (e.g., if your hardware budget triples due to wild demand, does your model scale the people, or do you go back hat in hand?).
• Put in milestones for growth, and make sure all parties know what success is and are ready for it. Do the same for failure... under what circumstances should they stop funding you?

How to Build a Center
• Figure out what you can measure to determine success. Track everything you can (e.g., I know *exactly* what percentage of total university research expenditures comes from our users, and what % of proposal dollars involves the center).
• Get faculty buy-in. Figure out what the key faculty need.
• Early successes are critical; word gets around. Pick 5 groups, and do whatever it takes to make them view the center as critical.
• Now that you have a plan, be prepared to scrap it at a moment’s notice. Be opportunistic and flexible.

...And Then
• The day you get started, you need to start working on your upgrade. If you don’t know what your next 5 proposals are going to be, you aren’t pushing the pipeline hard enough.
• Build a great staff. Your HPC leader is not the lead admin; it’s the person who can write grant proposals.
• You need someone who has production HPC expertise. Once you have some of this in-house, you can train more...

HPC is important. So is world peace. Why should we get resources?
• HPC is in constant competition with other research/university priorities
  – IT is already a huge line item in the budget of every university and every unit in it (ASU: $90M, half distributed).
  – It is true that HPC is critical to research progress, but somebody will make the same argument about the electron microscope down the hall, or wetlab space, or sequencers, or...
  – And just because it’s important doesn’t mean anyone cares...

Dealing With Administrators
• This comes in several forms (dealt with on the slides to follow), but administrators typically have a few things in common:
  – Despite the opinion of others, they are always underfunded, and they want to stretch what they have as far as they can. *Leveraging dollars from elsewhere is important.*
  – They really want their part of the university to succeed, and they have some plan for how *they* want to do that. *Understand their goals and priorities, and try to help them get there rather than change them.*
  – They’d rather make an investment they can point to as a win than accept a cost they must bear forever.
• In the end, you are going to ask for money. Everyone who walks through their door does. Try a new approach... ask them what they would want from an HPC center on campus, and find ways there is value for them. Tell them how you are going to leverage other funds beyond what you are asking for.

Asking IT for Support… No New Problems
• It is not in the best interest of university IT for each faculty member to run their own cluster and fileserver.
  – Security: IT is still responsible for the network
  – Data integrity: who will the Inspector General call when federally funded research data is lost?
• Faculty will build clusters... IT can choose either to manage them or not to manage them.
  – If you don’t manage them, they will be broken, underutilized security problems about which you get lots of calls, and everyone will be unhappy.
  – If you do manage them, everyone will want different things, it will be impossible to keep track of local state on each of them, and everyone will be unhappy.

Asking the Research Office for Support
• ROI: Return on Investment
  – Myth: VPRs want you to make great discoveries
  – Reality: VPRs want you to discover you’ve been externally funded.
• The research office is not a funding agency; a good research office invests in centers that provide a return on investment. For HPC centers, this means either:
  – Win your own grants
  – Drive research wins (how do you measure impact?)
• Exemplar: TACC

Why Others Invest
• State Legislatures: economic development; states want to create jobs. They will care if you bring in companies or create new companies (employing people in your center does not count as job creation :)
• Academic Units: deans are already spending money on individual faculty clusters and their associated costs.

The Good News: Leverage
• HPC/research computing can affect all these areas...
  – The research office is already investing in HPC through startup packages (on individual faculty clusters... in our case, >$400k/yr)
  – IT is incurring support costs, one way or another
  – Someone is covering facilities costs (maybe local units)
  – HPC can drive research across a broad range of areas
  – The recent focus on HPC competitiveness makes economic development an easier argument
• It’s *not* just another piece of research infrastructure.

A Few Myths (for Faculty)
• “F&A will pay for this!”
  – Research computing is distinctly NOT in the indirect cost rate... F is physical plant only, and is break-even... A is everything else, and is usually a money loser.
• “We can set up a cost recovery center!” (or: faculty are willing to part with money...)
  – This just doesn’t work, at least not entirely. Academic centers that tried this are gone.
  – Many commercial services are trying to sell cycles (IBM, Sun, Amazon) without a huge amount of success.
• “I won an MRI, and the hardware is the hard part!”
  – Hardware money is relatively easy, and is actually not the biggest cost. Facilities costs for 20 kW racks are huge, and personnel costs go on forever.
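To make “facilities costs for 20 kW racks are huge” concrete, here is a rough sketch of one rack's annual electricity bill. The $0.10/kWh price and the cooling-overhead multiplier (a PUE-style factor) are hypothetical placeholders, not figures from the talk, and the function names are illustrative; substitute local rates.

```python
# Rough annual electricity cost of one continuously loaded rack.
# PRICE_PER_KWH and the overhead multiplier are hypothetical
# placeholders, not figures from the talk.

HOURS_PER_YEAR = 24 * 365   # 8,760 h (ignores leap years)


def annual_kwh(rack_kw: float) -> float:
    """Energy drawn by a rack running at rack_kw all year."""
    return rack_kw * HOURS_PER_YEAR


def annual_energy_cost(rack_kw: float, price_per_kwh: float,
                       overhead: float = 1.0) -> float:
    """Rack energy cost scaled by a facility overhead factor.

    overhead=1.0 counts only the rack itself; a value such as 1.5
    (a PUE-style multiplier) adds cooling and power-distribution losses.
    """
    if overhead < 1.0:
        raise ValueError("overhead multiplier cannot be below 1.0")
    return annual_kwh(rack_kw) * price_per_kwh * overhead


if __name__ == "__main__":
    PRICE_PER_KWH = 0.10    # hypothetical $/kWh
    for overhead in (1.0, 1.5):
        cost = annual_energy_cost(20, PRICE_PER_KWH, overhead)
        print(f"20 kW rack, overhead {overhead}: ${cost:,.0f}/yr")
```

At these placeholder rates a single 20 kW rack uses 175,200 kWh and costs roughly $17,500 to $26,300 a year in electricity alone, before floor space, cooling plant, or staff.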

The ASU Experience
• Begun with endowment funds
• Housed in the Fulton School of Engineering
• Construction planning began 9/04
• Operations began 9/05
• Year 1 budget: $600k (core system 2 TF)
• Year 4 budget: $4,800,000 (core system 50 TF)
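The Year 1 and Year 4 figures imply an average growth rate. The sketch below assumes three annual steps between Year 1 and Year 4 and a constant yearly multiplier; its "budget per core TF" divides the whole budget (staff, storage, and facilities included) by core-system size, so it is only a rough ratio. The function name is illustrative.

```python
# Year 1 -> Year 4 growth implied by the ASU budget and core-system figures.
# Assumes three annual steps and a constant yearly multiplier.
# "Budget per core TF" divides the whole budget by core-system size,
# so it overstates the hardware cost per teraflop.

BUDGET = {1: 600_000, 4: 4_800_000}   # dollars
CORE_TF = {1: 2, 4: 50}               # core-system teraflops
STEPS = 4 - 1


def annual_growth_factor(start: float, end: float, years: int) -> float:
    """Constant yearly multiplier that turns start into end over `years` steps."""
    return (end / start) ** (1 / years)


if __name__ == "__main__":
    print(f"Budget growth:  {annual_growth_factor(BUDGET[1], BUDGET[4], STEPS):.2f}x/yr")
    print(f"Core TF growth: {annual_growth_factor(CORE_TF[1], CORE_TF[4], STEPS):.2f}x/yr")
    for year in (1, 4):
        print(f"Year {year}: ${BUDGET[year] / CORE_TF[year]:,.0f} of budget per core TF")
```

The budget roughly doubled each year on average, while core-system capacity grew about 2.9x per year, so budget per core teraflop fell from $300,000 to $96,000.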

Funding Model
• Core funds: $1.25M
• University investment: UTO (IT) and the Research Office contribute $250k each annually (40% of total)
• Three academic units (were) investing $250k each:
  – Fulton School of Engineering
  – Liberal Arts and Science
  – Life Sciences
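A quick consistency check on the figures above: five contributors at $250k each make up the $1.25M core, and the two central offices supply 40% of it. The sketch only tallies the slide's numbers; the dictionary keys and function names are illustrative.

```python
# Core-fund contributions as listed on the Funding Model slide.
CONTRIBUTIONS = {
    "UTO (IT)": 250_000,
    "Research Office": 250_000,
    "Fulton School of Engineering": 250_000,
    "Liberal Arts and Science": 250_000,
    "Life Sciences": 250_000,
}
CENTRAL = ("UTO (IT)", "Research Office")


def total() -> int:
    """Sum of all annual core-fund contributions, in dollars."""
    return sum(CONTRIBUTIONS.values())


def central_share() -> float:
    """Fraction of core funds coming from central university offices."""
    return sum(CONTRIBUTIONS[k] for k in CENTRAL) / total()


if __name__ == "__main__":
    print(f"Core funds: ${total():,}")
    print(f"Central share: {central_share():.0%}")
    print(f"Academic-unit share: {1 - central_share():.0%}")
```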

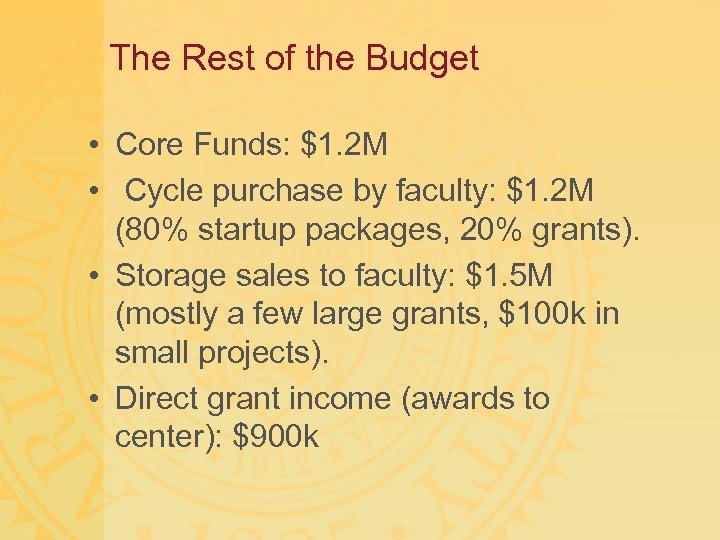

The Rest of the Budget
• Core funds: $1.2M
• Cycle purchases by faculty: $1.2M (80% startup packages, 20% grants)
• Storage sales to faculty: $1.5M (mostly a few large grants, $100k in small projects)
• Direct grant income (awards to center): $900k
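Tallying these four lines reproduces the Year 4 budget of $4,800,000 given on The ASU Experience slide, and shows that core funds are only a quarter of it. This slide lists core funds as $1.2M while the Funding Model slide gives $1.25M; the sketch uses the figures exactly as stated here, and its names are illustrative.

```python
# Year 4 budget lines as listed on "The Rest of the Budget" slide.
BUDGET_LINES = {
    "Core funds": 1_200_000,
    "Cycle purchases by faculty": 1_200_000,
    "Storage sales to faculty": 1_500_000,
    "Direct grant income": 900_000,
}
STARTUP_FRACTION_OF_CYCLES = 0.80   # "80% startup packages, 20% grants"


def total_budget() -> int:
    """Sum of all budget lines, in dollars."""
    return sum(BUDGET_LINES.values())


def shares() -> dict[str, float]:
    """Each line's fraction of the total budget."""
    t = total_budget()
    return {name: amount / t for name, amount in BUDGET_LINES.items()}


if __name__ == "__main__":
    print(f"Total: ${total_budget():,}")
    for name, share in shares().items():
        print(f"  {name:<28} {share:6.2%}")
    cycles = BUDGET_LINES["Cycle purchases by faculty"]
    print(f"Cycle purchases from startup packages: "
          f"${cycles * STARTUP_FRACTION_OF_CYCLES:,.0f}")
```

Storage sales are the largest single line (31.25%), and startup packages alone account for about $960k of cycle purchases.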

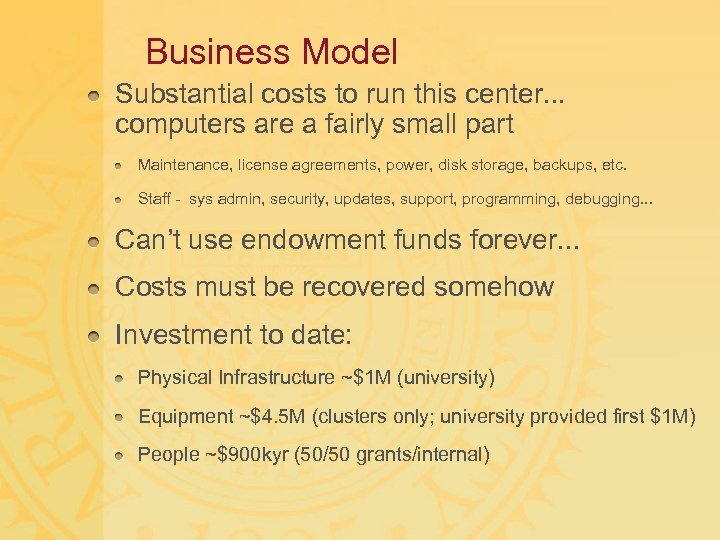

Business Model
• Substantial costs to run this center... computers are a fairly small part
  – Maintenance, license agreements, power, disk storage, backups, etc.
  – Staff: sysadmin, security, updates, support, programming, debugging...
• Can’t use endowment funds forever... costs must be recovered somehow
• Investment to date:
  – Physical infrastructure: ~$1M (university)
  – Equipment: ~$4.5M (clusters only; university provided first $1M)
  – People: ~$900k/yr (50/50 grants/internal)

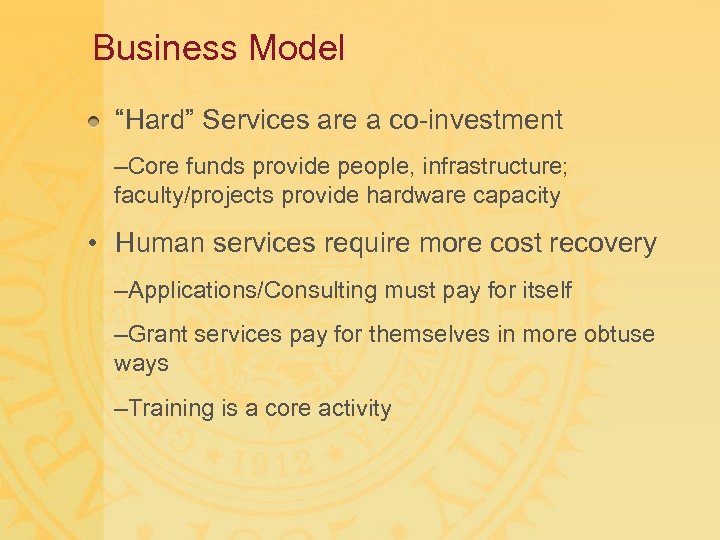

Business Model • “Hard” services are a co-investment: core funds provide people and infrastructure; faculty and projects provide hardware capacity. • Human services require more cost recovery: applications/consulting must pay for itself; grant services pay for themselves in less direct ways; training is a core activity.

Business Model “Hard” Services are a co-investment –Core funds provide people, infrastructure; faculty/projects provide hardware capacity • Human services require more cost recovery –Applications/Consulting must pay for itself –Grant services pay for themselves in more obtuse ways –Training is a core activity

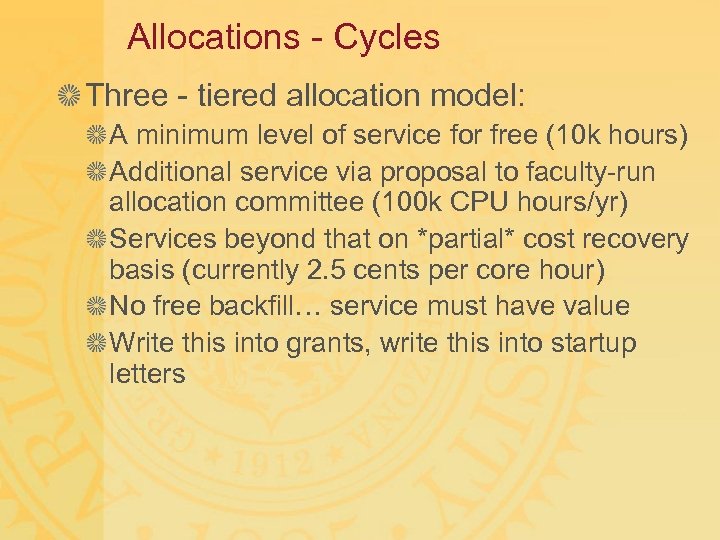

Allocations - Cycles • Three-tiered allocation model: a minimum level of service for free (10k hours); additional service via proposal to a faculty-run allocation committee (100k CPU hours/yr); service beyond that on a *partial* cost-recovery basis (currently 2.5 cents per core hour). • No free backfill… service must have value. • Write this into grants; write this into startup letters.
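The three tiers can be expressed as a small charging function. This is a sketch under stated assumptions, not the center's actual accounting: it treats CPU hours and core hours as the same unit, assumes a committee award is added on top of the free 10k hours and capped at 100k per year, and bills only the hours beyond both at 2.5 cents. `annual_charge` is a hypothetical name.

```python
from decimal import Decimal

FREE_HOURS = 10_000                     # tier 1: free minimum
MAX_COMMITTEE_HOURS = 100_000           # tier 2: largest committee award per year (assumed)
RATE_PER_CORE_HOUR = Decimal("0.025")   # tier 3: 2.5 cents per core hour


def annual_charge(core_hours: int, committee_award: int = 0) -> Decimal:
    """Dollar charge for one year of usage under the three-tier model.

    Assumes the committee award is additive to the free tier and capped at
    MAX_COMMITTEE_HOURS; only hours beyond both tiers are billed.
    """
    if core_hours < 0 or committee_award < 0:
        raise ValueError("hours must be non-negative")
    award = min(committee_award, MAX_COMMITTEE_HOURS)
    billable = max(0, core_hours - FREE_HOURS - award)
    # Decimal avoids binary rounding error in currency arithmetic.
    return billable * RATE_PER_CORE_HOUR


if __name__ == "__main__":
    print(annual_charge(5_000))                             # 0.000
    print(annual_charge(200_000, committee_award=100_000))  # 2250.000
    print(annual_charge(200_000))                           # 4750.000
```

For example, a group that uses 200k core hours in a year with a full 100k committee award pays for 90k hours, or $2,250; without the award it would pay $4,750. If the 100k figure is meant to include the free 10k, the `billable` line would need adjusting.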

Allocations - Storage • There are many kinds of storage. • High-performance storage: large allocations free, but short term (~30 days). • Long-term, high-integrity storage: $2,000/TB for a 5-year lease. Still less than cost… Not forever (but a terabyte will be almost free in 5 years). Many times the raw disk cost, but managed and secure, with snapshots and backups (multi-site mirror). • Premium service at $3k includes off-site copies (outsourced). • Ignore the lowest end (easily replaceable data… Best Buy can sell attached disk for $150/TB).
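The lease prices are easier to compare once converted to a per-year figure. The sketch below assumes prices are per TB for the whole term, paid up front; the premium tier's 5-year term and per-TB pricing are assumptions, since the slide only says "$3k". `lease_quote` is a hypothetical helper.

```python
LEASE_YEARS = 5          # long-term lease term from the slide
RAW_DISK_PER_TB = 150    # retail attached disk, $/TB, one-time purchase

# $/TB for the whole lease. Premium term and per-TB basis are assumed.
TIER_PRICE_PER_TB = {
    "long-term": 2_000,
    "premium": 3_000,
}


def lease_quote(terabytes: int, tier: str) -> dict[str, float]:
    """Up-front cost, effective yearly cost, and markup over raw disk."""
    if terabytes <= 0:
        raise ValueError("terabytes must be positive")
    price = TIER_PRICE_PER_TB[tier]
    total = terabytes * price
    return {
        "total": total,
        "per_year": total / LEASE_YEARS,
        "per_tb_year": price / LEASE_YEARS,
        "markup_vs_raw_disk": price / RAW_DISK_PER_TB,
    }


if __name__ == "__main__":
    for tier in TIER_PRICE_PER_TB:
        q = lease_quote(10, tier)
        print(f"{tier}: ${q['total']:,} total, ${q['per_tb_year']:.0f}/TB-yr, "
              f"{q['markup_vs_raw_disk']:.1f}x raw disk")
```

At $400/TB per year, the long-term tier's five-year price is still roughly 13 times the one-time retail disk price, the gap the slide justifies with management, snapshots, and mirroring.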

Other Tricky Issues • Service vs. equipment and indirect cost (IDC): equipment is exempt from IDC, so to get faculty to participate (and capture those funds), HPC fees had better be exempt as well. • We did this… it's my greatest administrative achievement! • Portfolio balance: as the number of projects grows, protecting the core service mission is a challenge. • Protecting core funding
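Why the IDC treatment matters can be seen with a simplified calculation. It assumes a single flat IDC rate charged on top of service fees and none on equipment, and uses a hypothetical 50% rate chosen only for illustration; real rates and cost bases vary by institution. The core-hour conversion reuses the 2.5-cent rate from the Allocations slide.

```python
HYPOTHETICAL_IDC_RATE = 0.50  # illustration only; not an actual campus rate
CENTS_PER_CORE_HOUR = 2.5     # partial cost-recovery rate from the Allocations slide


def hpc_purchasing_power(grant_dollars: float, idc_rate: float, exempt: bool) -> float:
    """Dollars of HPC fees a fixed grant budget can cover.

    If fees are treated as a service, IDC is charged on top of them, so only
    grant_dollars / (1 + idc_rate) reaches the center. If they are treated
    like equipment (IDC-exempt), the full amount does.
    """
    if exempt:
        return grant_dollars
    return grant_dollars / (1 + idc_rate)


def core_hours(dollars: float) -> float:
    """Convert dollars of HPC fees to core hours at the cost-recovery rate."""
    return dollars * 100 / CENTS_PER_CORE_HOUR


if __name__ == "__main__":
    budget = 45_000
    for exempt in (True, False):
        dollars = hpc_purchasing_power(budget, HYPOTHETICAL_IDC_RATE, exempt)
        print(f"exempt={exempt}: ${dollars:,.0f} -> {core_hours(dollars):,.0f} core hours")
```

Under these assumptions the same $45k line item buys a third fewer core hours when fees carry IDC, which is the incentive problem the slide describes.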

What Others Have Done… • Final Report: A Workshop on Effective Approaches to Campus Research Computing Cyberinfrastructure • http://middleware.internet2.edu/crcc/docs/internet2-crcc-report-200607.html (sponsored by Internet2 and NSF) • The conclusions offered a list of problems, but no solutions, including: Major negative reinforcements exist in the current environment. For example, grant solicitations at several major funding agencies seem to favor "autonomous, small clusters in closets" over more sustainable and secure resources. There is increasing conflict between research computing systems and campus security procedures. The profile of researchers who use campus research computing cyberinfrastructure seems different from those who use national resources. There are several campuses which are very active in supporting local research cyberinfrastructures, but even they are challenged with chronically insufficient resources. • (Lack of) attention by funding agencies to this problem was pointed out 6 times in the executive summary, which tells you the goal of the meeting.

Mistakes I’ve Made • Joint funding models are meant to be broken… ours didn't have built-in consequences. Deep grant entanglements mean that “shutting off” units when they bail out is not practical (or ethical). If I had it to do over, the unit contributions would have been a central tax. • Needed to move more aggressively in growing the personnel base. Hiring has been *very* difficult.

Takeaways • You need allies to run a center. • Campus funding is politically complex, but doable. • Make sure everyone contributes, one way or another, but spread the burden… everyone gets more than they pay for. • Centralizing HPC on the campus is worth doing.

Discussion