
Mining Behavior Graphs for “Backtrace” of Noncrashing Bugs

Chao Liu, Xifeng Yan, Hwanjo Yu, Jiawei Han
University of Illinois at Urbana-Champaign

Philip S. Yu
IBM T. J. Watson Research

Presented by: Chao Liu

Outline
• Motivations
• Related Work
• Classification of Program Executions
• Extract “Backtrace” from Classification Dynamics
• Case Study
• Conclusions

Motivations
• Software is full of bugs
  – Windows 2000: 35M LOC
    • 63,000 known bugs at the time of release, about 2 per 1,000 lines
• Software failures are costly
  – The Ariane 5 explosion was due to “errors in the software of the inertial reference system” (Ariane 5 Flight 501 inquiry board report, http://ravel.esrin.esa.it/docs/esa-x-1819eng.pdf)
  – A study by the National Institute of Standards and Technology found that software errors cost the U.S. economy about $59.5 billion annually (http://www.nist.gov/director/prog-ofc/report02-3.pdf)
  – Image courtesy of CNN.com
• Testing and debugging are laborious and expensive
  – “50% of my company employees are testers, and the rest spends 50% of their time testing!” (Bill Gates, 1995)

Bug Localization
• Automatically pinpoint the most suspicious places in the code
• Two kinds of bugs w.r.t. symptoms
  – Crashing bugs
    • Typical symptoms: segmentation faults
    • Reasons: memory access violations
  – Noncrashing bugs
    • Typical symptoms: smooth executions but unexpected outputs
    • Reasons: logic or semantic errors
• An example

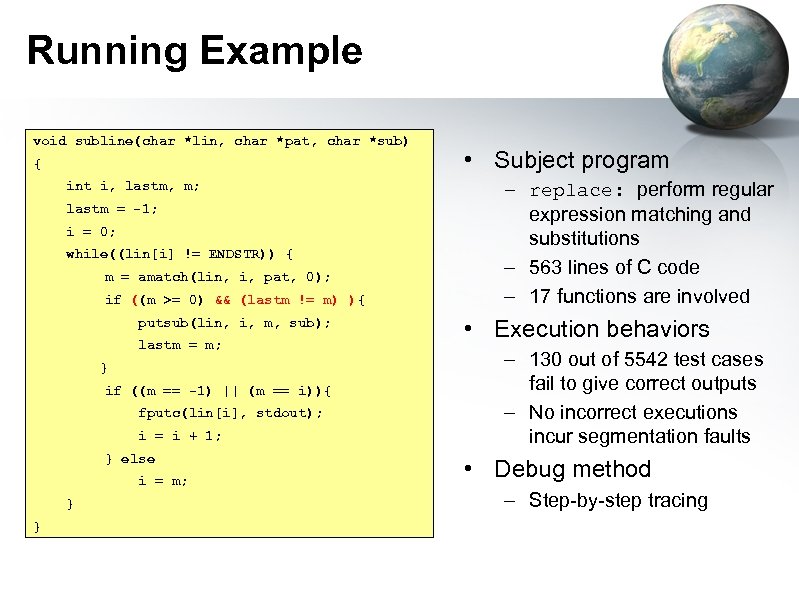

Running Example

    void subline(char *lin, char *pat, char *sub)
    {
        int i, lastm, m;
        lastm = -1;
        i = 0;
        while ((lin[i] != ENDSTR)) {
            m = amatch(lin, i, pat, 0);
            if (m >= 0) {   /* buggy; correct: if ((m >= 0) && (lastm != m)) */
                putsub(lin, i, m, sub);
                lastm = m;
            }
            if ((m == -1) || (m == i)) {
                fputc(lin[i], stdout);
                i = i + 1;
            } else
                i = m;
        }
    }

• Subject program
  – replace: performs regular expression matching and substitutions
  – 563 lines of C code
  – 17 functions are involved
• Execution behaviors
  – 130 out of 5542 test cases fail to give correct outputs
  – No incorrect execution incurs a segmentation fault
• Debug method
  – Step-by-step tracing

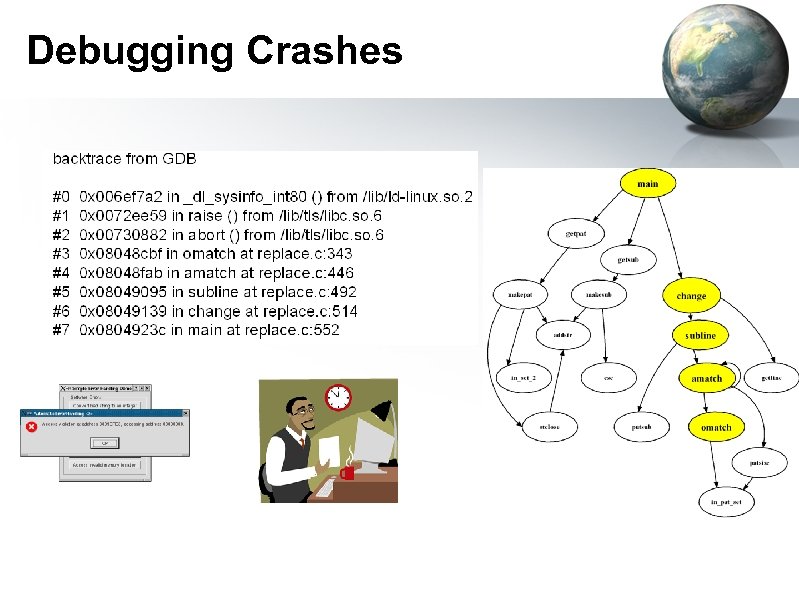

Debugging Crashes

Bug Localization via Backtrace
• Can we obtain a backtrace for noncrashing bugs?
• Major challenges
  – No abnormality is visible on the surface.
  – It is unclear when and where the abnormality happens.



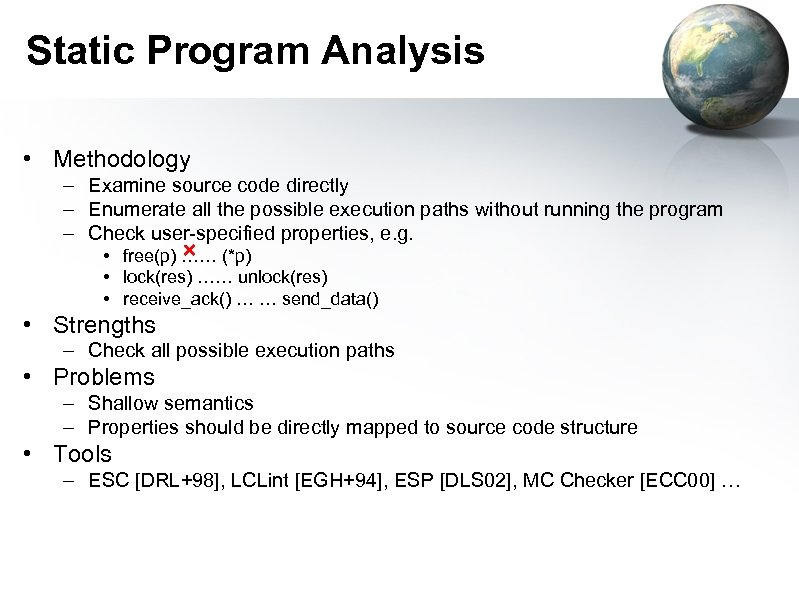

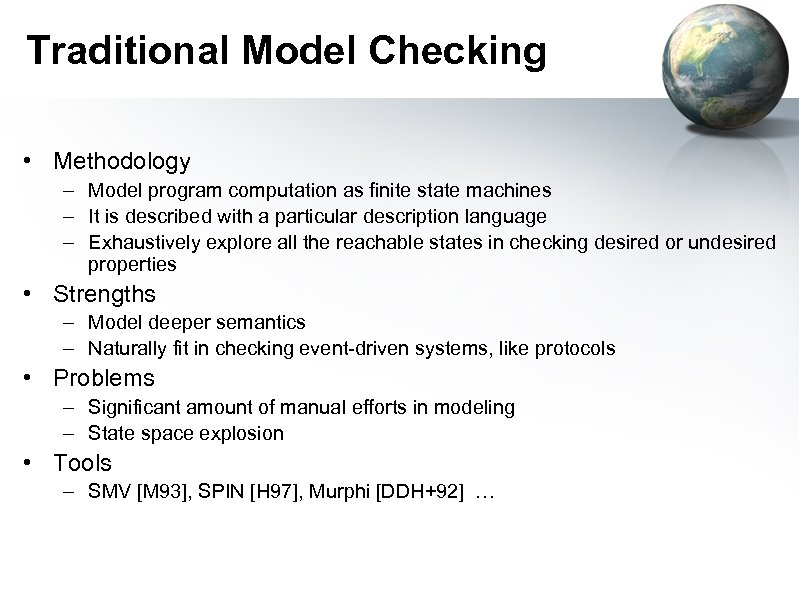

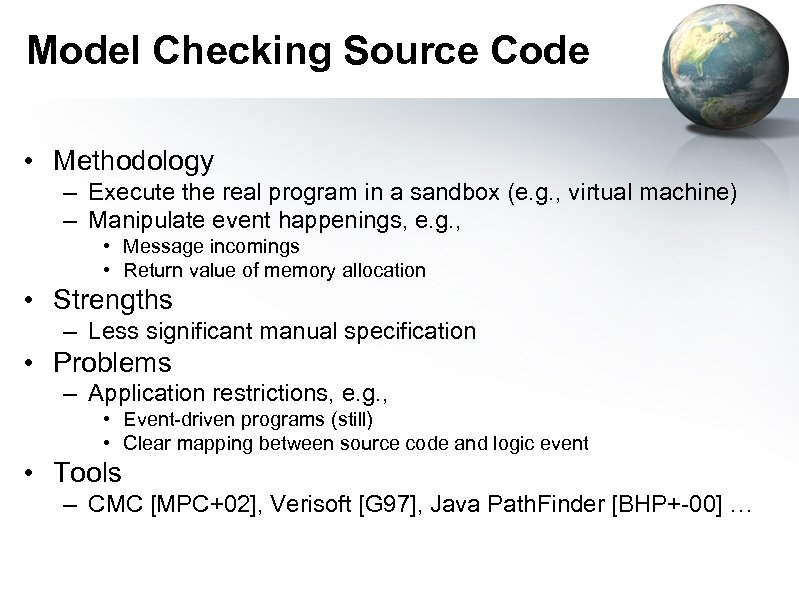

Related Work
• Crashing bugs
  – Memory access monitoring
    • Purify [HJ92], Valgrind [SN00], GDB …
• Noncrashing bugs
  – Static program analysis
  – Traditional model checking
  – Model checking source code

Static Program Analysis
• Methodology
  – Examine source code directly
  – Enumerate all the possible execution paths without running the program
  – Check user-specified properties, e.g.,
    • free(p) …… (*p)
    • lock(res) …… unlock(res)
    • receive_ack() …… send_data()
• Strengths
  – Checks all possible execution paths
• Problems
  – Shallow semantics
  – Properties must map directly to source code structure
• Tools
  – ESC [DRL+98], LCLint [EGH+94], ESP [DLS02], MC Checker [ECC00] …

Traditional Model Checking
• Methodology
  – Model program computation as finite state machines
  – The model is written in a dedicated description language
  – Exhaustively explore all the reachable states to check desired or undesired properties
• Strengths
  – Models deeper semantics
  – Naturally fits event-driven systems, such as protocols
• Problems
  – Significant manual effort in modeling
  – State space explosion
• Tools
  – SMV [M93], SPIN [H97], Murphi [DDH+92] …
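The exhaustive exploration of reachable states can be sketched as a breadth-first search over a small explicit state graph. This is only an illustration: the `Fsm` type, the `fsm_reaches` function, and the `MAX_STATES` bound are hypothetical names chosen here, and the sketch does not reflect how SMV, SPIN, or Murphi are implemented.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_STATES 16  /* hypothetical bound for this sketch */

/* adj[s][t] is true when the machine can step from state s to state t. */
typedef struct {
    int n;                               /* number of states in use */
    bool adj[MAX_STATES][MAX_STATES];
} Fsm;

/* Breadth-first search: returns true if state `bad` is reachable from `init`. */
bool fsm_reaches(const Fsm *m, int init, int bad)
{
    assert(m->n > 0 && m->n <= MAX_STATES);
    assert(init >= 0 && init < m->n && bad >= 0 && bad < m->n);

    bool seen[MAX_STATES];
    int queue[MAX_STATES];   /* each state is enqueued at most once */
    int head = 0, tail = 0;

    memset(seen, 0, sizeof seen);
    seen[init] = true;
    queue[tail++] = init;

    while (head < tail) {
        int s = queue[head++];
        if (s == bad)
            return true;
        for (int t = 0; t < m->n; t++) {
            if (m->adj[s][t] && !seen[t]) {
                seen[t] = true;
                queue[tail++] = t;
            }
        }
    }
    return false;
}
```

The `seen` array grows with the number of states, which is where state space explosion shows up in practice: real models have far more states than a fixed table like this can hold.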

Model Checking Source Code
• Methodology
  – Execute the real program in a sandbox (e.g., a virtual machine)
  – Manipulate the occurrence of events, e.g.,
    • Incoming messages
    • Return values of memory allocation
• Strengths
  – Requires less manual specification
• Problems
  – Application restrictions, e.g.,
    • Event-driven programs (still)
    • A clear mapping between source code and logical events is needed
• Tools
  – CMC [MPC+02], VeriSoft [G97], Java PathFinder [BHP+-00] …

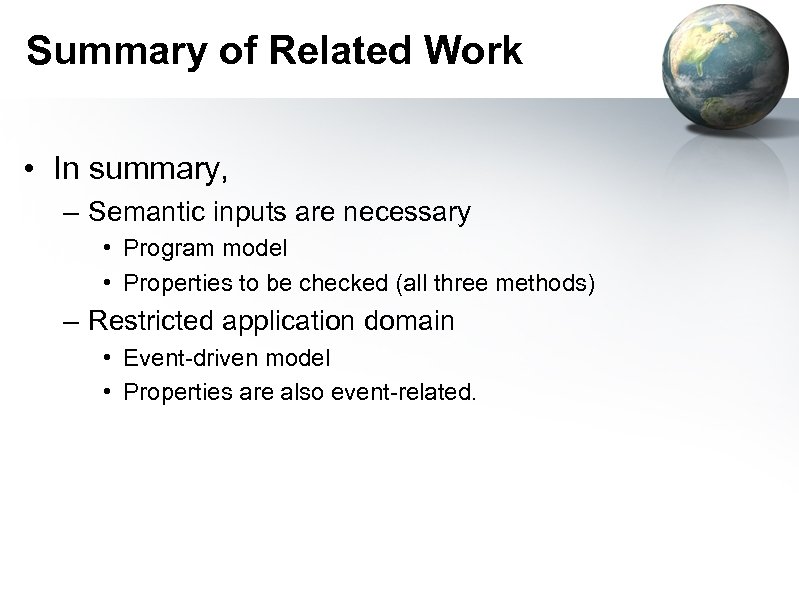

Summary of Related Work
• In summary,
  – Semantic inputs are necessary
    • Program model
    • Properties to be checked (all three methods)
  – Restricted application domain
    • Event-driven model
    • Properties are also event-related

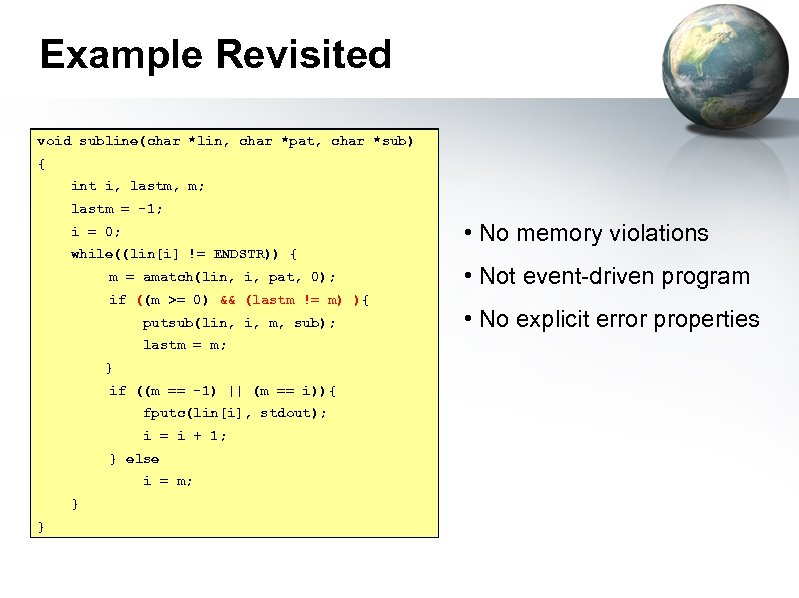

Example Revisited

    void subline(char *lin, char *pat, char *sub)
    {
        int i, lastm, m;
        lastm = -1;
        i = 0;
        while ((lin[i] != ENDSTR)) {
            m = amatch(lin, i, pat, 0);
            if (m >= 0) {   /* buggy; correct: if ((m >= 0) && (lastm != m)) */
                putsub(lin, i, m, sub);
                lastm = m;
            }
            if ((m == -1) || (m == i)) {
                fputc(lin[i], stdout);
                i = i + 1;
            } else
                i = m;
        }
    }

• No memory violations
• Not an event-driven program
• No explicit error properties


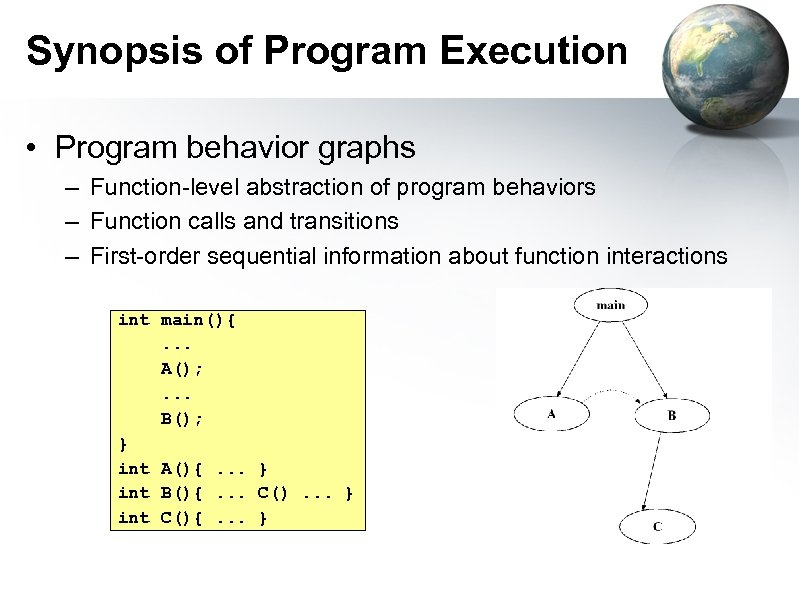

Synopsis of Program Execution
• Program behavior graphs
  – Function-level abstraction of program behaviors
  – Function calls and transitions
  – First-order sequential information about function interactions

    int main() { ... A(); ... B(); }
    int A() { ... }
    int B() { ... C(); ... }
    int C() { ... }
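A minimal sketch of how such a behavior graph could be built from a trace of function enter and exit events. It assumes one reading of the slide: a call edge f → g means f calls g, and a transition edge f → g means g is called right after f returns, within the same caller. The `Event` and `BehaviorGraph` types, `build_behavior_graph`, and the size bounds are hypothetical names for illustration, not the authors' implementation.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_FUNCS 8    /* hypothetical bounds for this sketch */
#define MAX_DEPTH 32

typedef enum { EV_ENTER, EV_EXIT } EventKind;
typedef struct { EventKind kind; int func; } Event;

typedef struct {
    bool call[MAX_FUNCS][MAX_FUNCS];   /* call[f][g]: f calls g */
    bool trans[MAX_FUNCS][MAX_FUNCS];  /* trans[f][g]: g is called right after
                                          f returns, within the same caller */
} BehaviorGraph;

/* Replays the trace with an explicit call stack and records both edge kinds. */
void build_behavior_graph(const Event *trace, int len, BehaviorGraph *g)
{
    int stack[MAX_DEPTH];        /* functions currently on the call stack */
    int last_callee[MAX_DEPTH];  /* last function called by each frame, or -1 */
    int depth = 0;

    memset(g, 0, sizeof *g);
    for (int i = 0; i < len; i++) {
        int f = trace[i].func;
        assert(f >= 0 && f < MAX_FUNCS);
        if (trace[i].kind == EV_ENTER) {
            assert(depth < MAX_DEPTH);
            if (depth > 0) {
                int caller = stack[depth - 1];
                int prev = last_callee[depth - 1];
                g->call[caller][f] = true;
                if (prev >= 0)
                    g->trans[prev][f] = true;
                last_callee[depth - 1] = f;
            }
            stack[depth] = f;
            last_callee[depth] = -1;
            depth++;
        } else {
            assert(depth > 0 && stack[depth - 1] == f);
            depth--;
        }
    }
}
```

For the four-function program on this slide, the trace enter main, enter A, exit A, enter B, enter C, exit C, exit B, exit main yields the call edges main → A, main → B, and B → C, plus the transition edge A → B.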

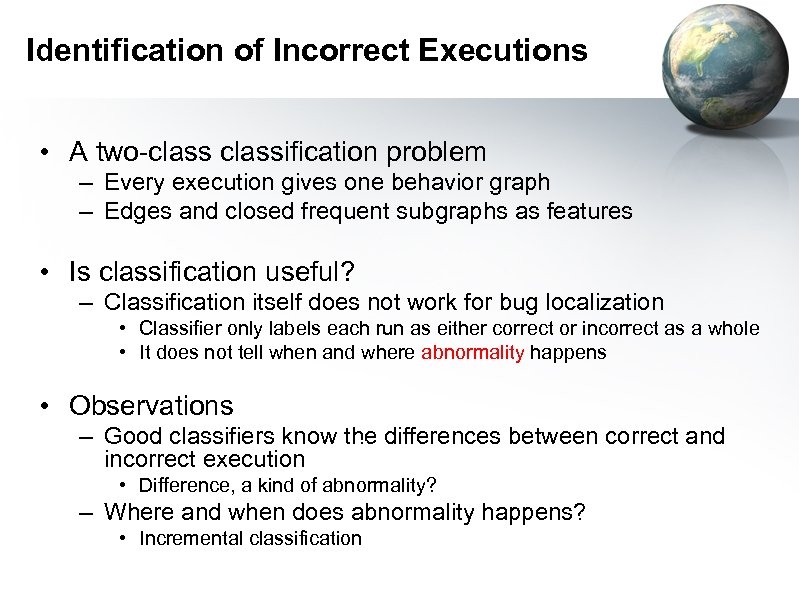

Identification of Incorrect Executions
• A two-class classification problem
  – Every execution gives one behavior graph
  – Edges and closed frequent subgraphs serve as features
• Is classification useful?
  – Classification by itself does not localize bugs
    • A classifier only labels each run, as a whole, as correct or incorrect
    • It does not tell when and where the abnormality happens
• Observations
  – Good classifiers know the differences between correct and incorrect executions
    • Is the difference a kind of abnormality?
  – Where and when does the abnormality happen?
    • Incremental classification


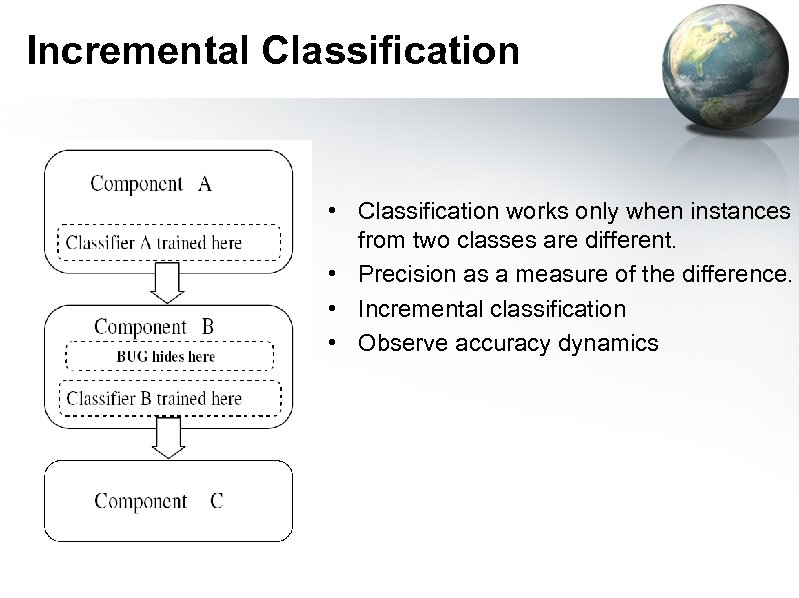

Incremental Classification
• Classification works only when instances from the two classes are different.
• Precision serves as a measure of the difference.
• Incremental classification
• Observe accuracy dynamics
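A minimal sketch of tracking precision across checkpoints, assuming precision is measured on the "incorrect" class (the slides do not say which class is meant) and assuming a classifier has already produced one prediction per run at each checkpoint. The function names and the flat array layout are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Precision on the "incorrect" class: among runs predicted incorrect, the
 * fraction that are truly incorrect. Labels: 1 = incorrect, 0 = correct.
 * Returns 0.0 when no run is predicted incorrect (a convention chosen for
 * this sketch). */
double precision(const int *predicted, const int *actual, size_t n)
{
    size_t tp = 0, fp = 0;
    for (size_t i = 0; i < n; i++) {
        if (predicted[i] == 1) {
            if (actual[i] == 1)
                tp++;
            else
                fp++;
        }
    }
    return (tp + fp) == 0 ? 0.0 : (double)tp / (double)(tp + fp);
}

/* preds holds n_checkpoints rows of n_runs predictions each, row c being the
 * predictions made from the behavior graphs observed up to checkpoint c.
 * Writes one precision value per checkpoint into out. */
void precision_series(const int *preds, const int *actual,
                      size_t n_runs, size_t n_checkpoints, double *out)
{
    assert(preds && actual && out);
    for (size_t c = 0; c < n_checkpoints; c++)
        out[c] = precision(preds + c * n_runs, actual, n_runs);
}
```

Reading the resulting series from the first checkpoint to the last shows where precision changes, which is the kind of dynamics the slide proposes to observe.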

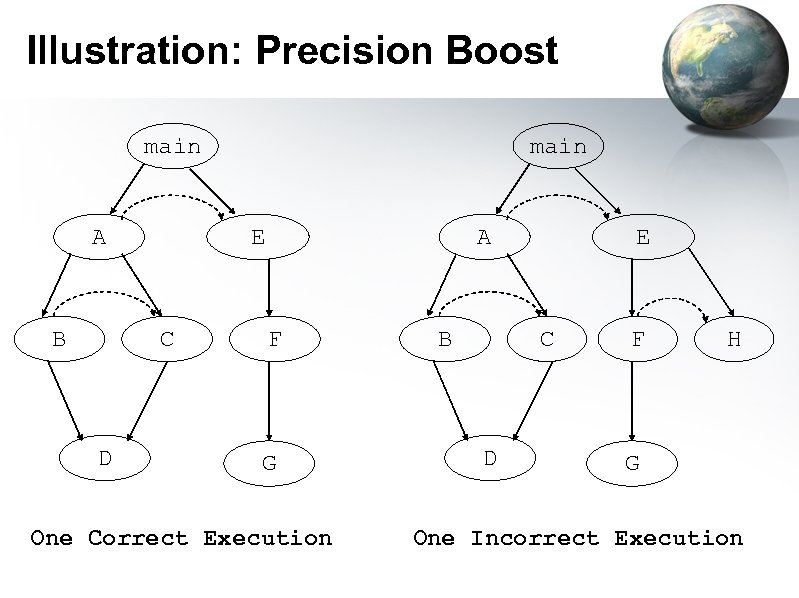

Illustration: Precision Boost
[Figure: behavior graphs of one correct execution and one incorrect execution, with function nodes main and A through H]

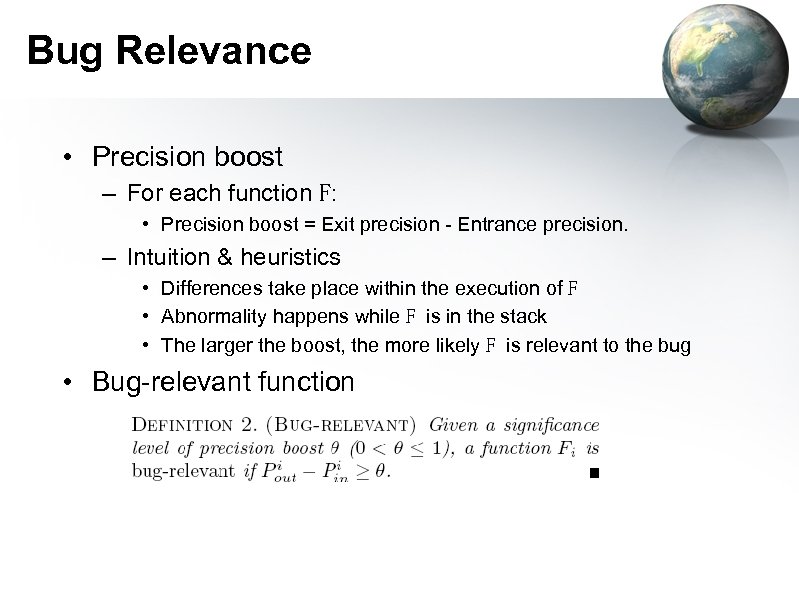

Bug Relevance
• Precision boost
  – For each function F:
    • Precision boost = Exit precision - Entrance precision
  – Intuition and heuristics
    • Differences take place within the execution of F
    • Abnormality happens while F is on the stack
    • The larger the boost, the more likely F is relevant to the bug
• Bug-relevant function
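The precision boost formula can be sketched directly in code. The `PrecisionPair` type and both function names are hypothetical, and picking the single largest boost is only one simple way to apply the "larger boost, more relevant" heuristic.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    const char *name;       /* function name */
    double entrance_prec;   /* classification precision at function entrance */
    double exit_prec;       /* classification precision at function exit */
} PrecisionPair;

/* Precision boost = exit precision - entrance precision. */
double precision_boost(const PrecisionPair *p)
{
    return p->exit_prec - p->entrance_prec;
}

/* Returns the index of the function with the largest precision boost;
 * on ties the earliest entry wins. */
size_t most_relevant(const PrecisionPair *pairs, size_t n)
{
    assert(pairs && n > 0);
    size_t best = 0;
    for (size_t i = 1; i < n; i++) {
        if (precision_boost(&pairs[i]) > precision_boost(&pairs[best]))
            best = i;
    }
    return best;
}
```

A function whose entrance and exit precision are equal gets a boost of zero, so under this heuristic it is treated as unlikely to contain the point where correct and incorrect runs diverge.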


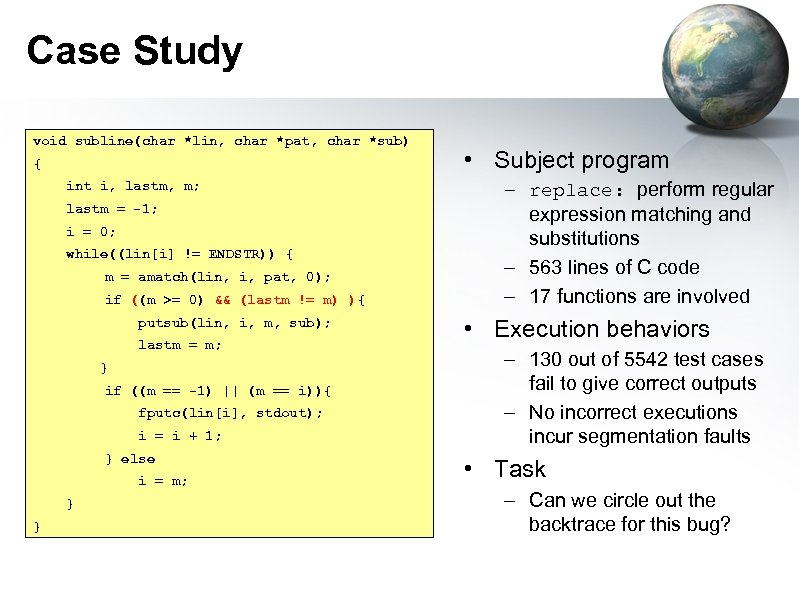

Case Study

    void subline(char *lin, char *pat, char *sub)
    {
        int i, lastm, m;
        lastm = -1;
        i = 0;
        while ((lin[i] != ENDSTR)) {
            m = amatch(lin, i, pat, 0);
            if (m >= 0) {   /* buggy; correct: if ((m >= 0) && (lastm != m)) */
                putsub(lin, i, m, sub);
                lastm = m;
            }
            if ((m == -1) || (m == i)) {
                fputc(lin[i], stdout);
                i = i + 1;
            } else
                i = m;
        }
    }

• Subject program
  – replace: performs regular expression matching and substitutions
  – 563 lines of C code
  – 17 functions are involved
• Execution behaviors
  – 130 out of 5542 test cases fail to give correct outputs
  – No incorrect execution incurs a segmentation fault
• Task
  – Can we circle out the backtrace for this bug?

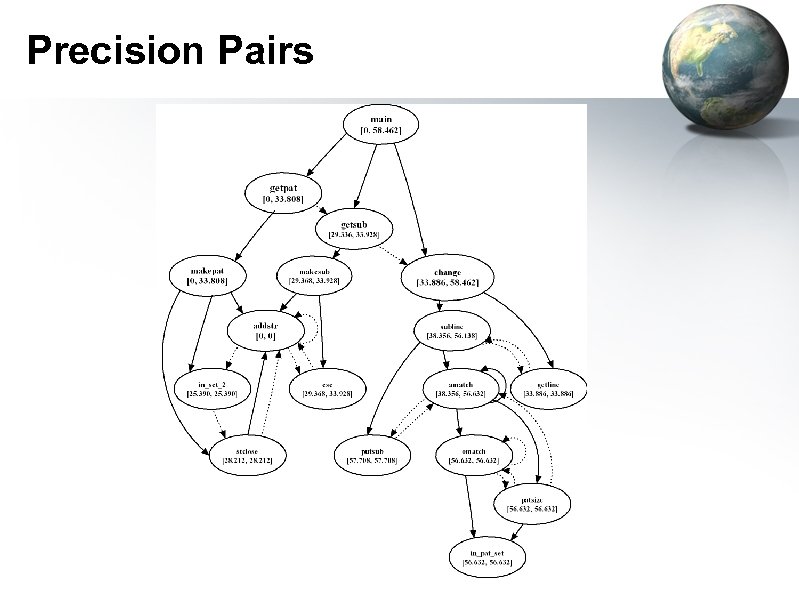

Precision Pairs

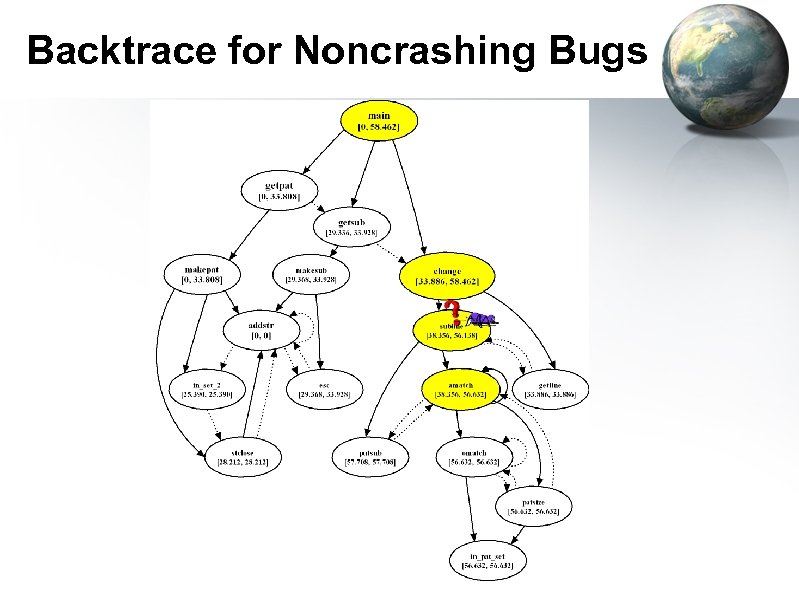

Backtrace for Noncrashing Bugs


Conclusions
• Incorrect executions can be identified from program runtime behaviors.
• Classification dynamics can give away a “backtrace” for noncrashing bugs without any semantic inputs.
• Data mining can contribute to software engineering and systems research in general.

Mining into Software and Systems?


References
• [DRL+98] David L. Detlefs, K. Rustan M. Leino, Greg Nelson, and James B. Saxe. Extended static checking, 1998.
• [EGH+94] David Evans, John Guttag, James Horning, and Yang Meng Tan. LCLint: A tool for using specifications to check code. In Proceedings of the ACM SIGSOFT '94 Symposium on the Foundations of Software Engineering, pages 87-96, 1994.
• [DLS02] Manuvir Das, Sorin Lerner, and Mark Seigle. ESP: Path-sensitive program verification in polynomial time. In Conference on Programming Language Design and Implementation, 2002.
• [ECC00] D. R. Engler, B. Chelf, A. Chou, and S. Hallem. Checking system rules using system-specific, programmer-written compiler extensions. In Proceedings of the Fourth Symposium on Operating Systems Design and Implementation, October 2000.
• [M93] Ken McMillan. Symbolic Model Checking. Kluwer Academic Publishers, 1993.
• [H97] Gerard J. Holzmann. The model checker SPIN. IEEE Transactions on Software Engineering, 23(5):279-295, 1997.
• [DDH+92] David L. Dill, Andreas J. Drexler, Alan J. Hu, and C. Han Yang. Protocol verification as a hardware design aid. In IEEE International Conference on Computer Design: VLSI in Computers and Processors, pages 522-525, 1992.
• [MPC+02] Madanlal Musuvathi, David Y. W. Park, Andy Chou, Dawson R. Engler, and David L. Dill. CMC: A Pragmatic Approach to Model Checking Real Code. In Proceedings of the Fifth Symposium on Operating Systems Design and Implementation, 2002.


References (cont’d)
• [G97] P. Godefroid. Model Checking for Programming Languages using VeriSoft. In Proceedings of the 24th ACM Symposium on Principles of Programming Languages, 1997.
• [BHP+-00] G. Brat, K. Havelund, S. Park, and W. Visser. Model checking programs. In IEEE International Conference on Automated Software Engineering (ASE), 2000.
• [HJ92] R. Hastings and B. Joyce. Purify: Fast Detection of Memory Leaks and Access Errors. In Proceedings of the Winter 1992 USENIX Conference, pages 125-138, San Francisco, California, 1991.
• [SN00] Julian Seward and Nick Nethercote. Valgrind, an open-source memory debugger for x86-GNU/Linux. http://valgrind.org/
• [LLM+04] Zhenmin Li, Shan Lu, Suvda Myagmar, and Yuanyuan Zhou. CP-Miner: A Tool for Finding Copy-paste and Related Bugs in Operating System Code. In Proceedings of the 6th Symposium on Operating Systems Design and Implementation, 2004.
• [LCS+04] Zhenmin Li, Zhifeng Chen, Sudarshan M. Srinivasan, and Yuanyuan Zhou. C-Miner: Mining Block Correlations in Storage Systems. In Proceedings of the 3rd USENIX Conference on File and Storage Technologies, 2004.

Q & A Thank You!
