2ea5548aaf2c5dc59a3a3f5336fda428.ppt

- Number of slides: 10

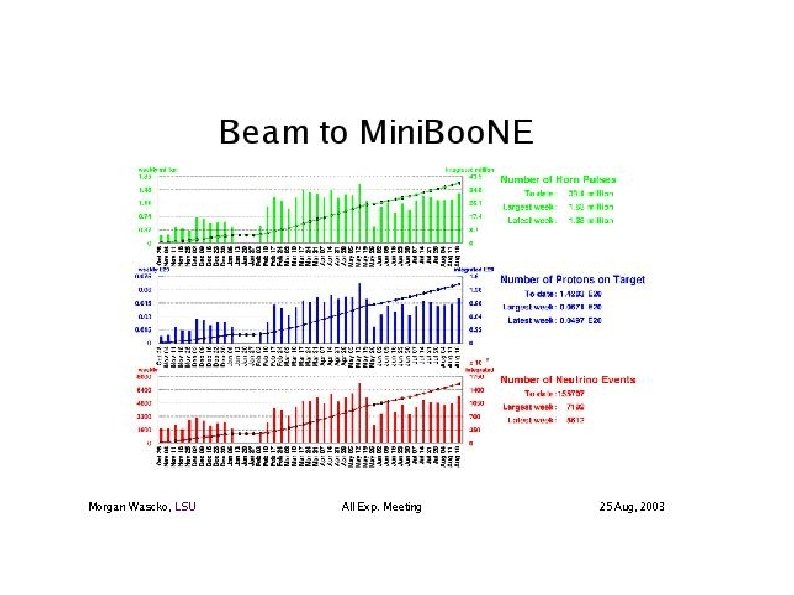

MiniBooNE Computing

- Description: support MiniBooNE online and offline computing by coordinating the use of, and occasionally managing, CD resources.
- Participants:
  - P. Spentzouris (15%), CD: CD liaison
  - Steve Brice (50%), PPD: offline
  - Chris Green (100%): user, sysadmin & general computing issues
- Timeline: end of run (2005???); end of run + 2 years (estimated) for offline-related issues.

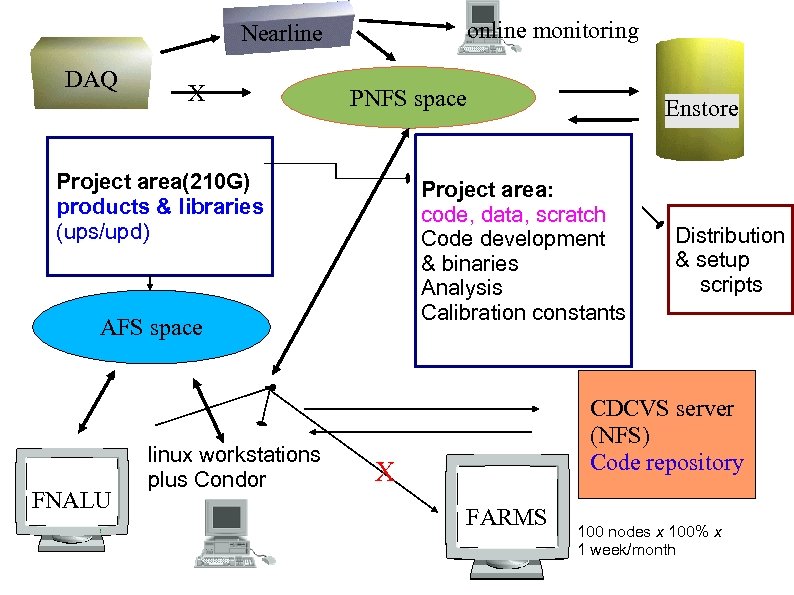

[Diagram: MiniBooNE computing components. DAQ, online monitoring and nearline; PNFS space; AFS space; project area (210 GB): code, data, scratch; products & libraries (ups/upd); code development & binaries; analysis; calibration constants; FNALU; Linux workstations plus Condor; Enstore; distribution & setup scripts; CVS server (NFS): code repository; FARMS: 100 nodes × 100% × 1 week/month.]

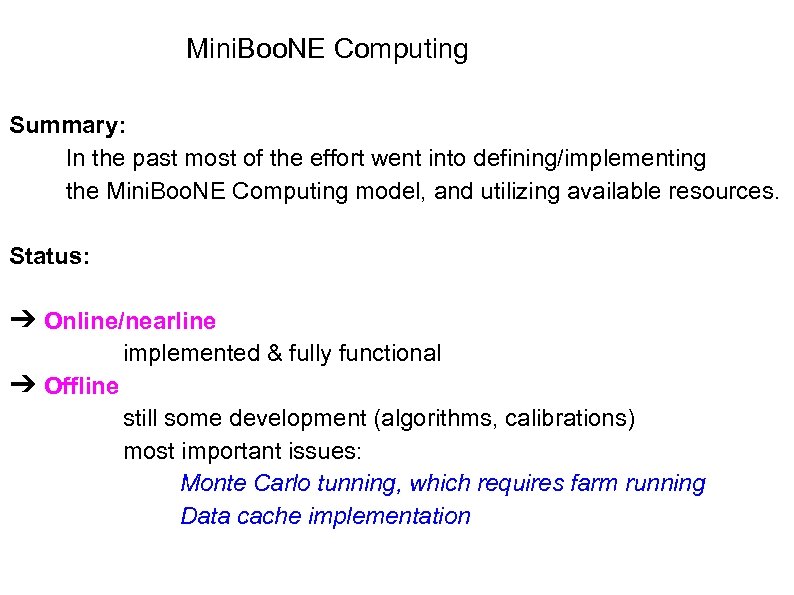

MiniBooNE Computing Summary

- In the past, most of the effort went into defining and implementing the MiniBooNE computing model and into utilizing the available resources.
- Status:
  - Online/nearline: implemented and fully functional
  - Offline: still some development (algorithms, calibrations)
- Most important issues:
  - Monte Carlo tuning, which requires farm running
  - Data cache implementation

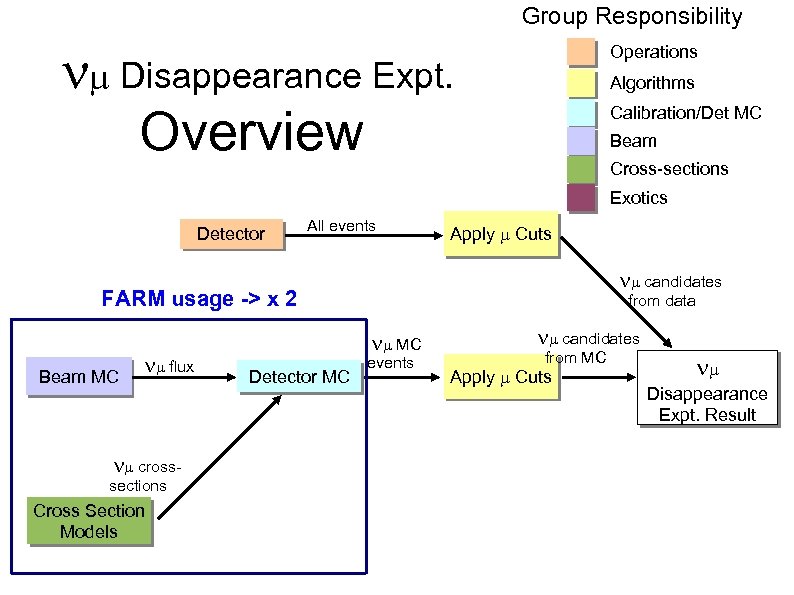

Group Responsibility: νμ Disappearance Experiment Overview

[Flowchart; responsible groups: Operations, Algorithms, Calibration/Det MC, Beam, Cross-sections, Exotics]

- Data: Detector → all events → apply μ cuts → νμ candidates
- MC: Beam MC → νμ flux; cross-section models → νμ cross sections; plus input from data → νμ MC → detector MC events → apply μ cuts → νμ candidates from MC
- Data and MC candidates → νμ disappearance experiment result
- FARM usage → ×2
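In this flow the same μ cuts are applied to the detector data and to the MC events, so the result comes from comparing two candidate samples. The Python sketch below illustrates only that structure; the `Event` record, its `muon_like` flag (a stand-in for the real μ cuts) and the observed/expected ratio are hypothetical simplifications, not the experiment's actual analysis code.

```python
from dataclasses import dataclass


@dataclass
class Event:
    # Hypothetical event record; real events carry full reconstruction output.
    muon_like: bool       # stand-in for "passes the mu cuts"
    weight: float = 1.0   # MC weight (flux x cross section); 1.0 for data


def apply_mu_cuts(events):
    """Hypothetical mu selection: keep events flagged as muon-like."""
    return [ev for ev in events if ev.muon_like]


def disappearance_ratio(data_events, mc_events):
    """Observed numu candidates divided by the MC-predicted candidates.

    The same selection function is applied to both samples.
    """
    observed = len(apply_mu_cuts(data_events))
    expected = sum(ev.weight for ev in apply_mu_cuts(mc_events))
    if expected == 0:
        raise ValueError("empty MC prediction")
    return observed / expected
```

Applying one selection function to both samples is the point of this design: selection effects modeled by the detector MC enter both sides of the comparison in the same way.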

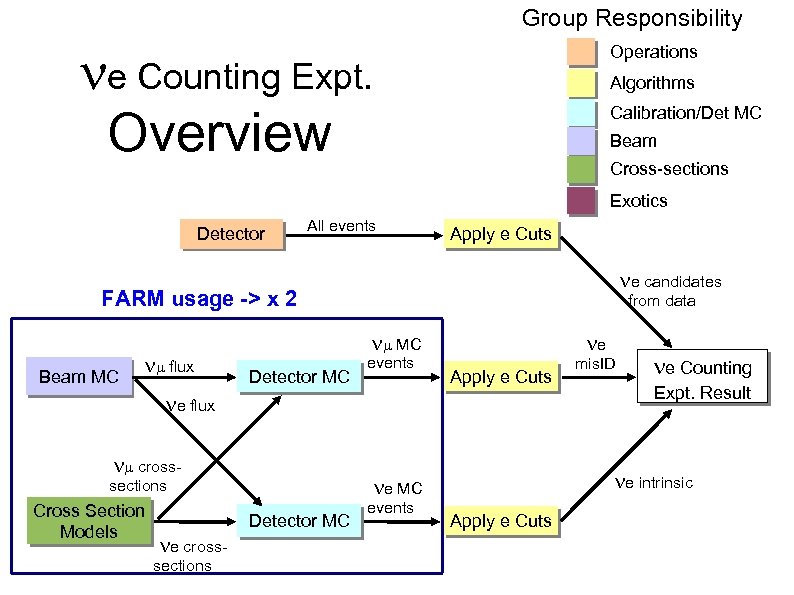

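Group Responsibility: νe Counting Experiment Overview

[Flowchart; responsible groups: Operations, Algorithms, Calibration/Det MC, Beam, Cross-sections, Exotics]

- Data: Detector → all events → apply e cuts → νe candidates
- νμ background: Beam MC → νμ flux; cross-section models → νμ cross sections; plus input from data → νμ MC → detector MC events → apply e cuts → νe mis-ID
- Intrinsic νe: Beam MC → νe flux; cross-section models → νe cross sections → intrinsic νe MC → detector MC events → apply e cuts → intrinsic νe
- Data candidates, mis-ID and intrinsic νe → νe counting experiment result
- FARM usage → ×2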
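The νe result combines three inputs: data candidates after the e cuts, νμ events mis-identified as νe, and intrinsic beam νe. A minimal Python sketch of that bookkeeping, assuming a hypothetical `Event` record with an `electron_like` flag standing in for the e cuts and MC weights that already fold in flux and cross sections; a real counting experiment would also propagate uncertainties, which are omitted here.

```python
from dataclasses import dataclass


@dataclass
class Event:
    # Hypothetical event record used only for illustration.
    electron_like: bool   # stand-in for "passes the e cuts"
    weight: float = 1.0   # MC weight (flux x cross section); 1.0 for data


def apply_e_cuts(events):
    """Hypothetical e selection: keep events flagged as electron-like."""
    return [ev for ev in events if ev.electron_like]


def predicted_nue(misid_mc, intrinsic_mc):
    """Expected nue candidates: numu mis-ID background plus intrinsic beam nue."""
    misid = sum(ev.weight for ev in apply_e_cuts(misid_mc))
    intrinsic = sum(ev.weight for ev in apply_e_cuts(intrinsic_mc))
    return misid + intrinsic


def nue_excess(data_events, misid_mc, intrinsic_mc):
    """Observed nue candidates minus the MC background prediction."""
    observed = len(apply_e_cuts(data_events))
    return observed - predicted_nue(misid_mc, intrinsic_mc)
```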

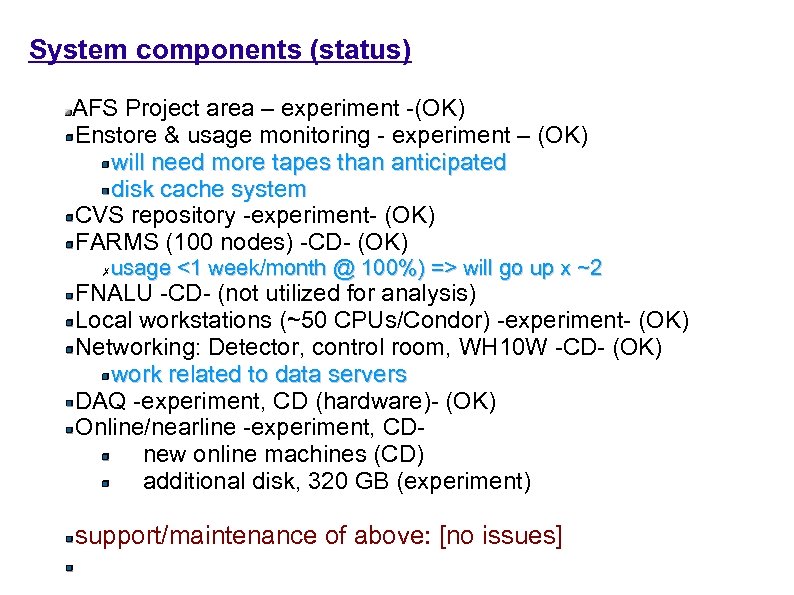

System components (status)

- AFS project area (experiment): OK
- Enstore & usage monitoring (experiment): OK
  - will need more tapes than anticipated
  - disk cache system
- CVS repository (experiment): OK
- FARMS, 100 nodes (CD): OK
  - usage < 1 week/month @ 100% ⇒ will go up ×~2
- ✗ FNALU (CD): not utilized for analysis
- Local workstations, ~50 CPUs/Condor (experiment): OK
- Networking: detector, control room, WH 10W (CD): OK
  - work related to data servers
- DAQ (experiment; CD for hardware): OK
- Online/nearline (experiment, CD):
  - new online machines (CD)
  - additional disk, 320 GB (experiment)
- Support/maintenance of the above: no issues
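The FARMS figures can be turned into node-hours. This is a back-of-the-envelope Python sketch, assuming "1 week/month @ 100%" means all 100 nodes busy around the clock for 7 days each month and that the expected ×~2 growth applies to that total; since current usage is quoted as "< 1 week/month", the results are upper-bound estimates.

```python
NODES = 100                # FARMS size from the slide
HOURS_PER_WEEK = 7 * 24    # 168 hours, assuming round-the-clock running


def farm_node_hours(weeks_per_month, nodes=NODES, utilization=1.0):
    """Node-hours per month for a given number of full-occupancy weeks."""
    return nodes * utilization * weeks_per_month * HOURS_PER_WEEK


current = farm_node_hours(1)            # upper bound: usage is "< 1 week/month"
projected = 2 * current                 # "will go up x ~2"
weeks_needed = projected / farm_node_hours(1)
```

Under these assumptions the projected load is about 2 full-farm weeks per month, roughly half of the farm's monthly capacity.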

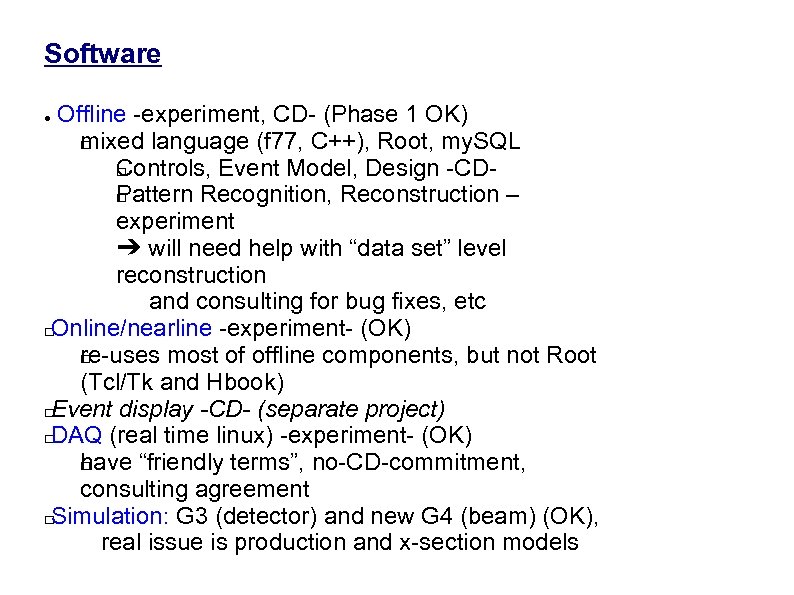

Software

- Offline (experiment, CD): Phase 1 OK
  - mixed language (f77, C++), ROOT, MySQL
  - controls, event model, design (CD)
  - pattern recognition, reconstruction (experiment) ➔ will need help with “data set”-level reconstruction and consulting for bug fixes, etc.
- Online/nearline (experiment): OK
  - reuses most of the offline components, but not ROOT (uses Tcl/Tk and HBOOK instead)
- Event display (CD): separate project
- DAQ, real-time Linux (experiment): OK
  - on “friendly terms”: no CD commitment, consulting agreement
- Simulation: GEANT3 (detector) and new GEANT4 (beam): OK; the real issues are production and cross-section models

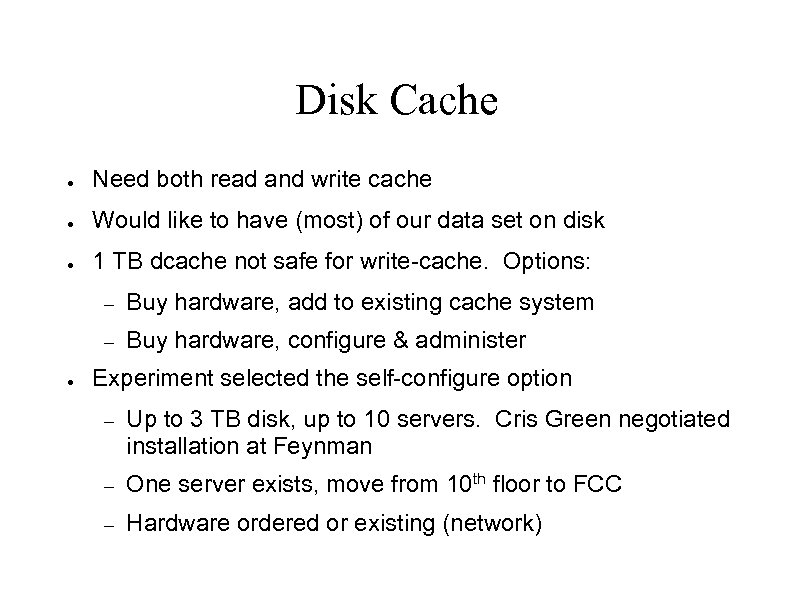

Disk Cache

- Need both a read and a write cache
- Would like to have most of our data set on disk
- The 1 TB dCache is not safe as a write cache. Options:
  - Buy hardware and add it to the existing cache system
  - Buy hardware, configure and administer it ourselves
- The experiment selected the self-configure option
  - Up to 3 TB of disk, up to 10 servers; Chris Green negotiated installation at Feynman
  - One server exists; move it from the 10th floor to FCC
  - Hardware ordered or existing (network)

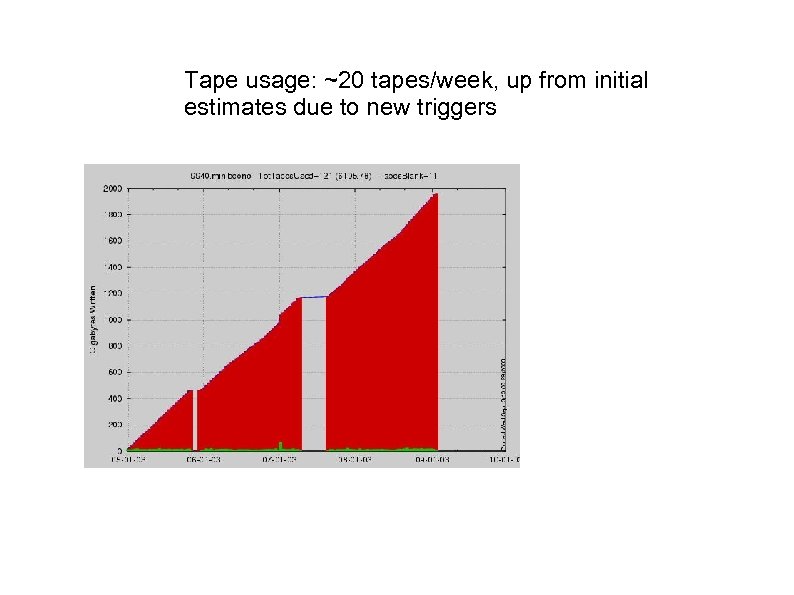

Tape usage: ~20 tapes/week, up from initial estimates due to new triggers
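The tape rate can be projected forward in the same spirit. A minimal Python sketch, assuming the ~20 tapes/week rate stays constant and running is continuous; the slides do not give a tape capacity, so `tape_capacity_gb` is a hypothetical input the caller must supply.

```python
TAPES_PER_WEEK = 20  # approximate rate quoted on the slide


def tapes_needed(weeks, tapes_per_week=TAPES_PER_WEEK):
    """Tapes consumed over `weeks` at a constant weekly rate."""
    return weeks * tapes_per_week


def data_volume_tb(weeks, tape_capacity_gb, tapes_per_week=TAPES_PER_WEEK):
    """Data written, in decimal TB (1 TB = 1000 GB).

    tape_capacity_gb is a hypothetical parameter; the slides do not state it.
    """
    return tapes_needed(weeks, tapes_per_week) * tape_capacity_gb / 1000


per_year = tapes_needed(52)  # 20 tapes/week x 52 weeks of continuous running
```

At the quoted rate, a full year of continuous running needs on the order of 1,000 tapes (20 × 52 = 1040).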
