fe6720eb6a474598b93910dec8c41beb.ppt

- Number of slides: 39

MIND READING WHEN YOU SAY NOTHING AT ALL…
Aditya Sharma (06305007), Mitesh M. Khapra (06305016), Sourabh Kasliwal (06329013), Rajiv Khanna (06329019)

INTRODUCTION
• Mind reading: the set of abilities that allow a person to infer others’ mental states from nonverbal cues and observed behavior. Humans do it all the time!!
• Words are not the only means of conveying a message: facial expressions, hand gestures and voice modulation also play a vital role.
• A few examples:
  • During a lecture, expressions like “yawning” or “staring at the ceiling” indicate boredom.
  • Lovers: “You say it best when you say nothing at all” (Ronan Keating).
• Mind reading is a very important part of a broader area of intelligence called social intelligence. It gives you the ability to:
  • behave well in a social environment,
  • empathize with others,
  • choose the right words during a conversation.
• Difficulty with this ability is a characteristic of autism: people with autism find it hard to operate in our complex social environment.

MOTIVATION
• Taking human-computer interaction (HCI) to the next level.
• Affective computing: computers are designed to adapt their behavior to that of the user. Applications include:
  • computer games,
  • personalizing emotional skin design,
  • psychological applications: fatigue detection, rehabilitation, training of children diagnosed with autism.
• It is no longer sufficient for a product to be simply usable or aesthetically pleasing; it also needs to evoke positive emotional responses. Pleasure, enjoyment and fun play an important role in HCI.
• Opening new horizons for HCI: who knows, in the future we might have robots capable of playing soft music on recognizing that the user is depressed. (Wow!! Now that would be interesting!! Everyone likes an employee who does not need to be told what is to be done.)

You say it best when you say nothing at all!! Try as I may, I can never explain this thing that we share!!

ROADMAP
• Introduction/Motivation
• Emotions and Facial Expressions
• Framework for Facial Expression Recognition
  • Feature Extraction and Display Recognition
  • Identifying emotions from the features and displays
• Applications
  • Training children diagnosed with autism
  • Fatigue detection
• Summary

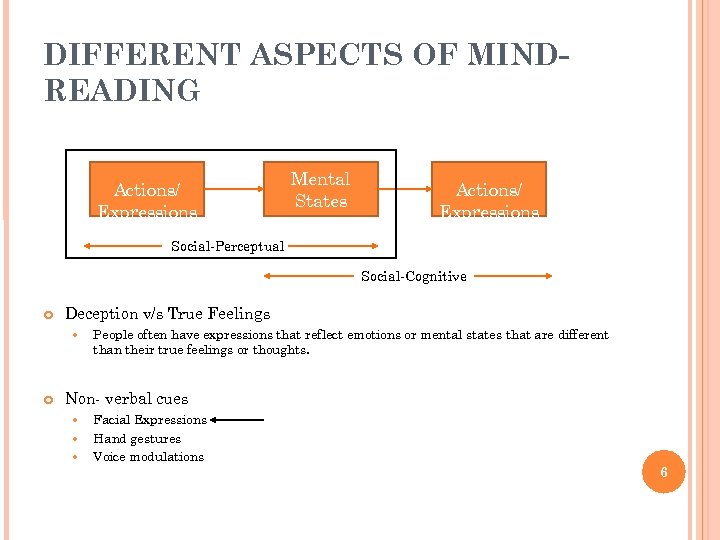

DIFFERENT ASPECTS OF MINDREADING
• Social-perceptual: from observed actions/expressions to mental states.
• Social-cognitive: from mental states to (predicted) actions/expressions.
• Deception vs. true feelings: people often have expressions that reflect emotions or mental states different from their true feelings or thoughts.
• Non-verbal cues: facial expressions, hand gestures, voice modulation.

READING THE MIND IN THE FACE

A TAXONOMY OF EMOTIONS

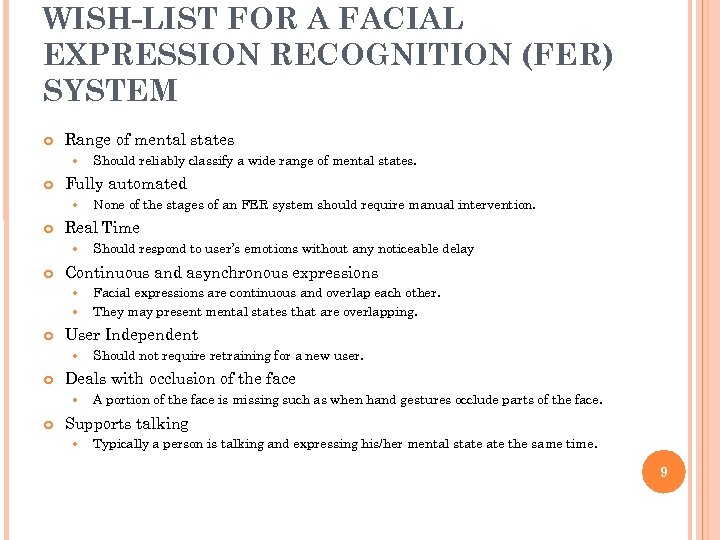

WISH-LIST FOR A FACIAL EXPRESSION RECOGNITION (FER) SYSTEM
• Range of mental states: should reliably classify a wide range of mental states.
• Fully automated: none of the stages of an FER system should require manual intervention.
• User independent: should not require retraining for a new user.
• Deals with occlusion of the face: a portion of the face may be missing, such as when hand gestures occlude parts of the face.
• Continuous and asynchronous expressions: facial expressions are continuous and overlap each other, and they may represent overlapping mental states.
• Real time: should respond to the user’s emotions without any noticeable delay.
• Supports talking: typically a person is talking and expressing his/her mental state at the same time.

FRAMEWORK OF AN FER SYSTEM

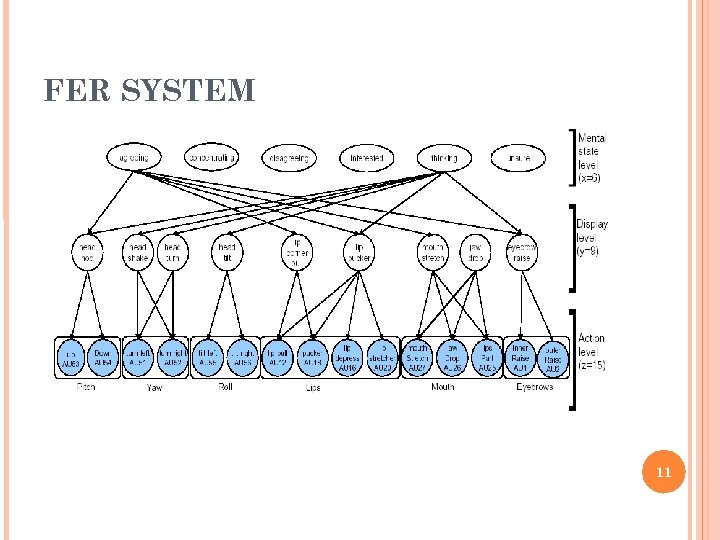

FER SYSTEM

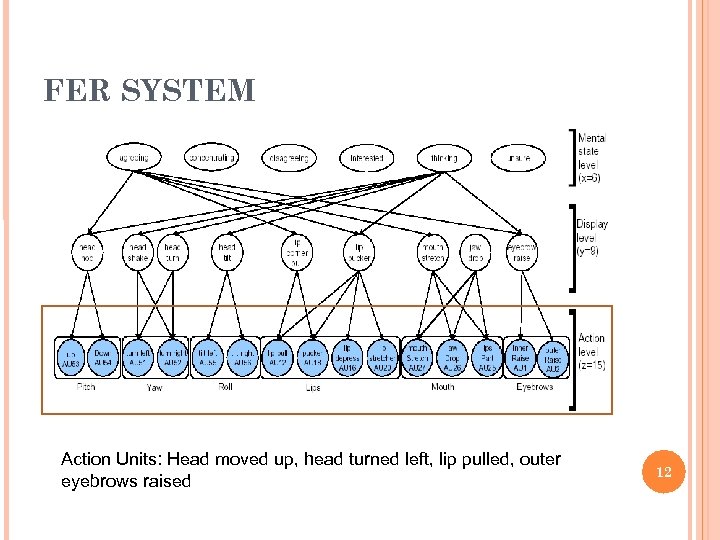

FER SYSTEM
Action units: head moved up, head turned left, lip pulled, outer eyebrows raised

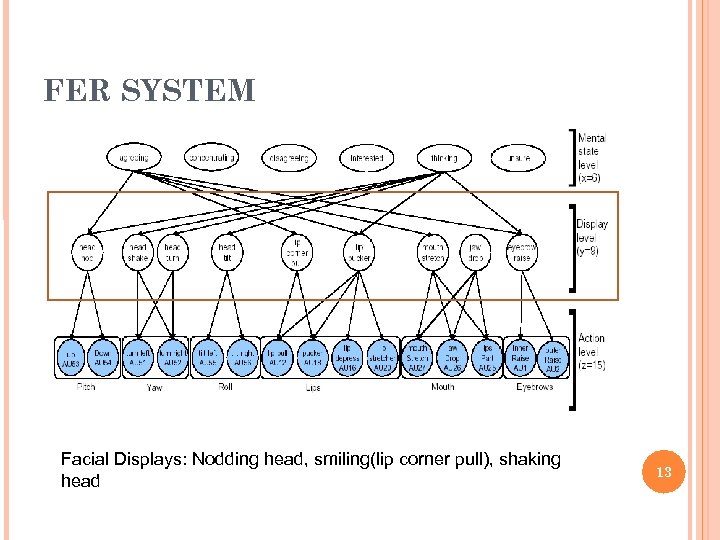

FER SYSTEM
Facial displays: nodding head, smiling (lip corner pull), shaking head

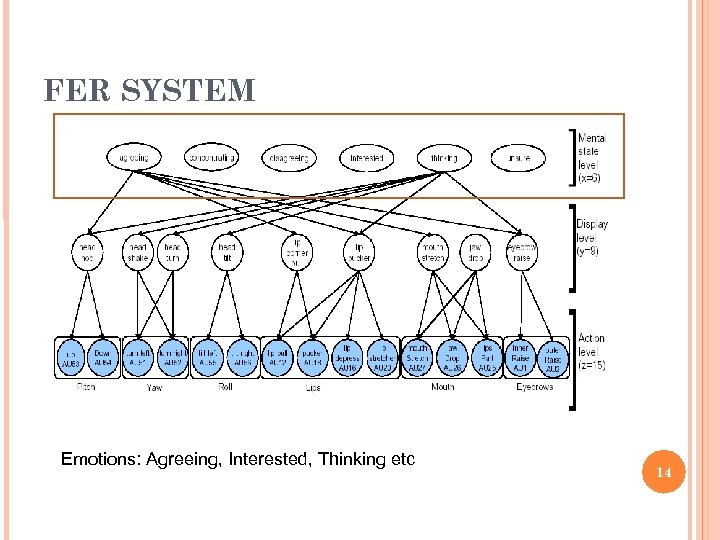

FER SYSTEM
Emotions: agreeing, interested, thinking, etc.

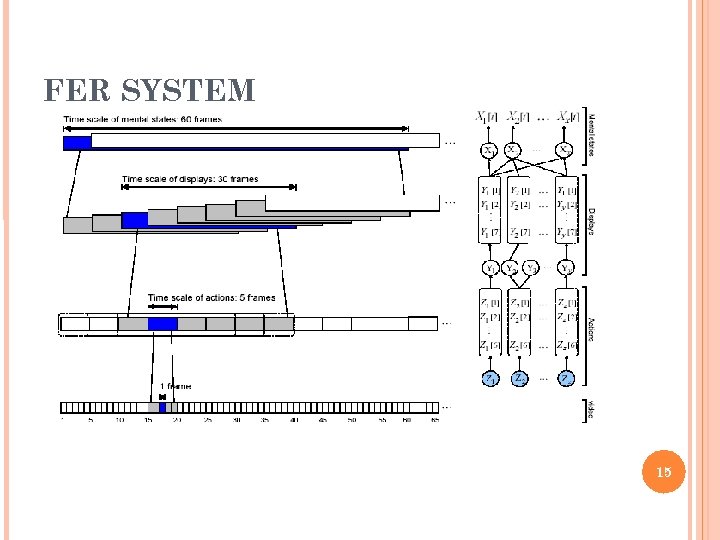

FER SYSTEM

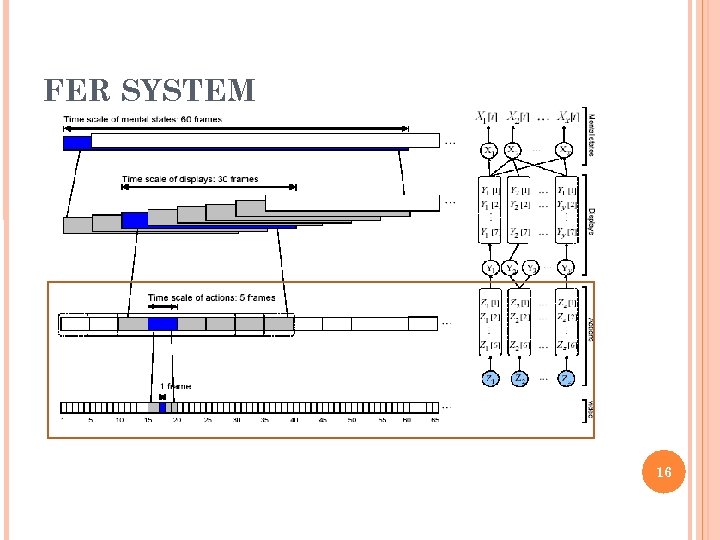

FER SYSTEM

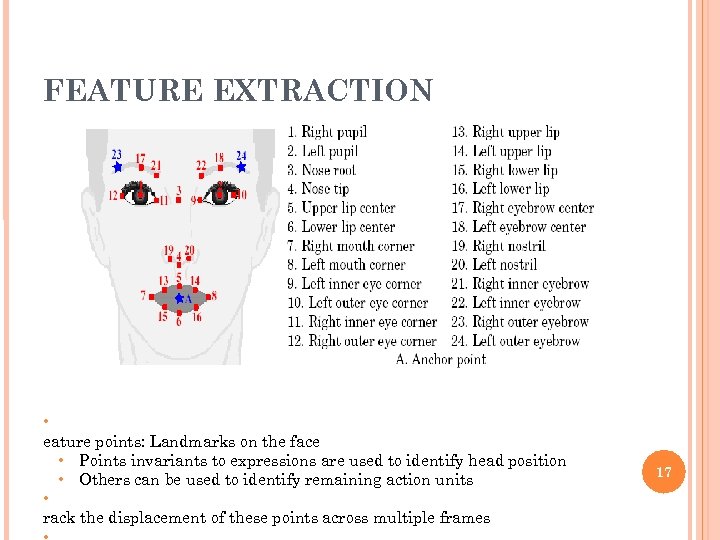

FEATURE EXTRACTION
• Feature points: landmarks on the face
• Points invariant to expressions are used to identify the head position
• The others can be used to identify the remaining action units
• Track the displacement of these points across multiple frames
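A minimal sketch of the displacement-tracking idea above, in Python. It assumes landmarks have already been located in two consecutive frames (the landmark detector itself is not shown), uses the mean vertical motion of the expression-invariant points as a crude stand-in for head pitch, and uses the change in lip-corner distance as a lip-pull cue. The landmark indices, thresholds and action-unit names (`head_up`, `lip_corner_pull`, ...) are hypothetical; a real system would estimate head rotation rather than translation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels; y grows downwards


def mean_displacement(prev: Sequence[Point], curr: Sequence[Point],
                      idx: Sequence[int]) -> Point:
    """Average (dx, dy) of the selected landmarks between two frames."""
    n = len(idx)
    dx = sum(curr[i][0] - prev[i][0] for i in idx) / n
    dy = sum(curr[i][1] - prev[i][1] for i in idx) / n
    return dx, dy


def detect_action_units(prev: Sequence[Point], curr: Sequence[Point],
                        rigid_idx: Sequence[int], lip_corners: Tuple[int, int],
                        move_thresh: float = 2.0,
                        stretch_thresh: float = 0.05) -> List[str]:
    """Hypothetical rules mapping landmark motion to action-unit labels."""
    aus: List[str] = []
    # Expression-invariant points (e.g. nose tip, inner eye corners) move
    # with the head, so their mean motion approximates head motion.
    _, dy = mean_displacement(prev, curr, rigid_idx)
    if dy < -move_thresh:        # negative dy: points moved up in the image
        aus.append("head_up")
    elif dy > move_thresh:
        aus.append("head_down")
    # Mouth width is unaffected by pure translation, so a relative increase
    # beyond stretch_thresh is taken as a lip-corner pull.
    left, right = lip_corners
    width_prev = math.dist(prev[left], prev[right])
    width_curr = math.dist(curr[left], curr[right])
    if width_curr > width_prev * (1 + stretch_thresh):
        aus.append("lip_corner_pull")
    return aus


# Landmarks: 0 nose tip, 1-2 inner eye corners, 3-4 lip corners.
prev = [(50, 50), (40, 40), (60, 40), (40, 70), (60, 70)]
curr = [(50, 45), (40, 35), (60, 35), (37, 65), (63, 65)]
print(detect_action_units(prev, curr, rigid_idx=[0, 1, 2], lip_corners=(3, 4)))
# prints ['head_up', 'lip_corner_pull']
```

Separating rigid points from expressive points is what lets the same landmark track feed both head-pose action units and facial action units.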

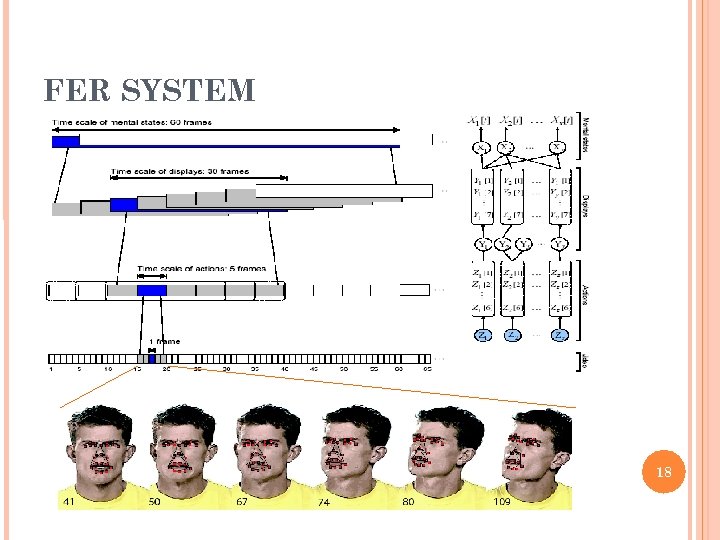

FER SYSTEM

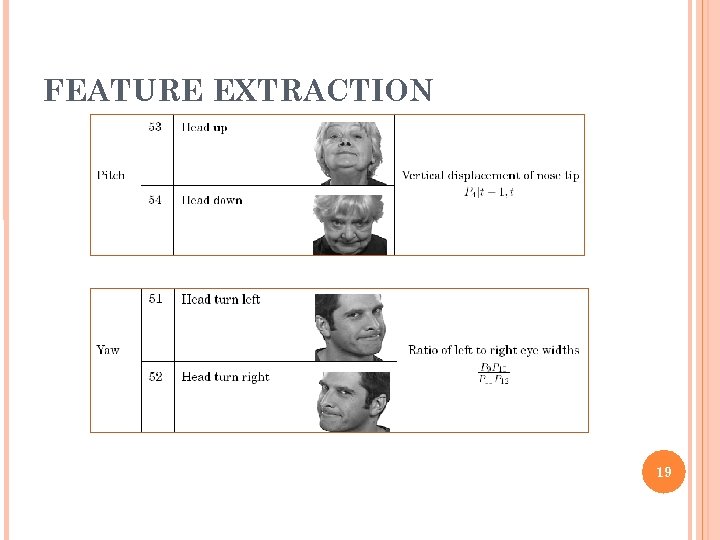

FEATURE EXTRACTION

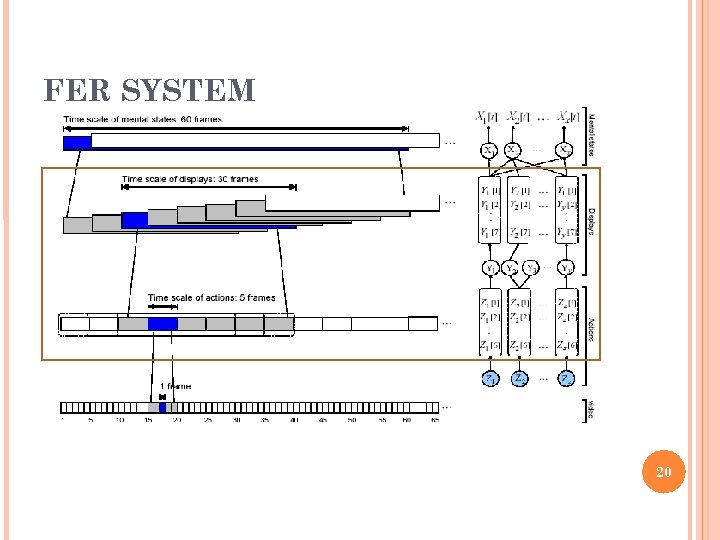

FER SYSTEM

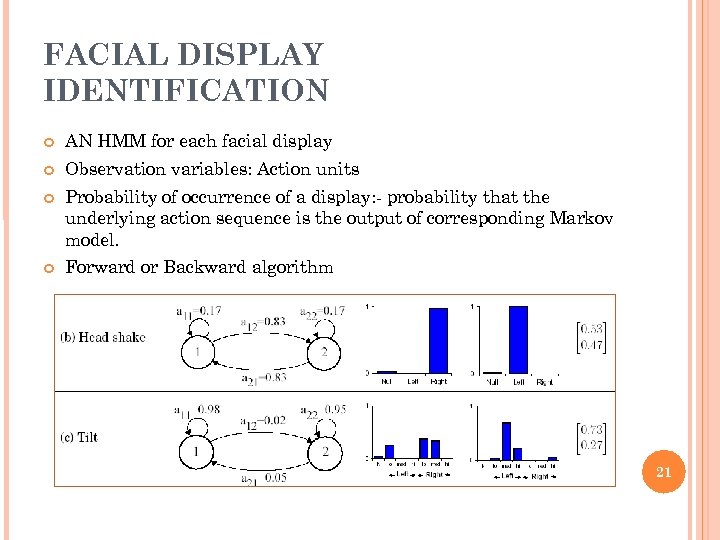

FACIAL DISPLAY IDENTIFICATION
• An HMM for each facial display
• Observation variables: action units
• Probability of occurrence of a display: the probability that the observed action-unit sequence is the output of the corresponding Markov model, computed with the forward or backward algorithm
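The forward algorithm mentioned above can be sketched in a few lines of Python. The two-state "head nod" model below (hidden states "moving up" and "moving down", observation symbols 0 = `head_up`, 1 = `head_down`) and all of its probabilities are invented for illustration; they are not the parameters of the system described in these slides. The backward algorithm yields the same likelihood, and for long sequences a real implementation would rescale `alpha` or work in log space to avoid underflow.

```python
from typing import List, Sequence


def forward_likelihood(obs: Sequence[int], pi: List[float],
                       A: List[List[float]], B: List[List[float]]) -> float:
    """Return P(obs | HMM) using the forward algorithm.

    pi[i]   : probability of starting in hidden state i
    A[i][j] : probability of moving from state i to state j
    B[i][o] : probability of emitting observation symbol o in state i
    """
    n = len(pi)
    # alpha[i] = P(observations so far, current hidden state = i)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o]
                 for j in range(n)]
    return sum(alpha)


# Hypothetical head-nod HMM: states 0 = moving up, 1 = moving down.
pi = [0.5, 0.5]
A = [[0.2, 0.8],   # a nod tends to alternate between up and down
     [0.8, 0.2]]
B = [[0.9, 0.1],   # state "up" mostly emits head_up (symbol 0)
     [0.1, 0.9]]   # state "down" mostly emits head_down (symbol 1)

nod = forward_likelihood([0, 1, 0, 1], pi, A, B)
still_up = forward_likelihood([0, 0, 0, 0], pi, A, B)
print(round(nod, 6), round(still_up, 6))  # prints 0.181156 0.019876
```

The alternating up/down sequence is about nine times more likely under this model than a head that keeps moving up, which is exactly the signal used to decide whether a nod occurred.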

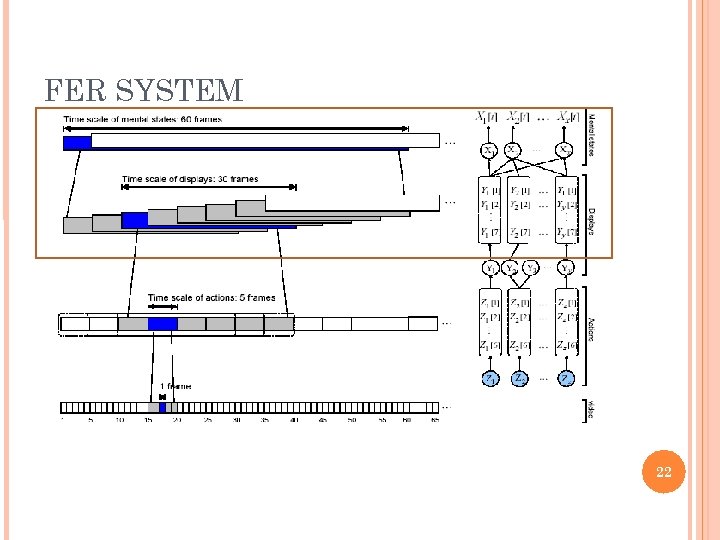

FER SYSTEM

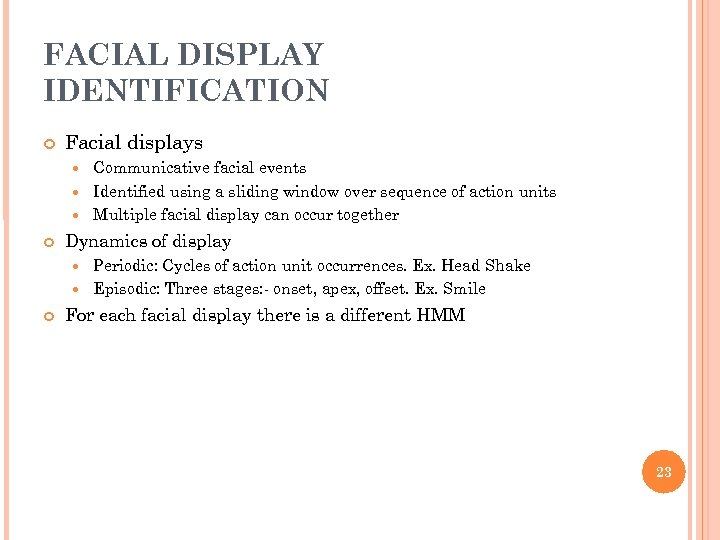

FACIAL DISPLAY IDENTIFICATION
• Facial displays: communicative facial events
• Identified using a sliding window over the sequence of action units
• Multiple facial displays can occur together
• Dynamics of displays:
  • Periodic: cycles of action-unit occurrences, e.g. head shake
  • Episodic: three stages (onset, apex, offset), e.g. smile
• A different HMM for each facial display
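A sketch of the sliding-window scheme above, assuming one observation symbol per frame (0 = `head_up`, 1 = `head_down`, 2 = `lip_corner_pull`, 3 = no action unit) and a fixed likelihood threshold. The periodic head-nod model cycles between two states, while the episodic smile model moves left to right through onset, apex and offset. Every model, probability and the threshold are hypothetical. Because each display's HMM is tested independently, more than one display can be reported for the same window.

```python
from typing import Dict, List, Sequence, Tuple

# An HMM as (initial probs pi, transition matrix A, emission matrix B).
HMM = Tuple[List[float], List[List[float]], List[List[float]]]


def likelihood(obs: Sequence[int], hmm: HMM) -> float:
    """P(obs | hmm) via the forward algorithm."""
    pi, A, B = hmm
    n = len(pi)
    alpha = [pi[i] * B[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        alpha = [sum(alpha[i] * A[i][j] for i in range(n)) * B[j][o]
                 for j in range(n)]
    return sum(alpha)


def detect_displays(aus: Sequence[int], models: Dict[str, HMM],
                    window: int, threshold: float) -> List[List[str]]:
    """For each window position, list every display whose HMM explains the
    window with likelihood above threshold (displays may co-occur)."""
    found = []
    for start in range(len(aus) - window + 1):
        chunk = aus[start:start + window]
        found.append([name for name, hmm in models.items()
                      if likelihood(chunk, hmm) > threshold])
    return found


models: Dict[str, HMM] = {
    # Periodic: cycles between "up" and "down".
    "head_nod": ([0.5, 0.5],
                 [[0.1, 0.9], [0.9, 0.1]],
                 [[0.85, 0.05, 0.05, 0.05], [0.05, 0.85, 0.05, 0.05]]),
    # Episodic: onset -> apex -> offset, never going back.
    "smile": ([1.0, 0.0, 0.0],
              [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]],
              [[0.1, 0.1, 0.6, 0.2], [0.05, 0.05, 0.85, 0.05],
               [0.1, 0.1, 0.2, 0.6]]),
}

aus = [0, 1, 0, 1, 2, 2, 2, 3]  # a nod followed by a smile
result = detect_displays(aus, models, window=4, threshold=0.01)
print(result[0], result[-1])  # prints ['head_nod'] ['smile']
```

Comparing raw likelihoods against one threshold only makes sense because every window has the same length; with variable-length windows the scores would need normalizing.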

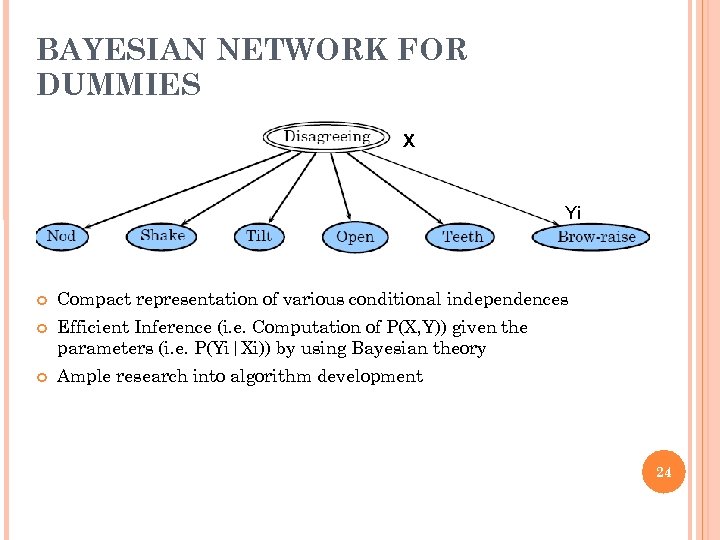

BAYESIAN NETWORK FOR DUMMIES
• Structure: a node X with child nodes Yi
• Compact representation of various conditional independences
• Efficient inference (i.e. computation of P(X, Y), and from it P(X | Y)) given the parameters (i.e. P(Yi | X)), using Bayes’ theorem
• Ample research into algorithm development
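For the simple structure on this slide (a mental state X whose children are the displays Yi), the conditional independences mean the posterior factorizes as P(X | Y1..Yn) ∝ P(X) ∏ P(Yi | X). A minimal Python sketch with a binary X and binary displays; the display names and probabilities are made up for illustration.

```python
from typing import Dict, Tuple


def posterior(prior: float, cpts: Dict[str, Tuple[float, float]],
              observed: Dict[str, bool]) -> float:
    """P(X = 1 | observed displays) for the network X -> Y1..Yn.

    cpts[name] = (P(Y=1 | X=0), P(Y=1 | X=1)); displays that are not in
    `observed` are simply left out, which marginalizes them away.
    """
    joint_0 = 1.0 - prior    # accumulates P(X=0, Y)
    joint_1 = prior          # accumulates P(X=1, Y)
    for name, seen in observed.items():
        p0, p1 = cpts[name]
        joint_0 *= p0 if seen else 1.0 - p0
        joint_1 *= p1 if seen else 1.0 - p1
    # Bayes' theorem: normalize the two joint probabilities.
    return joint_1 / (joint_1 + joint_0)


cpts = {"head_nod": (0.1, 0.8), "smile": (0.3, 0.6)}  # hypothetical numbers
print(posterior(0.5, cpts, {"head_nod": True, "smile": True}))
# prints roughly 0.941 (that is, 16/17)
```

The cost is linear in the number of displays, which is the "efficient inference" the slide refers to for this particular structure.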

MENTAL STATE INFERENCE BY DBN
• Network: emotion (mental state) nodes linked to display nodes
• Given: Yi[t], the probability that display i was observed at instant t
• Parameters: prior knowledge, temporal dependence
• Output: probabilities Xi[t]
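A minimal sketch of this inference for a single binary mental state, in Python. It assumes a 2×2 transition matrix for the temporal dependence, an observation model P(Yi = 1 | X) per display, and soft display evidence Yi[t] treated as the mixture yi·P(Yi = 1 | X) + (1 - yi)·P(Yi = 0 | X) (one possible choice, not necessarily the one used in the original system). It also assumes a sliding window of w slices, so each estimate Xi[t] is recomputed from the prior using only the last w slices. All names and numbers are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def evidence_weight(x: int, y_t: Dict[str, float],
                    obs_model: Dict[str, Tuple[float, float]]) -> float:
    """Weight of X = x given soft evidence y_t[name] in [0, 1].

    obs_model[name][x] = P(Y_name = 1 | X = x).
    """
    w = 1.0
    for name, y in y_t.items():
        p = obs_model[name][x]
        w *= y * p + (1.0 - y) * (1.0 - p)
    return w


def infer(ys: Sequence[Dict[str, float]], prior: float,
          trans: List[List[float]],
          obs_model: Dict[str, Tuple[float, float]],
          window: int) -> List[float]:
    """P(X[t] = 1 | evidence in the last `window` slices) for every t."""
    out = []
    for t in range(len(ys)):
        belief = [1.0 - prior, prior]
        first = max(0, t - window + 1)
        for k in range(first, t + 1):
            if k > first:  # inter-slice step: apply the transition matrix
                belief = [sum(belief[i] * trans[i][j] for i in range(2))
                          for j in range(2)]
            # intra-slice step: weight by the display evidence, renormalize
            belief = [belief[x] * evidence_weight(x, ys[k], obs_model)
                      for x in range(2)]
            z = sum(belief)
            belief = [b / z for b in belief]
        out.append(belief[1])
    return out


obs_model = {"head_nod": (0.1, 0.8)}   # (P(nod | X=0), P(nod | X=1))
trans = [[0.9, 0.1], [0.1, 0.9]]      # mental states tend to persist
ys = [{"head_nod": 1.0}] * 3          # a nod is seen in three slices
print(infer(ys, 0.5, trans, obs_model, window=1)[-1])
print(infer(ys, 0.5, trans, obs_model, window=3)[-1])
```

With repeated evidence, the wider window accumulates support and gives a higher probability than w = 1. A display probability of 0.5 contributes the same weight to both states and therefore leaves the belief unchanged.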

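DEFINING PARAMETERS
• Observation matrix (intra-slice): links displays to mental states within a time slice
• Transition matrix (inter-slice): links each mental state to its value in the previous slice
• Prior: P(Xi[0]), application specific
• Learning: Maximum Likelihood Estimation (MLE)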
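With fully labelled training data, maximum likelihood estimation of the observation and transition parameters reduces to counting. A minimal Python sketch for one binary mental state and one display, assuming per-frame labels are available; the toy sequences are invented.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def mle_observation(states: Sequence[int],
                    display: Sequence[int]) -> Dict[int, float]:
    """Intra-slice: P(Y=1 | X=x) = count(X=x, Y=1) / count(X=x)."""
    totals = Counter(states)
    hits = Counter(x for x, y in zip(states, display) if y)
    return {x: hits[x] / totals[x] for x in totals}


def mle_transition(states: Sequence[int]) -> Dict[Tuple[int, int], float]:
    """Inter-slice: P(X[t+1]=j | X[t]=i) from consecutive label pairs.
    Pairs never seen in training are absent, i.e. estimated as 0."""
    pairs = Counter(zip(states, states[1:]))
    starts = Counter(states[:-1])
    return {(i, j): c / starts[i] for (i, j), c in pairs.items()}


states = [1, 1, 1, 0, 0, 1]    # hypothetical labels: 1 = "agreeing"
display = [1, 1, 0, 0, 1, 1]   # 1 = head nod detected in that frame
print(mle_observation(states, display))  # prints {1: 0.75, 0: 0.5}
print(mle_transition(states))
```

Raw counts assign zero probability to unseen combinations; in practice a small pseudo-count is often added before normalizing.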

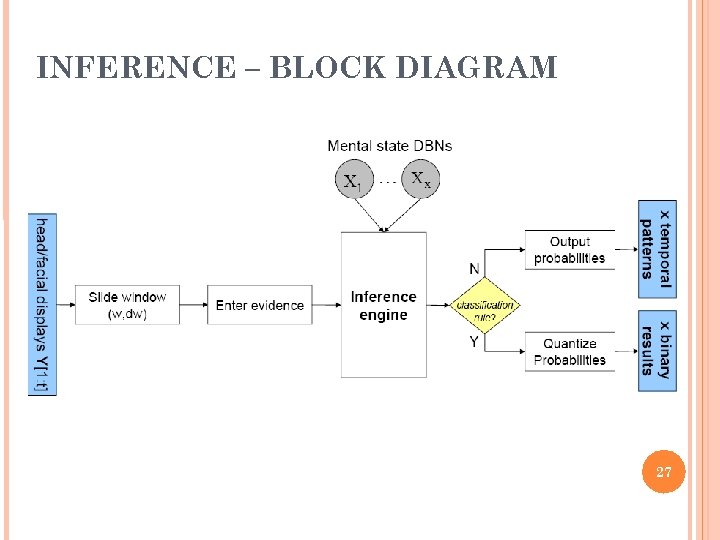

INFERENCE – BLOCK DIAGRAM

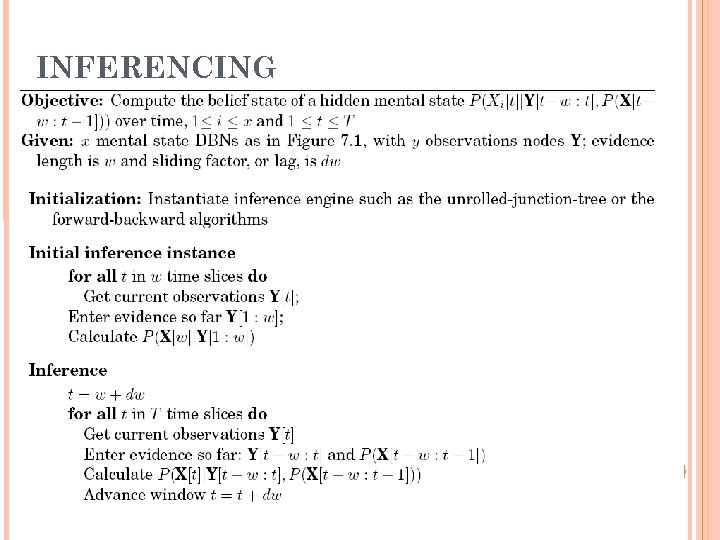

INFERENCING

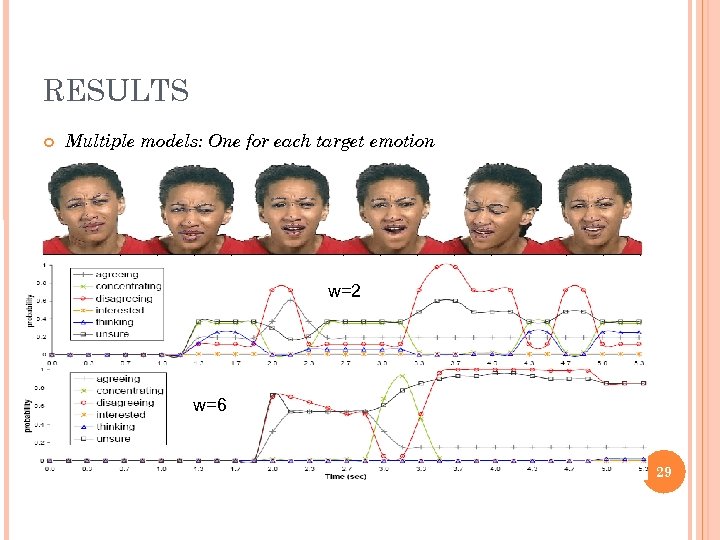

RESULTS
Multiple models: one for each target emotion. Results shown for sliding windows w = 2 and w = 6.

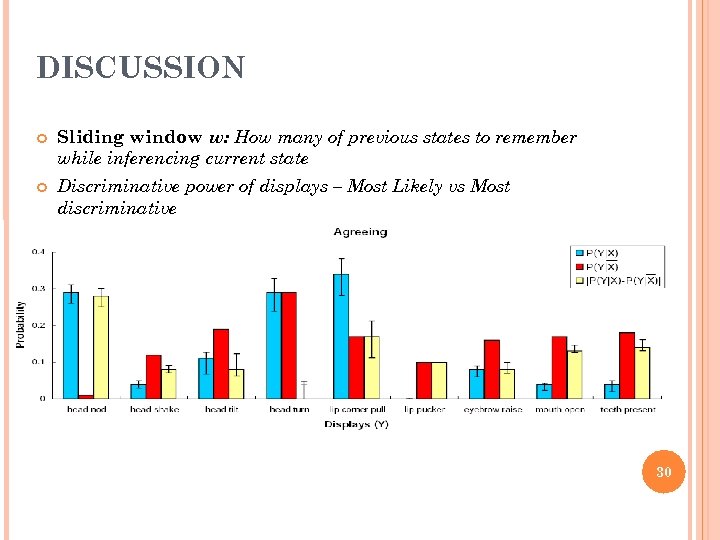

DISCUSSION
• Sliding window w: how many previous states to remember while inferring the current state
• Discriminative power of displays: most likely vs. most discriminative
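The difference between the most likely and the most discriminative display can be made concrete with a simple heuristic: score display Yj for mental state Xi by P(Yj | Xi) - P(Yj | not Xi). This scoring rule is an assumption for illustration, and so are the probabilities below. A display that is common for every mental state (here, a smile) is likely but says little about which state is present.

```python
from typing import Dict, Tuple


def rank_displays(p_state: Dict[str, float],
                  p_not_state: Dict[str, float]) -> Tuple[str, str]:
    """Return (most likely display, most discriminative display).

    p_state[d]     = P(display d | mental state)
    p_not_state[d] = P(display d | any other mental state)
    """
    most_likely = max(p_state, key=p_state.get)
    # Discriminative power: how much more often d appears with this state.
    power = {d: p_state[d] - p_not_state[d] for d in p_state}
    most_discriminative = max(power, key=power.get)
    return most_likely, most_discriminative


# Hypothetical numbers for the mental state "agreeing".
p_agree = {"smile": 0.7, "head_nod": 0.5, "head_shake": 0.05}
p_other = {"smile": 0.6, "head_nod": 0.05, "head_shake": 0.2}
print(rank_displays(p_agree, p_other))  # prints ('smile', 'head_nod')
```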

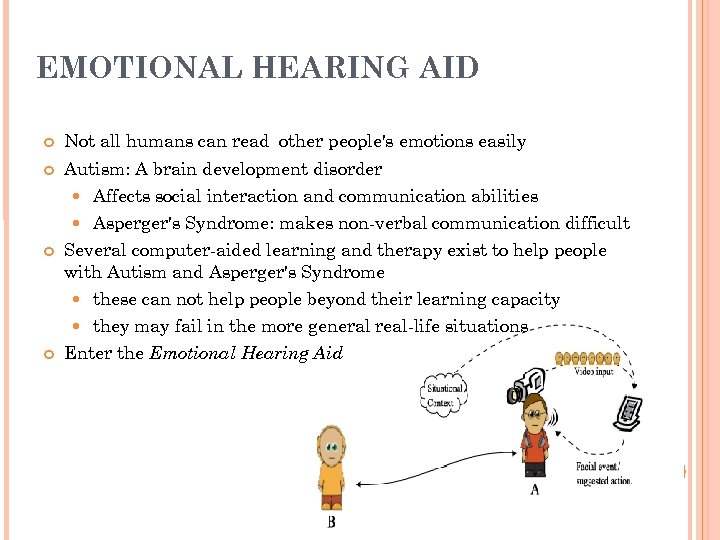

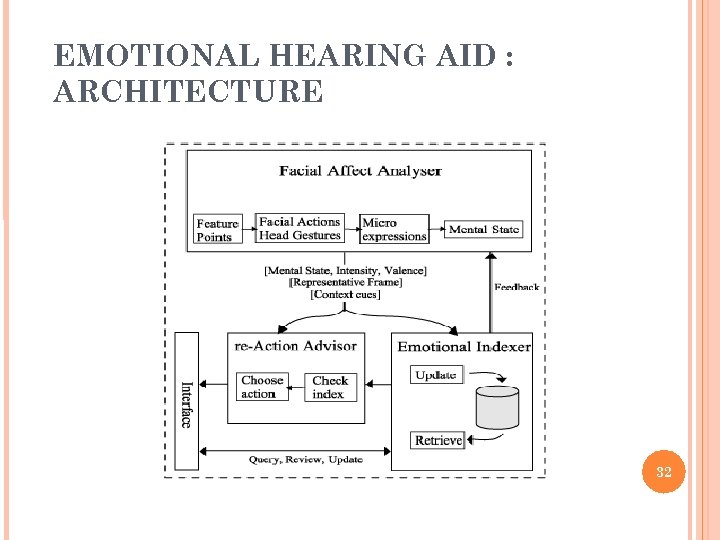

EMOTIONAL HEARING AID
• Not all humans can read other people's emotions easily.
  • Autism: a developmental disorder of the brain that affects social interaction and communication abilities.
  • Asperger's syndrome: makes non-verbal communication difficult.
• Several computer-aided learning and therapy tools exist to help people with autism and Asperger's syndrome, but:
  • they cannot help people beyond their learning capacity;
  • they may fail in more general real-life situations.
• Enter the Emotional Hearing Aid.

EMOTIONAL HEARING AID: ARCHITECTURE

MONITORING DRIVER VIGILANCE
• An increasing number of road accidents are caused by a lack of alertness in drivers.
• Fatigued drivers show several visual cues through the eyes, face and head orientation.
• Enter FER systems: alert the driver to unsafe driving conditions using a camera and an FER computer in the car.
• Main target areas:
  • Eyelid movement detection
  • Gaze determination
  • Face orientation monitoring
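Eyelid movement is commonly summarized by PERCLOS, the proportion of time within a window during which the eyelids are at least 80% closed. A minimal Python sketch, assuming an upstream eye tracker already reports a per-frame eye-openness value between 0 (closed) and 1 (fully open); the alarm level of 0.15 is a hypothetical setting, not a value from these slides.

```python
from typing import Sequence


def perclos(openness: Sequence[float], closed_at: float = 0.2) -> float:
    """Fraction of frames in which the eye is at least 80% closed,
    i.e. openness <= closed_at (1.0 = fully open, 0.0 = fully closed)."""
    closed = sum(1 for o in openness if o <= closed_at)
    return closed / len(openness)


def driver_is_drowsy(openness: Sequence[float], window: int,
                     alarm_level: float = 0.15) -> bool:
    """Raise an alert when PERCLOS over the last `window` frames is too high."""
    recent = list(openness)[-window:]
    return perclos(recent) >= alarm_level


alert = [1.0] * 10
tired = [1.0] * 8 + [0.1] * 2   # eyes nearly shut in 2 of the last 10 frames
print(driver_is_drowsy(alert, 10), driver_is_drowsy(tired, 10))  # prints False True
```

Gaze and face-orientation cues could be folded in the same way, as extra per-frame measurements summarized over the same window.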

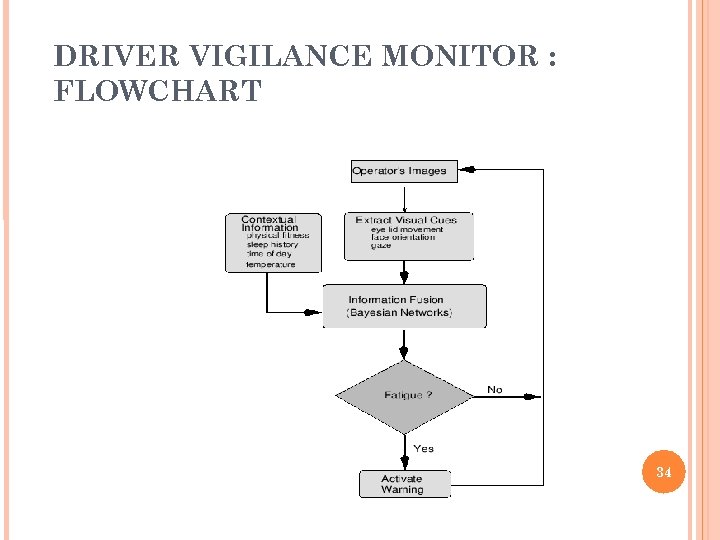

DRIVER VIGILANCE MONITOR: FLOWCHART

SUMMARY
• Non-verbal cues, especially facial expressions, play a very important role in social interaction.
• Identifying these features and drawing inferences from them requires the synergistic union of diverse fields such as psychology, image processing and statistical machine learning.
• Enabling emotion recognition in computers can take HCI to the next level.
• Emotion recognition also helps in tackling various psychological problems.

REFERENCES
• Rana Ayman el Kaliouby, “Mind-reading machines: automated inference of complex mental states”, Ph.D. dissertation, University of Cambridge, July 2005.
• Rana el Kaliouby and Peter Robinson, “Real-time inference of complex mental states from facial expressions and head gestures”, in: Real-Time Vision for HCI, Springer, 2005, pp. 181-200. ISBN 0-387-27697-1.
• Rana el Kaliouby and Peter Robinson, “The emotional hearing aid: an assistive tool for autism”, in: HCI International 2003, Crete, Greece, 22-27 June 2003.
• Rana el Kaliouby and Peter Robinson, “Mind reading machines: automated inference of cognitive mental states from video”, in: IEEE International Conference on Systems, Man and Cybernetics, October 2004.
• Rana el Kaliouby and Peter Robinson, “The emotional hearing aid: an assistive tool for children with Asperger syndrome”, in: Designing a More Inclusive World, London: Springer-Verlag, 2004.
• Qiang Ji and Xiaojie Yang, “Real-time eye, gaze, and face pose tracking for monitoring driver vigilance”, Real-Time Imaging, vol. 8, no. 5, October 2002.

EXTRA

PROBLEM AT HAND
• Uncertain about uncertainty: the noise factor
  • Uniqueness of faces and of features such as beards
  • Personal capacity for expressiveness
  • Inherent uncertainty in current image-processing techniques
  • Background “noise” during image capture
  • Facial orientation
• Temporal inference
• Co-existence of emotions: multiple results
• Feature extraction
• Efficiency!!!
And the winner is... (symphony music plays...)

DYNAMIC BAYESIAN NETWORKS
• Combine domain knowledge with observational evidence
• Excellent coupling with image-processing techniques
• Efficient enough to train an ensemble of models
• Non-restrictive assumption of conditional independence
• Inherent handling of temporal inference (real-time component)
• Feature selection
• Easy addition of new features
• Absent features handled by maximum entropy
