c20da8b13d8f426916d1ce05b7475b61.ppt

- Number of slides: 49

Middleware Status
Markus Schulz, Oliver Keeble, Flavia Donno
Michael Grønager (ARC)
Alain Roy (OSG)
LCG-LHCC Mini Review, 1st July 2008
Modified for the GDB

Overview
• Discussion on the status of gLite
  – Status summary
  – CCRC and issues raised
  – Future prospects
• OSG (Alain Roy)
• ARC (Oxana Smirnova)
• Storage and SRM (Flavia Donno)
October 7, 2005

gLite: Current Status
• The current middleware stack is gLite 3.1
  – Available on SL4 32-bit
  – Clients and selected services are also available on 64-bit
  – Represents ~15 services
  – Only awaiting FTS to be available on SL4 (in certification)
• Updates are released every week
  – Updates are sets of patches to components
  – Components can evolve independently
  – The release process includes a full certification phase
• The stack includes:
  – DPM, dCache and FTS for storage management
  – LFC and AMGA for catalogues
  – WMS/LB and lcg-CE for workload management
  – Clients for WN and UI
  – BDII for the Information System
  – Various other services (e.g. VOMS)

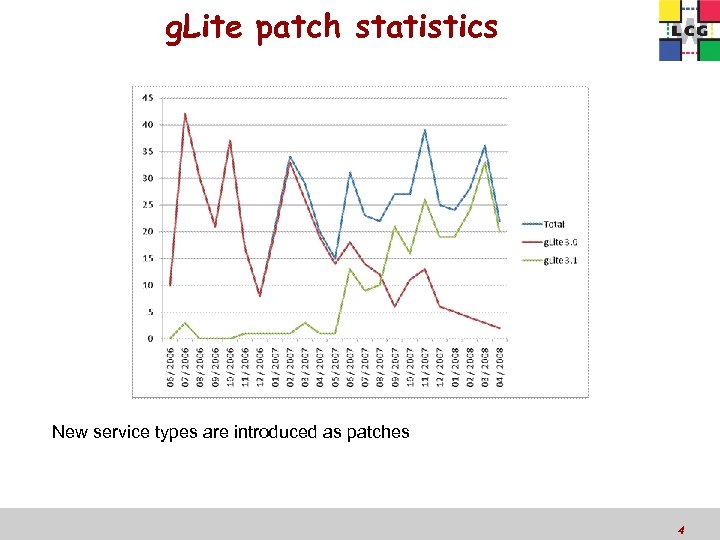

gLite patch statistics
• New service types are introduced as patches

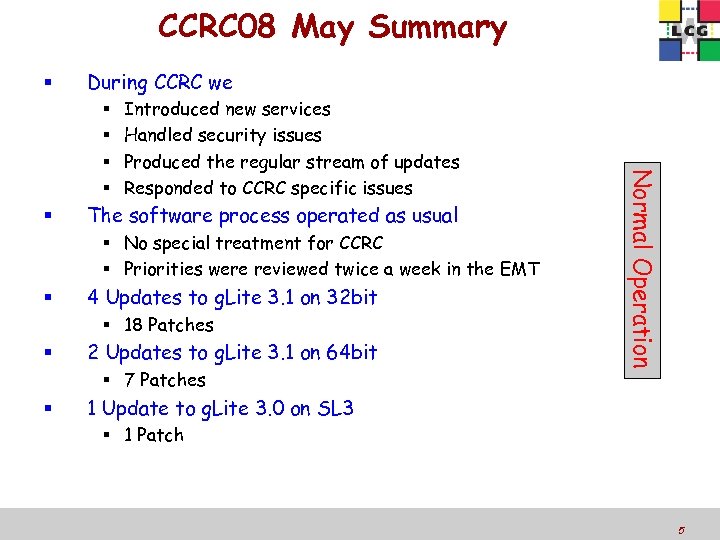

CCRC'08 May Summary
• During CCRC we:
  – Introduced new services
  – Handled security issues
  – Produced the regular stream of updates
  – Responded to CCRC-specific issues
• The software process operated as usual
  – No special treatment for CCRC
  – Priorities were reviewed twice a week in the EMT
• Updates released (normal operation):
  – 4 updates to gLite 3.1 on 32-bit: 18 patches
  – 2 updates to gLite 3.1 on 64-bit: 7 patches
  – 1 update to gLite 3.0 on SL3: 1 patch

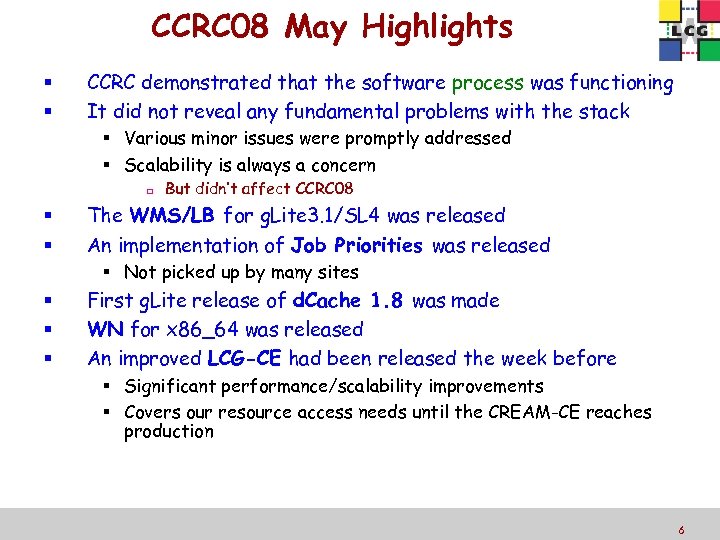

CCRC'08 May Highlights
• CCRC demonstrated that the software process was functioning
  – It did not reveal any fundamental problems with the stack
  – Various minor issues were promptly addressed
  – Scalability is always a concern, but it did not affect CCRC'08
• The WMS/LB for gLite 3.1/SL4 was released
• An implementation of Job Priorities was released
  – Not picked up by many sites
• The first gLite release of dCache 1.8 was made
• The WN for x86_64 was released
• An improved LCG-CE had been released the week before
  – Significant performance/scalability improvements
  – Covers our resource access needs until the CREAM-CE reaches production

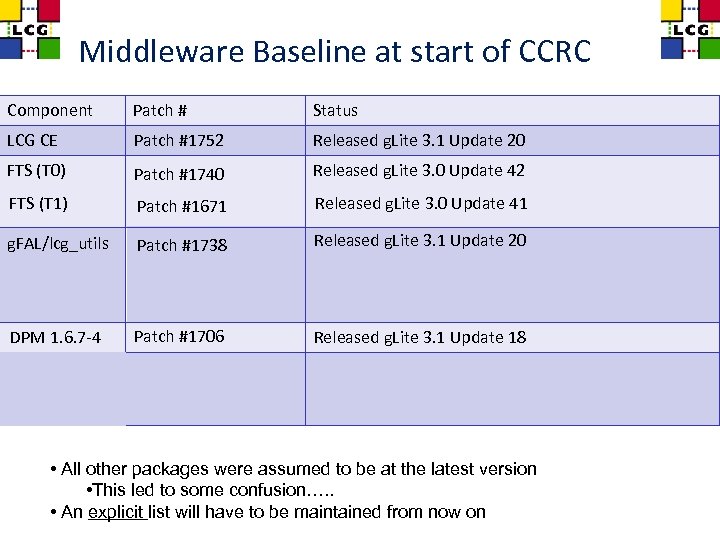

Middleware Baseline at start of CCRC

| Component | Patch # | Status |
|---|---|---|
| LCG CE | Patch #1752 | Released gLite 3.1 Update 20 |
| FTS (T0) | Patch #1740 | Released gLite 3.0 Update 42 |
| FTS (T1) | Patch #1671 | Released gLite 3.0 Update 41 |
| GFAL/lcg_utils | Patch #1738 | Released gLite 3.1 Update 20 |
| DPM 1.6.7-4 | Patch #1706 | Released gLite 3.1 Update 18 |

• All other packages were assumed to be at the latest version
• This led to some confusion...
• An explicit list will have to be maintained from now on
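The "explicit list" called for above could be kept as data rather than prose. The following is a minimal, hypothetical sketch: the function name, the per-component sets of applied patch numbers and the example site are assumptions for illustration; only the patch numbers come from the baseline table.

```python
# Hypothetical sketch: the CCRC'08 baseline as an explicit, machine-readable list.
# Patch numbers are copied from the baseline table; everything else is illustrative.
BASELINE: dict[str, int] = {
    "LCG CE": 1752,
    "FTS (T0)": 1740,
    "FTS (T1)": 1671,
    "GFAL/lcg_utils": 1738,
    "DPM 1.6.7-4": 1706,
}


def missing_patches(applied: dict[str, set[int]]) -> dict[str, int]:
    """Return {component: required patch} for every baseline patch not yet applied.

    `applied` maps a component name to the set of patch numbers a site has installed.
    Components the site does not report at all are treated as missing.
    """
    return {
        component: patch
        for component, patch in BASELINE.items()
        if patch not in applied.get(component, set())
    }


if __name__ == "__main__":
    site = {"LCG CE": {1752}, "FTS (T1)": {1671}}  # invented example site
    for component, patch in sorted(missing_patches(site).items()):
        print(f"{component}: patch #{patch} missing")
```

Checking membership of the exact patch number, rather than comparing numbers, avoids assuming that a higher patch number supersedes a lower one.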

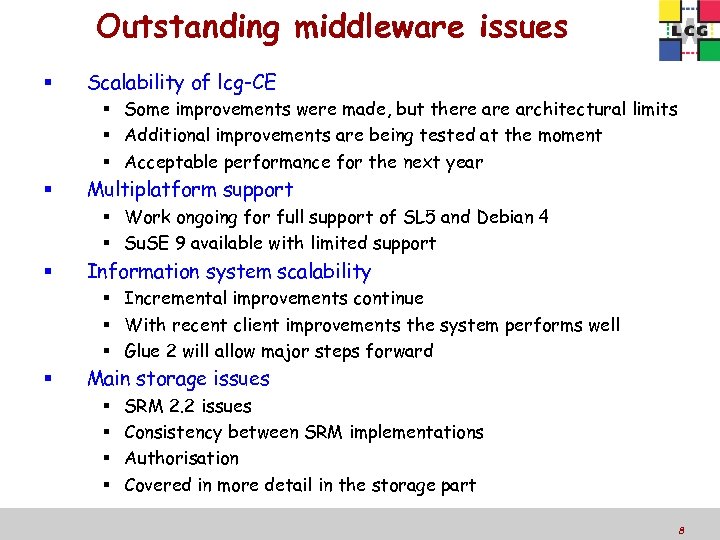

Outstanding middleware issues
• Scalability of lcg-CE
  – Some improvements were made, but there are architectural limits
  – Additional improvements are being tested at the moment
  – Acceptable performance for the next year
• Multiplatform support
  – Work ongoing for full support of SL5 and Debian 4
  – SuSE 9 available with limited support
• Information system scalability
  – Incremental improvements continue
  – With recent client improvements the system performs well
  – Glue 2 will allow major steps forward
• Main storage issues
  – SRM 2.2 issues
  – Consistency between SRM implementations
  – Authorisation
  – Covered in more detail in the storage part

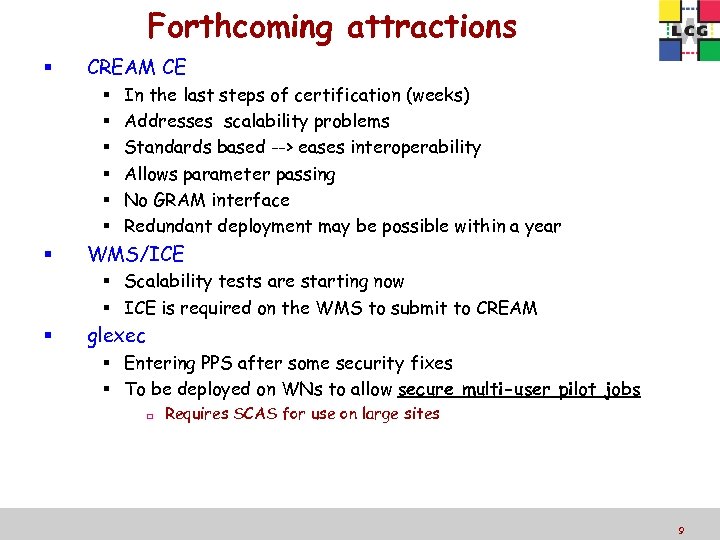

Forthcoming attractions
• CREAM CE
  – In the last steps of certification (weeks)
  – Addresses scalability problems
  – Standards based, which eases interoperability
  – Allows parameter passing
  – No GRAM interface
  – Redundant deployment may be possible within a year
• WMS/ICE
  – Scalability tests are starting now
  – ICE is required on the WMS to submit to CREAM
• glexec
  – Entering PPS after some security fixes
  – To be deployed on WNs to allow secure multi-user pilot jobs
  – Requires SCAS for use on large sites

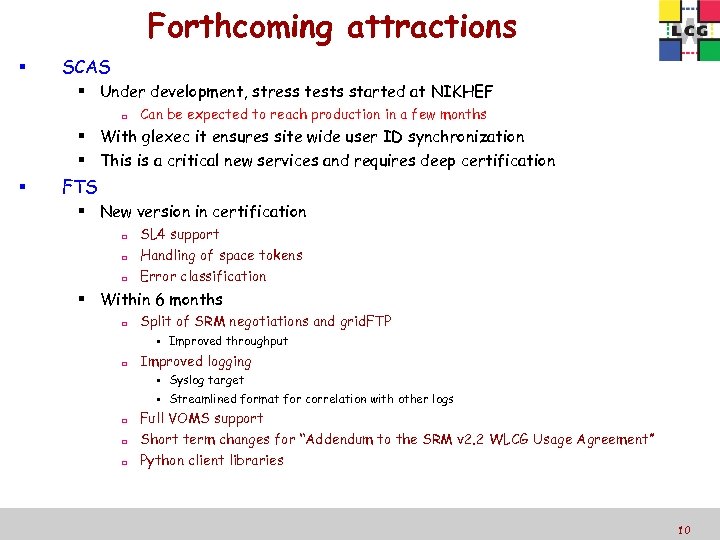

Forthcoming attractions
• SCAS
  – Under development, stress tests started at NIKHEF
  – Can be expected to reach production in a few months
  – With glexec it ensures site-wide user ID synchronization
  – This is a critical new service and requires deep certification
• FTS
  – New version in certification
    – SL4 support
    – Handling of space tokens
    – Error classification
  – Within 6 months
    – Split of SRM negotiations and gridFTP
    – Improved throughput
    – Improved logging: syslog target, streamlined format for correlation with other logs
    – Full VOMS support
    – Short-term changes for the “Addendum to the SRM v2.2 WLCG Usage Agreement”
    – Python client libraries
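Why glexec needs a central service on large sites can be shown with a toy model. This is not SCAS or glexec code: one hypothetical mapping object hands out pool accounts per (DN, FQAN) pair, so every worker node asking about the same identity gets the same local account. The class and function names, the pool-account naming scheme and the first-come lease policy are all assumptions.

```python
class CentralMapper:
    """Toy stand-in for a site-central mapping service (hypothetical, not the SCAS API).

    All worker nodes query the same instance, so a given (DN, FQAN) pair is leased
    exactly one pool account and sees the same local user ID on every node.
    """

    def __init__(self, pool_prefix: str, pool_size: int) -> None:
        # Pool accounts such as "atlas001", "atlas002", ... (naming scheme is assumed)
        self._free = [f"{pool_prefix}{i:03d}" for i in range(1, pool_size + 1)]
        self._leases: dict[tuple[str, str], str] = {}

    def map_user(self, dn: str, fqan: str) -> str:
        key = (dn, fqan)
        if key not in self._leases:
            if not self._free:
                raise RuntimeError("no free pool accounts left")
            self._leases[key] = self._free.pop(0)  # lease the next free account
        return self._leases[key]


def glexec_switch_user(mapper: CentralMapper, node: str, dn: str, fqan: str) -> str:
    """Hypothetical glexec step on one worker node: ask the central mapper which
    local account the payload of (dn, fqan) should run under on `node`."""
    return f"{node}: run payload as {mapper.map_user(dn, fqan)}"
```

If each node instead kept its own mapper, the first user seen on each node would get "atlas001" there, and the same account name could belong to different people on different nodes; sharing one mapper is what makes the mapping site-wide.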

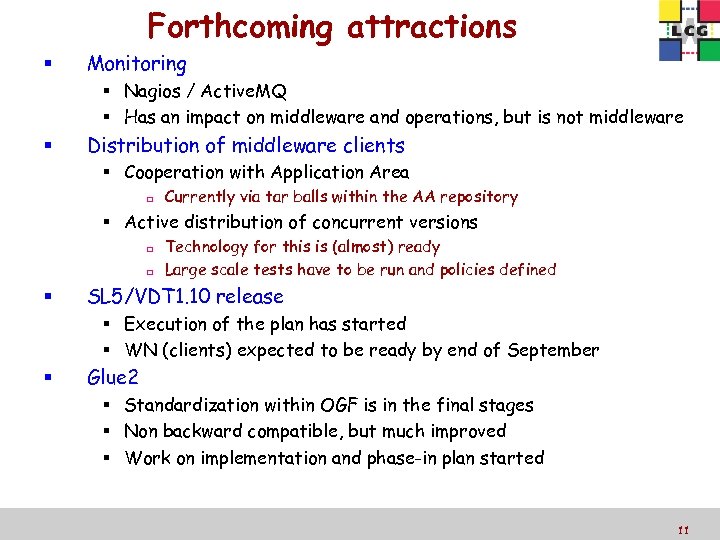

Forthcoming attractions
• Monitoring: Nagios / ActiveMQ
  – Has an impact on middleware and operations, but is not middleware
• Distribution of middleware clients
  – Cooperation with the Application Area
  – Currently via tarballs within the AA repository
• Active distribution of concurrent versions
  – Technology for this is (almost) ready
  – Large-scale tests have to be run and policies defined
• SL5/VDT 1.10 release
  – Execution of the plan has started
  – WN (clients) expected to be ready by end of September
• Glue 2
  – Standardization within OGF is in the final stages
  – Not backward compatible, but much improved
  – Work on implementation and phase-in plan started

OSG
Slides provided by Alain Roy
• OSG Software
• OSG Facility
• CCRC and last 6 months
• Interoperability and Interoperation
• Issues and next steps

OSG for the LHCC Mini Review
Created by Alain Roy
Edited and reviewed by others (including Markus Schulz)

OSG Software Concept
• OSG Software goal: provide the software needed by VOs within the OSG, and by other users of the VDT, including EGEE and LCG.
  – The work is mainly software integration and packaging: we do not do software development
  – Work closely with external software providers
• OSG Software is a component of the OSG Facility, and works closely with other facility groups
March 11, 2008 USCMS Tier-2 Workshop

OSG Facility Concept
• Engagement: reach out to new users
• Integration: quality assurance of OSG releases
• Operations: day-to-day running of the OSG Facility
• Security: operational security
• Software: integration and packaging
• Troubleshooting: debug the hard end-to-end problems

During CCRC & Last Six Months
Status
• Responded to ATLAS/CMS/LCG/EGEE update requests and fixes
  – MyProxy fixed for LCG (threading problem), in certification now
  – Globus proxy chain length problem fixed for LCG, in certification now
  – Fixed security problem (rpath) reported by LCG
• OSG 1.0 released: a major new release
  – Added lcg-utils for OSG software installations
  – Now use the system version of OpenSSL instead of Globus's OpenSSL (more secure)
  – Introduced and supported SRM v2-based storage software based on dCache, BeStMan and xrootd

OSG / LCG interoperation
Status
• Big improvements in reporting
  – New RSV software collects information about the functioning of sites and reports it to GridView. Much improved software, better information reported.
• Generic Information Provider
  – The OSG-specific version was greatly improved to provide more accurate information about a site to the BDII.

Ongoing Issues & Work
ISSUES
• US OSG sites need more LCG client tools for data management (LFC tools, etc.).
• Working to improve interoperability via testing between OSG's ITB and EGEE's PPS.
• gLite plans to move to a new version of the VDT:
  – We'll help with the transition.
• We have regular communication via Alain Roy's attendance at the EMT meetings.

ARC
• Slides provided by Michael Grønager

ARC middleware status for the WLCG GDB
Michael Grønager, Project Director, NDGF
July Grid Deployment Board
CERN, Geneva, July 9th 2008

Talk Outline
• Nordic Acronym Soup Cheat Sheet
• ARC Introduction
• Other Infrastructure components
• ARC and WLCG
• ARC Status
• ARC Challenges
• ARC Future
GDB, CERN, July 2008

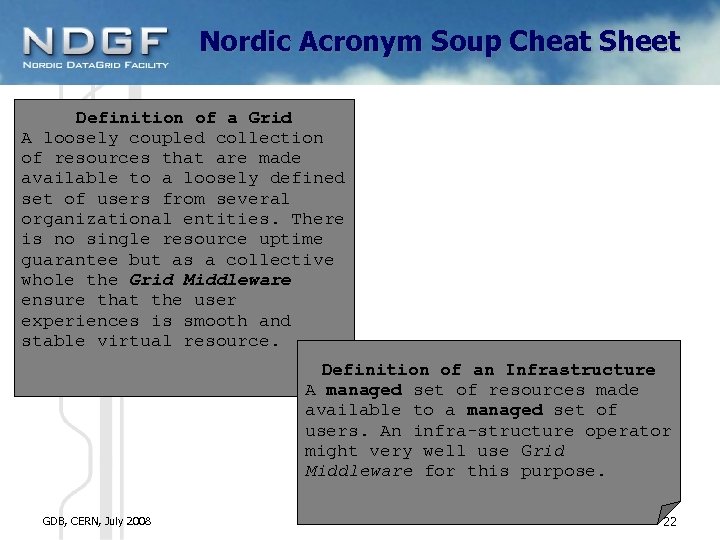


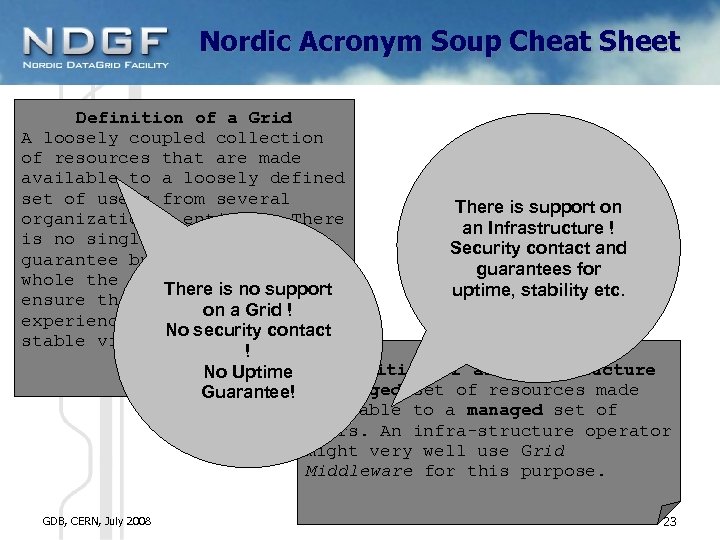


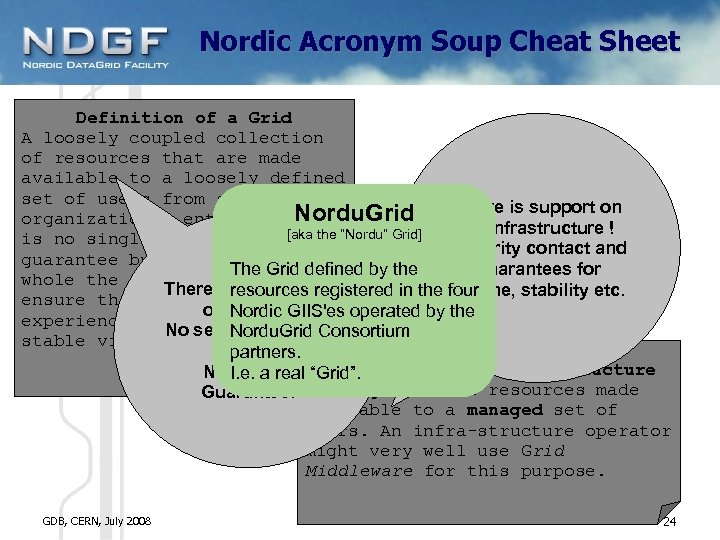

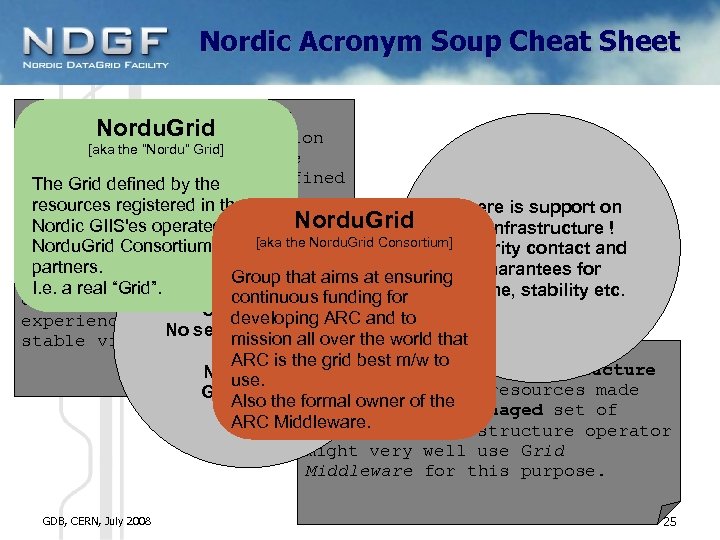



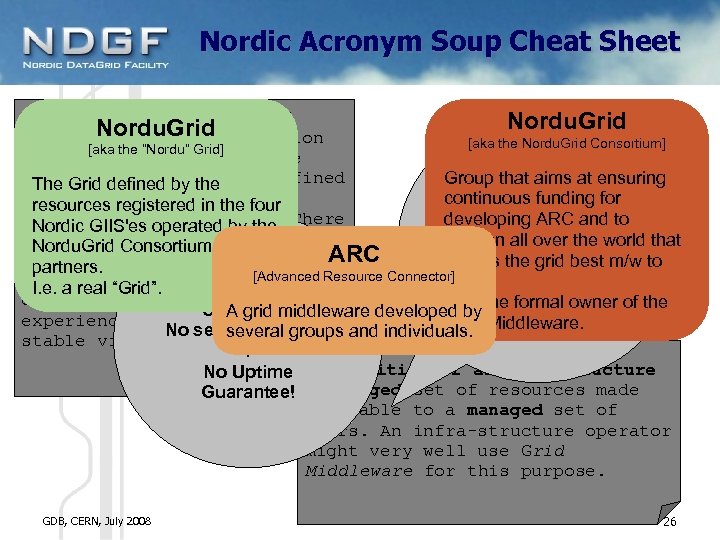


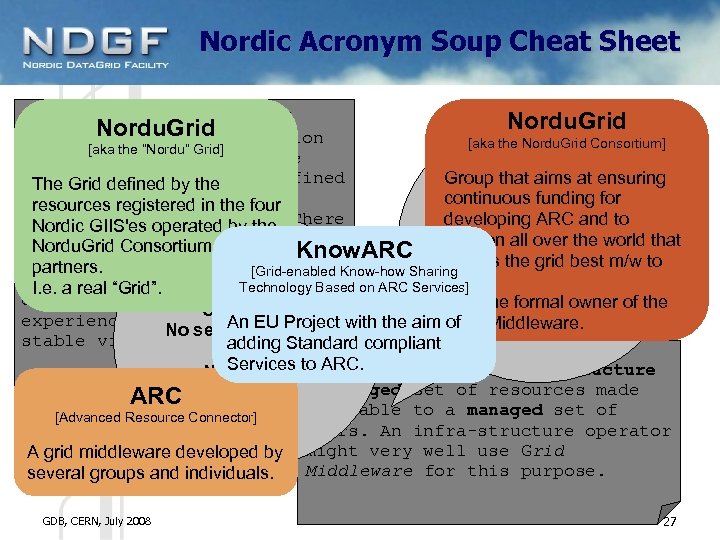


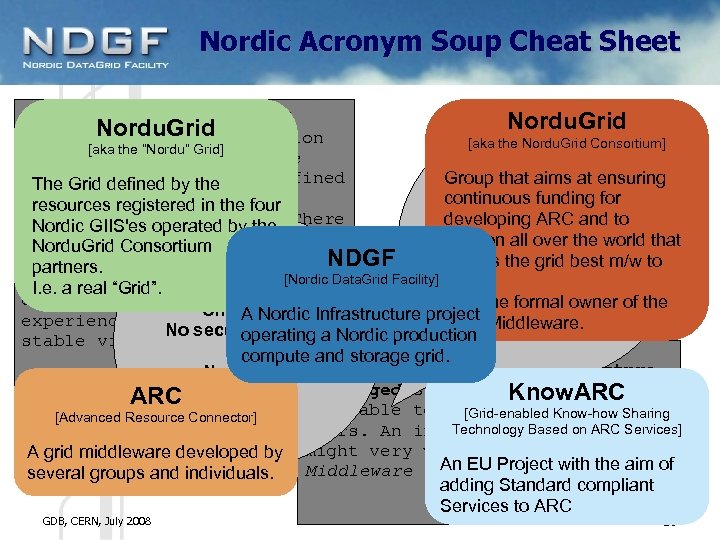

Nordic Acronym Soup Cheat Sheet
• Definition of a Grid: a loosely coupled collection of resources that are made available to a loosely defined set of users from several organizational entities. There is no single resource uptime guarantee, but as a collective whole the Grid middleware ensures that the user experiences a smooth and stable virtual resource.
  – There is no support on a Grid! No security contact! No uptime guarantee!
• Definition of an Infrastructure: a managed set of resources made available to a managed set of users. An infrastructure operator might very well use Grid middleware for this purpose.
  – There is support on an Infrastructure! Security contact and guarantees for uptime, stability etc.
• NorduGrid [aka the “Nordu” Grid]: the Grid defined by the resources registered in the four Nordic GIISes operated by the NorduGrid Consortium partners, i.e. a real “Grid”.
• NorduGrid [aka the NorduGrid Consortium]: a group that aims at ensuring continuous funding for developing ARC and at promoting ARC all over the world as the best grid middleware to use. Also the formal owner of the ARC middleware.
• ARC [Advanced Resource Connector]: a grid middleware developed by several groups and individuals.
• KnowARC [Grid-enabled Know-how Sharing Technology Based on ARC Services]: an EU project with the aim of adding standard-compliant services to ARC.
• NDGF [Nordic DataGrid Facility]: a Nordic infrastructure project operating a Nordic production compute and storage grid.

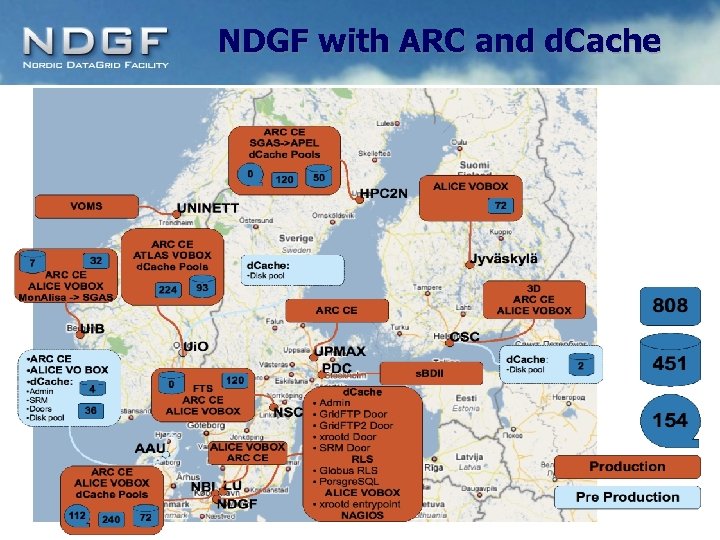

NDGF with ARC and dCache

NDGF with ARC and dCache
Note! The coupling between NDGF and ARC is that NDGF uses ARC (as it uses dCache) to run a stable compute and storage infrastructure for the Nordic WLCG Tier-1 and other Nordic e-Science projects. In the best open-source tradition, NDGF contributes to ARC (and dCache) as a payback to the community and as a way of getting what we need.

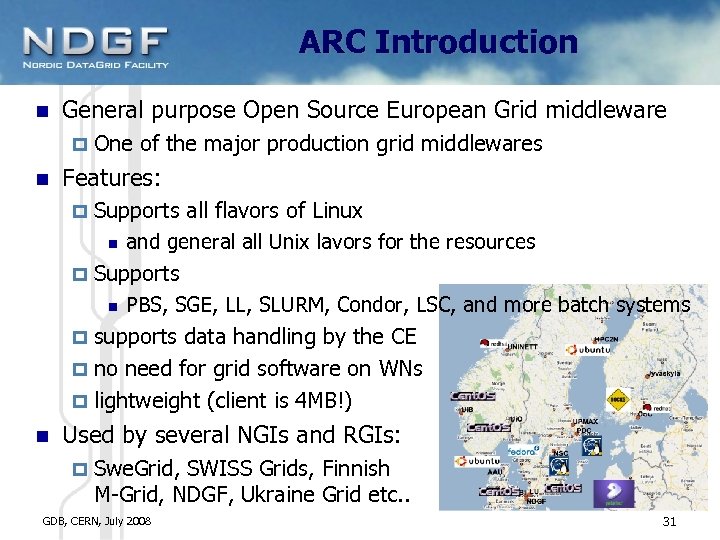

ARC Introduction
• General-purpose open-source European Grid middleware
  – One of the major production grid middlewares
• Features:
  – Supports all flavors of Linux, and in general all Unix flavors, for the resources
  – Supports PBS, SGE, LL, SLURM, Condor, LSF and more batch systems
  – Supports data handling by the CE: no need for grid software on WNs
  – Lightweight (the client is 4 MB!)
• Used by several NGIs and RGIs: SweGrid, Swiss Grids, Finnish M-Grid, NDGF, Ukraine Grid, etc.

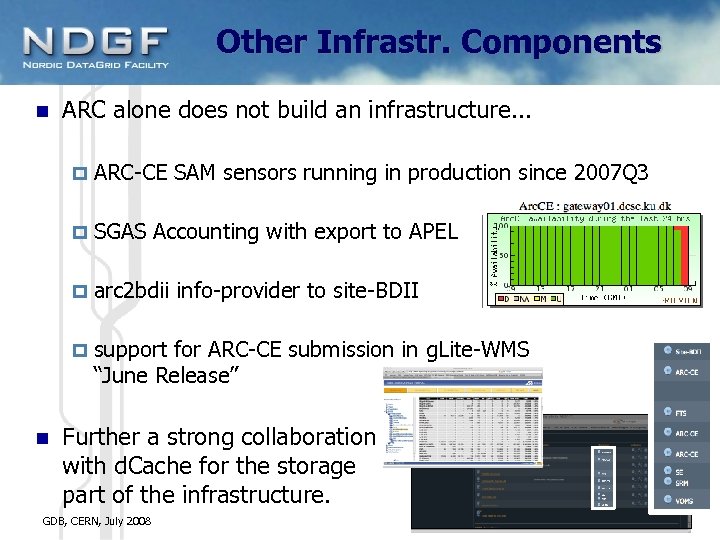

Other Infrastructure Components
ARC alone does not build an infrastructure...
• SGAS accounting with export to APEL
• arc2bdii info-provider to the site-BDII
• ARC-CE SAM sensors running in production since 2007 Q3
• Support for ARC-CE submission in the gLite-WMS “June Release”
• Furthermore, a strong collaboration with dCache for the storage part of the infrastructure

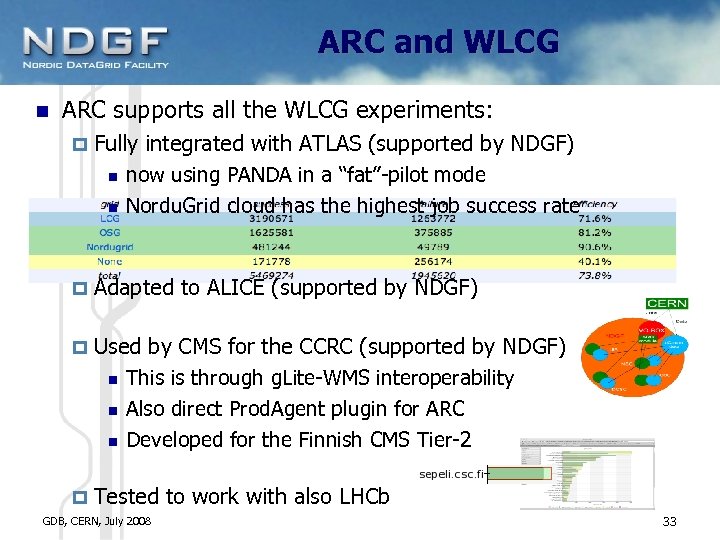

ARC and WLCG
ARC supports all the WLCG experiments:
• Fully integrated with ATLAS (supported by NDGF)
  – Now using PanDA in a “fat”-pilot mode
  – The NorduGrid cloud has the highest job success rate
• Adapted to ALICE (supported by NDGF)
• Used by CMS for the CCRC (supported by NDGF)
  – This is through gLite-WMS interoperability
  – Also a direct ProdAgent plugin for ARC, developed for the Finnish CMS Tier-2
• Also tested to work with LHCb

ARC Status
Current stable release 0.6.3 with:
• Support for LFC, the LCG File Catalogue (deprecating RLS)
  – This is now deployed at NDGF
• SRM 2.2
  – Interacting with SRM 2.2 storage
  – Handy user client “ngstage” enables easy interaction with SRM-based SEs
• Priorities and Shares
  – Support for User/VO-role based priorities
  – Support for Shares using LRMS accounts
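One way to picture “shares using LRMS accounts” is sketched below, under an assumption of ours rather than ARC's documented configuration: each VOMS group or role is mapped to a local batch account, and the batch system's fair-share policy is then set per account. The function, the longest-prefix matching rule and the account names are hypothetical.

```python
def lrms_account(fqan: str, mapping: dict[str, str], default: str) -> str:
    """Pick the local LRMS account for a job's VOMS FQAN (hypothetical helper).

    The most specific matching entry wins: "/atlas/Role=production" beats "/atlas".
    A prefix only matches on a path boundary, so "/atlas" does not match "/atlasx".
    """
    best = None
    for prefix in mapping:
        if fqan == prefix or fqan.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    return mapping[best] if best is not None else default


# Invented example: production jobs get their own account (and hence their own share).
SHARES = {"/atlas": "atlasusr", "/atlas/Role=production": "atlasprd"}
```

With such a mapping the grid layer only chooses the account, and the share itself is enforced by the LRMS's own fair-share scheduler.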

ARC Next release
Next stable release 0.6.4 (due for September) will support:
• The BDII as a replacement for the Globus MDS
  – Rendering the ARC schema
  – Optional rendering of the GLUE 1.3 schema
• Optional scalability improvement packages

ARC Challenges
• ARC has scalability issues on huge (5k+ core) clusters
  – Data upload and download is limited by the CE
  – Session and cache access is limited on NFS-based clusters; this issue is absent on clusters running e.g. GPFS
• These issues will be solved in the 0.8 release (end of 2008)
  – Move towards a flexible “dCache-like” setup where more nodes can be added based on need, e.g. adding more up- and downloaders or more NFS servers

ARC Future
• The stable branch of ARC (sometimes called ARC classic) will continue to evolve
  – More and more features will be added from e.g. the KnowARC project
• The KnowARC development branch (sometimes called ARC1) adds many services that adhere to standards like GLUE 2, BES and JSDL
  – These will be incorporated into the stable ARC branch
• Work on dynamic Runtime Environments for e.g. bioinformatics is already being incorporated
• There will be no “migrations”, but a gradual incorporation of the novel components into the stable branch

SRM
Slides provided by Flavia Donno
• Goal of the SRM v2.2 Addendum
• Short-term solution: CASTOR, dCache, StoRM, DPM

The Addendum to the WLCG SRM v2.2 Usage Agreement
Flavia Donno, CERN/IT
LCG-LHCC Mini review, CERN, 1 July 2008

The requirements
The goal of the SRM v2.2 Addendum is to provide answers to the following (requirements and priorities given by the experiments).
Main requirements:
• Space protection (VOMS-awareness)
• Space selection
• Supported space types (T1D0, T1D1, T0D1)
• Pre-staging, pinning
• Space usage statistics
• Tape optimization (reducing the number of mount operations, etc.)
F. Donno, LCG-LHCC Mini review, CERN 1 July 2008
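The space-type names encode the number of tape and disk copies: T1D0 has one tape copy and uses disk only as a cache, T1D1 has a tape copy plus a permanent disk copy, and T0D1 is disk only. The sketch below decodes the names and maps them to the SRM v2.2 (RetentionPolicy, AccessLatency) pairs conventionally used for these classes in WLCG. The helper names are hypothetical.

```python
import re

# Conventional WLCG mapping of storage classes to SRM v2.2
# (RetentionPolicy, AccessLatency); keys are (tape copies, disk copies).
SRM_ATTRIBUTES: dict[tuple[int, int], tuple[str, str]] = {
    (1, 0): ("CUSTODIAL", "NEARLINE"),  # T1D0: tape copy, disk used only as a cache
    (1, 1): ("CUSTODIAL", "ONLINE"),    # T1D1: tape copy plus a guaranteed disk copy
    (0, 1): ("REPLICA", "ONLINE"),      # T0D1: disk-only
}


def parse_storage_class(name: str) -> tuple[int, int]:
    """Parse 'T1D0' (spaces tolerated, e.g. 'T 1 D 0') into (tape, disk) copy counts."""
    match = re.fullmatch(r"T(\d)D(\d)", name.replace(" ", "").upper())
    if match is None:
        raise ValueError(f"not a TxDy storage class: {name!r}")
    return int(match.group(1)), int(match.group(2))


def srm_attributes(name: str) -> tuple[str, str]:
    """Return the (RetentionPolicy, AccessLatency) pair for a storage class name."""
    copies = parse_storage_class(name)
    if copies not in SRM_ATTRIBUTES:
        raise ValueError(f"storage class {name!r} is not one of T1D0, T1D1, T0D1")
    return SRM_ATTRIBUTES[copies]
```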

The document
• The most recent version is v1.4, available on the CCRC/SSWG twiki: https://twiki.cern.ch/twiki/pub/LCG/WLCGCommonComputingReadinessChallenges/WLCG_SRMv22_Memo-14.pdf
• 2 main parts:
  – An implementation-specific short-term solution with limited capabilities that can be made available by the end of 2008
  – A detailed description of an implementation-independent full solution
• The document has been agreed by storage developers, client developers and experiments (ATLAS, CMS, LHCb)

The recommendations and priorities
24 June 2008: WLCG Management Board approval with the following recommendations:
• Top priority is service functionality, stability, reliability and performance
• The implementation plan for the “short-term solution” can start
  – It introduces the minimal new features needed to guarantee protection from resource abuse and support for data reprocessing
  – It offers limited functionality and is implementation specific, with clients hiding the differences
• The long-term solution is a solid starting point
  – It is what is technically missing/needed
  – Details and functionalities can be re-discussed with acquired experience

The short-term solution: CASTOR
• Space Protection
  – Based on UID/GID. Administrative interface ready by the 3rd quarter of 2008.
• Space selection
  – Already available. srmPurgeFromSpace available in the 3rd quarter of 2008.
• Space types
  – T1D1 provided.
  – The pinLifeTime parameter is negotiated to be always equal to a system-defined default.
• Space statistics and tape optimization
  – Already addressed
• Implementation plan
  – Available by the end of 2008

The short-term solution: dCache
• Space Protection
  – Protected creation and usage of “write” space tokens
  – Controlling access to the tape system by DNs or FQANs
• Space selection
  – Based on the IP number of the client, the requested transfer protocol or the path of the file
  – Use of SRM special structures for more refined selection of read buffers
• Space types
  – T1D0 + pinning provided
  – Releasing pins will be possible for a specific DN or FQAN
• Space statistics and tape optimization
  – Already addressed
• Implementation plan
  – Available by the end of 2008
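The space-selection criteria listed for dCache (client IP, requested protocol, file path) can be pictured as an ordered rule list. The sketch below is a simplified first-match selector, not dCache's actual pool-selection configuration; the `Rule` fields, the pool-group names and the default are invented for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    """A hypothetical selection rule; a field left as None matches anything."""
    target: str                        # pool group to use (invented name)
    network: Optional[str] = None      # client subnet, e.g. "10.0.0.0/8"
    protocol: Optional[str] = None     # requested transfer protocol, e.g. "gsiftp"
    path_prefix: Optional[str] = None  # namespace prefix of the file

    def matches(self, client_ip: str, protocol: str, path: str) -> bool:
        if self.network is not None and (
            ipaddress.ip_address(client_ip) not in ipaddress.ip_network(self.network)
        ):
            return False
        if self.protocol is not None and protocol != self.protocol:
            return False
        if self.path_prefix is not None and not path.startswith(self.path_prefix):
            return False
        return True


def select_pool_group(rules: list[Rule], client_ip: str, protocol: str, path: str,
                      default: str = "default-pools") -> str:
    """Return the target of the first matching rule, or `default`."""
    for rule in rules:
        if rule.matches(client_ip, protocol, path):
            return rule.target
    return default
```

First match keeps the example short. A real system also has to decide what happens when several criteria match at once, which is why rule order (or an explicit preference) matters.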

The short-term solution: DPM
• Space Protection
  – Support a list of VOMS FQANs for the space write permission check, rather than just the current single FQAN
• Space selection
  – Not available, not necessary at the moment
• Space types
  – Only T0D1
• Space statistics and tape optimization
  – Space statistics already available
  – Tape optimization not needed
• Implementation plan
  – Available by the end of 2008
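The DPM change amounts to widening a single-FQAN comparison into a membership test. Below is a minimal sketch, assuming exact string matching of FQANs (a real implementation may also take the VOMS group hierarchy into account). The function names are hypothetical.

```python
def may_write_single(space_fqan: str, user_fqans: list[str]) -> bool:
    """Previous behaviour (as described): the space is tied to exactly one FQAN."""
    return space_fqan in user_fqans


def may_write(space_fqans: list[str], user_fqans: list[str]) -> bool:
    """Extended behaviour: writing is allowed if any of the user's FQANs is in the space's list."""
    allowed = set(space_fqans)
    return any(fqan in allowed for fqan in user_fqans)
```

The single-FQAN check is the special case of a one-element list, so existing space configurations keep their meaning.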

The short-term solution: StoRM
• Space Protection
  – Spaces in StoRM will be protected via DN- or FQAN-based ACLs. StoRM is already VOMS-aware.
• Space selection
  – Not available
• Space types
  – T0D1 and T1D1 (no tape transitions allowed in WLCG)
• Space statistics and tape optimization
  – Space statistics already available
  – Tape optimization will be addressed
• Implementation plan
  – Available by November 2008

The short-term solution: client tools (FTS, lcg-utils/gfal)
• Space selection
  – The client tools will pass both the space token and SRM special structures for refined selection of read/write pools.
• Pinning
  – Client tools will internally extend the pin lifetime of newly created copies.
• Space Types
  – The same space token might be implemented as T1D1 in CASTOR, or as T1D0 + pinning in dCache.
  – The clients will perform the needed operations to release copies in both types of spaces transparently.
• Implementation plan
  – Available by the end of 2008
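The transparent release of copies can be sketched as a dispatch on the storage implementation behind a space token. This is a hypothetical illustration, not FTS or lcg-utils code. It assumes, based on the CASTOR and dCache slides, that a CASTOR T1D1 disk copy is released with srmPurgeFromSpace and a dCache T1D0 + pinning copy by releasing its pin with srmReleaseFiles. The function and table names are invented, and the SRM operations are only named, never invoked.

```python
# Hypothetical: which SRM v2.2 operation releases a disk copy, per backend.
RELEASE_OPERATION = {
    "CASTOR": "srmPurgeFromSpace",  # space implemented as T1D1: purge the disk copy
    "dCache": "srmReleaseFiles",    # space implemented as T1D0 + pinning: drop the pin
}


def release_request(backend: str, space_token: str, surl: str) -> dict[str, str]:
    """Build (but do not send) the request a client would issue to release `surl`.

    The caller uses the same call for the same space token whatever the backend.
    """
    try:
        operation = RELEASE_OPERATION[backend]
    except KeyError:
        raise ValueError(f"no release strategy for backend {backend!r}") from None
    return {"operation": operation, "spaceToken": space_token, "surl": surl}
```

Keeping the backend-specific choice in one table is what lets the experiment-facing interface stay identical across storage implementations.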

Summary
• gLite
  – Handled the CCRC load
  – The release process was not affected by CCRC
  – The improved lcg-CE can bridge the gap until we move to CREAM
  – Scaling for core services is understood
• OSG
  – Several minor fixes and improvements
  – User-driven
  – Released OSG 1.0
    – SRM-2 support
    – LCG data management clients
  – Improved interoperation and interoperability
  – Middleware ready for show time

Summary
• ARC
  – 0.6.3 has been released
    – Stability improvements, bug fixes
    – LFC (file catalogue) support
    – Will be maintained
  – ARC 0.8 release planning started
    – Will address several scaling issues for very large sites (>10k)
  – ARC-1 prototype exists
• SRM 2.2
  – SRM v2.2 Addendum
    – Agreement has been reached
    – Short-term plan implementation can start
      – Affects CASTOR, dCache, StoRM, DPM and clients
      – Clients will hide differences
      – Expected to be in production by the end of the year
    – Long-term plan can still reflect new experiences
