7372817a0f2b0dd1b52cd49ab6f1eb9d.ppt

- Number of slides: 24

Metrics and Techniques for Evaluating the Performability of Internet Services
Pete Broadwell
pbwell@cs.berkeley.edu

Outline
1. Introduction to performability
2. Performability metrics for Internet services
   • Throughput-based metrics (Rutgers)
   • Latency-based metrics (ROC)
3. Analysis and future directions

Motivation
• Goal of the ROC project: develop metrics to evaluate new recovery techniques
• Problem: the concept of availability assumes a system is either “up” or “down” at a given time
• Availability doesn’t capture a system’s capacity to support degraded service
  – degraded performance during failures
  – reduced data quality during high load

What is “performability”?
• Combination of performance and dependability measures
• Classical definition: a probabilistic (model-based) measure of a system’s “ability to perform” in the presence of faults¹
  – Concept from the traditional fault-tolerant systems community, ca. 1978
  – Has since been applied to other areas, but is still not in widespread use
¹ J. F. Meyer, Performability Evaluation: Where It Is and What Lies Ahead, 1994

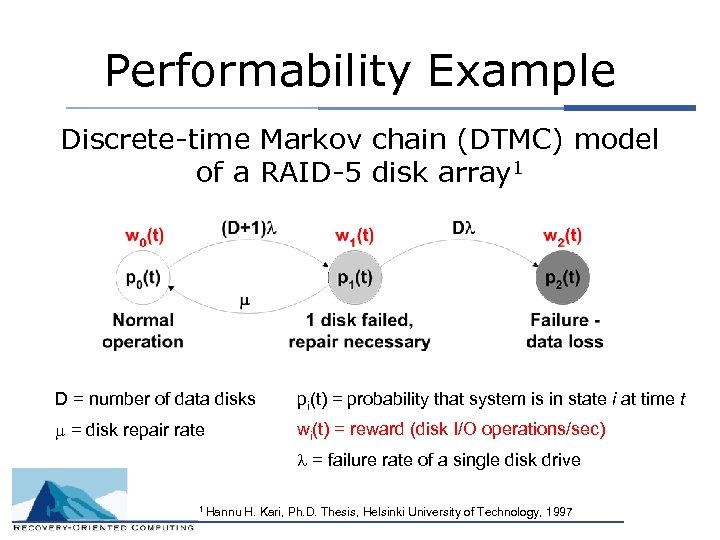

Performability Example
Discrete-time Markov chain (DTMC) model of a RAID-5 disk array¹
• $D$ = number of data disks
• $p_i(t)$ = probability that the system is in state $i$ at time $t$
• $w_i(t)$ = reward (disk I/O operations/sec)
• $\mu$ = disk repair rate
• $\lambda$ = failure rate of a single disk drive
¹ Hannu H. Kari, Ph.D. Thesis, Helsinki University of Technology, 1997
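The slide lists the model's ingredients but not its transitions. Below is a minimal sketch, assuming a simplified three-state chain (0 = all $D + 1$ drives working, 1 = one drive failed and awaiting repair, 2 = a second failure before repair has caused data loss), per-step transition probabilities approximated as rate × Δt, and constant per-state rewards. It reports the expected reward $\sum_i p_i(t)\,w_i$ at each step. The function name and all numbers are hypothetical, and Kari's actual model may use more states.

```python
def raid5_expected_reward(D, lam, mu, dt, steps, rewards):
    """Iterate a 3-state DTMC; return (final state probabilities,
    expected reward sum_i p_i(t) * w_i after each step)."""
    n = D + 1  # RAID-5 with D data disks uses D + 1 drives in total
    p01 = n * lam * dt  # state 0 -> 1: any of the n drives fails
    p10 = mu * dt       # state 1 -> 0: failed drive repaired (rebuilt)
    p12 = D * lam * dt  # state 1 -> 2: second failure before repair, data lost
    if p01 > 1 or p10 + p12 > 1:
        raise ValueError("dt too large for these rates")
    P = [
        [1.0 - p01, p01, 0.0],
        [p10, 1.0 - p10 - p12, p12],
        [0.0, 0.0, 1.0],  # state 2 is absorbing
    ]
    p = [1.0, 0.0, 0.0]  # start with all drives working
    rewards_over_time = []
    for _ in range(steps):
        # One DTMC step: p_j(t+1) = sum_i p_i(t) * P[i][j]
        p = [sum(p[i] * P[i][j] for i in range(3)) for j in range(3)]
        rewards_over_time.append(sum(pi * wi for pi, wi in zip(p, rewards)))
    return p, rewards_over_time


if __name__ == "__main__":
    # Hypothetical: 4 data disks, rates per hour, 1-hour steps for one year,
    # I/O ops/sec when healthy, degraded (rebuilding), and after data loss.
    probs, reward = raid5_expected_reward(
        D=4, lam=1e-5, mu=0.1, dt=1.0, steps=8760, rewards=[500.0, 300.0, 0.0]
    )
    print("state probabilities after one year:", probs)
    print("expected I/O ops/sec after one year:", reward[-1])
```

Making the reward depend on the state is what turns a plain reliability model into a performability model: the degraded state still earns a reduced reward instead of counting as simply "up".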

Performability for Online Services: Rutgers Study
• Rich Martin (UCB alum) et al. wanted to quantify tradeoffs between web server designs, using a single metric for both performance and availability
• Approach:
  – Performed fault injection on PRESS, a locality-aware, cluster-based web server
  – Measured the throughput of the cluster during simulated faults and during normal operation

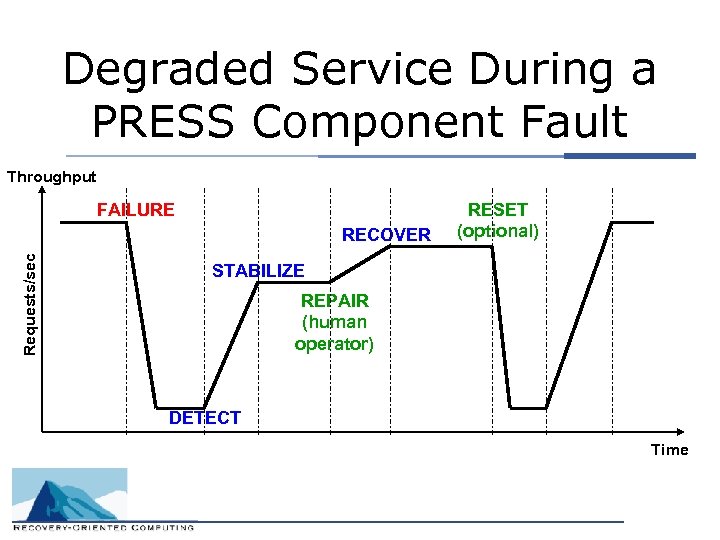

Degraded Service During a PRESS Component Fault
[Figure: throughput (requests/sec) vs. time, annotated with FAILURE, DETECT, RECOVER, STABILIZE, REPAIR (human operator) and RESET (optional)]

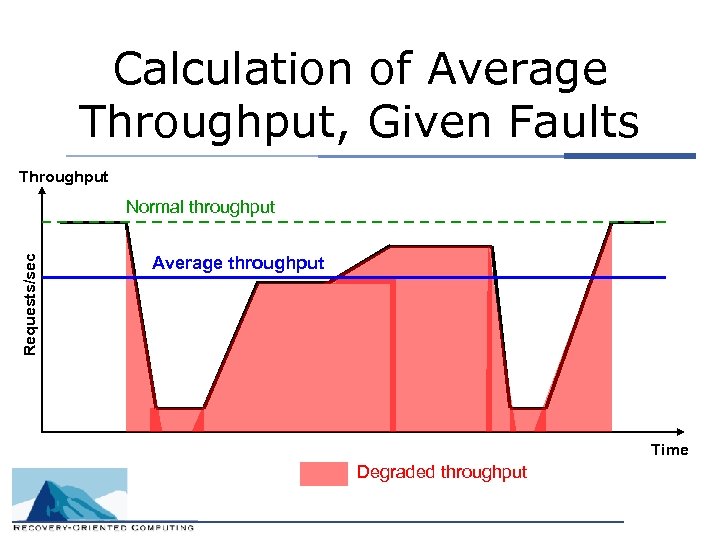

Calculation of Average Throughput, Given Faults
[Figure: throughput (requests/sec) vs. time, showing normal throughput, degraded throughput, and the resulting average throughput]
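Average throughput over a window is the time-weighted mean of the throughput in each interval: total requests served divided by total time. A minimal sketch, assuming the trace has already been split into intervals of roughly constant throughput; the function name and the one-hour example window are hypothetical.

```python
def average_throughput(phases):
    """Time-weighted average throughput.

    phases: iterable of (duration_sec, throughput_req_per_sec) pairs covering
    normal operation and each degraded interval."""
    phases = list(phases)
    total_time = sum(duration for duration, _ in phases)
    if total_time <= 0:
        raise ValueError("total duration must be positive")
    total_requests = sum(duration * rate for duration, rate in phases)
    return total_requests / total_time


if __name__ == "__main__":
    # Hypothetical one-hour window containing a single component fault.
    window = [
        (3540.0, 1000.0),  # normal operation
        (30.0, 0.0),       # hypothetical total stall until the fault is detected
        (30.0, 600.0),     # hypothetical degraded service until repair
    ]
    print(average_throughput(window))
```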

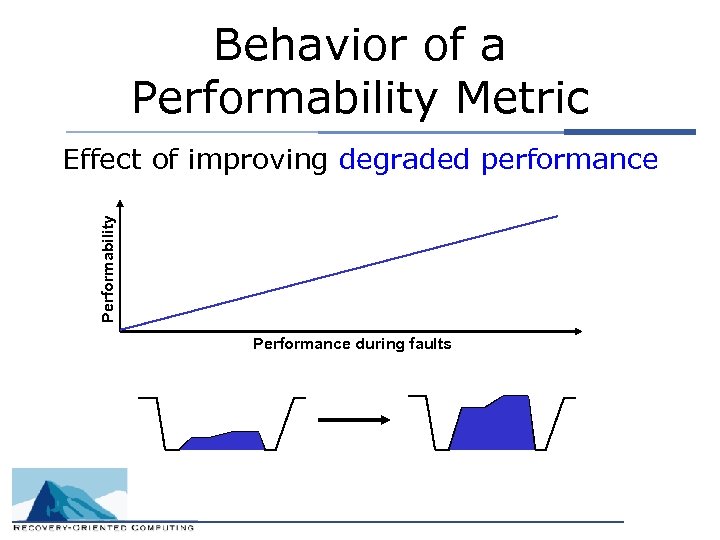

Behavior of a Performability Metric
[Figure: performability vs. performance during faults, showing the effect of improving degraded performance]

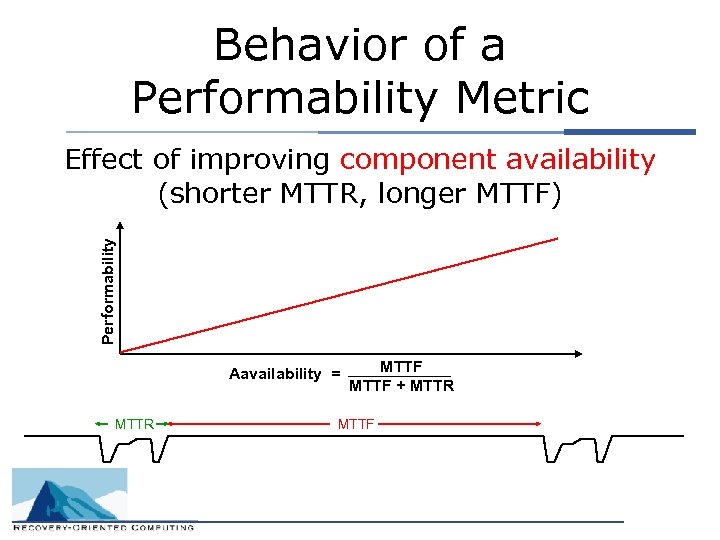

Behavior of a Performability Metric
[Figure: performability vs. availability, showing the effect of improving component availability (shorter MTTR, longer MTTF)]
Availability $= \dfrac{\mathrm{MTTF}}{\mathrm{MTTF} + \mathrm{MTTR}}$
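The availability formula can be evaluated directly to see how shorter MTTR or longer MTTF moves a component toward more "nines". A minimal sketch; the helper names and the 1000-hour MTTF are hypothetical.

```python
import math


def availability(mttf, mttr):
    """Steady-state availability = MTTF / (MTTF + MTTR); both in the same time unit."""
    if mttf < 0 or mttr < 0 or mttf + mttr == 0:
        raise ValueError("MTTF and MTTR must be non-negative and not both zero")
    return mttf / (mttf + mttr)


def nines(a):
    """Number of 'nines' of availability, e.g. 0.999 -> about 3 (requires a < 1)."""
    return -math.log10(1.0 - a)


if __name__ == "__main__":
    # Hypothetical component that fails every 1000 hours on average.
    for mttr in (4.0, 2.0, 1.0):  # progressively shorter repair times
        a = availability(1000.0, mttr)
        print(f"MTTR={mttr:>4} h  A={a:.6f}  nines={nines(a):.2f}")
```

Halving MTTR has roughly the same effect on availability as doubling MTTF when MTTR is much smaller than MTTF, which is why recovery-oriented work focuses on repair time.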

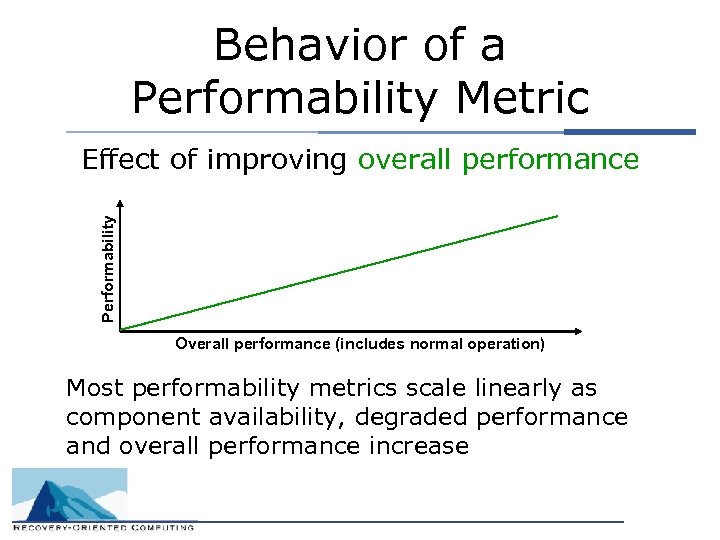

Behavior of a Performability Metric
[Figure: performability vs. overall performance (including normal operation), showing the effect of improving overall performance]
• Most performability metrics scale linearly as component availability, degraded performance and overall performance increase

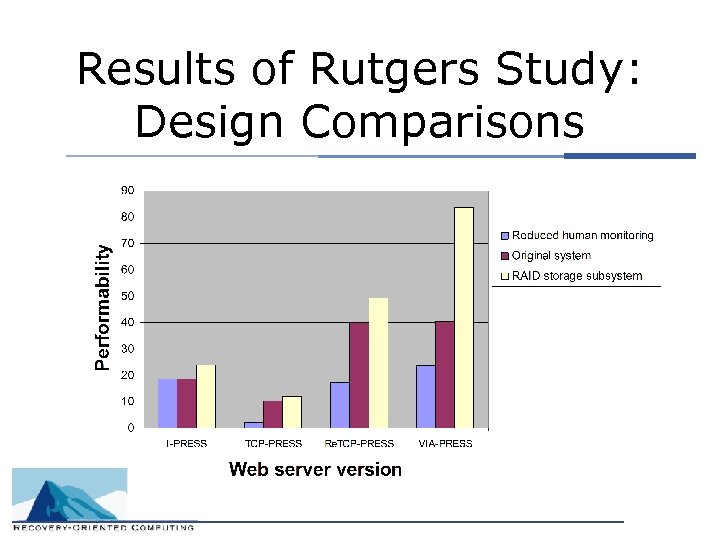

Results of Rutgers Study: Design Comparisons

An Alternative Metric: Response Latency
• Originally, performability metrics were meant to capture end-user experience¹
• Latency better describes the experience of an end user of a web site
  – response time > 8 sec = site abandonment = lost income ($$)²
• Throughput describes the raw processing ability of a service
  – best used to quantify expenses
¹ J. F. Meyer, Performability Evaluation: Where It Is and What Lies Ahead, 1994
² Zona Research and Keynote Systems, The Need for Speed II, 2001

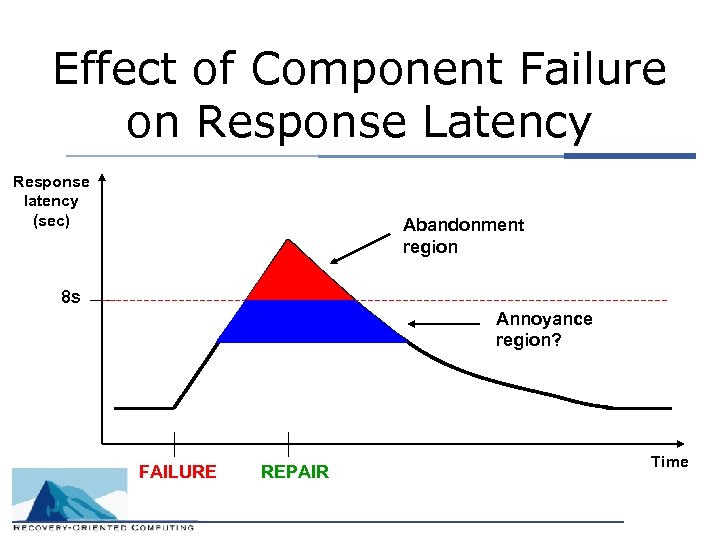

Effect of Component Failure on Response Latency
[Figure: response latency (sec) vs. time from FAILURE to REPAIR, with an 8 s abandonment region and a possible annoyance region below it]
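One simple way to turn the latency picture into a score is to count how many responses fall into each region. A minimal sketch, assuming latency samples in seconds; the 8 s abandonment threshold comes from the slides, while the 2 s annoyance threshold and all names are hypothetical, since the figure leaves that region open.

```python
ABANDON_SEC = 8.0  # from the slides: responses slower than 8 s lead to abandonment
ANNOY_SEC = 2.0    # hypothetical: the figure marks the annoyance region with "?"


def classify_latencies(latencies_sec):
    """Count responses in the normal, annoyance and abandonment regions."""
    counts = {"normal": 0, "annoyance": 0, "abandonment": 0}
    for s in latencies_sec:
        if s > ABANDON_SEC:
            counts["abandonment"] += 1
        elif s > ANNOY_SEC:
            counts["annoyance"] += 1
        else:
            counts["normal"] += 1
    return counts


def abandonment_rate(latencies_sec):
    """Fraction of responses that exceed the abandonment threshold."""
    latencies_sec = list(latencies_sec)
    if not latencies_sec:
        return 0.0
    return classify_latencies(latencies_sec)["abandonment"] / len(latencies_sec)
```

Unlike average throughput, this score is insensitive to how much work the cluster does overall and only reflects what a user would notice.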

Issues With Latency As a Performability Metric
• Modeling concerns:
  – Human element: retries and abandonment
  – Queuing issues: buffering and timeouts
  – Unavailability of the load balancer due to faults
  – Burstiness of the workload
• Latency is more accurately modeled at the service, rather than end-to-end¹
• Alternate approach: evaluate an existing system
¹ M. Merzbacher and D. Patterson, Measuring End-User Availability on the Web: Practical Experience, 2002

Analysis
• Queuing behavior may have a significant effect on latency-based performability evaluation
  – Long component MTTRs = longer waits, lower latency-based score
  – High performance in the normal case = faster queue reduction after repair, higher latency-based score
• More study is needed!
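Both effects follow from a simple fluid (average-rate) view of a FIFO queue: during an outage the backlog grows at (arrival rate − degraded capacity) for the whole MTTR, and after repair it drains only at the spare capacity (normal capacity − arrival rate). A minimal sketch under that assumption, with constant rates and no timeouts; the function name and example rates are hypothetical.

```python
def outage_queue(arrival_rate, degraded_capacity, normal_capacity, mttr_sec):
    """Fluid approximation of a FIFO queue through one outage (rates in req/sec).

    Returns (backlog at repair, time to drain it after repair,
    wait of a request that arrives at the moment of repair)."""
    if normal_capacity <= arrival_rate:
        raise ValueError("normal capacity must exceed the arrival rate or the queue never drains")
    growth = max(arrival_rate - degraded_capacity, 0.0)  # backlog growth rate during outage
    backlog = growth * mttr_sec                          # longer MTTR -> larger backlog
    drain_sec = backlog / (normal_capacity - arrival_rate)  # spare capacity clears the backlog
    wait_at_repair_sec = backlog / normal_capacity          # FIFO: waits behind the whole backlog
    return backlog, drain_sec, wait_at_repair_sec
```

For example, with hypothetical rates of 800 req/s arriving, 400 req/s served during a 60 s outage, and 1000 req/s of normal capacity, the backlog at repair is 24,000 requests and takes 120 s to drain; raising normal capacity to 1200 req/s halves the drain time, matching the second bullet.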

Future Work
• Further collaboration with Rutgers on collecting new measurements for latency-based performability analysis
• Development of more realistic fault and workload models, and consideration of other performability factors such as data quality
• Research into methods for conducting automated performability evaluations of web services


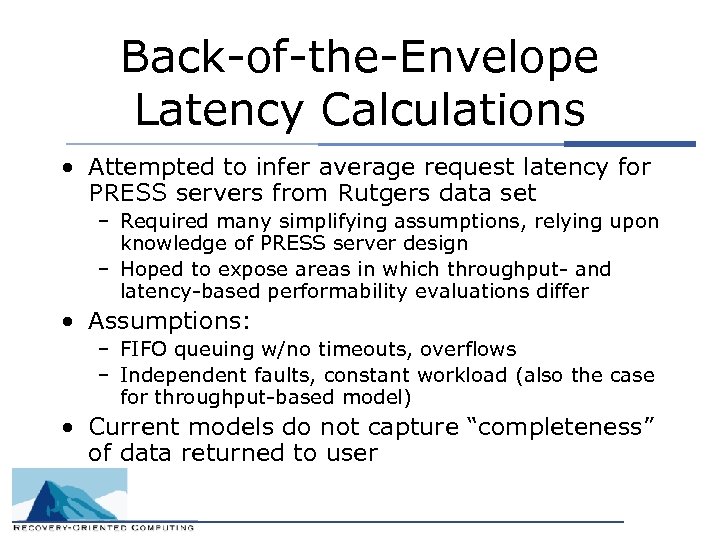

Back-of-the-Envelope Latency Calculations
• Attempted to infer the average request latency for PRESS servers from the Rutgers data set
  – Required many simplifying assumptions, relying upon knowledge of the PRESS server design
  – Hoped to expose areas in which throughput- and latency-based performability evaluations differ
• Assumptions:
  – FIFO queuing with no timeouts or overflows
  – Independent faults, constant workload (also the case for the throughput-based model)
• Current models do not capture the “completeness” of the data returned to the user
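Under exactly these assumptions (FIFO, no timeouts or overflows, constant workload), queuing delay can be read off a throughput trace: a request finishes once cumulative completions catch up with cumulative arrivals up to its arrival time. The sketch below applies that idea at one-second resolution; it is one possible approach, not necessarily the calculation used in this study, and the function name and sample trace are hypothetical.

```python
import bisect
from itertools import accumulate


def fifo_delays_from_throughput(arrival_rate, completions_per_sec):
    """Delay (sec) of the last request in each one-second arrival cohort.

    Assumes FIFO service, no timeouts or overflows, and a constant workload of
    arrival_rate requests/sec. Service time itself is ignored; cohorts that
    have not finished by the end of the trace are dropped."""
    cum_done = list(accumulate(completions_per_sec))  # nondecreasing, so bisect works
    delays = []
    for sec in range(len(cum_done)):
        arrived = arrival_rate * (sec + 1)  # cumulative arrivals by end of this second
        # FIFO: this cohort is done once cumulative completions reach cumulative arrivals.
        finish_sec = bisect.bisect_left(cum_done, arrived)
        if finish_sec == len(cum_done):
            break
        delays.append(finish_sec - sec)
    return delays
```

For a hypothetical trace `fifo_delays_from_throughput(10, [10, 0, 0, 20, 20, 10])` (two seconds of outage followed by catch-up), the per-cohort delays are `[0, 2, 1, 1, 0, 0]`; averaging them gives a latency-based view of the same interval that a throughput average would summarize differently.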

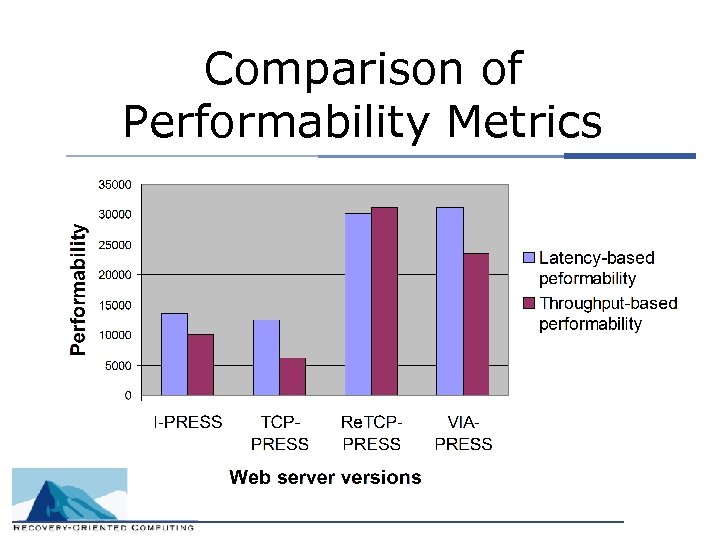

Comparison of Performability Metrics

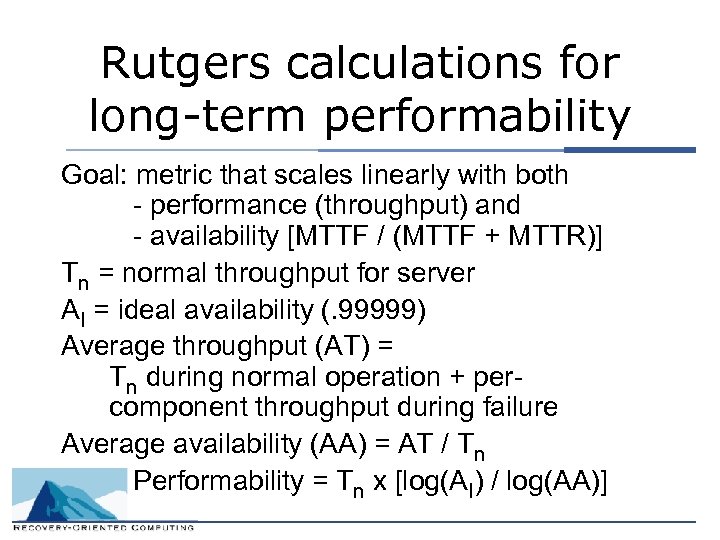

Rutgers calculations for long-term performability
Goal: a metric that scales linearly with both
  – performance (throughput) and
  – availability [MTTF / (MTTF + MTTR)]
• $T_n$ = normal throughput for the server
• $A_I$ = ideal availability (0.99999)
• Average throughput ($AT$) = $T_n$ during normal operation, averaged with the per-component throughput during failures
• Average availability ($AA$) $= AT / T_n$
• Performability $= T_n \times \dfrac{\log A_I}{\log AA}$
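The formulas above can be chained into one calculation. A minimal sketch, assuming each fault type occupies a fraction MTTR / (MTTF + MTTR) of the time, faults are independent and rare enough that these fractions simply add, and each fault is summarized by a single degraded throughput (the Rutgers model distinguishes several stages within a fault). The function name and example numbers are hypothetical.

```python
import math


def rutgers_performability(tn, faults, ideal_availability=0.99999):
    """Return (AT, AA, performability) following the slide's formulas.

    tn: normal throughput (requests/sec).
    faults: list of (mttf, mttr, degraded_throughput) tuples, one per component
        fault type; MTTF and MTTR in the same time unit."""
    # Assumption: fault type i occupies mttr / (mttf + mttr) of the time.
    fractions = [mttr / (mttf + mttr) for mttf, mttr, _ in faults]
    normal_fraction = 1.0 - sum(fractions)
    if normal_fraction < 0:
        raise ValueError("fault fractions exceed 1; model assumptions do not hold")
    # AT: time-weighted mix of normal and degraded throughput.
    at = normal_fraction * tn + sum(
        f * degraded for f, (_, _, degraded) in zip(fractions, faults)
    )
    aa = at / tn  # average availability
    if aa <= 0:
        raise ValueError("average availability must be positive")
    if aa >= 1.0:
        return at, aa, math.inf  # no degradation: log(AA) = 0, ratio unbounded
    return at, aa, tn * math.log(ideal_availability) / math.log(aa)
```

With a hypothetical $T_n$ of 1000 req/s and one fault type (MTTF = 10⁶ h, MTTR = 100 h, zero throughput while failed), $AA \approx 0.9999$, one nine short of $A_I$, so performability comes out near 100, a tenth of $T_n$. Scaling $T_n$ and the degraded throughput by the same factor leaves $AA$ unchanged and scales performability by that factor, which is the linear behavior the Rutgers goal asks for.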

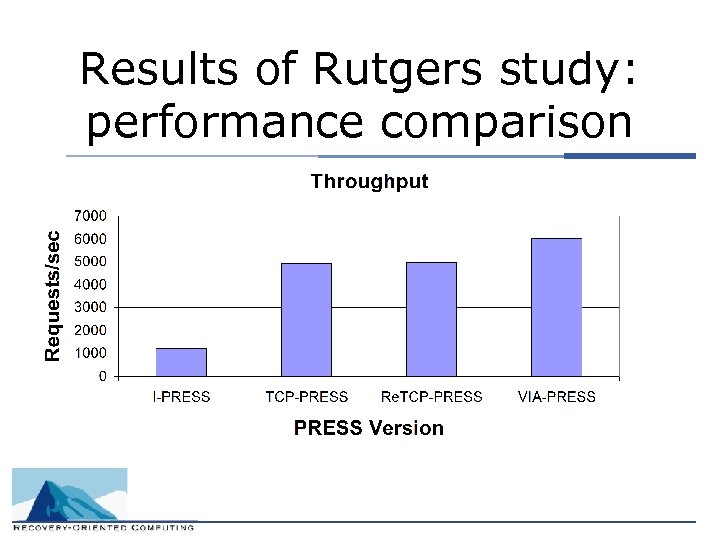

Results of Rutgers study: performance comparison

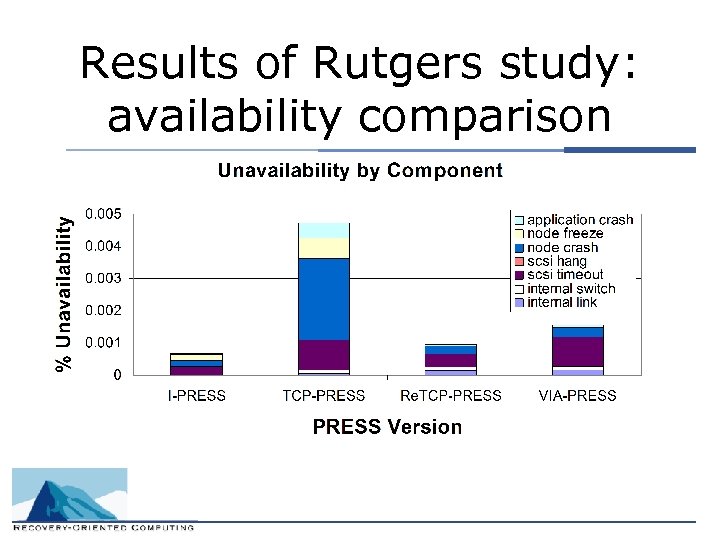

Results of Rutgers study: availability comparison

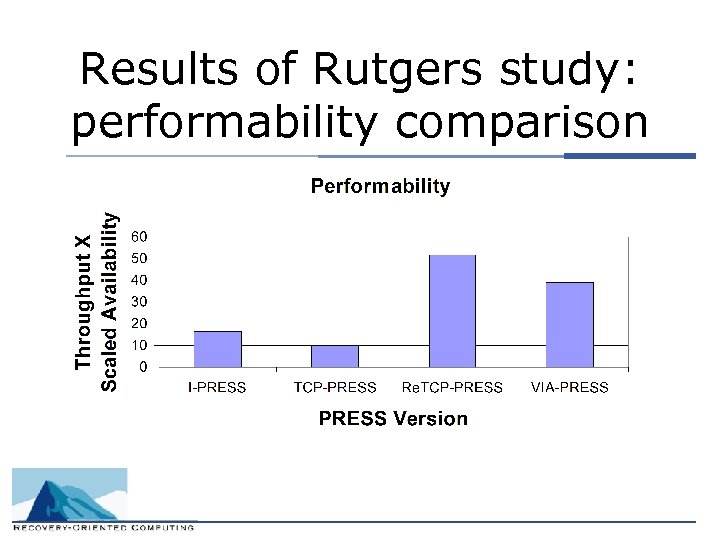

Results of Rutgers study: performability comparison
