d9af47c182922ba3eb46af3a5e9ddf8f.ppt

- Number of slides: 42

Methods for Software Protection

Prof. Clark Thomborson

Keynote Address at the International Forum on Computer Science and Advanced Software Technology, Jiangxi Normal University, 11th June 2007

Questions to be (Partially) Answered

- What is security?
- What is software watermarking, and how is it implemented?
- What is software obfuscation, and how is it implemented?
- How does software obfuscation compare with encryption?
- Is “perfect obfuscation” possible?

SW Protection 11 June 07

What is Security? (A Taxonomic Approach)

“The first step in wisdom is to know the things themselves; this notion consists in having a true idea of the objects; objects are distinguished and known by classifying them methodically and giving them appropriate names. Therefore, classification and name-giving will be the foundation of our science.”

Carolus Linnæus, Systema Naturæ, 1735 (from Lindqvist and Jonsson, “How to Systematically Classify Computer Security Intrusions”, 1997.)

Standard Taxonomy of Security

1. Confidentiality: no one is allowed to read, unless they are authorised.
2. Integrity: no one is allowed to write, unless they are authorised.
3. Availability: all authorised reads and writes will be performed by the system.

- Authorisation: giving someone the authority to do something.
- Authentication: being assured of someone’s identity.
- Identification: knowing someone’s name or ID#.
- Auditing: maintaining (and reviewing) records of security decisions.

A Multi-Level Hierarchy

- Static security: the Confidentiality, Integrity, and Availability properties of a system.
- Dynamic security: the technical processes which assure static security.
  - The gold standard: Authentication, Authorisation, Audit.
  - Defense in depth: Prevention, Detection, Response.
- Security governance: the “people processes” which develop and maintain a secure system.
  - Governors set budgets and delegate their responsibilities for Specification, Implementation, and Assurance.

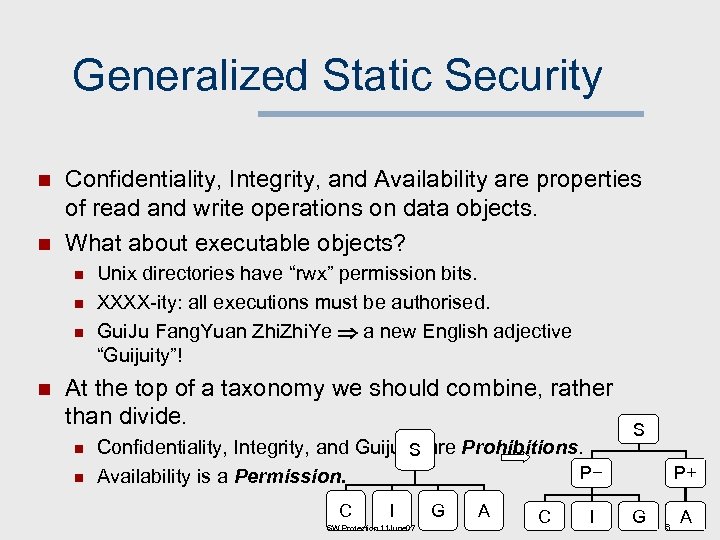

Generalized Static Security

- Confidentiality, Integrity, and Availability are properties of read and write operations on data objects.
- What about executable objects?
  - Unix directories have “rwx” permission bits.
  - XXXX-ity: all executions must be authorised.
  - GuiJu FangYuan ZhiYe: a new English word, “Guijuity”!
- At the top of a taxonomy we should combine, rather than divide.
  - Confidentiality, Integrity, and Guijuity are Prohibitions (P−).
  - Availability is a Permission (P+).

[Diagram: static security S splits into prohibitions P− (C, I, G) and permissions P+ (A).]

Prohibitions and Permissions

- Prohibition: prevent an action.
- Permission: allow an action.
- There are two types of action-secure systems:
  - In a prohibitive system, all actions are prohibited by default. Permissions are granted in special cases, e.g. to authorised individuals.
  - In a permissive system, all actions are permitted by default. Prohibitions are special cases, e.g. when an individual attempts to access a secure system.
- Prohibitive systems have permissive subsystems. Permissive systems have prohibitive subsystems.

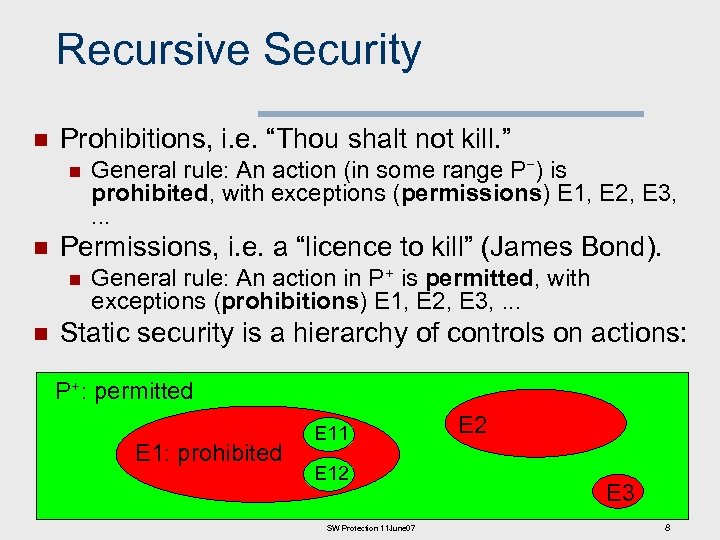

Recursive Security

- Prohibitions, e.g. “Thou shalt not kill.”
  - General rule: An action (in some range P−) is prohibited, with exceptions (permissions) E1, E2, E3, ...
- Permissions, e.g. a “licence to kill” (James Bond).
  - General rule: An action in P+ is permitted, with exceptions (prohibitions) E1, E2, E3, ...
- Static security is a hierarchy of controls on actions.

[Diagram: a tree rooted at P+ (permitted) with exceptions E1 (prohibited), E2, and E3; E1 has its own exceptions E11 and E12.]
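The recursive structure on this slide can be sketched in a few lines of Python. This is only an illustration, not part of the talk: the `Rule` class, the string-valued actions, and the example rules are all hypothetical names chosen for the sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Rule:
    """A default decision over a range of actions, with nested exceptions."""
    applies: Callable[[str], bool]   # does this rule cover the action?
    permitted: bool                  # decision when no exception applies
    exceptions: List["Rule"] = field(default_factory=list)

    def decide(self, action: str) -> bool:
        # The first applicable exception overrides the general rule,
        # and may itself be overridden by its own exceptions.
        for exc in self.exceptions:
            if exc.applies(action):
                return exc.decide(action)
        return self.permitted


# Hypothetical example: P+ permits everything, E1 prohibits anything that
# mentions "secret", and E11 re-permits one specific read.
e11 = Rule(lambda a: a == "read secret/summary", permitted=True)
e1 = Rule(lambda a: "secret" in a, permitted=False, exceptions=[e11])
p_plus = Rule(lambda a: True, permitted=True, exceptions=[e1])
```

Calling `p_plus.decide("write secret/key")` walks down to E1 and returns `False`, while `p_plus.decide("read secret/summary")` continues to E11 and returns `True`.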

Is Our Taxonomy Complete?

- Prohibitions and permissions are properties of hierarchical systems, such as a judicial system.
  - Most legal controls (“laws”) are prohibitive: they prohibit certain actions, with some exceptions (permissions).
- Contracts are non-hierarchical (agreed between peers), and consist mostly of requirements to act (with some exceptions):
  - Obligations are promises to do something in the future.
  - Exemptions are exceptions to an obligation.
- Obligations and exemptions are not well-modeled by action-security rules.
  - Obligations arise occasionally in the law, e.g. a doctor’s “duty of care” or a trustee’s fiduciary responsibility.

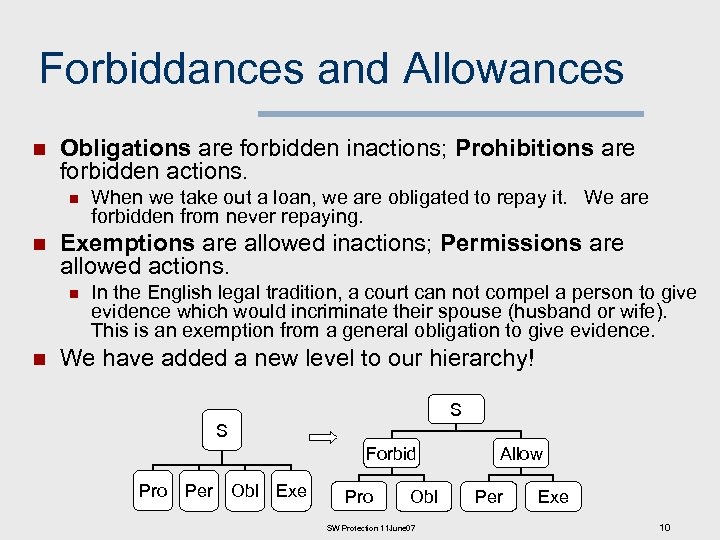

Forbiddances and Allowances

- Obligations are forbidden inactions; Prohibitions are forbidden actions.
  - When we take out a loan, we are obligated to repay it. We are forbidden from never repaying.
- Exemptions are allowed inactions; Permissions are allowed actions.
  - In the English legal tradition, a court cannot compel a person to give evidence which would incriminate their spouse (husband or wife). This is an exemption from a general obligation to give evidence.
- We have added a new level to our hierarchy!

[Diagram: S splits into Forbid (Pro, Obl) and Allow (Per, Exe).]
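As a small illustration, the (forbiddance, allowance) x (action, inaction) grid can be written out as a lookup table. The enum and function names below are assumptions made for this sketch only.

```python
from enum import Enum


class Stance(Enum):
    FORBID = "forbid"
    ALLOW = "allow"


class Target(Enum):
    ACTION = "action"
    INACTION = "inaction"


# The four kinds of static security rule named on the slide.
RULE_TYPES = {
    (Stance.FORBID, Target.ACTION): "prohibition",
    (Stance.FORBID, Target.INACTION): "obligation",
    (Stance.ALLOW, Target.ACTION): "permission",
    (Stance.ALLOW, Target.INACTION): "exemption",
}


def classify(stance: Stance, target: Target) -> str:
    """Return the rule type for a (stance, target) pair."""
    return RULE_TYPES[(stance, target)]
```

For example, the loan case is `classify(Stance.FORBID, Target.INACTION)`, an obligation, and the spousal-evidence case is `classify(Stance.ALLOW, Target.INACTION)`, an exemption.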

Reviewing our Questions

1. What is security?
   - Three layers: static, dynamic, governance.
   - A new taxonomic structure for static security: (forbiddances, allowances) x (actions, inactions).
   - Four types of static security rules: prohibitions (including “guijus”), permissions, obligations, and exemptions.
2. What is software watermarking, and how is it implemented?
3. What is software obfuscation, and how is it implemented?

Defense in Depth for Software

1. Prevention:
   a) Deter attacks on forbiddances using obfuscation, encryption, watermarking, cryptographic hashes, or trustworthy computing.
   b) Deter attacks on allowances using replication or resilient algorithms.
2. Detection:
   a) Monitor subjects (user logs). Requires user ID: biometrics, ID tokens, or passwords.
   b) Monitor actions (execution logs, intrusion detectors). Requires code ID: cryptographic hashing, watermarking.
   c) Monitor objects (object logs). Requires object ID: hashing, watermarking.
3. Response:
   a) Ask for help: Set off an alarm (which may be silent – steganographic), then wait for an enforcement agent.
   b) Self-help: Self-destructive or self-repairing systems.

Note: “steganography” means “secret writing” – an invisible watermark.
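A minimal sketch of “code ID by cryptographic hashing” from the Detection step, using Python's standard `hashlib`. The function names and the allow-list are hypothetical; a real monitor would also need a trustworthy place to store the known identifiers.

```python
import hashlib


def code_id(code: bytes) -> str:
    """Identify a piece of code by the SHA-256 digest of its bytes."""
    return hashlib.sha256(code).hexdigest()


def is_known(code: bytes, allowed_ids: set) -> bool:
    """Detection check: was this exact code registered beforehand?"""
    return code_id(code) in allowed_ids


original = b"print('hello')\n"
tampered = b"print('hellp')\n"      # a one-byte modification
allowed = {code_id(original)}       # hypothetical allow-list
```

Here `is_known(original, allowed)` succeeds and `is_known(tampered, allowed)` fails, because any change to the bytes changes the digest. This is the fragile behaviour a hash gives by design, in contrast to a robust watermark.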

Software Watermarking

Key taxonomic questions:

- Where is the watermark embedded?
- How is the watermark embedded?
- When is the watermark embedded?
- Why is the watermark embedded?
- What are its desired properties?

Software Watermarking Systems

- An embedder E(P; W; k) → Pw embeds a message (the watermark) W into a program P using secret key k, yielding a watermarked program Pw.
- An extractor R(Pw; ...) → W extracts W from Pw.
  - In an invisible watermarking system, R (or a parameter) is a secret.
  - In visible watermarking, R is well-publicised (ideally obvious).
- The attack set A and goal G model the security threat.
  - For a robust watermark, the attacker’s goal is a false-negative extraction, usually by creating an attacked object a(Pw), with R(a(Pw); ...) ≠ W such that Pw is valuable.
  - For a fragile watermark, the attacker’s goal is a false-positive: R(a(Pw); ...) = W such that Pw ≠ P is valuable.
  - A protocol attack is a substitution of R’ for R, causing a false-negative or false-positive extraction.
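To make the E / R / a notation concrete, here is a deliberately weak toy system in Python. It is an assumption-laden sketch, not any published scheme: the program is a text string, the mark is stored in a comment line with a made-up `# wm:` prefix, and the key only masks the message with a SHA-256-derived keystream.

```python
import hashlib

MARK_PREFIX = "# wm:"  # hypothetical marker for this toy scheme


def _keystream(k: bytes, n: int) -> bytes:
    """Derive n pseudo-random bytes from key k (toy construction)."""
    out = b""
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(k + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:n]


def embed(P: str, W: bytes, k: bytes) -> str:
    """E(P; W; k) -> Pw: append the key-masked watermark as a comment."""
    masked = bytes(w ^ s for w, s in zip(W, _keystream(k, len(W))))
    return P + "\n" + MARK_PREFIX + masked.hex() + "\n"


def extract(Pw: str, k: bytes):
    """R(Pw; k) -> W, or None if no mark is found."""
    for line in Pw.splitlines():
        if line.startswith(MARK_PREFIX):
            masked = bytes.fromhex(line[len(MARK_PREFIX):])
            stream = _keystream(k, len(masked))
            return bytes(m ^ s for m, s in zip(masked, stream))
    return None


def attack_strip_comments(Pw: str) -> str:
    """a(Pw): remove comment lines, leaving the program's behaviour intact."""
    kept = [ln for ln in Pw.splitlines() if not ln.lstrip().startswith("#")]
    return "\n".join(kept) + "\n"
```

With `Pw = embed(P, W, k)`, `extract(Pw, k)` returns `W`, but `extract(attack_strip_comments(Pw), k)` returns `None`: a false-negative extraction on an object that is still just as valuable to the attacker. The mark is therefore not robust in the sense defined above.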

Where Software Watermarks are Embedded

- Static code watermarks are stored in the section of the executable that contains instructions.
- Static data watermarks are stored in other sections of the executable.
- Static watermarks are extracted without executing (or emulating) the code.
  - A watermark extractor is a special-purpose static analysis.
  - Extraction is inexpensive, but we don’t know of any robust static code watermarks. Attackers can easily modify the watermarked code to create an unwatermarked (false-negative) version.

Dynamic Watermarks

- Easter Eggs are revealed to any end-user who types a special input sequence.
- Other dynamic behaviour watermarks:
  - Execution Trace Watermarks are carried in the instruction execution sequence of a program, when it is given a special input sequence (possibly null).
  - Data Structure Watermarks are built by a program, when it is given a special input.
  - Data Value Watermarks are produced by a program on a surreptitious channel, when it is given a special input.

Easter Eggs

- The watermark is visible – if you know where to look!
- Not very robust, after the secret is published.
- See www.eeggs.com

Dynamic Data Structure Watermarks

- The embedder inserts code in the program, so that it creates a recognisable data structure when given specific input (the key).
- Details are given in our POPL’99 paper, and in two published patent applications.
  - Assigned to Auckland UniServices Ltd.
  - I would very much like to find licensed uses for this technology!
- Implemented at http://www.cs.arizona.edu/sandmark/ (2000 - )
- Experimental findings by Palsberg et al. (2001):
  - JavaWiz adds less than 10 kilobytes of code on average.
  - Embedding a watermark takes less than 20 seconds.
  - Watermarking increases a program’s execution time by less than 7%.
  - Watermark retrieval takes about 1 minute per megabyte of heap.

Thread-Based Watermarks

- A dynamic watermark is expressed in the thread-switching behaviour of a program, when given a specific input (the key).
  - The thread-switches are controlled by non-nested locks.
  - NZ Patent 533208, US Patent App 2005/0262490
  - Article in IH’04; Jas Nagra’s PhD thesis, 2006
- The embedder inserts tamper-proofing sequences which closely resemble the watermark sequences but which, if removed, will cause the program to behave incorrectly.
  - This is a “self-help” response mechanism.

SW Watermarking (Review of Taxonomic Questions)

- Where is the watermark embedded?
- How is the watermark embedded?
- When is the watermark embedded?
- Why is the watermark embedded?
- What are its desired properties?

Active Watermarks

- We can embed a watermark during a design step (“active watermarking”: Kahng et al., 2001).
  - IC designs may carry watermarks in place-route constraints.
  - Register assignments during compilation can encode a software watermark; however, such watermarks are insecure because an adversary can easily remove them.
- Most software watermarks are “passive”, i.e. inserted at or near the end of the design process.

Why Watermark Software?

- Invisible robust watermarks: useful for prohibition (of unlicensed use).
- Invisible fragile watermarks: useful for permission (of licensed uses).
- Visible robust watermarks: useful for assertion (of copyright or authorship).
- Visible fragile watermarks: useful for affirmation (of authenticity or validity).

A Fifth Function?

- Any watermark is useful for the transmission of information irrelevant to security (espionage, humour, …).
- Transmission Marks may involve security for other systems, in which case they can be categorised as Permissions, Prohibitions, etc.

![Our Functional Taxonomy for Watermarks [2002]](https://present5.com/presentation/d9af47c182922ba3eb46af3a5e9ddf8f/image-24.jpg)

Our Functional Taxonomy for Watermarks [2002]

But: there are no “assertions” and “affirmations” in our theory of static security!

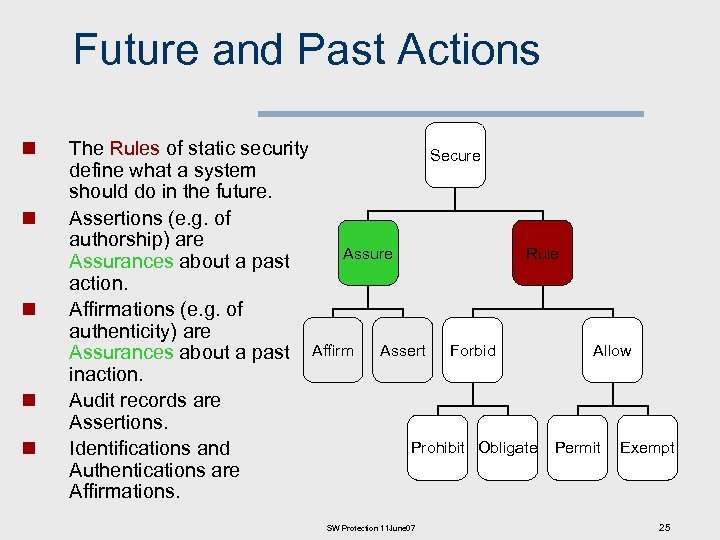

Future and Past Actions

- The Rules of static security define what a system should do in the future.
- Assertions (e.g. of authorship) are Assurances about a past action.
- Affirmations (e.g. of authenticity) are Assurances about a past inaction.
- Audit records are Assertions.
- Identifications and Authentications are Affirmations.

[Diagram: Secure splits into Assure (Affirm, Assert) and Rule; Rule splits into Forbid (Prohibit, Obligate) and Allow (Permit, Exempt).]

Reviewing our Questions

1. What is Security?
2. What is software watermarking, and how is it implemented?
3. What is software obfuscation, and how is it implemented?
4. How does software obfuscation compare with encryption? Is “perfect obfuscation” possible?

What is Obfuscation?

- Obfuscation is a semantics-preserving transformation of computer code that renders it more secure against confidentiality attacks.

What Secrets are in Software?

- Algorithms (so competitors or attackers can’t build similar functionality without redesigning from scratch).
- Constants, such as an encryption key (typically hidden in code that computes obscure functions of this constant).
- Internal function points, such as a license-control predicate “if (not licensed) exit()”.
- External interfaces (to deny access by attackers and competitors to an intentional “service entrance” or an unintentional “backdoor”).

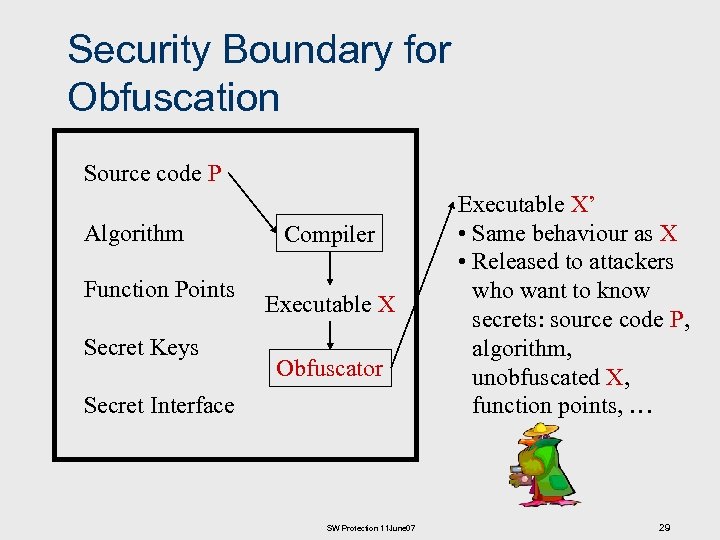

Security Boundary for Obfuscation

[Diagram: source code P, with its secrets (algorithm, function points, secret keys, secret interface), passes through a compiler to give executable X; an obfuscator transforms X into executable X’.]

- Executable X’ has the same behaviour as X.
- X’ is released to attackers who want to know secrets: source code P, algorithm, unobfuscated X, function points, …

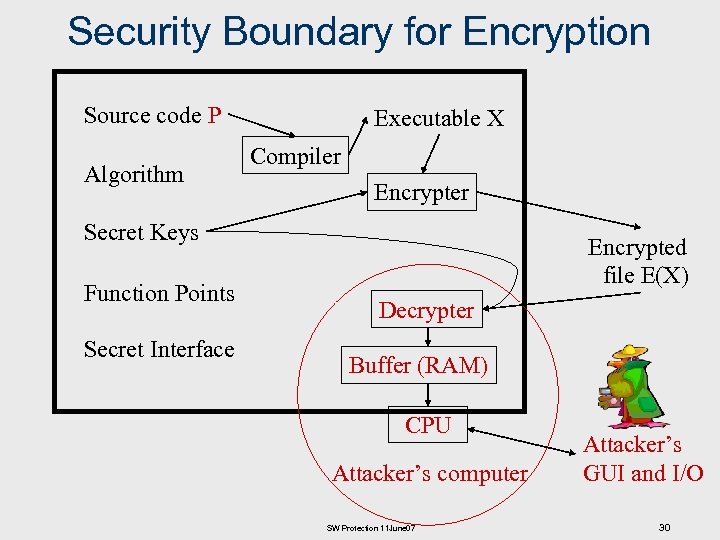

Security Boundary for Encryption

[Diagram: source code P, with its secrets (algorithm, secret keys, function points, secret interface), is compiled to executable X and encrypted to give the file E(X). On the attacker’s computer, a decrypter feeds a RAM buffer and the CPU, alongside the attacker’s GUI and I/O.]

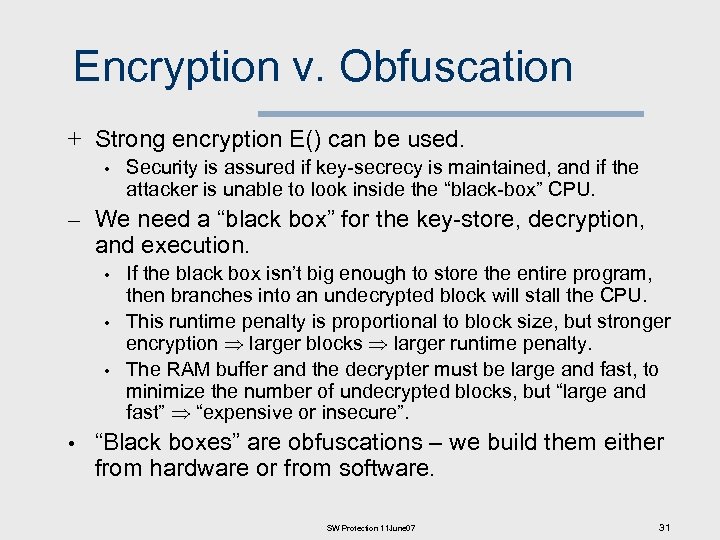

Encryption v. Obfuscation

- Advantage (+): Strong encryption E() can be used.
  - Security is assured if key-secrecy is maintained, and if the attacker is unable to look inside the “black-box” CPU.
- Disadvantage (–): We need a “black box” for the key-store, decryption, and execution.
  - If the black box isn’t big enough to store the entire program, then branches into an undecrypted block will stall the CPU. This runtime penalty is proportional to block size, but stronger encryption ⇒ larger blocks ⇒ larger runtime penalty.
  - The RAM buffer and the decrypter must be large and fast, to minimize the number of undecrypted blocks, but “large and fast” ⇒ “expensive or insecure”.
- “Black boxes” are obfuscations – we build them either from hardware or from software.

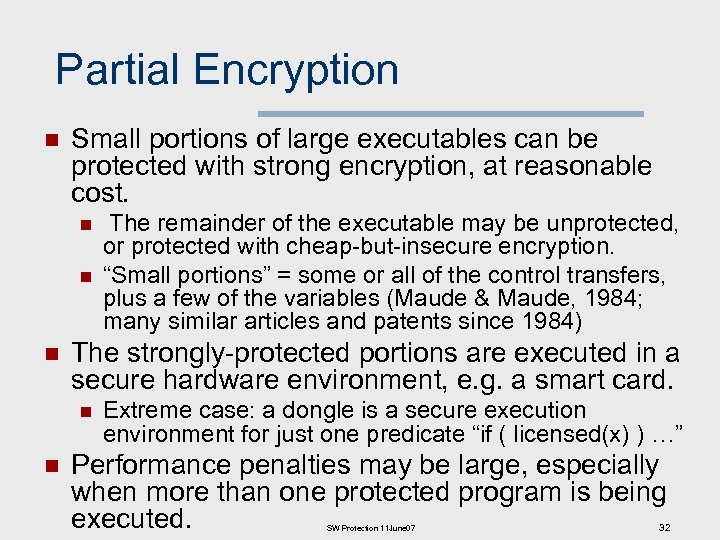

Partial Encryption

- Small portions of large executables can be protected with strong encryption, at reasonable cost.
  - The remainder of the executable may be unprotected, or protected with cheap-but-insecure encryption.
- The strongly-protected portions are executed in a secure hardware environment, e.g. a smart card.
  - “Small portions” = some or all of the control transfers, plus a few of the variables (Maude & Maude, 1984; many similar articles and patents since 1984).
  - Extreme case: a dongle is a secure execution environment for just one predicate “if ( licensed(x) ) …”
  - Performance penalties may be large, especially when more than one protected program is being executed.

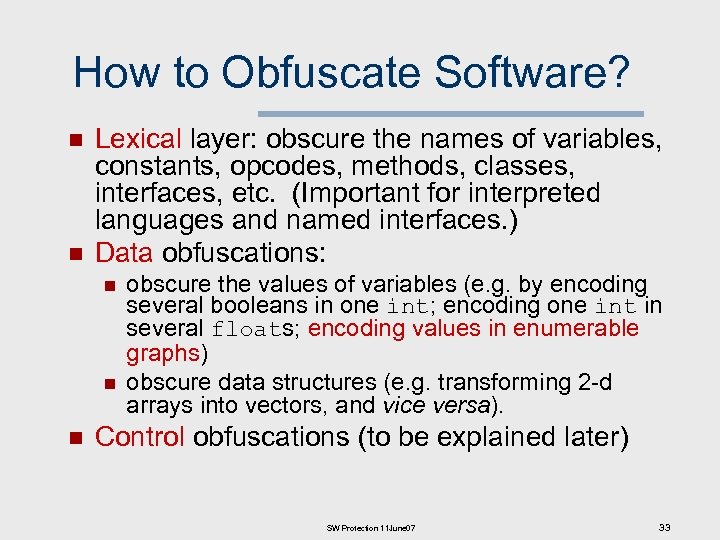

How to Obfuscate Software?

- Lexical layer: obscure the names of variables, constants, opcodes, methods, classes, interfaces, etc. (Important for interpreted languages and named interfaces.)
- Data obfuscations:
  - obscure the values of variables (e.g. by encoding several booleans in one int; encoding one int in several floats; encoding values in enumerable graphs)
  - obscure data structures (e.g. transforming 2-d arrays into vectors, and vice versa).
- Control obfuscations (to be explained later)
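Two of the data obfuscations named above are easy to show in miniature. The sketch below, with hypothetical helper names, packs several booleans into one int and stores a 2-d array as a vector; it illustrates the transformations only and makes no claim about how any particular obfuscator implements them.

```python
def pack_bools(flags):
    """Encode several booleans in one int (bit i holds flags[i])."""
    word = 0
    for i, flag in enumerate(flags):
        if flag:
            word |= 1 << i
    return word


def get_bool(word, i):
    """Decode boolean i from the packed int."""
    return bool((word >> i) & 1)


def flatten(matrix):
    """Transform a 2-d array into a vector, remembering the row width."""
    cols = len(matrix[0])
    return [x for row in matrix for x in row], cols


def get_cell(vec, cols, r, c):
    """Read element (r, c) of the original 2-d array from the vector."""
    return vec[r * cols + c]
```

For instance, `pack_bools([True, False, True])` gives `5`, and after `vec, cols = flatten([[1, 2, 3], [4, 5, 6]])` the call `get_cell(vec, cols, 1, 2)` returns `6`. In an obfuscated program the accessors would be inlined, so that no single function reveals the encoding.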

Attacks on Data Obfuscation

- An attacker may be able to discover the decoding function, by observing program behaviour immediately prior to output: print( decode( x ) ), where x is an obfuscated variable.
- An attacker may be able to discover the encoding function, by observing program behaviour immediately after input.
- A sufficiently clever human will eventually deobfuscate any code. Our goal is to frustrate an attacker who wants to automate the de-obfuscation process.
- More complex obfuscations are more difficult to deobfuscate, but they tend to degrade program efficiency and may enable pattern-matching attacks.

Cryptographic Obfuscations?

- Cloakware have patented an algebraic obfuscation on data, but it does not have a cryptographic secret key.
  - W Zhu, in my group, fixed a bug in their division algorithm.
- An ideal data obfuscator would have a cryptographic key that selects one of 2^64 encoding functions.
- Fundamental vulnerability: The encoding and decoding functions must be included in the obfuscated software. Otherwise the obfuscated variables cannot be read and written.
  - “White-box cryptography” is an obfuscated code that resists automated analysis, deterring adversaries who would extract a working implementation of the keyed functions or of the keys themselves.
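To illustrate what “a key that selects an encoding function” might look like, here is a sketch of a keyed affine encoding on 64-bit integers. It is an assumption for illustration only: it is not Cloakware's scheme, it is not cryptographically strong, and the function names are made up. It requires Python 3.8 or later for the modular inverse via `pow`.

```python
import hashlib

MODULUS = 1 << 64
MASK = MODULUS - 1


def derive(key: bytes):
    """Derive affine parameters (a, b) from the key; a is forced odd."""
    digest = hashlib.sha256(key).digest()
    a = int.from_bytes(digest[:8], "big") | 1   # odd => invertible mod 2**64
    b = int.from_bytes(digest[8:16], "big")
    return a, b


def encode(x: int, key: bytes) -> int:
    a, b = derive(key)
    return (a * x + b) & MASK


def decode(y: int, key: bytes) -> int:
    a, b = derive(key)
    a_inv = pow(a, -1, MODULUS)                 # modular inverse of a
    return ((y - b) * a_inv) & MASK


def add_encoded(y1: int, y2: int, key: bytes) -> int:
    """Add two encoded values without decoding them first.

    (a*x1 + b) + (a*x2 + b) - b == a*(x1 + x2) + b  (mod 2**64)
    """
    _, b = derive(key)
    return (y1 + y2 - b) & MASK
```

The sketch also shows the fundamental vulnerability stated above: `decode` and the key material have to ship with the program, so an attacker who locates them can undo the encoding.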

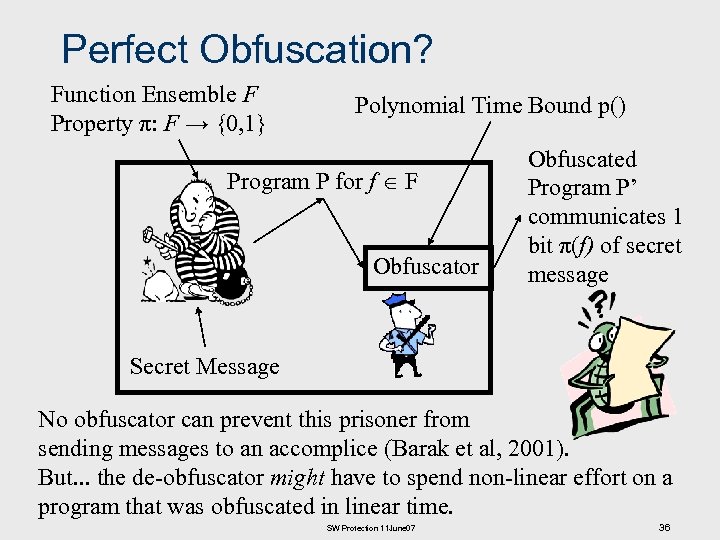

Perfect Obfuscation?

[Diagram: given a function ensemble F, a property π: F → {0, 1}, and a polynomial time bound p(), a program P for f ∈ F passes through the obfuscator; the obfuscated program P’ communicates 1 bit π(f) of a secret message.]

- No obfuscator can prevent this prisoner from sending messages to an accomplice (Barak et al., 2001).
- But... the de-obfuscator might have to spend non-linear effort on a program that was obfuscated in linear time.

Practical Data Obfuscation

- Barak et al. have proved that “perfect obfuscation” is impossible, but “practical obfuscation” is still possible.
- We cannot build a “black box” (as required to implement an encryption) without using obfuscation somewhere – either in our hardware, or in software, or in both.
- In practical obfuscation, our goal is to find a cost-effective way of preventing our adversaries from learning our secret for some period of time.
  - This places a constraint on system design – we must be able to re-establish security after we lose control of our secret.
  - “Technical security” is insufficient as a response mechanism.
  - Practical systems rely on legal, moral, and financial controls to mitigate damage and to restore security after a successful attack.

Control Obfuscations

- Inline procedures
- Outline procedures
- Obscure method inheritances (e.g. refactor classes)
- Opaque predicates:
  - Dead code (which may trigger a tamper-response mechanism if it is executed!)
  - Variant (duplicate) code
- Obscure control flow (“flattened” or irreducible)
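As one concrete instance of “flattened” control flow, the sketch below rewrites a simple loop as a dispatch loop over a state variable. The example function (Euclid's gcd) and the state numbering are arbitrary choices for illustration; production flatteners work on compiled code and usually obscure the state updates as well.

```python
def gcd_plain(a: int, b: int) -> int:
    """Original, structured control flow."""
    while b != 0:
        a, b = b, a % b
    return a


def gcd_flat(a: int, b: int) -> int:
    """Same computation, flattened into a single dispatch loop."""
    state = 0
    while True:
        if state == 0:            # loop test
            state = 1 if b != 0 else 2
        elif state == 1:          # loop body
            a, b = b, a % b
            state = 0
        elif state == 2:          # exit block
            return a
```

Both functions return `6` for the inputs `48` and `18`. In the flattened version every basic block has the same predecessor (the dispatcher), so the original loop structure is no longer visible in the control-flow graph.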

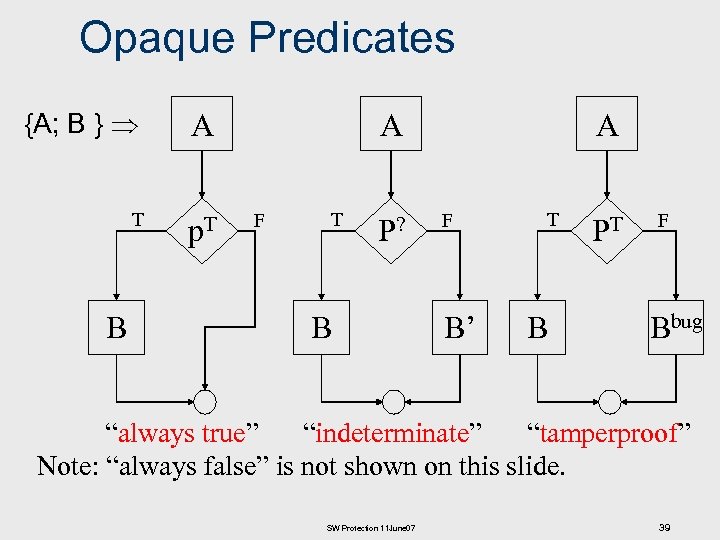

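Opaque Predicates

[Diagram: the sequence {A; B} rewritten in three ways. “Always true”: after A, a predicate P^T guards B. “Indeterminate”: a predicate P? selects between B and an equivalent variant B’. “Tamperproof”: a predicate P^T whose false branch leads to a buggy variant B_bug.]

Note: “always false” is not shown on this slide.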
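A minimal sketch of the “always true” and “tamperproof” patterns, using a well-known arithmetic identity as the opaque predicate. The function names and the deliberately wrong dead branch are illustrative assumptions; a predicate this simple would not resist a serious analyser.

```python
def opaquely_true(x: int) -> bool:
    """Always True: x * (x + 1) is a product of consecutive integers, so even."""
    return (x * (x + 1)) % 2 == 0


def original(n: int) -> int:
    total = sum(range(n))     # block A
    return total * 2          # block B


def obfuscated(n: int, x: int) -> int:
    total = sum(range(n))     # block A
    if opaquely_true(x):      # P^T: the outcome is known only to the obfuscator
        return total * 2      # block B
    else:
        return total * 3      # B_bug: dead code, wrong on purpose
```

For any `x`, `obfuscated(10, x)` equals `original(10)`, which is `90`. An attacker who cannot prove the predicate constant must treat both branches as live, and one who patches the branch the wrong way ends up executing the buggy variant.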

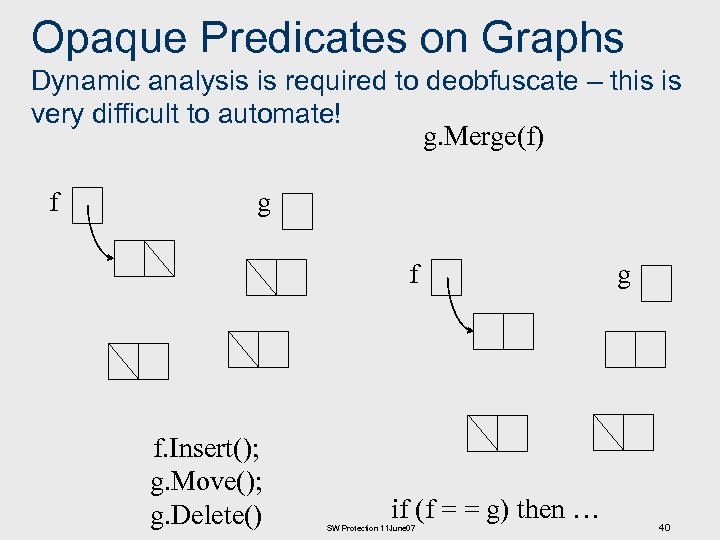

Opaque Predicates on Graphs

- Dynamic analysis is required to deobfuscate – this is very difficult to automate!

[Diagram: two pointers f and g into dynamically built graphs, updated by operations such as f.Insert(); g.Move(); g.Delete(); g.Merge(f); the opaque predicate is the test if (f == g) then …]
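The aliasing idea can be sketched with two pointers that wander over two disjoint cyclic lists. This is a simplified assumption-based example: it includes only insert and move operations (no merge or delete), and all names are hypothetical.

```python
class Node:
    def __init__(self):
        self.next = self      # a one-node cycle


def insert(p: Node) -> None:
    """Splice a fresh node into p's cycle, just after p."""
    n = Node()
    n.next = p.next
    p.next = n


def move(p: Node) -> Node:
    """Advance the pointer one step around its own cycle."""
    return p.next


def run(steps) -> bool:
    f, g = Node(), Node()     # two disjoint cycles, never merged here
    for s in steps:           # in real code, driven by program data
        if s % 3 == 0:
            insert(f)
        elif s % 3 == 1:
            insert(g)
        else:
            f, g = move(f), move(g)
    return f is g             # opaque predicate: always False
```

Because `f` and `g` never leave their own cycles, `f is g` is false for every sequence of steps, yet establishing that statically requires reasoning about heap shape and aliasing rather than simple constant propagation.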

History of Software Obfuscation

- “Hand-crafted” obfuscations: IOCCC (Int’l Obfuscated C Code Contest, 1984 - ); a few earlier examples.
- InstallShield has used obfuscation since its first product (1987).
- Automated lexical obfuscations since 1996: Crema, HoseMocha, …
- Automated control obfuscations since 1996: Monden.
- Opaque predicates since 1997: Collberg, Thomborson, Low.
- Commercial vendors since 1997: Cloakware, Microsoft (in their compiler).
- Commercial users since 1997: Adobe DocBox, Skype.
- Obfuscation is still a small field, with just a handful of companies selling obfuscation products and services. There are only a few non-trivial published results, and a few patents.

Summary

- A new taxonomy of static security:
  - (forbiddance, allowance) x (action, inaction) =
  - (prohibition, permission, obligation, exemption).
- Progress toward a general theory for hierarchical and peering secure systems.
  - (past, future) x (P−, P+) x (action, inaction) ??
  - Existing theories of P− security for future actions:
    - Bell-LaPadula, for confidentiality
    - Biba, for integrity
    - Clark-Wilson, for guijuity
- An overview of software security techniques, focussing on watermarking and obfuscation.
