6fafd954da21fccee8039c72da9fe3fa.ppt

- Number of slides: 108

Metamorphic Testing Techniques to Detect Defects in Applications without Test Oracles Christian Murphy Thesis Defense April 12, 2010

Overview

- Software testing is important!
- Certain types of applications are particularly hard to test because there is no “test oracle”
  - Machine Learning, Discrete Event Simulation, Optimization, Scientific Computing, etc.
- Even when there is no oracle, it is possible to detect defects if properties of the software are violated
- My research introduces and evaluates new techniques for testing such “non-testable programs” [Weyuker, Computer Journal ’82]

Motivating Example: Machine Learning

Motivating Example: Simulation

Problem Statement

- Partial oracles may exist for a limited subset of the input domain in applications such as Machine Learning, Discrete Event Simulation, Scientific Computing, Optimization, etc.
- Obvious errors (e.g., crashes) can be detected with certain inputs or testing techniques
- However, it is difficult to detect subtle computational defects in applications without test oracles in the general case

What do I mean by “defect”?

- Deviation of the implementation from the specification
- Violation of a sound property of the software
- “Discrete localized” calculation errors
  - Off-by-one
  - Incorrect sentinel values for loops
  - Wrong comparison or mathematical operator
- Misinterpretation of specification
  - Parts of input domain not handled
  - Incorrect assumptions made about input

Observation

- Many programs without oracles have properties such that certain changes to the input yield predictable changes to the output
- We can detect defects in these programs by looking for any violations of these “metamorphic properties”
- This is known as “metamorphic testing”
  - [T. Y. Chen et al., Info. & Soft. Tech. vol. 4, 2002]

Research Goals

- Facilitate the way that metamorphic testing is used in practice
- Develop new testing techniques based on metamorphic testing
- Demonstrate the effectiveness of metamorphic testing techniques

Hypotheses

- For programs that do not have a test oracle, an automated approach to metamorphic testing is more effective at detecting defects than other approaches
- An approach that conducts function-level metamorphic testing in the context of a running application will further increase the effectiveness
- It is feasible to continue this type of testing in the deployment environment, with minimal impact on the end user

Contributions

1. A set of guidelines to help identify metamorphic properties
2. New empirical studies comparing the effectiveness of metamorphic testing to other approaches
3. An approach for detecting defects in non-deterministic applications called Heuristic Metamorphic Testing
4. A new testing technique called Metamorphic Runtime Checking based on function-level metamorphic properties
5. A generalized technique for testing in the deployment environment called In Vivo Testing

Outline

- Background
  - Related Work
  - Metamorphic Testing
- Empirical Studies
- Metamorphic Runtime Checking
- Future Work & Conclusion

![Other Approaches](https://present5.com/presentation/6fafd954da21fccee8039c72da9fe3fa/image-12.jpg)

Other Approaches [Baresi & Young, 2001]

- Formal specifications
  - A complete specification is essentially a test oracle
- Embedded assertions
  - Can check that the software behaves as expected
- Algebraic properties
  - Used to generate test cases for abstract datatypes
- Trace checking & Log file analysis
  - Analyze intermediate results and sequence of executions

![Metamorphic Testing](https://present5.com/presentation/6fafd954da21fccee8039c72da9fe3fa/image-13.jpg)

Metamorphic Testing [Chen et al., 2002]

- Initial test case x is run through f to produce f(x)
- A transformation function t, based on metamorphic properties of f, turns x into the new test case t(x)
- The new test case t(x) is run through f to produce f(t(x))
- f(x) and f(t(x)) are “pseudo-oracles”
- If new test case output f(t(x)) is as expected, it is not necessarily correct
- However, if f(t(x)) is not as expected, either f(x) or f(t(x)) (or both!) is wrong

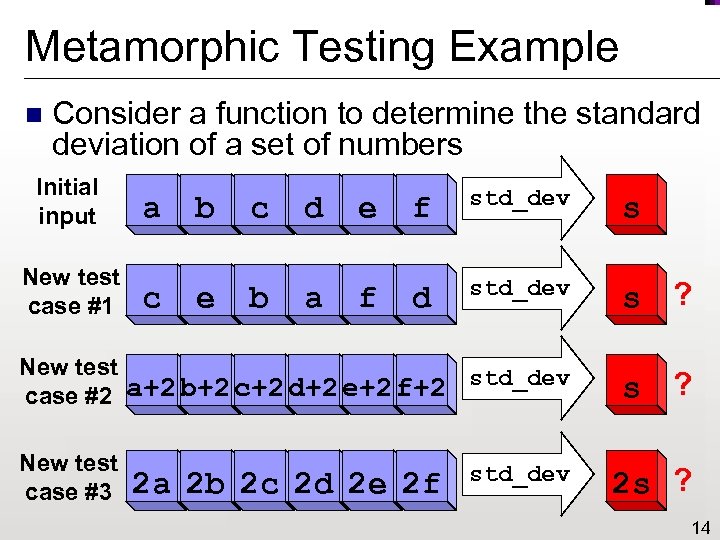

Metamorphic Testing Example

- Consider a function to determine the standard deviation of a set of numbers

| Test case | Input to std_dev | Expected output |
| --- | --- | --- |
| Initial input | a, b, c, d, e, f | s |
| New test case #1 | c, e, b, a, f, d | s ? |
| New test case #2 | a+2, b+2, c+2, d+2, e+2, f+2 | s ? |
| New test case #3 | 2a, 2b, 2c, 2d, 2e, 2f | 2s ? |
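The three follow-up test cases on this slide can be sketched in Java roughly as follows. This is only an illustration: the class name `StdDevMetamorphic`, the helper names, the choice of population standard deviation, and the tolerance are all assumptions, not part of the original deck.

```java
import java.util.Arrays;

// Illustrative sketch only: names and tolerance are assumptions, not from the slides.
final class StdDevMetamorphic {

    // Population standard deviation; stands in for the function under test.
    static double stdDev(double[] a) {
        double mean = Arrays.stream(a).average().orElse(0.0);
        double sumSq = 0.0;
        for (double v : a) {
            sumSq += (v - mean) * (v - mean);
        }
        return Math.sqrt(sumSq / a.length);
    }

    // Floating-point results are compared with a small relative tolerance.
    static boolean approxEqual(double x, double y) {
        double scale = Math.max(1.0, Math.max(Math.abs(x), Math.abs(y)));
        return Math.abs(x - y) <= 1e-9 * scale;
    }

    // New test case #1: reordering the input should leave the output unchanged.
    static boolean permutationHolds(double[] a) {
        double[] p = a.clone();
        for (int i = 0, j = p.length - 1; i < j; i++, j--) { // reversal is one simple permutation
            double tmp = p[i];
            p[i] = p[j];
            p[j] = tmp;
        }
        return approxEqual(stdDev(p), stdDev(a));
    }

    // New test case #2: adding a constant to every element should leave the output unchanged.
    static boolean additionHolds(double[] a, double c) {
        double[] shifted = Arrays.stream(a).map(v -> v + c).toArray();
        return approxEqual(stdDev(shifted), stdDev(a));
    }

    // New test case #3: multiplying every element by k should scale the output by |k|.
    static boolean multiplicationHolds(double[] a, double k) {
        double[] scaled = Arrays.stream(a).map(v -> v * k).toArray();
        return approxEqual(stdDev(scaled), Math.abs(k) * stdDev(a));
    }

    public static void main(String[] args) {
        double[] x = {2, 4, 4, 4, 5, 5, 7, 9};
        boolean allHold = permutationHolds(x) && additionHolds(x, 2) && multiplicationHolds(x, 2);
        System.out.println("All three properties hold: " + allHold);
    }
}
```

None of the checks needs to know the correct value of `stdDev(x)`; each only relates two outputs of the same implementation, which is what makes the approach usable without an oracle.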

Outline

- Background
  - Related Work
  - Metamorphic Testing
- Empirical Studies
- Metamorphic Runtime Checking
- Future Work & Conclusion

Empirical Study

- Is metamorphic testing more effective than other approaches in detecting defects in applications without test oracles?
- Approaches investigated
  - Metamorphic Testing
    - Using metamorphic properties of the entire application
  - Runtime Assertion Checking
    - Using Daikon-detected program invariants
  - Partial Oracle
    - Simple inputs for which correct output can easily be determined

Applications Investigated

- Machine Learning
  - C4.5: decision tree classifier
  - MartiRank: ranking
  - Support Vector Machines (SVM): vector-based classifier
  - PAYL: anomaly-based intrusion detection system
- Discrete Event Simulation
  - JSim: used in simulating hospital ER
- Information Retrieval
  - Lucene: Apache framework’s text search engine
- Optimization
  - gaffitter: genetic algorithm approach to bin-packing problem

Methodology

- Mutation testing was used to seed defects into each application
  - Comparison operators were reversed
  - Math operators were changed
  - Off-by-one errors were introduced
- For each program, we created multiple versions, each with exactly one mutation
- We ignored mutants that yielded outputs that were obviously wrong, caused crashes, etc.
- Effectiveness is determined by measuring what percentage of the mutants were “killed”
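To make the "percentage of mutants killed" measure concrete, here is a small hypothetical sketch in Java. The `mean` function, the two hand-written mutants, and the single permutation property are invented for illustration; the study itself seeded mutations into the real applications listed earlier.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.ToDoubleFunction;

// Hypothetical illustration of mutant "killing"; none of these functions come from the study.
final class MutantKillDemo {

    // Original (assumed correct) function.
    static double mean(double[] a) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) sum += a[i];
        return sum / a.length;
    }

    // Mutant 1: off-by-one in the loop bound (last element is skipped).
    static double mutantLoopBound(double[] a) {
        double sum = 0.0;
        for (int i = 0; i < a.length - 1; i++) sum += a[i];
        return sum / a.length;
    }

    // Mutant 2: off-by-one in the divisor.
    static double mutantDivisor(double[] a) {
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) sum += a[i];
        return sum / (a.length + 1);
    }

    // Permutation used by the metamorphic property: rotate left by one position.
    static double[] rotate(double[] a) {
        double[] r = new double[a.length];
        for (int i = 0; i < a.length; i++) r[i] = a[(i + 1) % a.length];
        return r;
    }

    // A mutant is "killed" when the property f(x) == f(permute(x)) is violated.
    static boolean killed(ToDoubleFunction<double[]> f, double[] input) {
        return Math.abs(f.applyAsDouble(input) - f.applyAsDouble(rotate(input))) > 1e-9;
    }

    // Effectiveness: percentage of mutants killed by the property.
    static double killRate(List<ToDoubleFunction<double[]>> mutants, double[] input) {
        long killedCount = mutants.stream().filter(m -> killed(m, input)).count();
        return 100.0 * killedCount / mutants.size();
    }

    public static void main(String[] args) {
        double[] input = {1, 2, 3, 10};
        List<ToDoubleFunction<double[]>> mutants = new ArrayList<>();
        mutants.add(MutantKillDemo::mutantLoopBound);
        mutants.add(MutantKillDemo::mutantDivisor);
        System.out.println(killRate(mutants, input) + "% of mutants killed");
    }
}
```

In this toy setup the loop-bound mutant is caught because rotating the input changes which element gets dropped, while the divisor mutant survives because it is equally wrong on both inputs. A surviving mutant of this kind is what motivates identifying additional properties.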

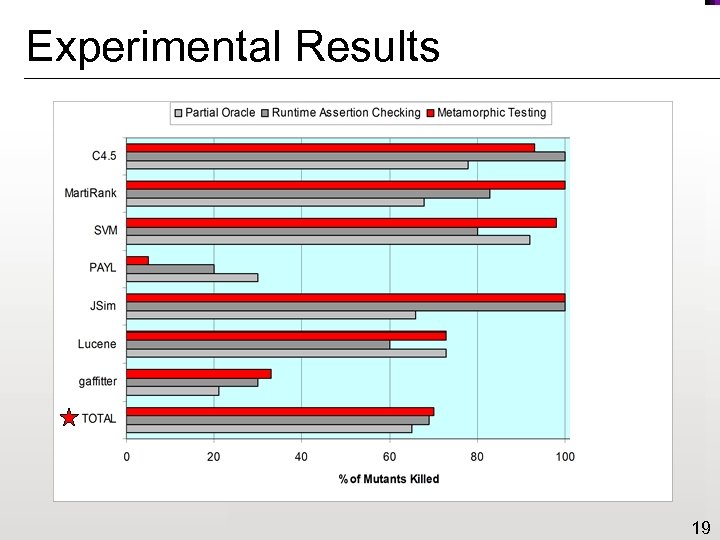

Experimental Results

Analysis of Results

- Assertions are good for checking bounds and relationships but not for changes to values
- Metamorphic testing is particularly good for detecting errors in loop conditions
- Metamorphic testing was not very effective for PAYL (5%) and gaffitter (33%)
  - fewer properties identified
  - defects had little impact on output

Outline

- Background
  - Related Work
  - Metamorphic Testing
- Empirical Studies
- Metamorphic Runtime Checking
- Future Work & Conclusion

Metamorphic Runtime Checking

- Results of previous study revealed limitations of scope and robustness in metamorphic testing
- What if we consider the metamorphic properties of individual functions and check those properties as the entire program is running?
- A combination of metamorphic testing and runtime assertion checking

Metamorphic Runtime Checking

- Tester specifies the metamorphic properties of individual functions using a special notation in the code (based on JML)
- Pre-processor instruments code with corresponding metamorphic tests
- Tester runs entire program as normal (e.g., to perform system tests)
- Violation of any property reveals a defect

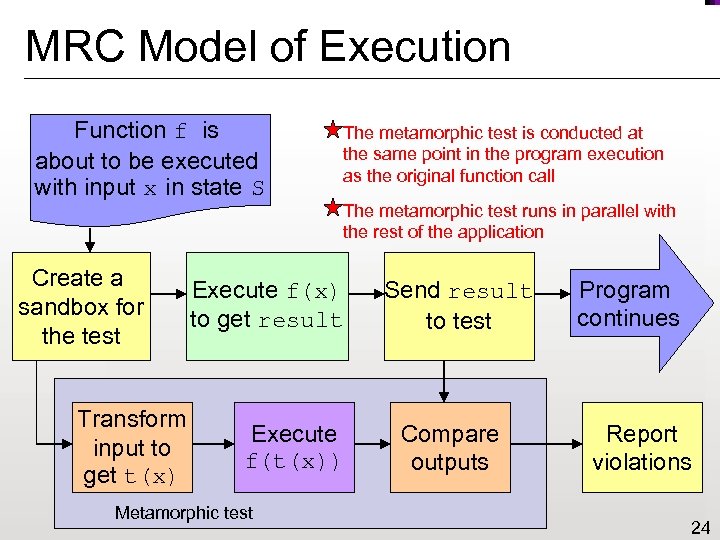

MRC Model of Execution

- Function f is about to be executed with input x in state S
- Create a sandbox for the test
- In the application:
  - Execute f(x) to get result
  - Send result to test
  - Program continues
- In the metamorphic test:
  - Transform input to get t(x)
  - Execute f(t(x))
  - Compare outputs
  - Report violations
- The metamorphic test is conducted at the same point in the program execution as the original function call
- The metamorphic test runs in parallel with the rest of the application
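The execution model can be approximated in plain Java as shown below. This is a sketch under stated assumptions rather than the actual framework: a background task operating on a private copy of the input stands in for the sandbox, and the class `MrcSketch`, its fields, and `awaitTests` are hypothetical names.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.ToDoubleFunction;

// Sketch only: a background task on a copied input approximates the sandbox.
final class MrcSketch {
    final List<String> violations = new CopyOnWriteArrayList<>();
    private final List<CompletableFuture<Void>> pending = new CopyOnWriteArrayList<>();
    private final ToDoubleFunction<double[]> impl; // the function f under test

    MrcSketch(ToDoubleFunction<double[]> impl) {
        this.impl = impl;
    }

    // Reference implementation used in the demo (population standard deviation).
    static double stdDevImpl(double[] a) {
        double mean = 0.0;
        for (double v : a) mean += v;
        mean /= a.length;
        double sumSq = 0.0;
        for (double v : a) sumSq += (v - mean) * (v - mean);
        return Math.sqrt(sumSq / a.length);
    }

    // Transformation t: multiply every element by k, leaving the original untouched.
    static double[] multiply(double[] a, double k) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] * k;
        return out;
    }

    // Wrapper that plays the role of the instrumented function.
    double stdDev(double[] a) {
        double result = impl.applyAsDouble(a);   // execute f(x) to get result
        double[] snapshot = a.clone();           // private copy of the input for the test
        pending.add(CompletableFuture.runAsync(() -> {
            double followUp = impl.applyAsDouble(multiply(snapshot, 2)); // execute f(t(x))
            if (Math.abs(followUp - 2 * result) > 1e-9) {                // compare outputs
                violations.add("std_dev(multiply(A, 2)) != result * 2"); // report violation
            }
        }));
        return result;                           // program continues without waiting
    }

    // Only needed so a demo or test can wait for the background checks to finish.
    void awaitTests() {
        for (CompletableFuture<Void> f : pending) f.join();
    }

    public static void main(String[] args) {
        MrcSketch checker = new MrcSketch(MrcSketch::stdDevImpl);
        checker.stdDev(new double[] {2, 4, 4, 4, 5, 5, 7, 9});
        checker.awaitTests();
        System.out.println("Violations: " + checker.violations);
    }
}
```

A thread sharing the heap is a much weaker isolation boundary than a separate process, so this sketch is only safe when f has no side effects beyond its arguments.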

Empirical Study

- Can Metamorphic Runtime Checking detect defects not found by system-level metamorphic testing?
- Same mutants used in previous study
  - 29% were not found by metamorphic testing
- Metamorphic properties identified at function level using suggested guidelines

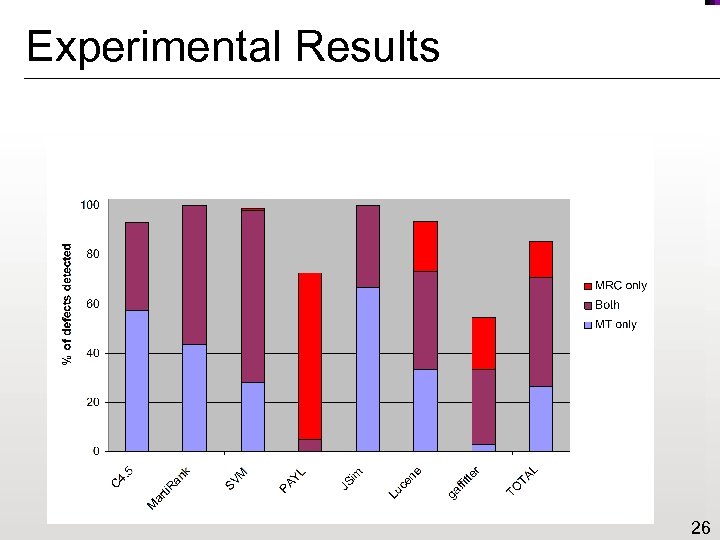

Experimental Results

Analysis of Results

- Scope: Function-level testing allowed us to:
  - identify additional metamorphic properties
  - execute more tests
- Robustness: Metamorphic testing “inside” the application detected subtle defects that did not have much effect on the overall program output

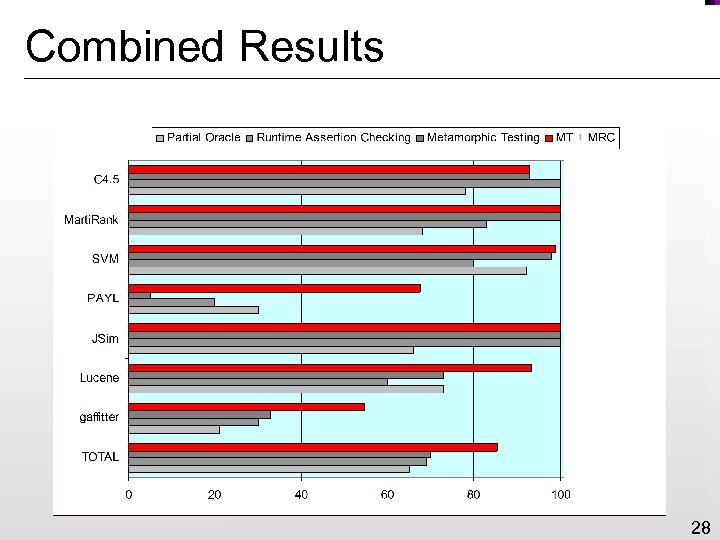

Combined Results

Outline

- Background
  - Related Work
  - Metamorphic Testing
- Empirical Studies
- Metamorphic Runtime Checking
- Future Work & Conclusion

Results

- Demonstrated that metamorphic testing advances the state of the art in detecting defects in applications without test oracles
- Proved that Metamorphic Runtime Checking will reveal defects not found by using system-level properties
- Showed that it is feasible to continue this type of testing in the deployment environment, with minimal impact on the end user

Short-Term Opportunities

- Automatic detection of metamorphic properties
  - Using dynamic and/or static techniques
- Fault localization
  - Once a defect has been detected, figure out where it occurred and how to fix it
- Implementation issues
  - Reducing overhead
  - Handling external databases, network traffic, etc.

Long-Term Directions

- Testing of multi-process or distributed applications in these domains
- Collaborative defect detection and notification
- Investigate the impact on the software development processes used in the domains of non-testable programs

Contributions & Accomplishments

1. A set of metamorphic testing guidelines
2. New empirical studies
3. Heuristic Metamorphic Testing
4. Metamorphic Runtime Checking
5. In Vivo Testing

Publications: [Murphy, Shen, Kaiser; ISSTA ’09], [Xie, Ho, Murphy, Kaiser, Xu, Chen; QSIC ’09], [Murphy, Kaiser, Hu, Wu; SEKE ’08], [Murphy, Shen, Kaiser; ICST ’09], [Murphy, Kaiser, Vo, Chu; ICST ’09], [Murphy, Vaughan, Ilahi, Kaiser; AST ’10]

Thank you!

Backup Slides: Motivation

Assessment of Quality

- 1994: Hatton et al. pointed out a “disturbing” number of defects due to calculation errors in scientific computing software [TSE vol. 20]
- 2007: Hatton reports that “many scientific results are corrupted, perhaps fatally so, by undiscovered mistakes in the software used to calculate and present those results” [Computer vol. 40]

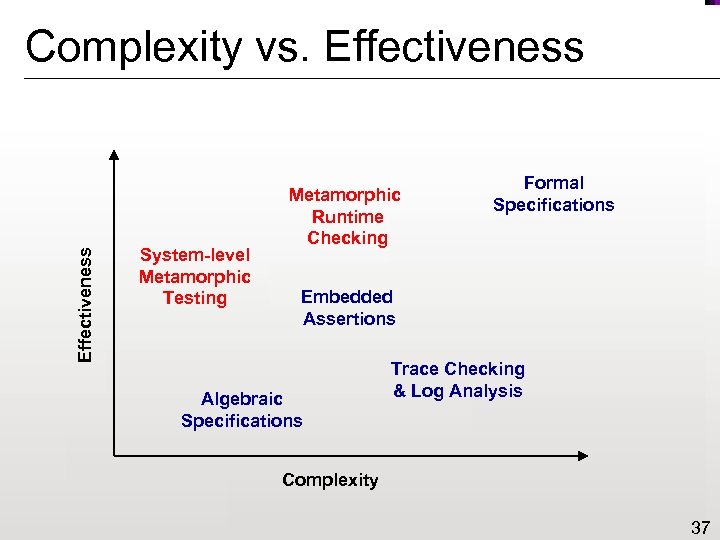

Complexity vs. Effectiveness

Chart with axes Complexity and Effectiveness, comparing: System-level Metamorphic Testing, Metamorphic Runtime Checking, Formal Specifications, Embedded Assertions, Algebraic Specifications, Trace Checking & Log Analysis.

Metamorphic Properties

Categories of Metamorphic Properties

- Additive: Increase (or decrease) numerical values by a constant
- Multiplicative: Multiply numerical values by a constant
- Permutative: Randomly permute the order of elements in a set
- Invertive: Negate the elements in a set
- Inclusive: Add a new element to a set
- Exclusive: Remove an element from a set
- Compositional: Compose a set
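As a rough illustration, the first six categories can be written as input transformations over a `double[]`. The class and method names below are assumptions made for this sketch; the compositional category is left out because the slide does not define the composition operation.

```java
import java.util.Arrays;
import java.util.Random;

// Illustrative input transformations, one per category; all names are assumptions.
final class Transformations {

    // Additive: increase (or decrease) every value by a constant.
    static double[] additive(double[] a, double c) {
        return Arrays.stream(a).map(v -> v + c).toArray();
    }

    // Multiplicative: multiply every value by a constant.
    static double[] multiplicative(double[] a, double k) {
        return Arrays.stream(a).map(v -> v * k).toArray();
    }

    // Permutative: randomly permute the elements (Fisher-Yates shuffle, seeded for repeatability).
    static double[] permutative(double[] a, long seed) {
        double[] p = a.clone();
        Random rnd = new Random(seed);
        for (int i = p.length - 1; i > 0; i--) {
            int j = rnd.nextInt(i + 1);
            double tmp = p[i];
            p[i] = p[j];
            p[j] = tmp;
        }
        return p;
    }

    // Invertive: negate every element.
    static double[] invertive(double[] a) {
        return Arrays.stream(a).map(v -> -v).toArray();
    }

    // Inclusive: add a new element.
    static double[] inclusive(double[] a, double element) {
        double[] out = Arrays.copyOf(a, a.length + 1);
        out[a.length] = element;
        return out;
    }

    // Exclusive: remove the element at the given index.
    static double[] exclusive(double[] a, int index) {
        double[] out = new double[a.length - 1];
        System.arraycopy(a, 0, out, 0, index);
        System.arraycopy(a, index + 1, out, index, a.length - index - 1);
        return out;
    }

    public static void main(String[] args) {
        double[] a = {1, 2, 3};
        System.out.println(Arrays.toString(additive(a, 2)));
        System.out.println(Arrays.toString(inclusive(a, 9)));
        System.out.println(Arrays.toString(exclusive(a, 0)));
    }
}
```

Each transformation returns a new array, so the original test input stays available for computing f(x) alongside f(t(x)).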

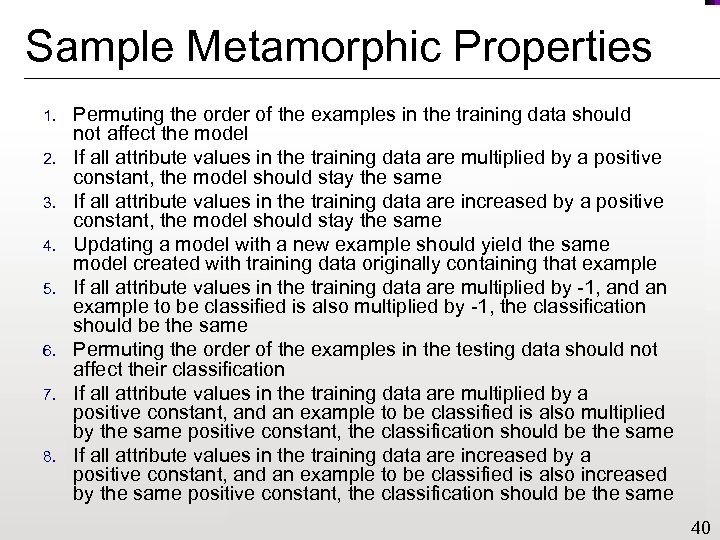

Sample Metamorphic Properties

1. Permuting the order of the examples in the training data should not affect the model
2. If all attribute values in the training data are multiplied by a positive constant, the model should stay the same
3. If all attribute values in the training data are increased by a positive constant, the model should stay the same
4. Updating a model with a new example should yield the same model created with training data originally containing that example
5. If all attribute values in the training data are multiplied by -1, and an example to be classified is also multiplied by -1, the classification should be the same
6. Permuting the order of the examples in the testing data should not affect their classification
7. If all attribute values in the training data are multiplied by a positive constant, and an example to be classified is also multiplied by the same positive constant, the classification should be the same
8. If all attribute values in the training data are increased by a positive constant, and an example to be classified is also increased by the same positive constant, the classification should be the same

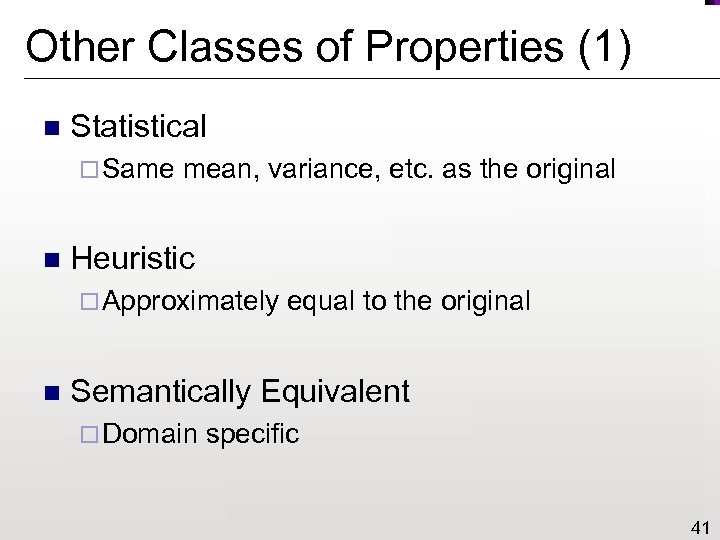

Other Classes of Properties (1)

- Statistical
  - Same mean, variance, etc. as the original
- Heuristic
  - Approximately equal to the original
- Semantically Equivalent
  - Domain specific

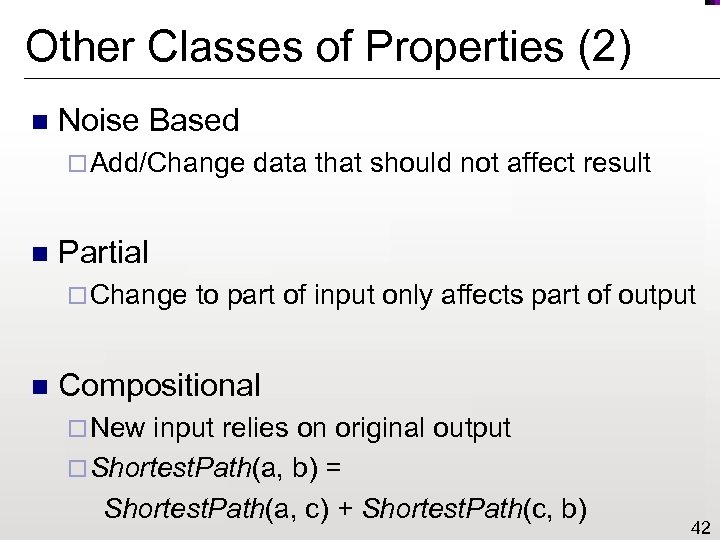

Other Classes of Properties (2)

- Noise Based
  - Add/Change data that should not affect result
- Partial
  - Change to part of input only affects part of output
- Compositional
  - New input relies on original output
  - ShortestPath(a, b) = ShortestPath(a, c) + ShortestPath(c, b)
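The compositional ShortestPath property can be checked as sketched below. Note that the equality holds when c lies on a shortest path from a to b (for an arbitrary c the left side can be smaller), which fits the idea that the new input is derived from the original output. The Floyd-Warshall implementation, the class name, and the sample graph are assumptions chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of the compositional property; the implementation and graph are illustrative assumptions.
final class ShortestPathProperty {
    static final double INF = Double.POSITIVE_INFINITY;
    private final double[][] dist; // dist[i][j]: length of a shortest path from i to j
    private final int[][] next;    // next[i][j]: first hop on that path, or -1 if unreachable

    // w[i][j] is the edge weight from i to j, or INF when there is no edge.
    ShortestPathProperty(double[][] w) {
        int n = w.length;
        dist = new double[n][n];
        next = new int[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                dist[i][j] = (i == j) ? 0.0 : w[i][j];
                next[i][j] = (dist[i][j] < INF) ? j : -1;
            }
        }
        // Floyd-Warshall: allow intermediate vertices 0..k in turn.
        for (int k = 0; k < n; k++) {
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    if (dist[i][k] + dist[k][j] < dist[i][j]) {
                        dist[i][j] = dist[i][k] + dist[k][j];
                        next[i][j] = next[i][k];
                    }
                }
            }
        }
    }

    double shortestPath(int a, int b) {
        return dist[a][b];
    }

    // Vertices along one shortest path from a to b (empty if b is unreachable).
    List<Integer> path(int a, int b) {
        List<Integer> p = new ArrayList<>();
        if (next[a][b] == -1) return p;
        p.add(a);
        while (a != b) {
            a = next[a][b];
            p.add(a);
        }
        return p;
    }

    // For every c taken from the original output path, check
    // ShortestPath(a, b) == ShortestPath(a, c) + ShortestPath(c, b).
    boolean compositionHolds(int a, int b) {
        for (int c : path(a, b)) {
            double composed = shortestPath(a, c) + shortestPath(c, b);
            if (Math.abs(shortestPath(a, b) - composed) > 1e-9) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        double[][] w = {
            {INF, 1, 5, INF},
            {INF, INF, 2, INF},
            {INF, INF, INF, 1},
            {INF, INF, INF, INF}
        };
        ShortestPathProperty g = new ShortestPathProperty(w);
        System.out.println(g.path(0, 3) + " holds: " + g.compositionHolds(0, 3));
    }
}
```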

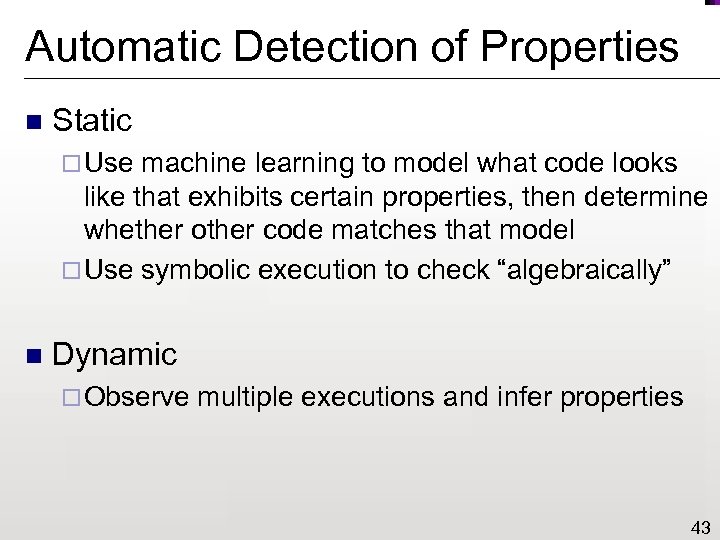

Automatic Detection of Properties

- Static
  - Use machine learning to model what code looks like that exhibits certain properties, then determine whether other code matches that model
  - Use symbolic execution to check “algebraically”
- Dynamic
  - Observe multiple executions and infer properties

Automated Metamorphic Testing

Automated Metamorphic Testing

- Tester specifies the application’s metamorphic properties
- Test framework does the rest:
  - Transform inputs
  - Execute program with each input
  - Compare outputs according to specification
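The three framework steps (transform inputs, execute the program, compare outputs) could be organized along the following lines. This is a hypothetical in-process harness written for illustration; `MetamorphicHarness`, `Property`, and the demo functions are invented names and do not describe the actual framework's interface.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.BiPredicate;
import java.util.function.Function;

// Hypothetical harness; not the interface of the framework described in the deck.
final class MetamorphicHarness {

    // A property pairs an input transformation with the relation expected between outputs.
    static final class Property<I, O> {
        final String name;
        final Function<I, I> transform;
        final BiPredicate<O, O> relation; // (original output, follow-up output) -> holds?

        Property(String name, Function<I, I> transform, BiPredicate<O, O> relation) {
            this.name = name;
            this.transform = transform;
            this.relation = relation;
        }
    }

    // Runs the program on the original and on each transformed input; returns violated property names.
    static <I, O> List<String> run(Function<I, O> program, I input, List<Property<I, O>> properties) {
        List<String> violated = new ArrayList<>();
        O original = program.apply(input);
        for (Property<I, O> p : properties) {
            O followUp = program.apply(p.transform.apply(input)); // transform, then execute
            if (!p.relation.test(original, followUp)) {           // compare per specification
                violated.add(p.name);
            }
        }
        return violated;
    }

    // Demo program under test: maximum of a list.
    static Integer maxOf(List<Integer> xs) {
        return Collections.max(xs);
    }

    // Deliberately defective variant: returns the first element instead of the maximum.
    static Integer firstElement(List<Integer> xs) {
        return xs.get(0);
    }

    // Two demo properties for a "maximum" function.
    static List<Property<List<Integer>, Integer>> demoProperties() {
        List<Property<List<Integer>, Integer>> props = new ArrayList<>();
        props.add(new Property<List<Integer>, Integer>("reverse", xs -> {
            List<Integer> r = new ArrayList<>(xs);
            Collections.reverse(r);
            return r;
        }, (orig, next) -> orig.equals(next)));
        props.add(new Property<List<Integer>, Integer>("add 10", xs -> {
            List<Integer> r = new ArrayList<>();
            for (int x : xs) r.add(x + 10);
            return r;
        }, (orig, next) -> next == orig + 10));
        return props;
    }

    public static void main(String[] args) {
        List<Integer> input = List.of(3, 1, 2);
        System.out.println("maxOf violations: " + run(MetamorphicHarness::maxOf, input, demoProperties()));
        System.out.println("firstElement violations: " + run(MetamorphicHarness::firstElement, input, demoProperties()));
    }
}
```

The tester's only job here is to fill in `demoProperties()`; the loop in `run` is the part a framework would automate.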

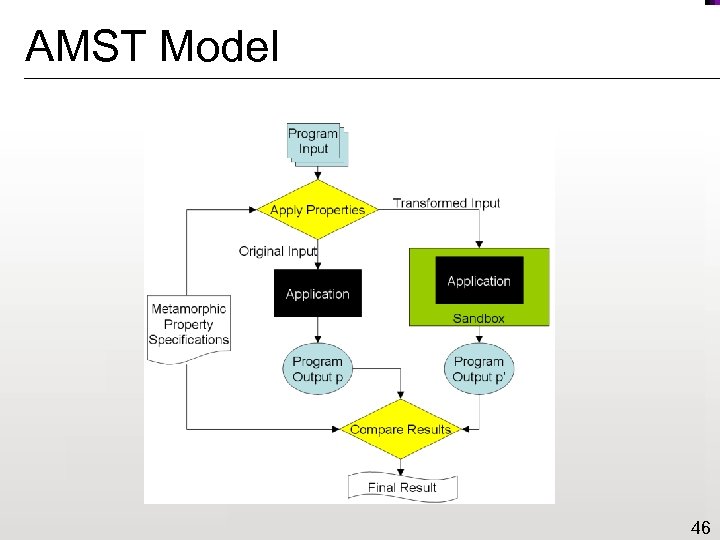

AMST Model

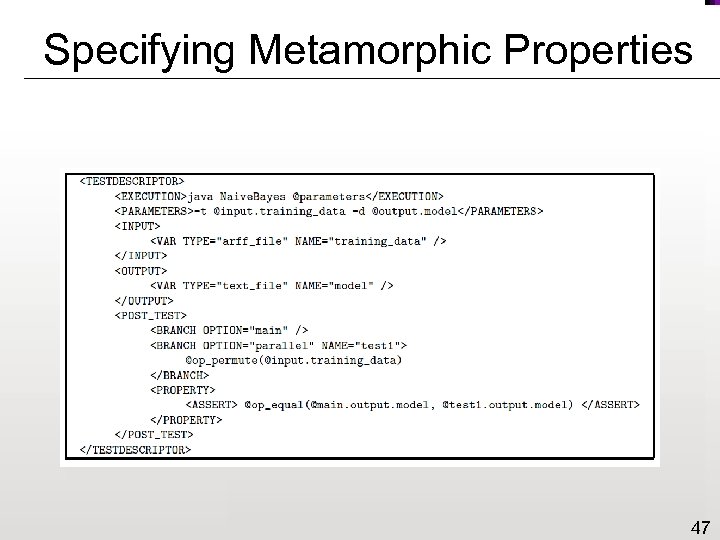

Specifying Metamorphic Properties

Heuristic Metamorphic Testing

Statistical Metamorphic Testing

- Introduced by Guderlei & Mayer in 2007
- The application is run multiple times with the same input to get a mean value μ0 and variance σ0
- Metamorphic properties are applied
- The application is run multiple times with the new input to get a mean value μ1 and variance σ1
- If the means are not statistically similar, then the property is considered violated

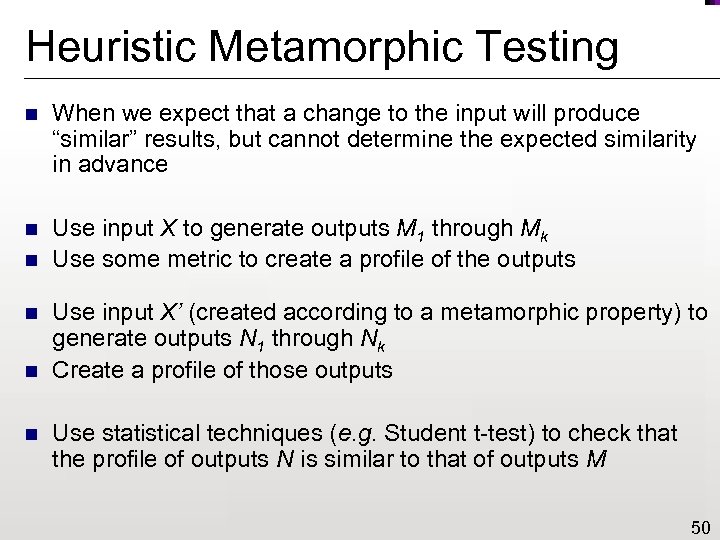

Heuristic Metamorphic Testing

- When we expect that a change to the input will produce “similar” results, but cannot determine the expected similarity in advance
- Use input X to generate outputs M1 through Mk
- Use some metric to create a profile of the outputs
- Use input X’ (created according to a metamorphic property) to generate outputs N1 through Nk
- Create a profile of those outputs
- Use statistical techniques (e.g., Student’s t-test) to check that the profile of outputs N is similar to that of outputs M
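The final comparison step might look like the sketch below, which computes a pooled two-sample Student t statistic over two profiles of numeric metric values. The class name, the sample profiles, and the use of a caller-supplied critical value (looked up from a t table for the chosen significance level and k1 + k2 - 2 degrees of freedom) are assumptions for illustration.

```java
// Sketch of comparing two output profiles with a pooled two-sample Student t statistic.
final class ProfileComparison {

    static double mean(double[] v) {
        double sum = 0.0;
        for (double x : v) sum += x;
        return sum / v.length;
    }

    // Unbiased sample variance (divides by n - 1).
    static double sampleVariance(double[] v) {
        double m = mean(v);
        double sumSq = 0.0;
        for (double x : v) sumSq += (x - m) * (x - m);
        return sumSq / (v.length - 1);
    }

    // t = (mean(M) - mean(N)) / (s_p * sqrt(1/k1 + 1/k2)), with pooled variance s_p^2.
    static double studentT(double[] m, double[] n) {
        int k1 = m.length;
        int k2 = n.length;
        double pooledVar = ((k1 - 1) * sampleVariance(m) + (k2 - 1) * sampleVariance(n)) / (k1 + k2 - 2);
        double denom = Math.sqrt(pooledVar) * Math.sqrt(1.0 / k1 + 1.0 / k2);
        double diff = mean(m) - mean(n);
        if (denom == 0.0) {
            // No variation at all in either profile: identical means are similar, anything else is not.
            return diff == 0.0 ? 0.0 : Math.copySign(Double.POSITIVE_INFINITY, diff);
        }
        return diff / denom;
    }

    // Profiles count as similar when |t| does not exceed the caller-supplied critical value.
    static boolean similar(double[] m, double[] n, double criticalValue) {
        return Math.abs(studentT(m, n)) <= criticalValue;
    }

    public static void main(String[] args) {
        double[] profileM = {10, 11, 9, 10, 10};      // metric values for outputs M1..Mk
        double[] profileN = {10, 10, 11, 9, 10};      // metric values for outputs N1..Nk
        double[] profileShifted = {15, 16, 14, 15, 15};
        double critical = 2.306;                      // two-sided, alpha = 0.05, 8 degrees of freedom
        System.out.println(similar(profileM, profileN, critical));
        System.out.println(similar(profileM, profileShifted, critical));
    }
}
```

The choice of metric that turns each output into a number is the domain-specific part and is not shown; the statistic only sees the resulting profiles.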

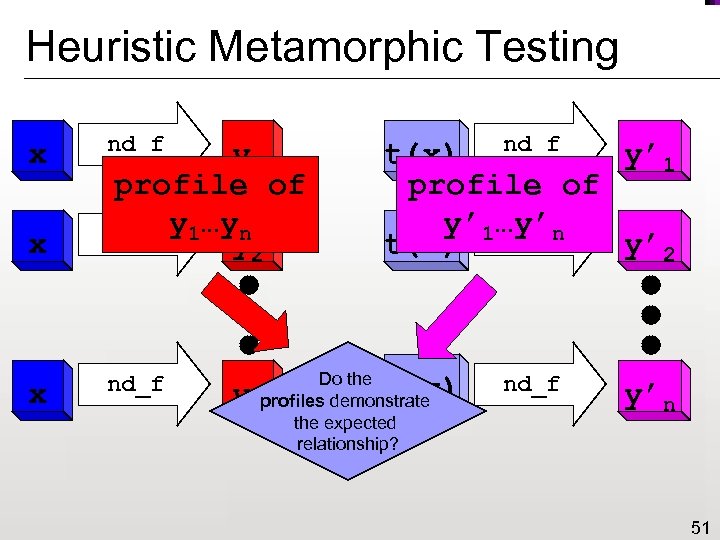

Heuristic Metamorphic Testing

- Run nd_f on x repeatedly to get outputs y1…yn, and build a profile of y1…yn
- Run nd_f on t(x) repeatedly to get outputs y’1…y’n, and build a profile of y’1…y’n
- Do the profiles demonstrate the expected relationship?

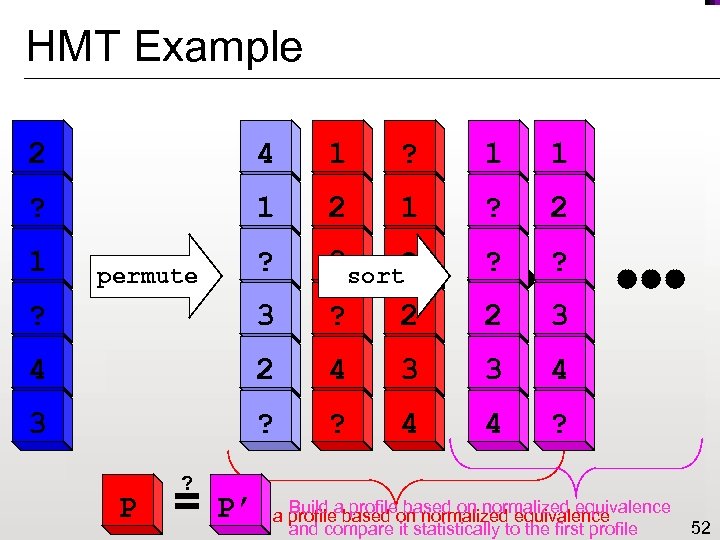

HMT Example

- The input is sorted to produce output P; the permuted input is sorted to produce output P’ (some positions are unknown, shown as “?”)
- Is P = P’?
- Build a profile based on normalized equivalence
- Build a profile based on normalized equivalence and compare it statistically to the first profile

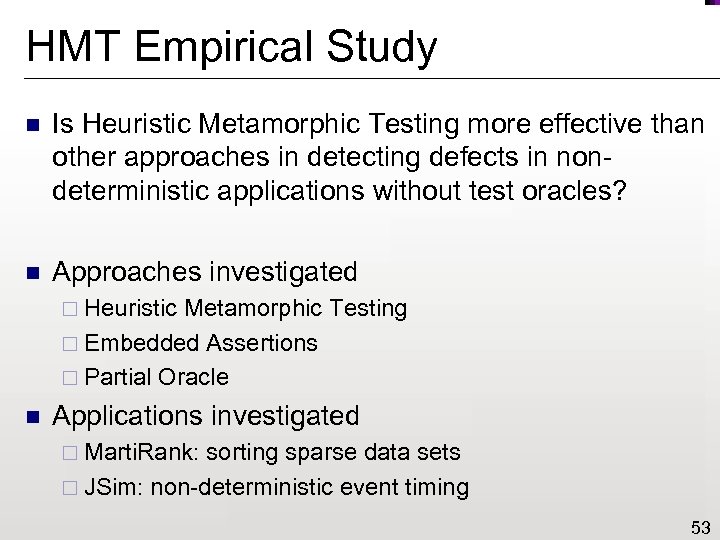

HMT Empirical Study

- Is Heuristic Metamorphic Testing more effective than other approaches in detecting defects in non-deterministic applications without test oracles?
- Approaches investigated
  - Heuristic Metamorphic Testing
  - Embedded Assertions
  - Partial Oracle
- Applications investigated
  - MartiRank: sorting sparse data sets
  - JSim: non-deterministic event timing

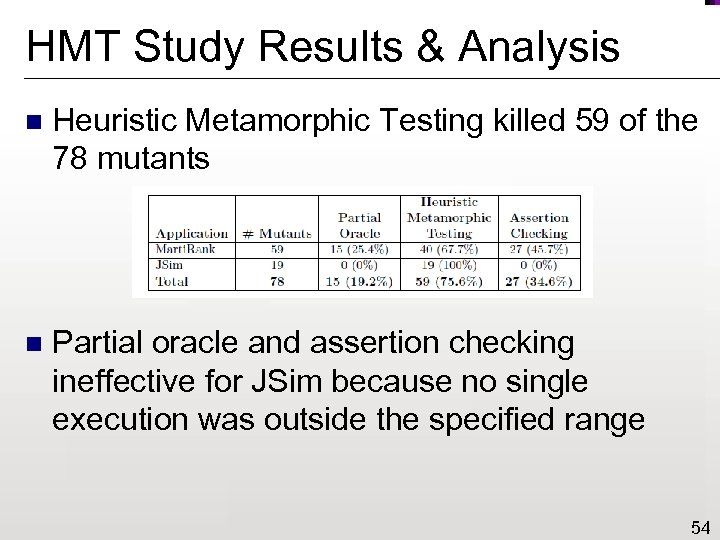

HMT Study Results & Analysis

- Heuristic Metamorphic Testing killed 59 of the 78 mutants
- Partial oracle and assertion checking were ineffective for JSim because no single execution was outside the specified range

Metamorphic Runtime Checking

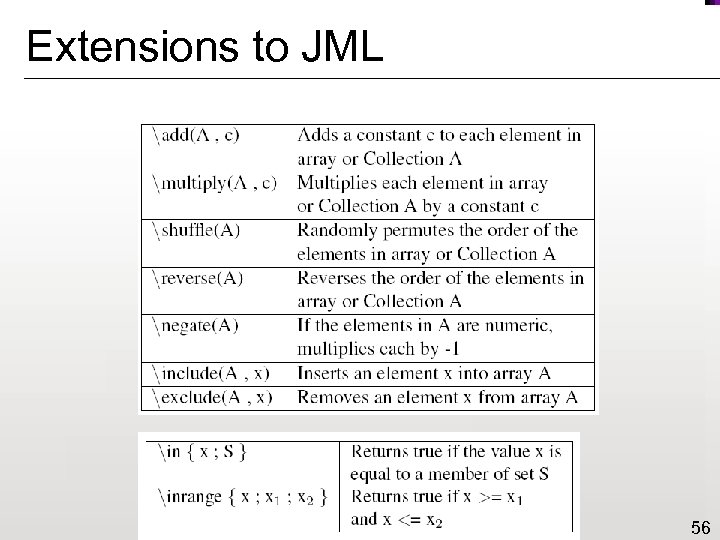

Extensions to JML

Creating Test Functions

    /*@
      @meta std_dev(multiply(A, 2)) == result * 2
     */
    public double __std_dev(double[] A) { ... }

    protected boolean __MRCtest0_std_dev(double[] A, double result) {
        return Columbus.approximatelyEqualTo(
            __std_dev(Columbus.multiply(A, 2)), result * 2);
    }

![Instrumentation](https://present5.com/presentation/6fafd954da21fccee8039c72da9fe3fa/image-58.jpg)

Instrumentation

    public double std_dev(double[] A) {
        // call original function and save result
        double result = __std_dev(A);

        // create sandbox
        int pid = Columbus.createSandbox();

        // program continues as normal
        if (pid != 0)
            return result;
        else {
            // run test in child process
            if (!__MRCtest0_std_dev(A, result))
                Columbus.fail(); // handle failure
            Columbus.exit(); // clean up
        }
    }

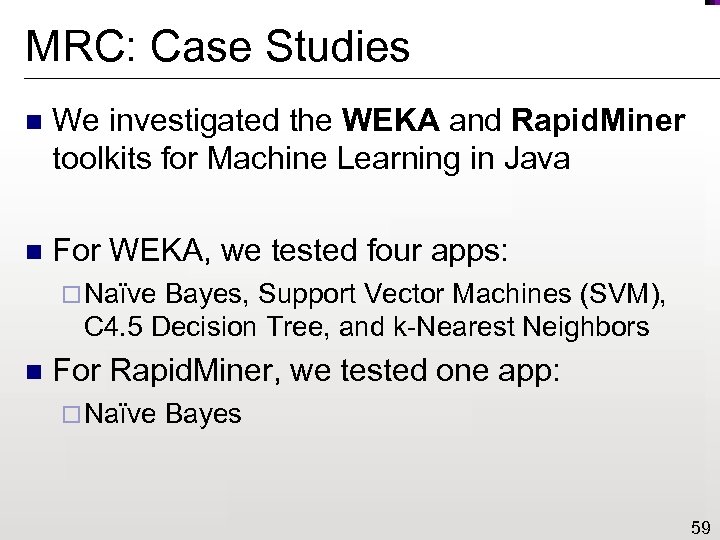

MRC: Case Studies
- We investigated the WEKA and RapidMiner toolkits for Machine Learning in Java
- For WEKA, we tested four apps:
  - Naïve Bayes, Support Vector Machines (SVM), C4.5 Decision Tree, and k-Nearest Neighbors
- For RapidMiner, we tested one app:
  - Naïve Bayes

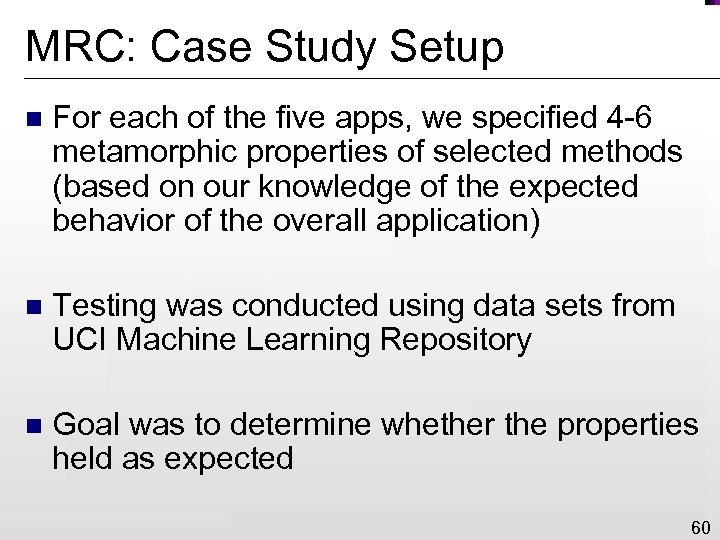

MRC: Case Study Setup
- For each of the five apps, we specified 4-6 metamorphic properties of selected methods (based on our knowledge of the expected behavior of the overall application)
- Testing was conducted using data sets from the UCI Machine Learning Repository
- Goal was to determine whether the properties held as expected

MRC: Case Study Findings
- Discovered defects in WEKA k-NN and WEKA Naïve Bayes related to modifying the machine learning “model”
  - This was the result of a variable not being updated appropriately
- Discovered a defect in RapidMiner Naïve Bayes related to determining confidence
  - There was an error in the calculation

Metamorphic Testing Experimental Study

Approaches Not Investigated
- Formal specification
  - Issues related to completeness
  - Prev. work converted specifications to invariants
- Algebraic properties
  - Not appropriate at system-level
  - Automatic detection only supported in Java
- Log/trace file analysis
  - Need more detailed knowledge of implementation
- Pseudo-oracles
  - None appropriate for applications investigated

Methodology: Metamorphic Testing
- Each variant (containing one mutation) acted as a pseudo-oracle for itself:
  - Program was run to produce an output with the original input dataset
  - Metamorphic properties were applied to create new input datasets
  - Program was run on the new inputs to create new outputs
  - If the outputs were not as expected, the mutant had been killed (i.e. the defect had been detected)
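The four steps above can be sketched as a small harness. This is an illustration under stated assumptions, not the tooling used in the study: `MetamorphicHarnessSketch`, the toy `sum` program, its seeded mutant, and the "doubling" property are all hypothetical.

```java
import java.util.Arrays;
import java.util.function.BiPredicate;
import java.util.function.Function;
import java.util.function.UnaryOperator;

/** Hypothetical harness: a program variant acts as a pseudo-oracle for itself. */
final class MetamorphicHarnessSketch {

    /** Returns true if the expected relation between the two outputs is violated (mutant killed). */
    static <I, O> boolean killed(Function<I, O> program, I input,
                                 UnaryOperator<I> transform, BiPredicate<O, O> expected) {
        O original = program.apply(input);                   // run on the original input
        O followUp = program.apply(transform.apply(input));  // run on the derived input
        return !expected.test(original, followUp);
    }

    /** Toy program under test. */
    static int sum(int[] a) {
        int total = 0;
        for (int x : a) total += x;
        return total;
    }

    /** Seeded defect: the accumulator starts at 1 instead of 0. */
    static int mutantSum(int[] a) {
        int total = 1;
        for (int x : a) total += x;
        return total;
    }

    /** Input transformation: double every element. */
    static int[] doubled(int[] a) {
        return Arrays.stream(a).map(x -> 2 * x).toArray();
    }

    /** Property: doubling every element should double the sum. */
    static boolean killedByDoubling(Function<int[], Integer> program, int[] input) {
        return killed(program, input, MetamorphicHarnessSketch::doubled, (o, f) -> f == 2 * o);
    }

    public static void main(String[] args) {
        int[] data = {3, 1, 4};
        System.out.println("original killed: " + killedByDoubling(MetamorphicHarnessSketch::sum, data));
        System.out.println("mutant killed:   " + killedByDoubling(MetamorphicHarnessSketch::mutantSum, data));
    }
}
```

No expected output value is needed for either run; only the relation between the two outputs is checked.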

Methodology: Partial Oracle
- Data sets were chosen so that the correct output could be calculated by hand
- These data sets were typically smaller than the ones used for other approaches
- To ensure fairness, the data sets were selected so that the line coverage was approximately the same for each approach

Methodology: Runtime Assertion Checking
- Daikon was used to detect program invariants in the “gold standard” implementation
- Because Daikon can generate spurious invariants, programs were run with a variety of inputs, and obviously spurious invariants were discarded
- Invariants were then checked at runtime

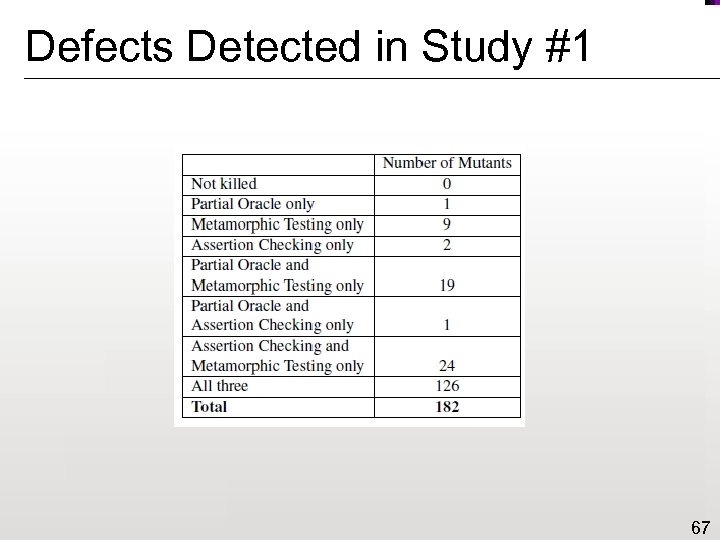

Defects Detected in Study #1

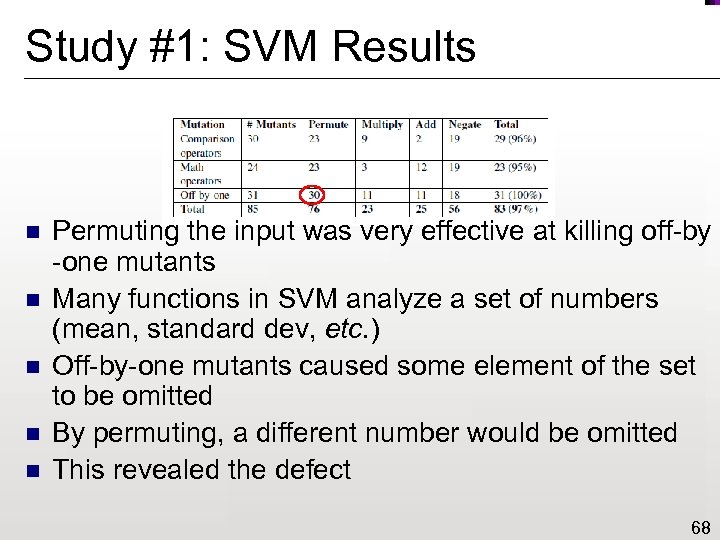

Study #1: SVM Results
- Permuting the input was very effective at killing off-by-one mutants
- Many functions in SVM analyze a set of numbers (mean, standard dev, etc.)
- Off-by-one mutants caused some element of the set to be omitted
- By permuting, a different number would be omitted
- This revealed the defect
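A toy version of this effect, assuming a hypothetical `mean` function with a seeded off-by-one mutant (this is not SVM code from the study): the mutant omits the last element, so a permutation that moves a different value into the last position changes its output.

```java
import java.util.function.ToDoubleFunction;

/** Hypothetical sketch: permutation reveals an off-by-one defect in a mean calculation. */
final class PermutationMrSketch {

    /** Correct mean of all elements. */
    static double mean(double[] a) {
        double total = 0.0;
        for (int i = 0; i < a.length; i++) total += a[i];
        return total / a.length;
    }

    /** Seeded off-by-one defect: the loop bound omits the last element. */
    static double mutantMean(double[] a) {
        double total = 0.0;
        for (int i = 0; i < a.length - 1; i++) total += a[i];
        return total / a.length;
    }

    /** Deterministic permutation: moves the last element to the front. */
    static double[] rotate(double[] a) {
        double[] r = new double[a.length];
        for (int i = 0; i < a.length; i++) r[(i + 1) % a.length] = a[i];
        return r;
    }

    /** Property: permuting the input should not change the output. True means the property is violated. */
    static boolean revealedByPermutation(ToDoubleFunction<double[]> f, double[] data) {
        return Math.abs(f.applyAsDouble(data) - f.applyAsDouble(rotate(data))) > 1e-9;
    }

    public static void main(String[] args) {
        double[] data = {1, 2, 3, 10};
        System.out.println("correct mean flagged: " + revealedByPermutation(PermutationMrSketch::mean, data));
        System.out.println("mutant mean flagged:  " + revealedByPermutation(PermutationMrSketch::mutantMean, data));
    }
}
```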

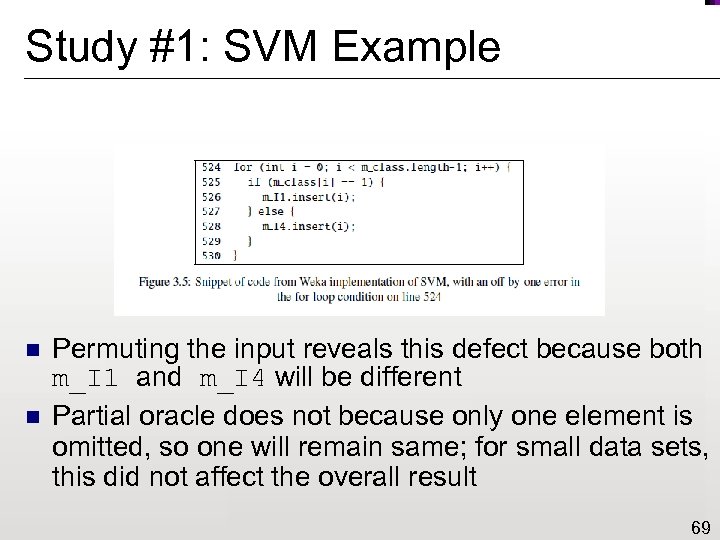

Study #1: SVM Example
- Permuting the input reveals this defect because both m_I1 and m_I4 will be different
- Partial oracle does not because only one element is omitted, so one will remain the same; for small data sets, this did not affect the overall result

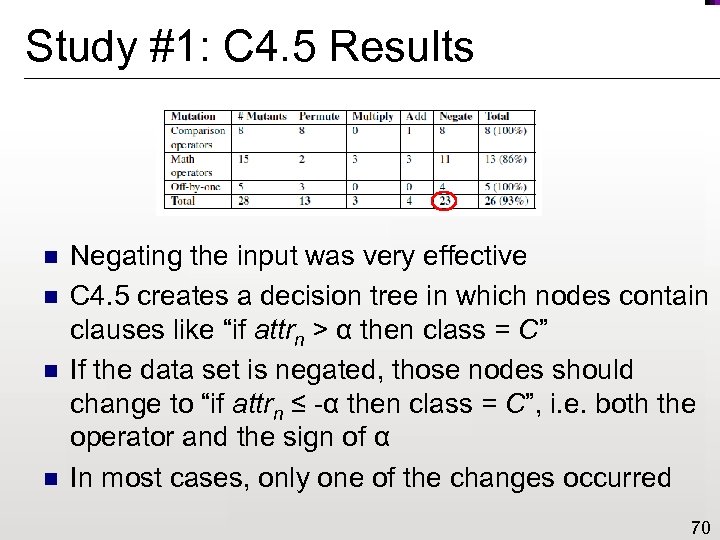

Study #1: C4.5 Results
- Negating the input was very effective
- C4.5 creates a decision tree in which nodes contain clauses like “if attr_n > α then class = C”
- If the data set is negated, those nodes should change to “if attr_n ≤ -α then class = C”, i.e. both the operator and the sign of α
- In most cases, only one of the changes occurred

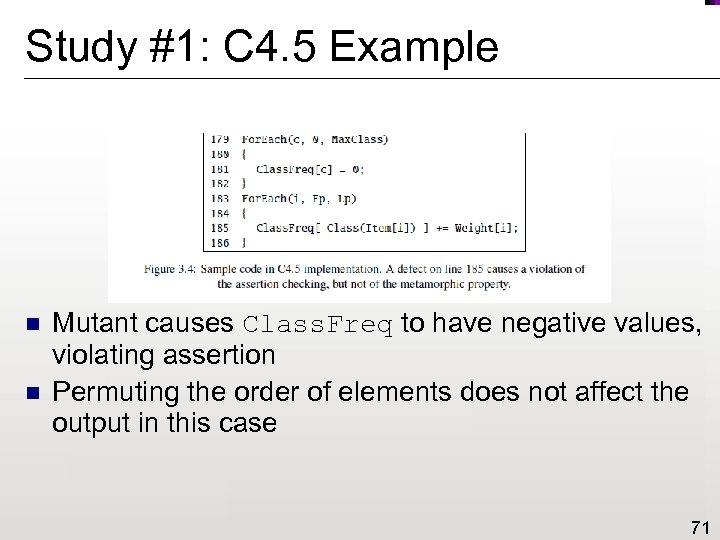

Study #1: C4.5 Example
- Mutant causes ClassFreq to have negative values, violating assertion
- Permuting the order of elements does not affect the output in this case

Study #1: MartiRank Results
- Permuting and negating were effective at killing comparison operator mutants
- MartiRank depends heavily on sorting
- Permuting and negating change which numbers get sorted and what the result should be, thus inducing differences in the final sorted list

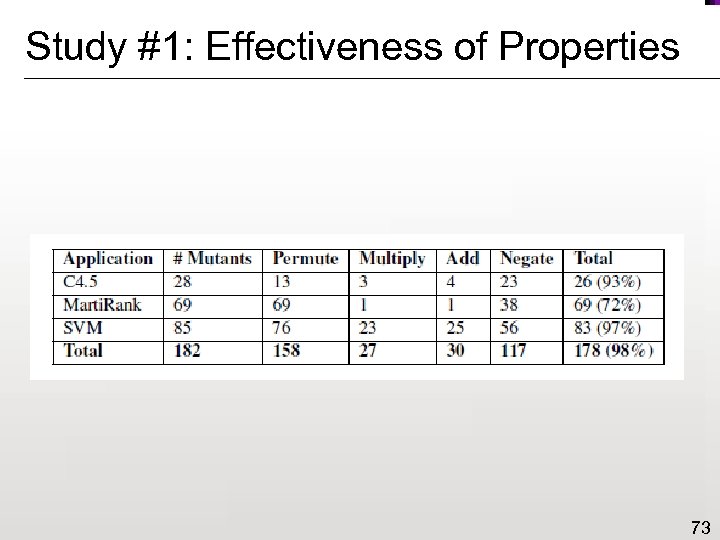

Study #1: Effectiveness of Properties

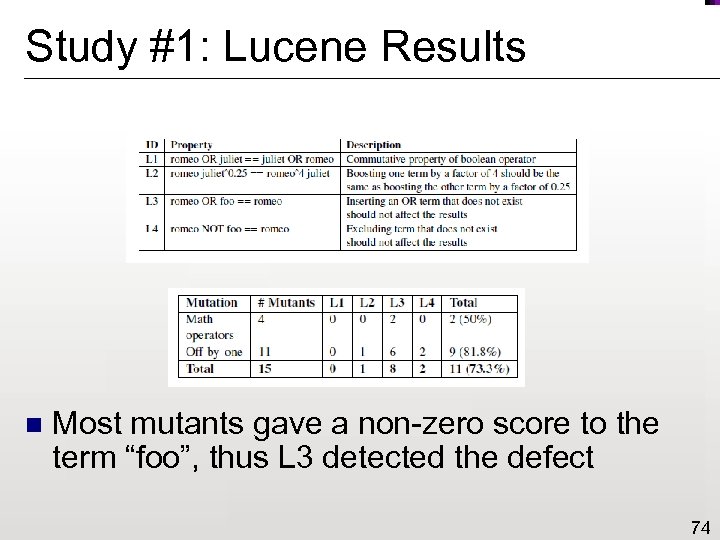

Study #1: Lucene Results
- Most mutants gave a non-zero score to the term “foo”, thus L3 detected the defect

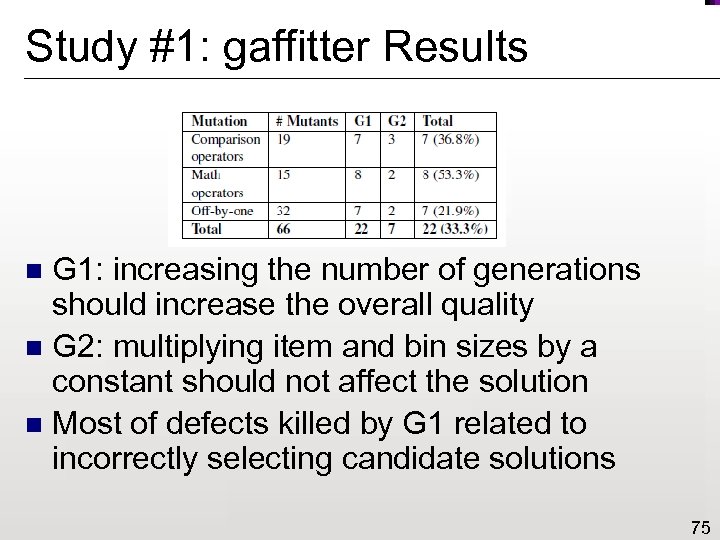

Study #1: gaffitter Results
- G1: increasing the number of generations should increase the overall quality
- G2: multiplying item and bin sizes by a constant should not affect the solution
- Most of the defects killed by G1 related to incorrectly selecting candidate solutions
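Property G2 can be illustrated on a much simpler packer. The sketch below is not gaffitter (which uses a genetic algorithm); it is a hypothetical first-fit bin packer, assuming integer sizes no larger than the bin capacity, used only to show what "scaling should not affect the solution" looks like as a check.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of property G2 on a first-fit packer (a stand-in, not gaffitter). */
final class ScalingMrSketch {

    /** First-fit packing: returns, for each item in order, the index of the bin it was placed in. */
    static int[] firstFit(int[] sizes, int capacity) {
        int[] binOf = new int[sizes.length];
        List<Integer> free = new ArrayList<>(); // remaining capacity of each open bin
        for (int i = 0; i < sizes.length; i++) {
            int chosen = -1;
            for (int b = 0; b < free.size(); b++) {
                if (free.get(b) >= sizes[i]) {
                    chosen = b;
                    break;
                }
            }
            if (chosen < 0) { // no open bin fits: open a new one
                free.add(capacity);
                chosen = free.size() - 1;
            }
            free.set(chosen, free.get(chosen) - sizes[i]);
            binOf[i] = chosen;
        }
        return binOf;
    }

    /** G2: multiplying item and bin sizes by a constant should not change the assignment. */
    static boolean scalingPropertyHolds(int[] sizes, int capacity, int k) {
        int[] scaled = Arrays.stream(sizes).map(s -> s * k).toArray();
        return Arrays.equals(firstFit(sizes, capacity), firstFit(scaled, capacity * k));
    }

    public static void main(String[] args) {
        int[] sizes = {4, 8, 1, 4, 2, 1};
        System.out.println(Arrays.toString(firstFit(sizes, 10)));
        System.out.println("G2 holds: " + scalingPropertyHolds(sizes, 10, 3));
    }
}
```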

Empirical Studies: Threats to Validity
- Representativeness of selected programs
- Types of defects
- Data sets
- Daikon-generated program invariants
- Selection of metamorphic properties

Metamorphic Runtime Checking Experimental Study

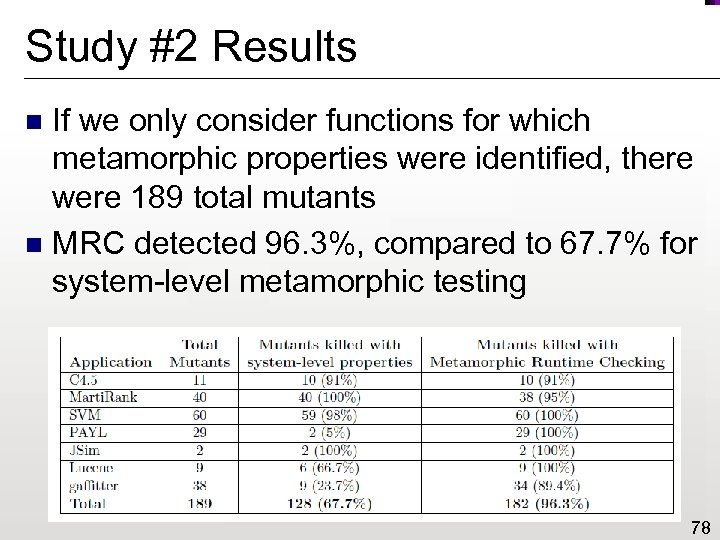

Study #2 Results
- If we only consider functions for which metamorphic properties were identified, there were 189 total mutants
- MRC detected 96.3%, compared to 67.7% for system-level metamorphic testing

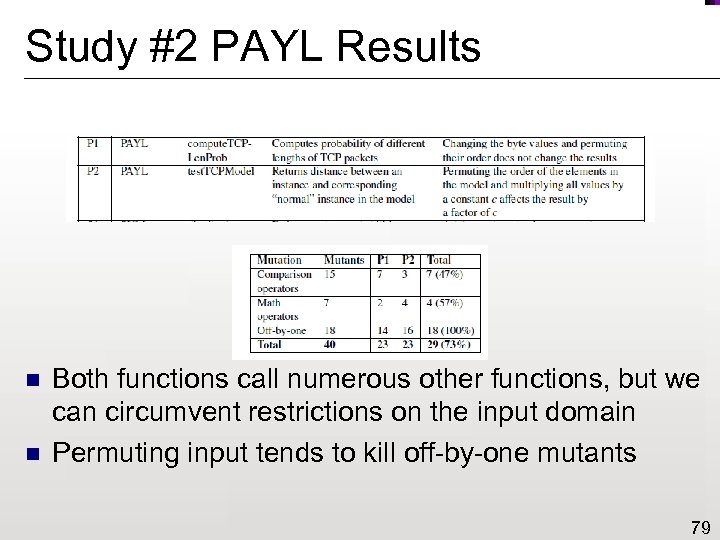

Study #2 PAYL Results
- Both functions call numerous other functions, but we can circumvent restrictions on the input domain
- Permuting input tends to kill off-by-one mutants

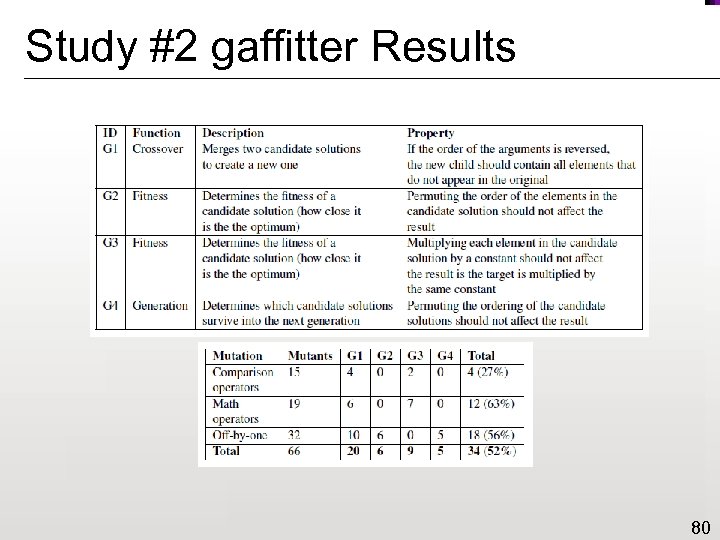

Study #2 gaffitter Results

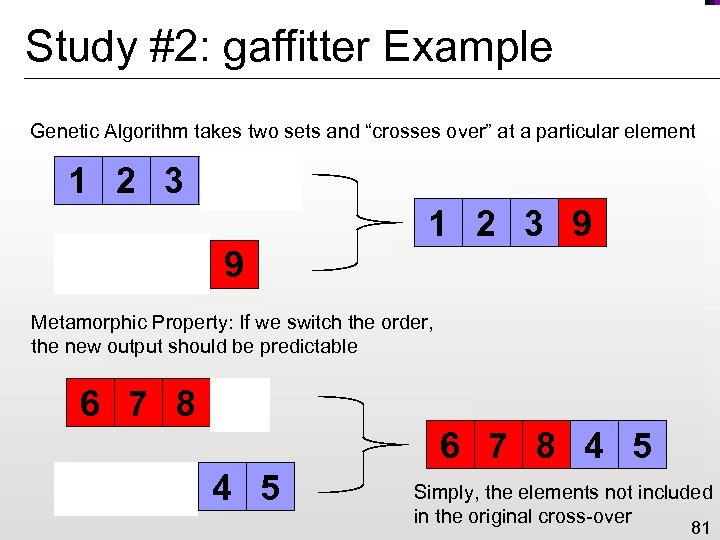

Study #2: gaffitter Example
- Genetic Algorithm takes two sets and “crosses over” at a particular element
  - Inputs: 1 2 3 4 5 and 6 7 8 9
  - Output: 1 2 3 9
- Metamorphic Property: If we switch the order, the new output should be predictable
  - Inputs: 6 7 8 9 and 1 2 3 4 5
  - Output: 6 7 8 4 5
- Simply, the elements not included in the original cross-over

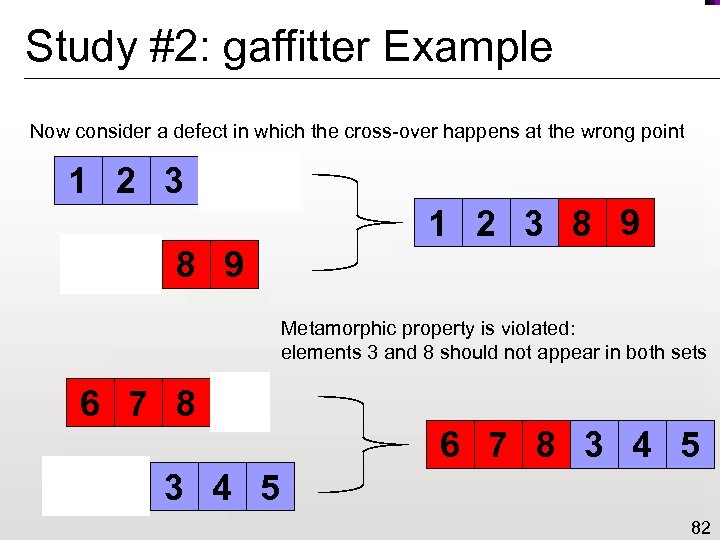

Study #2: gaffitter Example
- Now consider a defect in which the cross-over happens at the wrong point
  - Inputs: 1 2 3 4 5 and 6 7 8 9
  - Output: 1 2 3 8 9
- After switching the order
  - Inputs: 6 7 8 9 and 1 2 3 4 5
  - Output: 6 7 8 3 4 5
- Metamorphic property is violated: elements 3 and 8 should not appear in both sets

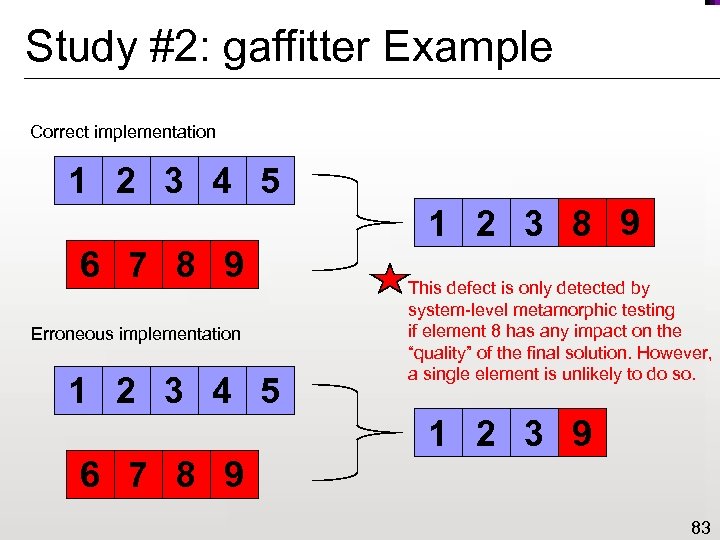

Study #2: gaffitter Example
- Correct implementation
  - Inputs: 1 2 3 4 5 and 6 7 8 9
  - Output: 1 2 3 9
- Erroneous implementation
  - Inputs: 1 2 3 4 5 and 6 7 8 9
  - Output: 1 2 3 8 9
- This defect is only detected by system-level metamorphic testing if element 8 has any impact on the “quality” of the final solution. However, a single element is unlikely to do so.
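The cross-over property from the three slides above can be written as a function-level check. This is a sketch with hypothetical names (`CrossoverMrSketch`, `Crossover`, `mutantCrossover`), not gaffitter's own code; it assumes a single-point cross-over and reuses the numbers shown on the slides.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

/** Hypothetical sketch of the cross-over property using the values from the slides. */
final class CrossoverMrSketch {

    /** A cross-over operator: builds a child from two parents and a cut point. */
    interface Crossover {
        int[] apply(int[] first, int[] second, int point);
    }

    /** Child takes first[0..point) followed by second[point..end). */
    static int[] crossover(int[] first, int[] second, int point) {
        return IntStream.concat(Arrays.stream(first, 0, point),
                                Arrays.stream(second, point, second.length)).toArray();
    }

    /** Seeded defect: the second parent is cut one position too early. */
    static int[] mutantCrossover(int[] first, int[] second, int point) {
        return IntStream.concat(Arrays.stream(first, 0, point),
                                Arrays.stream(second, point - 1, second.length)).toArray();
    }

    static int[] concatSorted(int[] x, int[] y) {
        return IntStream.concat(Arrays.stream(x), Arrays.stream(y)).sorted().toArray();
    }

    /**
     * Property: the child built with the parents in switched order contains exactly
     * the elements left out of the original child, so together the two children
     * must account for every parent element exactly once.
     */
    static boolean propertyHolds(Crossover op, int[] a, int[] b, int point) {
        int[] children = concatSorted(op.apply(a, b, point), op.apply(b, a, point));
        return Arrays.equals(children, concatSorted(a, b));
    }

    public static void main(String[] args) {
        int[] a = {1, 2, 3, 4, 5};
        int[] b = {6, 7, 8, 9};
        System.out.println(Arrays.toString(crossover(a, b, 3)));
        System.out.println(Arrays.toString(mutantCrossover(a, b, 3)));
        System.out.println("correct: " + propertyHolds(CrossoverMrSketch::crossover, a, b, 3));
        System.out.println("mutant:  " + propertyHolds(CrossoverMrSketch::mutantCrossover, a, b, 3));
    }
}
```

The check works on the cross-over function alone, which is why it does not depend on whether one extra element changes the quality of the final solution.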

Study #2 Lucene Results
- MRC killed three mutants not killed by MT
- All three were in the idf function

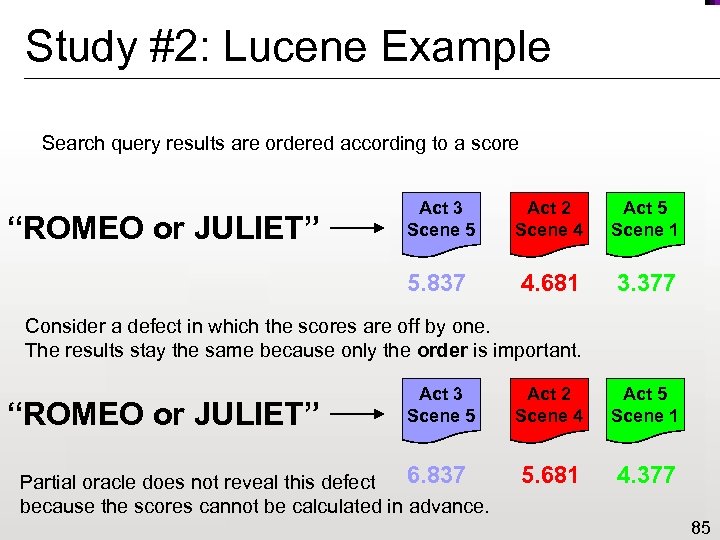

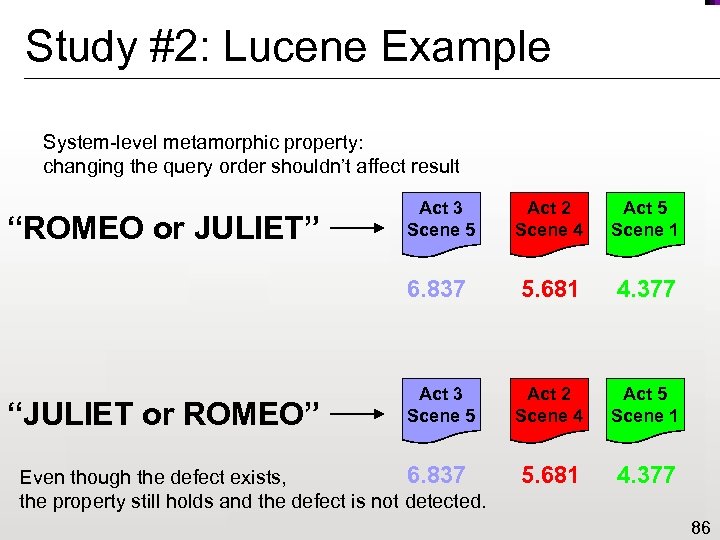

Study #2: Lucene Example
- Search query results are ordered according to a score
  - “ROMEO or JULIET”: Act 3 Scene 5 (5.837), Act 2 Scene 4 (4.681), Act 5 Scene 1 (3.377)
- Consider a defect in which the scores are off by one. The results stay the same because only the order is important.
  - “ROMEO or JULIET”: Act 3 Scene 5 (6.837), Act 2 Scene 4 (5.681), Act 5 Scene 1 (4.377)
- Partial oracle does not reveal this defect because the scores cannot be calculated in advance.

Study #2: Lucene Example
- System-level metamorphic property: changing the query order shouldn’t affect the result
  - “ROMEO or JULIET”: Act 3 Scene 5 (6.837), Act 2 Scene 4 (5.681), Act 5 Scene 1 (4.377)
  - “JULIET or ROMEO”: Act 3 Scene 5 (6.837), Act 2 Scene 4 (5.681), Act 5 Scene 1 (4.377)
- Even though the defect exists, the property still holds and the defect is not detected.
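Why the off-by-one defect stays invisible at the system level can be shown with a few lines. The sketch below does not use Lucene; `RankingBlindSpotSketch` and its methods are hypothetical, and the scores are the ones quoted on the slides.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical sketch: a constant offset in every score leaves the ranking unchanged. */
final class RankingBlindSpotSketch {

    /** Orders document names by descending score, which is all the user sees. */
    static List<String> rank(Map<String, Double> scores) {
        return scores.entrySet().stream()
                .sorted(Map.Entry.<String, Double>comparingByValue().reversed())
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    /** Seeded defect: every score is too high by one. */
    static Map<String, Double> offByOne(Map<String, Double> scores) {
        Map<String, Double> shifted = new LinkedHashMap<>();
        scores.forEach((doc, score) -> shifted.put(doc, score + 1.0));
        return shifted;
    }

    /** Scores from the slide for the query "ROMEO or JULIET". */
    static Map<String, Double> slideScores() {
        Map<String, Double> scores = new LinkedHashMap<>();
        scores.put("Act 2 Scene 4", 4.681);
        scores.put("Act 3 Scene 5", 5.837);
        scores.put("Act 5 Scene 1", 3.377);
        return scores;
    }

    public static void main(String[] args) {
        Map<String, Double> scores = slideScores();
        System.out.println(rank(scores));
        System.out.println(rank(offByOne(scores)));
    }
}
```

Any property stated only over the ordered result list holds for both versions, so the defect has to be caught by checking properties of the scoring functions themselves.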

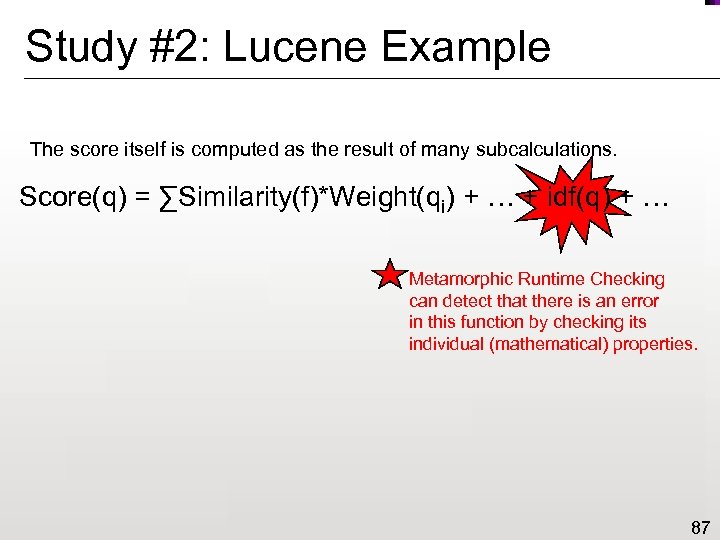

Study #2: Lucene Example
- The score itself is computed as the result of many subcalculations.
  - Score(q) = ∑ Similarity(f) * Weight(q_i) + … + idf(q) + …
- Metamorphic Runtime Checking can detect that there is an error in this function by checking its individual (mathematical) properties.

In Vivo Testing

Generalization of MRC
- In Metamorphic Runtime Checking, the software tests itself
- Why only run metamorphic tests?
- Why limit ourselves only to applications without test oracles?
- Why not allow the software to continue testing itself as it runs in the production environment?

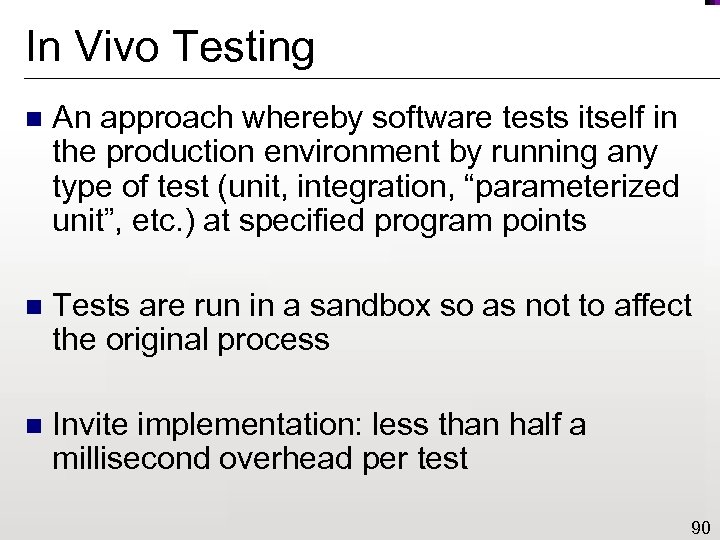

In Vivo Testing
- An approach whereby software tests itself in the production environment by running any type of test (unit, integration, “parameterized unit”, etc.) at specified program points
- Tests are run in a sandbox so as not to affect the original process
- Invite implementation: less than half a millisecond overhead per test

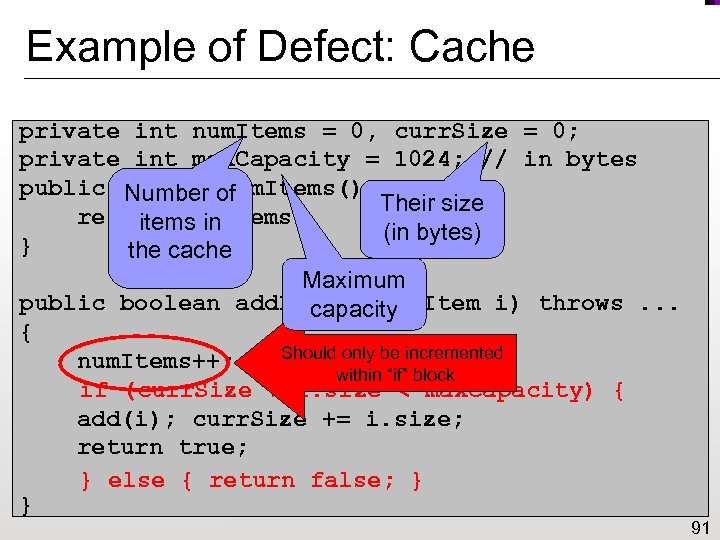

Example of Defect: Cache

    private int numItems = 0, currSize = 0; // number of items in the cache; their size (in bytes)
    private int maxCapacity = 1024;         // maximum capacity, in bytes

    public int getNumItems() {
        return numItems;
    }

    public boolean addItem(CacheItem i) throws ... {
        numItems++; // defect: should only be incremented within the "if" block
        if (currSize + i.size < maxCapacity) {
            add(i);
            currSize += i.size;
            return true;
        } else {
            return false;
        }
    }

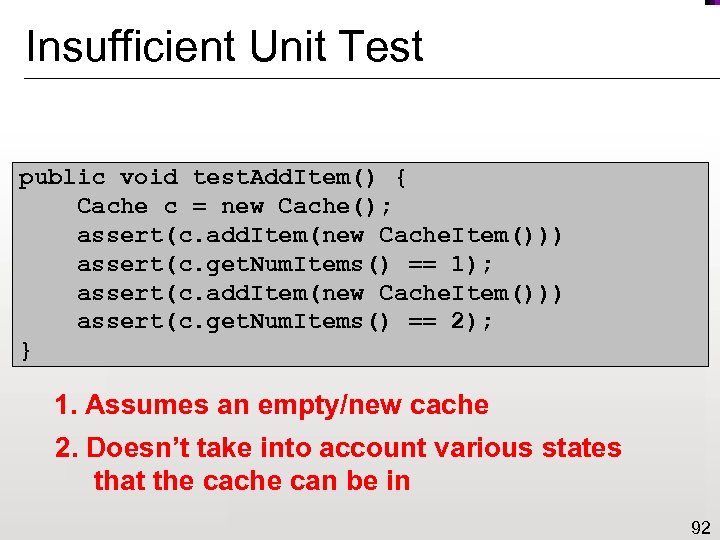

Insufficient Unit Test

    public void testAddItem() {
        Cache c = new Cache();
        assert(c.addItem(new CacheItem()));
        assert(c.getNumItems() == 1);
        assert(c.addItem(new CacheItem()));
        assert(c.getNumItems() == 2);
    }

1. Assumes an empty/new cache
2. Doesn’t take into account various states that the cache can be in

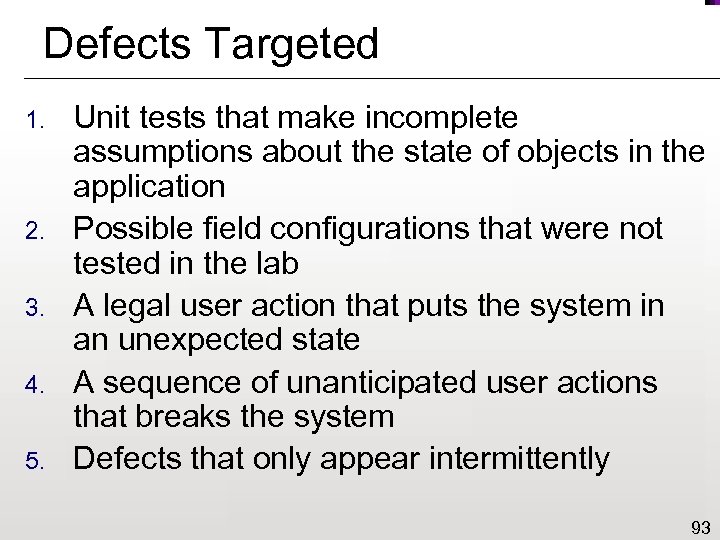

Defects Targeted
1. Unit tests that make incomplete assumptions about the state of objects in the application
2. Possible field configurations that were not tested in the lab
3. A legal user action that puts the system in an unexpected state
4. A sequence of unanticipated user actions that breaks the system
5. Defects that only appear intermittently

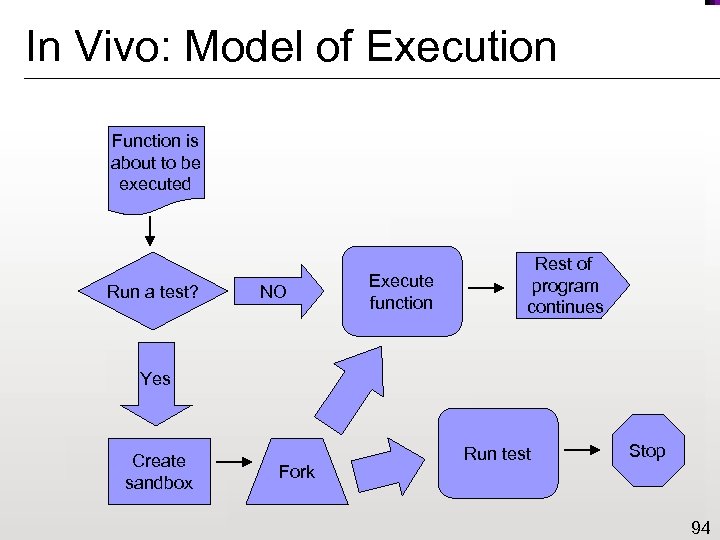

In Vivo: Model of Execution
- Function is about to be executed
- Run a test?
  - No: execute function; rest of program continues
  - Yes: create sandbox and fork
    - Original process: execute function; rest of program continues
    - Test process: run test, then stop

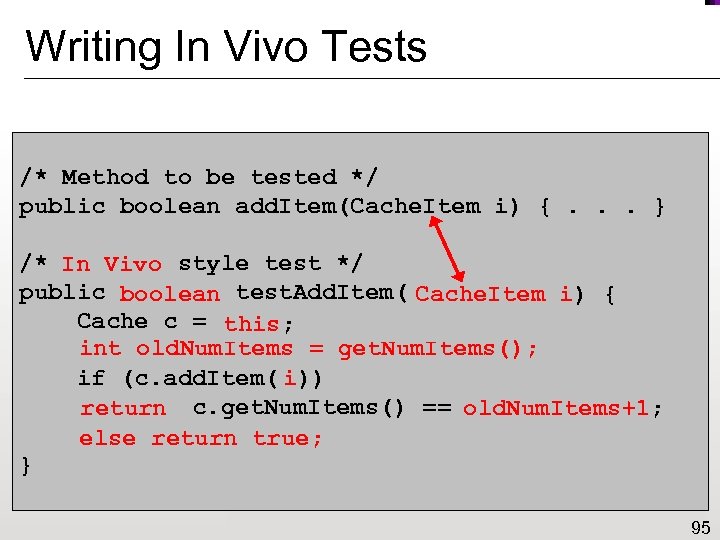

Writing In Vivo Tests

    /* Method to be tested */
    public boolean addItem(CacheItem i) { ... }

    /* In Vivo style test */
    public boolean testAddItem(CacheItem i) {
        Cache c = this;
        int oldNumItems = getNumItems();
        if (c.addItem(i))
            return (c.getNumItems() == oldNumItems + 1);
        else
            return true;
    }

The slide overlays this on the JUnit-style original, which was declared as public void testAddItem(), created its own objects with new Cache() and new CacheItem(), and asserted c.getNumItems() == 1.
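A runnable version of the cache example shows why the state the test starts from matters. This is a sketch, not the Invite framework: `InVivoCacheSketch` is a hypothetical name, there is no sandbox (so the test mutates the cache it runs on), and the failure branch of the test is stricter than the slide's `return true`, an assumption made here so that the seeded defect becomes observable.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: the same test passes on a fresh cache and fails on a full one. */
final class InVivoCacheSketch {

    static final class CacheItem {
        final int size; // in bytes
        CacheItem(int size) { this.size = size; }
    }

    static final class Cache {
        private final List<CacheItem> items = new ArrayList<>();
        private int numItems = 0, currSize = 0;
        private final int maxCapacity = 1024; // in bytes

        int getNumItems() { return numItems; }

        boolean addItem(CacheItem i) {
            numItems++; // defect from the slide: incremented even when the item is rejected
            if (currSize + i.size < maxCapacity) {
                items.add(i);
                currSize += i.size;
                return true;
            }
            return false;
        }

        /** In Vivo style test: uses the current state of this cache, not a new one. */
        boolean testAddItem(CacheItem i) {
            int oldNumItems = getNumItems();
            if (addItem(i)) {
                return getNumItems() == oldNumItems + 1;
            }
            // Assumption beyond the slide: a rejected item must leave the count unchanged.
            return getNumItems() == oldNumItems;
        }
    }

    public static void main(String[] args) {
        Cache cache = new Cache();
        System.out.println("fresh cache: " + cache.testAddItem(new CacheItem(100)));
        for (int k = 0; k < 9; k++) cache.addItem(new CacheItem(100)); // fill to 1000 bytes
        System.out.println("full cache:  " + cache.testAddItem(new CacheItem(100)));
    }
}
```

In the approach on the slides, the test would run in a forked copy of the process, so the extra item added by the test would never reach the real cache.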

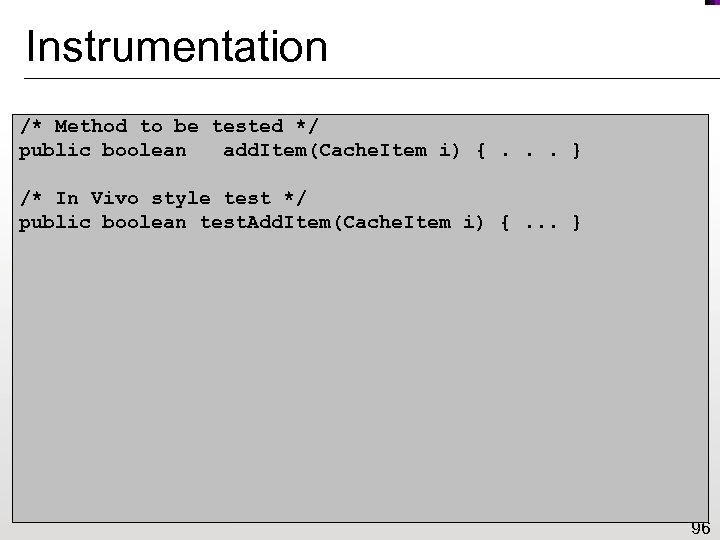

Instrumentation

    /* Method to be tested */
    public boolean __addItem(CacheItem i) { ... }

    /* In Vivo style test */
    public boolean testAddItem(CacheItem i) { ... }

    public boolean addItem(CacheItem i) {
        if (Invite.runTest("Cache.addItem")) {
            Invite.createSandboxAndFork();
            if (Invite.isTestProcess()) {
                if (testAddItem(i) == false)
                    Invite.fail();
                else
                    Invite.succeed();
                Invite.destroySandboxAndExit();
            }
        }
        return __addItem(i);
    }

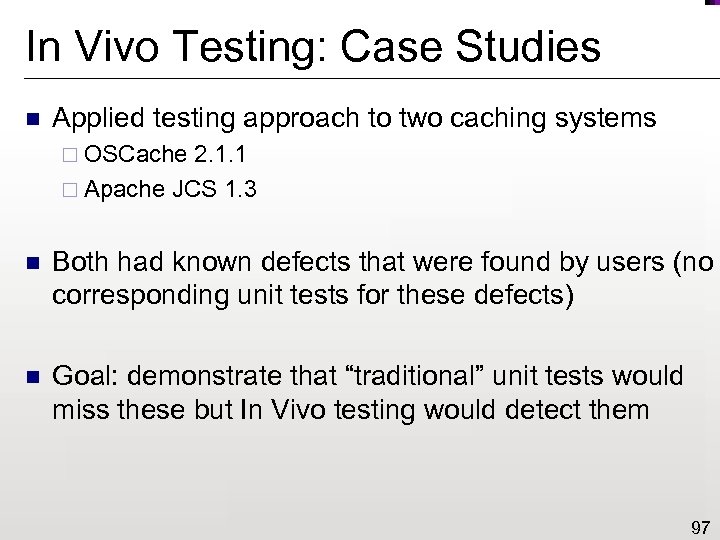

In Vivo Testing: Case Studies
- Applied testing approach to two caching systems
  - OSCache 2.1.1
  - Apache JCS 1.3
- Both had known defects that were found by users (no corresponding unit tests for these defects)
- Goal: demonstrate that “traditional” unit tests would miss these but In Vivo testing would detect them

In Vivo Testing: Experimental Setup
- An undergraduate student created unit tests for the methods that contained the defects
- These tests passed in “development”
- Student was then asked to convert the unit tests to In Vivo tests
- Driver created to simulate real usage in a “deployment environment”

In Vivo Testing: Discussion
- In Vivo testing revealed all defects, even though unit testing did not
- Some defects only appeared in certain states, e.g. when the cache was at full capacity
  - These are the very types of defects that In Vivo testing is targeted at
- However, the approach depends heavily on the quality of the tests themselves

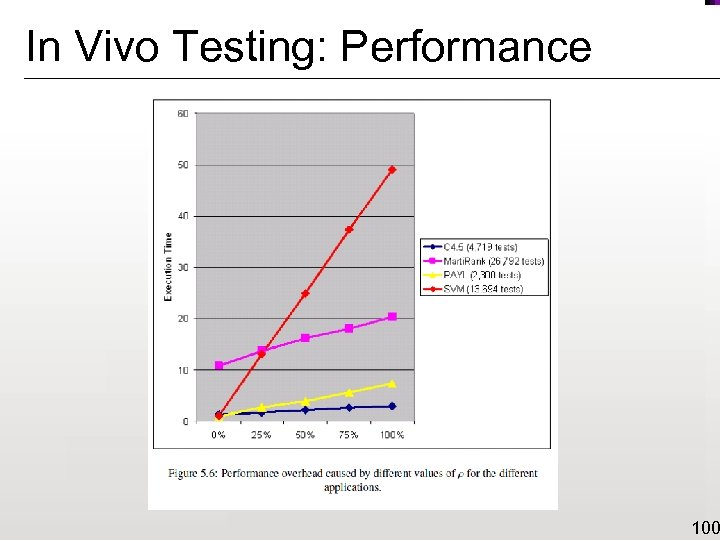

In Vivo Testing: Performance

More Robust Sandboxes
- “Safe” test case selection
  - [Willmor and Embury, ICSE’06]
- Copy-on-write database snapshots
  - MS SQL Server v8

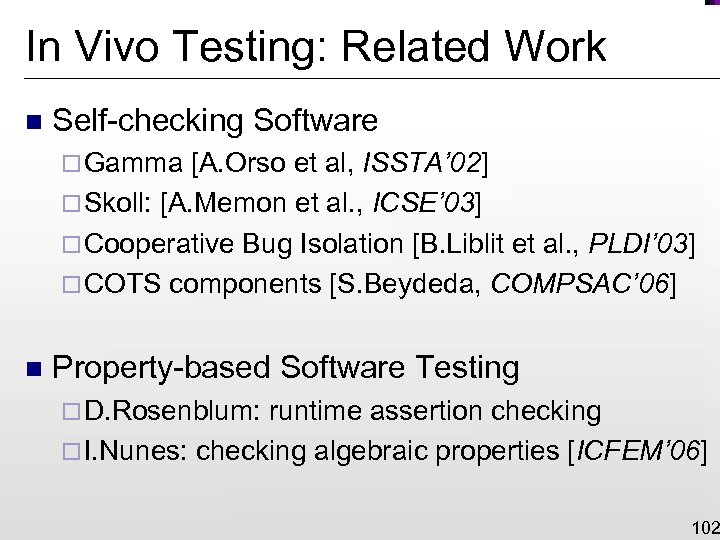

In Vivo Testing: Related Work
- Self-checking Software
  - Gamma [A. Orso et al., ISSTA’02]
  - Skoll [A. Memon et al., ICSE’03]
  - Cooperative Bug Isolation [B. Liblit et al., PLDI’03]
  - COTS components [S. Beydeda, COMPSAC’06]
- Property-based Software Testing
  - D. Rosenblum: runtime assertion checking
  - I. Nunes: checking algebraic properties [ICFEM’06]

Related Work

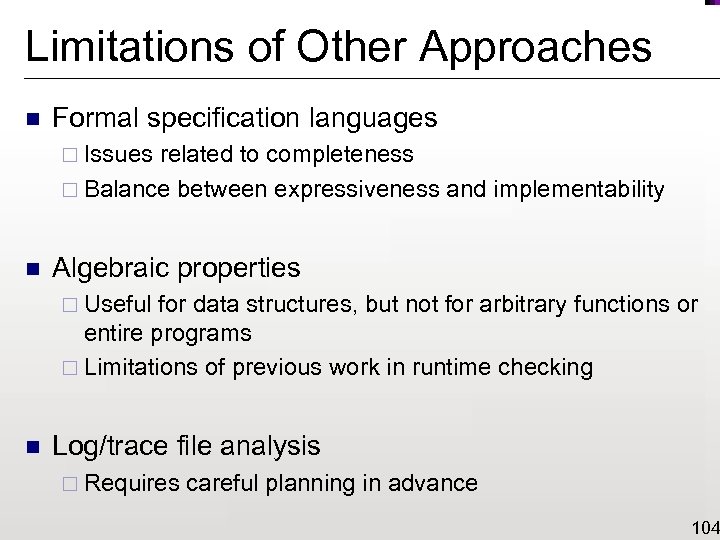

Limitations of Other Approaches
- Formal specification languages
  - Issues related to completeness
  - Balance between expressiveness and implementability
- Algebraic properties
  - Useful for data structures, but not for arbitrary functions or entire programs
  - Limitations of previous work in runtime checking
- Log/trace file analysis
  - Requires careful planning in advance

Previous Work in MT
- T. Y. Chen et al.: applying metamorphic testing to applications without oracles [Info. & Soft. Tech. vol. 44, 2002]
- Domain-specific testing
  - Graphics [J. Mayer and R. Guderlei, QSIC’07]
  - Bioinformatics [T. Y. Chen et al., BMC Bioinf. 10(24), 2009]
  - Middleware [W. K. Chan et al., QSIC’05]
  - Others…

Previous Studies [Hu et al., SOQUA’06]
- Invariants hand-generated
- Smaller programs
- Only deterministic applications
- Didn’t consider partial oracle

Developer Effort [Hu et al., SOQUA’06]
- Students were given three-hour training sessions on MT and on assertion checking
- Given three hours to identify metamorphic properties and program invariants
- Averaged about the same number of metamorphic properties as invariants
- The metamorphic properties were more effective at killing mutants

Fault Localization
- Delta debugging
  - [Zeller, FSE’02]
  - Compare trace of failed execution vs. successful ones
- Cooperative Bug Isolation
  - [Liblit et al., PLDI’03]
  - Numerous instances report results and failed execution is compared to those
- Statistical approach
  - [Baah, Gray, Harrold; SOQUA’06]
  - Combines model of normal behavior with runtime monitoring