53bc96b1754b1294e948913879db78f2.ppt

- Number of slides: 56

Metadata Extraction @ ODU for DTIC
Presentation to Senior Management
May 16, 2007
Kurt Maly, Steve Zeil, Mohammad Zubair
{maly, zeil, zubair}@cs.odu.edu

Outline
- Metadata Extraction Project
  - System overview
  - Demo
  - Current status
- Why ODU
  - Research, new technology; inexpensive; maintenance (department commitment)
- Why DTIC as lead
  - Amortize development cost; expand template set (helpful in future too); consistent with DTIC strategic mission
- Required enhancements

ODU Metadata Extraction System
- Input: PDF documents
  - processed through OCR (Optical Character Recognition)
- Output: metadata in XML format
  - easily processed for uploading into DTIC databases (demo: 1st document)

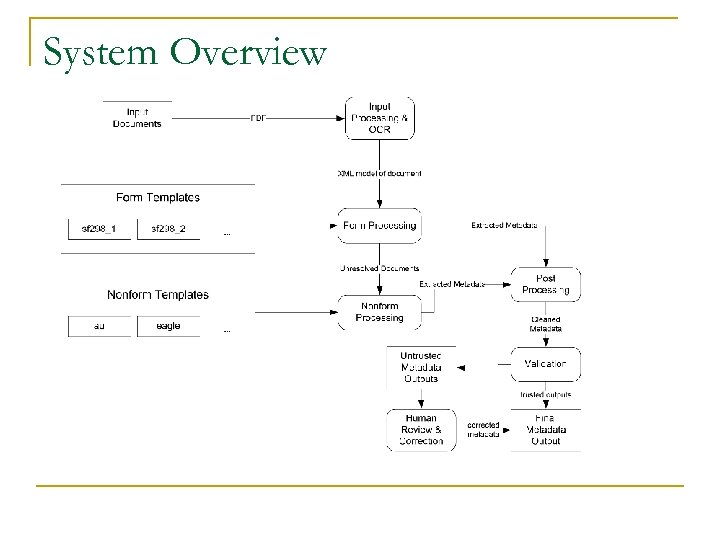

System Overview
- Processing has two main branches:
  - Documents with forms (RDPs)
  - Documents without forms

System Overview

Demo (additional documents)

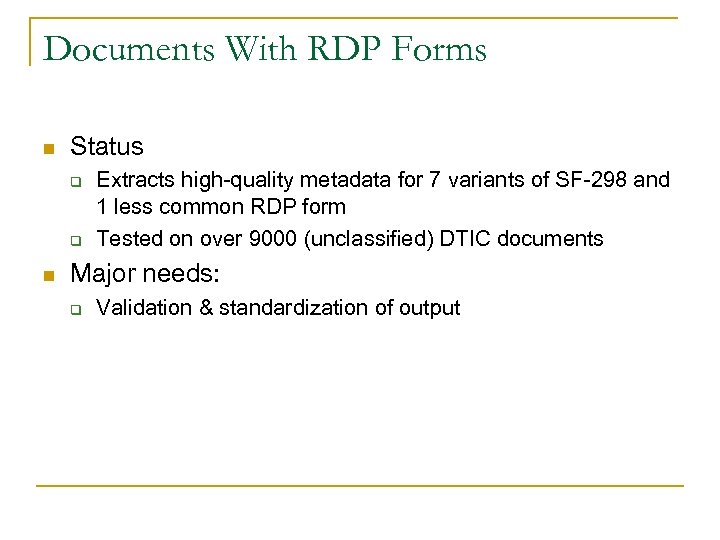

Documents With RDP Forms
- Status
  - Extracts high-quality metadata for 7 variants of SF-298 and 1 less common RDP form
  - Tested on over 9,000 (unclassified) DTIC documents
- Major needs:
  - Validation & standardization of output

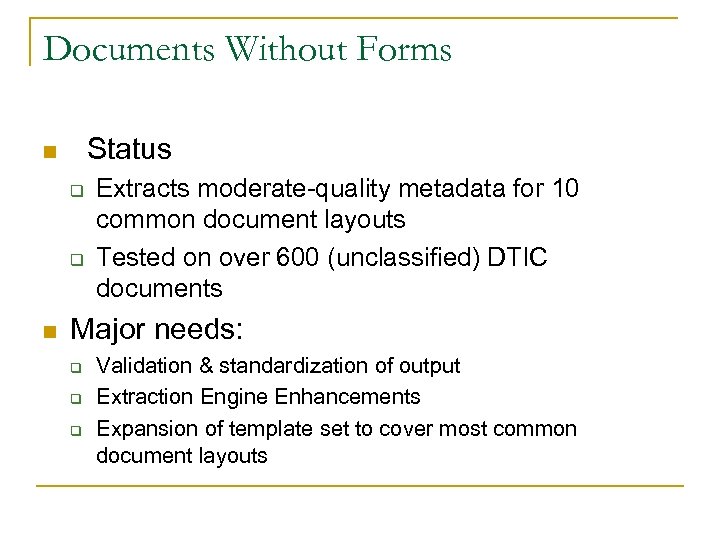

Documents Without Forms
- Status
  - Extracts moderate-quality metadata for 10 common document layouts
  - Tested on over 600 (unclassified) DTIC documents
- Major needs:
  - Validation & standardization of output
  - Extraction engine enhancements
  - Expansion of template set to cover most common document layouts

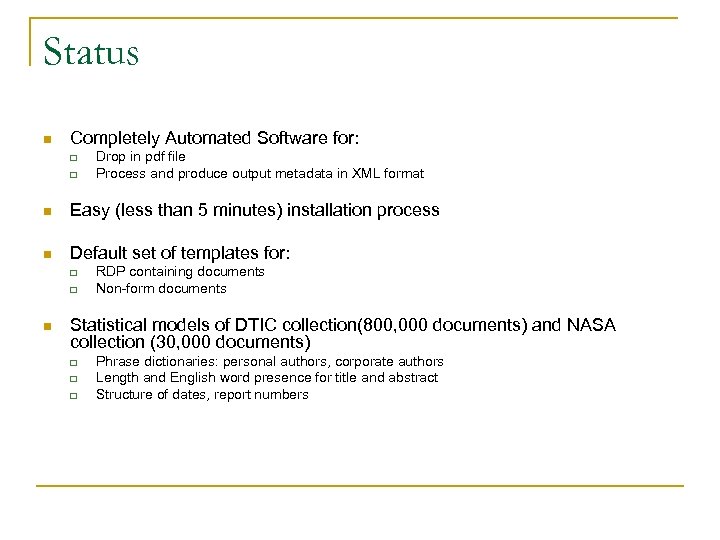

Status
- Completely automated software:
  - Drop in a PDF file
  - Process it and produce output metadata in XML format
- Easy installation process (less than 5 minutes)
- Default set of templates for:
  - RDP-containing documents
  - Non-form documents
- Statistical models of the DTIC collection (800,000 documents) and the NASA collection (30,000 documents):
  - Phrase dictionaries: personal authors, corporate authors
  - Length and English word presence for title and abstract
  - Structure of dates, report numbers

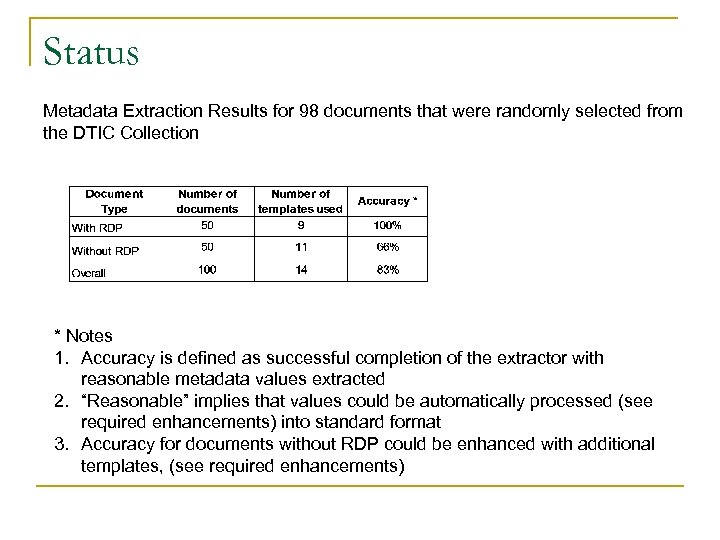

Status
Metadata extraction results for 98 documents randomly selected from the DTIC collection*

*Notes:
1. Accuracy is defined as successful completion of the extractor with reasonable metadata values extracted.
2. "Reasonable" implies that values could be automatically processed into standard format (see Required Enhancements).
3. Accuracy for documents without RDP could be enhanced with additional templates (see Required Enhancements).

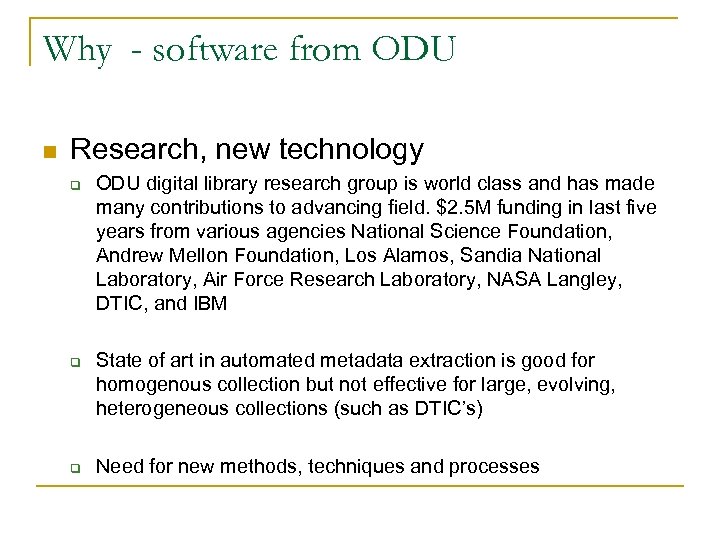

Why – Software from ODU
- Research, new technology
  - The ODU digital library research group is world class and has made many contributions to advancing the field
  - $2.5M funding in the last five years from various agencies: National Science Foundation, Andrew Mellon Foundation, Los Alamos, Sandia National Laboratory, Air Force Research Laboratory, NASA Langley, DTIC, and IBM
  - The state of the art in automated metadata extraction is good for homogeneous collections but not effective for large, evolving, heterogeneous collections (such as DTIC's)
  - Need for new methods, techniques and processes

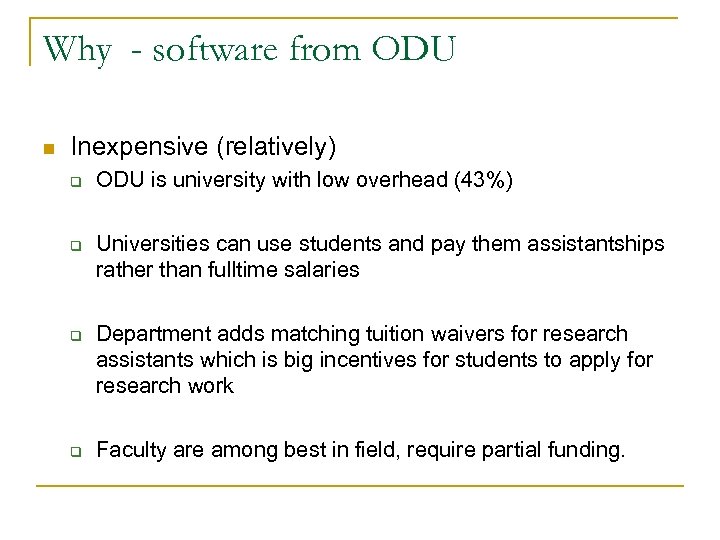

Why – Software from ODU
- Inexpensive (relatively)
  - ODU is a university with low overhead (43%)
  - Universities can use students and pay them assistantships rather than full-time salaries
  - The department adds matching tuition waivers for research assistants, which is a big incentive for students to apply for research work
  - Faculty are among the best in the field and require only partial funding

Why – Software from ODU
- Long-term software maintenance through the department
  - The department commits to continuity independent of the faculty on projects
  - The department will find and assign faculty and students who can become conversant with the code and maintain it (not evolve it)
  - Other faculty are likely to be interested in evolving the code given appropriate funding

Why – DTIC as Lead Agency
- Amortize development cost
  - We are working with NASA and plan to get on the GPO board soon. NASA gave us partial funding to investigate the applicability of our approach to their collection.

Why – DTIC as Lead Agency
- Cross-fertilization
  - DTIC has distinctive requirements; enhancing the metadata extraction technology to meet them (for example, with a richer template set) can also benefit other agencies
- Heterogeneity: DTIC collects documents
  - of many different types
  - from an unusually large number of sources
  - with minimal format restrictions
- Evolution: the DTIC collection spans a time frame in which
  - submission formats changed from typewritten to word-processed and from scanned to electronic
  - DTIC asserts minimal control over layouts and formats

Why – DTIC as Lead Agency
- Consistent with DTIC strategic mission
  - DTIC is the largest organization with the most diverse collection and has the stature to disseminate to other government agencies

Required Enhancements – Priority 1
- Enhance portability
- Standardized output
- Template creation (initial release)
- Text PDF input
- MS Word input

Required Enhancements – Priority 2
- PrimeOCR input
- Multipage metadata
- Template creation (enhanced release)
- Template creation tool

Required Enhancements – Priority 3
- Human intervention software

Time Line
- May 2007 to September 2007
  - Add flexibility to code
  - Enable the current product to produce standardized output
  - Create new templates that will cover the larger contributors
  - Investigate different approaches to handling text PDF documents and finalize the design

Time Line
- October 2007 to September 2008
  - Validate the extraction according to the DTIC-provided Cataloging document
  - Create a module that allows the functional user to create a new template that integrates easily into the extraction software
  - Create new templates that will cover the larger contributors of DTIC
  - Create a module that converts PrimeOCR output into IDM
  - Create the code necessary to extract metadata from more than one page of non-form documents
  - Implement support for text PDF as finalized in the first part
  - Implement support for Word documents
  - Create the code necessary to display validation scoring at the document level (for workers) and the collection level (for managers)

Extra slides

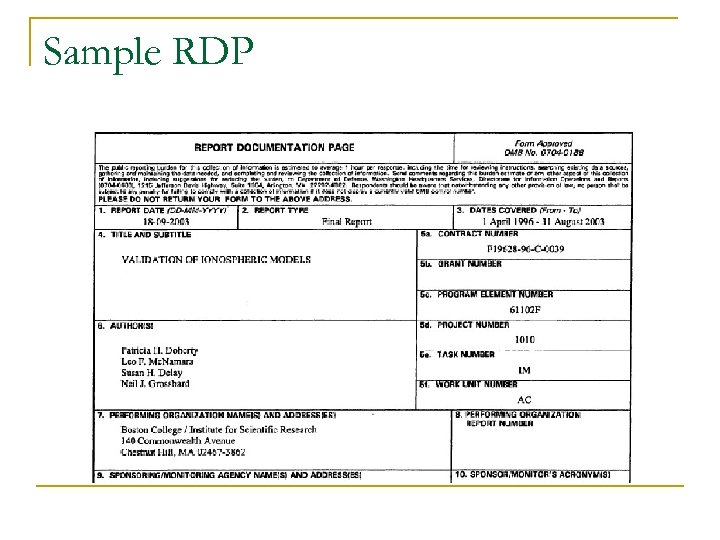

Sample RDP

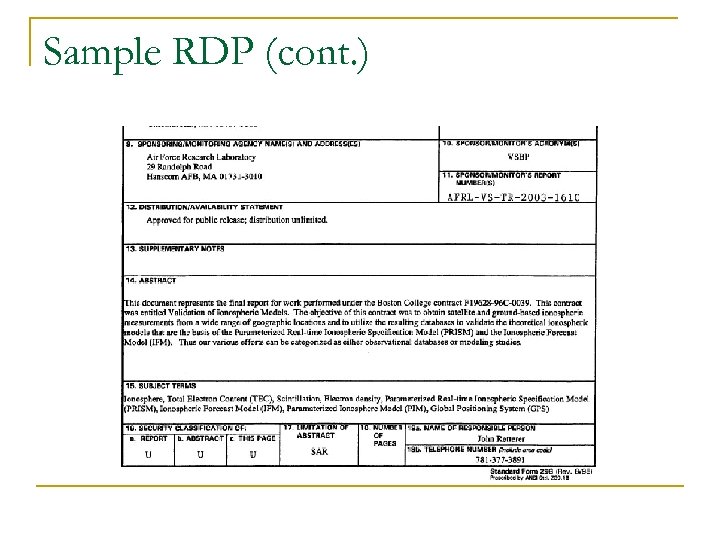

Sample RDP (cont.)

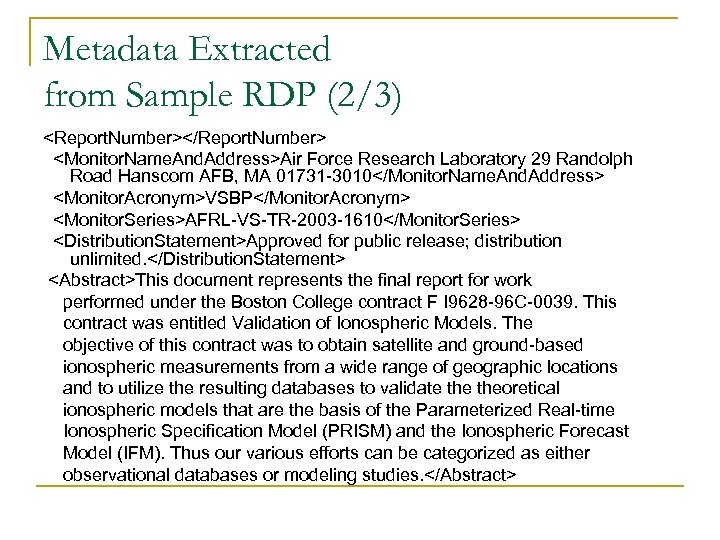

Metadata Extracted from Sample RDP (2/3)

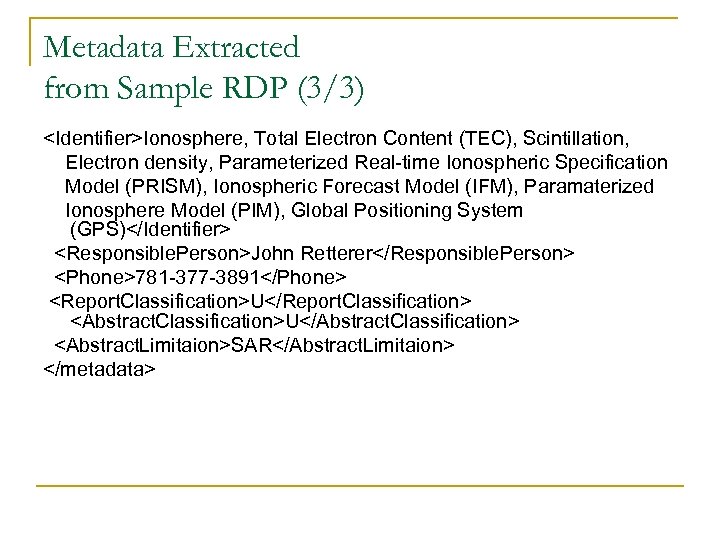

Metadata Extracted from Sample RDP (3/3)

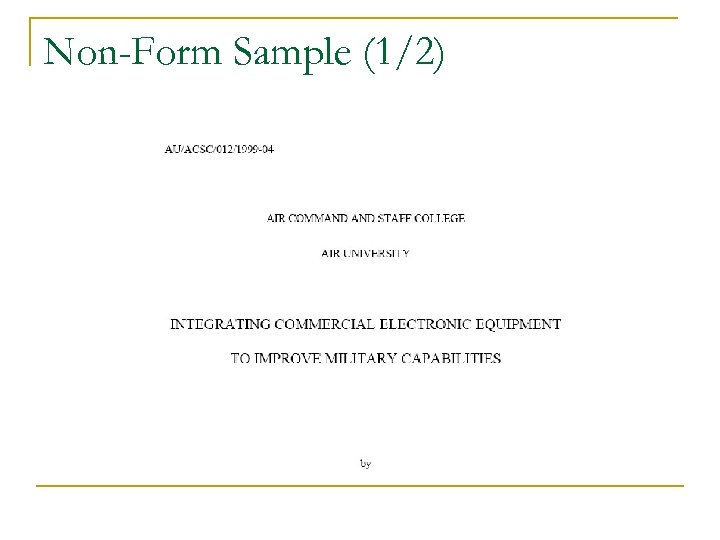

Non-Form Sample (1/2)

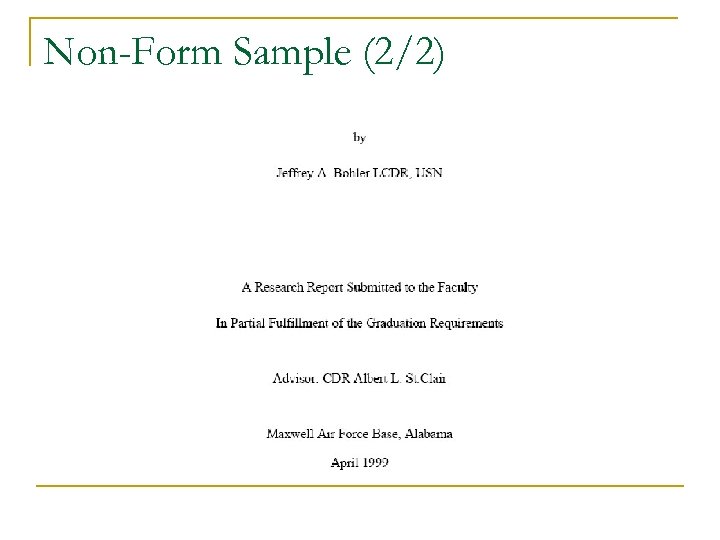

Non-Form Sample (2/2)

Enhanced Portability
- Relax hard-coded system dependencies
- Less technical documentation, particularly regarding operational procedures
- Improved error logging
- Priority: 1
  - Duration: 2 months
  - Impact: software that is easier to operate

Standardized Output
- WYSIWYG
  - What You See Is What You Get
- WYG != WYW
  - What You Get is not necessarily What You Want

Standardized Output (cont.)
- Field values to adhere to a defined standard:
  - Title in 'title' format, e.g.: This is a Title
  - Well-formed date, e.g.: 28 MAR 2007
  - Personal authors, e.g.: Leo F. McNamara ; Susan H. Delay ; Neil J. Grossbard
  - Contract/grant number, corporate authors, distribution statement, ...
- Priority: 1
  - Duration: 3 months
  - Impact: better template selection and metadata ready for DB insertion
  - Dependency: none

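
The target formats above can be illustrated with a small normalizer. This is a minimal Python sketch, not the ODU post-processor: the function names, the accepted input date formats, the minor-word list, and the zero-padded day are all assumptions; only the output formats follow the slide's examples.

```python
import re
from datetime import datetime
from typing import Optional

# Hypothetical normalizers for three of the fields named on the slide.
# Output formats follow the slide's examples; the accepted input formats,
# the minor-word list, and the zero-padded day are assumptions.

MONTHS = ["JAN", "FEB", "MAR", "APR", "MAY", "JUN",
          "JUL", "AUG", "SEP", "OCT", "NOV", "DEC"]
INPUT_DATE_FORMATS = ["%B %d, %Y", "%b %d, %Y", "%m/%d/%Y",
                      "%Y-%m-%d", "%d %B %Y", "%d %b %Y"]
MINOR_WORDS = {"a", "an", "and", "as", "at", "by", "for", "in",
               "of", "on", "or", "the", "to"}


def normalize_date(raw: str) -> Optional[str]:
    """Return the date as 'DD MON YYYY' (e.g. '28 MAR 2007'), or None."""
    text = " ".join(raw.split())  # collapse stray OCR whitespace
    for fmt in INPUT_DATE_FORMATS:
        try:
            d = datetime.strptime(text, fmt)
        except ValueError:
            continue
        # Month abbreviations come from MONTHS, so the output spelling
        # does not depend on the current locale.
        return f"{d.day:02d} {MONTHS[d.month - 1]} {d.year}"
    return None


def normalize_title(raw: str) -> str:
    """Capitalize each word except minor words after the first.
    Acronyms such as 'NASA' are not preserved by this simple rule."""
    out = []
    for i, word in enumerate(raw.split()):
        lw = word.lower()
        out.append(lw if i > 0 and lw in MINOR_WORDS else lw[:1].upper() + lw[1:])
    return " ".join(out)


def normalize_authors(raw: str) -> str:
    """Join personal authors with ' ; '. Assumes 'First M. Last' order,
    because a comma is treated as a separator between names."""
    names = re.split(r"\s*(?:;|,|\band\b)\s*", raw)
    return " ; ".join(" ".join(n.split()) for n in names if n.strip())
```

For example, `normalize_date("March 28, 2007")` yields `28 MAR 2007`. Returning `None` for an unparseable date, rather than guessing, leaves the field to be flagged by validation.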

Template Creation (initial release)
- For RDP documents, relatively few more templates are needed (5 templates cover 100% of about 9,000 out of 10,000 documents in the testbed)
- For documents without RDP, more are needed to cover the largest DTIC contributors (currently 10 templates covering 600 non-RDP documents)
  - Requires acquiring and exploiting an updated testbed:
    - from the last three years
    - documents as they arrived at DTIC
    - about 5,000 documents needed
- Template set to be enhanced still further in later stages
- Priority: 1
  - Duration: 4 months
  - Impact: closer to production stage
  - Dependency: new testbed

Text PDF Input
- The current system processes all documents through OCR, which:
  - allows input of documents that arrive as scanned images
  - is time-consuming
  - is a source of error
- An increasing percentage of new DTIC documents arrive as “native” or “text” PDF
- Add a processing path to accept text PDF without OCR
- Priority: 1
- Duration: 6 months

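
A minimal sketch of the routing decision for the proposed text-PDF path, assuming the embedded text layer has already been pulled out of the PDF by some library (not shown). The `choose_route` name and the 50-word threshold are hypothetical; a production rule would be tuned on the DTIC testbed.

```python
import re
from dataclasses import dataclass

# Hypothetical routing step for the proposed text-PDF path. Extracting the
# embedded text layer is left to whatever PDF library is chosen; the
# 50-word threshold is an illustrative assumption, not a measured value.

MIN_WORDS_FOR_TEXT_PATH = 50
WORD = re.compile(r"[A-Za-z]{2,}")


@dataclass
class Route:
    path: str        # "text" (skip OCR) or "ocr"
    word_count: int  # real-looking words found in the text layer


def choose_route(text_layer: str) -> Route:
    """Send a PDF down the text path only if its embedded text layer holds
    enough words; scanned-image PDFs typically have little or none."""
    count = len(WORD.findall(text_layer))
    return Route("text" if count >= MIN_WORDS_FOR_TEXT_PATH else "ocr", count)
```

Defaulting to OCR when in doubt keeps the existing, tested path as the fallback, so a misjudged text layer costs processing time rather than metadata quality.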

MS Word Input
- Could be handled via Word ML or by generating text PDFs from Word
- Need a solution imposing minimal additional requirements on the operating platform
- Priority: 1
- Duration: 2 months

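
One way to read "handled via Word ML" is to take paragraph text directly from the WordprocessingML part of a .docx package, which needs only the Python standard library and so adds nothing to the operating platform. This is a sketch under that assumption; legacy binary .doc files and the Word 2003 XML format are not covered, and the function name is hypothetical.

```python
import zipfile
import xml.etree.ElementTree as ET

# Sketch only: reads the main document part of a .docx (Office Open XML)
# package. Legacy .doc and Word 2003 XML files are out of scope here.

W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def docx_paragraphs(path_or_file) -> list[str]:
    """Return the non-empty paragraphs of a .docx file, in document order."""
    with zipfile.ZipFile(path_or_file) as pkg:
        root = ET.fromstring(pkg.read("word/document.xml"))
    paragraphs = []
    for p in root.iter(f"{W_NS}p"):
        # A paragraph's text is split across runs; each run holds <w:t> nodes.
        text = "".join(t.text or "" for t in p.iter(f"{W_NS}t"))
        if text.strip():
            paragraphs.append(text)
    return paragraphs
```

The resulting paragraphs carry no page geometry, so layout-based templates would still need positional information from another source.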

Required Enhancements
- Desirable (Priority 2)
  - PrimeOCR input
  - Multipage metadata
  - Template creation tool
- Optional (Priority 3)
  - Human intervention software

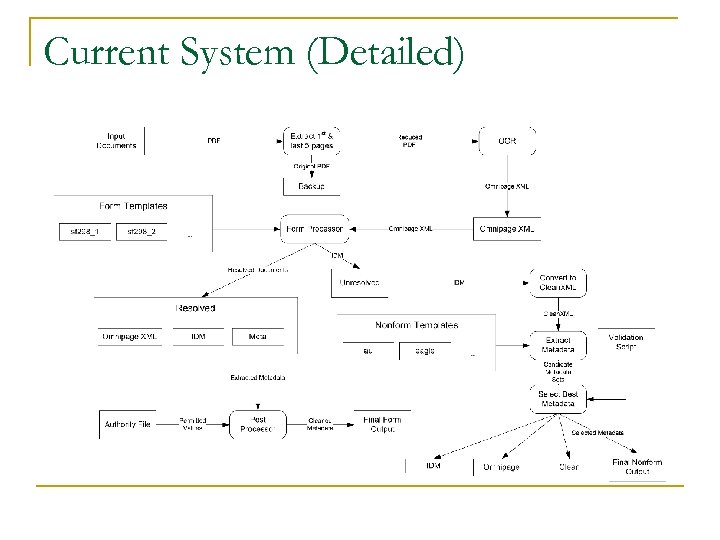

Current System (Detailed)

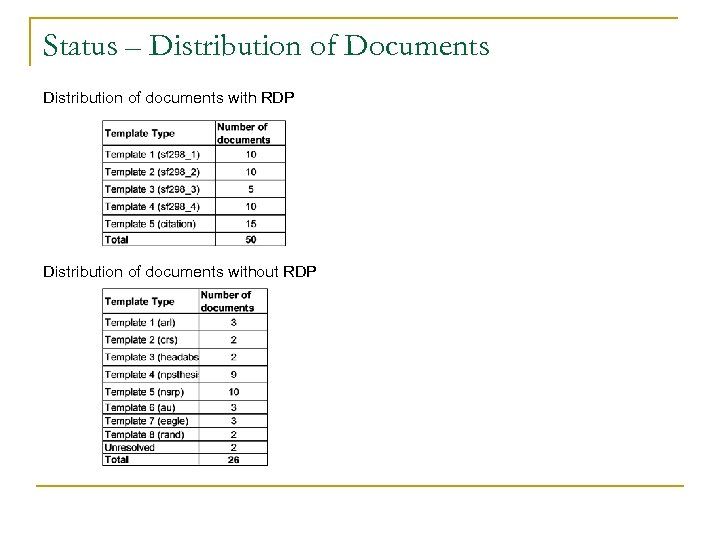

Status – Distribution of Documents
- Distribution of documents with RDP
- Distribution of documents without RDP

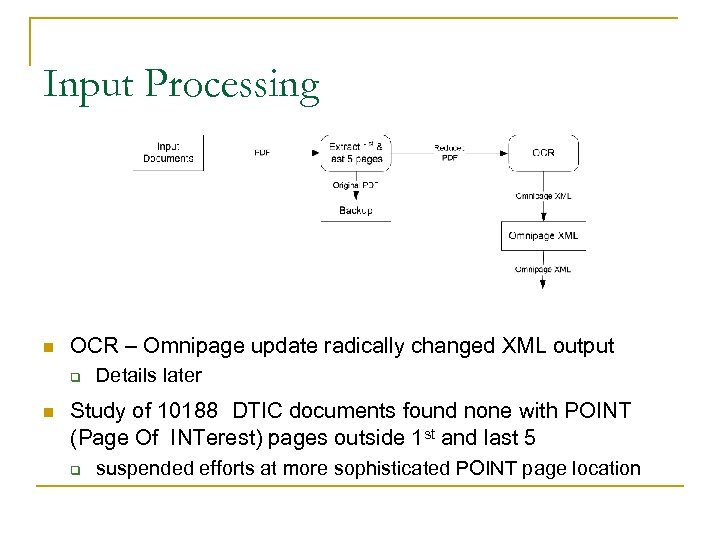

Input Processing
- OCR: an OmniPage update radically changed the XML output
  - Details later
- A study of 10,188 DTIC documents found none with POINT (Page Of INTerest) pages outside the 1st and last 5 pages
  - Efforts at more sophisticated POINT page location suspended

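
The POINT finding suggests limiting page selection to a fixed window before OCR or extraction. A minimal sketch; the phrase "1st and last 5" is read here as page 1 plus the last five pages, and `head`/`tail` are hypothetical parameters so the other reading (first five and last five) is one argument away.

```python
def candidate_point_pages(page_count: int, head: int = 1, tail: int = 5) -> list[int]:
    """1-based page numbers to search for metadata (POINT pages).
    Defaults read the study's finding as 'page 1 plus the last 5 pages';
    pass head=5 if 'first 5 and last 5' is the intended reading."""
    if page_count <= 0:
        return []
    first = range(1, min(head, page_count) + 1)
    last = range(max(1, page_count - tail + 1), page_count + 1)
    # A set removes overlap when the document is shorter than head + tail.
    return sorted(set(first) | set(last))
```

For a 20-page report this selects pages 1 and 16 through 20; a 3-page document is searched in full.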

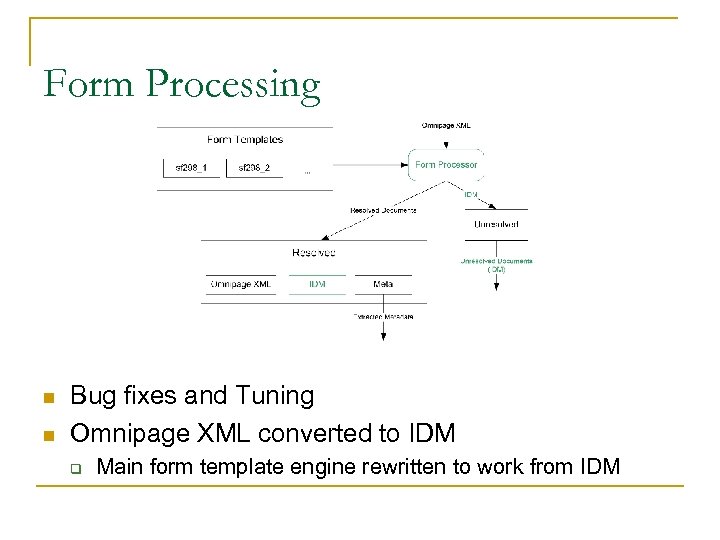

Form Processing
- Bug fixes and tuning
- OmniPage XML converted to IDM
  - Main form template engine rewritten to work from IDM

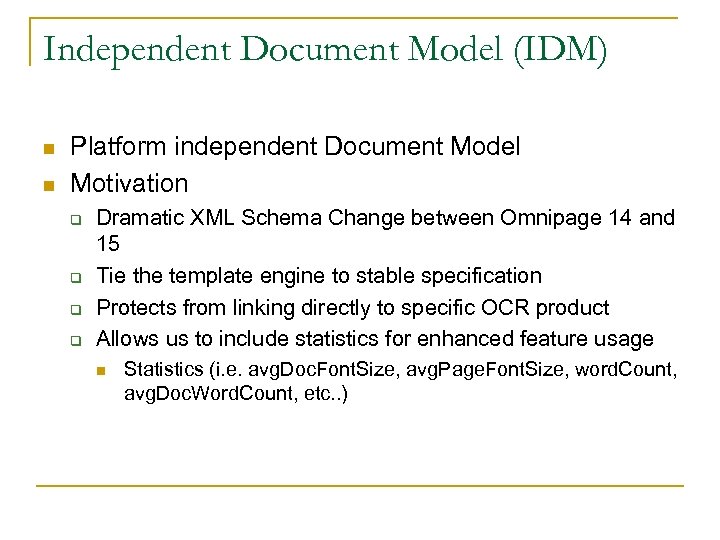

Independent Document Model (IDM)
- Platform-independent document model
- Motivation
  - Dramatic XML schema change between OmniPage 14 and 15
  - Ties the template engine to a stable specification
  - Protects from linking directly to a specific OCR product
  - Allows us to include statistics for enhanced feature usage
- Statistics (e.g., avgDocFontSize, avgPageFontSize, wordCount, avgDocWordCount, etc.)

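
To show what the statistics attributes might look like, the sketch below annotates a tiny document tree in place. The element names (`document`, `page`, `line`) and the `size` attribute are hypothetical stand-ins, not the published IDM schema; the attribute names mirror those on the slide, and `avgDocWordCount` is interpreted as average words per page.

```python
import xml.etree.ElementTree as ET

# The <document>/<page>/<line size="..."> layout is a hypothetical stand-in,
# not the published IDM schema. avgDocWordCount is read as average words
# per page, and font sizes are averaged per line (not weighted by length).


def _avg(values):
    return sum(values) / len(values)


def add_statistics(doc: ET.Element) -> ET.Element:
    """Attach wordCount/avgPageFontSize to each page and
    avgDocFontSize/avgDocWordCount to the document, in place."""
    doc_sizes, page_counts = [], []
    for page in doc.findall("page"):
        lines = page.findall("line")
        sizes = [float(ln.get("size")) for ln in lines if ln.get("size") is not None]
        words = sum(len((ln.text or "").split()) for ln in lines)
        page.set("wordCount", str(words))
        if sizes:
            page.set("avgPageFontSize", f"{_avg(sizes):.2f}")
        doc_sizes.extend(sizes)
        page_counts.append(words)
    if doc_sizes:
        doc.set("avgDocFontSize", f"{_avg(doc_sizes):.2f}")
    if page_counts:
        doc.set("avgDocWordCount", f"{_avg(page_counts):.2f}")
    return doc
```

Precomputing these values once in the model lets every template compare, say, a line's font size against the document average without rescanning the pages.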

Generating IDM
- Use XSLT 2.0 stylesheets to transform
  - Supporting a new OCR schema only requires generating a new XSLT stylesheet; the engine does not change
  - Chain a series of sheets to add functionality (CleanML)
- Schema specification available (http://dtic.cs.odu.edu/devzone/IDM_Specification.doc)

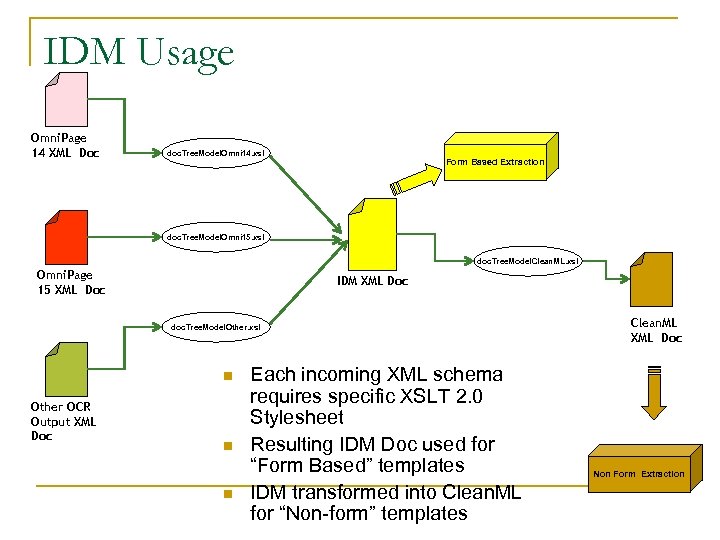

IDM Usage
- OmniPage 14 XML Doc → docTreeModelOmni14.xsl → IDM XML Doc
- OmniPage 15 XML Doc → docTreeModelOmni15.xsl → IDM XML Doc
- Other OCR Output XML Doc → docTreeModelOther.xsl → IDM XML Doc
- IDM XML Doc → Form Based Extraction
- IDM XML Doc → docTreeModelCleanML.xsl → CleanML XML Doc → Non Form Extraction

- Each incoming XML schema requires a specific XSLT 2.0 stylesheet
- The resulting IDM doc is used for "form-based" templates
- IDM is transformed into CleanML for "non-form" templates

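
The diagram's chain can be driven by any XSLT 2.0 processor. The sketch below assumes Saxon-HE's command line (`-s:`, `-xsl:`, `-o:`) and a hypothetical jar path; the stylesheet names are the ones on the slide, while the output file naming and the `omnipage14`/`omnipage15`/`other` keys are assumptions. It only builds the command list, so the planning logic can be checked without Java installed.

```python
import subprocess
from pathlib import Path

# Stylesheet names come from the slide; the Saxon jar path, the output
# file naming, and the format keys are hypothetical.

SAXON_JAR = "saxon-he.jar"
TO_IDM = {
    "omnipage14": "docTreeModelOmni14.xsl",
    "omnipage15": "docTreeModelOmni15.xsl",
    "other": "docTreeModelOther.xsl",
}
IDM_TO_CLEANML = "docTreeModelCleanML.xsl"


def saxon_cmd(source: Path, stylesheet: str, output: Path) -> list[str]:
    return ["java", "-jar", SAXON_JAR, f"-s:{source}", f"-xsl:{stylesheet}", f"-o:{output}"]


def plan_chain(ocr_xml: Path, ocr_format: str, form_based: bool) -> list[list[str]]:
    """OCR XML -> IDM always; IDM -> CleanML only for the non-form engine.
    An unknown ocr_format raises KeyError: it needs its own stylesheet."""
    idm = ocr_xml.with_name(ocr_xml.stem + ".idm.xml")
    steps = [saxon_cmd(ocr_xml, TO_IDM[ocr_format], idm)]
    if not form_based:
        cleanml = ocr_xml.with_name(ocr_xml.stem + ".cleanml.xml")
        steps.append(saxon_cmd(idm, IDM_TO_CLEANML, cleanml))
    return steps


def run_chain(steps: list[list[str]]) -> None:
    for cmd in steps:
        subprocess.run(cmd, check=True)  # stop at the first failing stylesheet
```

Adding a new OCR product then means adding one stylesheet and one dictionary entry, which matches the slide's point that the engine itself does not change.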

IDM Tool Status
- Converters completed to generate IDM from OmniPage 14 and 15 XML
  - OmniPage 15 proved to have numerous errors in its representation of an OCR'd document
  - Consequently, it is not recommended
- Form-based extraction engine revised to work from IDM
- Non-form engine still works from our older "CleanXML"
  - Converter from IDM to CleanXML completed as a stop-gap measure
  - Direct use of IDM deferred pending review of other engine modifications

Post Processing
- No significant changes

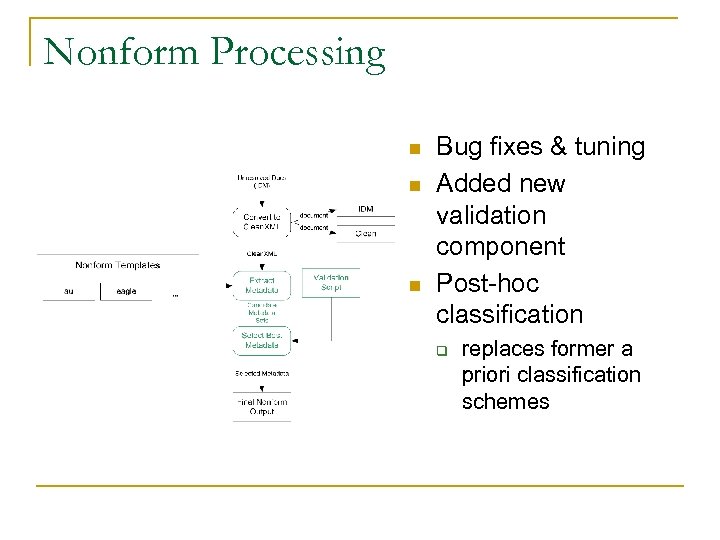

Nonform Processing
- Bug fixes & tuning
- Added new validation component
- Post-hoc classification
  - replaces former a priori classification schemes

Validation
- Given a set of extracted metadata:
  - mark each field with a confidence value indicating how trustworthy the extracted value is
  - mark the set with a composite confidence score
- Fields and sets with low confidence scores may be referred for additional processing:
  - automated post-processing
  - human intervention and correction

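
A minimal sketch of the validation step, assuming each field test returns a confidence in [0, 1]. The slides do not say how field scores are combined, so the weighted mean, the per-field weights, and the single 0.5 referral threshold here are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical sketch: each field test returns a confidence in [0, 1], the
# composite is a weighted mean, and one threshold triggers referral. The
# slides do not say how scores are combined; these choices are assumptions.

FieldTest = Callable[[str], float]


@dataclass
class ValidationResult:
    field_scores: dict[str, float]
    composite: float
    low_fields: list[str] = field(default_factory=list)
    needs_review: bool = False  # refer to post-processing or a human


def validate(metadata: dict[str, str], tests: dict[str, FieldTest],
             weights: dict[str, float], threshold: float = 0.5) -> ValidationResult:
    # Fields without a registered test are not scored.
    scores = {name: tests[name](value) for name, value in metadata.items() if name in tests}
    total = sum(weights.get(n, 1.0) for n in scores)
    composite = sum(s * weights.get(n, 1.0) for n, s in scores.items()) / total if total else 0.0
    low = [n for n, s in scores.items() if s < threshold]
    return ValidationResult(scores, composite, low, composite < threshold or bool(low))
```

Keeping per-field scores alongside the composite lets a reviewer jump straight to the weak fields instead of re-checking the whole record.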

Validating Extracted Metadata
- Techniques must be independent of the extraction method
- A validation specification is written for each collection, combining field-specific validation rules:
  - statistical models derived for each field of
    - text length
    - % of words from English dictionary
    - % of phrases from knowledge base prepared for that field
  - pattern matching

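
The field tests listed above might be sketched as follows. The class and function names are hypothetical, and so are the scoring rules (a two-standard-deviation band for length, plain ratios for dictionary and phrase coverage, an all-or-nothing regex match for dates); a real validation specification would be fitted per collection.

```python
import re
import statistics

# Hypothetical field tests of the kinds listed on the slide. The scoring
# rules are illustrative assumptions, not the ODU validator.


class LengthModel:
    """Statistical model of a field's length in words, fitted on known-good values."""

    def __init__(self, samples: list[str]):
        lengths = [len(s.split()) for s in samples]
        self.mean = statistics.mean(lengths)
        self.stdev = statistics.pstdev(lengths) or 1.0  # avoid division by zero

    def score(self, value: str) -> float:
        z = abs(len(value.split()) - self.mean) / self.stdev
        # Full confidence within 2 standard deviations, fading to 0 at 4.
        return 1.0 if z <= 2 else max(0.0, 1.0 - (z - 2) / 2)


def dictionary_ratio(value: str, english_words: set[str]) -> float:
    """Fraction of the value's words found in an English dictionary."""
    words = re.findall(r"[a-z]+", value.lower())
    return sum(w in english_words for w in words) / len(words) if words else 0.0


def phrase_ratio(value: str, known_phrases: set[str]) -> float:
    """Fraction of ';'-separated phrases (e.g. author names) in a knowledge base."""
    phrases = [p.strip() for p in value.split(";") if p.strip()]
    return sum(p in known_phrases for p in phrases) / len(phrases) if phrases else 0.0


DATE_PATTERN = re.compile(r"\d{2} (JAN|FEB|MAR|APR|MAY|JUN|JUL|AUG|SEP|OCT|NOV|DEC) \d{4}")


def pattern_score(value: str, pattern: re.Pattern = DATE_PATTERN) -> float:
    return 1.0 if pattern.fullmatch(value.strip()) else 0.0
```

Each test depends only on the extracted value, not on how it was extracted, which is the independence requirement stated on the slide.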

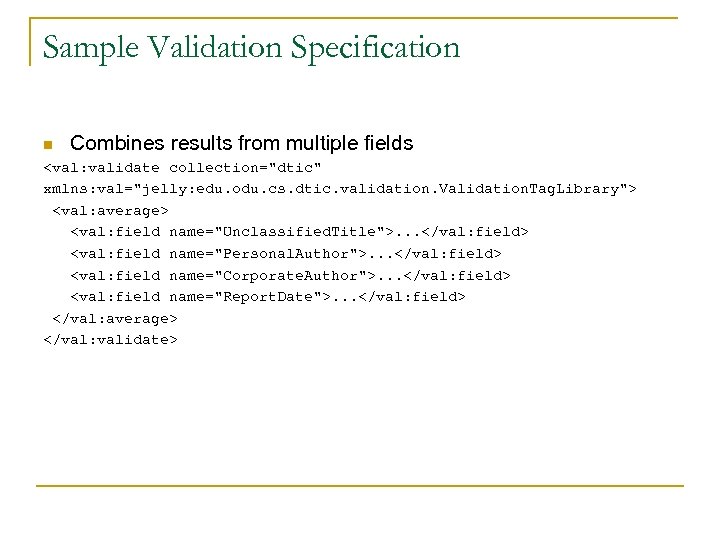

Sample Validation Specification
- Combines results from multiple fields

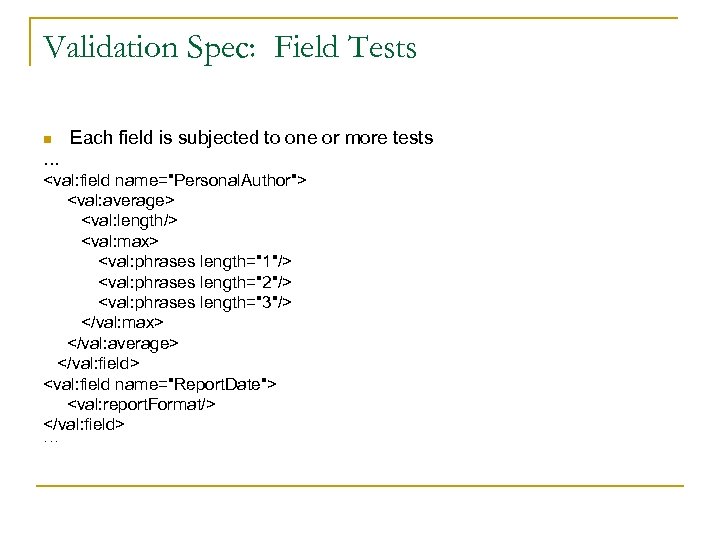

Validation Spec: Field Tests
- Each field is subjected to one or more tests …

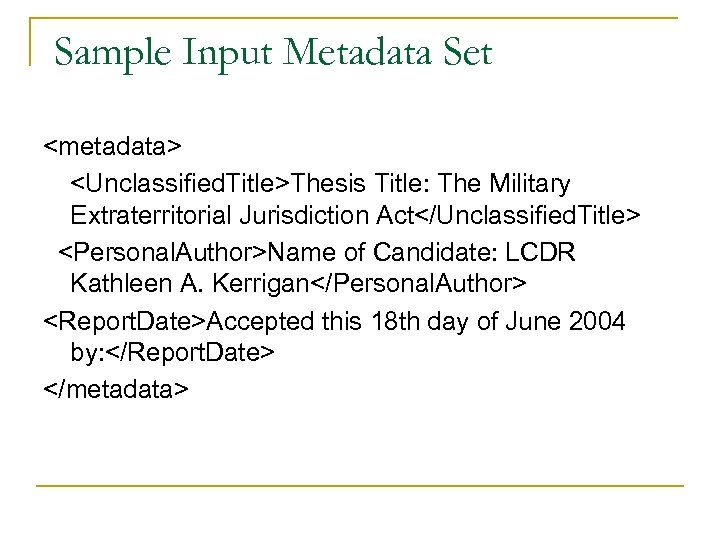

Sample Input Metadata Set

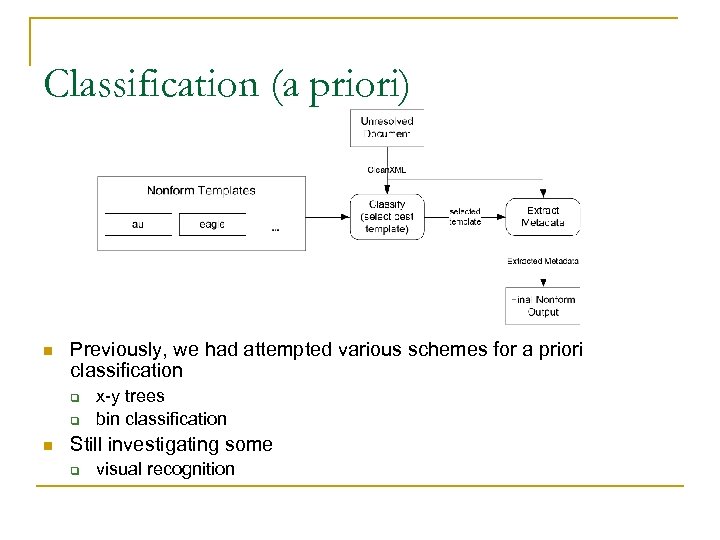

Classification (a priori)
- Previously, we had attempted various schemes for a priori classification:
  - x-y trees
  - bin classification
- Still investigating some:
  - visual recognition

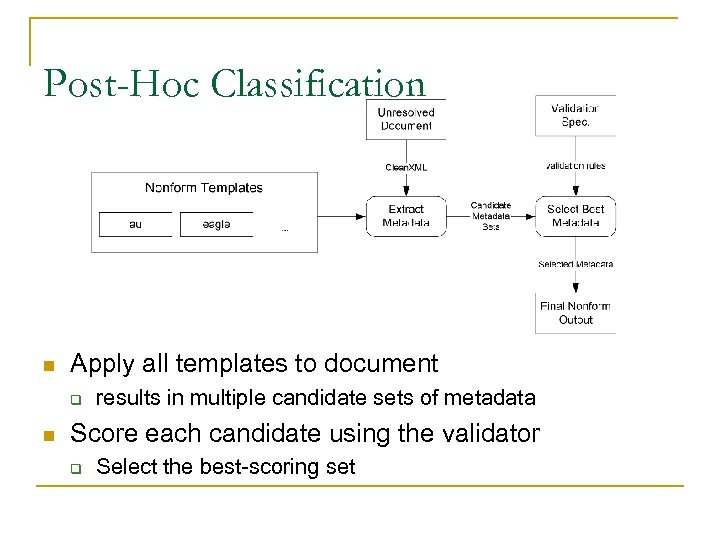

Post-Hoc Classification
- Apply all templates to the document
  - results in multiple candidate sets of metadata
- Score each candidate using the validator
  - Select the best-scoring set

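
Post-hoc classification reduces to an arg-max over template outputs. A minimal sketch, assuming each template is a callable that returns a metadata dict or `None` when it cannot match, and that the validator returns a single composite score; both signatures are hypothetical. Ties go to the first template in iteration order.

```python
from typing import Callable, Optional

# Hypothetical signatures: a template maps a document (here just its text)
# to a metadata dict, or None if it does not apply; the validator maps a
# metadata dict to a composite confidence score.

Template = Callable[[str], Optional[dict]]
Validator = Callable[[dict], float]


def classify_post_hoc(document: str, templates: dict[str, Template], validator: Validator):
    """Apply every template, score each candidate, and keep the best one.
    Returns (template_name, metadata, score); (None, None, -inf) if none apply."""
    best_name, best_meta, best_score = None, None, float("-inf")
    for name, template in templates.items():
        candidate = template(document)
        if candidate is None:
            continue
        score = validator(candidate)
        if score > best_score:  # strict '>' keeps the earlier template on ties
            best_name, best_meta, best_score = name, candidate, score
    return best_name, best_meta, best_score
```

The trade-off against a priori classification is cost: every template runs on every document, but no separate classifier has to be trained or kept in step with the template set.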

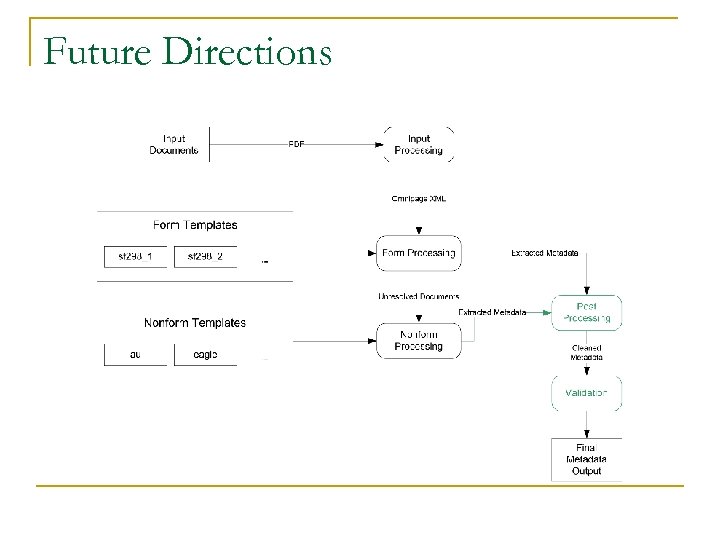

Future Directions
