- Number of slides: 18

Mellanox Technologies
Maximize World-Class Cluster Performance
April 2008
Gilad Shainer, Director of Technical Marketing
CONFIDENTIAL

Mellanox Technologies

- Fabless semiconductor supplier founded in 1999
  - Business in California
  - R&D and Operations in Israel
  - Global sales offices and support
  - 250+ employees worldwide
- Leading server and storage interconnect products
  - InfiniBand and Ethernet leadership
- Shipped over 2.8M 10 and 20 Gb/s ports as of Dec 2007
- $106M raised in Feb 2007 IPO on NASDAQ (MLNX)
  - Dual listed on Tel Aviv Stock Exchange (TASE: MLNX)
  - Profitable since 2005
- Revenues: FY06 = $48.5M, FY07 = $84.1M
  - 73% yearly growth
  - 1Q08 guidance ~$24.8M
- Customers include Cisco, Dawning, Dell, Fujitsu-Siemens, HP, IBM, NEC, NetApp, Sun, Voltaire

Mellanox Confidential

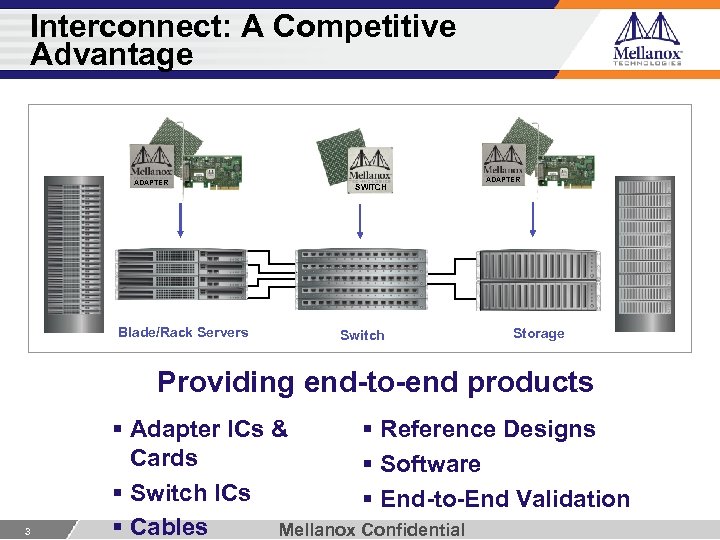

Interconnect: A Competitive Advantage

[Diagram: blade/rack servers (adapter), switch, storage (adapter)]

Providing end-to-end products

- Adapter ICs & Cards
- Switch ICs
- Cables
- Reference Designs
- Software
- End-to-End Validation

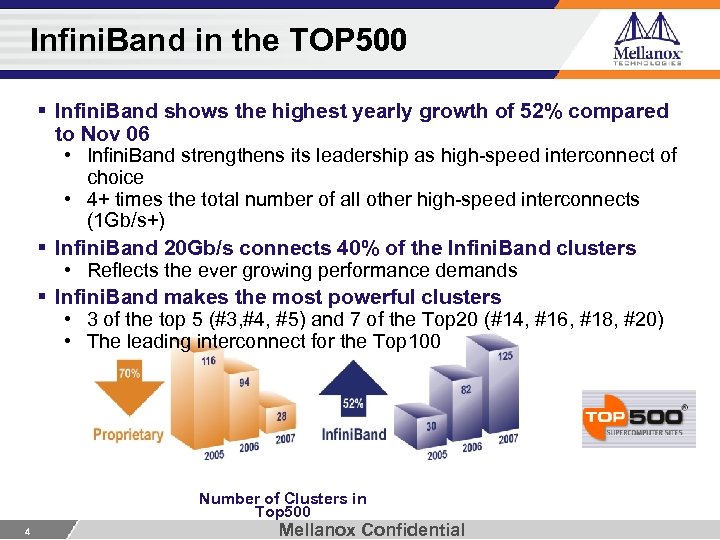

InfiniBand in the TOP500

- InfiniBand shows the highest yearly growth of 52% compared to Nov 06
  - InfiniBand strengthens its leadership as the high-speed interconnect of choice
  - 4+ times the total number of all other high-speed interconnects (1 Gb/s+)
- InfiniBand 20 Gb/s connects 40% of the InfiniBand clusters
  - Reflects the ever-growing performance demands
- InfiniBand makes the most powerful clusters
  - 3 of the top 5 (#3, #4, #5) and 7 of the Top 20 (#14, #16, #18, #20)
  - The leading interconnect for the Top 100

[Chart: Number of Clusters in Top 500]

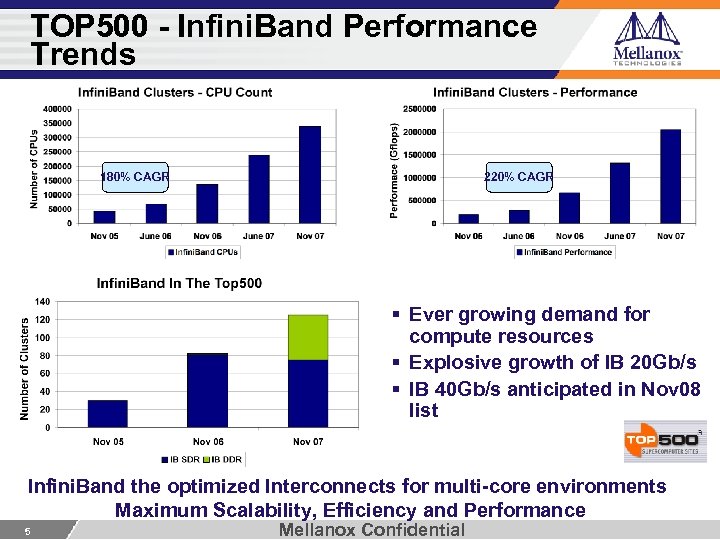

TOP500: InfiniBand Performance Trends

[Chart annotations: 180% CAGR, 220% CAGR]

- Ever-growing demand for compute resources
- Explosive growth of IB 20 Gb/s
- IB 40 Gb/s anticipated in Nov 08 list

InfiniBand: the optimized interconnect for multi-core environments
Maximum Scalability, Efficiency and Performance

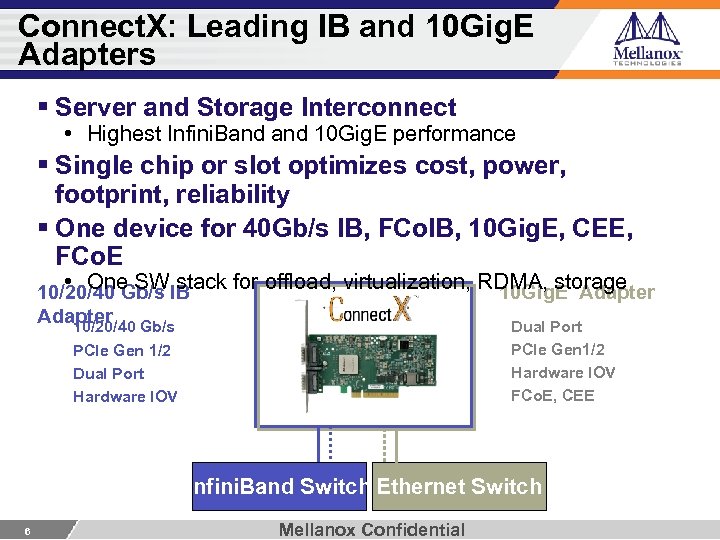

ConnectX: Leading IB and 10 GigE Adapters

- Server and storage interconnect
  - Highest InfiniBand and 10 GigE performance
- Single chip or slot optimizes cost, power, footprint, reliability
- One device for 40 Gb/s IB, FCoIB, 10 GigE, CEE, FCoE
  - One SW stack for offload, virtualization, RDMA, storage

[Diagram: InfiniBand adapter (dual port, 10/20/40 Gb/s, PCIe Gen 1/2, hardware IOV) connected to an InfiniBand switch; 10 GigE adapter (dual port, PCIe Gen 1/2, hardware IOV, FCoE, CEE) connected to an Ethernet switch]

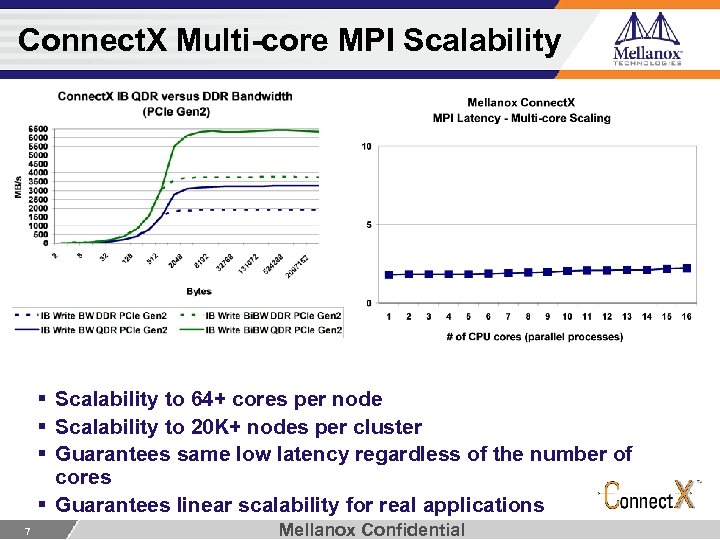

ConnectX Multi-core MPI Scalability

- Scalability to 64+ cores per node
- Scalability to 20K+ nodes per cluster
- Guarantees same low latency regardless of the number of cores
- Guarantees linear scalability for real applications

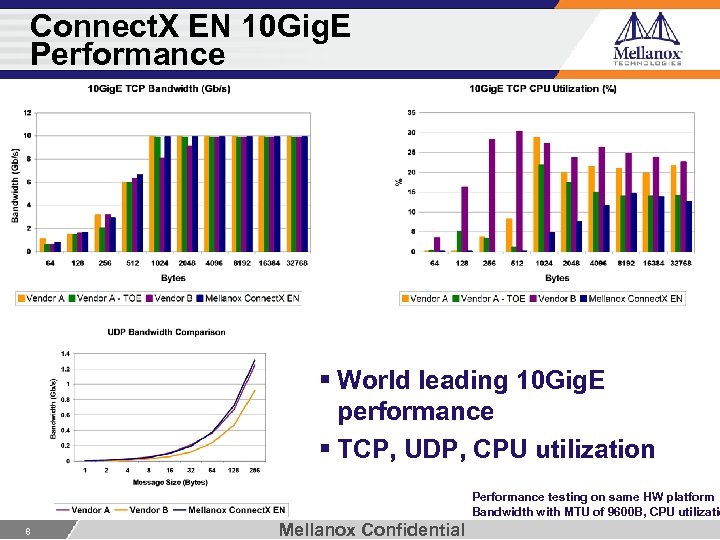

ConnectX EN 10 GigE Performance

- World-leading 10 GigE performance
- TCP, UDP, CPU utilization

[Chart note: performance testing on same HW platform; bandwidth with MTU of 9600 B, CPU utilization]

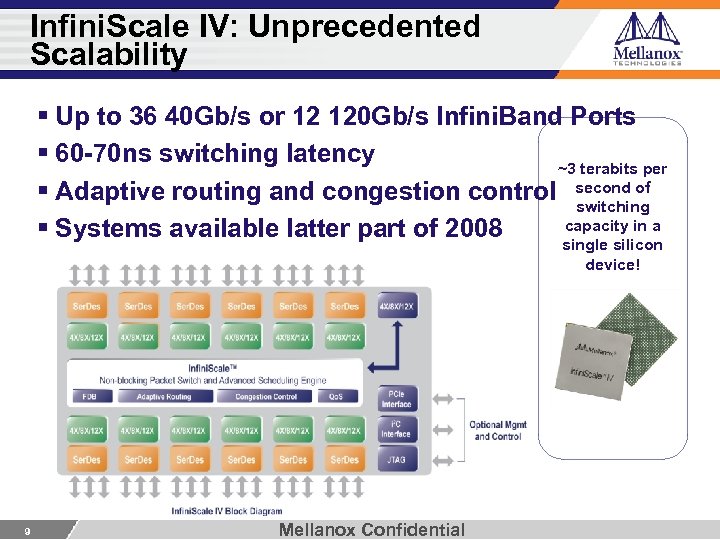

InfiniScale IV: Unprecedented Scalability

- Up to 36 × 40 Gb/s or 12 × 120 Gb/s InfiniBand ports
- 60-70 ns switching latency
- Adaptive routing and congestion control
- Systems available latter part of 2008

~3 terabits per second of switching capacity in a single silicon device!

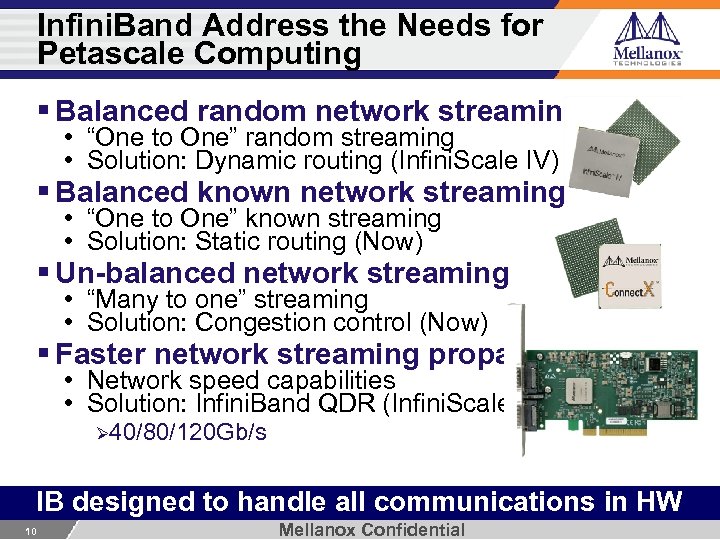
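The "~3 terabits per second" figure is consistent with simple port arithmetic if capacity is counted in both directions on every port. That counting convention is an assumption here; the slide does not say how the number is derived. A minimal sketch, with a hypothetical helper name:

```python
def switching_capacity_tbps(ports: int, port_gbps: int, bidirectional: bool = True) -> float:
    """Aggregate capacity in Tb/s for a switch whose ports all run at the same speed."""
    # Assumption: "switching capacity" counts traffic in both directions per port.
    directions = 2 if bidirectional else 1
    return ports * port_gbps * directions / 1000.0


# The two port configurations listed on the slide.
capacity_36x40 = switching_capacity_tbps(36, 40)    # 36 ports at 40 Gb/s
capacity_12x120 = switching_capacity_tbps(12, 120)  # 12 ports at 120 Gb/s
print(capacity_36x40, capacity_12x120)
```

Both configurations give the same aggregate, which rounds to the quoted ~3 Tb/s; counting one direction only would give half of that.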

InfiniBand Addresses the Needs of Petascale Computing

- Balanced random network streaming
  - “One to One” random streaming
  - Solution: Dynamic routing (InfiniScale IV)
- Balanced known network streaming
  - “One to One” known streaming
  - Solution: Static routing (Now)
- Unbalanced network streaming
  - “Many to one” streaming
  - Solution: Congestion control (Now)
- Faster network streaming propagation
  - Network speed capabilities
  - Solution: InfiniBand QDR (InfiniScale IV)
- 40/80/120 Gb/s IB designed to handle all communications in HW

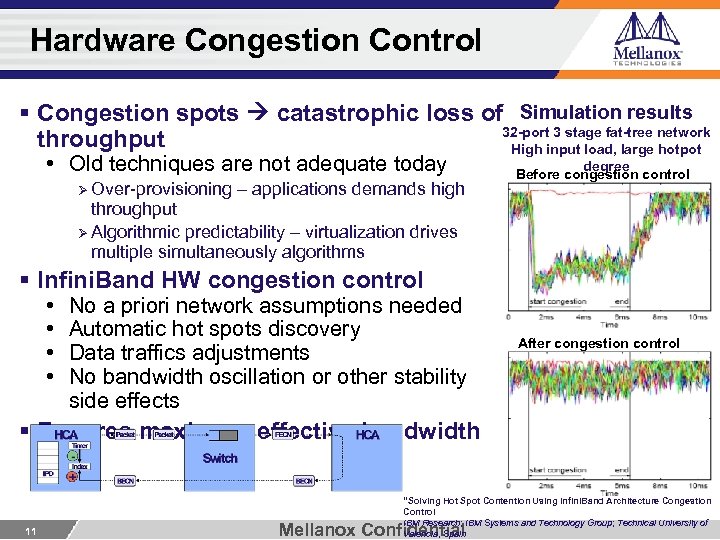

Hardware Congestion Control

- Congestion spots cause catastrophic loss of throughput
  - Old techniques are not adequate today
    - Over-provisioning: applications demand high throughput
    - Algorithmic predictability: virtualization drives multiple simultaneous algorithms
- InfiniBand HW congestion control
  - No a priori network assumptions needed
  - Automatic hot spot discovery
  - Data traffic adjustments
  - No bandwidth oscillation or other stability side effects
- Ensures maximum effective bandwidth

[Charts: simulation results for a 32-port, 3-stage fat-tree network under high input load with a large hot spot degree, before and after congestion control]

Source: “Solving Hot Spot Contention Using InfiniBand Architecture Congestion Control”, IBM Research; IBM Systems and Technology Group; Technical University of Valencia, Spain

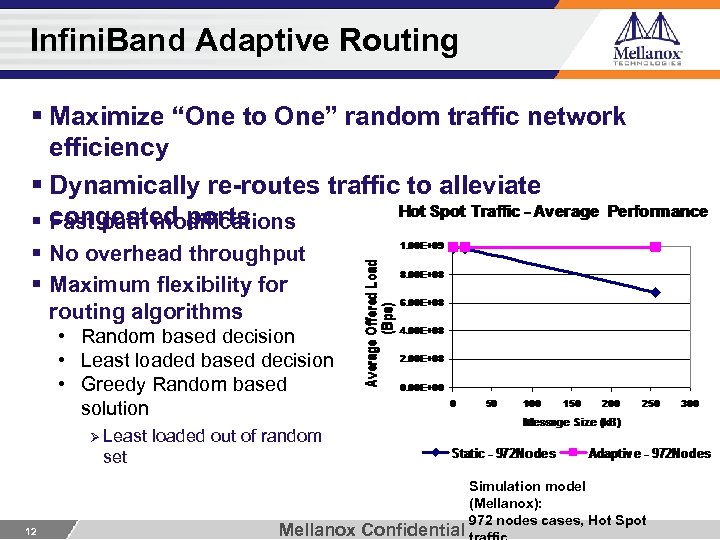

InfiniBand Adaptive Routing

- Maximizes “One to One” random traffic network efficiency
- Dynamically re-routes traffic to alleviate congested ports
- Fast path modifications
- No throughput overhead
- Maximum flexibility for routing algorithms
  - Random-based decision
  - Least-loaded-based decision
  - Greedy-random-based solution
    - Least loaded out of a random set

[Chart: simulation model (Mellanox), 972-node cases, hot spot]
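The three routing decisions named on this slide can be illustrated with a small sketch. It is not the switch's actual implementation: the port-to-queue-depth map, the function names, and the `sample_size` parameter are all hypothetical and exist only to show how the policies differ.

```python
import random
from typing import Dict

# Hypothetical model: each candidate output port maps to its current queue depth.
PortLoads = Dict[int, int]


def pick_random(loads: PortLoads, rng: random.Random) -> int:
    """Random-based decision: any candidate port, ignoring load."""
    return rng.choice(sorted(loads))


def pick_least_loaded(loads: PortLoads) -> int:
    """Least-loaded decision: shallowest queue, lowest port number on ties."""
    return min(sorted(loads), key=lambda port: loads[port])


def pick_greedy_random(loads: PortLoads, rng: random.Random, sample_size: int = 2) -> int:
    """Greedy random: draw a random subset of ports, then take its least-loaded member."""
    candidates = rng.sample(sorted(loads), min(sample_size, len(loads)))
    return min(sorted(candidates), key=lambda port: loads[port])


loads = {1: 7, 2: 0, 3: 4, 4: 9}
rng = random.Random(0)  # seeded only so repeated runs behave the same
print(pick_random(loads, rng), pick_least_loaded(loads), pick_greedy_random(loads, rng))
```

In this model, greedy random inspects only `sample_size` ports per decision instead of all of them, so it trades some accuracy for less work per packet.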

InfiniBand QDR 40 Gb/s Technology

- Superior performance for HPC applications
  - Highest bandwidth, 1 µs node-to-node latency
  - Low CPU overhead, MPI offloads
  - Designed for current and future multi-core environments
- Addresses the needs of Petascale Computing
  - Adaptive routing, congestion control, large-scale switching
  - Fast network streaming propagation
- Consolidated I/O for server and storage
  - Optimizes cost and reduces power consumption

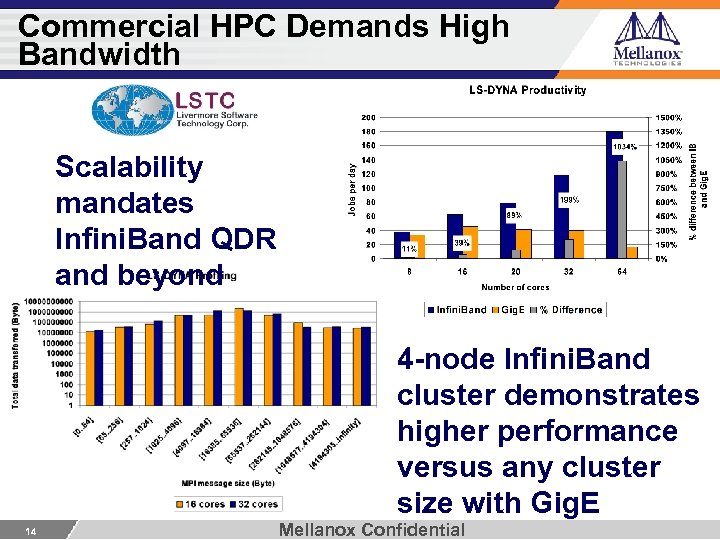

Commercial HPC Demands High Bandwidth

Scalability mandates InfiniBand QDR and beyond

A 4-node InfiniBand cluster demonstrates higher performance than a GigE cluster of any size

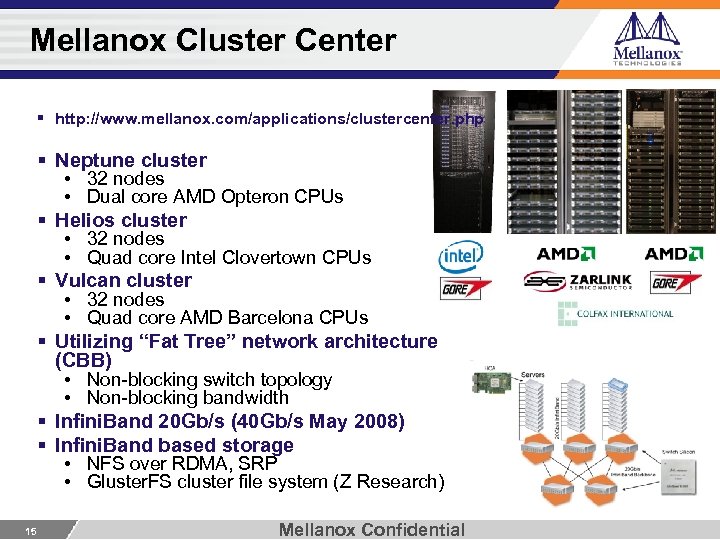

Mellanox Cluster Center

- http://www.mellanox.com/applications/clustercenter.php
- Neptune cluster
  - 32 nodes
  - Dual-core AMD Opteron CPUs
- Helios cluster
  - 32 nodes
  - Quad-core Intel Clovertown CPUs
- Vulcan cluster
  - 32 nodes
  - Quad-core AMD Barcelona CPUs
- Utilizing “Fat Tree” network architecture (CBB)
  - Non-blocking switch topology
  - Non-blocking bandwidth
- InfiniBand 20 Gb/s (40 Gb/s May 2008)
- InfiniBand-based storage
  - NFS over RDMA, SRP
  - GlusterFS cluster file system (Z Research)
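A non-blocking (constant bisectional bandwidth) two-level fat tree is commonly built by giving each leaf switch as many uplinks as host ports. The sketch below sizes such a tree under that assumption. The slide does not state the switch radix used in the Cluster Center, so the radix values are illustrative and the function names are hypothetical.

```python
import math


def max_hosts_two_level(radix: int) -> int:
    """Largest non-blocking two-level fat tree built from switches with `radix` ports."""
    # Up to `radix` leaf switches, each with radix/2 host ports and radix/2 uplinks.
    return radix * (radix // 2)


def leaf_switches_needed(hosts: int, radix: int) -> int:
    """Leaf switches required so that every host port is matched by an uplink."""
    if hosts <= radix:
        return 1  # a single switch is already non-blocking
    if hosts > max_hosts_two_level(radix):
        raise ValueError("more hosts than a two-level tree can serve")
    return math.ceil(hosts / (radix // 2))


# Illustrative radix values, not taken from the slide.
print(max_hosts_two_level(24), max_hosts_two_level(36))
print(leaf_switches_needed(32, 24), leaf_switches_needed(32, 36))
```

With a 36-port switch, a 32-node cluster fits on one device; with a smaller radix, the same 32 nodes need several leaf switches plus a spine layer to stay non-blocking.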

Summary

- Market-wide adoption of InfiniBand
  - Servers/blades, storage and switch systems
  - Data Centers, High-Performance Computing, Embedded
  - Performance, Price, Power, Reliable, Efficient, Scalable
  - Mature software ecosystem
- 4th Generation adapter extends connectivity to IB and Eth
  - Market-leading performance, capabilities and flexibility
  - Multiple 1000+ node clusters already deployed
- 4th Generation switch available May 08
  - 40 Gb/s server and storage connections, 120 Gb/s switch-to-switch links
  - 60-70 ns latency, 36 ports in a single switch chip, 3 Terabits/second
- Driving key trends in the market
  - Clustering/blades, low-latency, I/O consolidation, multi-core CPUs and virtualization

Thank You

Gilad Shainer
shainer@mellanox.com

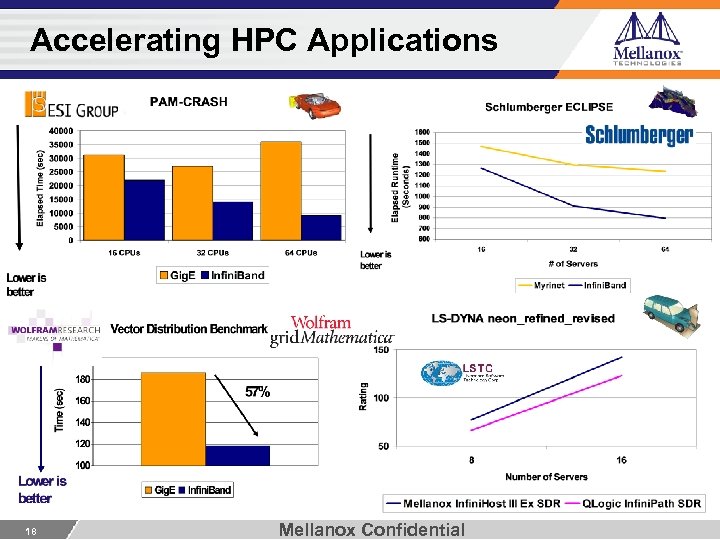

Accelerating HPC Applications
