- Number of slides: 26

Massimo Poesio: Good morning. In this presentation I am going to summarize my research interests, at the intersection of cognition, computation, and language.

COMPUTATIONAL APPROACHES TO REFERENCE
Jeanette Gundel & Massimo Poesio
LSA Summer Institute

The syllabus
- Week 1: Linguistic and psychological evidence concerning referring expressions and their interpretation
- Week 2: The interpretation of pronouns
- Week 3: Definite descriptions and demonstratives

Today
- Linguistic evidence about demonstratives (Jeanette)
- Poesio and Modjeska: Corpus-based verification of the THIS-NP Hypothesis
- The Byron algorithm

RESOLVING DEMONSTRATIVES: THE PHORA ALGORITHM
LSA Summer Institute, July 17th, 2003

This lecture
- Pronouns and demonstratives are different
- Pronoun resolution algorithms for demonstratives
- PHORA
- Evaluation

Pronouns vs. demonstratives: recap of the facts
- Put the apple on the napkin and then
  - move it to the side.
  - move that to the side.
- John thought about {becoming a bum}.
  - It would hurt his mother and it would make his father furious.
  - It would hurt his mother and that would make his father furious.

(Schuster, 1988)

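‘Uniform’ pronoun resolution algorithms: LRC (Tetreault, 2001)

| | It | They | Them | PRO total | That | This | Those | DEM total | Total |
|---|---|---|---|---|---|---|---|---|---|
| A. Total number | 89 | 19 | 10 | 118 | 127 | 2 | 18 | 147 | 265 |
| B. Expletives | 32 | 0 | 0 | 32 | 2 | 0 | 0 | 2 | 34 |
| C. Det / rel pro | 0 | 0 | 0 | 0 | 25 | 1 | 8 | 34 | 34 |
| D. Non-ref (B+C) | 32 | 0 | 0 | 32 | 27 | 1 | 8 | 36 | 68 |
| E. Total ref (A-D) | 57 | 19 | 10 | 86 | 100 | 1 | 10 | 111 | 197 |
| G. Abandoned utt. | 7 | 0 | 0 | 7 | 7 | 0 | 4 | 11 | 18 |
| I. Eval set | 50 | 19 | 10 | 79 | 93 | 1 | 6 | 100 | 179 |
| LRC | 27 | 16 | 8 | 51 (65%) | 10 | 0 | 4 | 14 (14%) | 65 (36%) |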
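
The derived rows follow from their labels: D = B + C, E = A - D, I = E - G, and each accuracy is the number resolved correctly divided by the evaluation set I. A minimal Python check of that arithmetic, using rows A, D, G and the LRC row as printed. The dictionary and function names are ours, not Tetreault's.

```python
# Per-column counts copied from rows A, D, G and the LRC row of the table.
PRONOUNS = ["It", "They", "Them"]
DEMONSTRATIVES = ["That", "This", "Those"]

total_number = {"It": 89, "They": 19, "Them": 10, "That": 127, "This": 2, "Those": 18}  # row A
non_referential = {"It": 32, "They": 0, "Them": 0, "That": 27, "This": 1, "Those": 8}  # row D
abandoned = {"It": 7, "They": 0, "Them": 0, "That": 7, "This": 0, "Those": 4}          # row G
lrc_correct = {"It": 27, "They": 16, "Them": 8, "That": 10, "This": 0, "Those": 4}     # LRC row


def eval_set(column: str) -> int:
    """Row I = (A - D) - G: referential pronouns outside abandoned utterances."""
    return total_number[column] - non_referential[column] - abandoned[column]


def accuracy(correct: dict, columns: list) -> tuple:
    """Return (number correct, evaluation-set size, rounded percentage)."""
    k = sum(correct[c] for c in columns)
    n = sum(eval_set(c) for c in columns)
    return k, n, round(100 * k / n)


for label, cols in [("PRO", PRONOUNS), ("DEM", DEMONSTRATIVES),
                    ("Total", PRONOUNS + DEMONSTRATIVES)]:
    print(label, accuracy(lrc_correct, cols))
```

The overall LRC figure works out to 65/179, about 36%.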

PHORA (Byron, 2002)
- Resolves both personal and demonstrative pronouns
- Uses SEPARATE algorithms for the two (cf. Sidner, 1979)

The Discourse Model, I: Mentioned Entities and Activated Entities
- The discourse model contains TWO separate lists of objects
- MENTIONED ENTITIES: interpretations of NPs
  - One for each referring expression: proper names, descriptive NPs, demonstrative pronouns, and 3rd-person personal, possessive, and reflexive pronouns
- ACTIVATED ENTITIES:
  - Each clause may evoke more than one PROXY for linguistic constituents other than NPs
  - REFERRING FUNCTIONS are used to extract their interpretation
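
One way to picture the two lists is as plain records. This is a minimal Python sketch under our own assumptions: the class and field names are hypothetical, and a proxy's referring functions are modelled as a dictionary from function name to interpretation, where a missing key means the function is undefined for that proxy.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class MentionedEntity:
    """Interpretation of one referring NP (field names are hypothetical)."""
    expression: str  # surface form, e.g. "Engine 1"
    number: str      # "sing" or "plur"
    sem_type: str    # e.g. "ENGINE", "CITY"
    referent: str    # e.g. "ENG1"


@dataclass
class Proxy:
    """Activated entity standing in for a non-NP constituent."""
    constituent: str  # e.g. "all of G0"
    # Referring-function name -> interpretation; a missing key means "undefined".
    functions: Dict[str, str] = field(default_factory=dict)

    def apply(self, function_name: str) -> Optional[str]:
        """Return the interpretation under function_name, or None if undefined."""
        return self.functions.get(function_name)


@dataclass
class DiscourseModel:
    """The two separate lists kept by the discourse model."""
    mentioned: List[MentionedEntity] = field(default_factory=list)
    activated: List[Proxy] = field(default_factory=list)


# The discourse model after G0, "Engine 1 goes to Avon to get the oranges".
# Interpretation labels such as "event(G0)" are invented for illustration.
dm = DiscourseModel(
    mentioned=[
        MentionedEntity("Engine 1", "sing", "ENGINE", "ENG1"),
        MentionedEntity("Avon", "sing", "CITY", "AVON"),
        MentionedEntity("the oranges", "plur", "ORANGE", "ORANGES1"),
    ],
    activated=[
        Proxy("to get oranges", {"kind": "kind(get-oranges)"}),
        Proxy("all of G0", {"event": "event(G0)"}),
        Proxy("the plan"),  # referring functions left unspecified here
    ],
)
```

Keeping proxies in their own list makes it easy to search them first or last, which is exactly where the personal and demonstrative search orders differ.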

The Discourse Model, II
- Mentioned discourse entities (DEs) remain in the discourse model (DM) for the entire discourse
- Activated DEs are updated after every CLAUSE
- The FOCUS is the first-mentioned entity of a clause; it is updated after each clause
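
The update policy can be sketched as one function called at each clause boundary. A minimal Python sketch, assuming entities are plain string labels and that a clause with no mentioned entities leaves no focus (the slide does not say what happens in that case). The function name `update_after_clause` is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DiscourseModel:
    # Mentioned entities grouped by clause, oldest clause first.
    mentioned: List[List[str]] = field(default_factory=list)
    # Activated entities (proxies) of the most recent clause only.
    activated: List[str] = field(default_factory=list)
    focus: Optional[str] = None


def update_after_clause(dm: DiscourseModel, mentions: List[str], proxies: List[str]) -> None:
    """Apply the per-clause update rules to dm in place."""
    dm.mentioned.append(list(mentions))  # mentioned DEs are kept for the whole discourse
    dm.activated = list(proxies)         # activated DEs are replaced after every clause
    # Focus = first-mentioned entity; None for a clause with no mentions is our assumption.
    dm.focus = mentions[0] if mentions else None


dm = DiscourseModel()
update_after_clause(dm, ["ENG1", "AVON", "ORANGES1"],
                    ["to get oranges", "all of G0", "the plan"])         # G0
update_after_clause(dm, ["ENG1", "AVON", "3pm"], ["all of G1a", "the plan"])  # G1a
print(dm.focus, dm.activated, len(dm.mentioned))
```

After G1a the G0 proxies are gone, while the G0 mentions are still available for the backwards search.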

Examples of Proxies
- Infinitival / gerund: TO LOAD THE BOXCARS takes one hour
- Entire clause: ENGINE E3 IS AT CORNING
- Complement THAT-clause: I think THAT ENGINE E3 IS AT CORNING
- Subordinate clause: If ENGINE E3 IS AT CORNING, we should send it to Bath.

Outline of the algorithm
1. Build discourse proxies for Discourse Unit n (DUn)
2. For each pronoun p in DUn+1:
   a. Calculate the MOST GENERAL SEMANTIC TYPE T that satisfies the constraints on the predicate-argument position in which the pronoun occurs
   b. Find a referent that matches p, using different search orders for personal and demonstrative pronouns
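
Read as code, the outline is a two-step loop. The Python sketch below is only a skeleton: `build_proxies`, `most_general_type` and `search` are hypothetical callables standing in for steps 1, 2a and 2b, and the toy lambdas in the usage lines are invented.

```python
from typing import Callable, List, Optional, Tuple


def resolve_unit(
    prev_unit: str,
    pronouns: List[str],
    build_proxies: Callable[[str], List[str]],
    most_general_type: Callable[[str], str],
    search: Callable[[str, str, List[str]], Optional[str]],
) -> List[Tuple[str, Optional[str]]]:
    """Skeleton of the outline: resolve each pronoun of DU(n+1) given DU(n)."""
    proxies = build_proxies(prev_unit)            # step 1: proxies for DU(n)
    results: List[Tuple[str, Optional[str]]] = []
    for p in pronouns:                            # step 2: each pronoun p in DU(n+1)
        sem_type = most_general_type(p)           # 2a: type required by p's argument position
        results.append((p, search(p, sem_type, proxies)))  # 2b: pronoun-specific search
    return results


# Toy stand-ins for the three hypothetical components.
answers = resolve_unit(
    "The train arrived at Avon.",
    ["that"],
    build_proxies=lambda unit: ["all of: " + unit],
    most_general_type=lambda p: "EVENT",
    search=lambda p, t, proxies: proxies[0] if t == "EVENT" else None,
)
print(answers)
```

Returning a list of pairs rather than a dictionary keeps two occurrences of the same pronoun in one unit apart.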

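The importance of semantic context
- Often the predicate imposes strong constraints on the type of objects that may serve as antecedents of the pronoun
- Eckert and Strube (2000): I-INCOMPATIBLE vs. A-INCOMPATIBLE contexts
  - That’s right (I-incompatible: the antecedent cannot be a concrete individual)
  - Let me help you lift THAT (A-incompatible: the antecedent cannot be an abstract object)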

Semantic Constraints in PHORA
- VERB’s SELECTIONAL RESTRICTIONS:
  - Load THEM into the boxcar → CARGO(X)
- PREDICATE NPs: force a same-type interpretation
  - That’s a good route → ROUTE(X)
- PREDICATE ADJECTIVES:
  - It’s right → CORRECT(X), hence PROPOSITION(X)
- NO CONSTRAINT:
  - That’s good → ACCEPTABLE(X)
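
The constraint step can be approximated with a lookup from (predicate, argument role) to the most general type that position accepts, plus an is-a hierarchy for checking candidates. Everything in this Python sketch (the table entries, the role name "theme" for every example, and the hierarchy) is a hypothetical toy drawn from the examples on these slides, not PHORA's actual lexicon.

```python
from typing import Dict, Optional, Tuple

# Hypothetical is-a links: child type -> parent type.
ISA: Dict[str, str] = {
    "ORANGE": "CARGO",
    "CARGO": "MOVABLE-OBJECT",
    "ENGINE": "MOVABLE-OBJECT",
    "CITY": "LOCATION",
}

# Hypothetical (predicate, role) -> most general acceptable type; None = no constraint.
CONSTRAINTS: Dict[Tuple[str, str], Optional[str]] = {
    ("LOAD", "theme"): "CARGO",             # Load THEM into the boxcar
    ("ROUTE", "theme"): "ROUTE",            # That's a good route
    ("CORRECT", "theme"): "PROPOSITION",    # It's right
    ("ACCEPTABLE", "theme"): None,          # That's good
    ("ARRIVE", "theme"): "MOVABLE-OBJECT",  # So it'll get there at 3 pm
}


def most_general_type(predicate: str, role: str) -> Optional[str]:
    """Type required at this argument position; unknown positions are unconstrained."""
    return CONSTRAINTS.get((predicate, role))


def satisfies(sem_type: str, required: Optional[str]) -> bool:
    """True if there is no constraint, or sem_type is required or inherits from it."""
    if required is None:
        return True
    t: Optional[str] = sem_type
    while t is not None:
        if t == required:
            return True
        t = ISA.get(t)
    return False
```

Returning the most general acceptable type, rather than a list of allowed types, lets a single upward walk through the hierarchy decide compatibility.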

Searching for antecedents
- Choose as antecedent the first DE that satisfies the agreement features and semantic constraints of the pronoun, searching in different orders for personal and demonstrative pronouns.
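
The selection rule itself is a first-match scan over an ordered candidate list. A minimal Python sketch, assuming candidates are (referent, number, type) triples and that agreement means number agreement only; `exact` is a hypothetical stand-in for a real type check such as an is-a walk.

```python
from typing import Callable, Iterable, Optional, Tuple

Candidate = Tuple[str, str, str]  # (referent, number, semantic type)


def first_match(
    candidates: Iterable[Candidate],
    pronoun_number: str,
    required_type: Optional[str],
    satisfies: Callable[[str, Optional[str]], bool],
) -> Optional[str]:
    """Return the first candidate that agrees in number and meets the semantic constraint."""
    for referent, number, sem_type in candidates:
        if number == pronoun_number and satisfies(sem_type, required_type):
            return referent
    return None  # no antecedent found


def exact(sem_type: str, required: Optional[str]) -> bool:
    """Toy type check: no constraint, or identical type names."""
    return required is None or sem_type == required


order = [("ORANGES1", "plur", "ORANGE"), ("AVON", "sing", "CITY"), ("ENG1", "sing", "ENGINE")]
print(first_match(order, "sing", "CITY", exact))
```

Because the scan stops at the first success, all the difference between personal and demonstrative pronouns lies in how `order` is built.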

Search: Personal pronouns
1. Mentioned entities to the left of the pronoun in the current clause, DUn+1, right-to-left
2. The focused entity of DUn
3. The remaining mentioned entities, going backwards one clause at a time, and then left-to-right within each clause
4. Activated entities in DUn
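
The four steps translate into a function that returns candidates in the order they are tried. This Python sketch assumes each clause is a list of entity labels in surface order, that the focus of DUn is the first entry of its clause, and that the focus is not tried a second time in step 3; the function name is hypothetical, and the labels in the usage line come from the G0/G1a example later in this lecture.

```python
from typing import List


def personal_search_order(left_of_pronoun: List[str],
                          previous_clauses: List[List[str]],
                          activated_prev: List[str]) -> List[str]:
    """Candidate order for a personal pronoun in DU(n+1).

    left_of_pronoun: mentioned entities before the pronoun in DU(n+1), surface order
    previous_clauses: mentioned entities per clause, oldest first; the last is DU(n)
    activated_prev: activated entities (proxies) of DU(n)
    """
    order: List[str] = list(reversed(left_of_pronoun))       # 1. current clause, right-to-left
    has_focus = bool(previous_clauses) and bool(previous_clauses[-1])
    if has_focus:
        order.append(previous_clauses[-1][0])                # 2. focus of DU(n)
    for i, clause in enumerate(reversed(previous_clauses)):  # 3. backwards clause by clause,
        skip = 1 if (i == 0 and has_focus) else 0            #    left-to-right inside each,
        order.extend(clause[skip:])                          #    without repeating the focus
    order.extend(activated_prev)                             # 4. activated entities of DU(n)
    return order


# G1a "So it'll get there at 3 pm" after G0 "Engine 1 goes to Avon to get the oranges".
print(personal_search_order([], [["ENG1", "AVON", "ORANGES1"]],
                            ["to get oranges", "all of G0", "the plan"]))
```

With MOVABLE-OBJECT(X) as the constraint, ENG1 is the first candidate that passes, which is the result reported for G1a.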

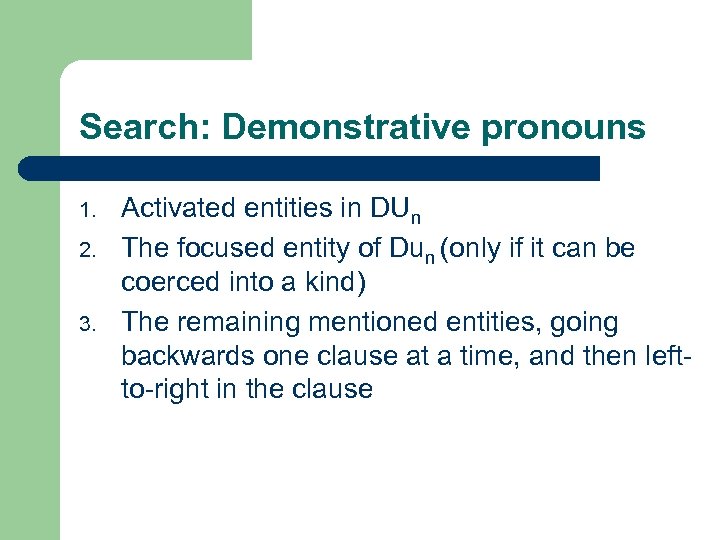

Search: Demonstrative pronouns
1. Activated entities in DUn
2. The focused entity of DUn (only if it can be coerced into a kind)
3. The remaining mentioned entities, going backwards one clause at a time, and then left-to-right within each clause
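
The demonstrative order can be sketched the same way. Here we read "remaining" as excluding the focus of DUn, so a focus that cannot be coerced into a kind is never a candidate; that reading, the function name, and the `coercible_to_kind` test are our assumptions, not details given on the slide.

```python
from typing import Callable, List


def demonstrative_search_order(previous_clauses: List[List[str]],
                               activated_prev: List[str],
                               coercible_to_kind: Callable[[str], bool]) -> List[str]:
    """Candidate order for a demonstrative pronoun in DU(n+1).

    previous_clauses: mentioned entities per clause, oldest first; the last is DU(n)
    activated_prev: activated entities (proxies) of DU(n)
    coercible_to_kind: hypothetical test for whether an entity can be read as a kind
    """
    order: List[str] = list(activated_prev)                  # 1. activated entities of DU(n)
    has_focus = bool(previous_clauses) and bool(previous_clauses[-1])
    if has_focus and coercible_to_kind(previous_clauses[-1][0]):
        order.append(previous_clauses[-1][0])                # 2. focus of DU(n), as a kind only
    for i, clause in enumerate(reversed(previous_clauses)):  # 3. remaining mentioned entities,
        skip = 1 if (i == 0 and has_focus) else 0            #    never the focus itself
        order.extend(clause[skip:])
    return order


# "Put the apple on the napkin and then move that": the focused APPLE is passed over.
print(demonstrative_search_order([["APPLE", "NAPKIN"]], ["all of clause 1"], lambda e: False))
```

Under this reading the focus is reachable only through kind coercion, which fits the intuition that demonstratives tend to avoid the focused entity.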

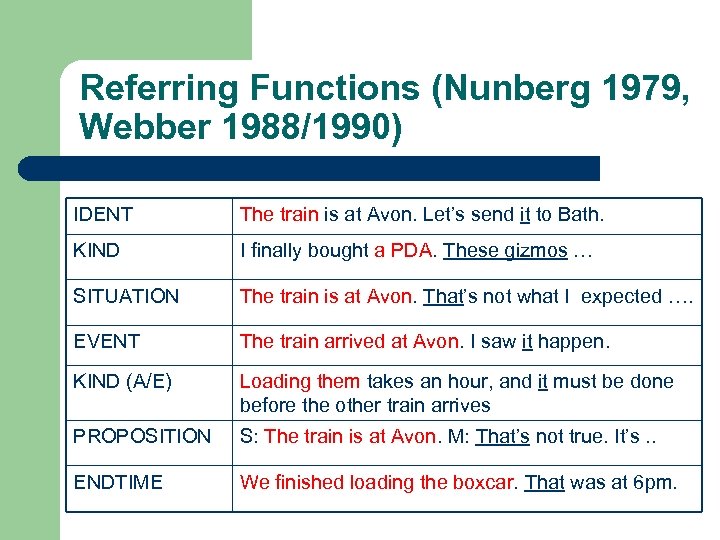

Referring Functions (Nunberg 1979, Webber 1988/1990)
- IDENT: The train is at Avon. Let’s send it to Bath.
- KIND: I finally bought a PDA. These gizmos …
- SITUATION: The train is at Avon. That’s not what I expected …
- EVENT: The train arrived at Avon. I saw it happen.
- KIND (A/E): Loading them takes an hour, and it must be done before the other train arrives.
- PROPOSITION: S: The train is at Avon. M: That’s not true. It’s …
- ENDTIME: We finished loading the boxcar. That was at 6 pm.
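
A referring function can be thought of as a partial map from a proxy to an interpretation of one particular type, with the semantic constraint from the pronoun's argument position deciding which function to try. This Python sketch is ours: the constraint-to-function table (including mapping TIME to ENDTIME) and the interpretation labels are hypothetical, chosen to mirror the examples above.

```python
from typing import Dict, Optional

# Hypothetical mapping from a semantic constraint to the referring function that yields it.
FUNCTION_FOR_TYPE: Dict[str, str] = {
    "EVENT": "event",
    "PROPOSITION": "proposition",
    "SITUATION": "situation",
    "KIND": "kind",
    "TIME": "endtime",
}

# A proxy for "The train arrived at Avon": the functions it defines -> interpretation labels.
train_arrived: Dict[str, str] = {
    "event": "arrive(train1, avon)",
    "proposition": "prop(arrive(train1, avon))",
    "endtime": "end(arrive(train1, avon))",
}


def interpret(proxy: Dict[str, str], required_type: str) -> Optional[str]:
    """Apply the referring function selected by required_type; None if undefined."""
    function_name = FUNCTION_FOR_TYPE.get(required_type)
    if function_name is None:
        return None
    return proxy.get(function_name)


print(interpret(train_arrived, "EVENT"))
print(interpret(train_arrived, "KIND"))
```

The first call yields the event reading used in "I saw it happen"; the second returns None, because this toy proxy defines no kind interpretation.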

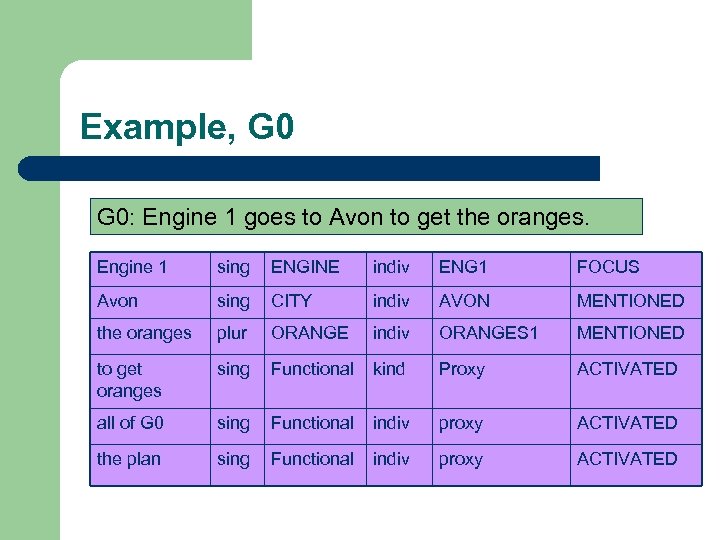

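Example, G0: Engine 1 goes to Avon to get the oranges.

| Expression | Number | Type | Kind/Indiv | Referent | Status |
|---|---|---|---|---|---|
| Engine 1 | sing | ENGINE | indiv | ENG1 | FOCUS |
| Avon | sing | CITY | indiv | AVON | MENTIONED |
| the oranges | plur | ORANGE | indiv | ORANGES1 | MENTIONED |
| to get oranges | sing | Functional | kind | proxy | ACTIVATED |
| all of G0 | sing | Functional | indiv | proxy | ACTIVATED |
| the plan | sing | Functional | indiv | proxy | ACTIVATED |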

Example, G1a, I
G1a: So it’ll get there at 3 pm.
- LF: (ARRIVE :theme x :dest y :time z)
- SEM CONSTRAINTS: MOVABLE-OBJECT(X)
- CANDIDATES: ENG1, ORANGES
- SEARCH: ENG1 is produced first

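Example, G1a, II: Discourse Model update

| Expression | Number | Type | Kind/Indiv | Referent | Status |
|---|---|---|---|---|---|
| It | sing | ENGINE | indiv | ENG1 | FOCUS |
| there | sing | CITY | indiv | AVON | MENTIONED |
| 3 pm | sing | TIME | indiv | 3 pm | MENTIONED |
| all of G1a | sing | Functional | indiv | proxy | ACTIVATED |
| the plan | sing | Functional | indiv | proxy | ACTIVATED |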

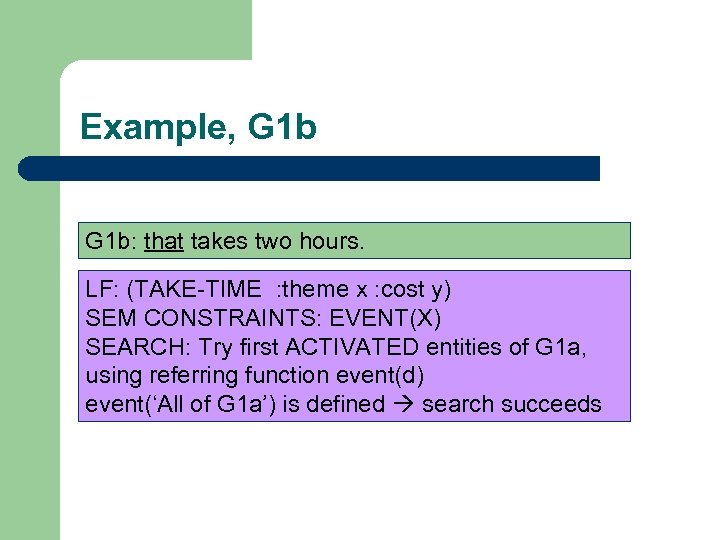

Example, G1b: that takes two hours.
- LF: (TAKE-TIME :theme x :cost y)
- SEM CONSTRAINTS: EVENT(X)
- SEARCH: try the ACTIVATED entities of G1a first, using the referring function event(d); event(‘All of G1a’) is defined, so the search succeeds

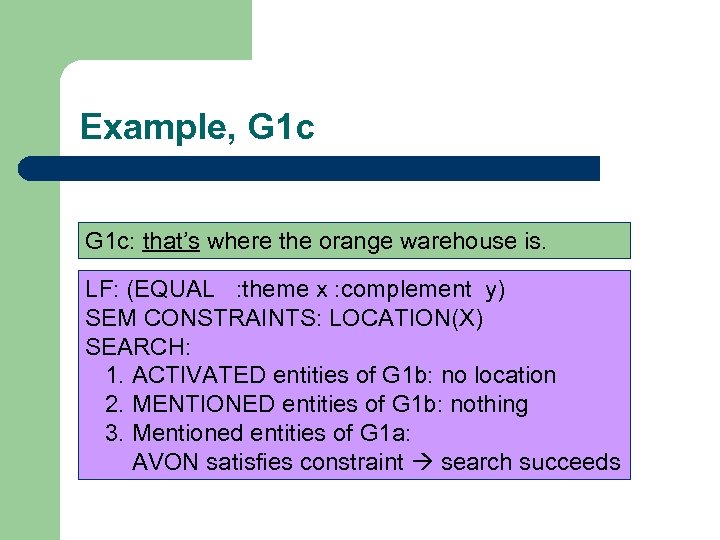

Example, G1c: that’s where the orange warehouse is.
- LF: (EQUAL :theme x :complement y)
- SEM CONSTRAINTS: LOCATION(X)
- SEARCH:
  1. ACTIVATED entities of G1b: no location
  2. MENTIONED entities of G1b: nothing
  3. MENTIONED entities of G1a: AVON satisfies the constraint, so the search succeeds
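
The three resolutions can be replayed end to end with a toy Python sketch. It hard-codes the discourse model by hand rather than building it from LFs; it collapses the search orders given earlier into list concatenations (assuming the focus is always the first entity of its clause); it omits the kind coercion of the focus; and it uses a hypothetical type hierarchy and made-up interpretation labels such as event(G1a). The type DURATION for "two hours" is likewise our assumption.

```python
from typing import Dict, List, Optional, Tuple

Entity = Tuple[str, str]            # (referent label, semantic type)
Proxy = Tuple[str, Dict[str, str]]  # (name, required type -> interpretation)

# Hypothetical is-a links covering the types in G0-G1c.
ISA: Dict[str, str] = {"ENGINE": "MOVABLE-OBJECT", "ORANGE": "MOVABLE-OBJECT",
                       "CITY": "LOCATION"}


def satisfies(sem_type: str, required: str) -> bool:
    """True if sem_type is the required type or inherits from it."""
    t: Optional[str] = sem_type
    while t is not None:
        if t == required:
            return True
        t = ISA.get(t)
    return False


def first_entity(order: List[Entity], required: str) -> Optional[str]:
    return next((ref for ref, t in order if satisfies(t, required)), None)


def first_proxy(proxies: List[Proxy], required: str) -> Optional[str]:
    # A proxy matches if its referring function for the required type is defined.
    return next((fns[required] for _, fns in proxies if required in fns), None)


def resolve_personal(left: List[Entity], clauses: List[List[Entity]],
                     proxies: List[Proxy], required: str) -> Optional[str]:
    # Steps 1-3: current clause right-to-left, then DU(n) from its focus (first entity)
    # left-to-right, then earlier clauses backwards; step 4: proxies of DU(n).
    order = list(reversed(left)) + [e for clause in reversed(clauses) for e in clause]
    found = first_entity(order, required)
    return found if found is not None else first_proxy(proxies, required)


def resolve_demonstrative(clauses: List[List[Entity]], proxies: List[Proxy],
                          required: str) -> Optional[str]:
    # Step 1: proxies of DU(n). Step 2 (focus as a kind) is left out of this toy.
    found = first_proxy(proxies, required)
    if found is not None or not clauses:
        return found
    # Step 3: remaining mentioned entities, skipping the focus of DU(n).
    rest = clauses[-1][1:] + [e for clause in reversed(clauses[:-1]) for e in clause]
    return first_entity(rest, required)


g0 = [("ENG1", "ENGINE"), ("AVON", "CITY"), ("ORANGES1", "ORANGE")]
g1a = [("ENG1", "ENGINE"), ("AVON", "CITY"), ("3pm", "TIME")]
g1b = [("event(G1a)", "EVENT"), ("two hours", "DURATION")]

it_g1a = resolve_personal([], [g0], [("all of G0", {"EVENT": "event(G0)"})], "MOVABLE-OBJECT")
that_g1b = resolve_demonstrative([g0, g1a], [("all of G1a", {"EVENT": "event(G1a)"})], "EVENT")
that_g1c = resolve_demonstrative([g0, g1a, g1b], [("all of G1b", {"EVENT": "event(G1b)"})],
                                 "LOCATION")
print(it_g1a, that_g1b, that_g1c)
```

The three calls reproduce the outcomes on the slides: ENG1 for G1a, the event proxy of G1a for G1b, and AVON for G1c.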

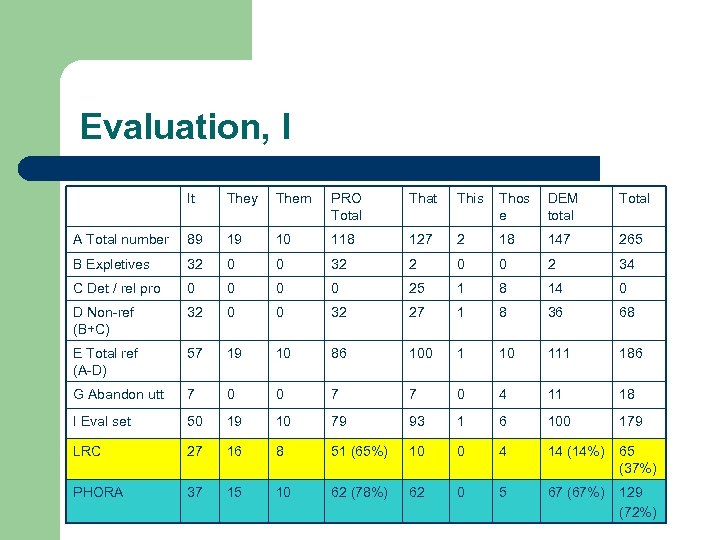

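Evaluation, I

| | It | They | Them | PRO total | That | This | Those | DEM total | Total |
|---|---|---|---|---|---|---|---|---|---|
| A. Total number | 89 | 19 | 10 | 118 | 127 | 2 | 18 | 147 | 265 |
| B. Expletives | 32 | 0 | 0 | 32 | 2 | 0 | 0 | 2 | 34 |
| C. Det / rel pro | 0 | 0 | 0 | 0 | 25 | 1 | 8 | 34 | 34 |
| D. Non-ref (B+C) | 32 | 0 | 0 | 32 | 27 | 1 | 8 | 36 | 68 |
| E. Total ref (A-D) | 57 | 19 | 10 | 86 | 100 | 1 | 10 | 111 | 197 |
| G. Abandoned utt. | 7 | 0 | 0 | 7 | 7 | 0 | 4 | 11 | 18 |
| I. Eval set | 50 | 19 | 10 | 79 | 93 | 1 | 6 | 100 | 179 |
| LRC | 27 | 16 | 8 | 51 (65%) | 10 | 0 | 4 | 14 (14%) | 65 (36%) |
| PHORA | 37 | 15 | 10 | 62 (78%) | 62 | 0 | 5 | 67 (67%) | 129 (72%) |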

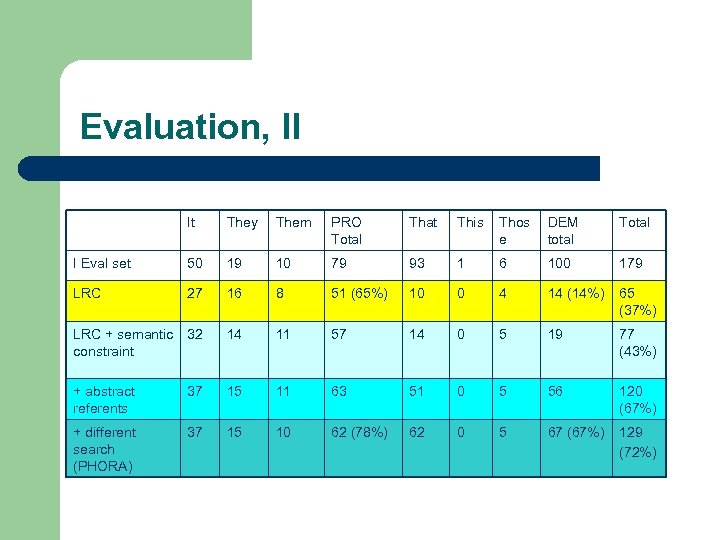

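Evaluation, II

| | It | They | Them | PRO total | That | This | Those | DEM total | Total |
|---|---|---|---|---|---|---|---|---|---|
| I. Eval set | 50 | 19 | 10 | 79 | 93 | 1 | 6 | 100 | 179 |
| LRC | 27 | 16 | 8 | 51 (65%) | 10 | 0 | 4 | 14 (14%) | 65 (36%) |
| LRC + semantic constraint | 32 | 14 | 11 | 57 | 14 | 0 | 5 | 19 | 77 (43%) |
| + abstract referents | 37 | 15 | 11 | 63 | 51 | 0 | 5 | 56 | 120 (67%) |
| + different search (PHORA) | 37 | 15 | 10 | 62 (78%) | 62 | 0 | 5 | 67 (67%) | 129 (72%) |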
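
The overall column of the ablation can be recomputed from the Total counts alone. This small Python sketch (names ours) gives the accuracy of each configuration over the 179-pronoun evaluation set and the number of additional pronouns each step resolves correctly.

```python
from typing import List, Optional, Tuple

EVAL_SET = 179  # row I, Total column

# (configuration, pronouns resolved correctly), from the Total column of the table.
CONFIGURATIONS: List[Tuple[str, int]] = [
    ("LRC", 65),
    ("LRC + semantic constraint", 77),
    ("+ abstract referents", 120),
    ("+ different search (PHORA)", 129),
]


def ablation(rows: List[Tuple[str, int]],
             eval_set: int = EVAL_SET) -> List[Tuple[str, int, Optional[int]]]:
    """Return (name, rounded accuracy %, gain in correct pronouns over the previous row)."""
    out: List[Tuple[str, int, Optional[int]]] = []
    previous: Optional[int] = None
    for name, correct in rows:
        gain = None if previous is None else correct - previous
        out.append((name, round(100 * correct / eval_set), gain))
        previous = correct
    return out


for name, pct, gain in ablation(CONFIGURATIONS):
    print(f"{name}: {pct}%" + ("" if gain is None else f" (+{gain})"))
```

On these figures the largest single gain comes from adding abstract referents (+43 pronouns), with the separate search orders adding a further 9.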

References
- M. Eckert and M. Strube. 2000. Dialogue acts, synchronizing units, and anaphora resolution. Journal of Semantics, 17(1).
- B. Webber. 1990. Structure and ostension in the interpretation of deixis. Language and Cognitive Processes.
