555bcd169e57a1e60730ec16a885ba3a.ppt

- Number of slides: 33

Managing our Grid Node, Involvement in the Trust Fabric, External Collaboration, & In-House Projects

Adeel-ur-Rehman, on behalf of the Advanced Scientific Computing Group (ASC)

Scheme of Talk
- Grid Computing
  - NCP-LCG2 (T2_PK_NCP)
- Certification Authority
  - PK-GRID-CA
- In-House HPC Framework
  - NCP Cluster
- Projects with CERN
  - CMS Collaboration
- Software Development & Support
- Assistance for EHEP fellows

3/16/2018 ASM-2013 2

Grid Computing

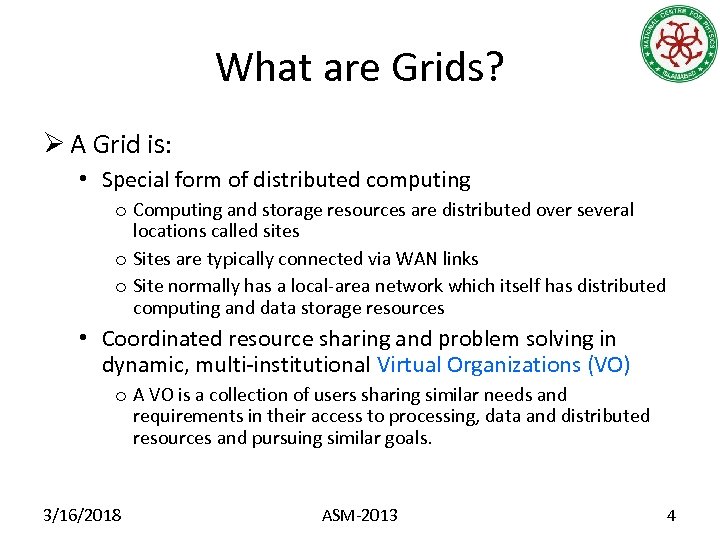

What are Grids?
- A Grid is:
  - A special form of distributed computing:
    - Computing and storage resources are distributed over several locations, called sites.
    - Sites are typically connected via WAN links.
    - Each site normally has a local-area network that itself hosts distributed computing and data storage resources.
  - Coordinated resource sharing and problem solving in dynamic, multi-institutional Virtual Organizations (VOs):
    - A VO is a collection of users who share similar needs and requirements for access to processing, data, and distributed resources, and who pursue similar goals.
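To make the notions of sites and VOs concrete, the following Python sketch models each site as a pool of free cores that serves a set of VOs, and selects the sites where a VO member could run a job. It is a toy model under stated assumptions: the class names, site names, and the single "free cores" criterion are hypothetical, whereas production grid middleware performs this matchmaking through its information system and workload management services.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """A grid site: a pool of local computing resources (toy model)."""
    name: str
    free_cores: int
    supported_vos: set[str] = field(default_factory=set)


@dataclass
class VirtualOrganization:
    """A VO: a named group of users with shared goals and access requirements."""
    name: str
    members: set[str] = field(default_factory=set)


def eligible_sites(user: str, vo: VirtualOrganization, sites: list[Site], cores_needed: int) -> list[str]:
    """Return the names of sites that serve the user's VO and have enough free cores."""
    if user not in vo.members:
        return []  # resources are shared with VO members only
    return [
        site.name
        for site in sites
        if vo.name in site.supported_vos and site.free_cores >= cores_needed
    ]


if __name__ == "__main__":
    # Hypothetical VO, user, and sites.
    cms = VirtualOrganization("cms", members={"user1"})
    sites = [
        Site("SITE_A", free_cores=64, supported_vos={"cms", "atlas"}),
        Site("SITE_B", free_cores=8, supported_vos={"cms"}),
        Site("SITE_C", free_cores=128, supported_vos={"atlas"}),
    ]
    print(eligible_sites("user1", cms, sites, cores_needed=16))  # ['SITE_A']
```

The VO check comes first because, in this model, site resources are only visible to members of a VO that the site supports.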

Grid in terms of VOs

LHC & WLCG
- The Large Hadron Collider (LHC), the huge particle accelerator:
  - was constructed at the European Laboratory for Particle Physics (CERN), on the Franco-Swiss border near Geneva, Switzerland;
  - is the world's largest and most powerful particle accelerator.
- The experiments using it generate very large amounts of data (petabytes per year).

LHC & WLCG
- The job of the Worldwide LHC Computing Grid (WLCG) project is to prepare the computing infrastructure for the simulation, processing, and analysis of LHC data for the four initial LHC collaborations:
  - ALICE, ATLAS, CMS, and LHCb
- Processing this data requires enormous computational and storage resources.

WLCG at NCP
- Pakistan initiated collaboration with CERN on the CMS experiment in the 1990s.
- Consequently, an effort to put Pakistan on the WLCG map as a Grid node also started.
- NCP organized a Grid Technology Workshop on October 20-22, 2003.
- The first-ever testbed was deployed during the workshop for hands-on tutorials.

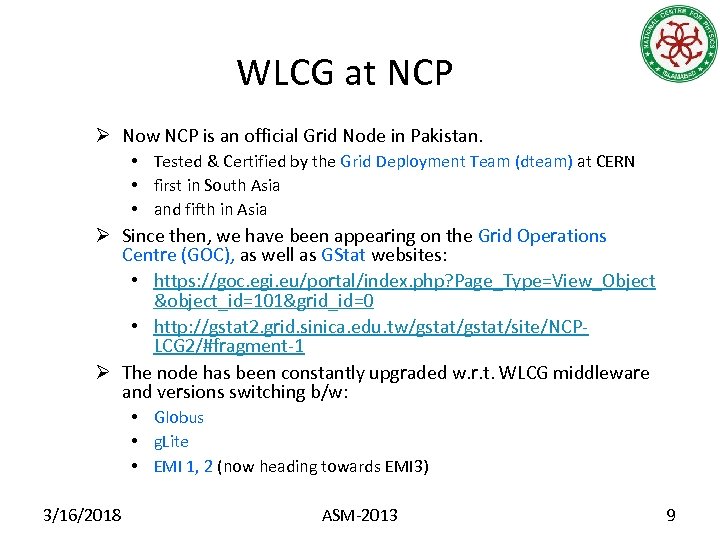

WLCG at NCP
- NCP is now an official Grid node in Pakistan:
  - tested and certified by the Grid Deployment Team (dteam) at CERN;
  - the first in South Asia and the fifth in Asia.
- Since then, the site has been listed on the Grid Operations Centre (GOC) and GStat websites:
  - https://goc.egi.eu/portal/index.php?Page_Type=View_Object&object_id=101&grid_id=0
  - http://gstat2.grid.sinica.edu.tw/gstat/site/NCPLCG2/#fragment-1
- The node has been continually upgraded to track WLCG middleware releases, moving through:
  - Globus
  - gLite
  - EMI 1 and 2 (now heading towards EMI 3)

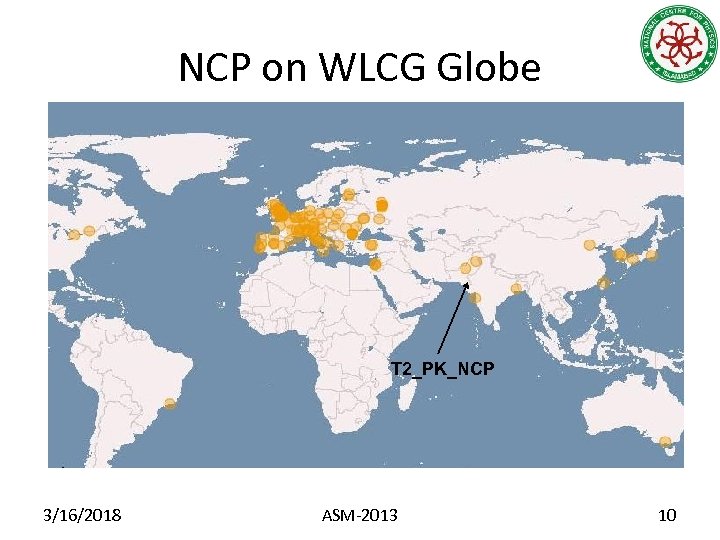

NCP on the WLCG Globe: T2_PK_NCP

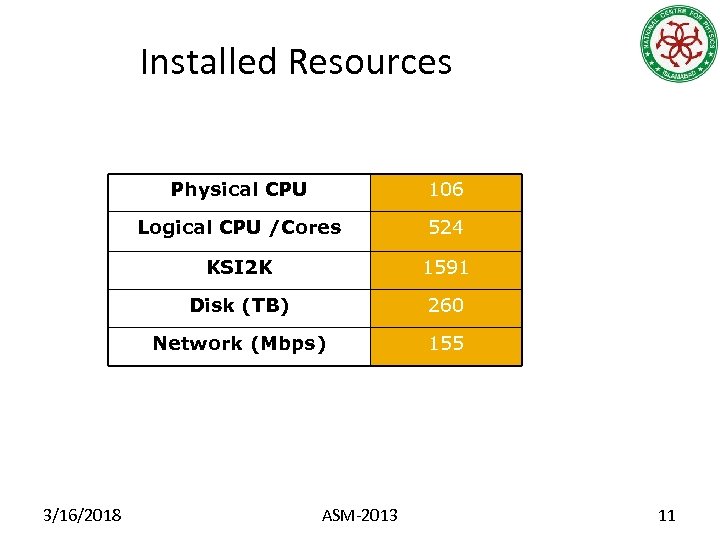

Installed Resources

| Site | Physical CPU | Logical CPU/Cores | KSI2K | Disk (TB) | Network (Mbps) |
|---|---|---|---|---|---|
| T2_PK_NCP | 106 | 524 | 1591 | 260 | 155 |

Trust Fabric Involvement

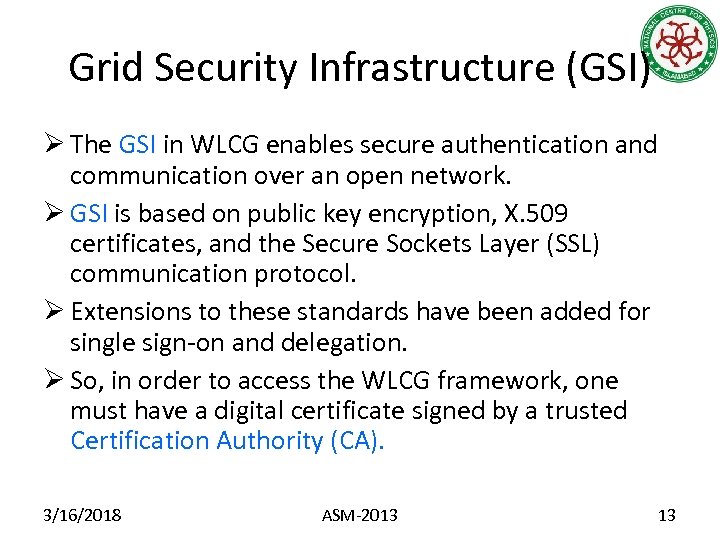

Grid Security Infrastructure (GSI)
- GSI in WLCG enables secure authentication and communication over an open network.
- GSI is based on public-key encryption, X.509 certificates, and the Secure Sockets Layer (SSL) communication protocol.
- Extensions to these standards have been added for single sign-on and delegation.
- Consequently, to access the WLCG infrastructure, a user must hold a digital certificate signed by a trusted Certification Authority (CA).
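Delegation in GSI is typically realised with proxy certificates: short-lived certificates issued by the user's own certificate, which in turn was issued by a trusted CA. The Python sketch below walks such a chain from the proxy up to a trust anchor. It is a simplified model, assuming certificates reduced to subject, issuer, and expiry fields with made-up distinguished names; the cryptographic signature checks that real X.509 validation performs are deliberately omitted.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Cert:
    """Simplified certificate record: no keys or signatures are modelled."""
    subject: str
    issuer: str
    not_after: datetime


def chain_is_trusted(chain: list[Cert], trusted_cas: set[str], now: datetime) -> bool:
    """Check issuer/subject links and expiry along a chain ending at a trusted CA.

    chain[0] is the end entity (e.g. a proxy); chain[-1] is the certificate
    issued directly by the CA. Signature verification is intentionally omitted.
    """
    if not chain:
        return False
    for cert, parent in zip(chain, chain[1:]):
        if cert.issuer != parent.subject:
            return False  # broken link: issuer does not match the next subject
    if chain[-1].issuer not in trusted_cas:
        return False  # chain does not end at a trust anchor
    return all(c.not_after > now for c in chain)  # every link must still be valid


if __name__ == "__main__":
    now = datetime(2013, 1, 1)
    ca = "/O=ExampleGrid/CN=Example CA"  # hypothetical CA name
    user = Cert("/O=ExampleGrid/CN=Jane Doe", ca, now + timedelta(days=365))
    proxy = Cert(user.subject + "/CN=proxy", user.subject, now + timedelta(hours=12))
    print(chain_is_trusted([proxy, user], {ca}, now))  # True
    print(chain_is_trusted([proxy, user], {ca}, now + timedelta(days=1)))  # False: proxy expired
```

The short proxy lifetime is what makes single sign-on tolerable: the long-lived user credential is used once to issue the proxy, and services only ever see the proxy.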

Certification Authority (CA)
- A CA is a trusted authority that issues certificates for users, programs, and machines.
- A digital certificate is an electronic "credit card" that establishes our credentials when doing business or other transactions on the Web.
- It relies on a Public Key Infrastructure (PKI):
  - PKI enables users of a public network to exchange data securely using a public/private cryptographic key pair obtained and shared through a trusted authority (the CA).
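The public/private key pair behind PKI can be illustrated with textbook RSA: whatever is signed with the private key can be checked by anyone holding the public key. The Python sketch below uses the classic toy primes 61 and 53 and treats a small integer as a stand-in for a message digest. It is for illustration only; real certificates use much larger keys, padding schemes, and vetted cryptographic libraries, none of which appear here.

```python
from math import gcd


def make_toy_keypair(p: int, q: int, e: int = 65537) -> tuple[tuple[int, int], tuple[int, int]]:
    """Textbook RSA key generation from two primes (insecure, illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime with (p-1)(q-1)")
    d = pow(e, -1, phi)  # modular inverse of e (Python 3.8+)
    return (n, e), (n, d)  # (public key, private key)


def sign(digest: int, private_key: tuple[int, int]) -> int:
    """Sign an integer digest (must be smaller than n) with the private exponent."""
    n, d = private_key
    return pow(digest, d, n)


def verify(digest: int, signature: int, public_key: tuple[int, int]) -> bool:
    """Anyone with the public key can check that the signature matches the digest."""
    n, e = public_key
    return pow(signature, e, n) == digest % n


if __name__ == "__main__":
    public, private = make_toy_keypair(61, 53, e=17)
    print(public, private)  # (3233, 17) (3233, 2753)
    sig = sign(65, private)
    print(verify(65, sig, public))  # True
    print(verify(66, sig, public))  # False: a different digest does not match
```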

PK-GRID-CA
- NCP itself operates a (non-commercial) CA that issues X.509 user and host certificates to support a secure environment for grid-related projects.
- NCP produced its first Certificate Policy and Certification Practice Statement (CP/CPS) document in December 2003:
  - http://www.ncp.edu.pk/pk-grid-ca/docs/cps-1.1.1.0.pdf
- The document was reviewed by several members of the European Grid Policy Management Authority (EU-Grid-PMA), which works under the umbrella of the International Grid Trust Federation (IGTF).
- The IGTF also covers the APGridPMA (for Asia-Pacific) and TAGPMA (for the Americas).

PK-GRID-CA
- Three revisions were made in response to comments and suggestions from PMA members.
- The CA was presented in September 2004 at the 2nd meeting of the EU-Grid-PMA, held in Brussels.
- NCP was formally approved by the EU-Grid-PMA as a Certification Authority.
- PK-GRID-CA has been in operation since then, as the first Certification Authority in Pakistan.
- For more information: www.ncp.edu.pk/pk-grid-ca

PK-GRID-CA
- Routine tasks include (but are not limited to):
  - issuing user/host certificates for our subscribers
  - generating Certificate Revocation Lists (CRLs)
  - revoking certificates when needed
  - signing our CA's root key when due
  - managing the CA web portal for handling user requests
  - maintaining users' records, necessary email correspondence, and required cryptographic data pertaining to our root certificate
  - recording every interaction with the offline CA server
  - monitoring the CA premises
  - maintaining and updating our Certificate Policy/Certification Practice Statement (CP/CPS) document as required
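Two of these tasks, revoking certificates and publishing CRLs, determine how a relying party decides whether a presented certificate is still usable. The Python sketch below assumes a simplified CRL that is just a validity window plus a set of revoked serial numbers; real CRLs are signed X.509 structures, and the names used here are hypothetical. Revocation is checked before expiry, a design choice made here so that a revoked certificate is reported as such even after it lapses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from enum import Enum


class Status(Enum):
    ACTIVE = "active"
    EXPIRED = "expired"
    REVOKED = "revoked"


@dataclass
class Crl:
    """Toy CRL: a validity window plus revoked serial numbers (no signature)."""
    this_update: datetime
    next_update: datetime
    revoked_serials: set[int] = field(default_factory=set)

    def revoke(self, serial: int) -> None:
        self.revoked_serials.add(serial)


def certificate_status(serial: int, not_after: datetime, crl: Crl, now: datetime) -> Status:
    """Classify a certificate against a CRL; revocation takes precedence over expiry."""
    if now > crl.next_update:
        # A CRL past its next update time may be missing recent revocations.
        raise RuntimeError("CRL is out of date; fetch a fresh one first")
    if serial in crl.revoked_serials:
        return Status.REVOKED
    if now > not_after:
        return Status.EXPIRED
    return Status.ACTIVE


if __name__ == "__main__":
    now = datetime(2013, 6, 1)
    crl = Crl(this_update=now - timedelta(days=1), next_update=now + timedelta(days=29))
    crl.revoke(42)  # hypothetical serial number
    print(certificate_status(42, now + timedelta(days=100), crl, now))  # Status.REVOKED
    print(certificate_status(7, now - timedelta(days=1), crl, now))  # Status.EXPIRED
    print(certificate_status(8, now + timedelta(days=100), crl, now))  # Status.ACTIVE
```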

PK-GRID-CA Statistics
- Current PK-GRID-CA statistics:
  - Total certificates issued: 345
    - User certificates: 204
    - Host certificates: 141
    - Certificates expired: 206
    - Certificates revoked: 54
    - Active certificates: 85
- For more information: http://it.ncp.edu.pk/ca.php
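The statistics above are internally consistent: user and host certificates add up to the total, and so do the expired, revoked, and active counts. A short Python check of that arithmetic, using only the figures on the slide and assuming the three status categories are mutually exclusive (the totals suggest this, but the slide does not state it):

```python
TOTAL_ISSUED = 345
issued_by_type = {"user": 204, "host": 141}
by_status = {"expired": 206, "revoked": 54, "active": 85}


def status_shares(counts: dict[str, int], total: int) -> dict[str, float]:
    """Percentage of all issued certificates in each category, rounded to 0.1%."""
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


# Both breakdowns account for every certificate issued.
assert sum(issued_by_type.values()) == TOTAL_ISSUED  # 204 + 141
assert sum(by_status.values()) == TOTAL_ISSUED  # 206 + 54 + 85

print(status_shares(by_status, TOTAL_ISSUED))
# {'expired': 59.7, 'revoked': 15.7, 'active': 24.6}
```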

High Performance Computing Cluster

NCP Cluster
- Purpose: to give our scientists access to High Performance Computing resources for running simulation codes that model their research problems.
- Over 30 researchers from across the country have used our cluster resources.

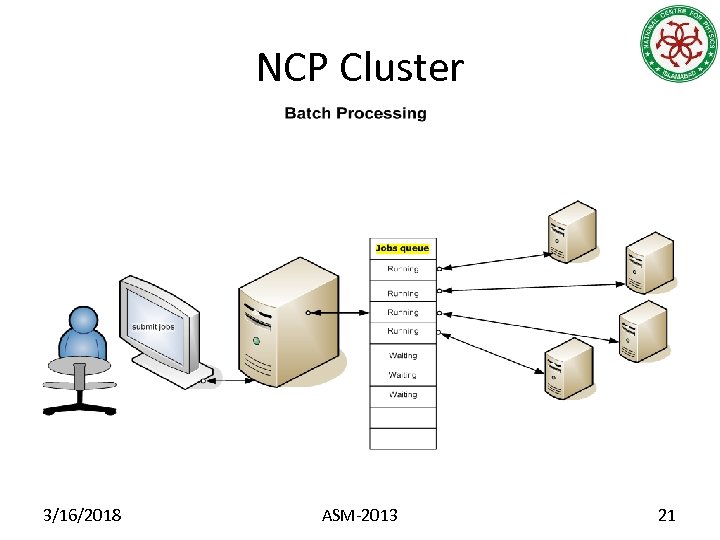

NCP Cluster

NCP Cluster
- The cluster has been used for research and development in diverse areas such as Ion Channeling, Multi-Particle Interaction, Space Physics, Weather Forecasting, and Density Functional Theory (DFT).

NCP Cluster
- Hardware resources and environment:
  - Sun Fire Intel Xeon machines
  - 16 GB RAM
  - 8 cores/node with 4 computational nodes
  - Scientific Linux CERN 5.3 OS
  - f77, g77 (gfortran), gcc 3.4.6
  - OpenPBS (for batch processing)
  - mpich2-1.0.6p1 (for parallel processing)
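With 4 computational nodes of 8 cores each, a single parallel job can use up to 32 cores. As a hedged illustration of how the batch system and MPI library fit together, the Python helper below renders an OpenPBS job script that requests all of them. The job name, walltime, and executable are hypothetical, and site-specific details such as queue names, or starting an MPD ring for MPICH2 1.0.x, may differ on the actual cluster.

```python
def render_pbs_script(job_name: str, nodes: int, ppn: int, walltime: str, executable: str) -> str:
    """Render an OpenPBS batch script for an MPI job (illustrative template)."""
    total_procs = nodes * ppn  # one MPI process per requested core
    lines = [
        "#!/bin/bash",
        f"#PBS -N {job_name}",
        f"#PBS -l nodes={nodes}:ppn={ppn}",  # nodes x processors-per-node
        f"#PBS -l walltime={walltime}",
        "#PBS -j oe",  # merge stdout and stderr into one output file
        "cd $PBS_O_WORKDIR",  # start in the directory the job was submitted from
        # MPICH2 1.0.x normally launches through an MPD ring; depending on
        # the site setup, 'mpdboot' may need to run before mpiexec.
        f"mpiexec -n {total_procs} {executable}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job using the whole 4-node x 8-core cluster.
    print(render_pbs_script("dft_run", nodes=4, ppn=8, walltime="24:00:00", executable="./my_simulation"))
```

The rendered text would be saved to a file and submitted with `qsub`.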

Software Development/Testing with CMS (CERN) Collaboration

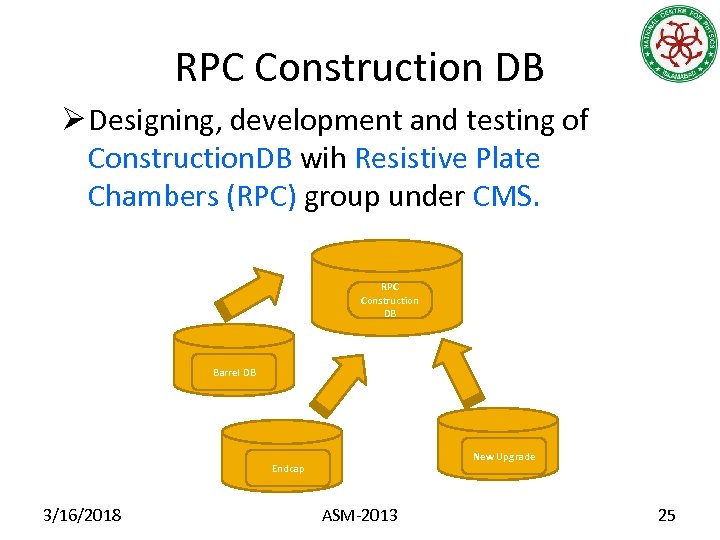

RPC Construction DB
- Design, development, and testing of the Construction DB with the Resistive Plate Chambers (RPC) group in CMS.
- (Diagram: RPC Construction DB; Barrel DB; Endcap; New Upgrade)

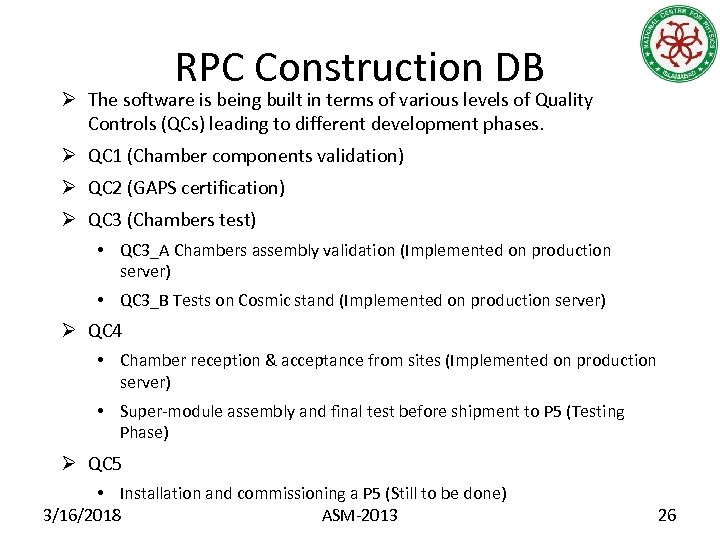

RPC Construction DB
- The software is being built around successive levels of Quality Control (QC), each corresponding to a development phase:
- QC1 (chamber components validation)
- QC2 (GAPS certification)
- QC3 (chamber tests)
  - QC3_A: chamber assembly validation (implemented on production server)
  - QC3_B: tests on cosmic stand (implemented on production server)
- QC4
  - Chamber reception and acceptance from sites (implemented on production server)
  - Super-module assembly and final test before shipment to P5 (testing phase)
- QC5
  - Installation and commissioning at P5 (still to be done)
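Because the QC levels are sequential, a chamber's record naturally advances through them in order. The Python sketch below models that progression and rejects a QC result recorded before its predecessors have passed. The stage labels follow the slide (with QC 4's two sub-steps collapsed into one stage for brevity), but the class and method names are hypothetical and do not describe the actual RPC Construction DB schema.

```python
from enum import IntEnum
from typing import Optional


class QC(IntEnum):
    """QC levels in the order a chamber goes through them (labels from the slide)."""
    QC1 = 1    # chamber components validation
    QC2 = 2    # GAPS certification
    QC3_A = 3  # chamber assembly validation
    QC3_B = 4  # tests on cosmic stand
    QC4 = 5    # reception/acceptance, super-module assembly and final test
    QC5 = 6    # installation and commissioning at P5


class ChamberRecord:
    """Hypothetical QC history of one chamber."""

    def __init__(self, chamber_id: str) -> None:
        self.chamber_id = chamber_id
        self.passed: list[QC] = []

    def next_required(self) -> Optional[QC]:
        """The next QC level to pass, or None once every level is done."""
        done = len(self.passed)
        return QC(done + 1) if done < len(QC) else None

    def record_pass(self, stage: QC) -> None:
        """Record a passed QC level, enforcing the sequential order."""
        expected = self.next_required()
        if stage is not expected:
            raise ValueError(f"{self.chamber_id}: expected {expected!r}, got {stage!r}")
        self.passed.append(stage)


if __name__ == "__main__":
    rec = ChamberRecord("CHAMBER-001")  # hypothetical chamber ID
    rec.record_pass(QC.QC1)
    print(rec.next_required().name)  # QC2
    try:
        rec.record_pass(QC.QC3_A)  # skipping QC2 is rejected
    except ValueError as err:
        print(err)
```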

Testing of DQM Sequences
- Testing the performance and correctness of offline Data Quality Monitoring (DQM) modules within the CMSSW project:
  - looking for compile-time and run-time failures
  - observing fluctuations in memory consumption
  - notifying the relevant people at CERN of our findings, so that they can decide whether to integrate a particular piece of DQM code into CMSSW
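Observing memory fluctuations boils down to comparing each DQM module's footprint in a reference release against a candidate release and flagging large increases. A minimal Python sketch, assuming per-module memory figures (in MB) have already been extracted from job reports by some other means; the module names, numbers, and 10% default threshold are illustrative assumptions, not CMSSW measurements or policy.

```python
def memory_regressions(
    reference: dict[str, float],
    candidate: dict[str, float],
    threshold: float = 0.10,
) -> dict[str, float]:
    """Modules whose memory use grew by more than `threshold` (as a fraction).

    Returns {module: relative_growth}. Modules missing from the candidate,
    or with a non-positive baseline, are skipped rather than guessed at.
    """
    flagged: dict[str, float] = {}
    for module, ref_mb in reference.items():
        new_mb = candidate.get(module)
        if new_mb is None or ref_mb <= 0:
            continue
        growth = (new_mb - ref_mb) / ref_mb
        if growth > threshold:
            flagged[module] = round(growth, 3)
    return flagged


if __name__ == "__main__":
    # Illustrative numbers only, not real CMSSW measurements.
    ref = {"ModuleA": 100.0, "ModuleB": 250.0}
    new = {"ModuleA": 104.0, "ModuleB": 300.0}
    print(memory_regressions(ref, new))  # {'ModuleB': 0.2}
```

A relative threshold is used so that a few MB of growth in a small module and in a large one are judged on the same scale.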

Testing of DQM Sequences
- The exercise has been carried out over several CMSSW release cycles: 5_2_X, 5_3_X, 6_0_X, 6_1_X, 6_2_X, and currently 7_0_X.
- In addition, we are adapting this workflow to changes in the surrounding environment, such as:
  - integration of the test suite within CMSSW
  - migration from CVS to Git (revision control systems)
  - integration with automated build systems (Jenkins)

Miscellaneous

In-House Development and Support
- Employee Salary System
- Finance Ledger System (FLS)
- Online Leave Application System (OLAS)
- Library Information Management System (LIMS)
- Hardware Inventory Management System (HIMS) or Network Resource Management System (NRMS)
- Store Management System
- Transport Requisition System
- Redmine Ticketing System
- ISS Conference Registration System

Training & Assistance
- The following facilities are offered to our EHEP students for their research work:
  - periodic courses/tutorials:
    - C++ programming (basic and advanced)
    - Python programming
  - help in understanding the source code of analysis software such as CMSSW and ROOT
  - involvement in troubleshooting grid site operations on demand

References
- "WLCG Node in Pakistan – Challenges & Experiences", by Sajjad Asghar, Usman Ahmad Malik & Adeel-ur-Rehman, in Managed Grids and Cloud Systems in the Asia-Pacific Research Community, 2010, pp. 55-65, Springer.
- "Establishment of Public Key Infrastructure in Pakistan", by Sajjad Asghar, Usman Ahmad Malik & Adeel-ur-Rehman, 8th National Research Conference, SZABIST, Islamabad.
- https://twiki.cern.ch/twiki/bin/view/CMS/DQMOffline#Tes.T
- http://public.web.cern.ch/public/en/LHC-en.html
- https://www.eugridpma.org/

