91069db61b4df025085e5e4b1be0c61b.ppt

- Number of slides: 22

Making Simple Decisions (Chapter 16). Some material borrowed from Jean-Claude Latombe and Daphne Koller, by way of Marie desJardins.

Topics

- Decision making under uncertainty
  - Utility theory and rationality
  - Expected utility
  - Utility functions
  - Multiattribute utility functions
  - Preference structures
  - Decision networks
  - Value of information

Uncertain Outcomes of Actions

- Some actions may have uncertain outcomes
  - Action: spend $10 to buy a lottery ticket that pays $1000 to the winner
  - Outcome: {win, not-win}
- Each outcome is associated with some merit (utility)
  - Win: gain $990
  - Not-win: lose $10
- There is a probability distribution over the outcomes of this action: (0.0001, 0.9999)
- Should I take this action?

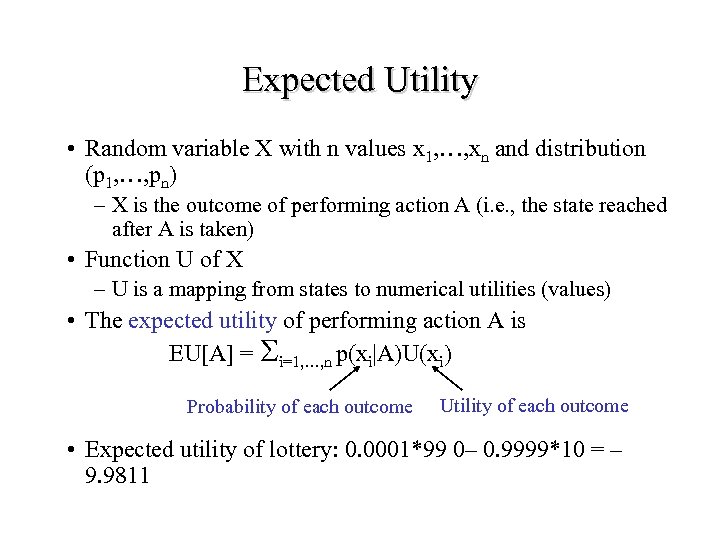

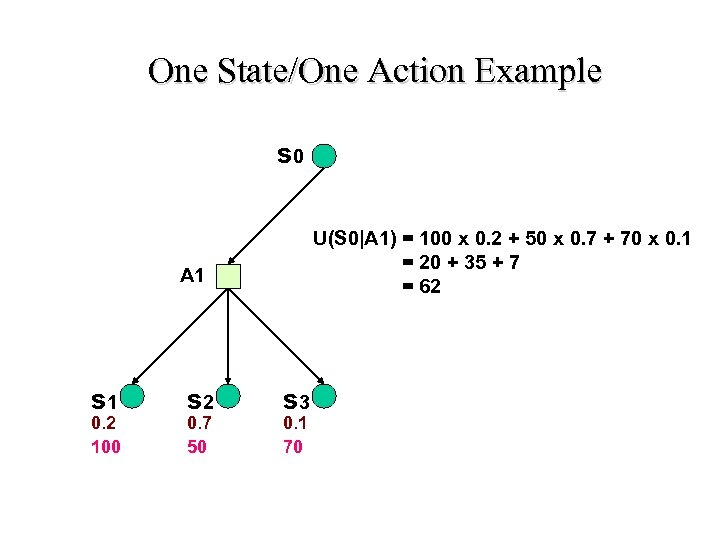

Expected Utility

- Random variable $X$ with $n$ values $x_1, \ldots, x_n$ and distribution $(p_1, \ldots, p_n)$
  - $X$ is the outcome of performing action $A$ (i.e., the state reached after $A$ is taken)
- Function $U$ of $X$
  - $U$ is a mapping from states to numerical utilities (values)
- The expected utility of performing action $A$ is $EU[A] = \sum_{i=1}^{n} p(x_i \mid A)\, U(x_i)$, where $p(x_i \mid A)$ is the probability of each outcome and $U(x_i)$ is the utility of each outcome
- Expected utility of the lottery: $0.0001 \times 990 - 0.9999 \times 10 = 0.099 - 9.999 = -9.9$
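The expected-utility sum can be checked numerically. The following is a minimal sketch; the function name `expected_utility` and the (probability, utility) pair representation are illustrative assumptions, not part of the slides.

```python
def expected_utility(outcomes):
    """Expected utility of one action.

    outcomes: iterable of (probability, utility) pairs, one per outcome.
    """
    outcomes = list(outcomes)
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in outcomes)


# Lottery from the slides: net gain of $990 with p = 0.0001, loss of $10 otherwise.
lottery_eu = expected_utility([(0.0001, 990), (0.9999, -10)])
print(round(lottery_eu, 4))  # -9.9
```

Since the expected utility is negative, an agent whose utility is linear in money would decline the lottery.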

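One State/One Action Example

- From state $s_0$, action $A_1$ leads to $s_1$ (probability 0.2, utility 100), $s_2$ (probability 0.7, utility 50), or $s_3$ (probability 0.1, utility 70)
- $U(s_0 \mid A_1) = 100 \times 0.2 + 50 \times 0.7 + 70 \times 0.1 = 20 + 35 + 7 = 62$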

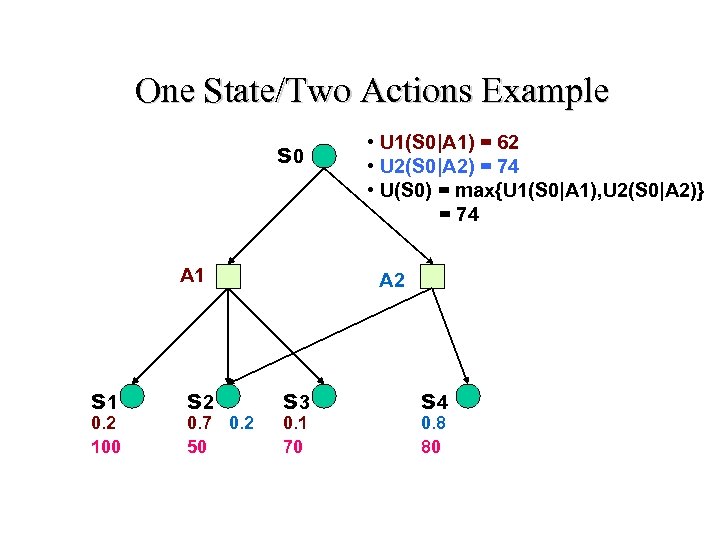

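One State/Two Actions Example

- From state $s_0$, action $A_1$ leads to $s_1$ (probability 0.2, utility 100), $s_2$ (probability 0.7, utility 50), or $s_3$ (probability 0.1, utility 70)
- From state $s_0$, action $A_2$ leads to $s_2$ (probability 0.2, utility 50) or $s_4$ (probability 0.8, utility 80)
- $U_1(s_0 \mid A_1) = 62$
- $U_2(s_0 \mid A_2) = 50 \times 0.2 + 80 \times 0.8 = 74$
- $U(s_0) = \max\{U_1(s_0 \mid A_1), U_2(s_0 \mid A_2)\} = 74$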
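Choosing between the two actions can be sketched as a maximization over expected utilities. This sketch reads the diagram as A2 leading to s2 with probability 0.2 and s4 with probability 0.8, which reproduces the value 74; the dictionary layout and function names are assumptions made for illustration.

```python
# Each action maps to (probability, utility) pairs over its successor states.
actions = {
    "A1": [(0.2, 100), (0.7, 50), (0.1, 70)],
    "A2": [(0.2, 50), (0.8, 80)],
}


def expected_utility(outcomes):
    return sum(p * u for p, u in outcomes)


def best_action(actions):
    """Return (name, expected utility) of the action with the highest EU."""
    scores = {name: expected_utility(outs) for name, outs in actions.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


name, value = best_action(actions)
print(name, round(value, 6))  # A2 74.0
```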

MEU Principle

- Decision theory: a rational agent should choose the action that maximizes the agent's expected utility
- Maximizing expected utility (MEU) is a normative criterion for rational choices of actions
- Requires a complete model of:
  - Actions
  - States
  - Utilities
- Even with a complete model, computing the optimal action may be computationally intractable

Comparing outcomes

- Which is better?
  - A = Being rich and sunbathing where it's warm
  - B = Being rich and sunbathing where it's cool
  - C = Being poor and sunbathing where it's warm
  - D = Being poor and sunbathing where it's cool
- Multiattribute utility theory
  - A clearly dominates B: $A \succ B$. Likewise $A \succ C$, $C \succ D$, and $A \succ D$. What about B vs. C?
  - Simplest case: additive value function (just add the individual attribute utilities)
  - Others use a weighted utility, based on the relative importance of the attributes
  - The combined utility function can also be learned (similar to a joint probability table)
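The difference between an additive and a weighted value function can be illustrated with a small sketch. The attribute scores (1.0 for the preferred level, 0.0 otherwise) and the weights 0.7 and 0.3 are assumptions chosen only for illustration; the slides do not give numbers.

```python
# Assumed per-attribute scores: preferred level = 1.0, other level = 0.0.
ATTRIBUTE_SCORES = {
    "wealth": {"rich": 1.0, "poor": 0.0},
    "climate": {"warm": 1.0, "cool": 0.0},
}


def value(outcome, weights):
    """Weighted sum of attribute scores; equal weights give the additive case."""
    return sum(
        weights[attr] * ATTRIBUTE_SCORES[attr][level]
        for attr, level in outcome.items()
    )


B = {"wealth": "rich", "climate": "cool"}
C = {"wealth": "poor", "climate": "warm"}

additive = {"wealth": 1.0, "climate": 1.0}
weighted = {"wealth": 0.7, "climate": 0.3}  # hypothetical importance weights

print(value(B, additive), value(C, additive))  # 1.0 1.0
print(value(B, weighted), value(C, weighted))  # 0.7 0.3
```

Under these assumptions the plain additive function cannot separate B from C, while the weighted version ranks B above C because wealth is given more weight.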

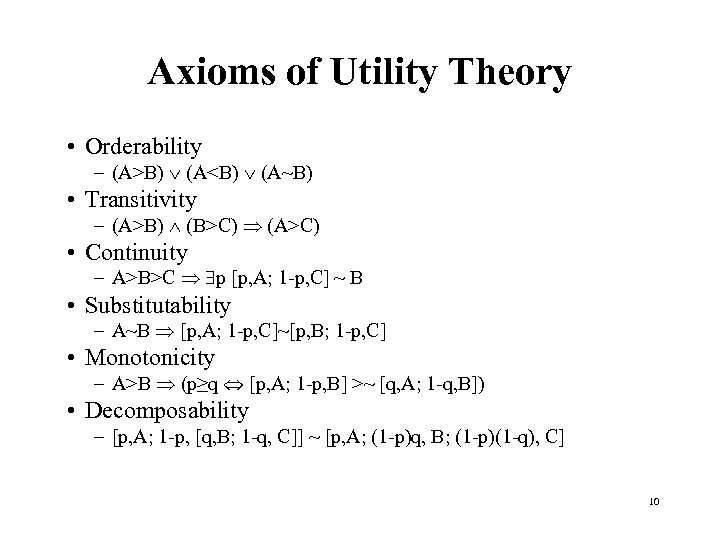

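Axioms of Utility Theory

- Orderability
  - $(A \succ B) \lor (B \succ A) \lor (A \sim B)$
- Transitivity
  - $(A \succ B) \land (B \succ C) \Rightarrow (A \succ C)$
- Continuity
  - $A \succ B \succ C \Rightarrow \exists p\; [p, A;\ 1-p, C] \sim B$
- Substitutability
  - $A \sim B \Rightarrow [p, A;\ 1-p, C] \sim [p, B;\ 1-p, C]$
- Monotonicity
  - $A \succ B \Rightarrow (p \ge q \Leftrightarrow [p, A;\ 1-p, B] \succsim [q, A;\ 1-q, B])$
- Decomposability
  - $[p, A;\ 1-p, [q, B;\ 1-q, C]] \sim [p, A;\ (1-p)q, B;\ (1-p)(1-q), C]$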
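Decomposability says a nested lottery can be reduced to an equivalent flat one. A minimal sketch of that reduction follows; representing a lottery as a list of (probability, outcome) pairs and the values p = 0.5, q = 0.4 are assumptions for illustration.

```python
def flatten(lottery):
    """Reduce a possibly nested lottery to a dict {outcome: probability}.

    A lottery is a list of (probability, outcome) pairs; an outcome that is
    itself a list is treated as a nested lottery.
    """
    flat = {}
    for p, outcome in lottery:
        if isinstance(outcome, list):
            # Multiply the branch probability through the nested lottery.
            for leaf, q in flatten(outcome).items():
                flat[leaf] = flat.get(leaf, 0.0) + p * q
        else:
            flat[outcome] = flat.get(outcome, 0.0) + p
    return flat


p, q = 0.5, 0.4  # hypothetical probabilities
nested = [(p, "A"), (1 - p, [(q, "B"), (1 - q, "C")])]
flat = flatten(nested)
print(flat)
```

The resulting probabilities are p for A, (1-p)q for B, and (1-p)(1-q) for C, matching the right-hand side of the axiom.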

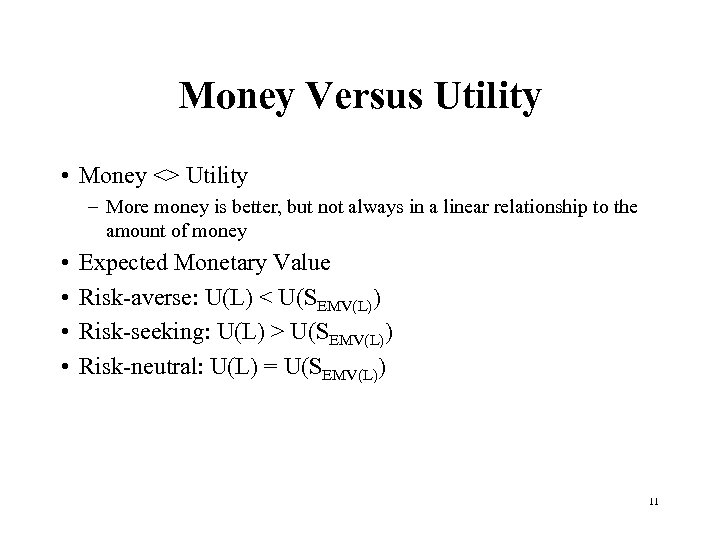

Money Versus Utility

- Money ≠ Utility
  - More money is better, but utility is not always linear in the amount of money
- Expected Monetary Value (EMV): $S_{EMV(L)}$ denotes receiving the expected monetary value of lottery $L$ for certain
  - Risk-averse: $U(L) < U(S_{EMV(L)})$
  - Risk-seeking: $U(L) > U(S_{EMV(L)})$
  - Risk-neutral: $U(L) = U(S_{EMV(L)})$
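The three risk attitudes can be illustrated by comparing the utility of a lottery with the utility of its expected monetary value received for certain. In this sketch the 50/50 lottery over $0 and $1000 and the three utility functions (square root, identity, square) are assumptions chosen for illustration.

```python
import math


def expected_monetary_value(lottery):
    """lottery: list of (probability, monetary amount) pairs."""
    return sum(p * x for p, x in lottery)


def risk_attitude(utility, lottery):
    u_lottery = sum(p * utility(x) for p, x in lottery)   # U(L)
    u_sure = utility(expected_monetary_value(lottery))    # U(S_EMV(L))
    if math.isclose(u_lottery, u_sure):
        return "risk-neutral"
    return "risk-averse" if u_lottery < u_sure else "risk-seeking"


lottery = [(0.5, 0.0), (0.5, 1000.0)]  # hypothetical 50/50 lottery

print(risk_attitude(math.sqrt, lottery))         # risk-averse
print(risk_attitude(lambda x: x, lottery))       # risk-neutral
print(risk_attitude(lambda x: x ** 2, lottery))  # risk-seeking
```

A concave utility function yields risk aversion, a linear one risk neutrality, and a convex one risk seeking.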

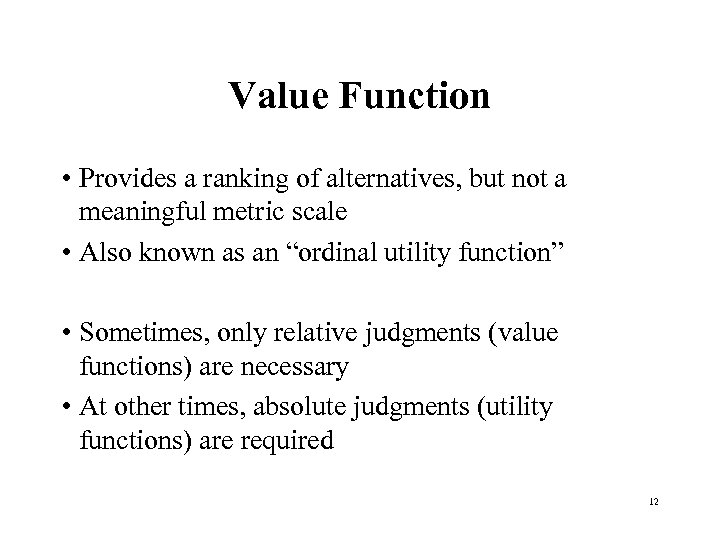

Value Function

- Provides a ranking of alternatives, but not a meaningful metric scale
- Also known as an "ordinal utility function"
- Sometimes, only relative judgments (value functions) are necessary
- At other times, absolute judgments (utility functions) are required

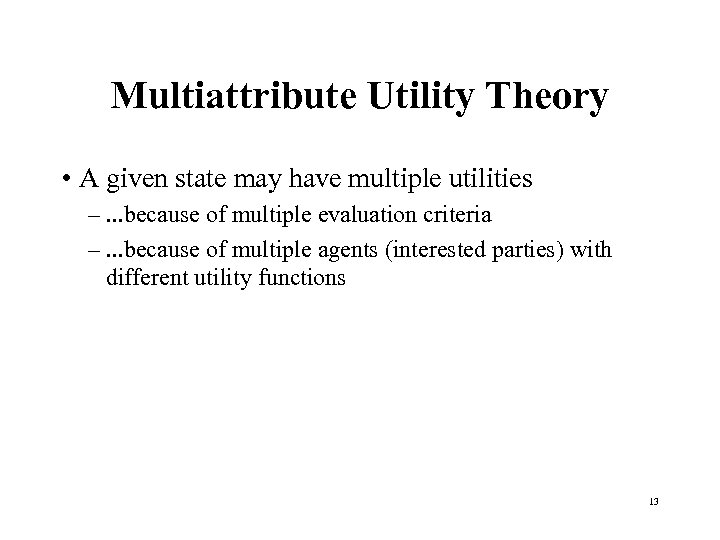

Multiattribute Utility Theory

- A given state may have multiple utilities
  - ... because of multiple evaluation criteria
  - ... because of multiple agents (interested parties) with different utility functions

Decision networks

- Extend Bayesian nets to handle actions and utilities
  - a.k.a. influence diagrams
- Make use of Bayesian net inference
- Useful application: Value of Information

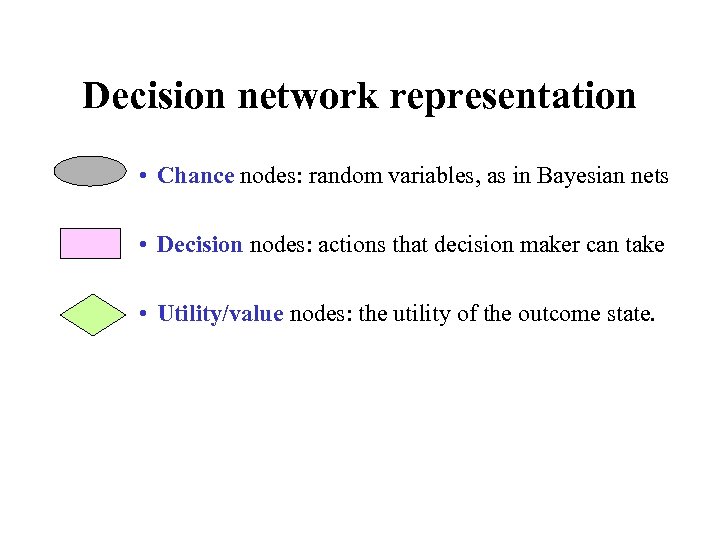

Decision network representation

- Chance nodes: random variables, as in Bayesian nets
- Decision nodes: actions that the decision maker can take
- Utility/value nodes: the utility of the outcome state

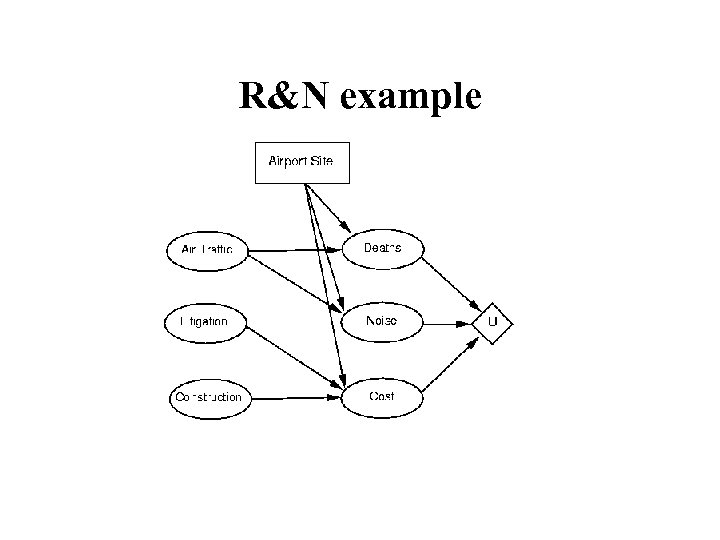

R&N example

Evaluating decision networks

- Set the evidence variables for the current state.
- For each possible value of the decision node (assume just one decision node):
  - Set the decision node to that value.
  - Calculate the posterior probabilities for the parent nodes of the utility node, using BN inference.
  - Calculate the resulting utility for the action.
- Return the action with the highest utility.

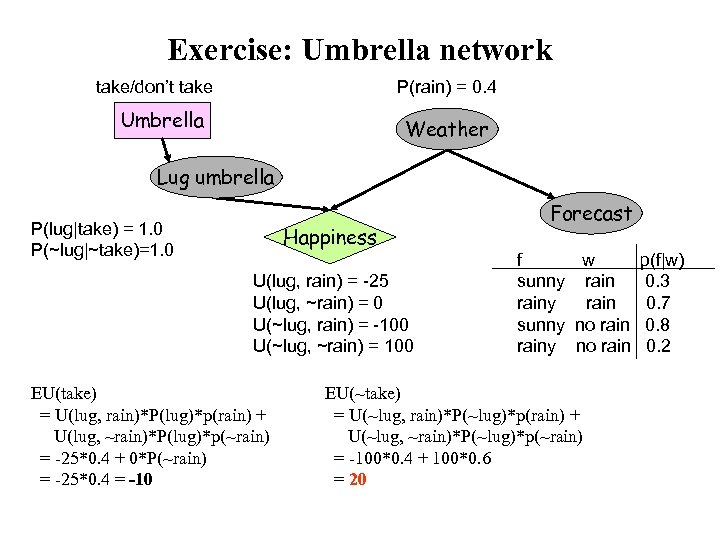

Exercise: Umbrella network

- Decision node Umbrella: take / don't take
- Chance node Weather: P(rain) = 0.4
- Chance node Lug umbrella: P(lug | take) = 1.0, P(~lug | ~take) = 1.0
- Utility node Happiness: U(lug, rain) = -25, U(lug, ~rain) = 0, U(~lug, rain) = -100, U(~lug, ~rain) = 100
- EU(take) = U(lug, rain) × P(lug | take) × p(rain) + U(lug, ~rain) × P(lug | take) × p(~rain) = -25 × 0.4 + 0 × 0.6 = -10
- EU(~take) = U(~lug, rain) × P(~lug | ~take) × p(rain) + U(~lug, ~rain) × P(~lug | ~take) × p(~rain) = -100 × 0.4 + 100 × 0.6 = 20
- Chance node Forecast, with conditional distribution p(f | w):

| f | w | p(f \| w) |
|---|---|---|
| sunny | rain | 0.3 |
| rainy | rain | 0.7 |
| sunny | no rain | 0.8 |
| rainy | no rain | 0.2 |
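The evaluation procedure for a decision network can be sketched on this exercise by enumerating each decision value, summing over the chance nodes, and keeping the best action. The names below (`P_LUG_GIVEN`, `UTILITY`, `dont_take`) are illustrative assumptions; the numbers come from the slide.

```python
P_RAIN = 0.4
# Lugging is deterministic given the decision: take -> lug, don't take -> no lug.
P_LUG_GIVEN = {"take": 1.0, "dont_take": 0.0}
# Utility table U(lug, rain).
UTILITY = {
    (True, True): -25,
    (True, False): 0,
    (False, True): -100,
    (False, False): 100,
}


def expected_utility(action, p_rain=P_RAIN):
    """Sum utility over all (lug, rain) combinations for a fixed decision."""
    p_lug = P_LUG_GIVEN[action]
    eu = 0.0
    for lug, pl in ((True, p_lug), (False, 1 - p_lug)):
        for rain, pr in ((True, p_rain), (False, 1 - p_rain)):
            eu += pl * pr * UTILITY[(lug, rain)]
    return eu


def best_action(p_rain=P_RAIN):
    return max(P_LUG_GIVEN, key=lambda a: expected_utility(a, p_rain))


print(round(expected_utility("take"), 6))       # -10.0
print(round(expected_utility("dont_take"), 6))  # 20.0
print(best_action())                            # dont_take
```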

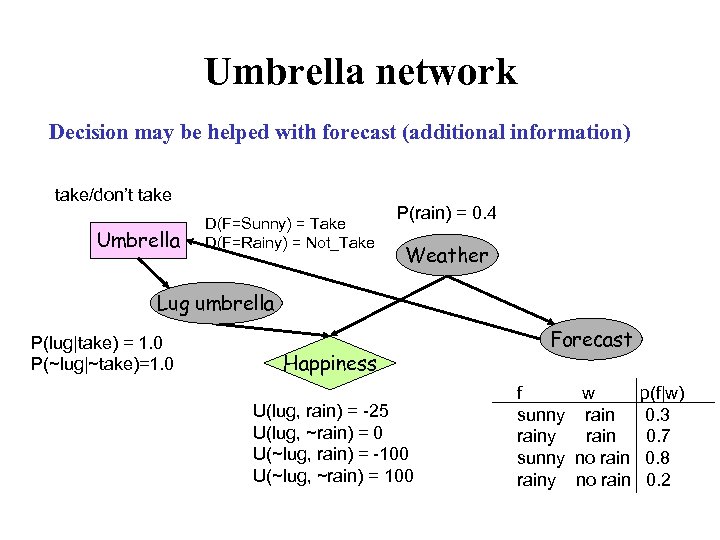

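Umbrella network

- The decision may be helped by a forecast (additional information)
- The decision then becomes a function of the forecast: D(F = sunny) = Not_Take, D(F = rainy) = Take
- Decision node Umbrella: take / don't take
- Chance node Weather: P(rain) = 0.4
- Chance node Lug umbrella: P(lug | take) = 1.0, P(~lug | ~take) = 1.0
- Utility node Happiness: U(lug, rain) = -25, U(lug, ~rain) = 0, U(~lug, rain) = -100, U(~lug, ~rain) = 100
- Chance node Forecast, with conditional distribution p(f | w):

| f | w | p(f \| w) |
|---|---|---|
| sunny | rain | 0.3 |
| rainy | rain | 0.7 |
| sunny | no rain | 0.8 |
| rainy | no rain | 0.2 |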

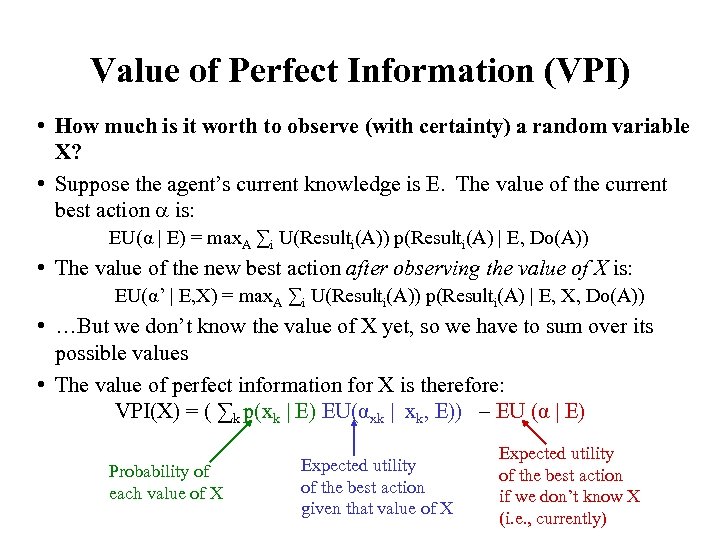

Value of Perfect Information (VPI)

- How much is it worth to observe (with certainty) a random variable $X$?
- Suppose the agent's current knowledge is $E$. The value of the current best action $\alpha$ is: $EU(\alpha \mid E) = \max_A \sum_i U(\mathrm{Result}_i(A))\, p(\mathrm{Result}_i(A) \mid E, Do(A))$
- The value of the new best action $\alpha'$ after observing the value of $X$ is: $EU(\alpha' \mid E, X) = \max_A \sum_i U(\mathrm{Result}_i(A))\, p(\mathrm{Result}_i(A) \mid E, X, Do(A))$
- But we don't know the value of $X$ yet, so we have to sum over its possible values
- The value of perfect information for $X$ is therefore: $VPI(X) = \left( \sum_k p(x_k \mid E)\, EU(\alpha_{x_k} \mid x_k, E) \right) - EU(\alpha \mid E)$
  - $p(x_k \mid E)$: probability of each value of $X$
  - $EU(\alpha_{x_k} \mid x_k, E)$: expected utility of the best action given that value of $X$
  - $EU(\alpha \mid E)$: expected utility of the best action if we don't know $X$ (i.e., currently)
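The VPI formula can be sketched as a small function over a table of conditional expected utilities. The function name `vpi` and the table layout are assumptions, and the sketch assumes the action does not influence X, so that the expected utility of an action without the observation is the probability-weighted average of its conditional expected utilities. The example asks what it would be worth to observe the weather itself in the umbrella network.

```python
def vpi(p_x, eu):
    """Value of perfect information for a variable X.

    p_x[x]   = p(x | E)
    eu[x][a] = EU(a | x, E)
    Assumes the choice of action does not influence X.
    """
    actions = list(next(iter(eu.values())).keys())
    # Best single action chosen without observing X.
    current = max(sum(p_x[x] * eu[x][a] for x in p_x) for a in actions)
    # Expected value of picking the best action separately for each x.
    informed = sum(p_x[x] * max(eu[x].values()) for x in p_x)
    return informed - current


# Umbrella network: what is it worth to observe the weather directly?
p_weather = {"rain": 0.4, "no_rain": 0.6}
eu_given_weather = {
    "rain": {"take": -25, "dont_take": -100},
    "no_rain": {"take": 0, "dont_take": 100},
}
print(round(vpi(p_weather, eu_given_weather), 6))  # 30.0
```

With the weather known, the agent takes the umbrella only when it rains, for an expected utility of 0.4 × (-25) + 0.6 × 100 = 50, compared with 20 without the observation.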

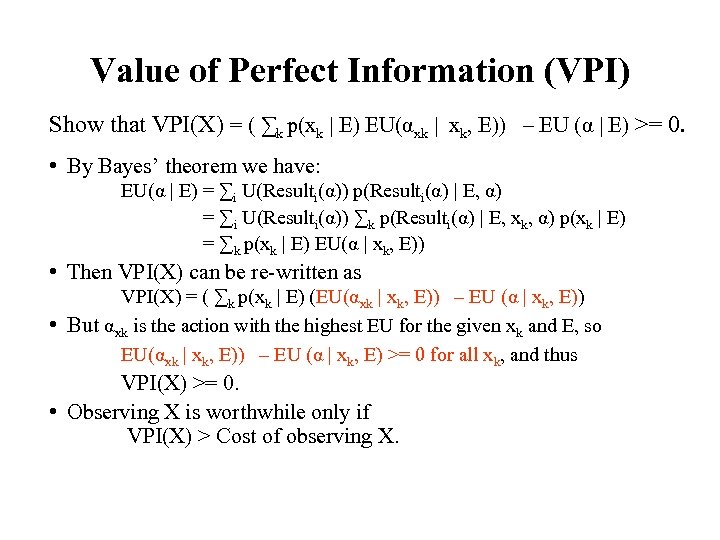

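Value of Perfect Information (VPI)

Show that $VPI(X) = \left( \sum_k p(x_k \mid E)\, EU(\alpha_{x_k} \mid x_k, E) \right) - EU(\alpha \mid E) \ge 0$.

- By the law of total probability (marginalizing over the values of $X$, and assuming the action does not influence $X$), we have:
  - $EU(\alpha \mid E) = \sum_i U(\mathrm{Result}_i(\alpha))\, p(\mathrm{Result}_i(\alpha) \mid E, \alpha)$
  - $= \sum_i U(\mathrm{Result}_i(\alpha)) \sum_k p(\mathrm{Result}_i(\alpha) \mid E, x_k, \alpha)\, p(x_k \mid E)$
  - $= \sum_k p(x_k \mid E)\, EU(\alpha \mid x_k, E)$
- Then $VPI(X)$ can be rewritten as $VPI(X) = \sum_k p(x_k \mid E) \left[ EU(\alpha_{x_k} \mid x_k, E) - EU(\alpha \mid x_k, E) \right]$
- But $\alpha_{x_k}$ is the action with the highest expected utility for the given $x_k$ and $E$, so $EU(\alpha_{x_k} \mid x_k, E) - EU(\alpha \mid x_k, E) \ge 0$ for all $x_k$, and thus $VPI(X) \ge 0$.
- Observing $X$ is worthwhile only if $VPI(X)$ exceeds the cost of observing $X$.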

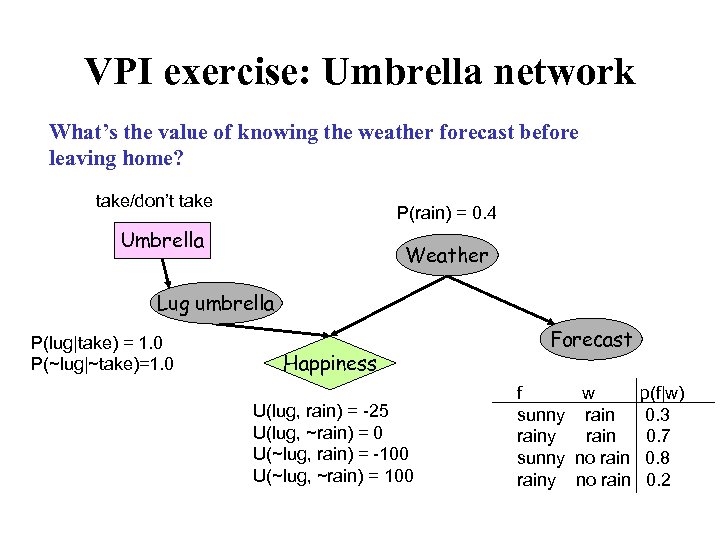

VPI exercise: Umbrella network

What's the value of knowing the weather forecast before leaving home?

- Decision node Umbrella: take / don't take
- Chance node Weather: P(rain) = 0.4
- Chance node Lug umbrella: P(lug | take) = 1.0, P(~lug | ~take) = 1.0
- Utility node Happiness: U(lug, rain) = -25, U(lug, ~rain) = 0, U(~lug, rain) = -100, U(~lug, ~rain) = 100
- Chance node Forecast, with conditional distribution p(f | w):

| f | w | p(f \| w) |
|---|---|---|
| sunny | rain | 0.3 |
| rainy | rain | 0.7 |
| sunny | no rain | 0.8 |
| rainy | no rain | 0.2 |

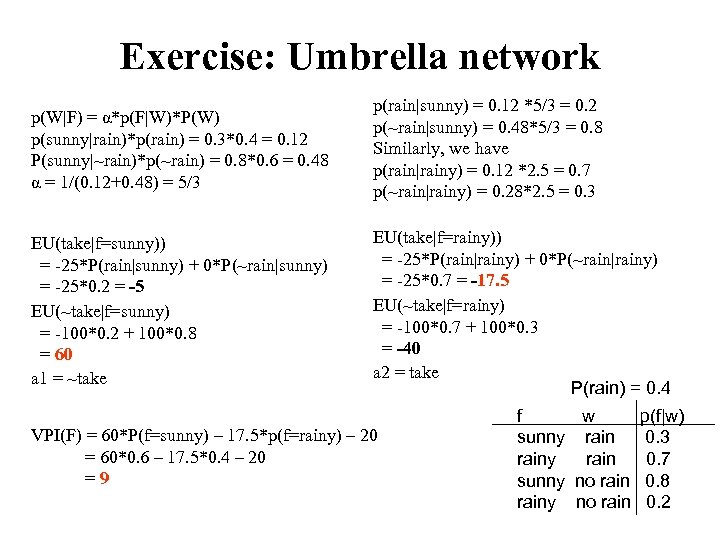

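Exercise: Umbrella network

- Posterior over the weather given the forecast: p(W | F) = α × p(F | W) × P(W), with P(rain) = 0.4
- For F = sunny:
  - p(sunny | rain) × p(rain) = 0.3 × 0.4 = 0.12
  - p(sunny | ~rain) × p(~rain) = 0.8 × 0.6 = 0.48
  - α = 1/(0.12 + 0.48) = 5/3
  - p(rain | sunny) = 0.12 × 5/3 = 0.2
  - p(~rain | sunny) = 0.48 × 5/3 = 0.8
- Similarly, for F = rainy, with α = 1/(0.28 + 0.12) = 2.5:
  - p(rain | rainy) = 0.28 × 2.5 = 0.7
  - p(~rain | rainy) = 0.12 × 2.5 = 0.3
- Expected utilities given F = sunny:
  - EU(take | f = sunny) = -25 × P(rain | sunny) + 0 × P(~rain | sunny) = -25 × 0.2 = -5
  - EU(~take | f = sunny) = -100 × 0.2 + 100 × 0.8 = 60
  - Best action: a1 = ~take
- Expected utilities given F = rainy:
  - EU(take | f = rainy) = -25 × P(rain | rainy) + 0 × P(~rain | rainy) = -25 × 0.7 = -17.5
  - EU(~take | f = rainy) = -100 × 0.7 + 100 × 0.3 = -40
  - Best action: a2 = take
- VPI(F) = 60 × P(f = sunny) - 17.5 × P(f = rainy) - 20 = 60 × 0.6 - 17.5 × 0.4 - 20 = 9
- Conditional distribution p(f | w):

| f | w | p(f \| w) |
|---|---|---|
| sunny | rain | 0.3 |
| rainy | rain | 0.7 |
| sunny | no rain | 0.8 |
| rainy | no rain | 0.2 |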
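The whole exercise can be reproduced in a few lines: compute the forecast marginals and posteriors with Bayes' rule, pick the best action for each forecast, and subtract the baseline. This is a sketch under the slide's numbers; the utility table is keyed by action rather than by lug because lugging is deterministic given the decision, and all identifiers are illustrative assumptions.

```python
P_RAIN = 0.4
# p(forecast | weather), keyed by (forecast, is_raining).
P_FORECAST = {
    ("sunny", True): 0.3, ("rainy", True): 0.7,
    ("sunny", False): 0.8, ("rainy", False): 0.2,
}
# Utility keyed by (action, is_raining); take <=> lug in this network.
U = {
    ("take", True): -25, ("take", False): 0,
    ("dont_take", True): -100, ("dont_take", False): 100,
}
ACTIONS = ("take", "dont_take")


def eu(action, p_rain):
    return p_rain * U[(action, True)] + (1 - p_rain) * U[(action, False)]


def forecast_vpi():
    baseline = max(eu(a, P_RAIN) for a in ACTIONS)  # best EU without forecast
    informed = 0.0
    for f in ("sunny", "rainy"):
        joint_rain = P_FORECAST[(f, True)] * P_RAIN
        joint_dry = P_FORECAST[(f, False)] * (1 - P_RAIN)
        p_f = joint_rain + joint_dry       # p(forecast = f)
        p_rain_given_f = joint_rain / p_f  # Bayes' rule
        informed += p_f * max(eu(a, p_rain_given_f) for a in ACTIONS)
    return informed - baseline


print(round(forecast_vpi(), 6))  # 9.0
```

The forecast is worth consulting only if obtaining it costs less than 9 utility units.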
