4e68363feff2545a844dd21b6b226cc6.ppt

- Number of slides: 39

Machine Translation: Language Model

Stephan Vogel, 21 February 2011

Machine Translation - Spring 2011

Overview
- N-gram LMs
- Perplexity
- Smoothing
- Dealing with large LMs

A Word Guessing Game

Good afternoon, how are ____

A Word Guessing Game

Good afternoon, how are you?
I apologize for being late, I am very _____

A Word Guessing Game

Good afternoon, how are you?
I apologize for being late, I am very sorry!

A Word Guessing Game

Good afternoon, how are you?
I apologize for being late, I am very sorry!
My favorite OS is ____

A Word Guessing Game

Good afternoon, how are you?
I apologize for being late, I am very sorry!
My favorite OS is
- Linux
- Mac OS
- Windows XP
- Win. CE

A Word Guessing Game

Hello, I am very happy to see you, Mr.

A Word Guessing Game

Hello, I am very happy to see you, Mr.
- Black
- White
- Jones
- Smith
- …

A Word Guessing Game

What do we learn from the word guessing game?
- For some histories, the number of expected words is rather small.
- For some histories, we can make virtually no prediction about the next word.
- The more words fit at some point, the more difficult it is to select the correct one (more errors are possible).
- The difficulty of generating a word sequence is correlated with the "branching degree".

Language Model in MT
- From the translation model, we typically have many alternative translations for words and phrases
- Reordering throws phrases around pretty arbitrarily
- The LM needs to help:
  - to select words with the right sense (disambiguate homonyms, e.g. river bank versus money bank)
  - to select hypotheses where words are ‘in the right place’
  - to increase cohesion between words (agreement)

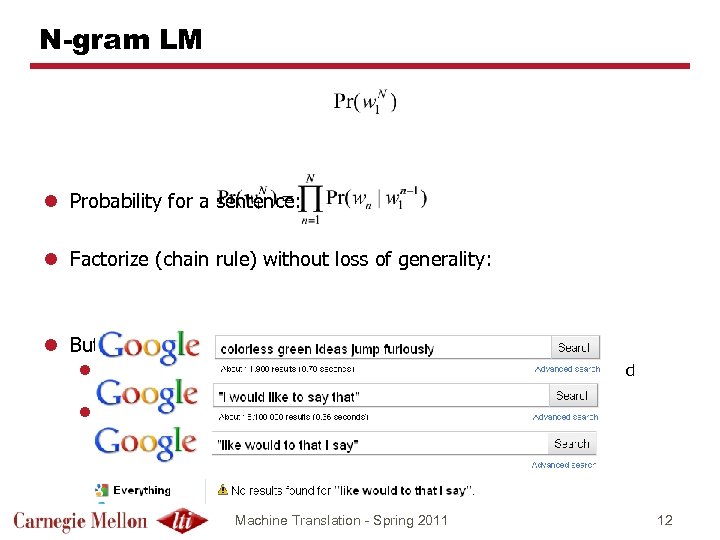

N-gram LM
- Probability for a sentence: $P(w_1^N) = P(w_1 w_2 \dots w_N)$
- Factorize (chain rule) without loss of generality: $P(w_1^N) = \prod_{n=1}^{N} P(w_n \mid w_1^{n-1})$
- But there are too many possible word sequences; we never observe most of them
  - Vocabulary of 10k and sentence length of 5 words: $(10^4)^5 = 10^{20}$ different word sequences
  - Of course, most sequences are extremely unlikely

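N-gram LM
- Probability for a sentence: $P(w_1^N) = P(w_1 w_2 \dots w_N)$
- Factorize (chain rule) without loss of generality: $P(w_1^N) = \prod_{n=1}^{N} P(w_n \mid w_1^{n-1})$
- Limit the length of the history:
  - Unigram LM: $P(w_1^N) \approx \prod_{n=1}^{N} P(w_n)$
  - Bigram LM: $P(w_1^N) \approx \prod_{n=1}^{N} P(w_n \mid w_{n-1})$
  - Trigram LM: $P(w_1^N) \approx \prod_{n=1}^{N} P(w_n \mid w_{n-2}\, w_{n-1})$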

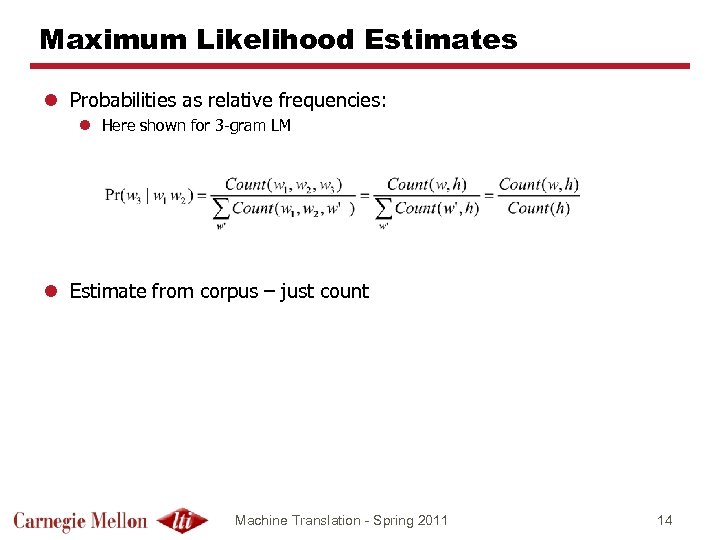

Maximum Likelihood Estimates
- Probabilities as relative frequencies: $P(w_3 \mid w_1 w_2) = \frac{C(w_1 w_2 w_3)}{C(w_1 w_2)}$
- Here shown for a 3-gram LM
- Estimate from the corpus: just count
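The counting recipe can be sketched in Python for the trigram case. The sentence-boundary markers `<s>` and `</s>` and the function name `mle_trigram` are assumptions for illustration; the history count is taken as the number of times the bigram occurs as a history, so the estimates for each history sum to 1.

```python
from collections import Counter

def mle_trigram(corpus):
    """Relative-frequency (maximum likelihood) estimates p(w3 | w1 w2)."""
    trigram_counts = Counter()
    history_counts = Counter()
    for sentence in corpus:
        # Pad with assumed boundary markers so every word has a two-word history.
        words = ["<s>", "<s>"] + sentence.split() + ["</s>"]
        for i in range(2, len(words)):
            trigram = tuple(words[i - 2:i + 1])
            trigram_counts[trigram] += 1
            history_counts[trigram[:2]] += 1   # C(w1 w2), counted as a history
    return {t: c / history_counts[t[:2]] for t, c in trigram_counts.items()}

corpus = ["how are you", "how are they", "how are you doing"]
probs = mle_trigram(corpus)
print(probs[("how", "are", "you")])   # 0.6666666666666666 (= 2/3)
```

A trigram that never occurs in the corpus, such as "how are we", gets no entry at all, i.e. probability 0.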

Measuring the Quality of LMs
- Obvious approach to finding out whether LM 1 or LM 2 is better: run the translation system with both and choose the one that produces better output
- But:
  - Performance of the MT system also depends on the translation and distortion models, and on the interaction between these models
  - Performance of the MT system also depends on pruning
  - Expensive and time-consuming
- We would like to have an independent measure:
  - Declare an LM to be good if it restricts the future more strongly (i.e. if it has a smaller average "branching factor")

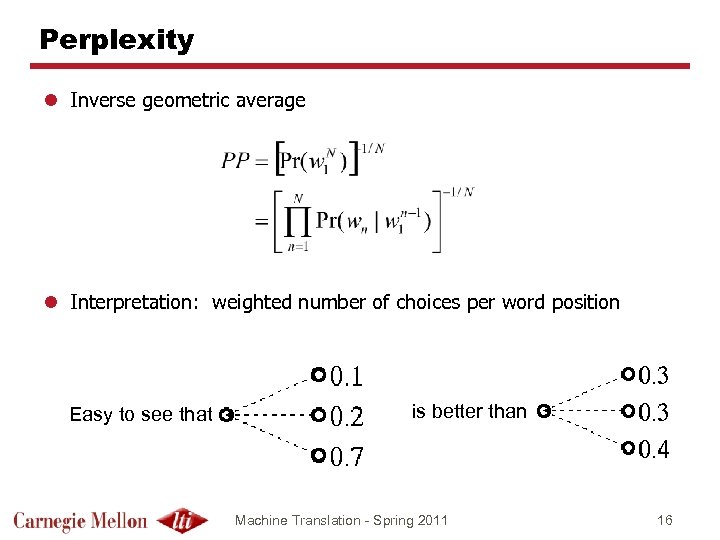

Perplexity
- Inverse geometric average of the word probabilities: $PP = P(w_1^N)^{-1/N} = \left[\prod_{n=1}^{N} p(w_n \mid h_n)\right]^{-1/N}$
- Interpretation: weighted number of choices per word position
- Easy to see that … is better than …
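As a small sketch, perplexity can be computed from the per-word probabilities $p(w_n \mid h_n)$ that some LM assigns to a test corpus. The function name and the use of base-2 logarithms are choices made here for illustration, and the lists of probabilities stand in for a real model.

```python
import math

def perplexity(word_probs):
    """Inverse geometric mean of the per-word probabilities p(w_n | h_n)."""
    n = len(word_probs)
    log2_prob = sum(math.log2(p) for p in word_probs)   # log2 P(w_1^N)
    return 2 ** (-log2_prob / n)

# A model that always spreads its mass over 4 equally likely words
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # 4.0
# Uneven probabilities with the same geometric mean give the same perplexity
print(perplexity([0.5, 0.125]))              # 4.0
```

The first case matches the "weighted number of choices" reading: a uniform distribution over $V$ words yields a perplexity of exactly $V$. Applying `math.log2` to the result gives the log perplexity in bits.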

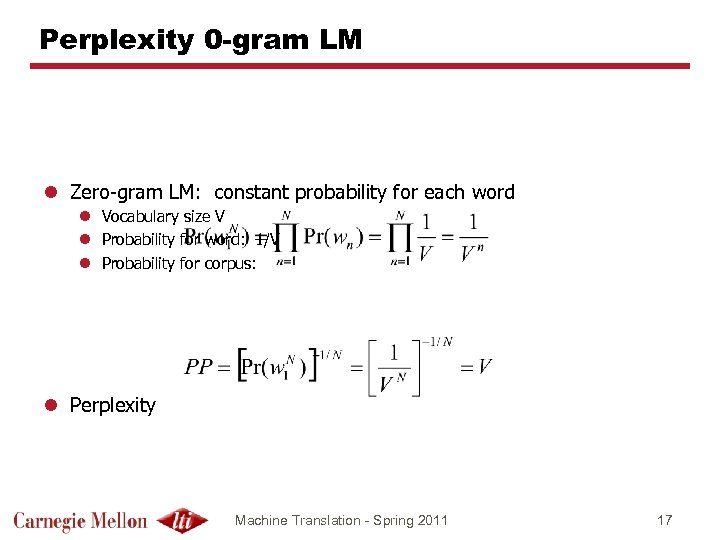

Perplexity of a 0-gram LM
- Zero-gram LM: constant probability for each word
- Vocabulary size $V$
- Probability for a word: $1/V$
- Probability for the corpus: $P(w_1^N) = (1/V)^N$
- Perplexity: $PP = \left[(1/V)^N\right]^{-1/N} = V$

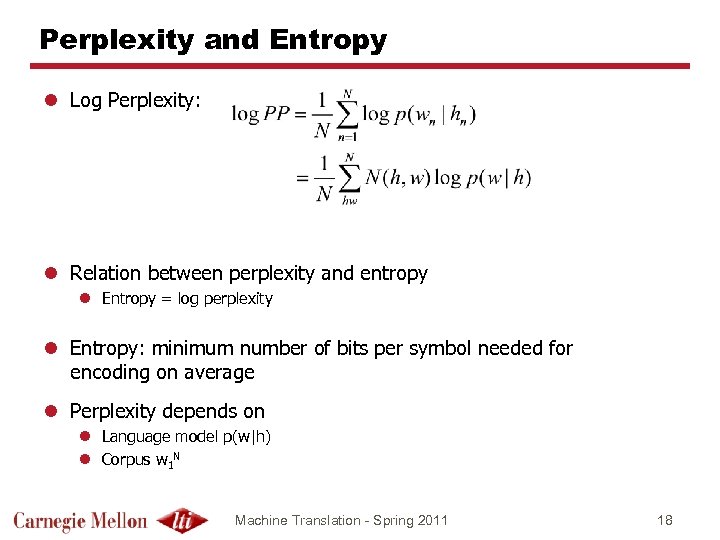

Perplexity and Entropy
- Log perplexity: $\log_2 PP = -\frac{1}{N} \sum_{n=1}^{N} \log_2 p(w_n \mid h_n)$
- Relation between perplexity and entropy:
  - Entropy = log perplexity (i.e. $PP = 2^{H}$)
  - Entropy: minimum average number of bits per symbol needed for encoding
- Perplexity depends on:
  - Language model $p(w \mid h)$
  - Corpus $w_1^N$

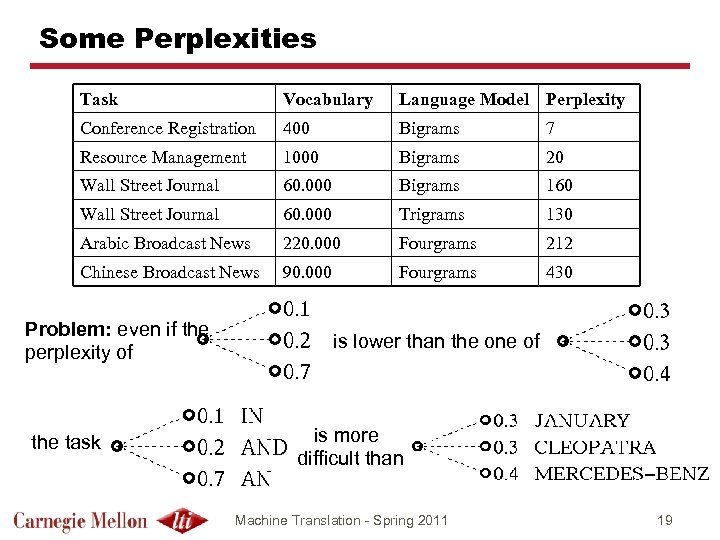

Some Perplexities

| Task | Vocabulary | Language Model | Perplexity |
|---|---|---|---|
| Conference Registration | 400 | Bigrams | 7 |
| Resource Management | 1,000 | Bigrams | 20 |
| Wall Street Journal | 60,000 | Bigrams | 160 |
| Wall Street Journal | 60,000 | Trigrams | 130 |
| Arabic Broadcast News | 220,000 | 4-grams | 212 |
| Chinese Broadcast News | 90,000 | 4-grams | 430 |

Problem: even if the perplexity of the task … is lower than that of …, … is more difficult than …

Sparse Data and Smoothing
- Many n-grams are not seen, so they get zero probability
- Sentences with such an n-gram would be considered impossible by the model
- Need smoothing:
  - Essentially: events unseen in the training corpus still get probability > 0
  - Need to deduct probability from seen events and distribute it to unseen (and rare) events
  - Robin Hood principle: take from the rich, redistribute to the poor
- Large body of work on smoothing in speech recognition

Smoothing

Two types of smoothing:
- Backing off: if word $w_n$ was not seen with history $w_1 \dots w_{n-1}$, back off to a shorter history. Different variants:
  - Absolute discounting
  - Katz smoothing
  - (Modified) Kneser-Ney smoothing
- Linear interpolation: interpolate with the probability distribution for n-grams with shorter histories

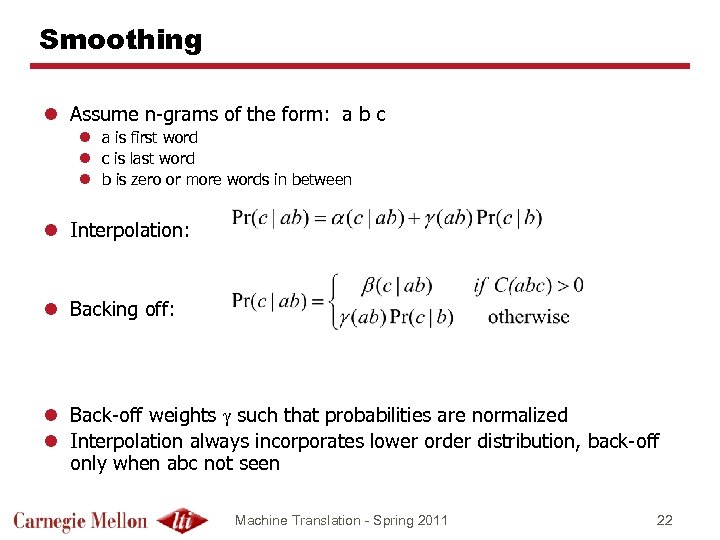

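Smoothing
- Assume n-grams of the form $a\,b\,c$:
  - $a$ is the first word
  - $c$ is the last word
  - $b$ is zero or more words in between
- Interpolation: $p_I(c \mid ab) = f(c \mid ab) + g(ab)\, p_I(c \mid b)$
- Backing off: $p_{BO}(c \mid ab) = f(c \mid ab)$ if $C(abc) > 0$, otherwise $g(ab)\, p_{BO}(c \mid b)$
  - $f(c \mid ab)$: discounted relative frequency of $abc$
- Back-off weights $g$ are chosen such that the probabilities are normalized
- Interpolation always incorporates the lower-order distribution; back-off uses it only when $abc$ was not seen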
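To make the normalization of the back-off weights concrete, here is a sketch for a single history $ab$. It assumes the discounted estimates $f(c \mid ab)$ for the seen words and the full lower-order distribution $p(c \mid b)$ are already given as dictionaries (hypothetical toy inputs; how $f$ is discounted is left open). For back-off, $g(ab)$ spreads the left-over mass only over the lower-order mass of the unseen words; for interpolation, $g(ab)$ is simply the left-over mass.

```python
def backoff_weight(f_seen, p_lower):
    """g(ab) for back-off: left-over mass / lower-order mass of the unseen words."""
    left_over = 1.0 - sum(f_seen.values())
    unseen_lower_mass = 1.0 - sum(p_lower[c] for c in f_seen)
    return left_over / unseen_lower_mass

def p_backoff(c, f_seen, p_lower):
    # Use f(c|ab) if abc was seen, otherwise back off to g(ab) * p(c|b).
    if c in f_seen:
        return f_seen[c]
    return backoff_weight(f_seen, p_lower) * p_lower[c]

def p_interpolated(c, f_seen, p_lower):
    # Always add the lower-order term; g(ab) is the mass removed by discounting.
    g = 1.0 - sum(f_seen.values())
    return f_seen.get(c, 0.0) + g * p_lower[c]

p_lower = {"x": 0.5, "y": 0.3, "z": 0.2}   # toy p(c | b)
f_seen = {"x": 0.6, "y": 0.2}              # toy discounted f(c | ab); z unseen after ab
for c in p_lower:
    print(c, round(p_backoff(c, f_seen, p_lower), 3),
          round(p_interpolated(c, f_seen, p_lower), 3))
```

With these toy numbers, both versions sum to 1 over the vocabulary, but the unseen word z gets 0.2 under back-off and only 0.04 under interpolation, while the seen word x gains extra mass under interpolation.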

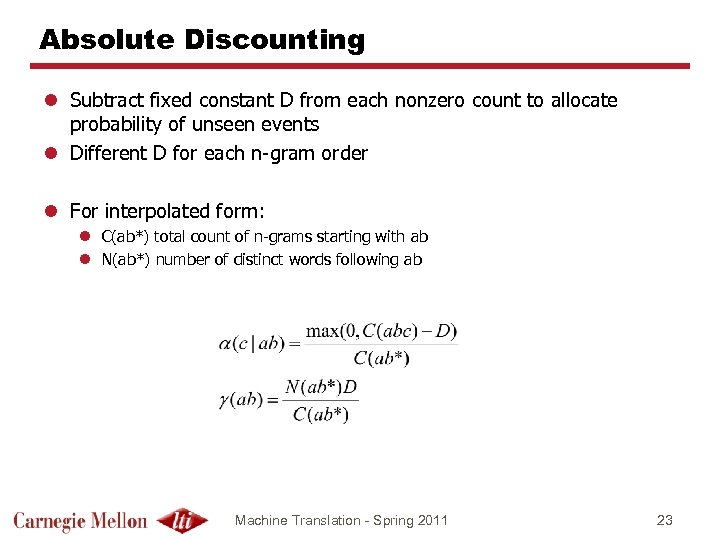

Absolute Discounting
- Subtract a fixed constant $D$ from each nonzero count to allocate probability mass to unseen events
- Different $D$ for each n-gram order
- For the interpolated form: $p(c \mid ab) = \frac{\max(C(abc) - D,\, 0)}{C(ab\ast)} + \frac{D \cdot N(ab\ast)}{C(ab\ast)}\, p(c \mid b)$
  - $C(ab\ast)$: total count of n-grams starting with $ab$
  - $N(ab\ast)$: number of distinct words following $ab$
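For one history $ab$, the interpolated formula can be sketched as follows. The toy counts, the lower-order distribution, and $D = 0.5$ are made-up illustration values; in practice $D$ would be set per n-gram order.

```python
def absolute_discount(counts_after_ab, p_lower, D=0.5):
    """Interpolated absolute discounting for one history ab.

    counts_after_ab: c -> C(abc), only words actually seen after ab
    p_lower:         c -> lower-order p(c | b) over the whole vocabulary
    D:               discount constant (0.5 is an arbitrary example value)
    """
    total = sum(counts_after_ab.values())      # C(ab*)
    distinct = len(counts_after_ab)            # N(ab*)
    gamma = D * distinct / total               # mass freed by discounting
    return {
        c: max(counts_after_ab.get(c, 0) - D, 0) / total + gamma * p_lower[c]
        for c in p_lower
    }

probs = absolute_discount({"x": 3, "y": 1}, {"x": 0.5, "y": 0.3, "z": 0.2})
print(probs)
```

Here $C(ab\ast) = 4$ and $N(ab\ast) = 2$, so discounting frees $0.5 \cdot 2 / 4 = 0.25$ of the mass, which the unseen word z receives in proportion to $p(z \mid b)$: $0.25 \cdot 0.2 = 0.05$.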

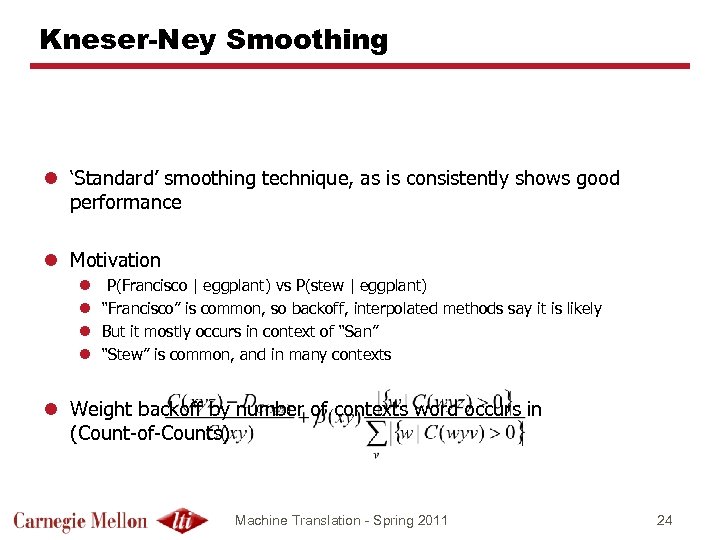

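Kneser-Ney Smoothing
- 'Standard' smoothing technique, as it consistently shows good performance
- Motivation: compare $P(\text{Francisco} \mid \text{eggplant})$ vs $P(\text{stew} \mid \text{eggplant})$
  - "Francisco" is common, so back-off and interpolated methods say it is likely
  - But it mostly occurs in the context of "San"
  - "Stew" is common, and occurs in many contexts
- Weight the back-off distribution by the number of distinct contexts a word occurs in, rather than by its raw count: $p_{KN}(c) = \frac{N(\ast c)}{N(\ast\ast)}$, where $N(\ast c)$ is the number of distinct words preceding $c$ and $N(\ast\ast)$ the number of distinct bigrams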
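A sketch of the "count contexts, not occurrences" idea: the lower-order distribution is estimated from the number of distinct words preceding each word. The toy bigram list is invented to mirror the Francisco/stew example; a full Kneser-Ney model would also discount the higher-order counts, which is omitted here.

```python
from collections import Counter

def continuation_probs(bigram_tokens):
    """Kneser-Ney lower-order distribution p_KN(w) = N(*w) / N(**)."""
    bigram_types = set(bigram_tokens)                   # distinct (v, w) pairs
    contexts = Counter(w for _, w in bigram_types)      # N(*w): distinct left words
    return {w: n / len(bigram_types) for w, n in contexts.items()}

# Toy data (made up): "francisco" is frequent but only ever follows "san"
bigrams = [("san", "francisco")] * 4 + [("beef", "stew"), ("lamb", "stew"), ("irish", "stew")]
raw = Counter(w for _, w in bigrams)
print(raw["francisco"], raw["stew"])          # 4 3
cont = continuation_probs(bigrams)
print(cont["francisco"], cont["stew"])        # 0.25 0.75
```

Raw counts favour "francisco", while the continuation distribution favours "stew", which is the behaviour the slide motivates.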

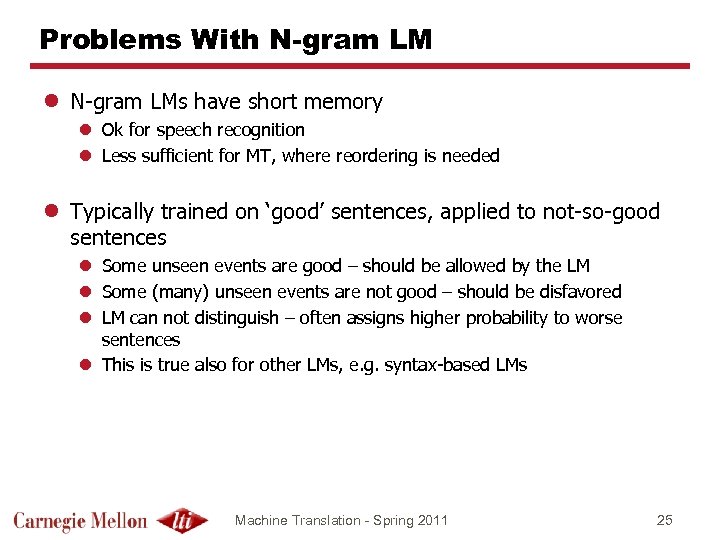

Problems With N-gram LMs
- N-gram LMs have a short memory
  - OK for speech recognition
  - Less adequate for MT, where reordering is needed
- Typically trained on ‘good’ sentences, applied to not-so-good sentences
  - Some unseen events are good and should be allowed by the LM
  - Some (many) unseen events are not good and should be disfavored
  - The LM cannot distinguish them, and often assigns higher probability to worse sentences
- This is also true for other LMs, e.g. syntax-based LMs

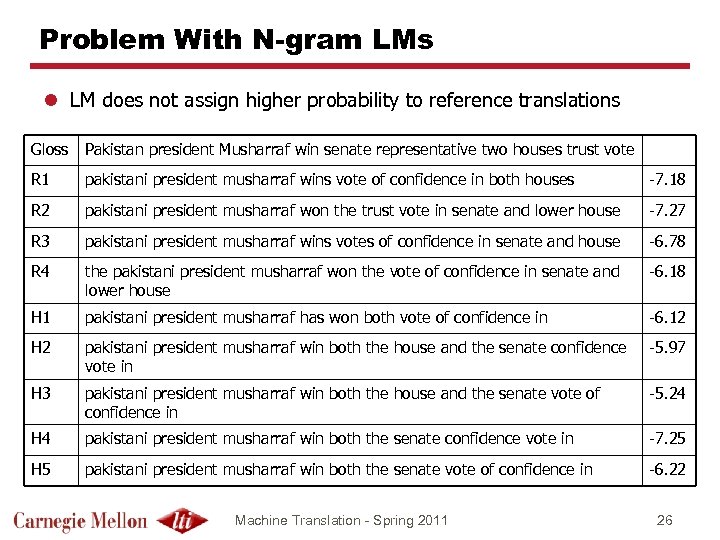

Problem With N-gram LMs
- The LM does not assign higher probability to reference translations

Gloss: Pakistan president Musharraf win senate representative two houses trust vote

| | Translation | LM score |
|---|---|---|
| R1 | pakistani president musharraf wins vote of confidence in both houses | -7.18 |
| R2 | pakistani president musharraf won the trust vote in senate and lower house | -7.27 |
| R3 | pakistani president musharraf wins votes of confidence in senate and house | -6.78 |
| R4 | the pakistani president musharraf won the vote of confidence in senate and lower house | -6.18 |
| H1 | pakistani president musharraf has won both vote of confidence in | -6.12 |
| H2 | pakistani president musharraf win both the house and the senate confidence vote in | -5.97 |
| H3 | pakistani president musharraf win both the house and the senate vote of confidence in | -5.24 |
| H4 | pakistani president musharraf win both the senate confidence vote in | -7.25 |
| H5 | pakistani president musharraf win both the senate vote of confidence in | -6.22 |

R1-R4 are reference translations and H1-H5 system hypotheses; the ungrammatical H3 (-5.24) scores higher than every reference.

Dealing with Large LMs
- Three strategies:
  - Distribute over many machines
  - Filter
  - Use lossy compression

Distributed LM
- Want to use large LMs:
  - From large corpora
  - Long histories: 5-gram, 6-gram, …
- Too much data to fit into the memory of one workstation
- Use a client-server architecture:
  - Each server owns part of the LM data
  - Use a suffix array to find n-grams and get n-gram counts
  - Efficiency has been greatly improved by batch-mode communication between client and server
- The client collects n-gram occurrence counts and calculates probabilities
- Simple smoothing: linear interpolation with uniform weights
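The client/server split can be sketched in a few lines. This is a toy under stated assumptions: each hypothetical `ShardServer` holds a plain `Counter` of n-gram counts instead of a suffix array, `lookup` stands in for one batched network request, and orders whose history was never seen are simply left out of the uniform average (one of several possible conventions).

```python
from collections import Counter

class ShardServer:
    """Hypothetical server owning one corpus shard (a Counter replaces the suffix array)."""

    def __init__(self, sentences, max_order):
        self.counts = Counter()
        for sentence in sentences:
            words = sentence.split()
            self.counts[()] += len(words)              # empty history: token count
            for n in range(1, max_order + 1):
                for i in range(len(words) - n + 1):
                    self.counts[tuple(words[i:i + n])] += 1

    def lookup(self, ngrams):
        # Batch mode: answer a whole list of n-gram queries in one call.
        return [self.counts[g] for g in ngrams]

def client_prob(servers, history, word, max_order):
    """Uniform-weight linear interpolation of relative frequencies over n-gram orders."""
    history = tuple(history)
    queries = []
    for k in range(min(max_order - 1, len(history)) + 1):   # history length k
        h = history[len(history) - k:]
        queries += [h + (word,), h]                          # C(h w) and C(h)
    totals = [0] * len(queries)
    for server in servers:                                   # add up counts from all shards
        for i, count in enumerate(server.lookup(queries)):
            totals[i] += count
    estimates = [totals[i] / totals[i + 1]
                 for i in range(0, len(queries), 2) if totals[i + 1] > 0]
    return sum(estimates) / len(estimates) if estimates else 0.0

servers = [ShardServer(["a b c", "a b d"], 3), ShardServer(["a b c"], 3)]
print(client_prob(servers, ["a", "b"], "c", 3))   # ≈ 0.5185 (= 14/27)
```

Here the unigram, bigram, and trigram estimates are 2/9, 2/3, and 2/3, each weighted 1/3. Only the client sees the summed counts, so adding a server for a new corpus part needs no change to the other servers.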

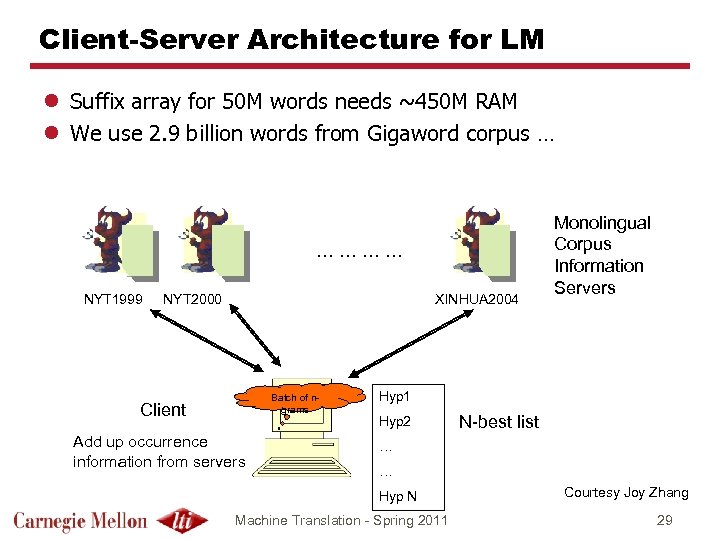

Client-Server Architecture for LM
- A suffix array for 50 M words needs ~450 MB of RAM
- We use 2.9 billion words from the Gigaword corpus

[Diagram: the client sends batches of n-grams from an N-best list (Hyp 1, Hyp 2, …, Hyp N) to monolingual corpus information servers (NYT 1999, NYT 2000, …, XINHUA 2004) and adds up the occurrence information returned by the servers.]

Courtesy Joy Zhang

Impact on Decoder
- Communication overhead is substantial
  - Cannot query each n-gram probability individually
- Restructure the decoder:
  - Generate many hypotheses, using all information but the LM
  - Send all queries $(\text{LMstate}_i, \text{WordSequence}_i)$ to the LM server
  - Get back all probabilities and new LM states $(p_i, \text{NewLMstate}_i)$
- Will have some impact on pruning
  - Early termination when expanding hypotheses cannot use the LM score

Filtering LM
- Assume we have been able to build a large LM
- Most entries will not be used to translate the current sentence
- Extract those entries which may be used:
  - Filter the phrase table for the current sentence
  - Establish a word list from the source sentence and the filtered phrase table
  - Extract all n-grams from the LM file which are completely covered by the word list
- Filtering does not change the decoding result:
  - All needed n-grams are still available
  - All probabilities remain unchanged
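The extraction step can be sketched as a set-membership test over each n-gram. The dictionary representation of the LM, the inclusion of the sentence-boundary markers `<s>` and `</s>` in the word list, and all names and toy values (a German source sentence "das Haus") are assumptions for illustration.

```python
def filter_lm(lm_entries, source_words, target_phrases):
    """Keep only LM entries whose words are all in the sentence's word list.

    lm_entries:     n-gram tuple -> LM entry (e.g. log prob, back-off weight)
    source_words:   words of the source sentence
    target_phrases: target sides of the phrase table filtered for this sentence
    """
    word_list = set(source_words) | {"<s>", "</s>"}   # boundary markers assumed
    for phrase in target_phrases:
        word_list.update(phrase.split())
    # Entries are copied unchanged, so the probabilities stay exactly the same.
    return {ngram: entry for ngram, entry in lm_entries.items()
            if all(w in word_list for w in ngram)}

lm = {("<s>", "the"): -0.8, ("the", "house"): -1.2,
      ("the", "senate"): -1.5, ("big", "house"): -2.0}
filtered = filter_lm(lm, ["das", "Haus"], ["the house", "house", "home"])
print(sorted(filtered))   # [('<s>', 'the'), ('the', 'house')]
```

Because each n-gram is tested independently, this step splits naturally over shards of the LM file, which is why MapReduce fits it well.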

Filtering LM
- Filtering is an expensive operation
  - Needs to be redone whenever the phrase table changes
  - MapReduce can help
- Typical filtered LM sizes (using a pruned phrase table: top 60):
  - Min: 100 KB, Avg: 75 MB, Max: 500 MB
  - Divide the size in bytes by 40 to get the approximate number of n-grams
- For long sentences and weakly pruned phrase tables, the filtered LM can still be large (>2 GB)
- But this works well for n-best list rescoring and system combination

Bloom-Filter LMs
- Proposed by David Talbot and Miles Osborne (2007)
- Bloom filters have long been used for storing data in a compact way
- General idea: use multiple hash functions to generate a ‘footprint’ for an object

Following slides courtesy of Matthias Eck …

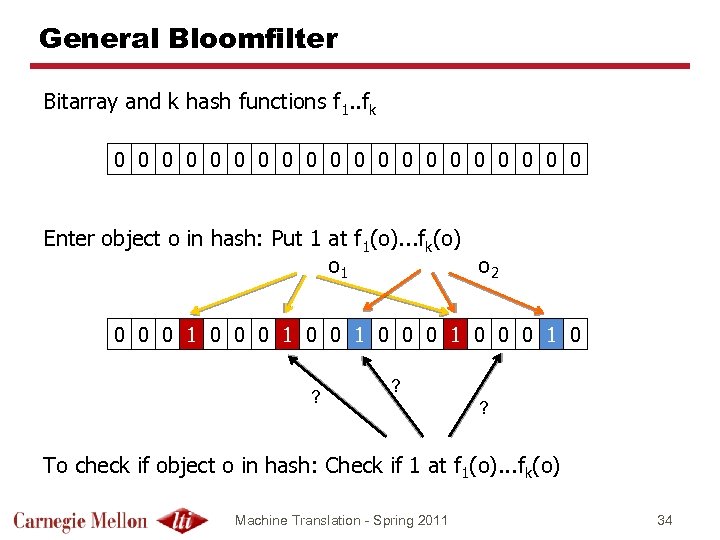

General Bloom Filter
- Bit array and $k$ hash functions $f_1 \dots f_k$; all bits start at 0
- To enter object $o$ in the hash: set the bits at positions $f_1(o), \dots, f_k(o)$ to 1
- To check whether object $o$ is in the hash: check whether all bits at $f_1(o), \dots, f_k(o)$ are 1

[Figure: bit array after inserting objects o1 and o2, and a lookup of an unknown object]
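A minimal Bloom filter in Python, as a sketch of the two operations. Deriving the $k$ hash functions by salting SHA-256 with the function index is an assumption made here for simplicity, not necessarily what Talbot and Osborne use.

```python
import hashlib

class BloomFilter:
    def __init__(self, m_bits, k):
        self.m, self.k = m_bits, k
        self.bits = bytearray((m_bits + 7) // 8)   # all bits start at 0

    def _positions(self, obj):
        # f_1(o) .. f_k(o): one SHA-256 digest per function index i
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{obj}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, obj):
        # Enter o: set the bit at every f_i(o) to 1
        for pos in self._positions(obj):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, obj):
        # Check o: all bits at f_i(o) must be 1 (can give false positives)
        return all((self.bits[pos // 8] >> (pos % 8)) & 1 for pos in self._positions(obj))

bf = BloomFilter(m_bits=1000, k=7)
for ngram in ["a b c", "b c d", "c d e"]:
    bf.add(ngram)
print("a b c" in bf)   # True: inserted objects are always found
```

There is no `remove` method: clearing a bit could erase part of another object's footprint, which is why objects cannot be deleted.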

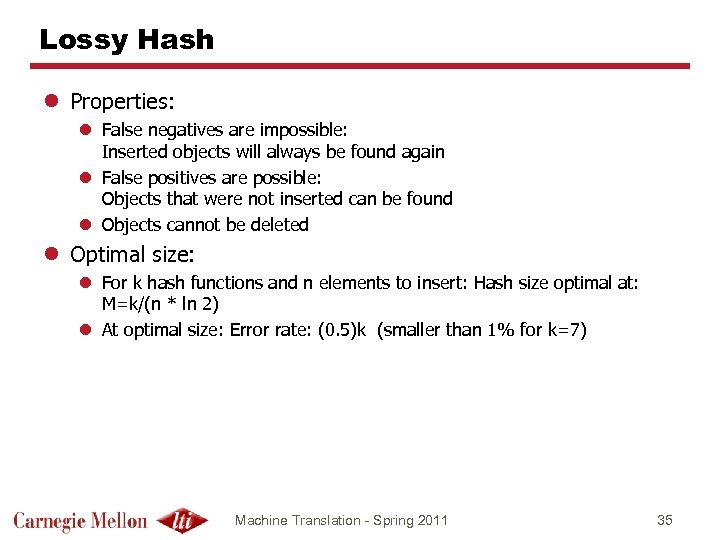

Lossy Hash
- Properties:
  - False negatives are impossible: inserted objects will always be found again
  - False positives are possible: objects that were not inserted can be found
  - Objects cannot be deleted
- Optimal size:
  - For $k$ hash functions and $n$ elements to insert, the hash size is optimal at $M = k \cdot n / \ln 2$ bits
  - At optimal size, the error rate is $(0.5)^k$ (smaller than 1% for $k = 7$)
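The sizing rule can be checked numerically. The sketch uses the standard false-positive approximation $(1 - e^{-kn/M})^k$ (background knowledge, not stated on the slide) and shows that at $M = k n / \ln 2$ bits it reduces to $(0.5)^k$. The example plugs in the figures from the experiments slide (1000 M entries, 4 hash functions).

```python
import math

def optimal_bits(n, k):
    """Bloom filter size M = k * n / ln 2 at which k hash functions are optimal."""
    return k * n / math.log(2)

def false_positive_rate(m, n, k):
    """Standard approximation of the error rate: (1 - exp(-k n / m)) ** k."""
    return (1 - math.exp(-k * n / m)) ** k

m = optimal_bits(1000e6, 4)
print(round(m / 1e6))                          # 5771 (Mbit)
print(round(m / 8 / 1e6))                      # 721 (MB)
print(false_positive_rate(m, 1000e6, 4))       # ~0.0625 = 0.5 ** 4
print(0.5 ** 7)                                # 0.0078125, below 1%
```

About 5.8 Gbit, i.e. roughly 720 MB, which is consistent with the 1 GByte chosen in the experiments.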

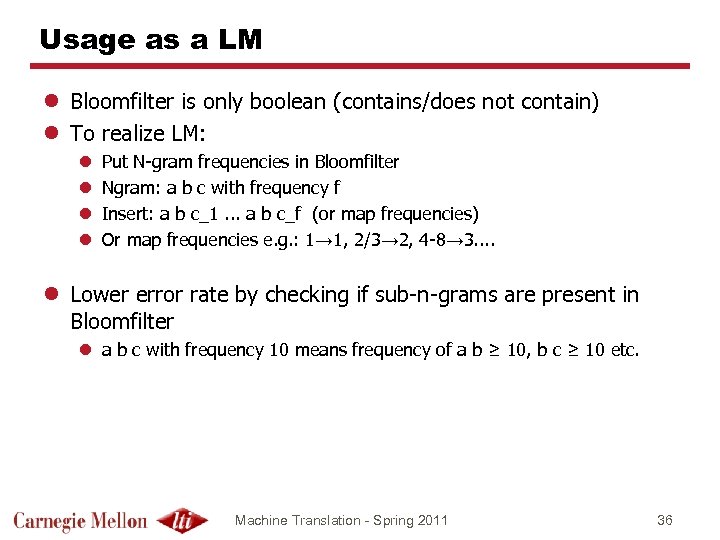

Usage as an LM
- A Bloom filter is only boolean (contains / does not contain)
- To realize an LM, put n-gram frequencies into the Bloom filter:
  - N-gram $a\,b\,c$ with frequency $f$: insert `a b c_1`, …, `a b c_f`
  - Or map frequencies first, e.g. 1 → 1, 2/3 → 2, 4-8 → 3, …
- Lower the error rate by checking whether sub-n-grams are present in the Bloom filter
  - $a\,b\,c$ with frequency 10 means the frequency of $a\,b$ ≥ 10, $b\,c$ ≥ 10, etc.
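A sketch of storing quantized n-gram frequencies in a membership structure and reading them back with the sub-n-gram bound. Assumptions: the quantization is taken as $1 + \lfloor \log_2 f \rfloor$ (1 → 1, 2-3 → 2, 4-7 → 3, a close variant of the mapping above), keys are formed as `a b c_j`, and a Python `set` stands in for the Bloom filter so the example is self-contained; any object offering `add` and `in` would do. With a real Bloom filter, a false positive can make a lookup overshoot, and the sub-n-gram check caps that.

```python
def level(freq):
    """Assumed quantization 1 + floor(log2 freq): 1->1, 2-3->2, 4-7->3, ..."""
    return freq.bit_length()

def insert(store, ngram, freq):
    # Insert "a b c_1" ... "a b c_L", where L is the quantized frequency
    key = " ".join(ngram)
    for j in range(1, level(freq) + 1):
        store.add(f"{key}_{j}")

def lookup(store, ngram, cap=None):
    # Probe "a b c_1", "a b c_2", ... until a key is missing (or the cap is reached)
    key = " ".join(ngram)
    j = 0
    while (cap is None or j < cap) and f"{key}_{j + 1}" in store:
        j += 1
    return j

def lookup_checked(store, ngram):
    # freq(a b c) <= freq(a b) and freq(b c), so their levels bound the level of a b c
    if len(ngram) == 1:
        return lookup(store, ngram)
    cap = min(lookup_checked(store, ngram[:-1]), lookup_checked(store, ngram[1:]))
    return lookup(store, ngram, cap)

store = set()   # stand-in for a Bloom filter
for ngram, freq in {("a",): 20, ("b",): 30, ("c",): 15,
                    ("a", "b"): 12, ("b", "c"): 10, ("a", "b", "c"): 10}.items():
    insert(store, ngram, freq)
store.add("a b c_5")                           # simulate a false positive
print(lookup(store, ("a", "b", "c")))          # 5: fooled by the false positive
print(lookup_checked(store, ("a", "b", "c")))  # 4: capped by "a b" and "b c"
```

Because the quantization is monotonic, the level of an n-gram can never exceed the levels of its sub-n-grams, so the cap never rejects a correctly stored value.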

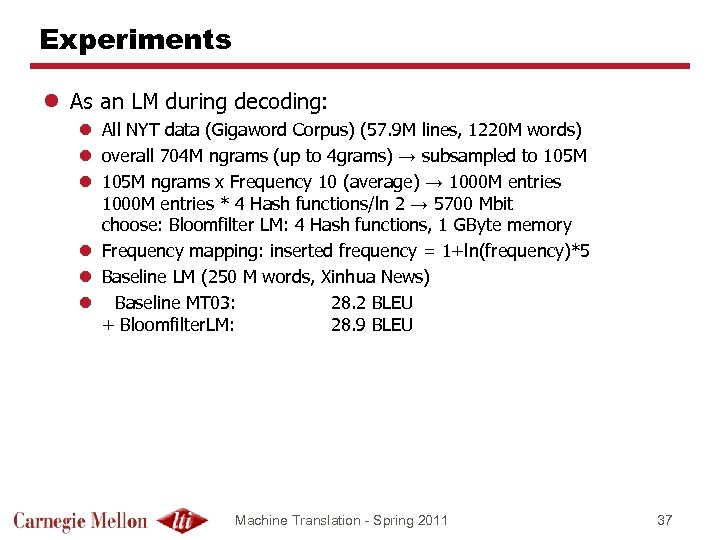

Experiments
- As an LM during decoding:
  - All NYT data (Gigaword corpus): 57.9 M lines, 1220 M words
  - Overall 704 M n-grams (up to 4-grams), subsampled to 105 M
  - 105 M n-grams × frequency 10 (average) → 1000 M entries; × 4 hash functions / ln 2 → ~5700 Mbit
  - Choice: Bloom filter LM with 4 hash functions, 1 GByte memory
  - Frequency mapping: inserted frequency = 1 + ln(frequency) · 5
- Baseline LM: 250 M words, Xinhua News
- MT03: baseline 28.2 BLEU; + Bloom filter LM: 28.9 BLEU

Other LMs
- Cache LM: boost n-grams seen recently, e.g. in the current document
- Trigger LM: boost the current word based on specific words in the past
- Class-based LMs: generalize, e.g.
  - For numbers, named entities
  - Cluster the entire vocabulary
  - Longer matching history if the number of classes is small
- Continuous LMs (Holger Schwenk et al. 2006)
  - Based on neural nets
  - Used only to predict the most frequent words

Summary
- LM used for translation: typically n-gram
  - More data, longer histories possible
  - Large systems use 5-grams
- Smoothing is important
  - Different smoothing techniques
  - Kneser-Ney is a good default
- Many LM toolkits are available
  - SRILM is the most widely used
  - KenLM (Kenneth Heafield) provides a more memory-efficient implementation (integrated into Moses)
- Using very large LMs requires engineering
  - MapReduce / Hadoop
  - Bloom filters
- Many extensions possible
  - Class-based, trigger, cache, …
  - LSA and topic LMs
  - Syntactic LMs
