- Number of slides: 29

MACHINE TRANSLATION AND MT TOOLS: GIZA++ AND MOSES

Nirdesh Chauhan

Outline

- Problem statement in SMT
- Translation models
- Using Giza++ and Moses

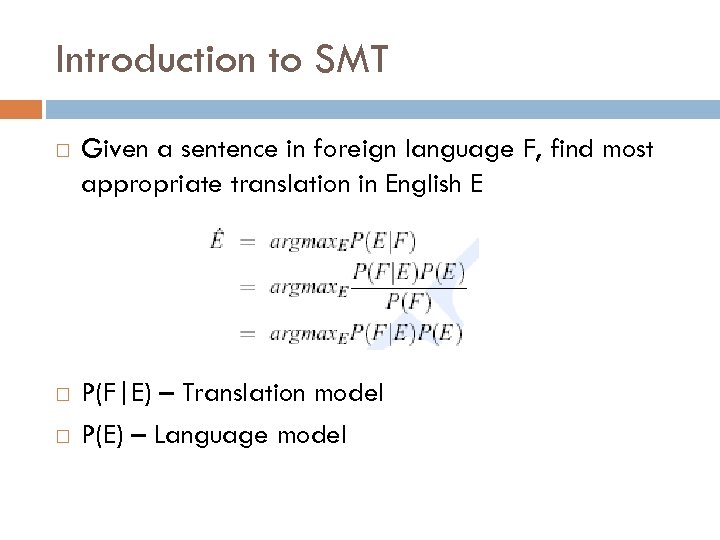

Introduction to SMT

- Given a sentence in a foreign language F, find the most appropriate translation in English E.
- P(F|E): translation model
- P(E): language model

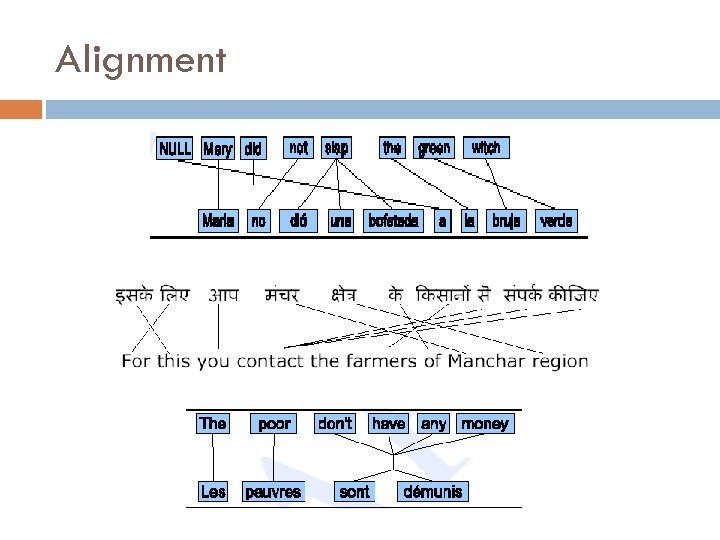
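
The two models named on this slide combine through Bayes' rule. A sketch in the slide's own symbols follows; the hat notation for the chosen translation is an assumption and may differ from the formula in the slide image.

```latex
\hat{E} = \arg\max_{E} P(E \mid F)
        = \arg\max_{E} \frac{P(F \mid E)\, P(E)}{P(F)}
        = \arg\max_{E} P(F \mid E)\, P(E)
```

P(F) can be dropped in the last step because it does not depend on E.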

The Generation Process

- Partition: think of all possible partitions of the source-language sentence.
- Lexicalization: for a given partition, translate each phrase into the foreign language.
- Reordering: permute the set of all foreign words, possibly moving them across phrase boundaries.
- We need the notion of alignment to better explain the mathematics behind the generation process.

Alignment

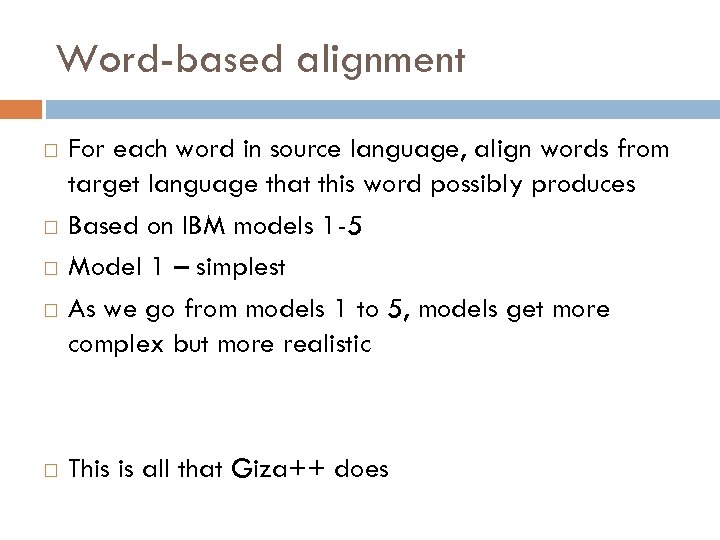

Word-based alignment

- For each word in the source language, align the words from the target language that this word possibly produces.
- Based on IBM Models 1-5
- Model 1 is the simplest.
- As we go from Model 1 to Model 5, the models get more complex but more realistic.
- This is all that Giza++ does.

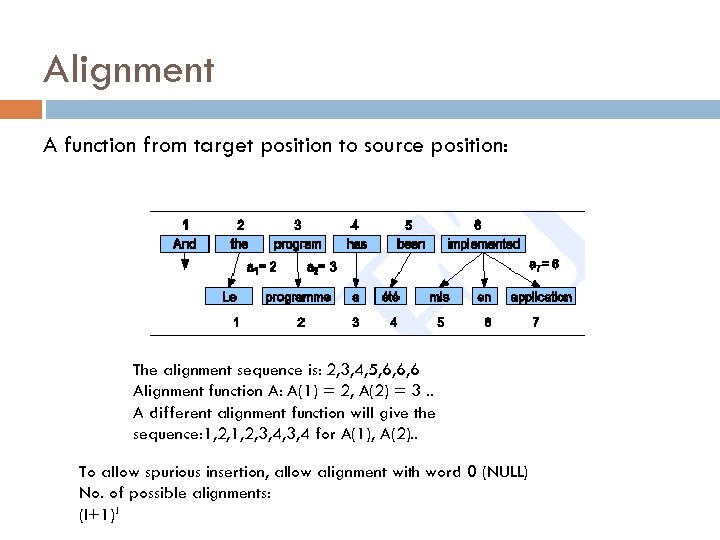

Alignment

- An alignment is a function from target position to source position.
- The alignment sequence is: 2, 3, 4, 5, 6, 6, 6
- Alignment function A: A(1) = 2, A(2) = 3, ...
- A different alignment function will give the sequence 1, 2, 3, 4, 3, 4 for A(1), A(2), ...
- To allow spurious insertion, allow alignment with word 0 (NULL).
- Number of possible alignments: (I+1)^J, for I source words and J target words

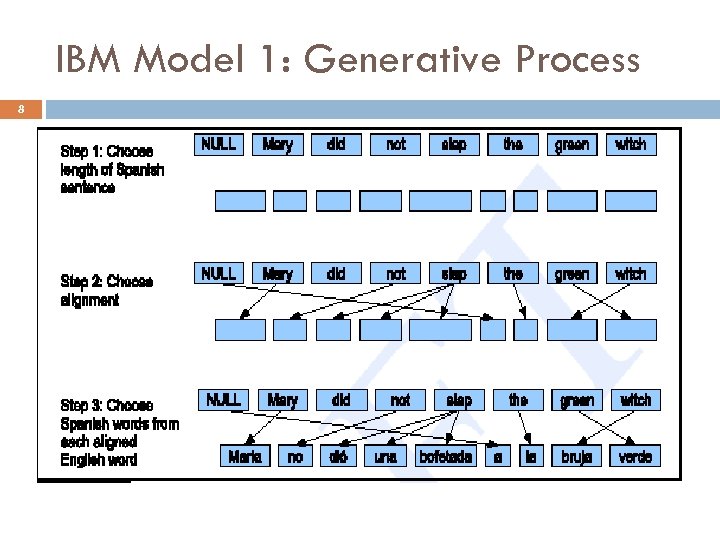
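
To make the counting argument concrete, here is a minimal Python sketch. It treats an alignment as a tuple (A(1), ..., A(J)) with 0 standing for NULL. The function names are illustrative, and the source length I = 6 is an assumption: it is the largest index in the slide's sequence, not a value stated on the slide.

```python
from itertools import product


def count_alignments(source_len: int, target_len: int) -> int:
    """Each of the J target positions picks one of I source positions or NULL (0)."""
    return (source_len + 1) ** target_len


def enumerate_alignments(source_len: int, target_len: int):
    """Yield every alignment as a tuple (A(1), ..., A(J)); 0 stands for NULL."""
    return product(range(source_len + 1), repeat=target_len)


# The sequence from the slide: A(1) = 2, A(2) = 3, ..., A(7) = 6.
slide_alignment = (2, 3, 4, 5, 6, 6, 6)

# Assumption: the source sentence has I = 6 words.
print(count_alignments(6, len(slide_alignment)))  # 7 ** 7 = 823543

# For a tiny case the alignments can be listed exhaustively.
print(len(list(enumerate_alignments(2, 2))))  # 3 ** 2 = 9
```

The exponential growth in J is why training works with expected counts instead of listing alignments one by one.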

IBM Model 1: Generative Process

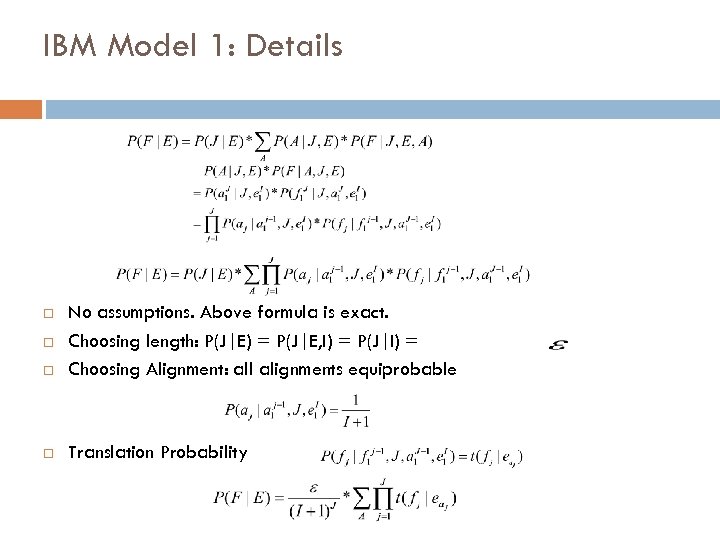

IBM Model 1: Details

- No assumptions. The above formula is exact.
- Choosing length: P(J|E) = P(J|E, I) = P(J|I) = [value given in the slide image]
- Choosing alignment: all alignments are equiprobable.
- Translation probability

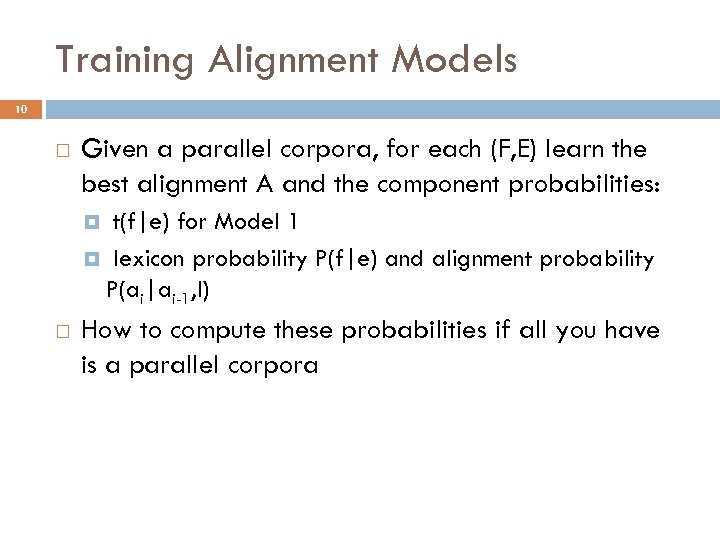
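
Putting the three choices on this slide together gives the usual Model 1 expression. This is a sketch of the standard textbook form, not a transcription of the slide image. The constant $\epsilon$ for the length probability is an assumption, since the slide's right-hand side did not survive, and $e_0$ denotes the NULL word.

```latex
P(F, A \mid E) = \frac{\epsilon}{(I+1)^{J}} \prod_{j=1}^{J} t(f_j \mid e_{a_j})
\qquad
P(F \mid E) = \sum_{A} P(F, A \mid E)
            = \frac{\epsilon}{(I+1)^{J}} \prod_{j=1}^{J} \sum_{i=0}^{I} t(f_j \mid e_i)
```

The factor $1/(I+1)^{J}$ is the uniform probability of one alignment out of the $(I+1)^J$ possible ones.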

Training Alignment Models

- Given a parallel corpus, for each (F, E) learn the best alignment A and the component probabilities:
  - t(f|e) for Model 1
  - lexicon probability P(f|e) and alignment probability P(a_i | a_{i-1}, I)
- How do we compute these probabilities if all we have is a parallel corpus?

Intuition: Interdependence of Probabilities

- If you knew which words are probable translations of each other, you could guess which alignments are probable and which are improbable.
- If you were given alignments with probabilities, you could compute translation probabilities.
- This looks like a chicken-and-egg problem.
- The EM algorithm comes to the rescue.

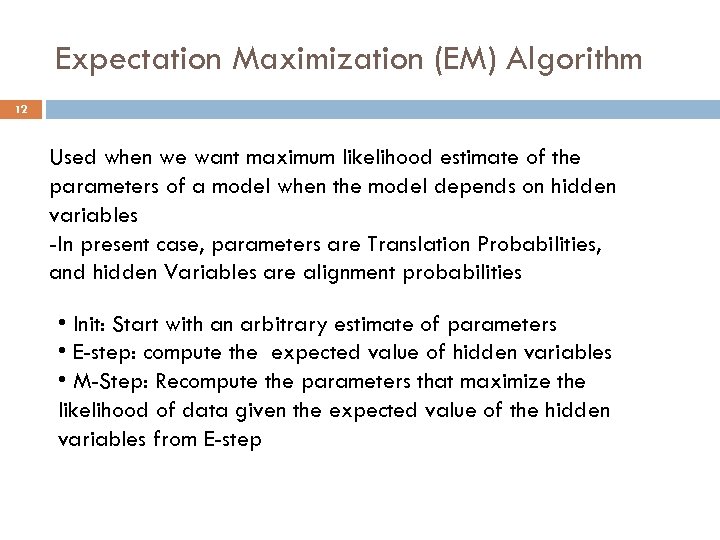

Expectation Maximization (EM) Algorithm

- Used when we want the maximum likelihood estimate of the parameters of a model that depends on hidden variables.
- In the present case, the parameters are the translation probabilities and the hidden variables are the alignment probabilities.
- Init: start with an arbitrary estimate of the parameters.
- E-step: compute the expected value of the hidden variables.
- M-step: recompute the parameters that maximize the likelihood of the data, given the expected values of the hidden variables from the E-step.

Example of EM Algorithm

- Sentence pairs: "green house" / "casa verde" and "the house" / "la casa"
- Init: assume that any word can generate any word with equal probability: P(la|house) = 1/3

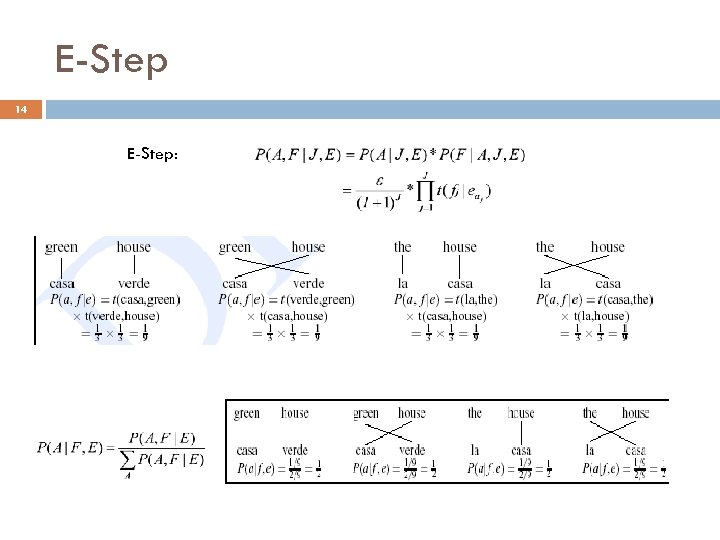

E-Step

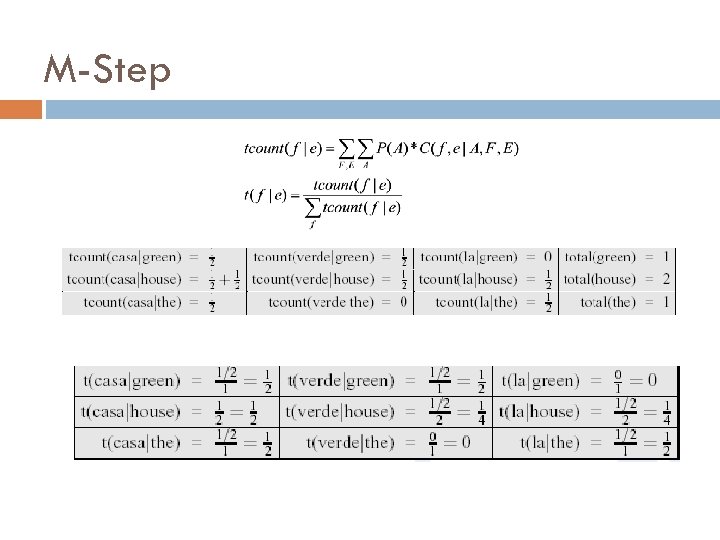

M-Step

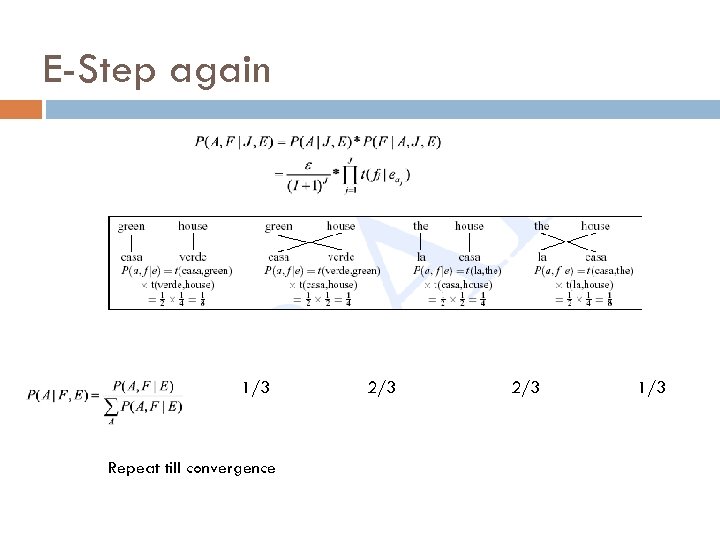

E-Step again

- Figure labels: 1/3, 2/3, 1/3
- Repeat until convergence

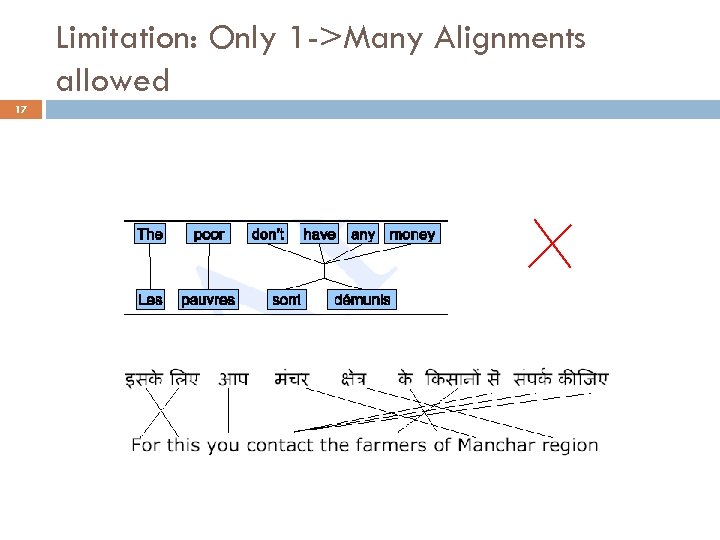
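
The E-step / M-step loop on the two-sentence example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the exact computation drawn on the slides. It has no NULL word and uses per-word expected counts, which for Model 1 is equivalent to summing over whole alignments. The function name `train_model1` is hypothetical.

```python
from collections import defaultdict


def train_model1(corpus, iterations):
    """EM for IBM Model 1 lexical probabilities t(f|e), without a NULL word."""
    f_vocab = {f for _, f_sent in corpus for f in f_sent}
    e_vocab = {e for e_sent, _ in corpus for e in e_sent}
    # Init: any word can generate any word with equal probability.
    t = {(f, e): 1.0 / len(f_vocab) for f in f_vocab for e in e_vocab}

    for _ in range(iterations):
        count = defaultdict(float)  # expected count of f generated by e
        total = defaultdict(float)  # expected count of anything generated by e
        # E-step: distribute each f over the e words of its sentence.
        for e_sent, f_sent in corpus:
            for f in f_sent:
                norm = sum(t[(f, e)] for e in e_sent)
                for e in e_sent:
                    frac = t[(f, e)] / norm
                    count[(f, e)] += frac
                    total[e] += frac
        # M-step: renormalize the expected counts into probabilities.
        t = {(f, e): count[(f, e)] / total[e] for (f, e) in t}
    return t


corpus = [
    (["green", "house"], ["casa", "verde"]),
    (["the", "house"], ["la", "casa"]),
]

for n in (0, 1, 50):
    t = train_model1(corpus, n)
    print(n, round(t[("casa", "house")], 3), round(t[("verde", "green")], 3))
```

With zero iterations the table holds the uniform initial value 1/3. After one iteration t(casa|house) is 0.5, and it keeps growing because "casa" is the only word that co-occurs with "house" in both sentence pairs.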

Limitation: only 1 -> many alignments allowed

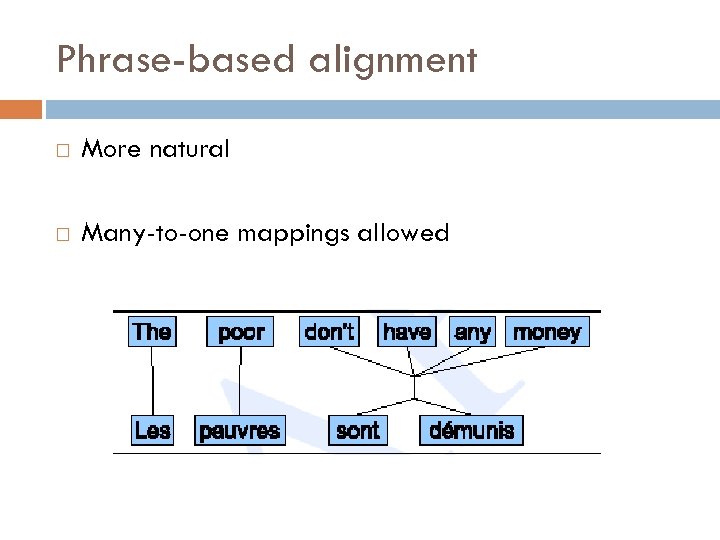

Phrase-based alignment

- More natural
- Many-to-one mappings allowed

Generating Bi-directional Alignments

- Existing models only generate uni-directional alignments.
- Combine two uni-directional alignments to get many-to-many bidirectional alignments.

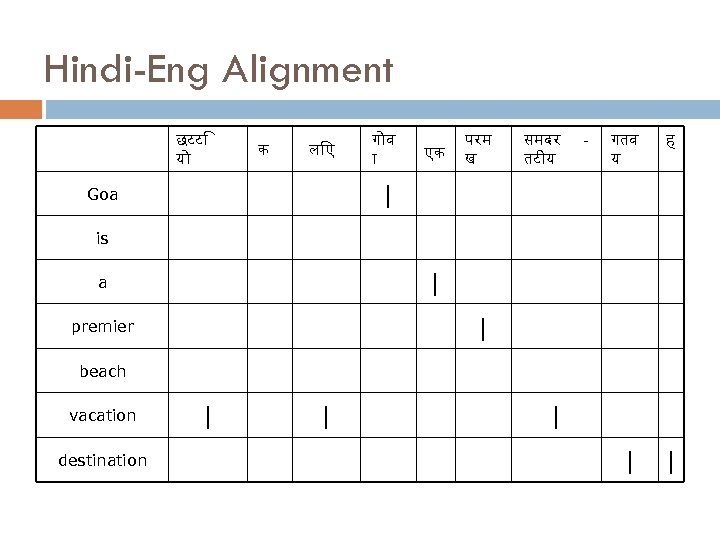
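
A small Python sketch of the combination step: each directional alignment is a function (one aligned position per word, 0 for NULL), and both are turned into sets of (English position, foreign position) links so they can be intersected or united. The helper names and the toy alignments are hypothetical, chosen only to show the idea.

```python
def links_from_f_to_e(f_to_e):
    """f_to_e[j-1] is the English position aligned to foreign word j (0 = NULL)."""
    return {(e, f) for f, e in enumerate(f_to_e, start=1) if e != 0}


def links_from_e_to_f(e_to_f):
    """e_to_f[i-1] is the foreign position aligned to English word i (0 = NULL)."""
    return {(e, f) for e, f in enumerate(e_to_f, start=1) if f != 0}


# Hypothetical alignments for a 2-word English / 3-word foreign sentence pair.
f2e = links_from_f_to_e([1, 2, 2])  # foreign words 2 and 3 both map to English word 2
e2f = links_from_e_to_f([1, 3])     # each English word maps to exactly one foreign word

intersection = f2e & e2f  # high precision: links both directions agree on
union = f2e | e2f         # high recall: links proposed by either direction

print(sorted(intersection))  # [(1, 1), (2, 3)]
print(sorted(union))         # [(1, 1), (2, 2), (2, 3)]
```

Each direction on its own can link a word to only one position, so only the combined set can contain many-to-many links.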

Hindi-Eng Alignment

- Hindi: छुट्टियों के लिए गोवा एक प्रमुख समुद्र-तटीय गंतव्य है
- English: Goa is a premier beach vacation destination

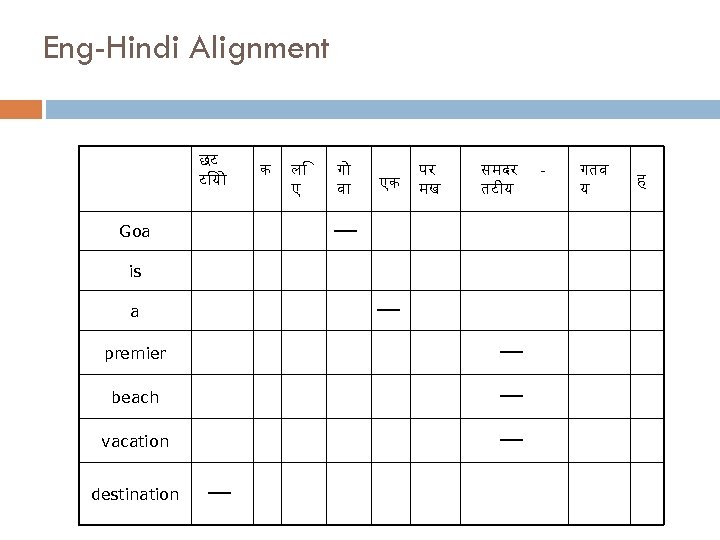

Eng-Hindi Alignment

- English: Goa is a premier beach vacation destination
- Hindi: छुट्टियों के लिए गोवा एक प्रमुख समुद्र-तटीय गंतव्य है

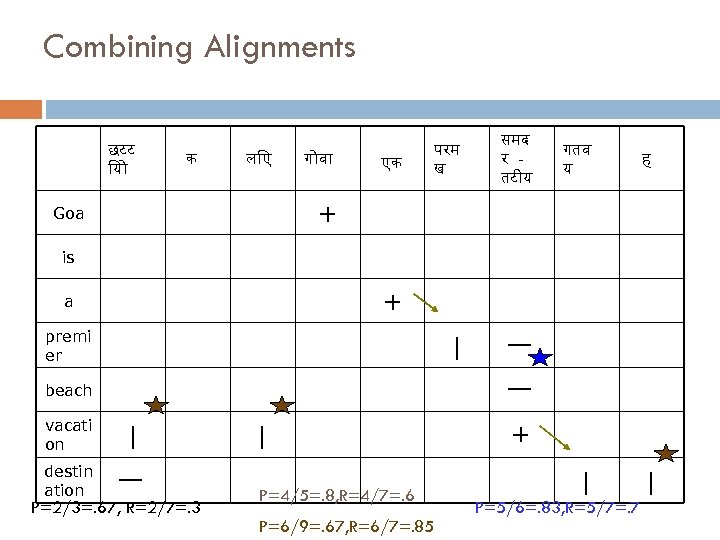

Combining Alignments

- Alignment grid for the same Hindi-English sentence pair
- Precision (P) and recall (R) values shown in the figure:
  - P = 2/3 = 0.67, R = 2/7 = 0.29
  - P = 4/5 = 0.80, R = 4/7 = 0.57
  - P = 5/6 = 0.83, R = 5/7 = 0.71
  - P = 6/9 = 0.67, R = 6/7 = 0.86

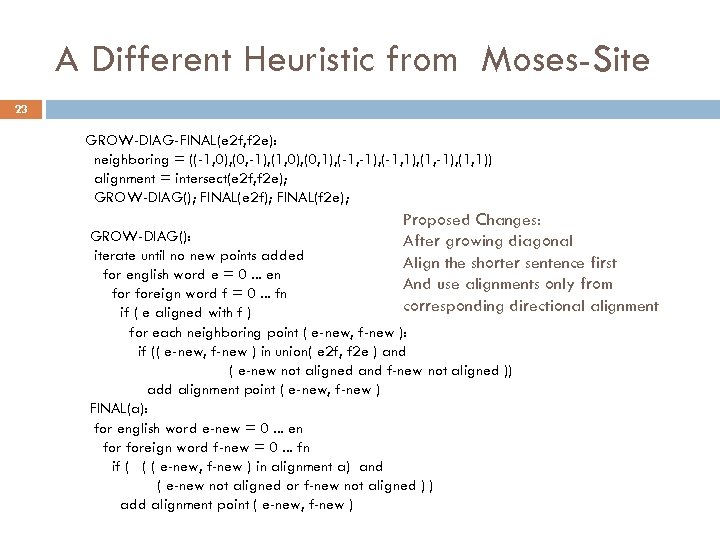
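
The precision and recall figures on this slide follow from counting links. A minimal Python sketch, assuming alignments are sets of (e, f) links scored against a reference alignment. The link sets below are made up so that the counts match the slide's first pair of values (3 proposed, 2 correct, 7 in the reference); they are not the slide's actual links.

```python
def precision_recall(proposed, reference):
    """Precision = correct / proposed, recall = correct / reference."""
    correct = len(proposed & reference)
    return correct / len(proposed), correct / len(reference)


# Hypothetical reference with 7 links and a proposal with 3 links, 2 of them correct.
reference = {(i, i) for i in range(7)}
proposed = {(0, 0), (1, 1), (2, 5)}

p, r = precision_recall(proposed, reference)
print(f"P = {p:.2f}, R = {r:.2f}")  # P = 0.67, R = 0.29
```

Adding links can only keep recall the same or raise it, while precision falls whenever the added links are wrong.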

A Different Heuristic from Moses-Site

    GROW-DIAG-FINAL(e2f, f2e):
      neighboring = ((-1,0), (0,-1), (1,0), (0,1), (-1,-1), (-1,1), (1,-1), (1,1))
      alignment = intersect(e2f, f2e);
      GROW-DIAG(); FINAL(e2f); FINAL(f2e);

    GROW-DIAG():
      iterate until no new points added
        for english word e = 0 ... en
          for foreign word f = 0 ... fn
            if ( e aligned with f )
              for each neighboring point ( e-new, f-new ):
                if ( ( e-new, f-new ) in union( e2f, f2e ) and
                     ( e-new not aligned and f-new not aligned ) )
                  add alignment point ( e-new, f-new )

    FINAL(a):
      for english word e-new = 0 ... en
        for foreign word f-new = 0 ... fn
          if ( ( e-new, f-new ) in alignment a and
               ( e-new not aligned or f-new not aligned ) )
            add alignment point ( e-new, f-new )

Proposed changes (after growing diagonal):

- Align the shorter sentence first.
- Use alignments only from the corresponding directional alignment.

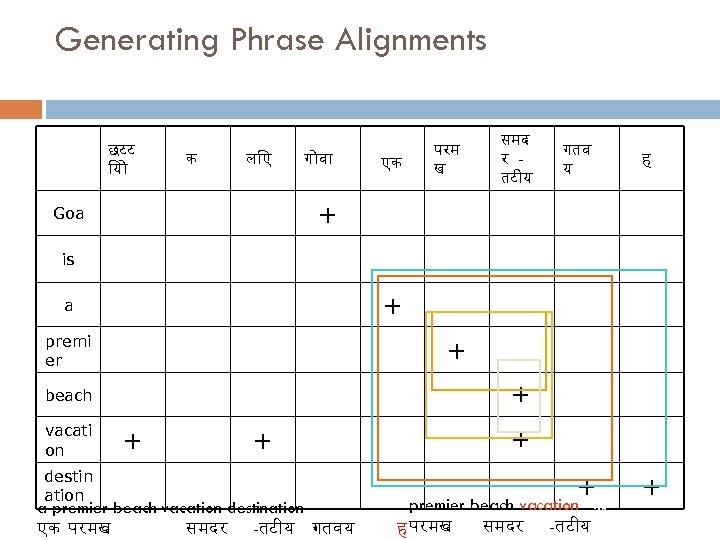
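
A minimal Python sketch of the pseudocode on this slide. It follows the conditions as written there: GROW-DIAG adds a neighbor only if both of its words are unaligned, and FINAL adds a point if either word is unaligned. Function and argument names are illustrative. Links are 0-based (e, f) pairs and the sentence lengths are passed explicitly. The proposed changes are not implemented.

```python
NEIGHBORING = [(-1, 0), (0, -1), (1, 0), (0, 1),
               (-1, -1), (-1, 1), (1, -1), (1, 1)]


def grow_diag_final(e2f, f2e, e_len, f_len):
    """Symmetrize two directional alignments given as sets of (e, f) links."""
    union = e2f | f2e
    alignment = set(e2f & f2e)  # start from the intersection

    def e_aligned(e):
        return any(pe == e for pe, _ in alignment)

    def f_aligned(f):
        return any(pf == f for _, pf in alignment)

    # GROW-DIAG: repeatedly add union points adjacent to existing points.
    added = True
    while added:
        added = False
        for e in range(e_len):
            for f in range(f_len):
                if (e, f) not in alignment:
                    continue
                for de, df in NEIGHBORING:
                    e_new, f_new = e + de, f + df
                    if ((e_new, f_new) in union
                            and not e_aligned(e_new)
                            and not f_aligned(f_new)):
                        alignment.add((e_new, f_new))
                        added = True

    # FINAL(e2f), then FINAL(f2e): add points that cover still-unaligned words.
    for directional in (e2f, f2e):
        for e_new in range(e_len):
            for f_new in range(f_len):
                if ((e_new, f_new) in directional
                        and (not e_aligned(e_new) or not f_aligned(f_new))):
                    alignment.add((e_new, f_new))
    return alignment


# Hypothetical directional alignments for a 3 x 3 sentence pair.
e2f = {(0, 0), (1, 1), (2, 1)}
f2e = {(0, 0), (1, 1), (1, 2)}
print(sorted(grow_diag_final(e2f, f2e, 3, 3)))
```

In this toy case GROW-DIAG adds nothing, because every candidate neighbor already has one aligned word. The two FINAL passes then pick up (2, 1) and (1, 2), which cover the words left unaligned by the intersection.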

Generating Phrase Alignments

- Sentence pair: छुट्टियों के लिए गोवा एक प्रमुख समुद्र-तटीय गंतव्य है / Goa is a premier beach vacation destination
- Example phrase pairs:
  - a premier beach vacation destination / एक प्रमुख समुद्र-तटीय गंतव्य
  - premier beach vacation / प्रमुख समुद्र-तटीय
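
One common way to read phrase pairs off a word alignment is the consistency criterion: a source span and a target span form a phrase pair if no word inside either span is linked to a word outside the other. The slide does not spell out its criterion, so the Python sketch below is an assumption. It is also simplified, since it does not extend phrases over unaligned boundary words, and the function name and toy alignment are hypothetical.

```python
def extract_phrase_pairs(src, tgt, links, max_len=4):
    """Return phrase pairs consistent with `links`, a set of 0-based (src, tgt) pairs."""
    pairs = set()
    for s_start in range(len(src)):
        for s_end in range(s_start, min(s_start + max_len, len(src))):
            # Target positions linked to any word inside the source span.
            t_linked = [t for s, t in links if s_start <= s <= s_end]
            if not t_linked:
                continue
            t_start, t_end = min(t_linked), max(t_linked)
            if t_end - t_start + 1 > max_len:
                continue
            # Consistency: no target word in the span links outside the source span.
            if any(t_start <= t <= t_end and not (s_start <= s <= s_end)
                   for s, t in links):
                continue
            pairs.add((" ".join(src[s_start:s_end + 1]),
                       " ".join(tgt[t_start:t_end + 1])))
    return pairs


# Toy pair from the EM example, with an assumed alignment green-verde, house-casa.
src = ["green", "house"]
tgt = ["casa", "verde"]
links = {(0, 1), (1, 0)}
for pair in sorted(extract_phrase_pairs(src, tgt, links)):
    print(pair)
```

Both single-word pairs and the full two-word pair come out, which shows how one alignment yields phrase pairs of several lengths.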

Using Moses and Giza++

- Refer to http://www.statmt.org/moses_steps.html

Steps

- Install packages in Moses
- Input: sentence-aligned parallel corpus
- Training
- Tuning
- Generate output on test corpus (decoding)

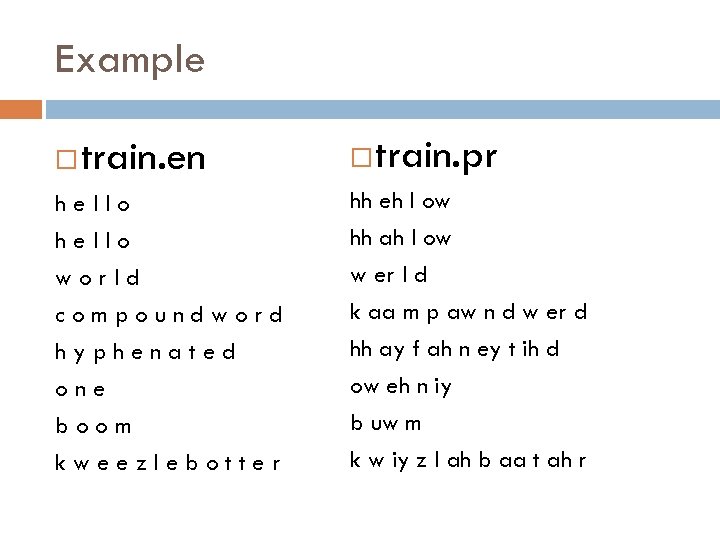

Example

- train.en: hello world compoundword hyphenated one boom kweezlebotter
- train.pr: hh eh l ow hh ah l ow w er l d k aa m p aw n d w er d hh ay f ah n ey t ih d ow eh n iy b uw m k w iy z l ah b aa t ah r

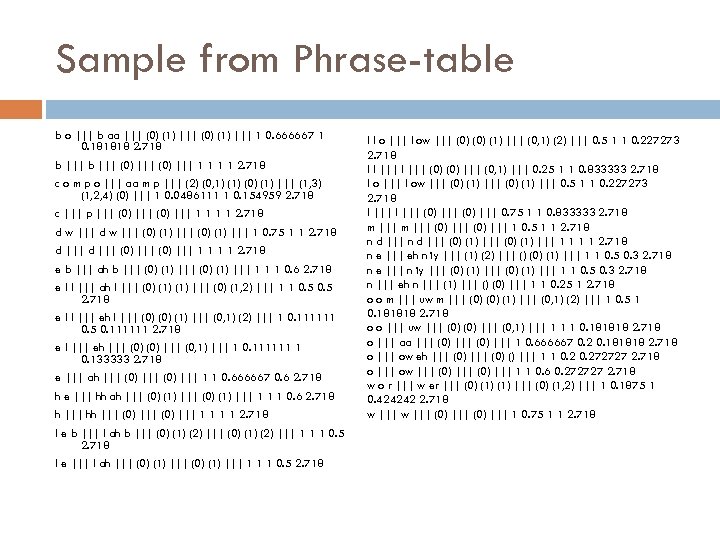
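
The phrase-table and test entries in these slides are space-separated letters (for example `h o t`), which suggests that each word is split into characters before training so that letters play the role of words. A minimal Python sketch of that preprocessing follows; the function name is hypothetical and the step itself is an inference from the slides, not something they state.

```python
def to_char_tokens(word: str) -> str:
    """Split a word into space-separated letters, e.g. for letter-to-phoneme training."""
    return " ".join(word.strip())


for word in ["hello", "world", "boom"]:
    print(to_char_tokens(word))
```

Each output line could then be paired with the phoneme sequence on the matching line of the pronunciation file.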

Sample from Phrase-table

    b o ||| b aa ||| (0) (1) ||| 1 0.666667 1 0.181818 2.718
    b ||| (0) ||| 1 1 2.718
    c o m p o ||| aa m p ||| (2) (0,1) (0) (1) ||| (1,3) (1,2,4) (0) ||| 1 0.0486111 1 0.154959 2.718
    c ||| p ||| (0) ||| 1 1 2.718
    d w ||| (0) (1) ||| 1 0.75 1 1 2.718
    d ||| (0) ||| 1 1 2.718
    e b ||| ah b ||| (0) (1) ||| 1 1 1 0.6 2.718
    e l l ||| ah l ||| (0) (1) ||| (0) (1,2) ||| 1 1 0.5 2.718
    e l l ||| eh l ||| (0) (1) ||| (0,1) (2) ||| 1 0.111111 0.5 0.111111 2.718
    e l ||| eh ||| (0) ||| (0,1) ||| 1 0.111111 1 0.133333 2.718
    e ||| ah ||| (0) ||| 1 1 0.666667 0.6 2.718
    h e ||| hh ah ||| (0) (1) ||| 1 1 1 0.6 2.718
    h ||| hh ||| (0) ||| 1 1 2.718
    l e b ||| l ah b ||| (0) (1) (2) ||| 1 1 1 0.5 2.718
    l e ||| l ah ||| (0) (1) ||| 1 1 1 0.5 2.718
    l l o ||| l ow ||| (0) (1) ||| (0,1) (2) ||| 0.5 1 1 0.227273 2.718
    l l ||| (0) ||| (0,1) ||| 0.25 1 1 0.833333 2.718
    l o ||| l ow ||| (0) (1) ||| 0.5 1 1 0.227273 2.718
    l ||| (0) ||| 0.75 1 1 0.833333 2.718
    m ||| (0) ||| 1 0.5 1 1 2.718
    n d ||| (0) (1) ||| 1 1 2.718
    n e ||| eh n iy ||| (1) (2) ||| () (0) (1) ||| 1 1 0.5 0.3 2.718
    n e ||| n iy ||| (0) (1) ||| 1 1 0.5 0.3 2.718
    n ||| eh n ||| (1) ||| () (0) ||| 1 1 0.25 1 2.718
    o o m ||| uw m ||| (0) (1) ||| (0,1) (2) ||| 1 0.5 1 0.181818 2.718
    o o ||| uw ||| (0) ||| (0,1) ||| 1 1 1 0.181818 2.718
    o ||| aa ||| (0) ||| 1 0.666667 0.2 0.181818 2.718
    o ||| ow eh ||| (0) () ||| 1 1 0.272727 2.718
    o ||| ow ||| (0) ||| 1 1 0.6 0.272727 2.718
    w o r ||| w er ||| (0) (1) ||| (0) (1,2) ||| 1 0.1875 1 0.424242 2.718
    w ||| (0) ||| 1 0.75 1 1 2.718

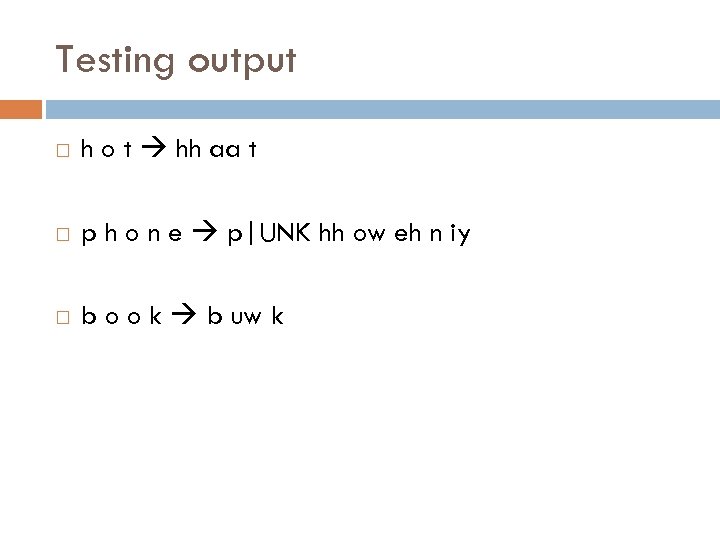
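
Each phrase-table entry is a set of fields separated by `|||`. The Python sketch below reads one entry from the sample, with the extraction spacing inside numbers removed. It takes the first field as the source phrase, the second as the target phrase and the last as the scores. The fields in between are kept as raw strings, because their exact meaning depends on the Moses version and is not stated on the slide. The function name is hypothetical.

```python
def parse_phrase_table_line(line: str) -> dict:
    """Split one phrase-table entry into source, target, middle fields and scores."""
    fields = [field.strip() for field in line.split("|||")]
    return {
        "source": fields[0].split(),
        "target": fields[1].split(),
        "middle": fields[2:-1],  # alignment fields, left uninterpreted
        "scores": [float(x) for x in fields[-1].split()],
    }


entry = parse_phrase_table_line(
    "e l l ||| eh l ||| (0) (1) ||| (0,1) (2) ||| 1 0.111111 0.5 0.111111 2.718"
)
print(entry["source"], "->", entry["target"])
print(entry["scores"])
```

Several entries in the sample have fewer fields than this one, so a robust reader would check the field count before indexing.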

Testing output

- h o t -> hh aa t
- p h o n e -> p|UNK hh ow eh n iy
- b o o k -> b uw k
