747ec7c78e2ee3e35637ffc8ce072698.ppt

- Number of slides: 41

Machine Translation- 5 Autumn 2008 Lecture 20 11 Sep 2008

Decoding
- Decoding…
  - Given a trained model and a foreign sentence, produce argmax P(e|f)
- Can’t use Viterbi: it’s too restrictive
- Need a reasonably efficient search technique that explores the sequence space based on how good the options look…
  - A*

A*
- Recall for A* we need
  - Goal State: good coverage of source
  - Operators: translation of phrases/words, distortions, deletions/insertions
  - Heuristic: probabilities (tweaked)

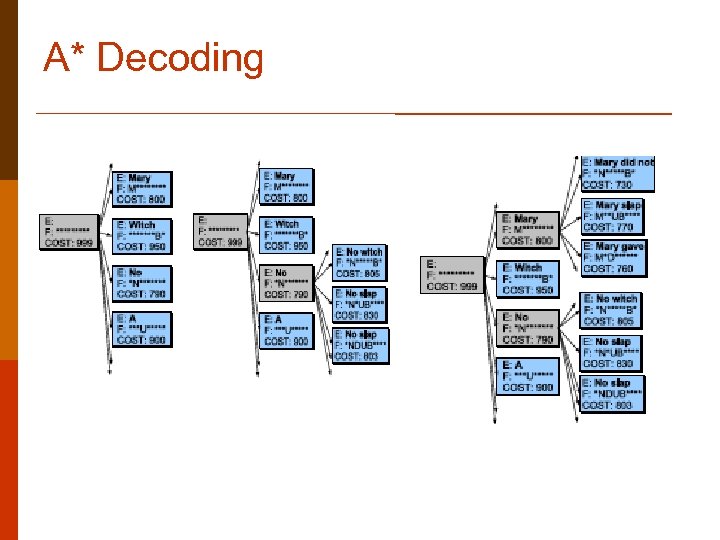

A* Decoding
- Why not just use the probability as we go along?
  - Turns it into uniform-cost search, not A*
  - That favors shorter sequences over longer ones.
  - Need to counterbalance the probability of the translation so far with its “progress towards the goal”.
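To make the length bias concrete, here is a minimal sketch comparing two partial hypotheses. The numbers, the function names and the constant per-word future estimate are all assumptions chosen for illustration; a real decoder would derive its future-cost estimate from the translation and language models.

```python
import math

def uniform_cost_score(logprob_so_far):
    """Rank a hypothesis only by the log-probability accumulated so far."""
    return logprob_so_far

def a_star_score(logprob_so_far, uncovered_words, per_word_estimate):
    """Add a rough estimate of the log-probability still to be paid
    for the source words that are not yet translated."""
    return logprob_so_far + uncovered_words * per_word_estimate

# Hypothetical values: each hypothesis is (log-probability so far, uncovered source words).
PER_WORD_ESTIMATE = math.log(0.3)   # assumed average cost per uncovered word
short_hyp = (math.log(0.1), 5)      # little translated, so still looks cheap
long_hyp = (math.log(0.01), 1)      # almost finished, but has paid more

print(uniform_cost_score(short_hyp[0]), uniform_cost_score(long_hyp[0]))
print(a_star_score(*short_hyp, PER_WORD_ESTIMATE), a_star_score(*long_hyp, PER_WORD_ESTIMATE))
```

Under probability alone the short hypothesis ranks first; once the estimate for the uncovered words is added, the nearly complete hypothesis ranks first.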

A*/Beam
- Sorry…
  - Even that doesn’t work because the space is too large
  - So as we go we’ll prune the space as paths fall below some threshold
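Threshold pruning can be sketched as follows. This is an illustrative assumption rather than the decoder used in the lecture: hypotheses are (label, log-score) pairs, `beam_width` is a relative probability threshold, and `max_size` caps how many hypotheses survive.

```python
import math

def prune(hypotheses, beam_width=0.05, max_size=100):
    """Keep hypotheses whose log-score is within log(beam_width) of the best,
    then cap the number kept at max_size."""
    if not hypotheses:
        return []
    best = max(score for _, score in hypotheses)
    threshold = best + math.log(beam_width)
    kept = [(hyp, score) for hyp, score in hypotheses if score >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:max_size]

# Hypothetical partial translations with log-scores.
stack = [("a", -1.0), ("b", -2.0), ("c", -10.0)]
print(prune(stack))  # "c" falls below the threshold and is dropped
```

Pruning gives up the optimality guarantee of A*: a path dropped early might have turned out best later.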

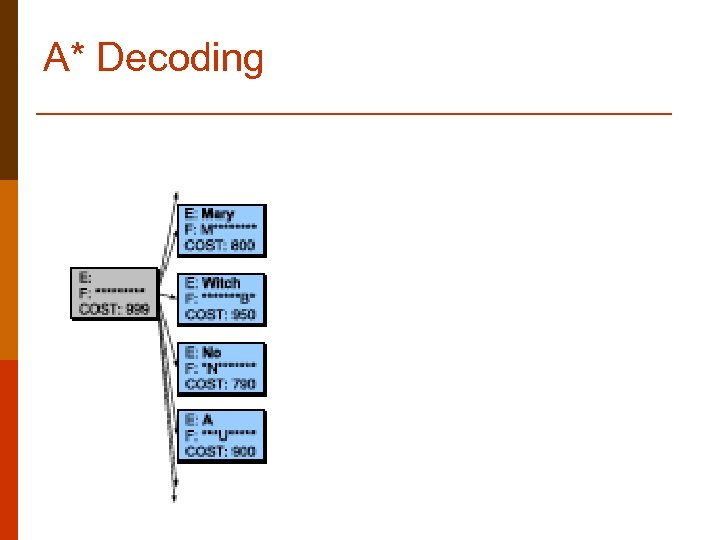

A* Decoding

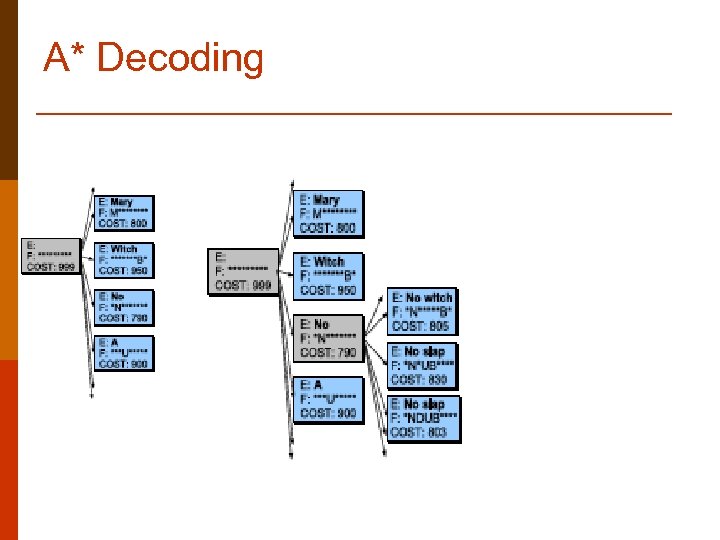

A* Decoding

How do we evaluate MT? Human tests for fluency
- Rating tests: give the raters a scale (1 to 5) and ask them to rate
  - Or distinct scales for
    - Clarity, Naturalness, Style
  - Or check for specific problems
    - Cohesion (lexical chains, anaphora, ellipsis)
      - Hand-checking for cohesion
    - Well-formedness
      - 5-point scale of syntactic correctness
- Comprehensibility tests
  - Noise test
  - Multiple choice questionnaire
- Readability tests
  - cloze

How do we evaluate MT? Human tests for fidelity
- Adequacy
  - Does it convey the information in the original?
  - Ask raters to rate on a scale
    - Bilingual raters: give them source and target sentence, ask how much information is preserved
    - Monolingual raters: give them target + a good human translation
- Informativeness
  - Task based: is there enough info to do some task?
  - Give raters multiple-choice questions about content

Evaluating MT: Problems
- Asking humans to judge sentences on a 5-point scale for 10 factors takes time and money (weeks or months!)
- We can’t build language engineering systems if we can only evaluate them once every quarter!
- We need a metric that we can run every time we change our algorithm.
- It would be OK if it wasn’t perfect, but just tended to correlate with the expensive human metrics, which we could still run quarterly.
Bonnie Dorr

Automatic evaluation
- Miller and Beebe-Center (1958)
- Assume we have one or more human translations of the source passage
- Compare the automatic translation to these human translations
  - Bleu
  - NIST
  - Meteor
  - Precision/Recall

Reference proximity methods
- Assumption of Reference Proximity (ARP):
  - “…the closer the machine translation is to a professional human translation, the better it is” (Papineni et al., 2002: 311)
- Finding a distance between 2 texts
  - Minimal edit distance
  - N-gram distance
  - …

Minimal edit distance
- Minimal number of editing operations to transform text 1 into text 2
  - deletions (sequence xy changed to x)
  - insertions (x changed to xy)
  - substitutions (x changed to y)
  - transpositions (sequence xy changed to yx)
- Algorithm by Wagner and Fischer (1974).
- Edit distance implementation: RED method
  - Akiba Y., K. Imamura and E. Sumita. 2001
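A minimal sketch of the dynamic-programming computation in the style of Wagner and Fischer, applied to word sequences. It covers only deletions, insertions and substitutions, each at an assumed cost of 1; transpositions are not handled here, and this is not the RED method.

```python
def edit_distance(source, target):
    """Minimal number of deletions, insertions and substitutions
    needed to turn the sequence `source` into `target`."""
    rows, cols = len(source) + 1, len(target) + 1
    dist = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        dist[i][0] = i          # delete all remaining source items
    for j in range(cols):
        dist[0][j] = j          # insert all remaining target items
    for i in range(1, rows):
        for j in range(1, cols):
            substitution = 0 if source[i - 1] == target[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[-1][-1]

mt = "the decision of Paris".split()
ht = "the decision made by Paris".split()
print(edit_distance(mt, ht))  # one substitution plus one insertion
```

Working on word lists rather than characters makes each edit correspond to one word changed by a post-editor.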

Problem with edit distance: Legitimate translation variation
- ORI: De son côté, le département d'Etat américain, dans un communiqué, a déclaré: ‘Nous ne comprenons pas la décision’ de Paris.
- HT-Expert: For its part, the American Department of State said in a communique that ‘We do not understand the decision’ made by Paris.
- HT-Reference: For its part, the American State Department stated in a press release: We do not understand the decision of Paris.
- MT-Systran: On its side, the American State Department, in an official statement, declared: ‘We do not include/understand the decision’ of Paris.

Legitimate translation variation
- To which human translation should we compute the edit distance?
- Is it possible to integrate both human translations into a reference set?

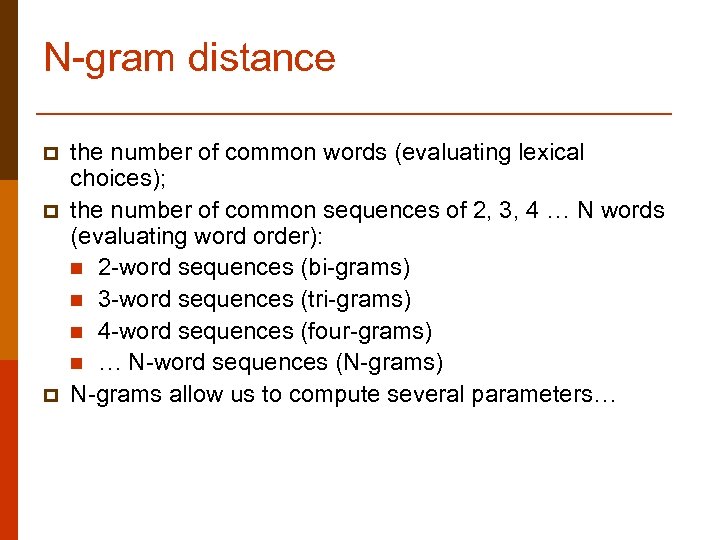

N-gram distance
- the number of common words (evaluating lexical choices);
- the number of common sequences of 2, 3, 4 … N words (evaluating word order):
  - 2-word sequences (bi-grams)
  - 3-word sequences (tri-grams)
  - 4-word sequences (four-grams)
  - … N-word sequences (N-grams)
- N-grams allow us to compute several parameters…
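Counting common N-grams can be sketched as below. The helper names and the lowercased example sentences are assumptions for illustration; repeated N-grams are counted at most as often as they appear in both texts.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-word sequences in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def common_ngrams(mt_tokens, ht_tokens, n):
    """Number of n-grams shared by the machine and human translations."""
    overlap = Counter(ngrams(mt_tokens, n)) & Counter(ngrams(ht_tokens, n))
    return sum(overlap.values())

mt = "we do not understand the decision of paris".split()
ht = "we do not understand the decision made by paris".split()
print(common_ngrams(mt, ht, 1))  # shared words
print(common_ngrams(mt, ht, 2))  # shared bi-grams
```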

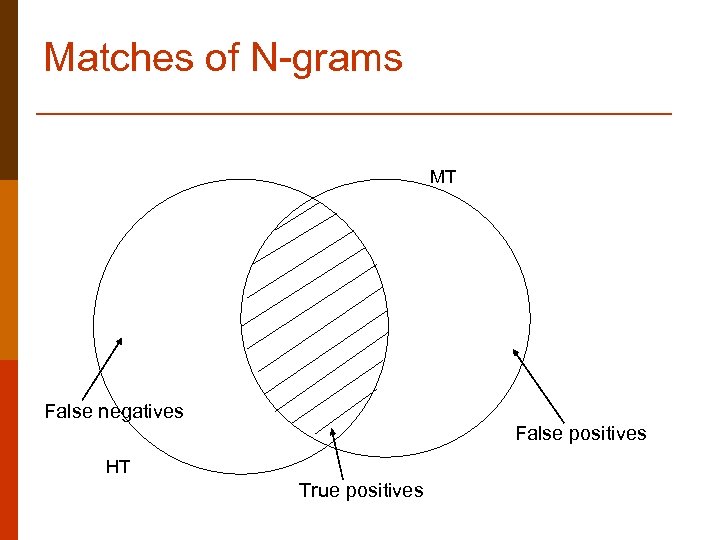

Matches of N-grams
[Figure: overlapping sets of MT N-grams and HT N-grams. Overlap: true positives. MT only: false positives. HT only: false negatives.]

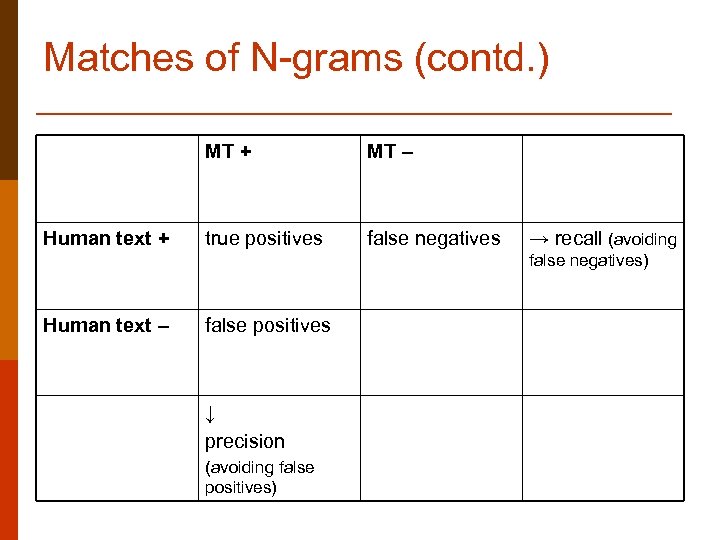

Matches of N-grams (contd.)

|              | MT +            | MT –            |                                     |
|--------------|-----------------|-----------------|-------------------------------------|
| Human text + | true positives  | false negatives | → recall (avoiding false negatives) |
| Human text – | false positives |                 |                                     |
|              | ↓ precision (avoiding false positives) |  |                             |

Precision and Recall
- Precision = how accurate is the answer?
  - “Don’t guess, wrong answers are deducted!”
- Recall = how complete is the answer?
  - “Guess if not sure!”, don’t miss anything!
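In terms of true positives (TP), false positives (FP) and false negatives (FN), precision is TP / (TP + FP) and recall is TP / (TP + FN). A small sketch over word counts, with function and variable names chosen here for illustration:

```python
from collections import Counter

def precision_recall(mt_tokens, ht_tokens):
    """Precision and recall of MT words measured against one human translation."""
    mt, ht = Counter(mt_tokens), Counter(ht_tokens)
    true_pos = sum((mt & ht).values())    # words found in both
    false_pos = sum((mt - ht).values())   # MT words absent from the human text
    false_neg = sum((ht - mt).values())   # human words the MT missed
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall

# A cautious output: everything it says is right, but it misses most of the reference.
p, r = precision_recall("the cat".split(), "the cat is on the mat".split())
print(p, r)
```

The cautious two-word output scores perfect precision but low recall, which is why the two measures are reported together.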

Translation variation and N-grams
- N-gram distance to multiple human reference translations
- Precision on the union of N-gram sets in HT1, HT2, HT3…
  - N-grams in all independent human translations taken together, with repetitions removed
- Recall on the intersection of N-gram sets
  - N-grams common to all sets: only repeated N-grams! (most stable across different human translations)
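One possible reading of these two measures, sketched with plain sets (so repetitions are removed, as the slide says). The function names are assumptions, and at least one reference translation is assumed to be supplied.

```python
def ngram_set(tokens, n):
    """Set of distinct n-grams in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def union_precision(mt, references, n):
    """Share of MT n-grams found in at least one human translation."""
    mt_set = ngram_set(mt, n)
    union = set().union(*(ngram_set(ref, n) for ref in references))
    return len(mt_set & union) / len(mt_set) if mt_set else 0.0

def intersection_recall(mt, references, n):
    """Share of the n-grams common to all human translations that the MT recovers."""
    mt_set = ngram_set(mt, n)
    common = set.intersection(*(ngram_set(ref, n) for ref in references))
    return len(mt_set & common) / len(common) if common else 0.0

refs = ["the cat is on the mat".split(), "there is a cat on the mat".split()]
mt = "the cat on the mat".split()
print(union_precision(mt, refs, 1), intersection_recall(mt, refs, 1))
```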

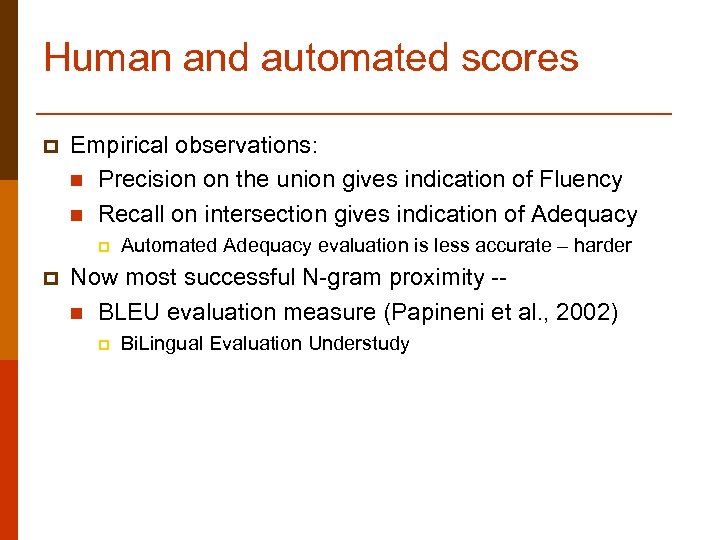

Human and automated scores
- Empirical observations:
  - Precision on the union gives indication of Fluency
  - Recall on intersection gives indication of Adequacy
- Automated Adequacy evaluation is less accurate (harder)
- Now most successful N-gram proximity measure:
  - BLEU evaluation measure (Papineni et al., 2002)
    - BiLingual Evaluation Understudy

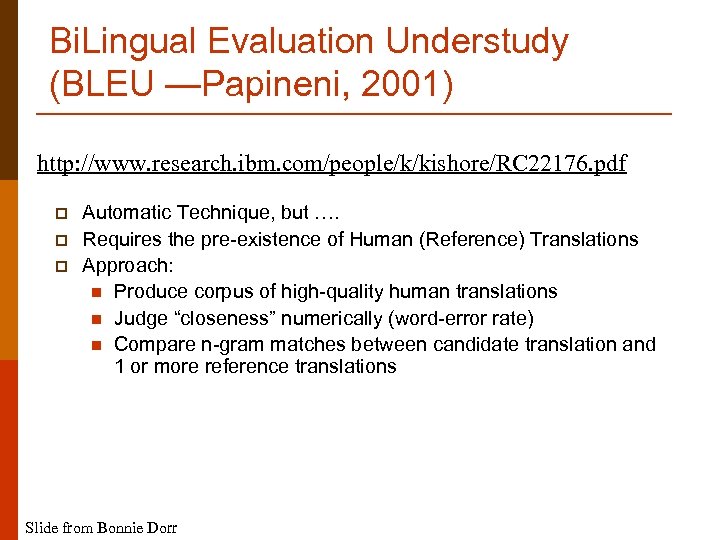

BiLingual Evaluation Understudy (BLEU; Papineni, 2001)
http://www.research.ibm.com/people/k/kishore/RC22176.pdf
- Automatic technique, but…
- Requires the pre-existence of human (reference) translations
- Approach:
  - Produce corpus of high-quality human translations
  - Judge “closeness” numerically (word-error rate)
  - Compare n-gram matches between candidate translation and 1 or more reference translations
Slide from Bonnie Dorr

BLEU evaluation measure
- computes Precision on the union of N-grams
- accurately predicts Fluency
- produces scores in the range of [0, 1]

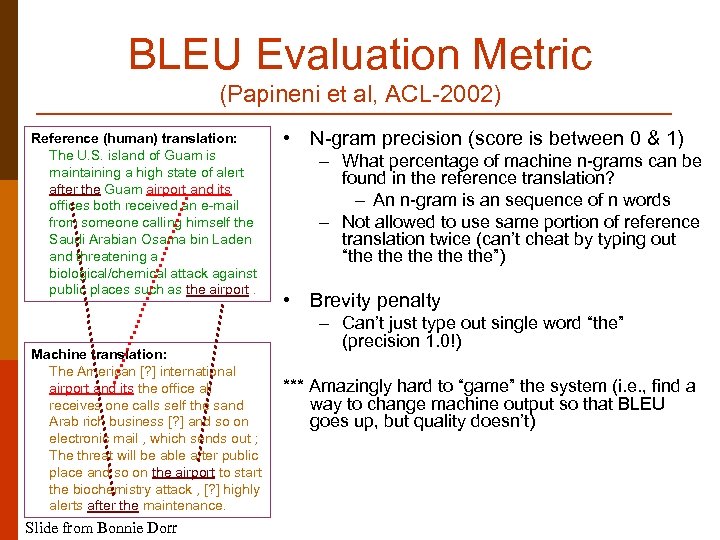

BLEU Evaluation Metric (Papineni et al., ACL-2002)
Reference (human) translation: The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.
Machine translation: The American [?] international airport and its the office all receives one calls self the sand Arab rich business [?] and so on electronic mail, which sends out; The threat will be able after public place and so on the airport to start the biochemistry attack, [?] highly alerts after the maintenance.
- N-gram precision (score is between 0 & 1)
  - What percentage of machine n-grams can be found in the reference translation?
  - An n-gram is a sequence of n words
  - Not allowed to use same portion of reference translation twice (can’t cheat by typing out “the the the”)
- Brevity penalty
  - Can’t just type out single word “the” (precision 1.0!)
- Amazingly hard to “game” the system (i.e., find a way to change machine output so that BLEU goes up, but quality doesn’t)
Slide from Bonnie Dorr

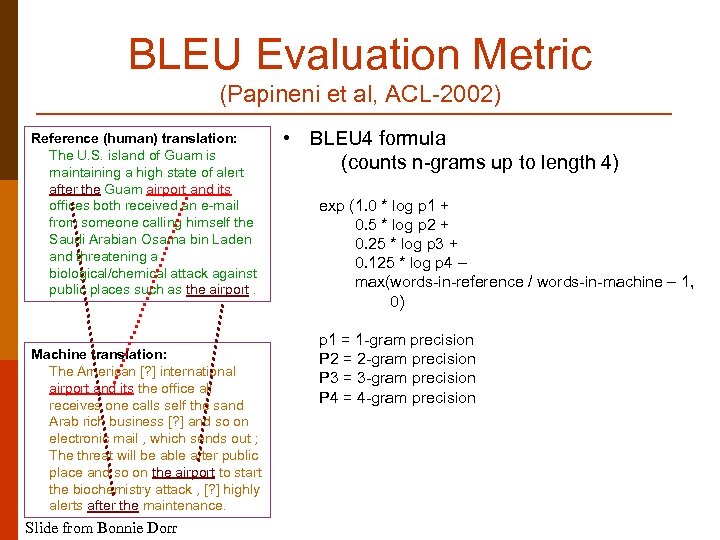

BLEU Evaluation Metric (Papineni et al., ACL-2002)
Reference (human) translation: The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.
Machine translation: The American [?] international airport and its the office all receives one calls self the sand Arab rich business [?] and so on electronic mail, which sends out; The threat will be able after public place and so on the airport to start the biochemistry attack, [?] highly alerts after the maintenance.
- BLEU4 formula (counts n-grams up to length 4):

$$\text{BLEU4} = \exp\bigl(1.0 \log p_1 + 0.5 \log p_2 + 0.25 \log p_3 + 0.125 \log p_4 - \max(\text{words-in-reference} / \text{words-in-machine} - 1,\ 0)\bigr)$$

  - $p_1$ = 1-gram precision
  - $p_2$ = 2-gram precision
  - $p_3$ = 3-gram precision
  - $p_4$ = 4-gram precision
Slide from Bonnie Dorr
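The formula on this slide can be evaluated directly once the four precisions and the two word counts are known. The sketch below follows the slide's weights (1.0, 0.5, 0.25, 0.125); note that the BLEU definition in Papineni et al. (2002) weights the four log-precisions uniformly instead. The function name and the zero-precision guard are assumptions added here.

```python
import math

def bleu4_slide(p1, p2, p3, p4, words_in_reference, words_in_machine):
    """BLEU4 as written on the slide: weighted log-precisions minus a length term."""
    if min(p1, p2, p3, p4) <= 0 or words_in_machine <= 0:
        return 0.0  # log of zero is undefined; treat as the lowest score
    log_precision = (1.0 * math.log(p1) + 0.5 * math.log(p2)
                     + 0.25 * math.log(p3) + 0.125 * math.log(p4))
    length_term = max(words_in_reference / words_in_machine - 1, 0)
    return math.exp(log_precision - length_term)

print(bleu4_slide(1, 1, 1, 1, 10, 10))   # perfect match, same length
print(bleu4_slide(1, 1, 1, 1, 20, 10))   # perfect precision, output half as long
```

The length term is zero whenever the machine output is at least as long as the reference, so only short outputs are penalized.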

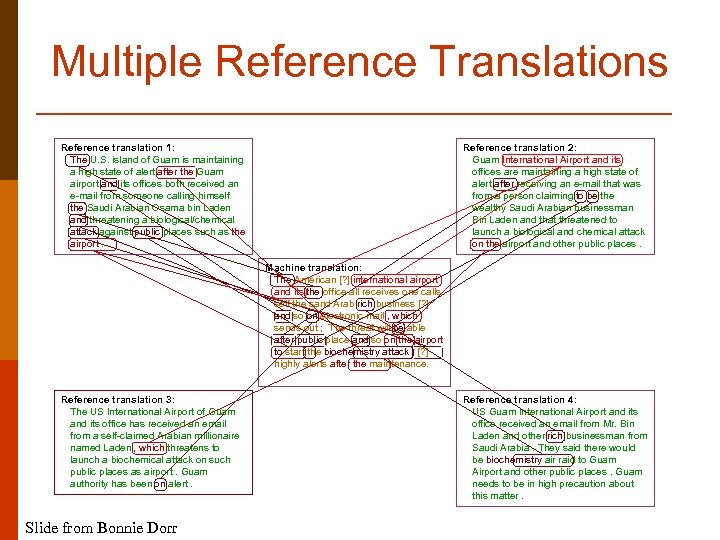

Multiple Reference Translations
Reference translation 1: The U.S. island of Guam is maintaining a high state of alert after the Guam airport and its offices both received an e-mail from someone calling himself the Saudi Arabian Osama bin Laden and threatening a biological/chemical attack against public places such as the airport.
Reference translation 2: Guam International Airport and its offices are maintaining a high state of alert after receiving an e-mail that was from a person claiming to be the wealthy Saudi Arabian businessman Bin Laden and that threatened to launch a biological and chemical attack on the airport and other public places.
Reference translation 3: The US International Airport of Guam and its office has received an email from a self-claimed Arabian millionaire named Laden, which threatens to launch a biochemical attack on such public places as airport. Guam authority has been on alert.
Reference translation 4: US Guam International Airport and its office received an email from Mr. Bin Laden and other rich businessman from Saudi Arabia. They said there would be biochemistry air raid to Guam Airport and other public places. Guam needs to be in high precaution about this matter.
Machine translation: The American [?] international airport and its the office all receives one calls self the sand Arab rich business [?] and so on electronic mail, which sends out; The threat will be able after public place and so on the airport to start the biochemistry attack, [?] highly alerts after the maintenance.
Slide from Bonnie Dorr

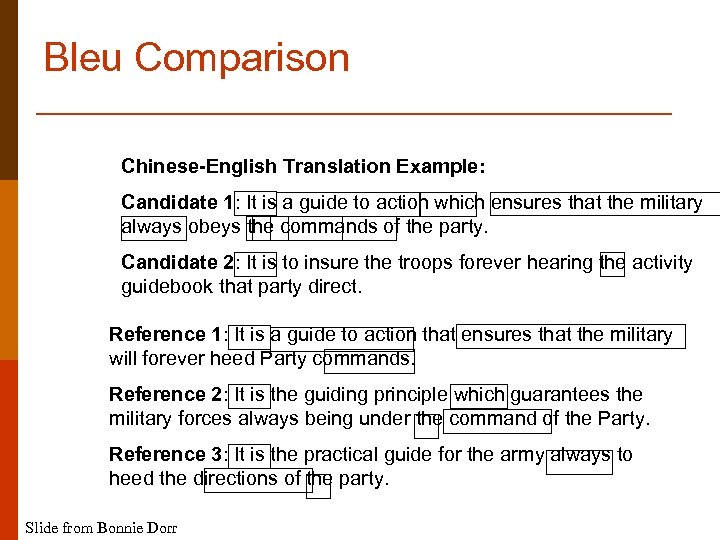

Bleu Comparison
Chinese-English Translation Example:
Candidate 1: It is a guide to action which ensures that the military always obeys the commands of the party.
Candidate 2: It is to insure the troops forever hearing the activity guidebook that party direct.
Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
Reference 3: It is the practical guide for the army always to heed the directions of the party.
Slide from Bonnie Dorr

How Do We Compute Bleu Scores?
- Intuition: “What percentage of words in candidate occurred in some human translation?”
- Proposal: count up the number of candidate translation words (unigrams) that occur in any reference translation, and divide by the total number of words in the candidate translation
- But can’t just count total number of overlapping N-grams!
  - Candidate: the the the
  - Reference 1: The cat is on the mat
- Solution: A reference word should be considered exhausted after a matching candidate word is identified.
Slide from Bonnie Dorr

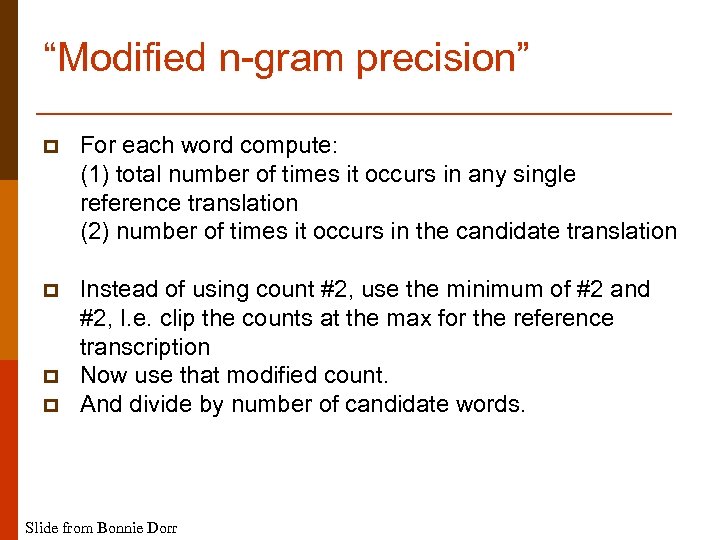

“Modified n-gram precision”
- For each word compute:
  - (1) the maximum number of times it occurs in any single reference translation
  - (2) the number of times it occurs in the candidate translation
- Instead of using count #2, use the minimum of #2 and #1, i.e. clip the counts at the max for the reference translation
- Now use that modified count.
- And divide by number of candidate words.
Slide from Bonnie Dorr
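The clipping rule can be sketched as follows, using the Chinese-English example from the Bleu Comparison slide. The tokenizer (lowercasing and dropping full stops) and the function names are simplifying assumptions, not part of the BLEU definition.

```python
from collections import Counter

def tokenize(text):
    """Very rough tokenizer: lowercase, drop full stops, split on spaces."""
    return text.lower().replace(".", "").split()

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n=1):
    """Return (clipped matches, total candidate n-grams)."""
    cand_counts = Counter(ngrams(candidate, n))
    max_ref = Counter()  # count (1): max occurrences in any single reference
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    # clip count (2) at count (1)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped, sum(cand_counts.values())

refs = [tokenize(r) for r in (
    "It is a guide to action that ensures that the military will forever heed Party commands.",
    "It is the guiding principle which guarantees the military forces always being under the command of the Party.",
    "It is the practical guide for the army always to heed the directions of the party.",
)]
cand1 = tokenize("It is a guide to action which ensures that the military always obeys the commands of the party.")
cand2 = tokenize("It is to insure the troops forever hearing the activity guidebook that party direct.")
print(modified_precision(cand1, refs, 1), modified_precision(cand2, refs, 1))
print(modified_precision(cand1, refs, 2), modified_precision(cand2, refs, 2))
```

Returning the numerator and denominator separately keeps the fractions readable and makes it easy to sum counts over a whole test corpus before dividing.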

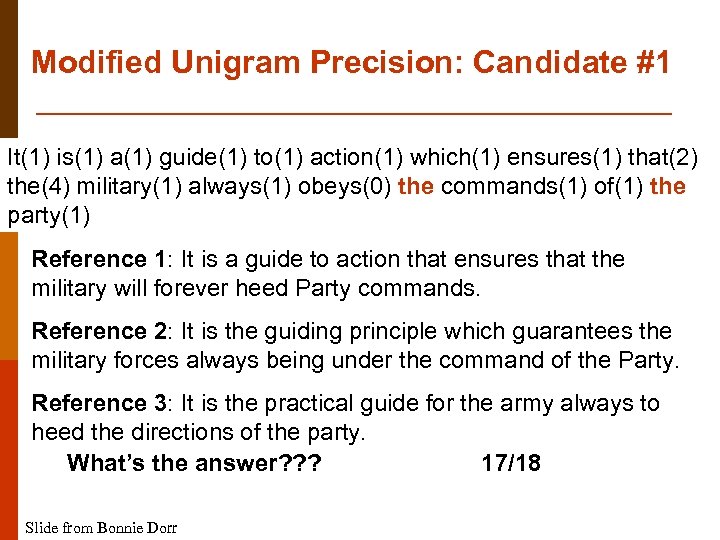

Modified Unigram Precision: Candidate #1
It(1) is(1) a(1) guide(1) to(1) action(1) which(1) ensures(1) that(2) the(4) military(1) always(1) obeys(0) the commands(1) of(1) the party(1)
Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
Reference 3: It is the practical guide for the army always to heed the directions of the party.
What’s the answer? 17/18
Slide from Bonnie Dorr

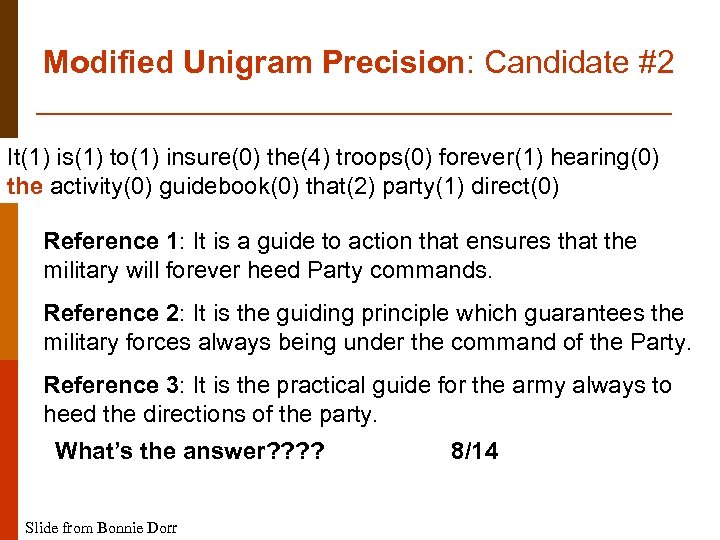

Modified Unigram Precision: Candidate #2
It(1) is(1) to(1) insure(0) the(4) troops(0) forever(1) hearing(0) the activity(0) guidebook(0) that(2) party(1) direct(0)
Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
Reference 3: It is the practical guide for the army always to heed the directions of the party.
What’s the answer? 8/14
Slide from Bonnie Dorr

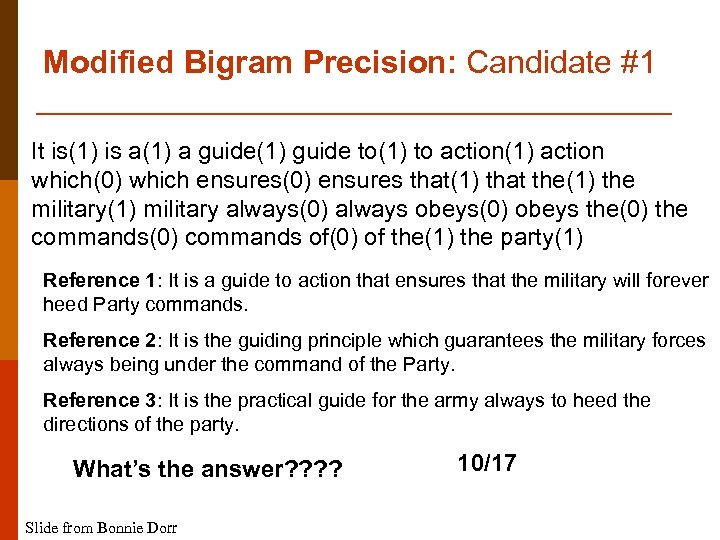

Modified Bigram Precision: Candidate #1
It is(1) is a(1) a guide(1) guide to(1) to action(1) action which(0) which ensures(0) ensures that(1) that the(1) the military(1) military always(0) always obeys(0) obeys the(0) the commands(0) commands of(0) of the(1) the party(1)
Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
Reference 3: It is the practical guide for the army always to heed the directions of the party.
What’s the answer? 10/17
Slide from Bonnie Dorr

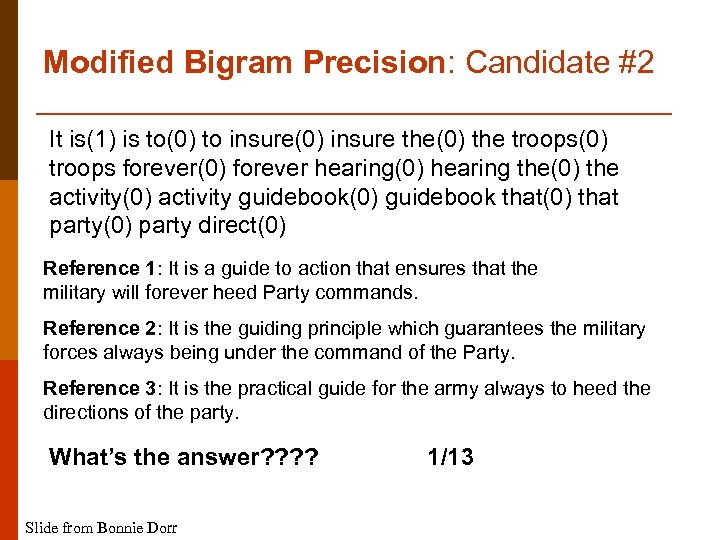

Modified Bigram Precision: Candidate #2
It is(1) is to(0) to insure(0) insure the(0) the troops(0) troops forever(0) forever hearing(0) hearing the(0) the activity(0) activity guidebook(0) guidebook that(0) that party(0) party direct(0)
Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
Reference 3: It is the practical guide for the army always to heed the directions of the party.
What’s the answer? 1/13
Slide from Bonnie Dorr

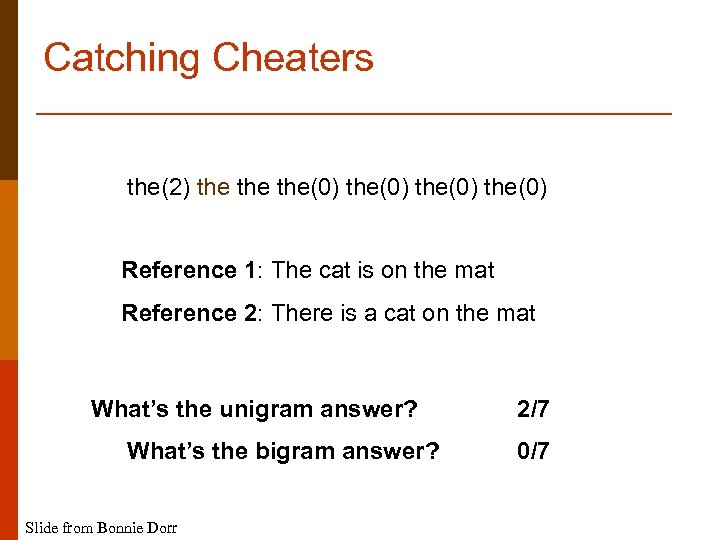

Catching Cheaters
the(2) the the(0)
Reference 1: The cat is on the mat
Reference 2: There is a cat on the mat
What’s the unigram answer? 2/7
What’s the bigram answer? 0/7
Slide from Bonnie Dorr

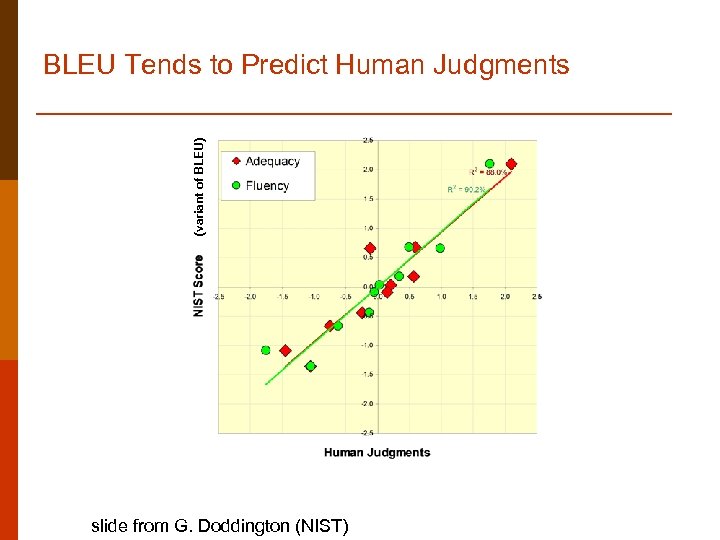

BLEU Tends to Predict Human Judgments
(variant of BLEU)
Slide from G. Doddington (NIST)

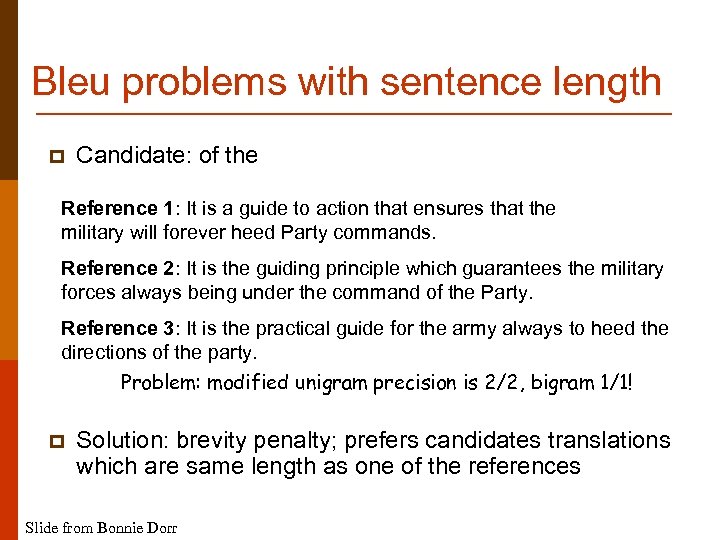

Bleu problems with sentence length
- Candidate: of the
Reference 1: It is a guide to action that ensures that the military will forever heed Party commands.
Reference 2: It is the guiding principle which guarantees the military forces always being under the command of the Party.
Reference 3: It is the practical guide for the army always to heed the directions of the party.
- Problem: modified unigram precision is 2/2, bigram 1/1!
- Solution: brevity penalty; prefers candidate translations which are the same length as one of the references
Slide from Bonnie Dorr
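A sketch of a brevity penalty in the commonly cited BLEU form: 1 when the candidate is longer than the reference length, exp(1 - r/c) otherwise. Picking the reference whose length is closest to the candidate's is an assumption of this sketch, as are the function and parameter names.

```python
import math

def brevity_penalty(candidate_len, reference_lens):
    """1.0 if the candidate is long enough, otherwise exp(1 - r/c),
    where r is the reference length closest to the candidate length c."""
    if candidate_len == 0:
        return 0.0
    closest = min(reference_lens, key=lambda r: (abs(r - candidate_len), r))
    if candidate_len > closest:
        return 1.0
    return math.exp(1 - closest / candidate_len)

# "of the" has 2 words; the three references have 16, 18 and 16 words.
print(brevity_penalty(2, [16, 18, 16]))
print(brevity_penalty(18, [16, 18, 16]))
```

For the two-word candidate the penalty is close to zero, which cancels out its perfect modified precision.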

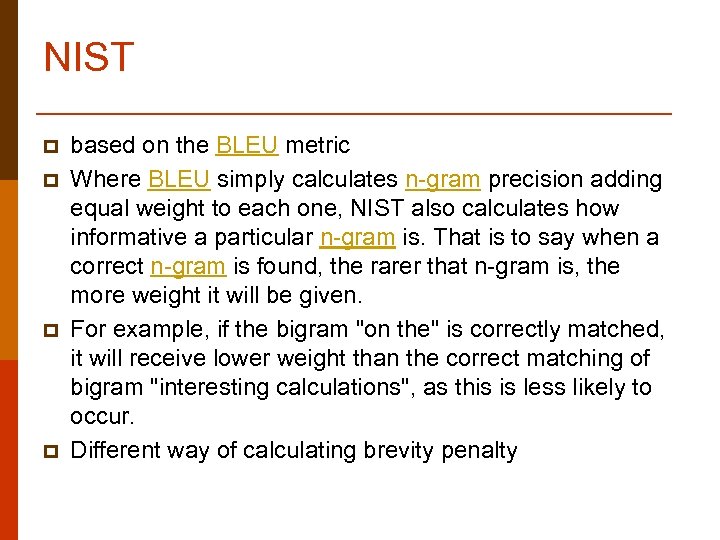

NIST
- Based on the BLEU metric
- Where BLEU simply calculates n-gram precision, adding equal weight to each one, NIST also calculates how informative a particular n-gram is. That is to say, when a correct n-gram is found, the rarer that n-gram is, the more weight it will be given. For example, if the bigram "on the" is correctly matched, it will receive lower weight than the correct matching of the bigram "interesting calculations", as this is less likely to occur.
- Different way of calculating brevity penalty
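The information weight can be sketched as below. It assumes the definition usually given for the NIST metric, Info(w1…wn) = log2(count(w1…wn-1) / count(w1…wn)), with the total word count as numerator for unigrams; the toy reference corpus and the function name are invented for illustration.

```python
import math
from collections import Counter

def info_weights(reference_sentences, max_n=2):
    """Information weight of every n-gram seen in the tokenized references."""
    counts = Counter()
    total_words = 0
    for tokens in reference_sentences:
        total_words += len(tokens)
        for n in range(1, max_n + 1):
            for i in range(len(tokens) - n + 1):
                counts[tuple(tokens[i:i + n])] += 1
    weights = {}
    for gram, count in counts.items():
        # context count: all words for unigrams, the (n-1)-word prefix otherwise
        context = total_words if len(gram) == 1 else counts[gram[:-1]]
        weights[gram] = math.log2(context / count)
    return weights

# Hypothetical reference corpus.
corpus = [s.split() for s in (
    "on the mat", "on the table", "on the floor",
    "interesting calculations", "interesting results", "interesting ideas",
)]
info = info_weights(corpus)
print(info[("on", "the")], info[("interesting", "calculations")])
```

In this toy corpus "on" is always followed by "the", so matching "on the" carries no information, while "interesting calculations" is one of several continuations and receives a positive weight.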

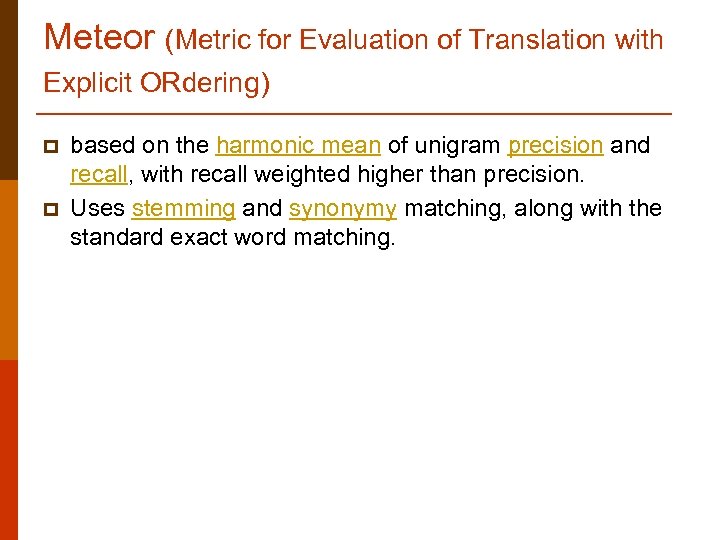

Meteor (Metric for Evaluation of Translation with Explicit ORdering)
- Based on the harmonic mean of unigram precision and recall, with recall weighted higher than precision.
- Uses stemming and synonymy matching, along with the standard exact word matching.
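A recall-weighted harmonic mean can be sketched as follows. The parameter `alpha` is the weight on recall; the default of 0.9 is an assumption that, as far as I know, reproduces the 10PR / (R + 9P) form of the original METEOR paper. Stemming, synonym matching and METEOR's word-order penalty are left out.

```python
def weighted_harmonic_mean(precision, recall, alpha=0.9):
    """Harmonic mean of precision and recall with weight alpha on recall."""
    if precision == 0 or recall == 0:
        return 0.0
    return (precision * recall) / (alpha * precision + (1 - alpha) * recall)

# Same two values, swapped: high recall is rewarded more than high precision.
print(weighted_harmonic_mean(1.0, 0.5))
print(weighted_harmonic_mean(0.5, 1.0))
```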

Recent developments: N-gram distance
- paraphrasing instead of multiple reference translations (RT)
- more weight to more “important” words
  - relatively more frequent in a given text
- relations between different human scores
- accounting for dynamic quality criteria