392579da15d593cacf80d510e1869f6a.ppt

- Number of slides: 105

Machine Learning Part 2: Intermediate and Active Sampling Methods
Jaime Carbonell (with contributions from Pinar Donmez and Jingrui He)
Carnegie Mellon University, jgc@cs.cmu.edu
December, 2008 © 2008, Jaime G Carbonell

Beyond “Standard” Learning:
- Multi-Objective Learning
- Structuring Unstructured Data
  - Text Categorization
- Temporal Prediction
  - Cycle & trend detection
- Semi-Supervised Methods
  - Labeled + Unlabeled Data
  - Active Learning
  - Proactive Learning
- “Unsupervised” Learning
  - Predictor attributes, but no explicit objective
  - Clustering methods
  - Rare category detection

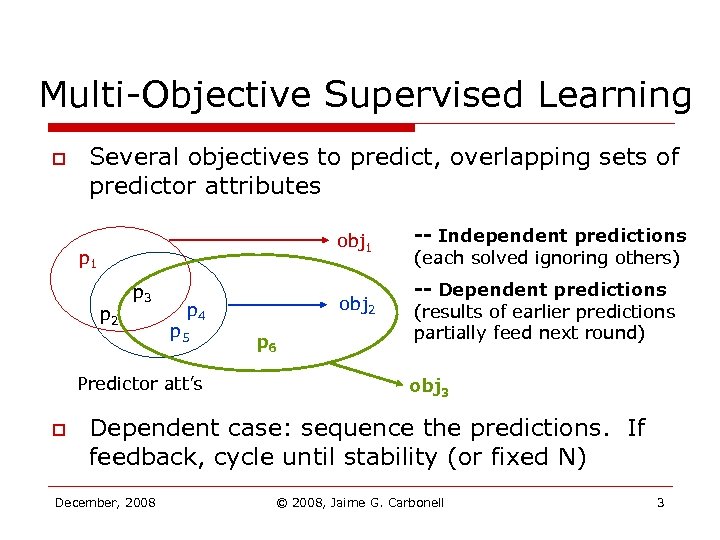

Multi-Objective Supervised Learning

(figure: objectives obj 1, obj 2, obj 3 drawn over overlapping subsets of the predictor attributes p 1 to p 6)

- Several objectives to predict, with overlapping sets of predictor attributes
  - Independent predictions (each solved ignoring the others)
  - Dependent predictions (results of earlier predictions partially feed the next round)
- Dependent case: sequence the predictions. If there is feedback, cycle until stability (or for a fixed N rounds).

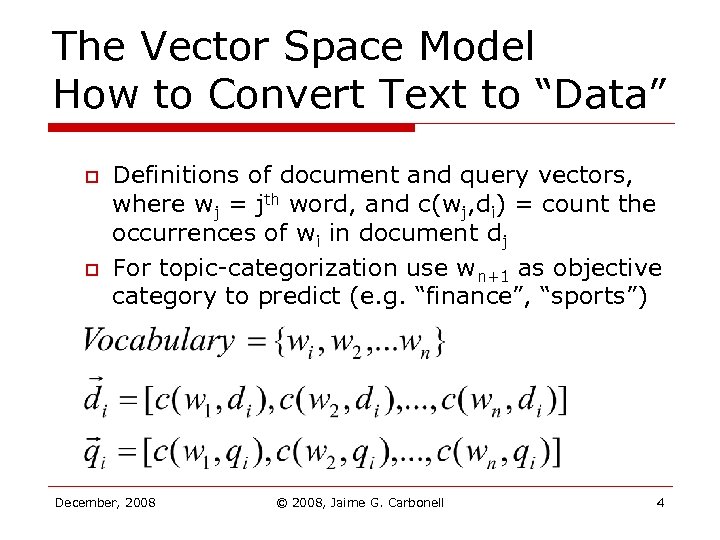

The Vector Space Model: How to Convert Text to “Data”
- Definitions of document and query vectors: $d_i = (c(w_1, d_i), c(w_2, d_i), \dots, c(w_n, d_i))$ and $q = (c(w_1, q), \dots, c(w_n, q))$, where $w_j$ = the $j$-th word and $c(w_j, d_i)$ = the number of occurrences of $w_j$ in document $d_i$
- For topic categorization, use $w_{n+1}$ as the objective category to predict (e.g. “finance”, “sports”)
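
A minimal sketch of these count vectors, assuming lowercase whitespace tokenization (the slide does not specify a tokenizer). `build_vocabulary` and `count_vector` are hypothetical helper names; the category label is simply carried alongside each vector in the role of $w_{n+1}$.

```python
from collections import Counter


def build_vocabulary(docs):
    """Collect every distinct word w_1..w_n across the corpus, in sorted order."""
    vocab = set()
    for doc in docs:
        vocab.update(doc.lower().split())
    return sorted(vocab)


def count_vector(doc, vocab):
    """Return [c(w_1, d), ..., c(w_n, d)]: occurrences of each vocabulary word in d."""
    counts = Counter(doc.lower().split())
    return [counts[w] for w in vocab]


docs = ["stocks fell as stocks slid", "the team won the match"]
vocab = build_vocabulary(docs)
vectors = [count_vector(d, vocab) for d in docs]
# For topic categorization, the category label plays the role of w_{n+1}.
labeled = [(vec, label) for vec, label in zip(vectors, ["finance", "sports"])]
```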

Refinements to Word-Based Features
- Well-known methods
  - Stop-word removal (e.g., “it”, “the”, “in”, …)
  - Phrasing (e.g., “White House”, “heart attack”, …)
  - Morphology (e.g., “countries” => “country”)
- Feature Expansion
  - Query expansion (e.g., “cheap” => “inexpensive”, “discount”, “economic”, …)
- Feature Transformation & Reduction
  - Singular-value decomposition (SVD)
  - Linear discriminant analysis (LDA)

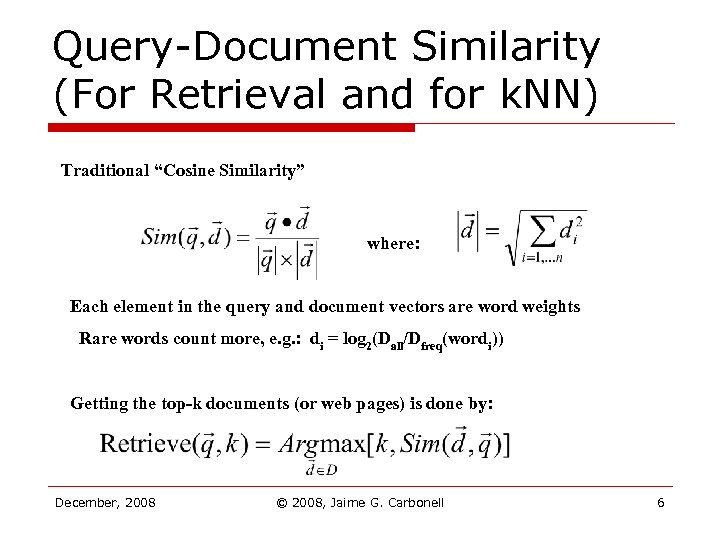
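
A toy sketch of the three well-known refinements (stop-word removal, phrasing, morphology). The stop-word list, the phrase list, and the two suffix rules in `normalize` are illustrative assumptions; a real system would use a full stop list and a proper stemmer or lemmatizer. SVD and LDA are not shown.

```python
STOP_WORDS = {"it", "the", "in", "a", "of"}            # tiny illustrative stop list
PHRASES = {("white", "house"), ("heart", "attack")}    # hypothetical phrase list


def normalize(word):
    """Very crude morphology: 'countries' -> 'country', 'cats' -> 'cat'."""
    if word.endswith("ies") and len(word) > 4:
        return word[:-3] + "y"
    if word.endswith("s") and not word.endswith("ss") and len(word) > 3:
        return word[:-1]
    return word


def features(text):
    """Stop-word removal, then phrase joining, then suffix normalization."""
    tokens = [t for t in text.lower().split() if t not in STOP_WORDS]
    out, i = [], 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if pair in PHRASES:            # join a known multi-word phrase into one feature
            out.append("_".join(pair))
            i += 2
        else:
            out.append(normalize(tokens[i]))
            i += 1
    return out
```

For example, `features("The White House met in the countries")` yields `["white_house", "met", "country"]`.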

Query-Document Similarity (for Retrieval and for kNN)
- Traditional “cosine similarity”: $\text{sim}(q, d) = \frac{\sum_i q_i d_i}{\sqrt{\sum_i q_i^2}\,\sqrt{\sum_i d_i^2}}$
- where each element of the query and document vectors is a word weight
- Rare words count more, e.g.: $d_i = \log_2\left(D_{all} / D_{freq}(word_i)\right)$
- Getting the top-k documents (or web pages) is done by ranking every document by its similarity to the query and keeping the k highest

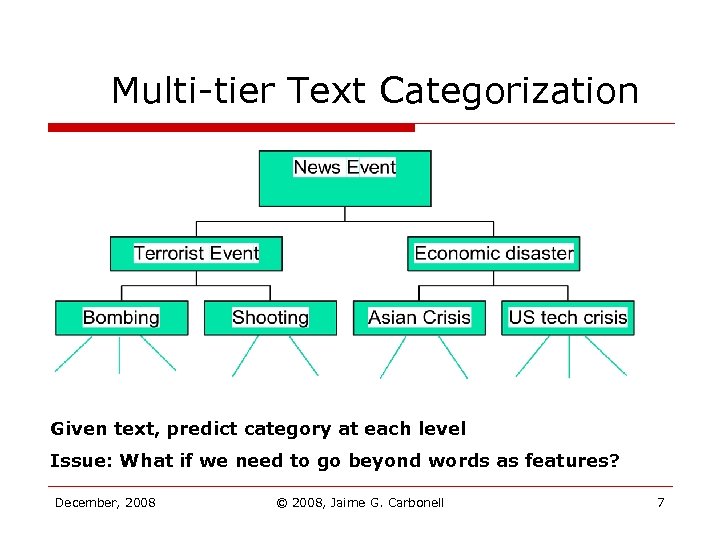
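
A sketch of cosine-similarity retrieval with the rare-word weighting $\log_2(D_{all}/D_{freq}(word_i))$. Multiplying each raw count by that weight is an assumption (the slide only says elements are word weights and rare words count more); `idf_weights`, `weighted_vector`, and `top_k` are hypothetical names.

```python
import math
from collections import Counter


def idf_weights(docs):
    """log2(D_all / D_freq(word)) for every word in the corpus."""
    n_docs = len(docs)
    doc_freq = Counter(w for d in docs for w in set(d.split()))
    return {w: math.log2(n_docs / df) for w, df in doc_freq.items()}


def weighted_vector(text, idf):
    """Assumption: weight = raw count x IDF; words unseen in the corpus get weight 0."""
    counts = Counter(text.split())
    return {w: c * idf.get(w, 0.0) for w, c in counts.items()}


def cosine(u, v):
    dot = sum(u[w] * v.get(w, 0.0) for w in u)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def top_k(query, docs, k):
    """Indices of the k documents most similar to the query (ties broken by index)."""
    idf = idf_weights(docs)
    q = weighted_vector(query, idf)
    scored = [(cosine(q, weighted_vector(d, idf)), i) for i, d in enumerate(docs)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for _, i in scored[:k]]
```

For the documents `["stock market crash", "market day", "football match day", "stock prices rise"]`, `top_k("stock crash", docs, 2)` returns `[0, 3]`: "crash" is the rarest query word, so the document containing it ranks first.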

Multi-tier Text Categorization
- Given text, predict the category at each level
- Issue: What if we need to go beyond words as features?

Time Series Prediction Process
- Find leading indicators
  - “Predictor” variables from earlier epochs
  - Code values per distinct time interval
    - E.g. “sales at t-1, at t-2, t-3 …”
    - E.g. “advertisement $ at t, t-1, t-2”
  - Objective is to predict the desired variable at current or future epochs
    - E.g. “sales at t, t+1, t+2”
- Apply machine learning methods you learned
  - Regression, d-trees, kNN, Bayesian, …

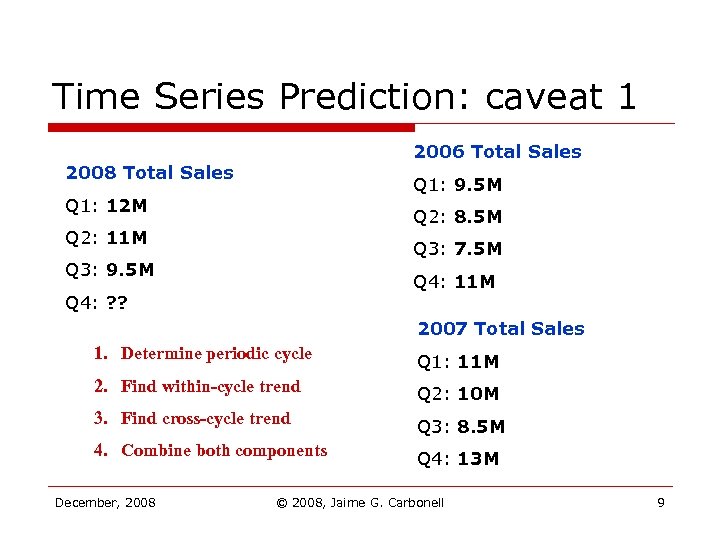
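
A sketch of the lagged-predictor coding described above, assuming one sales series and one advertising series of equal length; `make_lagged_rows` and `knn_predict` are hypothetical names, and kNN regression stands in for any of the listed learners.

```python
import math


def make_lagged_rows(sales, ads, n_lags=2):
    """Each row: predictors = sales at t-1..t-n_lags plus ads at t and t-1; target = sales at t."""
    rows = []
    for t in range(max(n_lags, 1), len(sales)):
        x = [sales[t - lag] for lag in range(1, n_lags + 1)]
        x += [ads[t], ads[t - 1]]
        rows.append((x, sales[t]))
    return rows


def knn_predict(rows, x_new, k=2):
    """kNN regression: average the targets of the k rows closest to x_new."""
    nearest = sorted(rows, key=lambda row: math.dist(row[0], x_new))[:k]
    return sum(target for _, target in nearest) / k


sales = [10, 12, 13, 15, 16, 18]
ads = [1, 2, 2, 3, 3, 4]
rows = make_lagged_rows(sales, ads)
# Next epoch: sales at t-1 = 18, t-2 = 16, planned ads at t = 4, ads at t-1 = 4.
prediction = knn_predict(rows, [18, 16, 4, 4], k=2)
```

Here `prediction` is 17.0. Because kNN only averages past targets, it cannot extrapolate the upward trend beyond the largest value seen (18); a linear regression model could.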

Time Series Prediction: Caveat 1

| Quarter | 2006 Total Sales | 2007 Total Sales | 2008 Total Sales |
|---|---|---|---|
| Q1 | 9.5 M | 11 M | 12 M |
| Q2 | 8.5 M | 10 M | 11 M |
| Q3 | 7.5 M | 8.5 M | 9.5 M |
| Q4 | 11 M | 13 M | ?? |

1. Determine periodic cycle
2. Find within-cycle trend
3. Find cross-cycle trend
4. Combine both components

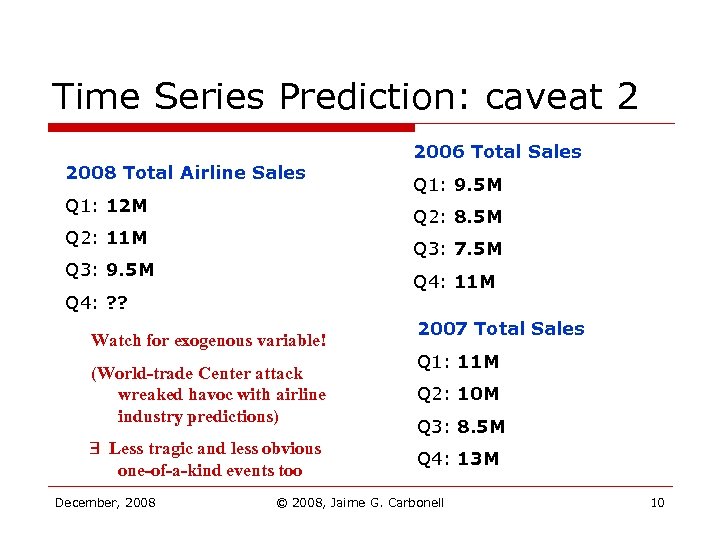
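
One simple way to carry out the four steps on the sales table, offered as an assumption rather than the slide's method: a four-quarter cycle, a within-cycle estimate from the average Q3 to Q4 jump in past years, a cross-cycle estimate from last year's Q4 plus this year's average year-over-year change, and a plain average to combine them. Quarters are 0-based, so index 3 is Q4; the function names are hypothetical.

```python
from statistics import mean

CYCLE = 4  # step 1: assume a yearly cycle of four quarters

history = {
    2006: [9.5, 8.5, 7.5, 11.0],
    2007: [11.0, 10.0, 8.5, 13.0],
    2008: [12.0, 11.0, 9.5],        # Q4 2008 unknown
}


def within_cycle_estimate(history, year, quarter):
    """Step 2: previous quarter this year + average jump into `quarter` in complete past years."""
    past = [v for y, v in history.items() if y < year and len(v) == CYCLE]
    jump = mean(v[quarter] - v[quarter - 1] for v in past)
    return history[year][quarter - 1] + jump


def cross_cycle_estimate(history, year, quarter):
    """Step 3: same quarter last year + this year's average year-over-year change."""
    this_year, last_year = history[year], history[year - 1]
    yoy = mean(a - b for a, b in zip(this_year, last_year))
    return last_year[quarter] + yoy


def forecast(history, year, quarter):
    """Step 4 (assumption): combine the two components by a simple average."""
    return (within_cycle_estimate(history, year, quarter)
            + cross_cycle_estimate(history, year, quarter)) / 2
```

`forecast(history, 2008, 3)` returns 13.75 (M): the within-cycle component gives 9.5 + 4.0 = 13.5 and the cross-cycle component gives 13 + 1.0 = 14.0.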

Time Series Prediction: Caveat 2

| Quarter | 2006 Total Sales | 2007 Total Sales | 2008 Total Airline Sales |
|---|---|---|---|
| Q1 | 9.5 M | 11 M | 12 M |
| Q2 | 8.5 M | 10 M | 11 M |
| Q3 | 7.5 M | 8.5 M | 9.5 M |
| Q4 | 11 M | 13 M | ?? |

- Watch for exogenous variables! (The World Trade Center attack wreaked havoc with airline industry predictions.)
- Less tragic and less obvious one-of-a-kind events, too

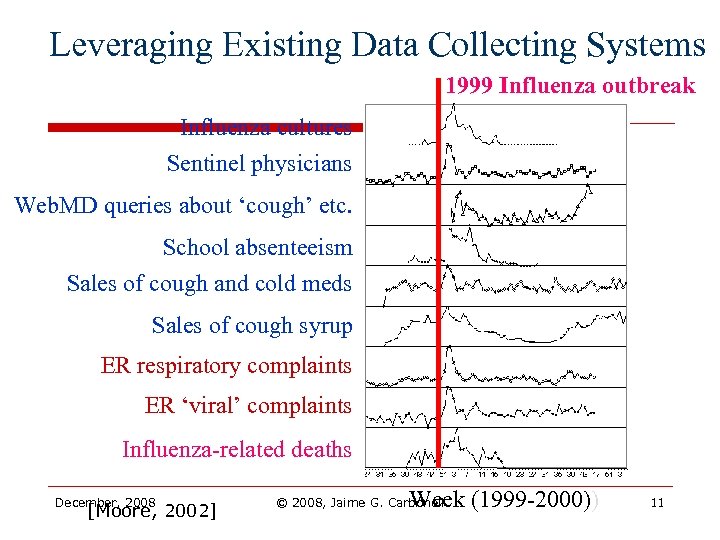

Leveraging Existing Data Collecting Systems

(figure: the 1999 influenza outbreak as reflected in influenza cultures, sentinel physicians, WebMD queries about ‘cough’ etc., school absenteeism, sales of cough and cold meds, sales of cough syrup, ER respiratory complaints, ER ‘viral’ complaints, and influenza-related deaths; x-axis: week (1999-2000) [Moore, 2002])

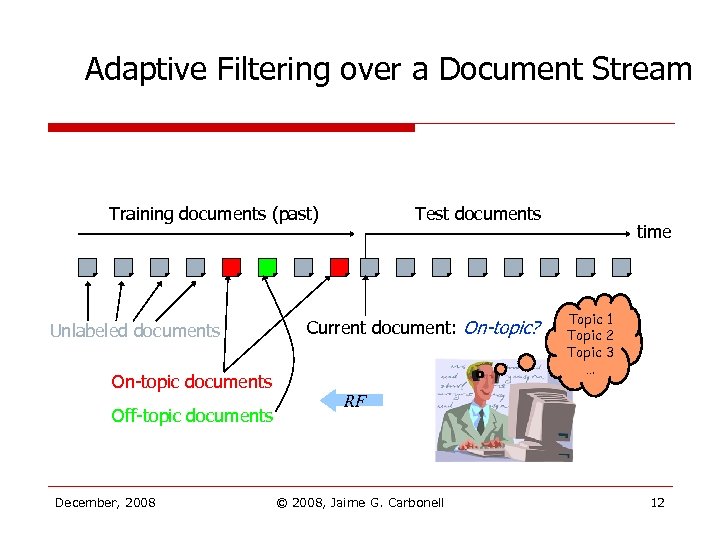

Adaptive Filtering over a Document Stream

(figure: a document stream along a time axis, split into training documents (past) and test documents; legend: unlabeled, on-topic, and off-topic documents; for Topic 1, Topic 2, Topic 3, …, the filter asks of the current document “On-topic?”; label: RF)

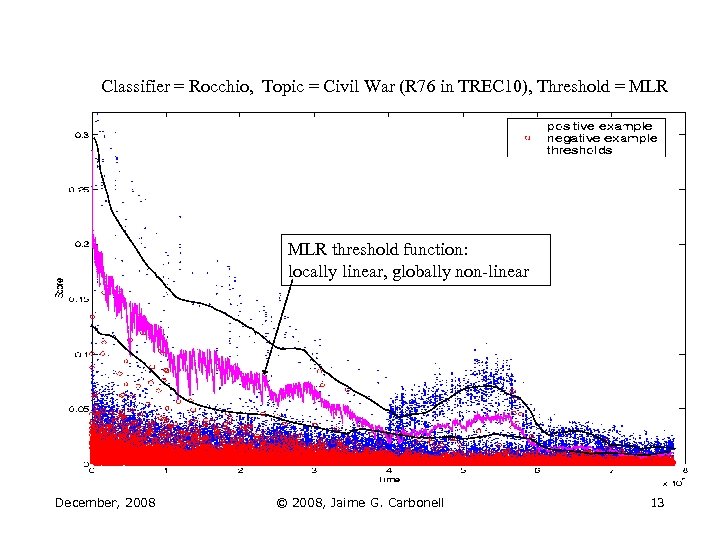

Classifier = Rocchio, Topic = Civil War (R76 in TREC 10), Threshold = MLR
- Threshold function: locally linear, globally non-linear

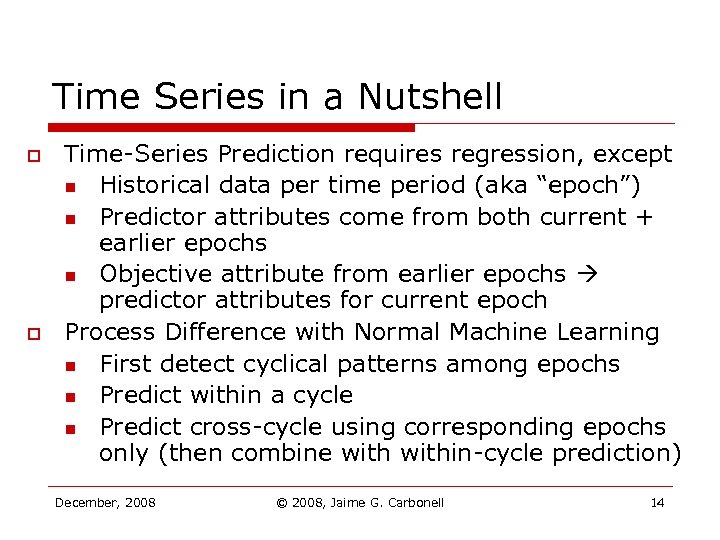
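
The slide names Rocchio as the classifier and an MLR threshold. The sketch below uses the textbook Rocchio profile update, profile = α·q0 + β·mean(on-topic) − γ·mean(off-topic), with the commonly quoted values α = 1, β = 0.75, γ = 0.15. The fixed cosine threshold of 0.3 is an assumption standing in for the learned MLR threshold function, and `RocchioFilter` is a hypothetical name.

```python
import math


def centroid(vectors, dim):
    """Component-wise mean of a list of vectors (zero vector if the list is empty)."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


class RocchioFilter:
    """Hypothetical adaptive filter: Rocchio profile plus a fixed cosine threshold."""

    def __init__(self, topic_vector, alpha=1.0, beta=0.75, gamma=0.15, threshold=0.3):
        self.q0 = [float(x) for x in topic_vector]
        self.alpha, self.beta, self.gamma = alpha, beta, gamma
        self.threshold = threshold          # assumption: stands in for the MLR threshold
        self.on_topic, self.off_topic = [], []
        self.profile = list(self.q0)

    def _update_profile(self):
        dim = len(self.q0)
        pos = centroid(self.on_topic, dim)
        neg = centroid(self.off_topic, dim)
        # Rocchio: alpha * q0 + beta * mean(on-topic) - gamma * mean(off-topic)
        self.profile = [self.alpha * q + self.beta * p - self.gamma * n
                        for q, p, n in zip(self.q0, pos, neg)]

    def score(self, doc):
        """Cosine similarity between the current profile and a document vector."""
        dot = sum(a * b for a, b in zip(self.profile, doc))
        norm = math.sqrt(sum(a * a for a in self.profile)) * math.sqrt(sum(b * b for b in doc))
        return dot / norm if norm else 0.0

    def deliver(self, doc):
        """Is the current document in the stream on-topic?"""
        return self.score(doc) >= self.threshold

    def feedback(self, doc, on_topic):
        """Relevance feedback on a delivered document adapts the profile."""
        (self.on_topic if on_topic else self.off_topic).append(list(doc))
        self._update_profile()
```

Each feedback call recomputes the profile from all judged documents so far, which is what makes the filter adaptive over the stream.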

Time Series in a Nutshell
- Time-series prediction requires regression, except:
  - Historical data per time period (a.k.a. “epoch”)
  - Predictor attributes come from both current and earlier epochs
  - Objective attributes from earlier epochs become predictor attributes for the current epoch
- Process difference from normal machine learning
  - First detect cyclical patterns among epochs
  - Predict within a cycle
  - Predict cross-cycle using corresponding epochs only (then combine with the within-cycle prediction)

Active Learning
- Assume:
  - Very few “labeled” instances
  - Very many “unlabeled” instances
  - An omniscient “oracle” which can assign a label to an unlabeled instance
- Objective:
  - Select instances to label such that learning accuracy is maximized with the fewest oracle labeling requests

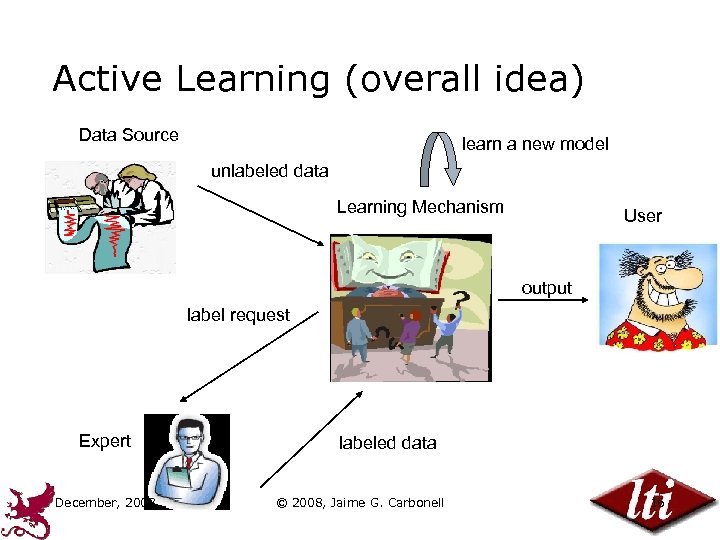
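
A minimal pool-based active learning loop under the assumptions above. Everything here is a toy stand-in: `train` fits a 1-D boundary halfway between the two class means, the sampling strategy is "closest to the current boundary" (uncertainty sampling), and `oracle` is a hypothetical omniscient labeler whose true boundary is at 5.

```python
def class_mean(labeled, label):
    xs = [x for x, y in labeled if y == label]
    return sum(xs) / len(xs)


def train(labeled):
    """Toy 1-D classifier: decision boundary halfway between the class-A and class-B means."""
    return (class_mean(labeled, "A") + class_mean(labeled, "B")) / 2


def oracle(x):
    """Hypothetical omniscient oracle: true boundary at 5."""
    return "A" if x < 5 else "B"


def active_learning(labeled, unlabeled, ask_oracle, budget):
    """Spend `budget` oracle requests, always querying the most uncertain pool point."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    boundary = train(labeled)
    for _ in range(min(budget, len(unlabeled))):
        x = min(unlabeled, key=lambda u: abs(u - boundary))
        unlabeled.remove(x)
        labeled.append((x, ask_oracle(x)))    # one oracle labeling request
        boundary = train(labeled)
    return boundary, labeled
```

Starting from `[(0, "A"), (10, "B")]` with pool `[1, 2, 3, 4, 6, 7, 8, 9]` and a budget of 2, the loop queries 4 and then 6 and ends with the boundary at 5.0.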

Active Learning (overall idea)

(figure components: data source, unlabeled data, learning mechanism, label request, expert, labeled data, learn a new model, output, user)

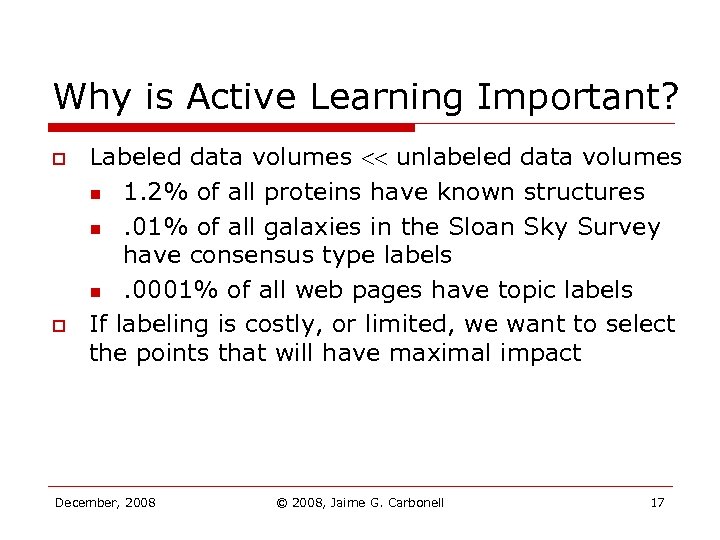

Why is Active Learning Important?
- Labeled data volumes ≪ unlabeled data volumes
  - 1.2% of all proteins have known structures
  - 0.01% of all galaxies in the Sloan Sky Survey have consensus type labels
  - 0.0001% of all web pages have topic labels
- If labeling is costly or limited, we want to select the points that will have maximal impact

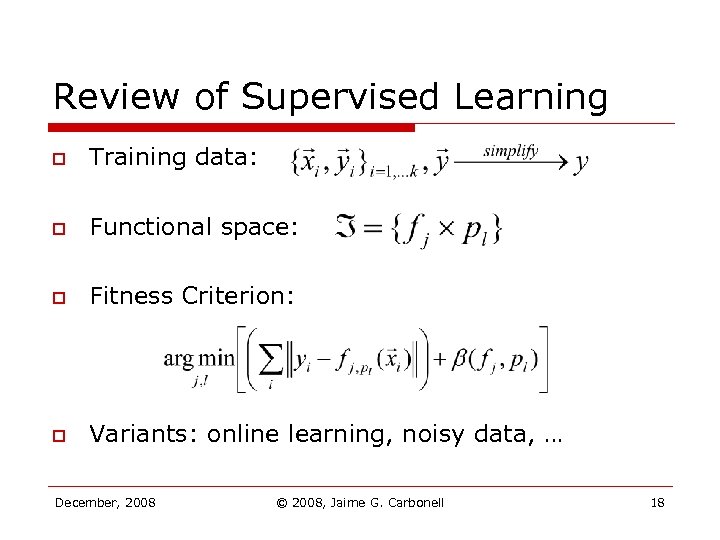

Review of Supervised Learning
- Training data:
- Functional space:
- Fitness criterion:
- Variants: online learning, noisy data, …

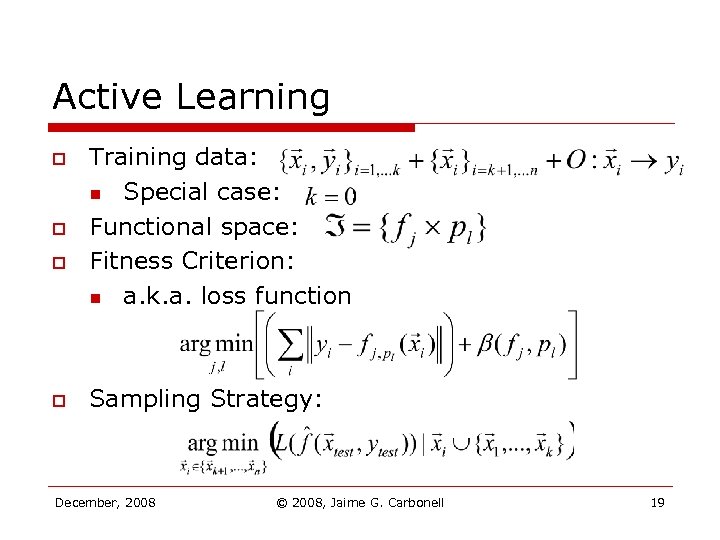

Active Learning
- Training data:
  - Special case:
- Functional space:
- Fitness criterion:
  - a.k.a. loss function
- Sampling strategy:

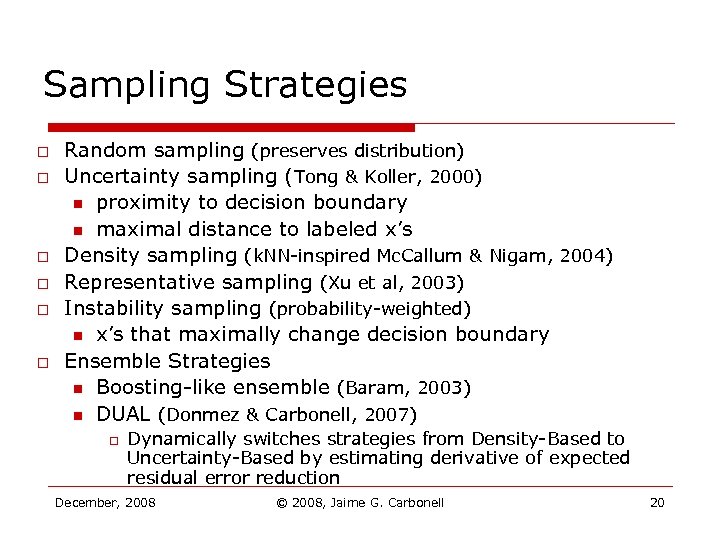

Sampling Strategies
- Random sampling (preserves distribution)
- Uncertainty sampling (Tong & Koller, 2000)
  - proximity to decision boundary
  - maximal distance to labeled x’s
- Density sampling (kNN-inspired; McCallum & Nigam, 2004)
- Representative sampling (Xu et al., 2003)
- Instability sampling (probability-weighted)
  - x’s that maximally change the decision boundary
- Ensemble strategies
  - Boosting-like ensemble (Baram, 2003)
  - DUAL (Donmez & Carbonell, 2007)
    - Dynamically switches strategies from density-based to uncertainty-based by estimating the derivative of expected residual error reduction

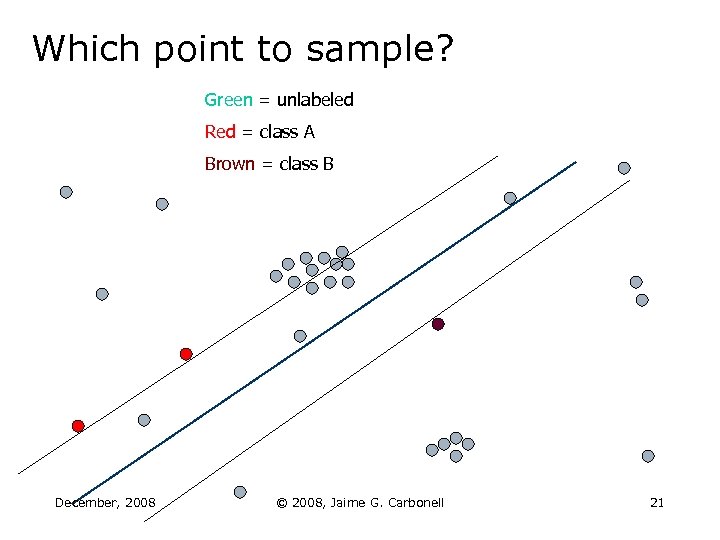

Which point to sample?

(figure legend: green = unlabeled, red = class A, brown = class B)

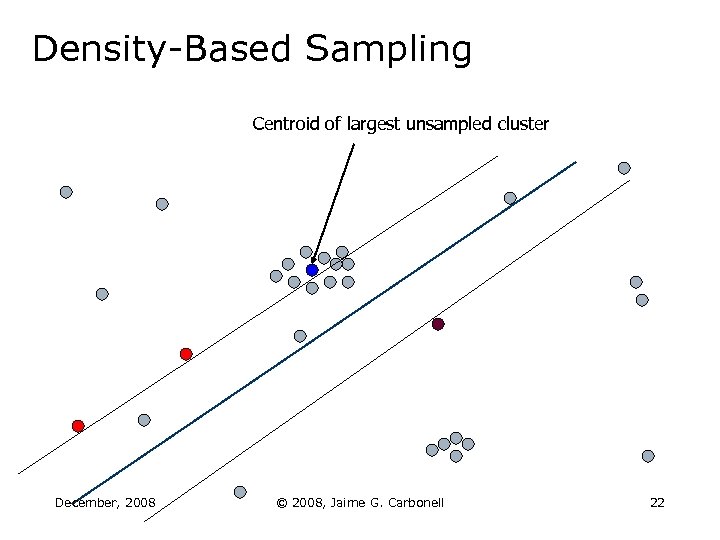

Density-Based Sampling
- Centroid of largest unsampled cluster

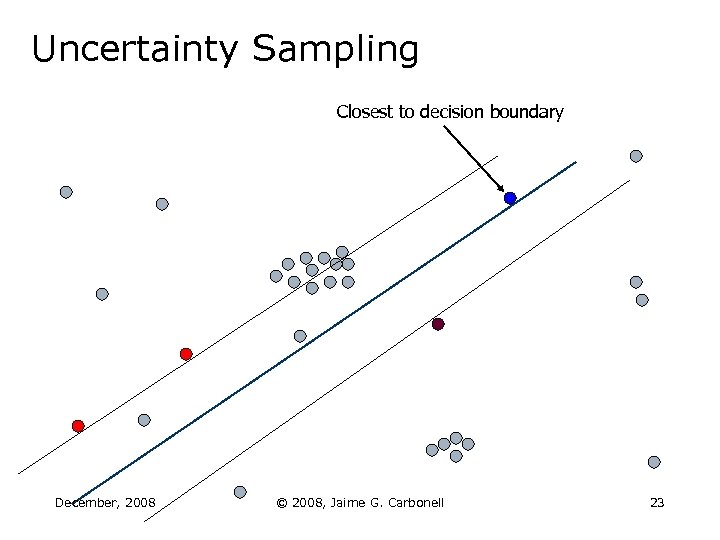
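
A sketch of the density-based rule on this slide: among clusters that contain no labeled point yet, take the largest and sample the point nearest its centroid. The cluster assignments are assumed to come from a prior clustering step (for example k-means), and `density_sample` is a hypothetical name.

```python
import math
from collections import defaultdict


def density_sample(points, cluster_of, sampled):
    """Index of the point nearest the centroid of the largest unsampled cluster.

    points: list of coordinate tuples; cluster_of: cluster id per point;
    sampled: set of indices already labeled. Returns None if every cluster is sampled.
    """
    members = defaultdict(list)
    for i, c in enumerate(cluster_of):
        members[c].append(i)
    touched = {cluster_of[i] for i in sampled}
    candidates = [c for c in members if c not in touched]
    if not candidates:
        return None
    biggest = max(candidates, key=lambda c: len(members[c]))
    idx = members[biggest]
    dim = len(points[0])
    centre = [sum(points[i][d] for i in idx) / len(idx) for d in range(dim)]
    return min(idx, key=lambda i: math.dist(points[i], centre))
```

Repeated calls, each adding the returned index to `sampled`, walk through the clusters from largest to smallest.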

Uncertainty Sampling
- Closest to decision boundary

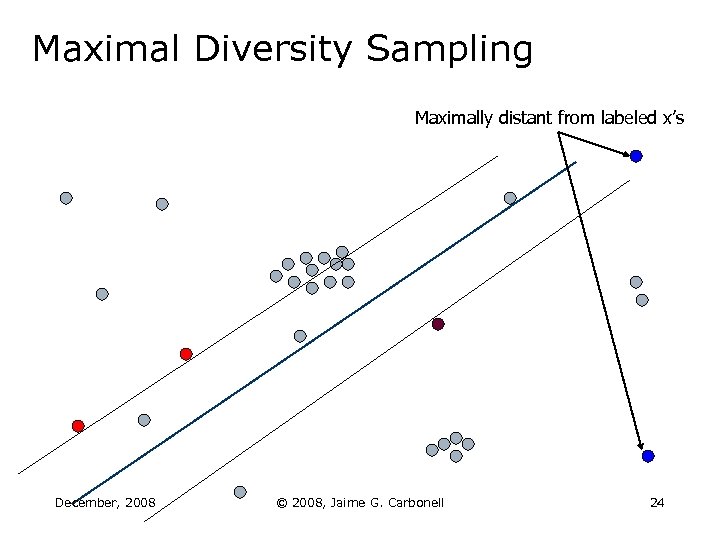
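
A sketch of uncertainty sampling for a linear classifier, assuming the current model is a hyperplane $w \cdot x + b = 0$ (an illustrative choice; `uncertainty_sample` is a hypothetical name). "Closest to the decision boundary" becomes the smallest distance $|w \cdot x + b| / \lVert w \rVert$.

```python
import math


def uncertainty_sample(unlabeled, w, b):
    """Index of the unlabeled point closest to the linear boundary w.x + b = 0."""
    norm_w = math.sqrt(sum(wi * wi for wi in w))

    def distance(x):
        return abs(sum(wi * xi for wi, xi in zip(w, x)) + b) / norm_w

    return min(range(len(unlabeled)), key=lambda i: distance(unlabeled[i]))
```

With boundary x + y = 4 (`w = (1, 1)`, `b = -4`), the point (3, 2) is chosen from `[(0, 0), (3, 2), (5, 5), (1, 1)]`.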

Maximal Diversity Sampling
- Maximally distant from labeled x’s

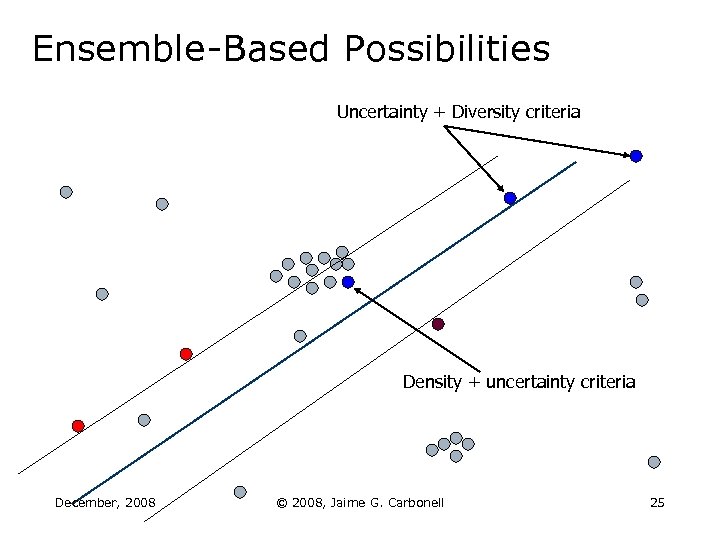
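
A sketch of maximal diversity sampling: pick the unlabeled point whose nearest labeled point is farthest away. This max-min rule is assumed here as the reading of "maximally distant from labeled x's", and Euclidean distance is also an assumption; `diversity_sample` is a hypothetical name.

```python
import math


def diversity_sample(unlabeled, labeled):
    """Index of the unlabeled point whose nearest labeled point is farthest away."""
    def gap(x):
        return min(math.dist(x, point) for point in labeled)

    return max(range(len(unlabeled)), key=lambda i: gap(unlabeled[i]))
```

Unlike uncertainty sampling, this ignores the classifier entirely and explores regions the labeled set does not yet cover.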

Ensemble-Based Possibilities
- Uncertainty + diversity criteria
- Density + uncertainty criteria

Active Learning Issues
- Interaction of active sampling with the underlying classifier(s).
- On-line sampling vs. batch sampling.
- Active sampling for rank learning and for structured learning (e.g. HMMs, sCRFs).
- What if the oracle is fallible, or reluctant, or differentially expensive? → proactive learning.
- How does noisy data affect active learning?
- What if we do not have even the first labeled point(s) for one or more classes? → new class discovery.
- How to “optimally” combine A.L. strategies

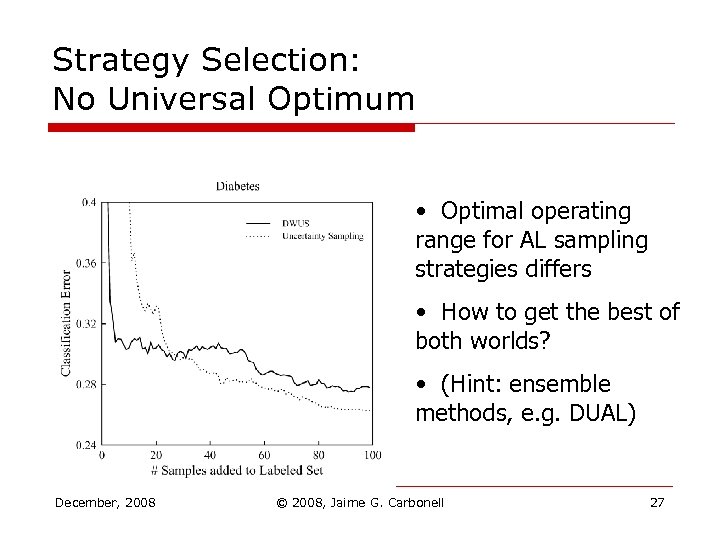

Strategy Selection: No Universal Optimum
- Optimal operating range for AL sampling strategies differs
- How to get the best of both worlds?
- (Hint: ensemble methods, e.g. DUAL)

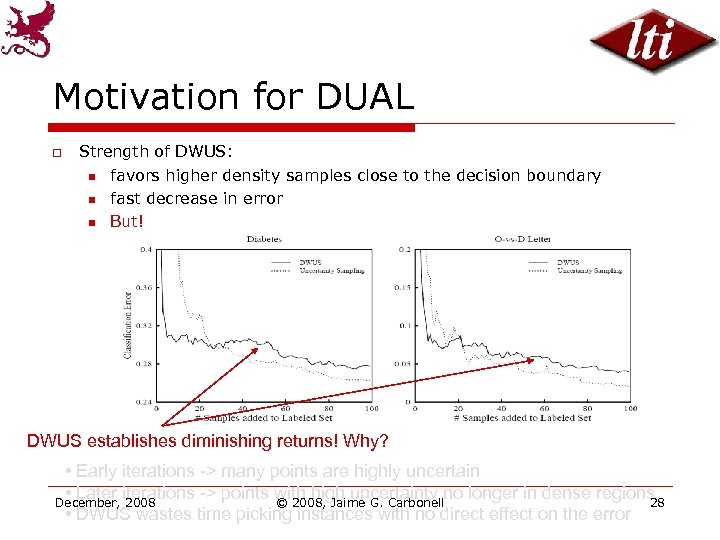

Motivation for DUAL
- Strength of DWUS (density-weighted uncertainty sampling):
  - favors higher-density samples close to the decision boundary
  - fast decrease in error
- But DWUS exhibits diminishing returns! Why?
  - Early iterations → many points are highly uncertain
  - Later iterations → points with high uncertainty are no longer in dense regions
  - DWUS wastes time picking instances with no direct effect on the error

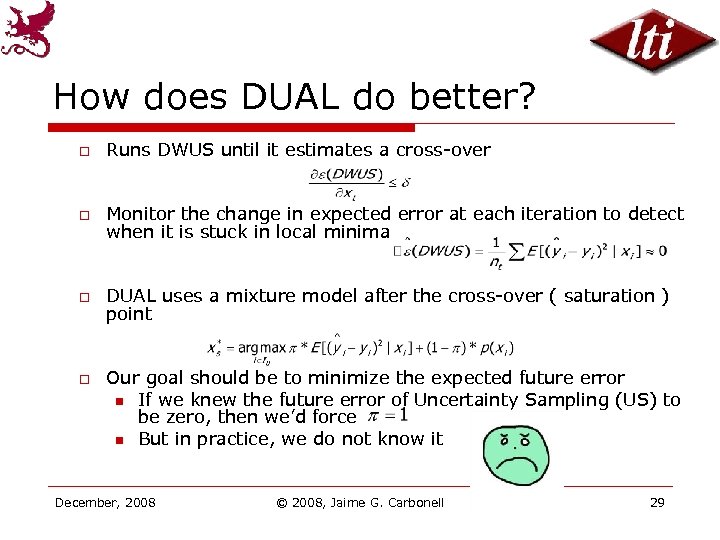

How does DUAL do better?
- Runs DWUS until it estimates a cross-over
- Monitors the change in expected error at each iteration to detect when it is stuck in local minima
- Uses a mixture model after the cross-over (saturation) point
- Our goal should be to minimize the expected future error
  - If we knew the future error of Uncertainty Sampling (US) to be zero, then we’d force all the weight onto US
  - But in practice, we do not know it

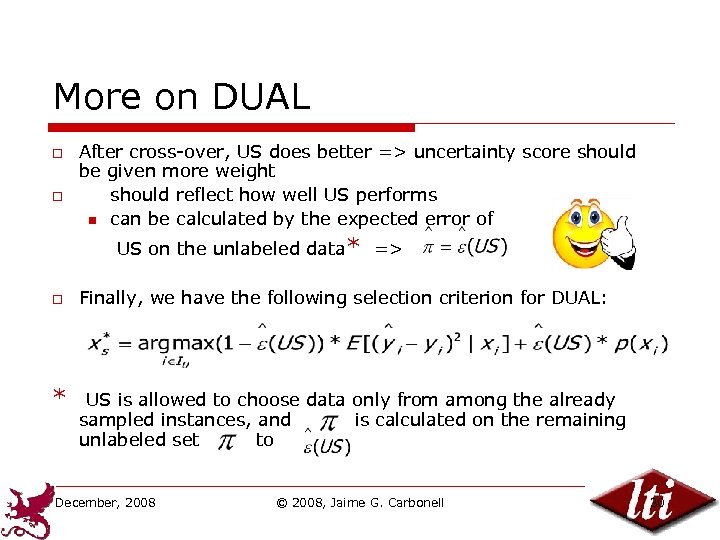

More on DUAL
- After cross-over, US does better ⇒ the uncertainty score should be given more weight
- That weight should reflect how well US performs
  - It can be calculated from the expected error of US on the unlabeled data\*
- Finally, we have the following selection criterion for DUAL:
- \* US is allowed to choose data only from among the already sampled instances, and its error is calculated on the remaining unlabeled set

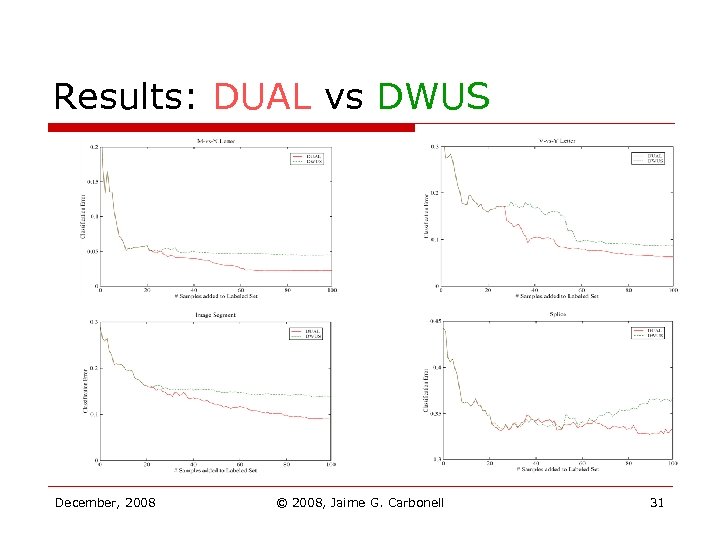
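
A sketch of the switch from DWUS to DUAL's mixture. Two assumptions: the mixture weight on the uncertainty score is taken as one minus the estimated error of US, following the reasoning on the slide, and both scores are pre-scaled to [0, 1]. Detecting the cross-over itself (monitoring the change in expected error) is not implemented; it is passed in as a flag. All names are hypothetical.

```python
def dwus_score(density, uncertainty):
    """Density-weighted uncertainty sampling: favour points that are dense and uncertain."""
    return density * uncertainty


def dual_score(density, uncertainty, us_error):
    """Post-cross-over mixture (assumed form): weight on uncertainty = 1 - estimated US error."""
    pi = 1.0 - us_error
    return pi * uncertainty + (1.0 - pi) * density


def dual_select(candidates, crossed_over, us_error=0.0):
    """candidates: list of (density, uncertainty) pairs. Returns the index to query next."""
    if crossed_over:
        scores = [dual_score(d, u, us_error) for d, u in candidates]
    else:
        scores = [dwus_score(d, u) for d, u in candidates]
    return max(range(len(candidates)), key=scores.__getitem__)
```

For candidates `[(0.9, 0.2), (0.3, 0.9), (0.6, 0.5)]` (density, uncertainty), DWUS picks index 2, while the post-cross-over mixture with an estimated US error of 0.1 picks the highly uncertain index 1.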

Results: DUAL vs DWUS

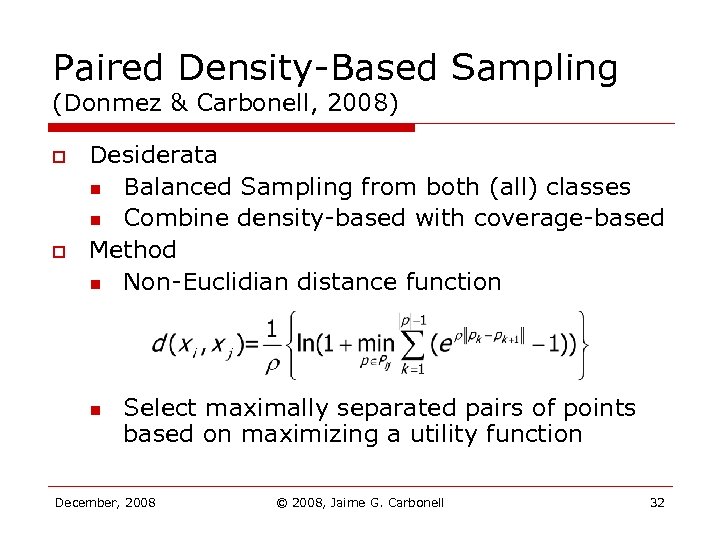

Paired Density-Based Sampling (Donmez & Carbonell, 2008)
- Desiderata
  - Balanced sampling from both (all) classes
  - Combine density-based with coverage-based sampling
- Method
  - Non-Euclidean distance function
  - Select maximally separated pairs of points based on maximizing a utility function

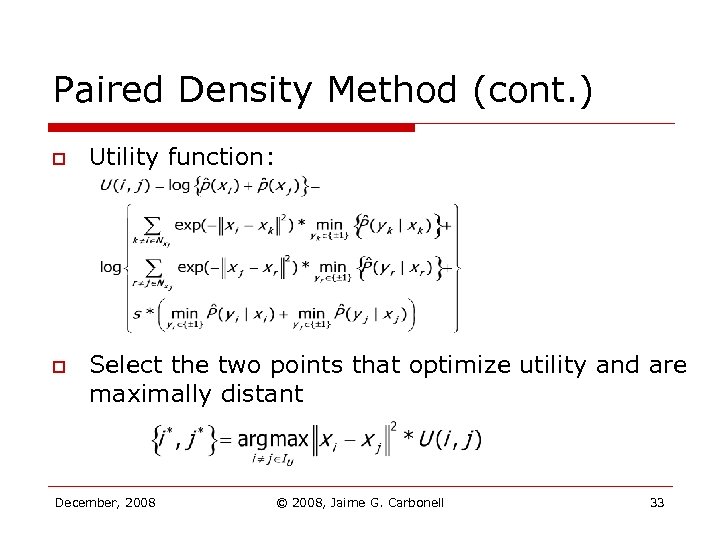

Paired Density Method (cont.)
- Utility function:
- Select the two points that optimize utility and are maximally distant

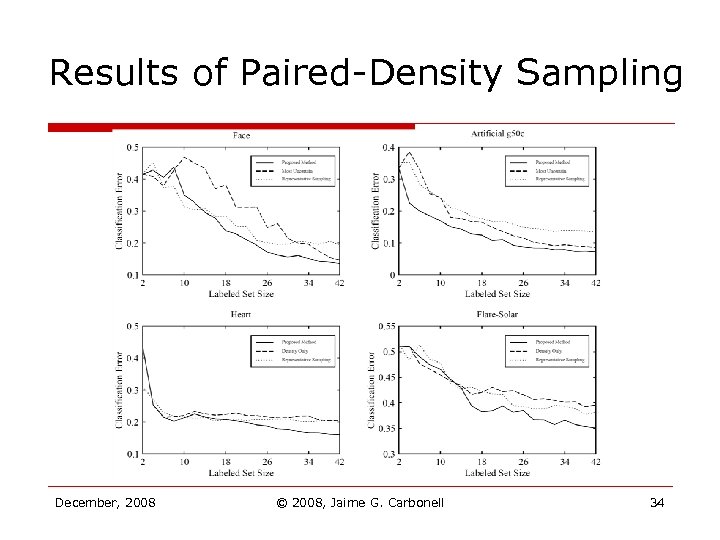

Results of Paired-Density Sampling

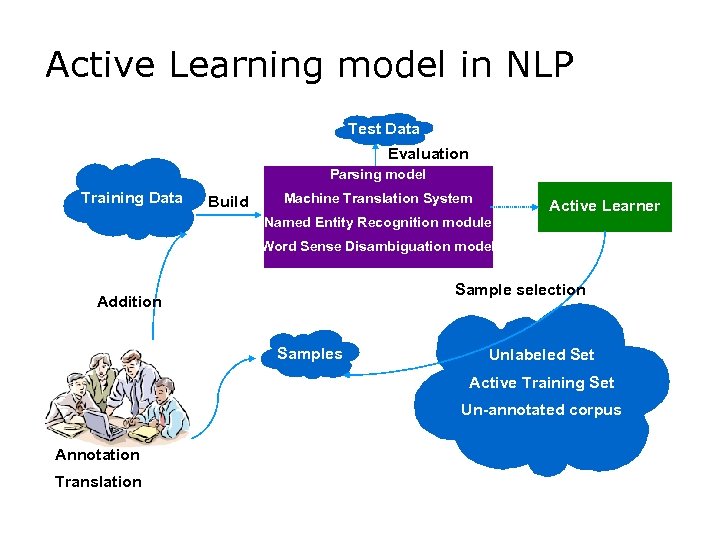

Active Learning model in NLP

(figure components: un-annotated corpus, unlabeled set, active learner, sample selection, annotation / translation, addition of samples to the active training set, training data, build: parsing model, machine translation system, named entity recognition module, word sense disambiguation model; test data, evaluation)

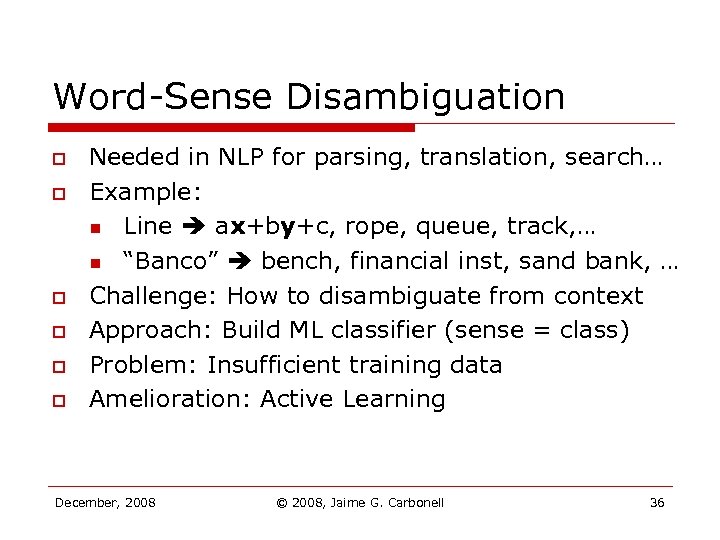

Word-Sense Disambiguation
- Needed in NLP for parsing, translation, search, …
- Example:
  - “Line”: ax+by+c, rope, queue, track, …
  - “Banco”: bench, financial institution, sand bank, …
- Challenge: how to disambiguate from context
- Approach: build an ML classifier (sense = class)
- Problem: insufficient training data
- Amelioration: active learning

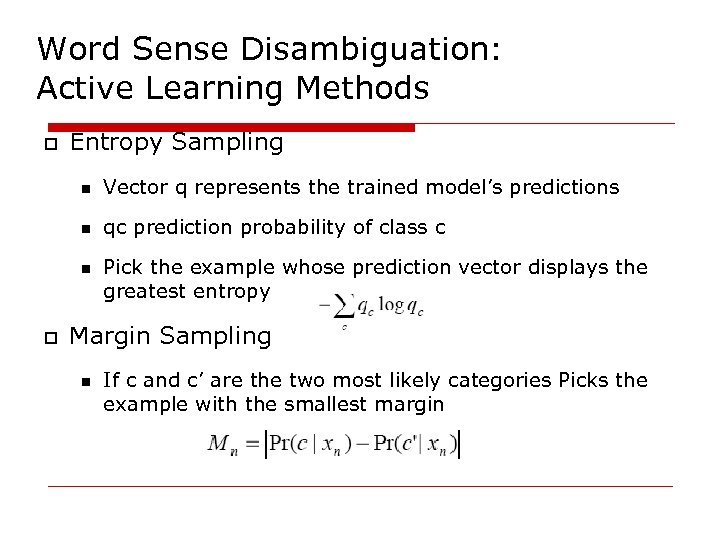

Word Sense Disambiguation: Active Learning Methods
- Entropy sampling
  - Vector $q$ represents the trained model’s predictions
  - $q_c$ = prediction probability of class $c$
  - $H(q) = -\sum_c q_c \log q_c$
  - Pick the example whose prediction vector displays the greatest entropy
- Margin sampling
  - If $c$ and $c'$ are the two most likely categories, the margin is $M = |q_c - q_{c'}|$
  - Pick the example with the smallest margin

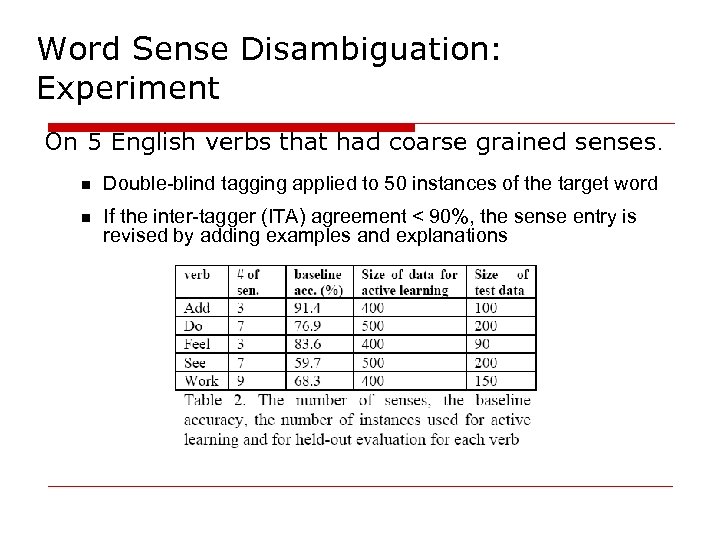
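
A sketch of the two selection rules, taking each model prediction as a list of class probabilities $q$ (natural log is used for the entropy; the base does not change which example is picked). Function names are hypothetical.

```python
import math


def entropy(q):
    """H(q) = -sum_c q_c log q_c over the model's class probabilities."""
    return -sum(p * math.log(p) for p in q if p > 0)


def margin(q):
    """|q_c - q_c'| for the two most likely classes c and c'."""
    top1, top2 = sorted(q, reverse=True)[:2]
    return top1 - top2


def entropy_sample(predictions):
    """Index of the example whose prediction vector has the greatest entropy."""
    return max(range(len(predictions)), key=lambda i: entropy(predictions[i]))


def margin_sample(predictions):
    """Index of the example with the smallest margin."""
    return min(range(len(predictions)), key=lambda i: margin(predictions[i]))
```

For predictions `[[0.9, 0.05, 0.05], [0.4, 0.35, 0.25], [0.5, 0.5, 0.0]]`, entropy sampling picks index 1 (probability spread over three senses), whereas margin sampling picks index 2 (a dead heat between the top two).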

Word Sense Disambiguation: Experiment
- On 5 English verbs that had coarse-grained senses
  - Double-blind tagging applied to 50 instances of the target word
  - If the inter-tagger agreement (ITA) < 90%, the sense entry is revised by adding examples and explanations

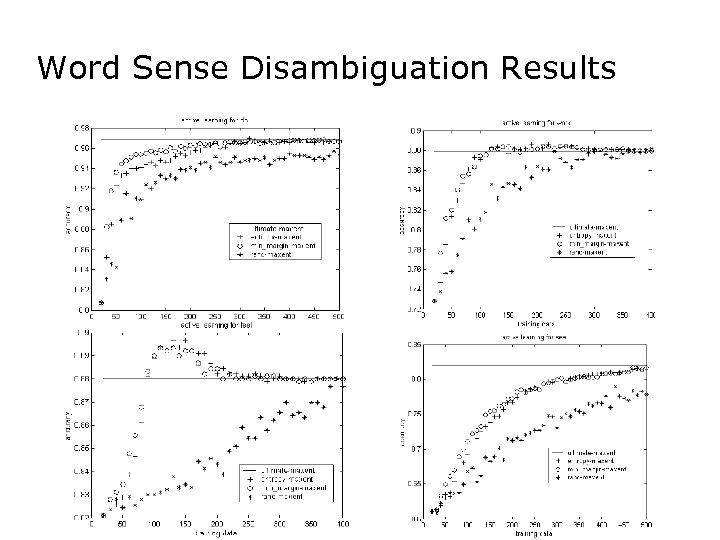
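
A sketch of the agreement check in this protocol. ITA is computed here as raw percent agreement between two taggers over the same instances; the slide does not say whether a chance-corrected measure (such as Cohen's kappa) was used, so treat that as an assumption. Function names are hypothetical.

```python
def inter_tagger_agreement(tags_a, tags_b):
    """Fraction of instances on which two independent (double-blind) taggers agree."""
    if len(tags_a) != len(tags_b) or not tags_a:
        raise ValueError("need two equally long, non-empty tag lists")
    agree = sum(a == b for a, b in zip(tags_a, tags_b))
    return agree / len(tags_a)


def needs_revision(tags_a, tags_b, threshold=0.9):
    """The sense entry is revised when ITA falls below the threshold (90% on the slide)."""
    return inter_tagger_agreement(tags_a, tags_b) < threshold
```

With 50 instances on which the taggers disagree 6 times, ITA is 44/50 = 0.88, so the sense entry would be revised.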

Word Sense Disambiguation Results

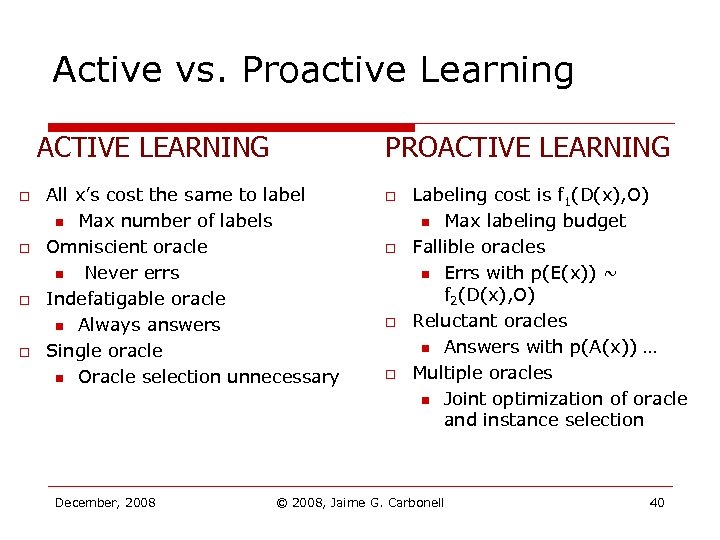

Active vs. Proactive Learning

| Active learning | Proactive learning |
|---|---|
| All x’s cost the same to label; max number of labels | Labeling cost is $f_1(D(x), O)$; max labeling budget |
| Omniscient oracle: never errs | Fallible oracles: err with $p(E(x)) \sim f_2(D(x), O)$ |
| Indefatigable oracle: always answers | Reluctant oracles: answer with $p(A(x))$ … |
| Single oracle: oracle selection unnecessary | Multiple oracles: joint optimization of oracle and instance selection |

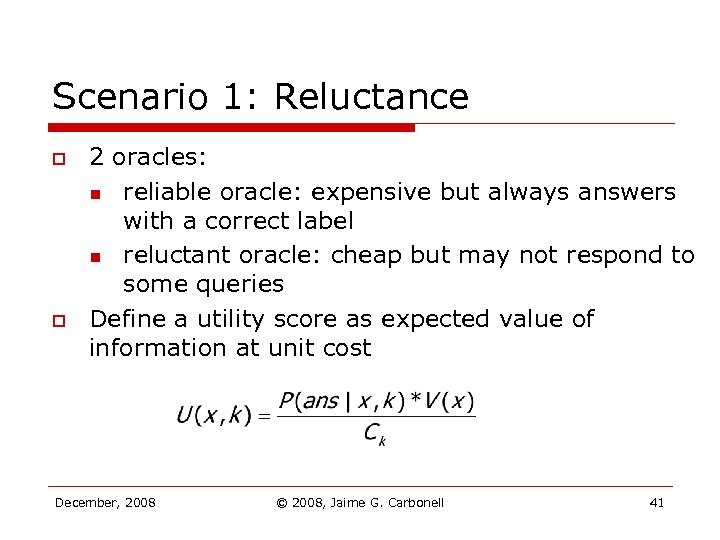

Scenario 1: Reluctance
- 2 oracles:
  - Reliable oracle: expensive, but always answers with a correct label
  - Reluctant oracle: cheap, but may not respond to some queries
- Define a utility score as the expected value of information at unit cost

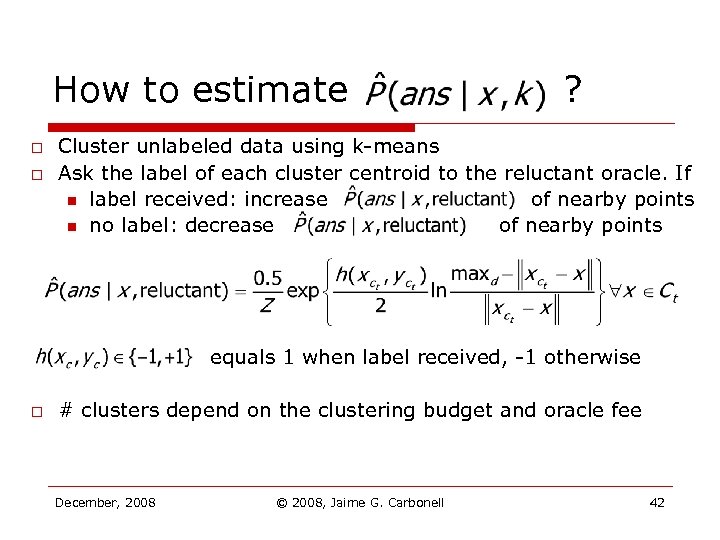
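
A sketch of the utility score "expected value of information at unit cost", assuming it factors as $P(\text{answer} \mid x, k)\, V(x) / C_k$ for oracle $k$. The value estimate $V(x)$ (e.g. from the underlying sampling strategy), the answer probabilities, and the costs are all inputs here; `utility` and `choose_query` are hypothetical names.

```python
def utility(value, p_answer, cost):
    """Expected value of information at unit cost: P(answer) * V(x) / C."""
    return p_answer * value / cost


def choose_query(values, oracles):
    """values: V(x) per unlabeled x; oracles: name -> (p_answer function of index, cost).

    Returns the (index, oracle name) pair with the highest utility.
    """
    best = None
    for i, v in enumerate(values):
        for name, (p_answer, cost) in oracles.items():
            u = utility(v, p_answer(i), cost)
            if best is None or u > best[0]:
                best = (u, i, name)
    return best[1], best[2]
```

With values `[0.8, 0.5]`, a reliable oracle that always answers at cost 4, and a reluctant oracle at cost 1 that answers with probability 0.3 or 0.9, the best query is instance 1 to the reluctant oracle (utility 0.45, versus at most 0.24 for any other pair).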

How to estimate the probability that the reluctant oracle answers, $p(A(x))$?
- Cluster the unlabeled data using k-means
- Ask the reluctant oracle for the label of each cluster centroid. If:
  - a label is received: increase the estimate for nearby points
  - no label: decrease the estimate for nearby points
  - the update indicator equals 1 when a label is received, -1 otherwise
- The number of clusters depends on the clustering budget and the oracle fee

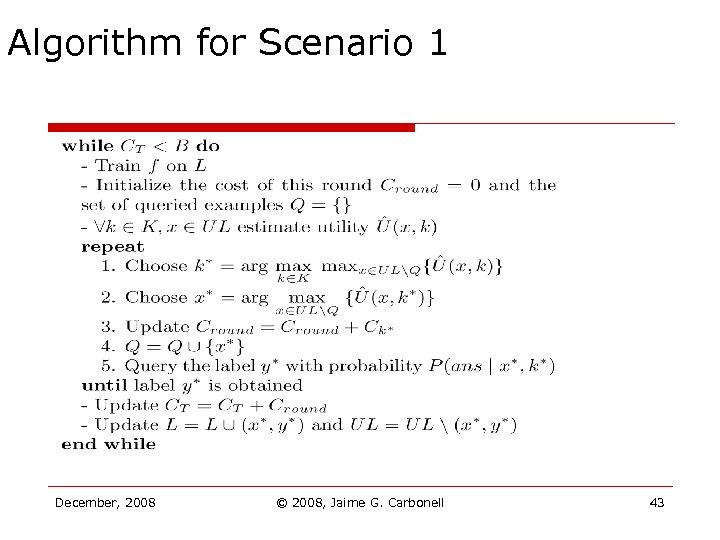
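
A sketch of the centroid-probing idea. The exact update formula is not legible in the extracted slide, so this assumes a Gaussian-kernel update: each probed centroid moves the estimate of nearby points by `step * h * closeness`, with h = +1 if the oracle answered and -1 if it declined, and results are clipped to [0, 1]. The prior of 0.5, `step`, `width`, and the function name are hypothetical.

```python
import math


def estimate_answer_prob(points, centroid_outcomes, prior=0.5, step=0.5, width=1.0):
    """Kernel-smoothed estimate of P(reluctant oracle answers | x) (an assumed update rule).

    centroid_outcomes: list of (centroid, h) with h = +1 if the oracle labeled that
    centroid and h = -1 if it declined. Nearby points move up or down accordingly.
    """
    estimates = []
    for x in points:
        p = prior
        for c, h in centroid_outcomes:
            closeness = math.exp(-math.dist(x, c) ** 2 / (2 * width ** 2))
            p += step * h * closeness
        estimates.append(min(1.0, max(0.0, p)))   # keep it a valid probability
    return estimates
```

Points far from every probed centroid keep roughly the prior, which reflects how little the probes say about them.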

Algorithm for Scenario 1

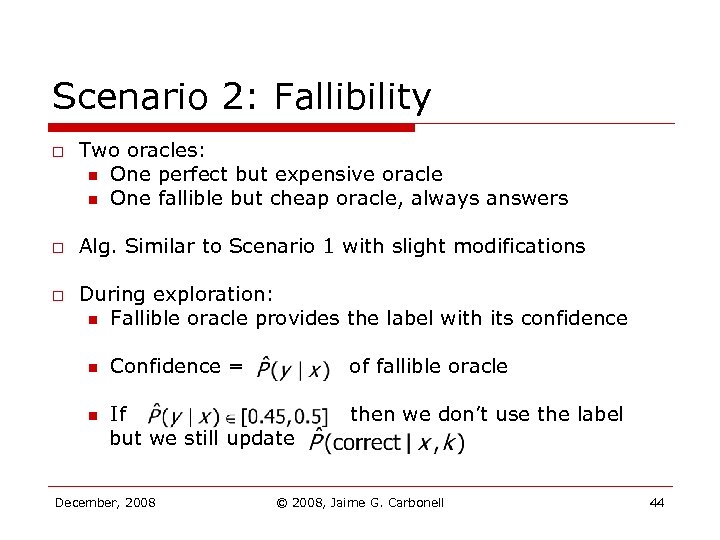

Scenario 2: Fallibility
- Two oracles:
  - One perfect but expensive oracle
  - One fallible but cheap oracle that always answers
- Algorithm similar to Scenario 1, with slight modifications
- During exploration:
  - The fallible oracle provides the label together with its confidence
  - If the confidence is too low, we don’t use the label, but we still update the estimate

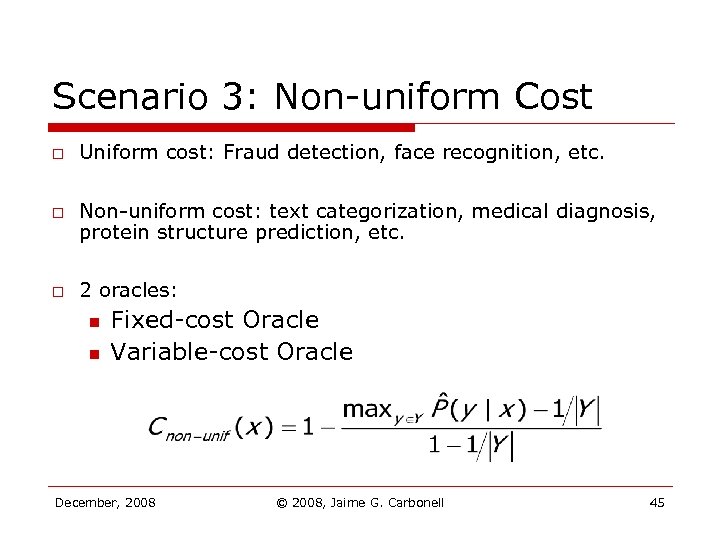

Scenario 3: Non-uniform Cost
- Uniform cost: fraud detection, face recognition, etc.
- Non-uniform cost: text categorization, medical diagnosis, protein structure prediction, etc.
- 2 oracles:
  - Fixed-cost oracle
  - Variable-cost oracle

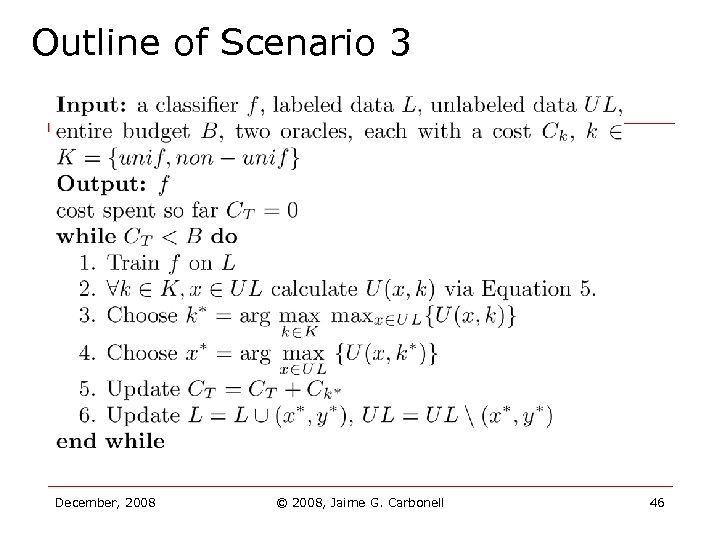

Outline of Scenario 3

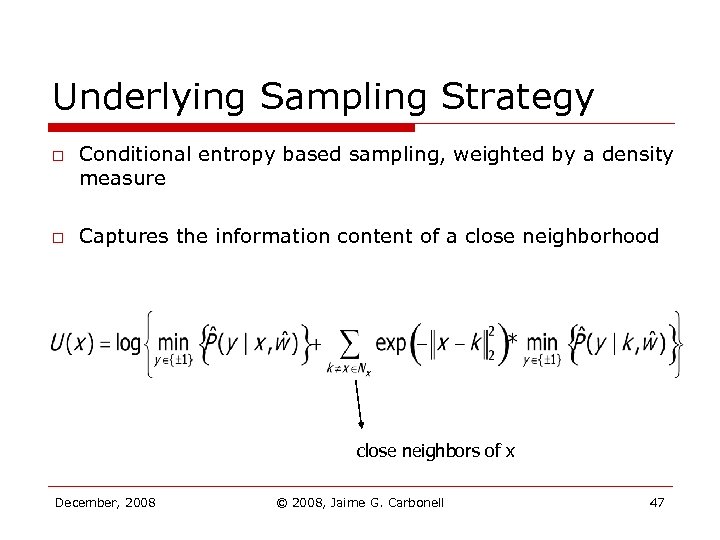

Underlying Sampling Strategy
- Conditional-entropy-based sampling, weighted by a density measure
- Captures the information content of a close neighborhood (the close neighbors of x)

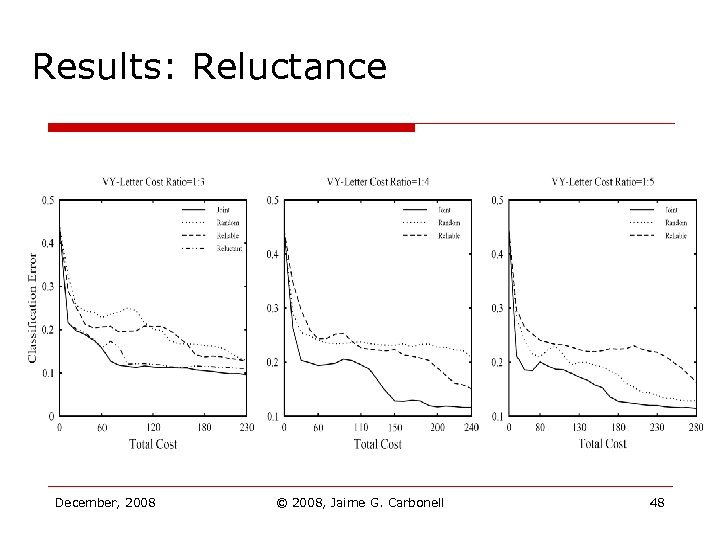
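
A sketch of density-weighted conditional entropy sampling. Assumptions: the close neighbors of x are the points within a fixed `radius` (x included), and each neighbor k contributes its conditional entropy $H(Y \mid k)$ under the current model, weighted by $\exp(-\lVert x - k \rVert^2)$ as the density measure. The posteriors are inputs; the names are hypothetical.

```python
import math


def entropy(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)


def neighborhood_score(i, points, posteriors, radius=1.5):
    """Sum of H(Y|k) over close neighbours k of x_i (x_i included), weighted by exp(-d^2)."""
    x = points[i]
    score = 0.0
    for k, xk in enumerate(points):
        d = math.dist(x, xk)
        if d <= radius:
            score += math.exp(-d * d) * entropy(posteriors[k])
    return score


def select(points, posteriors, radius=1.5):
    """Index of the point whose neighbourhood carries the most uncertainty."""
    return max(range(len(points)),
               key=lambda i: neighborhood_score(i, points, posteriors, radius))
```

In the test data, the isolated point (5, 5) is the single most uncertain point, yet the rule prefers (0, 0) because its neighborhood as a whole carries more uncertainty.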

Results: Reluctance

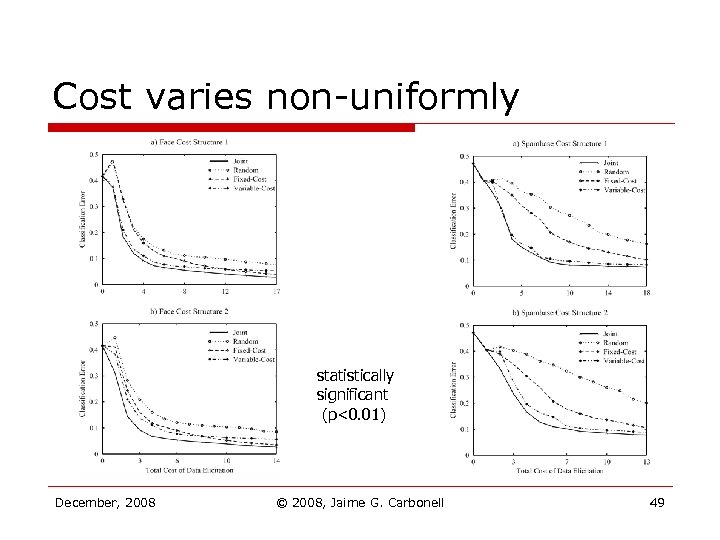

Cost varies non-uniformly
- Statistically significant (p < 0.01)

Proactive Learning in General
- Multiple experts (a.k.a. oracles)
  - Different areas of expertise
  - Different costs
  - Different reliabilities
  - Different availability
- What question to ask, and whom to query?
  - Joint optimization of query & oracle selection
  - Referrals among oracles (with referral fees)
  - Learn about oracle capabilities as well as solving the active learning problem at hand

Unsupervised Learning in DM
- What does it mean to learn without an objective?
  - Explore the data for natural groupings
  - Learn association rules, and later examine whether they can be of any business use
- Illustrative examples
  - Market basket analysis → later optimize shelf allocation & placements
  - Cascaded or correlated mechanical faults
  - Demographic grouping beyond known classes
  - Plan product bundling offers

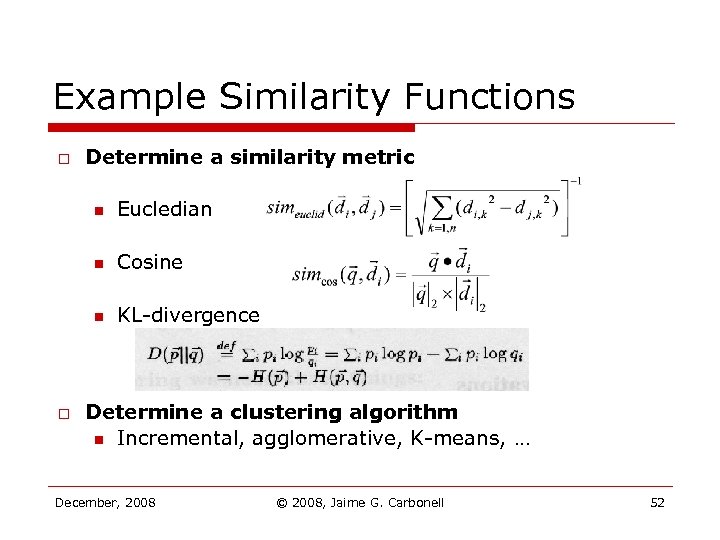

Example Similarity Functions
- Determine a similarity metric
  - Euclidean
  - Cosine
  - KL-divergence
- Determine a clustering algorithm
  - Incremental, agglomerative, K-means, …

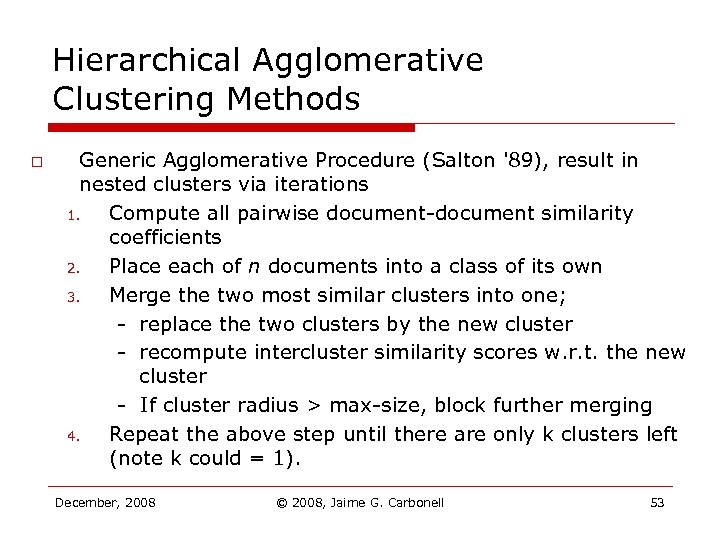
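
Minimal versions of the three metrics listed, as a sketch (function names are illustrative). Note that the directions differ: cosine is a similarity (higher means closer), while Euclidean distance and KL-divergence are dissimilarities, and KL-divergence is asymmetric and defined only for probability distributions.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def euclidean(u, v):
    return math.dist(u, v)   # a distance: smaller means more similar


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; infinite if q_i = 0 where p_i > 0."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf
            total += pi * math.log(pi / qi)
    return total
```

For clustering, a dissimilarity can be turned into a similarity (e.g. by negating it), as long as the algorithm is told which direction is "closer".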

Hierarchical Agglomerative Clustering Methods
- Generic agglomerative procedure (Salton '89); results in nested clusters via iterations

1. Compute all pairwise document-document similarity coefficients
2. Place each of n documents into a class of its own
3. Merge the two most similar clusters into one:
   - replace the two clusters by the new cluster
   - recompute intercluster similarity scores w.r.t. the new cluster
   - if cluster radius > max-size, block further merging
4. Repeat step 3 until there are only k clusters left (note k could = 1).

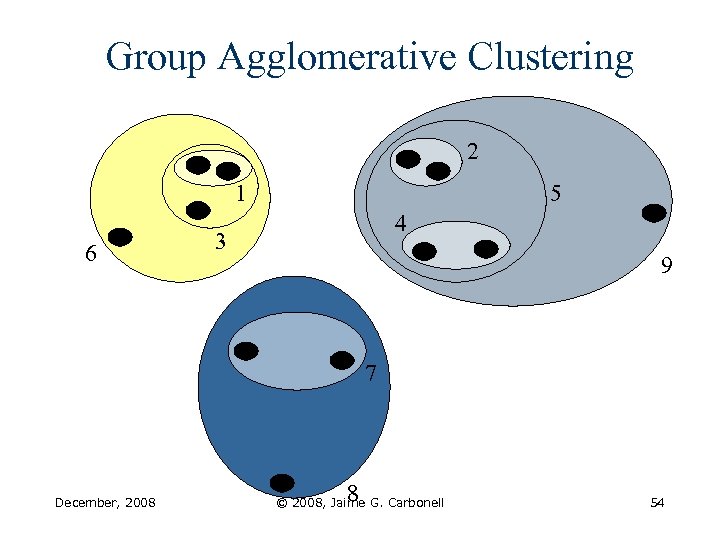

Group Agglomerative Clustering

(Figure: example with nine points, numbered 1 to 9.)

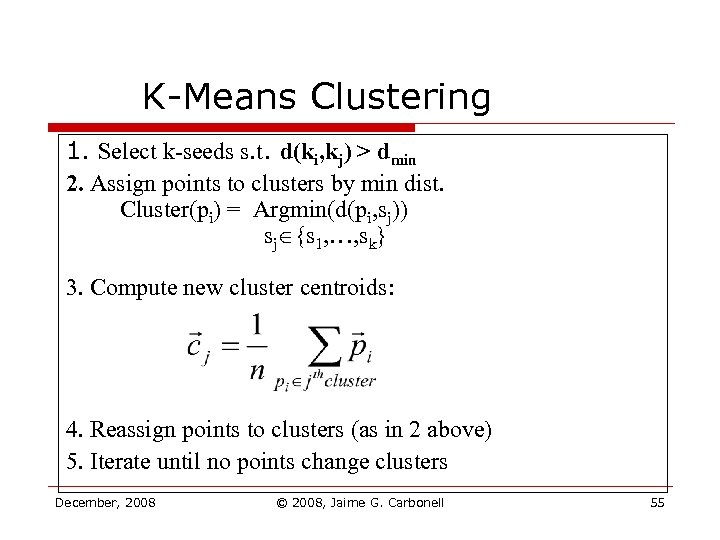

K-Means Clustering

1. Select k seeds $s_1, \dots, s_k$ such that $d(s_i, s_j) > d_{min}$ for all $i \neq j$.
2. Assign points to clusters by minimum distance: $\mathrm{Cluster}(p_i) = \arg\min_{s_j \in \{s_1, \dots, s_k\}} d(p_i, s_j)$.
3. Compute new cluster centroids: $c_j = \frac{1}{|C_j|} \sum_{p_i \in C_j} p_i$.
4. Reassign points to clusters (as in step 2 above).
5. Iterate until no points change clusters.
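
A self-contained sketch of the five steps, assuming points are tuples of floats and d is Euclidean distance. Seeds are drawn at random and kept only if they are farther than `d_min` from every seed already chosen. Keeping an emptied cluster's previous centroid and the `max_iter` cap are our own choices, since the slide does not specify them.

```python
import math
import random


def select_seeds(points, k, d_min, rng):
    """Step 1: random seeds, each farther than d_min from every seed chosen so far."""
    candidates = points[:]
    rng.shuffle(candidates)
    seeds = []
    for p in candidates:
        if all(math.dist(p, s) > d_min for s in seeds):
            seeds.append(p)
            if len(seeds) == k:
                return seeds
    raise ValueError("could not find k seeds separated by more than d_min")


def assign(points, centers):
    """Steps 2 and 4: Cluster(p_i) = argmin_j d(p_i, c_j)."""
    return [min(range(len(centers)), key=lambda j: math.dist(p, centers[j])) for p in points]


def centroids(points, labels, old_centers):
    """Step 3: each new centroid is the mean of the points assigned to it."""
    new = []
    for j, old in enumerate(old_centers):
        members = [p for p, lab in zip(points, labels) if lab == j]
        # Keep the old center if a cluster empties out (our choice, not from the slide).
        new.append(tuple(sum(c) / len(members) for c in zip(*members)) if members else old)
    return new


def kmeans(points, k, d_min=0.0, seed=0, max_iter=100):
    rng = random.Random(seed)
    centers = select_seeds(points, k, d_min, rng)
    labels = assign(points, centers)
    for _ in range(max_iter):
        centers = centroids(points, labels, centers)
        new_labels = assign(points, centers)
        if new_labels == labels:  # Step 5: stop when no point changes cluster
            break
        labels = new_labels
    return labels, centers
```

A larger `d_min` spreads the initial seeds apart, at the risk of `select_seeds` failing when no k sufficiently separated points exist.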

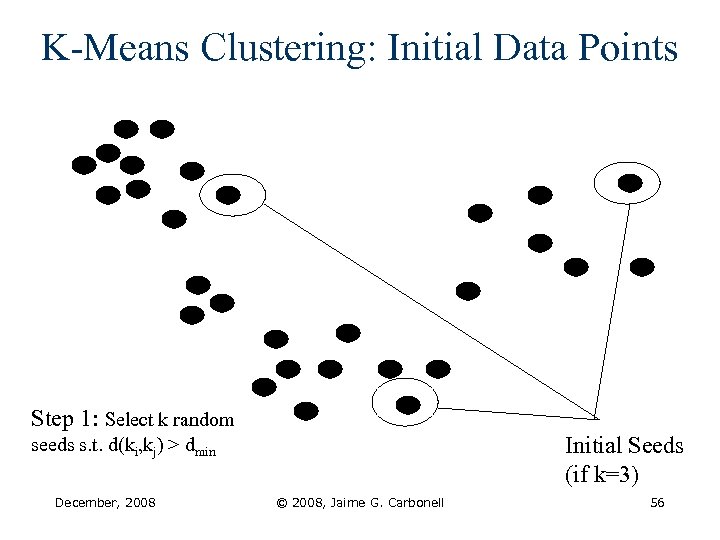

K-Means Clustering: Initial Data Points

Step 1: Select k random seeds such that $d(s_i, s_j) > d_{min}$ (here k = 3).

(Figure: data points with the initial seeds marked.)

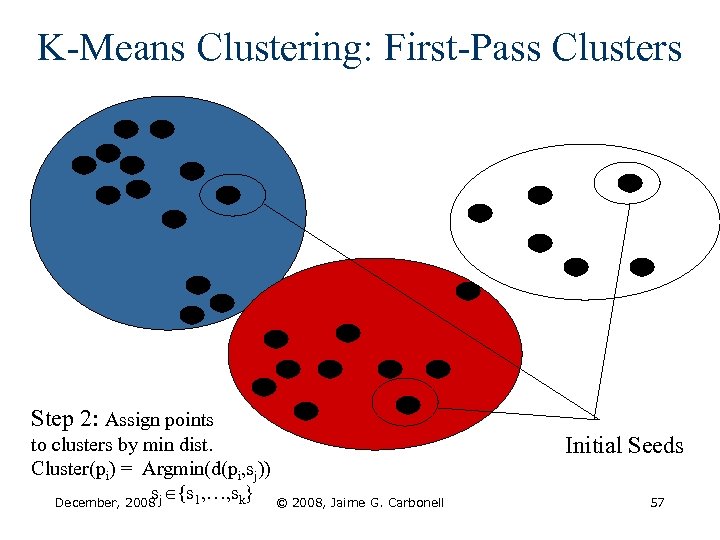

K-Means Clustering: First-Pass Clusters

Step 2: Assign points to clusters by minimum distance: $\mathrm{Cluster}(p_i) = \arg\min_{s_j \in \{s_1, \dots, s_k\}} d(p_i, s_j)$.

(Figure: first-pass clusters around the initial seeds.)

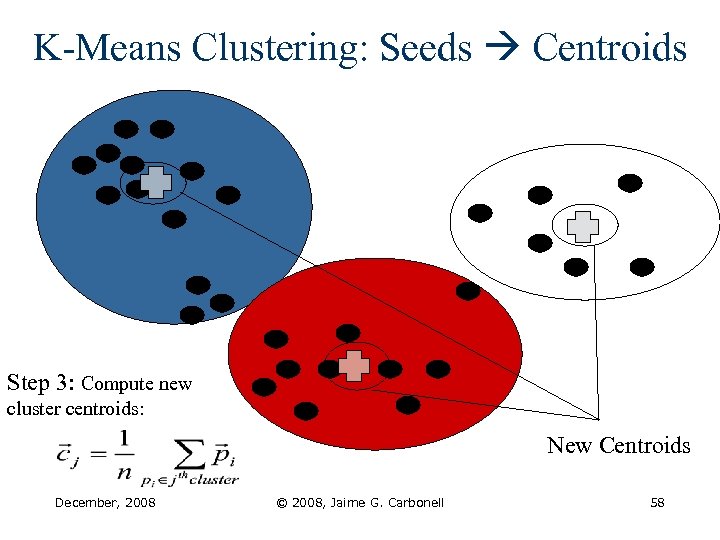

K-Means Clustering: Seeds → Centroids

Step 3: Compute new cluster centroids.

(Figure: the new centroids.)

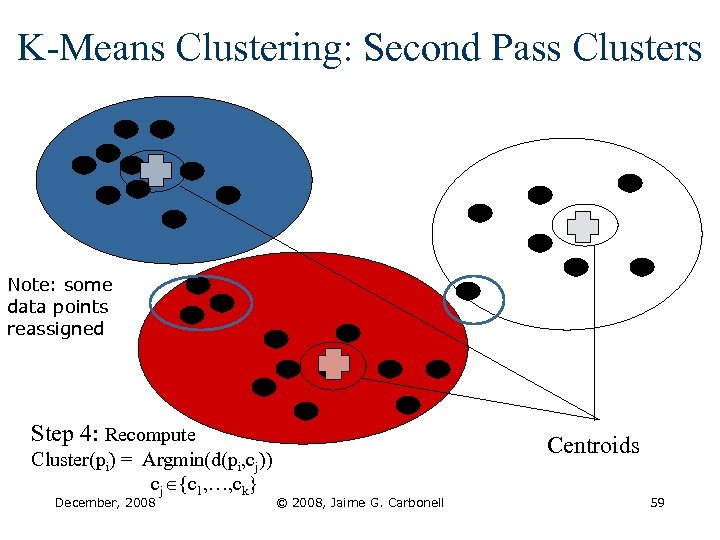

K-Means Clustering: Second-Pass Clusters

Step 4: Recompute $\mathrm{Cluster}(p_i) = \arg\min_{c_j \in \{c_1, \dots, c_k\}} d(p_i, c_j)$. Note that some data points are reassigned.

(Figure: second-pass clusters around the centroids.)

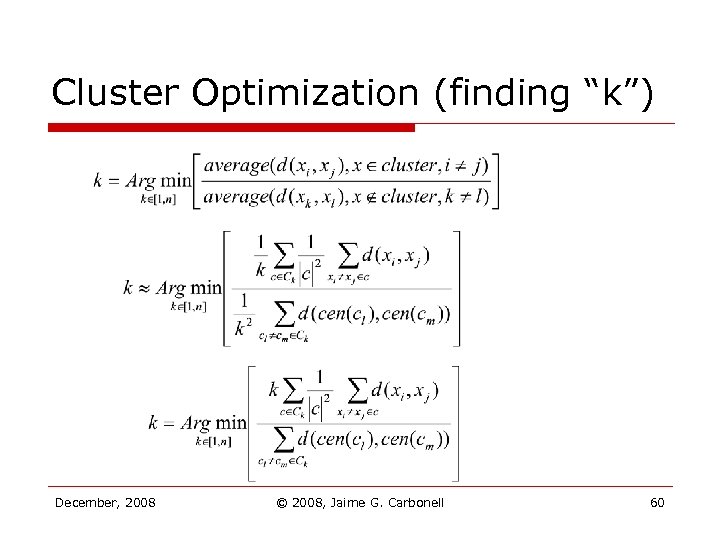

Cluster Optimization (finding “k”)

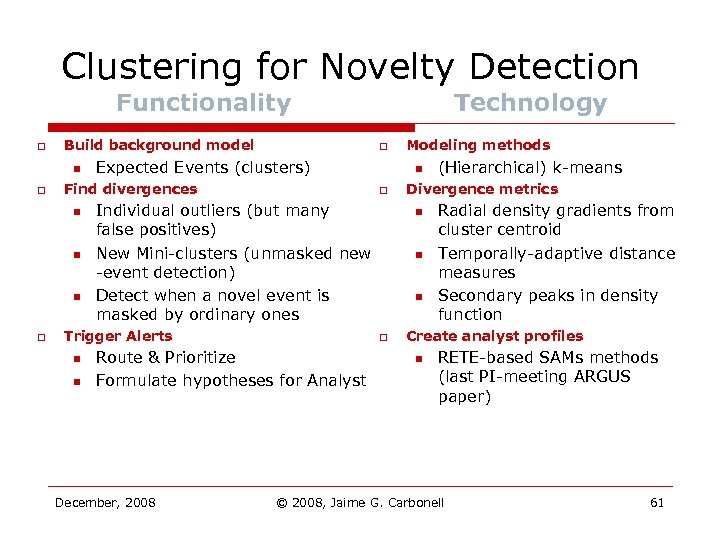

Clustering for Novelty Detection

Functionality:

- Build background model
  - Expected events (clusters)
- Find divergences
  - Individual outliers (but many false positives)
  - New mini-clusters (unmasked new-event detection)
  - Detect when a novel event is masked by ordinary ones
- Trigger alerts
  - Route & prioritize
  - Formulate hypotheses for analyst

Technology:

- Modeling methods
  - (Hierarchical) k-means
- Divergence metrics
  - Radial density gradients from cluster centroid
  - Temporally-adaptive distance measures
  - Secondary peaks in density function
- Create analyst profiles
  - RETE-based SAMs methods (last PI-meeting ARGUS paper)
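
One reading of the "individual outliers" divergence, sketched under assumptions: the background model is a set of k-means centroids already fitted to historical data, and a new point is flagged when its distance to the nearest centroid exceeds a high quantile of the background points' own distances. The quantile rule, its default of 0.99, and the function names are hypothetical. As the slide warns, this test alone tends to produce many false positives, which is why mini-cluster and density-based divergences are listed alongside it.

```python
import math


def nearest_centroid_distance(p, centroids):
    return min(math.dist(p, c) for c in centroids)


def fit_threshold(background, centroids, quantile=0.99):
    """Distance below which roughly `quantile` of the background points fall."""
    ds = sorted(nearest_centroid_distance(p, centroids) for p in background)
    return ds[min(len(ds) - 1, int(quantile * len(ds)))]


def flag_outliers(new_points, centroids, threshold):
    """Points that diverge from every expected event (cluster) in the background model."""
    return [p for p in new_points if nearest_centroid_distance(p, centroids) > threshold]


# Hypothetical background data with one expected event centred at (0.5, 0.5).
background = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
cents = [(0.5, 0.5)]
thr = fit_threshold(background, cents)
alerts = flag_outliers([(0.5, 0.5), (5.0, 5.0)], cents, thr)
```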

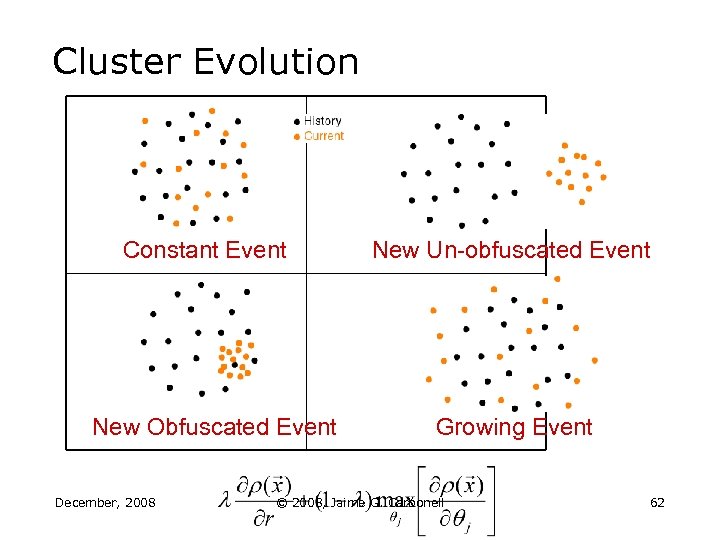

Cluster Evolution

(Figure panels: Constant Event; New Obfuscated Event; New Unobfuscated Event; Growing Event.)

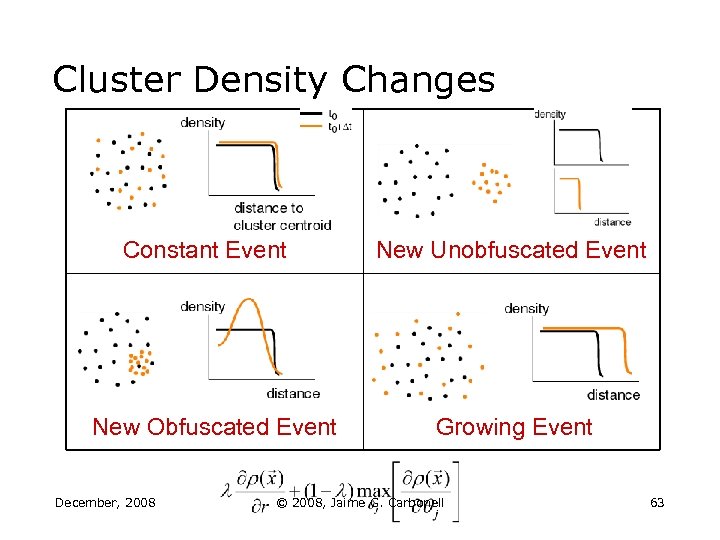

Cluster Density Changes

(Figure panels: Constant Event; New Obfuscated Event; New Unobfuscated Event; Growing Event.)

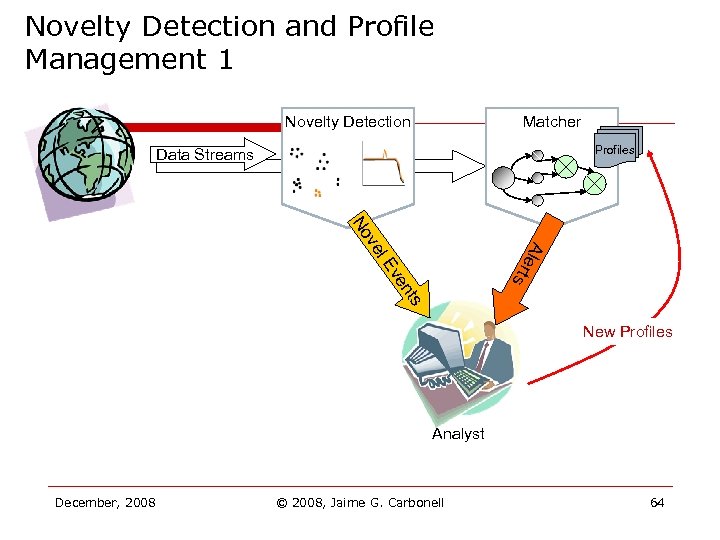

Novelty Detection and Profile Management

(Diagram components: Data Streams; Matcher; Profiles; Novelty Detection; Novel Events; Alerts; New Profiles; Analyst.)

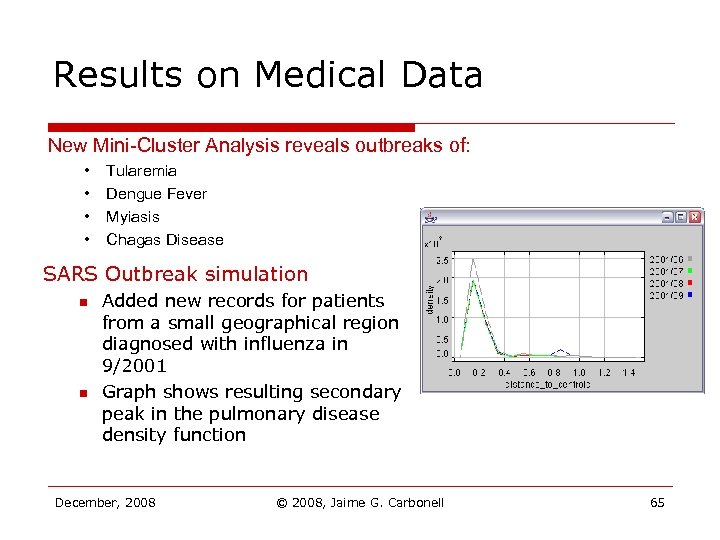

Results on Medical Data

- New mini-cluster analysis reveals outbreaks of:
  - Tularemia
  - Dengue Fever
  - Myiasis
  - Chagas Disease
  - SARS
- Outbreak simulation
  - Added new records for patients from a small geographical region diagnosed with influenza in 9/2001
  - The graph shows the resulting secondary peak in the pulmonary disease density function

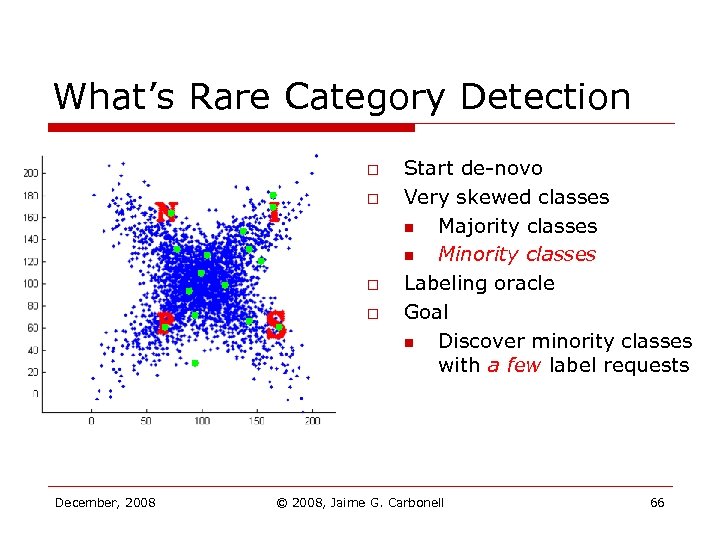

What’s Rare Category Detection?

- Start de novo
- Very skewed classes
  - Majority classes
  - Minority classes
- Labeling oracle
- Goal
  - Discover minority classes with a few label requests

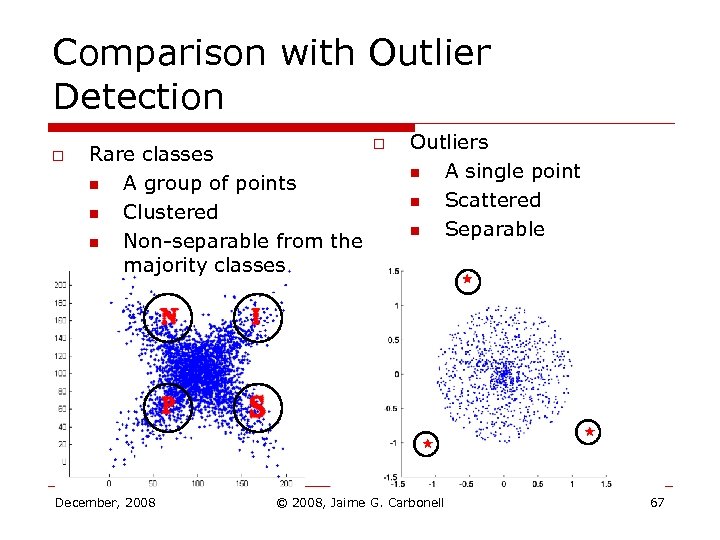

Comparison with Outlier Detection

| Rare classes | Outliers |
|---|---|
| A group of points | A single point |
| Clustered | Scattered |
| Non-separable from the majority classes | Separable |

Applications

- Fraud detection
- Network intrusion detection
- Astronomy
- Spam image detection

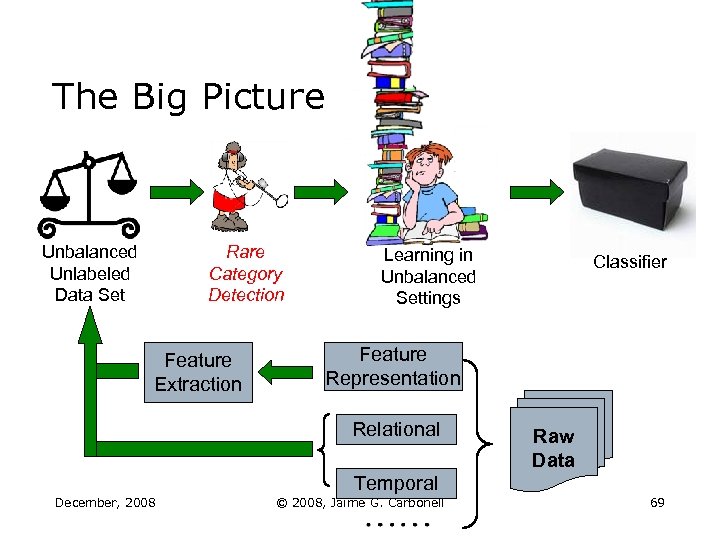

The Big Picture

(Diagram components: Raw Data (relational, temporal); Feature Extraction; Feature Representation; Unbalanced Unlabeled Data Set; Rare Category Detection; Learning in Unbalanced Settings; Classifier.)

Questions We Want to Address

- How to detect rare categories in an unbalanced, unlabeled data set with the help of an oracle?
- How to detect rare categories with different data types, such as graph data, stream data, etc.?
- How to do rare category detection with the least information about the data set?
- How to select relevant features for the rare categories?
- How to design effective classification algorithms that fully exploit the properties of the minority classes (rare category classification)?

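Notation

- Unlabeled examples: $S = \{x_1, \dots, x_n\}$, $x_i \in \mathbb{R}^d$
- m classes: $y_i \in \{1, \dots, m\}$
  - m-1 rare classes: $c = 2, \dots, m$, with priors $p_2, \dots, p_m$
  - One majority class: $y = 1$, with prior $p_1 \gg p_c$
- Goal: find at least ONE example from each rare class by requesting a few labels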

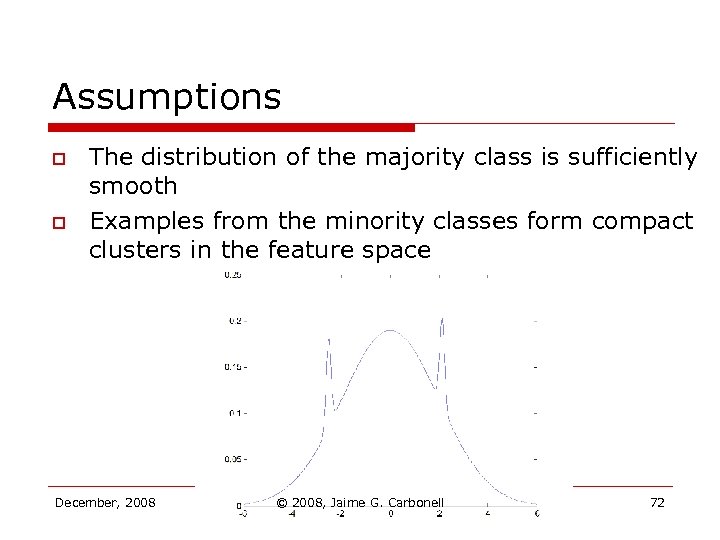

Assumptions

- The distribution of the majority class is sufficiently smooth
- Examples from the minority classes form compact clusters in the feature space

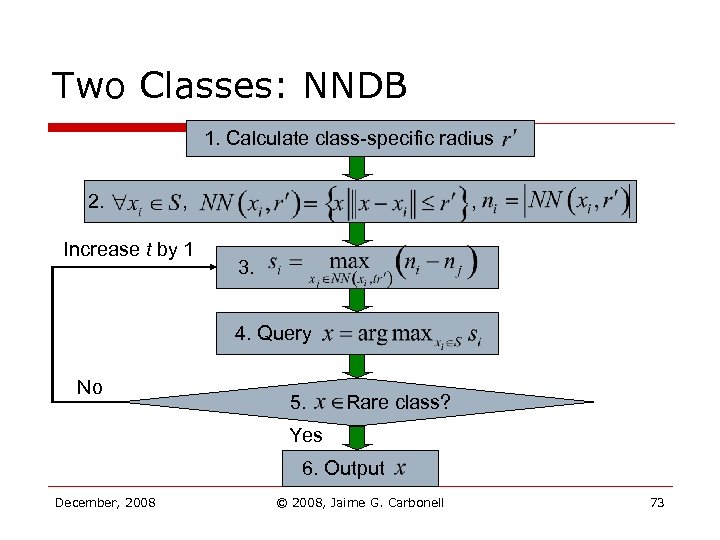

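Two Classes: NNDB

1. Calculate the class-specific radius $r'$.
2. For each example $x_i$, find $NN(x_i, r')$ and $n_i = |NN(x_i, r')|$; start with t = 1.
3. Score each unqueried example: $s_i = \max_{x_k \in NN(x_i, t r')} (n_i - n_k)$.
4. Query $x = \arg\max_i s_i$.
5. Rare class? If no, increase t by 1 and return to step 3; if yes, go to step 6.
6. Output x.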
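
The following is a sketch of NNDB as we read it from the flowchart and from He & Carbonell (2007): with K = ⌊n·p⌋ for the rare-class prior p (the rounding is our choice), the class-specific radius r' is the smallest distance from any example to its K-th nearest neighbor; n_i counts the examples within r' of x_i; and at iteration t the score s_i is the largest drop n_i − n_k over neighbors x_k within t·r'. The `oracle` callable stands in for the human labeler and, like the function name, is hypothetical. The brute-force O(n²) distance matrix keeps the sketch short.

```python
import math


def nndb(points, prior, oracle, rare_label=2):
    """Return (index of the first rare example found or None, indices queried in order)."""
    n = len(points)
    dist = [[math.dist(a, b) for b in points] for a in points]
    k = min(max(1, int(n * prior)), n - 1)  # K = floor(n * p), kept within [1, n - 1]
    # Step 1: class-specific radius r' = the smallest distance from any example to its
    # K-th nearest neighbour (position 0 of each sorted row is the example itself).
    r = min(sorted(row)[k] for row in dist)
    # Step 2: n_i = |NN(x_i, r')|, the number of examples within r' of x_i (itself included).
    counts = [sum(1 for d in row if d <= r) for row in dist]
    queried, seen = [], set()
    for t in range(1, n + 1):
        # Step 3: s_i = max over x_k in NN(x_i, t * r') of (n_i - n_k); skip queried examples.
        best, best_score = None, -math.inf
        for i in range(n):
            if i in seen:
                continue
            s = max(counts[i] - counts[j] for j in range(n) if dist[i][j] <= t * r)
            if s > best_score:
                best, best_score = i, s
        if best is None:
            break
        # Step 4: query the highest-scoring example.
        queried.append(best)
        seen.add(best)
        # Steps 5-6: stop and output as soon as the oracle reports the rare class.
        if oracle(best) == rare_label:
            return best, queried
    return None, queried


# Hypothetical data: a smooth majority class on a grid plus one compact rare cluster.
grid = [(10 * i, 10 * j) for i in range(10) for j in range(10)]
rare = [(45, 45), (45, 46), (46, 45), (46, 46)]
points = grid + rare
found, queried = nndb(points, prior=0.04, oracle=lambda i: 2 if i >= 100 else 1)
```

On this toy data the rare point closest to the grid corner (50, 50) sees the sharpest drop in local density, gets the highest score, and is found on the first query.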

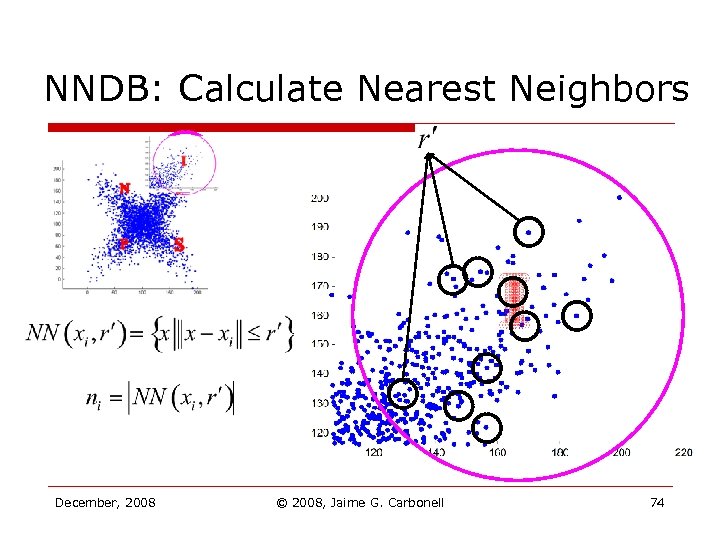

NNDB: Calculate Nearest Neighbors

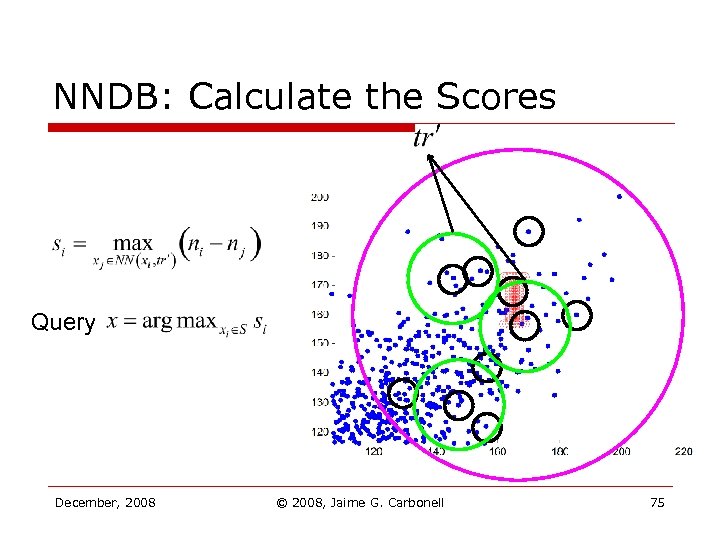

NNDB: Calculate the Scores

(Figure: the computed scores and the queried example.)

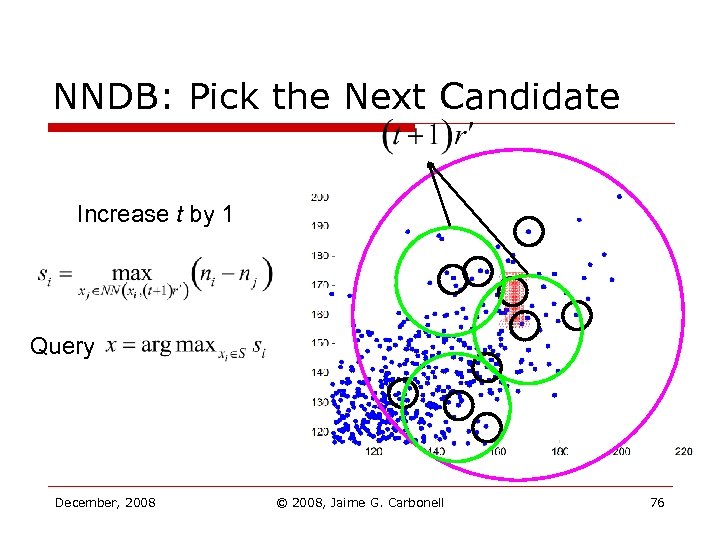

NNDB: Pick the Next Candidate

(Figure: increase t by 1 and query the next candidate.)

![Why NNDB Works](https://present5.com/presentation/392579da15d593cacf80d510e1869f6a/image-77.jpg)

Why NNDB Works

- Theoretically
  - Theorem 1 [He & Carbonell 2007]: under certain conditions, with high probability, after a few iteration steps, NNDB queries at least one example whose probability of coming from the minority class is at least 1/3
- Intuitively
  - The score measures the change in local density

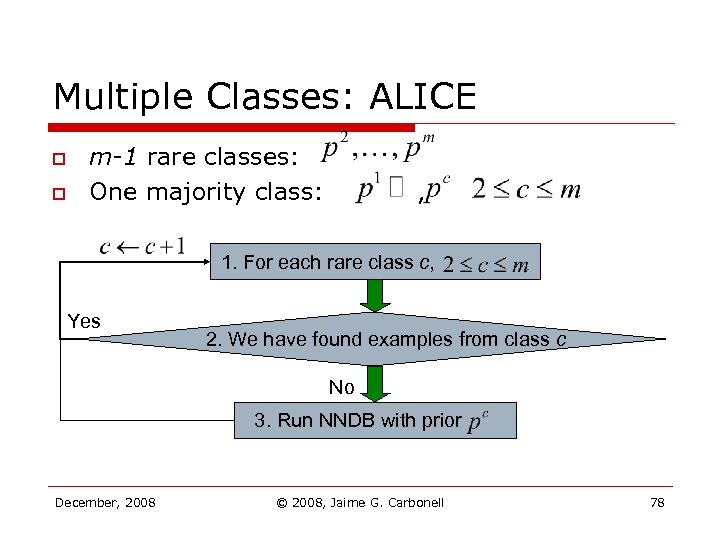

Multiple Classes: ALICE

- m-1 rare classes: $c = 2, \dots, m$, with priors $p_2, \dots, p_m$
- One majority class: $c = 1$, with prior $p_1$

1. For each rare class c:
2. Have we already found examples from class c? If yes, move on to the next rare class.
3. If no, run NNDB with prior $p_c$.
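
ALICE's outer loop can be sketched on top of a compact NNDB variant. Assumptions beyond the slide: each NNDB run uses the prior p_c of the class being sought and stops only when the oracle returns class c; examples queried in earlier runs are never re-queried; and any class seen along the way is recorded, so later classes that are already found are skipped (step 2). The `priors` dictionary, the `oracle` callable, and both function names are hypothetical.

```python
import math


def _nndb_run(dist, prior, oracle, target, queried, found):
    """One NNDB run with prior p_c; stops when the oracle returns `target`."""
    n = len(dist)
    k = min(max(1, int(n * prior)), n - 1)
    r = min(sorted(row)[k] for row in dist)                   # class-specific radius r'
    counts = [sum(1 for d in row if d <= r) for row in dist]  # n_i
    for t in range(1, n + 1):
        scores = {
            i: max(counts[i] - counts[j] for j in range(n) if dist[i][j] <= t * r)
            for i in range(n)
            if i not in queried
        }
        if not scores:
            return
        x = max(scores, key=scores.get)  # first example with the highest score
        queried.add(x)
        label = oracle(x)
        found.setdefault(label, x)  # remember the first example seen from every class
        if label == target:
            return


def alice(points, priors, oracle):
    """priors: {rare class label: prior p_c}; oracle: index -> label."""
    dist = [[math.dist(a, b) for b in points] for a in points]
    queried, found = set(), {}
    for c, p_c in priors.items():  # step 1: for each rare class c
        if c in found:             # step 2: already have an example of class c
            continue
        _nndb_run(dist, p_c, oracle, c, queried, found)  # step 3: NNDB with prior p_c
    return {c: found.get(c) for c in priors}, len(queried)


# Hypothetical data: grid majority class (label 1) plus two compact rare clusters.
grid = [(10 * i, 10 * j) for i in range(10) for j in range(10)]
rare2 = [(45, 45), (45, 46), (46, 45), (46, 46)]
rare3 = [(15, 75), (15, 76), (16, 75), (16, 76)]
labels = [1] * 100 + [2] * 4 + [3] * 4
found, n_queries = alice(grid + rare2 + rare3, {2: 0.04, 3: 0.04}, lambda i: labels[i])
```

On this toy data each run needs a single query, so one example of each rare class is found with two label requests in total.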

![Why ALICE Works](https://present5.com/presentation/392579da15d593cacf80d510e1869f6a/image-79.jpg)

Why ALICE Works

- Theoretically
  - Theorem 2 [He & Carbonell 2008]: under certain conditions, with high probability, in each outer loop of ALICE, after a few iteration steps in NNDB, ALICE queries at least one example whose probability of coming from one minority class is at least 1/3

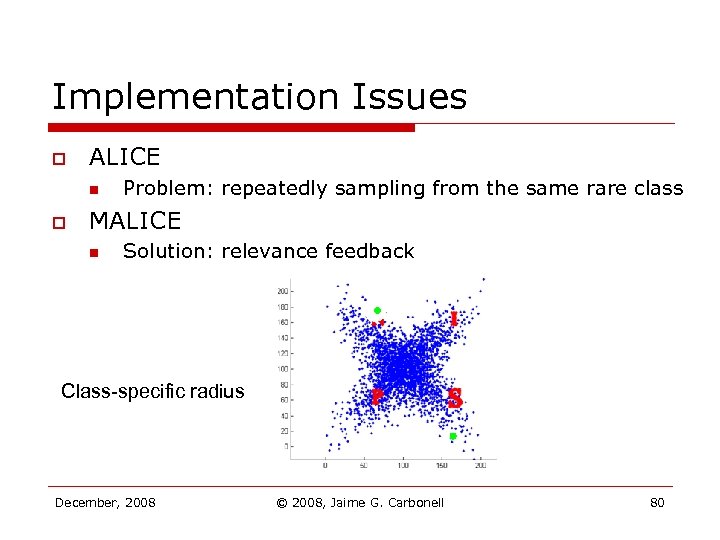

Implementation Issues

- ALICE
  - Problem: repeatedly sampling from the same rare class
- MALICE
  - Solution: relevance feedback
  - Class-specific radius

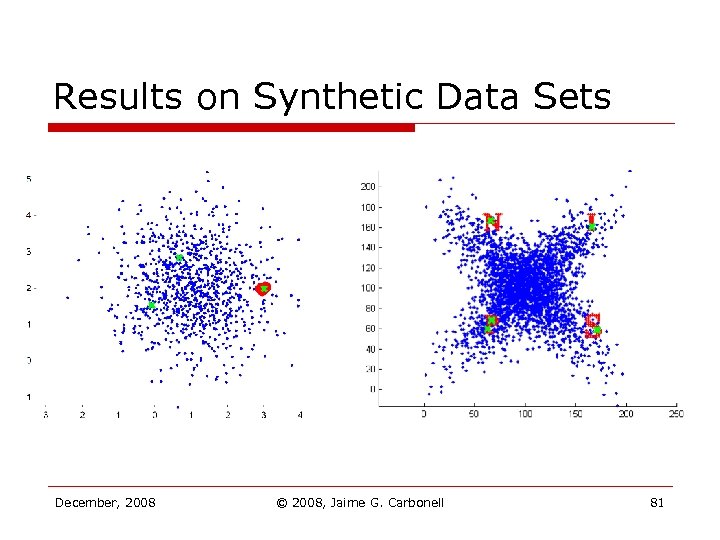

Results on Synthetic Data Sets

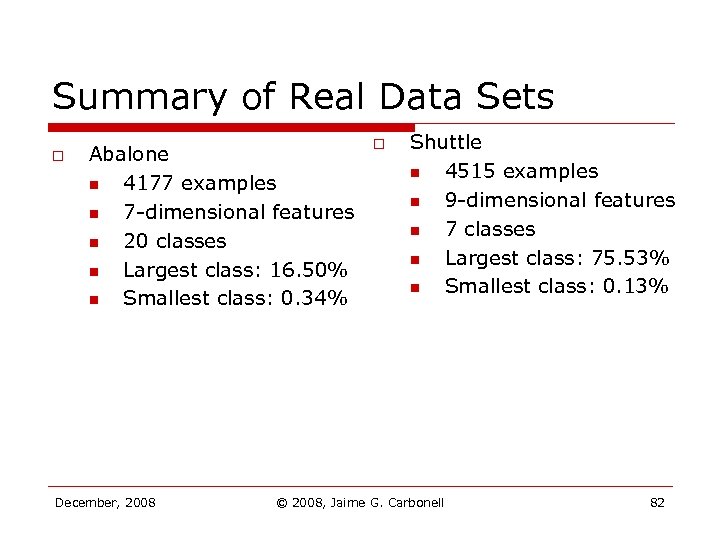

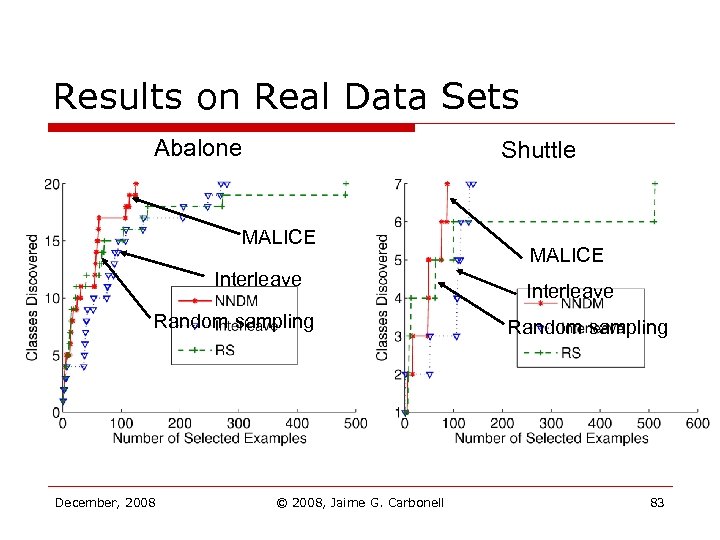

Summary of Real Data Sets

| Property | Abalone | Shuttle |
|---|---|---|
| Examples | 4177 | 4515 |
| Feature dimensions | 7 | 9 |
| Classes | 20 | 7 |
| Largest class | 16.50% | 75.53% |
| Smallest class | 0.34% | 0.13% |

Results on Real Data Sets

(Figure panels: Abalone; Shuttle. Methods compared: MALICE, Interleave, random sampling.)

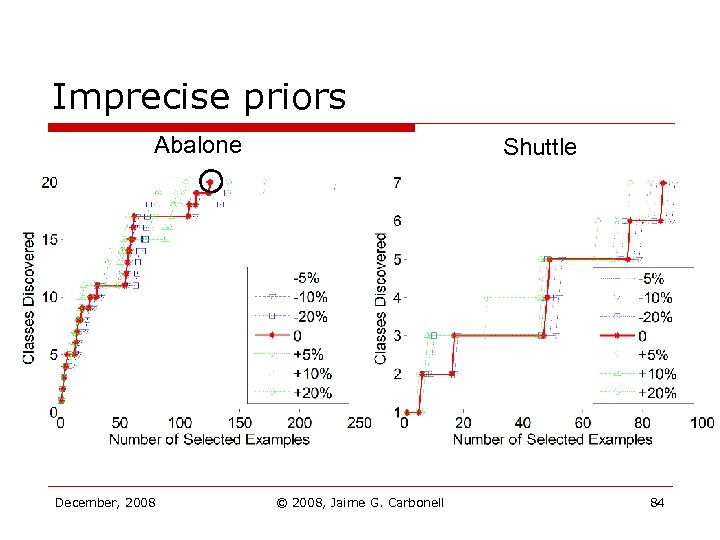

Imprecise Priors

(Figure panels: Abalone; Shuttle.)

![Specially Designed Exponential Families](https://present5.com/presentation/392579da15d593cacf80d510e1869f6a/image-85.jpg)

Specially Designed Exponential Families [Efron & Tibshirani 1996]

- A favorable compromise between parametric and nonparametric density estimation
- $g(x) = g_0(x) \exp(\beta_0 + \beta_1^\top t(x))$, where $g(x)$ is the estimated density, $g_0(x)$ the carrier density, $\beta_1$ the parameter vector, $\beta_0$ the normalizing parameter, and $t(x)$ the vector of sufficient statistics
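
To make the exponential-family form concrete, here is a one-dimensional sketch, assuming a Gaussian kernel density estimate as the carrier g_0 and, purely for illustration, the single sufficient statistic t(x) = x². The normalizing parameter β_0 is obtained numerically so that the tilted density integrates to 1 on a finite interval; the interval, grid size, bandwidth, and function names are arbitrary assumptions, not SEDER's actual estimator.

```python
import math


def gaussian_kde(data, bandwidth):
    """Carrier density g0: a one-dimensional Gaussian kernel density estimate."""
    norm = 1.0 / (len(data) * bandwidth * math.sqrt(2 * math.pi))

    def g0(x):
        return norm * sum(math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in data)

    return g0


def trapezoid(fn, lo, hi, steps=2000):
    """Composite trapezoidal rule for the integral of fn over [lo, hi]."""
    h = (hi - lo) / steps
    inner = sum(fn(lo + i * h) for i in range(1, steps))
    return h * (0.5 * fn(lo) + inner + 0.5 * fn(hi))


def tilted_density(data, bandwidth, beta1, t=lambda x: x * x, lo=-10.0, hi=10.0):
    """g(x) = g0(x) * exp(beta0 + beta1 * t(x)); beta0 makes g integrate to 1 on [lo, hi]."""
    g0 = gaussian_kde(data, bandwidth)
    z = trapezoid(lambda x: g0(x) * math.exp(beta1 * t(x)), lo, hi)
    beta0 = -math.log(z)  # normalizing parameter
    return (lambda x: g0(x) * math.exp(beta0 + beta1 * t(x))), beta0


data = [-1.0, 0.0, 0.5, 2.0]  # hypothetical sample
g, beta0 = tilted_density(data, bandwidth=0.5, beta1=-0.5)
```

With β_1 = 0 the tilt disappears and β_0 is essentially 0, recovering the plain kernel estimate; a nonzero β_1 reshapes it parametrically.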

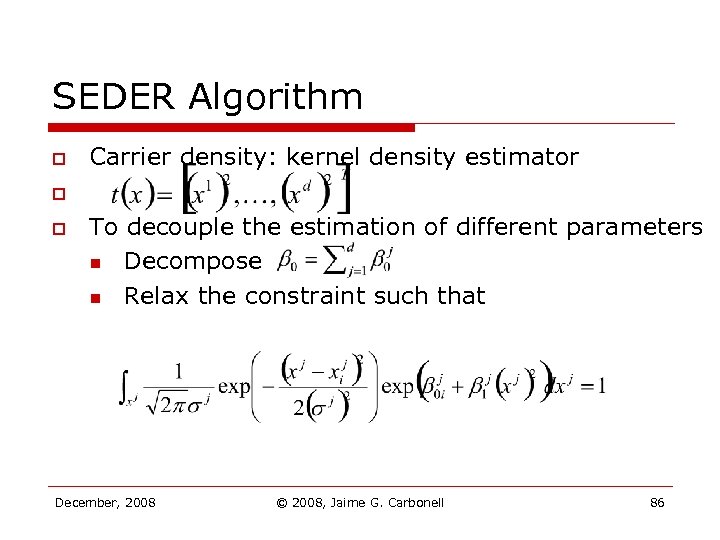

SEDER Algorithm

- Carrier density: kernel density estimator
- To decouple the estimation of different parameters:
  - Decompose
  - Relax the constraint such that

![Parameter Estimation](https://present5.com/presentation/392579da15d593cacf80d510e1869f6a/image-87.jpg)

Parameter Estimation

- Theorem 3 [to appear]: the maximum likelihood estimates of the two parameters satisfy the following conditions:

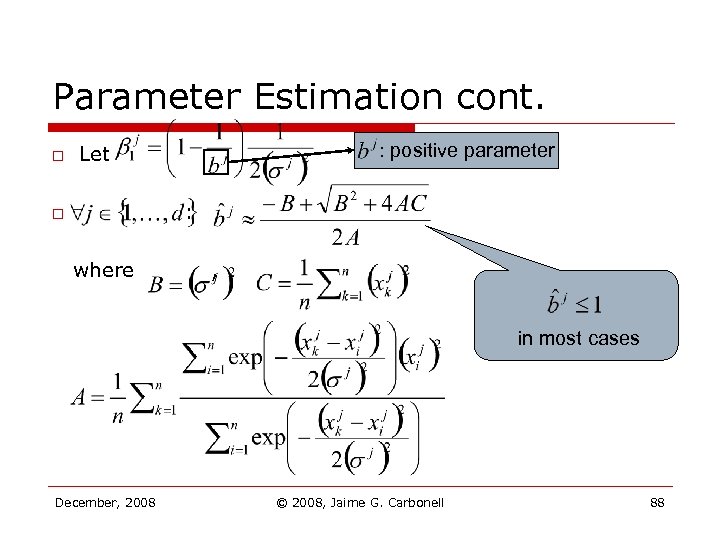

Parameter Estimation (cont.)

- : positive parameter. Let :
- where , in most cases

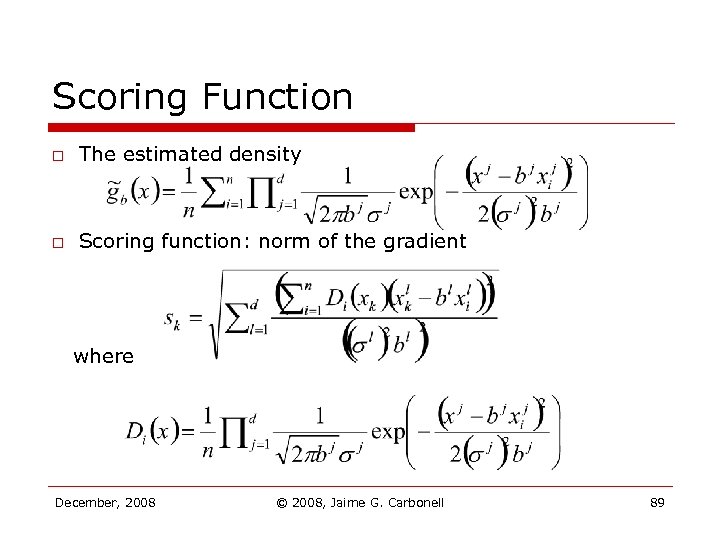

Scoring Function

- The estimated density
- Scoring function: the norm of the gradient of the estimated density
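
SEDER scores examples by the norm of the gradient of the estimated density. The sketch below computes that score in closed form for a plain Gaussian kernel density estimate, i.e., the carrier density alone, used here as a stand-in for SEDER's fitted exponential-family estimate. The bandwidth `h` and the function names are assumptions.

```python
import math


def kde(points, h):
    """Gaussian KDE in d dimensions: returns (density, gradient) callables."""
    n, d = len(points), len(points[0])
    c = 1.0 / (n * (h * math.sqrt(2 * math.pi)) ** d)

    def density(x):
        return c * sum(math.exp(-math.dist(x, p) ** 2 / (2 * h * h)) for p in points)

    def gradient(x):
        # d/dx_j of exp(-||x - p||^2 / (2h^2)) is -exp(...) * (x_j - p_j) / h^2.
        g = [0.0] * d
        for p in points:
            w = math.exp(-math.dist(x, p) ** 2 / (2 * h * h))
            for j in range(d):
                g[j] -= w * (x[j] - p[j]) / (h * h)
        return [c * gj for gj in g]

    return density, gradient


def gradient_norm_scores(points, h):
    """Score each example by the Euclidean norm of the density gradient at that example."""
    _, gradient = kde(points, h)
    return [math.hypot(*gradient(x)) for x in points]
```

Points where the density changes sharply, such as the boundary of a compact rare cluster sitting on a smooth majority class, receive large scores, which matches the intuition given for NNDB's score.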

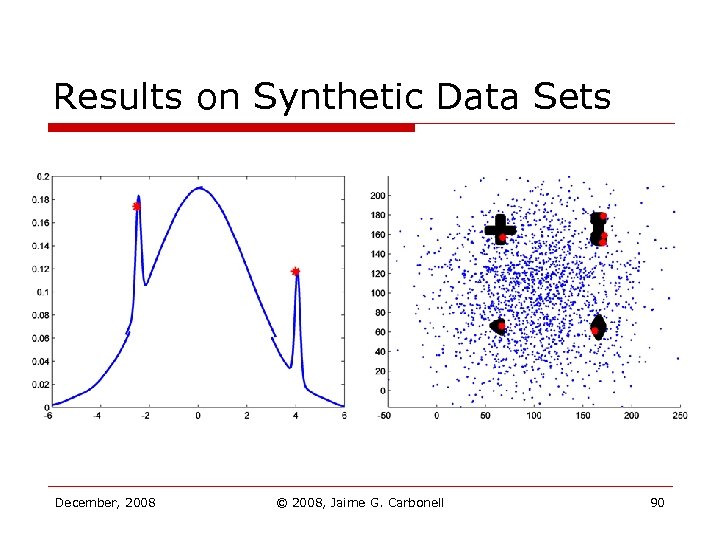

Results on Synthetic Data Sets

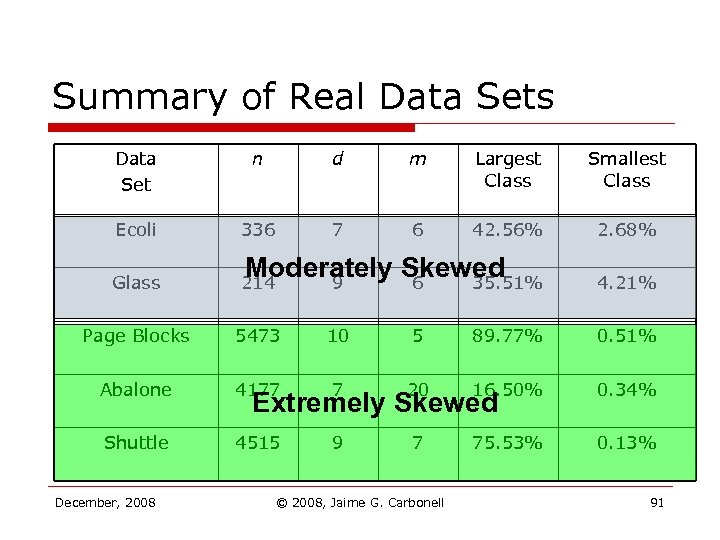

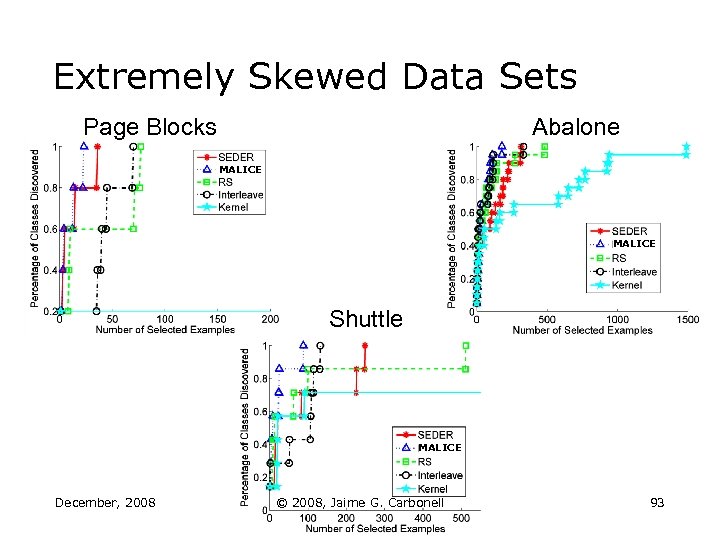

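Summary of Real Data Sets (n = number of examples, d = feature dimensions, m = number of classes)

| Data set | n | d | m | Largest class | Smallest class | Skew |
|---|---|---|---|---|---|---|
| Ecoli | 336 | 7 | 6 | 42.56% | 2.68% | Moderately skewed |
| Glass | 214 | 9 | 6 | 35.51% | 4.21% | Moderately skewed |
| Page Blocks | 5473 | 10 | 5 | 89.77% | 0.51% | Extremely skewed |
| Abalone | 4177 | 7 | 20 | 16.50% | 0.34% | Extremely skewed |
| Shuttle | 4515 | 9 | 7 | 75.53% | 0.13% | Extremely skewed |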

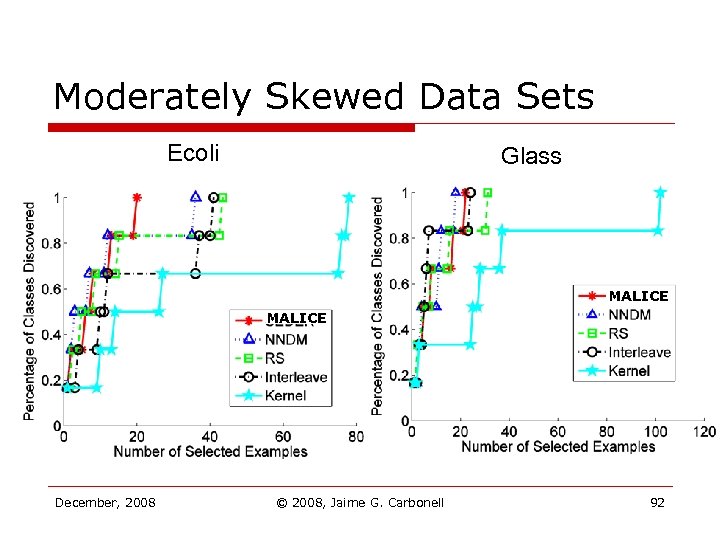

Moderately Skewed Data Sets

(Figure panels: Ecoli; Glass. Legend includes MALICE.)

Extremely Skewed Data Sets

(Figure panels: Page Blocks; Abalone; Shuttle. Legend includes MALICE.)

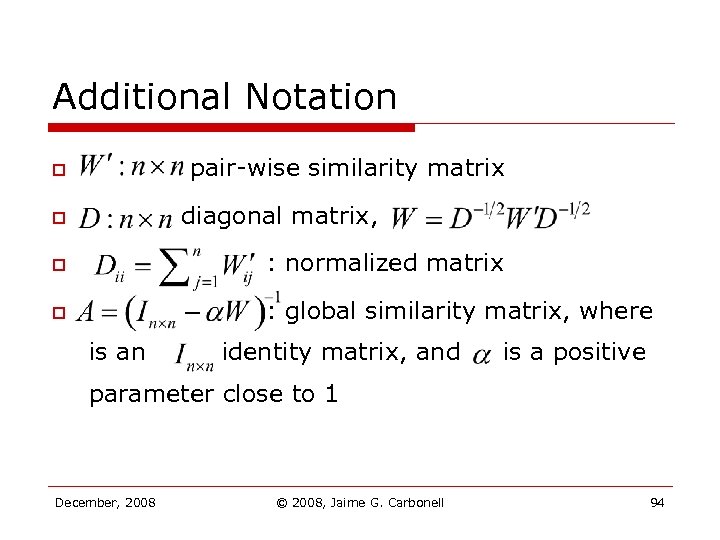

Additional Notation

- $W$: pair-wise similarity matrix
- $D$: diagonal matrix, $D_{ii} = \sum_j W_{ij}$
- $W' = D^{-1/2} W D^{-1/2}$: normalized matrix
- $A = (I_{n \times n} - \alpha W')^{-1}$: global similarity matrix, where $I_{n \times n}$ is an identity matrix, and $\alpha$ is a positive parameter close to 1
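
A sketch of the four definitions above, assuming a Gaussian kernel with width `sigma` for the pair-wise similarities and a zero diagonal in W (common choices, not stated on the slide). The inverse exists for any 0 < α < 1 because the eigenvalues of the normalized matrix W' lie in [−1, 1]. A small Gauss-Jordan routine keeps the example dependency-free; in practice one would call a linear-algebra library instead.

```python
import math


def gaussian_similarity(points, sigma):
    """W: pair-wise Gaussian-kernel similarities with a zero diagonal (assumed choices)."""
    n = len(points)
    return [[0.0 if i == j else math.exp(-math.dist(points[i], points[j]) ** 2 / (2 * sigma ** 2))
             for j in range(n)] for i in range(n)]


def invert(m):
    """Matrix inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0.0:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


def global_similarity(points, sigma=1.0, alpha=0.99):
    n = len(points)
    W = gaussian_similarity(points, sigma)
    D = [sum(row) for row in W]  # diagonal of D: D_ii = sum_j W_ij
    # Normalized matrix W' = D^{-1/2} W D^{-1/2}.
    Wn = [[W[i][j] / math.sqrt(D[i] * D[j]) for j in range(n)] for i in range(n)]
    # Global similarity A = (I - alpha * W')^{-1}.
    M = [[(1.0 if i == j else 0.0) - alpha * Wn[i][j] for j in range(n)] for i in range(n)]
    return invert(M)
```

Because $(I - \alpha W')^{-1} = \sum_k (\alpha W')^k$, each entry of A aggregates similarity along paths of every length, which is one way to see why it can be more informative than the pair-wise matrix alone.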

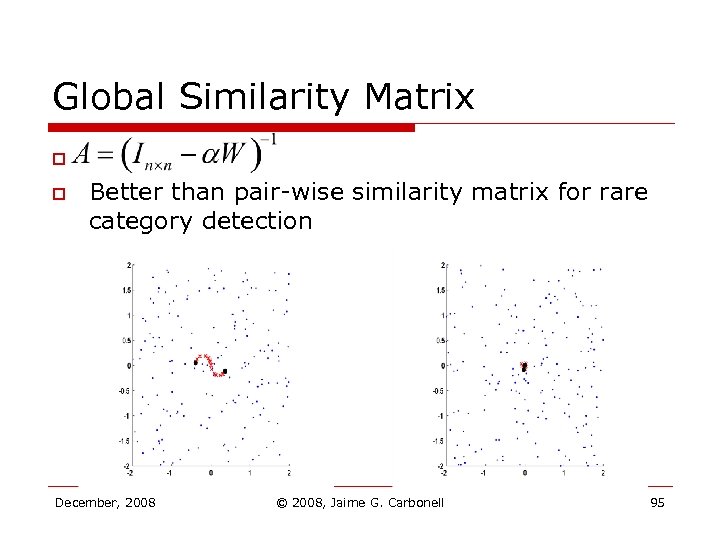

Global Similarity Matrix

- Better than the pair-wise similarity matrix for rare category detection

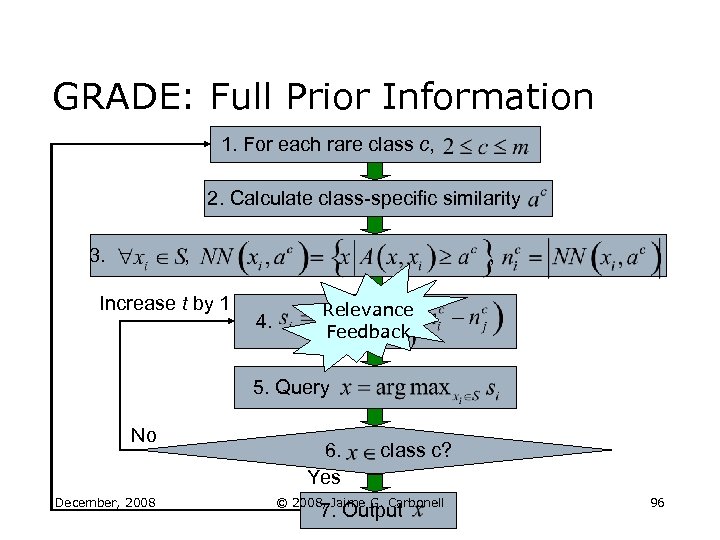

GRADE: Full Prior Information

1. For each rare class c:
2. Calculate the class-specific similarity.
3. Increase t by 1.
4. Relevance feedback.
5. Query.
6. Class c? If no, return to step 3; if yes, go to step 7.
7. Output.

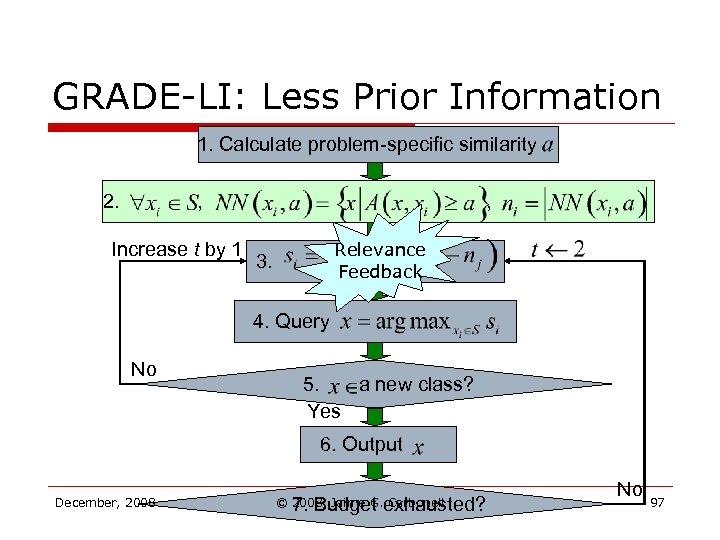

GRADE-LI: Less Prior Information

1. Calculate the problem-specific similarity.
2. Increase t by 1.
3. Relevance feedback.
4. Query.
5. A new class? If no, return to step 2; if yes, go to step 6.
6. Output.
7. Budget exhausted? If no, return to step 2.

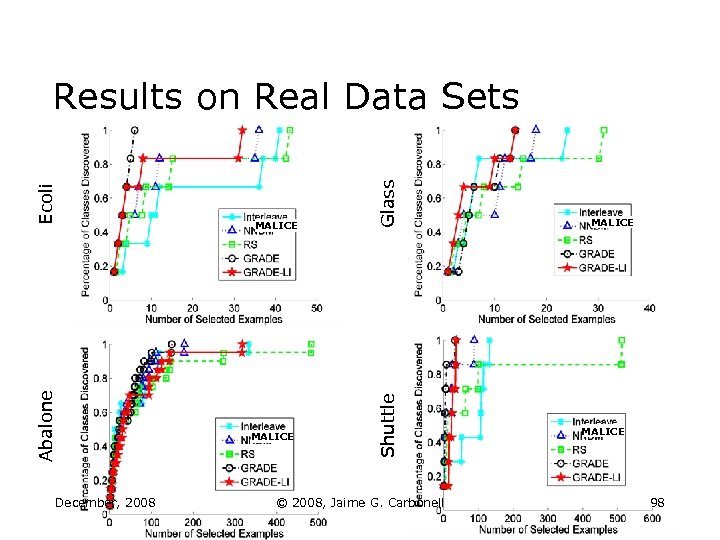

Results on Real Data Sets

(Figure panels: Ecoli; Glass; Abalone; Shuttle. Legend includes MALICE.)

Applying Machine Learning for Data Mining in Business

- Step 1: Have a clear objective to optimize
- Step 2: Have sufficient data
- Step 3: Clean, normalize, and clean the data some more
- Step 4: Make sure there isn’t an easy solution (e.g., a small number of rules from an expert)
- Step 5: Do the data mining for real
- Step 6: Cross-validate, improve, go to step 5

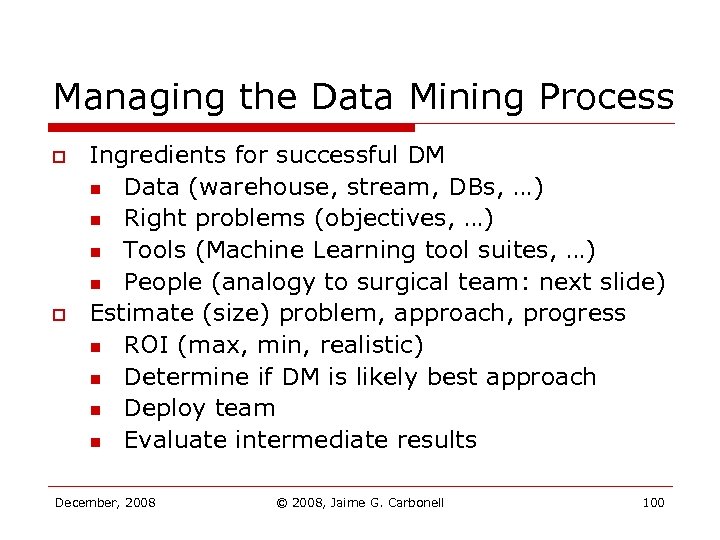

Managing the Data Mining Process

- Ingredients for successful DM
  - Data (warehouse, stream, DBs, …)
  - Right problems (objectives, …)
  - Tools (Machine Learning tool suites, …)
  - People (analogy to a surgical team: next slide)
- Estimate (size) problem, approach, progress
  - ROI (max, min, realistic)
  - Determine if DM is likely the best approach
  - Deploy team
  - Evaluate intermediate results

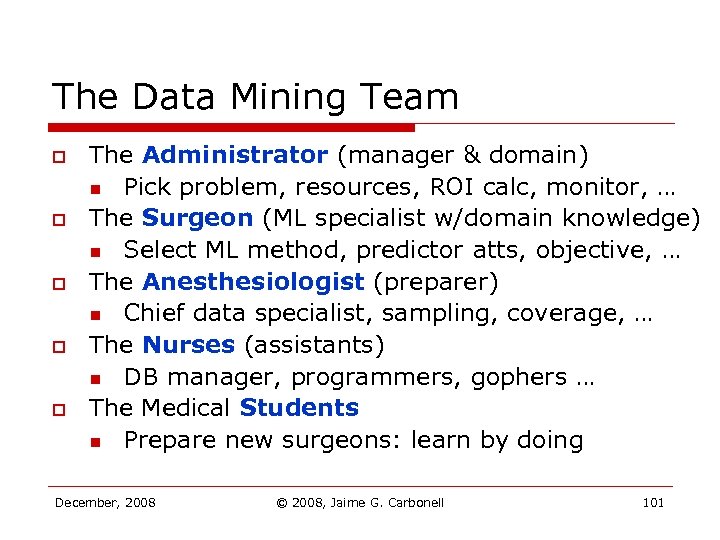

The Data Mining Team

- The Administrator (manager & domain)
  - Pick problem, resources, ROI calc, monitor, …
- The Surgeon (ML specialist w/ domain knowledge)
  - Select ML method, predictor atts, objective, …
- The Anesthesiologist (preparer)
  - Chief data specialist, sampling, coverage, …
- The Nurses (assistants)
  - DB manager, programmers, gophers, …
- The Medical Students
  - Prepare new surgeons: learn by doing

Need Some Domain Expertise

- Data preparation
  - What are good candidate predictor attributes?
  - How to combine multiple objectives?
  - How to sample? (e.g., identify cyclic periods)
- Progress monitoring and results interpretation
  - How accurate must the prediction be?
  - Do we need more or different data?
  - Are we pursuing reasonable objective(s)?
- Application of DM once accomplished
- Update of DM when/as the environment evolves

Typical Data Mining Pitfalls

- Insufficient data to establish predictive patterns
- Incorrect selection of predictor attributes
  - Statistics to the rescue (e.g., χ² test)
- Unrealistic objectives (e.g., fraud recovery)
- Inappropriate ML method selection
- Data preparation problems
  - Failure to normalize across data sets
  - Systematic bias in original data collection
- Belief in DM as panacea or black magic
- Giving up too soon (very common)
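
A χ² test can screen a candidate predictor attribute against the objective: build a contingency table of attribute values versus objective classes and compare Pearson's statistic with the critical value for its degrees of freedom (3.841 for one degree of freedom at the 5% level). A minimal sketch; the table layout and the function name are assumptions, and the approximation is unreliable when expected counts are small.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c contingency table
    (rows: predictor attribute values, columns: objective classes)."""
    rows = [sum(r) for r in table]
    cols = [sum(c) for c in zip(*table)]
    total = sum(rows)
    stat = 0.0
    for i, r in enumerate(table):
        for j, observed in enumerate(r):
            expected = rows[i] * cols[j] / total  # count expected under independence
            stat += (observed - expected) ** 2 / expected
    dof = (len(rows) - 1) * (len(cols) - 1)
    return stat, dof


# Hypothetical counts: a binary attribute versus a binary objective.
stat, dof = chi_square([[30, 10], [10, 30]])
```

Here the statistic (20.0) is far above 3.841, so the attribute and the objective are unlikely to be independent; a table with identical rows yields 0.0.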

Final Words on Data Mining

- Data Mining is:
  - 1/3 science (math, algorithms, …)
  - …and 1/3 engineering (data prep, analysis, …)
  - …and 1/3 “art” (experience really counts)
- 10 years ago it was mostly art
- 10 years from now it will be mostly engineering
- What to expect from the research labs?
  - Better supervised algorithms
  - Focus on unsupervised learning + optimization
  - Move to incorporate semi-structured (text) data

THANK YOU!
