0bbf93abe52d7ef07fe47b9049369b88.ppt

- Number of slides: 41

Machine learning & category recognition • Cordelia Schmid, Jakob Verbeek

Content of the course • Visual object recognition • Robust image description • Machine learning

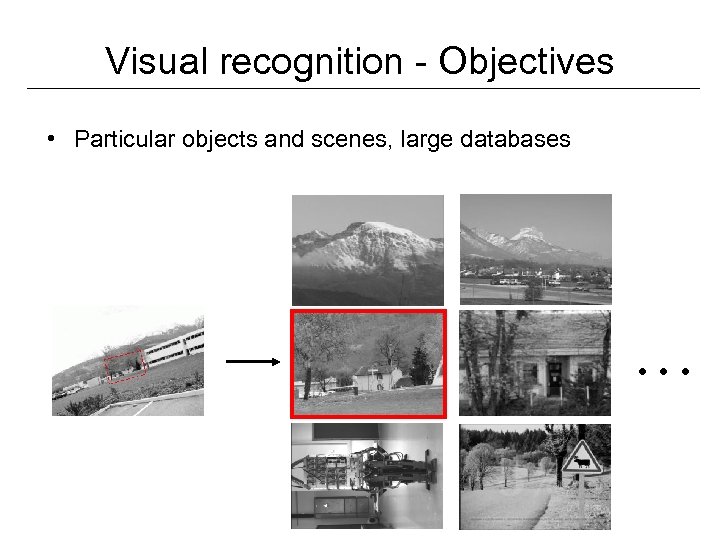

Visual recognition - Objectives • Particular objects and scenes, large databases …

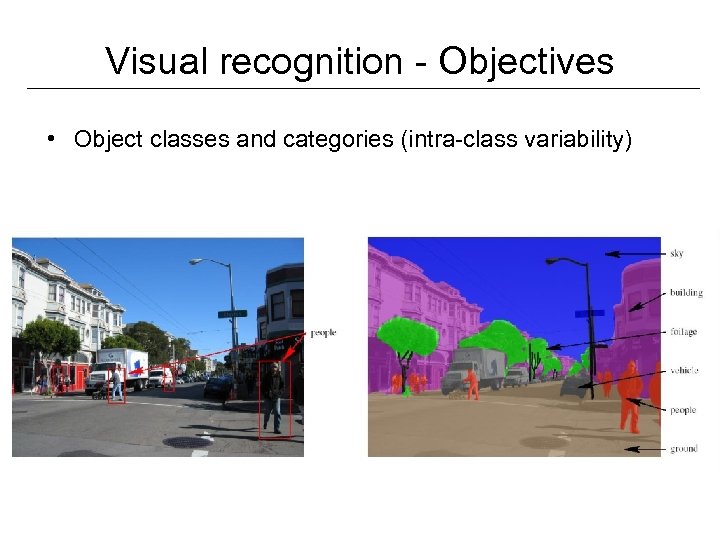

Visual recognition - Objectives • Object classes and categories (intra-class variability)

Visual object recognition

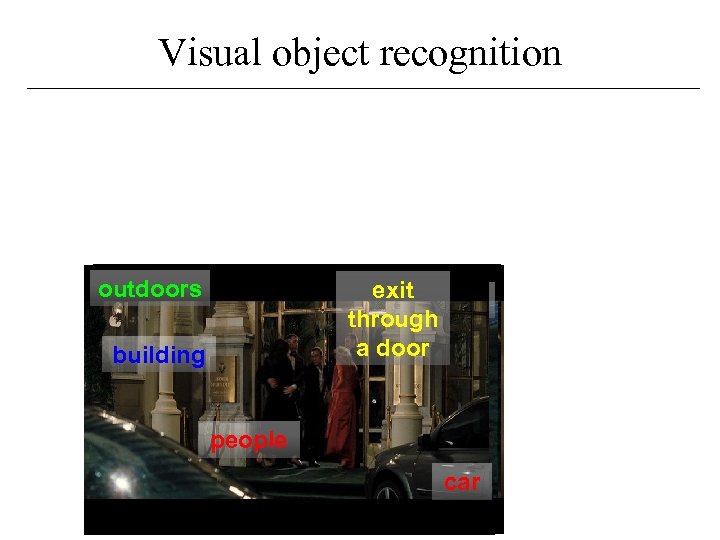

Visual object recognition • [Figure: example images annotated with scene labels (outdoors, indoors, countryside), object labels (car, person, house, building, door, glass, candle, road, field, street, people) and action labels (exit through a door, enter a building, drinking, car crash, kidnapping)]

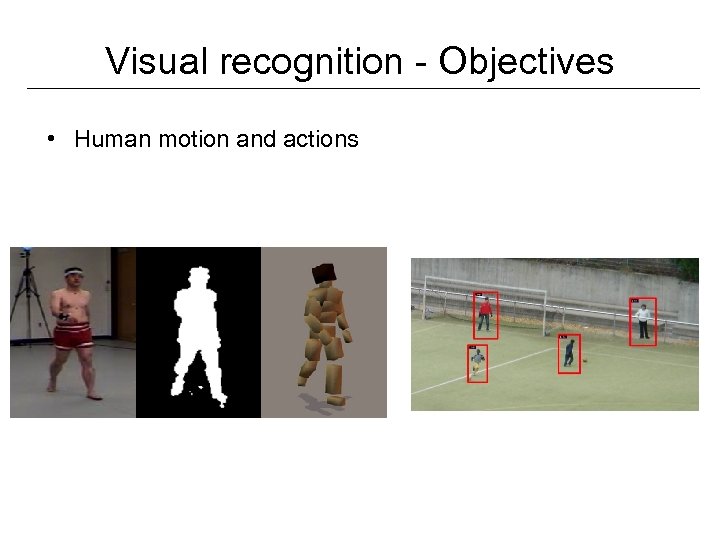

Visual recognition - Objectives • Human motion and actions

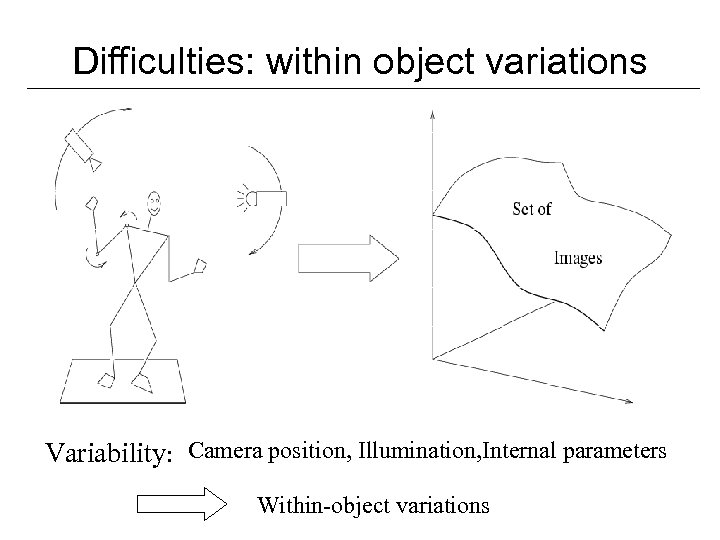

Difficulties: within-object variations • Variability: camera position, illumination, internal parameters

Difficulties: within-class variations

Visual recognition • Robust image description – Appropriate descriptors for objects and categories • Statistical modeling and machine learning for vision – Selection and adaptation of existing techniques

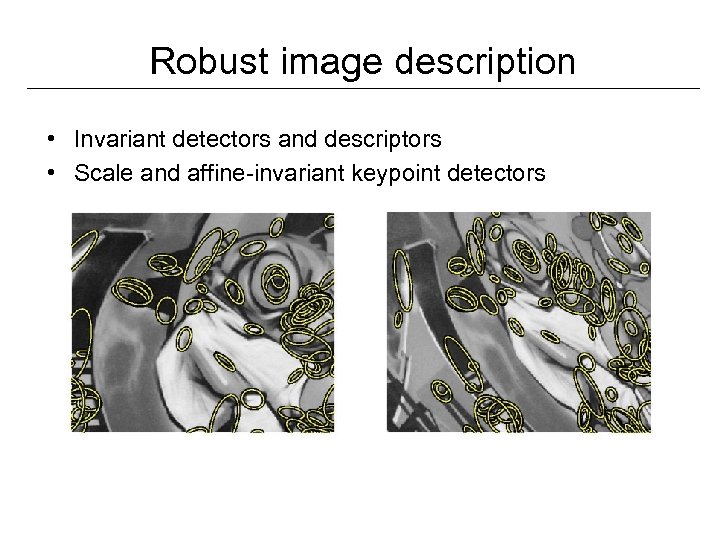

Robust image description • Invariant detectors and descriptors • Scale and affine-invariant keypoint detectors

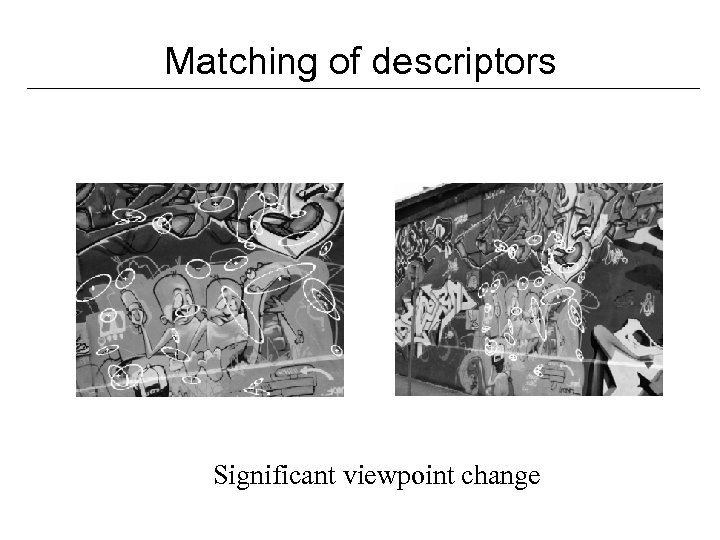

Matching of descriptors • Significant viewpoint change

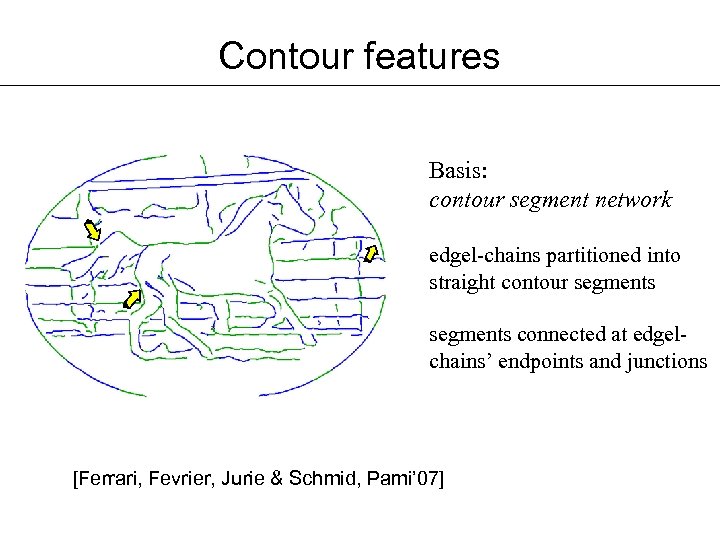

Contour features • Basis: contour segment network – Edgel chains partitioned into straight contour segments – Segments connected at the edgel chains’ endpoints and junctions [Ferrari, Fevrier, Jurie & Schmid, PAMI’07], [Ferrari et al., ECCV 2006]

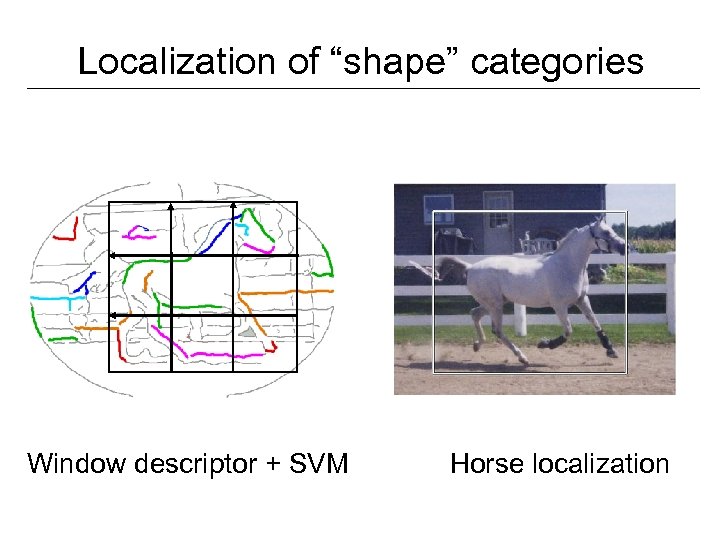

Localization of “shape” categories • Window descriptor + SVM • Example: horse localization

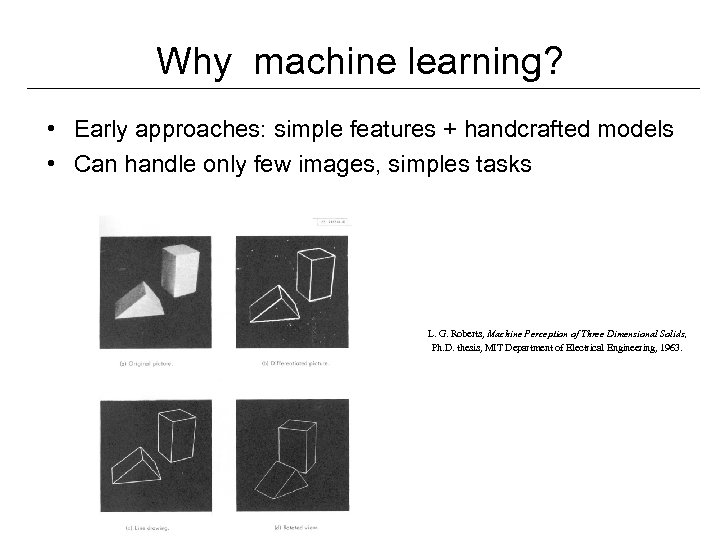

Why machine learning? • Early approaches: simple features + handcrafted models • Can handle only a few images and simple tasks [L. G. Roberts, Machine Perception of Three-Dimensional Solids, Ph.D. thesis, MIT Department of Electrical Engineering, 1963]

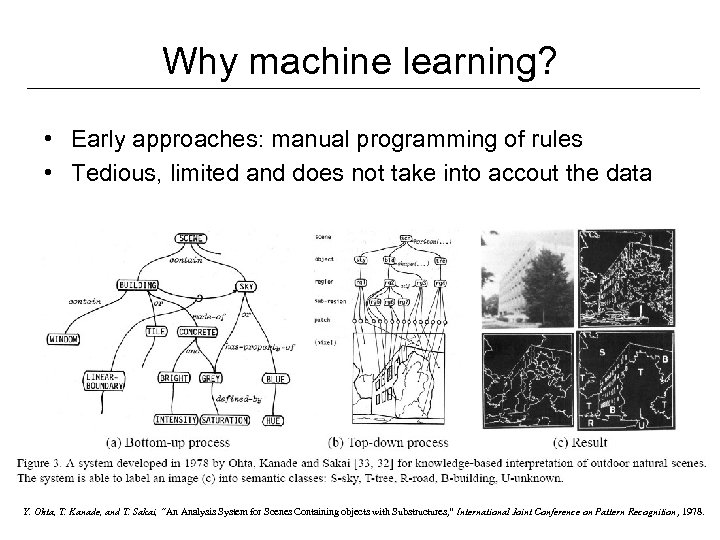

Why machine learning? • Early approaches: manual programming of rules • Tedious, limited, and does not take the data into account [Y. Ohta, T. Kanade, and T. Sakai, “An Analysis System for Scenes Containing Objects with Substructures,” International Joint Conference on Pattern Recognition, 1978]

Why machine learning? • Today: lots of data, complex tasks – Internet images, personal photo albums – Movies, news, sports

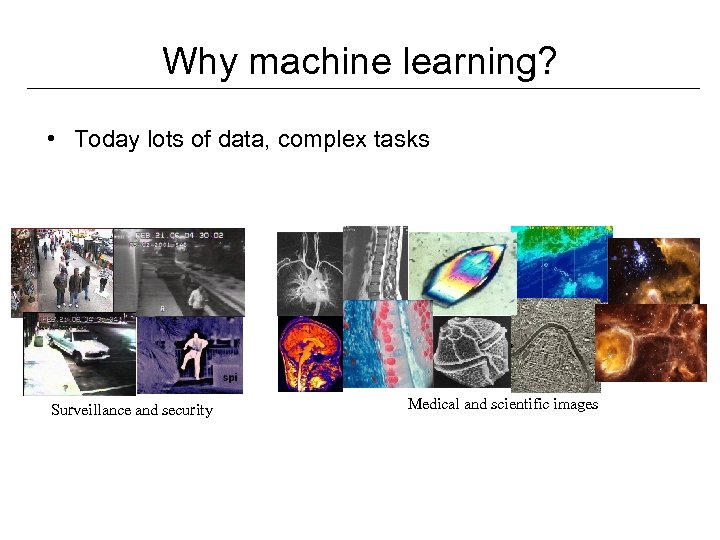

Why machine learning? • Today: lots of data, complex tasks – Surveillance and security – Medical and scientific images

Why machine learning? • Today: Lots of data, complex tasks • Instead of trying to encode rules directly, learn them from examples of inputs and desired outputs

Types of learning problems • Supervised – Classification – Regression • Unsupervised • Semi-supervised • Reinforcement learning • Active learning • …

Supervised learning • Given training examples of inputs and corresponding outputs, produce the “correct” outputs for new inputs • Two main scenarios: – Classification: outputs are discrete variables (category labels). Learn a decision boundary that separates one class from the other – Regression: also known as “curve fitting” or “function approximation”. Learn a continuous input-output mapping from (possibly noisy) examples
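To make the two scenarios concrete, here is a minimal sketch in plain Python. The nearest-centroid classifier and the least-squares line fit are illustrative choices, not methods named on the slide; the function names are hypothetical.

```python
from collections import defaultdict

def fit_nearest_centroid(points, labels):
    """Classification: learn one mean vector (centroid) per class label."""
    sums, counts = {}, defaultdict(int)
    for x, y in zip(points, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict_nearest_centroid(centroids, x):
    """Assign x to the class whose centroid is closest (squared Euclidean distance)."""
    def sqdist(c):
        return sum((a - b) ** 2 for a, b in zip(c, x))
    return min(centroids, key=lambda y: sqdist(centroids[y]))

def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx
```

In the classifier, the decision boundary between two classes is the set of points equidistant from their centroids. The line fit assumes the inputs are not all identical (otherwise `sxx` is zero).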

Unsupervised Learning • Given only unlabeled data as input, learn some sort of structure • The objective is often more vague or subjective than in supervised learning. This is more of an exploratory/descriptive data analysis

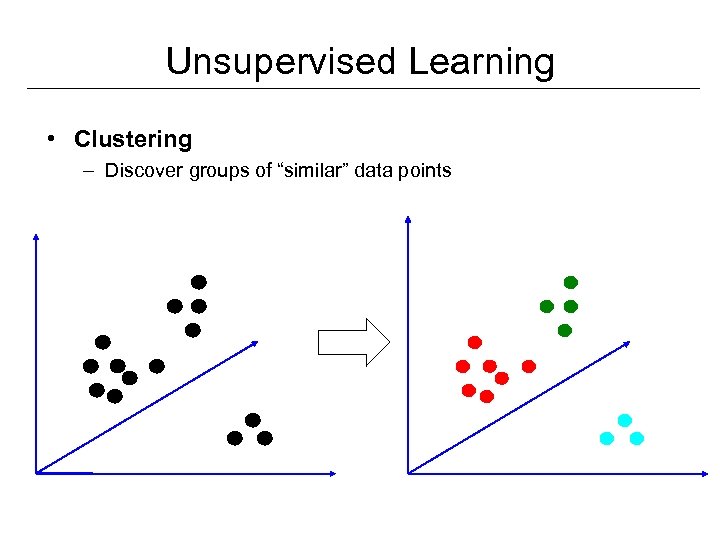

Unsupervised Learning • Clustering – Discover groups of “similar” data points
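One common way to discover such groups is k-means (Lloyd's algorithm). The slide does not name an algorithm, so this is only a sketch; initializing the centroids from the first `k` points is a simplifying assumption.

```python
def kmeans(points, k, iters=20):
    """Lloyd's algorithm: alternate point assignment and centroid update."""
    # Naive initialization (assumption): use the first k points as centroids.
    centroids = [list(p) for p in points[:k]]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point goes to its nearest centroid.
        for i, p in enumerate(points):
            assign[i] = min(
                range(k),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])),
            )
        # Update step: each centroid becomes the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(c) / len(members) for c in zip(*members)]
    return centroids, assign
```

The result depends on the initialization; in practice several random restarts are usually compared.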

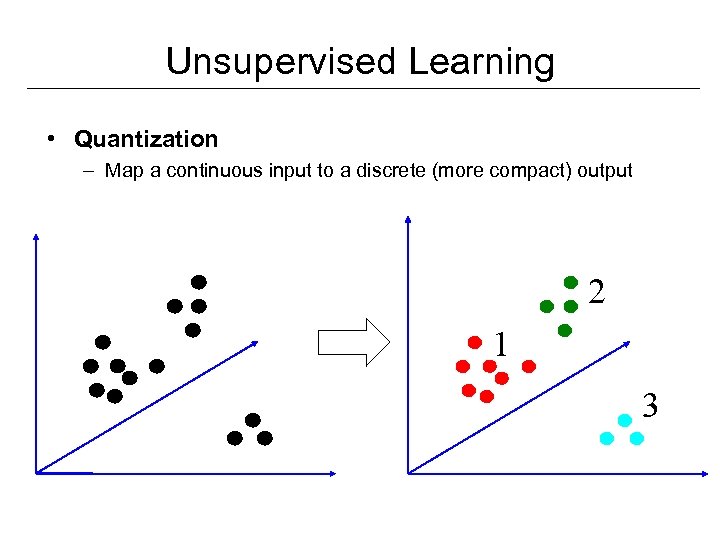

Unsupervised Learning • Quantization – Map a continuous input to a discrete (more compact) output
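As a minimal sketch of quantization, a continuous vector can be replaced by the index of its nearest entry in a codebook. The codebook below is made up for illustration; in practice it would typically come from clustering the training data.

```python
def quantize(x, codebook):
    """Return the index of the codeword closest to x (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda j: sum((a - b) ** 2 for a, b in zip(x, codebook[j])),
    )

# Hypothetical three-entry codebook in 2-D.
codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
index = quantize((0.9, 1.2), codebook)  # nearest codeword is (1.0, 1.0), index 1
```

The output is a single integer, which is more compact than the original vector but loses the position within the cell.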

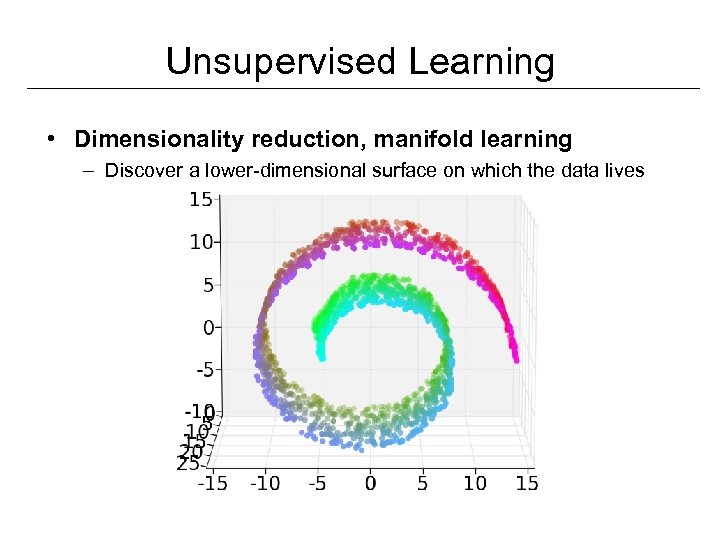

Unsupervised Learning • Dimensionality reduction, manifold learning – Discover a lower-dimensional surface on which the data lives
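The simplest instance is linear: principal component analysis finds the direction along which the data varies most. The sketch below estimates only the first principal component with power iteration; it is an illustrative choice, not a method stated on the slide, and it assumes the data has non-zero variance and that the all-ones start vector is not orthogonal to the leading direction.

```python
import math

def first_principal_component(points, iters=100):
    """Return (mean, unit direction of largest variance) via power iteration."""
    n, d = len(points), len(points[0])
    mean = [sum(p[i] for p in points) / n for i in range(d)]
    centered = [[p[i] - mean[i] for i in range(d)] for p in points]
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    v = [1.0] * d  # start vector (assumption: not orthogonal to the answer)
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v

def project(point, mean, v):
    """1-D coordinate of a point along the principal direction."""
    return sum((p - m) * c for p, m, c in zip(point, mean, v))
```

Manifold learning methods generalize this idea to curved, non-linear surfaces.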

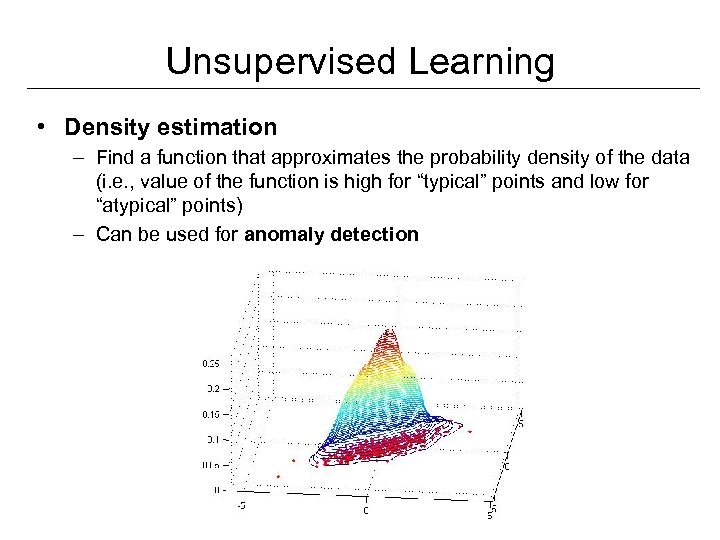

Unsupervised Learning • Density estimation – Find a function that approximates the probability density of the data (i.e., the value of the function is high for “typical” points and low for “atypical” points) – Can be used for anomaly detection
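A minimal sketch of density-based anomaly detection, assuming a single 1-D Gaussian is an adequate density model (a strong simplification); the threshold value is arbitrary and would need tuning on real data.

```python
import math

def fit_gaussian(xs):
    """Maximum-likelihood mean and variance of 1-D data."""
    n = len(xs)
    mu = sum(xs) / n
    var = sum((x - mu) ** 2 for x in xs) / n
    return mu, var

def gaussian_pdf(x, mu, var):
    """Density of N(mu, var) evaluated at x."""
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def is_anomaly(x, mu, var, threshold):
    """Flag x as atypical when its estimated density falls below the threshold."""
    return gaussian_pdf(x, mu, var) < threshold
```

For example, after fitting to the values 9, 10, 11, 10, 10, a point at 10 has high density while a point at 20 has a density close to zero and would be flagged.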

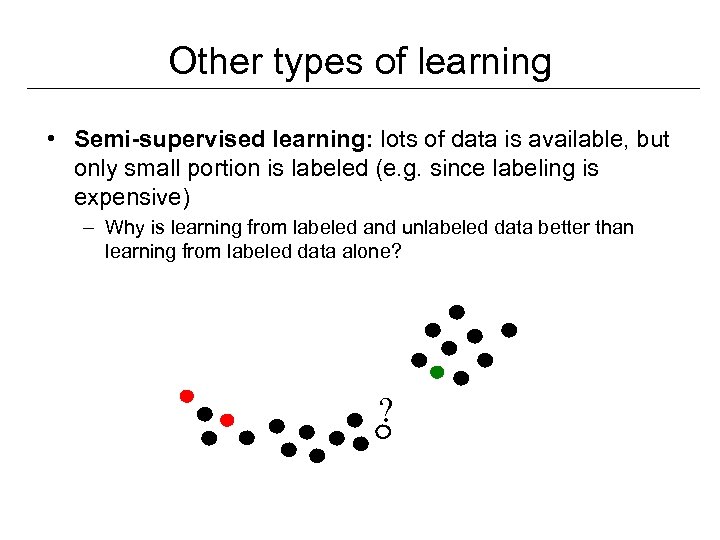

Other types of learning • Semi-supervised learning: lots of data is available, but only a small portion is labeled (e.g., since labeling is expensive) – Why is learning from labeled and unlabeled data better than learning from labeled data alone?

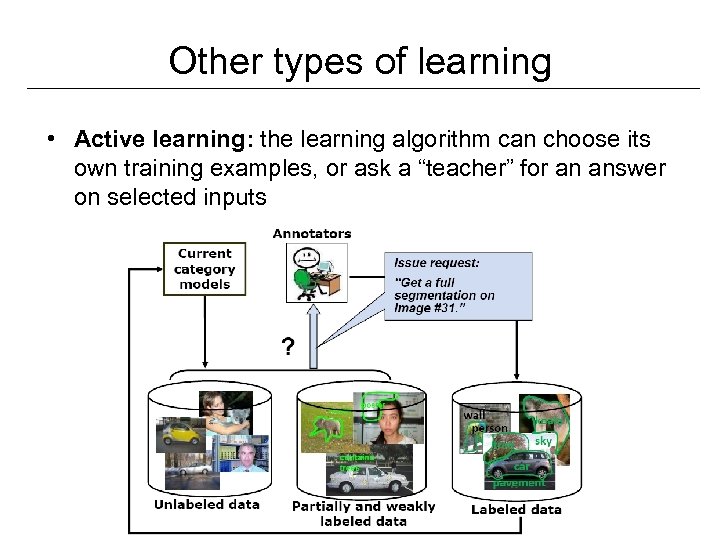

Other types of learning • Active learning: the learning algorithm can choose its own training examples, or ask a “teacher” for an answer on selected inputs
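One simple selection strategy is uncertainty sampling: query the input the current model is least sure about. The slide does not prescribe a strategy, so this is an assumption, and the 1-D logistic model below is hypothetical.

```python
import math

def predict_proba(x, weight=1.0, bias=0.0):
    """Hypothetical 1-D logistic model: probability that x is in the positive class."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def select_query(pool, proba=predict_proba):
    """Return the unlabeled input whose prediction is closest to 0.5 (most uncertain)."""
    return min(pool, key=lambda x: abs(proba(x) - 0.5))
```

With the pool `[-3.0, 0.2, 4.0]`, the learner would ask the “teacher” about `0.2`, the point nearest the current decision boundary.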

Other types of learning • Reinforcement learning: an agent takes inputs from the environment, and takes actions that affect the environment. Occasionally, the agent gets a scalar reward or punishment. The goal is to learn to produce action sequences that maximize the expected reward (e.g., driving a robot without bumping into obstacles)
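The reward-maximization idea can be sketched with tabular Q-learning on a toy problem. Everything here is a made-up illustration: a five-cell corridor where the leftmost cell is an obstacle (reward -1) and the rightmost cell is the goal (reward +1); Q-learning itself is one possible algorithm and is not named on the slide.

```python
import random

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning on a 5-cell corridor (hypothetical toy environment).

    Cells 0 and 4 are terminal: reaching 0 gives reward -1 (obstacle),
    reaching 4 gives reward +1 (goal). Actions are -1 (left) and +1 (right).
    """
    rng = random.Random(seed)
    actions = (-1, +1)
    q = {(s, a): 0.0 for s in range(1, 4) for a in actions}
    for _ in range(episodes):
        s = 2  # every episode starts in the middle cell
        while s not in (0, 4):
            a = rng.choice(actions)  # purely random exploration, for simplicity
            s_next = s + a
            if s_next == 4:
                target = 1.0
            elif s_next == 0:
                target = -1.0
            else:
                # No immediate reward; bootstrap from the best next action.
                target = gamma * max(q[(s_next, b)] for b in actions)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s_next
    return q

def greedy_action(q, s):
    """Action with the highest learned value in state s."""
    return max((-1, +1), key=lambda a: q[(s, a)])
```

After training, the greedy policy moves right in every non-terminal cell, away from the obstacle and toward the goal.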

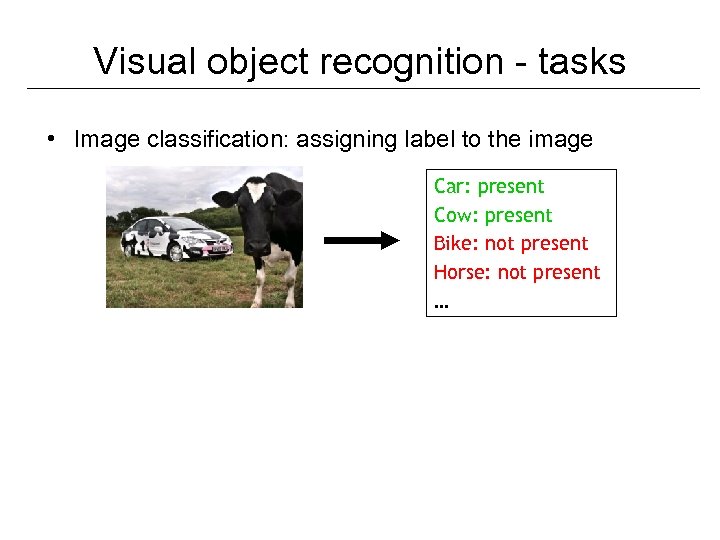

Visual object recognition - tasks • Image classification: assigning a label to the image – Car: present – Cow: present – Bike: not present – Horse: not present – …

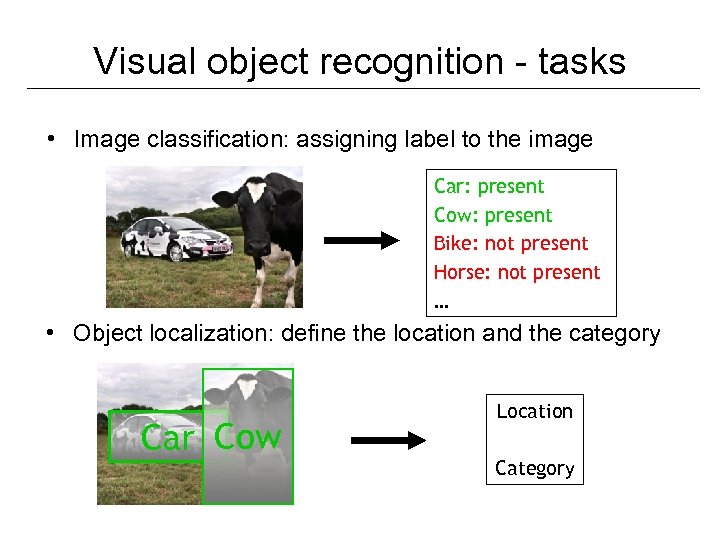

Visual object recognition - tasks • Image classification: assigning a label to the image – Car: present – Cow: present – Bike: not present – Horse: not present – … • Object localization: define the location and the category (e.g., a location plus the category “car” or “cow”)

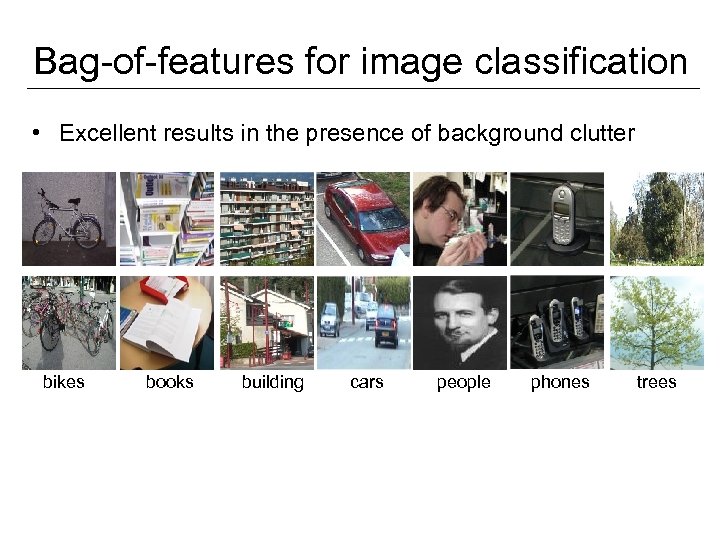

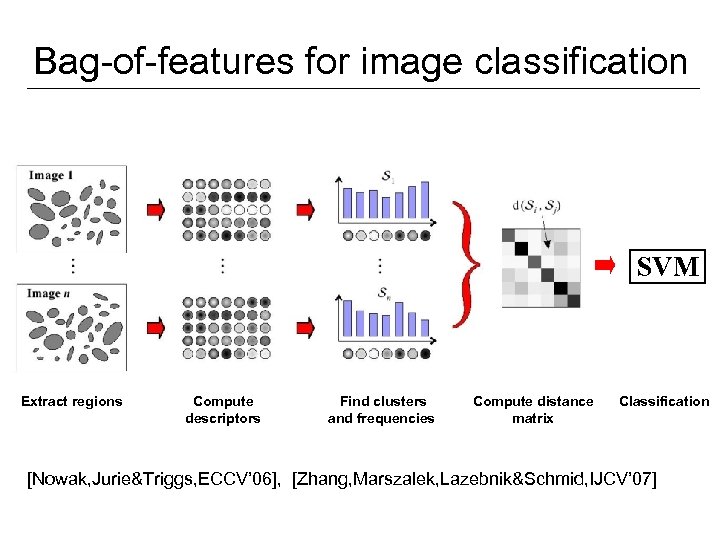

Bag-of-features for image classification • Excellent results in the presence of background clutter • Example categories: bikes, books, building, cars, people, phones, trees

Bag-of-features for image classification • Pipeline: extract regions → compute descriptors → find clusters and frequencies → compute distance matrix → classification (SVM) [Nowak, Jurie & Triggs, ECCV’06], [Zhang, Marszalek, Lazebnik & Schmid, IJCV’07]
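The “frequencies” and “distance matrix” steps can be sketched as follows, assuming the descriptors and the cluster centers (the codebook) are already available. The chi-square histogram distance is an assumed choice for illustration; the resulting matrix would then feed a kernel SVM, which is not shown.

```python
def bag_of_features(descriptors, codebook):
    """Normalized histogram of nearest-codeword (visual word) frequencies."""
    hist = [0.0] * len(codebook)
    for d in descriptors:
        j = min(
            range(len(codebook)),
            key=lambda k: sum((a - b) ** 2 for a, b in zip(d, codebook[k])),
        )
        hist[j] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist

def chi2_distance(h1, h2):
    """Chi-square distance between two histograms (empty bins are skipped)."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)

def distance_matrix(histograms):
    """Pairwise distances between all image histograms."""
    return [[chi2_distance(a, b) for b in histograms] for a in histograms]
```

Because the histogram discards where each region was found, the representation is insensitive to object position, which is one reason it tolerates background clutter.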

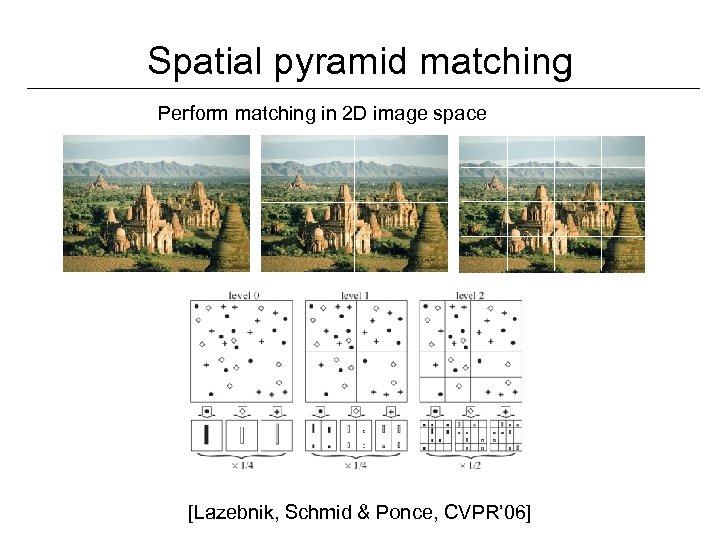

Spatial pyramid matching • Perform matching in 2D image space [Lazebnik, Schmid & Ponce, CVPR’06]
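A rough sketch of the idea: histograms of visual words are computed over increasingly fine grids and compared by histogram intersection, with finer levels weighted more. The feature format `(x, y, word)` with coordinates in `[0, 1)` is hypothetical, and the level weights follow the commonly cited pyramid match weighting, which should be checked against the paper.

```python
def pyramid_histograms(features, num_words, levels):
    """One flat histogram per level 0..levels; level l uses a 2^l x 2^l grid."""
    out = []
    for l in range(levels + 1):
        cells = 2 ** l
        hist = [0] * (cells * cells * num_words)
        for x, y, w in features:
            cx = min(int(x * cells), cells - 1)
            cy = min(int(y * cells), cells - 1)
            hist[(cy * cells + cx) * num_words + w] += 1
        out.append(hist)
    return out

def pyramid_match(f1, f2, num_words, levels):
    """Weighted sum of per-level histogram intersections."""
    h1 = pyramid_histograms(f1, num_words, levels)
    h2 = pyramid_histograms(f2, num_words, levels)
    inter = [sum(min(a, b) for a, b in zip(h1[l], h2[l])) for l in range(levels + 1)]
    score = inter[0] / 2 ** levels  # coarsest level gets the smallest weight
    for l in range(1, levels + 1):
        score += inter[l] / 2 ** (levels - l + 1)
    return score
```

With `levels=0` this reduces to a plain bag-of-features intersection; adding levels rewards matches that also agree on coarse image position.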

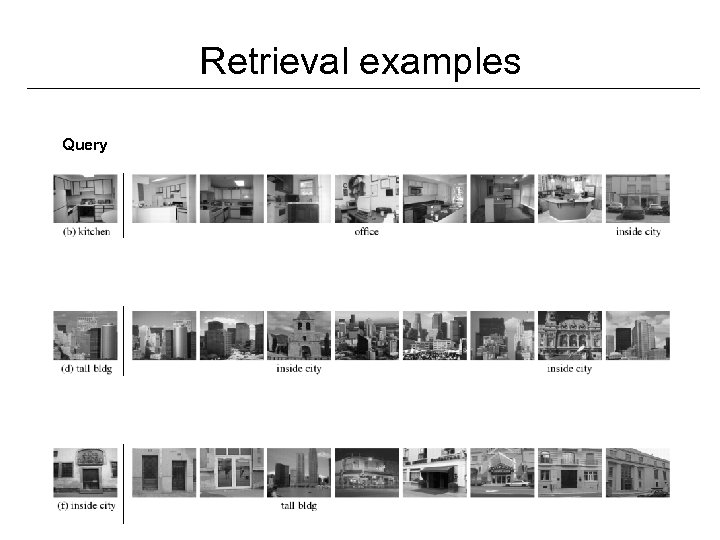

Retrieval examples Query

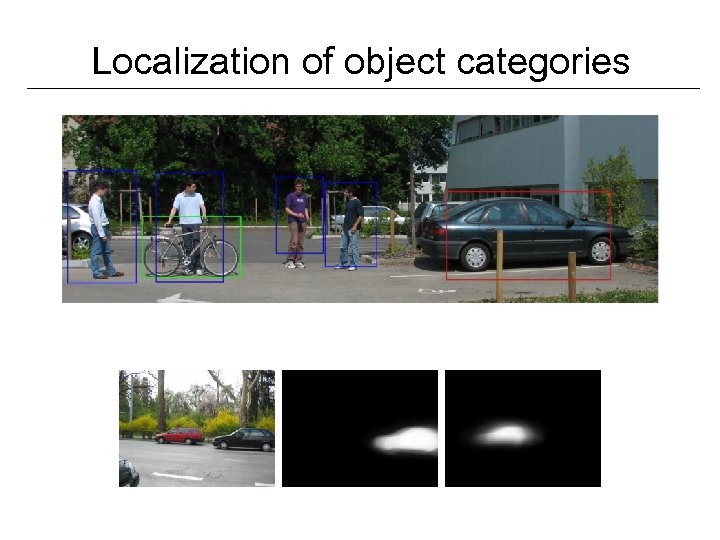

Localization of object categories

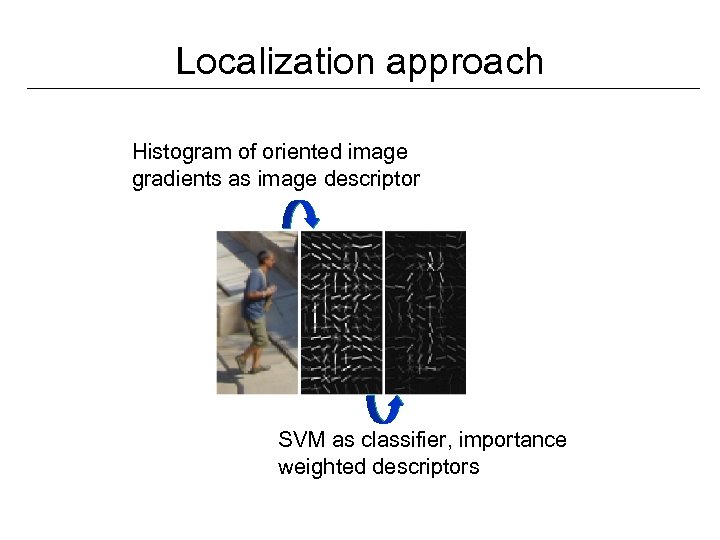

Localization approach • Histogram of oriented image gradients as image descriptor • SVM as classifier, importance-weighted descriptors
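The core of such a descriptor is a magnitude-weighted histogram of gradient orientations. The sketch below computes it for a single image patch given as a list of rows of gray values; the nine unsigned orientation bins and central-difference gradients are assumptions, and the cell/block layout, the importance weighting, and the SVM are all omitted.

```python
import math

def orientation_histogram(img, bins=9):
    """L2-normalized histogram of unsigned gradient orientations (0..180 degrees)."""
    h, w = len(img), len(img[0])
    hist = [0.0] * bins
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central-difference gradients at interior pixels.
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            mag = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle / (180.0 / bins)), bins - 1)
            hist[b] += mag  # each pixel votes with its gradient magnitude
    norm = math.sqrt(sum(v * v for v in hist)) or 1.0
    return [v / norm for v in hist]
```

For a patch containing a single vertical edge, all the gradient energy falls into the first bin (orientation near 0 degrees).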

![Unsupervised learning using Markov field aspect models](https://present5.com/presentation/0bbf93abe52d7ef07fe47b9049369b88/image-39.jpg)

Unsupervised learning using Markov field aspect models [Verbeek & Triggs, CVPR’07] • Goal: automatic interpretation of natural scenes – Assign pixels in images to visual categories – Learn models from image-wide labeling, without localization: per training image, a list of the categories present • Approach: capture local and image-wide correlations – Markov fields capture local label contiguity – Aspect models capture image-wide label correlation – Interleave: (1) region-to-category assignments using Loopy Belief Propagation and labeling, (2) category model estimation • [Figure: example scene interpretation of a training image]

![Localization based on shape](https://present5.com/presentation/0bbf93abe52d7ef07fe47b9049369b88/image-40.jpg)

Localization based on shape [Ferrari, Jurie & Schmid, CVPR’07], [Marszalek & Schmid, CVPR’07]

Master Internships • Internships are available in the LEAR group – Object localization (C. Schmid) – Video recognition (C. Schmid) – Semi-supervised / text-based learning (J. Verbeek) • If you are interested, send us an email
