cd9a0e4b4e5eaa765e02f6742b46391a.ppt

- Количество слайдов: 23

Loading a Cache with Query Results Laura Haas, IBM Almaden Donald Kossmann, Univ. Passau Ioana Ursu, IBM Almaden

Loading a Cache with Query Results Laura Haas, IBM Almaden Donald Kossmann, Univ. Passau Ioana Ursu, IBM Almaden

Background & Motivation • • Applications invoke queries and methods Queries select relevant objects Methods work with relevant objects Example: find hotels and reserve rooms foreach h in (select oid from hotels h where city = Edinburgh) h. request. Room(3, Sep-6, Sep-12); • Other examples: CAX, SAP R/3, Web 2

Background & Motivation • • Applications invoke queries and methods Queries select relevant objects Methods work with relevant objects Example: find hotels and reserve rooms foreach h in (select oid from hotels h where city = Edinburgh) h. request. Room(3, Sep-6, Sep-12); • Other examples: CAX, SAP R/3, Web 2

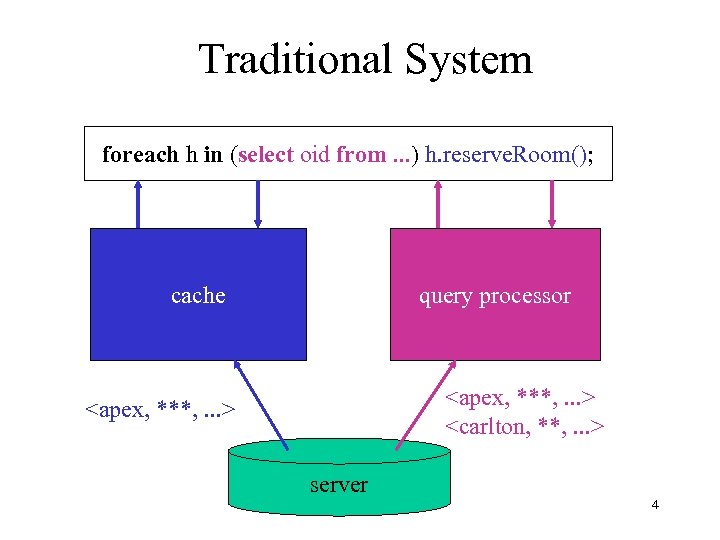

Background and Motivation • Traditional client-server systems: – methods are executed by clients with caching – queries are executed by clients and servers – query processing is independent of caching • Problems: – data must be fetched twice – objects are faulted in individually • Terrible performance in many environments 3

Background and Motivation • Traditional client-server systems: – methods are executed by clients with caching – queries are executed by clients and servers – query processing is independent of caching • Problems: – data must be fetched twice – objects are faulted in individually • Terrible performance in many environments 3

Traditional System foreach h in (select oid from. . . ) h. reserve. Room(); cache query processor

Traditional System foreach h in (select oid from. . . ) h. reserve. Room(); cache query processor

Goal & Solution • Load Cache as a by-product of queries. – copy relevant objects while executing the query • Cache operators do the copying • Extend the query optimizer – which collections should be cached? – when to copy? • Assumption: caching in the granularity of objects 5

Goal & Solution • Load Cache as a by-product of queries. – copy relevant objects while executing the query • Cache operators do the copying • Extend the query optimizer – which collections should be cached? – when to copy? • Assumption: caching in the granularity of objects 5

foreach h in (select oid from. . . ) h. reserve. Rooms(); Join

foreach h in (select oid from. . . ) h. reserve. Rooms(); Join

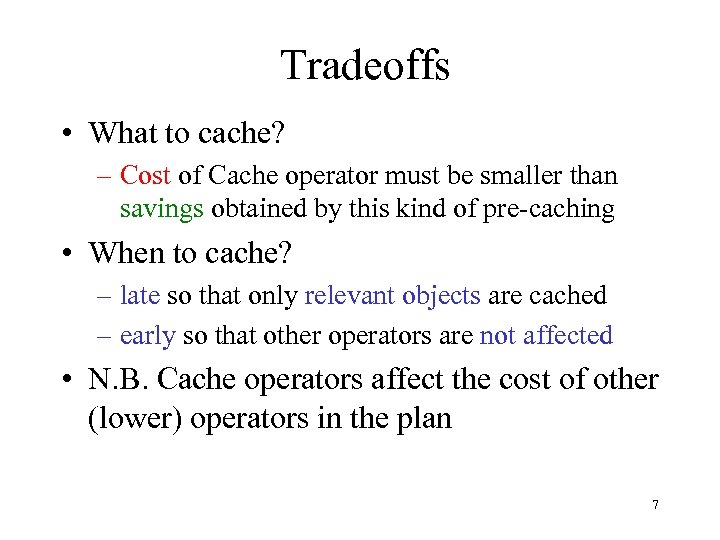

Tradeoffs • What to cache? – Cost of Cache operator must be smaller than savings obtained by this kind of pre-caching • When to cache? – late so that only relevant objects are cached – early so that other operators are not affected • N. B. Cache operators affect the cost of other (lower) operators in the plan 7

Tradeoffs • What to cache? – Cost of Cache operator must be smaller than savings obtained by this kind of pre-caching • When to cache? – late so that only relevant objects are cached – early so that other operators are not affected • N. B. Cache operators affect the cost of other (lower) operators in the plan 7

Early vs. Late Cache Operators: Copying Irrelevant Objects Join

Early vs. Late Cache Operators: Copying Irrelevant Objects Join

Early vs. Late Cache Operators: Late Projections Early Cache - Cheap Join Late Cache - Expensive Join

Early vs. Late Cache Operators: Late Projections Early Cache - Cheap Join Late Cache - Expensive Join

Alternative Approaches • Determine candidate collections for caching; i. e. what to cache: – carry out data flow analysis – analyze select clause of the query; cache if oid is returned • Determine when to cache candidate objects: – heuristics – cost-based approach 10

Alternative Approaches • Determine candidate collections for caching; i. e. what to cache: – carry out data flow analysis – analyze select clause of the query; cache if oid is returned • Determine when to cache candidate objects: – heuristics – cost-based approach 10

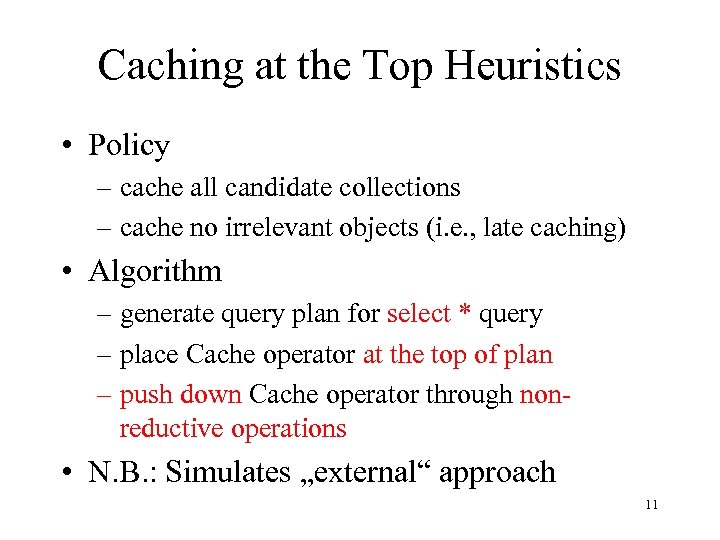

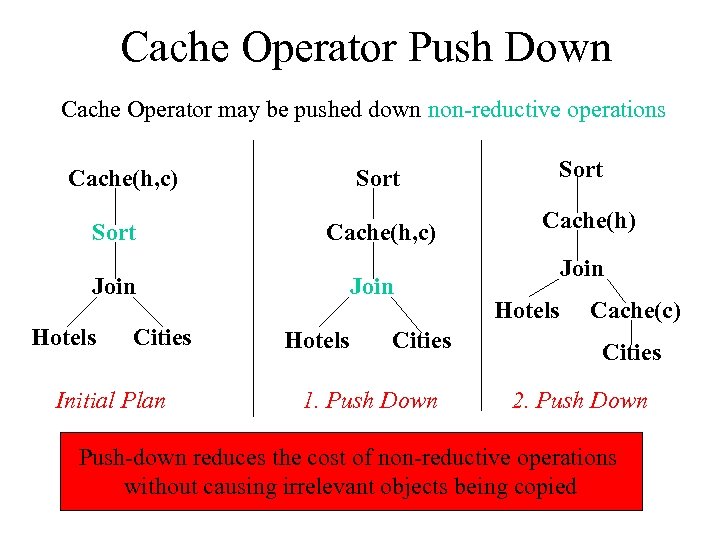

Caching at the Top Heuristics • Policy – cache all candidate collections – cache no irrelevant objects (i. e. , late caching) • Algorithm – generate query plan for select * query – place Cache operator at the top of plan – push down Cache operator through nonreductive operations • N. B. : Simulates „external“ approach 11

Caching at the Top Heuristics • Policy – cache all candidate collections – cache no irrelevant objects (i. e. , late caching) • Algorithm – generate query plan for select * query – place Cache operator at the top of plan – push down Cache operator through nonreductive operations • N. B. : Simulates „external“ approach 11

Cache Operator Push Down Cache Operator may be pushed down non-reductive operations Cache(h, c) Sort Cache(h, c) Join Hotels Cities Initial Plan Join Hotels Cities 1. Push Down Sort Cache(h) Join Hotels Cache(c) Cities 2. Push Down Push-down reduces the cost of non-reductive operations without causing irrelevant objects being copied

Cache Operator Push Down Cache Operator may be pushed down non-reductive operations Cache(h, c) Sort Cache(h, c) Join Hotels Cities Initial Plan Join Hotels Cities 1. Push Down Sort Cache(h) Join Hotels Cache(c) Cities 2. Push Down Push-down reduces the cost of non-reductive operations without causing irrelevant objects being copied

Caching at the Bottom Heuristics • Policy – cache all candidate collections – increase cost of other operations as little as possible (i. e. , early caching) • Algorithm – extend optimizer to produce plan with Cache operators as low as possible (details in paper) – pull-up Cache operators through pipeline Pull-up reduces the number of irrelevant objects that are cached without increasing the cost of pipelined operators

Caching at the Bottom Heuristics • Policy – cache all candidate collections – increase cost of other operations as little as possible (i. e. , early caching) • Algorithm – extend optimizer to produce plan with Cache operators as low as possible (details in paper) – pull-up Cache operators through pipeline Pull-up reduces the number of irrelevant objects that are cached without increasing the cost of pipelined operators

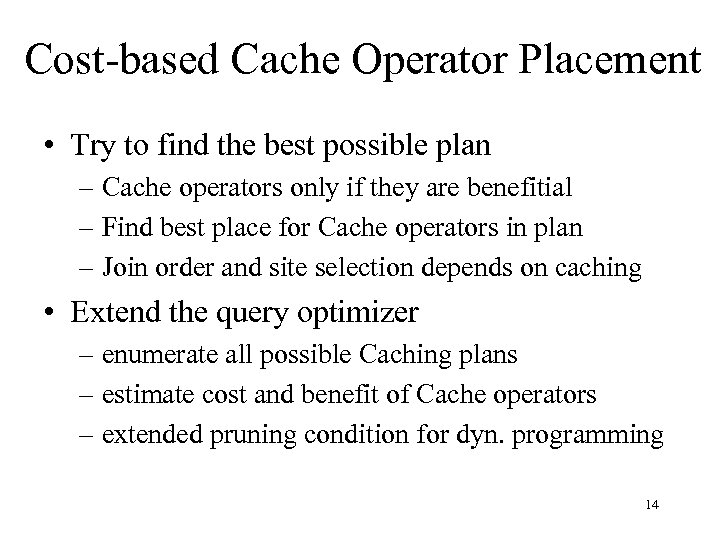

Cost-based Cache Operator Placement • Try to find the best possible plan – Cache operators only if they are benefitial – Find best place for Cache operators in plan – Join order and site selection depends on caching • Extend the query optimizer – enumerate all possible Caching plans – estimate cost and benefit of Cache operators – extended pruning condition for dyn. programming 14

Cost-based Cache Operator Placement • Try to find the best possible plan – Cache operators only if they are benefitial – Find best place for Cache operators in plan – Join order and site selection depends on caching • Extend the query optimizer – enumerate all possible Caching plans – estimate cost and benefit of Cache operators – extended pruning condition for dyn. programming 14

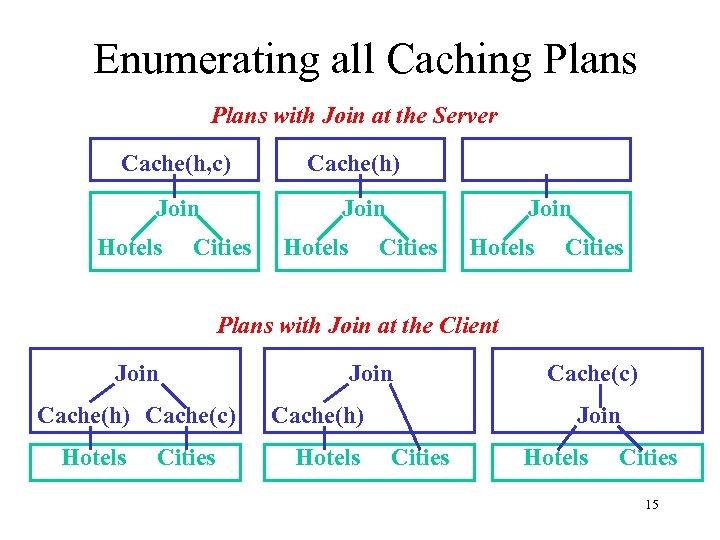

Enumerating all Caching Plans with Join at the Server Cache(h, c) Join Hotels Cache(h) Join Cities Hotels Cities Plans with Join at the Client Join Cache(h) Cache(c) Hotels Cities Join Cache(h) Hotels Cache(c) Join Cities Hotels Cities 15

Enumerating all Caching Plans with Join at the Server Cache(h, c) Join Hotels Cache(h) Join Cities Hotels Cities Plans with Join at the Client Join Cache(h) Cache(c) Hotels Cities Join Cache(h) Hotels Cache(c) Join Cities Hotels Cities 15

Costing of Cache Operators • Overhead of Cache Operators – cost to probe hash table for every object – cost to copy objects which are not yet cached • Benefit of Cache Operators – savings: relevant objects are not refetched – savings depend on costs to fault-in object and current state of the cache • Cost = Overhead - Benefit – only Cache operators with Cost < 0 are useful 16

Costing of Cache Operators • Overhead of Cache Operators – cost to probe hash table for every object – cost to copy objects which are not yet cached • Benefit of Cache Operators – savings: relevant objects are not refetched – savings depend on costs to fault-in object and current state of the cache • Cost = Overhead - Benefit – only Cache operators with Cost < 0 are useful 16

Summary of Approaches • Heuristics – simple to implement – not much additional optimization overhead – poor plans in certain situations • Cost-based – very good plans – huge search space, slows down query optimizer 17

Summary of Approaches • Heuristics – simple to implement – not much additional optimization overhead – poor plans in certain situations • Cost-based – very good plans – huge search space, slows down query optimizer 17

Performance Experiments • Test Environment – Garlic heterogeneous database system – UDB, Lotus Notes, WWW servers • Benchmark – relational BUCKY benchmark database – simple queries to multi-way cross-source joins – simple accessor methods 18

Performance Experiments • Test Environment – Garlic heterogeneous database system – UDB, Lotus Notes, WWW servers • Benchmark – relational BUCKY benchmark database – simple queries to multi-way cross-source joins – simple accessor methods 18

Application Run Time (secs) single-table query + accessor method 19

Application Run Time (secs) single-table query + accessor method 19

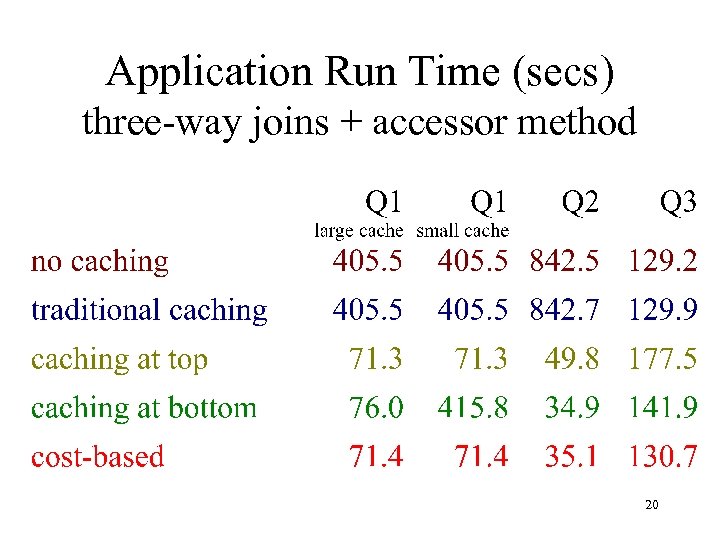

Application Run Time (secs) three-way joins + accessor method 20

Application Run Time (secs) three-way joins + accessor method 20

Query Optimization Times(secs) vary number of candidate collections 21

Query Optimization Times(secs) vary number of candidate collections 21

Conclusions • Loading the cache with query results can result in huge wins – for search & work applications – if client-server interaction is expensive • Use cost-based approach for simple queries – four or less candidate collections • Use heuristics for complex queries • Caching at Bottom heuristics is always at least as good as traditional, do-nothing approach 22

Conclusions • Loading the cache with query results can result in huge wins – for search & work applications – if client-server interaction is expensive • Use cost-based approach for simple queries – four or less candidate collections • Use heuristics for complex queries • Caching at Bottom heuristics is always at least as good as traditional, do-nothing approach 22

Future Work • Explore full range of possible approaches – e. g. cost-based Cache operator pull-up and push -down • Consider tradeoff of optimization time and application run time (meta optimization) – invest in optimization time only if high gains in application run-time can be expected – consider state of the cache, dynamic optimization 23

Future Work • Explore full range of possible approaches – e. g. cost-based Cache operator pull-up and push -down • Consider tradeoff of optimization time and application run time (meta optimization) – invest in optimization time only if high gains in application run-time can be expected – consider state of the cache, dynamic optimization 23