2c1fa324e31c988ae2952315ea22e235.ppt

- Number of slides: 16

Lightweight Remote Procedure Call

B. Bershad, T. Anderson, E. Lazowska and H. Levy, U. of Washington

Appears in SOSP 1989

Presented by: Fabián E. Bustamante

CS 443 Advanced OS, Spring 2005

Introduction

- The granularity of the protection mechanism used by an OS has a significant impact on the system's design and use
- Capability systems
  - Fine-grained protection: each object exists in its own protection domain
  - All objects live within a single name or address space
  - A process in one domain can act on an object in another only through a protected procedure call
  - Parameter passing is simplified by the existence of a global name space containing all objects
  - Problems with efficient implementations
- In distributed computing, large-grained protection mechanisms
  - RPC facilitates placement of subsystems on different machines
  - Absence of a global address space is ameliorated by automatic stub generators and sophisticated run-time libraries
  - Widely used, efficient and convenient model

Observation

- Small-kernel OSs borrow the large-grained protection and programming models of distributed computing
  - Separate components are placed in disjoint domains
  - Messages are used for all inter-domain communication
- But they also adopt its control transfer and communication model
  - Independent threads exchanging messages containing potentially large, structured values
  - However, in the common case most communication in an OS is
    - Cross-domain (between domains on the same machine) rather than cross-machine
    - Simple, because complex data structures are concealed behind abstract system interfaces
  - Thus the model violates the common case → low performance or bad modularity, commonly the latter

"Handle normal and worst cases separately as a rule, because the requirements for the two are quite different: The normal case must be fast. The worst case must make some progress." B. Lampson, "Hints for Computer System Design"

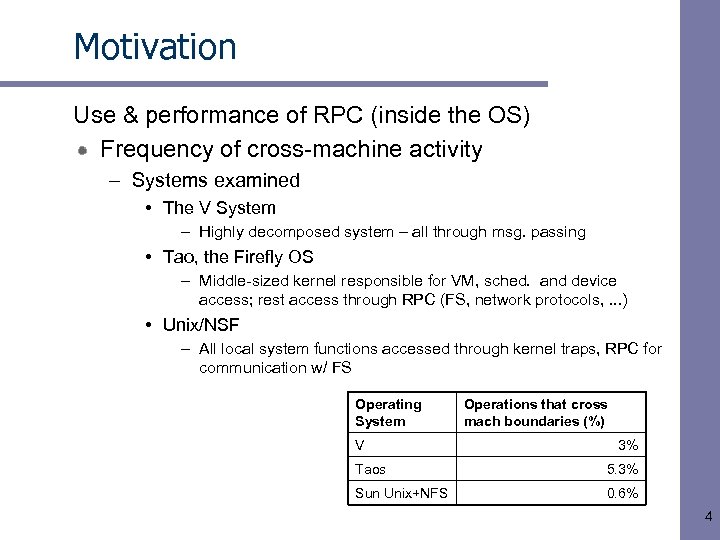

Motivation

- Use and performance of RPC (inside the OS)
- Frequency of cross-machine activity
  - Systems examined
    - The V System: highly decomposed system, everything goes through message passing
    - Taos, the Firefly OS: middle-sized kernel responsible for VM, scheduling and device access; the rest is accessed through RPC (FS, network protocols, ...)
    - Unix/NFS: all local system functions are accessed through kernel traps; RPC is used for communication with the FS

| Operating system | Operations that cross machine boundaries (%) |
|---|---|
| V | 3% |
| Taos | 5.3% |
| Sun Unix+NFS | 0.6% |

Motivation

- Parameter size and complexity, based on static and dynamic analysis of SRC RPC usage in the Taos OS
  - 28 RPC services with 366 procedures
  - Over 4 days and 1.5 million cross-domain procedure calls
    - 95% of calls went to 10 of 112 procedures
    - 75% went to 3 of 112
  - Number of bytes transferred: majority < 200 B
  - No data types were recursively defined

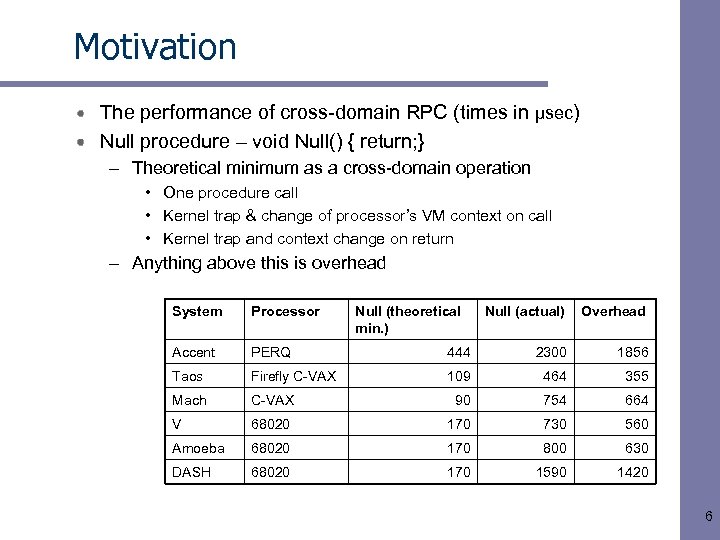

Motivation

- The performance of cross-domain RPC (times in µs)
- Null procedure: `void Null() { return; }`
  - Theoretical minimum for a cross-domain operation
    - One procedure call
    - Kernel trap and change of the processor's VM context on call
    - Kernel trap and context change on return
  - Anything above this is overhead

| System | Processor | Null (theoretical min.) | Null (actual) | Overhead |
|---|---|---|---|---|
| Accent | PERQ | 444 | 2300 | 1856 |
| Taos | Firefly C-VAX | 109 | 464 | 355 |
| Mach | C-VAX | 90 | 754 | 664 |
| V | 68020 | 170 | 730 | 560 |
| Amoeba | 68020 | 170 | 800 | 630 |
| DASH | 68020 | 170 | 1590 | 1420 |
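The Overhead column is simply the measured Null time minus the theoretical minimum. A minimal sketch that recomputes it from the table values; the dictionary layout and function names are illustrative, not from the slides:

```python
# Null cross-domain call times in microseconds, copied from the table above.
# Each entry: system -> (processor, theoretical minimum, measured Null time).
NULL_CALL_TIMES = {
    "Accent": ("PERQ", 444, 2300),
    "Taos": ("Firefly C-VAX", 109, 464),
    "Mach": ("C-VAX", 90, 754),
    "V": ("68020", 170, 730),
    "Amoeba": ("68020", 170, 800),
    "DASH": ("68020", 170, 1590),
}


def overhead(system):
    """Time spent above the theoretical minimum, in microseconds."""
    _, minimum, actual = NULL_CALL_TIMES[system]
    return actual - minimum


def overhead_fraction(system):
    """Share of the measured Null call that is overhead."""
    _, _, actual = NULL_CALL_TIMES[system]
    return overhead(system) / actual


for name in NULL_CALL_TIMES:
    print(f"{name:7s} overhead = {overhead(name):4d} us "
          f"({overhead_fraction(name):.0%} of the measured call)")
```

Even on the best system in the table, most of the measured Null call is overhead rather than the unavoidable procedure call, traps and context switches.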

Motivation

- Overhead: where is the time going?
  - Stub overhead: the difference between cross-domain and cross-machine calls is hidden by lower layers → stubs are general, but that generality is infrequently needed (e.g. 70 µs to run the Null stubs)
  - Message buffer overhead: message exchange between client and server → 4 copies (through the kernel) on call/return (allocate and copy)
  - Access validation: the kernel needs to validate the sender both ways
  - Message transfer: enqueue/dequeue of messages
  - Scheduling: while the user sees one abstract thread, there is a thread per domain and each needs to be handled
  - Context switch: from client to server and back
  - Dispatch: a receiver thread in the server must interpret the message and dispatch a thread
- Some optimizations tried
  - DASH avoids a kernel copy by allocating messages out of a region mapped in both kernel and user domains
  - Mach and Taos rely on hand-off scheduling to bypass the general scheduler
  - Some systems pass few and small parameters in registers
  - SRC RPC gives up some safety: globally shared buffers, no access validation, etc.

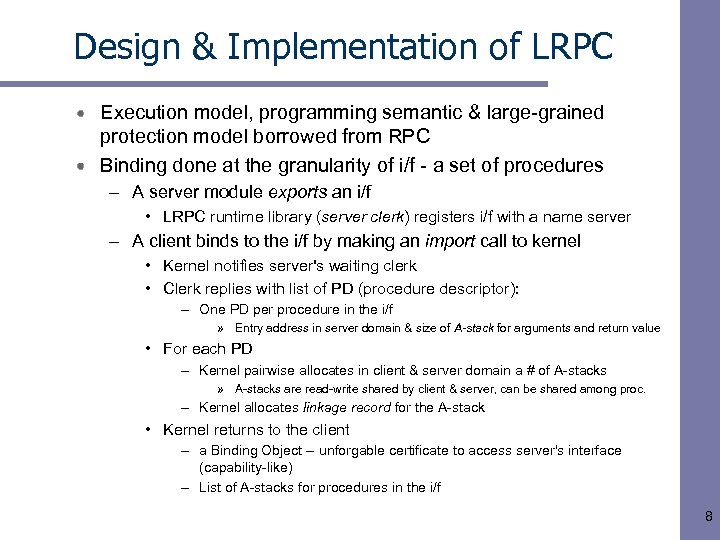

Design & Implementation of LRPC

- Execution model, programming semantics and large-grained protection model are borrowed from RPC
- Binding is done at the granularity of an interface (a set of procedures)
  - A server module exports an interface
    - The LRPC run-time library (server clerk) registers the interface with a name server
  - A client binds to the interface by making an import call to the kernel
    - The kernel notifies the server's waiting clerk
    - The clerk replies with a list of PDs (procedure descriptors)
      - One PD per procedure in the interface
      - Each PD holds the entry address in the server domain and the size of the A-stack for arguments and return value
    - For each PD
      - The kernel pairwise allocates a number of A-stacks in the client and server domains
      - A-stacks are shared read-write by client and server, and can be shared among procedures
      - The kernel allocates a linkage record for each A-stack
    - The kernel returns to the client
      - A Binding Object: an unforgeable certificate to access the server's interface (capability-like)
      - A list of A-stacks for the procedures in the interface
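The objects handed around at bind time can be sketched as plain data structures. This is a toy model under stated assumptions, not the Firefly implementation: the class names, the `astack_count` field, the use of a random token as the Binding Object, and passing the PD list directly (instead of having the kernel ask the server clerk) are all illustrative.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ProcedureDescriptor:
    name: str
    entry_address: int     # entry point in the server domain
    astack_size: int       # bytes for arguments and return value
    astack_count: int = 1  # hypothetical: how many A-stacks to allocate


@dataclass
class AStack:
    procedure: str
    buffer: bytearray                            # shared read-write by client and server
    linkage: dict = field(default_factory=dict)  # linkage record, filled in on each call


@dataclass
class Binding:
    binding_object: str  # stands in for the unforgeable Binding Object
    astacks: dict        # procedure name -> list of AStack


class Kernel:
    def __init__(self):
        self._valid_bindings = {}

    def import_interface(self, pd_list):
        """Allocate A-stacks for every PD and hand back a Binding."""
        astacks = {
            pd.name: [AStack(pd.name, bytearray(pd.astack_size))
                      for _ in range(pd.astack_count)]
            for pd in pd_list
        }
        token = secrets.token_hex(16)  # hard to guess, so hard to forge
        self._valid_bindings[token] = astacks
        return Binding(token, astacks)

    def verify(self, token):
        """Check a Binding Object presented by a client on a call."""
        return token in self._valid_bindings


kernel = Kernel()
binding = kernel.import_interface([
    ProcedureDescriptor("Null", entry_address=0x1000, astack_size=0),
    ProcedureDescriptor("Add", entry_address=0x1040, astack_size=12, astack_count=2),
])
print(sorted(binding.astacks), kernel.verify(binding.binding_object))
```

The client keeps the `Binding` and presents its `binding_object` on every call, which is what lets the kernel validate access without a per-call message exchange.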

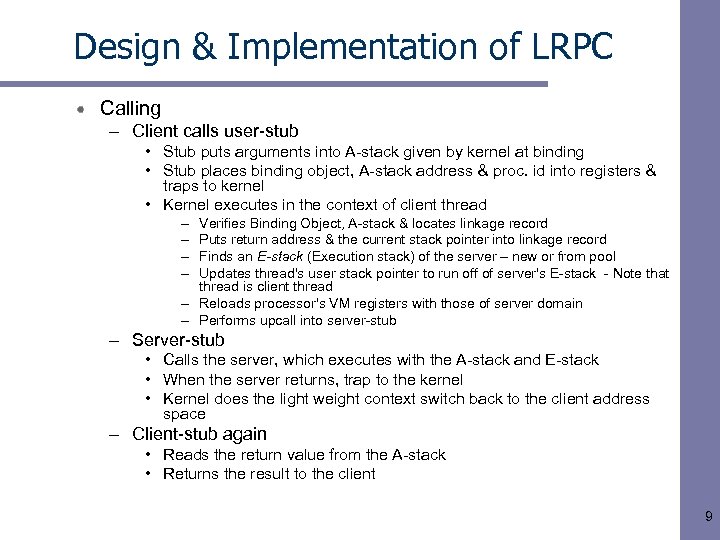

Design & Implementation of LRPC

- Calling
  - Client calls the user stub
    - Stub puts the arguments into the A-stack given by the kernel at binding
    - Stub places the Binding Object, A-stack address and procedure id into registers and traps to the kernel
    - Kernel executes in the context of the client thread
      - Verifies the Binding Object and A-stack, and locates the linkage record
      - Puts the return address and the current stack pointer into the linkage record
      - Finds an E-stack (execution stack) of the server, new or from a pool
      - Updates the thread's user stack pointer to run off the server's E-stack (note that the thread is still the client thread)
      - Reloads the processor's VM registers with those of the server domain
      - Performs an upcall into the server stub
  - Server stub
    - Calls the server procedure, which executes with the A-stack and E-stack
    - When the server returns, traps to the kernel
    - Kernel does the lightweight context switch back to the client address space
  - Client stub again
    - Reads the return value from the A-stack
    - Returns the result to the client
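The call path above can be traced with a small single-threaded simulation. It is a sketch only: domains and stacks are represented by strings, the A-stack by a dictionary, and every name here (`Thread`, `Kernel.call`, `client_stub_add`, ...) is hypothetical. What it tries to show is that one thread crosses into the server domain and back, with the A-stack carrying arguments and results.

```python
class Thread:
    def __init__(self):
        self.domain = "client"
        self.stack_pointer = "client-stack"


class Kernel:
    def __init__(self, server_procedures):
        self.server_procedures = server_procedures  # procedure id -> server stub
        self.bindings = set()
        self.estack_pool = ["E-stack-0"]
        self.trace = []  # domains the thread ran in, in order

    def bind(self):
        token = object()  # stands in for the unforgeable Binding Object
        self.bindings.add(token)
        return token, {"data": [], "linkage": None}  # one A-stack

    def call(self, thread, token, astack, proc_id):
        if token not in self.bindings:
            raise PermissionError("invalid Binding Object")
        # Save the caller's return state in the linkage record.
        astack["linkage"] = (thread.domain, thread.stack_pointer)
        # Find an E-stack in the server: from the pool, or a new one.
        estack = self.estack_pool.pop() if self.estack_pool else "E-stack-new"
        # Same thread, now running on the server's E-stack in the server domain.
        thread.stack_pointer = estack
        thread.domain = "server"
        self.trace.append(thread.domain)
        self.server_procedures[proc_id](astack)  # upcall into the server stub
        # Return path: release the E-stack and restore the caller's state.
        self.estack_pool.append(estack)
        thread.domain, thread.stack_pointer = astack["linkage"]
        self.trace.append(thread.domain)


def server_stub_add(astack):
    a, b = astack["data"]      # arguments are read straight from the A-stack
    astack["data"] = [a + b]   # the result goes back the same way


def client_stub_add(kernel, thread, token, astack, a, b):
    astack["data"] = [a, b]    # the single argument copy
    kernel.call(thread, token, astack, "Add")
    return astack["data"][0]


kernel = Kernel({"Add": server_stub_add})
token, astack = kernel.bind()
thread = Thread()
print(client_stub_add(kernel, thread, token, astack, 2, 3), kernel.trace)
```

Note that no second thread is created and nothing is queued: the kernel only validates, records the return state, and switches the domain under the calling thread.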

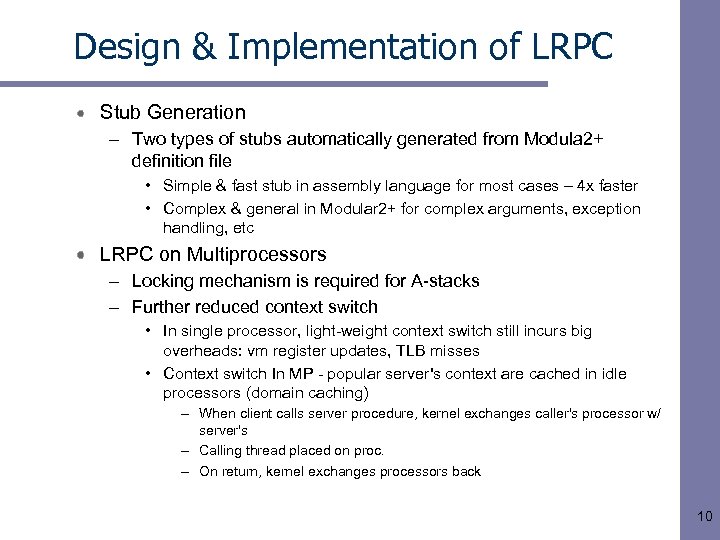

Design & Implementation of LRPC

- Stub generation
  - Two types of stubs are automatically generated from a Modula-2+ definition file
    - Simple and fast stubs in assembly language for most cases (4x faster)
    - Complex and general stubs in Modula-2+ for complex arguments, exception handling, etc.
- LRPC on multiprocessors
  - A locking mechanism is required for A-stacks
  - Context switching is reduced further
    - On a single processor, even the lightweight context switch incurs big overheads: VM register updates, TLB misses
    - On a multiprocessor, the contexts of popular servers are cached on idle processors (domain caching)
      - When a client calls a server procedure, the kernel exchanges the caller's processor with one idling in the server's context
      - The calling thread is placed on that processor
      - On return, the kernel exchanges the processors back
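Domain caching can be pictured as a lookup for an idle processor that already has the target domain's context loaded. The sketch below is a simplified model with hypothetical names (`Processor`, `transfer`); it ignores locking and how the kernel decides which domains to keep cached.

```python
from dataclasses import dataclass


@dataclass
class Processor:
    cpu_id: int
    domain: str  # domain whose VM context is currently loaded
    idle: bool


def transfer(processors, current, target_domain):
    """Move the calling thread into target_domain.

    Returns (processor the thread now runs on, whether a context switch was needed).
    """
    for cpu in processors:
        if cpu is not current and cpu.idle and cpu.domain == target_domain:
            # Exchange processors: the thread continues on the cached one,
            # and the processor it left idles in its old domain.
            cpu.idle, current.idle = False, True
            return cpu, False
    # No cached context available: switch context on the same processor.
    current.domain = target_domain
    return current, True


cpus = [Processor(0, "client", idle=False), Processor(1, "server", idle=True)]
on_call, switched = transfer(cpus, cpus[0], "server")
print(on_call.cpu_id, switched)    # the call runs on the processor caching the server
on_return, switched = transfer(cpus, on_call, "client")
print(on_return.cpu_id, switched)  # the return finds the client's processor idling
```

Because the caller's processor is left idling in the client domain, the return can usually be handled by the same exchange in the opposite direction.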

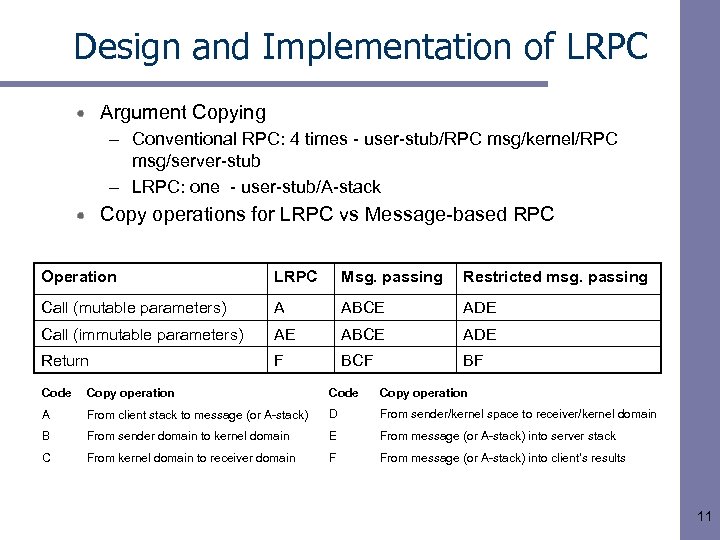

Design and Implementation of LRPC

- Argument copying
  - Conventional RPC: 4 times (user stub / RPC message / kernel / RPC message / server stub)
  - LRPC: once (user stub / A-stack)

Copy operations for LRPC vs. message-based RPC

| Operation | LRPC | Message passing | Restricted message passing |
|---|---|---|---|
| Call (mutable parameters) | A | ABCE | ADE |
| Call (immutable parameters) | AE | ABCE | ADE |
| Return | F | BCF | BF |

| Code | Copy operation |
|---|---|
| A | From client stack to message (or A-stack) |
| B | From sender domain to kernel domain |
| C | From kernel domain to receiver domain |
| D | From sender/kernel space to receiver/kernel domain |
| E | From message (or A-stack) into server stack |
| F | From message (or A-stack) into client's results |
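Counting the letters in the first table gives the number of copies per call and return. A small sketch of that arithmetic; the dictionary keys and function name are illustrative:

```python
# Copy sequences from the table above; each letter is one copy operation.
COPY_SEQUENCES = {
    "LRPC": {"call_mutable": "A", "call_immutable": "AE", "return": "F"},
    "Message passing": {"call_mutable": "ABCE", "call_immutable": "ABCE", "return": "BCF"},
    "Restricted message passing": {"call_mutable": "ADE", "call_immutable": "ADE", "return": "BF"},
}


def copies_per_round_trip(mechanism, mutable=True):
    """Total copies for one call plus its return."""
    seq = COPY_SEQUENCES[mechanism]
    call = seq["call_mutable" if mutable else "call_immutable"]
    return len(call) + len(seq["return"])


for mechanism in COPY_SEQUENCES:
    print(f"{mechanism}: {copies_per_round_trip(mechanism)} copies "
          f"(mutable), {copies_per_round_trip(mechanism, mutable=False)} (immutable)")
```

With mutable parameters LRPC needs 2 copies per round trip against 7 for general message passing; immutable parameters cost LRPC one extra copy (E) so the server works on a private version.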

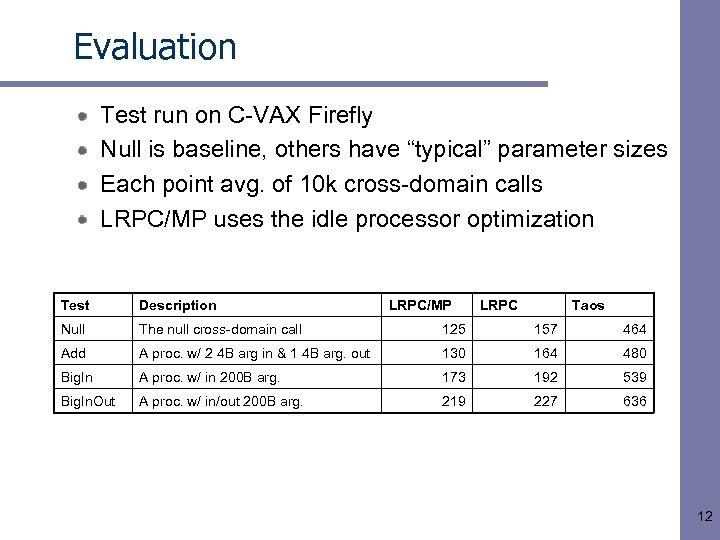

Evaluation

- Tests run on a C-VAX Firefly (times in µs)
- Null is the baseline; the others have "typical" parameter sizes
- Each point is the average of 10,000 cross-domain calls
- LRPC/MP uses the idle-processor optimization

| Test | Description | LRPC/MP | LRPC | Taos |
|---|---|---|---|---|
| Null | The null cross-domain call | 125 | 157 | 464 |
| Add | A procedure with two 4-byte in arguments and one 4-byte out argument | 130 | 164 | 480 |
| BigIn | A procedure with one 200-byte in argument | 173 | 192 | 539 |
| BigInOut | A procedure with one 200-byte in/out argument | 219 | 227 | 636 |

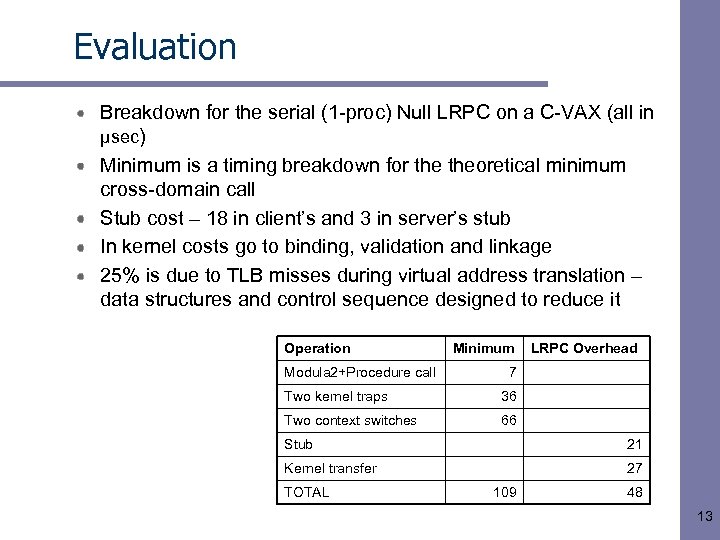

Evaluation

- Breakdown for the serial (single-processor) Null LRPC on a C-VAX (all in µs)
- Minimum is a timing breakdown for the theoretical minimum cross-domain call
- Stub cost: 18 in the client's stub and 3 in the server's stub
- In-kernel costs go to binding validation and linkage management
- 25% is due to TLB misses during virtual address translation; data structures and control sequences were designed to reduce it

| Operation | Minimum | LRPC overhead |
|---|---|---|
| Modula-2+ procedure call | 7 | |
| Two kernel traps | 36 | |
| Two context switches | 66 | |
| Stub | | 21 |
| Kernel transfer | | 27 |
| TOTAL | 109 | 48 |
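The two columns add up to the measured serial Null LRPC time. A quick check of the arithmetic, using the 464 µs Taos Null time from the earlier evaluation table for comparison; the variable names are illustrative:

```python
# Breakdown of the serial Null LRPC on a C-VAX, in microseconds (from the table above).
MINIMUM = {
    "Modula-2+ procedure call": 7,
    "Two kernel traps": 36,
    "Two context switches": 66,
}
LRPC_OVERHEAD = {
    "Stub": 21,             # 18 in the client's stub + 3 in the server's stub
    "Kernel transfer": 27,
}
TAOS_NULL = 464             # SRC RPC Null call on Taos, from the evaluation table

minimum_total = sum(MINIMUM.values())
overhead_total = sum(LRPC_OVERHEAD.values())
null_lrpc = minimum_total + overhead_total
speedup = TAOS_NULL / null_lrpc

print(minimum_total, overhead_total, null_lrpc, round(speedup, 2))
```

The sum of 109 and 48 is the 157 µs reported for the Null LRPC, so LRPC's overhead above the theoretical minimum is 48 µs where Taos's was 355 µs.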

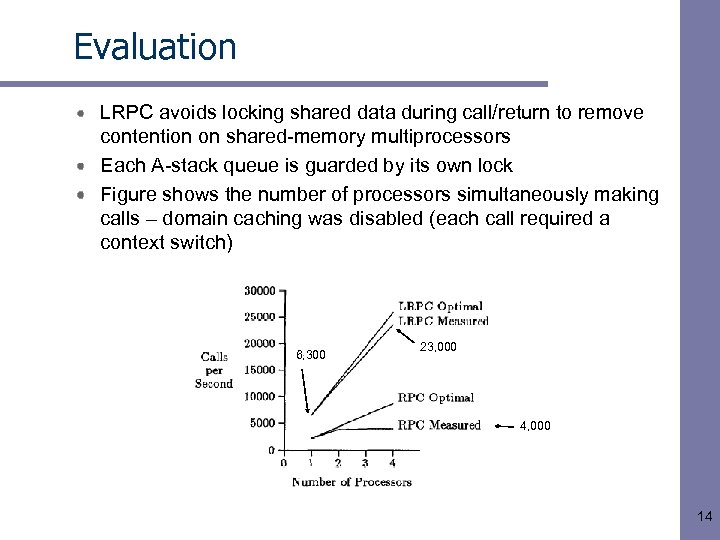

Evaluation

- LRPC avoids locking shared data during call/return to remove contention on shared-memory multiprocessors
- Each A-stack queue is guarded by its own lock
- Figure: call throughput against the number of processors simultaneously making calls; domain caching was disabled (each call required a context switch)
- Values labeled in the figure: 6,300; 23,000; 4,000

The uncommon cases

- Work well in the common case and acceptably in the less common ones (just a few examples)
- Transparency and cross-machine calls
  - Cross-domain or cross-machine? The decision is made early, in the first instruction of the stub
  - The cost of this indirection is negligible in comparison with the cost of a cross-machine call
- A-stacks: size and number
  - PD lists are defined during compilation
  - If the size of the arguments is known, the A-stack size can be determined statically; otherwise use a default size equal to the Ethernet packet size
  - Beyond that, use an out-of-band memory segment (expensive, but infrequent)
- Domain termination, e.g. through an unhandled exception or user action
  - All resources are reclaimed by the OS
    - All bindings are revoked
    - All threads are stopped
  - If the terminating domain is a server handling an LRPC request, the outstanding call must return to the client domain
    - To handle outstanding threads, a new thread can be created to replace each captured one, and the captured thread is killed later, upon return
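The A-stack sizing rule amounts to a three-way decision. A minimal sketch, assuming 1500 bytes as the "Ethernet packet size" default; the slide gives no number, and the function and constant names are hypothetical:

```python
# Assumption: the slide only says "Ethernet packet size"; 1500 bytes is used
# here as an illustrative default, not a value taken from the slides.
DEFAULT_ASTACK_SIZE = 1500


def plan_argument_transfer(arg_bytes, static_size=None):
    """Decide where a call's arguments travel.

    static_size is the A-stack size fixed at compile time when the argument
    sizes are known; None means the default size is used.
    """
    astack_size = static_size if static_size is not None else DEFAULT_ASTACK_SIZE
    if arg_bytes <= astack_size:
        return ("a-stack", astack_size)
    # Too large for the A-stack: fall back to a separate memory segment.
    return ("out-of-band segment", arg_bytes)


print(plan_argument_transfer(12, static_size=12))  # fixed-size arguments
print(plan_argument_transfer(200))                 # variable size, fits the default
print(plan_argument_transfer(64_000))              # rare, expensive path
```

Only the last case pays for an extra segment, which matches the slide's point that the uncommon case may be slow as long as it still works.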

Conclusion

- LRPC combines elements from capability and RPC systems
- Adopts a common-case approach to communication
- A viable communication alternative for small-kernel OSs
- Optimized for communication between protection domains on the same machine
- Combines the control transfer and communication model of capability systems with the programming semantics and large-grained protection model of RPC
- Techniques
  - Simple control transfer: the client's thread does the work in the server's domain
  - Simple data transfer: a parameter passing mechanism similar to that of a procedure call (shared stack)
  - Simple and highly optimized stubs
  - Design for concurrency: avoids shared data structure bottlenecks
- Implemented on the DEC C-VAX Firefly
