42403afbca87110094fee8a678c92546.ppt

- Number of slides: 61

Lecture 8: Clustering

Principles of Information Retrieval
Prof. Ray Larson
University of California, Berkeley, School of Information

Some slides in this lecture were originally created by Prof. Marti Hearst.

IS 240 – Spring 2009, 2009.02.18 - SLIDE 1

Mini-TREC
• Need to make groups
  – Today
• Systems
  – SMART (not recommended…)
    • ftp://ftp.cs.cornell.edu/pub/smart
  – MG (We have a special version if interested)
    • http://www.mds.rmit.edu.au/mg/welcome.html
  – Cheshire II & 3
    • II = ftp://cheshire.berkeley.edu/pub/cheshire & http://cheshire.berkeley.edu
    • 3 = http://cheshire3.sourceforge.org
  – Zprise (Older search system from NIST)
    • http://www.itl.nist.gov/iaui/894.02/works/zp2.html
  – IRF (new Java-based IR framework from NIST)
    • http://www.itl.nist.gov/iaui/894.02/projects/irf.html
  – Lemur
    • http://www-2.cs.cmu.edu/~lemur
  – Lucene (Java-based text search engine)
    • http://jakarta.apache.org/lucene/docs/index.html
  – Others? (See http://searchtools.com)

Mini-TREC
• Proposed Schedule
  – February 16: Database and previous queries
  – February 25: Report on system acquisition and setup
  – March 9: New queries for testing…
  – April 20: Results due
  – April 27: Results and system rankings
  – May 6: Group reports and discussion

Review: IR Models
• Set Theoretic Models
  – Boolean
  – Fuzzy
  – Extended Boolean
• Vector Models (Algebraic)
• Probabilistic Models (probabilistic)

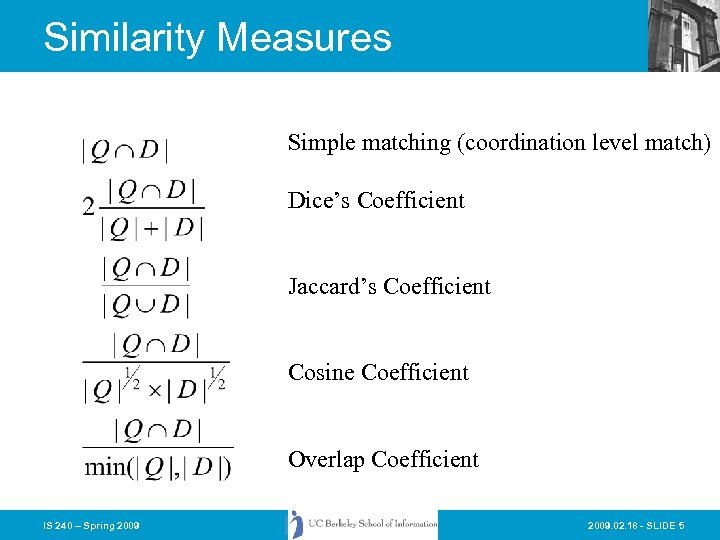

Similarity Measures
• Simple matching (coordination level match)
• Dice’s Coefficient
• Jaccard’s Coefficient
• Cosine Coefficient
• Overlap Coefficient
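The formulas for these measures were on the slide as an image and are not reproduced in this text. As a rough sketch, assuming the standard binary (set-based) definitions over the sets of terms in two documents, the five coefficients can be written as below. The function names and the sample term sets are illustrative only.

```python
import math

# Each function takes two sets of terms, x and y.

def simple_matching(x, y):
    # Coordination level match: the number of shared terms.
    return len(x & y)

def dice(x, y):
    return 2 * len(x & y) / (len(x) + len(y))

def jaccard(x, y):
    return len(x & y) / len(x | y)

def cosine(x, y):
    return len(x & y) / math.sqrt(len(x) * len(y))

def overlap(x, y):
    return len(x & y) / min(len(x), len(y))

# Hypothetical term sets for two short documents.
doc_a = {"information", "retrieval", "clustering", "methods"}
doc_b = {"clustering", "methods", "survey"}

for measure in (simple_matching, dice, jaccard, cosine, overlap):
    print(measure.__name__, round(measure(doc_a, doc_b), 3))
```

All five share the same numerator idea (the size of the intersection) and differ only in how they normalize for document length.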

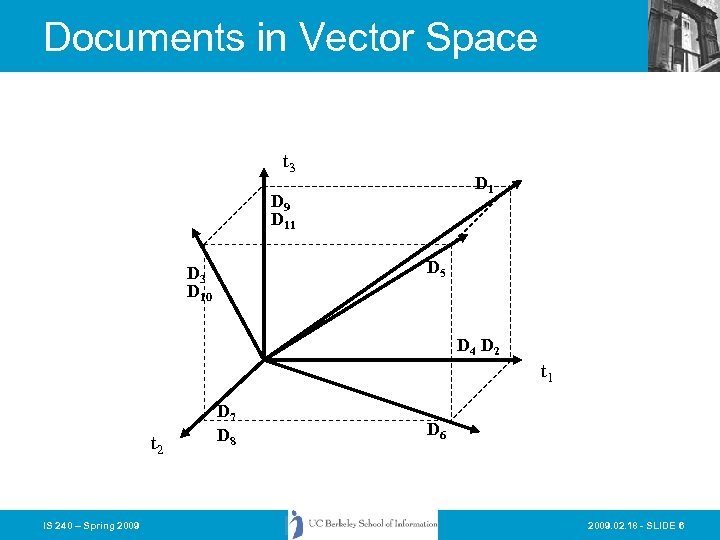

Documents in Vector Space
(Figure: documents D1 through D11 plotted in a three-dimensional term space with axes t1, t2, and t3.)

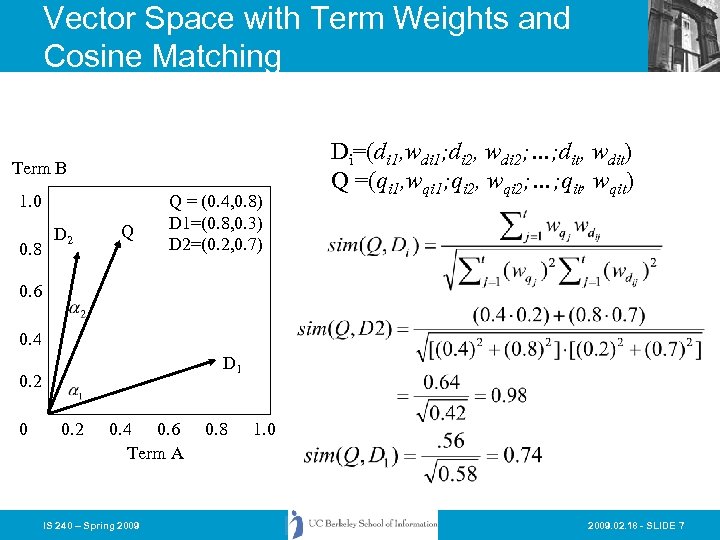

Vector Space with Term Weights and Cosine Matching

$D_i = (d_{i1}, w_{d_{i1}}; d_{i2}, w_{d_{i2}}; \ldots; d_{it}, w_{d_{it}})$
$Q = (q_{i1}, w_{q_{i1}}; q_{i2}, w_{q_{i2}}; \ldots; q_{it}, w_{q_{it}})$

Q = (0.4, 0.8)
D1 = (0.8, 0.3)
D2 = (0.2, 0.7)

(Figure: Q, D1, and D2 drawn as vectors, with Term A on the horizontal axis and Term B on the vertical axis, each running from 0 to 1.0.)
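To make the cosine matching concrete, the sketch below computes the cosine between the query Q and each of the two documents using the coordinates given on the slide. The helper name `cosine` is an assumption for illustration.

```python
import math

def cosine(u, v):
    # Dot product divided by the product of the vector lengths.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

Q = (0.4, 0.8)
D1 = (0.8, 0.3)
D2 = (0.2, 0.7)

sim_d1 = cosine(Q, D1)  # roughly 0.73
sim_d2 = cosine(Q, D2)  # roughly 0.98
print(round(sim_d1, 2), round(sim_d2, 2))
```

D2 points in nearly the same direction as Q, so it ranks above D1 even though D1 has the larger weight on Term A.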

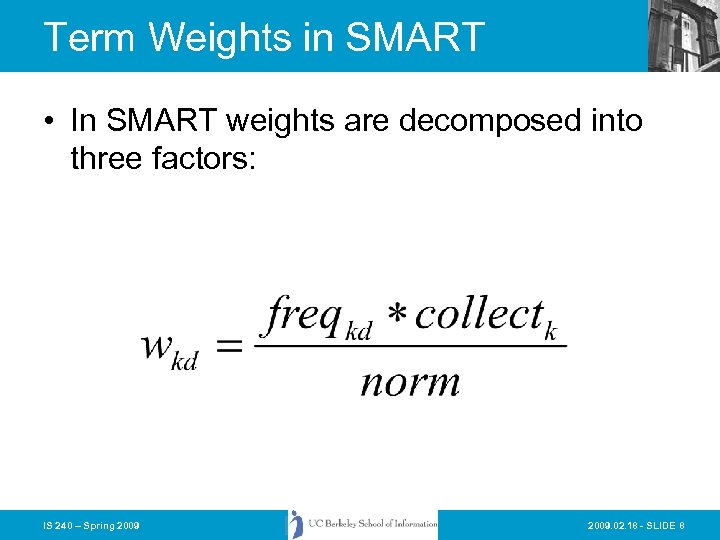

Term Weights in SMART
• In SMART weights are decomposed into three factors:

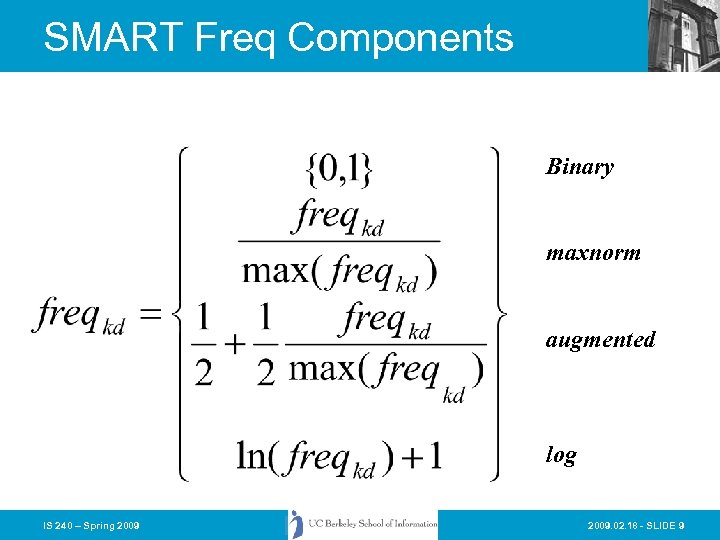

SMART Freq Components
• Binary
• maxnorm
• augmented
• log
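The slide's formulas for these components were in an image and are not recoverable from this text. The sketch below assumes the forms commonly cited for SMART-style term frequency components; the function names are illustrative, and the exact definitions used in the lecture may differ in detail.

```python
import math

def freq_binary(tf):
    # 1 if the term occurs in the document at all, else 0.
    return 1.0 if tf > 0 else 0.0

def freq_maxnorm(tf, max_tf):
    # Raw frequency divided by the largest term frequency in the document.
    return tf / max_tf

def freq_augmented(tf, max_tf):
    # Maxnorm rescaled into the range [0.5, 1.0].
    return 0.5 + 0.5 * tf / max_tf

def freq_log(tf):
    # Dampened frequency (assumed form: ln(tf) + 1 for tf > 0).
    return math.log(tf) + 1.0 if tf > 0 else 0.0

# Hypothetical term occurring 3 times in a document whose most
# frequent term occurs 6 times.
print(freq_binary(3), freq_maxnorm(3, 6), freq_augmented(3, 6), freq_log(3))
```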

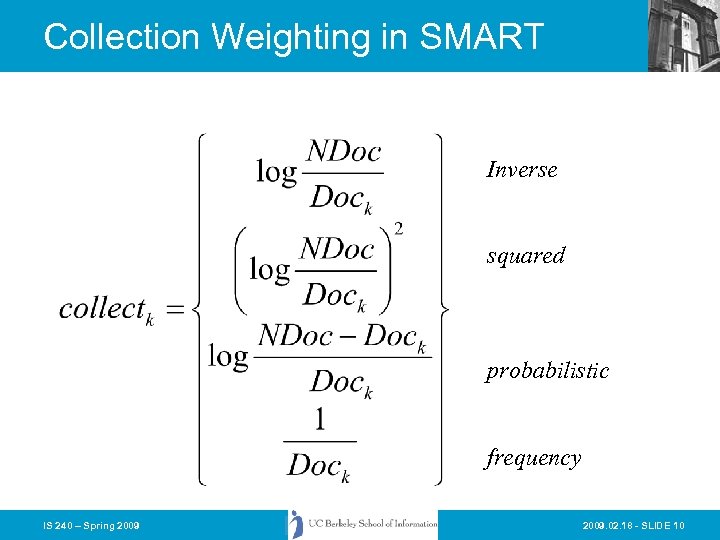

Collection Weighting in SMART
• Inverse
• squared
• probabilistic
• frequency
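As with the frequency components, the formulas themselves were in an image. A minimal sketch, assuming the usual forms of these four collection weights, where `n_docs` is the collection size N and `doc_freq` is the number of documents containing the term (both names are illustrative):

```python
import math

def coll_inverse(n_docs, doc_freq):
    # Inverse document frequency: log(N / n).
    return math.log(n_docs / doc_freq)

def coll_squared(n_docs, doc_freq):
    # Squared inverse document frequency.
    return math.log(n_docs / doc_freq) ** 2

def coll_probabilistic(n_docs, doc_freq):
    # Log odds form: log((N - n) / n).
    return math.log((n_docs - doc_freq) / doc_freq)

def coll_frequency(doc_freq):
    # Plain reciprocal of the document frequency.
    return 1.0 / doc_freq

# Hypothetical term found in 10 of 1000 documents.
print(coll_inverse(1000, 10), coll_squared(1000, 10),
      coll_probabilistic(1000, 10), coll_frequency(10))
```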

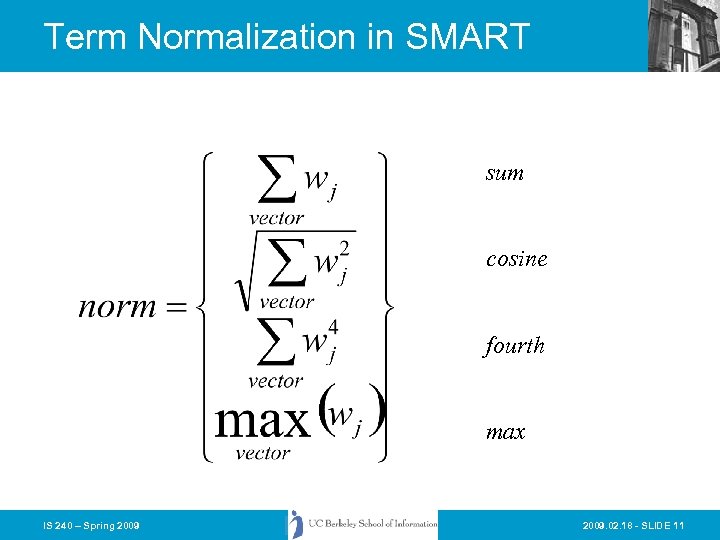

Term Normalization in SMART
• sum
• cosine
• fourth
• max
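The four normalization factors can be sketched as follows, assuming the commonly cited definitions (divide each weight by the sum of weights, by the vector length, by the sum of fourth powers, or by the maximum weight). The `normalize` function and its `scheme` argument are illustrative names, not part of SMART itself.

```python
import math

def normalize(weights, scheme):
    # Return the weights divided by the chosen normalization factor.
    if scheme == "sum":
        factor = sum(weights)
    elif scheme == "cosine":
        factor = math.sqrt(sum(w * w for w in weights))
    elif scheme == "fourth":
        factor = sum(w ** 4 for w in weights)
    elif scheme == "max":
        factor = max(weights)
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return [w / factor for w in weights]

# Hypothetical two-term document vector.
for scheme in ("sum", "cosine", "fourth", "max"):
    print(scheme, normalize([3.0, 4.0], scheme))
```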

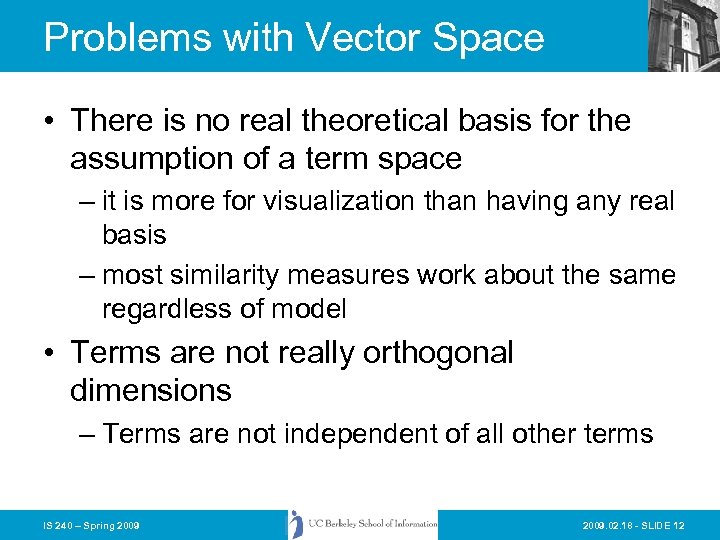

Problems with Vector Space
• There is no real theoretical basis for the assumption of a term space
  – it is more for visualization than having any real basis
  – most similarity measures work about the same regardless of model
• Terms are not really orthogonal dimensions
  – Terms are not independent of all other terms

Today
• Clustering
• Automatic Classification
• Cluster-enhanced search

Overview
• Introduction to Automatic Classification and Clustering
• Classification of Classification Methods
• Classification Clusters and Information Retrieval in Cheshire II
• DARPA Unfamiliar Metadata Project

Classification
• The grouping together of items (including documents or their representations) which are then treated as a unit. The groupings may be predefined or generated algorithmically. The process itself may be manual or automated.
• In document classification the items are grouped together because they are likely to be wanted together
  – For example, items about the same topic.

Automatic Indexing and Classification
• Automatic indexing is typically the simple deriving of keywords from a document and providing access to all of those words.
• More complex automatic indexing systems attempt to select controlled vocabulary terms based on terms in the document.
• Automatic classification attempts to automatically group similar documents using either:
  – A fully automatic clustering method.
  – An established classification scheme and set of documents already indexed by that scheme.

Background and Origins
• Early suggestion by Fairthorne
  – “The Mathematics of Classification”
• Early experiments by Maron (1961) and Borko and Bernick (1963)
• Work in numerical taxonomy and its application to information retrieval: Jardine, Sibson, van Rijsbergen, Salton (1970s).
• Early IR clustering work was more concerned with efficiency issues than semantic issues.

Document Space has High Dimensionality
• What happens beyond three dimensions?
• Similarity still has to do with how many tokens are shared in common.
• More terms -> harder to understand which subsets of words are shared among similar documents.
• One approach to handling high dimensionality: Clustering

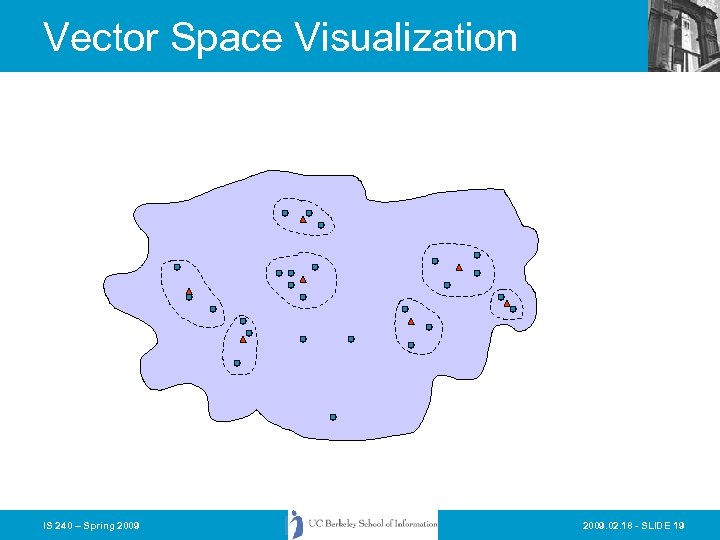

Vector Space Visualization

Cluster Hypothesis
• The basic notion behind the use of classification and clustering methods:
• “Closely associated documents tend to be relevant to the same requests.”
  – C. J. van Rijsbergen

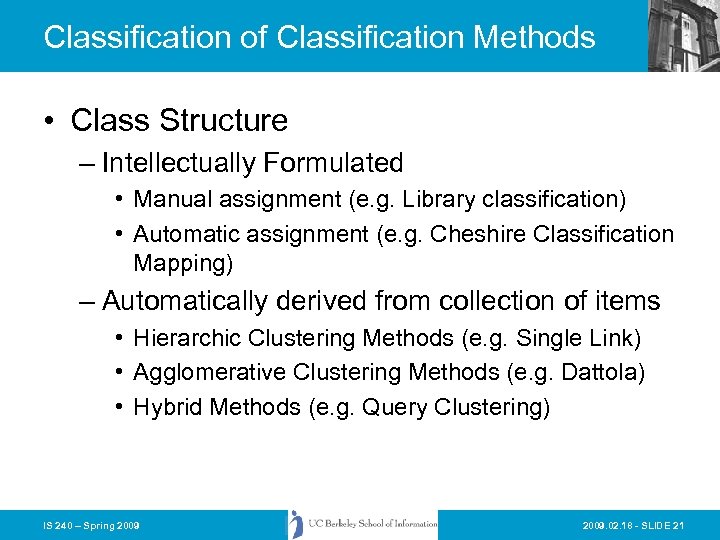

Classification of Classification Methods
• Class Structure
  – Intellectually Formulated
    • Manual assignment (e.g., Library classification)
    • Automatic assignment (e.g., Cheshire Classification Mapping)
  – Automatically derived from collection of items
    • Hierarchic Clustering Methods (e.g., Single Link)
    • Agglomerative Clustering Methods (e.g., Dattola)
    • Hybrid Methods (e.g., Query Clustering)

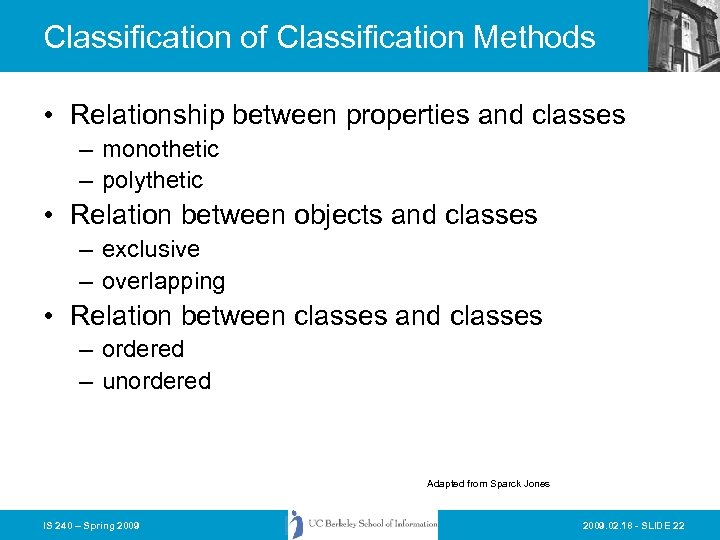

Classification of Classification Methods
• Relationship between properties and classes
  – monothetic
  – polythetic
• Relation between objects and classes
  – exclusive
  – overlapping
• Relation between classes and classes
  – ordered
  – unordered

Adapted from Sparck Jones

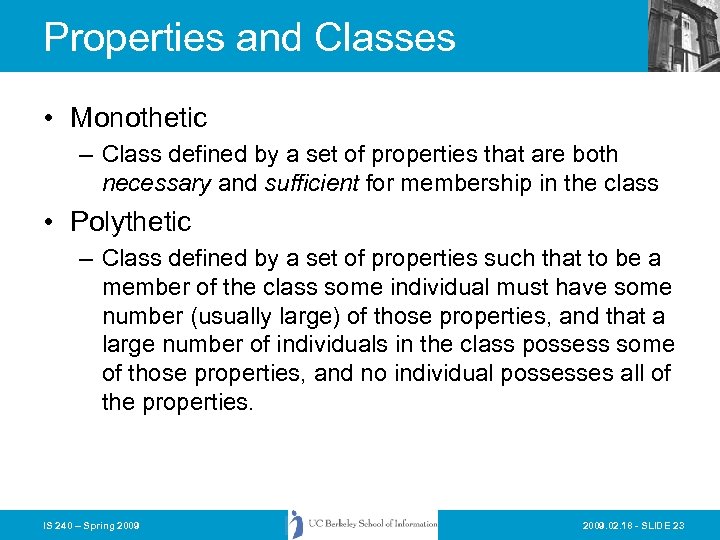

Properties and Classes
• Monothetic
  – Class defined by a set of properties that are both necessary and sufficient for membership in the class
• Polythetic
  – Class defined by a set of properties such that each member of the class must have some number (usually large) of those properties, a large number of individuals in the class possess each of those properties, and no individual necessarily possesses all of the properties.
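One way to read the two definitions is as membership tests over sets of properties. The sketch below is an illustration only: the property labels, the `min_shared` cutoff, and both function names are assumptions chosen for the example.

```python
def is_monothetic_member(item_props, defining_props):
    # Member if and only if the item has every defining property.
    return defining_props <= item_props

def is_polythetic_member(item_props, class_props, min_shared):
    # Member if the item has at least `min_shared` of the class properties;
    # no single property is required.
    return len(item_props & class_props) >= min_shared

# Hypothetical items described by properties A to D.
items = [{"A", "B", "C"}, {"A", "B", "D"}, {"A", "C", "D"}, {"B", "C", "D"}]

class_props = {"A", "B", "C", "D"}
polythetic = [is_polythetic_member(i, class_props, 3) for i in items]
monothetic = [is_monothetic_member(i, {"A", "B"}) for i in items]
print(polythetic)  # every item qualifies, yet none has all four properties
print(monothetic)  # only items holding both A and B qualify
```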

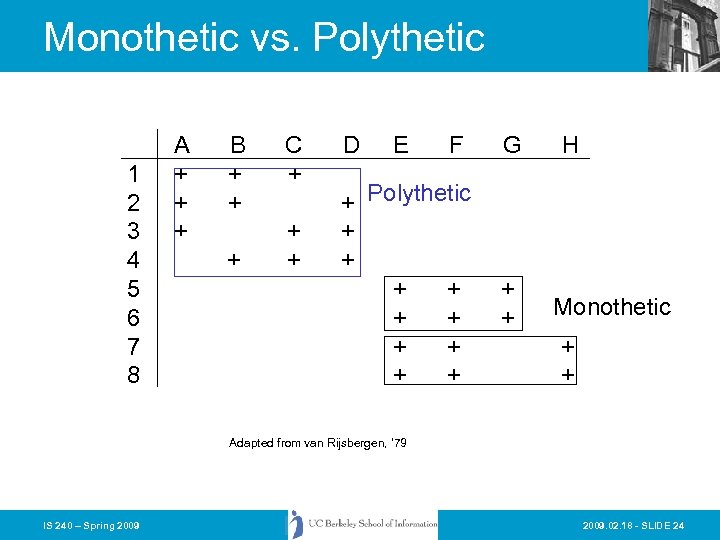

Monothetic vs. Polythetic
(Table: a grid labeled 1 through 8 on one axis and A through H on the other, with + marking which properties each individual possesses. One block of the grid is labeled Polythetic and another Monothetic. Adapted from van Rijsbergen, ’79.)

Exclusive vs. Overlapping
• Exclusive: an item belongs to a single class only
• Overlapping: items can belong to many classes, sometimes with a “membership weight”

Ordered vs. Unordered
• Ordered classes have some sort of structure imposed on them
  – Hierarchies are typical of ordered classes
• Unordered classes have no imposed precedence or structure and each class is considered on the same “level”
  – Typical in agglomerative methods

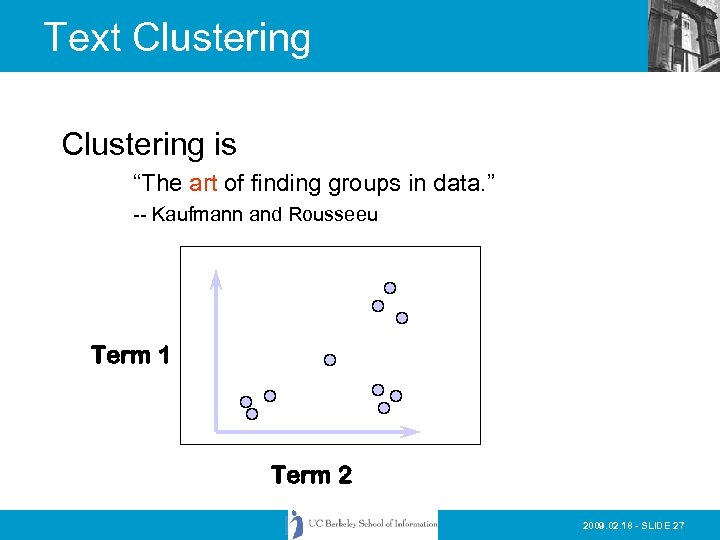

Text Clustering
Clustering is “The art of finding groups in data.”
  -- Kaufman and Rousseeuw
(Figure: documents plotted against Term 1 and Term 2 axes.)

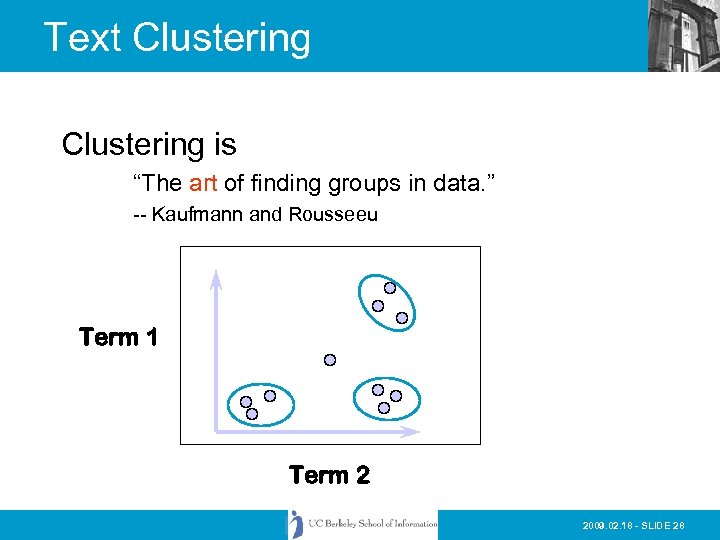


Text Clustering
• Finds overall similarities among groups of documents
• Finds overall similarities among groups of tokens
• Picks out some themes, ignores others

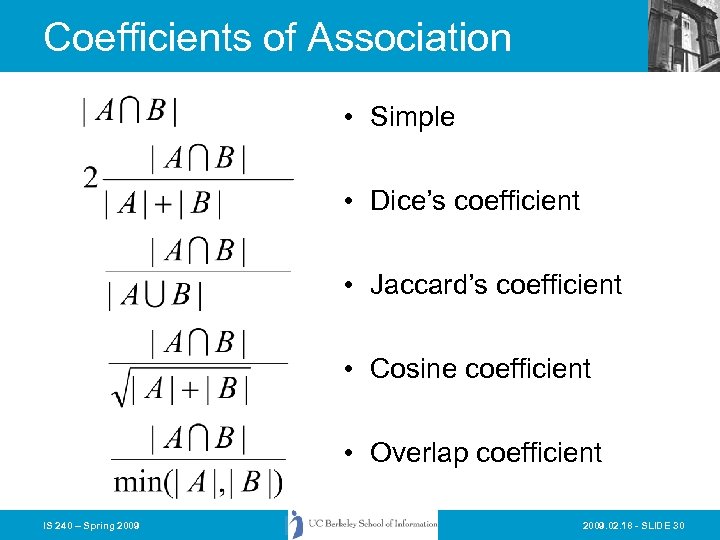

Coefficients of Association
• Simple
• Dice’s coefficient
• Jaccard’s coefficient
• Cosine coefficient
• Overlap coefficient

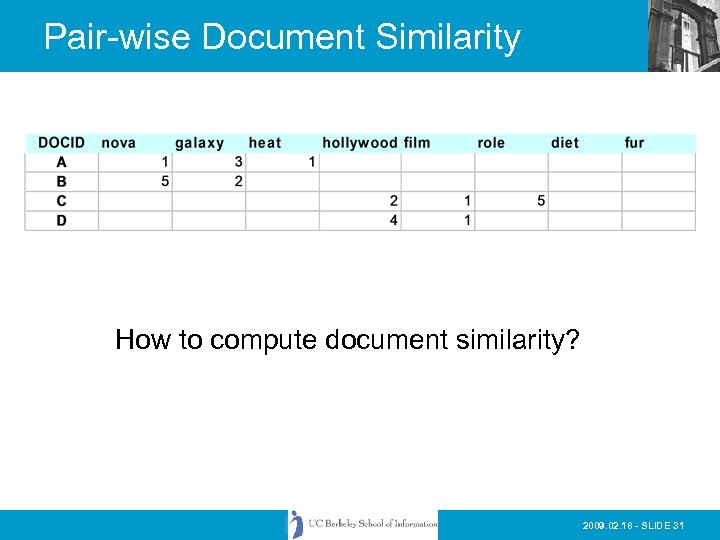

Pair-wise Document Similarity
How to compute document similarity?

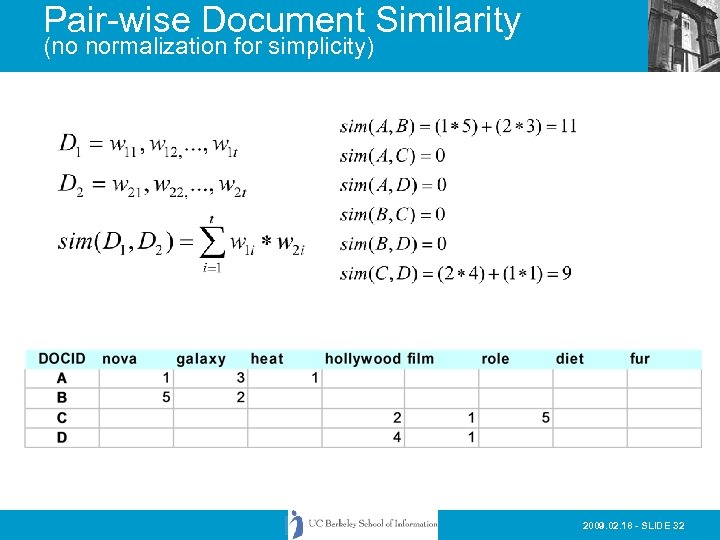

Pair-wise Document Similarity
(no normalization for simplicity)

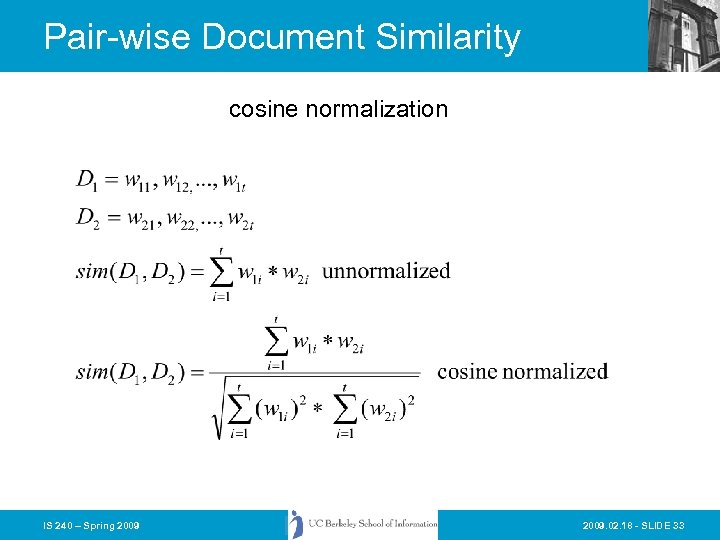

Pair-wise Document Similarity
cosine normalization

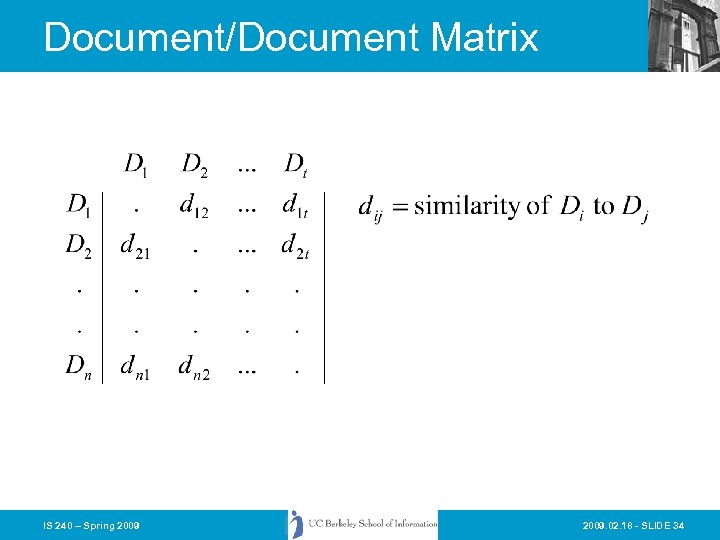

Document/Document Matrix
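The worked figures for these slides were images, so the sketch below uses made-up term weights. It shows one way to build a document/document matrix: cosine-normalize each document vector, then take the dot product of every pair. The sparse dict representation and all names are assumptions.

```python
import math

def cosine_normalize(vec):
    # Scale a sparse term -> weight vector to unit length.
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {t: w / norm for t, w in vec.items()} if norm else dict(vec)

def dot(u, v):
    # Only terms present in both documents contribute.
    return sum(w * v[t] for t, w in u.items() if t in v)

def doc_doc_matrix(docs):
    unit = [cosine_normalize(d) for d in docs]
    n = len(unit)
    return [[dot(unit[i], unit[j]) for j in range(n)] for i in range(n)]

# Hypothetical term weights for three documents.
docs = [
    {"star": 2, "galaxy": 1},
    {"star": 1, "film": 3},
    {"star": 4, "galaxy": 2},
]
matrix = doc_doc_matrix(docs)
for row in matrix:
    print([round(x, 2) for x in row])
```

The matrix is symmetric with ones on the diagonal, so only the upper triangle needs to be stored in practice.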

Clustering Methods
• Hierarchical
• Agglomerative
• Hybrid
• Automatic Class Assignment

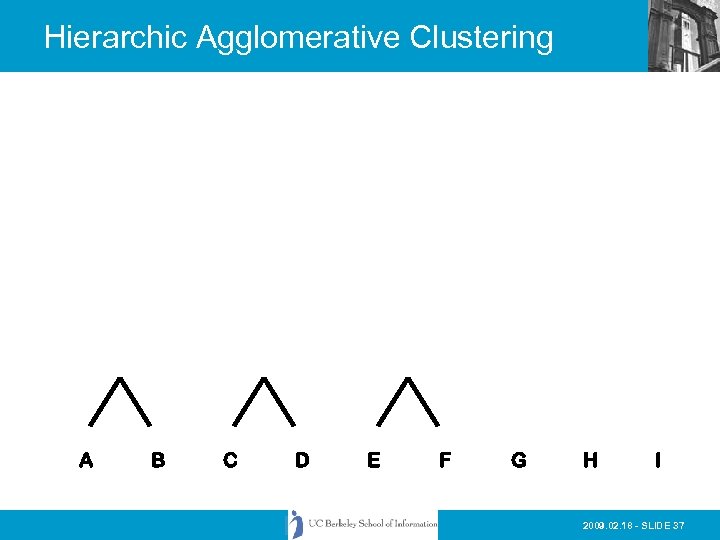

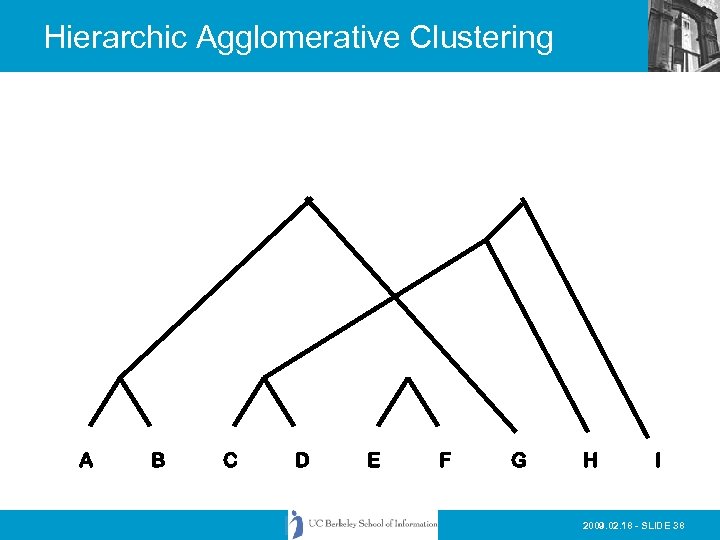

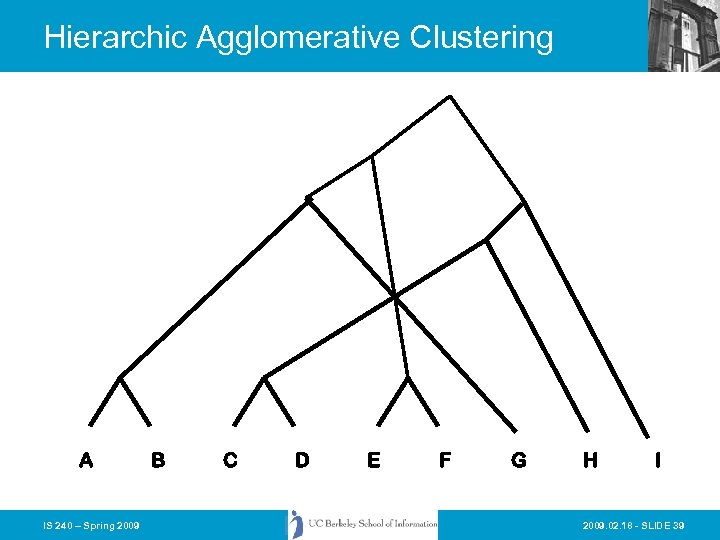

Hierarchic Agglomerative Clustering
• Basic method:
  1) Calculate all of the interdocument similarity coefficients
  2) Assign each document to its own cluster
  3) Fuse the most similar pair of current clusters
  4) Update the similarity matrix by deleting the rows for the fused clusters and calculating entries for the row and column representing the new cluster (centroid)
  5) Return to step 3 if there is more than one cluster left
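The basic method can be sketched roughly as follows. This version is a simplification under stated assumptions: it represents each cluster by the centroid of its members, uses cosine similarity, and recomputes cluster similarities on each pass instead of maintaining a similarity matrix. The sample vectors and function names are invented for illustration.

```python
import math
from itertools import combinations

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def centroid(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def agglomerate(docs):
    # Step 2: every document starts in its own cluster.
    clusters = {i: [i] for i in range(len(docs))}
    merges = []
    # Step 5: keep going while more than one cluster remains.
    while len(clusters) > 1:
        # Steps 1 and 3: find the most similar pair of current clusters.
        a, b = max(
            combinations(clusters, 2),
            key=lambda pair: cosine(
                centroid([docs[i] for i in clusters[pair[0]]]),
                centroid([docs[i] for i in clusters[pair[1]]]),
            ),
        )
        # Step 4: fuse them; the new centroid is recomputed on the next pass.
        clusters[a] = clusters[a] + clusters.pop(b)
        merges.append(sorted(clusters[a]))
    return merges

# Hypothetical two-term document vectors.
docs = [(1.0, 0.0), (0.9, 0.1), (0.0, 1.0), (0.2, 0.8)]
merges = agglomerate(docs)
print(merges)
```

The list of merges, read in order, is the hierarchy: early merges are the tight low-level clusters and the final merge is the root.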

Hierarchic Agglomerative Clustering
(Figure, repeated across three successive slides: items A through I being clustered.)

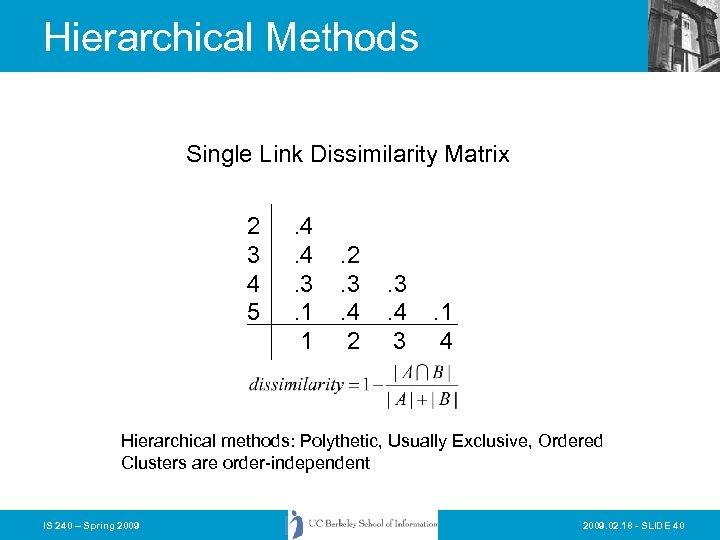

Hierarchical Methods

Single Link Dissimilarity Matrix

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | .4 | .4 | .3 | .1 |
| 2 |  | .2 | .3 | .4 |
| 3 |  |  | .3 | .4 |
| 4 |  |  |  | .1 |

Hierarchical methods: polythetic, usually exclusive, ordered. Clusters are order-independent.

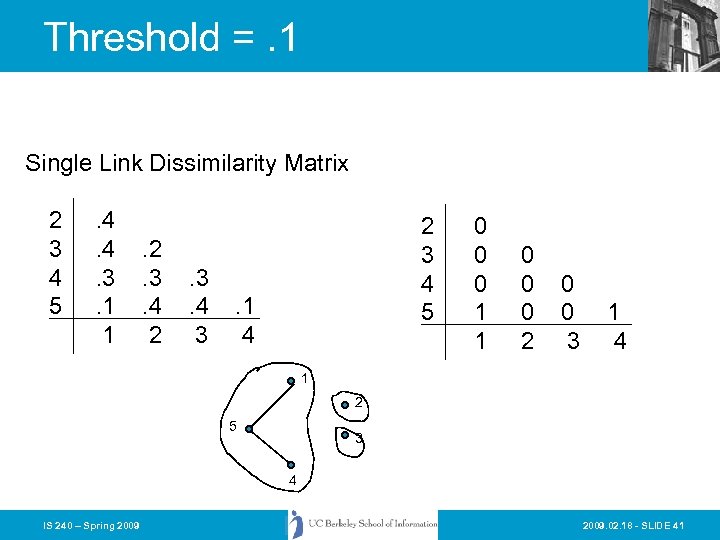

Threshold = .1

Single Link Dissimilarity Matrix

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | .4 | .4 | .3 | .1 |
| 2 |  | .2 | .3 | .4 |
| 3 |  |  | .3 | .4 |
| 4 |  |  |  | .1 |

Binary matrix at this threshold:

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 1 |
| 2 |  | 0 | 0 | 0 |
| 3 |  |  | 0 | 0 |
| 4 |  |  |  | 1 |

(Figure: graph of documents 1 through 5, linked according to the binary matrix.)

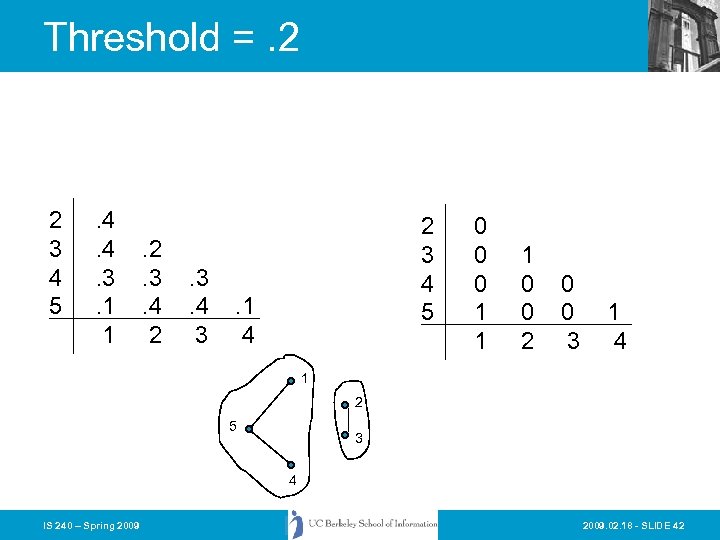

Threshold = .2

Single Link Dissimilarity Matrix

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | .4 | .4 | .3 | .1 |
| 2 |  | .2 | .3 | .4 |
| 3 |  |  | .3 | .4 |
| 4 |  |  |  | .1 |

Binary matrix at this threshold:

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 1 |
| 2 |  | 1 | 0 | 0 |
| 3 |  |  | 0 | 0 |
| 4 |  |  |  | 1 |

(Figure: graph of documents 1 through 5, linked according to the binary matrix.)

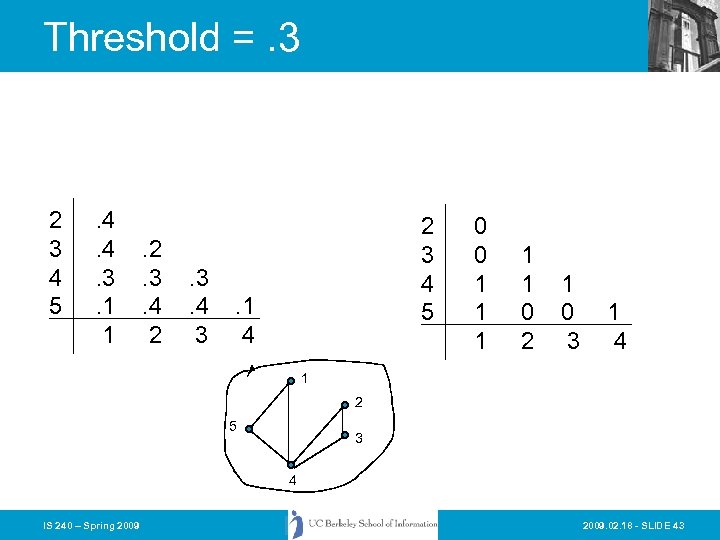

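Threshold = .3

Single Link Dissimilarity Matrix

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | .4 | .4 | .3 | .1 |
| 2 |  | .2 | .3 | .4 |
| 3 |  |  | .3 | .4 |
| 4 |  |  |  | .1 |

Binary matrix at this threshold (row 2 is partly lost in the extracted text and is filled in here from the dissimilarity matrix):

|   | 2 | 3 | 4 | 5 |
|---|---|---|---|---|
| 1 | 0 | 0 | 1 | 1 |
| 2 |  | 1 | 1 | 0 |
| 3 |  |  | 1 | 0 |
| 4 |  |  |  | 1 |

(Figure: graph of documents 1 through 5, linked according to the binary matrix.)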
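The threshold walk-through can be reproduced in a few lines. The sketch below takes the dissimilarity values from the matrix on these slides, links every pair whose dissimilarity is at or below the threshold, and reports the connected groups as single-link clusters. The union-find helper and the function name are implementation choices for this example, not something the slides prescribe.

```python
# Pairwise dissimilarities for documents 1..5, as given on the slides.
DISSIMILARITY = {
    (1, 2): 0.4, (1, 3): 0.4, (1, 4): 0.3, (1, 5): 0.1,
    (2, 3): 0.2, (2, 4): 0.3, (2, 5): 0.4,
    (3, 4): 0.3, (3, 5): 0.4,
    (4, 5): 0.1,
}

def single_link_clusters(dissim, items, threshold):
    # Union-find: each item starts as its own cluster root.
    parent = {i: i for i in items}

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    # Link any pair whose dissimilarity is within the threshold.
    for (a, b), d in dissim.items():
        if d <= threshold:
            parent[find(a)] = find(b)

    groups = {}
    for i in items:
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

for t in (0.1, 0.2, 0.3):
    print(t, single_link_clusters(DISSIMILARITY, range(1, 6), t))
```

Raising the threshold only ever adds links, so the clusters at each level nest inside those of the next level, which is what makes the result a hierarchy.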

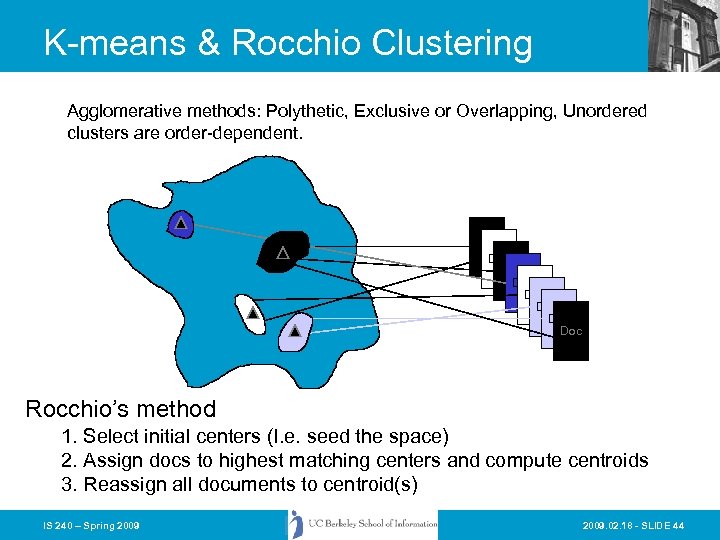

K-means & Rocchio Clustering

Agglomerative methods: polythetic, exclusive or overlapping, unordered. Clusters are order-dependent.

Rocchio’s method
1. Select initial centers (i.e., seed the space)
2. Assign docs to highest matching centers and compute centroids
3. Reassign all documents to centroid(s)

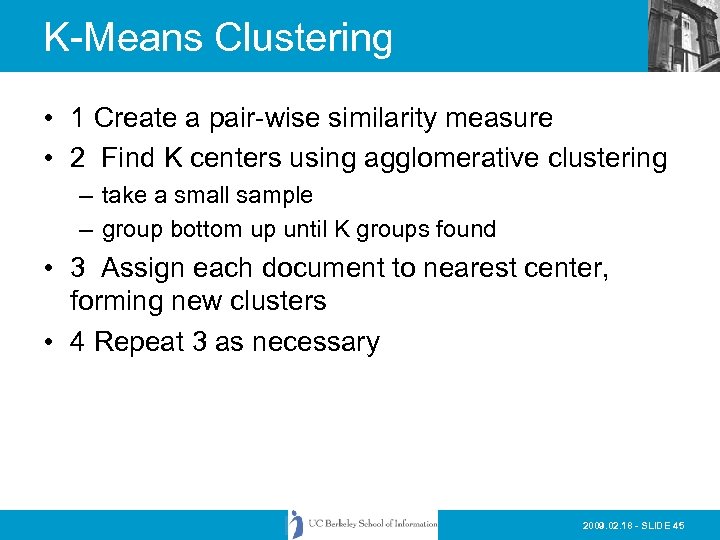

K-Means Clustering
1. Create a pair-wise similarity measure
2. Find K centers using agglomerative clustering
  – take a small sample
  – group bottom up until K groups found
3. Assign each document to nearest center, forming new clusters
4. Repeat 3 as necessary
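The assign-and-repeat loop in steps 3 and 4 might look like the sketch below. It makes several assumptions for brevity: the K starting centers are passed in directly (the agglomerative seeding of step 2 is not shown), "nearest" means smallest squared Euclidean distance, and centers are recomputed as cluster means after each pass. The points and names are invented.

```python
def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def mean(points):
    return tuple(sum(col) / len(points) for col in zip(*points))

def kmeans(points, centers, max_iterations=10):
    clusters = []
    for _ in range(max_iterations):
        # Step 3: assign each point to its nearest current center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda c: sq_dist(p, centers[c]))
            clusters[nearest].append(p)
        # Recompute centers; an empty cluster keeps its old center.
        new_centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
        # Step 4: stop repeating once nothing moves.
        if new_centers == centers:
            break
        centers = new_centers
    return centers, clusters

# Hypothetical 2-D points with two obvious groups, and two seed centers.
points = [(0, 0), (0, 1), (1, 0), (9, 9), (9, 10), (10, 9)]
centers, clusters = kmeans(points, [(0, 0), (10, 9)])
print(clusters)
```

Because the result depends on the seeds and on the order of reassignment, different starting centers can give different clusters, which is the order-dependence noted for these methods.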

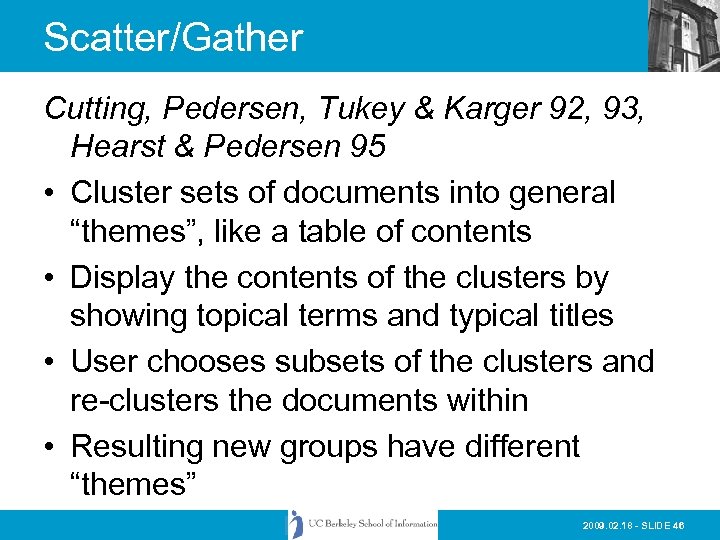

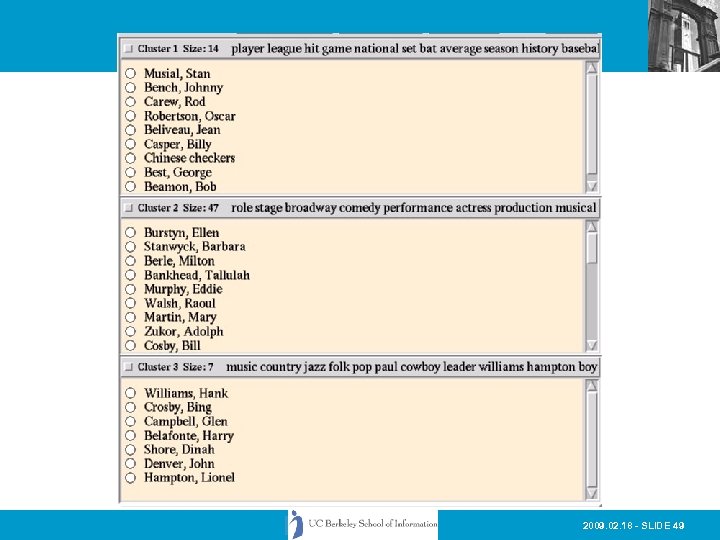

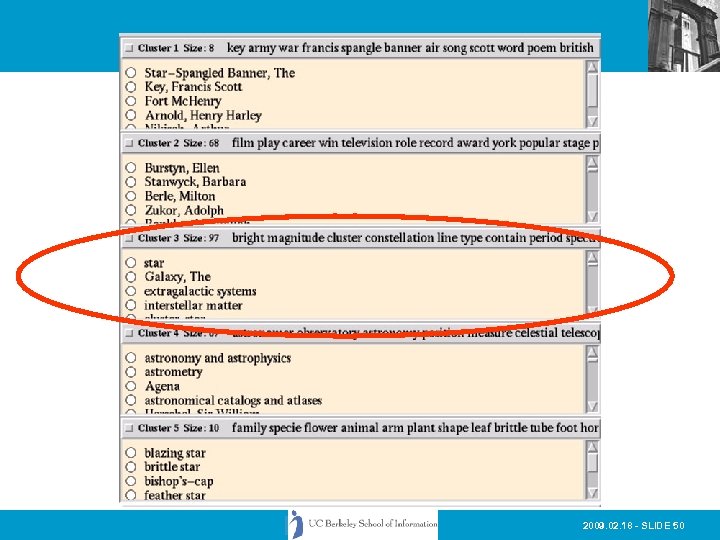

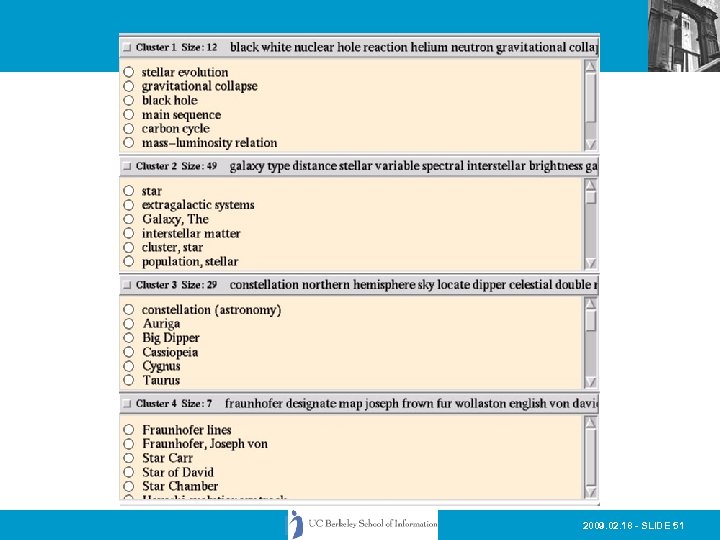

Scatter/Gather
Cutting, Pedersen, Tukey & Karger 92, 93; Hearst & Pedersen 95
• Cluster sets of documents into general “themes”, like a table of contents
• Display the contents of the clusters by showing topical terms and typical titles
• User chooses subsets of the clusters and re-clusters the documents within
• Resulting new groups have different “themes”

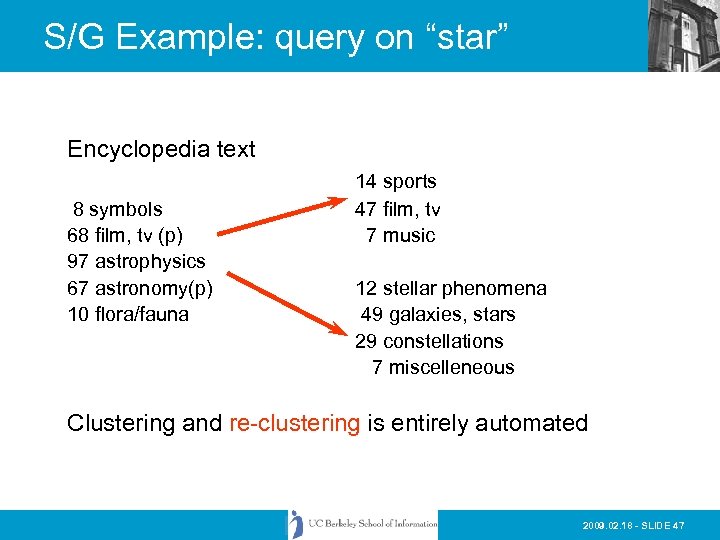

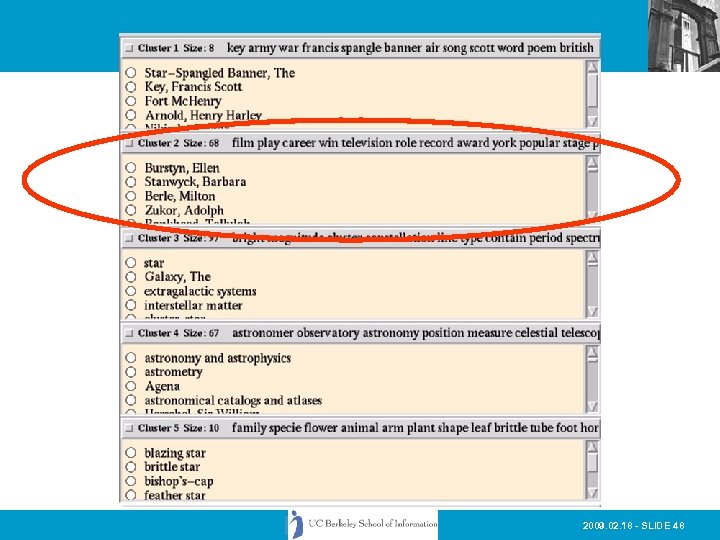

S/G Example: query on “star”
Encyclopedia text
• Initial clusters:
  – 8 symbols
  – 68 film, tv (p)
  – 97 astrophysics
  – 67 astronomy (p)
  – 10 flora/fauna
• Re-clustered groups:
  – 14 sports; 47 film, tv; 7 music
  – 12 stellar phenomena; 49 galaxies, stars; 29 constellations; 7 miscellaneous
Clustering and re-clustering is entirely automated


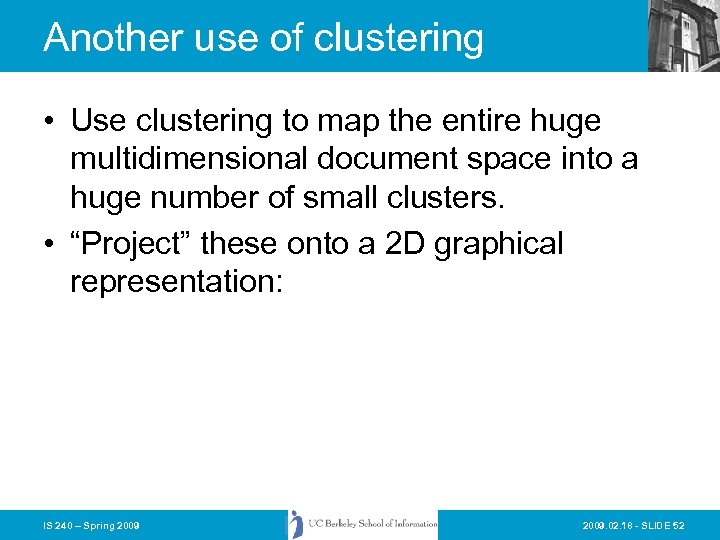

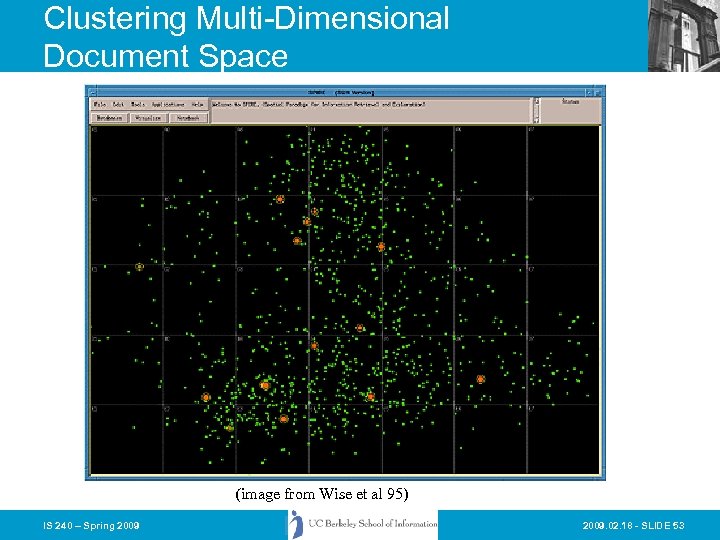

Another use of clustering
• Use clustering to map the entire huge multidimensional document space into a huge number of small clusters.
• “Project” these onto a 2D graphical representation:

Clustering Multi-Dimensional Document Space
(image from Wise et al 95)

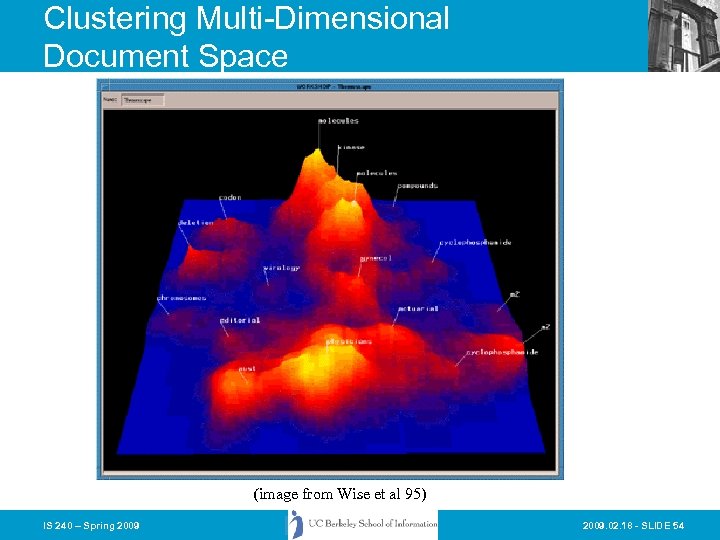


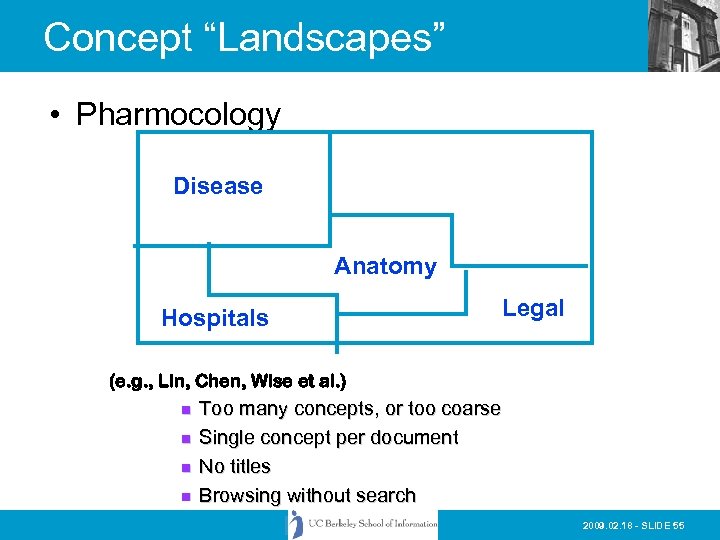

Concept “Landscapes”
(e.g., Lin, Chen, Wise et al.)
(Figure: a concept map with regions labeled Pharmacology, Disease, Anatomy, Hospitals, Legal.)
• Too many concepts, or too coarse
• Single concept per document
• No titles
• Browsing without search

Clustering
• Advantages:
  – See some main themes
• Disadvantage:
  – Many ways documents could group together are hidden
• Thinking point: what is the relationship to classification systems and facets?

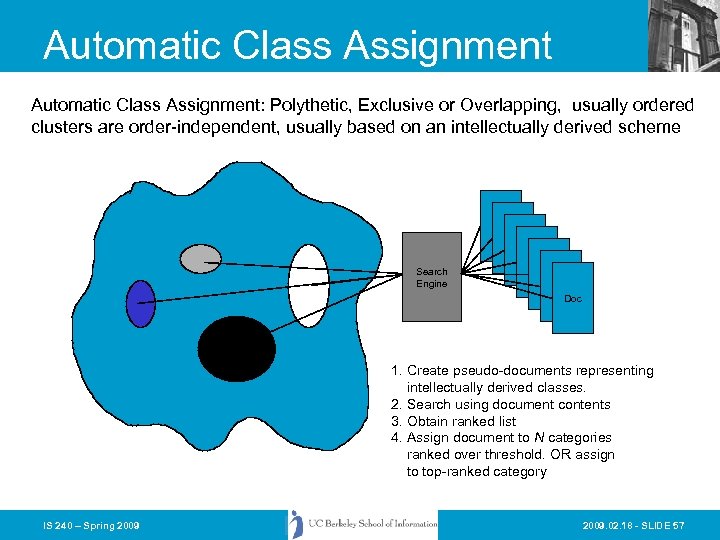

Automatic Class Assignment

Automatic class assignment: polythetic, exclusive or overlapping, usually ordered. Clusters are order-independent and usually based on an intellectually derived scheme.

(Figure: documents passed to a search engine.)

1. Create pseudo-documents representing intellectually derived classes.
2. Search using document contents.
3. Obtain ranked list.
4. Assign document to the N categories ranked over threshold, OR assign to the top-ranked category.
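The four steps can be sketched with a toy ranking function. In the example below, each class is represented by a pseudo-document of terms, the new document's text is used as the query, classes are ranked by cosine similarity over raw term counts, and the document is assigned either to the top class or to every class scoring at or above a threshold. The class labels, texts, threshold value, and cosine ranking are all assumptions standing in for whatever search engine is actually used.

```python
import math
from collections import Counter

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def rank_classes(doc_text, pseudo_docs):
    # Steps 2 and 3: use the document as a query and rank the classes.
    doc = Counter(doc_text.lower().split())
    scored = [(cosine(doc, Counter(text.lower().split())), label)
              for label, text in pseudo_docs.items()]
    return sorted(scored, reverse=True)

def assign(doc_text, pseudo_docs, threshold=None):
    # Step 4: top-ranked class, or all classes at or above the threshold.
    ranked = rank_classes(doc_text, pseudo_docs)
    if threshold is None:
        return [ranked[0][1]]
    return [label for score, label in ranked if score >= threshold]

# Step 1: hypothetical pseudo-documents for two classes.
pseudo_docs = {
    "astronomy": "star galaxy telescope planet orbit",
    "film": "star actor film director cinema",
}
doc = "the telescope tracked a star in a distant galaxy"
print(assign(doc, pseudo_docs))
print(assign(doc, pseudo_docs, threshold=0.1))
```

The threshold form gives overlapping classes, while the top-ranked form gives exclusive ones, matching the "exclusive or overlapping" description.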

Automatic Categorization in Cheshire II
• Cheshire supports a method we call “classification clustering” that relies on having a set of records that have been indexed using some controlled vocabulary.
• Classification clustering has the following steps…

Cheshire II - Cluster Generation
• Define basis for clustering records.
  – Select field (i.e., the controlled vocabulary terms) to form the basis of the cluster.
  – Select evidence fields to use as contents of the pseudo-documents (e.g., the titles or other topical parts).
• During indexing, cluster keys are generated with basis and evidence from each record.
• Cluster keys are sorted and merged on basis, and pseudo-documents are created for each unique basis element, containing all evidence fields.
• Pseudo-documents (class clusters) are indexed on combined evidence fields.
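The key generation and merge steps can be illustrated with a small sketch. This is not Cheshire II code: the record layout, the field names `lcc` and `title`, and the function name are hypothetical, and the final indexing of the pseudo-documents is left out.

```python
from collections import defaultdict

def build_pseudo_documents(records, basis_field, evidence_fields):
    # Generate one (basis, evidence) cluster key per basis value per record.
    keys = []
    for record in records:
        evidence = " ".join(record.get(f, "") for f in evidence_fields).strip()
        for basis in record.get(basis_field, []):
            keys.append((basis, evidence))

    # Sort the keys, then merge on basis so each unique basis element
    # collects the evidence from every record that carries it.
    keys.sort()
    merged = defaultdict(list)
    for basis, evidence in keys:
        merged[basis].append(evidence)
    return {basis: " ".join(parts) for basis, parts in merged.items()}

# Hypothetical records: a class code as basis, the title as evidence.
records = [
    {"lcc": ["QB"], "title": "Stars and galaxies"},
    {"lcc": ["QB"], "title": "Observing the planets"},
    {"lcc": ["PN"], "title": "Film stars of the 1930s"},
]
pseudo_docs = build_pseudo_documents(records, "lcc", ["title"])
print(pseudo_docs)
```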

Cheshire II - Two-Stage Retrieval
• Using the LC Classification System
  – Pseudo-document created for each LC class containing terms derived from “content-rich” portions of documents in that class (e.g., subject headings, titles, etc.)
  – Permits searching by any term in the class
  – Ranked probabilistic retrieval techniques attempt to present the “Best Matches” to a query first.
  – User selects classes to feed back for the “second stage” search of documents.
• Can be used with any classified/indexed collection.
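A rough sketch of the two-stage flow follows. It is an illustration under loud assumptions: a simple count of matching query terms stands in for the ranked probabilistic retrieval, the user's class selection is hard-coded, and the class codes, documents, and function names are invented.

```python
def score(query, text):
    # Stand-in scorer: count words in the text that match a query term.
    terms = set(query.lower().split())
    return sum(1 for word in text.lower().split() if word in terms)

def rank(query, items):
    # items maps an id to its text; return matching ids, best first.
    scored = [(score(query, text), key) for key, text in items.items()]
    ordered = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [key for s, key in ordered if s > 0]

def second_stage(query, selected_classes, documents):
    # Search only documents belonging to the classes the user fed back.
    pool = {doc_id: d["text"] for doc_id, d in documents.items()
            if d["class"] in selected_classes}
    return rank(query, pool)

# Hypothetical class pseudo-documents and classified documents.
class_pseudo_docs = {
    "QB": "stars galaxies astronomy planets",
    "PN": "film stars cinema actors",
}
documents = {
    1: {"class": "QB", "text": "stars and galaxies"},
    2: {"class": "PN", "text": "film stars of the 1930s"},
    3: {"class": "QB", "text": "observing the planets"},
}

# Stage 1: rank classes for the query and show them to the user.
classes = rank("stars", class_pseudo_docs)
# Stage 2: the user picks the astronomy class; search within it.
hits = second_stage("stars", ["QB"], documents)
print(classes, hits)
```

The first stage lets the user disambiguate a query such as "stars" by class before any individual documents are retrieved.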

Cheshire II Demo
• Examples from the:
  – SciMentor (BioSearch) project
    • Journal of Biological Chemistry and MEDLINE data
  – CHESTER (EconLit)
    • Journal of Economic Literature subjects
  – Unfamiliar Metadata & TIDES Projects
    • Basis for clusters is a normalized Library of Congress Class Number
    • Evidence is provided by terms from record titles (and subject headings for the “all languages” set)
    • Five different training sets (Russian, German, French, Spanish, and All Languages)
    • Testing cross-language retrieval and classification
  – 4W Project Search