- Number of slides: 31

Lecture 25 • Multiple Regression Diagnostics (Sections 19.4-19.5) • Polynomial Models (Section 20.2)

Lecture 25 • Multiple Regression Diagnostics (Sections 19. 4 -19. 5) • Polynomial Models (Section 20. 2)

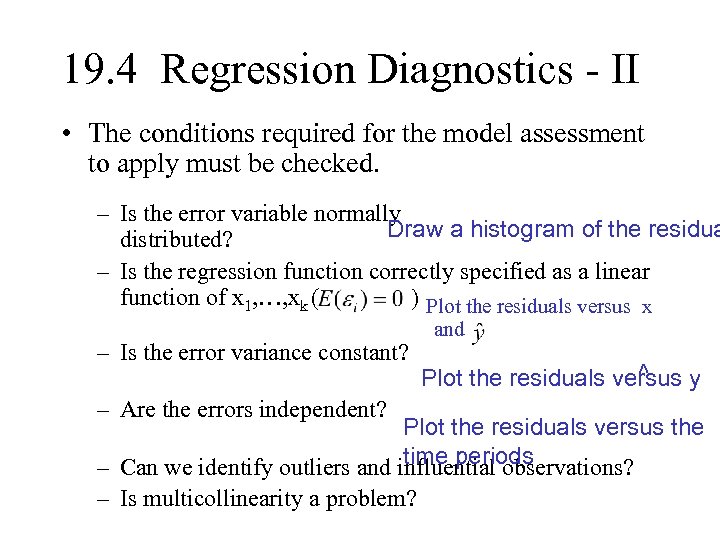

19.4 Regression Diagnostics - II
• The conditions required for the model assessment to apply must be checked:
  – Is the error variable normally distributed? Draw a histogram of the residuals.
  – Is the regression function correctly specified as a linear function of $x_1, \dots, x_k$? Plot the residuals versus each $x$ and versus $\hat{y}$.
  – Is the error variance constant? Plot the residuals versus $\hat{y}$.
  – Are the errors independent? Plot the residuals versus the time periods.
  – Can we identify outliers and influential observations?
  – Is multicollinearity a problem?
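The residuals and fitted values that these plots need come from an ordinary least-squares fit. A minimal NumPy sketch, assuming the predictors are held in an array `X` (one column per $x_j$) and the response in `y`; the function name `fit_ols` and the simulated data are hypothetical, and this is not a JMP feature:

```python
import numpy as np


def fit_ols(X, y):
    """Least-squares fit of y on the columns of X plus an intercept.

    Returns (coefficients b_0..b_k, fitted values y_hat, residuals e = y - y_hat).
    """
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]  # treat a single predictor as one column
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), X])  # prepend the intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    y_hat = design @ coef
    return coef, y_hat, y - y_hat


# Simulated example data (assumed, not from the lecture)
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, 50)
y = 3 + 2 * x + rng.normal(0, 1, 50)
coef, y_hat, e = fit_ols(x, y)
# Diagnostic plots would use: histogram of e, e vs x, e vs y_hat, e vs row (time) order.
```

With an intercept in the model the residuals sum to zero and have no linear association with any predictor, so a systematic pattern in these plots (curvature, a funnel shape, runs over time) points to a violated condition rather than to the fitting itself.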

Effects of Violated Assumptions
• Curvature: slopes are no longer meaningful (potential remedy: transformations of the response and predictors).
• Violations of the other assumptions: tests, p-values, and CIs are no longer accurate; that is, inference is invalidated (remedies may be difficult).

Influential Observation
• Influential observation: an observation is influential if removing it would markedly change the results of the analysis.
• To be influential, a point must either (i) be an outlier in terms of the relationship between its y and x’s, or (ii) have unusually distant x’s (high leverage) and not fall exactly on the relationship between y and the x’s that the rest of the data follows.
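High leverage is usually quantified by the diagonal elements $h_{ii}$ of the hat matrix, which measure how far observation i's x values lie from the centre of all the x values. This is a sketch under the assumption of an OLS model with an intercept and k predictors; `leverages` is a hypothetical helper name and the data are made up. The cut-off $h_{ii} > 2(k+1)/n$ used below is one common textbook convention (some texts use $3(k+1)/n$), not a rule from this lecture.

```python
import numpy as np


def leverages(X):
    """Diagonal h_ii of the hat matrix H = Z (Z'Z)^{-1} Z', where Z = [1, X]."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    Z = np.column_stack([np.ones(X.shape[0]), X])
    # With the reduced QR factorization Z = QR, H = Q Q',
    # so h_ii is the squared length of row i of Q.
    Q, _ = np.linalg.qr(Z)
    return np.sum(Q**2, axis=1)


x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 20.0])  # illustrative data; the last x is far away
h = leverages(x)
n, k = len(x), 1
high = np.flatnonzero(h > 2 * (k + 1) / n)  # convention, see the lead-in
```

The leverages always sum to the number of coefficients (here 2), so their average is $(k+1)/n$ and the cut-off flags points with at least twice the average leverage. A high-leverage point is influential only if it also departs from the pattern followed by the rest of the data.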

Simple Linear Regression Example
• Data in salary.jmp: Y = Weekly Salary, X = Years of Experience.

Identification of Influential Observations
• Cook’s distance is a measure of the influence of a point: the effect that omitting the observation has on the estimated regression coefficients.
• Use Save Columns, Cook’s D Influence to obtain Cook’s distance.
• Plot Cook’s distances: Graph, Overlay Plot, put Cook’s D Influence in Y and leave X blank (plots Cook’s …
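Cook's distance can also be computed directly from the residuals $e_i$ and the leverages $h_{ii}$ (the diagonal elements of the hat matrix). The sketch below uses the standard closed form $D_i = \frac{e_i^2}{(k+1)s_e^2}\cdot\frac{h_{ii}}{(1-h_{ii})^2}$, where k is the number of predictors and $s_e^2 = SSE/(n-k-1)$; this is equivalent to measuring how far all fitted values move when observation i is omitted. The function name and the simulated data are assumptions for illustration, and the values are expected, though not verified here, to agree with JMP's Cook's D Influence column.

```python
import numpy as np


def cooks_distance(X, y):
    """Cook's distance D_i for every observation of an OLS fit with an intercept."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    y = np.asarray(y, dtype=float)
    n = len(y)
    Z = np.column_stack([np.ones(n), X])
    m = Z.shape[1]                      # number of coefficients, k + 1
    coef, *_ = np.linalg.lstsq(Z, y, rcond=None)
    e = y - Z @ coef                    # residuals
    s2 = e @ e / (n - m)                # s_e^2 = SSE / (n - k - 1)
    Q, _ = np.linalg.qr(Z)
    h = np.sum(Q**2, axis=1)            # leverages h_ii
    return e**2 / (m * s2) * h / (1 - h) ** 2


# Simulated data (assumed): 19 points near a line plus one distant, off-line point.
rng = np.random.default_rng(1)
x = rng.uniform(0, 10, 20)
y = 1 + 0.5 * x + rng.normal(0, 1, 20)
x[0], y[0] = 25.0, 0.0
D = cooks_distance(x, y)
flagged = np.flatnonzero(D > 1)         # rule of thumb: D_i > 1 means high influence
```

Besides the $D_i > 1$ filter, the Cook's Distance slide also recommends looking at any observation whose $D_i$ is much larger than all the others, even when it is below 1.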

Cook’s Distance
• Rule of thumb: an observation with Cook’s distance $D_i > 1$ has high influence. You should also be concerned about any observation that has $D_i < 1$ but a much bigger $D_i$ than any other observation.
Ex. 19.2:

Strategy for dealing with influential observations/outliers
• Do the conclusions change when the observation is deleted?
  – If no: proceed with the observation included. Study it to see if anything can be learned.
  – If yes: is there reason to believe the case belongs to a population other than the one under investigation?
    • If yes: omit the case and proceed.
    • If no: does the case have unusually “distant” independent variables?
      – If yes: omit the case and proceed. Report conclusions for the reduced range of explanatory variables.
      – If no: not much can be said. More data are needed to resolve the question.
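The first question in this strategy, whether the conclusions change when the observation is deleted, can be checked by refitting without it. A minimal sketch with a hypothetical helper `fit_with_and_without` and made-up numbers; in practice one would also compare t statistics and predictions, not only the coefficient values returned here.

```python
import numpy as np


def fit_with_and_without(X, y, i):
    """Return OLS coefficients (intercept first) with all rows and with row i omitted."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    y = np.asarray(y, dtype=float)
    Z = np.column_stack([np.ones(len(y)), X])
    keep = np.arange(len(y)) != i          # boolean mask that drops row i
    full, *_ = np.linalg.lstsq(Z, y, rcond=None)
    reduced, *_ = np.linalg.lstsq(Z[keep], y[keep], rcond=None)
    return full, reduced


# Illustrative data: the first five points lie exactly on y = 1 + 2x; the sixth does not.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 20.0])
y = np.array([3.0, 5.0, 7.0, 9.0, 11.0, 10.0])
full, reduced = fit_with_and_without(x, y, i=5)
# The slope is about 0.25 with the point and exactly 2 without it,
# so here the conclusions clearly change.
```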

Multicollinearity
• Multicollinearity: a condition in which the independent variables are highly correlated.
• Exact collinearity: Y = Weight, X1 = Height in inches, X2 = Height in feet. Because X1 = 12·X2, different combinations of coefficients on X1 and X2 provide the same predictions.
• Multicollinearity causes two kinds of difficulties:
  – The t statistics appear to be too small.
  – The b coefficients cannot be interpreted as …
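The height example can be reproduced numerically: with an intercept, height in inches, and height in feet as columns, the design matrix has rank 2 rather than 3, so the least-squares coefficients are not unique. The heights and coefficient values below are made up for illustration.

```python
import numpy as np

height_ft = np.array([5.0, 5.5, 6.0, 6.2, 5.8])  # illustrative heights in feet
height_in = 12 * height_ft                        # X1 = 12 * X2 exactly
Z = np.column_stack([np.ones(5), height_in, height_ft])

rank = np.linalg.matrix_rank(Z)   # 2: one column is a multiple of another

# Two different (hypothetical) coefficient vectors (b0, b1, b2):
b = np.array([-200.0, 5.0, 0.0])
b_alt = b + 10 * np.array([0.0, 1.0, -12.0])      # (-200, 15, -120)
same_predictions = np.allclose(Z @ b, Z @ b_alt)  # True
```

Adding any amount c to $b_1$ while subtracting 12c from $b_2$ leaves every prediction unchanged, which is why the individual coefficients cannot be pinned down under exact collinearity.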

Multicollinearity Diagnostics
• Diagnostics:
  – High correlation between independent variables
  – Counterintuitive signs on regression coefficients
  – Low values for t statistics despite a significant overall fit, as measured by the F statistic
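The first diagnostic can be computed as the correlation matrix of the predictors. The variance inflation factor (VIF) is an additional, widely used measure that is not on the slide; it is included here as a companion check, and values above about 10 are commonly read as a warning sign (a convention, not a rule from this lecture). Function names and simulated data are hypothetical.

```python
import numpy as np


def predictor_correlations(X):
    """Pairwise correlation matrix of the predictor columns of X."""
    return np.corrcoef(np.asarray(X, dtype=float), rowvar=False)


def vif(X):
    """VIF_j = 1 / (1 - R_j^2), R_j^2 from regressing column j on the other columns."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, X[:, j], rcond=None)
        resid = X[:, j] - others @ coef
        r2 = 1 - resid @ resid / np.sum((X[:, j] - X[:, j].mean()) ** 2)
        out[j] = 1 / (1 - r2)
    return out


# Simulated predictors: x2 is nearly a copy of x1, x3 is unrelated.
rng = np.random.default_rng(2)
x1 = rng.normal(size=100)
x2 = x1 + 0.05 * rng.normal(size=100)
x3 = rng.normal(size=100)
X = np.column_stack([x1, x2, x3])
R = predictor_correlations(X)   # R[0, 1] is close to 1
V = vif(X)                      # large for x1 and x2, near 1 for x3
```

The VIFs equal the diagonal of the inverse of the correlation matrix, so the two diagnostics carry related information; the VIF additionally captures a predictor that is well explained by a combination of several others.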

Diagnostics: Multicollinearity
• Example 19.2: Predicting house price (Xm19-02)
  – A real estate agent believes that a house’s selling price can be predicted using the house size, number of bedrooms, and lot size.
  – A random sample of 100 houses was drawn and the data recorded.
  – Analyze the relationship among the four variables.

Diagnostics: Multicollinearity
• The proposed model is $\text{PRICE} = b_0 + b_1\,\text{BEDROOMS} + b_2\,\text{H-SIZE} + b_3\,\text{LOTSIZE} + e$
• The model is valid (the overall F test is significant), but no individual variable is significantly related to the selling price?!

Diagnostics: Multicollinearity
• Multicollinearity is found to be a problem.
• Multicollinearity causes two kinds of difficulties:
  – The t statistics appear to be too small.
  – The b coefficients cannot be interpreted as …

Remedying Violations of the Required Conditions
• Nonnormality or heteroscedasticity can be remedied using transformations of the y variable.
• The transformations can also improve the linear relationship between the dependent variable and the independent variables.
• Many statistical software packages make these transformations easy.

Transformations, Example: Reducing Nonnormality by Transformations
• A brief list of transformations:
  » $y' = \log y$ (for $y > 0$)
    • Use when $s_e$ increases with $y$, or
    • Use when the error distribution is positively skewed.
  » $y' = y^2$
    • Use when $s_e^2$ is proportional to $E(y)$, or
    • Use when the error distribution is negatively skewed.
  » $y' = y^{1/2}$ (for $y > 0$)
    • Use when $s_e^2$ is proportional to $E(y)$.
  » $y' = 1/y$
    • Use when $s_e^2$ increases significantly when $y$ increases beyond some critical value.
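A sketch of the four transformations, assuming NumPy and natural logarithms (the slide does not fix the base; changing the base only rescales $y'$ by a constant, and with it the fitted coefficients). The `TRANSFORMS` table and the `skewness` helper are hypothetical names, and the lognormal data are simulated to show the log transformation removing positive skew.

```python
import numpy as np

# y' for each transformation on the slide, with the domain check it requires.
TRANSFORMS = {
    "log": (np.log, lambda y: np.all(y > 0)),                  # y' = log y, y > 0
    "square": (np.square, lambda y: True),                     # y' = y^2
    "sqrt": (np.sqrt, lambda y: np.all(y > 0)),                # y' = y^(1/2), y > 0
    "reciprocal": (np.reciprocal, lambda y: np.all(y != 0)),   # y' = 1/y, y != 0
}


def transform_response(y, kind):
    """Apply one of the transformations above after checking its domain."""
    func, valid = TRANSFORMS[kind]
    y = np.asarray(y, dtype=float)
    if not valid(y):
        raise ValueError(f"{kind} transformation is not defined for these y values")
    return func(y)


def skewness(v):
    """Sample skewness: mean cubed deviation over the cubed standard deviation."""
    d = np.asarray(v, dtype=float) - np.mean(v)
    return np.mean(d**3) / np.mean(d**2) ** 1.5


rng = np.random.default_rng(3)
y = np.exp(rng.normal(0.0, 1.0, 2000))   # positively skewed response (simulated)
y_log = transform_response(y, "log")     # approximately symmetric after the transform
```

In practice one refits the regression with $y'$ in place of $y$ and re-examines the residual plots; predictions on the original scale then require back-transforming (for example, exponentiating a prediction of $\log y$).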

Durbin-Watson Test: Are the Errors Autocorrelated?
• This test detects first-order autocorrelation between consecutive residuals in a time series.
• If autocorrelation exists, the error variables are not independent.

Positive First-Order Autocorrelation
[Figure: residuals plotted against time]
Positive first-order autocorrelation occurs when consecutive residuals tend to be similar. Then the value of d is small (less than 2).

Negative First-Order Autocorrelation
[Figure: residuals plotted against time]
Negative first-order autocorrelation occurs when consecutive residuals tend to differ markedly. Then the value of d is large (greater than 2).

Durbin-Watson Test in JMP
• $H_0$: No first-order autocorrelation. $H_1$: First-order autocorrelation.
• After fitting the model, use Row Diagnostics, Durbin-Watson Test in JMP.
• The reported autocorrelation is an estimate of the correlation between consecutive errors.
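For reference, the Durbin-Watson statistic is $d = \sum_{t=2}^{n}(e_t - e_{t-1})^2 / \sum_{t=1}^{n} e_t^2$, computed from residuals in time order. It lies between 0 and 4 and is approximately $2(1 - r)$, where r is the lag-1 autocorrelation of the residuals, which is why similar consecutive residuals give d below 2 and alternating ones give d above 2. A minimal sketch; the residual sequences are made up, and judging significance still requires the Durbin-Watson tables or JMP's p-value.

```python
import numpy as np


def durbin_watson(e):
    """Durbin-Watson statistic for residuals e listed in time order."""
    e = np.asarray(e, dtype=float)
    return np.sum(np.diff(e) ** 2) / np.sum(e**2)


similar = [1, 1, 1, 1, -1, -1, -1, -1]             # runs of same-sign residuals
alternating = [1, -1, 1, -1, 1, -1, 1, -1, 1, -1]  # sign flips every period
d_pos = durbin_watson(similar)        # 0.5 -> suggests positive autocorrelation
d_neg = durbin_watson(alternating)    # 3.6 -> suggests negative autocorrelation
```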

Testing the Existence of Autocorrelation, Example
• Example 19.3 (Xm19-03)
  – How does the weather affect the sales of lift tickets at a ski resort?
  – Data on lift-ticket sales for the past 20 years, along with the total snowfall and the average temperature during Christmas week in each year, were collected.
  – The model hypothesized was $\text{TICKETS} = b_0 + b_1\,\text{SNOWFALL} + b_2\,\text{TEMPERATURE} + e$
  – Regression analysis yielded the following results:

20.1 Introduction
• Regression analysis is one of the most commonly used techniques in statistics.
• It is considered powerful for several reasons:
  – It can cover a variety of mathematical models:
    • linear relationships
    • nonlinear relationships
    • nominal independent variables
  – It provides efficient methods for model building.

Curvature: Midterm Problem 10

Remedy I: Transformations • Use Tukey’s Bulging Rule to choose a transformation.

Remedy II: Polynomial Models
$y = b_0 + b_1 x_1 + b_2 x_2 + \dots + b_p x_p + e$
Setting $x_1 = x, x_2 = x^2, \dots, x_p = x^p$ gives the polynomial model
$y = b_0 + b_1 x + b_2 x^2 + \dots + b_p x^p + e$
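Because a polynomial model is a multiple regression whose predictors are powers of one variable, it can be fitted by ordinary least squares on the columns $1, x, x^2, \dots, x^p$. A minimal sketch; `fit_polynomial` and the noise-free data are hypothetical.

```python
import numpy as np


def fit_polynomial(x, y, p):
    """Least-squares b_0..b_p for y = b_0 + b_1 x + ... + b_p x^p + e."""
    x = np.asarray(x, dtype=float)
    Z = np.vander(x, p + 1, increasing=True)  # columns 1, x, x^2, ..., x^p
    coef, *_ = np.linalg.lstsq(Z, np.asarray(y, dtype=float), rcond=None)
    return coef


x = np.linspace(-2, 2, 9)
y = 1 - 3 * x + 0.5 * x**2          # exact quadratic, no noise (illustration only)
b = fit_polynomial(x, y, 2)          # approximately [1, -3, 0.5]
```

The sign of $b_2$ sets the direction of curvature (a downward-opening curve when $b_2 < 0$, upward when $b_2 > 0$). Powers of x are often strongly correlated with one another, so centring x before forming the powers is a common way to reduce that multicollinearity; this is general practice rather than something the lecture prescribes.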

Quadratic Regression

Polynomial Models with One Predictor Variable
• First-order model ($p = 1$): $y = b_0 + b_1 x + e$
• Second-order model ($p = 2$): $y = b_0 + b_1 x + b_2 x^2 + e$
[Figure: second-order curves for $b_2 < 0$ and $b_2 > 0$]

Polynomial Models with One Predictor Variable
• Third-order model ($p = 3$): $y = b_0 + b_1 x + b_2 x^2 + b_3 x^3 + e$
[Figure: third-order curves for $b_3 < 0$ and $b_3 > 0$]

Interaction
• Two independent variables $x_1$ and $x_2$ interact if the effect of $x_1$ on $y$ depends on the value of $x_2$.
• Interaction can be brought into the multiple linear regression model by including the product $x_1 x_2$ as an independent variable.
• Example:

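Interaction Cont.
• Model with interaction: $y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_1 x_2 + e$
• “Slope” for $x_1$ $= E(y \mid x_1 + 1, x_2) - E(y \mid x_1, x_2) = b_1 + b_3 x_2$
• Is the expected income increase from an extra year of education higher for people with IQ 100 or with IQ 130 (or is it the same)?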
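The slope formula can be checked numerically. The sketch assumes, for illustration only, that $x_1$ is years of education and $x_2$ is IQ, and generates noise-free incomes from made-up coefficients $b_0 = 10$, $b_1 = 2$, $b_2 = 0.1$, $b_3 = 0.05$; the helper names are hypothetical.

```python
import numpy as np


def fit_interaction(x1, x2, y):
    """OLS coefficients (b0, b1, b2, b3) for y = b0 + b1 x1 + b2 x2 + b3 x1 x2 + e."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    Z = np.column_stack([np.ones_like(x1), x1, x2, x1 * x2])
    coef, *_ = np.linalg.lstsq(Z, np.asarray(y, dtype=float), rcond=None)
    return coef


def slope_x1(coef, x2):
    """Expected change in y for a one-unit increase in x1 at fixed x2: b1 + b3 * x2."""
    return coef[1] + coef[3] * x2


rng = np.random.default_rng(4)
educ = rng.integers(8, 21, 40).astype(float)   # hypothetical years of education
iq = rng.uniform(80, 140, 40)                  # hypothetical IQ scores
income = 10 + 2 * educ + 0.1 * iq + 0.05 * educ * iq
b = fit_interaction(educ, iq, income)
# slope_x1(b, 100) is about 7.0 and slope_x1(b, 130) about 8.5
```

Under this made-up model the increase is larger at IQ 130; with real data the answer depends on the sign of the estimated $b_3$, and $b_3 = 0$ means the increase is the same at every IQ.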

Polynomial Models with Two Predictor Variables
• First-order model: $y = b_0 + b_1 x_1 + b_2 x_2 + e$
  The effect of one predictor variable on y is independent of the effect of the other predictor variable on y: for every fixed $x_2$ the line in $x_1$ has the same slope $b_1$, so the lines are parallel.
  $x_2 = 1$: $y = [b_0 + b_2(1)] + b_1 x_1$
  $x_2 = 2$: $y = [b_0 + b_2(2)] + b_1 x_1$
  $x_2 = 3$: $y = [b_0 + b_2(3)] + b_1 x_1$
• First-order model, two predictors, and interaction: $y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_1 x_2 + e$
  The two variables interact to affect the value of y: both the intercept and the slope of the line in $x_1$ depend on $x_2$.
  $x_2 = 1$: $y = [b_0 + b_2(1)] + [b_1 + b_3(1)]\,x_1$
  $x_2 = 2$: $y = [b_0 + b_2(2)] + [b_1 + b_3(2)]\,x_1$
  $x_2 = 3$: $y = [b_0 + b_2(3)] + [b_1 + b_3(3)]\,x_1$

Polynomial Models with Two Predictor Variables
• Second-order model: $y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_1^2 + b_4 x_2^2 + e$
  $x_2 = 1$: $y = [b_0 + b_2(1) + b_4(1^2)] + b_1 x_1 + b_3 x_1^2 + e$
  $x_2 = 2$: $y = [b_0 + b_2(2) + b_4(2^2)] + b_1 x_1 + b_3 x_1^2 + e$
  $x_2 = 3$: $y = [b_0 + b_2(3) + b_4(3^2)] + b_1 x_1 + b_3 x_1^2 + e$
• Second-order model with interaction: $y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_1^2 + b_4 x_2^2 + b_5 x_1 x_2 + e$
  [Figure: curves in $x_1$ for $x_2 = 1, 2, 3$]
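Fixing $x_2$ in the second-order model turns it into a quadratic in $x_1$, which is how the curves for $x_2 = 1, 2, 3$ arise. A minimal sketch that builds the design matrix in the slide's coefficient order ($b_0, \dots, b_5$) and returns the coefficients of that quadratic for a given $x_2$; the helper names and the true coefficients used to generate noise-free data are hypothetical.

```python
import numpy as np


def second_order_design(x1, x2, interaction=True):
    """Columns 1, x1, x2, x1^2, x2^2 [, x1*x2] matching b0, b1, b2, b3, b4 [, b5]."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    cols = [np.ones_like(x1), x1, x2, x1**2, x2**2]
    if interaction:
        cols.append(x1 * x2)
    return np.column_stack(cols)


def curve_in_x1(coef, x2):
    """(intercept, linear, quadratic) coefficients in x1 when x2 is held fixed."""
    intercept = coef[0] + coef[2] * x2 + coef[4] * x2**2
    linear = coef[1] + (coef[5] * x2 if len(coef) > 5 else 0.0)
    return intercept, linear, coef[3]


rng = np.random.default_rng(5)
x1 = rng.uniform(0, 5, 30)
x2 = rng.uniform(1, 3, 30)
true_b = np.array([1.0, 2.0, -1.0, 0.5, 0.3, -0.2])   # hypothetical b0..b5
y = second_order_design(x1, x2) @ true_b               # noise-free responses
b, *_ = np.linalg.lstsq(second_order_design(x1, x2), y, rcond=None)
```

Without the interaction term every fixed-$x_2$ curve has the same shape and is only shifted up or down; with $b_5 \ne 0$ the linear coefficient $b_1 + b_5 x_2$ changes with $x_2$, so the curves are no longer vertical shifts of one another.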
