Unit I, Lecture 1-2.ppt

- Number of slides: 75

Lecture 1 ASSESSMENT LITERACY

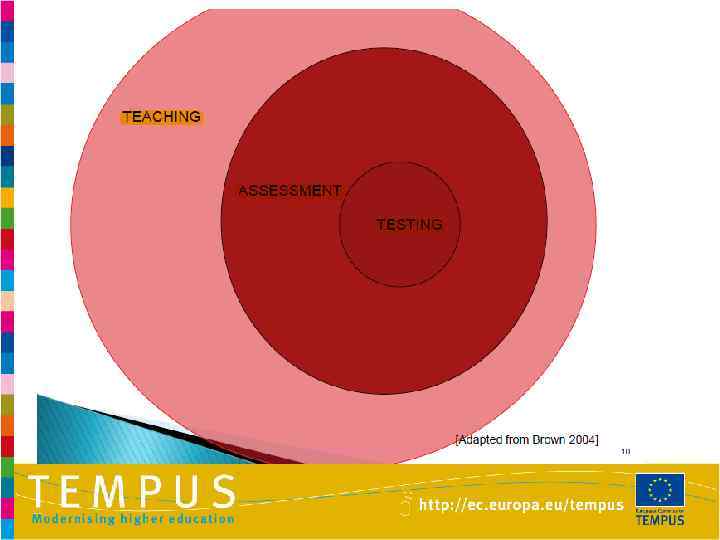

Presentation goals By the end of this unit, you should be able to: • define the fundamental terms and concepts in second language assessment, • explain the relationship between teaching, learning, assessment and testing, • identify and explain the various purposes and types of assessment in relation to second language teaching/learning models and goals, • relate assessment to communicative language teaching, and • classify the types of assessment you use.

Introduction to Assessment Literacy

Definitions of ‘literacy’? • What do we mean/understand by the word ‘literacy’? lit·er·a·cy n. 1. The condition or quality of being literate, especially the ability to read and write. 2. The condition or quality of being knowledgeable in a particular subject or field: cultural literacy; academic literacy

Definitions of ‘academic literacy’? • the ability to read and write effectively within the college context in order to proceed from one level to another • the ability to read and write within the academic context with independence, understanding and a level of engagement with learning • familiarity with a variety of discourses, each with their own conventions • familiarity with the methods of inquiry of specific disciplines

An age of literacy (or literacies)? • ‘academic literacy/ies’ • ‘computer literacy’

‘Assessment literacy’ … • ‘a basic grasp of numbers and measurement’ • ‘interpretation skills to make sensible life decisions’ But why is it necessary or important? And who needs it?

Stakeholders • Growing numbers of people involved in language testing and assessment – test takers – teachers and teacher trainers – university staff, e.g. tutors & admissions officers – government agencies and bureaucrats – policymakers – general public (especially parents)

‘Language testing has become big business…’ (Spolsky 2008, p 297)

But… … how ‘assessment literate’ are all those people nowadays involved in language testing and assessment across the many different contexts identified?

Assessment literacy involves… • an understanding of the principles of sound assessment • the know-how required to assess learners effectively and maximise learning • the ability to identify and evaluate appropriate assessments for specific purposes • the ability to analyse empirical data to improve one’s own instructional and assessment practices • the knowledge and understanding to interpret and apply assessment results in appropriate ways • the wisdom to be able to integrate assessment and its outcomes into the overall pedagogic/decision-making process

What makes assessment necessary? • Teachers want to know what parts of the course cause difficulties and require special attention, and what progress students have made • Students want to know their strengths and weaknesses • Parents want to know how their children are doing PROSET - TEMPUS 12

What makes assessment necessary? • Universities want to choose the best applicants for enrollment • Teachers want to know whether the students are coping with the programme • Employers want to choose the best applicant for the job

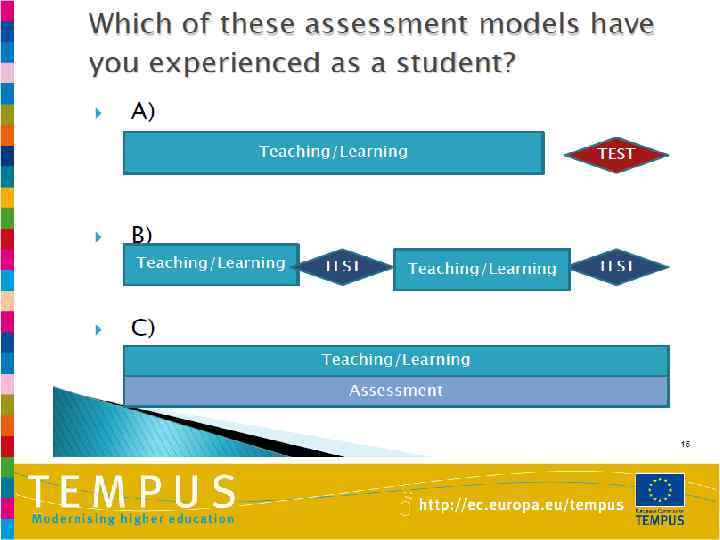

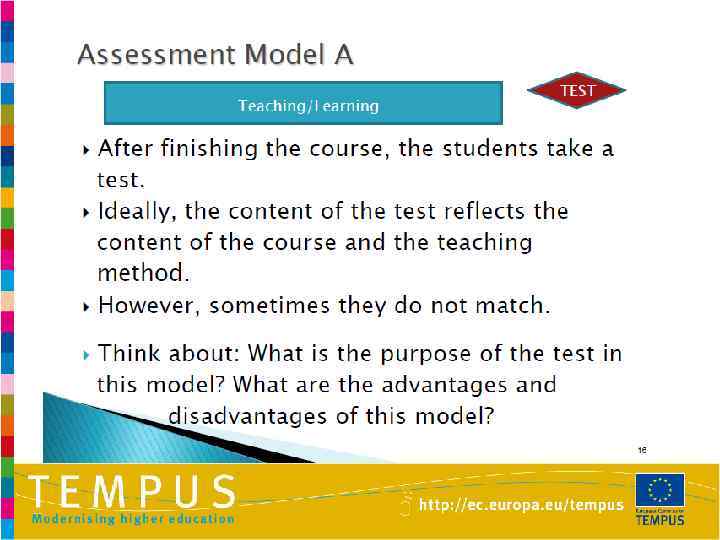

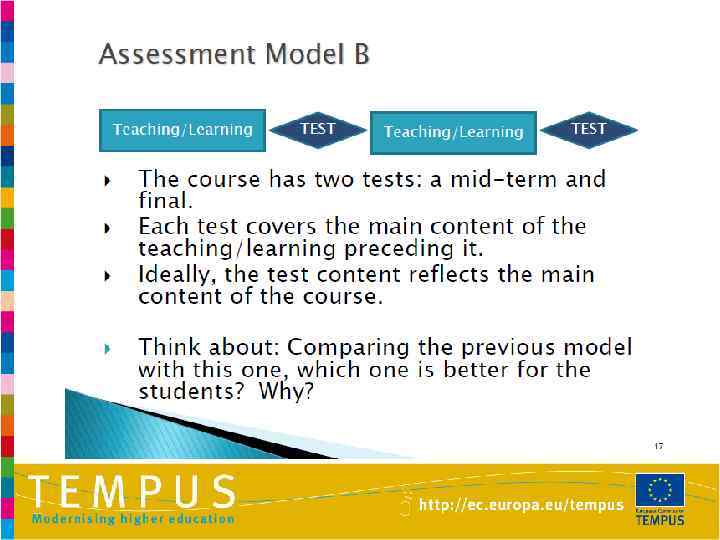

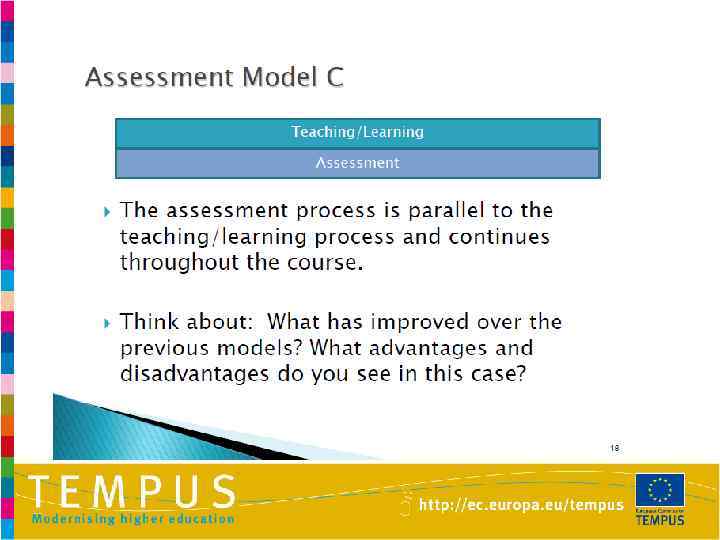

Testing & assessment EVALUATION TESTING

What forms of assessment to use? • Test • Portfolio • Project work • Observation • Case study • Interview, oral questions • Experimental work, etc.

What is a test? Any procedure for measuring ability, knowledge, or performance. (Longman Dictionary of Language Teaching and Applied Linguistics)

What is a test? A test is a measurement instrument designed to elicit a specific sample of an individual’s behaviour … a test necessarily quantifies characteristics of individuals according to explicit procedures. (L. F. Bachman, Fundamental Considerations in Language Testing, 1990)

Test as an instrument of assessment (measurement) WHY WHAT HOW

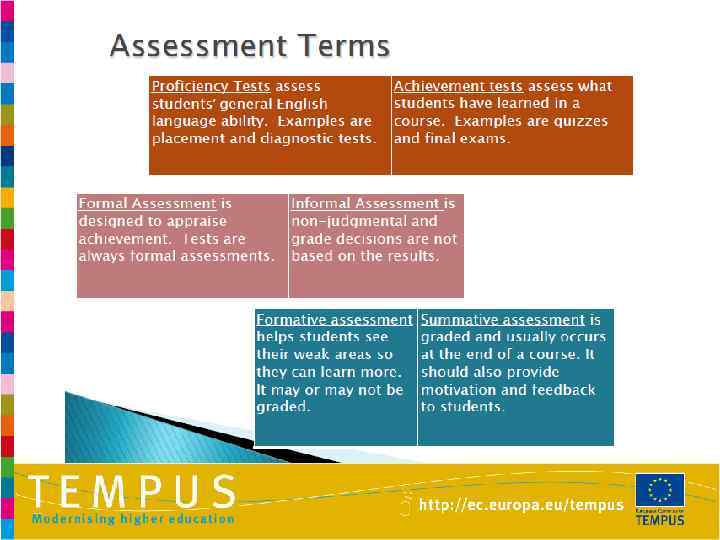

What types of tests do we use? Test Purposes • Proficiency tests • Achievement tests • Placement tests • Diagnostic tests

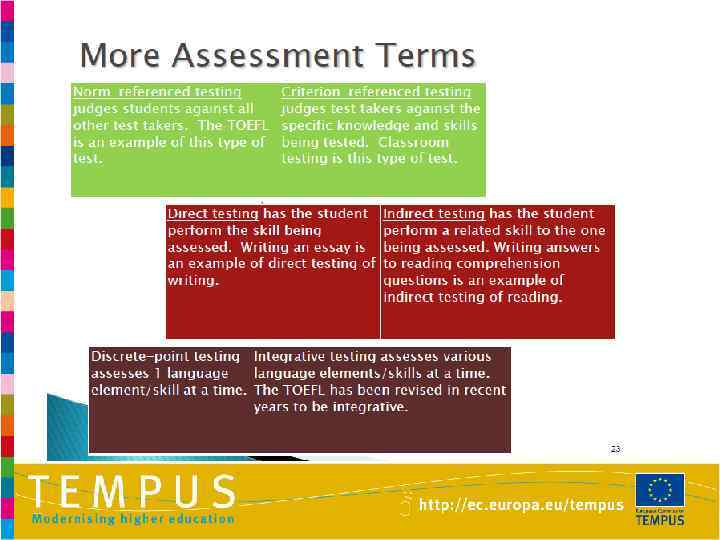

What types of tests do we use? • Direct vs Indirect • Discrete point vs Integrated • Objective vs Subjective • Low stakes vs High stakes • Norm-referenced vs Criterion-referenced

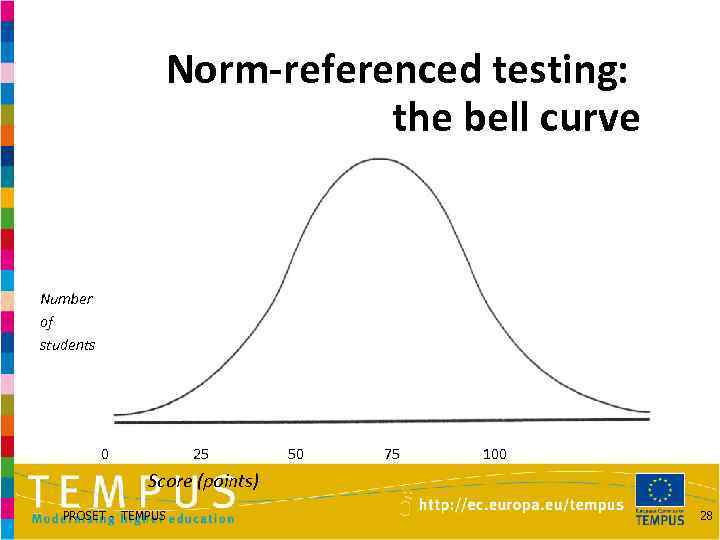

Norm-referenced testing: the bell curve [Figure: number of students plotted against score (points) on a 0-100 scale]
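
As a rough illustration of the norm-referenced idea behind the bell curve, the sketch below reads the same raw score in two ways: relative to the group (z-score and percentile rank) and against a fixed cut score, which is how a criterion-referenced test would interpret it. The class scores, the cut score of 60 and all function names are hypothetical and are not taken from the lecture.

```python
from statistics import mean, pstdev


def z_score(score, group):
    """Norm-referenced: distance from the group mean in standard deviations."""
    return (score - mean(group)) / pstdev(group)


def percentile_rank(score, group):
    """Norm-referenced: percentage of the group scoring strictly below `score`."""
    below = sum(1 for s in group if s < score)
    return 100 * below / len(group)


def meets_criterion(score, cut_score):
    """Criterion-referenced: compare with a fixed standard, not with peers."""
    return score >= cut_score


# Hypothetical class results on a 100-point test (assumed data)
scores = [30, 45, 45, 45, 45, 55, 55, 55, 55, 70]
CUT_SCORE = 60  # hypothetical pass mark

for student in (55, 70):
    print(
        student,
        round(z_score(student, scores), 2),
        percentile_rank(student, scores),
        meets_criterion(student, CUT_SCORE),
    )
```

In this made-up class, a score of 55 is above the group mean yet below the cut score, which shows how the two interpretations of one score can differ.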

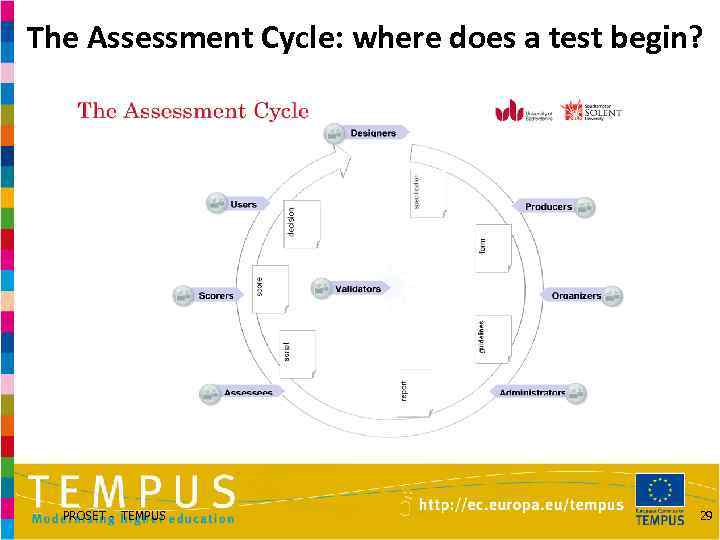

The Assessment Cycle: where does a test begin?

Attitudes to tests, test scores and the experience of assessment? • Students • Language teachers • Educational boards • Politicians and policy-makers

The over-arching principle of test ‘utility’/‘usefulness’ • Test purpose must be clearly defined and understood • Testing approach must be ‘fit for purpose’ • There must be an appropriate balance of ‘essential test qualities’ (such as validity and reliability)

The 5 Principles of Assessment There are five principles that we need to consider when creating assessments. They are: • practicality • reliability • validity • authenticity • washback

Practicality For classroom teachers, this is often a very important principle to consider. Is the assessment (test)… • too expensive to implement? • too time-consuming to design? • too time-consuming to implement? • too time-consuming to score? • dependent on too many people to implement? If the assessment is not practical for your teaching context, it will most likely need to be revised.

Reliability An assessment that is reliable will give the same score to the same type of student regardless of when the assessment is given or who scores it. For example, if Teacher A gives a test to Johnny on Monday morning and scores him 90/100, a reliable test will give Johnny the same result if he takes it on Monday afternoon with Teacher B, and will give the same result to a student similar to Johnny who takes it on Tuesday with Teacher C.
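
One simple way to look at the "who scores it" side of reliability is to have two teachers mark the same set of scripts and compare their marks. The sketch below is only an illustration under assumed data: the marks for Teacher A and Teacher B, the 2-point tolerance and the function names are all hypothetical. It reports the share of scripts on which the two markers agree and the Pearson correlation between their marks.

```python
from math import sqrt


def agreement_rate(marks_a, marks_b, tolerance=0):
    """Share of scripts on which two markers differ by at most `tolerance` points."""
    pairs = list(zip(marks_a, marks_b))
    close = sum(1 for a, b in pairs if abs(a - b) <= tolerance)
    return close / len(pairs)


def pearson_r(xs, ys):
    """Pearson correlation between two equally long lists of marks."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical marks (out of 100) given by two teachers to the same five scripts
teacher_a = [90, 75, 60, 85, 70]
teacher_b = [88, 75, 65, 85, 72]

print("exact agreement:", agreement_rate(teacher_a, teacher_b))
print("agreement within 2 points:", agreement_rate(teacher_a, teacher_b, tolerance=2))
print("correlation:", round(pearson_r(teacher_a, teacher_b), 3))
```

A high correlation with low exact agreement would suggest the two markers rank students similarly but apply the scale differently, which points to the need for clearer scoring instructions.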

Reliability Problems An assessment can have problems with reliability if: • it doesn’t have clear administration instructions. • it doesn’t have clear scoring instructions. • the test items are written in such a way that they are confusing or several answers are possible. • the testing conditions in the classroom give a disadvantage to students (e.g., too much outside noise). • students can do well even without knowing the information being assessed. Can you explain why? What can be the reliability solutions?

Validity is concerned with whether the assessment measures what it is intended to measure. For example, a valid assessment that is supposed to measure how well a student speaks English will actually indicate the student’s skill in speaking. Is a speaking test that asks students to read a passage out loud valid? - Not very, because it assesses students’ ability to read and only minimally assesses speaking (pronunciation).

Validity - Direct testing is more valid than indirect testing. - Valid classroom assessments measure only what has been taught in class. - Valid classroom assessments utilize items or activities that are similar to what students have already practiced in class. - Trick questions and tricky assessments are not valid. Can you explain why?

Authenticity Authentic assessments reflect natural uses of language. Test items are contextualized in authentic assessments (perhaps all relating to one picture or telling a story). There is a direct relationship between the students’ performance on the test/assessment and the ability to complete real-world tasks.

Washback refers to the outcomes of the assessment for the learner, the teacher, and the teaching context. Positive washback from an assessment can motivate the student to learn more, positively influence the teacher in what and how to teach, and improve the classroom environment for more learning. Feedback after assessments improves washback. Tests or assessments that are designed to punish or fail many students do not result in positive washback.

Socio-Cognitive Approaches to Testing and Assessment

How do examination boards operationalise criterial distinctions between the tests they offer at different levels on the proficiency continuum? (Prof. Cyril Weir)

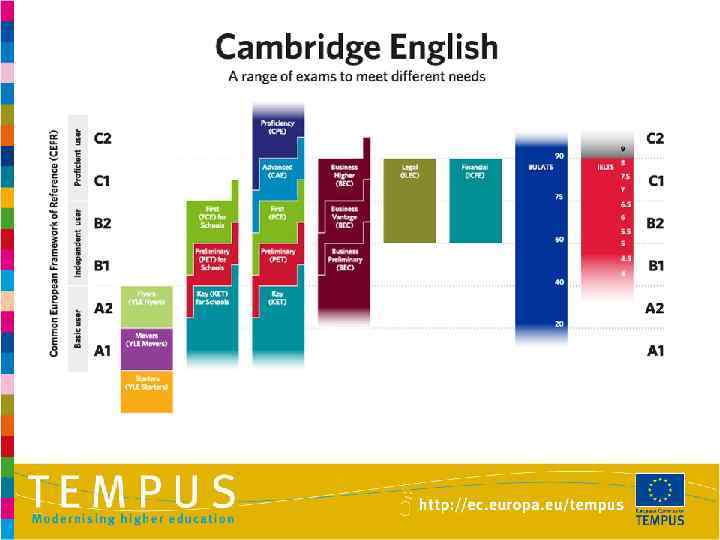

Common European Framework of Reference (CEFR) The CEFR describes language ability on a scale of levels from A1 for beginners up to C2 for those who have mastered a language.

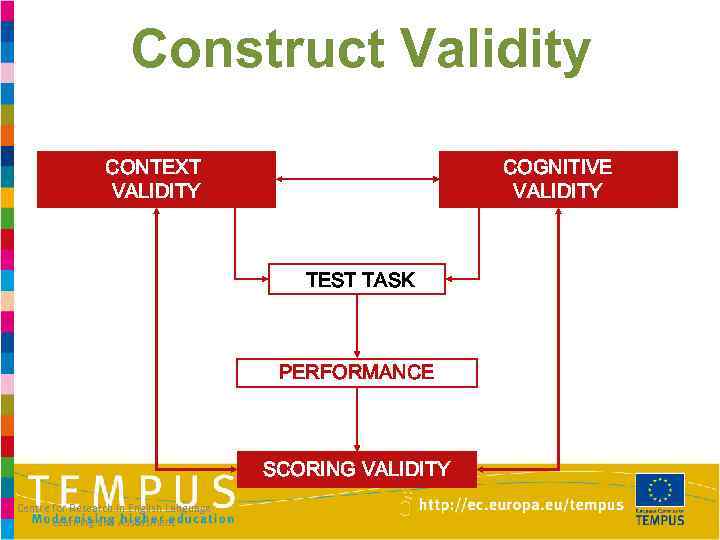

Construct Validity CONTEXT VALIDITY COGNITIVE VALIDITY TEST TASK PERFORMANCE SCORING VALIDITY Centre for Research in English Language Learning and Assessment

Cognitive Validity: the extent to which the tasks we employ elicit the cognitive processing involved in task solving.

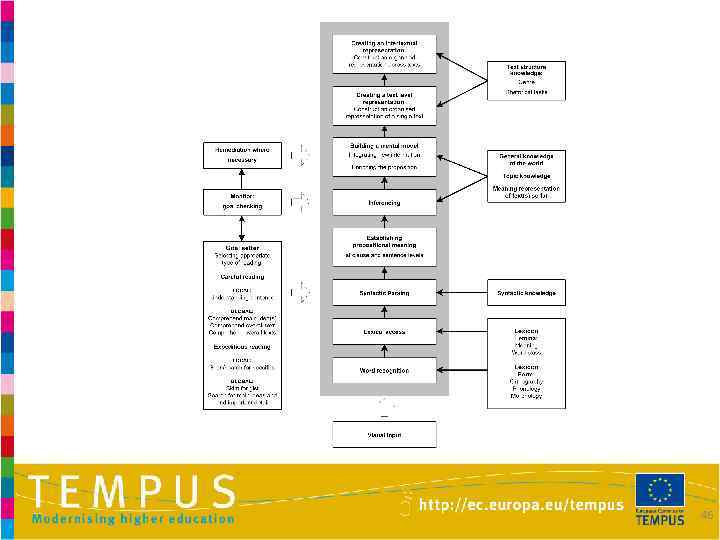

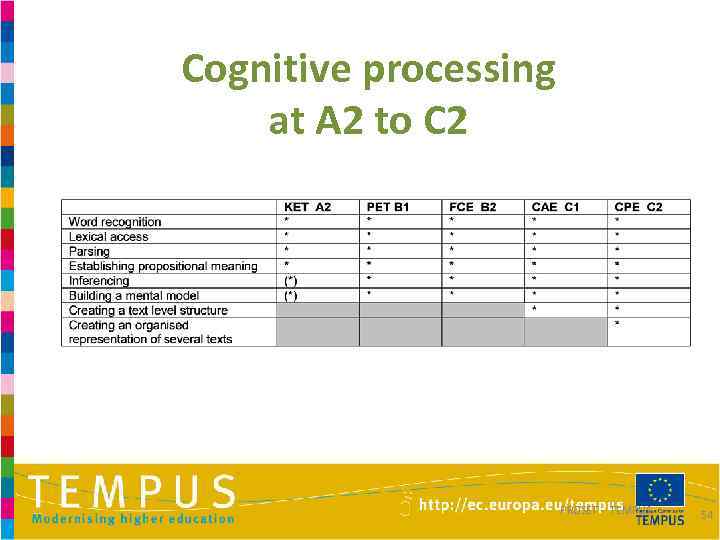

Cognitive demand at different levels In many ways the CEFR specifications are extremely limited in their characterisation of task solving ability at the different levels and we need to be more explicit for testing purposes about: • The types of tasks demanded at each of the stages. • How well calibrated the cognitive processing demands made upon candidates are in the design of the tasks. • The cognitive load imposed by relative task complexity at each stage.

Lexical access Accessing the lexical entry containing stored information about a word’s form and its meaning from the lexicon. The form includes orthographic and phonological mental representations of an item and possibly information on its morphology. The lemma includes information on word class and the syntactic structures in which the item can appear and on the range of possible senses for the word.

Word Recognition Word recognition is concerned with matching the form of a word in a written text with a mental representation of the orthographic forms of the language.

Syntactic parsing Once the meaning of words is accessed, the reader has to group words into phrases, and into larger units at the clause and sentence level to understand the text message.

Establishing propositional meaning at the clause or sentence level An abstract representation of a single unit of meaning: a mental record of the core meaning of the sentence without any of the interpretative and associative factors which the reader might bring to bear upon it.

Inferencing is necessary so the reader can go beyond explicitly stated ideas, as the links between ideas in a passage are often left implicit. Inferencing in this sense is a creative process whereby the brain adds information which is not stated in a text in order to impose coherence. Without inferencing, a writer would have to state every piece of information explicitly, which would make writing a text extremely cumbersome and time-consuming.

Establishing a mental representation across texts In the real world, the reader sometimes has to combine and collate macro-propositional information from more than one text. The need to combine rhetorical and contextual information across texts would seem to place the greatest demands on processing.

Cognitive processing at A2 to C2

Context Validity Context validity relates to the appropriateness of both the linguistic and content demands of the text to be processed, and the features of the task setting that impact on task completion.

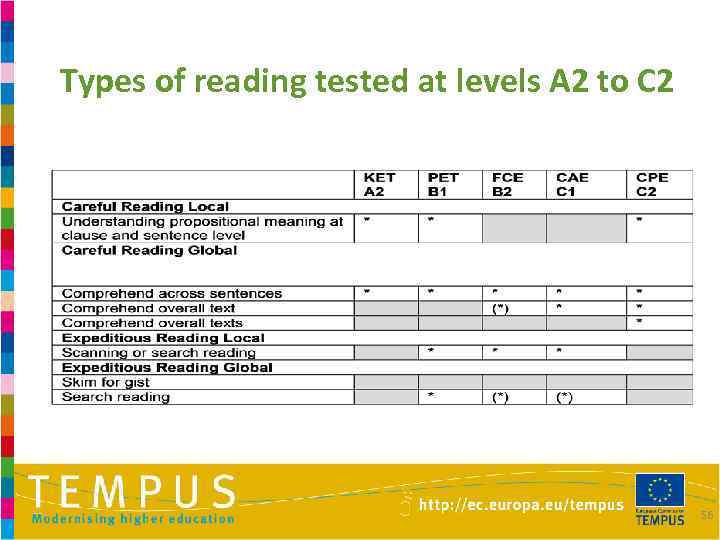

Types of reading tested at levels A2 to C2

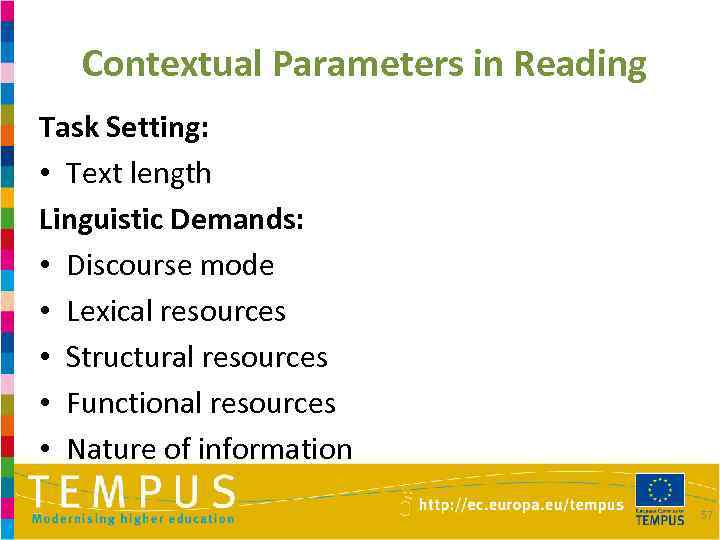

Contextual Parameters in Reading Task Setting: • Text length Linguistic Demands: • Discourse mode • Lexical resources • Structural resources • Functional resources • Nature of information

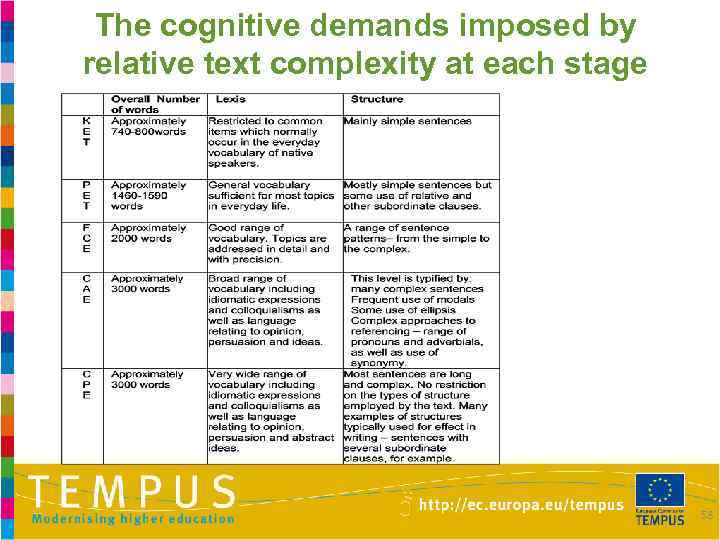

The cognitive demands imposed by relative text complexity at each stage

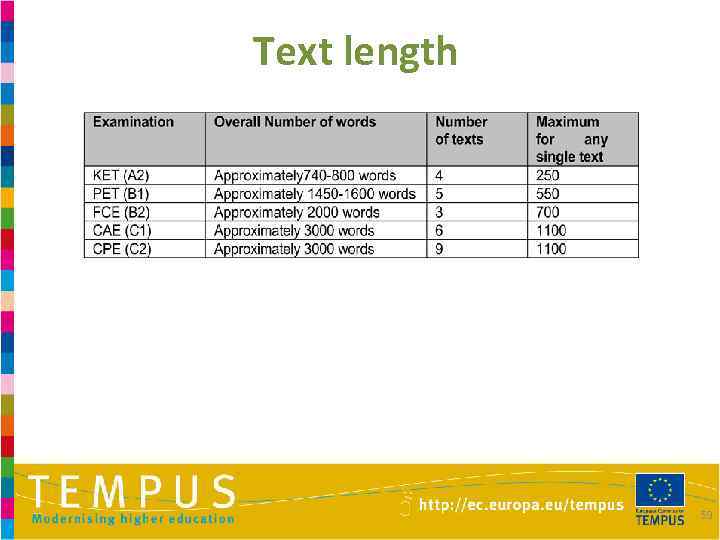

Text length

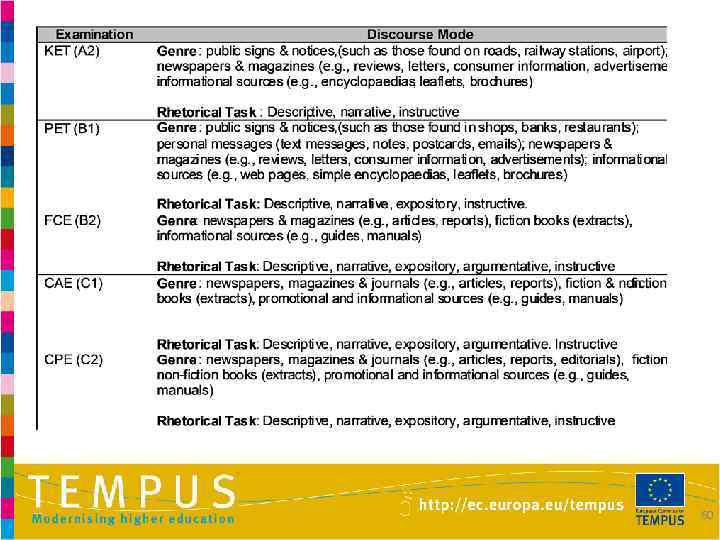

What kinds of information should be available about a test? WHO WHAT HOW

Test specification – the official statement about what the test tests and how it tests it … to be followed by test and item writers (Multilingual Glossary of Language Testing Terms. CUP, 1998) • Test design statement • Blueprint • Task and item specifications

Test design statement • the purpose, the knowledge, skills or abilities it is intended to assess • the resources available • the uses of the results, the intended impact of its use

Blueprint • content • methods • scoring

Test tasks • Prompt (a reading/listening text, an essay question, a picture to describe) • Response (ticking a box, giving a short answer, describing a picture, etc.)

The building blocks of a test • Instructions • Item • Stem • Options • Distractors • Key
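
To make the relationship between these building blocks concrete, the sketch below models a task as instructions plus a list of items, where each item has a stem, a set of options and a key, and the distractors are simply the options that are not the key. This is a hypothetical illustration: the class names, the sample item and the one-point-per-item scoring rule are assumptions, not part of any exam specification.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MultipleChoiceItem:
    stem: str            # the question or incomplete sentence
    options: List[str]   # all answer choices shown to the test taker
    key: str             # the correct option

    @property
    def distractors(self) -> List[str]:
        """Options that are not the key."""
        return [option for option in self.options if option != self.key]

    def score(self, response: str) -> int:
        """Assumed scoring rule: 1 point for the key, 0 otherwise."""
        return 1 if response == self.key else 0


@dataclass
class Task:
    instructions: str
    items: List[MultipleChoiceItem]

    def total(self, responses: List[str]) -> int:
        """Sum of item scores for one test taker's responses."""
        return sum(item.score(r) for item, r in zip(self.items, responses))


# Hypothetical one-item grammar task
item = MultipleChoiceItem(
    stem="She ___ to school every day.",
    options=["go", "goes", "going", "gone"],
    key="goes",
)
task = Task(instructions="Choose the correct option.", items=[item])

print(item.distractors)
print(task.total(["goes"]))
```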

Responses • Selected response • Constructed response • Personal response (Brown & Hudson, 1998)

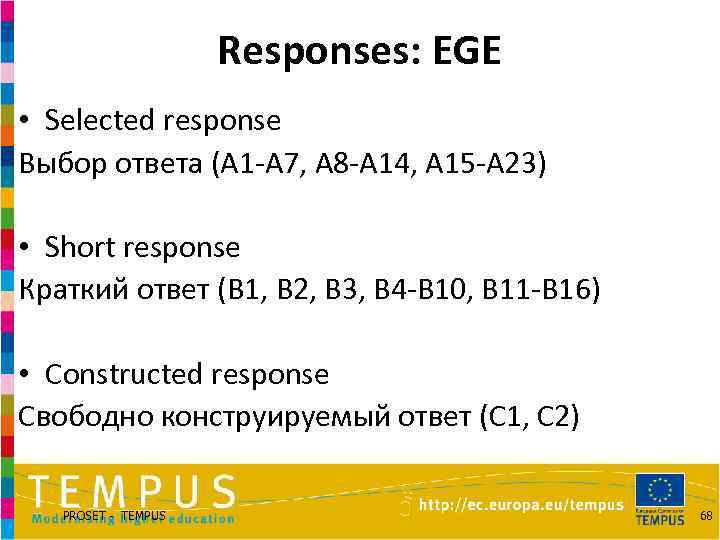

Responses: EGE • Selected response, EGE ‘choice of answer’ (A1-A7, A8-A14, A15-A23) • Short response, EGE ‘short answer’ (B1, B2, B3, B4-B10, B11-B16) • Constructed response, EGE ‘freely constructed answer’ (C1, C2)

• What do you think is the relationship between assessment, testing, and teaching? • Distinguish ‘test’ and ‘assessment’ • Identify different types of assessment • Identify different types of tests in terms of their purpose and format • Analyze the assessment cycle in relation to different types of assessment • Identify the structure and components of a test specification (design statement, blueprint, task and item specifications) • Differentiate between cognitive processing at the A2-C2 levels of the CEFR. • How can assessment motivate learners? How can it demotivate them? • Can the skill of reading be tested in a direct way? • Please define the 5 principles of assessment.

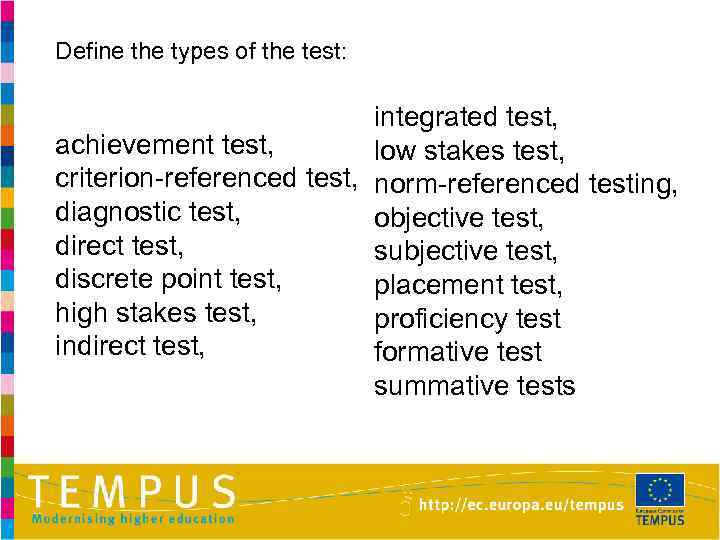

Define the following types of test: integrated test, achievement test, low stakes test, criterion-referenced test, norm-referenced test, diagnostic test, objective test, direct test, subjective test, discrete point test, placement test, high stakes test, proficiency test, indirect test, formative test, summative test

The building blocks of a test: define the terms: • Task • Instructions • Prompt • Response • Item • Stem • Options • Distractors • Key

Find these building blocks in the following EGE tasks:

Task & item specifications (seminar) • How many items are included in each task? • What area of knowledge, skill or ability is assessed in each task? • What should the test-taker do (instructions)? • How are scores on each item and task determined?

EGE specification: (seminar) • 11 pages • 12 parts (sections) • 4 tables • an appendix

Your classroom test specification/plan • purpose • number of tasks and items • what these items assess • how they are scored • time limit • ???
