- Number of slides: 41

Learning to Trade via Direct Reinforcement
John Moody and Matthew Saffell, IEEE Trans. Neural Networks 12(4), pp. 875-889, 2001
Summarized by Jangmin O, Bio. Intelligence Lab.

Author
- J. Moody
  - Director of the Computational Finance Program and Professor of CSEE at the Oregon Graduate Institute of Science and Technology
  - Founder & President of Nonlinear Prediction Systems
  - Program Co-Chair for Computational Finance 2000
  - Past General Chair and Program Chair of NIPS
  - Member of the editorial board of Quantitative Finance

I. Introduction

Optimizing Investment Performance
- Characteristic
  - Path-dependent
- Method: Direct Reinforcement learning (DR)
  - Recurrent Reinforcement Learning [1, 2]
  - No forecasting model is needed
  - Single security or asset allocation
- Recurrent Reinforcement Learning (RRL)
  - Adaptive policy search
  - Learns the investment strategy on-line
  - No need to learn a value function
  - Immediate rewards are available in financial markets

Difference between RRL & Q or TD
- Financial decision-making problems: well suited to RRL
  - Immediate feedback is available
- Performance criteria: risk-adjusted investment returns
  - Sharpe ratio
  - Downside risk minimization
- Differential form

Experimental Data
- U.S. Dollar/British Pound foreign exchange market
- S&P 500 stock index and Treasury Bills
- RRL vs. Q
  - Bellman's curse of dimensionality

II. Trading Systems and Performance Criteria

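Structure of Trading Systems (1)
- An agent: assumption
  - Trades a fixed position size in a single market
- Trader's position at time $t$: $F_t \in \{+1, 0, -1\}$
  - Long: buy; Neutral: stay out of the market; Short: sell short
- Profit $R_t$
  - Realized at the end of the interval $(t-1, t]$: the gain or loss from holding position $F_{t-1}$, plus the transaction cost of moving from $F_{t-1}$ to $F_t$
- A recurrent structure is required!
  - So that decisions can take transaction costs, market impact, taxes, and so on into account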

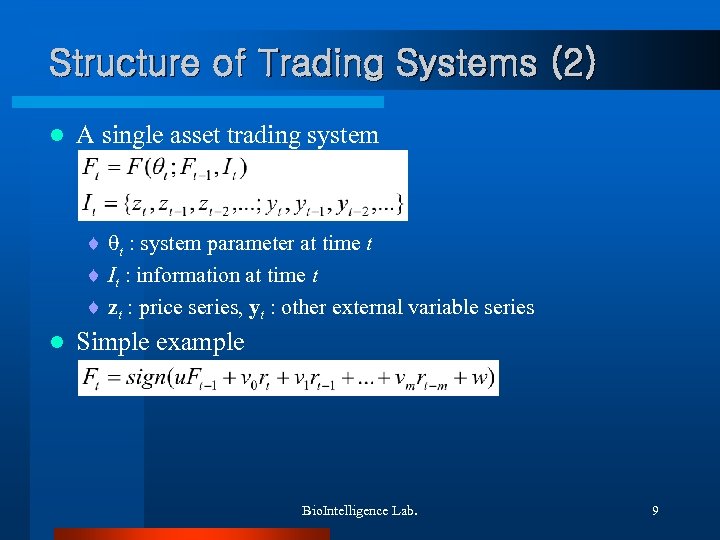

Structure of Trading Systems (2)
- A single-asset trading system
  - $\theta_t$: system parameters at time $t$
  - $I_t$: information available at time $t$
  - $z_t$: price series; $y_t$: series of other external variables
- Simple example
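
A minimal sketch of the "simple example", assuming it has the form used in the summarized paper: $F_t = \mathrm{sign}(u F_{t-1} + v_0 r_t + \dots + v_m r_{t-m} + w)$ with parameters $\theta = \{u, v_i, w\}$. The function name and the zero-padding of missing history are illustrative choices, not part of the slides.

```python
import numpy as np

def simple_trader_positions(returns, u, v, w, f0=0.0):
    """Positions F_t = sign(u*F_{t-1} + v_0*r_t + ... + v_m*r_{t-m} + w)."""
    returns = np.asarray(returns, dtype=float)
    v = np.asarray(v, dtype=float)
    m = len(v) - 1
    positions = np.zeros(len(returns))
    prev = f0
    for t in range(len(returns)):
        # Most recent m+1 returns, newest first; missing history is zero-padded.
        window = returns[max(0, t - m): t + 1][::-1]
        window = np.pad(window, (0, m + 1 - len(window)))
        prev = np.sign(u * prev + v @ window + w)
        positions[t] = prev
    return positions
```

With `u = 0` the trader simply follows the sign of the latest return; a large positive `u` makes the previous position dominate, which is how the recurrent input can suppress frequent position changes.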

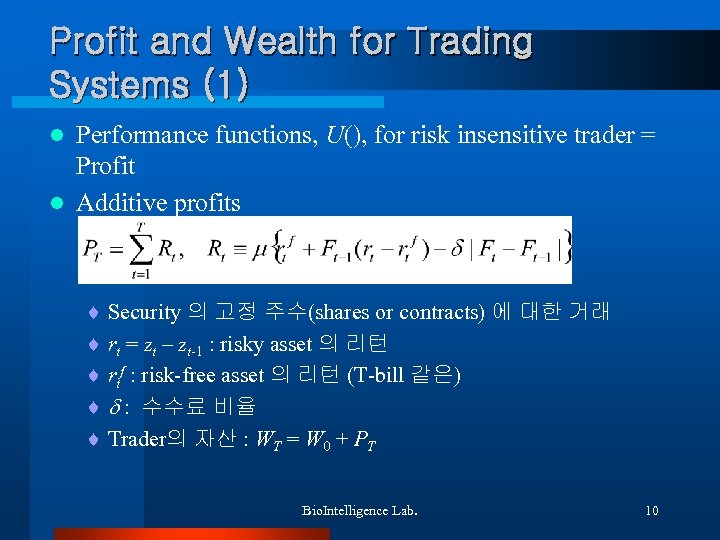

Profit and Wealth for Trading Systems (1)
- Performance function $U(\cdot)$ for a risk-insensitive trader = profit
- Additive profits
  - Trading a fixed number of shares or contracts of the security
  - $r_t = z_t - z_{t-1}$: return of the risky asset
  - $r_t^f$: return of the risk-free asset (such as T-bills)
  - $\delta$: transaction cost rate
  - Trader's wealth: $W_T = W_0 + P_T$
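
The additive case can be sketched in a few lines, assuming the per-period trading return of the summarized paper, $R_t = \mu\,\{r_t^f + F_{t-1}(r_t - r_t^f) - \delta\,|F_t - F_{t-1}|\}$ with $P_T = \sum_t R_t$. Here `mu` (the fixed number of shares) and the function name are illustrative, and the risk-free return is taken as a constant for simplicity.

```python
import numpy as np

def additive_returns(prices, positions, rf=0.0, delta=0.0, mu=1.0):
    """Per-period trading returns R_t for fixed-size (additive) trading.

    positions[t] is the position F_t chosen at time t; it must have the
    same length as prices.
    """
    z = np.asarray(prices, dtype=float)
    F = np.asarray(positions, dtype=float)
    r = np.diff(z)                      # r_t = z_t - z_{t-1}
    cost = delta * np.abs(np.diff(F))   # charged whenever the position changes
    return mu * (rf + F[:-1] * (r - rf) - cost)

R = additive_returns([100, 101, 103, 102], [1, 1, -1, -1], delta=0.5)
wealth = 10.0 + R.sum()                 # W_T = W_0 + P_T
```

In this toy run the trader is long for the first two price moves, pays a cost for flipping from long to short, and then profits from the final price drop.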

Profit and Wealth for Trading Systems (2)
- Multiplicative profits
  - A fixed fraction $\nu > 0$ of accumulated wealth is invested
  - $r_t = z_t / z_{t-1} - 1$
  - In the case of no short sales, when $\nu = 1$
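
A sketch of the multiplicative case for no short sales and $\nu = 1$, assuming the wealth recursion of the summarized paper, $W_T = W_0 \prod_t (1 + R_t)$ with $1 + R_t = \{1 + (1 - F_{t-1})\,r_t^f + F_{t-1} r_t\}\,(1 - \delta\,|F_t - F_{t-1}|)$ and $F_t \in \{0, 1\}$. The function name and the constant risk-free rate are assumptions made for illustration.

```python
import numpy as np

def multiplicative_wealth(prices, positions, rf=0.0, delta=0.0, w0=1.0):
    """Wealth path W_1..W_T when all wealth is reinvested each period."""
    z = np.asarray(prices, dtype=float)
    F = np.asarray(positions, dtype=float)   # F_t in {0, 1}: no short sales
    r = z[1:] / z[:-1] - 1.0                 # r_t = z_t / z_{t-1} - 1
    growth = (1.0 + (1.0 - F[:-1]) * rf + F[:-1] * r) \
        * (1.0 - delta * np.abs(np.diff(F)))
    return w0 * np.cumprod(growth)
```

For example, `multiplicative_wealth([100, 110, 99], [1, 1, 0])` compounds a 10% gain and a 10% loss, and a nonzero `delta` additionally scales wealth down in the period where the position changes.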

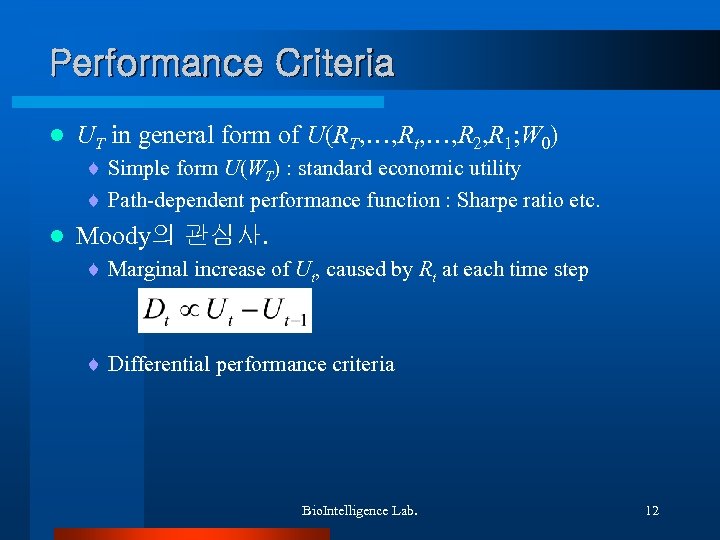

Performance Criteria
- $U_T$ in the general form $U(R_T, \ldots, R_t, \ldots, R_2, R_1; W_0)$
  - Simple form $U(W_T)$: standard economic utility
  - Path-dependent performance functions: Sharpe ratio, etc.
- Moody's focus
  - The marginal increase in $U_t$ caused by $R_t$ at each time step
  - Differential performance criteria

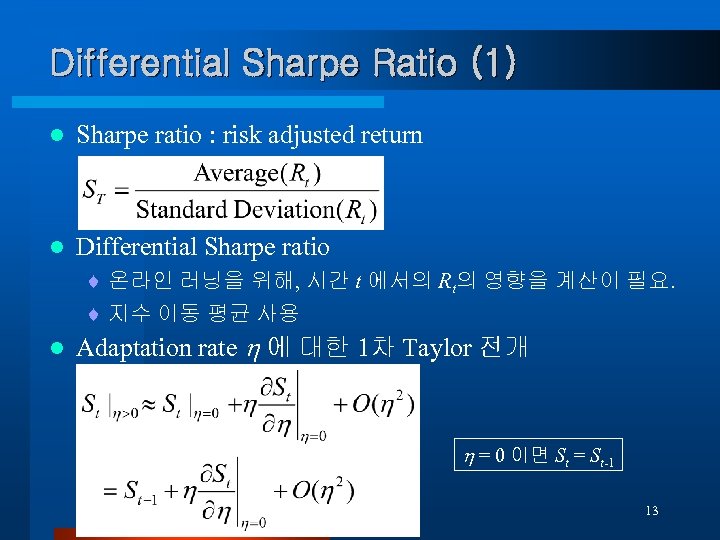

Differential Sharpe Ratio (1)
- Sharpe ratio: risk-adjusted return
- Differential Sharpe ratio
  - On-line learning requires computing the influence of $R_t$ at time $t$
  - Uses exponential moving averages
- First-order Taylor expansion in the adaptation rate $\eta$
  - If $\eta = 0$, then $S_t = S_{t-1}$

Differential Sharpe Ratio (2)
- Exponential moving averages with adaptation rate $\eta$
- Sharpe ratio
- From the Taylor expansion
  - $R_t > A_{t-1}$: increased reward
  - $R_t^2 > B_{t-1}$: increased risk
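
For reference, the quantities these bullets describe can be written as follows. This is a sketch in the notation of the summarized paper, where $A_t$ and $B_t$ are exponential moving averages of $R_t$ and $R_t^2$; the normalization constant of the moving-average Sharpe ratio is omitted as a simplifying assumption.

$$
\begin{aligned}
A_t &= A_{t-1} + \eta\,\Delta A_t, & \Delta A_t &= R_t - A_{t-1},\\
B_t &= B_{t-1} + \eta\,\Delta B_t, & \Delta B_t &= R_t^2 - B_{t-1},\\
S_t &= \frac{A_t}{\sqrt{B_t - A_t^2}}, & S_t &\approx S_{t-1} + \eta\,\left.\frac{dS_t}{d\eta}\right|_{\eta=0},\\
D_t &\equiv \left.\frac{dS_t}{d\eta}\right|_{\eta=0} = \frac{B_{t-1}\,\Delta A_t - \tfrac{1}{2}\,A_{t-1}\,\Delta B_t}{\left(B_{t-1} - A_{t-1}^2\right)^{3/2}}
\end{aligned}
$$

The numerator shows the two annotations directly: $\Delta A_t > 0$ (a return above the running average) raises $D_t$, while $\Delta B_t > 0$ (a squared return above the running second moment) lowers it when $A_{t-1} > 0$.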

Differential Sharpe Ratio (3)
- Derivative with respect to $R_t$
  - $D_t$ is maximized at $R_t = B_{t-1}/A_{t-1}$
- Meaning of the differential Sharpe ratio
  - Makes on-line learning possible: easily computed from $A_{t-1}$ and $B_{t-1}$
  - Allows recursive updating
  - Weights recent returns more heavily
  - Interpretability: the contribution of each $R_t$ becomes visible
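
The recursive computation can be sketched as below, assuming $D_t = (B_{t-1}\Delta A_t - \tfrac12 A_{t-1}\Delta B_t)/(B_{t-1} - A_{t-1}^2)^{3/2}$ with $\Delta A_t = R_t - A_{t-1}$ and $\Delta B_t = R_t^2 - B_{t-1}$. The function name and the requirement that the caller supply initial estimates `a0`, `b0` with `b0 > a0**2` are assumptions of this sketch.

```python
import numpy as np

def differential_sharpe_ratios(returns, eta, a0, b0):
    """Return D_t for each R_t, plus the final moving averages A and B."""
    A, B = a0, b0
    out = []
    for R in returns:
        dA, dB = R - A, R * R - B
        var = B - A * A                  # must stay positive
        out.append((B * dA - 0.5 * A * dB) / var ** 1.5)
        A += eta * dA                    # recursive moving-average updates
        B += eta * dB
    return np.array(out), A, B

def d_single(R):
    # D_t for one return, starting from A = 0.01 and B = 0.0005.
    return differential_sharpe_ratios([R], 0.01, 0.01, 0.0005)[0][0]
```

With these starting values $B_{t-1}/A_{t-1} = 0.05$, so `d_single(0.05)` is larger than `d_single(0.04)` or `d_single(0.06)`: returns beyond the maximizing value are penalized because they add more to the risk estimate than to the reward estimate.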

III. Learning to Trade

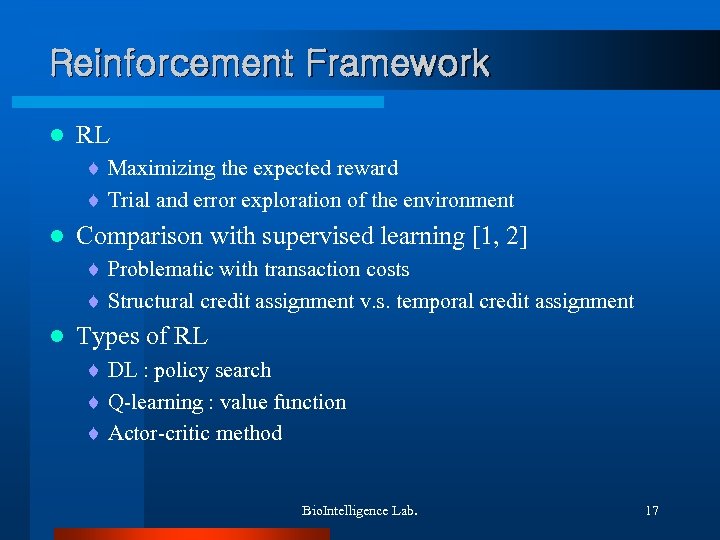

Reinforcement Framework
- RL
  - Maximizing the expected reward
  - Trial-and-error exploration of the environment
- Comparison with supervised learning [1, 2]
  - Supervised learning is problematic when there are transaction costs
  - Structural credit assignment vs. temporal credit assignment
- Types of RL
  - DR: policy search
  - Q-learning: value function
  - Actor-critic methods

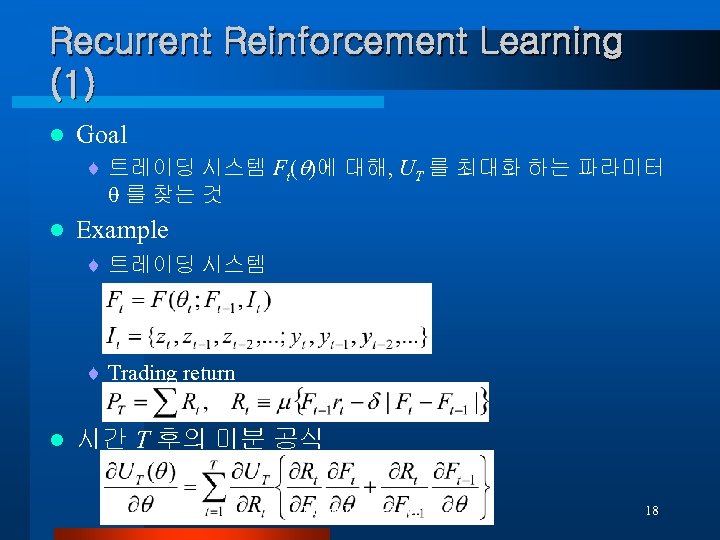

Recurrent Reinforcement Learning (1)
- Goal
  - For a trading system $F_t(\theta)$, find the parameters $\theta$ that maximize $U_T$
- Example
  - Trading system
  - Trading return
- Derivative after $T$ time steps
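
As a sketch of the derivative the last bullet refers to, assuming the formulation of the summarized paper in which $R_t$ depends on both $F_t$ and $F_{t-1}$, and $F_t$ depends on $\theta$ directly and through $F_{t-1}$:

$$
\frac{dU_T(\theta)}{d\theta} = \sum_{t=1}^{T} \frac{dU_T}{dR_t}\left\{ \frac{dR_t}{dF_t}\frac{dF_t}{d\theta} + \frac{dR_t}{dF_{t-1}}\frac{dF_{t-1}}{d\theta} \right\},
\qquad
\frac{dF_t}{d\theta} = \frac{\partial F_t}{\partial \theta} + \frac{\partial F_t}{\partial F_{t-1}}\frac{dF_{t-1}}{d\theta}
$$

The second relation is the recurrence that makes the gradient depend on the whole history of positions.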

Recurrent Reinforcement Learning (2)
- Learning technique
  - Back-propagation through time (BPTT)
- Temporal dependencies
- Differential performance criteria $D_t$
- Stochastic version
  - Focus only on the terms that involve $R_t$
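
The stochastic (on-line) version can be illustrated with the sketch below. It is not the authors' implementation: it assumes a single tanh unit $F_t = \tanh(\theta \cdot x_t)$ with inputs $x_t = [1, r_t, \dots, r_{t-m+1}, F_{t-1}]$, the trading return $R_t = F_{t-1} r_t - \delta\,|F_t - F_{t-1}|$, and the differential Sharpe ratio as $D_t$. The learning rate `rho`, the guard `eps`, and the initialization of the moving averages are illustrative choices.

```python
import numpy as np

def rrl_online(returns, m=4, delta=0.001, eta=0.01, rho=0.01, eps=1e-8):
    """One on-line pass of a tanh trader trained on the differential Sharpe ratio."""
    r = np.asarray(returns, dtype=float)
    theta = np.zeros(m + 2)               # [bias, m lagged returns, previous position]
    F_prev, dF_prev = 0.0, np.zeros(m + 2)
    A, B = 0.0, float(np.mean(r[:m] ** 2))  # moving estimates of E[R] and E[R^2]
    positions = np.zeros(len(r))
    for t in range(m, len(r)):
        x = np.concatenate(([1.0], r[t - m + 1:t + 1][::-1], [F_prev]))
        F = np.tanh(theta @ x)
        # Total derivative dF_t/dtheta, including the recurrent path via F_{t-1}.
        dF = (1.0 - F ** 2) * (x + theta[-1] * dF_prev)
        # Trading return and its partial derivatives w.r.t. F_t and F_{t-1}.
        s = np.sign(F - F_prev)
        R = F_prev * r[t] - delta * abs(F - F_prev)
        dR_dF, dR_dFprev = -delta * s, r[t] + delta * s
        # dD_t/dR_t for the differential Sharpe ratio.
        var = max(B - A * A, eps)
        dD_dR = (B - A * R) / var ** 1.5
        # Gradient ascent on D_t, keeping only the terms that involve R_t.
        theta = theta + rho * dD_dR * (dR_dF * dF + dR_dFprev * dF_prev)
        A, B = A + eta * (R - A), B + eta * (R * R - B)
        F_prev, dF_prev = F, dF
        positions[t] = F
    return theta, positions

rng = np.random.default_rng(0)
demo_returns = 0.001 + 0.01 * rng.standard_normal(500)   # synthetic data
theta, positions = rrl_online(demo_returns)
```

Only the previous step's derivative `dF_prev` is stored, so each update costs the same regardless of how long the system has been running.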

Recurrent Reinforcement Learning (3)
- Reminder
  - Moody focuses on optimizing $D_t$, an immediate measure of the effect of a particular action
- [1, 2]
  - Portfolio optimization, etc.

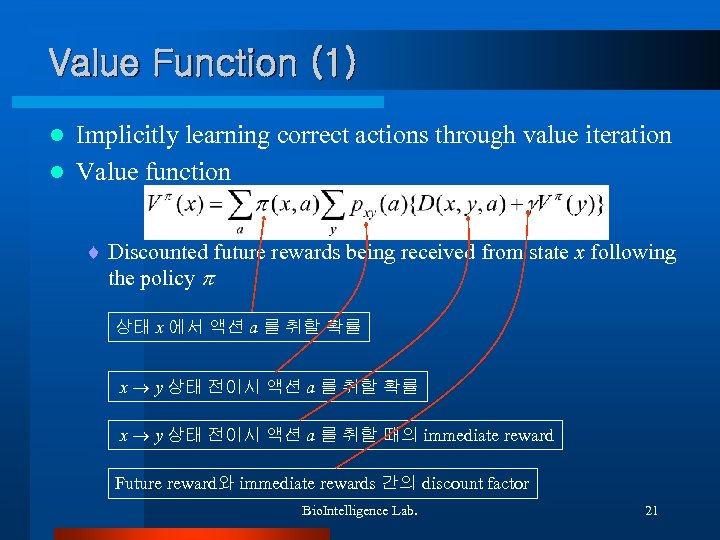

Value Function (1)
- Implicitly learning correct actions through value iteration
- Value function
  - Discounted future rewards received from state $x$ when following policy $\pi$
  - Terms of the value function:
    - Probability of taking action $a$ in state $x$
    - Immediate reward when action $a$ is taken in the transition from state $x$ to state $y$
    - Discount factor between future and immediate rewards
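
Written out, a value function with exactly these three ingredients takes the standard form below. The symbol names are assumptions that follow the summarized paper: $\pi(x, a)$ for the action probability, $p_{xy}(a)$ for the transition probability, $D(x, y, a)$ for the immediate reward, and $\gamma$ for the discount factor.

$$
V^{\pi}(x) = \sum_{a} \pi(x, a) \sum_{y} p_{xy}(a)\,\bigl\{ D(x, y, a) + \gamma\, V^{\pi}(y) \bigr\}
$$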

Value Function (2)
- Optimal value function & Bellman's optimality equation
- Value iteration update: converges to the optimal solution
- Optimal policy
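
A small sketch of value iteration, assuming the update $V_{t+1}(x) = \max_a \sum_y p_{xy}(a)\{D(x,y,a) + \gamma V_t(y)\}$ and a greedy policy read off from the same quantity. The array layout `P[a, x, y]`, `D[a, x, y]` and the two-state toy problem are illustrative and not taken from the slides.

```python
import numpy as np

def value_iteration(P, D, gamma, n_iter=200):
    """P[a, x, y]: transition probabilities; D[a, x, y]: immediate rewards."""
    V = np.zeros(P.shape[1])
    for _ in range(n_iter):
        Q = np.sum(P * (D + gamma * V), axis=2)   # Q[a, x], expectation over y
        V = Q.max(axis=0)
    return V, Q.argmax(axis=0)

# Toy problem: action 0 stays, action 1 switches state; landing in state 1 pays 1.
P = np.array([[[1.0, 0.0], [0.0, 1.0]],
              [[0.0, 1.0], [1.0, 0.0]]])
D = np.zeros((2, 2, 2))
D[:, :, 1] = 1.0
V_opt, policy = value_iteration(P, D, gamma=0.5)
```

Note that the update needs the transition probabilities `P` explicitly, which is the requirement that Q-learning removes.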

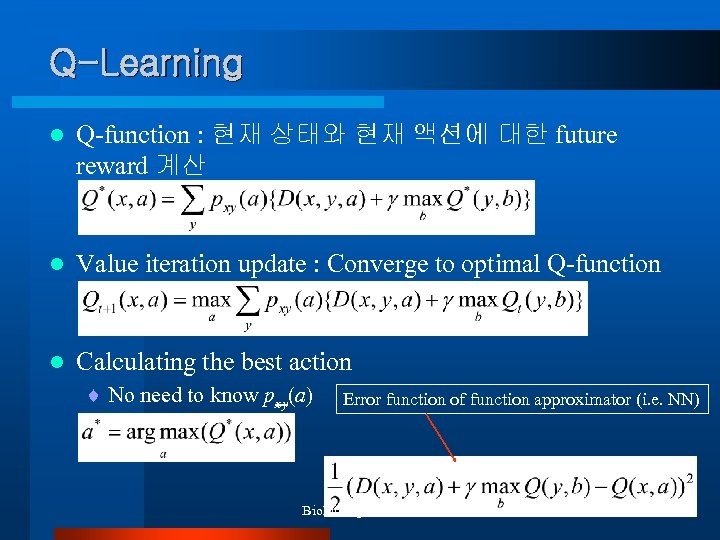

Q-Learning
- Q-function: the future reward for the current state and the current action
- Value iteration update: converges to the optimal Q-function
- Calculating the best action
  - No need to know $p_{xy}(a)$
- Error function of the function approximator (e.g., a neural network)
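
A tabular sketch of one Q-learning step, assuming the squared error $\tfrac12\{D(x,y,a) + \gamma \max_b Q(y,b) - Q(x,a)\}^2$ as the quantity being reduced. A table stands in for the neural network here, and the function name and learning rate `lr` are illustrative.

```python
import numpy as np

def q_learning_step(Q, x, a, reward, y, gamma, lr):
    """Update Q[x, a] from one observed transition x -> y; return the error."""
    target = reward + gamma * np.max(Q[y])   # uses the sampled next state only
    td = target - Q[x, a]
    Q[x, a] += lr * td
    return 0.5 * td ** 2

Q = np.zeros((2, 2))
err = q_learning_step(Q, x=0, a=1, reward=1.0, y=1, gamma=0.5, lr=0.1)
best_action = int(np.argmax(Q[0]))           # greedy action in state 0
```

The update uses only the observed next state `y`, so no transition model is required, and the best action is a plain argmax over the row of `Q`.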

IV. Empirical Results
1. Artificial price series
2. U.S. Dollar/British Pound exchange rate
3. Monthly S&P 500 stock index

A trading system based on DR

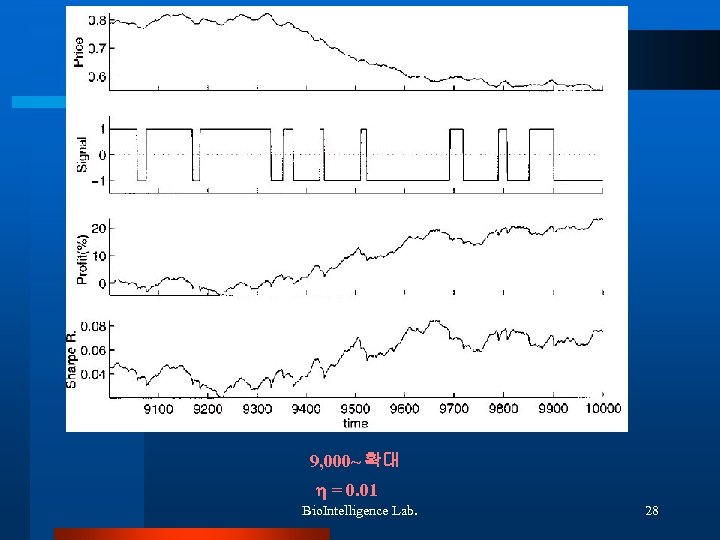

Artificial price series
- Data: autoregressive trend processes
- 10,000 samples
- Questions examined
  - Is RRL a suitable tool for learning trading strategies?
  - How does the number of trades change as transaction costs increase?

Figure annotations:
- 10,000 samples
- {long, short} positions only
- Performance is degraded during roughly the first 2,000 periods

Figure annotations:
- Zoomed view from period 9,000 onward
- = 0.01

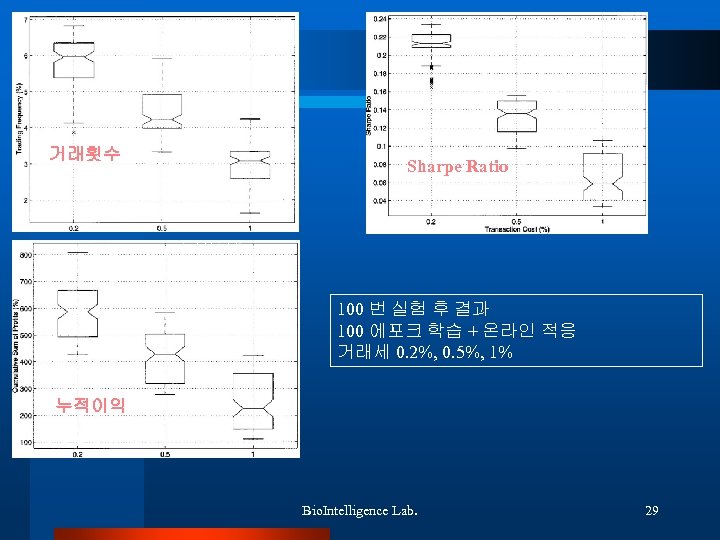

Figure annotations:
- Panels: trading frequency, cumulative profit, Sharpe ratio
- Results of 100 experiments
- 100 epochs of training + on-line adaptation
- Transaction costs: 0.2%, 0.5%, 1%

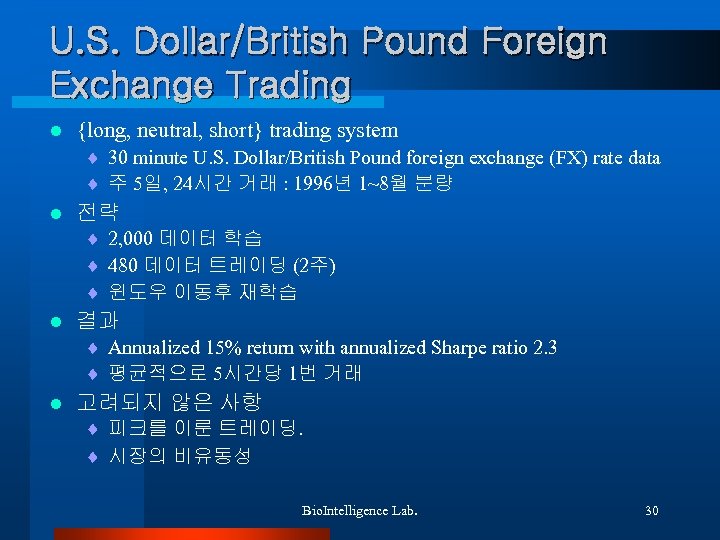

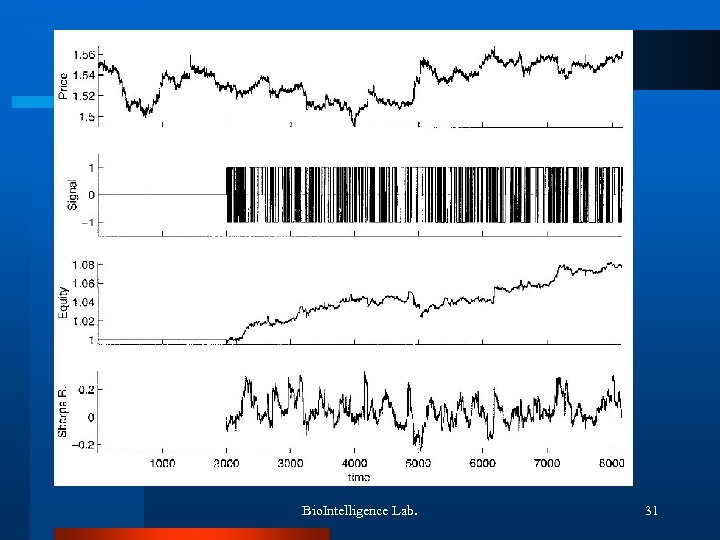

U.S. Dollar/British Pound Foreign Exchange Trading
- {long, neutral, short} trading system
  - 30-minute U.S. Dollar/British Pound foreign exchange (FX) rate data
  - Trading 24 hours a day, 5 days a week: data for January to August 1996
- Strategy
  - Train on 2,000 data points
  - Trade on the next 480 data points (2 weeks)
  - Slide the window forward and retrain
- Results
  - Annualized return of 15% with an annualized Sharpe ratio of 2.3
  - One trade every 5 hours on average
- Issues not considered
  - Concentration of trading at peak times
  - Market illiquidity
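
The train/trade/slide procedure can be sketched as an index generator. It assumes the window advances by the length of the trading block, which the slide does not state explicitly; the function name is illustrative. The block sizes are consistent with the data frequency: 480 half-hour bars are 240 hours, or ten 24-hour trading days, which is two 5-day weeks.

```python
def rolling_windows(n_samples, n_train=2000, n_trade=480):
    """Yield ((train_start, train_end), (trade_start, trade_end)) index pairs."""
    start = 0
    while start + n_train + n_trade <= n_samples:
        train = (start, start + n_train)
        trade = (start + n_train, start + n_train + n_trade)
        yield train, trade
        start += n_trade          # slide forward, then retrain

windows = list(rolling_windows(3000))
```

Each trading block starts where its training block ends, so the system is always evaluated on data it has not seen during that round of training.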

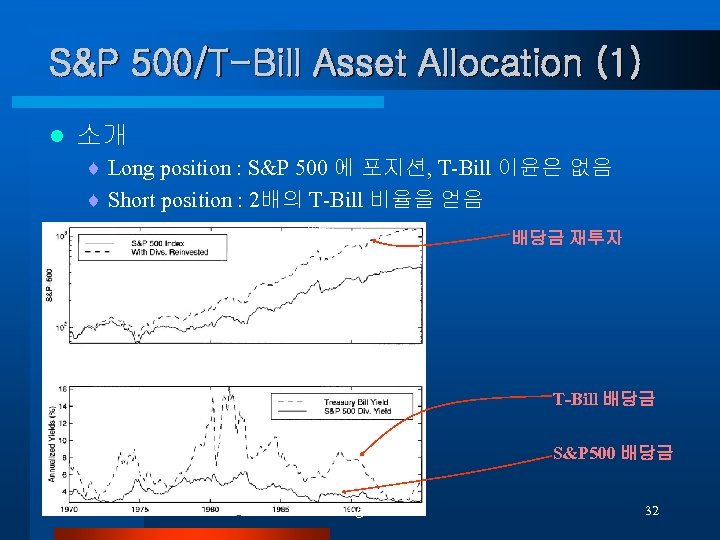

S&P 500/T-Bill Asset Allocation (1)
- Introduction
  - Long position: invested in the S&P 500, earning no T-Bill interest
  - Short position: earns twice the T-Bill rate
- Figure labels: dividends reinvested; T-Bill yield; S&P 500 dividend yield

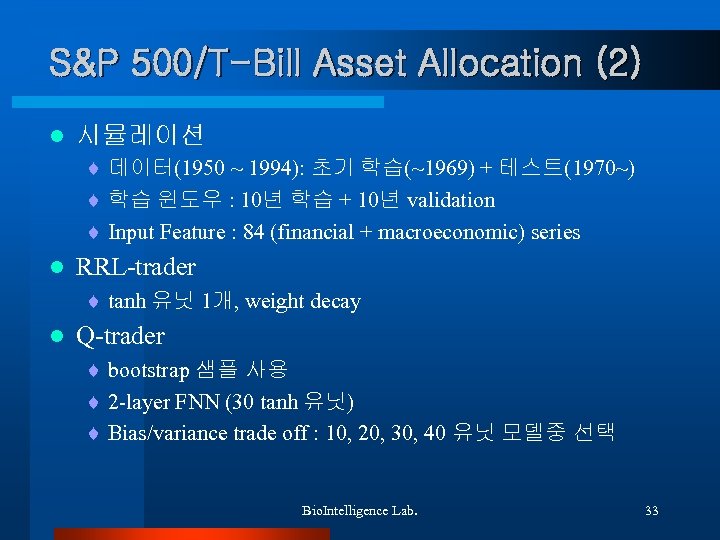

S&P 500/T-Bill Asset Allocation (2)
- Simulation
  - Data (1950-1994): initial training (through 1969) + testing (1970 onward)
  - Training window: 10 years of training + 10 years of validation
  - Input features: 84 (financial + macroeconomic) series
- RRL-trader
  - A single tanh unit, weight decay
- Q-trader
  - Uses bootstrap samples
  - 2-layer feedforward neural network (30 tanh units)
  - Bias/variance trade-off: selected from models with 10, 20, 30, and 40 units

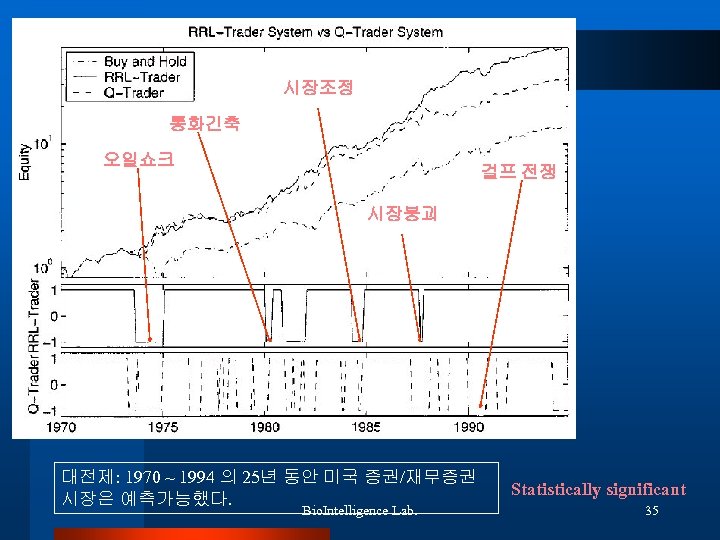

Figure annotations:
- Voting method: 30 RRL runs, 10 Q runs
- Transaction costs: 0.5%
- Profits are reinvested
- Multiplicative profit ratio
  - Buy and Hold: 1348%
  - Q-Trader: 3359%
  - RRL-Trader: 5860%

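Figure annotations:
- Marked events: market correction, monetary tightening, oil shock, Gulf War, market crash
- Main premise: over the 25 years from 1970 to 1994, the U.S. stock and Treasury bill markets were predictable
- Statistically significant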

Sensitivity Analysis
- Inflation expectations

V. Learn the Policy or Learn the Value?

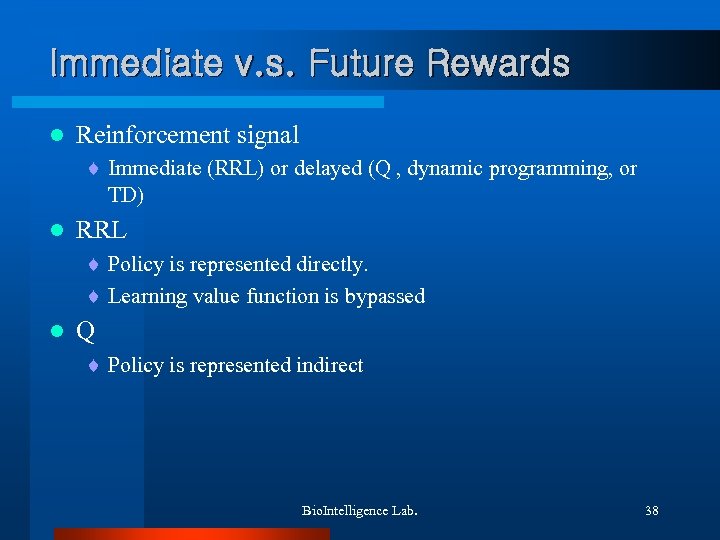

Immediate vs. Future Rewards
- Reinforcement signal
  - Immediate (RRL) or delayed (Q-learning, dynamic programming, or TD)
- RRL
  - The policy is represented directly
  - Learning a value function is bypassed
- Q
  - The policy is represented indirectly

Policies vs. Values
- Some limitations of the value function approach
  - Original formulation of Q-learning: discrete action and state spaces
  - Curse of dimensionality
  - Policies derived from Q-learning tend to be brittle: small changes in the value function may lead to large changes in the policy
  - Large-scale noise and non-stationarity may lead to severe problems
- RRL's advantages
  - The policy is represented directly: a simpler functional form is sufficient
  - Can produce real-valued actions
  - More robust in noisy environments / quick adaptation to non-stationarity

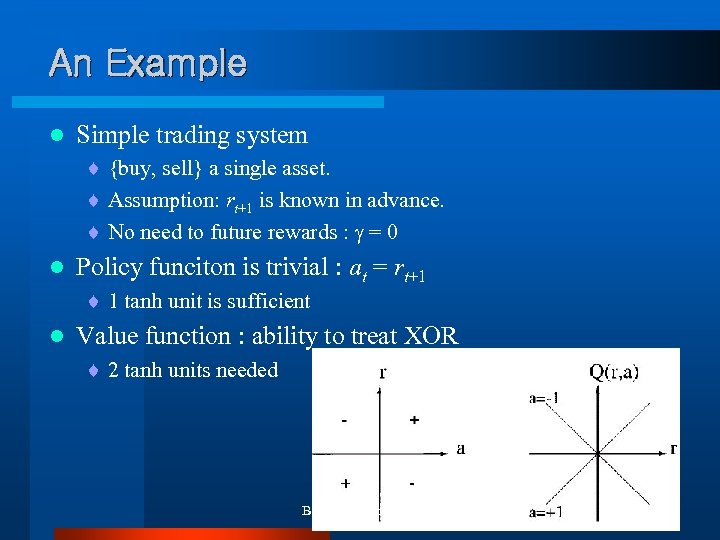

An Example
- Simple trading system
  - {buy, sell} a single asset
  - Assumption: $r_{t+1}$ is known in advance
  - No need to consider future rewards: $\gamma = 0$
- The policy function is trivial: $a_t = \mathrm{sign}(r_{t+1})$
  - 1 tanh unit is sufficient
- Value function: must be able to represent XOR
  - 2 tanh units needed
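
The contrast can be checked numerically under a simplifying assumption: returns and actions are reduced to their signs, so the immediate reward is $r_{t+1} a_t$ on the four corners $(\pm 1, \pm 1)$. The grid search below is only an illustration over a finite set of weights, not a proof, and all names in it are hypothetical.

```python
import itertools
import numpy as np

# The four sign combinations of (r_{t+1}, a_t).
corners = np.array([[-1, -1], [-1, 1], [1, -1], [1, 1]], dtype=float)
r, a = corners[:, 0], corners[:, 1]
reward = r * a        # immediate reward with gamma = 0

def unit_matches(w_r, w_a, b, target):
    """True if one tanh unit reproduces the sign pattern `target` on all corners."""
    return bool(np.all(np.sign(np.tanh(w_r * r + w_a * a + b)) == target))

# Policy: the best action depends on r alone, so one unit with weight 1 on r works.
policy_ok = unit_matches(1.0, 0.0, 0.0, np.sign(r))

# Value: the sign of r * a is positive only when the signs agree (an XOR-type
# pattern), and no single unit on this weight grid reproduces it.
grid = np.linspace(-3.0, 3.0, 13)
value_ok = any(unit_matches(w_r, w_a, b, np.sign(reward))
               for w_r, w_a, b in itertools.product(grid, repeat=3))
```

Here `policy_ok` is true and `value_ok` is false, matching the slide's point that representing the value function needs a hidden layer even when the policy itself is trivial.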

Conclusion
- How to train trading systems via DR
- RRL algorithm
- Differential Sharpe ratio & differential downside deviation ratio
- RRL is more efficient than Q-learning in the financial domain
