- Number of slides: 23

Learning Repositories Quality Evaluation and Improvement Tools
Assoc. Prof. Dr. Eugenijus Kurilovas
ITC MoE, IMI, VGTU
Lithuania

The main notions

- Learning object (LO) is referred to as “any digital resource that can be reused to support learning” (Wiley, 2000)
- LO repositories (LORs) are considered here as properly constituted systems (i.e., organised LO collections) consisting of LOs, their metadata and tools / services to manage them
- Metadata is considered here as “structured data about data” (Duval et al., 2002)

The main notions

- Quality evaluation is defined as the systematic examination of the extent to which an entity (part, product, service or organisation) is capable of meeting specified requirements
- Multiple criteria evaluation method is referred to as the experts’ additive utility function, which includes the alternatives’ evaluation criteria, their ratings (values) and weights
- Expert evaluation is hereafter referred to as the multiple criteria evaluation of software packages (LORs in our case) aimed at selecting the best alternative based on score-ranking results

The main notions

- The software engineering Principle: software can be evaluated using two types of evaluation criteria, ‘internal quality’ and ‘quality in use’ criteria
- ‘Internal quality’ is a descriptive characteristic that describes the quality of software independently of any particular context of its use
- ‘Quality in use’ is an evaluative characteristic of software obtained by making a judgment based on criteria that determine the worthiness of the software for a particular project

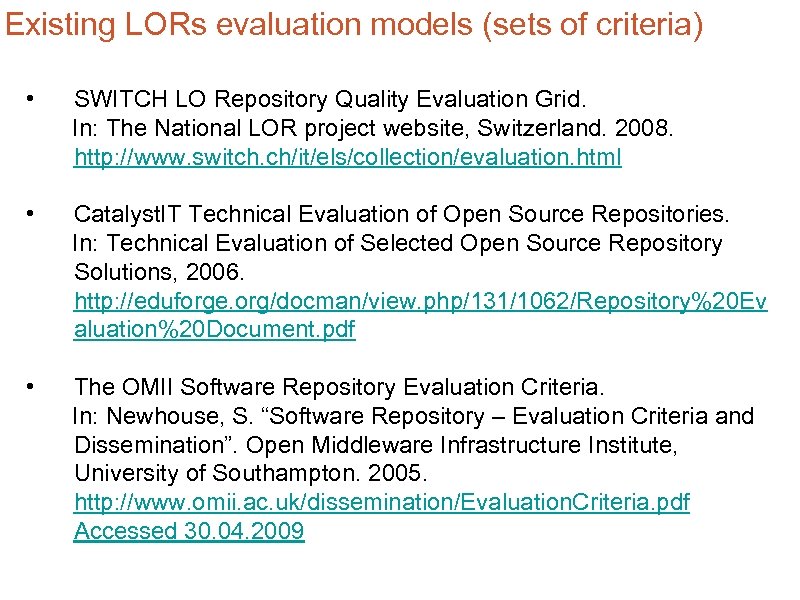

Existing LORs evaluation models (sets of criteria)

- SWITCH LO Repository Quality Evaluation Grid. In: The National LOR project website, Switzerland. 2008. http://www.switch.ch/it/els/collection/evaluation.html
- Catalyst IT Technical Evaluation of Open Source Repositories. In: Technical Evaluation of Selected Open Source Repository Solutions, 2006. http://eduforge.org/docman/view.php/131/1062/Repository%20Evaluation%20Document.pdf
- The OMII Software Repository Evaluation Criteria. In: Newhouse, S. “Software Repository – Evaluation Criteria and Dissemination”. Open Middleware Infrastructure Institute, University of Southampton. 2005. http://www.omii.ac.uk/dissemination/EvaluationCriteria.pdf

Accessed 30.04.2009

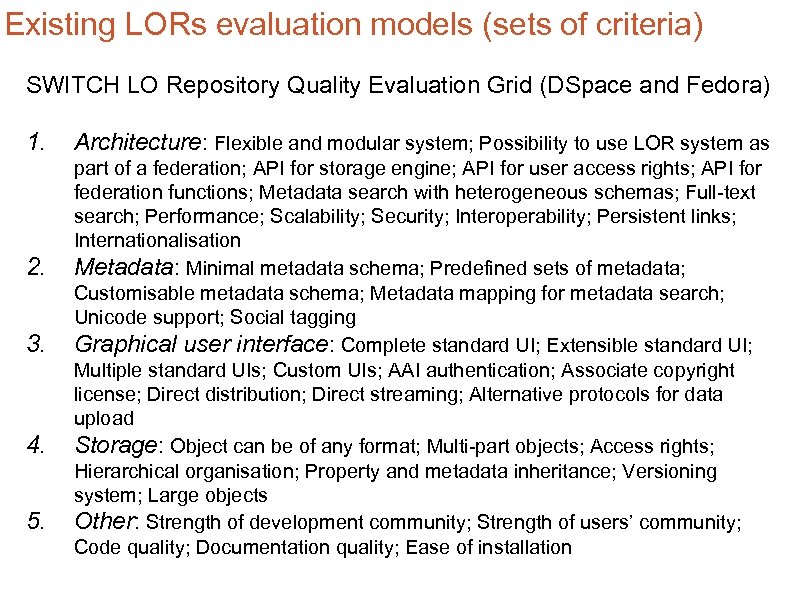

Existing LORs evaluation models (sets of criteria)

SWITCH LO Repository Quality Evaluation Grid (DSpace and Fedora)

1. Architecture: Flexible and modular system; Possibility to use LOR system as part of a federation; API for storage engine; API for user access rights; API for federation functions; Metadata search with heterogeneous schemas; Full-text search; Performance; Scalability; Security; Interoperability; Persistent links; Internationalisation
2. Metadata: Minimal metadata schema; Predefined sets of metadata; Customisable metadata schema; Metadata mapping for metadata search; Unicode support; Social tagging
3. Graphical user interface: Complete standard UI; Extensible standard UI; Multiple standard UIs; Custom UIs; AAI authentication; Associate copyright license; Direct distribution; Direct streaming; Alternative protocols for data upload
4. Storage: Object can be of any format; Multi-part objects; Access rights; Hierarchical organisation; Property and metadata inheritance; Versioning system; Large objects
5. Other: Strength of development community; Strength of users’ community; Code quality; Documentation quality; Ease of installation

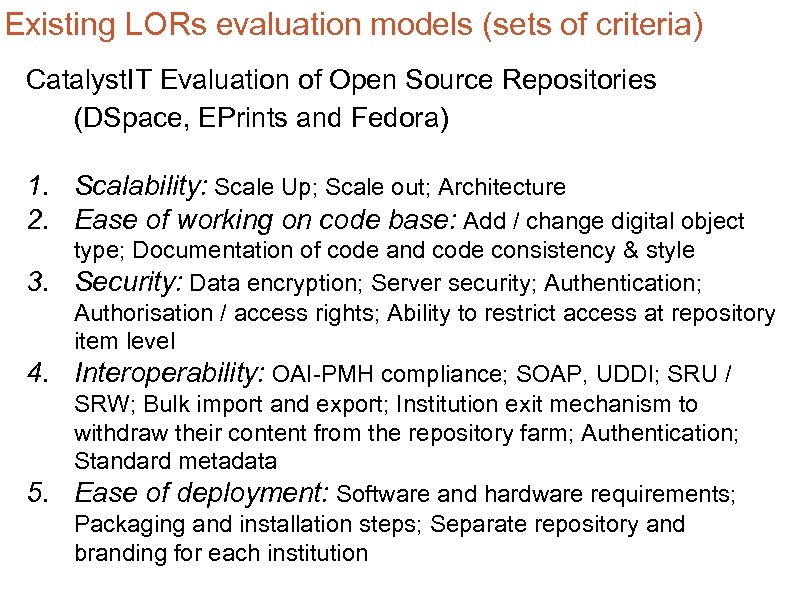

Existing LORs evaluation models (sets of criteria)

Catalyst IT Evaluation of Open Source Repositories (DSpace, EPrints and Fedora)

1. Scalability: Scale up; Scale out; Architecture
2. Ease of working on code base: Add / change digital object type; Documentation of code and code consistency & style
3. Security: Data encryption; Server security; Authentication; Authorisation / access rights; Ability to restrict access at repository item level
4. Interoperability: OAI-PMH compliance; SOAP, UDDI; SRU / SRW; Bulk import and export; Institution exit mechanism to withdraw their content from the repository farm; Authentication; Standard metadata
5. Ease of deployment: Software and hardware requirements; Packaging and installation steps; Separate repository and branding for each institution

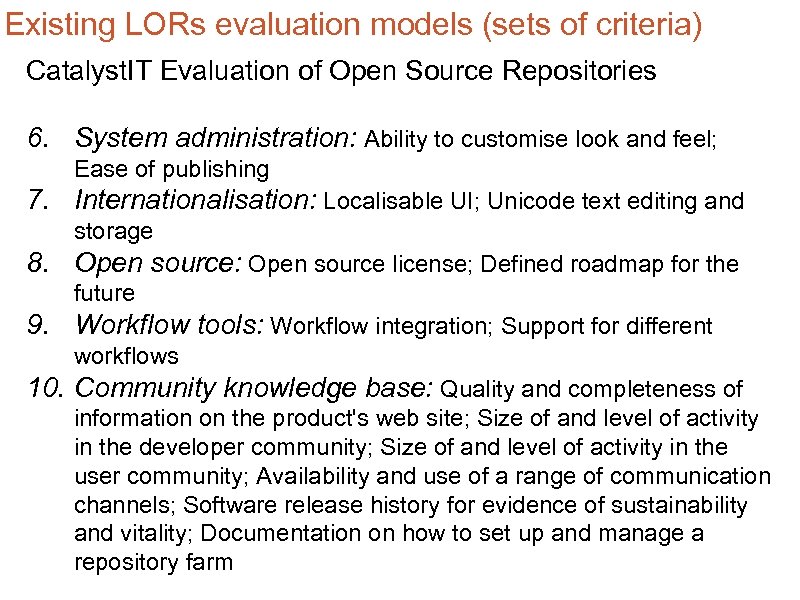

Existing LORs evaluation models (sets of criteria)

Catalyst IT Evaluation of Open Source Repositories

6. System administration: Ability to customise look and feel; Ease of publishing
7. Internationalisation: Localisable UI; Unicode text editing and storage
8. Open source: Open source license; Defined roadmap for the future
9. Workflow tools: Workflow integration; Support for different workflows
10. Community knowledge base: Quality and completeness of information on the product's web site; Size of and level of activity in the developer community; Size of and level of activity in the user community; Availability and use of a range of communication channels; Software release history for evidence of sustainability and vitality; Documentation on how to set up and manage a repository farm

Existing LORs evaluation models (sets of criteria)

The OMII Software Repository Evaluation Criteria

1. Documentation: Introductory docs; Prerequisite docs; Installation docs; User docs; Admin docs; Tutorials; Functional specification; Implementation specification; Test documents
2. Technical: Pre-requisites; Deployment; Verification; Stability; Scalability; Coding
3. Management: Support; Sustainability; Standards

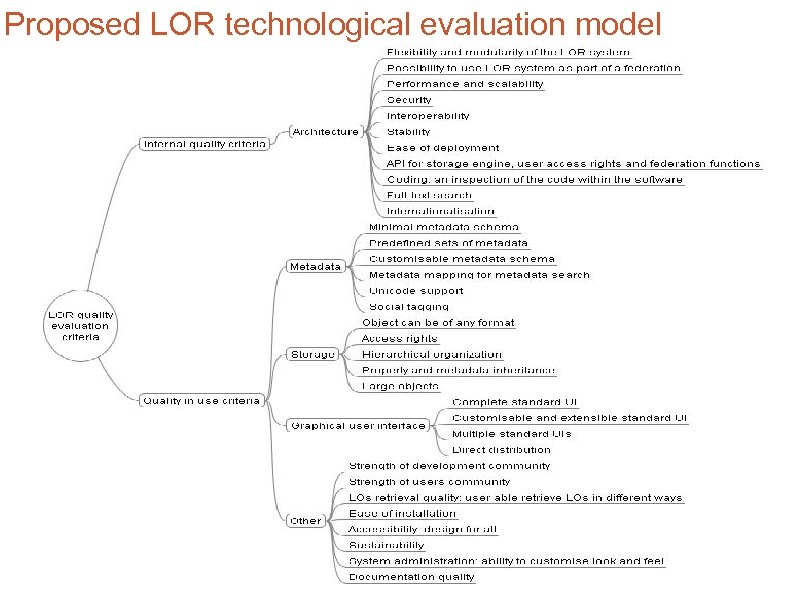

Proposed LOR technological evaluation model

Proposed LOR technological evaluation model

- The proposed model is largely similar to the SWITCH model, but it also includes several criteria from the other models. All criteria are grouped according to the software engineering Principle (‘internal quality’ versus ‘quality in use’)
- ‘Internal quality’ criteria are the area of interest of scientists and software engineers. ‘Quality in use’ criteria are to be analysed mostly by programmers and users, taking into account users’ feedback on the usability of the software
- These criteria are comprehensive and do not overlap
- The criteria that could be regarded as overlapping are ‘Accessibility: access for all’ (it could also be placed in the ‘Architecture’ group, but it also needs users’ evaluation, so it is placed in the ‘Quality in use’ group) and ‘Property and metadata inheritance’ (it could also be placed in the ‘Metadata’ group, but it also deals with ‘Storage’ issues)

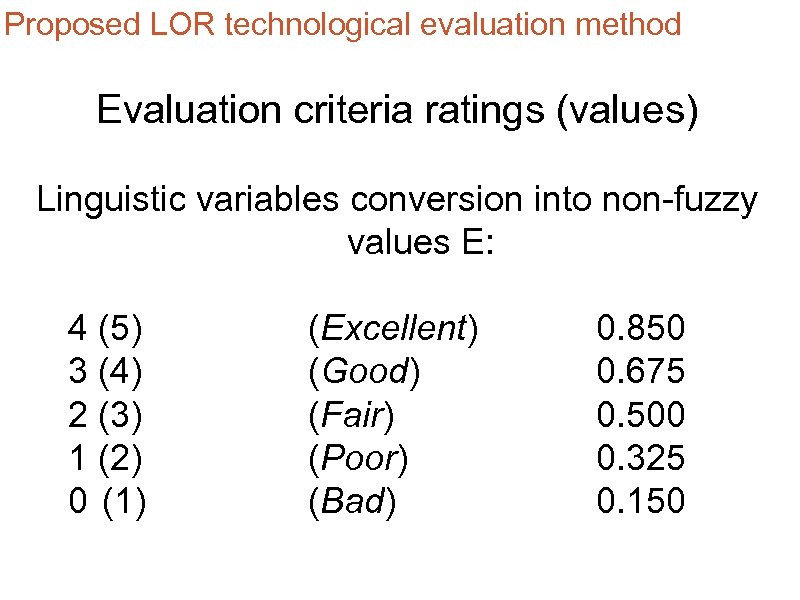

Proposed LOR technological evaluation method

Evaluation criteria ratings (values): conversion of linguistic variables into non-fuzzy values

| Linguistic variable | Rating | Non-fuzzy value (E) |
|---|---|---|
| Excellent | 4 (5) | 0.850 |
| Good | 3 (4) | 0.675 |
| Fair | 2 (3) | 0.500 |
| Poor | 1 (2) | 0.325 |
| Bad | 0 (1) | 0.150 |

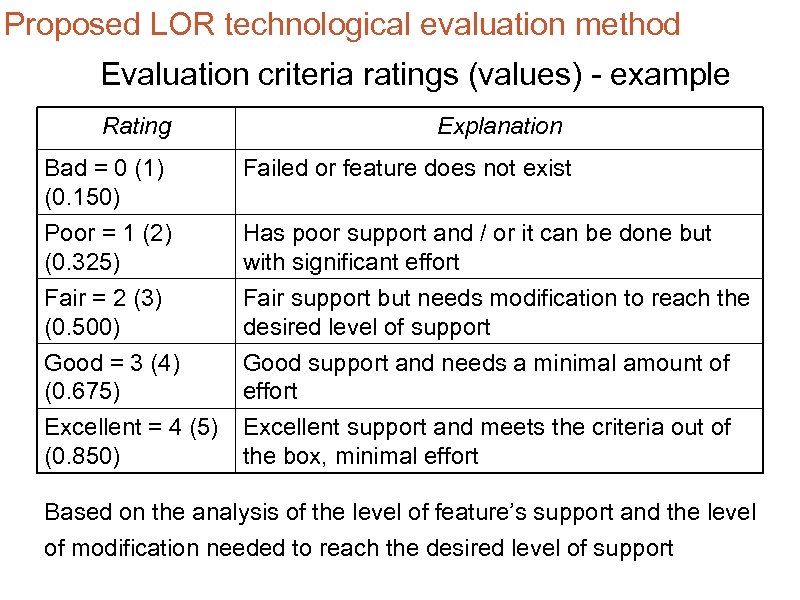

Proposed LOR technological evaluation method

Evaluation criteria ratings (values): example

| Rating | Explanation |
|---|---|
| Bad = 0 (1) (0.150) | Failed or feature does not exist |
| Poor = 1 (2) (0.325) | Has poor support and / or it can be done but with significant effort |
| Fair = 2 (3) (0.500) | Fair support but needs modification to reach the desired level of support |
| Good = 3 (4) (0.675) | Good support and needs a minimal amount of effort |
| Excellent = 4 (5) (0.850) | Excellent support and meets the criteria out of the box, minimal effort |

The ratings are based on the analysis of the level of the feature’s support and the level of modification needed to reach the desired level of support.
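
The conversion of linguistic ratings into non-fuzzy values can be sketched as a simple lookup. This is a minimal illustration only: the numeric values come from the slide, while the names `RATING_VALUES` and `to_value` are hypothetical and not part of the original method description.

```python
# Linguistic ratings and their non-fuzzy values, copied from the slide.
# The dictionary and function names are illustrative assumptions.
RATING_VALUES = {
    "excellent": 0.850,
    "good": 0.675,
    "fair": 0.500,
    "poor": 0.325,
    "bad": 0.150,
}


def to_value(rating: str) -> float:
    """Convert a linguistic rating (case-insensitive) to its non-fuzzy value."""
    key = rating.strip().lower()
    if key not in RATING_VALUES:
        raise ValueError(f"unknown rating: {rating!r}")
    return RATING_VALUES[key]
```

An expert's judgment such as "Good" for a given criterion would then enter the evaluation as `to_value("Good")`.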

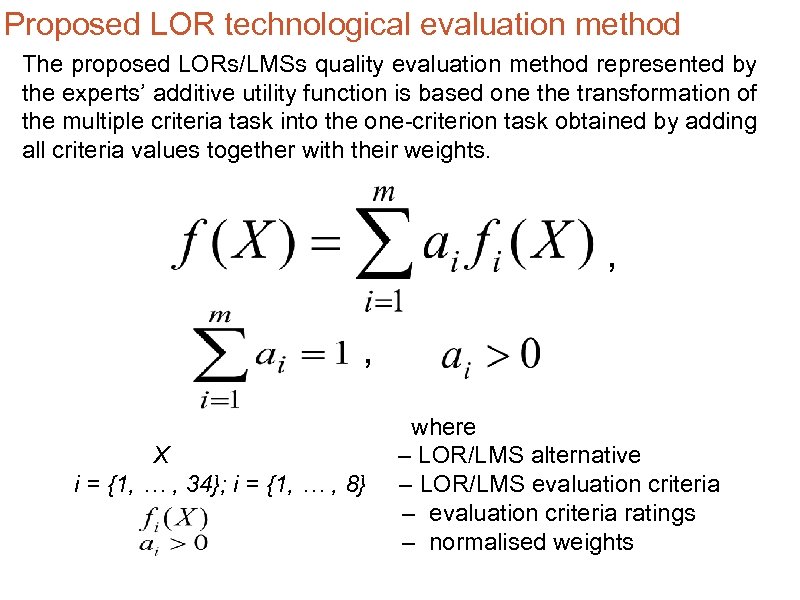

Proposed LOR technological evaluation method

The proposed LORs/LMSs quality evaluation method, represented by the experts’ additive utility function, is based on the transformation of the multiple criteria task into a one-criterion task obtained by adding all criteria values together with their weights:

$$f(X) = \sum_{i=1}^{m} a_i f_i(X), \qquad \sum_{i=1}^{m} a_i = 1, \qquad a_i > 0,$$

with $i = 1, \dots, 34$ for LORs and $i = 1, \dots, 8$ for LMSs, where

- $X$ is a LOR/LMS alternative
- $f_i$ are the LOR/LMS evaluation criteria
- $f_i(X)$ are the evaluation criteria ratings
- $a_i$ are the normalised weights
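
The additive utility function described above amounts to a weighted sum of criterion ratings with normalised weights. The following Python sketch shows one way to compute it; the function name `additive_utility`, the validation rules, and the two-criterion example data are assumptions made for illustration and do not come from the slides.

```python
from typing import Mapping


def additive_utility(ratings: Mapping[str, float],
                     weights: Mapping[str, float]) -> float:
    """Return the weighted sum of criterion ratings for one alternative."""
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same criteria")
    if any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be positive")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must be normalised (sum to 1)")
    return sum(weights[c] * ratings[c] for c in ratings)


# Hypothetical two-criterion example (not taken from the slides).
example_ratings = {"criterion A": 0.850, "criterion B": 0.500}
example_weights = {"criterion A": 0.25, "criterion B": 0.75}
score = additive_utility(example_ratings, example_weights)
```

The alternative with the highest score ranks first; checking that the weights are positive and sum to 1 keeps scores comparable across alternatives.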

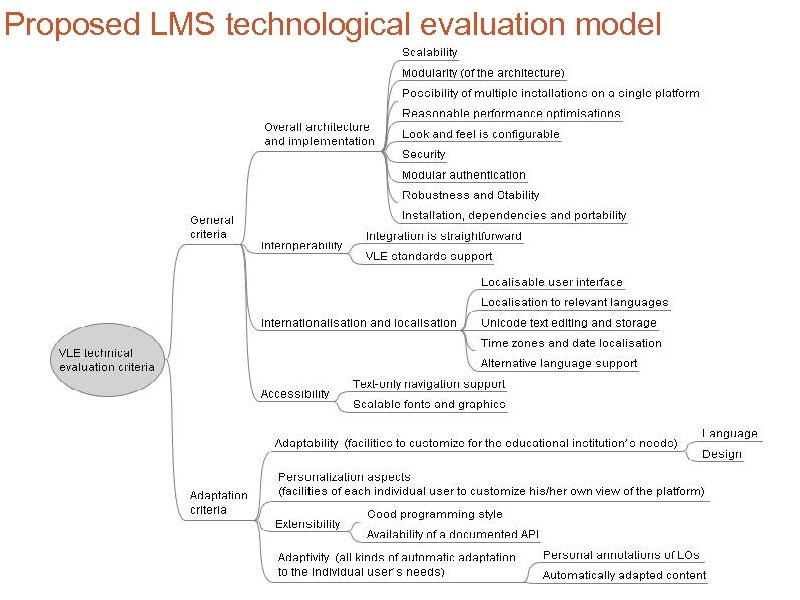

Proposed LMS technological evaluation model

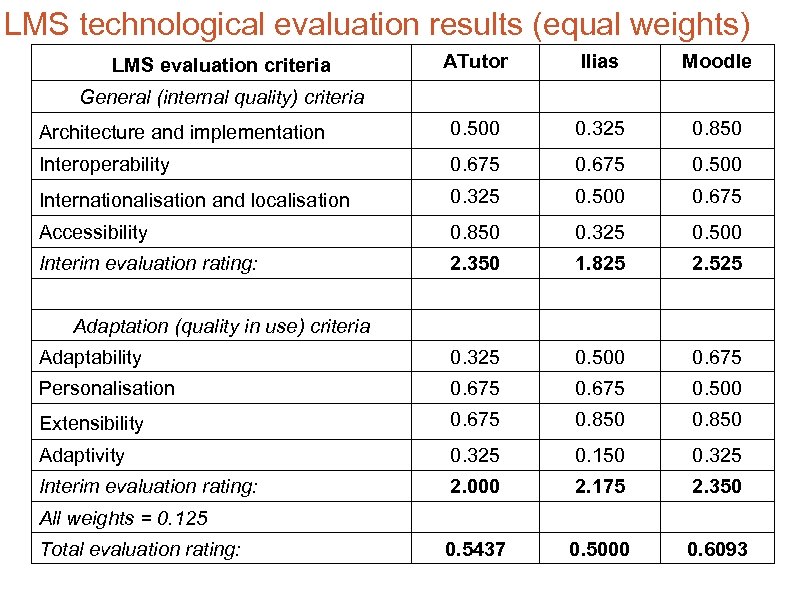

LMS technological evaluation results (equal weights)

All weights = 0.125

| LMS evaluation criteria | ATutor | Ilias | Moodle |
|---|---|---|---|
| General (internal quality) criteria | | | |
| Architecture and implementation | 0.500 | 0.325 | 0.850 |
| Interoperability | 0.675 | 0.675 | 0.500 |
| Internationalisation and localisation | 0.325 | 0.500 | 0.675 |
| Accessibility | 0.850 | 0.325 | 0.500 |
| Interim evaluation rating | 2.350 | 1.825 | 2.525 |
| Adaptation (quality in use) criteria | | | |
| Adaptability | 0.325 | 0.500 | 0.675 |
| Personalisation | 0.675 | 0.675 | 0.500 |
| Extensibility | 0.675 | 0.850 | 0.850 |
| Adaptivity | 0.325 | 0.150 | 0.325 |
| Interim evaluation rating | 2.000 | 2.175 | 2.350 |
| Total evaluation rating | 0.5437 | 0.5000 | 0.6093 |
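
As a check on the arithmetic, the total rating with equal weights is the sum of the two interim ratings multiplied by 0.125. For Moodle, for example:

$$0.125 \times (2.525 + 2.350) = 0.125 \times 4.875 = 0.609375$$

This agrees with the reported 0.6093 if the totals are truncated to four decimals. The same holds for ATutor, where $0.125 \times 4.350 = 0.54375$ is reported as 0.5437, and for Ilias, where $0.125 \times 4.000 = 0.5$.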

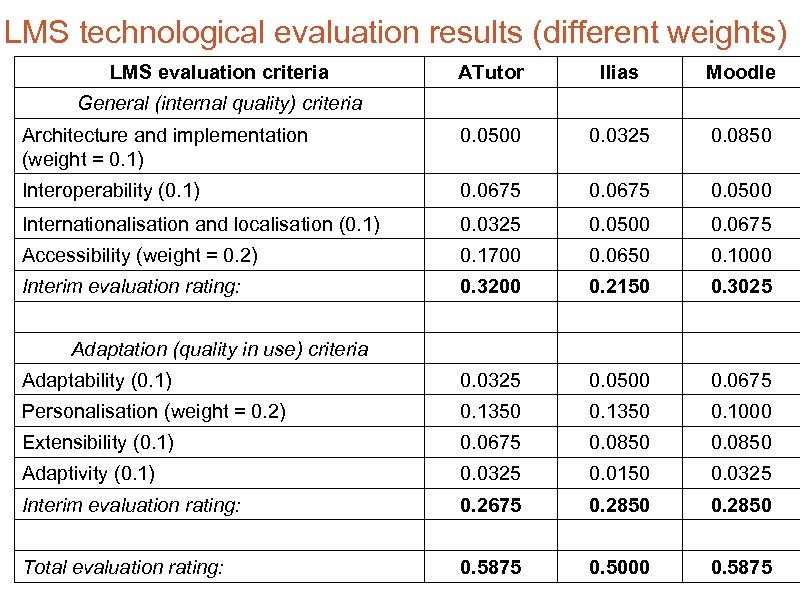

LMS technological evaluation results (different weights)

| LMS evaluation criteria | Weight | ATutor | Ilias | Moodle |
|---|---|---|---|---|
| General (internal quality) criteria | | | | |
| Architecture and implementation | 0.1 | 0.0500 | 0.0325 | 0.0850 |
| Interoperability | 0.1 | 0.0675 | 0.0675 | 0.0500 |
| Internationalisation and localisation | 0.1 | 0.0325 | 0.0500 | 0.0675 |
| Accessibility | 0.2 | 0.1700 | 0.0650 | 0.1000 |
| Interim evaluation rating | | 0.3200 | 0.2150 | 0.3025 |
| Adaptation (quality in use) criteria | | | | |
| Adaptability | 0.1 | 0.0325 | 0.0500 | 0.0675 |
| Personalisation | 0.2 | 0.1350 | 0.1350 | 0.1000 |
| Extensibility | 0.1 | 0.0675 | 0.0850 | 0.0850 |
| Adaptivity | 0.1 | 0.0325 | 0.0150 | 0.0325 |
| Interim evaluation rating | | 0.2675 | 0.2850 | 0.2850 |
| Total evaluation rating | | 0.5875 | 0.5000 | 0.5875 |
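
The totals for both weighting schemes can be reproduced with a short Python sketch. The ratings below are taken from the results slides; where a row shows fewer than three values, the repeated cell is an assumption inferred from the interim sums. The names `RATINGS`, `EQUAL_WEIGHTS`, `DIFFERENT_WEIGHTS` and `total_rating` are hypothetical.

```python
# Criterion order: Architecture and implementation, Interoperability,
# Internationalisation and localisation, Accessibility,
# Adaptability, Personalisation, Extensibility, Adaptivity.
RATINGS = {
    "ATutor": [0.500, 0.675, 0.325, 0.850, 0.325, 0.675, 0.675, 0.325],
    "Ilias":  [0.325, 0.675, 0.500, 0.325, 0.500, 0.675, 0.850, 0.150],
    "Moodle": [0.850, 0.500, 0.675, 0.500, 0.675, 0.500, 0.850, 0.325],
}

EQUAL_WEIGHTS = [0.125] * 8
# Accessibility and Personalisation carry double weight.
DIFFERENT_WEIGHTS = [0.1, 0.1, 0.1, 0.2, 0.1, 0.2, 0.1, 0.1]


def total_rating(ratings, weights):
    """Weighted sum of the ratings of one alternative."""
    return sum(w * r for w, r in zip(weights, ratings))


equal_totals = {lms: total_rating(r, EQUAL_WEIGHTS) for lms, r in RATINGS.items()}
weighted_totals = {lms: total_rating(r, DIFFERENT_WEIGHTS) for lms, r in RATINGS.items()}

for lms in RATINGS:
    print(f"{lms}: equal={equal_totals[lms]:.5f} different={weighted_totals[lms]:.5f}")
```

With equal weights Moodle ranks first. With the different weights, ATutor draws level with Moodle at 0.5875, because the two double-weighted criteria are ones where ATutor scores higher than Moodle.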

Several new initiatives

- SE@M: The 3rd international workshop on Search and Exchange of e-le@rning Materials. Budapest, 4–5 November, 2009. http://www.learningstandards.eu/seam2009/call
- eQNet: Quality Network for a European Learning Resource Exchange. LLP project, 2009–2012

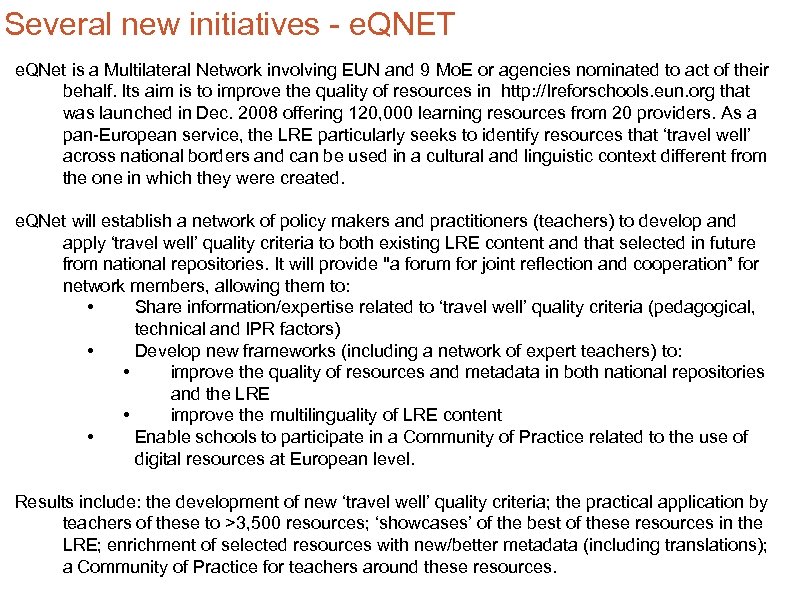

Several new initiatives - eQNet

eQNet is a Multilateral Network involving EUN and 9 Ministries of Education (MoE) or agencies nominated to act on their behalf. Its aim is to improve the quality of resources in the LRE (http://lreforschools.eun.org), which was launched in Dec. 2008 offering 120,000 learning resources from 20 providers. As a pan-European service, the LRE particularly seeks to identify resources that ‘travel well’ across national borders and can be used in a cultural and linguistic context different from the one in which they were created.

eQNet will establish a network of policy makers and practitioners (teachers) to develop and apply ‘travel well’ quality criteria to both existing LRE content and content selected in future from national repositories. It will provide “a forum for joint reflection and cooperation” for network members, allowing them to:

- Share information/expertise related to ‘travel well’ quality criteria (pedagogical, technical and IPR factors)
- Develop new frameworks (including a network of expert teachers) to:
  - improve the quality of resources and metadata in both national repositories and the LRE
  - improve the multilinguality of LRE content
- Enable schools to participate in a Community of Practice related to the use of digital resources at European level

Results include: the development of new ‘travel well’ quality criteria; the practical application of these criteria by teachers to >3,500 resources; ‘showcases’ of the best of these resources in the LRE; enrichment of selected resources with new/better metadata (including translations); a Community of Practice for teachers around these resources.

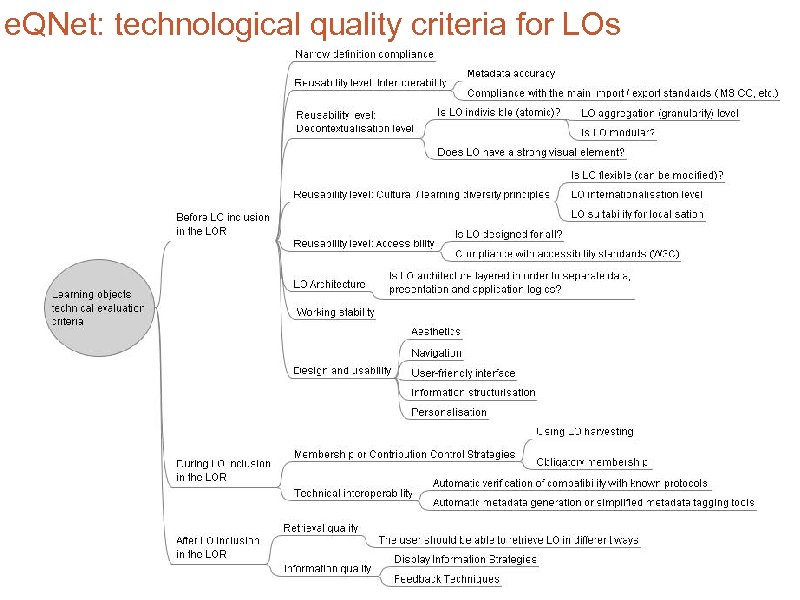

eQNet: technological quality criteria for LOs

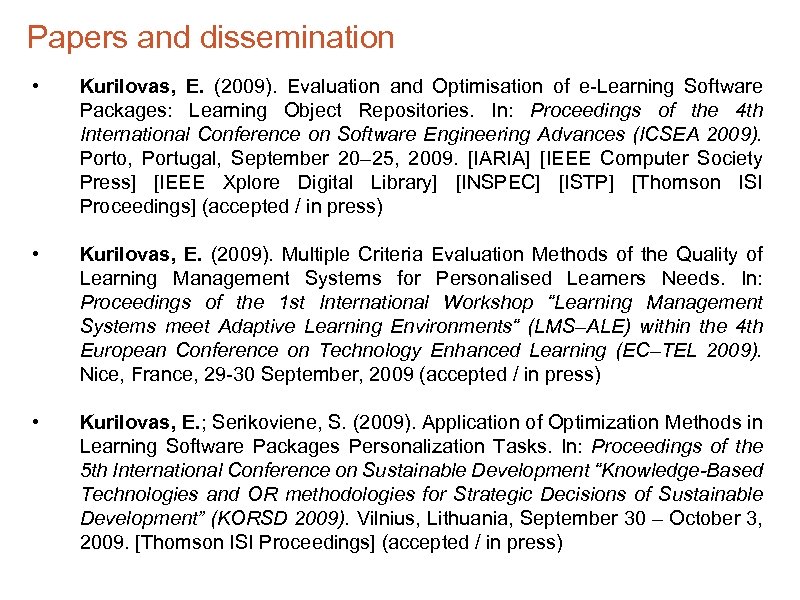

Papers and dissemination

- Kurilovas, E. (2009). Evaluation and Optimisation of e-Learning Software Packages: Learning Object Repositories. In: Proceedings of the 4th International Conference on Software Engineering Advances (ICSEA 2009). Porto, Portugal, September 20–25, 2009. [IARIA] [IEEE Computer Society Press] [IEEE Xplore Digital Library] [INSPEC] [ISTP] [Thomson ISI Proceedings] (accepted / in press)
- Kurilovas, E. (2009). Multiple Criteria Evaluation Methods of the Quality of Learning Management Systems for Personalised Learners Needs. In: Proceedings of the 1st International Workshop “Learning Management Systems meet Adaptive Learning Environments” (LMS–ALE) within the 4th European Conference on Technology Enhanced Learning (EC–TEL 2009). Nice, France, 29–30 September, 2009 (accepted / in press)
- Kurilovas, E.; Serikoviene, S. (2009). Application of Optimization Methods in Learning Software Packages Personalization Tasks. In: Proceedings of the 5th International Conference on Sustainable Development “Knowledge-Based Technologies and OR methodologies for Strategic Decisions of Sustainable Development” (KORSD 2009). Vilnius, Lithuania, September 30 – October 3, 2009. [Thomson ISI Proceedings] (accepted / in press)

Papers and dissemination

- Kurilovas, E. (2009). Interoperability, Standards and Metadata for eLearning. In: G. A. Papadopoulos and C. Badica (Eds.): Intelligent Distributed Computing III, SCI 237, pp. 121–130. Springer-Verlag Berlin Heidelberg 2009. Proceedings of the 3rd International Symposium on Intelligent Distributed Computing (IDC 2009). Agia Napa, Cyprus, October 13–14, 2009 [Thomson ISI Proceedings] (accepted / in press)
- Kurilovas, E.; Dagienė, V. (2009). Quality Evaluation and Optimisation of eLearning System Components. In: Proceedings of the 8th European Conference on e-Learning (ECEL 2009). Bari, Italy, October 29–30, 2009. [ISTP] [ISSHP] [Thomson ISI Proceedings] (accepted / in press)
- Kurilovas, E. (2009). Learning Content Repositories and Learning Management Systems Based on Customization and Metadata. In: Proceedings of the 1st International Conference on Creative Content Technologies (CONTENT 2009). Athens, Greece, November 15–20, 2009. [IARIA] [IEEE Computer Society Press] [IEEE Xplore Digital Library] [INSPEC] [ISTP] [Thomson ISI Proceedings] (accepted / in press)

Thank you for your attention!
Dr. Eugenijus Kurilovas
Eugenijus.Kurilovas@itc.smm.lt
