- Number of slides: 52

LCG: LHC Data and Computing Grid Project. Grid@Large workshop, Lisboa, 29 August 2005. Kors Bos, NIKHEF, Amsterdam

Creation of matter? Hubble Telescope: in the Eagle Nebula, 7000 ly from Earth in the constellation Serpens. Newborn stars emerging from compact pockets of dense stellar gas.

Remark 1: You need a real problem to be able to build an operational grid. Building a generic grid infrastructure without a killer application, for which it is vital to have a working grid, is much more difficult.

The Large Hadron Collider
- 27 km circumference
- 1296 superconducting dipoles
- 2500 other magnets
- 2 proton beams of 7000 GeV each
- 4 beam crossing points (experiments)
- 2835 proton bunches per beam
- 1.1 × 10^11 protons per bunch

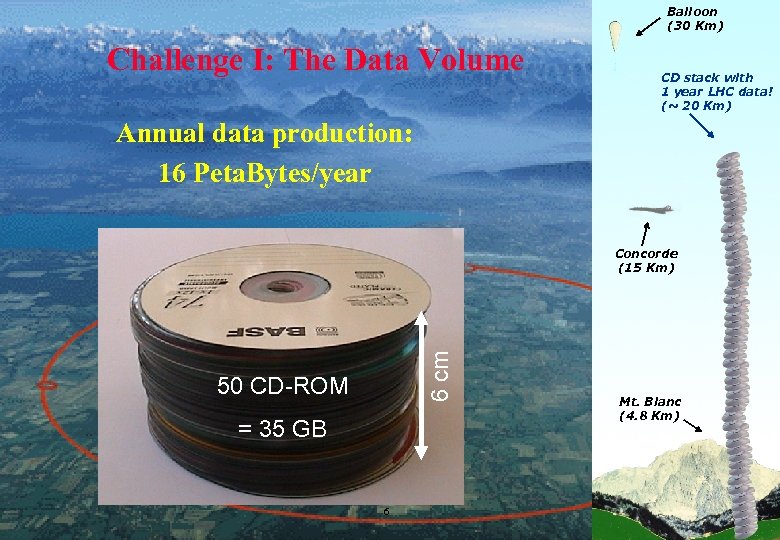

The Data
- Records data at 200 Hz, event size = 1 MB → 200 MB/sec
- 1 operational year = 10^7 seconds → 2 PByte/year raw data
- Simulated data + derived data + calibration data → 4 PByte/year
- There are 4 experiments → 16 PByte/year
- For at least 10 years
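
The arithmetic behind these figures can be checked in a few lines. The following is only a back-of-the-envelope sketch: it assumes decimal units (1 PB = 10^9 MB), and the function names are illustrative rather than part of any LCG tool.

```python
# Back-of-the-envelope check of the data volumes quoted on the slide.
EVENT_RATE_HZ = 200        # events recorded per second
EVENT_SIZE_MB = 1          # size of one event in MB
SECONDS_PER_YEAR = 10**7   # one operational year, as quoted on the slide
N_EXPERIMENTS = 4
MB_PER_PB = 10**9          # assumption: decimal units


def raw_rate_mb_per_s():
    """Raw recording rate of one experiment in MB/s."""
    return EVENT_RATE_HZ * EVENT_SIZE_MB


def raw_pb_per_year():
    """Raw data volume of one experiment in PB per operational year."""
    return raw_rate_mb_per_s() * SECONDS_PER_YEAR / MB_PER_PB


def total_pb_per_year(per_experiment_pb=4):
    """All experiments together; 4 PB/year per experiment is the slide's
    figure for raw + simulated + derived + calibration data."""
    return per_experiment_pb * N_EXPERIMENTS


if __name__ == "__main__":
    print(raw_rate_mb_per_s(), "MB/s raw per experiment")
    print(raw_pb_per_year(), "PB/year raw per experiment")
    print(total_pb_per_year(), "PB/year for all experiments")
```

Over the planned 10 years of running, the same numbers add up to about 160 PB.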

Challenge I: The Data Volume
- Annual data production: 16 PetaBytes/year
- CD stack with 1 year of LHC data: ~20 km (50 CD-ROMs = 35 GB = 6 cm)
- For scale: balloon (30 km), Concorde (15 km), Mt. Blanc (4.8 km)

Remark 2: In data-intensive sciences like particle physics, data management is challenge #1, much more so than the computing needs. Event reconstruction is an embarrassingly parallel problem. The challenge is to get the data to and from the CPUs. Keeping track of all versions of the reconstructed data and their location is another.

But … Data Analysis is our Challenge II
- One event has many tracks
- Reconstruction takes ~1 MSI2k/ev. = 10 minutes on a 3 GHz P4
- 100,000 CPUs needed to keep up with the data rate
- Re-reconstructed many times
- 10% of all data also has to be simulated
- Simulation takes ~100 times longer
- Subsequent analysis steps over all data
- BUT … the problem is embarrassingly parallel!
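
A rough cross-check of the CPU estimate, as a sketch only. It assumes that the 200 Hz recording rate from the data slide applies here and that one event occupies one CPU for the quoted 10 minutes; the function name is illustrative. Under these assumptions the result is 120,000 CPUs, the same order of magnitude as the ~100,000 quoted above.

```python
def cpus_to_keep_up(event_rate_hz, seconds_per_event):
    """CPUs needed so that reconstruction keeps pace with data taking.

    One CPU finishes one event every `seconds_per_event` seconds, so
    absorbing `event_rate_hz` new events per second needs rate * time CPUs.
    """
    return event_rate_hz * seconds_per_event


EVENT_RATE_HZ = 200               # assumption: rate taken from the data slide
RECO_SECONDS_PER_EVENT = 10 * 60  # ~10 minutes on a 3 GHz P4

if __name__ == "__main__":
    print(cpus_to_keep_up(EVENT_RATE_HZ, RECO_SECONDS_PER_EVENT))
```

Because every event is independent, the work can be split over that many loosely coupled CPUs without any communication between them.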

Remark 3: Grids offer a cost-effective solution for codes that can run in parallel. For many problems, codes have not been written with parallel architectures in mind. Many applications that currently run on supercomputers (because they are there) could be executed more cost-effectively on a grid of loosely coupled CPUs.

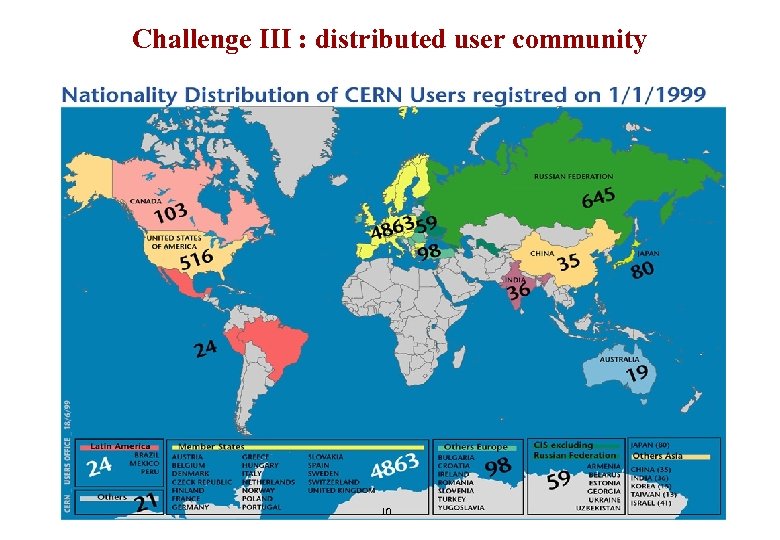

Challenge III: distributed user community

Remark 4: Grids fit large international scientific collaborations almost perfectly. Conversely, if you don’t already have an international collaboration, it is difficult to get grid resources outside your own country, as an economic model is not in place yet.

Data Handling and Computation for Physics Analysis (diagram, credit: les.robertson@cern.ch). Labels: detector; event filter (selection & reconstruction); raw data; reconstruction; event summary data; event reprocessing; event simulation; processed data; batch physics analysis; analysis objects (extracted by physics topic); interactive physics analysis.

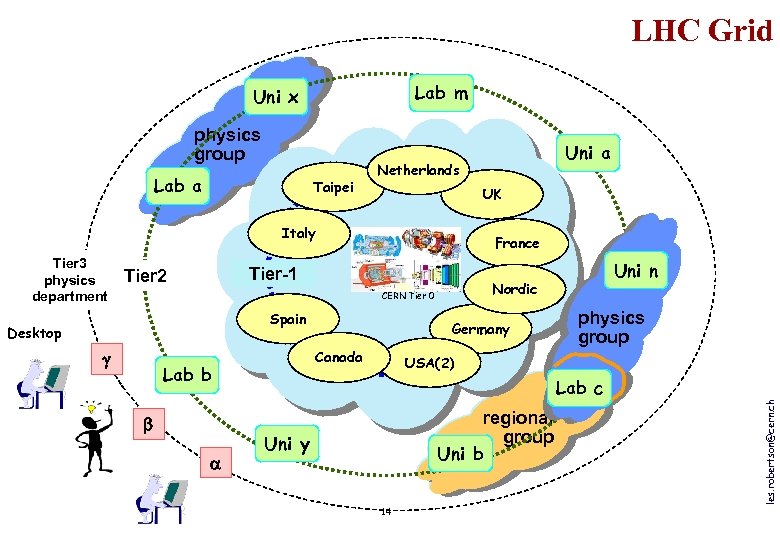

LCG Service Hierarchy
- Tier-0: the accelerator centre
  - Data acquisition & initial processing
  - Long-term data curation
  - Distribution of data to the Tier-1 centres
- Tier-1: “online” to the data acquisition process, hence high availability
  - Managed Mass Storage: grid-enabled data service
  - Data-heavy analysis
  - National, regional support
  - Centres: Canada, TRIUMF (Vancouver); France, IN2P3 (Lyon); Germany, Forschungszentrum Karlsruhe; Italy, CNAF (Bologna); Netherlands, NIKHEF/SARA (Amsterdam); Nordic countries, distributed Tier-1; Spain, PIC (Barcelona); Taiwan, Academia Sinica (Taipei); UK, CLRC (Oxford); US, FermiLab (Illinois) and Brookhaven (NY)
- Tier-2: ~100 centres in ~40 countries
  - Simulation
  - End-user analysis, batch and interactive

LHC Grid (diagram, credit: les.robertson@cern.ch). Labels: CERN Tier 0; Tier-1 (Canada, France, Germany, Italy, Netherlands, Nordic, Spain, Taipei, UK, USA (2)); Tier 2 (Lab a, Lab b, Lab c, Lab m, Uni a, Uni b, Uni n, Uni x, Uni y); Tier 3; physics department; physics group; regional group; desktop.

The OPN Network
- Optical Private Network of permanent 10 Gbit/sec light paths between the Tier-0 (CERN) and each of the Tier-1s
- Tier-1 to Tier-1: preferably also dedicated light paths (cross-border fibres), but not strictly part of the OPN
- Additional (separate) fibres for Tier-0 to Tier-1 backup connections
- Security considerations: ACLs, because of the limited number of applications and thus ports. Primarily a site issue.
- In Europe, GEANT2 will deliver the desired connectivity; in the US, a combination of LHCNet and ESNet; in Asia, ASNet
- Proposal to be drafted for a Network Operations Centre

The Network for the LHC

Remark 5: If data flows are known to be frequent (always) between the same end-points, it is best to purchase your own wavelengths between those points. A routed network would be more expensive and much harder to maintain. And why have a router when the source and destination addresses never change?

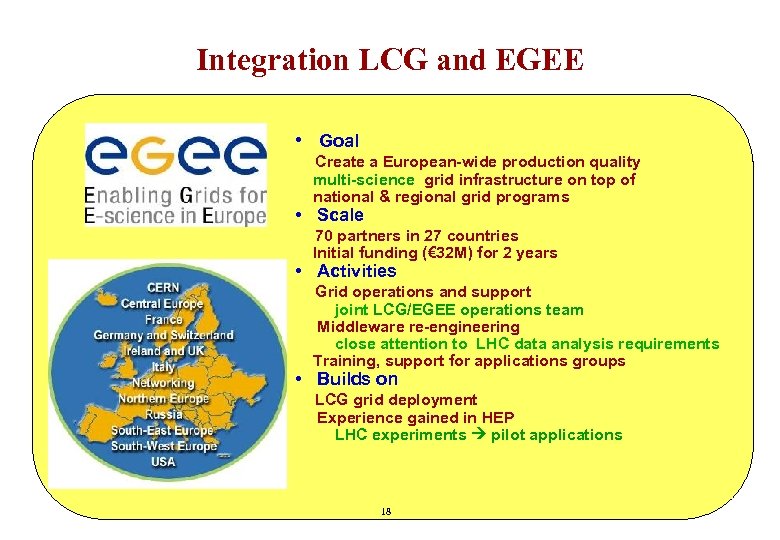

Integration LCG and EGEE
- Goal: create a Europe-wide, production-quality, multi-science grid infrastructure on top of national & regional grid programs
- Scale: 70 partners in 27 countries; initial funding (€32 M) for 2 years
- Activities:
  - Grid operations and support: joint LCG/EGEE operations team
  - Middleware re-engineering: close attention to LHC data analysis requirements
  - Training, support for applications groups
- Builds on:
  - LCG grid deployment
  - Experience gained in HEP
  - LHC experiments as pilot applications

Inter-operation with the Open Science Grid in the US and with NorduGrid
- Very early days for standards: still getting basic experience
- Focus on baseline services to meet specific experiment requirements
- Figure labels: 30 sites, 3200 CPUs; 25 universities, 4 national labs, 2800 CPUs; Grid3; July 2005: 150 grid sites, 34 countries, 15,000 CPUs, 8 PetaBytes

Remark 6: There is no one grid, so we will have to concentrate on interoperability and insist on standards. We are still in the early days for standards, as only now are working grids emerging, so that multiple implementations can be tested to work together.

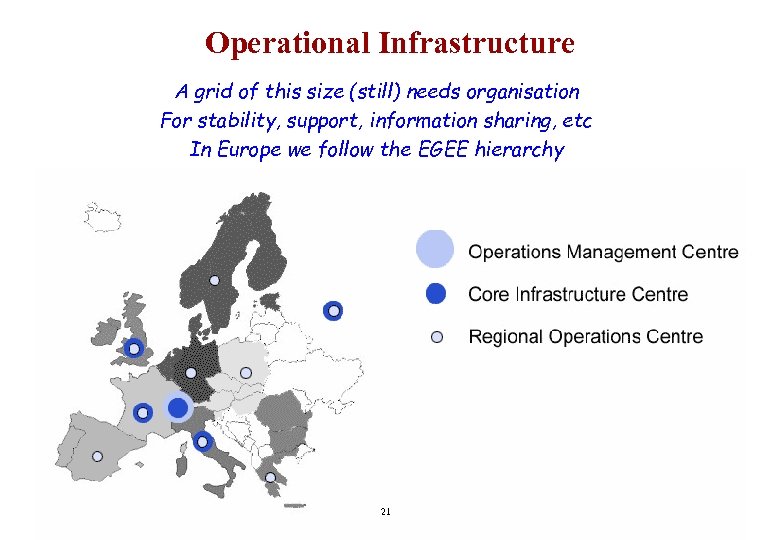

Operational Infrastructure
- A grid of this size (still) needs organisation
- For stability, support, information sharing, etc.
- In Europe we follow the EGEE hierarchy

Remark 7: Although in contradiction with the definition of a pure grid, the infrastructure needs to be well managed and tightly controlled (at least initially).

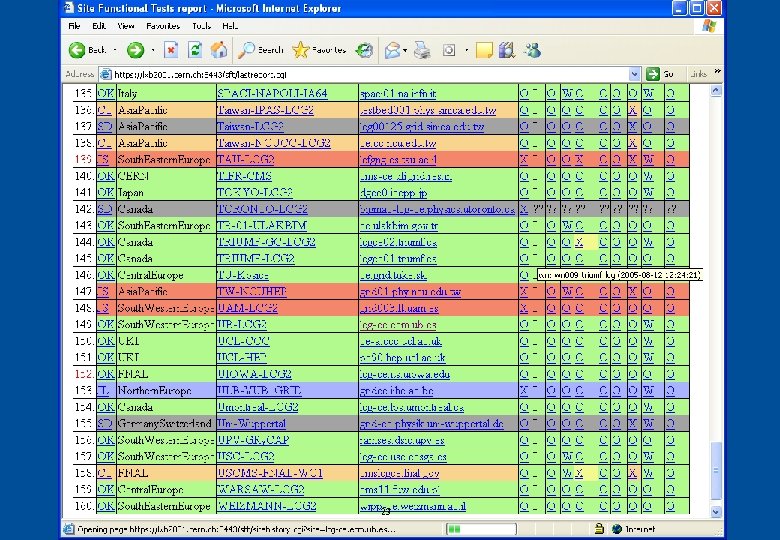

Site Functional Tests
Internal testing of the site
- A job with an ensemble of tests is submitted to each site (CE) once a day
- This job runs on one CPU for about 10 minutes
- Data is collected at the Operations Management Centre (CERN)
- The data is made publicly available through web pages and in a database
- These tests look at the site from the inside, to check the environment a job finds when it arrives there
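
The idea of a test job that inspects a site "from the inside" can be sketched as follows. This is not the actual SFT suite: the individual checks (an environment variable, a command on the PATH, a writable scratch area) and all names are hypothetical stand-ins for whatever a real test job would verify.

```python
import os
import shutil
import tempfile


def check_env_var(name):
    """True if the environment variable is defined on the worker node."""
    return name in os.environ


def check_command(cmd):
    """True if the command can be found on the PATH."""
    return shutil.which(cmd) is not None


def check_scratch_writable():
    """True if a temporary file can be created and written."""
    try:
        with tempfile.NamedTemporaryFile() as handle:
            handle.write(b"sft")
        return True
    except OSError:
        return False


# Hypothetical ensemble of tests; a real suite would be grid specific.
DEFAULT_TESTS = {
    "PATH is set": lambda: check_env_var("PATH"),
    "python on PATH": lambda: check_command("python3") or check_command("python"),
    "scratch space writable": check_scratch_writable,
}


def run_site_tests(tests):
    """Run every test; a test that raises counts as a failure."""
    results = {}
    for name, test in tests.items():
        try:
            results[name] = bool(test())
        except Exception:
            results[name] = False
    return results


def site_ok(results):
    """A site passes only if every single test passed."""
    return all(results.values())


if __name__ == "__main__":
    report = run_site_tests(DEFAULT_TESTS)
    for name, passed in report.items():
        print(("PASS" if passed else "FAIL"), name)
```

The per-test results, rather than a single pass/fail flag, are what would be shipped back to a central collection point for publication.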

Remark 8: You need a good procedure to exclude malfunctioning sites from a grid. One malfunctioning site can destroy a whole well-functioning grid, e.g. through the “black hole effect”, where a site that fails jobs quickly always looks free and so keeps attracting more jobs.

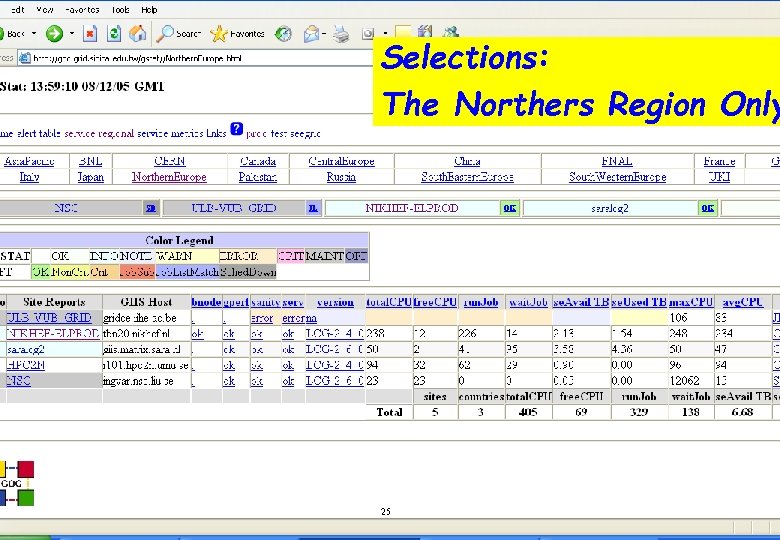

Grid Status (GStat) Tests
External monitoring of the sites
- BDII and Site BDII monitoring
  - BDII: # of sites found
  - Site BDII: # of LDAP objects
  - Old entries indicate the BDII is not being updated
  - Query response time: indication of network and server loading
- GIIS monitoring
  - Collect GlueServiceURI objects from each site BDII:
    - GlueServiceType: the type of service provided
    - GlueServiceAccessPointURL: how to reach the service
    - GlueServiceAccessControlRule: who can access this service
  - Organized services:
    - “ALL” by service type
    - “VO_name” by VOs
- Certificate lifetimes for CEs and SEs
- Job submission monitoring
- Screenshot: GStat site table (selections; table format; only the Northern Region listed)
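
Organising the collected service records "by service type" and "by VO" amounts to a simple grouping. The sketch below assumes the records have already been fetched and are available as plain dictionaries keyed by the Glue attribute names listed above; the sample entries, URLs and service type values are invented for illustration, and no LDAP query is performed.

```python
from collections import defaultdict


def group_services(entries):
    """Group service access points by service type and by VO.

    Each entry is a dict with GlueServiceType, GlueServiceAccessPointURL
    and a list of VO names under GlueServiceAccessControlRule.
    """
    by_type = defaultdict(list)
    by_vo = defaultdict(list)
    for entry in entries:
        url = entry["GlueServiceAccessPointURL"]
        by_type[entry["GlueServiceType"]].append(url)
        for vo in entry.get("GlueServiceAccessControlRule", []):
            by_vo[vo].append(url)
    return dict(by_type), dict(by_vo)


# Hypothetical sample records, for illustration only.
SAMPLE_ENTRIES = [
    {
        "GlueServiceType": "srm",
        "GlueServiceAccessPointURL": "httpg://se1.example.org:8443/srm",
        "GlueServiceAccessControlRule": ["atlas", "cms"],
    },
    {
        "GlueServiceType": "srm",
        "GlueServiceAccessPointURL": "httpg://se2.example.org:8443/srm",
        "GlueServiceAccessControlRule": ["atlas"],
    },
    {
        "GlueServiceType": "rb",
        "GlueServiceAccessPointURL": "rb.example.org:7772",
        "GlueServiceAccessControlRule": ["cms"],
    },
]

if __name__ == "__main__":
    by_type, by_vo = group_services(SAMPLE_ENTRIES)
    print(by_type)
    print(by_vo)
```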

Remark 9: You need to monitor how the grid as a whole is functioning: the number of sites, the number of CPUs, the number of free CPUs. Is one site never getting any jobs, or is one site getting all the jobs?
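
The two questions in this remark can be answered from a plain list of where jobs ended up. The sketch below is a minimal illustration, not an existing monitoring tool: the function names and the 50% threshold for "getting all the jobs" are assumptions.

```python
from collections import Counter


def job_shares(job_sites, all_sites):
    """Fraction of all submitted jobs that landed on each known site."""
    counts = Counter(job_sites)
    total = sum(counts.values())
    return {site: (counts[site] / total if total else 0.0) for site in all_sites}


def flag_sites(shares, hog_threshold=0.5):
    """Return (starved, hogs): sites with no jobs at all, and sites
    receiving more than `hog_threshold` of all jobs."""
    starved = sorted(s for s, share in shares.items() if share == 0.0)
    hogs = sorted(s for s, share in shares.items() if share > hog_threshold)
    return starved, hogs


if __name__ == "__main__":
    sites = ["site-A", "site-B", "site-C"]           # hypothetical site names
    jobs = ["site-A"] * 8 + ["site-B"] * 2           # where 10 jobs were sent
    print(flag_sites(job_shares(jobs, sites)))
```

A site flagged as receiving most of the jobs is worth checking for the black hole effect: combined with a high failure rate, it is a candidate for exclusion.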

Grid Operations Monitoring: CIC on Duty
- Core Infrastructure Centres: CERN, CNAF, IN2P3, RAL, FZK, Univ. Indiana, Taipei
- Tools:
  - CoD Dashboard
  - Monitoring tools and in-depth testing
  - Communication tool
  - Problem Tracking Tool
- 24/7 Rotating Duty
- Diagram: operations hierarchy of OMC, CICs, ROCs and RCs

Remark 10: In spite of all automation, you need a person ‘on duty’ to watch the operations and react if something needs to be done. System administrators are often focused on fabric management and have to be alerted when they need to do some grid management.

CIC-On-Duty (COD) workflow
- One-week duty rotates among CICs: CH, UK, France, Germany, Italy, Russia, but also Univ. of Indiana (US) and ASCC Taipei
- Procedures and tools: ensure coordination, availability, reliability
- Issues:
  - Needs strong link to security groups
  - Needs strong link to user support
  - Monitoring of site responsiveness to problems
  - Scheduling of tests and upgrades
  - Alarms and follow-up
  - When and how to remove a site?
  - When and how to suspend a site?
  - When and how to add sites?
- Diagram labels: interface (CIC Portal); ticketing system (GGUS); mails; monitoring tools (SFT, GStat, …); static data (GOC DB)

Tools 1: CIC Dashboard
- Problem categories
- Sites list (reporting new problems)
- Test summary (SFT, GStat)
- GGUS ticket status

Critique 1: Critique on CoD
- At any time ~40 sites out of 140 have problems
- CIC-on-duty is sometimes unable to open all new tickets
- ~80% of resources are concentrated in the ~20 biggest sites; failures at big sites have a dramatic impact on the availability of resources
- Prioritise sites: big sites before small sites
- Prioritise problems: severe problems before warnings
- Remove problem sites → loss of resources
- Separate compute problems from storage problems
- Storage sites cannot be removed!
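
The two prioritisation rules can be combined into a single sort key. The sketch below is one possible reading, not the procedure actually used by the CIC-on-duty: it assumes severity is compared first and site size (here measured in CPUs) second, and the record fields are hypothetical.

```python
# Lower rank means "handle first". Only the two levels named above are modelled.
SEVERITY_RANK = {"severe": 0, "warning": 1}


def prioritise(problems):
    """Order open problems: severe before warnings, and within the same
    severity, bigger sites (more CPUs) before smaller ones."""
    return sorted(
        problems,
        key=lambda p: (SEVERITY_RANK[p["severity"]], -p["site_cpus"]),
    )


# Hypothetical open problems, for illustration only.
SAMPLE_PROBLEMS = [
    {"site": "small-A", "site_cpus": 20, "severity": "warning"},
    {"site": "big-B", "site_cpus": 2000, "severity": "warning"},
    {"site": "small-C", "site_cpus": 50, "severity": "severe"},
    {"site": "big-D", "site_cpus": 1500, "severity": "severe"},
]

if __name__ == "__main__":
    for problem in prioritise(SAMPLE_PROBLEMS):
        print(problem["severity"], problem["site"])
```

Swapping the two elements of the key would put big sites first regardless of severity; which order serves the grid better is a policy choice.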

Remark 11: Job monitoring is something different from grid monitoring, and equally important. Grid monitoring gives a systems perspective of the grid; job monitoring is what the user wants to see.

User’s Job Monitoring
- The tool is R-GMA; data is kept in a database
- Data can be viewed from anywhere
- A number of R-GMA tables contain information about:
  - Job Status (JobStatusRaw table)
    - Information from the logging and bookkeeping service
    - The status of the job: Queued, Running, Done, etc.
    - Job-Id, State, Owner, BKServer, Start time, etc.
  - Job Monitor (JobMonitor table)
    - The metrics of the running job every 5 minutes
    - A script forked off on the worker node by the job wrapper
    - Job-Id, Local-Id, User-dn, Local-user, VO, RB, WCTime, CPUTime, Real Mem, etc.
  - Grid FTP (GridFTP table)
    - Information about file transfers
    - Host, user-name, src, dest, file_name, throughput, start_time, stop_time, etc.
  - Job Status (User Defined Tables)
    - job-step, event-number, etc.
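
Since the job information lives in relational tables, a user's question such as "what are my jobs doing?" becomes a query. The sketch below uses an in-memory SQLite database purely as a stand-in; it does not talk to R-GMA, and the column names and sample rows are assumptions loosely based on the fields listed above.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory table shaped like a job status table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE JobStatusRaw ("
        "JobId TEXT, State TEXT, Owner TEXT, BKServer TEXT, StartTime TEXT)"
    )
    rows = [  # hypothetical sample data
        ("job-1", "Running", "alice", "bk1.example.org", "2005-08-29T10:00"),
        ("job-2", "Running", "alice", "bk1.example.org", "2005-08-29T10:05"),
        ("job-3", "Done", "alice", "bk1.example.org", "2005-08-29T09:00"),
        ("job-4", "Queued", "bob", "bk2.example.org", "2005-08-29T10:10"),
    ]
    conn.executemany("INSERT INTO JobStatusRaw VALUES (?, ?, ?, ?, ?)", rows)
    return conn


def jobs_by_state(conn, owner):
    """Count one owner's jobs per state."""
    cursor = conn.execute(
        "SELECT State, COUNT(*) FROM JobStatusRaw "
        "WHERE Owner = ? GROUP BY State ORDER BY State",
        (owner,),
    )
    return dict(cursor.fetchall())


if __name__ == "__main__":
    print(jobs_by_state(build_demo_db(), "alice"))
```

Wrapping common queries in small functions like this is one way to spare users from writing SQL by hand.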

Critique 1: Critique on User’s Job Monitoring
- Querying a database is not particularly user-friendly
- Building and populating user-defined tables is not straightforward
- We have tried to solve the problem with R-GMA, but there are other solutions

User Support
- Covers: Helpdesk, User Information, Training, Problem Tracking, Problem Documentation, Support Staff Information, Metrics, ...
- Tools for problem submission and tracking, for access to the knowledge base and FAQs, for status information and contacts, ...
- Websites with documentation, information, how-tos, training, support staff, ...
- User questions:
  - How do I get started?
  - Where do I go for help?
  - What are the procedures for certificates, CAs, VOs?
  - Are there examples, templates?
  - Where is my job?
  - Where is the data?
  - Why did my job fail?
  - I don’t understand the error!
  - It says certificate expired, but I think it is not?
  - It says I’m not in the VO, but I am
  - etc.

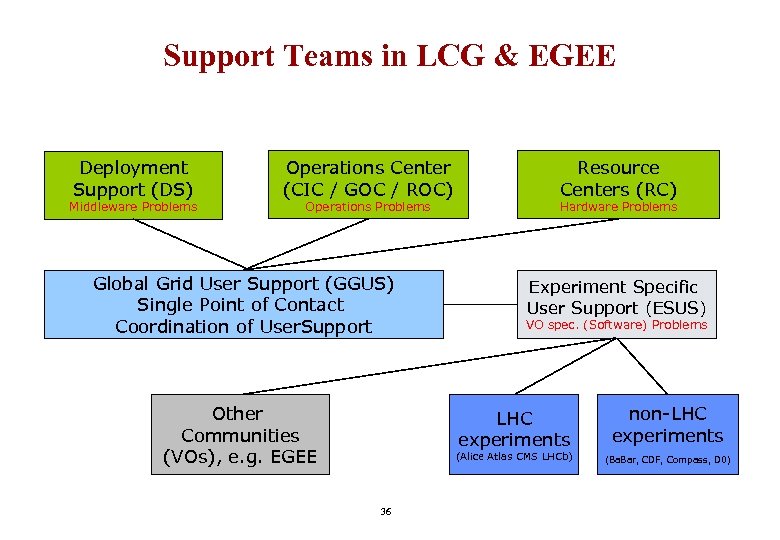

Support Teams in LCG & EGEE
- Global Grid User Support (GGUS): single point of contact, coordination of user support
- Deployment Support (DS): middleware problems
- Operations Center (CIC / GOC / ROC): operations problems
- Resource Centers (RC): hardware problems
- Experiment Specific User Support (ESUS): VO-specific (software) problems
- Communities served: LHC experiments (ALICE, ATLAS, CMS, LHCb); non-LHC experiments (BaBar, CDF, COMPASS, D0); other communities (VOs), e.g. EGEE

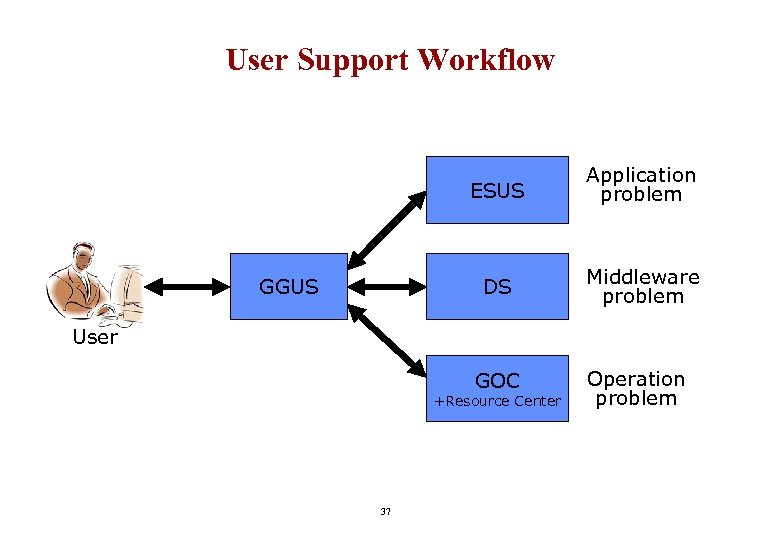

User Support Workflow (diagram). Labels: User; GGUS; application problem, ESUS; middleware problem, DS; operation problem, GOC + Resource Center.

The User’s View
- At present many channels are used:
  - EIS contact people: support-eis@cern.ch
  - LCG Rollout list: LCG-ROLLOUT@LISTSERV.RL.AC.UK
  - Experiment specific: atlas-lcg@cen.ch
  - GGUS: http://www.ggus.org
  - Grid.it
  - http://www.grid-support.ac.uk/
- Not a real agreed procedure
- GGUS provides a useful portal and problem tracking tools; however, requests are forwarded, information is spread, etc.

Critique 3: User’s Support Critique
- GGUS doesn’t work as we expected, but maybe the scope was too ambitious: Global Grid User Support
- The setup was made too much top-down rather than starting from the user. A user wants to talk to her local support people, and we should have started from there. Should a user know about GGUS?
- Anything that can be done locally should be done locally; if that is not possible, it should be taken one level up, e.g. to the ROC
- GGUS was too formal and set up in too much isolation. (Sysadmins got tickets to resolve without even having heard about GGUS.)
- The new service takes account of these points and is getting better:
  - more adapted procedures
  - integration of a simpler ticketing system
  - improved application-specific (VO) support
  - better integration with the EGEE hierarchy and the US system

Critique 4: Do not organise globally what can be done locally. In the Grid community there is a tendency to do everything globally. However, some things are better done locally, like user support, training, installation tools, fabric monitoring, accounting, etc.

Critique 5: Software Releases Critique
- A heavy process, not well adapted to the many bugs found in early stages
- Becoming more and more dependent on other packages
- Hard to coordinate upgrades with ongoing production work
- Difficult to make >100 sites upgrade all at once: local restrictions
- Difficult to account for local differences: operating systems, patches, security
- Conscious decision not to prescribe an installation tool: the default is just RPMs, but there is also YAIM; others use Quattor and yet other tools

Operational security
We now have (written and agreed) procedures for:
- Installation and Resolution of Certification Authorities
- Release and withdrawal of certificates
- Joining and leaving the service
- Adding and removing Virtual Organisations
- Adding and removing users
- Acceptable Use Policies: Taipei accord
- Risk assessment
- Vulnerability
- Incident response

Legal issues need to be addressed more urgently.

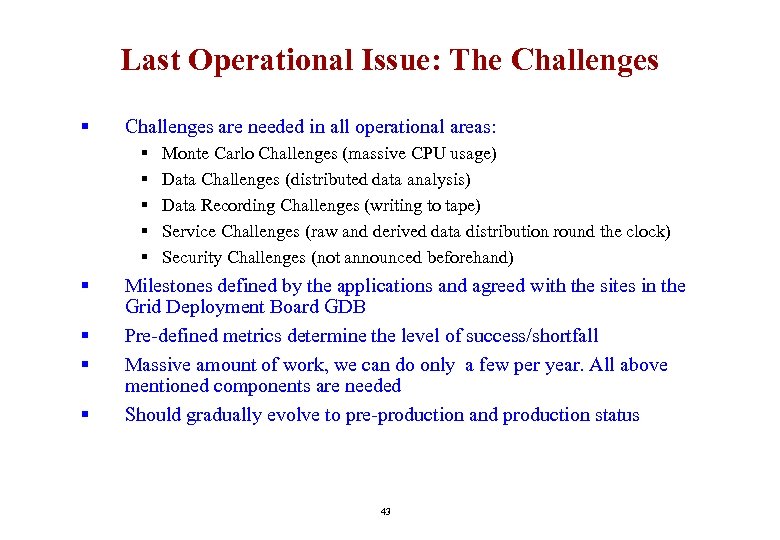

Last Operational Issue: The Challenges
- Challenges are needed in all operational areas:
  - Monte Carlo Challenges (massive CPU usage)
  - Data Challenges (distributed data analysis)
  - Data Recording Challenges (writing to tape)
  - Service Challenges (raw and derived data distribution round the clock)
  - Security Challenges (not announced beforehand)
- Milestones defined by the applications and agreed with the sites in the Grid Deployment Board (GDB)
- Pre-defined metrics determine the level of success/shortfall
- Massive amount of work; we can do only a few per year
- All above-mentioned components are needed
- Should gradually evolve to pre-production and production status

LCG Data Recording Challenge
Tape server throughput, 1-8 March 2005
- Simulated data acquisition system to tape at CERN
- In collaboration with ALICE, as part of their 450 MB/sec data challenge
- Target: one week sustained at 450 MB/sec; achieved 8 March
- Succeeded!

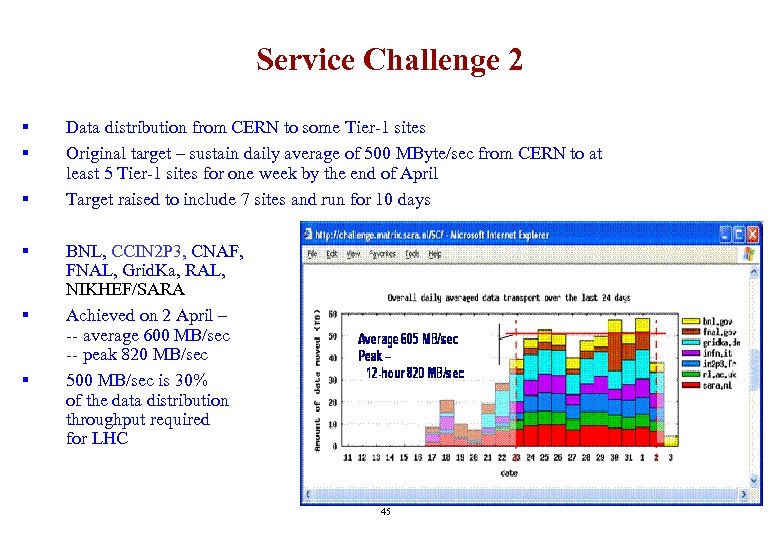

Service Challenge 2
- Data distribution from CERN to some Tier-1 sites
- Original target: sustain a daily average of 500 MByte/sec from CERN to at least 5 Tier-1 sites for one week by the end of April
- Target raised to include 7 sites and run for 10 days: BNL, CCIN2P3, CNAF, FNAL, GridKa, RAL, NIKHEF/SARA
- Achieved on 2 April: average 600 MB/sec, peak 820 MB/sec
- 500 MB/sec is 30% of the data distribution throughput required for LHC
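
Two numbers follow directly from these figures: the full throughput implied by "500 MB/sec is 30%", and the volume moved if the 600 MB/sec average held over all 10 days. The sketch below is a plain calculation under that second assumption, using decimal units (1 TB = 10^6 MB); the function names are illustrative.

```python
SECONDS_PER_DAY = 86400
MB_PER_TB = 10**6  # assumption: decimal units


def required_rate_mb_s(reference_rate_mb_s=500, fraction_of_required=0.30):
    """Full rate implied when `reference_rate_mb_s` is the given fraction of it."""
    return reference_rate_mb_s / fraction_of_required


def volume_tb(rate_mb_s, days):
    """Data volume in TB moved at a sustained average rate over `days` days."""
    return rate_mb_s * SECONDS_PER_DAY * days / MB_PER_TB


if __name__ == "__main__":
    print(round(required_rate_mb_s()), "MB/s required for LHC")
    print(volume_tb(600, 10), "TB moved in 10 days at 600 MB/s")
```

The implied requirement is roughly 1.7 GB/sec, and ten days at the achieved average correspond to about half a petabyte.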

Monte Carlo Challenges
Recent ATLAS work
- ~10,000 concurrent jobs in the system
- ATLAS jobs in EGEE/LCG-2 in 2005 (chart: number of jobs/day)
- In latest period up to 8K jobs/day
- Several times the current capacity for ATLAS at CERN alone: shows the reality of the grid solution

Service Challenge 3 (ongoing)
- Target: > 1 GByte/sec of data out of CERN, disk-to-disk
- With ~150 MByte/s to individual sites
- And ~40 MByte/s to tape at some Tier-1 sites
- Sustained for 2 weeks
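
These targets can be cross-checked with simple arithmetic. The sketch below assumes decimal units (1 GByte = 1000 MByte), treats the approximate figures as exact, and uses exactly 1 GByte/sec as a lower bound for the "> 1 GByte/sec" target; all names are illustrative.

```python
import math

SECONDS_PER_DAY = 86_400
TARGET_TOTAL_MB_PER_S = 1000   # lower bound of the "> 1 GByte/sec" target
PER_SITE_MB_PER_S = 150        # approximate disk-to-disk rate per site
TAPE_MB_PER_S = 40             # approximate tape rate at a Tier-1 site
DAYS = 14

# Sites that must receive data in parallel at the per-site rate.
sites_needed = math.ceil(TARGET_TOTAL_MB_PER_S / PER_SITE_MB_PER_S)

# Minimum data volume leaving CERN over the two weeks, in PB.
total_volume_pb = TARGET_TOTAL_MB_PER_S * 1e6 * DAYS * SECONDS_PER_DAY / 1e15

# Volume written to tape at one Tier-1 site over the same period, in TB.
tape_volume_tb = TAPE_MB_PER_S * 1e6 * DAYS * SECONDS_PER_DAY / 1e12

print(sites_needed, round(total_volume_pb, 2), round(tape_volume_tb, 1))
```

With these assumptions, at least 7 sites have to run at the per-site rate simultaneously, at least about 1.2 PB leaves CERN, and each tape-writing site stores roughly 48 TB.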

Remark 12
Challenges are paramount on the way to an operational grid. Grid Service Challenges as well as Application Challenges are needed for testing as well as for planning purposes.

Ramping up to the LHC Grid Service
580 days left before LHC turn-on
- The grid services for LHC will be ramped up through two Service Challenges: SC3 this year and SC4 next year
- These will include the Tier-0, the Tier-1s and the major Tier-2s
- Each Service Challenge includes:
  - a set-up period
    - check out the infrastructure/service to iron out the problems before the experiments get fully involved
    - schedule allows time to provide permanent fixes for problems encountered
  - a throughput test
  - followed by a long stable period for the applications to check out their computing model and software chain
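
The countdown can be turned into a calendar date. The sketch below assumes the 580 days are counted from the date of the talk (29 August 2005, taken from the title slide); the slide itself does not state the reference date.

```python
from datetime import date, timedelta

TALK_DATE = date(2005, 8, 29)  # assumption: countdown starts on the day of the talk
DAYS_LEFT = 580

turn_on = TALK_DATE + timedelta(days=DAYS_LEFT)
print(turn_on.isoformat())  # 2007-04-01
```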

Grid Services Achieved
- We have an operational grid running at ~10,000 concurrent jobs in the system
- We have more sites and processors than we anticipated at this stage: ~140 sites, ~12,000 processors
- The number of sites is close to that needed for the full LHC grid, but only at a few % of the full capacity
- Grid operations: shared responsibility between operations centres
- We have successfully completed a series of Challenges
- 34 countries working together in a consensus-based organisation

Further Improvements Needed
Critique 6
- Reliability is still a major issue: a focus for work this year
- Not enough experience with data management yet: more Challenges needed
- Middleware evolution: aim for a solid basic functionality by end 2005
- Support improvement in all areas
- Security procedures not well tested yet
- Legal issues become pressing
- No economic model yet: accounting, resource sharing, budgets, etc.

LCG The End Kors Bos NIKHEF, Amsterdam
