- Number of slides: 57

Lazy Systematic Unit Testing for Java

Anthony J H Simons, Christopher D Thomson

Overview

- Lazy Systematic Unit Testing
  - testing concepts and methodology
- The JWalk Tester tool
  - flagship of the JWalk 1.0 toolset
- Dynamic analysis and pruning
  - smart interactive generation and evaluation
- Oracle building and test prediction
  - building a test oracle with minimal user interaction
- Head-to-head evaluation
  - a testing contest: JWalk versus JUnit

http://www.dcs.shef.ac.uk/~ajhs/jwalk/

Motivation

- State of the art in agile testing
  - Test-driven development is good, but…
  - …no specification to inform the selection of tests
  - …manual test-sets are fallible (missing, redundant cases)
  - Can we do better in test-case selection?
- Regression testing: a touchstone?
  - No specifications in XP, so use saved tests instead, which become guarantors of correct behaviour
  - Article of faith: passing saved tests guarantees no faults introduced in the modified unit
  - Actually no, state partitions cause geometric decrease in effective state coverage (Simons, 2005)

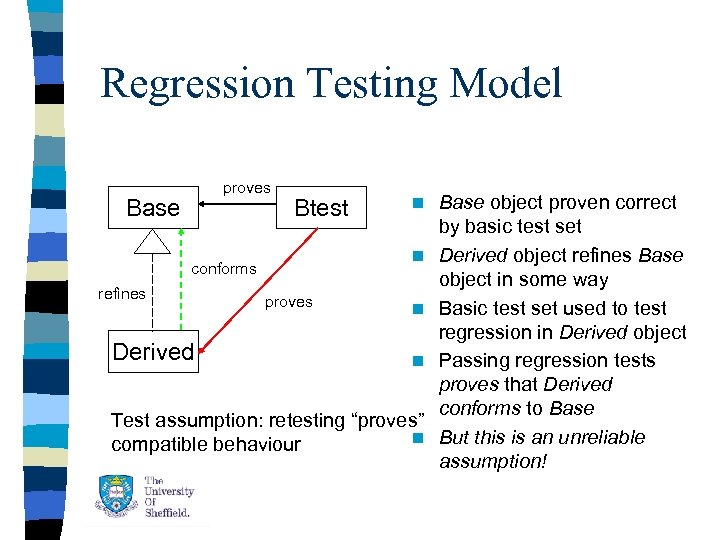

Regression Testing Model

Diagram: Btest proves Base; Derived refines Base; Btest “proves” Derived; Derived conforms to Base. Test assumption: retesting “proves” compatible behaviour.

- Base object proven correct by basic test set
- Derived object refines Base object in some way
- Basic test set used to test regression in Derived object
- Passing regression tests proves that Derived conforms to Base
- But this is an unreliable assumption!

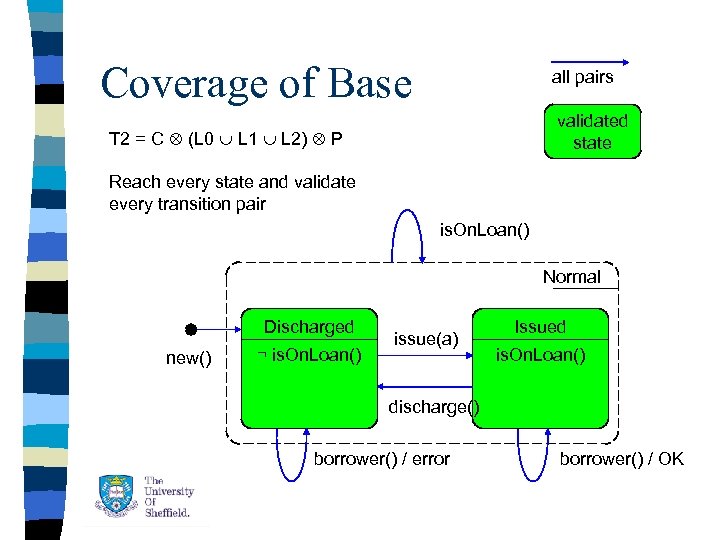

Coverage of Base

- Test set: T2 = C (L0 L1 L2) P
- Reach every state and validate every transition pair

State diagram labels: Normal, Discharged, Issued; predicates isOnLoan(), ¬isOnLoan(); transitions new(), issue(a), discharge(); borrower() / error, borrower() / OK. Key: state; all pairs validated.

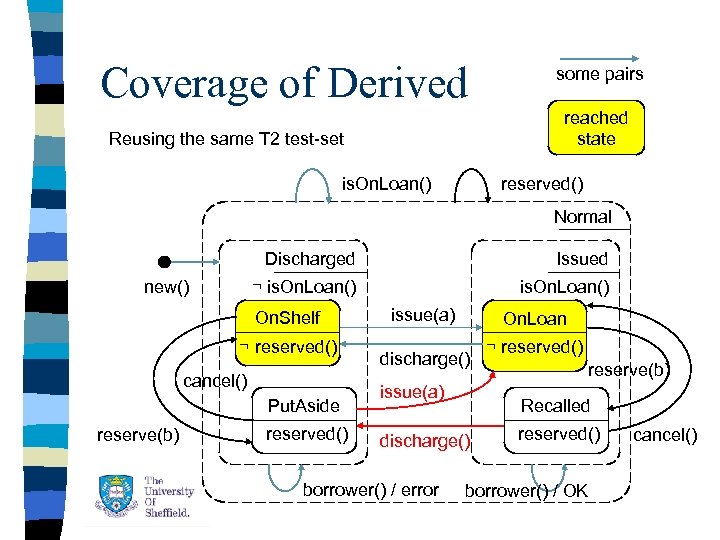

Coverage of Derived

- Reusing the same T2 test-set

State diagram labels: Normal, Discharged, Issued, OnShelf, PutAside, OnLoan, Recalled; predicates isOnLoan(), ¬isOnLoan(), reserved(), ¬reserved(); transitions new(), issue(a), discharge(), reserve(b), cancel(); borrower() / error, borrower() / OK. Key: state; some pairs reached.

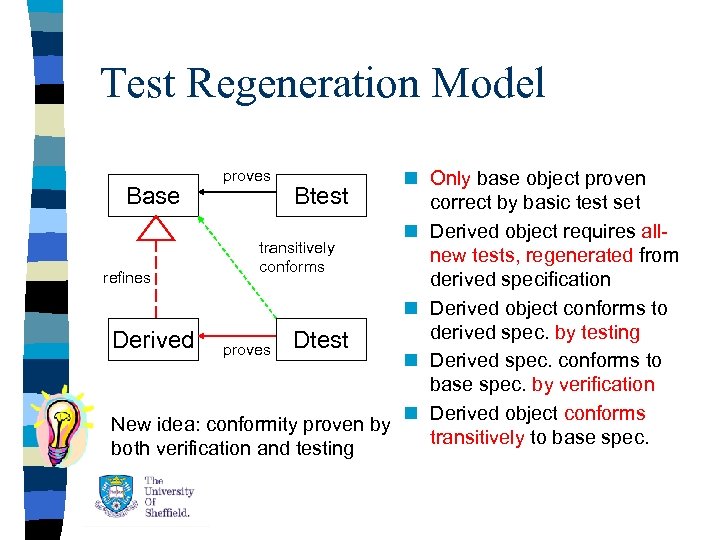

Test Regeneration Model

Diagram: Btest proves Base; Derived refines Base; Dtest proves Derived; Derived transitively conforms to Base. New idea: conformity proven by both verification and testing.

- Only base object proven correct by basic test set
- Derived object requires all-new tests, regenerated from derived specification
- Derived object conforms to derived spec. by testing
- Derived spec. conforms to base spec. by verification
- Derived object conforms transitively to base spec.

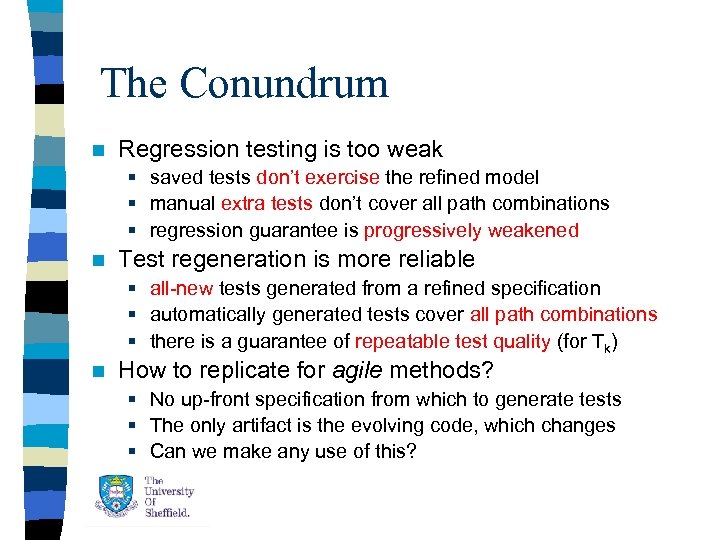

The Conundrum

- Regression testing is too weak
  - saved tests don’t exercise the refined model
  - manual extra tests don’t cover all path combinations
  - regression guarantee is progressively weakened
- Test regeneration is more reliable
  - all-new tests generated from a refined specification
  - automatically generated tests cover all path combinations
  - there is a guarantee of repeatable test quality (for Tk)
- How to replicate for agile methods?
  - No up-front specification from which to generate tests
  - The only artifact is the evolving code, which changes
  - Can we make any use of this?

Lazy Systematic Unit Testing

- Lazy Specification
  - late inference of a specification from evolving code
  - semi-automatic, by static and dynamic analysis of code with limited user interaction
  - specification evolves in step with modified code
- Systematic Testing
  - bounded exhaustive testing, up to the specification
  - emphasis on completeness, conformance, correctness properties after testing, repeatable test quality

http://en.wikipedia.org/wiki/Lazy_systematic_unit_testing

JWalk Tester

- Lazy systematic unit testing for Java
  - static analysis: extracts the public API of a compiled Java class
  - protocol walk (all paths): explores, validates all interleaved methods to a given path depth
  - algebra walk (memory states): explores, validates all observations on all mutator-method sequences
  - state walk (high-level states): explores, validates n-switch transition cover for all high-level states

Try me: http://www.dcs.shef.ac.uk/~ajhs/jwalk/

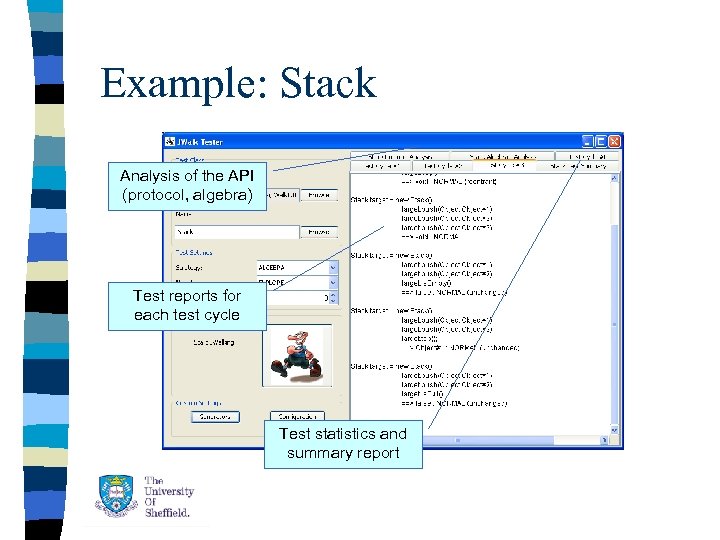

Example: Stack

- Analysis of the API (protocol, algebra)
- Test reports for each test cycle
- Test statistics and summary report

Load the Test Class

- Choose a location
  - the working directory
  - the root of a package is its parent directory
- Choose a test class
  - browse for the test class within a directory
  - browse for a package-qualified class within a package
- Shortcut
  - type the (qualified) test class name directly

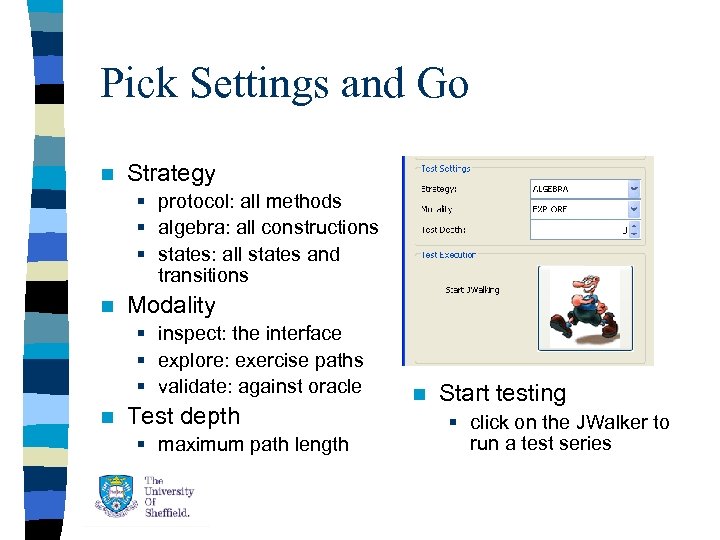

Pick Settings and Go

- Strategy
  - protocol: all methods
  - algebra: all constructions
  - states: all states and transitions
- Modality
  - inspect: the interface
  - explore: exercise paths
  - validate: against oracle
- Test depth
  - maximum path length
- Start testing
  - click on the JWalker to run a test series

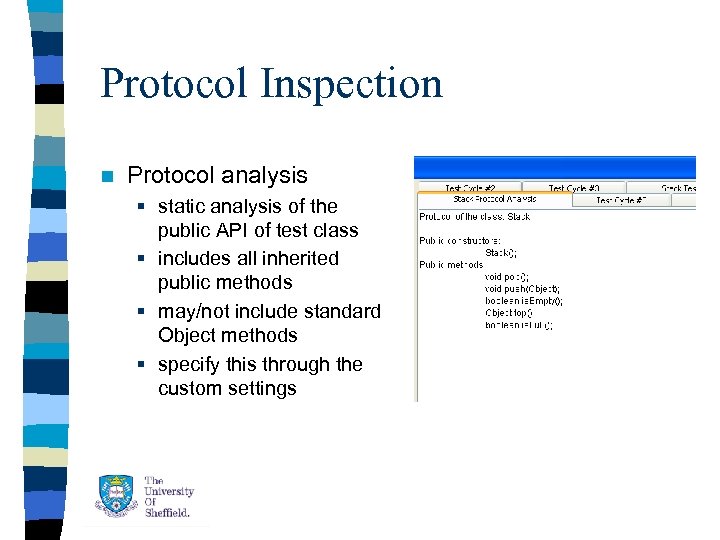

Protocol Inspection

- Protocol analysis
  - static analysis of the public API of test class
  - includes all inherited public methods
  - may or may not include standard Object methods
  - specify this through the custom settings
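
A minimal sketch of this kind of protocol inspection, using standard Java reflection. It is not JWalk's implementation: the class `ProtocolInspector`, the method `publicProtocol`, the `includeObjectMethods` flag and the two counter classes are hypothetical names introduced for illustration.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class ProtocolInspector {
    /** Lists the public instance methods of a class, inherited ones included. */
    static List<String> publicProtocol(Class<?> type, boolean includeObjectMethods) {
        List<String> names = new ArrayList<>();
        for (Method m : type.getMethods()) {          // public methods, including inherited
            if (Modifier.isStatic(m.getModifiers())) {
                continue;                             // only instance methods form the protocol
            }
            if (!includeObjectMethods && m.getDeclaringClass() == Object.class) {
                continue;                             // optionally hide equals, hashCode, ...
            }
            names.add(m.getName());
        }
        Collections.sort(names);                      // getMethods() guarantees no order
        return names;
    }

    // Hypothetical test classes, used only to show inherited methods being picked up.
    static class Counter {
        public void inc() { }
        public int value() { return 0; }
    }

    static class ResettableCounter extends Counter {
        public void reset() { }
    }

    public static void main(String[] args) {
        System.out.println(publicProtocol(ResettableCounter.class, false));
        System.out.println(publicProtocol(ResettableCounter.class, true));
    }
}
```

The boolean flag stands in for the custom setting mentioned above that decides whether the standard Object methods are part of the protocol.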

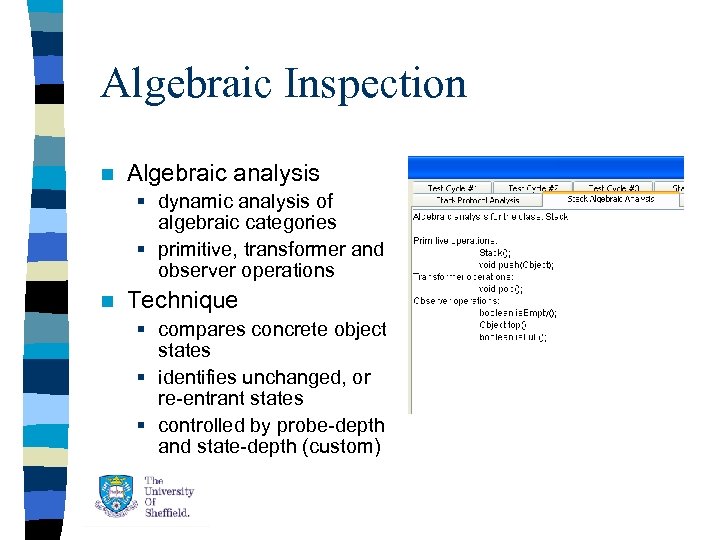

Algebraic Inspection

- Algebraic analysis
  - dynamic analysis of algebraic categories
  - primitive, transformer and observer operations
- Technique
  - compares concrete object states
  - identifies unchanged, or re-entrant states
  - controlled by probe-depth and state-depth (custom)

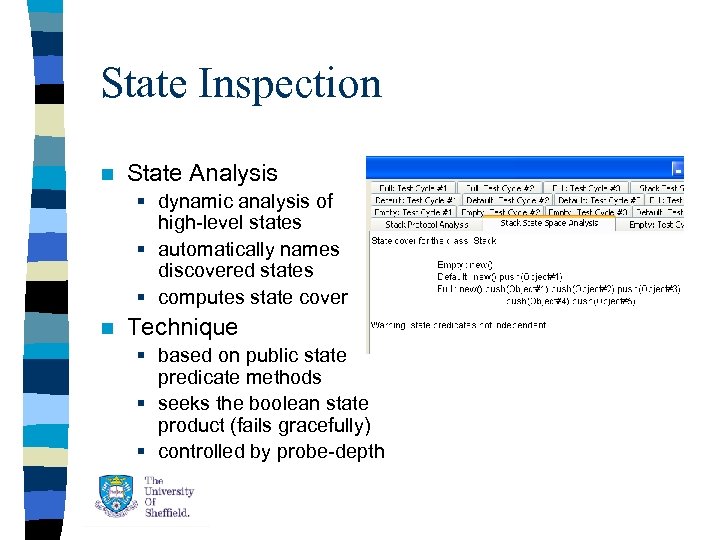

State Inspection

- State analysis
  - dynamic analysis of high-level states
  - automatically names discovered states
  - computes state cover
- Technique
  - based on public state predicate methods
  - seeks the boolean state product (fails gracefully)
  - controlled by probe-depth
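
One way to picture the naming of high-level states from public predicate methods is the sketch below. It assumes that a state predicate is any public, parameterless, boolean-valued method whose name starts with `is`, and that a state is named by joining the predicates that hold, with `Default` when none does. Those conventions, the class `StateNamer` and the nested `Book` class are assumptions made for illustration, not a description of JWalk's actual rules.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class StateNamer {
    /** Names the current high-level state from the predicates that hold. */
    static String stateName(Object target) {
        List<String> active = new ArrayList<>();
        for (Method m : target.getClass().getMethods()) {
            boolean predicate = !Modifier.isStatic(m.getModifiers())
                    && m.getParameterCount() == 0
                    && (m.getReturnType() == boolean.class || m.getReturnType() == Boolean.class)
                    && m.getName().startsWith("is") && m.getName().length() > 2;
            if (!predicate) {
                continue;
            }
            try {
                if (Boolean.TRUE.equals(m.invoke(target))) {
                    active.add(m.getName().substring(2));   // isOnLoan -> OnLoan
                }
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("predicate failed: " + m.getName(), e);
            }
        }
        Collections.sort(active);   // the order of joined names is a choice of this sketch
        return active.isEmpty() ? "Default" : String.join("&", active);
    }

    // Hypothetical test class with two state predicates.
    static class Book {
        private String borrower;
        private String requester;
        public void issue(String who) { borrower = who; }
        public void reserve(String who) { requester = who; }
        public boolean isOnLoan() { return borrower != null; }
        public boolean isReserved() { return requester != null; }
    }

    public static void main(String[] args) {
        Book book = new Book();
        System.out.println(stateName(book));
        book.issue("a");
        System.out.println(stateName(book));
        book.reserve("b");
        System.out.println(stateName(book));
    }
}
```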

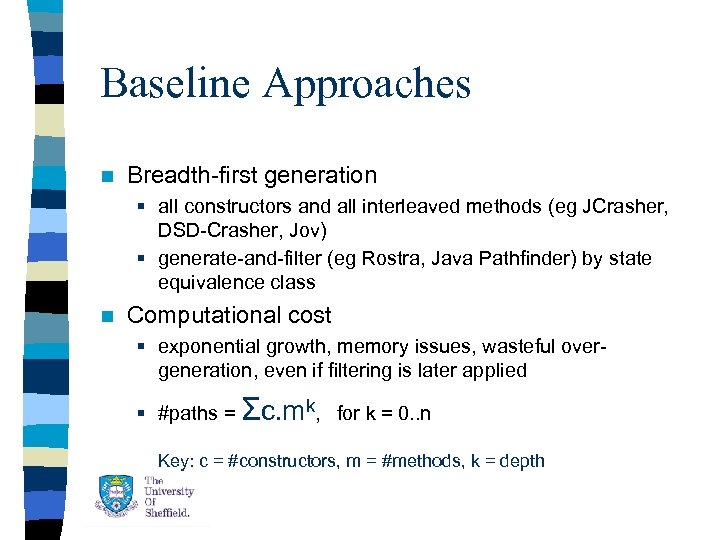

Baseline Approaches

- Breadth-first generation
  - all constructors and all interleaved methods (e.g. JCrasher, DSD-Crasher, Jov)
  - generate-and-filter (e.g. Rostra, Java Pathfinder) by state equivalence class
- Computational cost
  - exponential growth, memory issues, wasteful over-generation, even if filtering is later applied
  - #paths = $\sum_{k=0}^{n} c \cdot m^{k}$

Key: c = #constructors, m = #methods, k = depth
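
The growth formula is easy to check numerically. The sketch below sums c·m^k for k = 0..n; the class and method names are hypothetical. With c = 1 and m = 6 (an assumed shape for a small stack class) the total at depth 5 is 9331, and with m = 8 it is 37449.

```java
final class PathCount {
    /** Total number of paths of length 0..depth: the sum over k of c * m^k. */
    static long baselinePaths(int constructors, int methods, int depth) {
        long total = 0;
        long pathsAtDepth = constructors;    // k = 0: one path per constructor
        for (int k = 0; k <= depth; k++) {
            total += pathsAtDepth;
            pathsAtDepth *= methods;         // each path is extended by every method
        }
        return total;
    }

    public static void main(String[] args) {
        for (int n = 0; n <= 5; n++) {
            System.out.println(n + ": " + baselinePaths(1, 6, n));
        }
    }
}
```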

Dynamic Pruning

- Interleaved analysis
  - generate-and-evaluate, pruning active paths on the fly (e.g. JWalk, Randoop)
  - remove redundant prefix paths after each test cycle, don’t bother to expand in next cycle
- Increasing sophistication
  - prune prefix paths ending in exceptions (fail again)
    - JWalk, Randoop (2007)
  - and prefixes ending in algebraic observers (unchanged)
    - JWalk 0.8 (2007)
  - and prefixes ending in algebraic transformers (reentrant)
    - JWalk 1.0 (2009)

Protocol Exploration

- Protocol strategy
  - explores all interleaved methods by brute force
  - explores all paths up to length n (test depth)
  - repeats invocations of the same method
- Pruning
  - paths raising exceptions in test cycle i are not extended in test cycle i+1

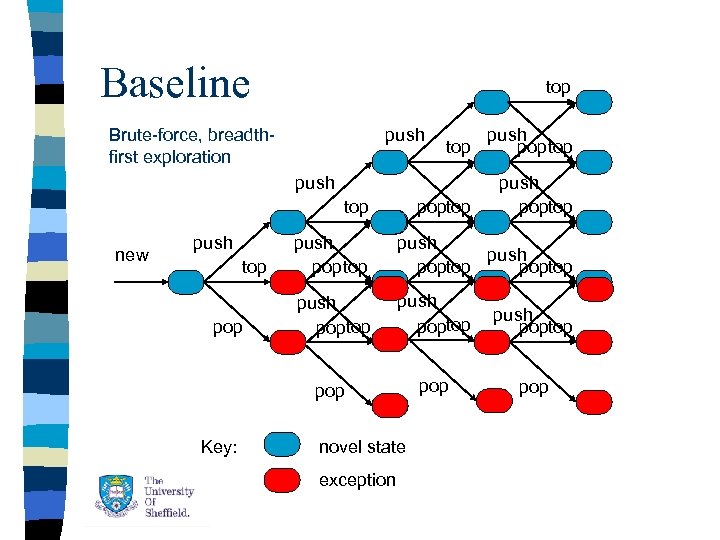

Baseline

Brute-force, breadth-first exploration

Tree diagram: paths grown from new by push, pop and top. Key: novel state; exception.

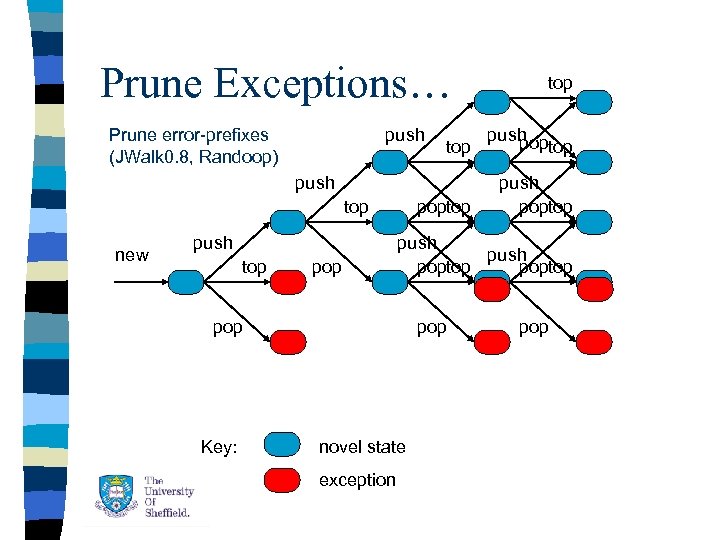

Prune Exceptions…

Prune error-prefixes (JWalk 0.8, Randoop)

Tree diagram: paths grown from new by push, pop and top. Key: novel state; exception.

Algebraic Exploration

- Algebraic strategy
  - explores all algebraic constructions
  - grows paths using only primitive operations
  - observes paths ending in any kind of operation
- Pruning
  - prunes paths ending in exceptions (next cycle)
  - also prunes paths ending in re-entrant or unchanged states

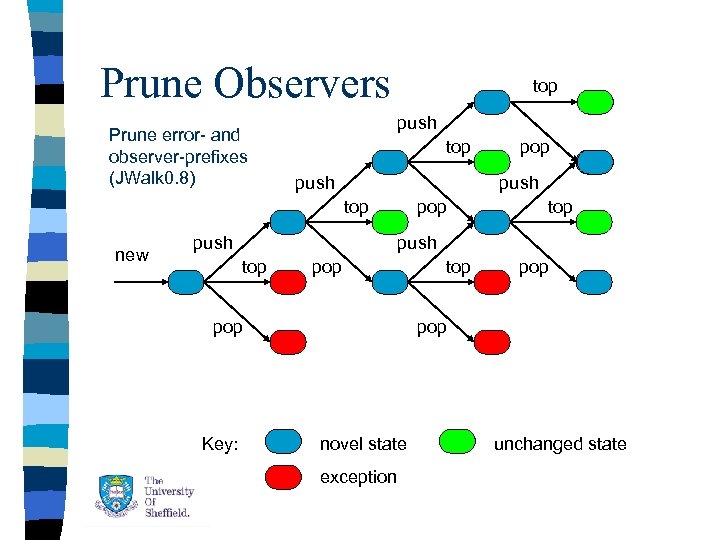

Prune Observers

Prune error- and observer-prefixes (JWalk 0.8)

Tree diagram: paths grown from new by push, pop and top. Key: novel state; exception; unchanged state.

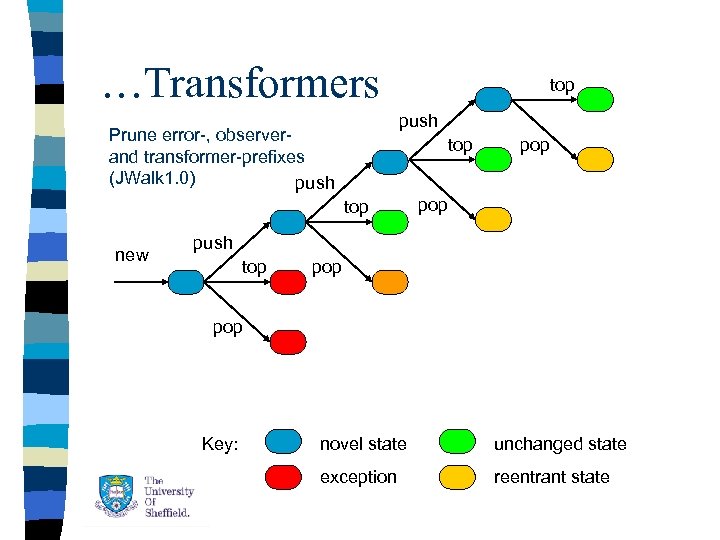

…Transformers

Prune error-, observer- and transformer-prefixes (JWalk 1.0)

Tree diagram: paths grown from new by push, pop and top. Key: novel state; unchanged state; exception; reentrant state.

State Exploration

- State strategy
  - reaches every high-level state
  - explores all transition paths up to length n, from each state
  - has n-switch coverage
- Pruning
  - grows only primitive paths to reach all states
  - prunes paths ending in exceptions (next cycle)

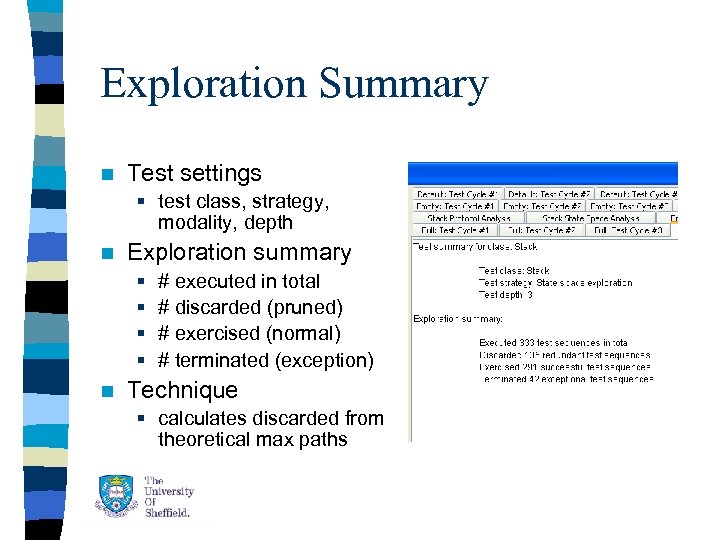

Exploration Summary

- Test settings
  - test class, strategy, modality, depth
- Exploration summary
  - # executed in total
  - # discarded (pruned)
  - # exercised (normal)
  - # terminated (exception)
- Technique
  - calculates discarded from theoretical max paths

The Same State?

- Some earlier approaches
  - distinguish observers, mutators by signature (Rostra)
  - intrusive state equality predicate methods (ASTOOT)
  - external (partial) state equality predicates (Rostra)
  - subsumption of execution traces in JVM (Pathfinder)
- Some algebraic approaches
  - shallow, deep equality under all observers (TACCLE)
    - but assumes observations are also comparable
    - very costly to compute from first principles
  - serialise object states and hash (Henkel & Diwan)
    - but not all objects are serialisable
    - no control over depth of comparison

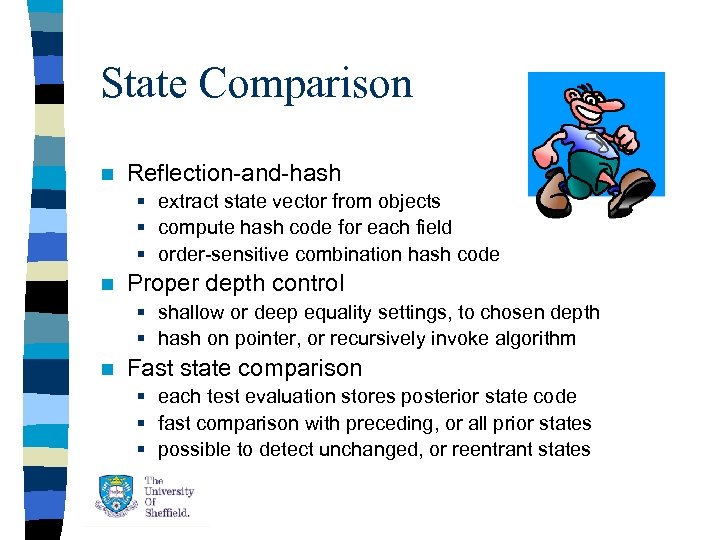

State Comparison

- Reflection-and-hash
  - extract state vector from objects
  - compute hash code for each field
  - order-sensitive combination hash code
- Proper depth control
  - shallow or deep equality settings, to chosen depth
  - hash on pointer, or recursively invoke algorithm
- Fast state comparison
  - each test evaluation stores posterior state code
  - fast comparison with preceding, or all prior states
  - possible to detect unchanged, or reentrant states
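
The reflection-and-hash idea can be sketched as follows. This is an illustration under stated assumptions rather than JWalk's algorithm: the name `stateCode`, the 17/31 combination scheme, the use of `System.identityHashCode` as the "pointer" hash, and the decision to hash `java.*` objects by their own `hashCode()` are all choices made for this example.

```java
import java.lang.reflect.Array;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;

final class StateHash {
    /** Order-sensitive hash of an object's fields, followed to the given depth. */
    static int stateCode(Object obj, int depth) {
        if (obj == null) {
            return 0;
        }
        Class<?> type = obj.getClass();
        if (type.getName().startsWith("java.")) {
            return obj.hashCode();                   // assumption: JDK values hash by value
        }
        if (depth <= 0) {
            return System.identityHashCode(obj);     // depth exhausted: hash on identity
        }
        int code = 17;
        if (type.isArray()) {
            for (int i = 0; i < Array.getLength(obj); i++) {
                code = 31 * code + stateCode(Array.get(obj, i), depth - 1);
            }
            return code;
        }
        // Field order from getDeclaredFields() is unspecified but stable within one run,
        // which is enough to compare objects of the same class during a test series.
        for (Class<?> c = type; c != null && c != Object.class; c = c.getSuperclass()) {
            for (Field field : c.getDeclaredFields()) {
                if (Modifier.isStatic(field.getModifiers())) {
                    continue;
                }
                field.setAccessible(true);
                try {
                    code = 31 * code + stateCode(field.get(obj), depth - 1);
                } catch (IllegalAccessException e) {
                    throw new IllegalStateException(e);
                }
            }
        }
        return code;
    }

    // Hypothetical linked structure used to show the effect of the depth setting.
    static class Node {
        int value;
        Node next;
        Node(int value, Node next) { this.value = value; this.next = next; }
    }

    public static void main(String[] args) {
        Node a = new Node(1, new Node(2, null));
        Node b = new Node(1, new Node(2, null));
        System.out.println(stateCode(a, 2) == stateCode(b, 2));   // deep: same structure
    }
}
```

Storing the code computed after each test step makes the later checks cheap: a step whose code equals the preceding one left the state unchanged, and a code equal to any earlier one on the same path marks a reentrant state.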

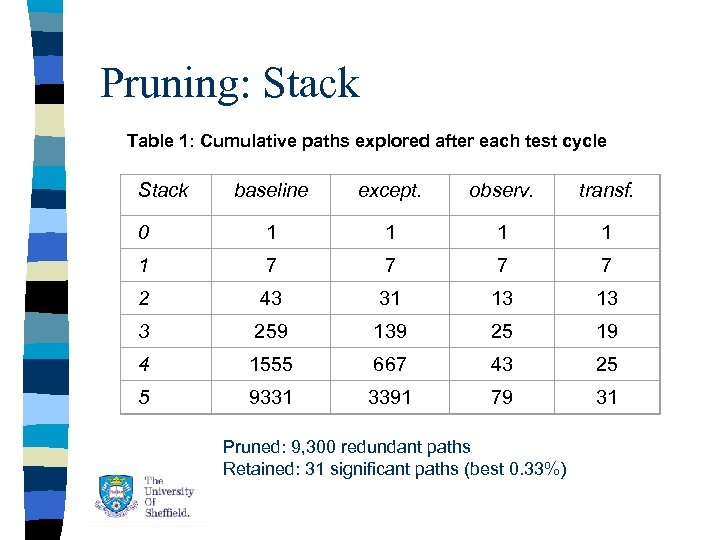

Pruning: Stack

Table 1: Cumulative paths explored after each test cycle

| Stack | baseline | except. | observ. | transf. |
|---|---|---|---|---|
| 0 | 1 | 1 | 1 | 1 |
| 1 | 7 | 7 | 7 | 7 |
| 2 | 43 | 31 | 13 | 13 |
| 3 | 259 | 139 | 25 | 19 |
| 4 | 1555 | 667 | 43 | 25 |
| 5 | 9331 | 3391 | 79 | 31 |

- Pruned: 9,300 redundant paths
- Retained: 31 significant paths (best 0.33%)
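
The counts in Table 1 can be reproduced with a small simulation of the pruning levels. The model below is an assumption chosen because it matches the reported figures: a stack state is reduced to its size, and the class is taken to have six operations (push, pop, top and three further operations that never fail and never change the state). It is not JWalk's code, and all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class PruningSimulation {
    static final int METHODS = 6;   // assumed: push, pop, top and three pure observers

    /** Applies an operation to a stack of the given size; -1 signals an exception. */
    static int apply(int method, int size) {
        switch (method) {
            case 0: return size + 1;                   // push
            case 1: return size == 0 ? -1 : size - 1;  // pop fails on the empty stack
            case 2: return size == 0 ? -1 : size;      // top fails on the empty stack
            default: return size;                      // observers leave the state alone
        }
    }

    /**
     * Cumulative paths executed after each test cycle.
     * level 0 = baseline, 1 = prune exceptions, 2 = also unchanged states,
     * 3 = also reentrant states.
     */
    static long[] cumulativePaths(int level, int depth) {
        long[] totals = new long[depth + 1];
        List<List<Integer>> active = new ArrayList<>();
        active.add(List.of(0));                        // new(): the empty stack
        totals[0] = 1;
        for (int cycle = 1; cycle <= depth; cycle++) {
            List<List<Integer>> next = new ArrayList<>();
            long executed = 0;
            for (List<Integer> history : active) {     // history = states along the path
                int current = history.get(history.size() - 1);
                for (int m = 0; m < METHODS; m++) {
                    executed++;
                    int result = apply(m, current);
                    boolean failed = result < 0;
                    int posterior = failed ? current : result;
                    boolean unchanged = !failed && posterior == current;
                    boolean reentrant = !failed && history.contains(posterior);
                    boolean prune = (level >= 1 && failed)
                            || (level >= 2 && unchanged)
                            || (level >= 3 && reentrant);
                    if (!prune) {                      // only unpruned paths are extended
                        List<Integer> extended = new ArrayList<>(history);
                        extended.add(posterior);
                        next.add(extended);
                    }
                }
            }
            totals[cycle] = totals[cycle - 1] + executed;
            active = next;
        }
        return totals;
    }

    public static void main(String[] args) {
        for (int level = 0; level <= 3; level++) {
            System.out.println(java.util.Arrays.toString(cumulativePaths(level, 5)));
        }
    }
}
```

Each pruned path is still executed once in the cycle where it is discovered; pruning only stops it from being extended in the next cycle, which is why every column starts with the same values at depths 0 and 1.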

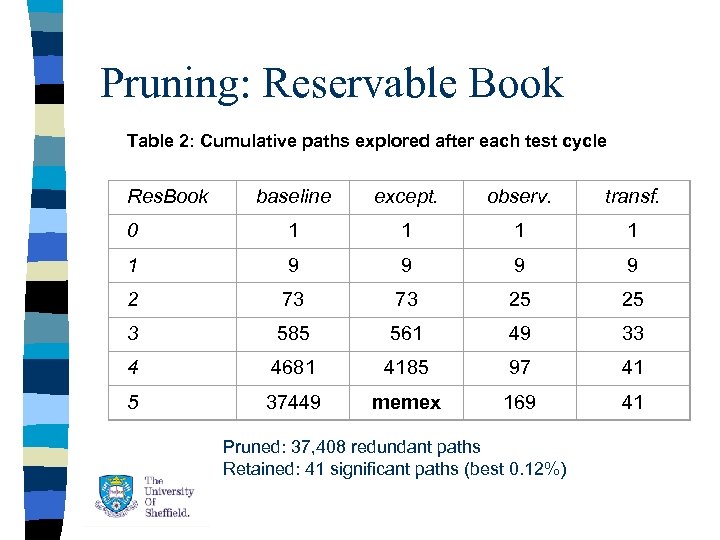

Pruning: Reservable Book

Table 2: Cumulative paths explored after each test cycle

| ResBook | baseline | except. | observ. | transf. |
|---|---|---|---|---|
| 0 | 1 | 1 | 1 | 1 |
| 1 | 9 | 9 | 9 | 9 |
| 2 | 73 | 73 | 25 | 25 |
| 3 | 585 | 561 | 49 | 33 |
| 4 | 4681 | 4185 | 97 | 41 |
| 5 | 37449 | memex | 169 | 41 |

- Pruned: 37,408 redundant paths
- Retained: 41 significant paths (best 0.12%)

Validation Modality

- Lazy specification
  - interacts with tester to confirm key results
  - uses predictive rules to infer further results
  - stores key results in reusable test oracle
- Technique
  - key results found at the leaves of the algebra tree
  - apply predictions to other test strategies

Tester accepts or rejects outcome

Test Result Prediction

- Semi-automatic validation
  - the user confirms or rejects key results
  - these constitute a test oracle, used in prediction
  - eventually > 90% test outcomes predicted
- JWalk test result prediction rules
  - e.g. predict repeat failure
    - new().pop().push(e) == new().pop()
  - e.g. predict same state
    - target.size().push(e) == target.push(e)
  - e.g. predict same result
    - target.push(e).pop().size() == target.size()
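
The three rules can be read as rewriting a method sequence into a shorter, equivalent one and then looking that up in the oracle. The sketch below assumes that dynamic analysis has already recorded which prefixes fail and which prefixes return to the state of a shorter prefix. The class `PredictingOracle` and all of its methods are hypothetical, and the real JWalk oracle may be organised quite differently.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

final class PredictingOracle {
    private final Set<List<String>> failing = new HashSet<>();
    private final Map<List<String>, List<String>> sameStateAs = new HashMap<>();
    private final Map<List<String>, String> confirmed = new HashMap<>();

    /** Records that this path ends in an exception. */
    void recordFailure(List<String> path) {
        failing.add(List.copyOf(path));
    }

    /** Records that this path leaves the object in the same state as a shorter path. */
    void recordSameState(List<String> path, List<String> shorter) {
        sameStateAs.put(List.copyOf(path), List.copyOf(shorter));
    }

    /** Stores an outcome confirmed by the tester, keyed by the canonical path. */
    void confirm(List<String> path, String outcome) {
        confirmed.put(canonical(path), outcome);
    }

    /** Rewrites a path to its shortest known equivalent. */
    List<String> canonical(List<String> path) {
        List<String> result = new ArrayList<>();
        for (int i = 0; i < path.size(); i++) {
            result.add(path.get(i));
            if (failing.contains(result)) {
                return result;                         // repeat failure: the rest never runs
            }
            boolean isPrefix = i < path.size() - 1;    // the last call is the observation
            if (isPrefix && sameStateAs.containsKey(result)) {
                result = new ArrayList<>(sameStateAs.get(result));   // same state
            }
        }
        return result;
    }

    /** Predicts an outcome if the canonical path has already been confirmed. */
    Optional<String> predict(List<String> path) {
        return Optional.ofNullable(confirmed.get(canonical(path)));
    }

    public static void main(String[] args) {
        PredictingOracle oracle = new PredictingOracle();
        oracle.recordFailure(List.of("pop"));
        oracle.recordSameState(List.of("push", "pop"), List.of());
        oracle.confirm(List.of("size"), "0");
        System.out.println(oracle.canonical(List.of("pop", "push")));
        System.out.println(oracle.predict(List.of("push", "pop", "size")));
    }
}
```

In this reading, one confirmed answer for `size` on a new stack also answers `push, pop, size`, which is how a few confirmations can cover many generated paths.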

Kinds of Prediction

- Strong prediction
  - From known results, guarantee further outcomes in the same equivalence class
  - e.g. observer prefixes empirically checked before making any inference, unchanged state is guaranteed
    - target.push(e).size().top() == target.push(e).top()
- Weak prediction
  - From known facts, guess further outcomes; an incorrect guess will be revealed in the next cycle
  - e.g. methods with void type usually return no result, but may raise an exception
    - target.pop() predicted to have no result
    - target.pop().size() == -1 reveals an error

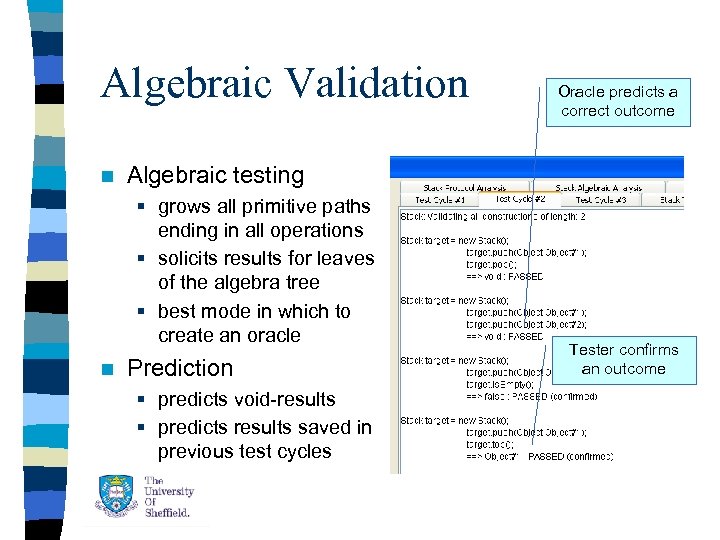

Algebraic Validation

- Algebraic testing
  - grows all primitive paths ending in all operations
  - solicits results for leaves of the algebra tree
  - best mode in which to create an oracle
- Prediction
  - predicts void-results
  - predicts results saved in previous test cycles

Oracle predicts a correct outcome

Tester confirms an outcome

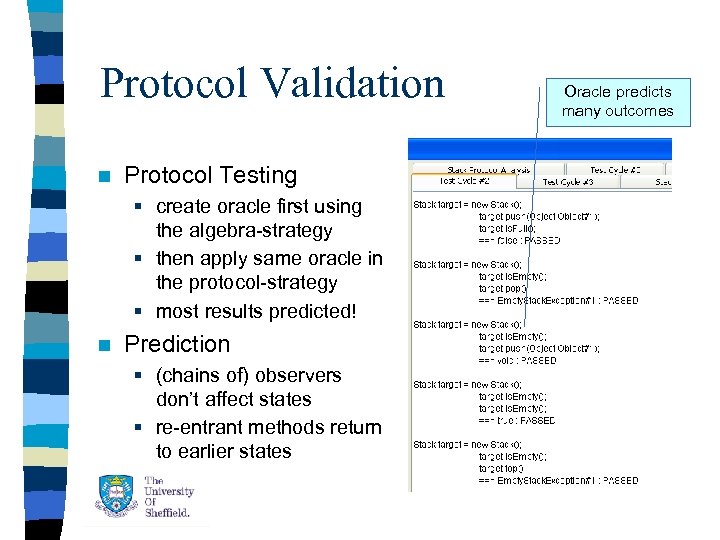

Protocol Validation

- Protocol testing
  - create oracle first using the algebra-strategy
  - then apply same oracle in the protocol-strategy
  - most results predicted!
- Prediction
  - (chains of) observers don’t affect states
  - re-entrant methods return to earlier states

Oracle predicts many outcomes

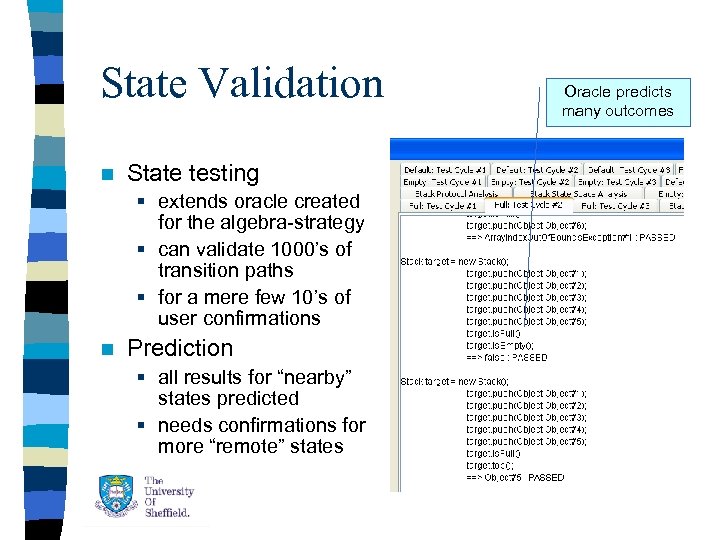

State Validation

- State testing
  - extends oracle created for the algebra-strategy
  - can validate 1000’s of transition paths
  - for a mere few 10’s of user confirmations
- Prediction
  - all results for “nearby” states predicted
  - needs confirmations for more “remote” states

Oracle predicts many outcomes

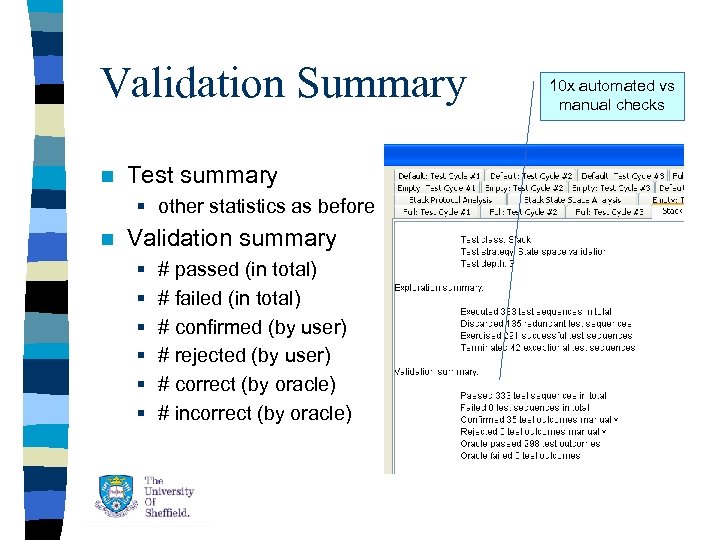

Validation Summary

- Test summary
  - other statistics as before
- Validation summary
  - # passed (in total)
  - # failed (in total)
  - # confirmed (by user)
  - # rejected (by user)
  - # correct (by oracle)
  - # incorrect (by oracle)

10x automated vs manual checks

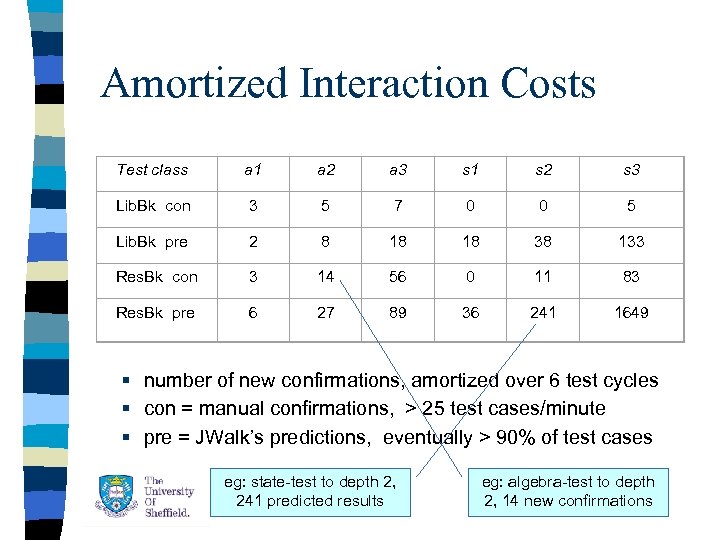

Amortized Interaction Costs

| Test class | a1 | a2 | a3 | s1 | s2 | s3 |
|---|---|---|---|---|---|---|
| LibBk con | 3 | 5 | 7 | 0 | 0 | 5 |
| LibBk pre | 2 | 8 | 18 | 18 | 38 | 133 |
| ResBk con | 3 | 14 | 56 | 0 | 11 | 83 |
| ResBk pre | 6 | 27 | 89 | 36 | 241 | 1649 |

- number of new confirmations, amortized over 6 test cycles
- con = manual confirmations, > 25 test cases/minute
- pre = JWalk’s predictions, eventually > 90% of test cases
- e.g. state-test to depth 2 (ResBk): 241 predicted results
- e.g. algebra-test to depth 2 (ResBk): 14 new confirmations

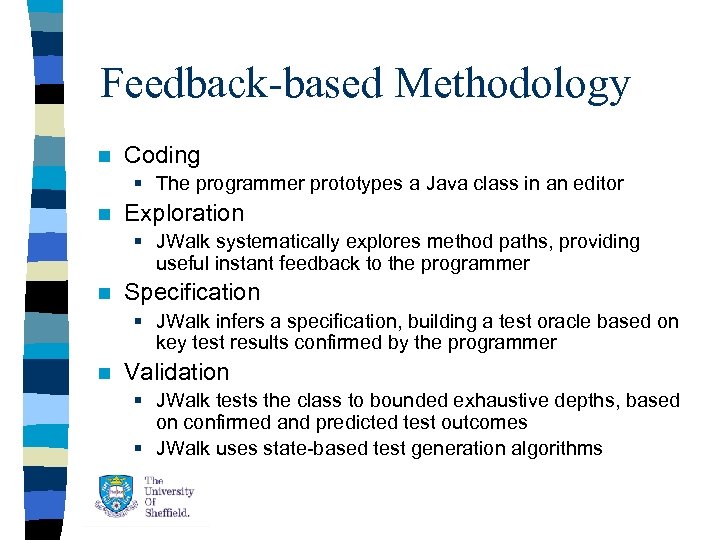

Feedback-based Methodology

- Coding
  - The programmer prototypes a Java class in an editor
- Exploration
  - JWalk systematically explores method paths, providing useful instant feedback to the programmer
- Specification
  - JWalk infers a specification, building a test oracle based on key test results confirmed by the programmer
- Validation
  - JWalk tests the class to bounded exhaustive depths, based on confirmed and predicted test outcomes
  - JWalk uses state-based test generation algorithms

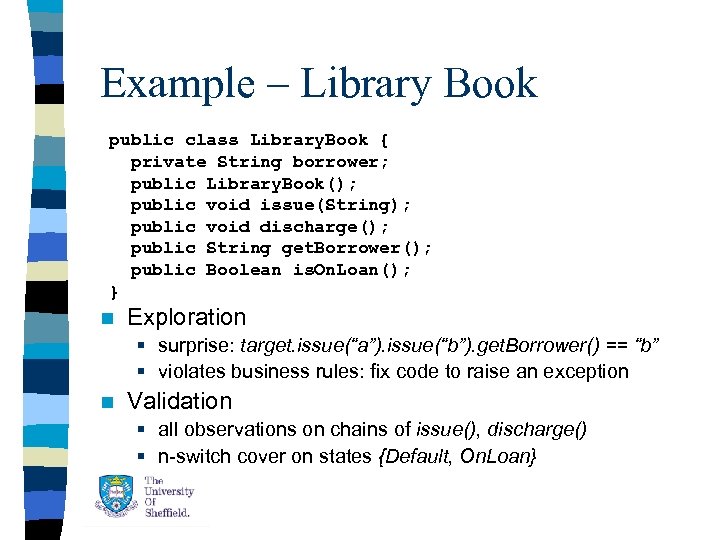

Example – Library Book

    public class LibraryBook {
        private String borrower;
        public LibraryBook();
        public void issue(String);
        public void discharge();
        public String getBorrower();
        public Boolean isOnLoan();
    }

- Exploration
  - surprise: target.issue(“a”).issue(“b”).getBorrower() == “b”
  - violates business rules: fix code to raise an exception
- Validation
  - all observations on chains of issue(), discharge()
  - n-switch cover on states {Default, OnLoan}
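
To make the "surprise" concrete, here is a runnable sketch of a first prototype and of a corrected version. Only the method signatures come from the slide; the method bodies, the primitive `boolean` return type, the choice of `IllegalStateException` and the `StrictLibraryBook` name are assumptions made for this illustration.

```java
final class LibraryBookDemo {
    /** First prototype: issue() silently overwrites the current borrower. */
    static class LibraryBook {
        private String borrower;

        public void issue(String who) { borrower = who; }
        public void discharge() { borrower = null; }
        public String getBorrower() { return borrower; }
        public boolean isOnLoan() { return borrower != null; }
    }

    /** Revised version: issuing a book that is already on loan raises an exception. */
    static class StrictLibraryBook extends LibraryBook {
        @Override
        public void issue(String who) {
            if (isOnLoan()) {
                throw new IllegalStateException("book is already on loan");
            }
            super.issue(who);
        }
    }

    public static void main(String[] args) {
        LibraryBook prototype = new LibraryBook();
        prototype.issue("a");
        prototype.issue("b");
        System.out.println(prototype.getBorrower());   // the surprising outcome

        LibraryBook strict = new StrictLibraryBook();
        strict.issue("a");
        try {
            strict.issue("b");
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

Exploring the path issue, issue, getBorrower on the prototype exposes the overwritten borrower; after the fix, the same path ends in an exception.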

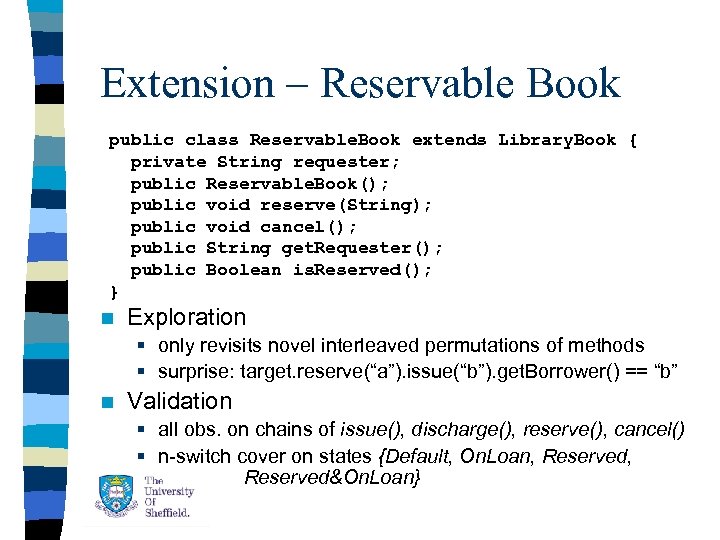

Extension – Reservable Book

    public class ReservableBook extends LibraryBook {
        private String requester;
        public ReservableBook();
        public void reserve(String);
        public void cancel();
        public String getRequester();
        public Boolean isReserved();
    }

- Exploration
  - only revisits novel interleaved permutations of methods
  - surprise: target.reserve(“a”).issue(“b”).getBorrower() == “b”
- Validation
  - all observations on chains of issue(), discharge(), reserve(), cancel()
  - n-switch cover on states {Default, OnLoan, Reserved&OnLoan}

Evaluation

- User acceptance
  - programmers find JWalk habitable
  - they can concentrate on creative aspects (coding) while JWalk handles systematic aspects (validation, testing)
- Main cost is confirmations
  - not so burdensome, since amortized over many test cycles
  - metric: measure amortized confirmations per test cycle
- Comparison with JUnit
  - common testing objective for manual and lazy systematic testing; evaluate coverage and testing effort
  - Eclipse+JUnit vs. JWalkEditor: given the task of testing the “transition cover + all equivalence partitions of inputs”

Comparison with JUnit

- JUnit manual testing method
  - Manual test creation takes skill, time and effort (e.g. ~20 min to develop manual cases for ReservableBook)
  - The programmer missed certain corner-cases
    - e.g. target.discharge(): a nullop?
  - The programmer redundantly tested some properties
    - e.g. assertTrue(target != null), multiple times
  - The state coverage for LibraryBook was incomplete, due to the programmer missing hard-to-see cases
  - The saved tests were not reusable for ReservableBook, for which all-new tests were written to test new interleavings

Advantages of JWalk

- JWalk lazy systematic testing
  - JWalk automates test case selection, which relieves the programmer of the burden of thinking up the right test cases!
  - Each test case is guaranteed to test a unique property
  - Interactive test result confirmation is very fast (e.g. ~80 sec in total for 36 unique test cases in ReservableBook)
  - All states and transitions covered, including nullops, to the chosen depth
  - The test oracle created for LibraryBook formed the basis for the new oracle for ReservableBook, but…
  - JWalk presented only those sequences involving new methods, and all interleavings with inherited methods

Measuring the Testing?

- Suppose an ideal test set
  - $BR$ : behavioural response (set)
  - $T$ : tests to be evaluated (bag; duplicates?)
  - $T_E = BR \cap T$ : effective tests (set)
  - $T_R = T - T_E$ : redundant tests (bag)
- Define test metrics
  - $Ef(T) = (|T_E| - |T_R|) / |BR|$ : effectiveness
  - $Ad(T) = |T_E| / |BR|$ : adequacy
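
The two metrics can be worked through on a toy example. The sketch below is not part of JWalk; the class name `TestMetrics`, its method names and the string labels standing in for tests are all assumptions made for illustration. It treats `T` as a bag (a list that may contain duplicates) and `BR` as a set, then computes adequacy and effectiveness as defined above.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper, not a JWalk class: computes the slide's metrics from labelled tests.
class TestMetrics {

    /** TE = BR intersected with T: the distinct tests in T that match an ideal response. */
    static Set<String> effective(Set<String> br, List<String> t) {
        Set<String> te = new LinkedHashSet<>(t); // collapses duplicates in the bag
        te.retainAll(br);                        // drops tests outside the ideal set
        return te;
    }

    /** |TR| = |T| - |TE|: duplicates plus tests that are not in BR at all. */
    static int redundantCount(Set<String> br, List<String> t) {
        return t.size() - effective(br, t).size();
    }

    /** Ad(T) = |TE| / |BR| */
    static double adequacy(Set<String> br, List<String> t) {
        return (double) effective(br, t).size() / br.size();
    }

    /** Ef(T) = (|TE| - |TR|) / |BR| */
    static double effectiveness(Set<String> br, List<String> t) {
        int te = effective(br, t).size();
        return (double) (te - redundantCount(br, t)) / br.size();
    }

    public static void main(String[] args) {
        Set<String> br = new LinkedHashSet<>(Arrays.asList("a", "b", "c", "d"));
        List<String> t = Arrays.asList("a", "a", "b", "x", "c"); // one duplicate, one stray test
        System.out.println("adequacy      = " + adequacy(br, t));      // 3 of 4 responses covered
        System.out.println("effectiveness = " + effectiveness(br, t)); // (3 - 2) / 4
    }
}
```

Effectiveness goes negative as soon as redundant tests outnumber effective ones. For the manual LibBk figures reported in this deck (T = 31, TE = 9, TR = 22, adequacy 90%, which implies |BR| = 10), the same formula gives (9 - 22) / 10 = -1.3.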

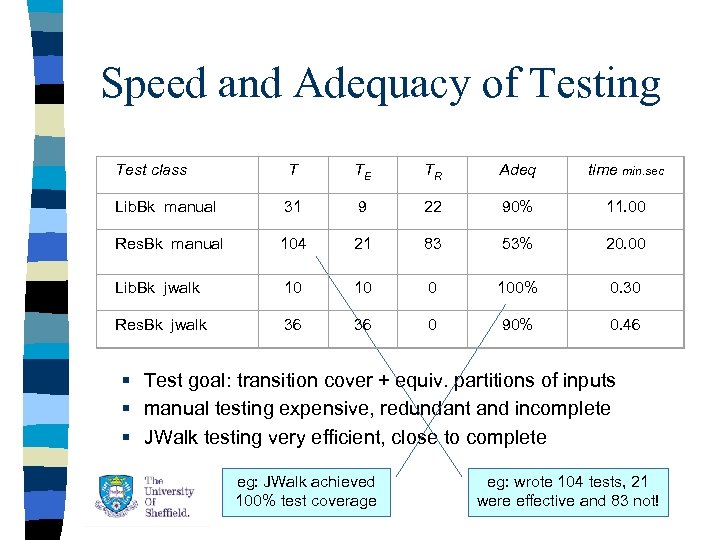

Speed and Adequacy of Testing

| Test class | T | TE | TR | Adeq | Time (min.sec) |
|---|---|---|---|---|---|
| LibBk manual | 31 | 9 | 22 | 90% | 11.00 |
| ResBk manual | 104 | 21 | 83 | 53% | 20.00 |
| LibBk jwalk | 10 | | | 100% | 0.30 |
| ResBk jwalk | 36 | 36 | 0 | 90% | 0.46 |

- Test goal: transition cover + equiv. partitions of inputs
- manual testing expensive, redundant and incomplete
- JWalk testing very efficient, close to complete
- eg: JWalk achieved 100% test coverage
- eg: wrote 104 tests, 21 were effective and 83 not!

Some Conclusions

- JUnit: expert manual testing
  - massive over-generation of tests (w.r.t. goal)
  - sometimes adequate, but not effective
  - stronger (t2, t3); duplicated; and missed tests
  - hopelessly inefficient; also debugging test suites!
- JWalk: lazy systematic testing
  - near-ideal coverage, adequate and effective
  - a few input partitions missed (simple generation strategy)
  - very efficient use of the tester’s time: sec. not min.
  - or: two orders (x 1000) more tests, for same effort

More Conclusions

- Feedback-based development
  - unexpected gain: automatic validation of prototype code
  - c.f. Alloy’s model checking from a partial specification
- Moral for testing
  - automatically executing saved tests is not so great
  - need systematic test generation tools to get coverage
  - automate the parts that humans get wrong!
  - let humans focus on right/wrong responses.

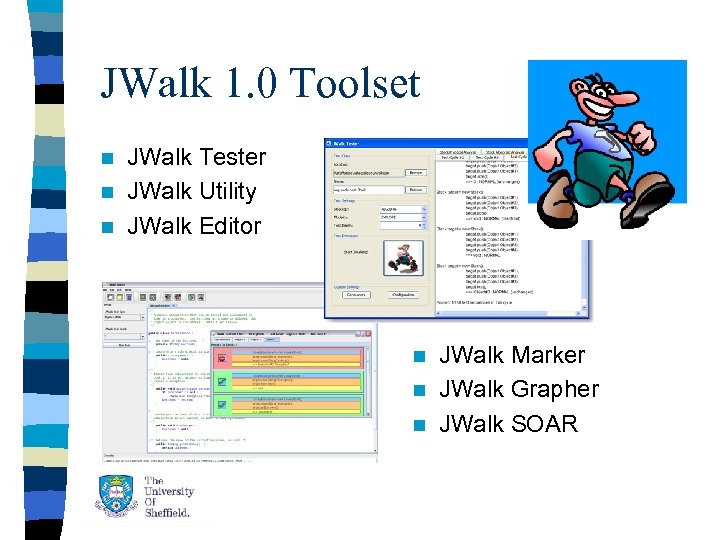

JWalk 1.0 Toolset

- JWalk Tester
- JWalk Utility
- JWalk Editor
- JWalk Marker
- JWalk Grapher
- JWalk SOAR

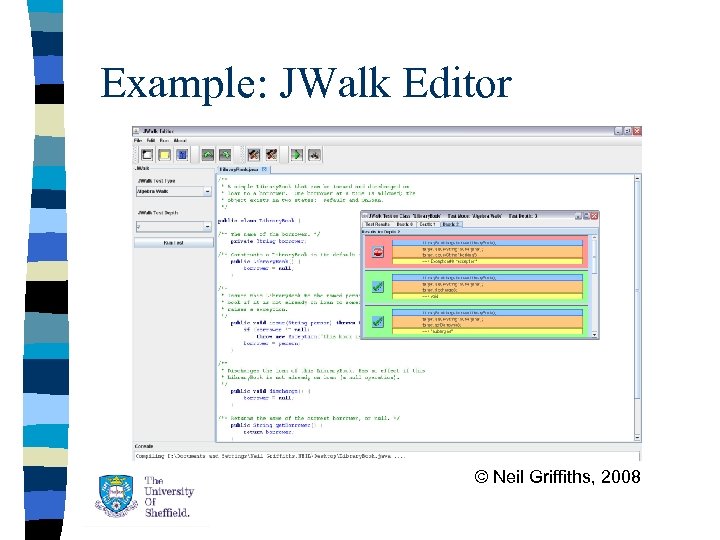

Example: JWalk Editor © Neil Griffiths, 2008

Any Questions? Put me to the test!

http://www.dcs.shef.ac.uk/~ajhs/jwalk/

© Anthony Simons, 2009, with help from Chris Thomson, Neil Griffiths, Mihai Gabriel Glont, Arne-Michael Toersel

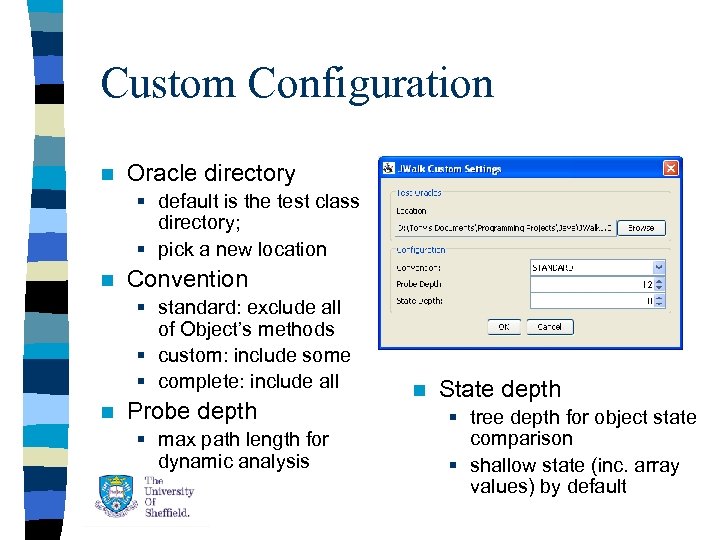

Custom Configuration

- Oracle directory
  - default is the test class directory
  - pick a new location
- Convention
  - standard: exclude all of Object’s methods
  - custom: include some
  - complete: include all
- Probe depth
  - max path length for dynamic analysis
- State depth
  - tree depth for object state comparison
  - shallow state (inc. array values) by default
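
The three conventions differ only in whether methods inherited from `Object` count as part of the protocol under test. The following is a rough sketch of that filtering using standard Java reflection; it is not JWalk's own code, and `ProtocolFilter`, `Convention`, `protocol` and the sample `Counter` class are hypothetical names introduced here.

```java
import java.lang.reflect.Method;
import java.util.Arrays;
import java.util.Collections;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical sketch: selects the public method names to exercise under each convention.
class ProtocolFilter {
    enum Convention { STANDARD, CUSTOM, COMPLETE }

    static Set<String> protocol(Class<?> testClass, Convention convention,
                                Set<String> customIncludes) {
        Set<String> names = new TreeSet<>();
        for (Method m : testClass.getMethods()) {       // public methods, inherited ones included
            boolean fromObject = m.getDeclaringClass() == Object.class;
            boolean keep;
            switch (convention) {
                case COMPLETE: keep = true; break;      // include all of Object's methods
                case CUSTOM:   keep = !fromObject || customIncludes.contains(m.getName()); break;
                default:       keep = !fromObject; break; // standard: exclude them all
            }
            if (keep) names.add(m.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        Set<String> none = Collections.<String>emptySet();
        System.out.println(protocol(Counter.class, Convention.STANDARD, none));
        System.out.println(protocol(Counter.class, Convention.CUSTOM,
                new TreeSet<>(Arrays.asList("equals"))));
        System.out.println(protocol(Counter.class, Convention.COMPLETE, none).size());
    }
}

// Sample test class used only for this illustration.
class Counter {
    private int count;
    public void inc() { count++; }
    public int get() { return count; }
}
```

A method such as `toString` that the test class overrides is declared by the test class itself, so this sketch keeps it even under the standard convention.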

Generators

- The heart of JWalk
  - synthesise test input values on demand
  - try to assure even spread of inputs for a given type
  - by default, supply monotonic sequences of values
- MasterGenerator
  - built-in ObjectGenerator is fairly comprehensive
  - synthesises basic values, arrays, standard objects, etc.
- CustomGenerator
  - take control of how particular types are synthesised
  - provide custom generators; add to a master as delegates
  - eg: StringGenerator, EnumGenerator, InterfaceGenerator
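
To make "monotonic sequences of values" concrete, here is a minimal sketch of a generator that hands out the next value in a rising sequence each time a type is requested. The actual values JWalk's built-in generator produces are not stated in these slides, so the class name `SequenceGenerator` and the particular values (`1, 2, 3, ...` for numbers, `"s1", "s2", ...` for strings, alternating booleans) are assumptions for illustration only.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch, not JWalk's generator: one rising counter per requested type.
class SequenceGenerator {
    private final Map<Class<?>, Integer> counters = new HashMap<>();

    Object nextValue(Class<?> type) {
        int n = counters.merge(type, 1, Integer::sum);   // yields 1, 2, 3, ... for each type
        if (type == int.class || type == Integer.class) return n;
        if (type == long.class || type == Long.class) return (long) n;
        if (type == double.class || type == Double.class) return (double) n;
        if (type == boolean.class || type == Boolean.class) return n % 2 == 0;
        if (type == String.class) return "s" + n;
        throw new IllegalArgumentException("no rule for " + type);
    }

    public static void main(String[] args) {
        SequenceGenerator gen = new SequenceGenerator();
        System.out.println(gen.nextValue(int.class));    // first int in the sequence
        System.out.println(gen.nextValue(int.class));    // the next, larger int
        System.out.println(gen.nextValue(String.class)); // strings count independently
    }
}
```

Keeping a separate counter per type means successive arguments of the same type differ, which is one simple way to spread inputs without randomness.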

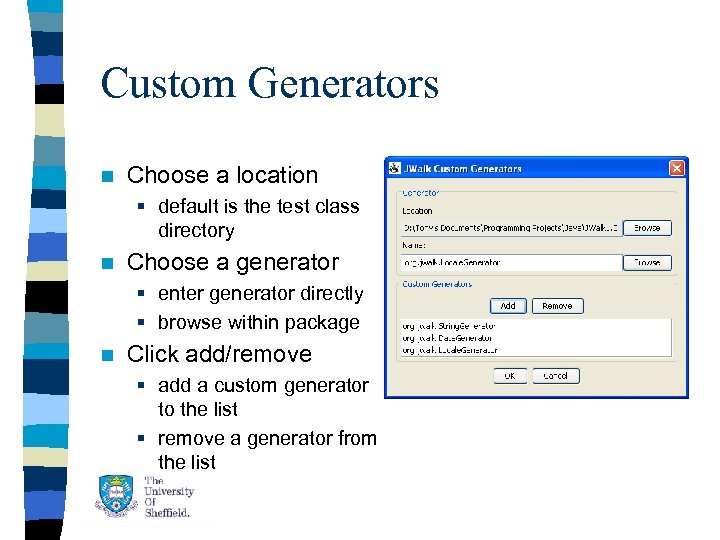

Custom Generators

- Choose a location
  - default is the test class directory
- Choose a generator
  - enter generator directly
  - browse within package
- Click add/remove
  - add a custom generator to the list
  - remove a generator from the list

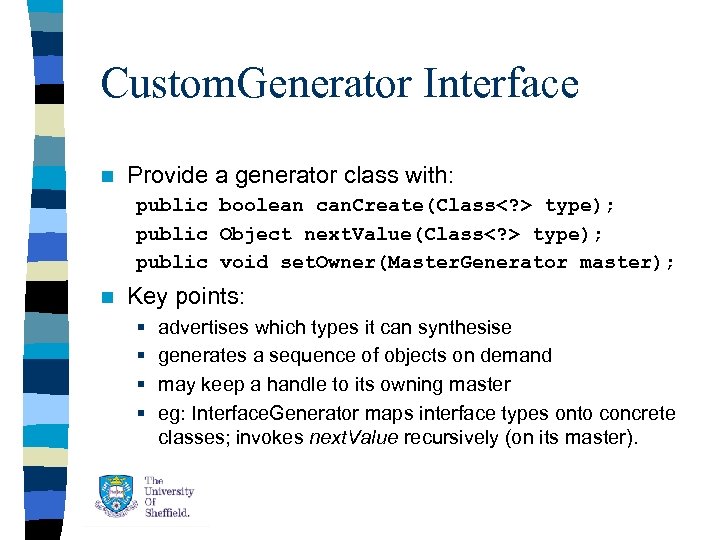

CustomGenerator Interface

- Provide a generator class with:

      public boolean canCreate(Class type);
      public Object nextValue(Class type);
      public void setOwner(MasterGenerator master);

- Key points:
  - advertises which types it can synthesise
  - generates a sequence of objects on demand
  - may keep a handle to its owning master
  - eg: InterfaceGenerator maps interface types onto concrete classes; invokes nextValue recursively (on its master).
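
The InterfaceGenerator idea, mapping an interface onto a concrete class and asking the master to build it, might look roughly like the sketch below. To keep it compilable on its own, it declares stand-in versions of `CustomGenerator` and `MasterGenerator`; these are not the JWalk classes. The `addDelegate` method, the no-argument-constructor fallback and the particular interface-to-class mappings are all assumptions, and `Class<?>` is used where the slide shows the raw `Class`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Stand-in for the JWalk interface, redeclared so this sketch compiles alone.
interface CustomGenerator {
    boolean canCreate(Class<?> type);
    Object nextValue(Class<?> type);
    void setOwner(MasterGenerator master);
}

// Stand-in master: asks its delegates first, then falls back to a no-arg constructor.
class MasterGenerator {
    private final List<CustomGenerator> delegates = new ArrayList<>();

    void addDelegate(CustomGenerator generator) {   // hypothetical method name
        generator.setOwner(this);
        delegates.add(generator);
    }

    Object nextValue(Class<?> type) {
        for (CustomGenerator g : delegates) {
            if (g.canCreate(type)) return g.nextValue(type);
        }
        try {
            return type.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("cannot synthesise " + type, e);
        }
    }
}

// Maps interface types onto concrete classes and lets the master build the instance.
class InterfaceGenerator implements CustomGenerator {
    private final Map<Class<?>, Class<?>> concrete = new HashMap<>();
    private MasterGenerator master;

    InterfaceGenerator() {
        concrete.put(List.class, ArrayList.class);  // illustrative mappings only
        concrete.put(Map.class, HashMap.class);
    }

    public boolean canCreate(Class<?> type) { return concrete.containsKey(type); }

    public Object nextValue(Class<?> type) {
        return master.nextValue(concrete.get(type)); // recursive request on the owning master
    }

    public void setOwner(MasterGenerator master) { this.master = master; }
}
```

Routing the concrete class back through the master, rather than instantiating it directly, means any other delegate registered for that concrete type still gets a chance to supply the value.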

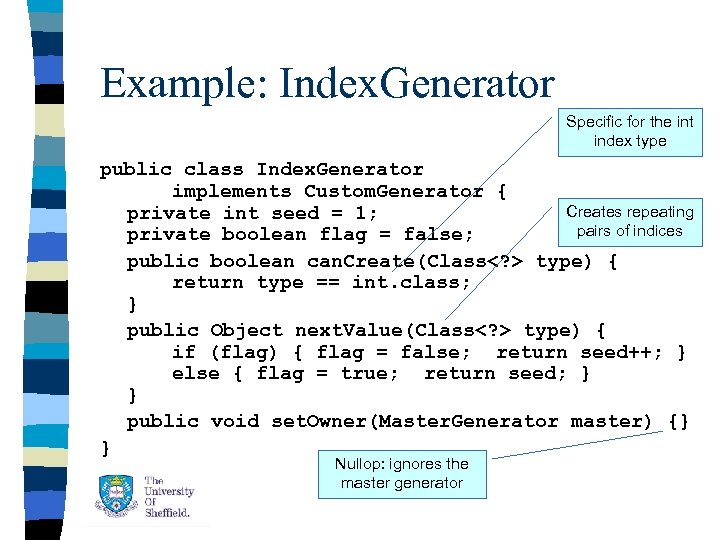

Example: IndexGenerator

    public class IndexGenerator implements CustomGenerator {
        private int seed = 1;
        private boolean flag = false;

        // Specific for the int index type
        public boolean canCreate(Class type) {
            return type == int.class;
        }

        // Creates repeating pairs of indices
        public Object nextValue(Class type) {
            if (flag) {
                flag = false;
                return seed++;
            } else {
                flag = true;
                return seed;
            }
        }

        // Nullop: ignores the master generator
        public void setOwner(MasterGenerator master) {}
    }
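
The value of repeating pairs shows up when a write is followed by a read at the same index. The sketch below copies the alternation logic from `nextValue` into a standalone class (without the JWalk interface, so it runs on its own) and uses it to drive a put followed by a get on an array. `PairedIndexDemo` and `nextIndex` are names made up for this illustration.

```java
// Hypothetical standalone demo: the same flag/seed alternation as IndexGenerator.
class PairedIndexDemo {
    private int seed = 1;
    private boolean flag = false;

    /** Returns each index twice in a row: 1, 1, 2, 2, 3, 3, ... */
    int nextIndex() {
        if (flag) {
            flag = false;
            return seed++;
        }
        flag = true;
        return seed;
    }

    public static void main(String[] args) {
        PairedIndexDemo gen = new PairedIndexDemo();
        String[] slots = new String[4];
        for (int i = 0; i < 3; i++) {
            int putAt = gen.nextIndex();
            slots[putAt] = "v" + putAt;                        // put at index k
            int getAt = gen.nextIndex();
            System.out.println(getAt + " -> " + slots[getAt]); // get at the same index k
        }
    }
}
```

With a plain rising sequence, the read would land on a different index from the preceding write and would only ever observe an empty slot.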

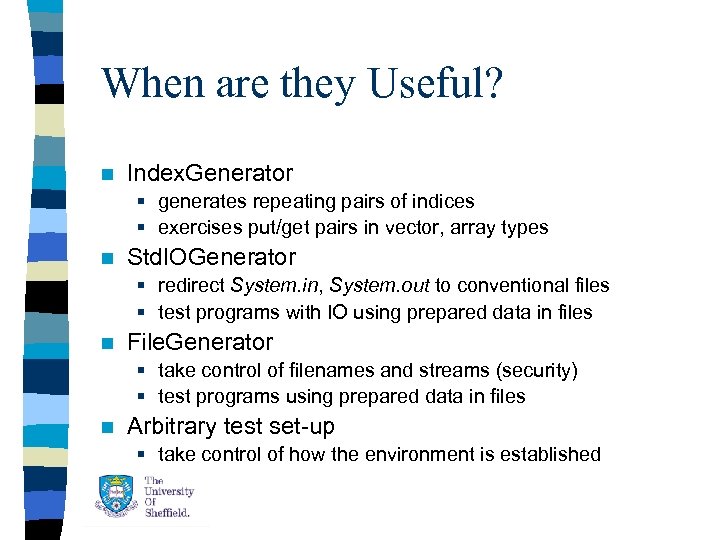

When are they Useful?

- IndexGenerator
  - generates repeating pairs of indices
  - exercises put/get pairs in vector, array types
- StdIOGenerator
  - redirect System.in, System.out to conventional files
  - test programs with IO using prepared data in files
- FileGenerator
  - take control of filenames and streams (security)
  - test programs using prepared data in files
- Arbitrary test set-up
  - take control of how the environment is established
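
The redirection that StdIOGenerator is described as performing can be sketched with the standard `System.setIn` and `System.setOut` calls. This is not StdIOGenerator's source, which these slides do not show; `StdIoRedirect`, `runWithFiles` and the temporary file names are hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Scanner;

// Hypothetical sketch: run code with standard input and output bound to ordinary files.
class StdIoRedirect {

    /** Runs body with System.in read from inFile and System.out written to outFile. */
    static void runWithFiles(Path inFile, Path outFile, Runnable body) throws IOException {
        InputStream oldIn = System.in;
        PrintStream oldOut = System.out;
        try (InputStream in = Files.newInputStream(inFile);
             PrintStream out = new PrintStream(Files.newOutputStream(outFile), true, "UTF-8")) {
            System.setIn(in);
            System.setOut(out);
            body.run();
        } finally {
            System.setIn(oldIn);    // always restore the real console streams
            System.setOut(oldOut);
        }
    }

    public static void main(String[] args) throws IOException {
        Path in = Files.createTempFile("prepared-input", ".txt");
        Path out = Files.createTempFile("captured-output", ".txt");
        Files.write(in, "Ada\n".getBytes(StandardCharsets.UTF_8)); // prepared test data

        // Stand-in for a program under test that reads a name and greets it.
        runWithFiles(in, out, () -> {
            Scanner scanner = new Scanner(System.in, "UTF-8");
            System.out.println("Hello, " + scanner.nextLine());
        });

        String captured = new String(Files.readAllBytes(out), StandardCharsets.UTF_8);
        System.out.println("captured: " + captured.trim());
    }
}
```

Restoring the original streams in the `finally` block keeps one redirected test from affecting whatever runs after it.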
