- Number of slides: 41

Large-Scale Extraction and Use of Knowledge from Text
Peter Clark and Phil Harrison
Boeing Research and Technology, Seattle, WA

Motivation and Goals
- World knowledge is required for many AI tasks
  - especially natural language processing, e.g. "The man ate spaghetti with a fork"
- BUT: not many knowledge resources are available
- This work: create a large knowledge resource
  - called DART (Discovery and Aggregation of Relations in Text)
  - based on Schubert's conjecture
  - publicly available

Outline
- Extracting Knowledge from Text
- Using the Knowledge
  1. Parsing
  2. Recognizing Textual Entailment
  3. Human Evaluation
- Future Work

Extracting Knowledge from Text
Schubert's Conjecture: There is a largely untapped source of general knowledge in texts, lying at a level beneath the explicit assertional content, which can be harnessed.
"The camouflaged helicopter landed near the embassy."
- helicopters can land
- helicopters can be camouflaged

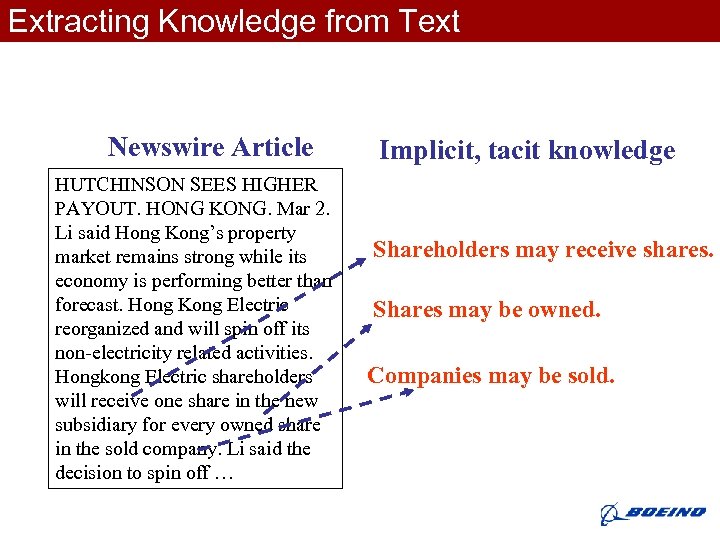

Extracting Knowledge from Text
Newswire article:
HUTCHINSON SEES HIGHER PAYOUT. HONG KONG. Mar 2. Li said Hong Kong's property market remains strong while its economy is performing better than forecast. Hong Kong Electric reorganized and will spin off its non-electricity related activities. Hongkong Electric shareholders will receive one share in the new subsidiary for every owned share in the sold company. Li said the decision to spin off …
Implicit, tacit knowledge:
- Shareholders may receive shares.
- Shares may be owned.
- Companies may be sold.

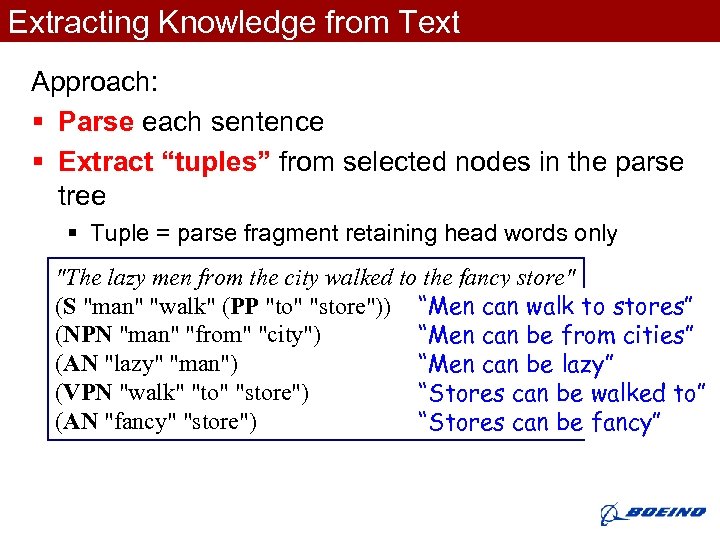

Extracting Knowledge from Text
Approach:
- Parse each sentence
- Extract "tuples" from selected nodes in the parse tree
  - Tuple = parse fragment retaining head words only
"The lazy men from the city walked to the fancy store"
(S "man" "walk" (PP "to" "store"))    "Men can walk to stores"
(NPN "man" "from" "city")             "Men can be from cities"
(AN "lazy" "man")                     "Men can be lazy"
(VPN "walk" "to" "store")             "Stores can be walked to"
(AN "fancy" "store")                  "Stores can be fancy"

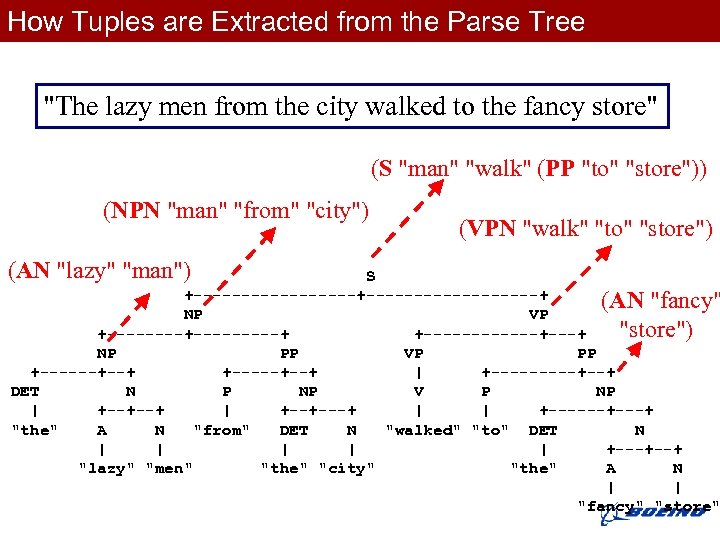

How Tuples are Extracted from the Parse Tree
"The lazy men from the city walked to the fancy store"
Parse tree (bracketed form):
(S
  (NP
    (NP (DET "the") (N (A "lazy") (N "men")))
    (PP (P "from") (NP (DET "the") (N "city"))))
  (VP
    (VP (V "walked"))
    (PP (P "to") (NP (DET "the") (N (A "fancy") (N "store"))))))
Extracted tuples:
(S "man" "walk" (PP "to" "store"))
(NPN "man" "from" "city")
(AN "lazy" "man")
(VPN "walk" "to" "store")
(AN "fancy" "store")
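
The walk from parse tree to tuples can be sketched in a few lines of Python. This is only an illustration, not the authors' extractor: the nested-tuple tree encoding, the `LEMMAS` table, the `HEAD_CHILD` head rules, and the four pattern checks in `extract` are all assumptions chosen to reproduce the five tuples shown for this one sentence.

```python
# Toy lemma table (assumption); a real system would use a morphological analyzer.
LEMMAS = {"men": "man", "walked": "walk"}

# Which child carries the head word, per phrase label (illustrative rules only).
HEAD_CHILD = {"NP": ("NP", "N"), "N": ("N",), "VP": ("VP", "V")}


def is_leaf(node):
    """A leaf is (label, word); an inner node is (label, child, child, ...)."""
    return isinstance(node[1], str)


def head(node):
    """Return the lemmatized head word of a subtree."""
    if is_leaf(node):
        return LEMMAS.get(node[1], node[1])
    for kid in node[1:]:
        if kid[0] in HEAD_CHILD[node[0]]:
            return head(kid)
    raise ValueError(f"no head child under {node[0]}")


def pp_parts(pp):
    """Split (PP (P x) (NP ...)) into (preposition, head noun)."""
    return head(pp[1]), head(pp[2])


def extract(node, out=None):
    """Collect tuples top-down, keeping head words only."""
    out = [] if out is None else out
    if is_leaf(node):
        return out
    label, kids = node[0], node[1:]
    shape = [k[0] for k in kids]
    if label == "S" and shape == ["NP", "VP"] and [k[0] for k in kids[1][1:]] == ["VP", "PP"]:
        out.append(("S", head(kids[0]), head(kids[1]), ("PP", *pp_parts(kids[1][2]))))
    elif label == "NP" and shape == ["NP", "PP"]:
        out.append(("NPN", head(kids[0]), *pp_parts(kids[1])))
    elif label == "VP" and shape == ["VP", "PP"]:
        out.append(("VPN", head(kids[0]), *pp_parts(kids[1])))
    elif label == "N" and shape == ["A", "N"]:
        out.append(("AN", head(kids[0]), head(kids[1])))
    for kid in kids:
        extract(kid, out)
    return out


TREE = ("S",
        ("NP",
         ("NP", ("DET", "the"), ("N", ("A", "lazy"), ("N", "men"))),
         ("PP", ("P", "from"), ("NP", ("DET", "the"), ("N", "city")))),
        ("VP",
         ("VP", ("V", "walked")),
         ("PP", ("P", "to"), ("NP", ("DET", "the"), ("N", ("A", "fancy"), ("N", "store"))))))

TUPLES = extract(TREE)
```

Dropping determiners and modifiers falls out of the head rules: only the head word of each phrase survives into a tuple.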

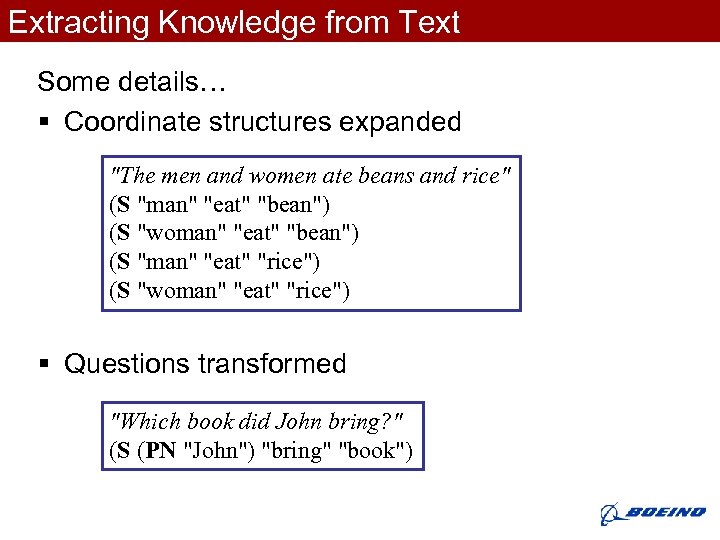

Extracting Knowledge from Text
Some details…
- Coordinate structures expanded
  "The men and women ate beans and rice"
  (S "man" "eat" "bean")
  (S "woman" "eat" "bean")
  (S "man" "eat" "rice")
  (S "woman" "eat" "rice")
- Questions transformed
  "Which book did John bring?"
  (S (PN "John") "bring" "book")
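
Expanding a coordinate structure amounts to taking the cross product of the conjoined heads. A minimal sketch, assuming the conjuncts have already been reduced to lemmatized head words (the function name `expand_coordination` is hypothetical):

```python
from itertools import product


def expand_coordination(subjects, verb, objects):
    """Return one (S subject verb object) tuple per subject/object pair."""
    # Objects vary in the outer loop so the order matches the slide.
    return [("S", subj, verb, obj) for obj, subj in product(objects, subjects)]


EXPANDED = expand_coordination(["man", "woman"], "eat", ["bean", "rice"])
```

Two subjects and two objects give four tuples, so "The men and women ate beans and rice" contributes four separate propositions to the database.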

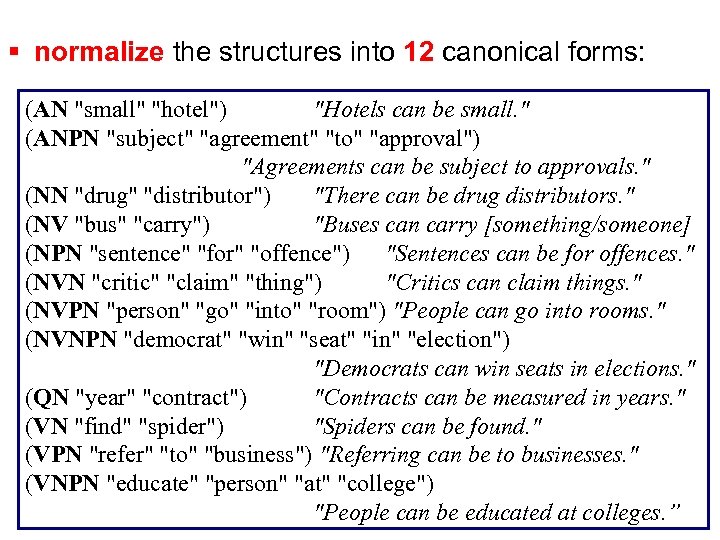

- Structures are normalized into 12 canonical forms:
(AN "small" "hotel")                              "Hotels can be small."
(ANPN "subject" "agreement" "to" "approval")      "Agreements can be subject to approvals."
(NN "drug" "distributor")                         "There can be drug distributors."
(NV "bus" "carry")                                "Buses can carry [something/someone]."
(NPN "sentence" "for" "offence")                  "Sentences can be for offences."
(NVN "critic" "claim" "thing")                    "Critics can claim things."
(NVPN "person" "go" "into" "room")                "People can go into rooms."
(NVNPN "democrat" "win" "seat" "in" "election")   "Democrats can win seats in elections."
(QN "year" "contract")                            "Contracts can be measured in years."
(VN "find" "spider")                              "Spiders can be found."
(VPN "refer" "to" "business")                     "Referring can be to businesses."
(VNPN "educate" "person" "at" "college")          "People can be educated at colleges."
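
Turning a tuple into its English gloss can be approximated with one template per form. The sketch below is an assumption-laden illustration, not DART's verbalizer: the `plural` helper is deliberately naive, `IRREGULAR_PLURALS` is a toy table, and only the active-voice forms are covered (passive glosses such as "Spiders can be found" would also need past participles).

```python
# Toy irregular-plural table (assumption); extend as needed.
IRREGULAR_PLURALS = {"person": "people"}


def plural(noun):
    """Naive English pluralization, good enough for the examples here."""
    if noun in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[noun]
    if noun.endswith(("s", "x", "ch", "sh")):
        return noun + "es"
    return noun + "s"


# One template per canonical form (active-voice forms only).
TEMPLATES = {
    "AN": lambda a, n: f"{plural(n)} can be {a}.",
    "NN": lambda n1, n2: f"There can be {n1} {plural(n2)}.",
    "NV": lambda n, v: f"{plural(n)} can {v}.",
    "NPN": lambda n1, p, n2: f"{plural(n1)} can be {p} {plural(n2)}.",
    "NVN": lambda n1, v, n2: f"{plural(n1)} can {v} {plural(n2)}.",
    "NVPN": lambda n1, v, p, n2: f"{plural(n1)} can {v} {p} {plural(n2)}.",
    "NVNPN": lambda n1, v, n2, p, n3: f"{plural(n1)} can {v} {plural(n2)} {p} {plural(n3)}.",
}


def verbalize(tup):
    """Render a tuple such as ("AN", "small", "hotel") as a sentence."""
    tag, *words = tup
    sentence = TEMPLATES[tag.upper()](*words)
    return sentence[0].upper() + sentence[1:]
```

For example, `verbalize(("NVPN", "person", "go", "into", "room"))` yields "People can go into rooms."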

Generating the DART Database
- Run over Reuters + British National Corpus
  - 2 months of CPU time
- Result: the DART database (Discovery and Aggregation of Relations in Text)
  - 23 million propositions (110 million with duplicates)

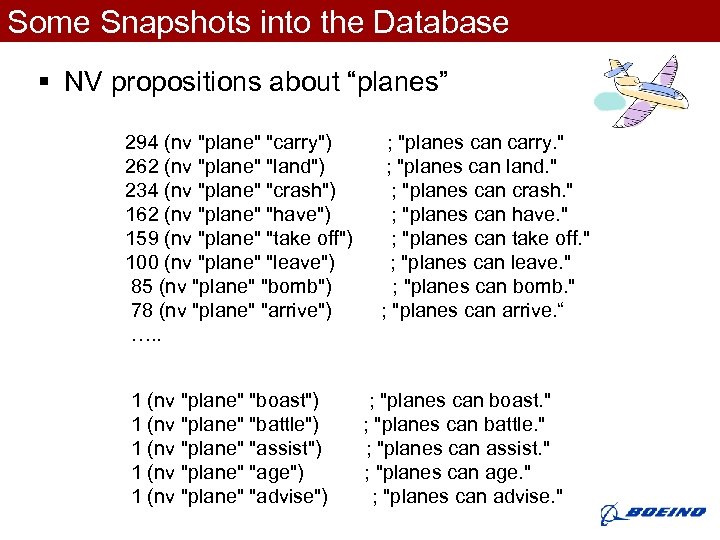

Some Snapshots into the Database
- NV propositions about "planes"
294 (nv "plane" "carry")       ; "planes can carry."
262 (nv "plane" "land")        ; "planes can land."
234 (nv "plane" "crash")       ; "planes can crash."
162 (nv "plane" "have")        ; "planes can have."
159 (nv "plane" "take off")    ; "planes can take off."
100 (nv "plane" "leave")       ; "planes can leave."
85 (nv "plane" "bomb")         ; "planes can bomb."
78 (nv "plane" "arrive")       ; "planes can arrive."
…
1 (nv "plane" "boast")         ; "planes can boast."
1 (nv "plane" "battle")        ; "planes can battle."
1 (nv "plane" "assist")        ; "planes can assist."
1 (nv "plane" "age")           ; "planes can age."
1 (nv "plane" "advise")        ; "planes can advise."

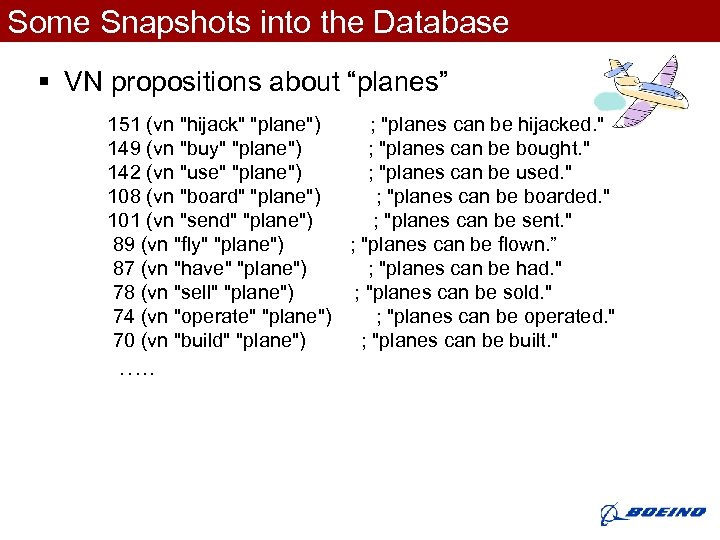

Some Snapshots into the Database
- VN propositions about "planes"
151 (vn "hijack" "plane")     ; "planes can be hijacked."
149 (vn "buy" "plane")        ; "planes can be bought."
142 (vn "use" "plane")        ; "planes can be used."
108 (vn "board" "plane")      ; "planes can be boarded."
101 (vn "send" "plane")       ; "planes can be sent."
89 (vn "fly" "plane")         ; "planes can be flown."
87 (vn "have" "plane")        ; "planes can be had."
78 (vn "sell" "plane")        ; "planes can be sold."
74 (vn "operate" "plane")     ; "planes can be operated."
70 (vn "build" "plane")       ; "planes can be built."
…

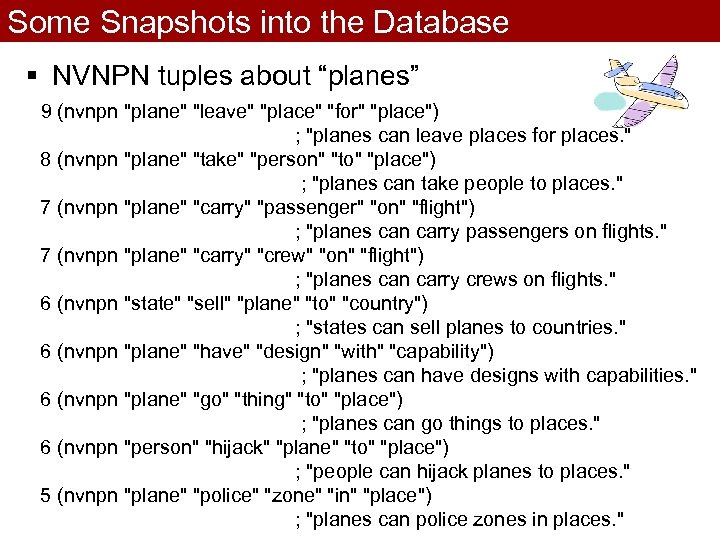

Some Snapshots into the Database
- NVNPN tuples about "planes"
9 (nvnpn "plane" "leave" "place" "for" "place")          ; "planes can leave places for places."
8 (nvnpn "plane" "take" "person" "to" "place")           ; "planes can take people to places."
7 (nvnpn "plane" "carry" "passenger" "on" "flight")      ; "planes can carry passengers on flights."
7 (nvnpn "plane" "carry" "crew" "on" "flight")           ; "planes can carry crews on flights."
6 (nvnpn "state" "sell" "plane" "to" "country")          ; "states can sell planes to countries."
6 (nvnpn "plane" "have" "design" "with" "capability")    ; "planes can have designs with capabilities."
6 (nvnpn "plane" "go" "thing" "to" "place")              ; "planes can go things to places."
6 (nvnpn "person" "hijack" "plane" "to" "place")         ; "people can hijack planes to places."
5 (nvnpn "plane" "police" "zone" "in" "place")           ; "planes can police zones in places."

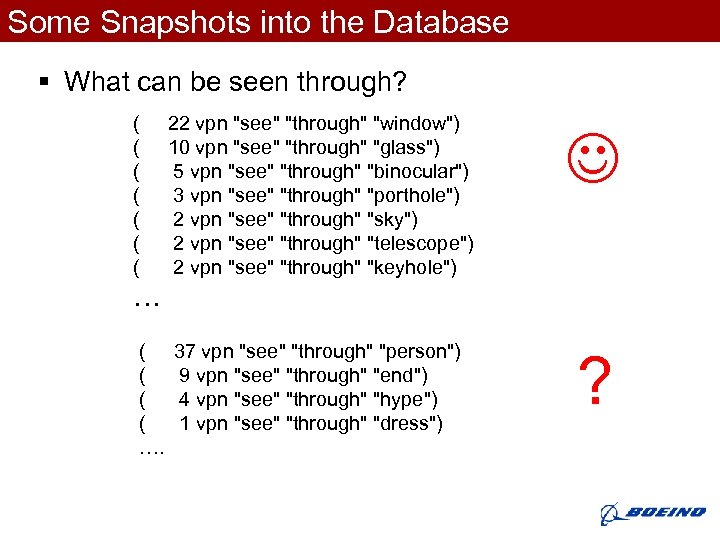

Some Snapshots into the Database
- What can be seen through?
22 (vpn "see" "through" "window")
10 (vpn "see" "through" "glass")
5 (vpn "see" "through" "binocular")
3 (vpn "see" "through" "porthole")
2 (vpn "see" "through" "sky")
2 (vpn "see" "through" "telescope")
2 (vpn "see" "through" "keyhole")
…
37 (vpn "see" "through" "person")
9 (vpn "see" "through" "end")
4 (vpn "see" "through" "hype")
1 (vpn "see" "through" "dress") ?

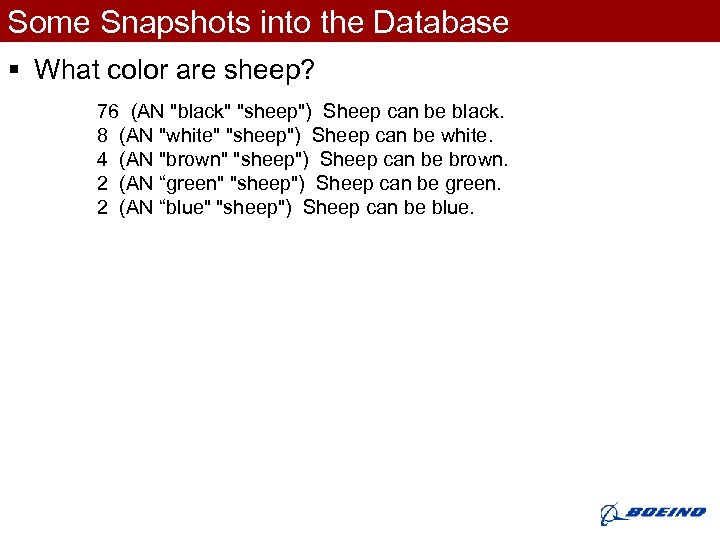

Some Snapshots into the Database
- What color are sheep?
76 (AN "black" "sheep")    Sheep can be black.
8 (AN "white" "sheep")     Sheep can be white.
4 (AN "brown" "sheep")     Sheep can be brown.
2 (AN "green" "sheep")     Sheep can be green.
2 (AN "blue" "sheep")      Sheep can be blue.

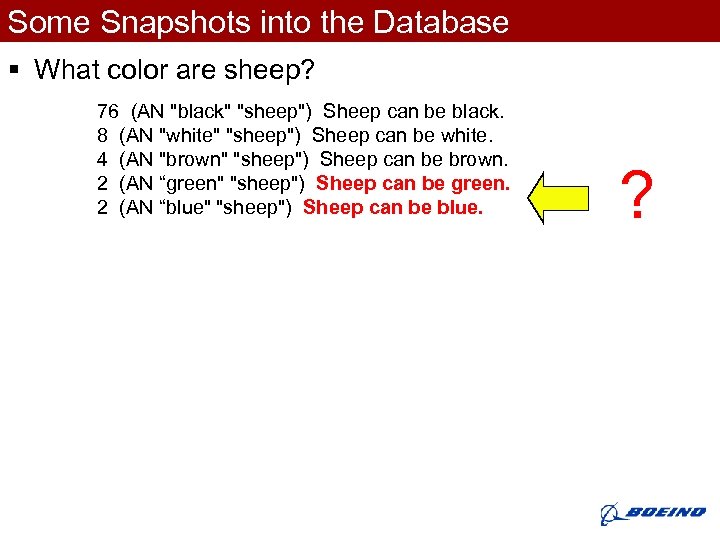

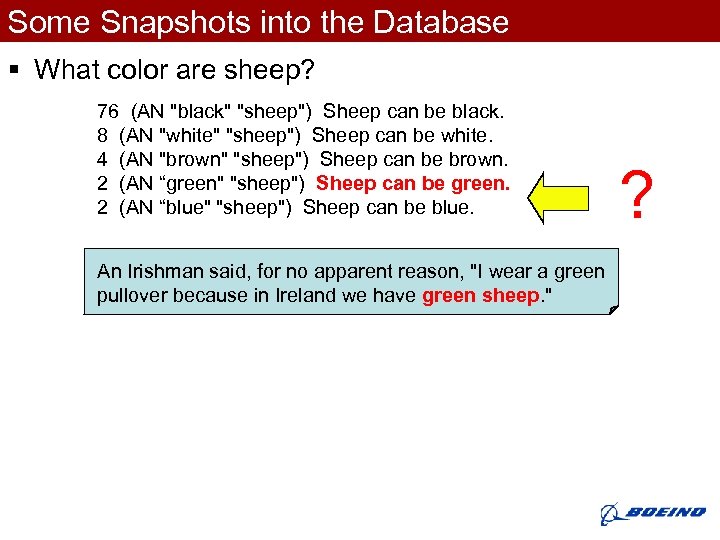

Some Snapshots into the Database
- What color are sheep?
76 (AN "black" "sheep")    Sheep can be black.
8 (AN "white" "sheep")     Sheep can be white.
4 (AN "brown" "sheep")     Sheep can be brown.
2 (AN "green" "sheep")     Sheep can be green. ?
2 (AN "blue" "sheep")      Sheep can be blue.
An Irishman said, for no apparent reason, "I wear a green pullover because in Ireland we have green sheep."

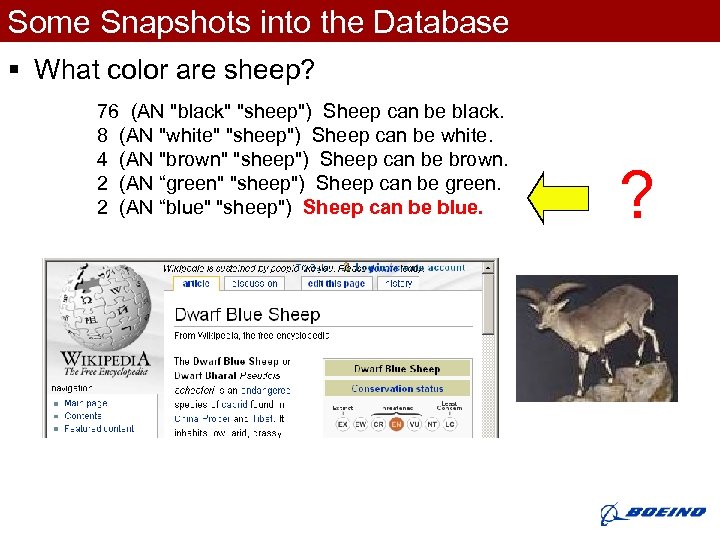

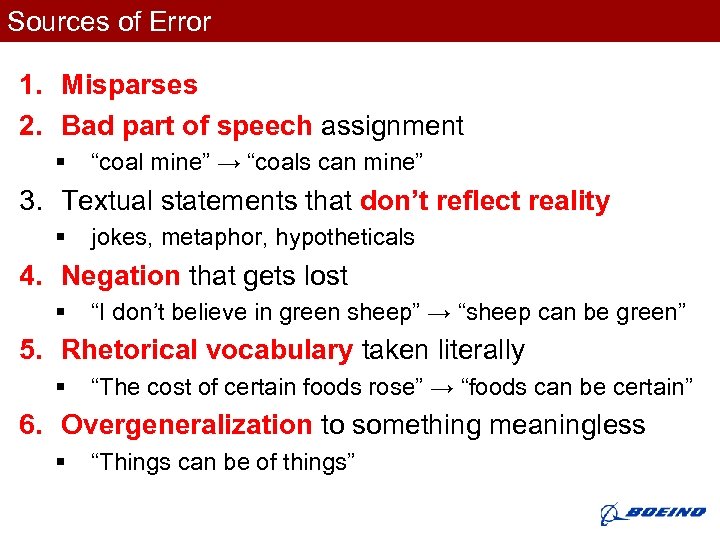

Sources of Error
1. Misparses
2. Bad part-of-speech assignment
   - "coal mine" → "coals can mine"
3. Textual statements that don't reflect reality
   - jokes, metaphor, hypotheticals
4. Negation that gets lost
   - "I don't believe in green sheep" → "sheep can be green"
5. Rhetorical vocabulary taken literally
   - "The cost of certain foods rose" → "foods can be certain"
6. Overgeneralization to something meaningless
   - "Things can be of things"

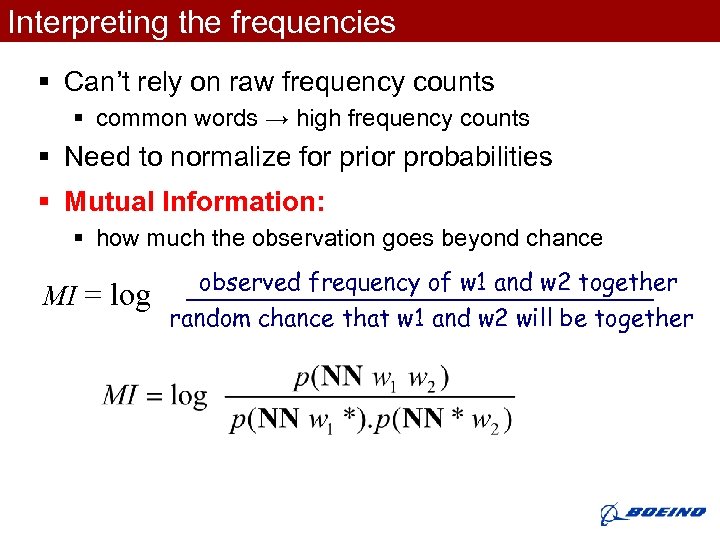

Interpreting the frequencies
- Can't rely on raw frequency counts
  - common words → high frequency counts
- Need to normalize for prior probabilities
- Mutual Information:
  - how much the observation goes beyond chance

$$\mathrm{MI} = \log \frac{\text{observed frequency of } w_1 \text{ and } w_2 \text{ together}}{\text{random chance that } w_1 \text{ and } w_2 \text{ will be together}}$$
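
As a rough sketch of how such a score could be computed from counts: the code below assumes that "random chance" means the product of the two words' individual relative frequencies (independence), uses the natural logarithm, and maps a zero co-occurrence count to 0.0 because the logarithm is undefined there. None of these choices is stated on the slide, and the function name is hypothetical.

```python
import math


def mutual_information(pair_count, w1_count, w2_count, total):
    """log(observed co-occurrence rate / rate expected if w1 and w2 were independent)."""
    if pair_count == 0:
        return 0.0  # convention for unseen pairs (assumption)
    observed = pair_count / total
    expected = (w1_count / total) * (w2_count / total)
    return math.log(observed / expected)
```

A pair seen exactly as often as chance predicts scores about 0; a pair seen more often than chance scores above 0, however common or rare the individual words are.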

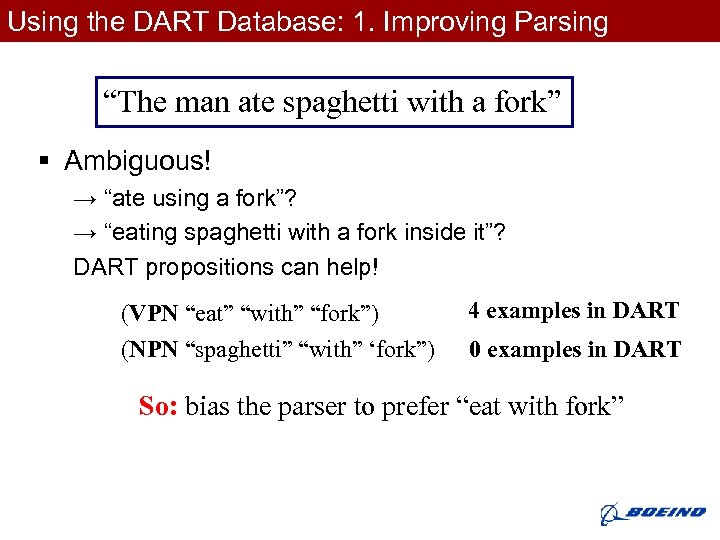

Using the DART Database: 1. Improving Parsing
"The man ate spaghetti with a fork"
- Ambiguous!
  → "ate using a fork"?
  → "eating spaghetti with a fork inside it"?
DART propositions can help!
(VPN "eat" "with" "fork")          4 examples in DART
(NPN "spaghetti" "with" "fork")    0 examples in DART
So: bias the parser to prefer "eat with fork"

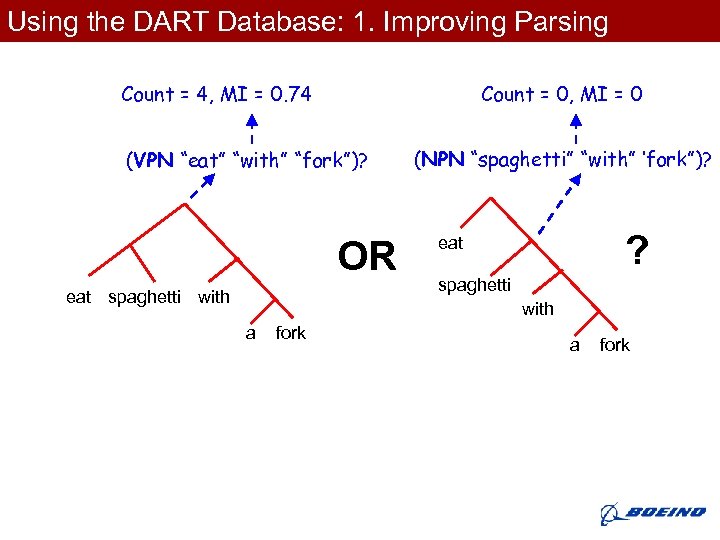

Using the DART Database: 1. Improving Parsing
"eat spaghetti with a fork": which attachment?
(VPN "eat" "with" "fork")?          Count = 4, MI = 0.74
OR
(NPN "spaghetti" "with" "fork")?    Count = 0, MI = 0
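
The attachment decision can be sketched as a comparison of the two candidate tuples' scores. This is an illustration under assumptions, not the parser's actual biasing mechanism: `TUPLE_STATS` is a hypothetical in-memory stand-in for a DART lookup, holding only the one figure given on the slide, and the function returns `None` when the database offers no evidence either way.

```python
# Stand-in for a DART lookup (assumption); values are those shown on the slide.
TUPLE_STATS = {
    ("VPN", "eat", "with", "fork"): {"count": 4, "mi": 0.74},
}


def stats(tup):
    """Unseen tuples get count 0 and MI 0."""
    return TUPLE_STATS.get(tup, {"count": 0, "mi": 0.0})


def prefer_attachment(verb, obj, prep, pobj):
    """Return "verb", "noun", or None when the scores do not differ."""
    verb_mi = stats(("VPN", verb, prep, pobj))["mi"]
    noun_mi = stats(("NPN", obj, prep, pobj))["mi"]
    if verb_mi > noun_mi:
        return "verb"
    if noun_mi > verb_mi:
        return "noun"
    return None  # no evidence: leave the choice to the parser's built-in bias


CHOICE = prefer_attachment("eat", "spaghetti", "with", "fork")
```

Here `CHOICE` is "verb", i.e. "with a fork" attaches to "ate" rather than to "spaghetti".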

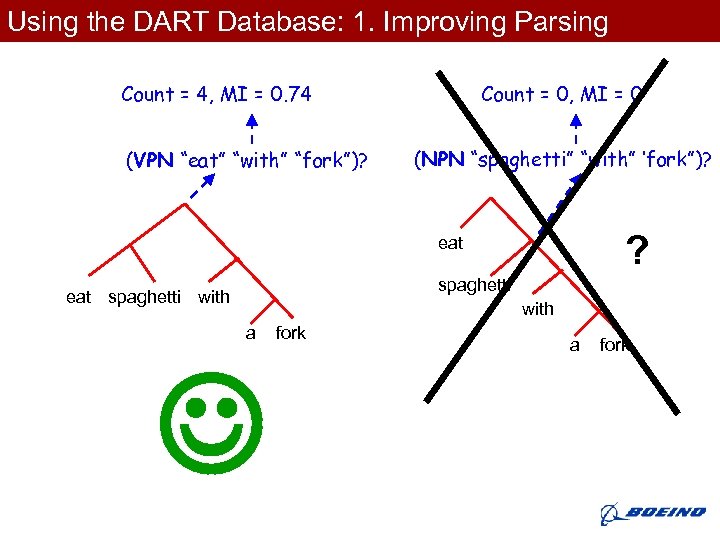

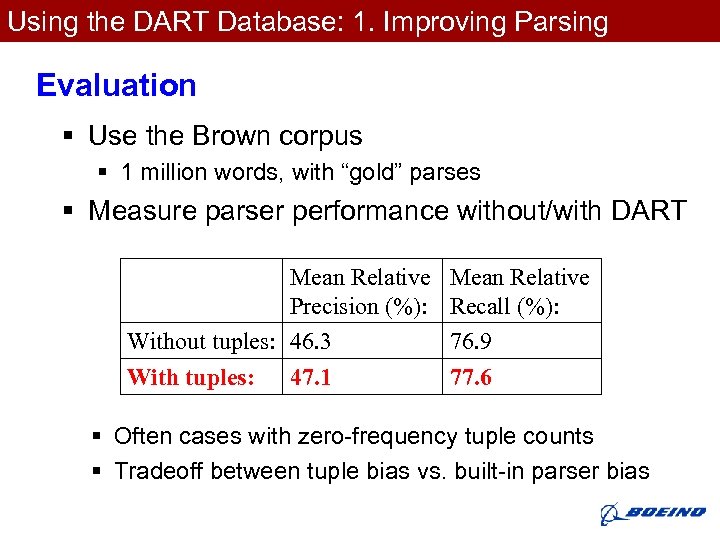

Using the DART Database: 1. Improving Parsing
Evaluation
- Use the Brown corpus
  - 1 million words, with "gold" parses
- Measure parser performance without/with DART

                               Without tuples   With tuples
Mean Relative Precision (%)    46.3             47.1
Mean Relative Recall (%)       76.9             77.6

- Often cases with zero-frequency tuple counts
- Tradeoff between tuple bias vs. built-in parser bias

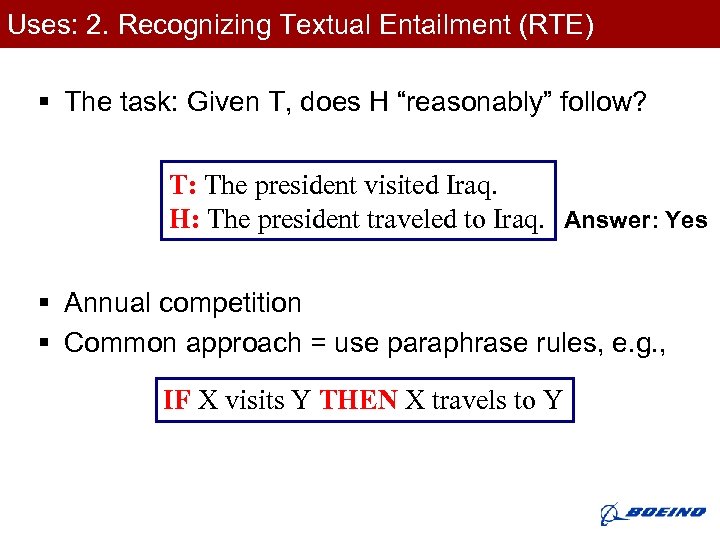

Uses: 2. Recognizing Textual Entailment (RTE)
- The task: given T, does H "reasonably" follow?
  T: The president visited Iraq.
  H: The president traveled to Iraq.
  Answer: Yes
- Annual competition
- Common approach: use paraphrase rules, e.g.,
  IF X visits Y THEN X travels to Y

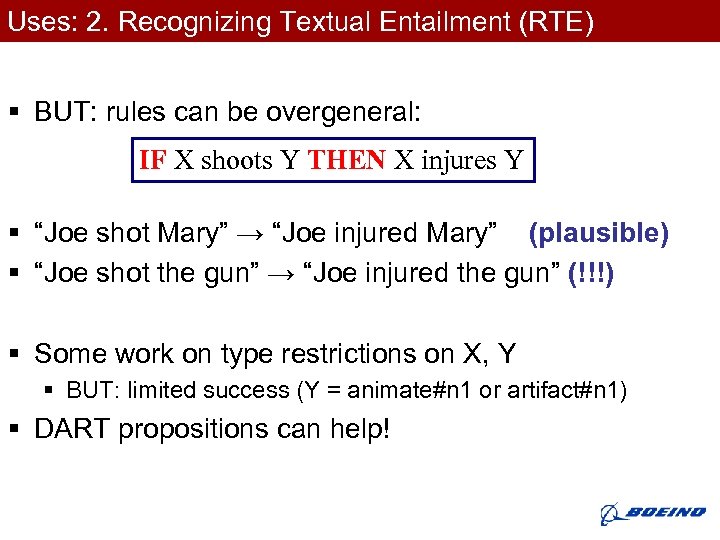

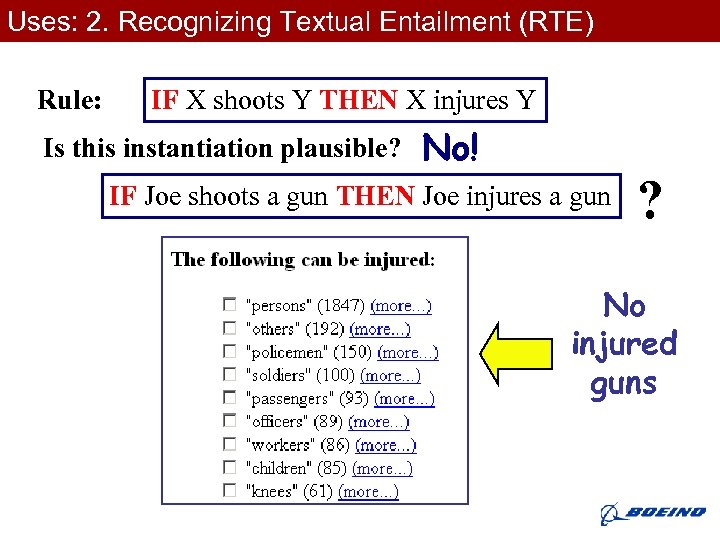

Uses: 2. Recognizing Textual Entailment (RTE)
- BUT: rules can be overgeneral:
  IF X shoots Y THEN X injures Y
  - "Joe shot Mary" → "Joe injured Mary" (plausible)
  - "Joe shot the gun" → "Joe injured the gun" (!!!)
- Some work on type restrictions on X, Y
  - BUT: limited success (Y = animate#n1 or artifact#n1)
- DART propositions can help!

Uses: 2. Recognizing Textual Entailment (RTE)
Rule: IF X shoots Y THEN X injures Y
Is this instantiation plausible?
  IF Joe shoots a gun THEN Joe injures a gun ?
No! There are no injured guns in DART.
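
One way to read this check is as a lookup on the rule's consequent: before firing "X injures Y", ask whether the database has ever seen Y being injured. The sketch below assumes a VN tuple lookup and a hypothetical `TOY_DART` excerpt whose single count is a placeholder, not a real DART figure; how the actual system derives its confidence is not shown in the slides.

```python
# Hypothetical excerpt; the count is a placeholder, not a DART figure.
TOY_DART = {("VN", "injure", "person"): 1}


def instantiation_plausible(consequent_verb, object_head, db=TOY_DART, min_count=1):
    """True if the database has seen the object undergoing the consequent verb."""
    return db.get(("VN", consequent_verb, object_head), 0) >= min_count


# "Joe shot Mary" → "Joe injured Mary": people can be injured.
MARY_OK = instantiation_plausible("injure", "person")
# "Joe shot the gun" → "Joe injured the gun": no injured guns on record.
GUN_OK = instantiation_plausible("injure", "gun")
```

With this excerpt `MARY_OK` is `True` and `GUN_OK` is `False`, so the rule would be blocked for the gun example only.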

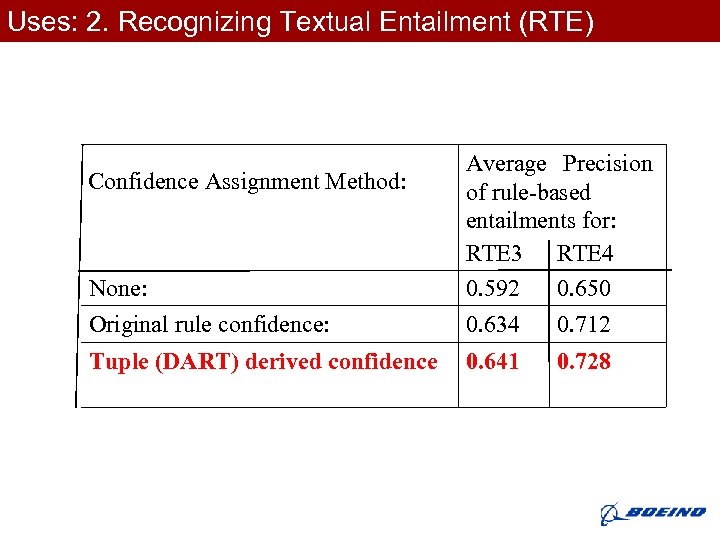

Uses: 2. Recognizing Textual Entailment (RTE)
Average precision of rule-based entailments:

Confidence assignment method        RTE3     RTE4
None                                0.592    0.650
Original rule confidence            0.634    0.712
Tuple (DART) derived confidence     0.641    0.728

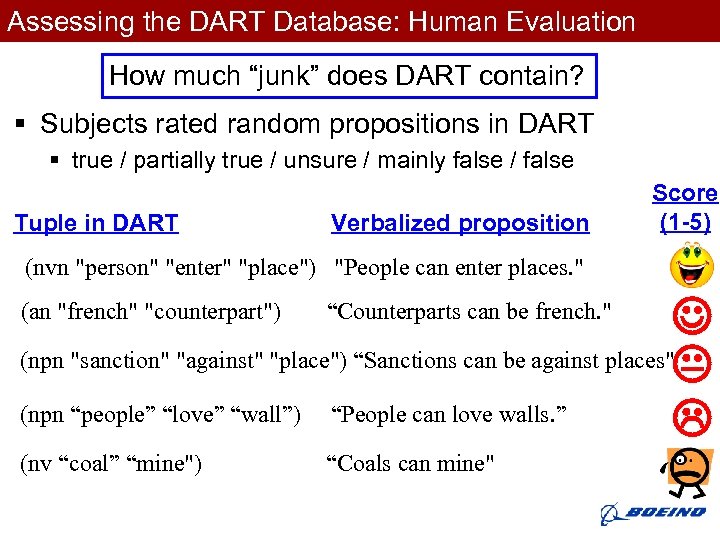

Assessing the DART Database: Human Evaluation
How much "junk" does DART contain?
- Subjects rated random propositions in DART
  - true / partially true / unsure / mainly false / false

Tuple in DART                         Verbalized proposition               Score (1-5)
(nvn "person" "enter" "place")        "People can enter places."
(npn "sanction" "against" "place")    "Sanctions can be against places."
(nvn "people" "love" "wall")          "People can love walls."
(an "french" "counterpart")           "Counterparts can be french."
(nv "coal" "mine")                    "Coals can mine."

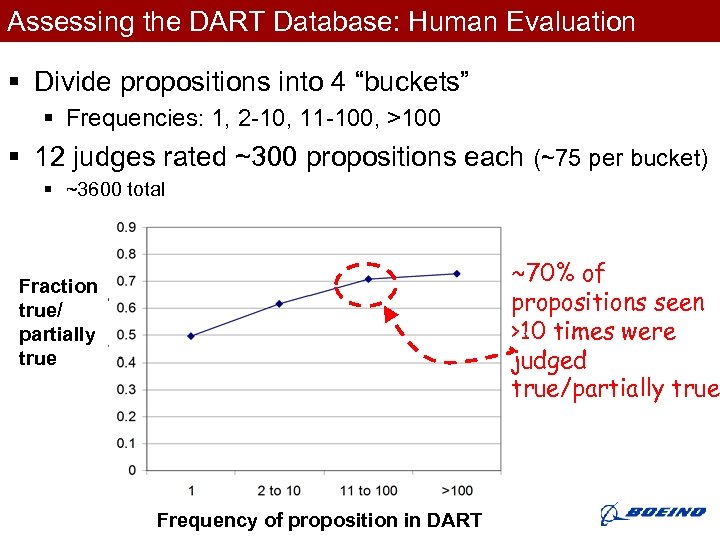

Assessing the DART Database: Human Evaluation
- Divide propositions into 4 "buckets" by frequency: 1, 2-10, 11-100, >100
- 12 judges rated ~300 propositions each (~75 per bucket)
  - ~3600 total
- ~70% of propositions seen >10 times were judged true/partially true
[Chart: fraction judged true/partially true vs. frequency of proposition in DART]
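
The bucketing step is simple enough to sketch. The bucket boundaries come from the slide; the function names, the fixed random seed, and the use of uniform sampling within each bucket are assumptions made for illustration.

```python
import random
from collections import defaultdict


def frequency_bucket(count):
    """Map a proposition's frequency to one of the four evaluation buckets."""
    if count < 1:
        raise ValueError("frequency must be at least 1")
    if count == 1:
        return "1"
    if count <= 10:
        return "2-10"
    if count <= 100:
        return "11-100"
    return ">100"


def sample_per_bucket(counts, per_bucket, seed=0):
    """Draw up to `per_bucket` propositions uniformly from each bucket."""
    buckets = defaultdict(list)
    for tup, count in counts.items():
        buckets[frequency_bucket(count)].append(tup)
    rng = random.Random(seed)
    return {
        name: rng.sample(sorted(members), min(per_bucket, len(members)))
        for name, members in buckets.items()
    }
```

With 4 buckets and about 75 propositions per bucket, each judge sees about 300 propositions, and 12 judges give about 3600 ratings in total.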

Outline
- Extracting Knowledge from Text
- Using the Knowledge
  1. Parsing
  2. Recognizing Textual Entailment
  3. Human Evaluation
- Discussion and Future Work

Other Resources
- KNEXT: inspiration for this work
  - we've expanded size, types of proposition, and evaluation
- TextRunner
  - larger, less structured database of triples (only), e.g.,
    ("iodine" "will kill" "the lactic bacteria")
    ("my friends" "had" "a car")
- ConceptNet
  - built by Web volunteers, ~20 relations, less structured, e.g.
    (CapableOf "a car" "crash")
    (UsedFor "a car" "getting to work")

Future Directions: Generalizing DART propositions
- Biggest limitation:
  - Still often zero or near-zero tuple counts for "good" propositions
- Can we generalize the propositions in DART?
  - thus cover the zero/near-zero cases

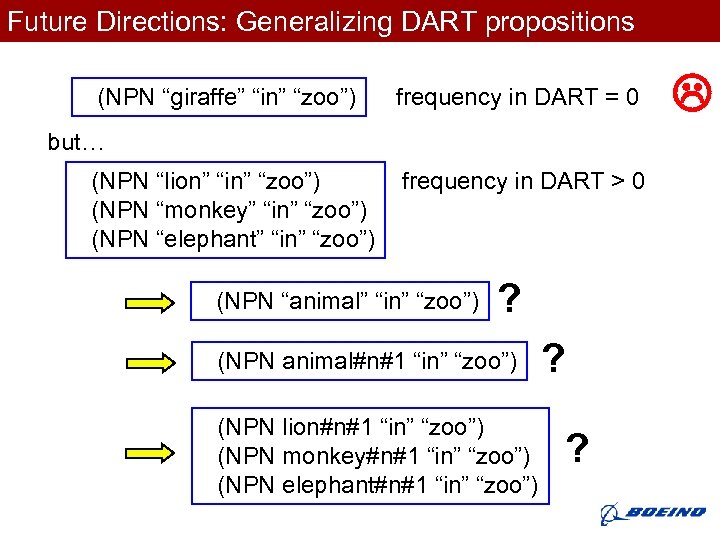

Future Directions: Generalizing DART propositions
(NPN "giraffe" "in" "zoo")      frequency in DART = 0
but…
(NPN "lion" "in" "zoo")
(NPN "monkey" "in" "zoo")
(NPN "elephant" "in" "zoo")     frequency in DART > 0
(NPN "animal" "in" "zoo") ?
(NPN animal#n#1 "in" "zoo")
(NPN lion#n#1 "in" "zoo")
(NPN monkey#n#1 "in" "zoo")
(NPN elephant#n#1 "in" "zoo")
? ?
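
One possible reading of this idea is a back-off: when a tuple has zero frequency, pool the counts of tuples whose head word shares its hypernym. The sketch below is speculative, since the slides pose generalization as an open question. `HYPERNYM` is a hypothetical stand-in for a WordNet lookup, and the counts in `TOY_COUNTS` are placeholders meaning only "frequency > 0".

```python
# Hypothetical hypernym table standing in for WordNet (assumption).
HYPERNYM = {"giraffe": "animal", "lion": "animal", "monkey": "animal", "elephant": "animal"}

# Placeholder counts meaning "frequency > 0"; not real DART figures.
TOY_COUNTS = {
    ("NPN", "lion", "in", "zoo"): 1,
    ("NPN", "monkey", "in", "zoo"): 1,
    ("NPN", "elephant", "in", "zoo"): 1,
}


def generalized_count(tup, counts=TOY_COUNTS, hypernym=HYPERNYM):
    """Direct count if nonzero; otherwise the pooled count of sibling head words."""
    direct = counts.get(tup, 0)
    if direct > 0:
        return direct
    tag, head_word, *rest = tup
    parent = hypernym.get(head_word)
    if parent is None:
        return 0
    return sum(
        c for (t, h, *r), c in counts.items()
        if t == tag and r == rest and hypernym.get(h) == parent
    )


GIRAFFE = generalized_count(("NPN", "giraffe", "in", "zoo"))
```

Here the unseen giraffe tuple borrows evidence from lions, monkeys, and elephants in zoos, while an unrelated tuple such as `("NPN", "giraffe", "in", "car")` still scores 0.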

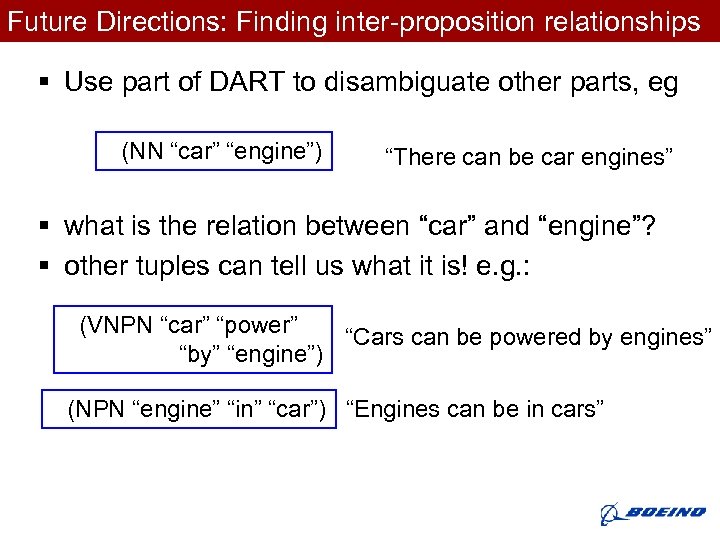

Future Directions: Finding inter-proposition relationships
- Use part of DART to disambiguate other parts, e.g.
  (NN "car" "engine")    "There can be car engines"
  - what is the relation between "car" and "engine"?
  - other tuples can tell us what it is! e.g.:
    (VNPN "power" "car" "by" "engine")    "Cars can be powered by engines"
    (NPN "engine" "in" "car")             "Engines can be in cars"

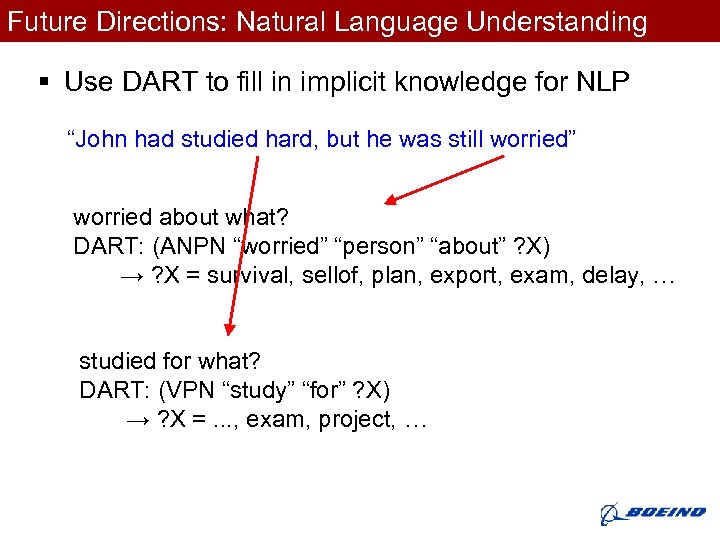

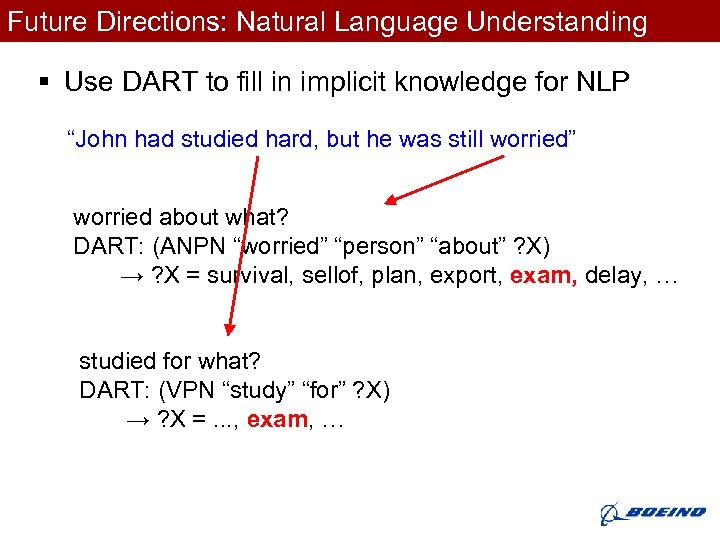

Future Directions: Natural Language Understanding
- Use DART to fill in implicit knowledge for NLP
"John had studied hard, but he was still worried"
- worried about what?
  DART: (ANPN "worried" "person" "about" ?X) → ?X = survival, selloff, plan, export, exam, delay, …
- studied for what?
  DART: (VPN "study" "for" ?X) → ?X = ..., exam, project, …
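
Queries with a `?X` variable can be sketched as simple pattern matching over stored tuples. In the sketch below, `TOY_DART` is a hypothetical excerpt built from fillers listed on the slide, and intersecting the two answer sets to find a filler that explains both clauses is an assumed strategy, not one the slides spell out.

```python
# Hypothetical excerpt using fillers listed on the slide.
TOY_DART = [
    ("ANPN", "worried", "person", "about", "exam"),
    ("ANPN", "worried", "person", "about", "delay"),
    ("VPN", "study", "for", "exam"),
    ("VPN", "study", "for", "project"),
]


def match(pattern, db=TOY_DART):
    """Return one variable binding per tuple that matches the pattern."""
    results = []
    for tup in db:
        if len(tup) != len(pattern):
            continue
        binding = {}
        for p, t in zip(pattern, tup):
            if p.startswith("?"):
                # A repeated variable must bind to the same word each time.
                if binding.setdefault(p, t) != t:
                    break
            elif p != t:
                break
        else:
            results.append(binding)
    return results


def shared_fillers(pattern_a, pattern_b, var="?X"):
    """Fillers for `var` that satisfy both patterns, in pattern_a's order."""
    second = {b[var] for b in match(pattern_b)}
    return [b[var] for b in match(pattern_a) if b[var] in second]


WORRIED = ("ANPN", "worried", "person", "about", "?X")
STUDIED = ("VPN", "study", "for", "?X")
COMMON = shared_fillers(WORRIED, STUDIED)
```

With this excerpt `COMMON` is `["exam"]`: the one thing John could plausibly both study for and be worried about.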

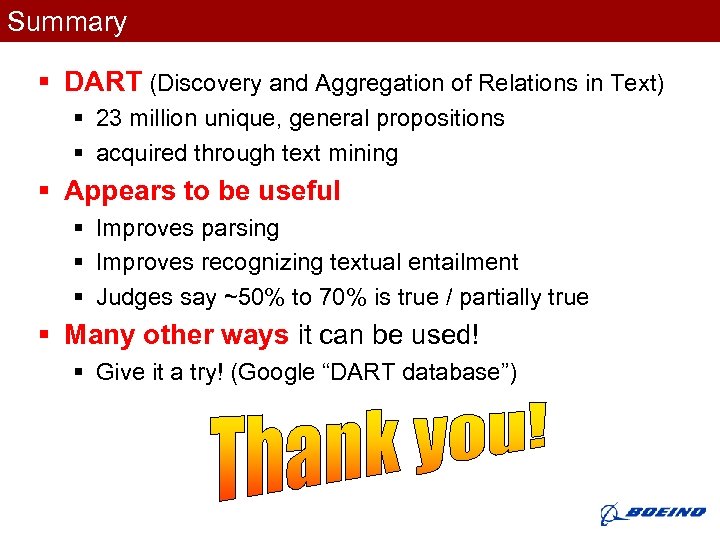

Summary
- DART (Discovery and Aggregation of Relations in Text)
  - 23 million unique, general propositions
  - acquired through text mining
- Appears to be useful
  - Improves parsing
  - Improves recognizing textual entailment
  - Judges say ~50% to 70% is true / partially true
- Many other ways it can be used!
- Give it a try! (Google "DART database")
