286672f48168709f2e72b7180b2321a6.ppt

- Количество слайдов: 46

Language and Speech Technology: Parsing Jan Odijk January 2011 LOT Winter School 2011 1

Language and Speech Technology: Parsing Jan Odijk January 2011 LOT Winter School 2011 1

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 2

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 2

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 3

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 3

Grammars • Grammar G = (VT, VN, P, S) where – VT terminal vocabulary – VN nonterminal vocabulary – P set of rules α→β (lhs → rhs) • α Є V N+ • β Є (VN U VT)* – S Є VN (start symbol) 4

Grammars • Grammar G = (VT, VN, P, S) where – VT terminal vocabulary – VN nonterminal vocabulary – P set of rules α→β (lhs → rhs) • α Є V N+ • β Є (VN U VT)* – S Є VN (start symbol) 4

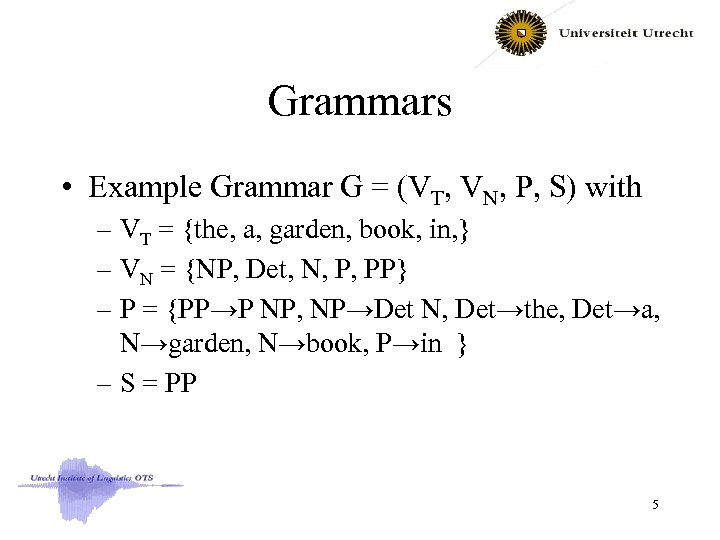

Grammars • Example Grammar G = (VT, VN, P, S) with – VT = {the, a, garden, book, in, } – VN = {NP, Det, N, P, PP} – P = {PP→P NP, NP→Det N, Det→the, Det→a, N→garden, N→book, P→in } – S = PP 5

Grammars • Example Grammar G = (VT, VN, P, S) with – VT = {the, a, garden, book, in, } – VN = {NP, Det, N, P, PP} – P = {PP→P NP, NP→Det N, Det→the, Det→a, N→garden, N→book, P→in } – S = PP 5

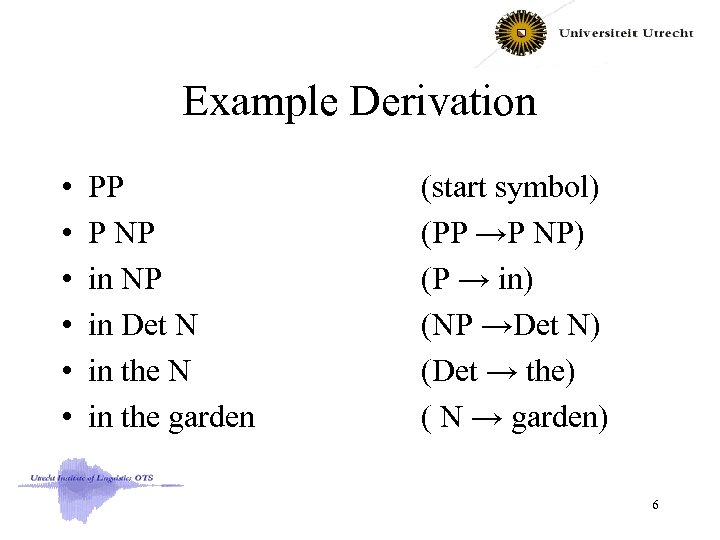

Example Derivation • • • PP P NP in Det N in the garden (start symbol) (PP →P NP) (P → in) (NP →Det N) (Det → the) ( N → garden) 6

Example Derivation • • • PP P NP in Det N in the garden (start symbol) (PP →P NP) (P → in) (NP →Det N) (Det → the) ( N → garden) 6

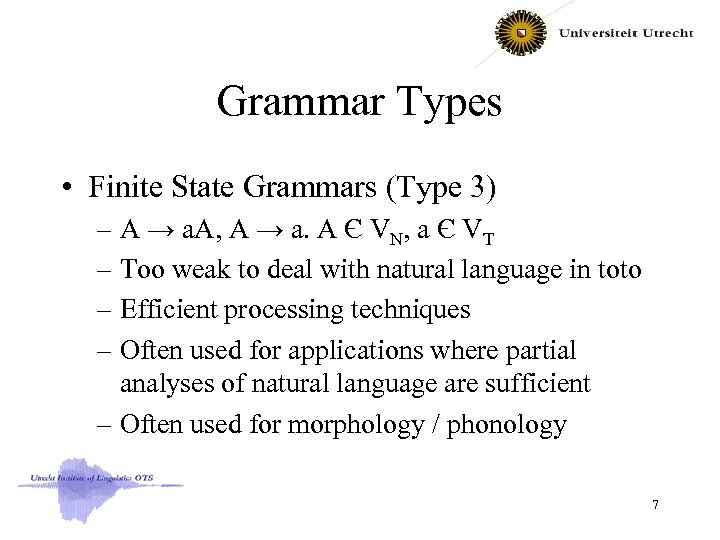

Grammar Types • Finite State Grammars (Type 3) – A → a. A, A → a. A Є VN, a Є VT – Too weak to deal with natural language in toto – Efficient processing techniques – Often used for applications where partial analyses of natural language are sufficient – Often used for morphology / phonology 7

Grammar Types • Finite State Grammars (Type 3) – A → a. A, A → a. A Є VN, a Є VT – Too weak to deal with natural language in toto – Efficient processing techniques – Often used for applications where partial analyses of natural language are sufficient – Often used for morphology / phonology 7

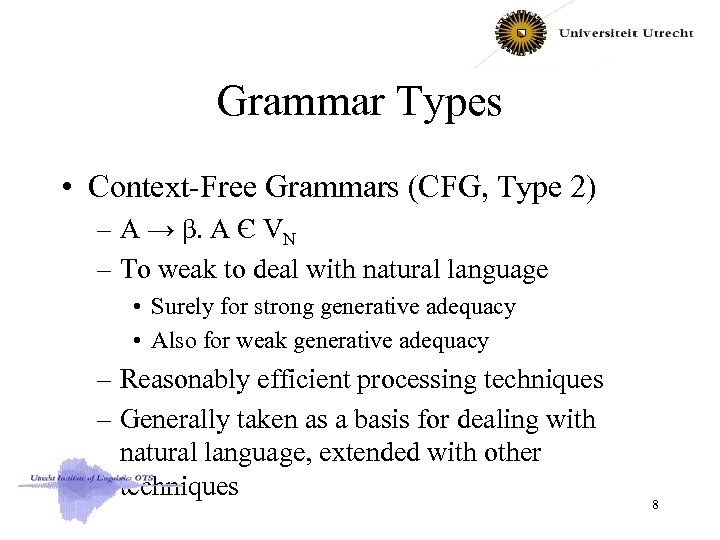

Grammar Types • Context-Free Grammars (CFG, Type 2) – A → β. A Є VN – To weak to deal with natural language • Surely for strong generative adequacy • Also for weak generative adequacy – Reasonably efficient processing techniques – Generally taken as a basis for dealing with natural language, extended with other techniques 8

Grammar Types • Context-Free Grammars (CFG, Type 2) – A → β. A Є VN – To weak to deal with natural language • Surely for strong generative adequacy • Also for weak generative adequacy – Reasonably efficient processing techniques – Generally taken as a basis for dealing with natural language, extended with other techniques 8

Grammar Types • Context-Sensitive Grammars (Type 1) – α→β, |α| <= |β| – Usually not considered in the context of NLP • Type-0 grammars – No restrictions – Usually not considered except in combination with CFG 9

Grammar Types • Context-Sensitive Grammars (Type 1) – α→β, |α| <= |β| – Usually not considered in the context of NLP • Type-0 grammars – No restrictions – Usually not considered except in combination with CFG 9

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 10

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 10

Parsing • Parsing – Is an algorithm • It must finish! – For assigning syntactic structures • Ambiguity! – To a sequence of terminal symbols – In accordance with a given grammar – (If possible, efficient) 11

Parsing • Parsing – Is an algorithm • It must finish! – For assigning syntactic structures • Ambiguity! – To a sequence of terminal symbols – In accordance with a given grammar – (If possible, efficient) 11

Parsing for CFGs • Focus here on – Parser for CFGs – for natural language – More specifically: Earley parser • Why? – Most NLP systems with a grammar use a parser for CFG as a basis – Basic techniques will also recur in parsers for 12 different grammar types

Parsing for CFGs • Focus here on – Parser for CFGs – for natural language – More specifically: Earley parser • Why? – Most NLP systems with a grammar use a parser for CFG as a basis – Basic techniques will also recur in parsers for 12 different grammar types

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 13

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 13

Naïve Parsing • see handout • Problems for naïve parsing – A lot of re-parsing of subtrees – Bottom-up • Wastes time and space on trees that cannot lead to S – Top-down • Wastes time and space on trees that cannot match input string 14

Naïve Parsing • see handout • Problems for naïve parsing – A lot of re-parsing of subtrees – Bottom-up • Wastes time and space on trees that cannot lead to S – Top-down • Wastes time and space on trees that cannot match input string 14

Naïve parsing • Top-down – Recursion problem • Can be solved for right-recursion by matching with input tokens, but • Problem with left recursion remains: – NP → NP PP • Ambiguity – Temporary ambiguity – Real ambiguity 15

Naïve parsing • Top-down – Recursion problem • Can be solved for right-recursion by matching with input tokens, but • Problem with left recursion remains: – NP → NP PP • Ambiguity – Temporary ambiguity – Real ambiguity 15

Naïve parsing • Naïve Parsing Complexity – Time needed to parse is exponential: – cn (c a constant, length input tokens) – (in the worst case) • Takes too much time • Is not practically feasible 16

Naïve parsing • Naïve Parsing Complexity – Time needed to parse is exponential: – cn (c a constant, length input tokens) – (in the worst case) • Takes too much time • Is not practically feasible 16

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 17

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 17

Earley Parser • Top-down approach but – Predictor avoids wasting time and space on irrelevant trees – Does not build actual structures, but stores enough information to reconstructures – Uses dynamic programming technique to avoid recomputation of subtrees – Avoids problems with left recursion – Makes complexity cubic: n 3 18

Earley Parser • Top-down approach but – Predictor avoids wasting time and space on irrelevant trees – Does not build actual structures, but stores enough information to reconstructures – Uses dynamic programming technique to avoid recomputation of subtrees – Avoids problems with left recursion – Makes complexity cubic: n 3 18

Earley Parser • Number positions in input string (0. . N) • 0 book 1 that 2 flight 3 • Notation [i, j] stands for the string from position i to position j – [0, 1] = “book” – [1, 3] = “that flight” – [2, 2]= “” 19

Earley Parser • Number positions in input string (0. . N) • 0 book 1 that 2 flight 3 • Notation [i, j] stands for the string from position i to position j – [0, 1] = “book” – [1, 3] = “that flight” – [2, 2]= “” 19

Earley Parser • Dotted Rules – is a grammar rule + indication of progress – ie. Which elements of the rhs have been seen yet and which ones not yet – Indicated by a dot (we use an asterisk) • Example – S → Aux NP * VP – Aux and NP have been dealt with but VP not yet 20

Earley Parser • Dotted Rules – is a grammar rule + indication of progress – ie. Which elements of the rhs have been seen yet and which ones not yet – Indicated by a dot (we use an asterisk) • Example – S → Aux NP * VP – Aux and NP have been dealt with but VP not yet 20

![Earley Parser • Input: – Sequence of N words (words[1. . N]), and – Earley Parser • Input: – Sequence of N words (words[1. . N]), and –](https://present5.com/presentation/286672f48168709f2e72b7180b2321a6/image-21.jpg) Earley Parser • Input: – Sequence of N words (words[1. . N]), and – grammar • Output: – a Store = (agenda, chart) • (sometimes chart = N+1 chart entries: chart[0. . N]) 21

Earley Parser • Input: – Sequence of N words (words[1. . N]), and – grammar • Output: – a Store = (agenda, chart) • (sometimes chart = N+1 chart entries: chart[0. . N]) 21

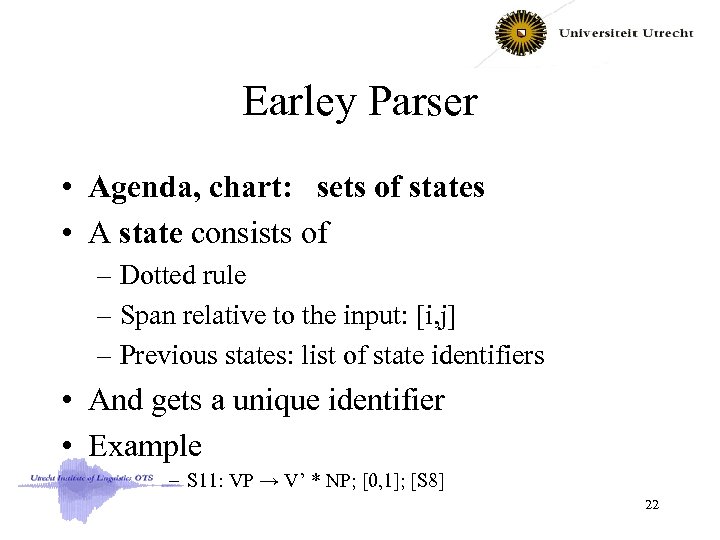

Earley Parser • Agenda, chart: sets of states • A state consists of – Dotted rule – Span relative to the input: [i, j] – Previous states: list of state identifiers • And gets a unique identifier • Example – S 11: VP → V’ * NP; [0, 1]; [S 8] 22

Earley Parser • Agenda, chart: sets of states • A state consists of – Dotted rule – Span relative to the input: [i, j] – Previous states: list of state identifiers • And gets a unique identifier • Example – S 11: VP → V’ * NP; [0, 1]; [S 8] 22

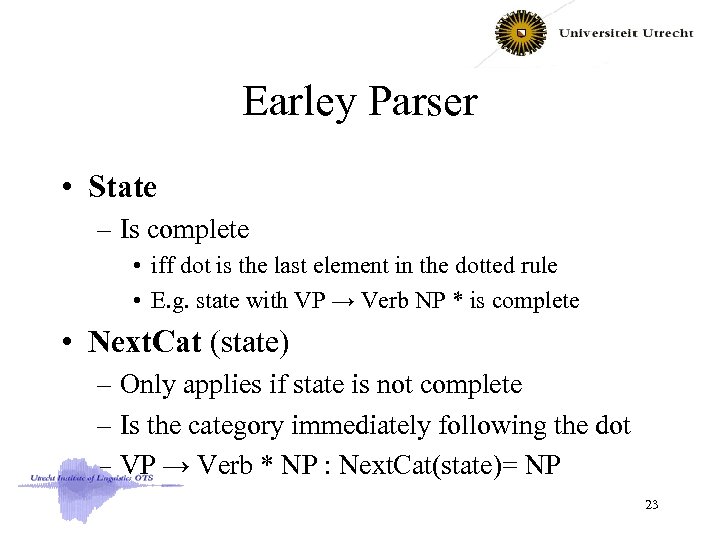

Earley Parser • State – Is complete • iff dot is the last element in the dotted rule • E. g. state with VP → Verb NP * is complete • Next. Cat (state) – Only applies if state is not complete – Is the category immediately following the dot – VP → Verb * NP : Next. Cat(state)= NP 23

Earley Parser • State – Is complete • iff dot is the last element in the dotted rule • E. g. state with VP → Verb NP * is complete • Next. Cat (state) – Only applies if state is not complete – Is the category immediately following the dot – VP → Verb * NP : Next. Cat(state)= NP 23

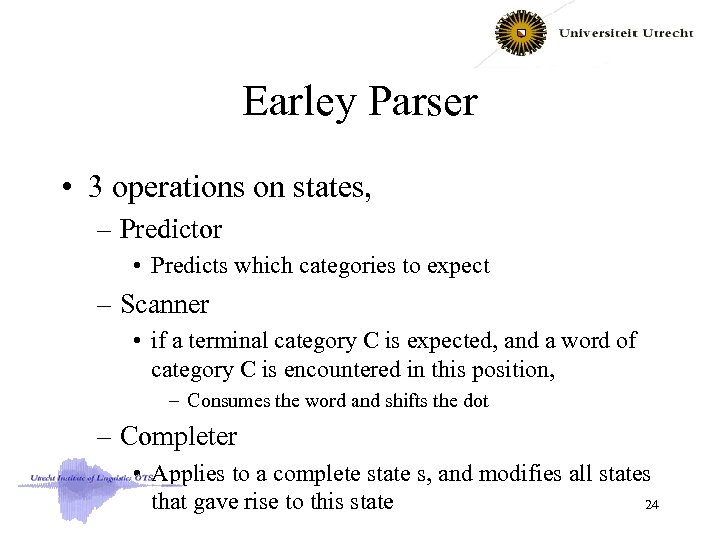

Earley Parser • 3 operations on states, – Predictor • Predicts which categories to expect – Scanner • if a terminal category C is expected, and a word of category C is encountered in this position, – Consumes the word and shifts the dot – Completer • Applies to a complete state s, and modifies all states 24 that gave rise to this state

Earley Parser • 3 operations on states, – Predictor • Predicts which categories to expect – Scanner • if a terminal category C is expected, and a word of category C is encountered in this position, – Consumes the word and shifts the dot – Completer • Applies to a complete state s, and modifies all states 24 that gave rise to this state

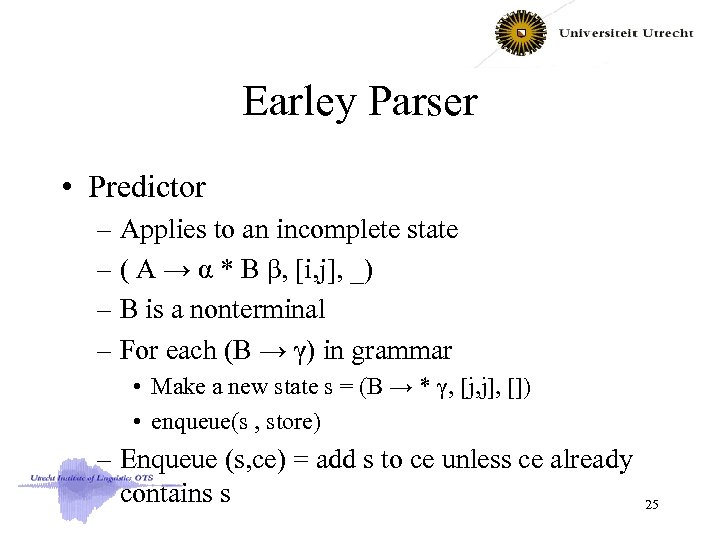

Earley Parser • Predictor – Applies to an incomplete state – ( A → α * B β, [i, j], _) – B is a nonterminal – For each (B → γ) in grammar • Make a new state s = (B → * γ, [j, j], []) • enqueue(s , store) – Enqueue (s, ce) = add s to ce unless ce already contains s 25

Earley Parser • Predictor – Applies to an incomplete state – ( A → α * B β, [i, j], _) – B is a nonterminal – For each (B → γ) in grammar • Make a new state s = (B → * γ, [j, j], []) • enqueue(s , store) – Enqueue (s, ce) = add s to ce unless ce already contains s 25

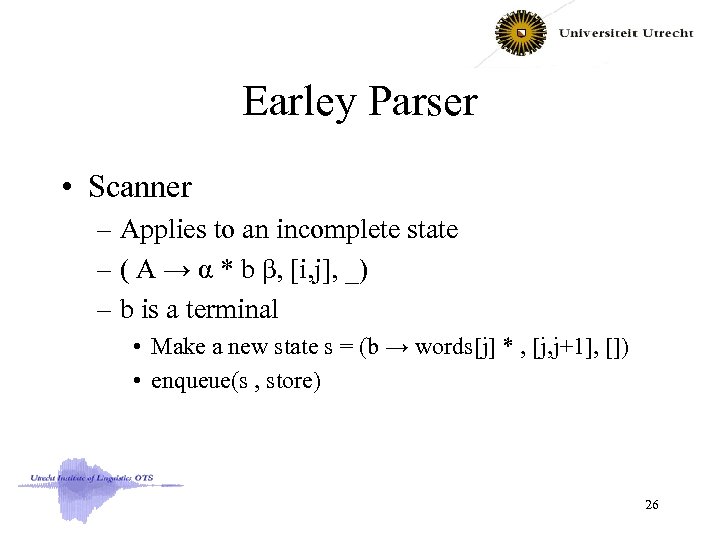

Earley Parser • Scanner – Applies to an incomplete state – ( A → α * b β, [i, j], _) – b is a terminal • Make a new state s = (b → words[j] * , [j, j+1], []) • enqueue(s , store) 26

Earley Parser • Scanner – Applies to an incomplete state – ( A → α * b β, [i, j], _) – b is a terminal • Make a new state s = (b → words[j] * , [j, j+1], []) • enqueue(s , store) 26

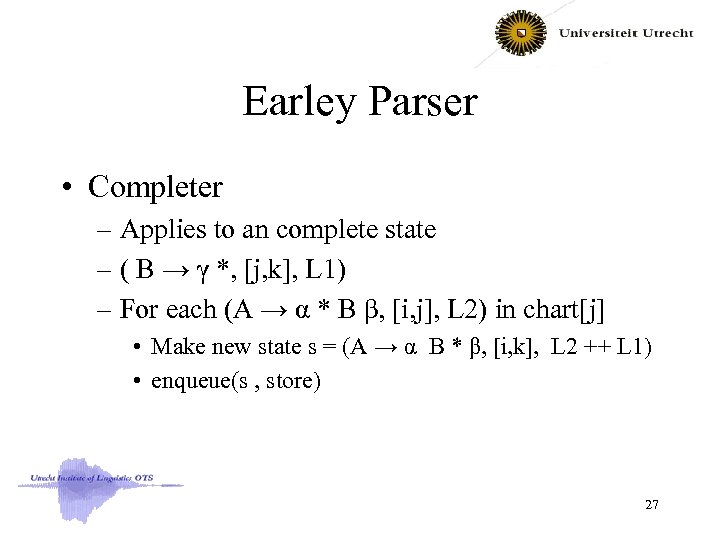

Earley Parser • Completer – Applies to an complete state – ( B → γ *, [j, k], L 1) – For each (A → α * B β, [i, j], L 2) in chart[j] • Make new state s = (A → α B * β, [i, k], L 2 ++ L 1) • enqueue(s , store) 27

Earley Parser • Completer – Applies to an complete state – ( B → γ *, [j, k], L 1) – For each (A → α * B β, [i, j], L 2) in chart[j] • Make new state s = (A → α B * β, [i, k], L 2 ++ L 1) • enqueue(s , store) 27

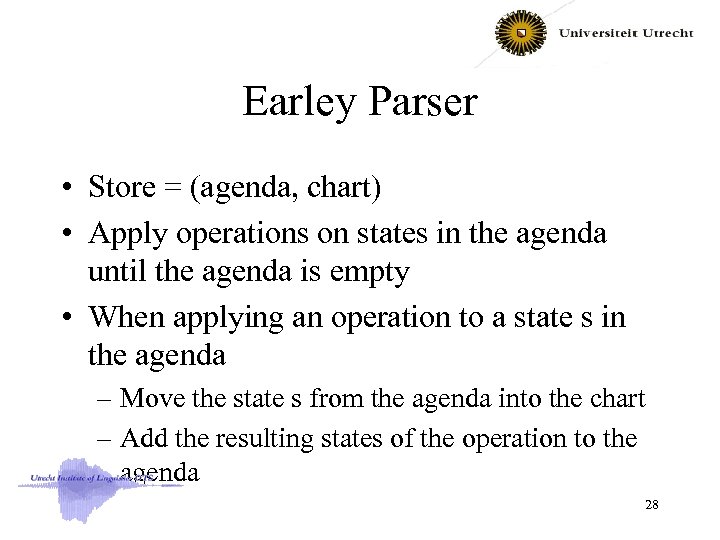

Earley Parser • Store = (agenda, chart) • Apply operations on states in the agenda until the agenda is empty • When applying an operation to a state s in the agenda – Move the state s from the agenda into the chart – Add the resulting states of the operation to the agenda 28

Earley Parser • Store = (agenda, chart) • Apply operations on states in the agenda until the agenda is empty • When applying an operation to a state s in the agenda – Move the state s from the agenda into the chart – Add the resulting states of the operation to the agenda 28

![Earley Parser • Initial store = ([Г → *S], emptychart) – Where Г is Earley Parser • Initial store = ([Г → *S], emptychart) – Where Г is](https://present5.com/presentation/286672f48168709f2e72b7180b2321a6/image-29.jpg) Earley Parser • Initial store = ([Г → *S], emptychart) – Where Г is a ‘fresh’ nonterminal start symbol • Input sentence accepted – Iff there is a state (Г → S *, [0, N], LS) in the chart and the agenda is empty • Parse tree(s) can be reconstructed via the list of earlier states (LS) 29

Earley Parser • Initial store = ([Г → *S], emptychart) – Where Г is a ‘fresh’ nonterminal start symbol • Input sentence accepted – Iff there is a state (Г → S *, [0, N], LS) in the chart and the agenda is empty • Parse tree(s) can be reconstructed via the list of earlier states (LS) 29

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 30

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 30

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 31

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 31

Earley Parser Extensions • Replace elements of V by feature sets (attribute-value matrices, AVMs) – Harmless if finitely valued – E. g. instead of NP [cat=N, bar=max, case=Nom] – Usually other relation than ‘=‘ used for comparison • E. g. ‘is compatible with’, ‘unifies with’, ‘subsumes’ 32

Earley Parser Extensions • Replace elements of V by feature sets (attribute-value matrices, AVMs) – Harmless if finitely valued – E. g. instead of NP [cat=N, bar=max, case=Nom] – Usually other relation than ‘=‘ used for comparison • E. g. ‘is compatible with’, ‘unifies with’, ‘subsumes’ 32

Earley Parser Extensions • Replace rhs of rules by regular expressions over V (or AVMs) • E. g. VP → V NP? (AP | PP)* abbreviates • VP → V, VP → V NP, VP → V APor. PP, VP → V NP APor. PP, • APor. PP → AP APor. PP, APor. PP → PP APor. PP, APor. PP → AP, APor. PP → PP • Where APor. PP is a ‘fresh’ virtual nonterminal • Virtual : is discarded when constructing the trees 33

Earley Parser Extensions • Replace rhs of rules by regular expressions over V (or AVMs) • E. g. VP → V NP? (AP | PP)* abbreviates • VP → V, VP → V NP, VP → V APor. PP, VP → V NP APor. PP, • APor. PP → AP APor. PP, APor. PP → PP APor. PP, APor. PP → AP, APor. PP → PP • Where APor. PP is a ‘fresh’ virtual nonterminal • Virtual : is discarded when constructing the trees 33

Earley Parser Extensions • My grammatical formalism has no PS rules! • But only ‘lexical projection’ of syntactic selection properties (subcategorization list) • E. g. buy: [cat=V, subcat = [_ NP PP, _ NP]] • create PS rules on the fly – If buy occurs in the input tokens, create rules • VP → buy NP PP and VP → buy NP – From the lexical entry – And use these rules to parse 34

Earley Parser Extensions • My grammatical formalism has no PS rules! • But only ‘lexical projection’ of syntactic selection properties (subcategorization list) • E. g. buy: [cat=V, subcat = [_ NP PP, _ NP]] • create PS rules on the fly – If buy occurs in the input tokens, create rules • VP → buy NP PP and VP → buy NP – From the lexical entry – And use these rules to parse 34

Earley Parser Extensions • My grammar contains ε-rules: – NP → ε – Where ε stands for the empty string – (i. e. NP matches the empty string in the input token list) • Earley parser can deal with these! • But extensive use creates many ambiguities! 35

Earley Parser Extensions • My grammar contains ε-rules: – NP → ε – Where ε stands for the empty string – (i. e. NP matches the empty string in the input token list) • Earley parser can deal with these! • But extensive use creates many ambiguities! 35

Earley Parser Extensions • My grammar contains empty categories – Independent • PRO as subject of non-finite verbs – PRO buying books is fun • pro as subject of finite verbs in pro-drop languages – pro no hablo Español • Pro as subject of imperatives – pro schaam je! • Epsilon rules can be used or represent this at other level 36

Earley Parser Extensions • My grammar contains empty categories – Independent • PRO as subject of non-finite verbs – PRO buying books is fun • pro as subject of finite verbs in pro-drop languages – pro no hablo Español • Pro as subject of imperatives – pro schaam je! • Epsilon rules can be used or represent this at other level 36

Earley Parser Extensions • My grammar contains empty categories – Dependent • trace of wh-movement – What did you buy t • Trace of Verb movement (e. g V 2 in Dutch, German, Aux movement in English – Hij belt hem op t – Did you t buy a book? – Epsilon rules are not sufficient 37

Earley Parser Extensions • My grammar contains empty categories – Dependent • trace of wh-movement – What did you buy t • Trace of Verb movement (e. g V 2 in Dutch, German, Aux movement in English – Hij belt hem op t – Did you t buy a book? – Epsilon rules are not sufficient 37

Earley Parser Extensions • Other types (levels) of representation • LFG: (c-structure, f-structure) • HPSG: DAGs (special type of AVMs) • (constituent structure, semantic representation) • Use CFG as backbone grammar – Which accepts a superset of the language – For each rule specify how to construct other level of representation – Extend Earley parser to deal with this 38

Earley Parser Extensions • Other types (levels) of representation • LFG: (c-structure, f-structure) • HPSG: DAGs (special type of AVMs) • (constituent structure, semantic representation) • Use CFG as backbone grammar – Which accepts a superset of the language – For each rule specify how to construct other level of representation – Extend Earley parser to deal with this 38

Earley Parser Extensions • Other types (levels) of representation • f-structure, DAGs, semantic representations are not finitely valued • Thus it will affect efficiency • But allows dealing with e. g. – Non-context-free aspects of a language – Unbounded dependencies (e. g. by ‘gap-threading’) 39

Earley Parser Extensions • Other types (levels) of representation • f-structure, DAGs, semantic representations are not finitely valued • Thus it will affect efficiency • But allows dealing with e. g. – Non-context-free aspects of a language – Unbounded dependencies (e. g. by ‘gap-threading’) 39

Earley Parser in Practice • Parsers for natural language yield – Many many parse trees for an input sentence • • Many more than you can imagine (thousands) Even for relatively short, simple sentences They are all syntactically correct But make no sense semantically 40

Earley Parser in Practice • Parsers for natural language yield – Many many parse trees for an input sentence • • Many more than you can imagine (thousands) Even for relatively short, simple sentences They are all syntactically correct But make no sense semantically 40

Earley Parser in Practice • Additional constraining is required – To reduce the temporary ambiguities – To come up with the ‘best’ parse • Can be done by semantic constraints – But only feasible for very small domains • Is most often done using probabilities – Rule probabilities derived from frequencies in treebanks 41

Earley Parser in Practice • Additional constraining is required – To reduce the temporary ambiguities – To come up with the ‘best’ parse • Can be done by semantic constraints – But only feasible for very small domains • Is most often done using probabilities – Rule probabilities derived from frequencies in treebanks 41

Parsers: Some Examples • Dutch: Alpino parser • Stanford parsers – English, Arabic, Chinese • English: ACL Overview 42

Parsers: Some Examples • Dutch: Alpino parser • Stanford parsers – English, Arabic, Chinese • English: ACL Overview 42

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 43

Overview • Grammars & Grammar Types • Parsing – Naïve Parsing – Earley Parser – Example (using handouts) • Earley Parser Extensions • Parsers & CLARIN 43

Parsers & CLARIN • Parser allows one to automatically analyze large text corpora • Resulting in treebanks • Can be used for linguistic research – But with care!! • Example: Lassy Demo (Dutch) – Simple search interface to LASSY-small Treebank 44 – Use an SVG compatible browser (e. g. Firefox)

Parsers & CLARIN • Parser allows one to automatically analyze large text corpora • Resulting in treebanks • Can be used for linguistic research – But with care!! • Example: Lassy Demo (Dutch) – Simple search interface to LASSY-small Treebank 44 – Use an SVG compatible browser (e. g. Firefox)

Parsers & CLARIN • Example of linguistic research using a treebank: • Van Eynde 2009: A treebank-driven investigation of predicative complements in Dutch 45

Parsers & CLARIN • Example of linguistic research using a treebank: • Van Eynde 2009: A treebank-driven investigation of predicative complements in Dutch 45

Thanks for your attention! 46

Thanks for your attention! 46