44ad92eaee6844d9760f4932ad1d29a0.ppt

- Number of slides: 22

Lambda scheduling algorithm for file transfers on high-speed optical circuits
- Hojun Lee, Polytechnic Univ.
- Malathi Veeraraghavan, Univ. of Virginia (contact: mv@cs.virginia.edu)
- Hua Li and Edwin Chong, Colorado State Univ.

Outline
- Background & problem statement
- Varying-Bandwidth List Scheduling (VBLS)
- Conclusions and future work

Background
- Many optical network testbeds are being created for e-Science applications:
  - CANARIE's CA*net 4 (Canada)
  - TransLight (USA)
  - SURFnet (Netherlands)
  - UKLight (UK)
- Target applications:
  - Terabyte/petabyte file transfers
  - Remote visualization, computational steering

Background
- These optical networks are circuit-switched
- Circuit-switched network operation:
  - Establish a circuit: reserve capacity at each switch on the end-to-end path
  - Dedicated resources imply a “rate guarantee”
- Sounds great, but what's the catch?
  - Cost, if network resources are not SHARED on some basis
- Answer: implement dynamic provisioning of circuits
  - A user holds a “lambda” for some short duration and releases it for others to use
  - How “dynamic”? The greater the sharing, the lower the costs
- Our proposed approach (NSF project called CHEETAH):
  - Hold “lambdas” only for the duration of file transfers

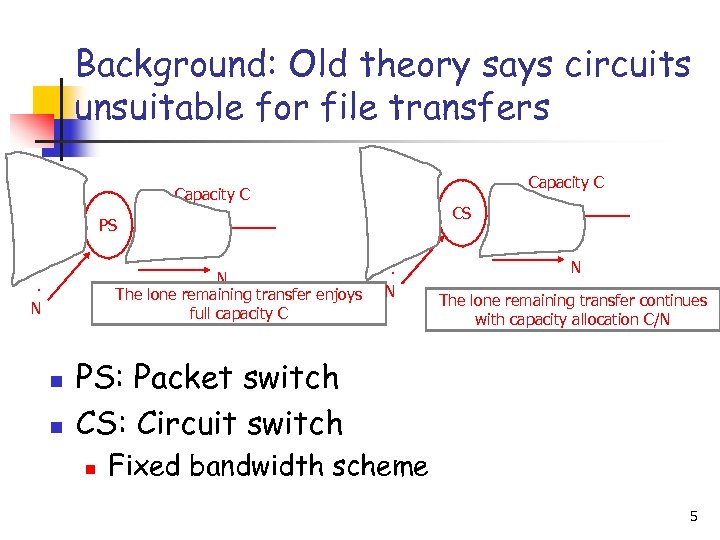

Background: Old theory says circuits are unsuitable for file transfers
- Packet switch (PS), link capacity C, N transfers:
  - Each transfer gets C/N capacity
  - The lone remaining transfer enjoys full capacity C
- Circuit switch (CS), fixed-bandwidth scheme, link capacity C, N transfers:
  - Each transfer is allocated C/N capacity
  - The lone remaining transfer continues with capacity allocation C/N
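A small calculation makes the figure's contrast concrete. The Python sketch below assumes that all N transfers start at time 0. For PS, it also assumes an idealized processor-sharing model in which active transfers split C equally with no protocol overhead. The function names are hypothetical.

```python
from typing import List


def ps_completion_times(sizes: List[float], capacity: float) -> List[float]:
    """Idealized PS: all active transfers share the link capacity equally."""
    order = sorted(range(len(sizes)), key=lambda i: sizes[i])
    finish = [0.0] * len(sizes)
    t, served = 0.0, 0.0  # served = data each still-active transfer has received
    for rank, i in enumerate(order):
        active = len(sizes) - rank  # transfers still in progress
        t += (sizes[i] - served) * active / capacity
        served = sizes[i]
        finish[i] = t
    return finish


def fixed_completion_times(sizes: List[float], capacity: float) -> List[float]:
    """Fixed-bandwidth CS: each transfer keeps C/N for its whole lifetime."""
    n = len(sizes)
    return [s * n / capacity for s in sizes]
```

Take C = 1 and sizes [1, 1, 4]. Both schemes finish the two small transfers at time 3. The lone remaining transfer then finishes at 6 under PS and at 12 under the fixed C/N allocation.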

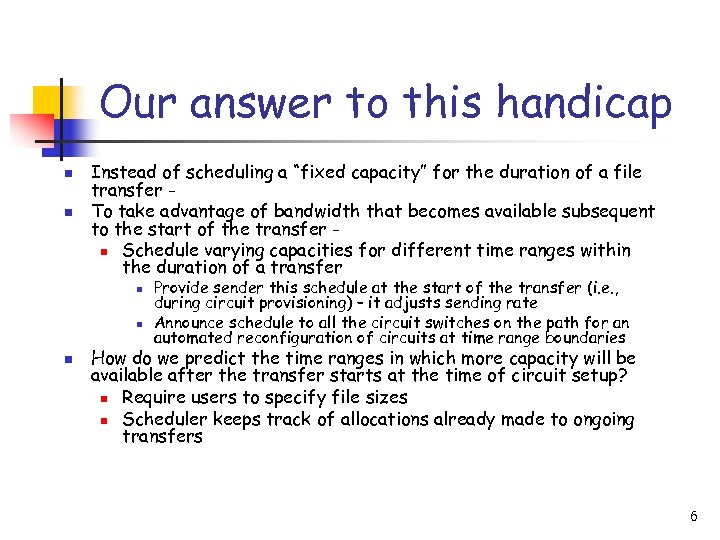

Our answer to this handicap
- Instead of scheduling a “fixed capacity” for the duration of a file transfer, schedule varying capacities for different time ranges within the duration of a transfer
  - This takes advantage of bandwidth that becomes available after the start of the transfer
  - Provide the sender with this schedule at the start of the transfer (i.e., during circuit provisioning); the sender adjusts its sending rate accordingly
  - Announce the schedule to all the circuit switches on the path for automated reconfiguration of circuits at time-range boundaries
- How do we predict, at the time of circuit setup, the time ranges in which more capacity will be available after the transfer starts?
  - Require users to specify file sizes
  - The scheduler keeps track of allocations already made to ongoing transfers
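Every allocation is recorded as time ranges with a capacity, so the scheduler can derive the capacity still free at any future time. The minimal sketch below rests on two assumptions: capacity is counted in whole channels, and allocations are stored as (begin, end, channels) triples. `available_capacity` is a hypothetical helper, not part of the original work. The returned boundaries include every allocation edge, so adjacent intervals may end up with equal free capacity.

```python
from typing import List, Tuple

Allocation = Tuple[float, float, int]  # (begin, end, channels) of one time range


def available_capacity(link_channels: int, allocations: List[Allocation],
                       horizon_start: float, horizon_end: float):
    """Return (points, avail); avail[i] = free channels on [points[i], points[i+1])."""
    points = {horizon_start, horizon_end}
    for begin, end, _ in allocations:
        for t in (begin, end):
            if horizon_start < t < horizon_end:
                points.add(t)  # every allocation edge is a potential change point
    points = sorted(points)
    avail = []
    for lo, hi in zip(points, points[1:]):
        # Because all edges are boundaries, an allocation either covers
        # [lo, hi) entirely or does not overlap it at all.
        used = sum(ch for begin, end, ch in allocations if begin <= lo and end >= hi)
        avail.append(link_channels - used)
    return points, avail
```

Consider a 4-channel link with existing allocations (0, 60, 3) and (60, 70, 1). Over the horizon [50, 100), the function returns change points [50, 60, 70, 100] and free channels [1, 3, 4].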

Problem statement
- Hence our problem is not how to schedule lambdas for fixed durations, but rather how to schedule lambdas for file transfers

Scheduling requests
- Specify:
  - File size
  - Maximum rate
    - File transfers, unlike real-time audio/video, can be allocated “any” capacity; the higher the rate, the smaller the transfer delay
    - End-host processing, network interface card, and disk limitations place an upper bound on the rate allocated for the file transfer
  - Requested start time
    - Allows users to specify a delayed start time
    - Immediate-request vs. book-ahead calls (pricing)

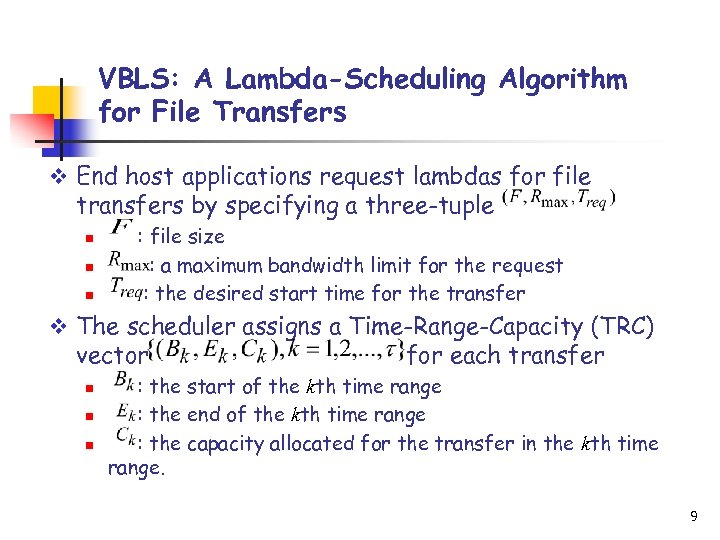

VBLS: A Lambda-Scheduling Algorithm for File Transfers
- End-host applications request lambdas for file transfers by specifying a three-tuple (F, Rmax, Treq):
  - F: file size
  - Rmax: a maximum bandwidth limit for the request
  - Treq: the desired start time for the transfer
- For each transfer, the scheduler assigns a Time-Range-Capacity (TRC) vector; its kth element gives:
  - the start of the kth time range
  - the end of the kth time range
  - the capacity allocated for the transfer in the kth time range
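A minimal Python sketch can make the request/response contract concrete. It checks whether a TRC vector is consistent with the (F, Rmax, Treq) request it answers. The sketch assumes that file size is expressed as channel × time-unit volume, so a range of length L at c channels carries L·c units. `Request` and `is_valid_trc` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

TRCEntry = Tuple[float, float, int]  # (start of kth range, end of kth range, channels)


@dataclass(frozen=True)
class Request:
    file_size: float  # F, in channel x time-unit volume units (assumed unit)
    r_max: int        # Rmax, maximum number of channels
    t_req: float      # Treq, requested start time


def is_valid_trc(req: Request, trc: List[TRCEntry], tol: float = 1e-9) -> bool:
    """Check that a TRC vector respects Treq and Rmax and carries exactly F."""
    if not trc:
        return False
    volume = 0.0
    prev_end = req.t_req  # first range may not start before Treq
    for begin, end, channels in trc:
        if begin < prev_end or end <= begin:
            return False  # ranges must be ordered, non-overlapping, non-empty
        if not 0 < channels <= req.r_max:
            return False  # never exceed the requested maximum rate
        volume += (end - begin) * channels
        prev_end = end
    return abs(volume - req.file_size) <= tol  # the whole file is covered
```

Apply it to the example that follows: F = 5 GB = 40 units at 0.125 GB per channel per time unit, Rmax = 2, Treq = 50. The TRC (50, 60, 1), (60, 70, 2), (70, 75, 2) passes the check.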

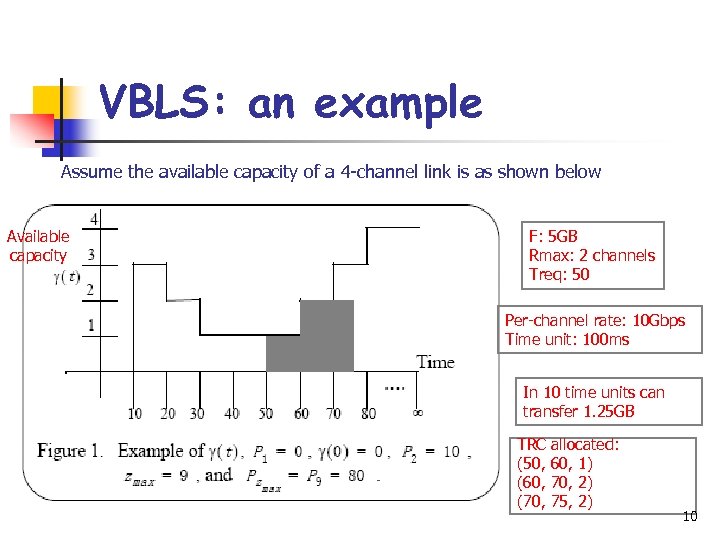

VBLS: an example
- Assume the available capacity of a 4-channel link is as shown below (available capacity plot)
- Request: F = 5 GB, Rmax = 2 channels, Treq = 50
- Per-channel rate: 10 Gbps; time unit: 100 ms
- In 10 time units, one channel can transfer 1.25 GB
- TRC allocated: (50, 60, 1), (60, 70, 2), (70, 75, 2)
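The arithmetic behind the example can be checked directly. One channel at 10 Gbps for one 100 ms time unit carries 1 Gb = 0.125 GB. The three TRC entries together carry the full 5 GB file:

```latex
\begin{aligned}
10~\text{Gb/s} \times 100~\text{ms} &= 1~\text{Gb} = 0.125~\text{GB} \quad \text{(one channel, one time unit)}\\
10 \times 0.125~\text{GB} &= 1.25~\text{GB} \quad \text{(one channel, 10 time units)}\\
\underbrace{(60-50)\cdot 1}_{(50,\,60,\,1)} + \underbrace{(70-60)\cdot 2}_{(60,\,70,\,2)} + \underbrace{(75-70)\cdot 2}_{(70,\,75,\,2)} &= 10 + 20 + 10 = 40~\text{channel-time units}\\
40 \times 0.125~\text{GB} &= 5~\text{GB} = F
\end{aligned}
```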

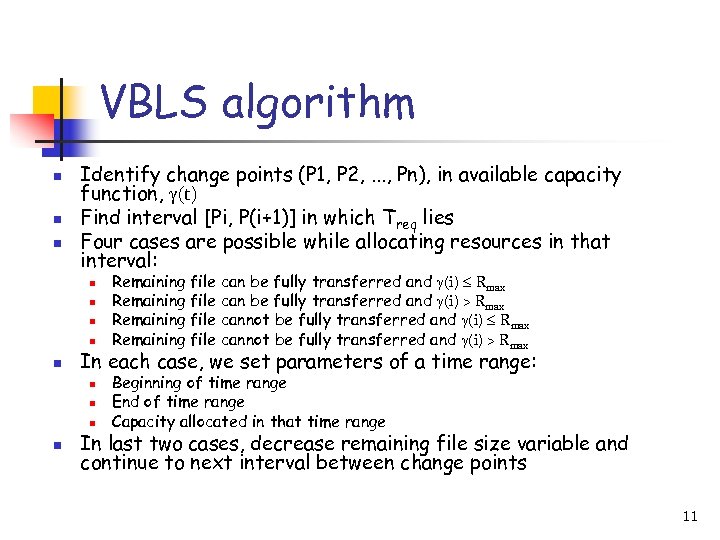

VBLS algorithm
- Identify the change points (P1, P2, ..., Pn) in the available capacity function α(t)
- Find the interval [Pi, P(i+1)] in which Treq lies
- Four cases are possible while allocating resources in that interval:
  - Remaining file can be fully transferred and α(i) ≤ Rmax
  - Remaining file can be fully transferred and α(i) > Rmax
  - Remaining file cannot be fully transferred and α(i) ≤ Rmax
  - Remaining file cannot be fully transferred and α(i) > Rmax
- In each case, we set the parameters of a time range:
  - Beginning of time range
  - End of time range
  - Capacity allocated in that time range
- In the last two cases, decrease the remaining-file-size variable and continue to the next interval between change points
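The four cases reduce to one greedy rule per interval: allocate min(α(i), Rmax) channels. The file then either finishes inside the interval (first two cases) or uses the interval to its end and carries the remainder forward (last two cases). The Python sketch below is one possible reading of the slide. It assumes a piecewise-constant availability profile, given as change points plus free-channel counts, and measures file size in channel × time-unit volume. It also assumes that intervals with no free channel are simply skipped, which the slide does not state. `vbls_schedule` is a hypothetical name.

```python
from typing import List, Tuple


def vbls_schedule(points: List[float], avail: List[int], file_size: float,
                  r_max: int, t_req: float) -> List[Tuple[float, float, int]]:
    """Greedy VBLS-style allocation over a piecewise-constant availability profile.

    points: change points P_1 < ... < P_n; avail[i] = free channels on
    [points[i], points[i+1]). file_size is in channel x time-unit volume.
    """
    if len(avail) != len(points) - 1:
        raise ValueError("need one availability value per interval")
    remaining = file_size
    trc = []
    for i, alpha in enumerate(avail):
        lo, hi = points[i], points[i + 1]
        if hi <= t_req or alpha <= 0:
            continue  # interval ends before Treq, or has no free channel
        start = max(lo, t_req)
        rate = min(alpha, r_max)    # alpha(i) if <= Rmax, else Rmax
        room = rate * (hi - start)  # volume that fits in the rest of this interval
        if remaining <= room:
            # Cases 1 and 2: the remaining file finishes inside this interval.
            trc.append((start, start + remaining / rate, rate))
            return trc
        # Cases 3 and 4: use the interval to its end, then move on.
        trc.append((start, hi, rate))
        remaining -= room
    raise ValueError("request does not fit before the last change point")
```

As a check, assume an availability profile of 1, 3, and 4 free channels on [50, 60), [60, 70), and [70, 100). With F = 40 channel-time units (5 GB), Rmax = 2, and Treq = 50, the sketch reproduces the TRC from the example: (50, 60, 1), (60, 70, 2), (70, 75, 2).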

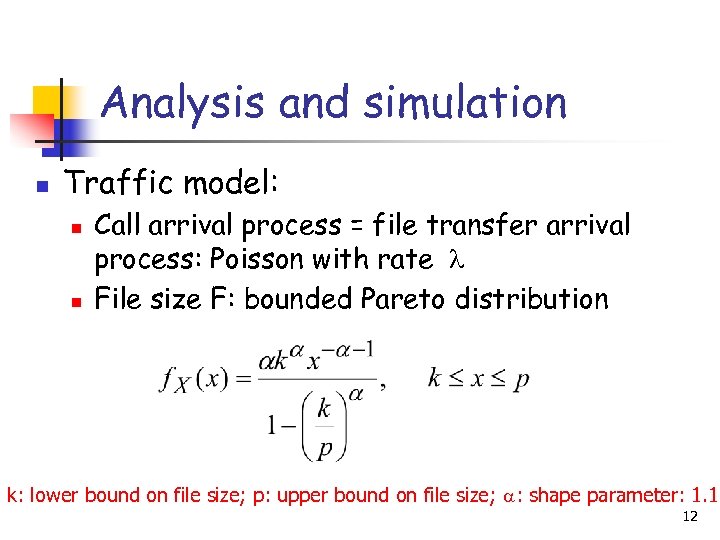

Analysis and simulation
- Traffic model:
  - Call arrival process = file transfer arrival process: Poisson with rate λ
  - File size F: bounded Pareto distribution
    - k: lower bound on file size
    - p: upper bound on file size
    - Shape parameter: 1.1
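Here is a minimal sketch of this traffic model using Python's standard `random` module. Inter-arrival times are exponential with rate λ, which makes arrivals Poisson. File sizes come from the bounded Pareto distribution by inverting its CDF, F(x) = (1 − (k/x)^a) / (1 − (k/p)^a) for k ≤ x ≤ p, where a is the shape parameter. The function names and example parameter values are illustrative assumptions.

```python
import random
from typing import List, Tuple


def bounded_pareto_sample(rng: random.Random, k: float, p: float, a: float) -> float:
    """Inverse-CDF draw from a bounded Pareto(k, p, a) distribution."""
    u = rng.random()  # uniform on [0, 1)
    ratio = (k / p) ** a
    return k / (1.0 - u * (1.0 - ratio)) ** (1.0 / a)


def generate_requests(rng: random.Random, lam: float, n: int,
                      k: float, p: float, a: float) -> List[Tuple[float, float]]:
    """Return n (arrival_time, file_size) pairs: Poisson arrivals at rate lam."""
    t = 0.0
    requests = []
    for _ in range(n):
        t += rng.expovariate(lam)  # exponential inter-arrival time
        requests.append((t, bounded_pareto_sample(rng, k, p, a)))
    return requests
```

For example, `generate_requests(random.Random(1), 0.5, 1000, 0.5, 10.0, 1.1)` draws 1000 requests with sizes between 0.5 and 10 (in GB, this matches Case 1 of the sensitivity study) and shape 1.1.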

Validation of simulation program with analysis
- Simple case:
  - All calls specify the same maximum rate, which is set equal to the link capacity C
  - M/G/1 model, with ‘G’ being bounded Pareto
  - Analytical result available
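In this case each file is served alone at the full link rate, so its service time is S = F / C. A standard closed form for the mean waiting time of an M/G/1 queue is the Pollaczek–Khinchine formula, W = λE[S²] / (2(1 − ρ)), with ρ = λE[S]. The slides do not confirm that this is the analytical result used; that is an assumption here. The bounded Pareto raw moments are E[X^j] = a k^a (p^(j−a) − k^(j−a)) / ((j − a)(1 − (k/p)^a)) for j ≠ a. All function names are hypothetical.

```python
def bp_moment(k: float, p: float, a: float, j: int) -> float:
    """j-th raw moment E[X^j] of a bounded Pareto(k, p, a) variable (requires j != a)."""
    norm = a * k ** a / (1.0 - (k / p) ** a)
    return norm * (p ** (j - a) - k ** (j - a)) / (j - a)


def pk_mean_wait(lam: float, es: float, es2: float) -> float:
    """Pollaczek-Khinchine mean waiting time in queue for an M/G/1 FCFS system."""
    rho = lam * es
    if rho >= 1.0:
        raise ValueError("unstable queue: load must be below 1")
    return lam * es2 / (2.0 * (1.0 - rho))


def mg1_bounded_pareto_wait(load: float, k: float, p: float, a: float,
                            capacity: float) -> float:
    """Mean wait when every file of size X is served alone at rate C (S = X / C)."""
    es = bp_moment(k, p, a, 1) / capacity         # E[S]
    es2 = bp_moment(k, p, a, 2) / capacity ** 2   # E[S^2]
    lam = load / es                               # arrival rate giving this load
    return pk_mean_wait(lam, es, es2)
```

As a sanity check on `pk_mean_wait`, exponential service with E[S] = 1 and E[S²] = 2 at λ = 0.5 gives the M/M/1 value W = ρ / (μ − λ) = 1.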

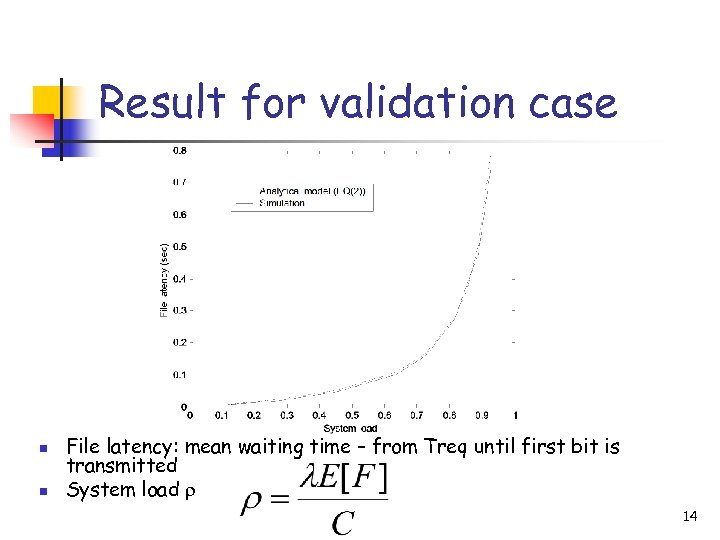

Result for validation case
- File latency (y-axis): mean waiting time, from Treq until the first bit is transmitted
- System load (x-axis)

Sensitivity analysis: Effect of maximum rate
- All calls request the same Rmax of 1, 5, or 100 channels on a link of capacity C = 100 channels
  - Mean latency is smallest in the 1-channel case
  - Mean file transfer delay (latency + service time) is smallest in the 100-channel case
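A TRC vector directly yields both parts of the transfer delay: latency plus service time. In this minimal sketch, service time is taken as delay minus latency, so any idle gaps between time ranges count toward it. `transfer_metrics` is a hypothetical name.

```python
from typing import List, Tuple


def transfer_metrics(trc: List[Tuple[float, float, int]],
                     t_req: float) -> Tuple[float, float, float]:
    """Return (latency, service_time, transfer_delay) for one TRC vector."""
    latency = trc[0][0] - t_req  # wait from Treq until the first bit is sent
    delay = trc[-1][1] - t_req   # wait from Treq until the last bit is sent
    return latency, delay - latency, delay
```

For the earlier example TRC with Treq = 50, the latency is 0 and the service time and transfer delay are both 25 time units.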

Sensitivity analysis: Effect of file size lower bound (k) and upper bound (p)
- All calls request the same Rmax of 1, 5, or 10 channels on a link of capacity C = 100 channels
  - Case 1: k = 500 MB; p = 10 GB
  - Case 2: k = 10 GB; p = 100 GB
- File latency is higher in Case 2 because the variance is higher in Case 2 (shape of the bounded Pareto distribution)
- Increasing the upper bound p increases the variance, and hence file latency increases
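The variance claim can be checked from the bounded Pareto moments E[X^j] = a k^a (p^(j−a) − k^(j−a)) / ((j − a)(1 − (k/p)^a)). This minimal sketch uses sizes in GB and the shape parameter 1.1 from the traffic model. The helper names are hypothetical.

```python
def bp_moment(k: float, p: float, a: float, j: int) -> float:
    """j-th raw moment E[X^j] of a bounded Pareto(k, p, a) variable (requires j != a)."""
    norm = a * k ** a / (1.0 - (k / p) ** a)
    return norm * (p ** (j - a) - k ** (j - a)) / (j - a)


def bp_variance(k: float, p: float, a: float) -> float:
    """Var[X] = E[X^2] - E[X]^2 for a bounded Pareto(k, p, a) variable."""
    mean = bp_moment(k, p, a, 1)
    return bp_moment(k, p, a, 2) - mean ** 2


SHAPE = 1.1
var_case1 = bp_variance(0.5, 10.0, SHAPE)    # Case 1: k = 500 MB, p = 10 GB
var_case2 = bp_variance(10.0, 100.0, SHAPE)  # Case 2: k = 10 GB, p = 100 GB
```

Comparing `var_case1` with `var_case2`, or raising p while holding k fixed, shows the direction of the effect described on the slide.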

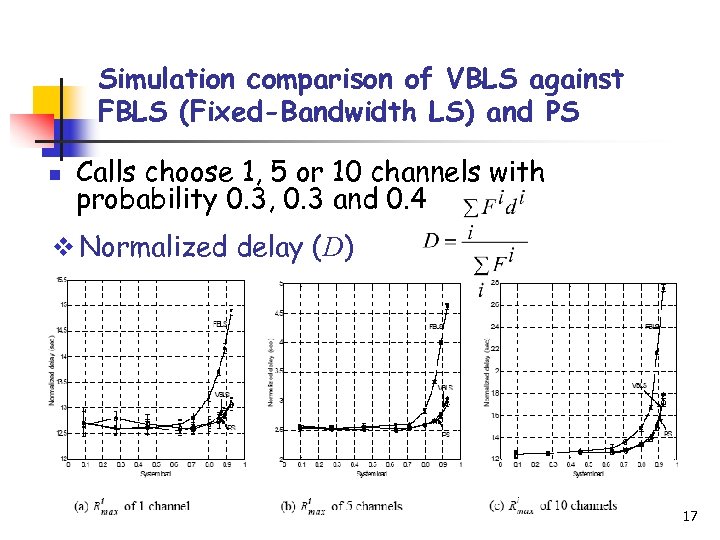

Simulation comparison of VBLS against FBLS (Fixed-Bandwidth List Scheduling) and PS
- Calls choose 1, 5, or 10 channels with probability 0.3, 0.3, and 0.4, respectively
- Normalized delay (D)

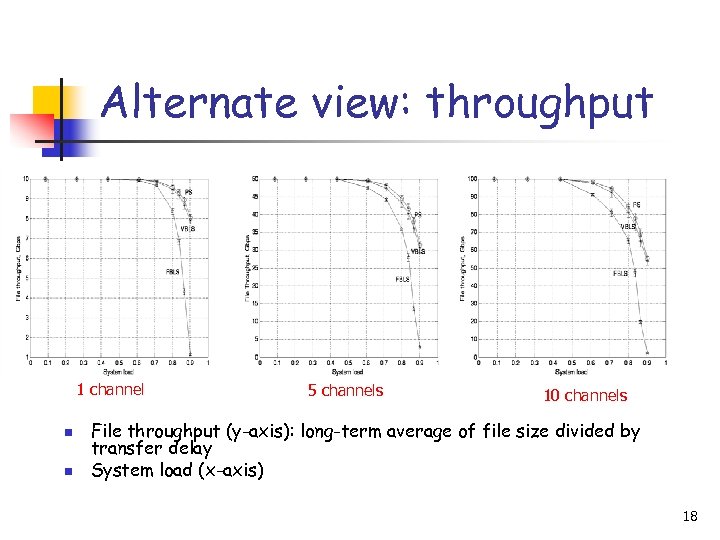

Alternate view: throughput (panels: 1 channel, 5 channels, 10 channels)
- File throughput (y-axis): long-term average of file size divided by transfer delay
- System load (x-axis)

Observation
- VBLS achieves close to idealized PS (infinite-buffer) performance
- Finite-buffer PS networks need something like TCP, which reduces throughput below idealized PS levels
- Compare with current TCP enhancements under design, which implement run-time discovery of available bandwidth so that sending rates ideally match it
  - Goal: avoid packet losses and the consequent rate drops

Practical considerations
- Extending the VBLS scheme to multiple links
  - Clock synchronization & propagation delay
  - Staggered schedule
- Accounting for retransmissions
- Available capacity function
  - Cannot be continuous, has to be discrete
  - Wasted resources because of discretization
- Cost of achieving PS-like performance
  - Circuit switches are now more complex
  - Need electronics to do timer-based reconfigurations of circuits
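Discretization wastes capacity in the following way, assuming time is divided into fixed slots and a channel can only be reconfigured at slot boundaries. A range whose computed end falls mid-slot must then hold its channels until that slot ends. This minimal sketch also assumes that range beginnings already lie on slot boundaries. `discretize_trc` is a hypothetical name.

```python
import math
from typing import List, Tuple

TRCEntry = Tuple[float, float, int]  # (begin, end, channels)


def discretize_trc(trc: List[TRCEntry], slot: float) -> Tuple[List[TRCEntry], float]:
    """Round each range's end up to a slot boundary; return (new TRC, wasted volume)."""
    rounded, wasted = [], 0.0
    for begin, end, channels in trc:
        slot_end = math.ceil(end / slot) * slot      # next reconfiguration instant
        wasted += (slot_end - end) * channels        # held but unused channel-time
        rounded.append((begin, slot_end, channels))
    return rounded, wasted
```

With 1-unit slots, a range (70, 72.5, 2) becomes (70, 73, 2), which wastes 0.5 × 2 = 1 channel-time unit. The example TRC, whose ends are already integers, wastes nothing.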

Extensions
- Add a second class of requests, specifying:
  - Holding time
  - Minimum rate
  - Maximum rate
  - Requested start time
- Useful for remote visualization and other interactive applications

Conclusions and future work
- VBLS overcomes a well-known drawback of using circuits for file transfers:
  - Fixed-bandwidth allocation fails to take advantage of bandwidth that becomes available after a transfer starts
- Simulations showed that VBLS can significantly improve performance over fixed-bandwidth schemes for file transfers
- Cost: implementation complexity
- Future work: include a second class of user requests for lambdas, targeted at interactive applications such as remote visualization and simulation steering
