- Number of slides: 31

L643: Evaluation of Information Systems, Week 6: February 11, 2008

Mid-term Evaluation
- List 3 things you like about the course.
- List 3 things you don’t like about the course and make suggestions (be realistic… I can only change so much).
- Put your index card in the envelope… I will be back in 7 minutes.
- Don’t put your name on the cards! I am looking for honest feedback.

Statistics on IT Project Failure Rates
- 51% of respondents viewed their ERP implementation as unsuccessful (The Robbins-Gioia Survey, 2001).
- 40% of the projects failed to achieve their business case within 1 year of going live (The Conference Board Survey, 2001).
- “The typical failure rate for IT projects is 70%; some 30% fail outright; another 40% drag on for years, propped up by huge cash infusions until they are finally shut down” (Hugos, 2003).

System Quality (Shepperd, 1995)
- Quality (~= beauty) is in the eye of the beholder.
- Software quality:
  - “The totality of features and characteristics of a product, process or service that bear on its ability to satisfy stated or implied needs” (ISO definition, cited in Shepperd, p. 67)

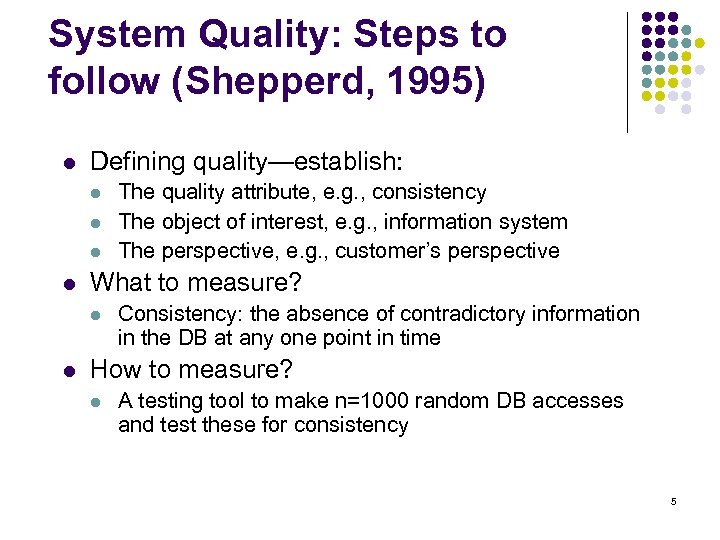

System Quality: Steps to Follow (Shepperd, 1995)
- Defining quality: establish
  - What to measure?
    - The quality attribute, e.g., consistency
    - The object of interest, e.g., an information system
    - The perspective, e.g., the customer’s perspective
    - Consistency: the absence of contradictory information in the DB at any one point in time
  - How to measure?
    - A testing tool that makes n = 1000 random DB accesses and tests them for consistency
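
A minimal Python sketch of the "How to measure?" step, assuming a toy order table whose records copy the customer's name from a customer table. The `Order` record, the `is_consistent` rule, and the sample data are hypothetical illustrations, not Shepperd's testing tool; the sketch only shows the idea of estimating consistency from n = 1000 random accesses.

```python
import random
from dataclasses import dataclass


@dataclass
class Order:
    order_id: int
    customer_id: int
    customer_name: str  # redundant copy of the name; it can drift out of sync


def is_consistent(order, customers):
    """Hypothetical rule: the order points at an existing customer and
    repeats that customer's current name exactly."""
    return customers.get(order.customer_id) == order.customer_name


def estimate_consistency(orders, customers, n=1000, seed=None):
    """Make n random accesses (with replacement) and return the share
    of sampled records that pass the consistency rule."""
    rng = random.Random(seed)
    sample = rng.choices(orders, k=n)
    passed = sum(is_consistent(order, customers) for order in sample)
    return passed / n


# Illustrative data: 90 consistent orders plus 10 that reference a missing customer.
customers = {1: "Ada Lovelace", 2: "Grace Hopper"}
orders = [Order(i, 1 + i % 2, customers[1 + i % 2]) for i in range(90)]
orders += [Order(100 + i, 3, "Unknown") for i in range(10)]
print(estimate_consistency(orders, customers, seed=42))
```

Sampling with replacement keeps the cost fixed at n accesses regardless of table size, and passing a fixed `seed` makes a run repeatable when the same test is rerun later to track progress.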

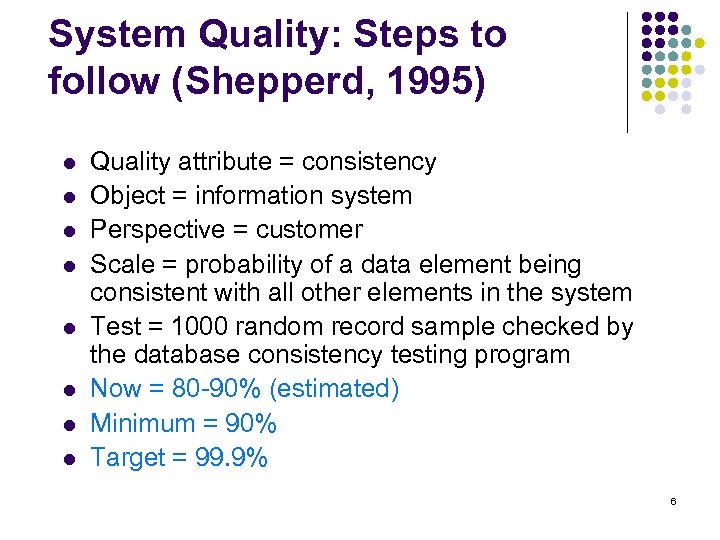

System Quality: Steps to Follow (Shepperd, 1995)
- Quality attribute = consistency
- Object = information system
- Perspective = customer
- Scale = probability of a data element being consistent with all other elements in the system
- Test = 1000-record random sample checked by the database consistency testing program
- Now = 80-90% (estimated)
- Minimum = 90%
- Target = 99.9%
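
The specification above can be written down as data so that each new measurement is checked against it mechanically. This is a sketch under the assumption that the scale is expressed as a proportion between 0 and 1; the `QualitySpec` class and its `assess` method are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualitySpec:
    attribute: str
    obj: str
    perspective: str
    scale: str
    minimum: float  # lowest acceptable level on the scale
    target: float   # level the project is aiming for

    def assess(self, measured):
        """Classify a measured value against the minimum and target levels."""
        if measured >= self.target:
            return "meets target"
        if measured >= self.minimum:
            return "acceptable"
        return "below minimum"


consistency = QualitySpec(
    attribute="consistency",
    obj="information system",
    perspective="customer",
    scale="probability that a data element is consistent with all other elements",
    minimum=0.90,
    target=0.999,
)

# The current level is only an estimated range, so check both ends of it.
for now in (0.80, 0.90):
    print(now, consistency.assess(now))
```

Checking both ends of the 80-90% estimate shows why the specification matters: even the optimistic end only just reaches the minimum, and both ends are far from the 99.9% target.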

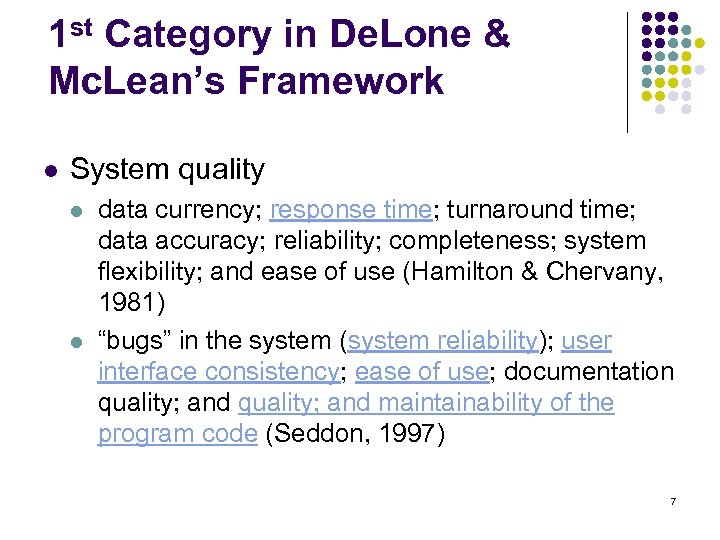

1st Category in DeLone & McLean’s Framework
- System quality
  - Data currency, response time, turnaround time, data accuracy, reliability, completeness, system flexibility, and ease of use (Hamilton & Chervany, 1981)
  - “Bugs” in the system (system reliability), user interface consistency, ease of use, documentation quality, and maintainability of the program code (Seddon, 1997)

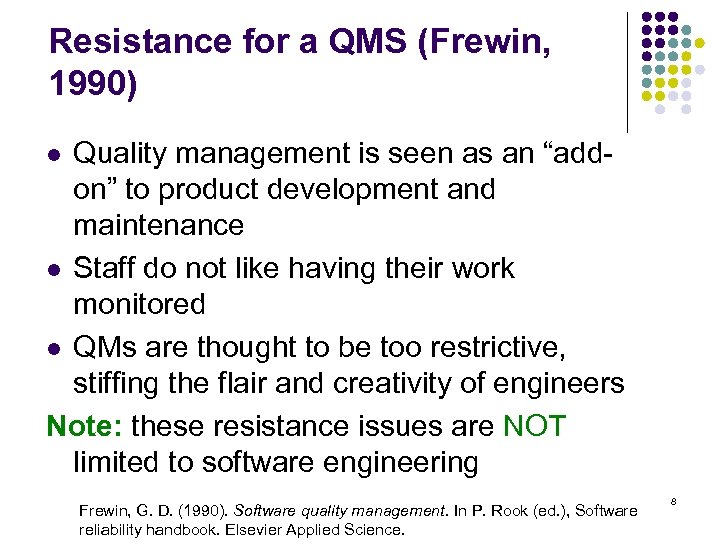

Resistance to a QMS (Frewin, 1990)
- Quality management is seen as an “add-on” to product development and maintenance.
- Staff do not like having their work monitored.
- QMSs are thought to be too restrictive, stifling the flair and creativity of engineers.
- Note: these resistance issues are NOT limited to software engineering.

Frewin, G. D. (1990). Software quality management. In P. Rook (Ed.), Software reliability handbook. Elsevier Applied Science.

Accessibility
- Section 504 of the Rehabilitation Act of 1973 states that "no qualified individual with a disability in the United States shall be excluded from, denied the benefits of, or be subjected to discrimination under" any program or activity that either receives Federal financial assistance or is conducted by any Executive agency or the United States Postal Service.
- http://www.usdoj.gov/crt/ada/cguide.htm

Accessibility
- The 1998 amendment to Section 508 of the U.S. Rehabilitation Act of 1973 extended the law to cover electronic and information technology (http://www.usdoj.gov/crt/508/)
- ADA lawsuits filed:
  - National Federation of the Blind (NFB) v. Target (Feb 2006)
  - NFB vs. Connecticut Attorney General’s Office
    - H&R Block, HDVest, Intuit, and Gilman & Ciocia (IRS e-filing): make these sites accessible by the 2000 tax season

Accessibility (Mankoff et al., 2005)
- Compared the following four evaluation methods for testing the accessibility of websites:
  - Expert review
  - Screen reader
  - Remote
  - Automated (e.g., W3C)
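
As an illustration of what the "Automated" method does, here is a sketch of a single check of the kind automated accessibility tools run: flagging `<img>` elements that have no `alt` attribute. It uses only Python's standard `html.parser` module; the class and function names are hypothetical, and real tools (such as the W3C validators) apply many more rules than this one.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Record every <img> element that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.problems = []  # (line number, src) pairs

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, so <IMG SRC=...> is caught too.
        # Self-closing <img ... /> tags also arrive here via the default handle_startendtag.
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            line, _ = self.getpos()
            self.problems.append((line, attributes.get("src", "(no src)")))


def find_images_without_alt(html):
    """Return (line, src) for each image that lacks an alt attribute."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


page = ('<p><img src="logo.png" alt="Logo">\n<img src="chart.png">\n'
        '<img src="spacer.gif" alt=""/><IMG SRC="map.png"></p>')
print(find_images_without_alt(page))
```

An empty `alt=""` is deliberately not flagged, since it is the usual way to mark a purely decorative image. A check like this cannot judge whether existing alt text is meaningful, which is one reason automated testing is compared against methods such as expert review and screen-reader testing.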

Web Rating Tools
- Gomez (http://www.gomez.com)
  - E.g., airline flight search benchmark
  - Free website performance test
- Alexa
  - Traffic ranking

User-Perceived Web Quality (Aladwani & Palvia, 2002)
- 4 dimensions of web quality:
  - Technical adequacy
  - Specific content
  - Content accuracy
  - Web appearance
- User-perceived web quality instrument (see Table 5, p. 474)
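
One way to turn instrument responses into the four dimension scores is to average each respondent's ratings within a dimension and then average across respondents. The sketch below assumes that approach, a numeric rating scale, and hypothetical item IDs (`ta1`, `sc1`, ...); the actual items and any weighting are those in Table 5 of Aladwani & Palvia, not these.

```python
from statistics import mean

# Hypothetical item IDs grouped by dimension; the real items are in Table 5 of the paper.
DIMENSIONS = {
    "technical adequacy": ["ta1", "ta2", "ta3"],
    "specific content": ["sc1", "sc2"],
    "content accuracy": ["ca1", "ca2"],
    "web appearance": ["wa1", "wa2"],
}


def score_respondent(answers):
    """Average one respondent's ratings within each dimension.
    Unanswered items are skipped; a dimension with no answers scores None."""
    scores = {}
    for dimension, items in DIMENSIONS.items():
        ratings = [answers[item] for item in items if item in answers]
        scores[dimension] = mean(ratings) if ratings else None
    return scores


def score_site(responses):
    """Average the respondent-level dimension scores for one website."""
    per_respondent = [score_respondent(r) for r in responses]
    site = {}
    for dimension in DIMENSIONS:
        values = [s[dimension] for s in per_respondent if s[dimension] is not None]
        site[dimension] = mean(values) if values else None
    return site


r1 = {"ta1": 6, "ta2": 4, "ta3": 5, "sc1": 7, "sc2": 5,
      "ca1": 6, "ca2": 6, "wa1": 3, "wa2": 5}
r2 = {"ta1": 7}  # a respondent who answered only one item
print(score_site([r1, r2]))
```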

Data Accuracy
- An example from the book Failing Forward
- Human errors

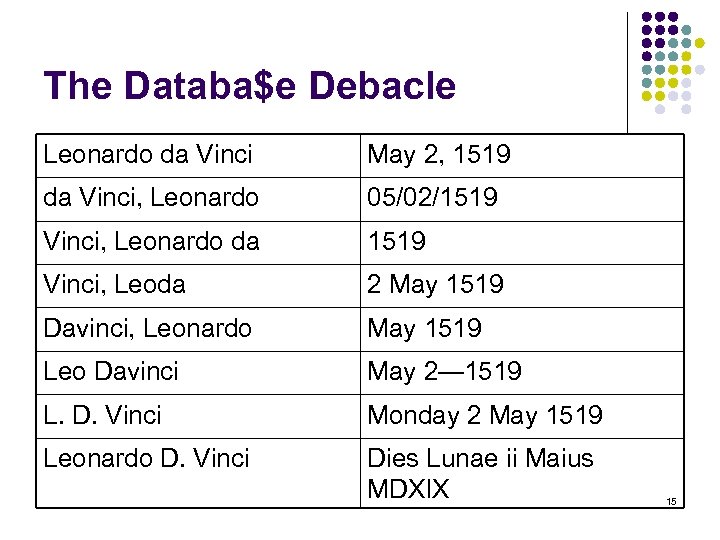

The Databa$e Debacle
- Name variants: Leonardo da Vinci; da Vinci, Leonardo; Vinci, Leonardo da; Vinci, Leoda; Davinci, Leonardo; Leo Davinci; L. D. Vinci; Leonardo D. Vinci
- Date variants: May 2, 1519; 05/02/1519; 1519; 2 May 1519; May 1519; May 2— 1519; Monday 2 May 1519; Dies Lunae ii Maius MDXIX
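
Exact-match comparison treats every variant above as a different value, so record matching usually starts by normalizing values into a canonical form. This Python sketch assumes a very crude name key (reorder "Last, First", then drop case, spaces, and punctuation) and a handful of date formats, reading `05/02/1519` in US month/day order. All function names are hypothetical, and no calendar conversion is attempted.

```python
import re
from datetime import datetime


def name_key(raw):
    """Crude match key: rewrite 'Last, First' as 'First Last', then keep
    only lowercase letters, so spacing, case and punctuation are ignored."""
    if "," in raw:
        last, first = (part.strip() for part in raw.split(",", 1))
        raw = f"{first} {last}"
    return re.sub(r"[^a-z]", "", raw.lower())


WEEKDAY = re.compile(r"^(?:Mon|Tues|Wednes|Thurs|Fri|Satur|Sun)day\s+")
FULL_DATE_FORMATS = ("%B %d, %Y", "%m/%d/%Y", "%d %B %Y")  # %m/%d assumes US order


def normalize_date(raw):
    """Return YYYY-MM-DD, YYYY-MM or YYYY, depending on how much the input
    actually says; None if no known format matches."""
    # Drop a leading weekday name and treat an em dash as a comma.
    text = WEEKDAY.sub("", raw.strip()).replace("\u2014", ",")
    for fmt in FULL_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            pass
    try:
        parsed = datetime.strptime(text, "%B %Y")  # month and year only
        return f"{parsed.year:04d}-{parsed.month:02d}"
    except ValueError:
        pass
    return text if re.fullmatch(r"\d{4}", text) else None


names = ["Leonardo da Vinci", "da Vinci, Leonardo", "Vinci, Leonardo da", "Vinci, Leoda",
         "Davinci, Leonardo", "Leo Davinci", "L. D. Vinci", "Leonardo D. Vinci"]
dates = ["May 2, 1519", "05/02/1519", "1519", "2 May 1519", "May 1519",
         "May 2\u2014 1519", "Monday 2 May 1519", "Dies Lunae ii Maius MDXIX"]
print(sorted({name_key(n) for n in names}))
print([normalize_date(d) for d in dates])
```

On the eight name variants this yields four distinct keys, so simple normalization resolves some duplicates but not abbreviations such as "L. D. Vinci"; those still need fuzzy matching or human review. The Latin date is not recognized and comes back as `None`, which flags it for manual handling.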

Trends in Data Quality (Agosta, 2005)
- Data quality now includes metadata quality (cf. tags on Flickr.com)
- Data profiling is the first step
  - The use of analytical techniques about data for the purpose of developing a thorough knowledge of its content, structure and quality
  - IQ tools (e.g., DataFlux) are available
- Policy-based information quality is necessary
  - Design a better process of controlling IQ

Agosta, L. (2005). Trends in data quality. DM Review, 15(2), 34-35.
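
Data profiling can start very simply. The sketch below, assuming a table held as a list of Python dicts, reports per column the row count, missing values, distinct values, and value "patterns" (letters mapped to `A`, digits to `9`), which quickly exposes mixed formats. It is an illustrative stand-in written for this example, not how DataFlux or any other IQ tool works.

```python
import re
from collections import Counter


def value_pattern(value):
    """Reduce a value to its 'shape': letters become A, digits become 9."""
    return re.sub(r"[0-9]", "9", re.sub(r"[A-Za-z]", "A", value))


def profile_column(values):
    """Summarize one column: size, missing values, distinct values, format patterns."""
    present = [v for v in values if v not in (None, "")]
    patterns = Counter(value_pattern(str(v)) for v in present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "patterns": dict(patterns.most_common()),
    }


def profile_table(rows):
    """Profile every column of a table given as a list of dicts."""
    columns = {key for row in rows for key in row}
    return {col: profile_column([row.get(col) for row in rows]) for col in sorted(columns)}


rows = [
    {"name": "Leonardo da Vinci", "died": "05/02/1519"},
    {"name": "da Vinci, Leonardo", "died": "2 May 1519"},
    {"name": "Leo Davinci", "died": ""},
    {"name": "L. D. Vinci"},  # the 'died' field is absent here
]
for column, summary in profile_table(rows).items():
    print(column, summary)
```

Two different patterns in one date column is exactly the kind of finding that profiling is meant to surface before any cleanup policy is designed.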

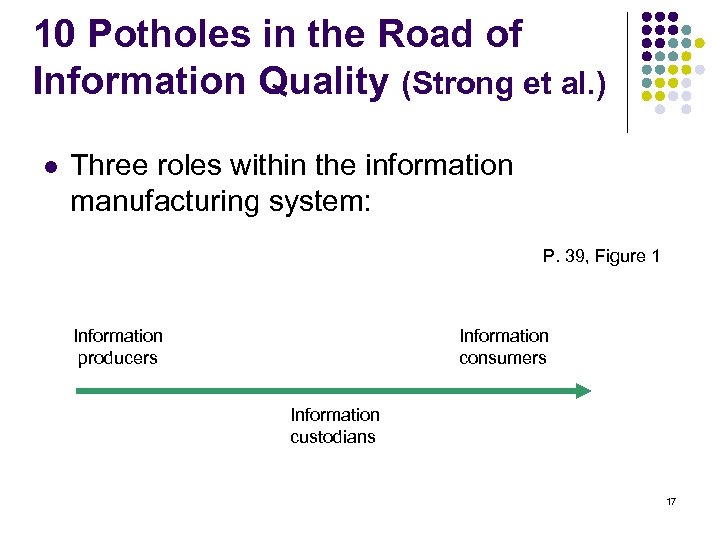

10 Potholes in the Road of Information Quality (Strong et al.)
- Three roles within the information manufacturing system (p. 39, Figure 1):
  - Information producers
  - Information consumers
  - Information custodians

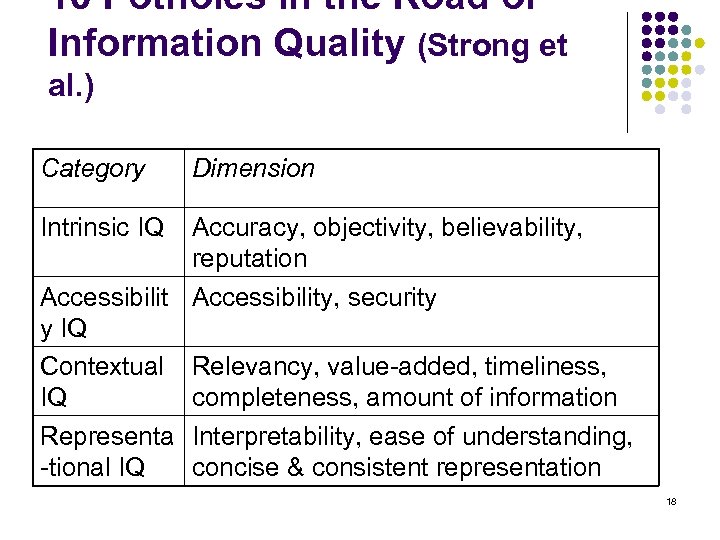

10 Potholes in the Road of Information Quality (Strong et al.)
- Intrinsic IQ: accuracy, objectivity, believability, reputation
- Accessibility IQ: accessibility, security
- Contextual IQ: relevancy, value-added, timeliness, completeness, amount of information
- Representational IQ: interpretability, ease of understanding, concise & consistent representation

Problem with Numbers in Systems
- This example is typical and illustrates how potholes can quickly multiply
- http://www.fbi.gov/ucr.htm
- See 1999 (p. 14), Wyoming (cf. The Laramie Project)

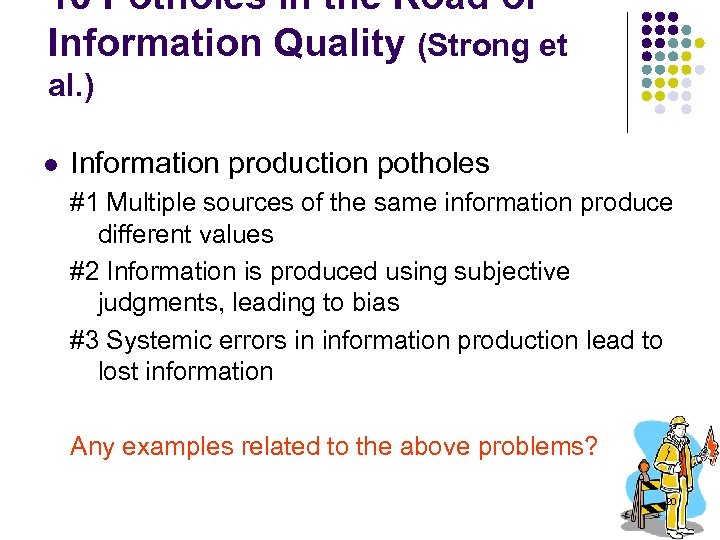

10 Potholes in the Road of Information Quality (Strong et al.)
- Information production potholes
  - #1 Multiple sources of the same information produce different values
  - #2 Information is produced using subjective judgments, leading to bias
  - #3 Systemic errors in information production lead to lost information
- Any examples related to the above problems?
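
Pothole #1 can at least be detected automatically when the sources share a record key. This sketch assumes each source is a dict from a record key to a reported value; the function name, source names, keys, and numbers are all made up for illustration.

```python
from collections import defaultdict


def find_conflicts(sources):
    """sources maps a source name to {record key: value}.
    Return only the keys whose values disagree, with each source's value."""
    by_key = defaultdict(dict)
    for source, records in sources.items():
        for key, value in records.items():
            by_key[key][source] = value
    return {
        key: reported
        for key, reported in by_key.items()
        if len(set(reported.values())) > 1
    }


sources = {
    "source_a": {"record_1": 25, "record_2": 30, "record_3": 7},
    "source_b": {"record_1": 25, "record_2": 28},
    "source_c": {"record_1": 24, "record_3": 7},
}
for key, reported in find_conflicts(sources).items():
    print(key, reported)
```

Detection only says *that* the sources disagree; deciding which value is right still requires knowing how each source produced it.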

10 Potholes in the Road of Information Quality (Strong et al.)
- Information storage potholes
  - #4 Large volumes of stored information make it difficult to access in a reasonable time
  - #5 Distributed heterogeneous systems lead to inconsistent definitions, formats, and values
  - #6 Nonnumeric information is difficult to index
- Any examples related to the above problems?
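
For pothole #6, the classic remedy for text is an inverted index that maps each word to the documents containing it, so a query touches only the matching entries instead of scanning every record (which also bears on #4). A minimal sketch, assuming lowercase alphanumeric tokens and boolean AND queries; the function names and documents are invented.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Split text into lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """docs: {doc_id: text}. Map each term to the set of doc ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every query term (boolean AND)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))  # copy so the index is not modified
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    1: "Customer complaint about late delivery",
    2: "Delivery address changed",
    3: "Invoice paid late",
}
idx = build_index(docs)
print(search(idx, "late delivery"))
```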

10 Potholes in the Road of Information Quality (Strong et al.)
- Information utilization potholes
  - #7 Automated content analysis across information collections is not yet available
  - #8 Consumers’ needs for information change
  - #9 Easy access to information may conflict with requirements for security, privacy, and confidentiality
  - #10 Lack of sufficient computing resources limits access
- Any examples related to the above problems?

A Framework of IQ Assessment (Stvilia et al., 2005)
- In Wikipedia, 4 types of “agents” exist:
  - Editor agents: add new content
  - Information Quality Assurance agents: control & enhance the quality of content
  - Malicious agents: degrade article quality
  - Environmental agents: represent temporal changes in the real world

A Framework of IQ Assessment (Stvilia et al., 2005)
- Featured article quality assessment criteria:
  1. Comprehensive
  2. Accurate and verifiable by including references
  3. Stable: not changing often
  4. Well-written
  5. Uncontroversial
  6. Compliant with Wikipedia standards and project guides
  7. Having appropriate images with acceptable copyright status
  8. Having appropriate length, using summary style and focusing on the main topic

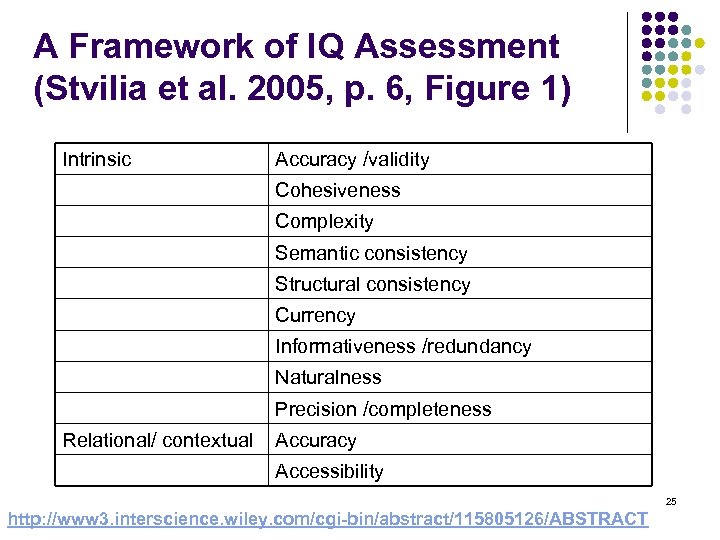

A Framework of IQ Assessment (Stvilia et al., 2005, p. 6, Figure 1)
- Intrinsic: accuracy/validity, cohesiveness, complexity, semantic consistency, structural consistency, currency, informativeness/redundancy, naturalness, precision/completeness
- Relational/contextual: accuracy, accessibility
- http://www3.interscience.wiley.com/cgi-bin/abstract/115805126/ABSTRACT

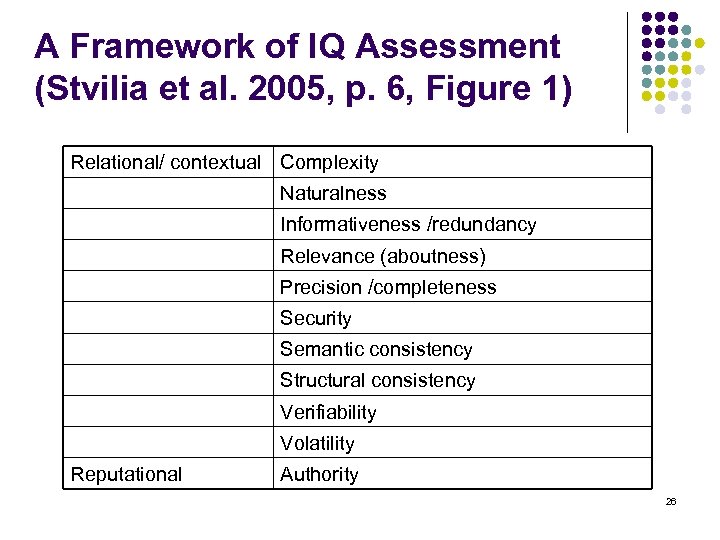

A Framework of IQ Assessment (Stvilia et al., 2005, p. 6, Figure 1)
- Relational/contextual: complexity, naturalness, informativeness/redundancy, relevance (aboutness), precision/completeness, security, semantic consistency, structural consistency, verifiability, volatility
- Reputational: authority

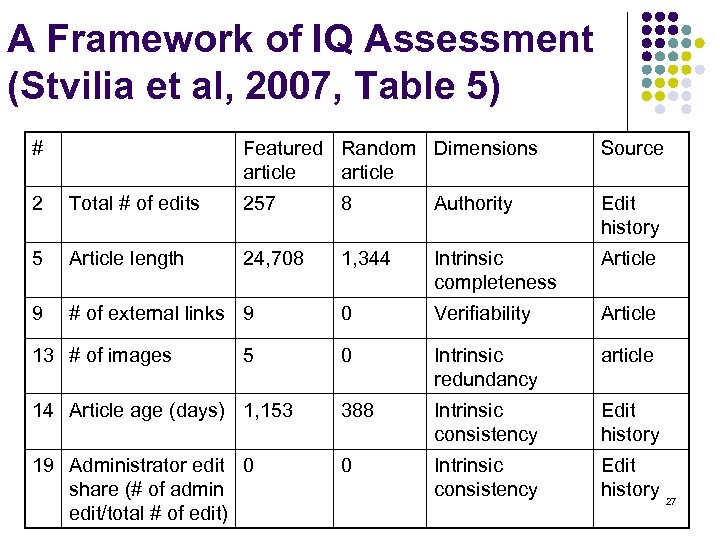

A Framework of IQ Assessment (Stvilia et al., 2007, Table 5)

| # | Metric | Featured article | Random article | Dimension | Source |
|---|---|---|---|---|---|
| 2 | Total # of edits | 257 | 8 | Authority | Edit history |
| 5 | Article length | 24,708 | 1,344 | Intrinsic completeness | Article |
| 9 | # of external links | 9 | 0 | Verifiability | Article |
| | | | 0 | Intrinsic redundancy | Article |
| 14 | Article age (days) | 1,153 | 388 | Intrinsic consistency | Edit history |
| 19 | Administrator edit share (# of admin edits / total # of edits) | 0 | 0 | Intrinsic consistency | Edit history |
| 13 | # of images | 5 | | | |
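
The edit-history metrics in the table (total edits, administrator edit share, article age) are simple to compute once the history is available. This sketch assumes a hypothetical `Edit` record with an editor name, an administrator flag, and the edit date; it is not Stvilia et al.'s code, and it takes article age as days since the earliest recorded edit.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Edit:
    editor: str
    is_admin: bool  # whether the editor held administrator rights
    day: date


def edit_history_metrics(edits, today):
    """Compute total edits, administrator edit share and article age for one article."""
    if not edits:
        raise ValueError("an article needs at least one edit")
    total = len(edits)
    admin_edits = sum(1 for edit in edits if edit.is_admin)
    first_edit = min(edit.day for edit in edits)
    return {
        "total_edits": total,
        "admin_edit_share": admin_edits / total,  # # of admin edits / total # of edits
        "article_age_days": (today - first_edit).days,
    }


history = [
    Edit("a", False, date(2007, 1, 1)),
    Edit("b", True, date(2007, 1, 5)),
    Edit("c", False, date(2007, 2, 1)),
    Edit("d", True, date(2007, 3, 1)),
]
print(edit_history_metrics(history, today=date(2007, 3, 31)))
```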

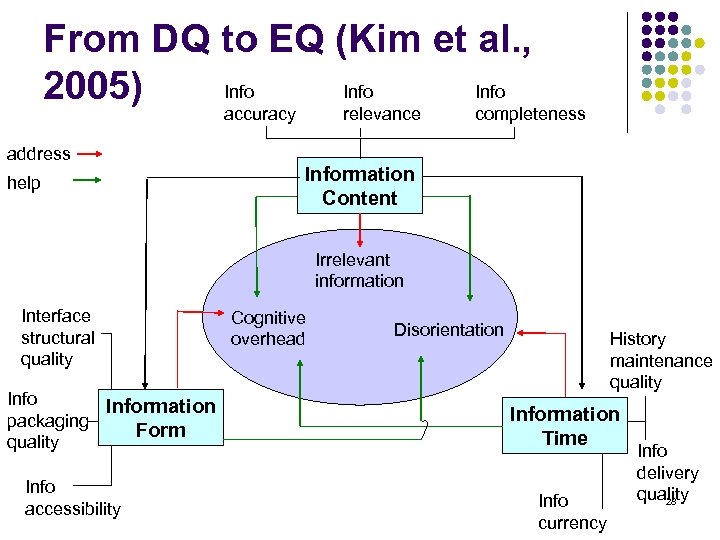

From DQ to EQ (Kim et al., Info 2005)
- Diagram terms: accuracy, address, help, relevance, completeness, information content, irrelevant information, interface structural quality, information packaging, form quality, info accessibility, cognitive overhead, disorientation, history maintenance quality, information time, info currency, info delivery quality

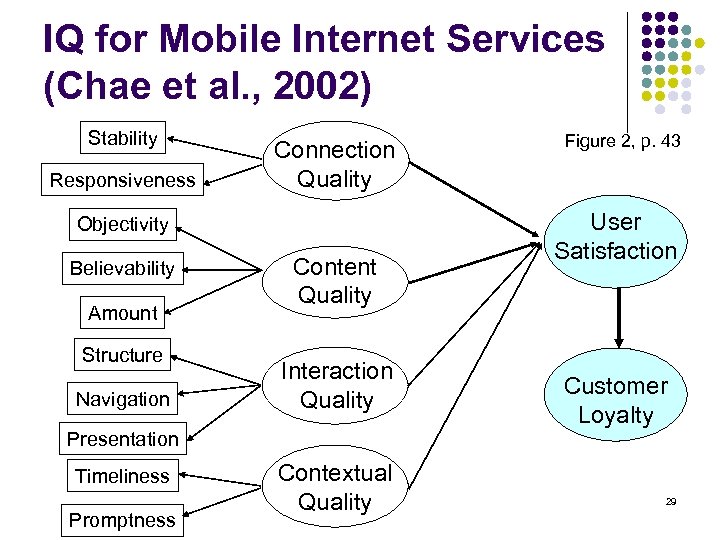

IQ for Mobile Internet Services (Chae et al., 2002)
- Model (Figure 2, p. 43): connection quality, content quality, interaction quality, and contextual quality, leading to user satisfaction and customer loyalty
- Measured dimensions: stability, responsiveness, objectivity, believability, amount, structure, navigation, presentation, timeliness, promptness

Group Activity
- We need 5 teams (5 people/team).
- Each team will create a metric and evaluate the information quality of www.amazon.com and www.bn.com:
  1. Objectives of the IS
  2. The purpose of the measurement
  3. What to measure (link to #1)
  4. How to measure
  5. Limitations
- Discuss the results with the whole class.

To Wrap-Up
- “If they know what to look for, organizations can anticipate and handle IQ problems before they trigger a crisis” (Strong et al.)
- We can all help build a stronger and smoother “road to information quality” through understanding, mindfulness, and diligence.
- And if we do not do this, who will?
