
- Number of slides: 39

Knowledge Support for Mining Parallel Performance Data
Allen D. Malony, Kevin Huck
{malony, khuck}@cs.uoregon.edu
http://www.cs.uoregon.edu/research/tau
Department of Computer and Information Science
Performance Research Laboratory
University of Oregon


Outline
- Why mine parallel performance data?
- Our first attempt
  - PerfDMF
  - PerfExplorer
- How did we do?
- Why knowledge-driven data mining?
- PerfExplorer v2
  - Analysis process automation
  - Metadata encoding and incorporation
  - Inference engine
  - Object persistence and provenance
- Analysis examples

APART, November 11, 2007 · Knowledge Support for Mining Parallel Performance Data · 2


Motivation for Performance Data Mining
- High-end parallel applications and systems evolution
  - More sophisticated, integrated, heterogeneous operation
  - Higher levels of abstraction
  - Larger scales of execution
- Evolution trends change the performance landscape
- Parallel performance data becomes more complex
  - Multivariate, higher dimensionality, heterogeneous
  - Greater scale and larger data size
- Standard analysis techniques are overwhelmed
- Need data management and analysis automation
- Provide a foundation for performance analytics


Performance Data Mining Objectives
- Conduct parallel performance analysis in a systematic, collaborative, and reusable manner
  - Manage performance data and complexity
  - Discover performance relationships and properties
  - Automate the performance investigation process
- Multi-experiment performance analysis
- Large-scale performance data reduction
  - Summarize characteristics of large processor runs
- Implement an extensible analysis framework
  - Abstraction/automation of data mining operations
  - Interface to existing analysis and data mining tools


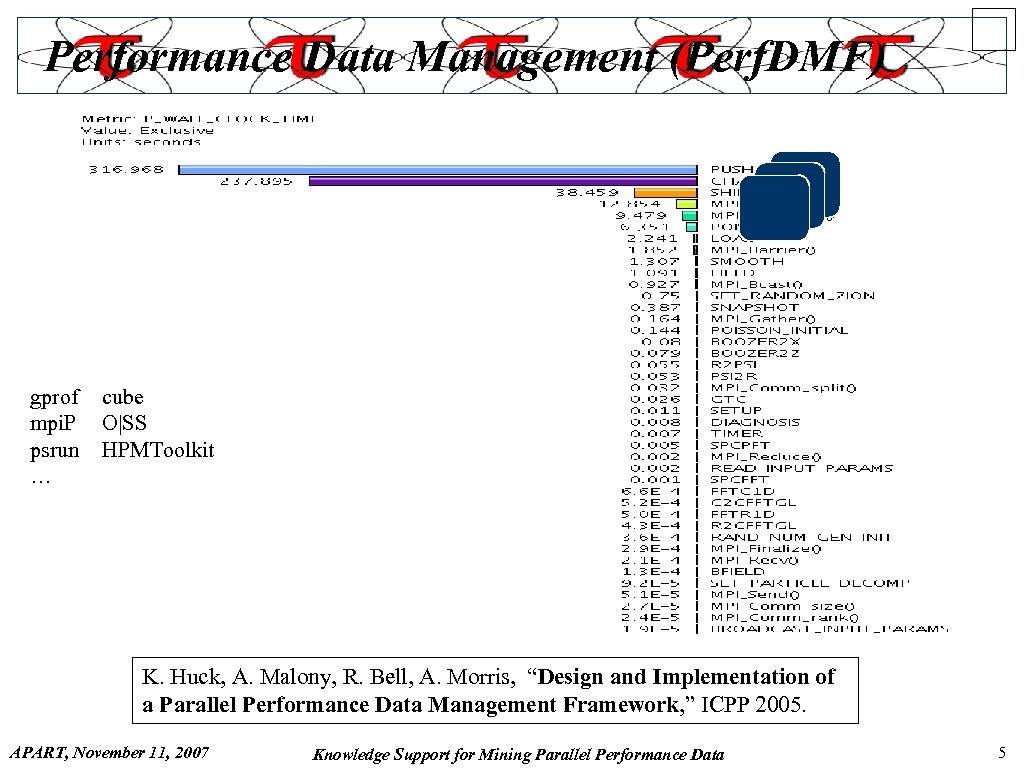

Performance Data Management (PerfDMF)
Figure: profile data sources shown feeding PerfDMF: gprof, mpiP, psrun, …, cube, O|SS, HPMToolkit
K. Huck, A. Malony, R. Bell, A. Morris, "Design and Implementation of a Parallel Performance Data Management Framework," ICPP 2005.
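PerfDMF keeps the imported profiles in a relational database organized as application, experiment, and trial. A minimal sketch of that hierarchy with Python's built-in `sqlite3`, assuming a hypothetical, heavily simplified schema (the table and column names are illustrative, not PerfDMF's real schema):

```python
import sqlite3

# Hypothetical simplified schema: application -> experiment -> trial -> per-thread values.
SCHEMA = """
CREATE TABLE application (id INTEGER PRIMARY KEY, name TEXT UNIQUE);
CREATE TABLE experiment  (id INTEGER PRIMARY KEY,
                          application INTEGER REFERENCES application(id), name TEXT);
CREATE TABLE trial       (id INTEGER PRIMARY KEY,
                          experiment INTEGER REFERENCES experiment(id), name TEXT);
CREATE TABLE profile     (trial INTEGER REFERENCES trial(id), thread INTEGER,
                          event TEXT, metric TEXT, exclusive REAL);
"""

def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def add_trial(conn, app, exp, trial):
    """Reuse or create the application and experiment rows, then create a trial row."""
    cur = conn.cursor()
    cur.execute("INSERT OR IGNORE INTO application (name) VALUES (?)", (app,))
    app_id = cur.execute("SELECT id FROM application WHERE name = ?", (app,)).fetchone()[0]
    row = cur.execute("SELECT id FROM experiment WHERE application = ? AND name = ?",
                      (app_id, exp)).fetchone()
    if row is None:
        cur.execute("INSERT INTO experiment (application, name) VALUES (?, ?)", (app_id, exp))
        exp_id = cur.lastrowid
    else:
        exp_id = row[0]
    cur.execute("INSERT INTO trial (experiment, name) VALUES (?, ?)", (exp_id, trial))
    return cur.lastrowid

def get_trial(conn, app, exp, trial):
    """Look up a trial id by its application/experiment/trial names (None if absent)."""
    row = conn.execute(
        """SELECT t.id FROM trial t
           JOIN experiment e ON t.experiment = e.id
           JOIN application a ON e.application = a.id
           WHERE a.name = ? AND e.name = ? AND t.name = ?""",
        (app, exp, trial)).fetchone()
    return None if row is None else row[0]
```

Looking a trial up by its three names, e.g. `get_trial(conn, "sweep3d", "jaguar", "16")`, is roughly the kind of query an analysis script issues before any mining starts.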


Analysis Framework (PerfExplorer)
- Leverage existing TAU infrastructure
  - Focus on parallel profiles
  - Build on PerfDMF
- Support large-scale performance analysis
  - Multiple experiments
  - Parametric studies
- Apply data mining operations
  - Comparative, clustering, correlation, dimension reduction, …
  - Interface to existing tools (Weka, R)
  - Abstraction/automation


Performance Data Mining (PerfExplorer)
K. Huck and A. Malony, "PerfExplorer: A Performance Data Mining Framework For Large-Scale Parallel Computing," SC 2005.


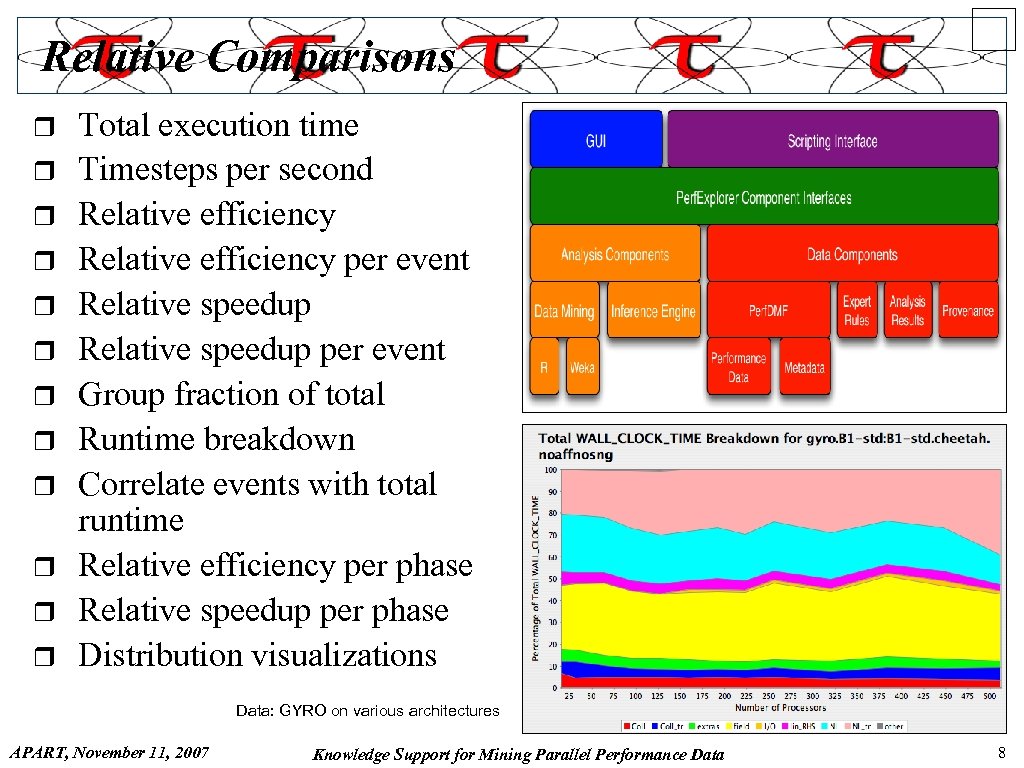

Relative Comparisons
- Total execution time
- Timesteps per second
- Relative efficiency per event
- Relative speedup per event
- Group fraction of total
- Runtime breakdown
- Correlate events with total runtime
- Relative efficiency per phase
- Relative speedup per phase
- Distribution visualizations

Data: GYRO on various architectures
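Several of these comparisons reduce to simple ratios. A sketch assuming the usual strong-scaling definitions, computed per event: relative speedup S = T(p_base) / T(p) and relative efficiency E = S · p_base / p (PerfExplorer's exact formulas may differ):

```python
def relative_scaling(times, base_procs):
    """times: {procs: {event: seconds}}, one entry per processor count.
    Returns {procs: {event: (speedup, efficiency)}} relative to the base_procs run."""
    base = times[base_procs]
    result = {}
    for procs, events in sorted(times.items()):
        result[procs] = {}
        for event, t in events.items():
            if event not in base or t <= 0:
                continue  # no baseline (or no time) to compare against
            speedup = base[event] / t
            efficiency = speedup * base_procs / procs
            result[procs][event] = (speedup, efficiency)
    return result
```

An event whose time does not shrink as processors are added (for example, communication) shows speedup near 1 and efficiency falling toward p_base / p.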


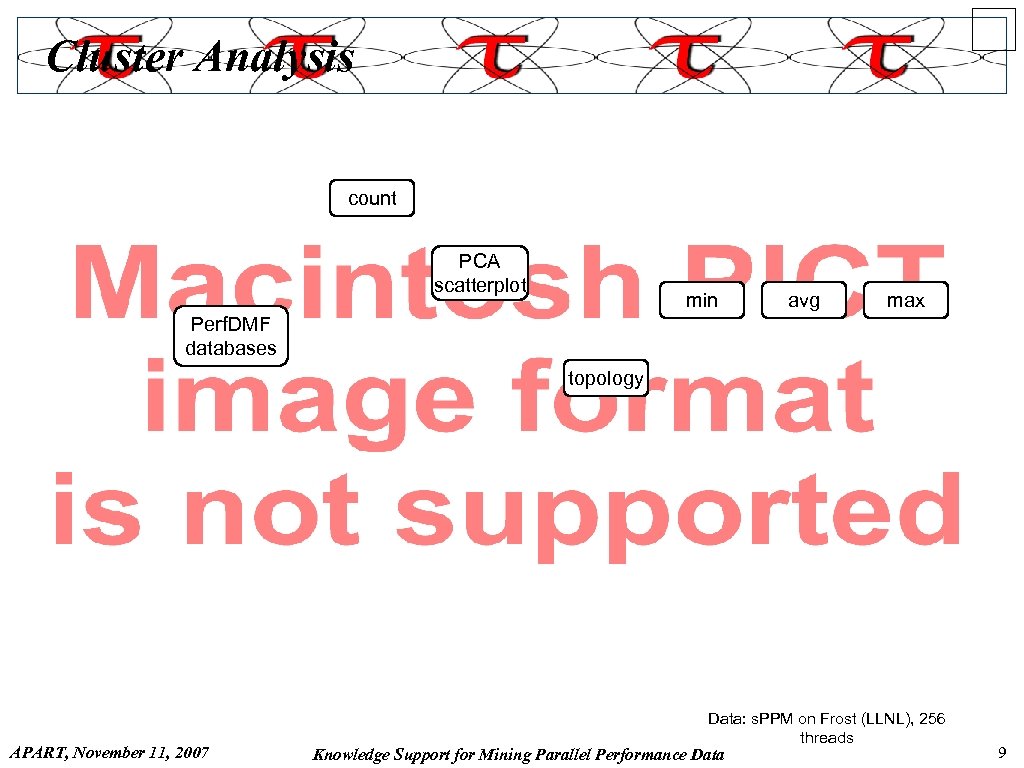

Cluster Analysis
Figure panels: cluster counts; PCA scatterplot; min/avg/max cluster profiles; PerfDMF databases; topology view
Data: sPPM on Frost (LLNL), 256 threads
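PerfExplorer can hand clustering to existing tools such as Weka and R. To show the idea only, here is plain Lloyd's k-means over per-thread vectors (for example, exclusive time for each of a fixed list of events); seeding with the first k threads is a simplification a real tool would not rely on:

```python
def kmeans(points, k, iterations=100):
    """Lloyd's k-means. `points` is a list of equal-length numeric tuples, e.g.
    one tuple of per-event exclusive times per thread. Returns (labels, centroids)."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Simplified deterministic seeding: the first k points become the centroids.
    centroids = [list(p) for p in points[:k]]
    labels = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance).
        new_labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids
```

Threads that land in the same cluster behave alike; a PCA projection is then one way to plot the clusters in two dimensions.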


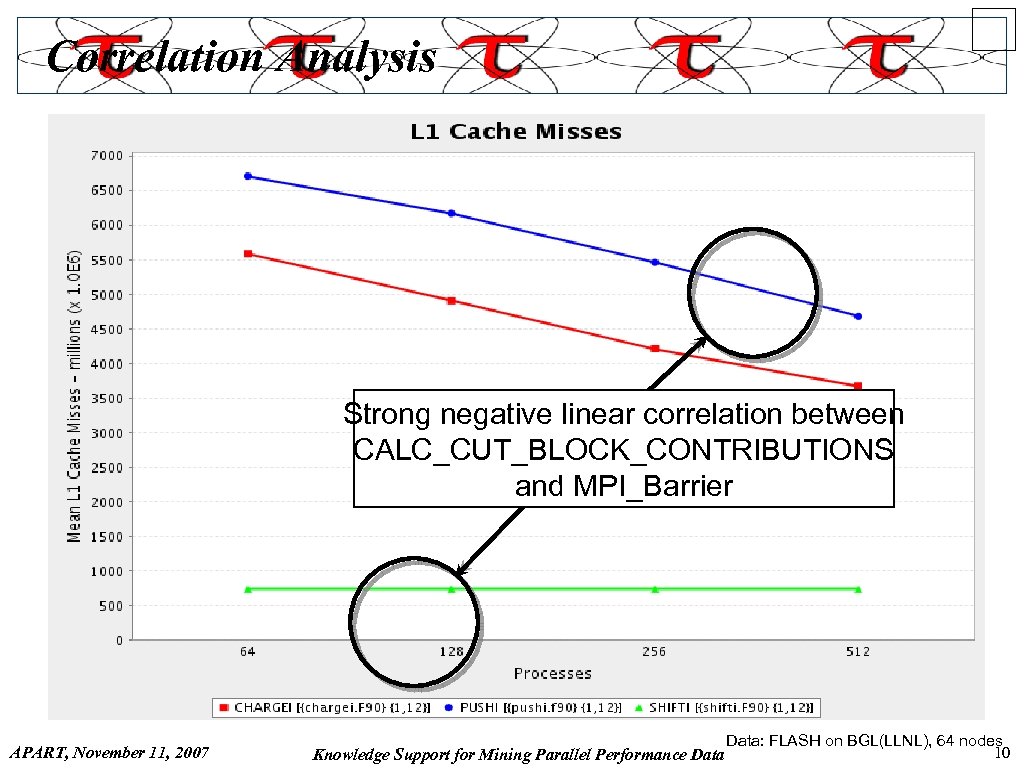

Correlation Analysis
- Strong negative linear correlation between CALC_CUT_BLOCK_CONTRIBUTIONS and MPI_Barrier

Data: FLASH on BG/L (LLNL), 64 nodes
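One plausible reading of such a negative correlation: threads that spend longer in the computation reach the barrier later and so wait less there, while fast threads wait longer. Pearson's coefficient over per-thread values measures this; a sketch, with made-up per-thread times in the usage note:

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples (e.g. one value per thread)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (sx * sy)
```

With hypothetical compute times `[8.0, 9.0, 10.0, 11.0]` and barrier times `[3.0, 2.0, 1.0, 0.0]`, the coefficient is -1 up to rounding: every extra second of computation is one second less of waiting.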


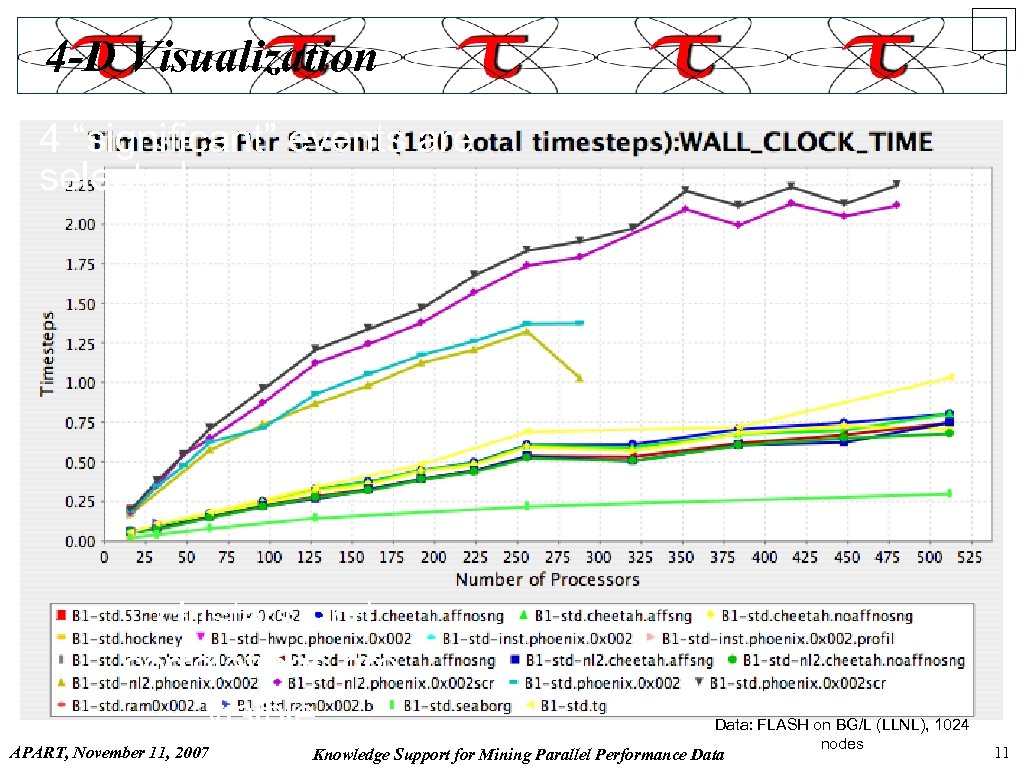

4-D Visualization
- 4 “significant” events are selected
- Clusters and correlations are visible

Data: FLASH on BG/L (LLNL), 1024 nodes


PerfExplorer Critique (Describe vs. Explain)
- Specific parametric study support (not general)
- No way to capture the analysis processes
- No analysis history: how were these results generated?
- PerfExplorer just redescribed the performance results
- PerfExplorer should explain performance phenomena
  - What are the causes of the observed performance?
  - What are the factors and how do they interrelate?
  - Performance analytics, forensics, and decision support
- Automated analysis needs good, informed feedback
  - Iterative tuning, performance regression testing
- Performance model generation requires interpretation


How to explain behavior? Add Knowledge!
- Offline parallel performance tools should not have to treat the application and system as a “black box”
- Need to add knowledge to do more intelligent things
- Where does it come from?
  - Experiment context
  - Application-specific information
  - System-specific performance
  - General performance expertise
- We need better methods and tools for
  - Integrating meta-information
  - Knowledge-based performance problem solving


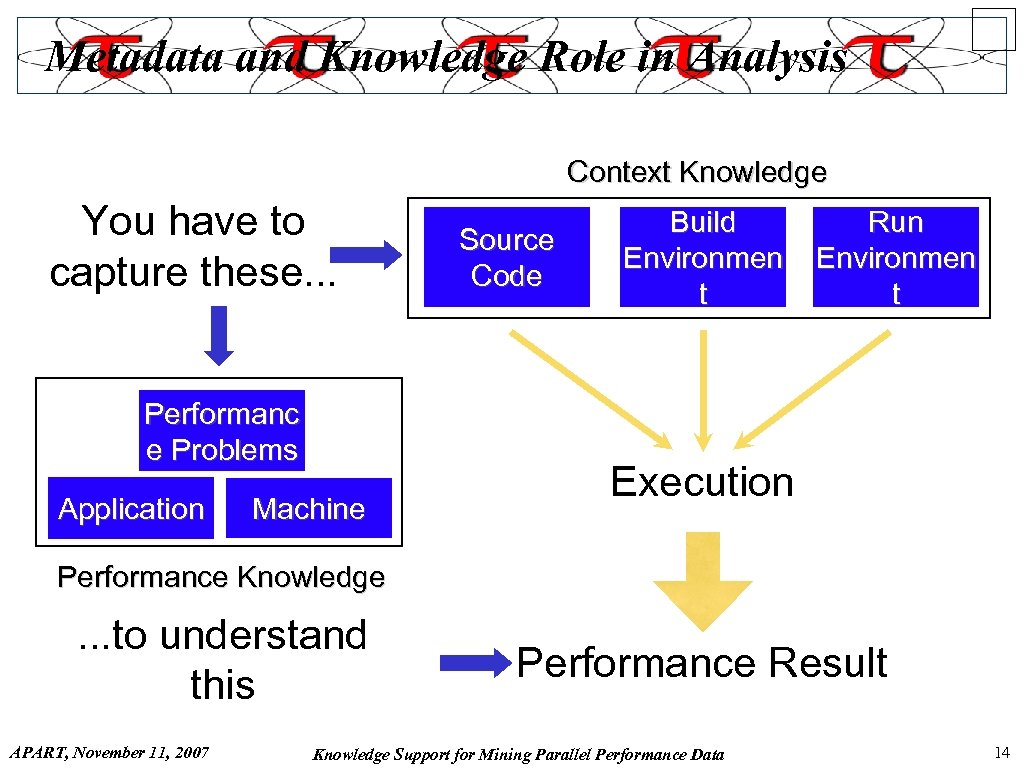

Metadata and Knowledge Role in Analysis
Figure: “You have to capture these...” context knowledge (application, machine, source code, build environment, run environment, execution) and performance knowledge (performance problems) “...to understand this”: the performance result.


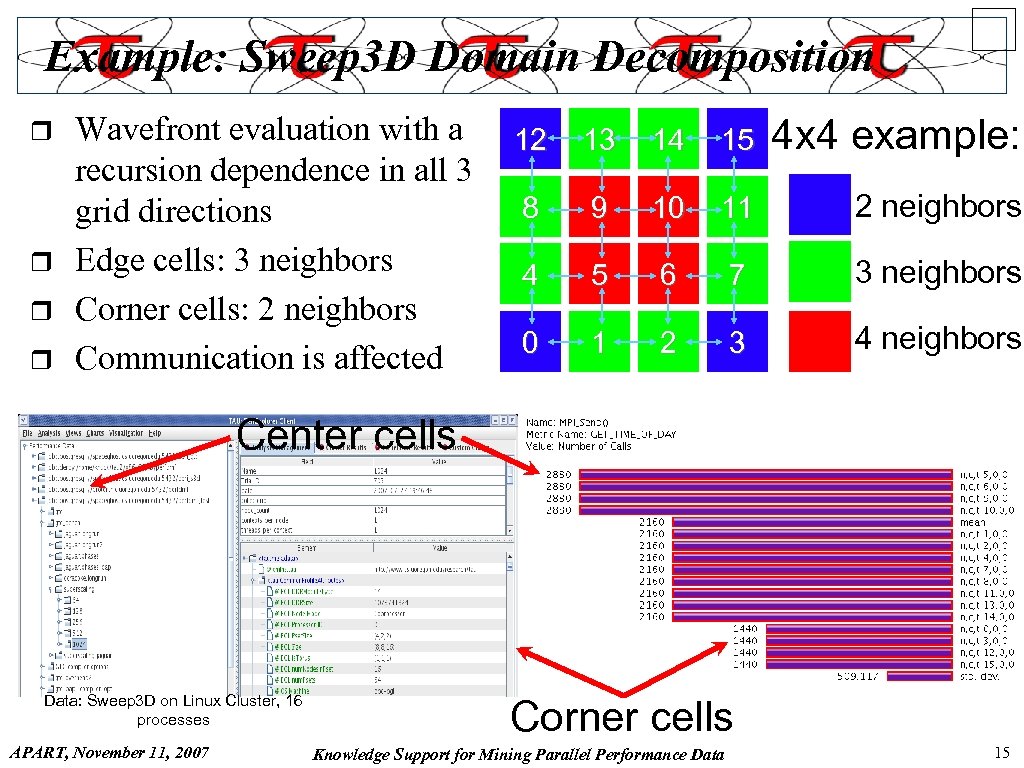

Example: Sweep3D Domain Decomposition
- Wavefront evaluation with a recursion dependence in all 3 grid directions
- Edge cells: 3 neighbors
- Corner cells: 2 neighbors
- Center cells: 4 neighbors
- Communication is affected

4 x 4 example (process ranks, top row first): 12 13 14 15 / 8 9 10 11 / 4 5 6 7 / 0 1 2 3
Data: Sweep3D on Linux Cluster, 16 processes
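The neighbor counts follow directly from each rank's position in the process grid. A sketch, assuming ranks are numbered row-major from the bottom-left corner as in the 4 x 4 example, and that only north/south/east/west neighbors exchange data:

```python
def neighbor_counts(px, py):
    """Number of logical N/S/E/W neighbors for each rank of a px-by-py process grid,
    with ranks numbered row-major starting at the bottom-left corner."""
    counts = {}
    for rank in range(px * py):
        x, y = rank % px, rank // px
        n = 0
        n += x > 0        # west neighbor exists
        n += x < px - 1   # east neighbor exists
        n += y > 0        # south neighbor exists
        n += y < py - 1   # north neighbor exists
        counts[rank] = n
    return counts
```

For the 4 x 4 grid this gives 2 for ranks 0, 3, 12, and 15, 3 for the eight other edge ranks, and 4 for ranks 5, 6, 9, and 10, so corner ranks send and receive fewer messages than center ranks.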


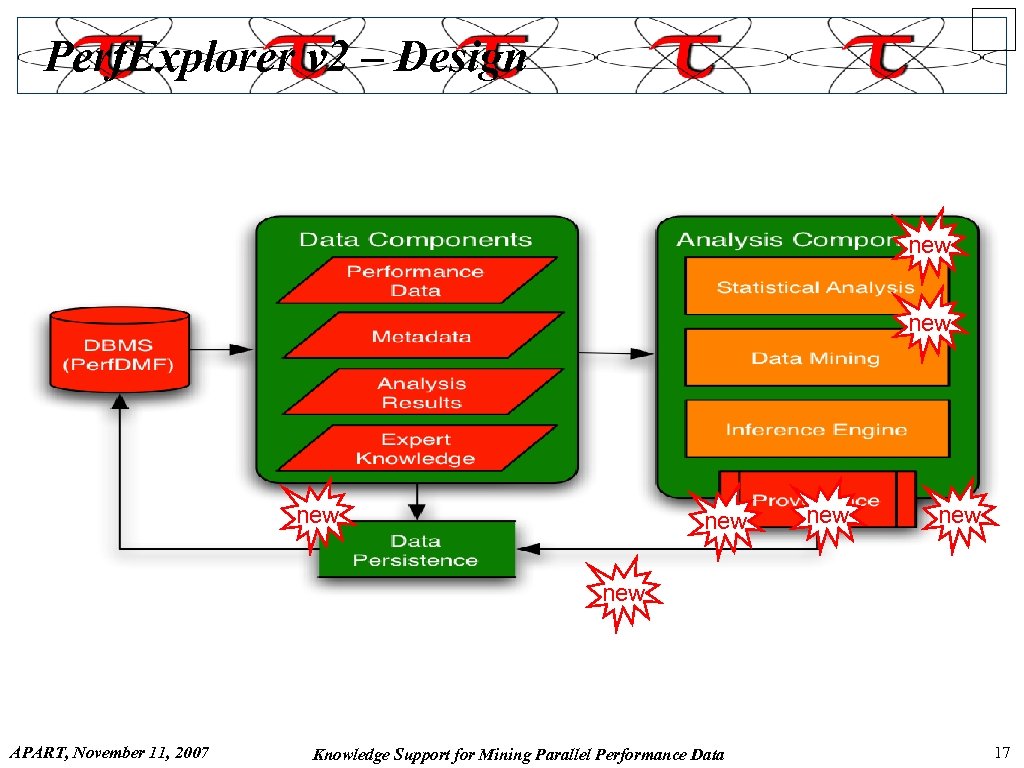

PerfExplorer v2 – Requirements and Features
- Component-based analysis process
  - Analysis operations implemented as modules
  - Linked together in analysis process and workflow
- Scripting
  - Provides process/workflow development and automation
- Metadata input, management, and access
- Inference engine
  - Reasoning about causes of performance phenomena
  - Analysis knowledge captured in expert rules
- Persistence of intermediate results
- Provenance
  - Provides historical record of analysis results
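The component-based process can be pictured as modules that each turn a list of results into a new list, linked in sequence. A minimal sketch of that idea; the `Workflow` class and the operation signature are hypothetical, not PerfExplorer's Java API:

```python
from typing import Any, Callable, List, Tuple

# Hypothetical module interface: a module maps a list of results to a new list of results.
Operation = Callable[[List[Any]], List[Any]]

class Workflow:
    """Links analysis modules into a linear workflow and runs them in order."""

    def __init__(self) -> None:
        self.steps: List[Tuple[str, Operation]] = []

    def add(self, name: str, op: Operation) -> "Workflow":
        """Append a named module; returns self so calls can be chained."""
        self.steps.append((name, op))
        return self

    def describe(self) -> List[str]:
        """The module names in execution order, i.e. a record of the process."""
        return [name for name, _ in self.steps]

    def run(self, data: List[Any]) -> List[Any]:
        for _, op in self.steps:
            data = op(data)  # each module's output feeds the next module
        return data
```

For example, a workflow that converts each profile to fractions of its total and then keeps events at or above 25% maps `[{"A": 6.0, "B": 3.0, "C": 1.0}]` to `[{"A": 0.6, "B": 0.3}]`. Because the steps are data, the same workflow can be rerun on another trial.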


PerfExplorer v2 – Design
Figure: design diagram with two components labeled “new”


Component Interaction


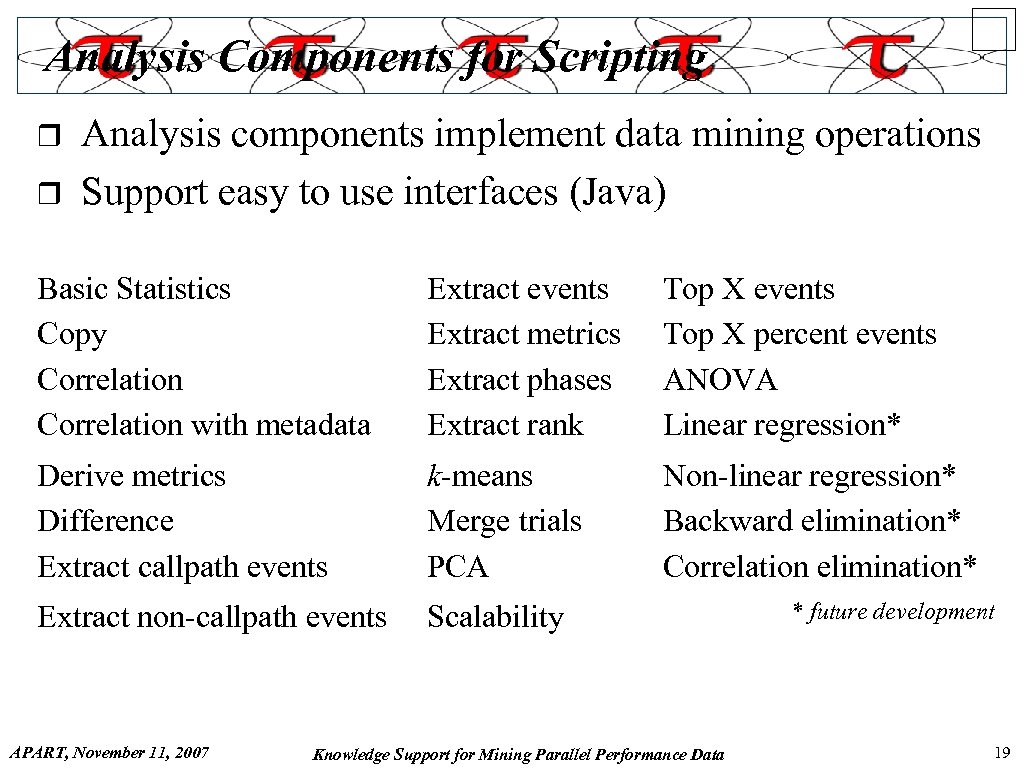

Analysis Components for Scripting
- Analysis components implement data mining operations
- Support easy-to-use interfaces (Java)

Components: Basic Statistics, Copy, Correlation with metadata, Extract events, Extract metrics, Extract phases, Extract rank, Top X events, Top X percent events, ANOVA, Linear regression\*, Derive metrics, Difference, Extract callpath events, k-means, Merge trials, PCA, Non-linear regression\*, Backward elimination\*, Correlation elimination\*, Extract non-callpath events, Scalability

\* future development
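Three of the simpler components, sketched over a profile represented as a dict from event name to exclusive time. The sketch assumes TAU's convention of naming callpath events `parent => child`, and reads “Top X percent” as events holding at least X% of the total, which is only one possible interpretation:

```python
def extract_non_callpath(profile):
    """Drop callpath events, whose names have the form "parent => child"."""
    return {e: t for e, t in profile.items() if "=>" not in e}

def top_x_events(profile, x):
    """The x events with the largest values (e.g. exclusive time)."""
    ranked = sorted(profile.items(), key=lambda kv: kv[1], reverse=True)
    return dict(ranked[:x])

def top_x_percent_events(profile, percent):
    """Events whose value is at least `percent` % of the profile's total (assumed reading)."""
    total = sum(profile.values())
    if total <= 0:
        return {}
    return {e: t for e, t in profile.items() if t * 100.0 / total >= percent}
```

Dropping callpath events first matters: otherwise a callpath entry such as `main => PUSHI` would double-count time already attributed to `PUSHI`.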


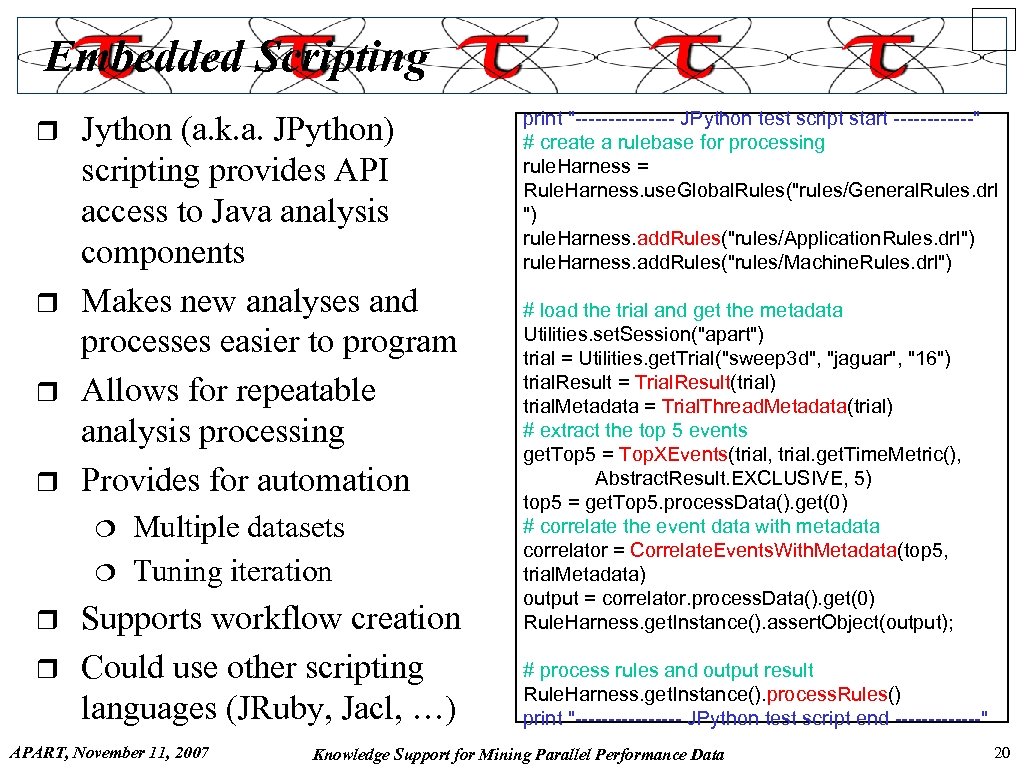

Embedded Scripting
- Jython (a.k.a. JPython) scripting provides API access to Java analysis components
- Makes new analyses and processes easier to program
- Allows for repeatable analysis processing
- Provides for automation
  - Multiple datasets
  - Tuning iteration
- Supports workflow creation
- Could use other scripting languages (JRuby, Jacl, …)

```python
# Jython 2 script, run inside PerfExplorer (which provides these Java classes)
print "-------- JPython test script start ------"
# create a rulebase for processing
ruleHarness = RuleHarness.useGlobalRules("rules/GeneralRules.drl")
ruleHarness.addRules("rules/ApplicationRules.drl")
ruleHarness.addRules("rules/MachineRules.drl")
# load the trial and get the metadata
Utilities.setSession("apart")
trial = Utilities.getTrial("sweep3d", "jaguar", "16")
trialResult = TrialResult(trial)
trialMetadata = TrialThreadMetadata(trial)
# extract the top 5 events
getTop5 = TopXEvents(trial, trial.getTimeMetric(), AbstractResult.EXCLUSIVE, 5)
top5 = getTop5.processData().get(0)
# correlate the event data with metadata
correlator = CorrelateEventsWithMetadata(top5, trialMetadata)
output = correlator.processData().get(0)
RuleHarness.getInstance().assertObject(output)
# process rules and output result
RuleHarness.getInstance().processRules()
print "-------- JPython test script end -------"
```


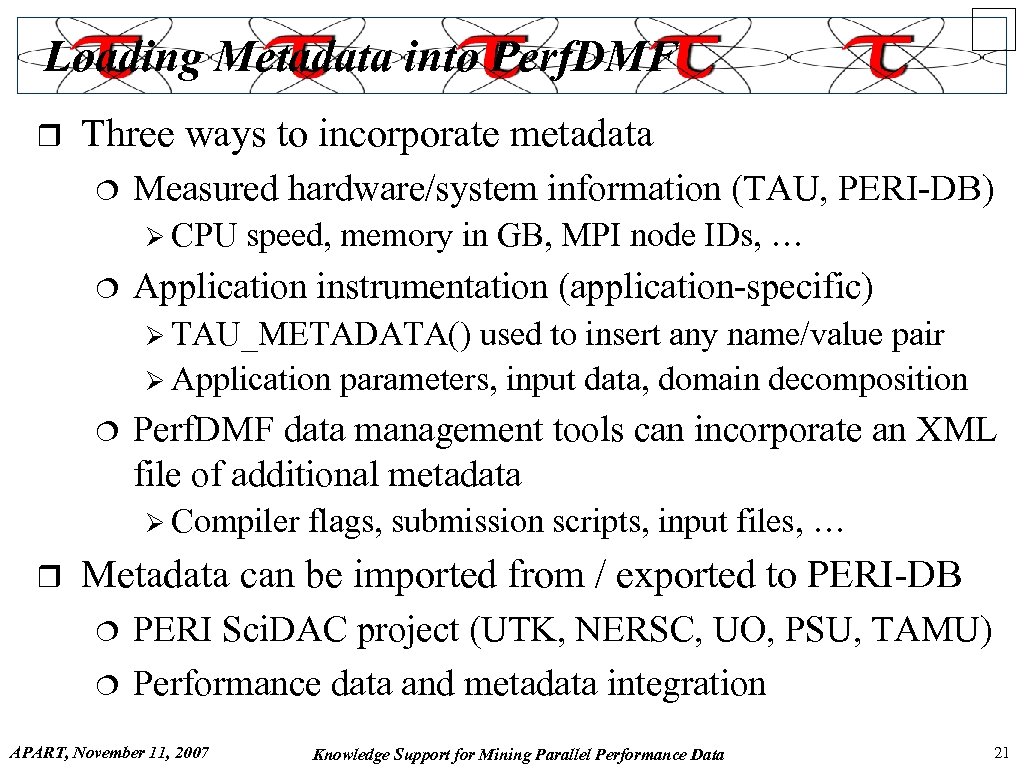

Loading Metadata into PerfDMF
- Three ways to incorporate metadata
  - Measured hardware/system information (TAU, PERI-DB)
    - CPU speed, memory in GB, MPI node IDs, …
  - Application instrumentation (application-specific)
    - TAU_METADATA() used to insert any name/value pair
    - Application parameters, input data, domain decomposition
  - PerfDMF data management tools can incorporate an XML file of additional metadata
    - Compiler flags, submission scripts, input files, …
- Metadata can be imported from / exported to PERI-DB
  - PERI SciDAC project (UTK, NERSC, UO, PSU, TAMU)
  - Performance data and metadata integration


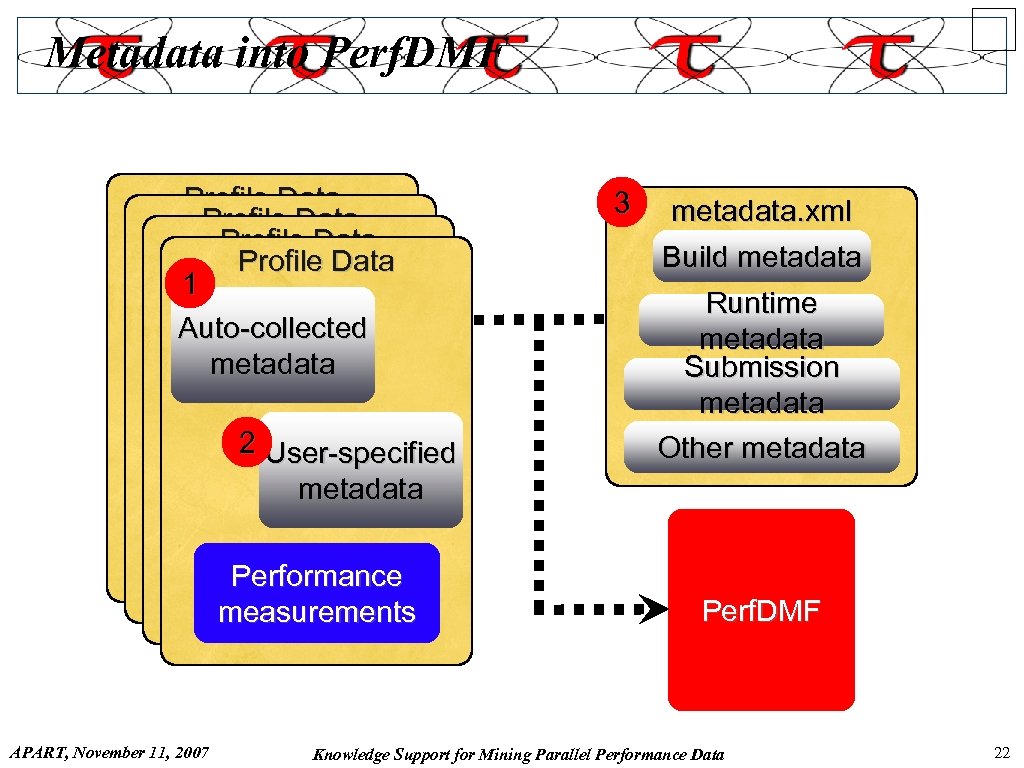

Metadata into PerfDMF
Figure: (1) auto-collected metadata and (2) user-specified metadata travel with the profile data and performance measurements; (3) a metadata.xml file supplies build, runtime, submission, and other metadata; all of it is loaded into PerfDMF.
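Combining the three sources amounts to merging name/value pairs. A sketch, assuming a hypothetical `metadata.xml` layout of `<attribute><name>…</name><value>…</value></attribute>` entries and an assumed precedence in which the XML file overrides user-specified values, which in turn override auto-collected ones:

```python
import xml.etree.ElementTree as ET

def parse_metadata_xml(text):
    """Parse a hypothetical metadata.xml of the form
    <metadata><attribute><name>..</name><value>..</value></attribute>...</metadata>."""
    root = ET.fromstring(text)
    return {a.findtext("name"): a.findtext("value") for a in root.iter("attribute")}

def merge_metadata(auto, user, xml_text):
    """Merge the three sources; later sources win on name clashes (assumed precedence)."""
    merged = dict(auto)
    merged.update(user)
    merged.update(parse_metadata_xml(xml_text))
    return merged
```

Keeping every source as plain name/value strings is what later lets analysis code correlate any metadata field with any metric.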


Metadata in PerfExplorer
- GTC on 1024 processors of Jaguar (Cray XT3/4)


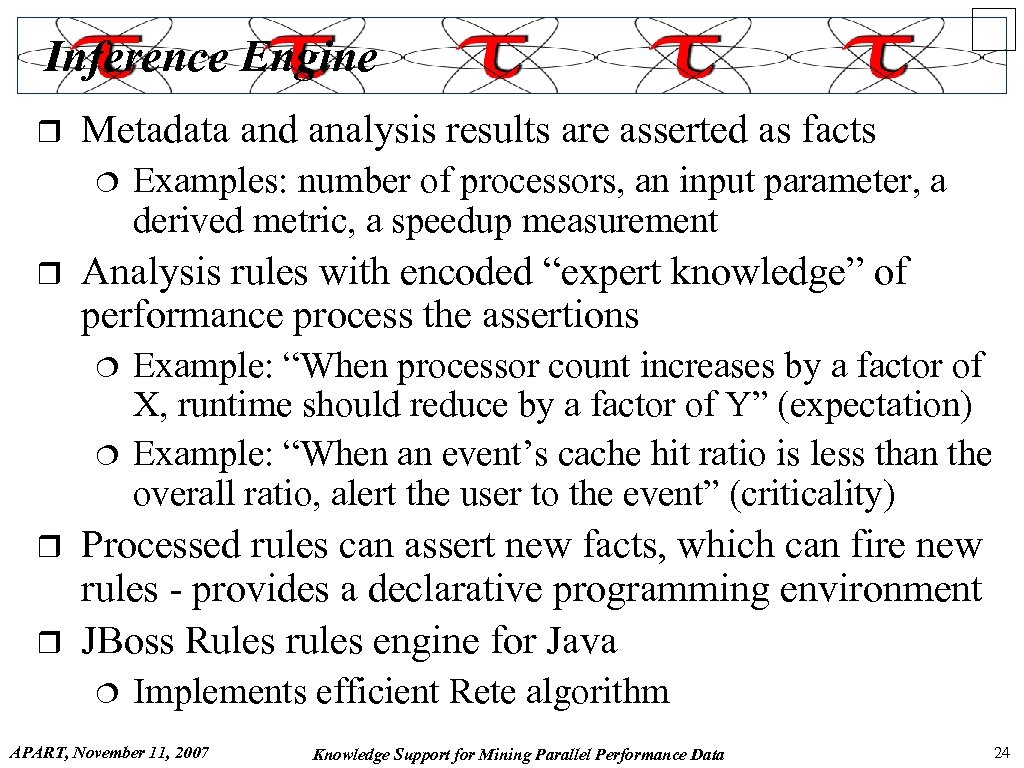

Inference Engine
- Metadata and analysis results are asserted as facts
  - Examples: number of processors, an input parameter, a derived metric, a speedup measurement
- Analysis rules with encoded “expert knowledge” of performance process the assertions
  - Example: “When processor count increases by a factor of X, runtime should reduce by a factor of Y” (expectation)
  - Example: “When an event’s cache hit ratio is less than the overall ratio, alert the user to the event” (criticality)
- Processed rules can assert new facts, which can fire new rules; this provides a declarative programming environment
- JBoss Rules rules engine for Java
  - Implements the efficient Rete algorithm
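The assert/fire/assert cycle is forward chaining. The sketch below is a naive version, not Rete: it re-runs every rule on each pass until no new facts appear. The fact tuples, the two rules, and the 0.8 threshold are illustrative assumptions; note that the alert rule can only fire after the speedup rule has asserted its facts:

```python
from typing import Callable, List, Set, Tuple

Fact = Tuple  # illustrative encoding, e.g. ("time", "base", 100.0)
Rule = Callable[[Set[Fact]], Set[Fact]]  # a rule returns the facts it asserts

def forward_chain(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """Naive forward chaining (not Rete): apply every rule to the current facts,
    add whatever is new, and repeat until a full pass asserts nothing new."""
    facts = set(facts)
    while True:
        new: Set[Fact] = set()
        for rule in rules:
            new |= rule(facts) - facts
        if not new:
            return facts
        facts |= new

def _values(facts):
    # Index the three-element facts by their first two fields.
    return {f[:2]: f[2] for f in facts if len(f) == 3}

def speedup_rule(facts):
    """Expectation: processors grow by X, so runtime should shrink by X (ideal scaling assumed)."""
    v = _values(facts)
    needed = [("procs", "base"), ("procs", "new"), ("time", "base"), ("time", "new")]
    if not all(k in v for k in needed):
        return set()
    return {("speedup", "observed", v["time", "base"] / v["time", "new"]),
            ("speedup", "expected", v["procs", "new"] / v["procs", "base"])}

def scaling_alert_rule(facts):
    """Criticality: alert when observed speedup is below 80% of the expectation."""
    v = _values(facts)
    obs, exp = v.get(("speedup", "observed")), v.get(("speedup", "expected"))
    if obs is not None and exp is not None and obs < 0.8 * exp:
        return {("alert", "poor scaling")}
    return set()
```

Going from 16 to 32 processors while runtime only drops from 100 s to 80 s gives an observed speedup of 1.25 against an expected 2.0, so the alert fires on the second pass.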


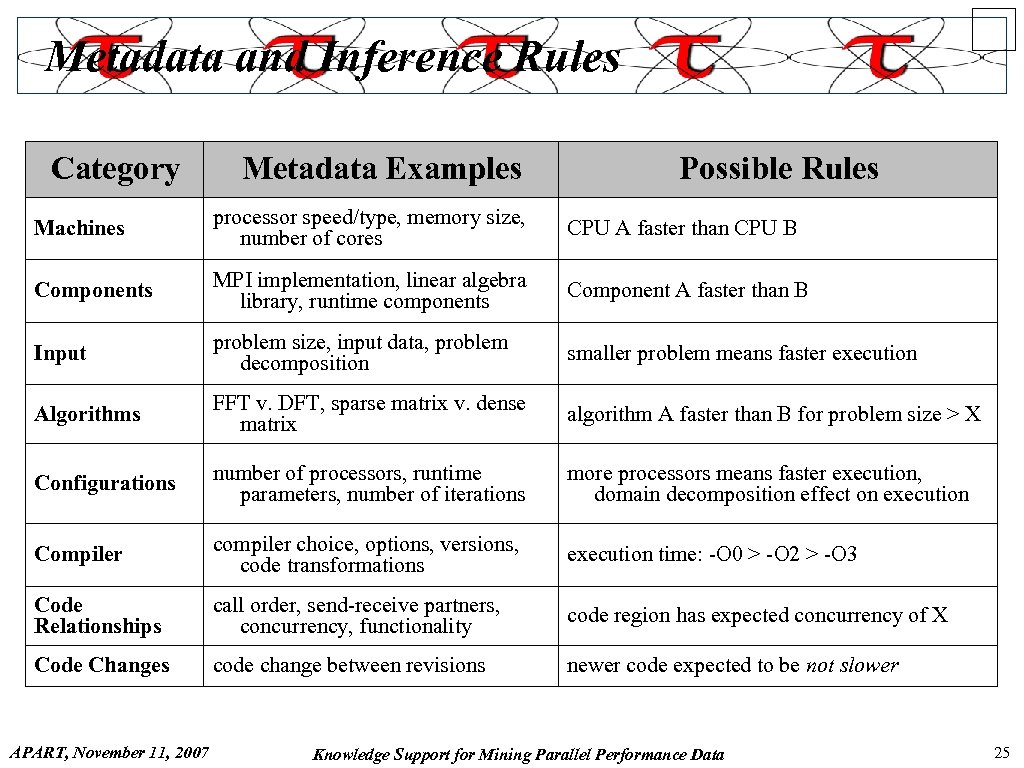

Metadata and Inference Rules

| Category | Metadata Examples | Possible Rules |
|---|---|---|
| Machines | processor speed/type, memory size, number of cores | CPU A faster than CPU B |
| Components | MPI implementation, linear algebra library, runtime components | Component A faster than B |
| Input | problem size, input data, problem decomposition | smaller problem means faster execution |
| Algorithms | FFT vs. DFT, sparse matrix vs. dense matrix | algorithm A faster than B for problem size > X |
| Configurations | number of processors, runtime parameters, number of iterations | more processors means faster execution; domain decomposition effect on execution |
| Compiler | choice, options, versions, code transformations | execution time: -O0 > -O2 > -O3 |
| Code Relationships | call order, send-receive partners, concurrency, functionality | code region has expected concurrency of X |
| Code Changes | code change between revisions | newer code expected to be no slower |


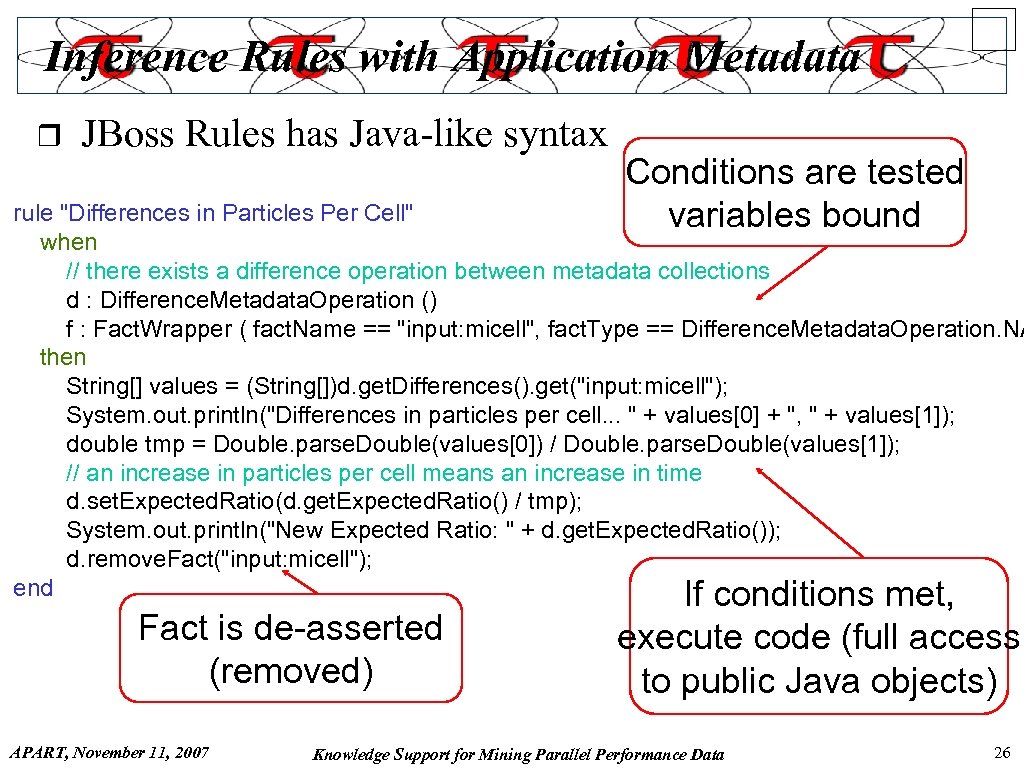

Inference Rules with Application Metadata
- JBoss Rules has Java-like syntax

```
rule "Differences in Particles Per Cell"
when
    // there exists a difference operation between metadata collections
    d : DifferenceMetadataOperation ()
    f : FactWrapper ( factName == "input:micell", factType == DifferenceMetadataOperation.NA )
then
    String[] values = (String[])d.getDifferences().get("input:micell");
    System.out.println("Differences in particles per cell... " + values[0] + ", " + values[1]);
    double tmp = Double.parseDouble(values[0]) / Double.parseDouble(values[1]);
    // an increase in particles per cell means an increase in time
    d.setExpectedRatio(d.getExpectedRatio() / tmp);
    System.out.println("New Expected Ratio: " + d.getExpectedRatio());
    d.removeFact("input:micell");
end
```

Slide callouts: in the `when` part, conditions are tested and variables bound; if the conditions are met, the `then` part executes code (with full access to public Java objects); the fact is then de-asserted (removed).
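The rule's arithmetic in isolation: the two particles-per-cell values (stored as strings) are divided, and the expected ratio is divided by that quotient. A Python transcription; the function name is hypothetical:

```python
def adjust_expected_ratio(expected_ratio, micell_a, micell_b):
    """Transcription of the rule body: rescale the expected ratio by the quotient
    of the two trials' particles-per-cell values (metadata values are strings)."""
    tmp = float(micell_a) / float(micell_b)
    return expected_ratio / tmp
```

Starting from an expected ratio of 1.0, values of "10" and "20" give a quotient of 0.5 and a new expected ratio of 2.0: doubling the particles per cell doubles the expectation.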


Data Persistence and Provenance
- Analysis results should include where they came from
- Data persistence captures intermediate and final analysis results and saves them for later access
- Persistence allows analysis results to be reused
  - Some analysis operations can take a long time
  - Breadth-wise inference analysis and cross-workflow analysis
- Storing all operations in the analysis workflow generates full provenance of the intermediate and final results
  - Supports confirmation and validation of analysis results
  - The inference engine may need it as well
- Persistence/provenance used to create a “chain of evidence”
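A sketch of persistence with provenance, assuming results are keyed by a hash of the operation name, its parameters, and the keys of its inputs. Identical requests reuse the stored result, and `lineage` replays the recorded operations as a chain of evidence. The `ResultStore` class is hypothetical:

```python
import hashlib
import json

class ResultStore:
    """Hypothetical persistence layer: caches each operation's output under a key derived
    from the operation name, its parameters, and its input keys, and records provenance."""

    def __init__(self):
        self.results = {}     # key -> stored result
        self.provenance = {}  # key -> {"op", "params", "inputs"}

    def run(self, op_name, params, input_keys, func):
        record = {"op": op_name, "params": params, "inputs": list(input_keys)}
        key = hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()
        if key not in self.results:  # reuse long-running results when already computed
            inputs = [self.results[k] for k in input_keys]
            self.results[key] = func(*inputs, **params)
            self.provenance[key] = record
        return key

    def lineage(self, key):
        """Every provenance record this result depends on, inputs first."""
        record = self.provenance[key]
        chain = []
        for k in record["inputs"]:
            chain.extend(self.lineage(k))
        chain.append(record)
        return chain
```

Because a key depends only on what was asked for, rerunning a workflow skips every step whose inputs and parameters are unchanged.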


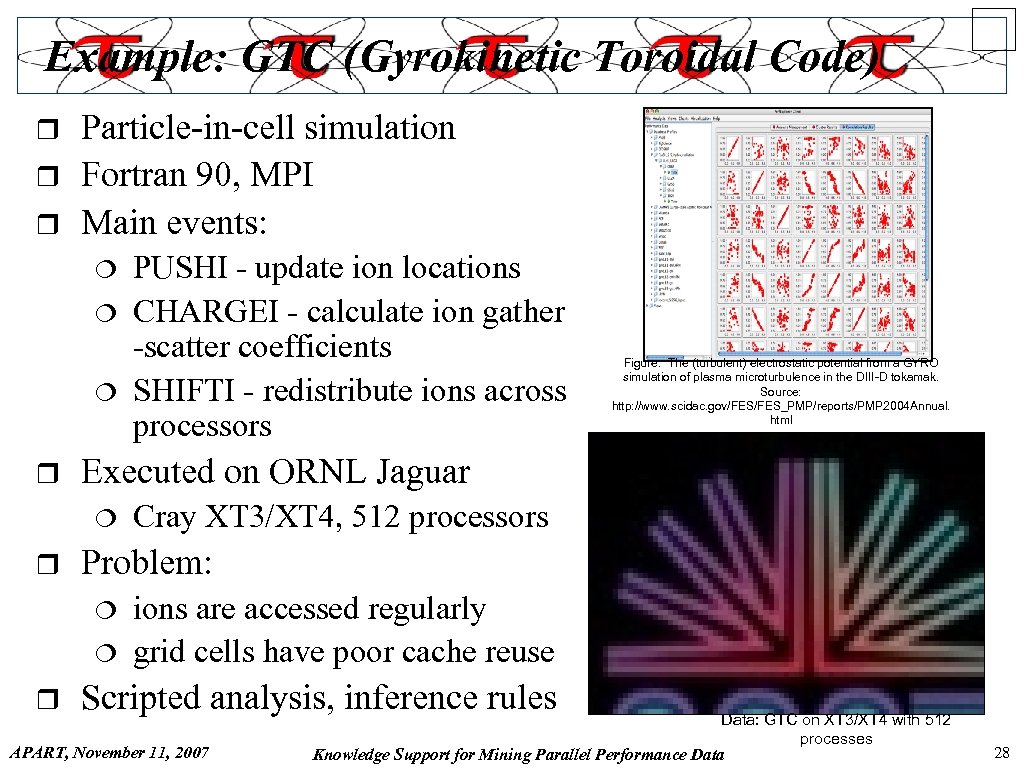

Example: GTC (Gyrokinetic Toroidal Code)
- Particle-in-cell simulation
- Fortran 90, MPI
- Main events:
  - PUSHI: update ion locations
  - CHARGEI: calculate ion gather-scatter coefficients
  - SHIFTI: redistribute ions across processors
- Executed on ORNL Jaguar
  - Cray XT3/XT4, 512 processors
- Problem:
  - ions are accessed regularly
  - grid cells have poor cache reuse
- Scripted analysis, inference rules

Figure: The (turbulent) electrostatic potential from a GYRO simulation of plasma microturbulence in the DIII-D tokamak. Source: http://www.scidac.gov/FES_PMP/reports/PMP2004Annual.html
Data: GTC on XT3/XT4 with 512 processes


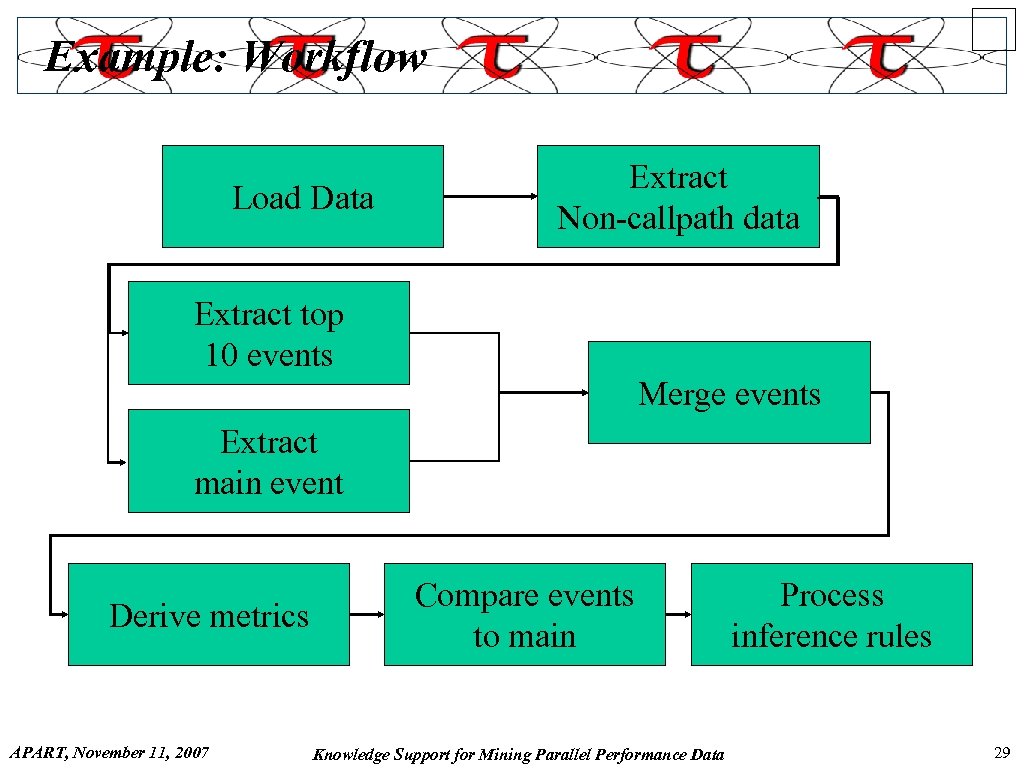

Example: Workflow
- Load data
- Extract non-callpath data
- Extract top 10 events
- Merge events
- Extract main event
- Derive metrics
- Compare events to main
- Process inference rules
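The “Derive metrics” and “Compare events to main” steps can be sketched as follows. The counter names, the derived formulas (MFLOP/s as floating-point operations per second divided by 10^6, L2 hit rate as 1 - misses/accesses), and treating the main event's values as the “average” are all assumptions for illustration:

```python
def derive_metrics(counters):
    """counters: {event: {"time_s", "fp_ops", "l2_access", "l2_miss"}} (hypothetical names).
    Returns {event: {"mflops": ..., "l2_hit_rate": ...}}."""
    derived = {}
    for event, c in counters.items():
        derived[event] = {
            "mflops": c["fp_ops"] / c["time_s"] / 1e6,
            "l2_hit_rate": 1.0 - c["l2_miss"] / c["l2_access"],
        }
    return derived

def compare_to_main(derived, main_event):
    """Flag events whose derived metrics deviate from the main event's values."""
    main = derived[main_event]
    alerts = []
    for event, m in derived.items():
        if event == main_event:
            continue
        if m["l2_hit_rate"] < main["l2_hit_rate"]:
            alerts.append((event, "lower than average L2 hit rate"))
        if m["mflops"] > main["mflops"]:
            alerts.append((event, "higher than average FLOP rate"))
    return alerts
```

In the real workflow such comparisons are asserted as facts and the inference rules decide what to report; here they are simply returned as a list.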


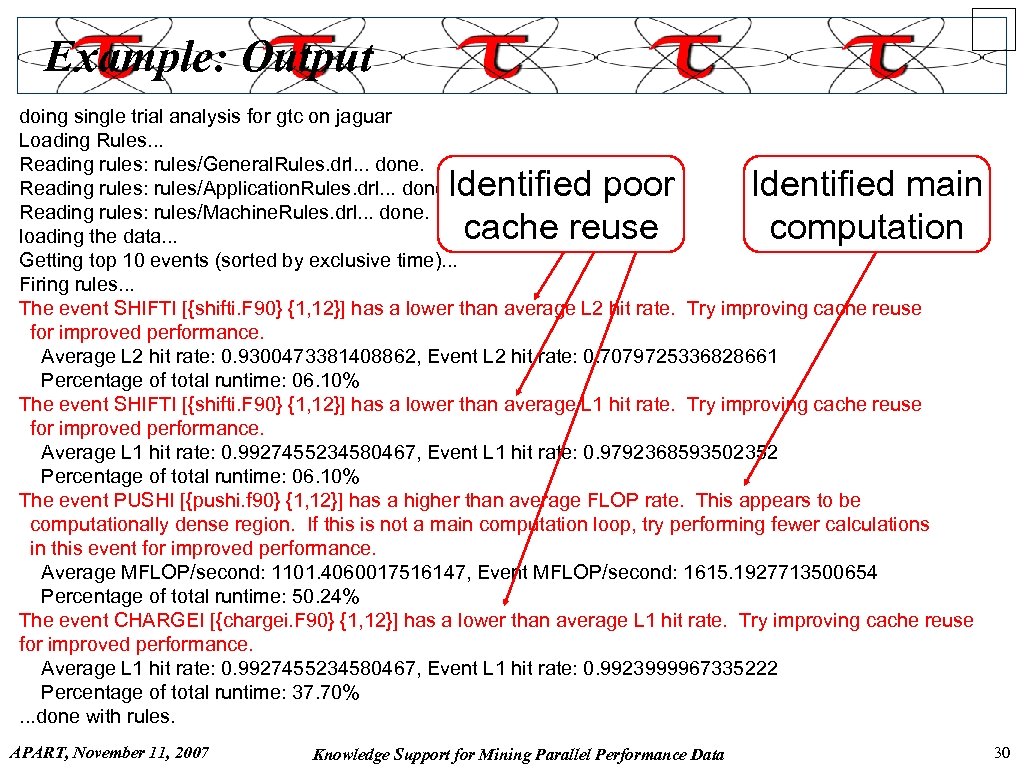

Example: Output
doing single trial analysis for gtc on jaguar
Loading Rules...
Reading rules: rules/GeneralRules.drl... done.
Reading rules: rules/ApplicationRules.drl... done.
Reading rules: rules/MachineRules.drl... done.
loading the data...
Getting top 10 events (sorted by exclusive time)...
Firing rules...
The event SHIFTI [{shifti.F90} {1, 12}] has a lower than average L2 hit rate. Try improving cache reuse for improved performance.
Average L2 hit rate: 0.9300473381408862, Event L2 hit rate: 0.7079725336828661
Percentage of total runtime: 06.10%
The event SHIFTI [{shifti.F90} {1, 12}] has a lower than average L1 hit rate. Try improving cache reuse for improved performance.
Average L1 hit rate: 0.9927455234580467, Event L1 hit rate: 0.9792368593502352
Percentage of total runtime: 06.10%
The event PUSHI [{pushi.f90} {1, 12}] has a higher than average FLOP rate. This appears to be computationally dense region. If this is not a main computation loop, try performing fewer calculations in this event for improved performance.
Average MFLOP/second: 1101.4060017516147, Event MFLOP/second: 1615.1927713500654
Percentage of total runtime: 50.24%
The event CHARGEI [{chargei.F90} {1, 12}] has a lower than average L1 hit rate. Try improving cache reuse for improved performance.
Average L1 hit rate: 0.9927455234580467, Event L1 hit rate: 0.9923999967335222
Percentage of total runtime: 37.70%
...done with rules.

Slide callouts: Identified poor cache reuse; Identified main computation


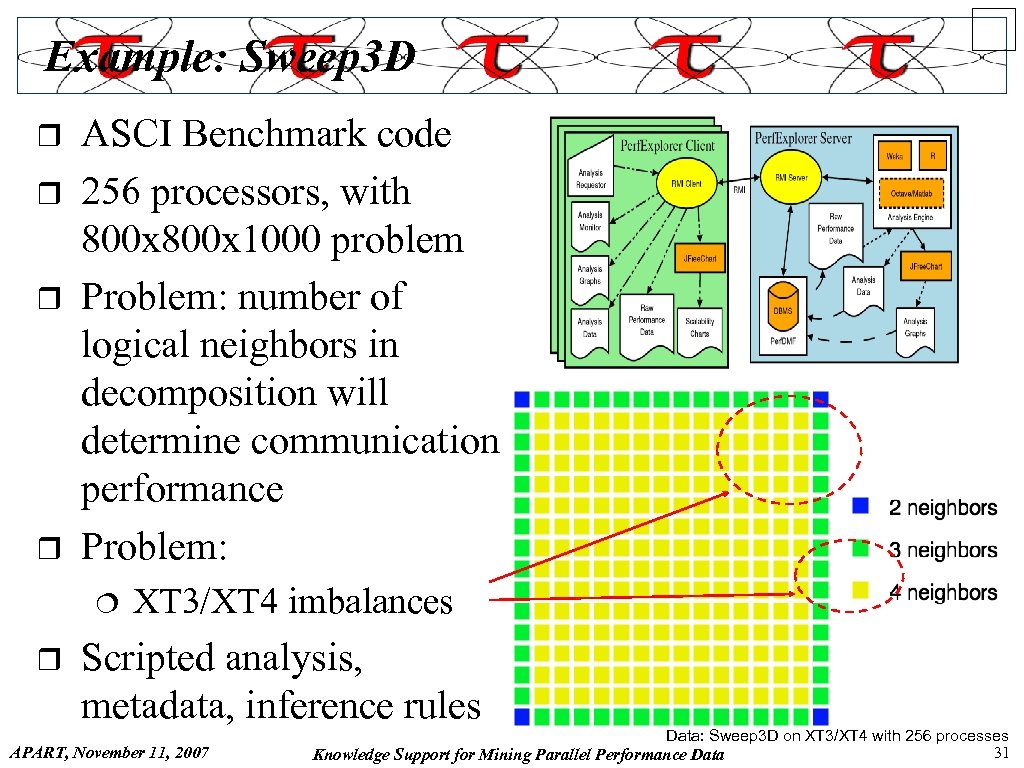

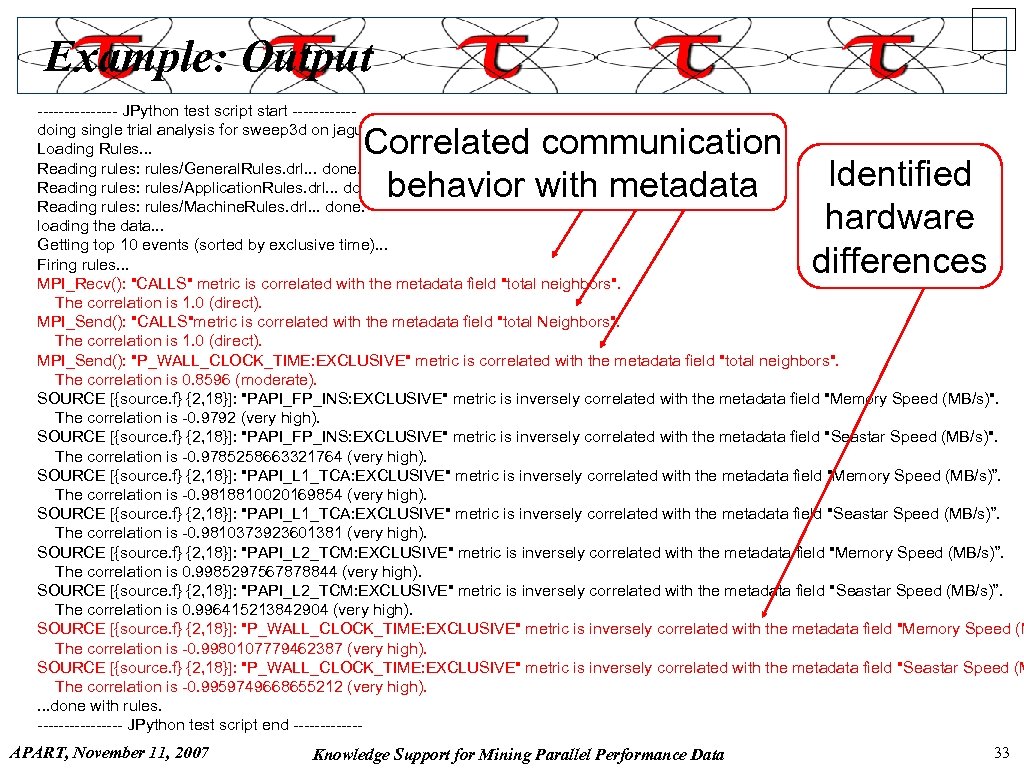

Example: Sweep3D
- ASCI benchmark code
- 256 processors, with an 800 x 1000 problem
- Problem: the number of logical neighbors in the decomposition will determine communication performance
- Problem: XT3/XT4 imbalances
- Scripted analysis, metadata, inference rules

Data: Sweep3D on XT3/XT4 with 256 processes
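The first problem statement can be made concrete by counting logical neighbors in a two-dimensional process grid. This sketch assumes the 256 processes form a 16 x 16 grid without wraparound; the slide does not give the grid shape, and the function names are hypothetical. Under that assumption, corner ranks have 2 neighbors, edge ranks 3, and interior ranks 4, so ranks exchange different numbers of point-to-point messages even when the problem is divided evenly.

```python
# Hypothetical sketch: count logical neighbors per rank in a 2D process grid.
# Assumes 256 ranks laid out as a 16 x 16 grid with non-periodic boundaries;
# the actual Sweep3D decomposition parameters are not given on the slide.
from collections import Counter


def logical_neighbors(rank: int, px: int, py: int) -> int:
    """Number of west/east/south/north neighbors of `rank` in a px-by-py grid."""
    i, j = rank % px, rank // px
    count = 0
    if i > 0:
        count += 1  # west neighbor
    if i < px - 1:
        count += 1  # east neighbor
    if j > 0:
        count += 1  # south neighbor
    if j < py - 1:
        count += 1  # north neighbor
    return count


def neighbor_histogram(px: int, py: int) -> Counter:
    """How many ranks have 2, 3, or 4 logical neighbors."""
    return Counter(logical_neighbors(r, px, py) for r in range(px * py))


if __name__ == "__main__":
    print(sorted(neighbor_histogram(16, 16).items()))
    # → [(2, 4), (3, 56), (4, 196)]
```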

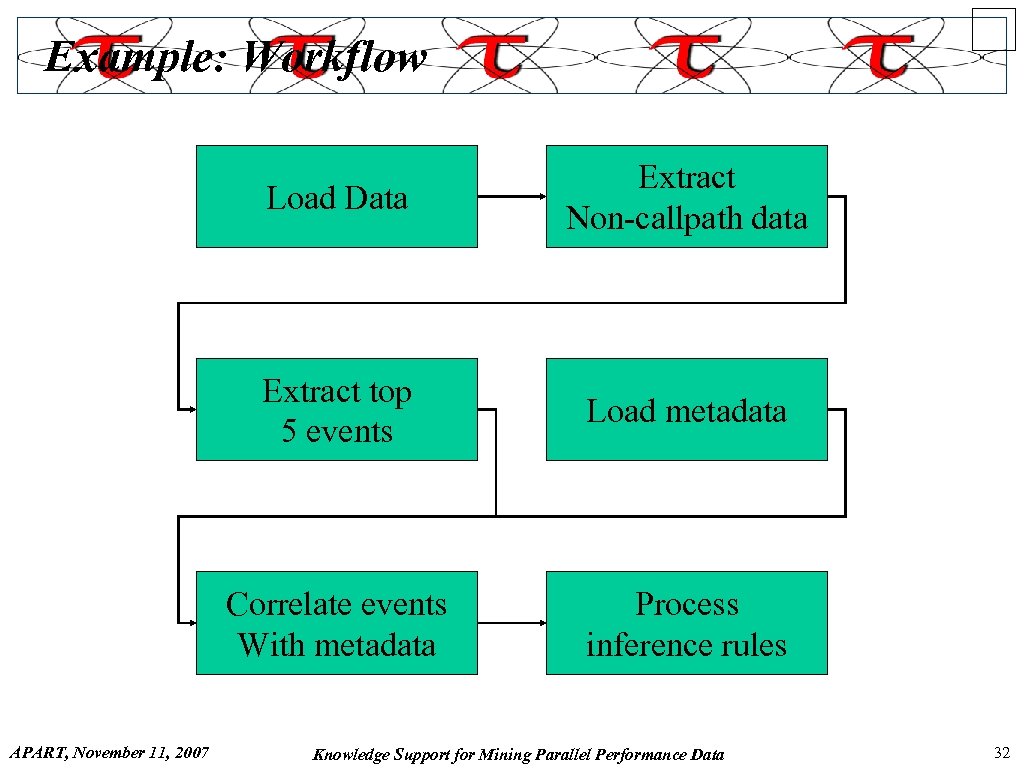

Example: Workflow
- Load data
- Extract top 5 events
- Load metadata
- Correlate events with metadata
- Extract non-callpath data
- Process inference rules
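The workflow steps above can be sketched on in-memory data. This is a hypothetical illustration, not the PerfExplorer scripting API: the profile layout (one value per process for each event), the function names, and the use of the " => " separator to recognize TAU callpath events are assumptions.

```python
# Hypothetical sketch of the workflow steps listed above, run on in-memory data.
# The data layout and function names are illustrative assumptions, not the
# PerfExplorer scripting API.
from math import sqrt


def non_callpath(profile: dict) -> dict:
    """Drop callpath events, assumed to be named like 'main => MPI_Send()'."""
    return {name: vals for name, vals in profile.items() if " => " not in name}


def top_events(profile: dict, n: int = 5) -> list:
    """Names of the n events with the largest mean exclusive time."""
    def mean_time(name):
        vals = profile[name]
        return sum(vals) / len(vals)
    return sorted(profile, key=mean_time, reverse=True)[:n]


def pearson(xs: list, ys: list) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def correlate_with_metadata(profile: dict, metadata: dict, n: int = 5) -> list:
    """Correlate each top flat event's per-process values with each metadata field."""
    flat = non_callpath(profile)
    return [(event, field, pearson(flat[event], values))
            for event in top_events(flat, n)
            for field, values in metadata.items()]


if __name__ == "__main__":
    # Toy data: one exclusive-time value per process (4 processes).
    profile = {
        "MPI_Send()": [2.0, 3.0, 3.0, 4.0],
        "main => MPI_Send()": [2.0, 3.0, 3.0, 4.0],
        "SOURCE": [10.0, 10.5, 10.2, 9.8],
    }
    metadata = {"total neighbors": [2, 3, 3, 4]}
    for event, field, r in correlate_with_metadata(profile, metadata):
        print(f"{event} vs {field}: r = {r:.4f}")
```

Filtering out callpath events before ranking keeps each routine from being counted once on its own and again under every call path that reaches it.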

Example: Output
-------- JPython test script start -------
doing single trial analysis for sweep3d on jaguar
Loading Rules...
Reading rules: rules/GeneralRules.drl... done.
Reading rules: rules/ApplicationRules.drl... done.
Reading rules: rules/MachineRules.drl... done.
loading the data...
Getting top 10 events (sorted by exclusive time)...
Firing rules...
MPI_Recv(): "CALLS" metric is correlated with the metadata field "total neighbors". The correlation is 1.0 (direct).
MPI_Send(): "CALLS" metric is correlated with the metadata field "total Neighbors". The correlation is 1.0 (direct).
MPI_Send(): "P_WALL_CLOCK_TIME:EXCLUSIVE" metric is correlated with the metadata field "total neighbors". The correlation is 0.8596 (moderate).
SOURCE [{source.f} {2,18}]: "PAPI_FP_INS:EXCLUSIVE" metric is inversely correlated with the metadata field "Memory Speed (MB/s)". The correlation is -0.9792 (very high).
SOURCE [{source.f} {2,18}]: "PAPI_FP_INS:EXCLUSIVE" metric is inversely correlated with the metadata field "Seastar Speed (MB/s)". The correlation is -0.9785258663321764 (very high).
SOURCE [{source.f} {2,18}]: "PAPI_L1_TCA:EXCLUSIVE" metric is inversely correlated with the metadata field "Memory Speed (MB/s)". The correlation is -0.9818810020169854 (very high).
SOURCE [{source.f} {2,18}]: "PAPI_L1_TCA:EXCLUSIVE" metric is inversely correlated with the metadata field "Seastar Speed (MB/s)". The correlation is -0.9810373923601381 (very high).
SOURCE [{source.f} {2,18}]: "PAPI_L2_TCM:EXCLUSIVE" metric is inversely correlated with the metadata field "Memory Speed (MB/s)". The correlation is 0.9985297567878844 (very high).
SOURCE [{source.f} {2,18}]: "PAPI_L2_TCM:EXCLUSIVE" metric is inversely correlated with the metadata field "Seastar Speed (MB/s)". The correlation is 0.996415213842904 (very high).
SOURCE [{source.f} {2,18}]: "P_WALL_CLOCK_TIME:EXCLUSIVE" metric is inversely correlated with the metadata field "Memory Speed (M… The correlation is -0.9980107779462387 (very high).
SOURCE [{source.f} {2,18}]: "P_WALL_CLOCK_TIME:EXCLUSIVE" metric is inversely correlated with the metadata field "Seastar Speed (M… The correlation is -0.9959749668655212 (very high).
...done with rules.
-------- JPython test script end -------
Callouts: Correlated communication behavior with metadata; Identified hardware differences.
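Each correlation message above pairs a coefficient with a direction word and a qualitative strength label. The Python sketch below shows one way such a message could be produced. The strength cutoffs are hypothetical, chosen only so that they agree with the labels shown in this output (1.0 direct, 0.8596 moderate, about 0.98 very high); the cutoffs the rules actually use are not shown on the slide.

```python
# Hypothetical sketch: phrase a correlation coefficient like the rule output
# above. The strength cutoffs are assumptions chosen only to agree with the
# labels shown (1.0 direct, 0.8596 moderate, about 0.98 very high).
import math


def strength(r: float) -> str:
    """Map |r| to a qualitative label (assumed cutoffs)."""
    a = abs(r)
    if math.isclose(a, 1.0):
        return "direct"
    if a >= 0.95:
        return "very high"
    if a >= 0.9:
        return "high"
    if a >= 0.7:
        return "moderate"
    return "weak"


def describe(event: str, metric: str, field: str, r: float) -> str:
    """Build a message; the direction word is taken from the sign of r."""
    relation = "correlated" if r >= 0 else "inversely correlated"
    return (f'{event}: "{metric}" metric is {relation} with the metadata field '
            f'"{field}". The correlation is {r} ({strength(r)}).')


if __name__ == "__main__":
    print(describe("MPI_Recv()", "CALLS", "total neighbors", 1.0))
    print(describe("SOURCE", "PAPI_FP_INS:EXCLUSIVE", "Memory Speed (MB/s)", -0.9792))
```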

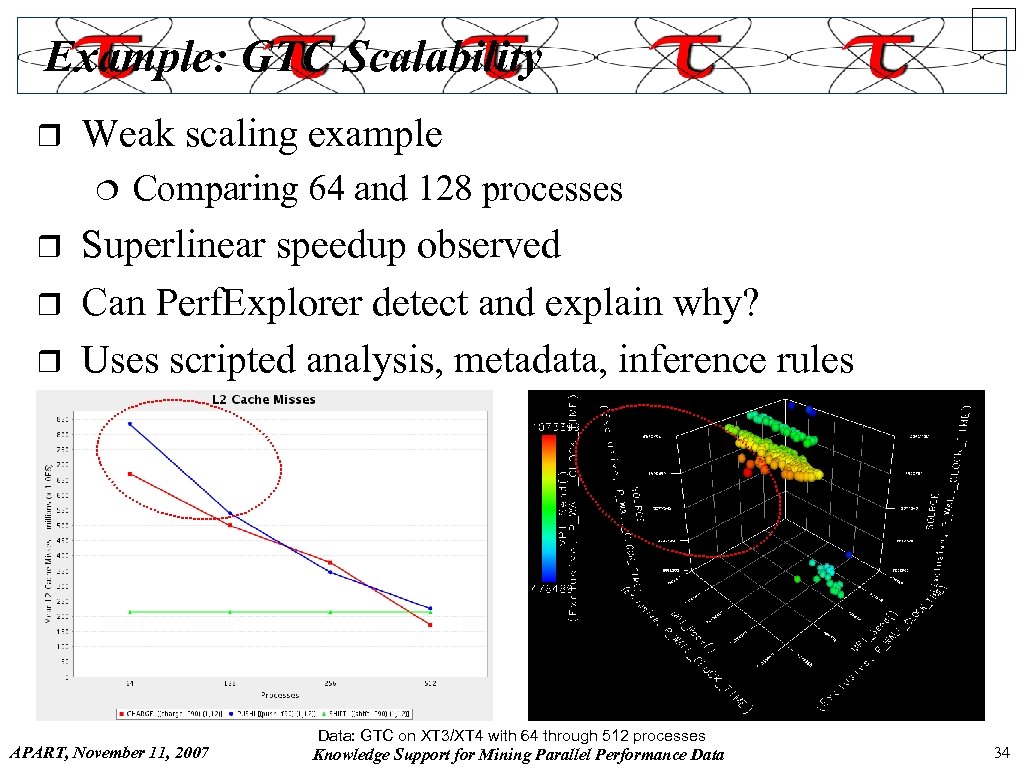

Example: GTC Scalability
- Weak scaling example
  - Comparing 64 and 128 processes
- Superlinear speedup observed
- Can PerfExplorer detect and explain why?
- Uses scripted analysis, metadata, inference rules

Data: GTC on XT3/XT4 with 64 through 512 processes
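The weak-scaling expectation can be written out explicitly. Assuming the compared quantity is the execution time $T_p$ on $p$ processes, and using the ratio convention printed by the analysis script (baseline / comparison), the ideal ratio is 1 because the work per process is held constant. The actual ratio the script reports for this comparison is 1.0520:

$$
\begin{aligned}
R &= \frac{T_{64}}{T_{128}}, \qquad R_{\text{ideal}} = 1 \quad \text{(constant work per process)},\\
R &> 1 \;\Rightarrow\; \text{superlinear speedup}, \qquad R < 1 \;\Rightarrow\; \text{sublinear},\\
R &= 1.0520 \;\Rightarrow\; T_{128} = \frac{T_{64}}{1.0520} \approx 0.951\,T_{64}.
\end{aligned}
$$

Under that reading, the 128-process run finished in roughly 4.9% less time than the 64-process run despite doing the same work per process.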

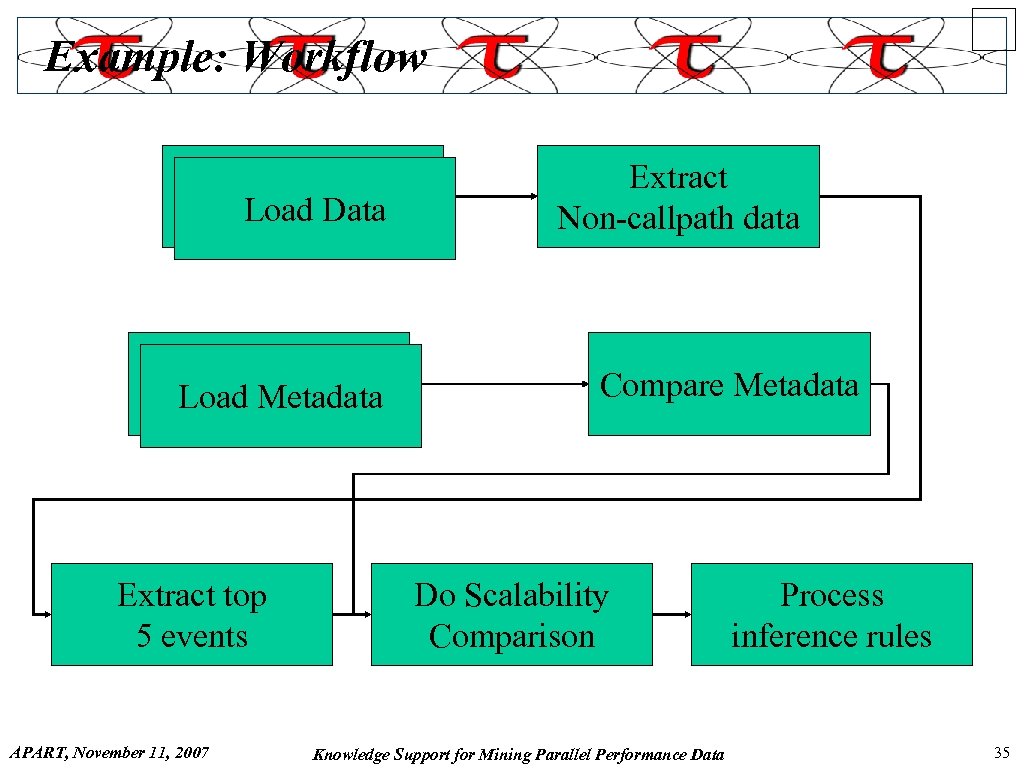

Example: Workflow
- Load data
- Load metadata
- Extract top 5 events
- Extract non-callpath data
- Compare metadata
- Do scalability comparison
- Process inference rules
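The "Compare metadata" step can be sketched as a field-by-field diff of two trials' metadata. The function name and the field names in the example below are hypothetical, not PerfExplorer's metadata keys; the values mirror the process counts and particles per cell that appear in the GTC comparison.

```python
# Hypothetical sketch of the "Compare metadata" step: report the metadata
# fields whose values differ between a baseline and a comparison trial.
# Field names below are illustrative, not PerfExplorer's metadata keys.


def compare_metadata(baseline: dict, comparison: dict) -> dict:
    """Map each differing field to its (baseline, comparison) pair."""
    fields = baseline.keys() | comparison.keys()
    return {
        f: (baseline.get(f), comparison.get(f))
        for f in sorted(fields)
        if baseline.get(f) != comparison.get(f)
    }


if __name__ == "__main__":
    base = {"processes": 64, "particles per cell": 100, "machine": "jaguar"}
    comp = {"processes": 128, "particles per cell": 200, "machine": "jaguar"}
    print(compare_metadata(base, comp))
```

A field missing from one trial shows up with `None` on that side, so absent metadata is reported rather than silently ignored.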

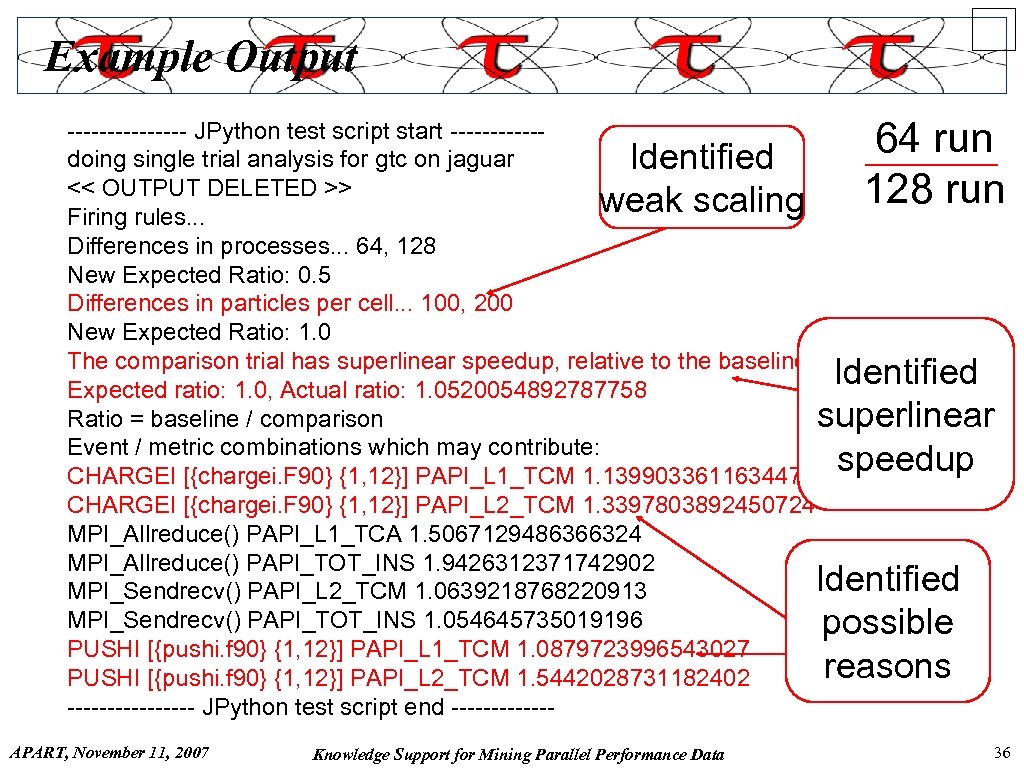

Example Output
Slide labels: 64 run; 128 run.
-------- JPython test script start ------
doing single trial analysis for gtc on jaguar
<< OUTPUT DELETED >>
Firing rules...
Differences in processes... 64, 128
New Expected Ratio: 0.5
Differences in particles per cell... 100, 200
New Expected Ratio: 1.0
The comparison trial has superlinear speedup, relative to the baseline trial
Expected ratio: 1.0, Actual ratio: 1.0520054892787758
Ratio = baseline / comparison
Event / metric combinations which may contribute:
CHARGEI [{chargei.F90} {1,12}] PAPI_L1_TCM 1.1399033611634473
CHARGEI [{chargei.F90} {1,12}] PAPI_L2_TCM 1.3397803892450724
MPI_Allreduce() PAPI_L1_TCA 1.5067129486366324
MPI_Allreduce() PAPI_TOT_INS 1.9426312371742902
MPI_Sendrecv() PAPI_L2_TCM 1.0639218768220913
MPI_Sendrecv() PAPI_TOT_INS 1.054645735019196
PUSHI [{pushi.f90} {1,12}] PAPI_L1_TCM 1.0879723996543027
PUSHI [{pushi.f90} {1,12}] PAPI_L2_TCM 1.5442028731182402
-------- JPython test script end ------
Callouts: Identified weak scaling; Identified superlinear speedup; Identified possible reasons.
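The output adjusts an expected ratio once per metadata difference: 0.5 after the process count doubles, then 1.0 after the particles per cell double. One model that reproduces those printed numbers treats the expected ratio as the comparison trial's per-process work relative to the baseline's. The Python sketch below is written under that assumption; its function names, the metadata keys, and the 1% tolerance are hypothetical rather than PerfExplorer's actual rules.

```python
# Hypothetical sketch of the scalability comparison suggested by the output
# above. Assumption: per-process work scales with particles per cell divided
# by process count; this reproduces the printed expected ratios (0.5, then
# 1.0) but is not taken from PerfExplorer's rule files.


def expected_ratio(diffs: dict) -> float:
    """Expected per-process work of the comparison trial relative to the baseline."""
    ratio = 1.0
    if "processes" in diffs:
        base, comp = diffs["processes"]
        ratio *= base / comp  # twice the processes: half the work each
    if "particles per cell" in diffs:
        base, comp = diffs["particles per cell"]
        ratio *= comp / base  # twice the particles: twice the work
    return ratio


def classify(actual: float, expected: float, tol: float = 0.01) -> str:
    """actual = baseline / comparison time; the ideal value is 1 / expected."""
    relative = actual * expected  # > 1 means faster than ideal
    if relative > 1 + tol:
        return "superlinear"
    if relative < 1 - tol:
        return "sublinear"
    return "ideal"


def contributors(ratios: dict, expected: float, tol: float = 0.01) -> list:
    """(event, metric) pairs whose baseline/comparison ratio beats the ideal."""
    return sorted(k for k, r in ratios.items() if r * expected > 1 + tol)


if __name__ == "__main__":
    diffs = {"processes": (64, 128), "particles per cell": (100, 200)}
    exp = expected_ratio(diffs)
    print(exp, classify(1.0520054892787758, exp))
    ratios = {("MPI_Allreduce()", "PAPI_TOT_INS"): 1.9426312371742902,
              ("PUSHI", "PAPI_L2_TCM"): 1.5442028731182402}
    print(contributors(ratios, exp))
```

Multiplying by the expected ratio normalizes the measured speedup against the ideal, so the same classification works whether the comparison is strong scaling, weak scaling, or a mix of both.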

Current Status
- Working now
  - Several analysis operations are written
  - Metadata collection is available
  - Scripting and inference engine are in place
- To be developed
  - Results persistence
  - Provenance capture and storage

Conclusion
- Mining performance data will depend on adding knowledge to analysis frameworks
  - Application, hardware, and environment metadata
  - Analysis processes and workflow
  - Rules for inferencing and analysis search
- Expert knowledge combined with performance results can explain performance phenomena
- The redesigned PerfExplorer framework is one approach
- Community performance knowledge engineering
  - Developing inference rules
  - Constructing analysis processes
  - Application-specific metadata and analysis

Acknowledgments
- US Department of Energy (DOE)
  - Office of Science
    - MICS, Argonne National Lab
- NSF
  - Software and Tools for High-End Computing
- PERI SciDAC
  - PERI-DB project
- TAU and PerfExplorer demos: NNSA / ASC, booth #1617, various times daily
- PERI-DB demo: RENCI booth #3215, Wednesday at 2:30 PM
