- Number of slides: 39

KDD and Data Mining

Instructor: Dragomir R. Radev, Winter 2005

The big problem
- Billions of records
- A small number of interesting patterns
- “Data rich but information poor”

Copyright © 2004 Database Processing: Fundamentals, Design, and Implementation, 9/e by David M. Kroenke 2

Data mining, also known as:
- Knowledge discovery
- Knowledge extraction
- Data/pattern analysis

Types of source data
- Relational databases
- Transactional databases
- Web logs
- Textual databases

Association rules
- 65% of all customers who buy beer and tomato sauce also buy pasta and chicken wings
- Association rules have the form X ⇒ Y

Association analysis
- IF 20 < age < 30 AND 20K < income < 30K
- THEN buys(“CD player”)
- SUPPORT = 2%, CONFIDENCE = 60%

Basic concepts
- Minimum support threshold
- Minimum confidence threshold
- Itemsets
- Occurrence frequency of an itemset

Association rule mining
- Find all frequent itemsets (itemsets whose support meets the minimum support threshold)
- Generate strong association rules (rules that meet both the minimum support and the minimum confidence thresholds) from the frequent itemsets

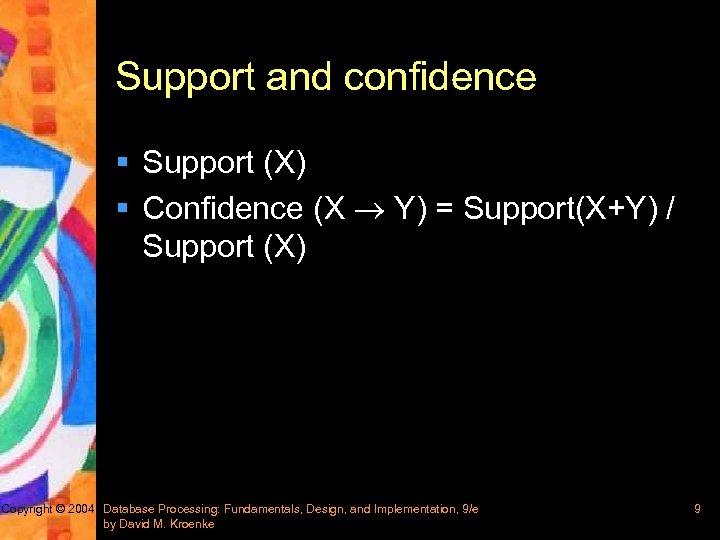

Support and confidence
- $\mathrm{Support}(X)$ = fraction of all transactions that contain every item of itemset $X$
- $\mathrm{Confidence}(X \Rightarrow Y) = \mathrm{Support}(X \cup Y) / \mathrm{Support}(X)$

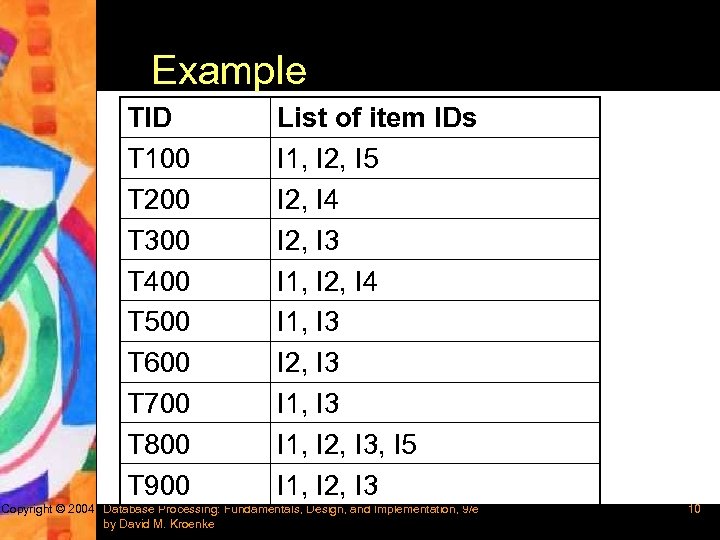
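
The two measures can be sketched in Python as below. This is a minimal illustration, not a library API: the function names are hypothetical, transactions are modeled as sets of item names, and the baskets are made-up data loosely echoing the beer/tomato-sauce example.

```python
from typing import AbstractSet, List


def support(itemset: AbstractSet[str], transactions: List[AbstractSet[str]]) -> float:
    """Support(X): fraction of transactions that contain every item of X."""
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)


def confidence(x: AbstractSet[str], y: AbstractSet[str],
               transactions: List[AbstractSet[str]]) -> float:
    """Confidence(X => Y) = Support(X ∪ Y) / Support(X)."""
    return support(x | y, transactions) / support(x, transactions)


# Hypothetical baskets, invented for illustration only.
baskets = [
    {"beer", "tomato sauce", "pasta"},
    {"beer", "tomato sauce", "pasta", "chicken wings"},
    {"beer", "chips"},
    {"tomato sauce", "pasta"},
]

x, y = {"beer", "tomato sauce"}, {"pasta"}
print(support(x | y, baskets))    # 0.5
print(confidence(x, y, baskets))  # 1.0
```

Every basket that contains both beer and tomato sauce also contains pasta, so the rule reaches 100% confidence even though its support is only 50%.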

Example

| TID | List of item IDs |
|------|------------------|
| T100 | I1, I2, I5 |
| T200 | I2, I4 |
| T300 | I2, I3 |
| T400 | I1, I2, I4 |
| T500 | I1, I3 |
| T600 | I2, I3 |
| T700 | I1, I3 |
| T800 | I1, I2, I3, I5 |
| T900 | I1, I2, I3 |

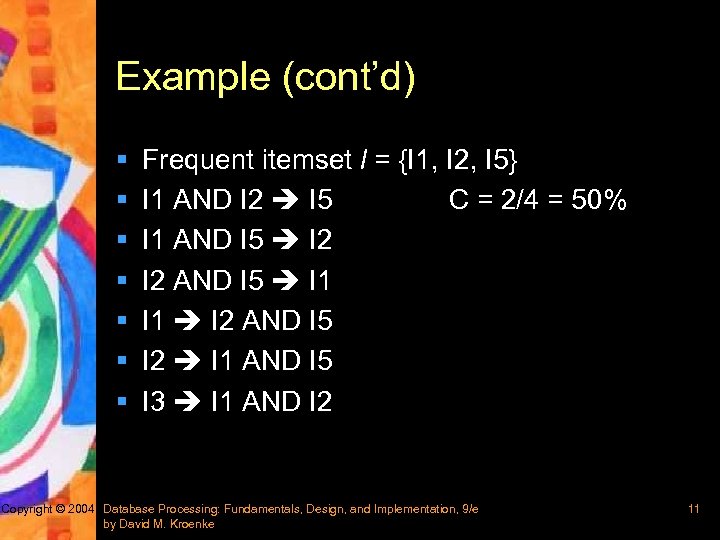
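
Step 1 of association rule mining (find all frequent itemsets) can be sketched as a level-wise, Apriori-style search over this table. Two assumptions: the minimum support count of 2 is chosen for illustration (the slide gives none), and row T700 is taken as {I1, I3}, following the common textbook version of this example. The function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List

# Example transactions; T700 = {I1, I3} is an assumption (see above).
TRANSACTIONS: Dict[str, FrozenSet[str]] = {
    "T100": frozenset({"I1", "I2", "I5"}),
    "T200": frozenset({"I2", "I4"}),
    "T300": frozenset({"I2", "I3"}),
    "T400": frozenset({"I1", "I2", "I4"}),
    "T500": frozenset({"I1", "I3"}),
    "T600": frozenset({"I2", "I3"}),
    "T700": frozenset({"I1", "I3"}),
    "T800": frozenset({"I1", "I2", "I3", "I5"}),
    "T900": frozenset({"I1", "I2", "I3"}),
}
MIN_SUP_COUNT = 2  # illustrative threshold, not given on the slide


def count(itemset: FrozenSet[str]) -> int:
    """Number of transactions containing every item of `itemset`."""
    return sum(1 for t in TRANSACTIONS.values() if itemset <= t)


def apriori(min_count: int) -> Dict[FrozenSet[str], int]:
    items = sorted(set().union(*TRANSACTIONS.values()))
    level: List[FrozenSet[str]] = [
        frozenset([i]) for i in items if count(frozenset([i])) >= min_count
    ]
    frequent = {s: count(s) for s in level}
    k = 2
    while level:
        # Join: unions of two frequent (k-1)-itemsets that have exactly k items.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune: drop candidates with an infrequent (k-1)-subset (Apriori property).
        candidates = {
            c for c in candidates
            if all(frozenset(s) in frequent for s in combinations(c, k - 1))
        }
        level = [c for c in candidates if count(c) >= min_count]
        frequent.update({c: count(c) for c in level})
        k += 1
    return frequent


freq = apriori(MIN_SUP_COUNT)
for itemset, n in sorted(freq.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), n)
```

With these inputs the largest frequent itemsets are {I1, I2, I3} and {I1, I2, I5}, each contained in 2 transactions; the pairwise join is quadratic, which is fine for a slide-sized example.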

Example (cont’d)
- Frequent itemset l = {I1, I2, I5}
- Candidate rules have the form s ⇒ (l − s) for each nonempty proper subset s of l:
  - I1 AND I2 ⇒ I5, confidence = 2/4 = 50%
  - I1 AND I5 ⇒ I2
  - I2 AND I5 ⇒ I1
  - I1 ⇒ I2 AND I5
  - I2 ⇒ I1 AND I5
  - I5 ⇒ I1 AND I2

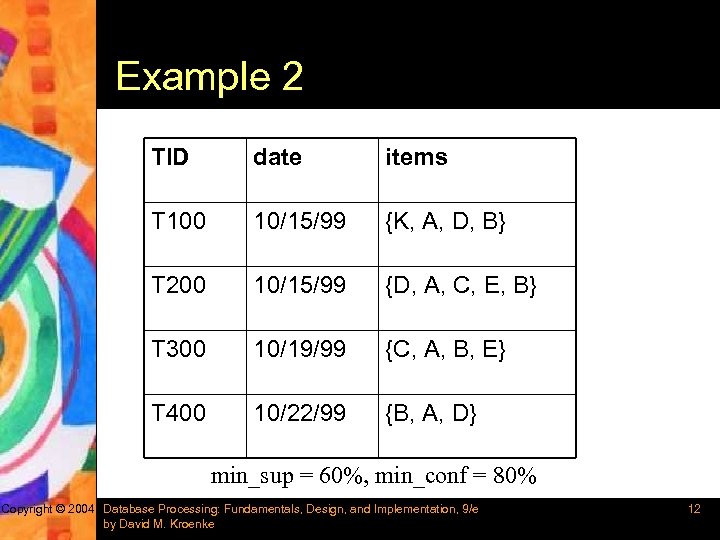
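
The remaining confidences can be checked with a short sketch that enumerates every nonempty proper subset s of l and computes count(l) / count(s). The helper names are hypothetical, and the transactions repeat the example table with the same assumption that T700 = {I1, I3}.

```python
from itertools import combinations
from typing import FrozenSet, Iterator, List, Tuple

# The nine example transactions; T700 = {I1, I3} is an assumption.
TRANSACTIONS: List[FrozenSet[str]] = [
    frozenset(t) for t in (
        {"I1", "I2", "I5"}, {"I2", "I4"}, {"I2", "I3"}, {"I1", "I2", "I4"},
        {"I1", "I3"}, {"I2", "I3"}, {"I1", "I3"}, {"I1", "I2", "I3", "I5"},
        {"I1", "I2", "I3"},
    )
]


def count(itemset: FrozenSet[str]) -> int:
    """Number of transactions containing every item of `itemset`."""
    return sum(1 for t in TRANSACTIONS if itemset <= t)


def rules_from(itemset: FrozenSet[str]) -> Iterator[Tuple[FrozenSet[str], FrozenSet[str], float]]:
    """Yield (s, l - s, confidence) for every nonempty proper subset s of l."""
    for r in range(1, len(itemset)):
        for antecedent in combinations(sorted(itemset), r):
            s = frozenset(antecedent)
            yield s, itemset - s, count(itemset) / count(s)


for s, rest, conf in rules_from(frozenset({"I1", "I2", "I5"})):
    print(f"{' AND '.join(sorted(s))} => {' AND '.join(sorted(rest))}: {conf:.0%}")
```

Only I1 ⇒ I2 AND I5 depends on the T700 assumption (2/6 with that row, 2/5 without it); the other five confidences come out the same either way.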

Example 2

| TID | Date | Items |
|------|----------|-----------------|
| T100 | 10/15/99 | {K, A, D, B} |
| T200 | 10/15/99 | {D, A, C, E, B} |
| T300 | 10/19/99 | {C, A, B, E} |
| T400 | 10/22/99 | {B, A, D} |

min_sup = 60%, min_conf = 80%

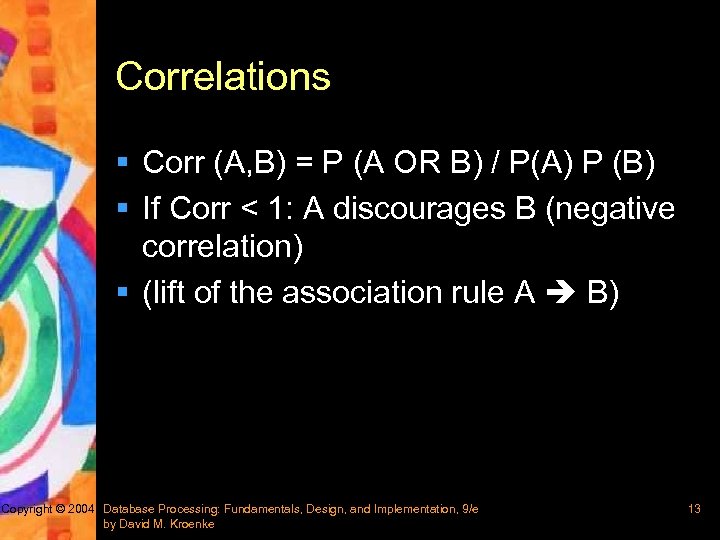
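
A brute-force sketch of Example 2 follows. With four transactions, min_sup = 60% means an itemset must appear in at least 3 of them. Because there are only six distinct items, the sketch skips Apriori pruning and simply enumerates all itemsets. The variable names are illustrative.

```python
from itertools import combinations
from typing import FrozenSet, List, Tuple

# Items are single letters, so each string spells one basket.
TRANSACTIONS: List[FrozenSet[str]] = [
    frozenset("KADB"),   # T100
    frozenset("DACEB"),  # T200
    frozenset("CABE"),   # T300
    frozenset("BAD"),    # T400
]
MIN_SUP, MIN_CONF = 0.60, 0.80


def support(itemset: FrozenSet[str]) -> float:
    return sum(1 for t in TRANSACTIONS if itemset <= t) / len(TRANSACTIONS)


items = sorted(set().union(*TRANSACTIONS))
# Brute-force enumeration: 6 items give only 63 candidate itemsets.
frequent = [
    frozenset(c)
    for r in range(1, len(items) + 1)
    for c in combinations(items, r)
    if support(frozenset(c)) >= MIN_SUP
]

strong: List[Tuple[FrozenSet[str], FrozenSet[str], float]] = []
for l_set in frequent:
    for r in range(1, len(l_set)):
        for antecedent in map(frozenset, combinations(sorted(l_set), r)):
            conf = support(l_set) / support(antecedent)
            if conf >= MIN_CONF:
                strong.append((antecedent, l_set - antecedent, conf))

for a, c, conf in strong:
    print(f"{''.join(sorted(a))} => {''.join(sorted(c))}  (conf {conf:.0%})")
```

The frequent itemsets are {A}, {B}, {D}, {A, B}, {A, D}, {B, D} and {A, B, D}. The strong rules are A ⇒ B, B ⇒ A, D ⇒ A, D ⇒ B, D ⇒ AB, AD ⇒ B and BD ⇒ A. Rules such as A ⇒ D fail the confidence threshold at 3/4 = 75%.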

Correlations
- $\mathrm{Corr}(A, B) = \dfrac{P(A \wedge B)}{P(A)\,P(B)}$, where $P(A \wedge B)$ is the probability that a transaction contains both A and B (this is the lift of the association rule A ⇒ B)
- If Corr = 1: A and B are independent; if Corr > 1: they are positively correlated
- If Corr < 1: A discourages B (negative correlation)

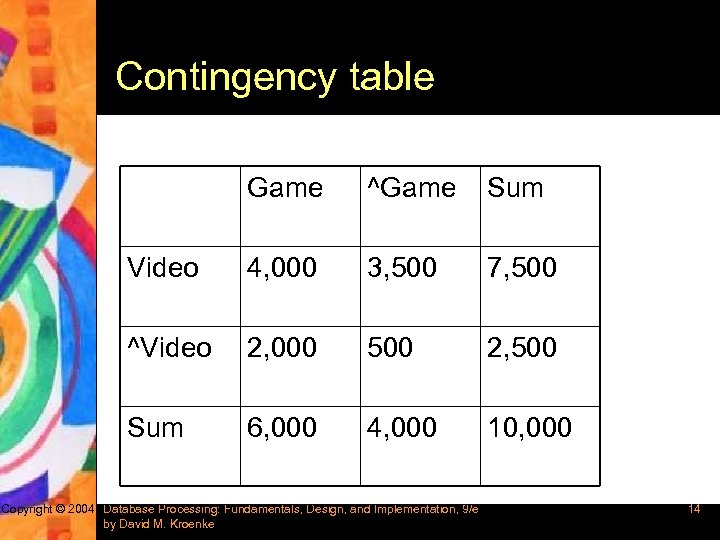
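
The correlation (lift) measure and its three cases can be sketched as below. The function names and the floating-point tolerance are illustrative choices. The probabilities come from the game/video example later in these slides.

```python
def lift(p_ab: float, p_a: float, p_b: float) -> float:
    """Corr(A, B) = P(A and B) / (P(A) * P(B)), i.e. the lift of A => B."""
    return p_ab / (p_a * p_b)


def interpret(corr: float, tol: float = 1e-9) -> str:
    """Classify a lift value; `tol` absorbs floating-point noise around 1."""
    if corr > 1 + tol:
        return "positively correlated"
    if corr < 1 - tol:
        return "negatively correlated"
    return "independent"


# Probabilities from the game/video example.
corr = lift(p_ab=0.40, p_a=0.60, p_b=0.75)
print(f"{corr:.2f} -> {interpret(corr)}")  # 0.89 -> negatively correlated
```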

Contingency table

|        | Game  | ¬Game | Sum    |
|--------|-------|-------|--------|
| Video  | 4,000 | 3,500 | 7,500  |
| ¬Video | 2,000 | 500   | 2,500  |
| Sum    | 6,000 | 4,000 | 10,000 |

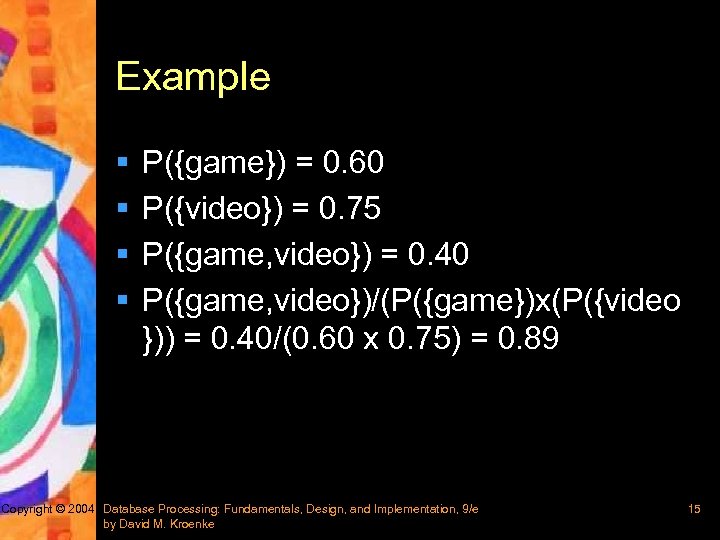

Example
- P({game}) = 0.60
- P({video}) = 0.75
- P({game, video}) = 0.40
- $\dfrac{P(\{\text{game}, \text{video}\})}{P(\{\text{game}\}) \times P(\{\text{video}\})} = \dfrac{0.40}{0.60 \times 0.75} \approx 0.89$
- Since 0.89 < 1, buying games and buying videos are negatively correlated

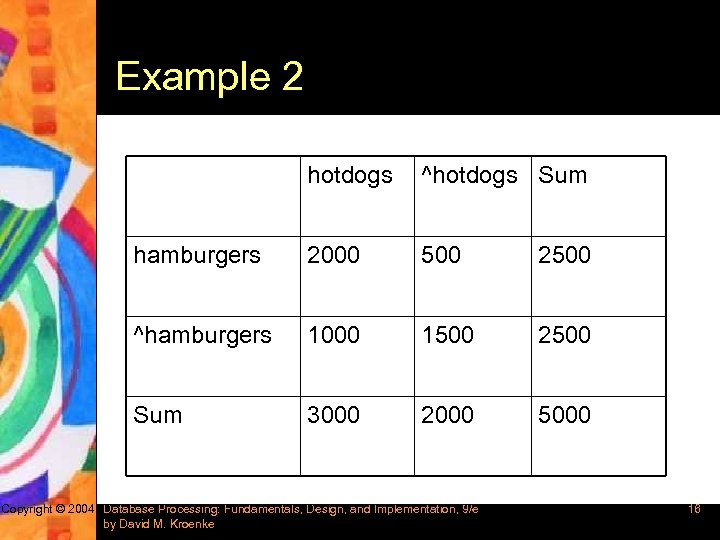

Example 2

|             | Hotdogs | ¬Hotdogs | Sum  |
|-------------|---------|----------|------|
| Hamburgers  | 2000    | 500      | 2500 |
| ¬Hamburgers | 1000    | 1500     | 2500 |
| Sum         | 3000    | 2000     | 5000 |

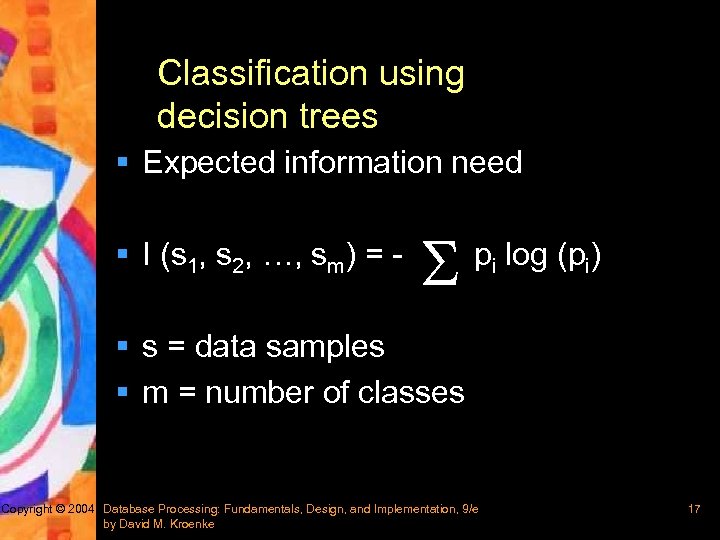
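
The same measure can be read directly off a 2×2 contingency table. The sketch below is a hypothetical helper. It takes the joint count, the two marginal totals and the grand total, and applies them to both tables in these slides.

```python
from typing import Tuple


def lift_from_counts(both: int, a_total: int, b_total: int, n: int) -> float:
    """Lift from 2x2 contingency counts: P(A and B) / (P(A) * P(B))."""
    return (both / n) / ((a_total / n) * (b_total / n))


def describe(name_a: str, name_b: str, counts: Tuple[int, int, int, int]) -> str:
    value = lift_from_counts(*counts)
    sign = "positive" if value > 1 else "negative" if value < 1 else "no"
    return f"lift({name_a}, {name_b}) = {value:.2f} ({sign} correlation)"


# (joint count, column total, row total, grand total) read off the two tables.
print(describe("hotdogs", "hamburgers", (2000, 3000, 2500, 5000)))
print(describe("game", "video", (4000, 6000, 7500, 10000)))
```

For hotdogs and hamburgers this gives 0.40 / (0.60 × 0.50) ≈ 1.33, a positive correlation. The game/video table gives ≈ 0.89, a negative one.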

Classification using decision trees
- Expected information needed to classify a sample: $I(s_1, s_2, \dots, s_m) = -\sum_{i=1}^{m} p_i \log_2 p_i$, where $p_i = s_i / s$
- $s$ = number of data samples; $s_i$ = number of samples in class $C_i$
- $m$ = number of classes

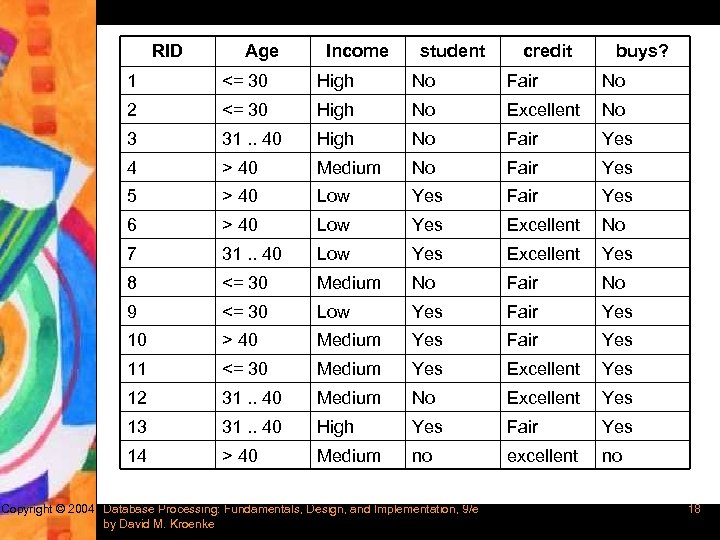
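
The expected-information formula translates into a few lines of Python. This is a sketch with a hypothetical function name; it uses log base 2, which is what produces the 0.940 value on the following slides.

```python
from math import log2


def expected_info(*class_counts: int) -> float:
    """I(s1, ..., sm) = -sum(p_i * log2(p_i)) with p_i = s_i / s.

    Classes with zero samples are skipped, using the convention 0 * log2(0) = 0.
    """
    s = sum(class_counts)
    return -sum((c / s) * log2(c / s) for c in class_counts if c > 0)


print(f"{expected_info(9, 5):.3f}")  # 0.940 (9 "yes" and 5 "no" samples)
print(f"{expected_info(2, 3):.3f}")  # 0.971
```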

| RID | Age | Income | Student | Credit | Buys? |
|-----|--------|--------|---------|-----------|-------|
| 1 | <= 30 | High | No | Fair | No |
| 2 | <= 30 | High | No | Excellent | No |
| 3 | 31..40 | High | No | Fair | Yes |
| 4 | > 40 | Medium | No | Fair | Yes |
| 5 | > 40 | Low | Yes | Fair | Yes |
| 6 | > 40 | Low | Yes | Excellent | No |
| 7 | 31..40 | Low | Yes | Excellent | Yes |
| 8 | <= 30 | Medium | No | Fair | No |
| 9 | <= 30 | Low | Yes | Fair | Yes |
| 10 | > 40 | Medium | Yes | Fair | Yes |
| 11 | <= 30 | Medium | Yes | Excellent | Yes |
| 12 | 31..40 | Medium | No | Excellent | Yes |
| 13 | 31..40 | High | Yes | Fair | Yes |
| 14 | > 40 | Medium | No | Excellent | No |

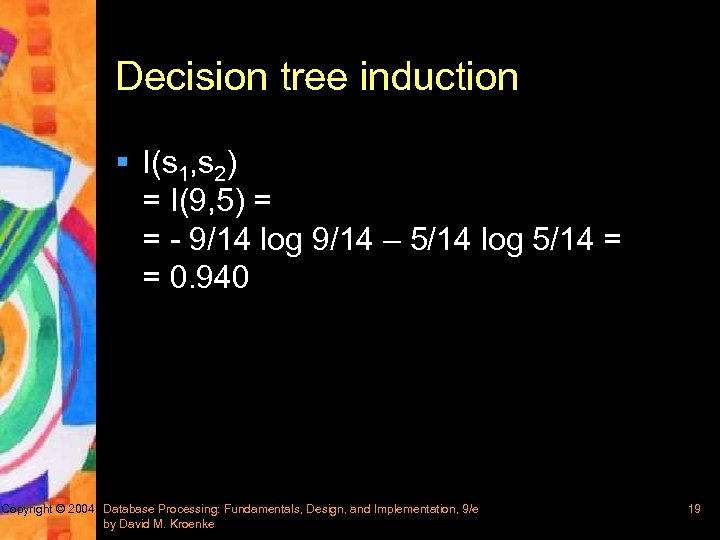

Decision tree induction
- 9 samples have buys = yes and 5 have buys = no
- $I(s_1, s_2) = I(9, 5) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} = 0.940$

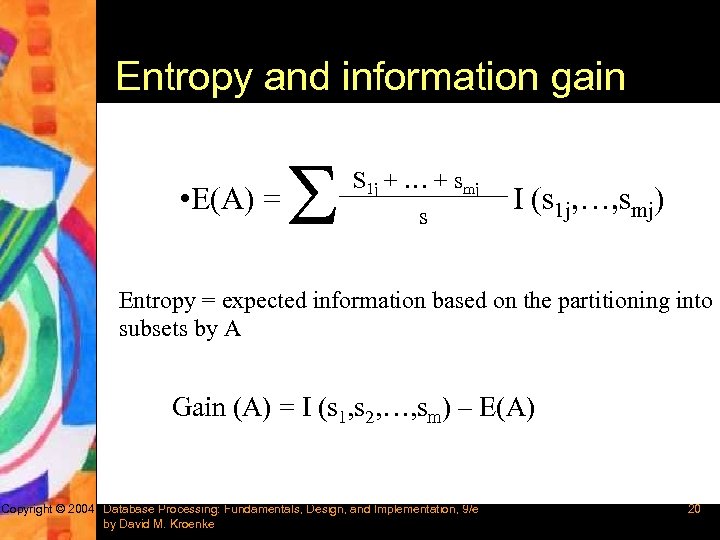

Entropy and information gain
- $E(A) = \sum_{j=1}^{v} \frac{s_{1j} + \dots + s_{mj}}{s}\, I(s_{1j}, \dots, s_{mj})$, where attribute A has v distinct values and $s_{ij}$ is the number of samples of class $C_i$ whose value of A is the j-th value
- Entropy = expected information based on the partitioning into subsets by A
- $\mathrm{Gain}(A) = I(s_1, s_2, \dots, s_m) - E(A)$

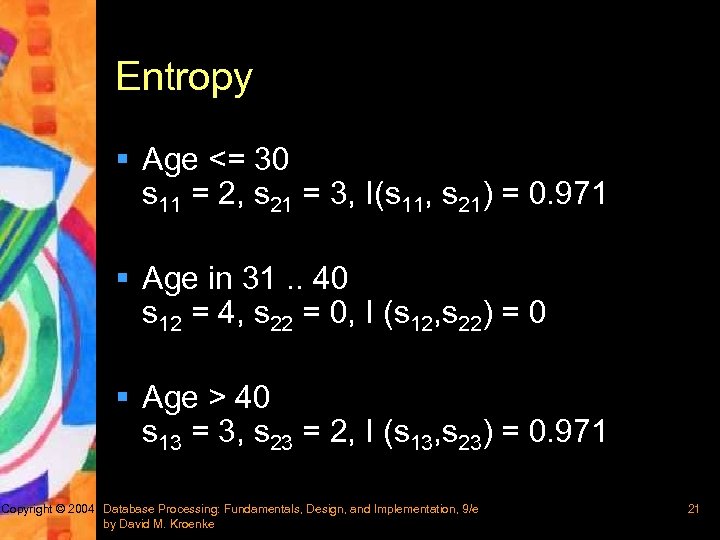

Entropy
- Age <= 30: $s_{11} = 2$, $s_{21} = 3$, $I(s_{11}, s_{21}) = 0.971$
- Age in 31..40: $s_{12} = 4$, $s_{22} = 0$, $I(s_{12}, s_{22}) = 0$
- Age > 40: $s_{13} = 3$, $s_{23} = 2$, $I(s_{13}, s_{23}) = 0.971$

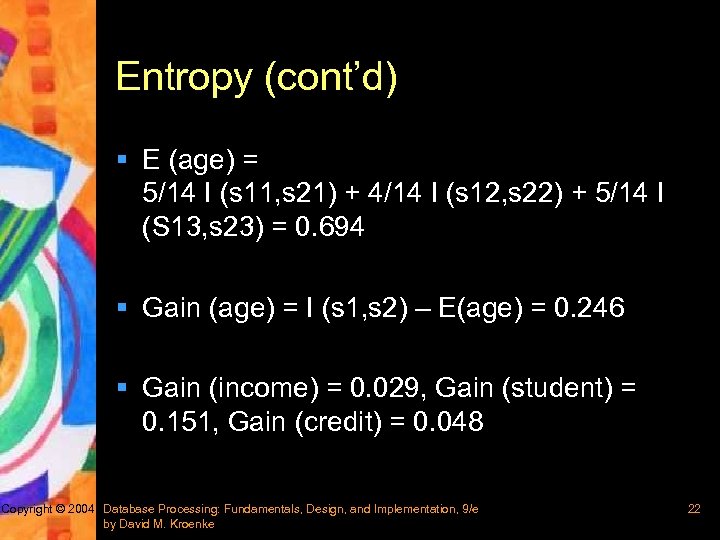

Entropy (cont’d)
- $E(\text{age}) = \frac{5}{14} I(s_{11}, s_{21}) + \frac{4}{14} I(s_{12}, s_{22}) + \frac{5}{14} I(s_{13}, s_{23}) = 0.694$
- $\mathrm{Gain}(\text{age}) = I(s_1, s_2) - E(\text{age}) = 0.246$
- Gain(income) = 0.029, Gain(student) = 0.151, Gain(credit) = 0.048
- Age has the highest information gain, so it is chosen as the test at the root of the tree

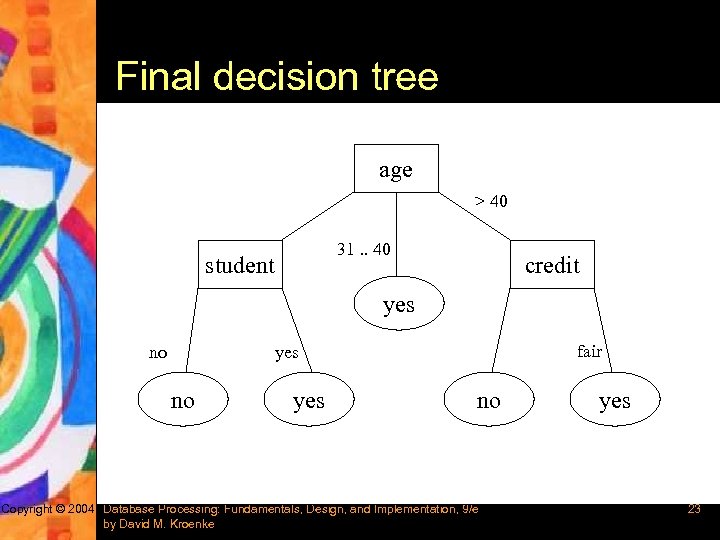
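
The gain computation for all four attributes can be reproduced with the sketch below. It encodes the 14-row training table as tuples and computes E(A) as the size-weighted information of each partition. The names `info` and `gain` are hypothetical.

```python
from collections import Counter
from math import log2
from typing import List, Sequence, Tuple

Row = Tuple[str, str, str, str, str]

# (age, income, student, credit, buys) for RIDs 1-14 of the training table.
ROWS: List[Row] = [
    ("<=30", "high", "no", "fair", "no"),
    ("<=30", "high", "no", "excellent", "no"),
    ("31..40", "high", "no", "fair", "yes"),
    (">40", "medium", "no", "fair", "yes"),
    (">40", "low", "yes", "fair", "yes"),
    (">40", "low", "yes", "excellent", "no"),
    ("31..40", "low", "yes", "excellent", "yes"),
    ("<=30", "medium", "no", "fair", "no"),
    ("<=30", "low", "yes", "fair", "yes"),
    (">40", "medium", "yes", "fair", "yes"),
    ("<=30", "medium", "yes", "excellent", "yes"),
    ("31..40", "medium", "no", "excellent", "yes"),
    ("31..40", "high", "yes", "fair", "yes"),
    (">40", "medium", "no", "excellent", "no"),
]
ATTRIBUTES = ["age", "income", "student", "credit"]


def info(labels: Sequence[str]) -> float:
    """Expected information I(s1, ..., sm) of a list of class labels."""
    s = len(labels)
    return -sum((n / s) * log2(n / s) for n in Counter(labels).values())


def gain(rows: Sequence[Row], j: int) -> float:
    """Gain(A) = I(s1, ..., sm) - E(A) for the attribute in column j."""
    e = 0.0
    for value in {r[j] for r in rows}:
        subset = [r[-1] for r in rows if r[j] == value]
        e += len(subset) / len(rows) * info(subset)  # weighted term of E(A)
    return info([r[-1] for r in rows]) - e


for j, name in enumerate(ATTRIBUTES):
    print(f"Gain({name}) = {gain(ROWS, j):.3f}")
```

Printed to three decimals, age and student come out as 0.247 and 0.152 rather than 0.246 and 0.151. The slide subtracts already-rounded intermediates (0.940 − 0.694 and 0.940 − 0.789). Age still has the highest gain.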

Final decision tree
- age <= 30: test student
  - student = no → no
  - student = yes → yes
- age 31..40 → yes
- age > 40: test credit
  - credit = excellent → no
  - credit = fair → yes

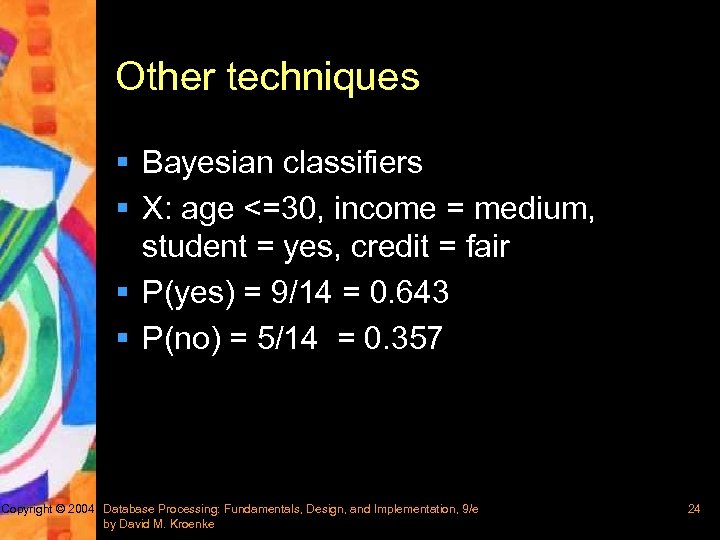
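
Written as nested conditionals, the final tree becomes a small classifier. The sketch below, with a hypothetical function name, checks it against all 14 training rows. Note that income is never tested.

```python
from typing import List, Tuple


def classify(age: str, income: str, student: str, credit: str) -> str:
    """The final decision tree from the slide; `income` is accepted but unused."""
    if age == "<=30":
        return "yes" if student == "yes" else "no"
    if age == "31..40":
        return "yes"
    # Remaining branch: age > 40, decided by the credit rating.
    return "yes" if credit == "fair" else "no"


# (age, income, student, credit, buys) for RIDs 1-14 of the training table.
ROWS: List[Tuple[str, str, str, str, str]] = [
    ("<=30", "high", "no", "fair", "no"),
    ("<=30", "high", "no", "excellent", "no"),
    ("31..40", "high", "no", "fair", "yes"),
    (">40", "medium", "no", "fair", "yes"),
    (">40", "low", "yes", "fair", "yes"),
    (">40", "low", "yes", "excellent", "no"),
    ("31..40", "low", "yes", "excellent", "yes"),
    ("<=30", "medium", "no", "fair", "no"),
    ("<=30", "low", "yes", "fair", "yes"),
    (">40", "medium", "yes", "fair", "yes"),
    ("<=30", "medium", "yes", "excellent", "yes"),
    ("31..40", "medium", "no", "excellent", "yes"),
    ("31..40", "high", "yes", "fair", "yes"),
    (">40", "medium", "no", "excellent", "no"),
]

misclassified = [r for r in ROWS if classify(*r[:4]) != r[4]]
print(len(misclassified))  # 0
```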

Other techniques
- Bayesian classifiers
- Sample to classify, X: age <= 30, income = medium, student = yes, credit = fair
- P(yes) = 9/14 = 0.643
- P(no) = 5/14 = 0.357

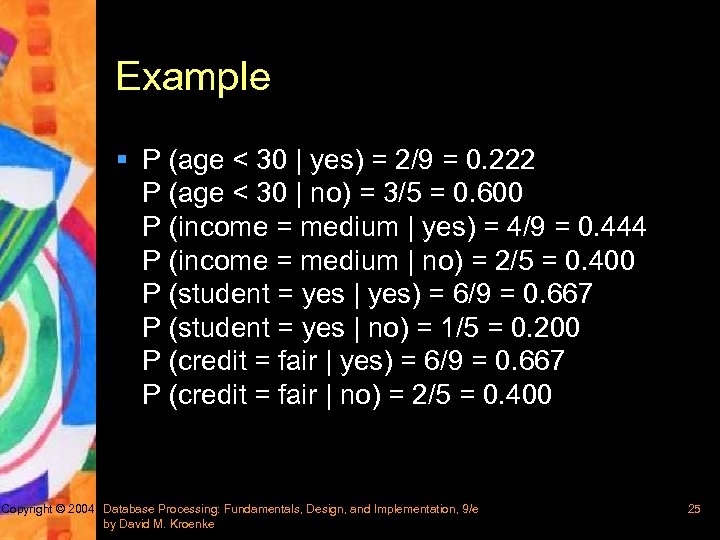

Example
- P(age <= 30 | yes) = 2/9 = 0.222; P(age <= 30 | no) = 3/5 = 0.600
- P(income = medium | yes) = 4/9 = 0.444; P(income = medium | no) = 2/5 = 0.400
- P(student = yes | yes) = 6/9 = 0.667; P(student = yes | no) = 1/5 = 0.200
- P(credit = fair | yes) = 6/9 = 0.667; P(credit = fair | no) = 2/5 = 0.400

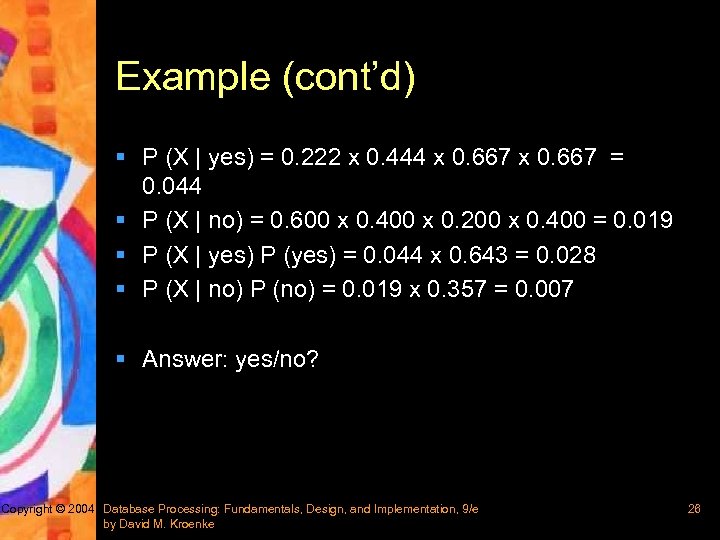

Example (cont’d)
- P(X | yes) = 0.222 × 0.444 × 0.667 × 0.667 = 0.044
- P(X | no) = 0.600 × 0.400 × 0.200 × 0.400 = 0.019
- P(X | yes) P(yes) = 0.044 × 0.643 = 0.028
- P(X | no) P(no) = 0.019 × 0.357 = 0.007
- Answer: since 0.028 > 0.007, the naive Bayesian classifier predicts buys = yes for X
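
The naive Bayesian calculation above can be sketched by counting directly in the training table. This is a minimal version with a hypothetical function name. Like the slides, it uses raw relative frequencies without a Laplace correction, so a zero count would zero out a class score.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Row = Tuple[str, str, str, str, str]

# (age, income, student, credit, buys) for RIDs 1-14 of the training table.
ROWS: List[Row] = [
    ("<=30", "high", "no", "fair", "no"),
    ("<=30", "high", "no", "excellent", "no"),
    ("31..40", "high", "no", "fair", "yes"),
    (">40", "medium", "no", "fair", "yes"),
    (">40", "low", "yes", "fair", "yes"),
    (">40", "low", "yes", "excellent", "no"),
    ("31..40", "low", "yes", "excellent", "yes"),
    ("<=30", "medium", "no", "fair", "no"),
    ("<=30", "low", "yes", "fair", "yes"),
    (">40", "medium", "yes", "fair", "yes"),
    ("<=30", "medium", "yes", "excellent", "yes"),
    ("31..40", "medium", "no", "excellent", "yes"),
    ("31..40", "high", "yes", "fair", "yes"),
    (">40", "medium", "no", "excellent", "no"),
]


def naive_bayes_scores(rows: Sequence[Row], x: Sequence[str]) -> Dict[str, float]:
    """Return P(X | C) * P(C) for every class C, assuming independent attributes."""
    class_counts = Counter(r[-1] for r in rows)
    scores: Dict[str, float] = {}
    for c, n_c in class_counts.items():
        score = n_c / len(rows)  # prior P(C)
        for j, value in enumerate(x):
            matches = sum(1 for r in rows if r[-1] == c and r[j] == value)
            score *= matches / n_c  # P(x_j | C) by counting, no smoothing
        scores[c] = score
    return scores


x = ("<=30", "medium", "yes", "fair")
scores = naive_bayes_scores(ROWS, x)
prediction = max(scores, key=scores.get)
print({c: round(s, 4) for c, s in scores.items()}, "->", prediction)
```

Without intermediate rounding the scores are about 0.0282 for yes and 0.0069 for no, which agrees with the slide's 0.028 and 0.007.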

Predictive models
- Inputs (e.g., medical history, age)
- Output (e.g., will the patient experience any side effects?)
- Some models predict more accurately than others

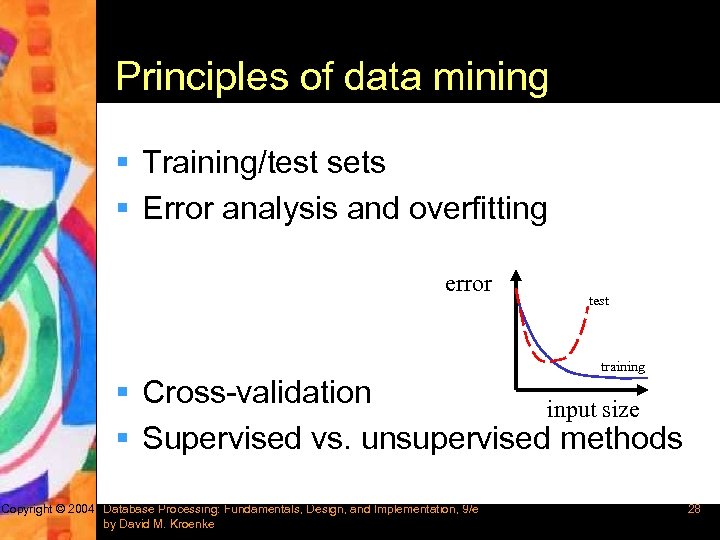

Principles of data mining
- Training/test sets
- Error analysis and overfitting
  [Figure: error on training and test data plotted against input size]
- Cross-validation
- Supervised vs. unsupervised methods

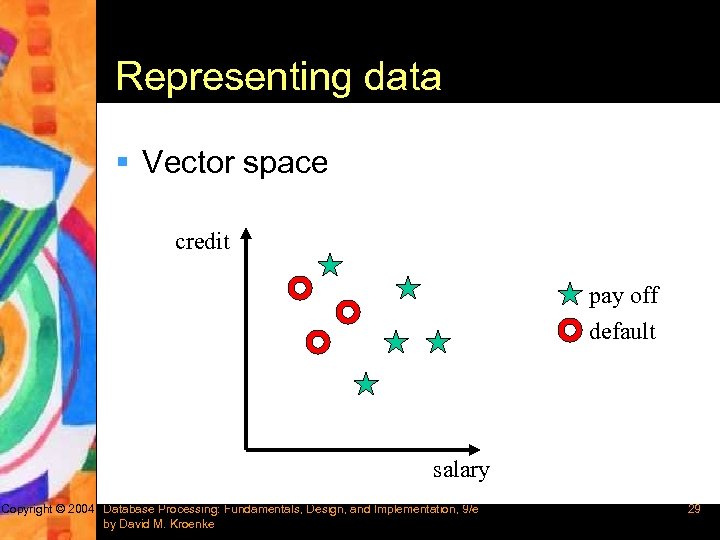
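
Cross-validation can be sketched as below. The indices are split into k folds, each fold serves once as the test set, and the model is refit on the remaining folds. The fold assignment (index i goes to fold i mod k), the majority-class baseline learner and all function names are illustrative assumptions. The labels are the buys? column of the 14-row training table.

```python
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Model = Callable[[object], str]


def k_fold_splits(n: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Assign index i to fold i % k; each fold is the test set exactly once."""
    folds = [list(range(f, n, k)) for f in range(k)]
    return [
        ([i for g, fold in enumerate(folds) if g != f for i in fold], folds[f])
        for f in range(k)
    ]


def fit_majority(xs: Sequence[object], ys: Sequence[str]) -> Model:
    """Baseline learner: always predicts the most common training label."""
    label = Counter(ys).most_common(1)[0][0]
    return lambda _x: label


def cross_validate(xs: Sequence[object], ys: Sequence[str], k: int,
                   fit: Callable[[Sequence[object], Sequence[str]], Model]) -> float:
    """Pooled accuracy: correct test predictions over all folds divided by n."""
    correct = 0
    for train, test in k_fold_splits(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        correct += sum(1 for i in test if model(xs[i]) == ys[i])
    return correct / len(xs)


# buys? labels for RIDs 1-14; the baseline ignores the features entirely.
ys = ["no", "no", "yes", "yes", "yes", "no", "yes", "no",
      "yes", "yes", "yes", "yes", "yes", "no"]
xs = list(range(len(ys)))
print(cross_validate(xs, ys, k=len(ys), fit=fit_majority))  # leave-one-out
```

With k equal to the number of samples (leave-one-out), the baseline predicts yes on every fold. It is right for the 9 yes samples and wrong for the 5 no samples, so its accuracy is 9/14.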

Representing data
- Vector space

[Figure: pay off and default cases plotted by credit and salary]

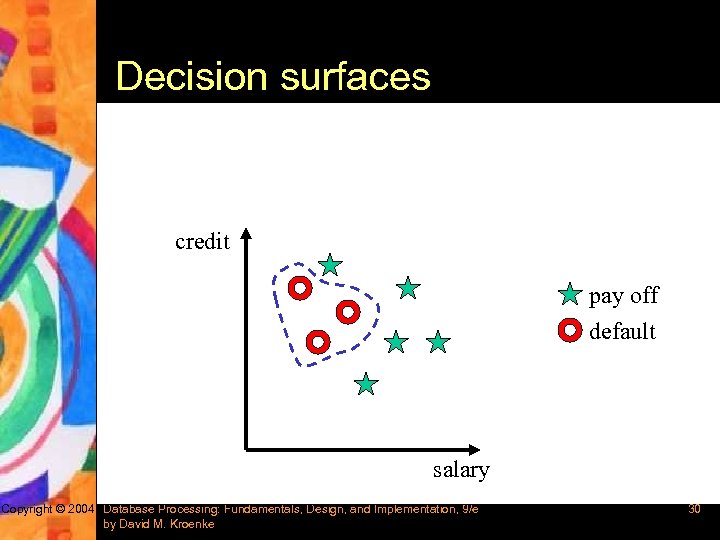

Decision surfaces

[Figure: decision surface separating pay off from default cases, plotted by credit and salary]

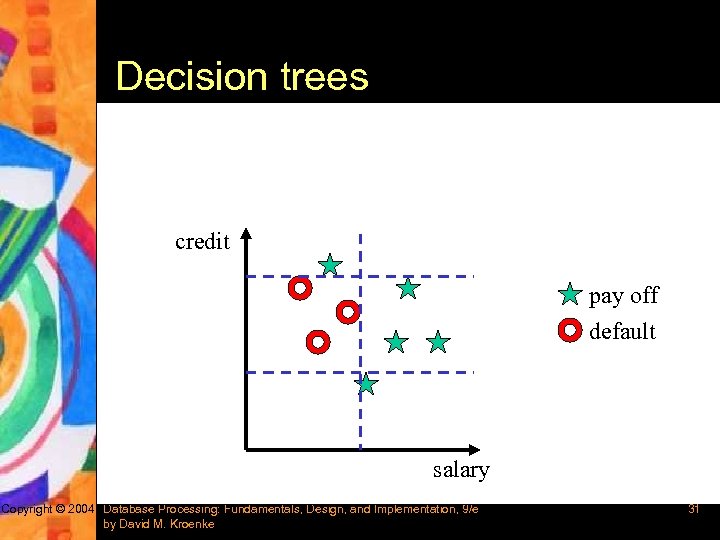

Decision trees

[Figure: decision tree partition of pay off and default cases, plotted by credit and salary]

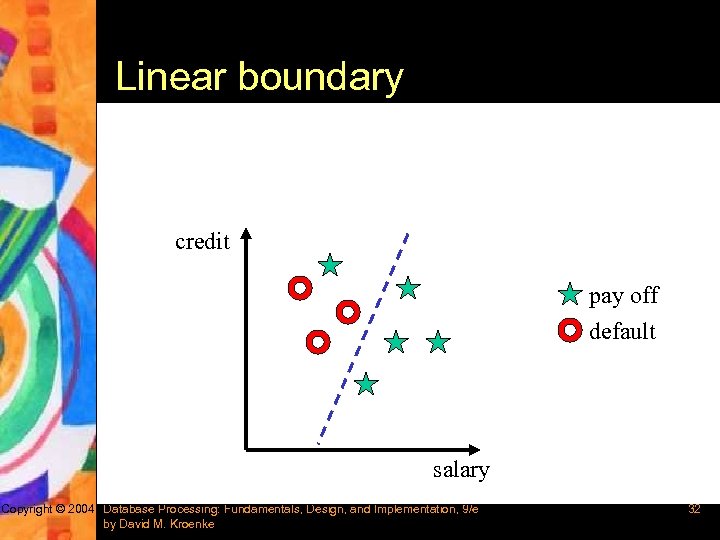

Linear boundary

[Figure: linear boundary between pay off and default cases, plotted by credit and salary]

kNN models
- Assign each new element the majority class among its k nearest labeled training examples
- Demos:
  - http://www2.cs.cmu.edu/~zhuxj/courseproject/knndemo/KNN.html
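
A k-nearest-neighbor classifier fits in a few lines: sort the training points by Euclidean distance to the query and take a majority vote among the k closest. In the sketch below, the (credit, salary) coordinates are hypothetical, scaled to [0, 1] and invented only to echo the pay off/default figures. It needs Python 3.8+ for `math.dist`.

```python
from collections import Counter
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical labeled customers: ((credit, salary), outcome); made-up numbers.
TRAIN: List[Tuple[Point, str]] = [
    ((0.9, 0.8), "pay off"),
    ((0.8, 0.6), "pay off"),
    ((0.7, 0.9), "pay off"),
    ((0.2, 0.3), "default"),
    ((0.3, 0.1), "default"),
    ((0.1, 0.4), "default"),
]


def knn_predict(x: Point, train: Sequence[Tuple[Point, str]], k: int = 3) -> str:
    """Majority label among the k training points closest to x (Euclidean)."""
    neighbors = sorted(train, key=lambda item: dist(x, item[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


print(knn_predict((0.85, 0.7), TRAIN))   # pay off
print(knn_predict((0.15, 0.2), TRAIN))   # default
```

An odd k avoids ties in two-class votes. Unlike k-means, nothing is learned ahead of time: every prediction scans the stored training set.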

Other methods
- Decision trees
- Neural networks
- Support vector machines
- Demos:
  - http://www.cs.technion.ac.il/~rani/LocBoost/

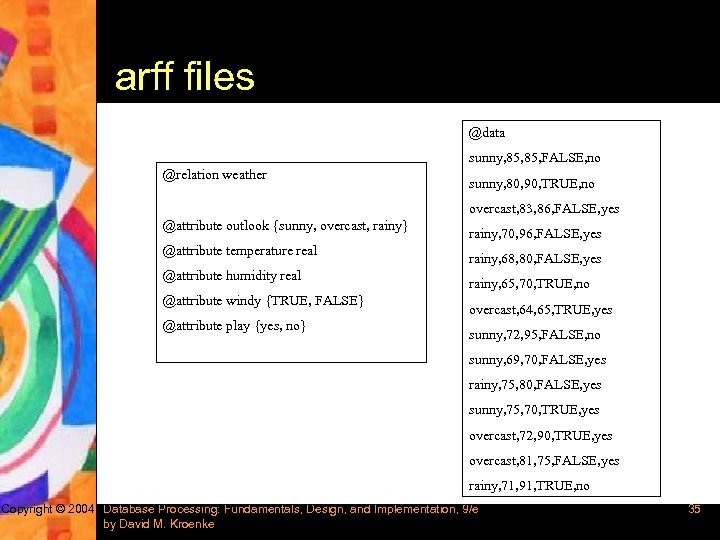

ARFF files

    @relation weather
    @attribute outlook {sunny, overcast, rainy}
    @attribute temperature real
    @attribute humidity real
    @attribute windy {TRUE, FALSE}
    @attribute play {yes, no}
    @data
    sunny,85,85,FALSE,no
    sunny,80,90,TRUE,no
    overcast,83,86,FALSE,yes
    rainy,70,96,FALSE,yes
    rainy,68,80,FALSE,yes
    rainy,65,70,TRUE,no
    overcast,64,65,TRUE,yes
    sunny,72,95,FALSE,no
    sunny,69,70,FALSE,yes
    rainy,75,80,FALSE,yes
    sunny,75,70,TRUE,yes
    overcast,72,90,TRUE,yes
    overcast,81,75,FALSE,yes
    rainy,71,91,TRUE,no

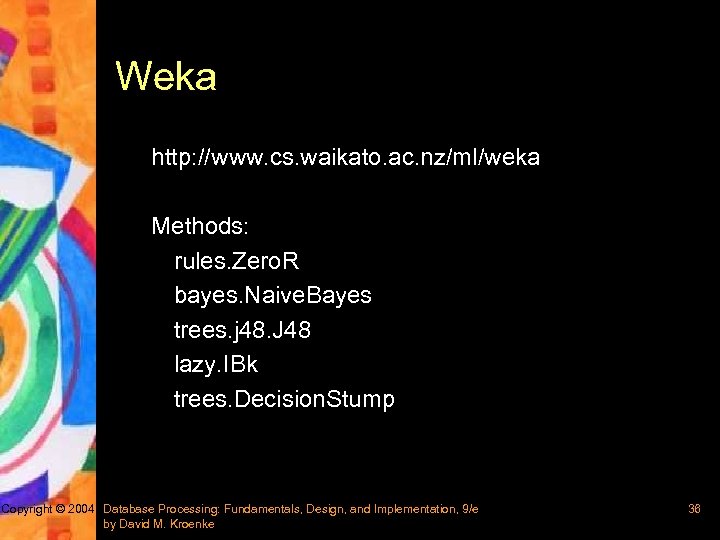
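
The ARFF layout (an @relation name, one @attribute line per column, then comma-separated rows after @data) is simple enough to parse by hand for a dense file like the weather data. The sketch below is a hypothetical minimal reader, not Weka's parser. It ignores quoting, sparse rows, dates and "?" missing values, and runs on an excerpt of the weather file (the header plus four data rows). For real use, a library reader such as SciPy's `scipy.io.arff.loadarff` is the safer choice.

```python
from typing import Dict, List, Tuple, Union

Value = Union[str, float]

# Excerpt of the weather data: full header plus four data rows.
ARFF_TEXT = """\
@relation weather
@attribute outlook {sunny, overcast, rainy}
@attribute temperature real
@attribute humidity real
@attribute windy {TRUE, FALSE}
@attribute play {yes, no}
@data
sunny,80,90,TRUE,no
overcast,83,86,FALSE,yes
rainy,70,96,FALSE,yes
rainy,68,80,FALSE,yes
"""


def parse_arff(text: str) -> Tuple[str, List[Tuple[str, str]], List[Dict[str, Value]]]:
    """Parse a simple dense ARFF file into (relation, attributes, rows)."""
    relation = ""
    attributes: List[Tuple[str, str]] = []
    rows: List[Dict[str, Value]] = []
    in_data = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):  # blank lines and ARFF comments
            continue
        keyword = line.lower()
        if keyword.startswith("@relation"):
            relation = line.split(None, 1)[1]
        elif keyword.startswith("@attribute"):
            _, name, kind = line.split(None, 2)  # kind is "real" or "{...}"
            attributes.append((name, kind))
        elif keyword.startswith("@data"):
            in_data = True
        elif in_data:
            values = [v.strip() for v in line.split(",")]
            rows.append({
                name: float(v) if kind.lower() in ("real", "numeric") else v
                for (name, kind), v in zip(attributes, values)
            })
    return relation, attributes, rows


relation, attributes, rows = parse_arff(ARFF_TEXT)
print(relation, [name for name, _ in attributes])
print(rows[0])
```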

Weka
- http://www.cs.waikato.ac.nz/ml/weka
- Methods:
  - rules.ZeroR
  - bayes.NaiveBayes
  - trees.j48.J48
  - lazy.IBk
  - trees.DecisionStump
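
ZeroR, the first method listed, is the usual baseline for a nominal class: it ignores every attribute and always predicts the most frequent class. The Python class below is a hypothetical re-implementation of that idea, not Weka's code. For brevity it reports training-set accuracy on the play column of the 14 weather instances.

```python
from collections import Counter
from typing import Optional, Sequence


class ZeroR:
    """Majority-class baseline in the spirit of Weka's rules.ZeroR (nominal class)."""

    def __init__(self) -> None:
        self.prediction: Optional[str] = None

    def fit(self, labels: Sequence[str]) -> "ZeroR":
        self.prediction = Counter(labels).most_common(1)[0][0]
        return self

    def predict(self, instance: object = None) -> Optional[str]:
        # ZeroR ignores every attribute of the instance.
        return self.prediction


# The play column of the 14 weather instances, in file order.
PLAY = ["no", "no", "yes", "yes", "yes", "no", "yes", "no",
        "yes", "yes", "yes", "yes", "yes", "no"]

model = ZeroR().fit(PLAY)
accuracy = sum(model.predict() == y for y in PLAY) / len(PLAY)
print(model.prediction, round(accuracy, 3))  # yes 0.643
```

Any of the other listed methods is worth using only if it clearly beats this 9/14 baseline.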

k-means clustering
- http://www.cc.gatech.edu/~dellaert/html/software.html
- `java weka.clusterers.SimpleKMeans -t data/weather.arff`
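
The core k-means loop (Lloyd's algorithm) alternates two steps. It assigns each point to its nearest centroid, then moves each centroid to the mean of its points, and stops when nothing changes. The sketch below clusters the weather instances by (temperature, humidity) with k = 2. It makes several assumptions: the initial centroids are simply the first k points, distances are plain Euclidean on unscaled values, and the first row's humidity is taken as 85, as in the commonly distributed weather data. Results therefore need not match Weka's SimpleKMeans, which uses its own initialization and distance settings. It needs Python 3.8+ for `math.dist`.

```python
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]

# (temperature, humidity) for the 14 weather instances, in file order.
# The first row's humidity (85) is an assumption from the standard weather data.
POINTS: List[Point] = [
    (85, 85), (80, 90), (83, 86), (70, 96), (68, 80), (65, 70), (64, 65),
    (72, 95), (69, 70), (75, 80), (75, 70), (72, 90), (81, 75), (71, 91),
]


def nearest(p: Point, centroids: Sequence[Point]) -> int:
    """Index of the centroid closest to p (ties go to the lower index)."""
    return min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))


def kmeans(points: Sequence[Point], k: int, max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Lloyd's algorithm; the initial centroids are simply the first k points."""
    centroids: List[Point] = [tuple(map(float, p)) for p in points[:k]]
    for _ in range(max_iter):
        clusters: List[List[Point]] = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Move each centroid to its cluster mean (keep it if the cluster is empty).
        updated = [
            tuple(sum(xs) / len(members) for xs in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if updated == centroids:  # assignments are stable: converged
            break
        centroids = updated
    return centroids, [nearest(p, centroids) for p in points]


centroids, labels = kmeans(POINTS, k=2)
for i, c in enumerate(centroids):
    print(f"cluster {i}: centroid = ({c[0]:.1f}, {c[1]:.1f}), size = {labels.count(i)}")
```

On these values the loop settles into two clusters of seven instances each: a drier group centered near (71.0, 72.9) and a more humid one near (76.1, 90.4).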

More useful pointers
- http://www.kdnuggets.com/
- http://www.twocrows.com/booklet.htm

More types of data mining
- Classification and prediction
- Cluster analysis
- Outlier analysis
- Evolution analysis
