6c95de154ee2cfaa52c70ce632c3504a.ppt

- Number of slides: 35

K-nearest neighbor methods. William Cohen, 10-601, April 2008

But first…

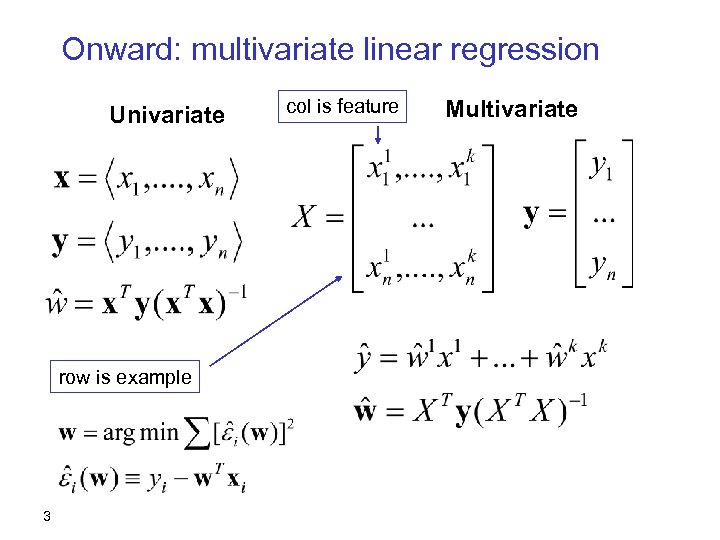

Onward: multivariate linear regression. Univariate vs. multivariate: each row is an example, each column is a feature.

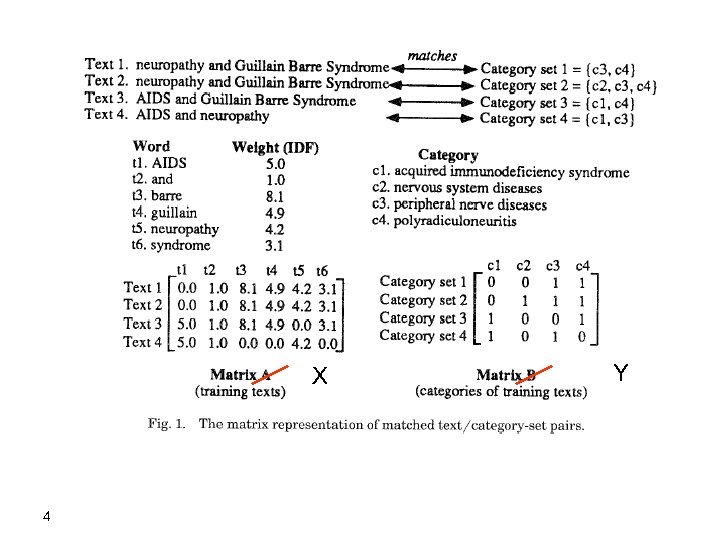

X, Y

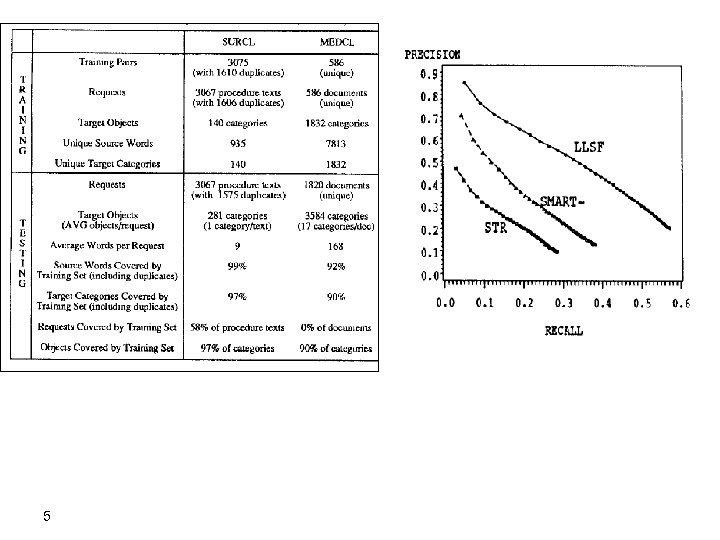


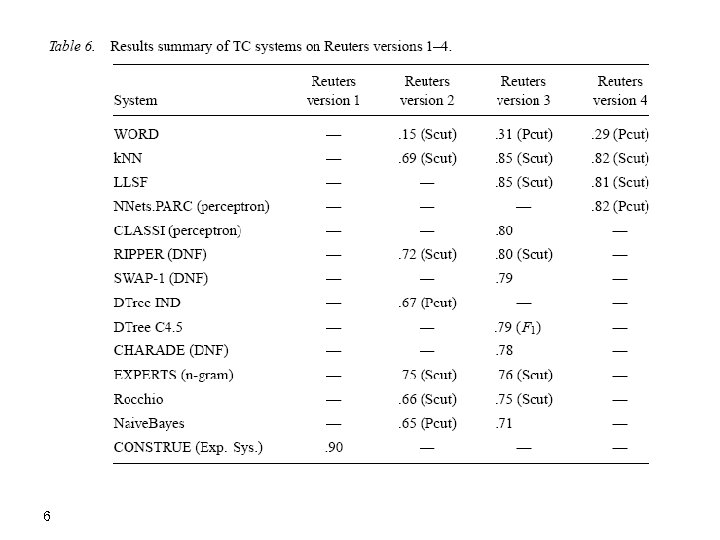

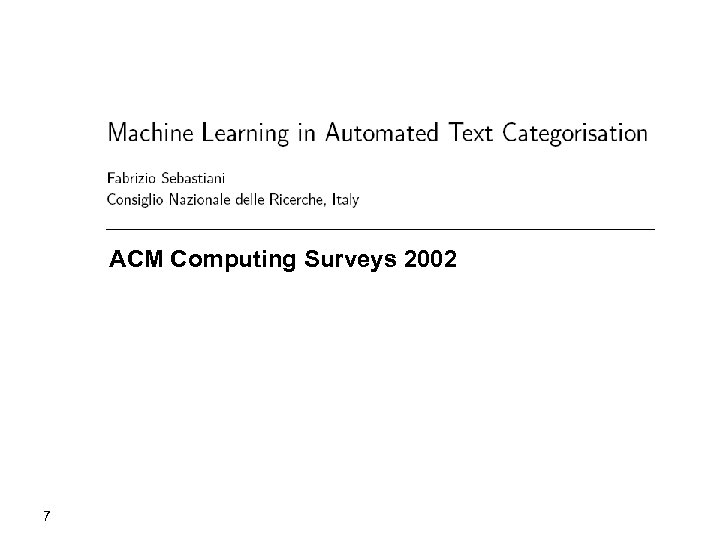

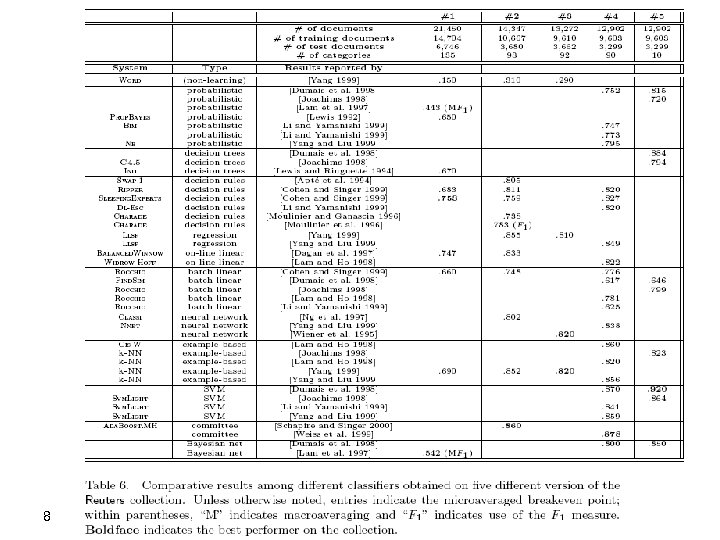

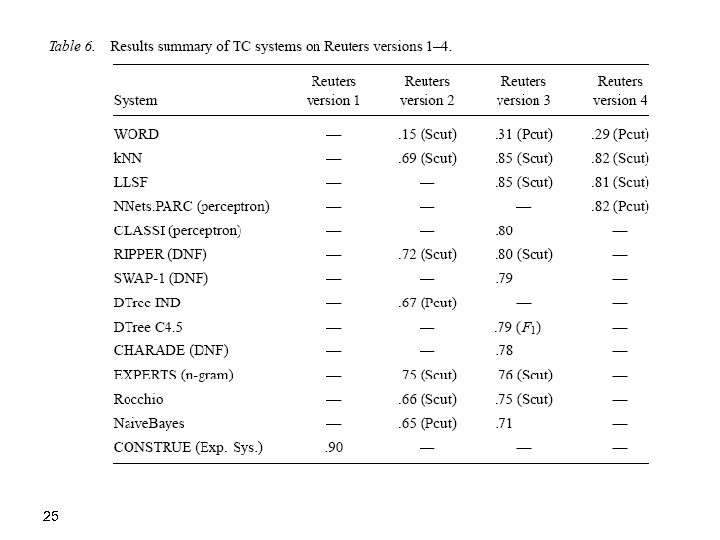

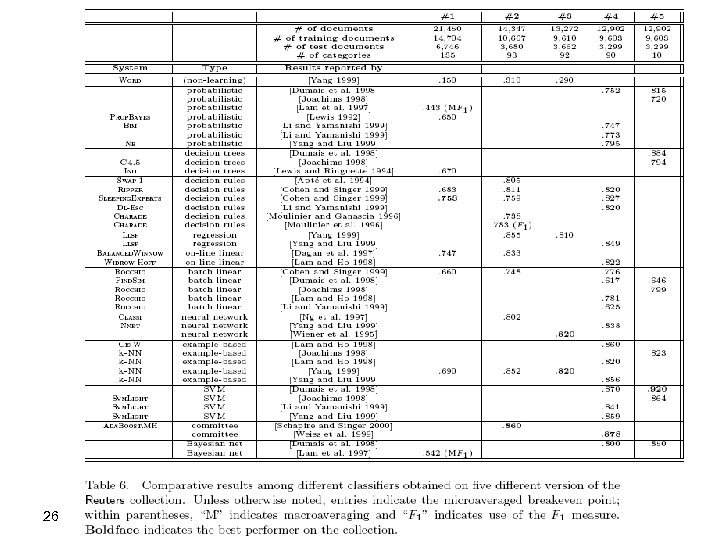

ACM Computing Surveys 2002


Review of K-NN methods (so far)

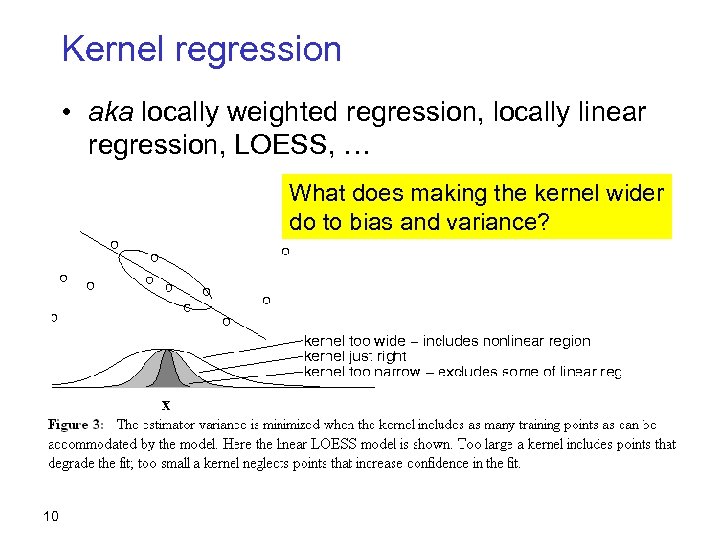

Kernel regression
- aka locally weighted regression, locally linear regression, LOESS, …
- What does making the kernel wider do to bias and variance?
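To make the bias/variance question concrete, here is a minimal sketch of the kernel-weighted-average form of kernel regression. It is not a full locally linear or LOESS fit. The Gaussian kernel, the `bandwidth` parameter, and the toy data are assumptions for illustration and do not come from the slides.

```python
import math

def gaussian_kernel(distance, bandwidth):
    """Unnormalized Gaussian weight for a training point at this distance."""
    return math.exp(-0.5 * (distance / bandwidth) ** 2)

def kernel_regression(train_x, train_y, query, bandwidth):
    """Predict y at `query` as a kernel-weighted average of training targets."""
    weights = [gaussian_kernel(abs(query - x), bandwidth) for x in train_x]
    total = sum(weights)
    if total == 0.0:
        # Every weight underflowed to zero; fall back to the plain mean.
        return sum(train_y) / len(train_y)
    return sum(w * y for w, y in zip(weights, train_y)) / total

# Toy, noise-free data (hypothetical): y = x^2
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [0.0, 1.0, 4.0, 9.0, 16.0]

# Narrow kernel: the prediction tracks the nearest training point.
narrow = kernel_regression(train_x, train_y, 2.0, bandwidth=0.1)
# Very wide kernel: the prediction approaches the global mean of y.
wide = kernel_regression(train_x, train_y, 2.0, bandwidth=100.0)
```

With the narrow kernel the estimate at x = 2 stays close to the local target 4, while the wide kernel pulls it toward the global mean 6. In general, widening the kernel averages over more points, which tends to raise bias and lower variance.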

BellCore's MovieRecommender
- Participants sent email to videos@bellcore.com
- System replied with a list of 500 movies to rate on a 1-10 scale (250 random, 250 popular)
  - Only a subset needs to be rated
- New participant P sends in rated movies via email
- System compares ratings for P to ratings of (a random sample of) previous users
- Most similar users are used to predict scores for unrated movies (more later)
- System returns recommendations in an email message.

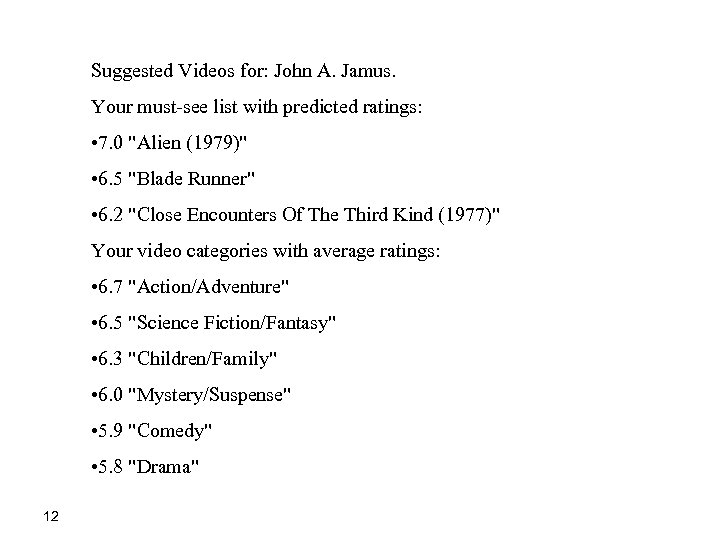

Suggested Videos for: John A. Jamus.

Your must-see list with predicted ratings:
- 7.0 "Alien (1979)"
- 6.5 "Blade Runner"
- 6.2 "Close Encounters Of The Third Kind (1977)"

Your video categories with average ratings:
- 6.7 "Action/Adventure"
- 6.5 "Science Fiction/Fantasy"
- 6.3 "Children/Family"
- 6.0 "Mystery/Suspense"
- 5.9 "Comedy"
- 5.8 "Drama"

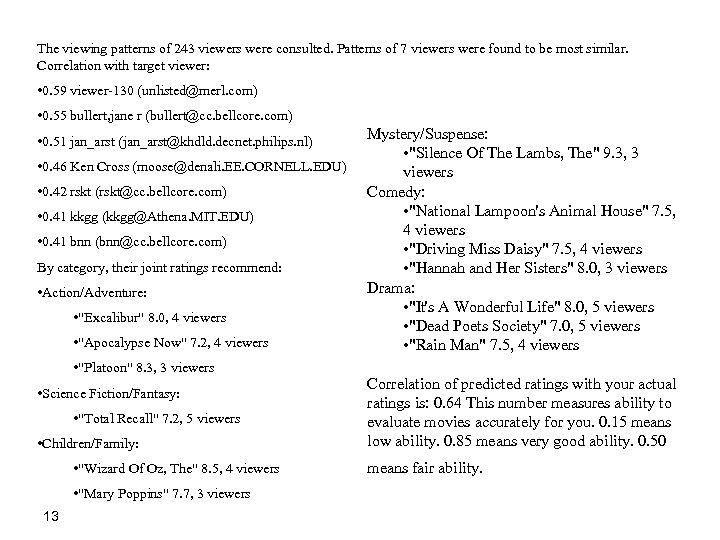

The viewing patterns of 243 viewers were consulted. Patterns of 7 viewers were found to be most similar.

Correlation with target viewer:
- 0.59 viewer-130 (unlisted@merl.com)
- 0.55 bullert, jane r (bullert@cc.bellcore.com)
- 0.51 jan_arst (jan_arst@khdld.decnet.philips.nl)
- 0.46 Ken Cross (moose@denali.EE.CORNELL.EDU)
- 0.42 rskt (rskt@cc.bellcore.com)
- 0.41 kkgg (kkgg@Athena.MIT.EDU)
- 0.41 bnn (bnn@cc.bellcore.com)

By category, their joint ratings recommend:
- Action/Adventure:
  - "Excalibur" 8.0, 4 viewers
  - "Apocalypse Now" 7.2, 4 viewers
  - "Platoon" 8.3, 3 viewers
- Science Fiction/Fantasy:
  - "Total Recall" 7.2, 5 viewers
- Children/Family:
  - "Wizard Of Oz, The" 8.5, 4 viewers
  - "Mary Poppins" 7.7, 3 viewers
- Mystery/Suspense:
  - "Silence Of The Lambs, The" 9.3, 3 viewers
- Comedy:
  - "National Lampoon's Animal House" 7.5, 4 viewers
  - "Driving Miss Daisy" 7.5, 4 viewers
  - "Hannah and Her Sisters" 8.0, 3 viewers
- Drama:
  - "It's A Wonderful Life" 8.0, 5 viewers
  - "Dead Poets Society" 7.0, 5 viewers
  - "Rain Man" 7.5, 4 viewers

Correlation of predicted ratings with your actual ratings is: 0.64. This number measures ability to evaluate movies accurately for you. 0.15 means low ability. 0.85 means very good ability. 0.50 means fair ability.

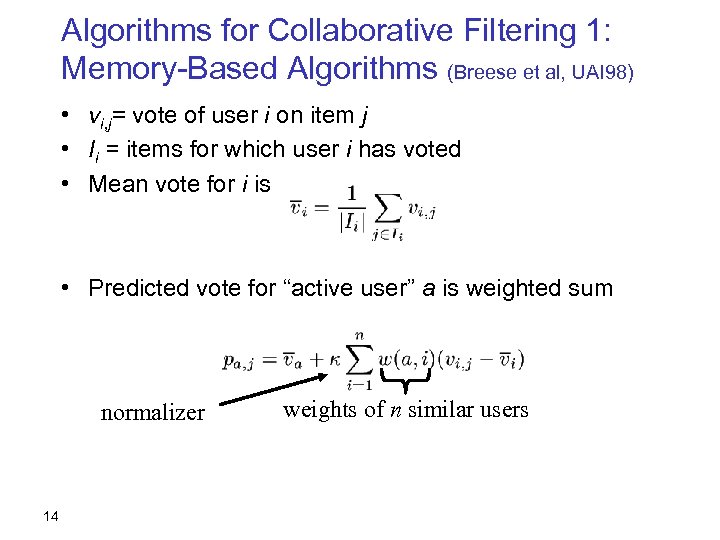

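Algorithms for Collaborative Filtering 1: Memory-Based Algorithms (Breese et al, UAI 98)
- $v_{i,j}$ = vote of user $i$ on item $j$
- $I_i$ = items for which user $i$ has voted
- Mean vote for $i$ is $\bar{v}_i = \frac{1}{|I_i|} \sum_{j \in I_i} v_{i,j}$
- Predicted vote for "active user" $a$ is a weighted sum: $p_{a,j} = \bar{v}_a + \kappa \sum_{i=1}^{n} w(a,i)\,(v_{i,j} - \bar{v}_i)$, where $\kappa$ is the normalizer and $w(a,i)$ are the weights of the $n$ similar users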
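The memory-based prediction can be sketched in a few lines. This is an illustrative sketch, not the BellCore system or the exact procedure of Breese et al. It assumes correlation weights computed over co-rated items, a normalizer equal to the sum of absolute weights, and invented toy ratings; all function names are hypothetical.

```python
import math

def mean_vote(votes):
    """Mean vote of one user over the items that user has voted on."""
    return sum(votes.values()) / len(votes)

def correlation_weight(votes_a, votes_i):
    """Correlation between two users over co-rated items (0.0 if undefined)."""
    common = set(votes_a) & set(votes_i)
    mean_a, mean_i = mean_vote(votes_a), mean_vote(votes_i)
    num = sum((votes_a[j] - mean_a) * (votes_i[j] - mean_i) for j in common)
    den_a = sum((votes_a[j] - mean_a) ** 2 for j in common)
    den_i = sum((votes_i[j] - mean_i) ** 2 for j in common)
    if den_a == 0 or den_i == 0:
        return 0.0
    return num / math.sqrt(den_a * den_i)

def predict_vote(active, others, item):
    """Active user's mean plus a normalized, weighted sum of deviations."""
    weighted_sum, norm = 0.0, 0.0
    for votes_i in others:
        if item not in votes_i:
            continue  # this user cannot contribute to the prediction
        w = correlation_weight(active, votes_i)
        weighted_sum += w * (votes_i[item] - mean_vote(votes_i))
        norm += abs(w)  # ASSUMPTION: normalizer = 1 / sum of |weights|
    if norm == 0.0:
        return mean_vote(active)
    return mean_vote(active) + weighted_sum / norm

# Hypothetical ratings on a 1-10 scale.
active = {"Alien": 8, "Blade Runner": 6}
others = [
    {"Alien": 9, "Blade Runner": 5, "Total Recall": 9},  # similar taste
    {"Alien": 4, "Blade Runner": 8, "Total Recall": 3},  # opposite taste
]
prediction = predict_vote(active, others, "Total Recall")
```

A negatively correlated user still carries information: disliking a movie counts as evidence that the active user will like it, so both users above push the prediction above the active user's mean.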

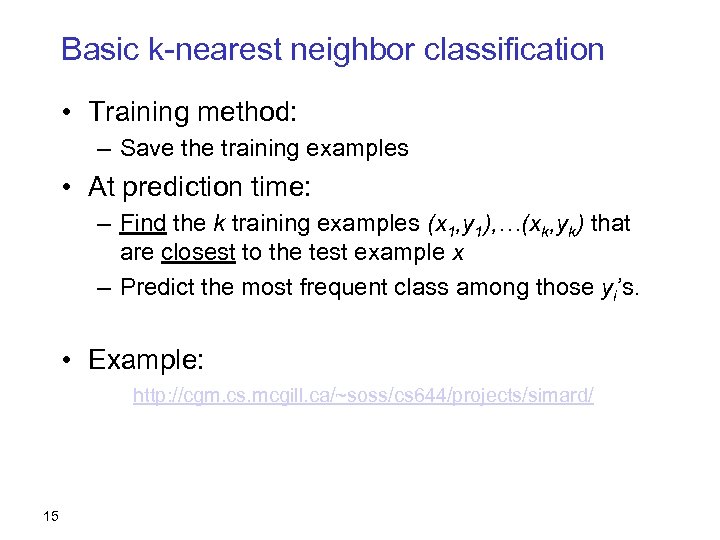

Basic k-nearest neighbor classification
- Training method:
  - Save the training examples
- At prediction time:
  - Find the k training examples (x1, y1), …, (xk, yk) that are closest to the test example x
  - Predict the most frequent class among those yi's.
- Example: http://cgm.cs.mcgill.ca/~soss/cs644/projects/simard/
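The two steps above (store the examples, then vote among the k closest) can be sketched as follows. Euclidean distance and the toy points are assumptions for illustration; the slides do not commit to a distance here.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two equal-length feature tuples."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def knn_predict(train, x, k):
    """train is a list of (features, label) pairs; 'training' is just storing it."""
    # Find the k stored examples closest to the test example x.
    neighbors = sorted(train, key=lambda ex: euclidean(ex[0], x))[:k]
    # Predict the most frequent class among their labels.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical two-class data.
train = [
    ((1.0, 1.0), "+"), ((1.2, 0.8), "+"), ((0.9, 1.1), "+"),
    ((4.0, 4.0), "o"), ((4.2, 3.9), "o"), ((3.8, 4.1), "o"),
]
label_near_plus = knn_predict(train, (1.1, 1.0), k=3)
label_near_o = knn_predict(train, (4.0, 3.8), k=3)
```

Sorting the whole training set is the simplest way to find neighbors and costs O(n log n) per query, which is part of why the later slides discuss speedups.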

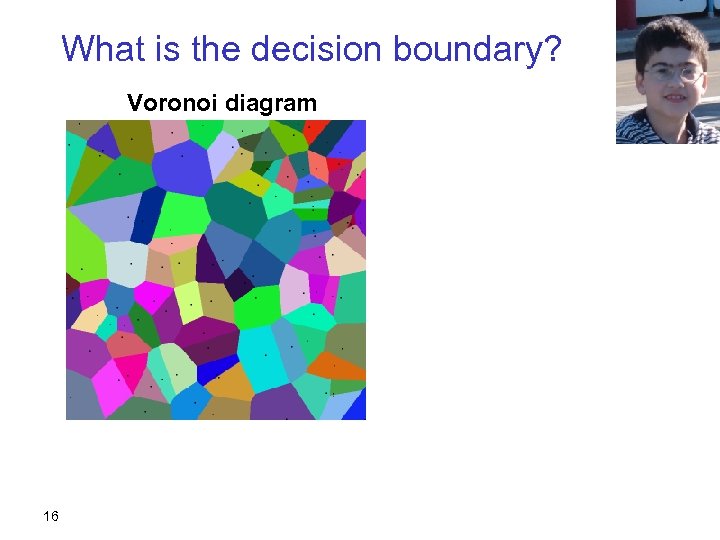

What is the decision boundary? Voronoi diagram

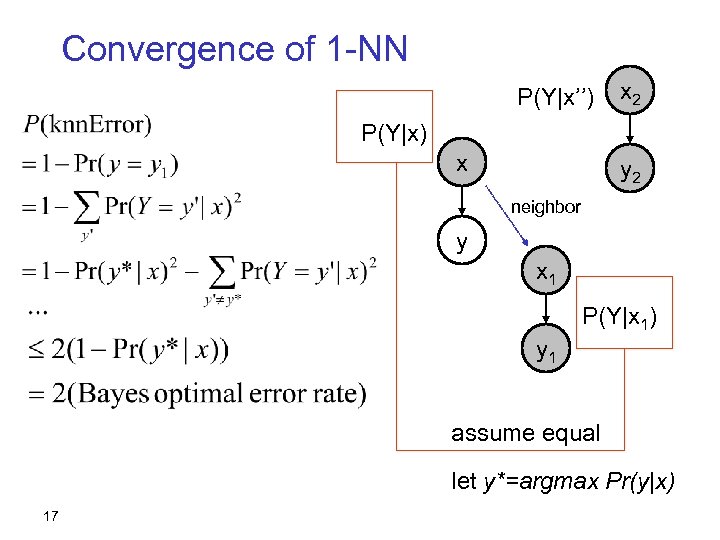

Convergence of 1-NN. Figure labels: test point x with P(Y|x) and label y; neighbor x1 with P(Y|x1) and label y1; x2 with label y2; P(Y|x''). Assume equal; let y* = argmax_y Pr(y|x).

Basic k-nearest neighbor classification
- Training method:
  - Save the training examples
- At prediction time:
  - Find the k training examples (x1, y1), …, (xk, yk) that are closest to the test example x
  - Predict the most frequent class among those yi's.
- Improvements:
  - Weighting examples from the neighborhood
  - Measuring "closeness"
  - Finding "close" examples in a large training set quickly

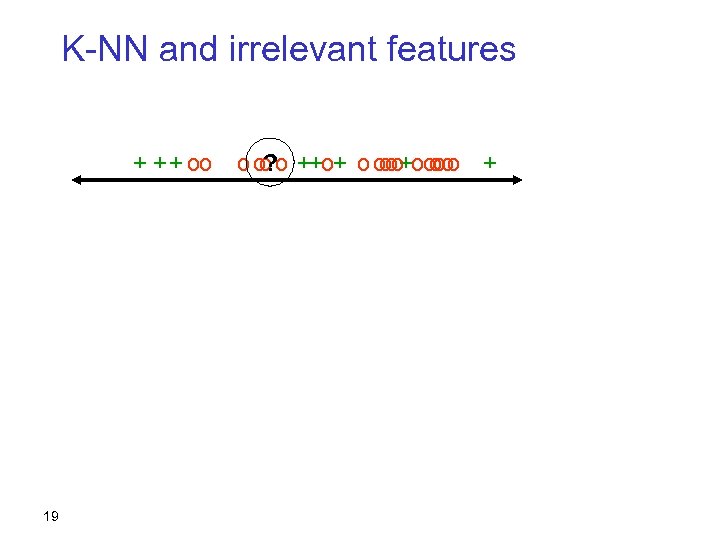

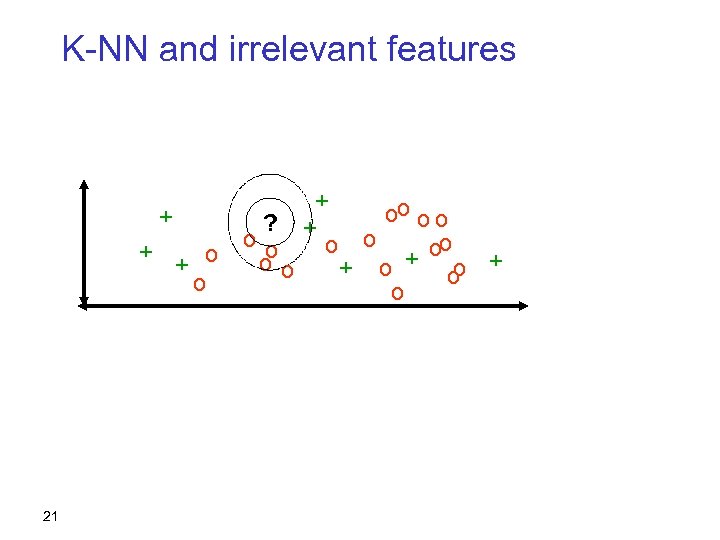

K-NN and irrelevant features (scatter plot of + and o examples around a query point ?)

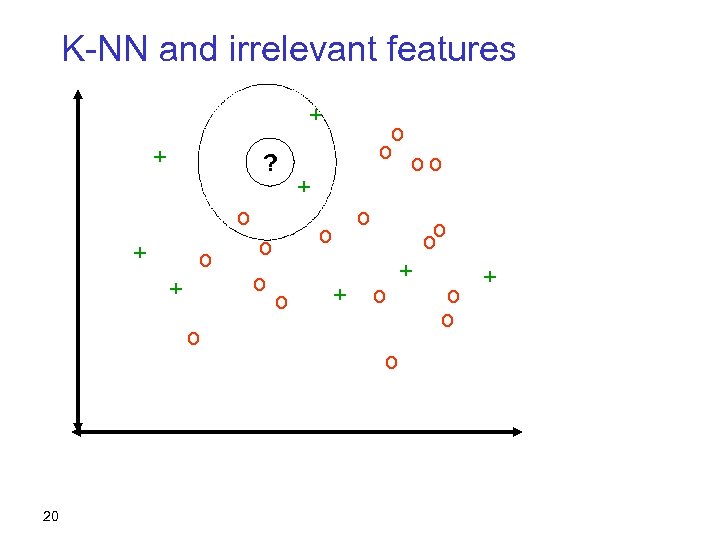

K-NN and irrelevant features (scatter plot of + and o examples around a query point ?)

K-NN and irrelevant features (scatter plot of + and o examples around a query point ?)

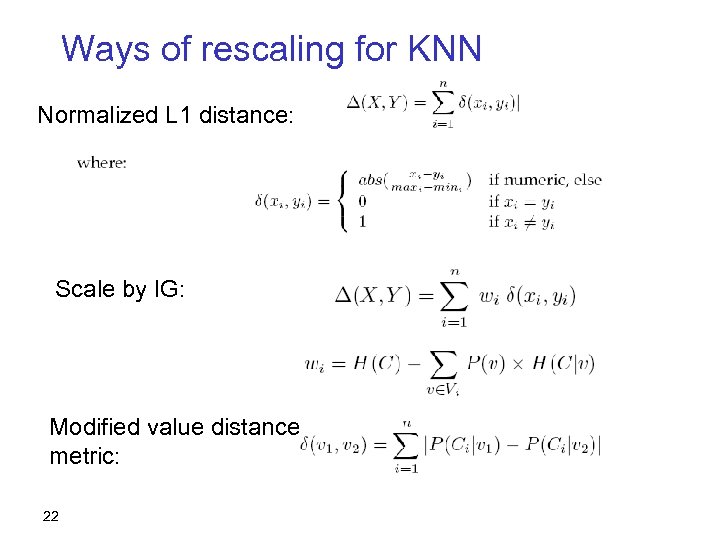

Ways of rescaling for KNN
- Normalized L1 distance
- Scale by IG
- Modified value distance metric
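The slide's formulas did not survive extraction, so the following is only one plausible reading of "normalized L1 distance": each per-feature difference is divided by that feature's observed range, so a feature measured in large units cannot dominate the distance. The range normalization and the helper names are assumptions.

```python
def feature_ranges(points):
    """(min, max) of each feature over a list of equal-length tuples."""
    dims = range(len(points[0]))
    return [(min(p[d] for p in points), max(p[d] for p in points)) for d in dims]

def normalized_l1(a, b, ranges):
    """L1 distance with every feature rescaled by its observed range."""
    total = 0.0
    for ai, bi, (lo, hi) in zip(a, b, ranges):
        span = hi - lo
        if span > 0:  # a constant feature carries no distance information
            total += abs(ai - bi) / span
    return total

# Hypothetical data: feature 0 lies in [0, 1], feature 1 in [1000, 3000].
points = [(0.0, 1000.0), (1.0, 3000.0), (0.5, 2000.0)]
ranges = feature_ranges(points)
d_far = normalized_l1(points[0], points[1], ranges)
d_mid = normalized_l1(points[0], points[2], ranges)
```

Without rescaling, the second feature would contribute thousands of units and the first at most one. After rescaling, both contribute equally.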

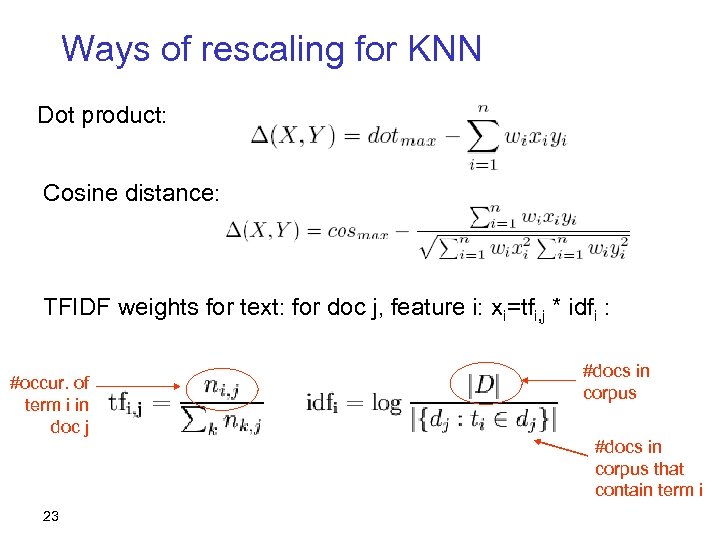

Ways of rescaling for KNN
- Dot product
- Cosine distance
- TFIDF weights for text: for doc $j$, feature $i$: $x_i = tf_{i,j} \cdot idf_i$
  - $tf_{i,j}$: number of occurrences of term $i$ in doc $j$
  - $idf_i$: based on the number of docs in the corpus that contain term $i$
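A small sketch of TF-IDF weighting followed by cosine similarity on sparse vectors. The exact IDF formula is not recoverable from the slide; `log(N / df)` is a common choice and is an assumption here, as are the toy documents.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """docs: list of token lists. Returns one sparse {term: weight} dict per doc."""
    n_docs = len(docs)
    # df[t] = number of docs in the corpus that contain term t
    df = Counter(term for doc in docs for term in set(doc))
    # ASSUMPTION: idf = log(N / df); other variants exist.
    idf = {term: math.log(n_docs / count) for term, count in df.items()}
    # tf = number of occurrences of the term in the doc
    return [{t: tf * idf[t] for t, tf in Counter(doc).items()} for doc in docs]

def dot(u, v):
    """Sparse dot product; iterates over the smaller vector."""
    if len(u) > len(v):
        u, v = v, u
    return sum(w * v.get(t, 0.0) for t, w in u.items())

def cosine(u, v):
    """Cosine similarity; 0.0 if either vector is all zeros."""
    norm = math.sqrt(dot(u, u) * dot(v, v))
    return dot(u, v) / norm if norm > 0 else 0.0

# Hypothetical three-document corpus.
docs = [
    ["alien", "space", "horror"],
    ["space", "robot", "future"],
    ["family", "musical", "nanny"],
]
vecs = tfidf_vectors(docs)
sim_shared = cosine(vecs[0], vecs[1])    # share only the common term "space"
sim_disjoint = cosine(vecs[0], vecs[2])  # share no terms
```

Because "space" appears in two of the three documents, its IDF is low and the first two documents end up only weakly similar despite sharing a term.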

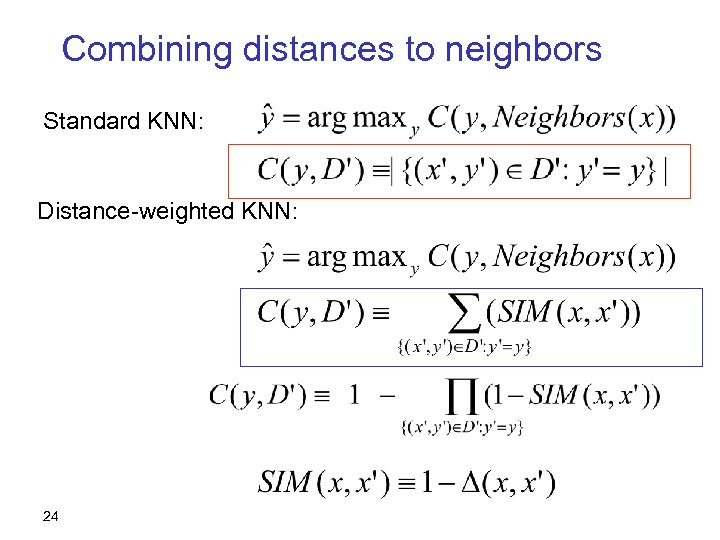

Combining distances to neighbors
- Standard KNN
- Distance-weighted KNN
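The contrast between the two voting schemes can be sketched as below. The slide's weighting formula was lost in extraction; inverse squared distance is a common choice and is an assumption here, as are the function names and data.

```python
import math
from collections import Counter, defaultdict

def majority_knn(train, x, k):
    """Standard KNN: each of the k nearest neighbors gets one vote."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def distance_weighted_knn(train, x, k, eps=1e-9):
    """Distance-weighted KNN: closer neighbors get larger votes."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    votes = defaultdict(float)
    for features, label in nearest:
        d = math.dist(features, x)
        # ASSUMPTION: weight = 1 / d^2; eps avoids division by zero.
        votes[label] += 1.0 / (d * d + eps)
    return max(votes, key=votes.get)

# Hypothetical 1-D data: one close "+" and two farther "o" points.
train = [((0.0,), "+"), ((3.0,), "o"), ((3.5,), "o")]
query = (1.0,)
plain = majority_knn(train, query, k=3)
weighted = distance_weighted_knn(train, query, k=3)
```

With k = 3 the plain vote sides with the two distant "o" points, while the weighted vote sides with the single close "+" point.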


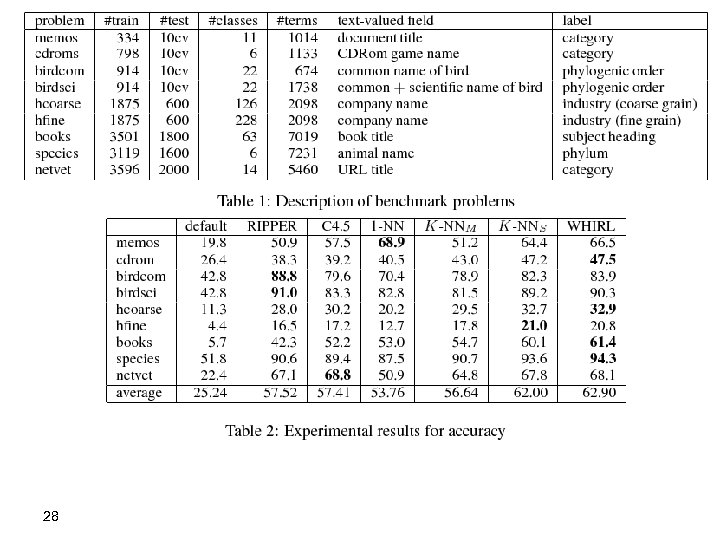

William W. Cohen & Haym Hirsh (1998): Joins that Generalize: Text Classification Using WHIRL, in KDD 1998: 169-173.


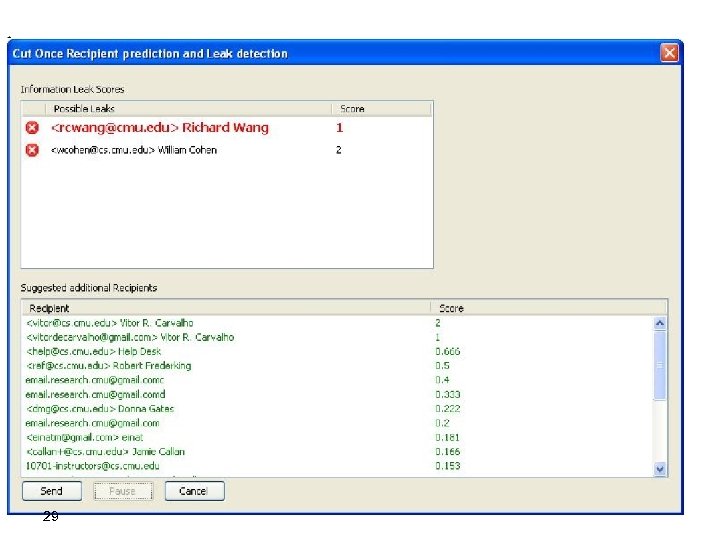


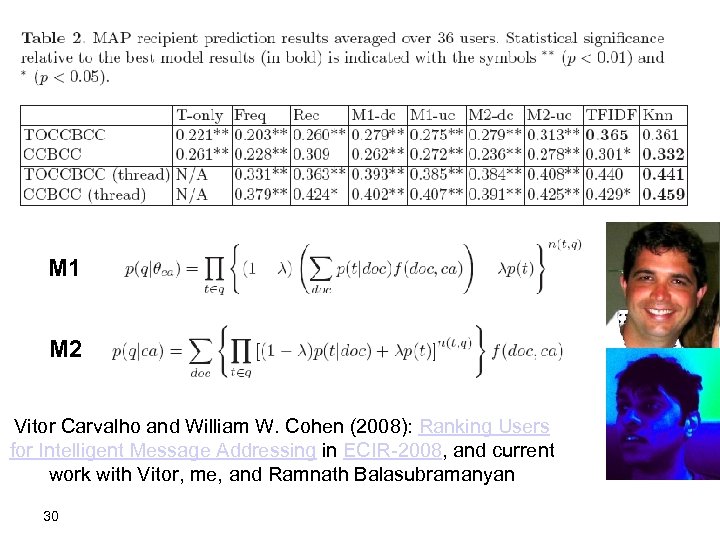

M1, M2. Vitor Carvalho and William W. Cohen (2008): Ranking Users for Intelligent Message Addressing, in ECIR 2008, and current work with Vitor, me, and Ramnath Balasubramanyan

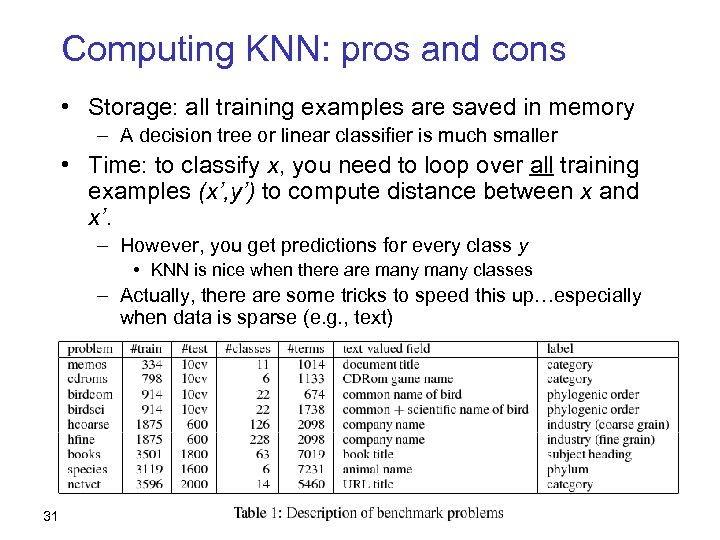

Computing KNN: pros and cons
- Storage: all training examples are saved in memory
  - A decision tree or linear classifier is much smaller
- Time: to classify x, you need to loop over all training examples (x', y') to compute the distance between x and x'.
  - However, you get predictions for every class y
- KNN is nice when there are many classes
  - Actually, there are some tricks to speed this up… especially when data is sparse (e.g., text)

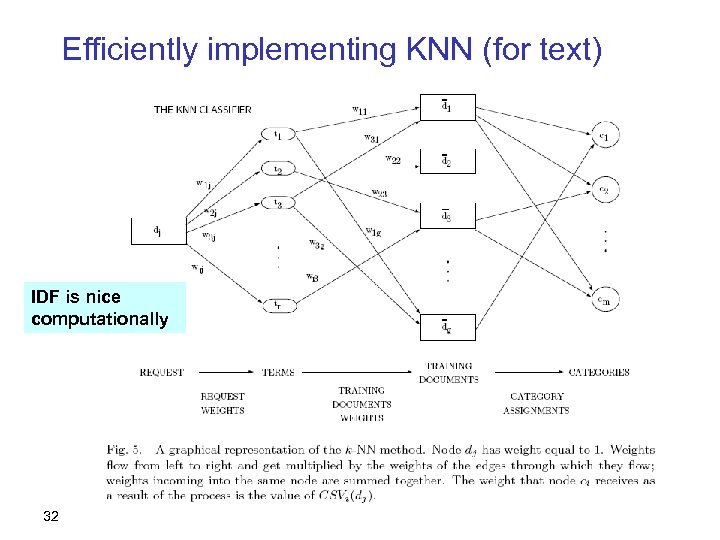

Efficiently implementing KNN (for text). IDF is nice computationally.
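One standard way to exploit sparsity in text is an inverted index: only documents that share at least one term with the query are ever scored. The sketch below is an assumption about what the slide's figure illustrates, not a reconstruction of it. Vectors are taken to be pre-weighted sparse dicts (for example TF-IDF), and the names are hypothetical.

```python
import heapq
from collections import defaultdict

def build_index(doc_vectors):
    """Map each term to a posting list of (doc_id, weight) pairs."""
    index = defaultdict(list)
    for doc_id, vec in enumerate(doc_vectors):
        for term, weight in vec.items():
            index[term].append((doc_id, weight))
    return index

def top_k_by_dot(index, query_vec, k):
    """Accumulate dot products only for docs that share a term with the query."""
    scores = defaultdict(float)
    for term, q_weight in query_vec.items():
        for doc_id, d_weight in index.get(term, ()):
            scores[doc_id] += q_weight * d_weight
    return heapq.nlargest(k, scores.items(), key=lambda item: item[1])

# Hypothetical pre-weighted sparse document vectors.
doc_vectors = [
    {"alien": 1.0, "space": 0.5},
    {"space": 0.5, "robot": 1.0},
    {"nanny": 1.0},
]
index = build_index(doc_vectors)
neighbors = top_k_by_dot(index, {"alien": 1.0, "space": 0.5}, k=2)
```

Under IDF-style weighting, the terms with the largest weights are the rare ones, and rare terms have short posting lists. That may be the sense in which the slide calls IDF computationally nice: the terms that matter most to the score are also the cheapest to process.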

Tricks with fast KNN

K-means using r-NN
1. Pick k points c1=x1, …, ck=xk as centers
2. For each xi, find Di=Neighborhood(xi)
3. For each xi, let ci=mean(Di)
4. Go to step 2…
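The four steps can be sketched as below, with loud caveats. The slide does not define `Neighborhood`, so this sketch assumes it returns the r data points nearest to the current center. It also replaces the open-ended "go to step 2" with a fixed iteration count. Both choices are guesses made for illustration.

```python
import math

def neighborhood(center, points, r):
    """ASSUMPTION: the neighborhood is the r points nearest to `center`."""
    return sorted(points, key=lambda p: math.dist(p, center))[:r]

def mean(points):
    """Coordinate-wise mean of a non-empty list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[d] for p in points) / n for d in range(len(points[0])))

def kmeans_rnn(points, k, r, iterations=10):
    centers = list(points[:k])            # step 1: first k points as centers
    for _ in range(iterations):           # step 4: repeat (fixed count here)
        # steps 2-3: move each center to the mean of its neighborhood
        centers = [mean(neighborhood(c, points, r)) for c in centers]
    return centers

# Hypothetical data: two well-separated groups of three points.
points = [
    (0.0, 0.0), (10.0, 10.0),
    (0.0, 1.0), (1.0, 0.0),
    (10.0, 11.0), (11.0, 10.0),
]
centers = kmeans_rnn(points, k=2, r=3)
```

The appeal of this formulation is that each update needs only a neighbor query around a center, so any fast nearest-neighbor retrieval can be reused in place of the brute-force sort shown here.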

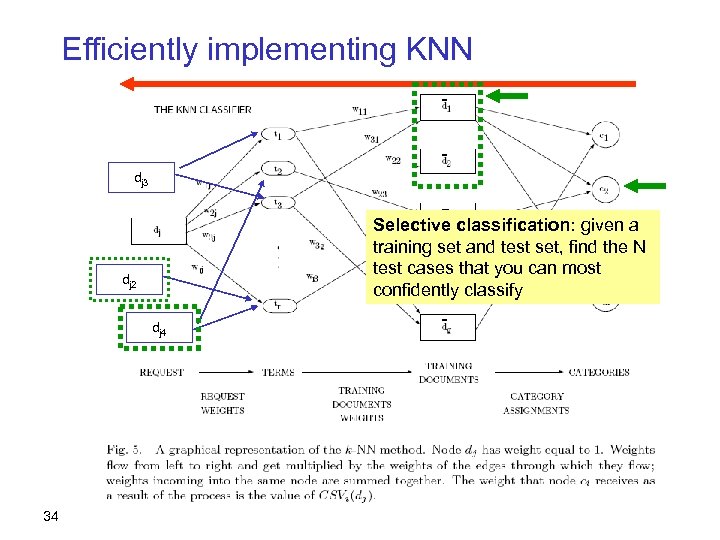

Efficiently implementing KNN. Selective classification: given a training set and test set, find the N test cases that you can most confidently classify. (Figure labels: dj2, dj3, dj4)

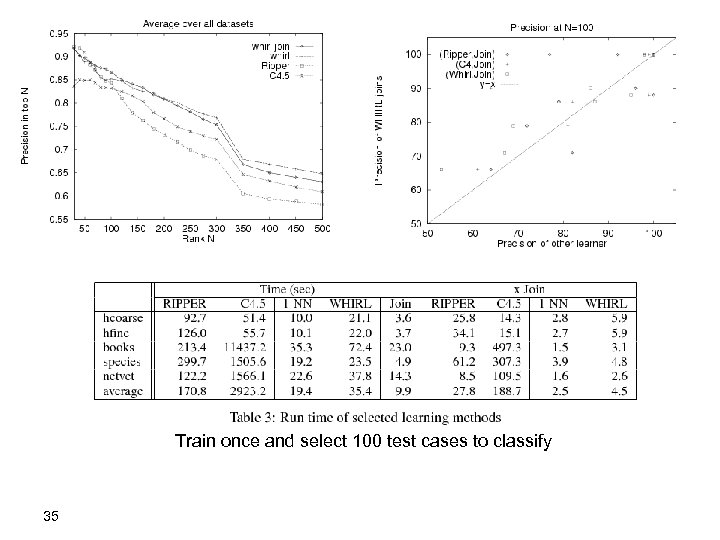

Train once and select 100 test cases to classify
