c6b4229637978904720eb4cade44d3a7.ppt

- Number of slides: 20

Jack Dongarra, Piotr Łuszczek: HPC Challenge Benchmark Suite. 2006 SPEC Workshop, January 23, 2006, Austin, TX. http://icl.cs.utk.edu/hpcc/

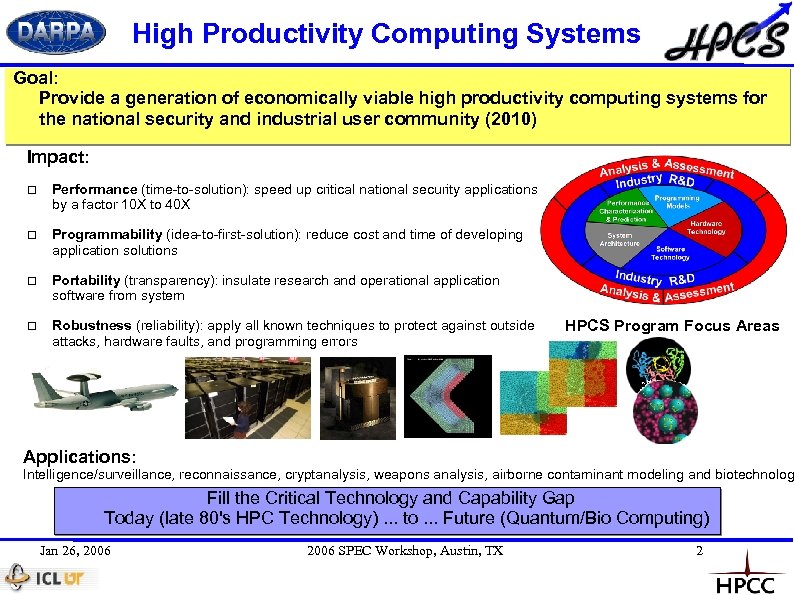

High Productivity Computing Systems

Goal: Provide a generation of economically viable high productivity computing systems for the national security and industrial user community (2010)

Impact:
- Performance (time-to-solution): speed up critical national security applications by a factor of 10X to 40X
- Programmability (idea-to-first-solution): reduce cost and time of developing application solutions
- Portability (transparency): insulate research and operational application software from system
- Robustness (reliability): apply all known techniques to protect against outside attacks, hardware faults, and programming errors

HPCS Program Focus Areas

Applications: intelligence/surveillance, reconnaissance, cryptanalysis, weapons analysis, airborne contaminant modeling, and biotechnology

Fill the Critical Technology and Capability Gap: Today (late 80's HPC technology) ... to ... Future (Quantum/Bio Computing)

Jan 26, 2006 SPEC Workshop, Austin, TX

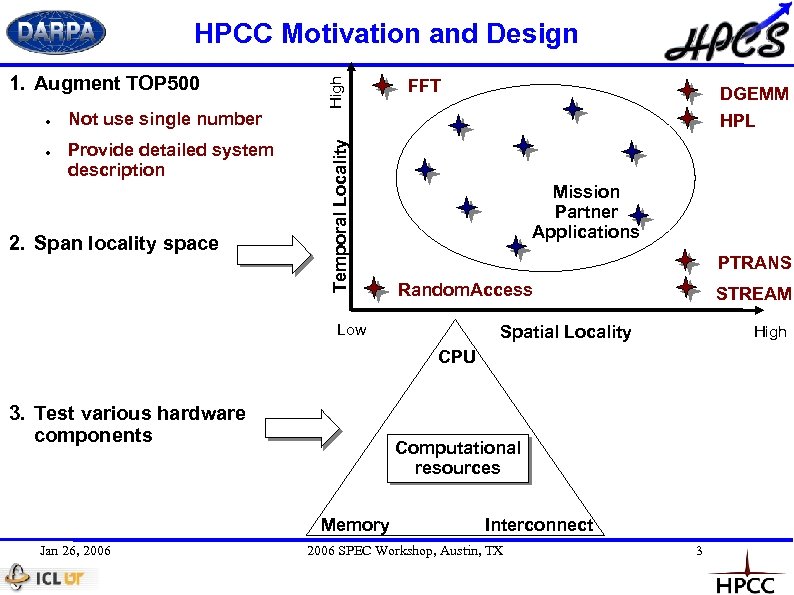

HPCC Motivation and Design

1. Augment TOP500
   - Do not use a single number
   - Provide detailed system description
2. Span locality space
   - Figure: kernels plotted by temporal locality (low to high) against spatial locality (low to high): HPL, DGEMM, FFT, PTRANS, STREAM, RandomAccess, and mission partner applications
3. Test various hardware components
   - CPU computational resources
   - Memory
   - Interconnect

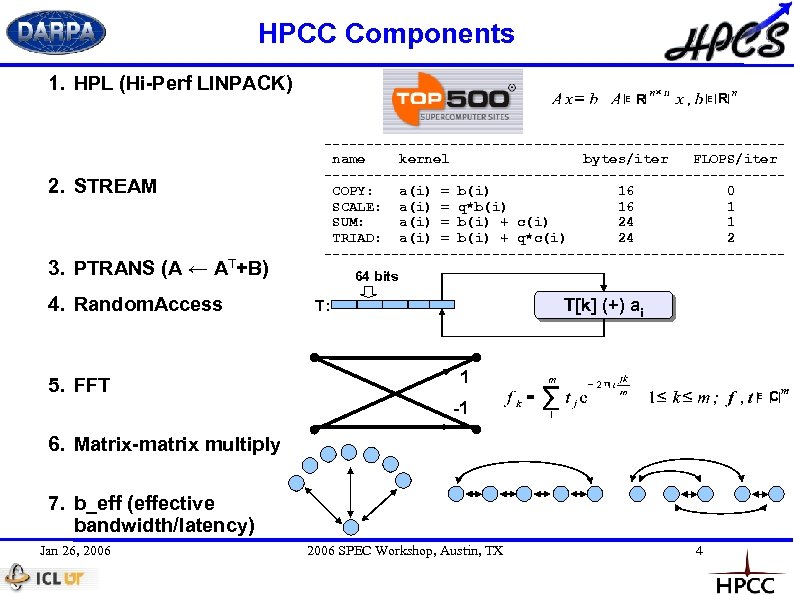

HPCC Components

1. HPL (High-Performance LINPACK)
2. STREAM
3. PTRANS (A ← A^T + B)
4. RandomAccess (64-bit table updates: T[k] ← T[k] XOR a_i)
5. FFT
6. Matrix-matrix multiply
7. b_eff (effective bandwidth/latency)

STREAM kernels:

| name | kernel | bytes/iter | FLOPS/iter |
|---|---|---|---|
| COPY | a(i) = b(i) | 16 | 0 |
| SCALE | a(i) = q*b(i) | 16 | 1 |
| SUM | a(i) = b(i) + c(i) | 24 | 1 |
| TRIAD | a(i) = b(i) + q*c(i) | 24 | 2 |
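The STREAM kernels listed above can be written out directly. The following is a minimal Python sketch, assuming 8-byte double-precision elements (which is what the 16 and 24 bytes/iter figures imply). The function names and the `bandwidth_gb_per_s` helper are hypothetical and only illustrate the arithmetic; the benchmark itself is compiled code and is not implemented this way.

```python
# Illustrative sketch of the four STREAM kernels; not the benchmark's own code.
# Assumption: every element is an 8-byte double, so bytes/iter = 8 * arrays touched.

def stream_copy(a, b):
    for i in range(len(a)):
        a[i] = b[i]              # 1 load + 1 store = 16 bytes, 0 flops

def stream_scale(a, b, q):
    for i in range(len(a)):
        a[i] = q * b[i]          # 16 bytes, 1 flop

def stream_sum(a, b, c):
    for i in range(len(a)):
        a[i] = b[i] + c[i]       # 2 loads + 1 store = 24 bytes, 1 flop

def stream_triad(a, b, c, q):
    for i in range(len(a)):
        a[i] = b[i] + q * c[i]   # 24 bytes, 2 flops

# (bytes moved per iteration, floating-point operations per iteration)
STREAM_COST = {
    "COPY": (16, 0),
    "SCALE": (16, 1),
    "SUM": (24, 1),
    "TRIAD": (24, 2),
}

def bandwidth_gb_per_s(kernel, n, seconds):
    """Bandwidth implied by running `kernel` over n elements in `seconds`."""
    bytes_per_iter, _ = STREAM_COST[kernel]
    return bytes_per_iter * n / seconds / 1e9
```

For example, a TRIAD pass over 10^9 elements that takes 24 seconds moves 24 * 10^9 bytes, which this helper reports as 1 GB/s.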

HPCC Test Variants

1. Local
2. Embarrassingly parallel
3. Global
4. Network only

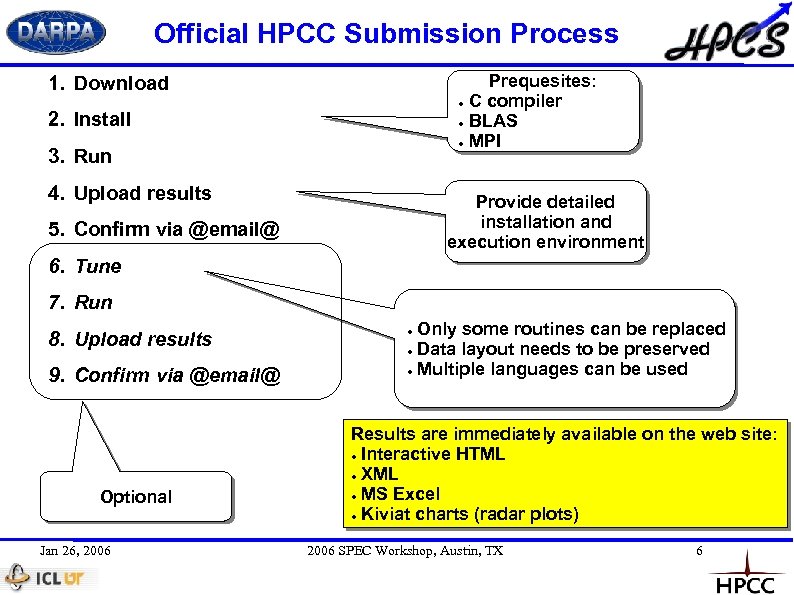

Official HPCC Submission Process

Prerequisites:
- C compiler
- BLAS
- MPI

Steps:
1. Download
2. Install
3. Run
4. Upload results (provide detailed installation and execution environment)
5. Confirm via email

Optional:
6. Tune
   - Only some routines can be replaced
   - Data layout needs to be preserved
   - Multiple languages can be used
7. Run
8. Upload results
9. Confirm via email

Results are immediately available on the web site:
- Interactive HTML
- XML
- MS Excel
- Kiviat charts (radar plots)

Measuring Locality in Code

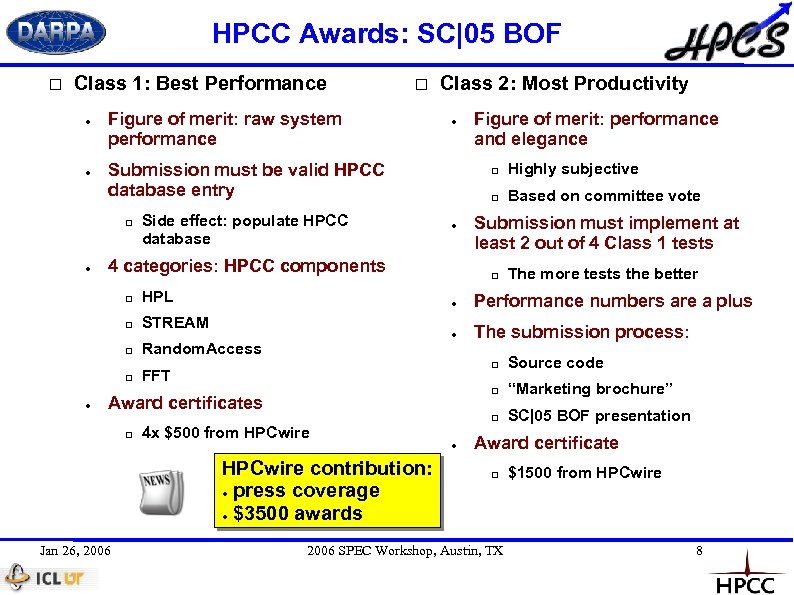

HPCC Awards: SC|05 BOF

Class 1: Best Performance
- Figure of merit: raw system performance
- Submission must be valid HPCC database entry
- Side effect: populate HPCC database
- 4 categories (HPCC components): HPL, STREAM, RandomAccess, FFT
- Award certificates
- 4 x $500 from HPCwire

Class 2: Most Productivity
- Figure of merit: performance and elegance
- Highly subjective
- Based on committee vote
- Submission must implement at least 2 out of 4 Class 1 tests
- The more tests the better
- Performance numbers are a plus
- The submission process: source code, "marketing brochure", SC|05 BOF presentation
- Award certificate
- $1500 from HPCwire

HPCwire contribution:
- Press coverage
- $3500 awards

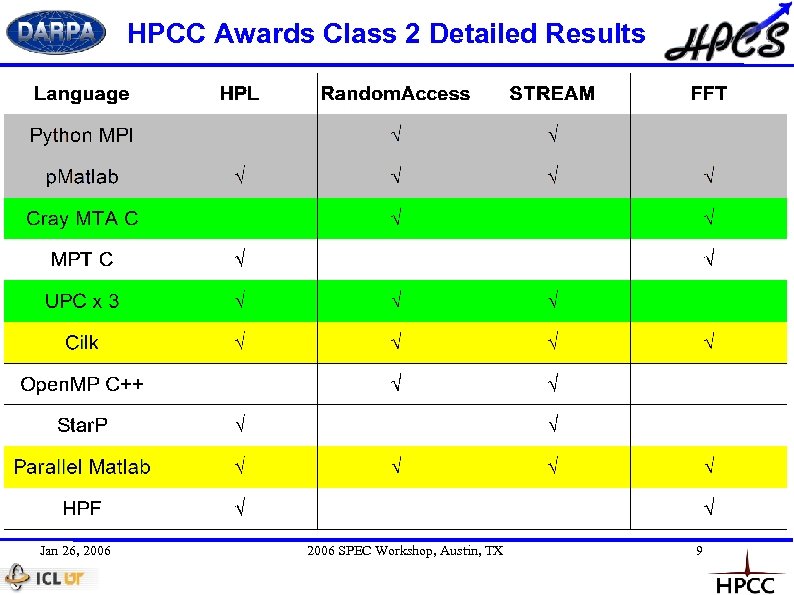

HPCC Awards Class 2 Detailed Results

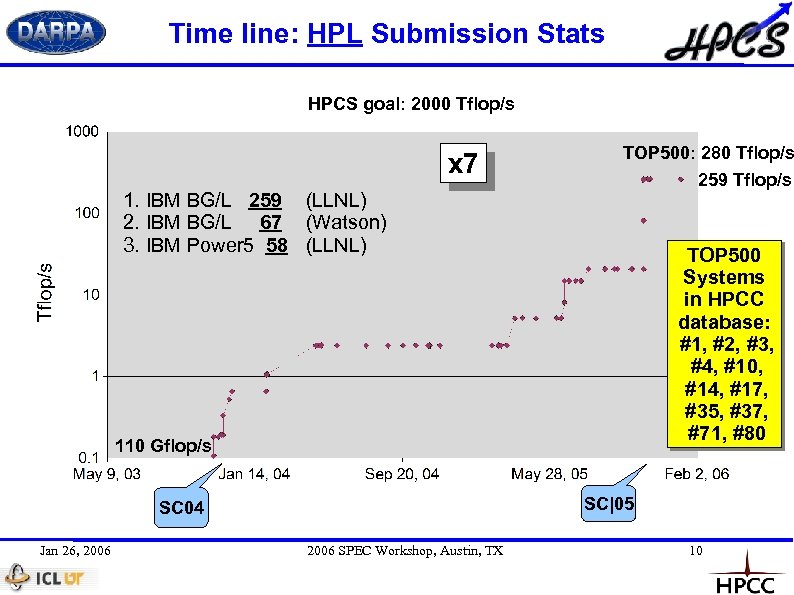

Timeline: HPL Submission Stats

- HPCS goal: 2000 Tflop/s (x7)
- Top HPCC submissions (Tflop/s):
  1. IBM BG/L: 259 (LLNL)
  2. IBM BG/L: 67 (Watson)
  3. IBM Power5: 58 (LLNL)
- TOP500: 280 Tflop/s
- TOP500 systems in HPCC database: #1, #2, #3, #4, #10, #14, #17, #35, #37, #71, #80
- Chart labels: 259 Tflop/s and 110 Gflop/s; time axis marked SC04 and SC|05

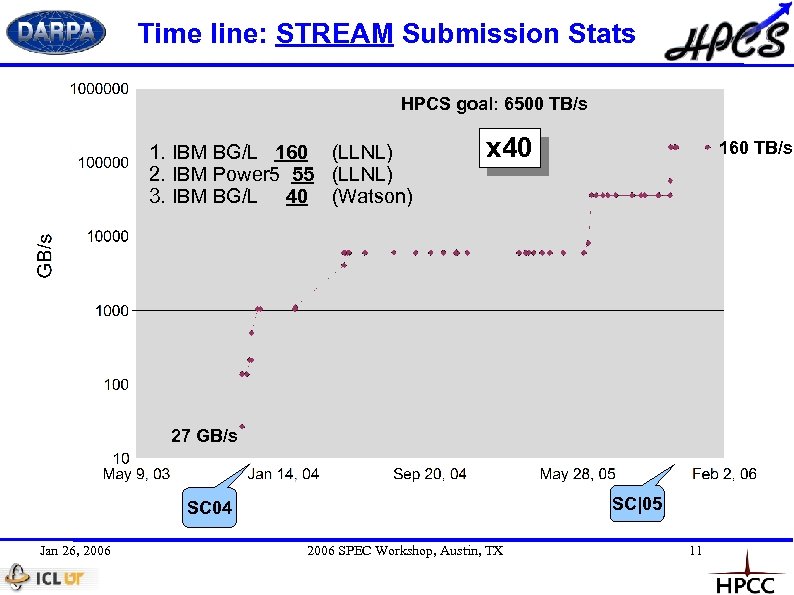

Timeline: STREAM Submission Stats

- HPCS goal: 6500 TB/s (x40)
- Top HPCC submissions (TB/s):
  1. IBM BG/L: 160 (LLNL)
  2. IBM Power5: 55 (LLNL)
  3. IBM BG/L: 40 (Watson)
- Chart labels: 160 TB/s and 27 GB/s; time axis marked SC04 and SC|05

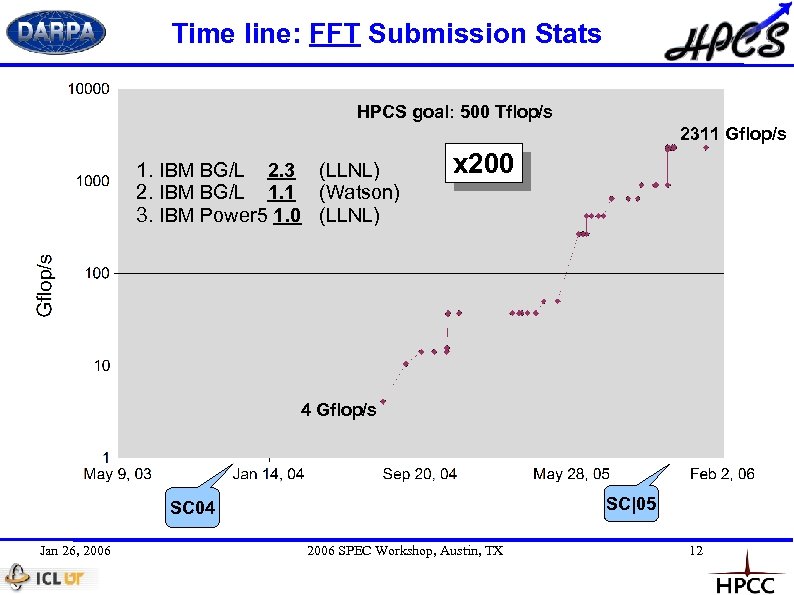

Timeline: FFT Submission Stats

- HPCS goal: 500 Tflop/s (x200)
- Top HPCC submissions (Tflop/s):
  1. IBM BG/L: 2.3 (LLNL)
  2. IBM BG/L: 1.1 (Watson)
  3. IBM Power5: 1.0 (LLNL)
- Chart labels: 2311 Gflop/s and 4 Gflop/s; time axis marked SC04 and SC|05

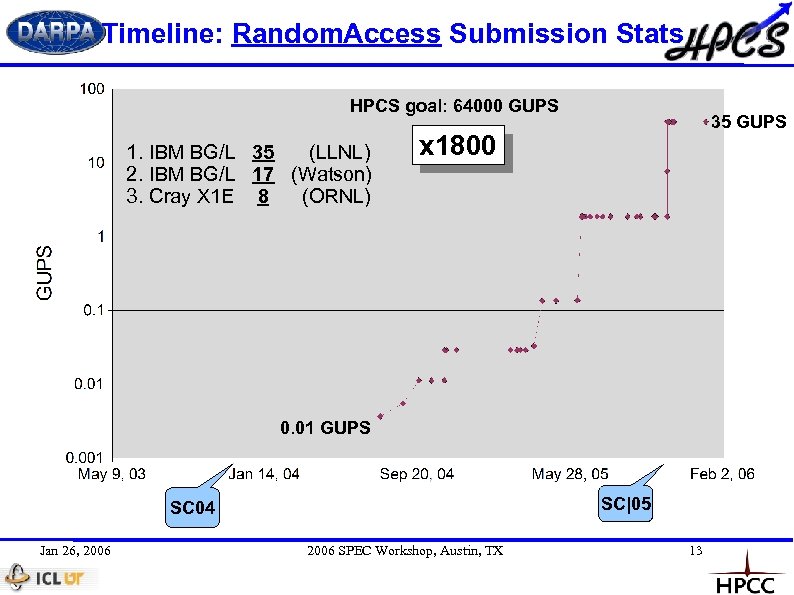

Timeline: RandomAccess Submission Stats

- HPCS goal: 64000 GUPS (x1800)
- Top HPCC submissions (GUPS):
  1. IBM BG/L: 35 (LLNL)
  2. IBM BG/L: 17 (Watson)
  3. Cray X1E: 8 (ORNL)
- Chart labels: 35 GUPS and 0.01 GUPS; time axis marked SC04 and SC|05

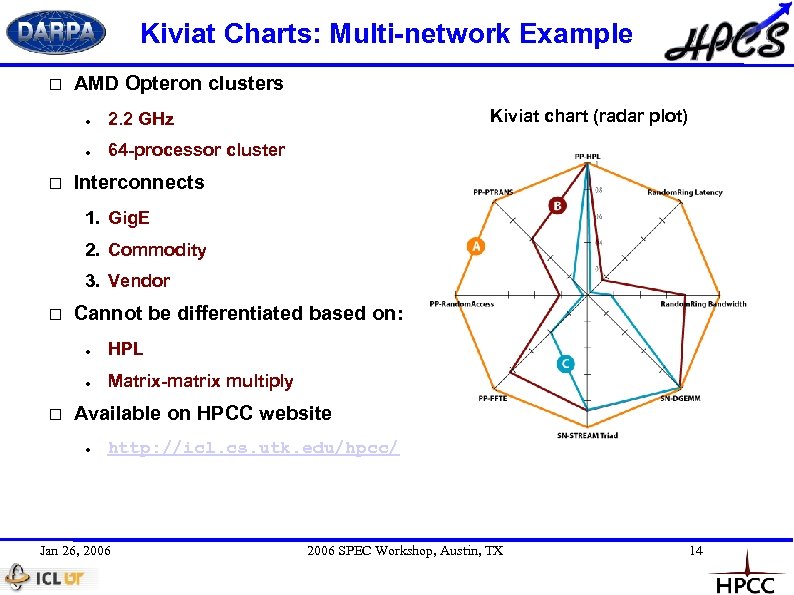
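The multipliers on the four timeline slides (x7, x40, x200, x1800) look consistent with the ratio of each HPCS goal to the best submitted result, rounded down. The sketch below checks that reading using only the figures quoted on the slides. The interpretation itself is an assumption, and the names `GOALS_AND_BEST` and `gap_to_goal` are hypothetical.

```python
# Figures copied from the four timeline slides, converted to matching units.
# Assumption: each "x N" label is (HPCS goal) / (best HPCC submission).
GOALS_AND_BEST = {
    "HPL (Tflop/s)": (2000.0, 259.0),
    "STREAM (TB/s)": (6500.0, 160.0),
    "FFT (Tflop/s)": (500.0, 2311.0 / 1000.0),   # 2311 Gflop/s -> Tflop/s
    "RandomAccess (GUPS)": (64000.0, 35.0),
}

def gap_to_goal(goal, best):
    """How many times larger the goal is than the best result so far."""
    if best <= 0:
        raise ValueError("best result must be positive")
    return goal / best

GAPS = {
    name: gap_to_goal(goal, best)
    for name, (goal, best) in GOALS_AND_BEST.items()
}

if __name__ == "__main__":
    for name, gap in GAPS.items():
        print(f"{name}: goal is about {gap:.1f}x the best submission")
```

Under this reading the gaps come out near 7.7, 40.6, 216, and 1829, which matches the slide labels once rounded down.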

Kiviat Charts: Multi-network Example

- AMD Opteron clusters: 2.2 GHz, 64-processor cluster
- Interconnects:
  1. GigE
  2. Commodity
  3. Vendor
- Cannot be differentiated based on:
  - HPL
  - Matrix-matrix multiply
- Kiviat chart (radar plot) available on HPCC website: http://icl.cs.utk.edu/hpcc/

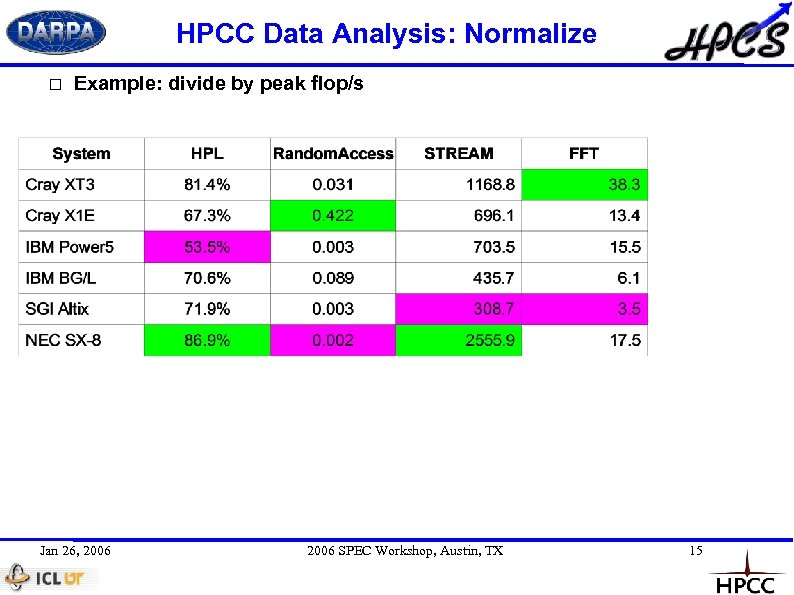

HPCC Data Analysis: Normalize

Example: divide by peak flop/s

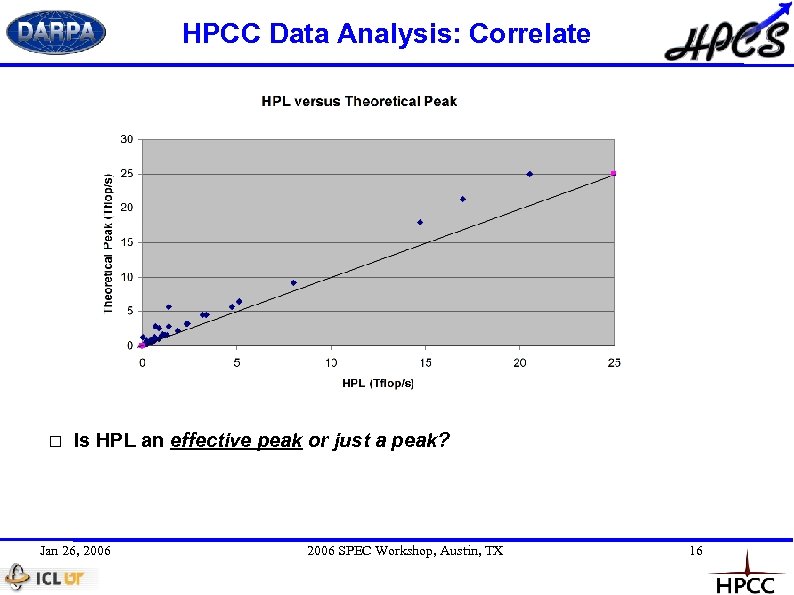
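A minimal sketch of the normalization step, dividing each measured result by the system's theoretical peak flop/s so that machines of different sizes can be compared. The system figures below are hypothetical placeholders, not entries from the HPCC database, and `normalize_by_peak` is an illustrative name.

```python
def normalize_by_peak(results, peak_flops):
    """Divide every result by theoretical peak flop/s.

    Flop/s results become a fraction of peak; a bandwidth in bytes/s
    becomes bytes per peak flop.
    """
    if peak_flops <= 0:
        raise ValueError("peak_flops must be positive")
    return {name: value / peak_flops for name, value in results.items()}

# Hypothetical system: all numbers invented for illustration only.
example_results = {
    "HPL_flops": 3.2e12,      # flop/s
    "FFT_flops": 4.0e10,      # flop/s
    "STREAM_bytes": 2.0e12,   # bytes/s
}
example_peak = 4.0e12         # flop/s

normalized = normalize_by_peak(example_results, example_peak)

if __name__ == "__main__":
    for name, value in normalized.items():
        print(f"{name}: {value:.3f}")
```

With these placeholder values HPL reaches 0.8 of peak, FFT 0.01 of peak, and STREAM delivers 0.5 bytes per peak flop.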

HPCC Data Analysis: Correlate

Is HPL an effective peak or just a peak?

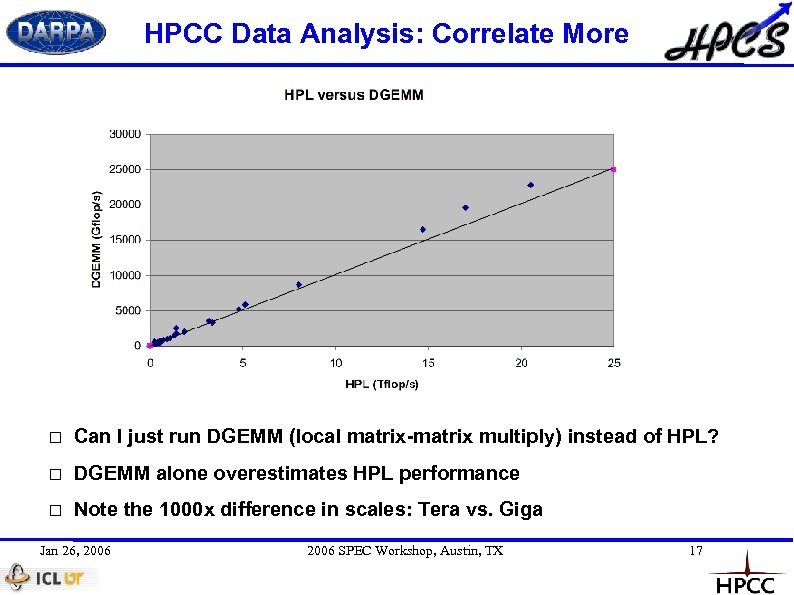
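One way to approach the question on this slide is to compute a correlation coefficient between theoretical peak and measured HPL across systems. The sketch below uses the Pearson coefficient, which is an assumption: the slide does not say which statistic the authors used. The data points are hypothetical, not taken from the HPCC database.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical systems (Tflop/s): invented values for illustration only.
peak_tflops = [1.0, 2.0, 4.0, 8.0]
hpl_tflops = [0.7, 1.5, 3.1, 5.9]

r = pearson(peak_tflops, hpl_tflops)

if __name__ == "__main__":
    print(f"correlation between peak and HPL: {r:.4f}")
```

A coefficient close to 1 would suggest that HPL tracks peak closely and adds little information beyond it; lower values for other tests would indicate that they measure something peak alone does not capture.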

HPCC Data Analysis: Correlate More

Can I just run DGEMM (local matrix-matrix multiply) instead of HPL?
- DGEMM alone overestimates HPL performance
- Note the 1000x difference in scales: Tera vs. Giga

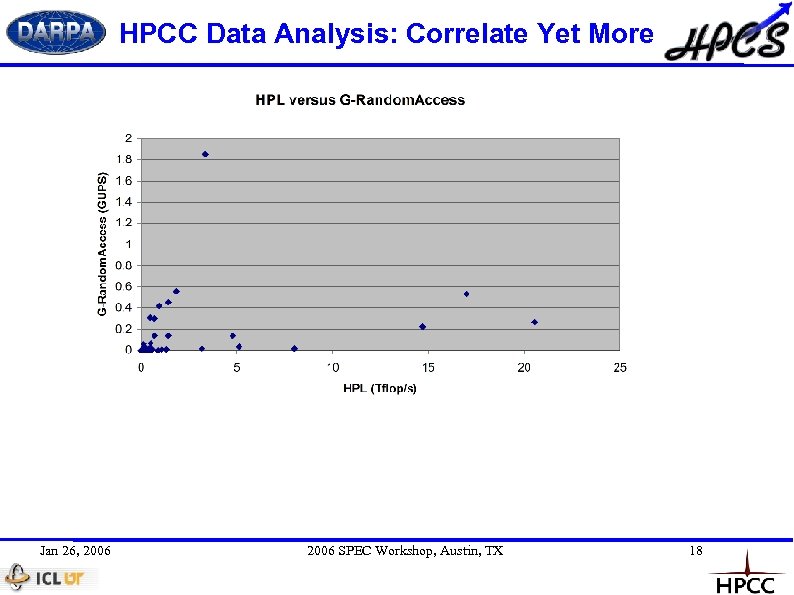

HPCC Data Analysis: Correlate Yet More

Future Directions

- Reduce execution time
- Preserve relevance of existing results
- Add new tests but not duplicate effort
  - I/O
  - Sparse matrix operations
  - Smith-Waterman (sequence alignment)
- Porting
  - Cell/PS3
  - Languages
    - Co-Array Fortran
    - HPCS languages: Chapel, Fortress, X10
  - Environments
  - Paradigms

Collaborators

- David Bailey, NERSC/LBL
- Jeremy Kepner, MIT Lincoln Lab
- David Koester, MITRE
- Bob Lucas, ISI/USC
- Rusty Lusk, ANL
- John McCalpin, IBM Austin / AMD
- Rolf Rabenseifner, HLRS Stuttgart
- Daisuke Takahashi, Tsukuba, Japan
